1. Introduction

Insurance companies typically operate in multiple lines of business and face different types of risks. It is important for them to evaluate the joint distribution of these different losses. Quite commonly, each type of these losses could be described by an aggregated loss model, which usually comprises two important components – loss frequency and loss size. Then, the joint distribution is a function of the interplay between the frequencies and sizes of different types of losses and the dependence among them (Wang, Reference Wang1998).

In the literature, there are several types of multivariate aggregate loss models. In one type, claim frequencies are dependent but claim sizes are independent. See for example, Hesselager (Reference Hesselager1996), Cossette et al. (Reference Cossette, Mailhot and Marceau2012), Kim et al. (Reference Kim, Jang and Pyun2019) and references therein. In another type, the claim frequency is one-dimensional, while each claim may cause multiple types of possibly dependent losses. See for example Sundt (Reference Sundt1999). Recently, models that allow dependence between claim frequencies and claim sizes have been developed. For example, in generalized linear model-based insurance pricing models such as Gschlöß l and Czado (2007) and Garrido et al. (Reference Garrido, Genest and Schulz2016), the regression of claim size includes claim count as a covariate. Alternatively, the expected values of claim frequency and claim size may depend on the same latent variables, as in Oh et al. (Reference Oh, Shi and Ahn2020).

Computing the risk measures for compound random variables, even for univariate cases, is not trivial, because the explicit formulas for the distribution functions usually do not exist. Listed below are some of the recent advances in the actuarial literature. Cossette et al. (Reference Cossette, Mailhot and Marceau2012) derived the capital allocation formulas for multivariate compound distributions under the Tail-Value-at-Risk measure, where the claim frequencies are dependent, the claim sizes are of the mixture Erlang type and mutually independent, and the claim frequency and size are independent. Kim et al. (Reference Kim, Jang and Pyun2019) derived a recursive algorithm to compute the risk measures of multivariate compound mixed Poisson models, where the Poisson-type claim frequencies depend on the same latent variables in a linear fashion. Denuit (Reference Denuit2020) derived formulas for the tail conditional expectations (TCE) of some univariate compound distributions. Denuit and Robert (Reference Denuit and Robert2021) presented some results for the TCE of a compound mixed Poisson model, where both the claim frequencies and sizes depend on several latent variables. Ren (Reference Ren2021) derived the formulas for the TCE and tail variance (TV) of multivariate compound models based on Sundt (Reference Sundt1999), where claim frequency is one-dimensional and one claim can yield multiple dependent losses.

The main goal of this paper is to present some easy-to-use formulas for computing the TCE and TV of some multivariate compound loss models, where the claim frequencies are dependent while the sizes are independent. Particularly, we study in detail the important dependence models in Hesselager (Reference Hesselager1996) and their extensions, which are widely used in the risk theory literature. See, for example, Cummins and Wiltbank (Reference Cummins and Wiltbank1983), Bermúdez (Reference Bermúdez2009), Cossette et al. (Reference Cossette, Mailhot and Marceau2012) and the references therein. We also discuss a case where the claim frequencies and sizes are dependent through a common mixing variable, following Denuit and Robert (Reference Denuit and Robert2021).

Methodologically, we apply the moment transform (which is also named as the size-biased transform) technique to our problems. The concept of moment transforms has a long history and is widely used in statistics (see, e.g., Patil and Ord Reference Patil and Ord1976; Arratia and Goldstein Reference Arratia and Goldstein2010, and the references therein). Its relevance to the study of actuarial risk measures has been exploited in the risk theory literature. For example, Furman and Landsman (Reference Furman and Landsman2005) applied the moment transform technique in computing the TCE of a portfolio of independent risks. Furman and Zitikis (Reference Furman and Zitikis2008) used it in determining TV and many other weighted risk measures. More recently, Denuit (Reference Denuit2020) applied this method in analyzing the TCE of univariate compound distributions. The concept of multivariate moment transform was studied in Denuit and Robert (Reference Denuit and Robert2021) and applied in analyzing multivariate risks. Ren (Reference Ren2021) applied this technique to study the tail risk of a multivariate compound sum introduced by Sundt (Reference Sundt1999), where the claim frequency is one-dimensional while each claim is a multidimensional random vector with dependent components.

The main contributions of this paper are summarized as follows. We first establish the relationship between the moment transform of a multivariate compound loss with those of its claim frequencies and sizes. It is shown that in many cases, the moment-transformed compound distribution can be represented by the convolution of a compound distribution, which is a mixture of some compound distributions that are in the same family of the original one, and the distribution of the moment transformed claim size. Such a representation allows us to evaluate the moment transform of a multivariate compound distribution efficiently by using either the fast Fourier transform (FFT) or recursive method, which are readily available in the literature (e.g., Hesselager Reference Hesselager1996). After deriving the moment transforms of multivariate compound distributions, we use them to evaluate the tail risks of multivariate aggregated losses and perform the associated capital allocation. Our main result also shows the effect of the distributions of claim frequencies and sizes on the tail risks of such aggregated losses. Our results generalize those in Denuit (Reference Denuit2020) and Kim et al. (Reference Kim, Jang and Pyun2019).

The remaining parts of the paper are organized as follows. Section 2 provides definitions and some preliminary results. Section 3 presents the main result for the moment transform of a general multivariate compound model with dependent claim frequencies and independent claim sizes. Section 4 studies the moment transform of the claim frequency in great detail. The case where claim frequencies and sizes are dependent is also studied. Section 5 provides numerical examples showing the risk capital allocation computation for each of the studied models.

2. Preliminaries and definitions

Suppose that an insurance company underwrites a portfolio of K types of risks. Let

![]() $\mathcal{K}=\{1,\cdots, K\}$

and for

$\mathcal{K}=\{1,\cdots, K\}$

and for

![]() $k\in \mathcal{K}$

, let

$k\in \mathcal{K}$

, let

![]() $N_k$

denote the number of type k claims. Let

$N_k$

denote the number of type k claims. Let

![]() $ \mathbf{N}=(N_1, \cdots, N_K)$

, whose joint probability function is denoted by

$ \mathbf{N}=(N_1, \cdots, N_K)$

, whose joint probability function is denoted by

For

![]() $k\in \mathcal{K}$

, let

$k\in \mathcal{K}$

, let

\begin{equation*}{S}_{N_k}= \sum_{i=1}^{N_k} {X}_{k,i},\end{equation*}

\begin{equation*}{S}_{N_k}= \sum_{i=1}^{N_k} {X}_{k,i},\end{equation*}

where

![]() ${X}_{k,i}, i=1, 2, \cdots, N_k,$

are i.i.d. random variables representing the size of a type k claim. They are assumed to have cumulative distribution function

${X}_{k,i}, i=1, 2, \cdots, N_k,$

are i.i.d. random variables representing the size of a type k claim. They are assumed to have cumulative distribution function

![]() $F_{X_k}$

. Loss size variables of different types are mutually independent and independent of

$F_{X_k}$

. Loss size variables of different types are mutually independent and independent of

![]() $\mathbf{N}$

.

$\mathbf{N}$

.

Let

denote the multivariate aggregate loss and

\begin{equation*}S_{\bullet}= \sum_{k=1}^{K} S_{N_k}\end{equation*}

\begin{equation*}S_{\bullet}= \sum_{k=1}^{K} S_{N_k}\end{equation*}

denote the total amount of all K types of claims.

In this paper, we study the following risk measures of

![]() $\mathbf{S}_{\mathbf{N}}$

:

$\mathbf{S}_{\mathbf{N}}$

:

The multivariate tail expectation (MTCE) of

$\mathbf{S}_{\mathbf{N}}$

at some level

$\mathbf{S}_{\mathbf{N}}$

at some level

$\mathbf{s}_{q}$

, which is defined by (see Landsman et al., Reference Landsman, Makov and Shushi2018) (2.2)where

$\mathbf{s}_{q}$

, which is defined by (see Landsman et al., Reference Landsman, Makov and Shushi2018) (2.2)where \begin{equation} \text{MTCE}_{\mathbf{S}_{\mathbf{N}}}(\mathbf{s}_{q}) = \mathbb{E}[\mathbf{S}_{\mathbf{N}} |\mathbf{S}_{\mathbf{N}} > \mathbf{s}_{q}], \end{equation}

\begin{equation} \text{MTCE}_{\mathbf{S}_{\mathbf{N}}}(\mathbf{s}_{q}) = \mathbb{E}[\mathbf{S}_{\mathbf{N}} |\mathbf{S}_{\mathbf{N}} > \mathbf{s}_{q}], \end{equation}

$\mathbf{s}_q = (s_{q_1}, \cdots, s_{q_K})$

and the expectation operation is taken to be element-wise.

$\mathbf{s}_q = (s_{q_1}, \cdots, s_{q_K})$

and the expectation operation is taken to be element-wise.

The multivariate tail covariance (MTCOV) of

$\mathbf{S}_{\mathbf{N}}$

at some level

$\mathbf{S}_{\mathbf{N}}$

at some level

$\mathbf{s}_{q}$

, which is defined by (2.3)

$\mathbf{s}_{q}$

, which is defined by (2.3) \begin{align} \text{MTCOV}_{\mathbf{S}_{\mathbf{N}}}(\mathbf{s}_q) & = \mathbb{E}[(\mathbf{S}_{\mathbf{N}}-\text{MTCE}_{\mathbf{S}_{\mathbf{N}}}(\mathbf{s}_q))(\mathbf{S}_{\mathbf{N}}-\text{MTCE}_{\mathbf{S}_{\mathbf{N}}}(\mathbf{s}_q))^\top |\mathbf{S}_{\mathbf{N}} > \mathbf{s}_q].\nonumber \\ & \end{align}

\begin{align} \text{MTCOV}_{\mathbf{S}_{\mathbf{N}}}(\mathbf{s}_q) & = \mathbb{E}[(\mathbf{S}_{\mathbf{N}}-\text{MTCE}_{\mathbf{S}_{\mathbf{N}}}(\mathbf{s}_q))(\mathbf{S}_{\mathbf{N}}-\text{MTCE}_{\mathbf{S}_{\mathbf{N}}}(\mathbf{s}_q))^\top |\mathbf{S}_{\mathbf{N}} > \mathbf{s}_q].\nonumber \\ & \end{align}

MTCE and MTCOV are multivariate extensions of univariate risk measures TCE and TV. They provide important information of the expected values and the variance––covariance dependence structure of the tail of a vector of dependent variables. Their properties are studied in Landsman et al. (Reference Landsman, Makov and Shushi2018).

To manage their insolvency risks, insurance companies are required to hold certain amount of capital, which is available to pay the claims arising from the adverse development of one or several types of risks. In order to measure and compare the different types of risks, it is important to determine how much capital should be assigned to each of them. Therefore, a capital allocation methodology is needed (Cummins, Reference Cummins2000). Methods for determining capital requirement and allocation have been studied extensively in the insurance/actuarial science literature. For more detailed discussions of such methods, one could refer to, for example, Cummins (Reference Cummins2000), Dhaene et al. (Reference Dhaene, Henrard, Landsman, Vandendorpe and Vanduffel2008), Furman and Landsman (Reference Furman and Landsman2008) and references therein. Since this paper focuses on tail risk measures such as TCE and TV, we apply the TCE- and TV-based capital allocation methods, which are described briefly in the following.

According to the TCE-based capital allocation rule, the capital required for the type k risk is given by

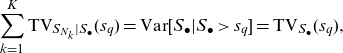

It is straightforward that

\begin{equation*}\sum_{k=1}^{K}\text{TCE}_{S_{N_k}|S_{\bullet}}(s_q)= \mathbb{E}[S_\bullet|S_\bullet>s_q]= \text{TCE}_{S_\bullet}(s_q),\end{equation*}

\begin{equation*}\sum_{k=1}^{K}\text{TCE}_{S_{N_k}|S_{\bullet}}(s_q)= \mathbb{E}[S_\bullet|S_\bullet>s_q]= \text{TCE}_{S_\bullet}(s_q),\end{equation*}

where

![]() $\text{TCE}_{S_\bullet}(s_q)$

is a commonly used criterion for determining the total capital requirement.

$\text{TCE}_{S_\bullet}(s_q)$

is a commonly used criterion for determining the total capital requirement.

Likewise, according to the TV-based capital allocation rule, the capital required for the type

![]() $k\in \mathcal{K}$

risk is given by

$k\in \mathcal{K}$

risk is given by

which satisfies

\begin{equation*}\sum_{k=1}^{K}\text{TV}_{S_{N_k}|S_{\bullet}}(s_q)= \text{Var}[S_\bullet|S_\bullet>s_q]= \text{TV}_{S_\bullet}(s_q),\end{equation*}

\begin{equation*}\sum_{k=1}^{K}\text{TV}_{S_{N_k}|S_{\bullet}}(s_q)= \text{Var}[S_\bullet|S_\bullet>s_q]= \text{TV}_{S_\bullet}(s_q),\end{equation*}

where

![]() $\text{TV}_{S_\bullet}(s_q)$

is another commonly used criterion for determining total capital requirement. It is worth pointing out that

$\text{TV}_{S_\bullet}(s_q)$

is another commonly used criterion for determining total capital requirement. It is worth pointing out that

![]() $\text{TV}_{S_{N_k}|S_{\bullet}}(s_q)$

can be computed through the quantities

$\text{TV}_{S_{N_k}|S_{\bullet}}(s_q)$

can be computed through the quantities

for which we will provide formulas in this paper.

Sometimes, the total capital is set exogenously by regulators or internal managers and may not necessarily be the TCE/TV of the sum

![]() $S_\bullet$

. The TCE/TV allocation rule can still be applied if tail risk is of the main concern. For example, with TCE allocation rule, the proportion of the total capital allocated to the type k risk can be determined by

$S_\bullet$

. The TCE/TV allocation rule can still be applied if tail risk is of the main concern. For example, with TCE allocation rule, the proportion of the total capital allocated to the type k risk can be determined by

This ratio is computed in Section 5 for the specific models studied in this paper.

In the following sections, we develop methods to compute the MTCE and MTCOV of

![]() $\mathbf{S}_{\mathbf{N}}$

, and the associated quantities for capital allocation. We do this by utilizing the moment transform of the random vector

$\mathbf{S}_{\mathbf{N}}$

, and the associated quantities for capital allocation. We do this by utilizing the moment transform of the random vector

![]() $\mathbf{S}_{\mathbf{N}}$

. For this purpose, we next introduce some definitions and preliminary results for moment transforms (see also Patil and Ord, Reference Patil and Ord1976).

$\mathbf{S}_{\mathbf{N}}$

. For this purpose, we next introduce some definitions and preliminary results for moment transforms (see also Patil and Ord, Reference Patil and Ord1976).

Definition 2.1. Let X be a non-negative random variable with distribution function

![]() $F_X$

and moment

$F_X$

and moment

![]() $\mathbb{E}[X^\alpha]<\infty$

for some positive integer

$\mathbb{E}[X^\alpha]<\infty$

for some positive integer

![]() $\alpha$

. A random variable

$\alpha$

. A random variable

![]() $\tilde{X}^{[\alpha]}$

is said to be a copy of the

$\tilde{X}^{[\alpha]}$

is said to be a copy of the

![]() $\alpha$

th moment transform of X if its cumulative distribution function (c.d.f.) is given by

$\alpha$

th moment transform of X if its cumulative distribution function (c.d.f.) is given by

The first moment transform of X is simply denoted as

![]() $\tilde{X}$

.

$\tilde{X}$

.

Definition 2.2. Let

![]() $\mathbf{X}=(X_1,\cdots, X_K)$

be a random vector with distribution function

$\mathbf{X}=(X_1,\cdots, X_K)$

be a random vector with distribution function

![]() $F_{\mathbf{X}}$

and moments

$F_{\mathbf{X}}$

and moments

![]() $\mathbb{E}[X_{k}^{\alpha}]<\infty$

and

$\mathbb{E}[X_{k}^{\alpha}]<\infty$

and

![]() $\mathbb{E}[X_{k_1}^{\alpha_1}X_{k_2}^{\alpha_2}]<\infty$

for some

$\mathbb{E}[X_{k_1}^{\alpha_1}X_{k_2}^{\alpha_2}]<\infty$

for some

![]() $k, k_1,k_2\in \{1,\cdots, K\}$

and positive integers

$k, k_1,k_2\in \{1,\cdots, K\}$

and positive integers

![]() $\alpha$

,

$\alpha$

,

![]() $\alpha_1$

and

$\alpha_1$

and

![]() $\alpha_2$

.

$\alpha_2$

.

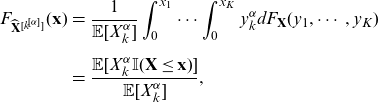

The kth component

![]() $\alpha$

th moment transform of

$\alpha$

th moment transform of

![]() $\mathbf{X}$

is any random vector

$\mathbf{X}$

is any random vector

![]() $\widehat{\mathbf{X}}^{[k^{[\alpha]}]}$

with c.d.f.

$\widehat{\mathbf{X}}^{[k^{[\alpha]}]}$

with c.d.f.

\begin{eqnarray} F_{\widehat{\mathbf{X}}^{[k^{[\alpha]}]}} (\mathbf{x}) &=& \frac{1}{\mathbb{E}[X_{k}^{\alpha}]}\int_0^{x_1} \cdots \int_0^{x_K}{y_{k}^{\alpha} d F_{\mathbf{X}} (y_1,\cdots, y_K)}\nonumber\\[5pt] &=&\frac{\mathbb{E}[X_{k}^{\alpha} \mathbb{I} (\mathbf{X} \le \mathbf{x})] }{\mathbb{E}[X_{k}^{\alpha}]}, \end{eqnarray}

\begin{eqnarray} F_{\widehat{\mathbf{X}}^{[k^{[\alpha]}]}} (\mathbf{x}) &=& \frac{1}{\mathbb{E}[X_{k}^{\alpha}]}\int_0^{x_1} \cdots \int_0^{x_K}{y_{k}^{\alpha} d F_{\mathbf{X}} (y_1,\cdots, y_K)}\nonumber\\[5pt] &=&\frac{\mathbb{E}[X_{k}^{\alpha} \mathbb{I} (\mathbf{X} \le \mathbf{x})] }{\mathbb{E}[X_{k}^{\alpha}]}, \end{eqnarray}

where

![]() $\mathbf{x}=(x_1,\cdots,x_K)$

. The kth component first moment transform of

$\mathbf{x}=(x_1,\cdots,x_K)$

. The kth component first moment transform of

![]() $\mathbf{X}$

is denoted as

$\mathbf{X}$

is denoted as

![]() $\hat{\mathbf{X}}^{[k]}$

.

$\hat{\mathbf{X}}^{[k]}$

.

The

![]() $(k_1,k_2)$

th component

$(k_1,k_2)$

th component

![]() $(\alpha_1,\alpha_2)$

th moment transform of

$(\alpha_1,\alpha_2)$

th moment transform of

![]() $\mathbf{X}$

is any random vector

$\mathbf{X}$

is any random vector

![]() $\widehat{\mathbf{X}}^{[k_1^{[\alpha_1]},k_2^{[\alpha_2]}]}$

with c.d.f.

$\widehat{\mathbf{X}}^{[k_1^{[\alpha_1]},k_2^{[\alpha_2]}]}$

with c.d.f.

\begin{eqnarray} F_{\widehat{\mathbf{X}}^{[k_1^{[\alpha_1]},k_2^{[\alpha_2]}]}} (\mathbf{x}) &=& \frac{1}{\mathbb{E}[X_{k_1}^{\alpha_1}X_{k_2}^{\alpha_2}]}\int_0^{x_1} \cdots \int_0^{x_K}{y_{k_1}^{\alpha_1}y_{k_2}^{\alpha_2} \, d F_{\mathbf{X}} (y_1,\cdots, y_K)}\nonumber\\[5pt] &=&\frac{\mathbb{E}[X_{k_1}^{\alpha_1}X_{k_2}^{\alpha_2} \mathbb{I} (\mathbf{X} \le \mathbf{x})] }{\mathbb{E}[X_{k_1}^{\alpha_1}X_{k_2}^{\alpha_2}]}. \end{eqnarray}

\begin{eqnarray} F_{\widehat{\mathbf{X}}^{[k_1^{[\alpha_1]},k_2^{[\alpha_2]}]}} (\mathbf{x}) &=& \frac{1}{\mathbb{E}[X_{k_1}^{\alpha_1}X_{k_2}^{\alpha_2}]}\int_0^{x_1} \cdots \int_0^{x_K}{y_{k_1}^{\alpha_1}y_{k_2}^{\alpha_2} \, d F_{\mathbf{X}} (y_1,\cdots, y_K)}\nonumber\\[5pt] &=&\frac{\mathbb{E}[X_{k_1}^{\alpha_1}X_{k_2}^{\alpha_2} \mathbb{I} (\mathbf{X} \le \mathbf{x})] }{\mathbb{E}[X_{k_1}^{\alpha_1}X_{k_2}^{\alpha_2}]}. \end{eqnarray}

The

![]() $(k_1,k_2)$

th component, (1,1)th moment transform of

$(k_1,k_2)$

th component, (1,1)th moment transform of

![]() $\mathbf{X}$

is denoted as

$\mathbf{X}$

is denoted as

![]() $\widehat{\mathbf{X}}^{[{k_1,k_2}]}$

.

$\widehat{\mathbf{X}}^{[{k_1,k_2}]}$

.

Remark 2.1. We have used the symbol

![]() $\tilde{X}$

to denote the moment transform of a univariate variable X and

$\tilde{X}$

to denote the moment transform of a univariate variable X and

![]() $\hat{\mathbf{X}}$

for the moment transform of a multivariate variable

$\hat{\mathbf{X}}$

for the moment transform of a multivariate variable

![]() $\mathbf{X}$

. In the sequel, we denote

$\mathbf{X}$

. In the sequel, we denote

![]() $\widehat{\mathbf{X}}^{[k^{[\alpha]}]}=(\widehat{{X}}_1^{[k^{[\alpha]}]}, \cdots, \widehat{{X}}_K^{[k^{[\alpha]}]})$

. In particular,

$\widehat{\mathbf{X}}^{[k^{[\alpha]}]}=(\widehat{{X}}_1^{[k^{[\alpha]}]}, \cdots, \widehat{{X}}_K^{[k^{[\alpha]}]})$

. In particular,

![]() $\widehat{{X}}_i^{[k]}$

denotes the ith element of

$\widehat{{X}}_i^{[k]}$

denotes the ith element of

![]() $\widehat{\mathbf{X}}^{[k]}$

, which is the kth component first moment transform of the random vector

$\widehat{\mathbf{X}}^{[k]}$

, which is the kth component first moment transform of the random vector

![]() $\mathbf{X}$

. It is not to be confused with

$\mathbf{X}$

. It is not to be confused with

![]() $\widetilde{{X}_i}^{[\alpha]}$

, which stands for the

$\widetilde{{X}_i}^{[\alpha]}$

, which stands for the

![]() $\alpha$

th moment transform of a univariate random variable

$\alpha$

th moment transform of a univariate random variable

![]() $X_i$

. The same convention applies to other components or moment transforms.

$X_i$

. The same convention applies to other components or moment transforms.

For discrete distributions, we work with factorial moment transform (Patil and Ord, Reference Patil and Ord1976). To this end, for some integers I and

![]() $\alpha$

, we define

$\alpha$

, we define

\begin{equation*}I^{(\alpha)}=\left\{\begin{array}{l@{\quad}l}I(I-1)\cdots (I-\alpha+1), & \text{if}\ \alpha \le I,\\[5pt]0, & \text{other cases}.\end{array}\right.\end{equation*}

\begin{equation*}I^{(\alpha)}=\left\{\begin{array}{l@{\quad}l}I(I-1)\cdots (I-\alpha+1), & \text{if}\ \alpha \le I,\\[5pt]0, & \text{other cases}.\end{array}\right.\end{equation*}

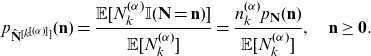

Definition 2.3. Let N be a discrete random variable having probability mass function

![]() $p_N(n)$

for

$p_N(n)$

for

![]() $n\ge 0$

. A random variable

$n\ge 0$

. A random variable

![]() $\tilde{N}^{[(\alpha)]}$

is said to be a copy of the

$\tilde{N}^{[(\alpha)]}$

is said to be a copy of the

![]() $\alpha$

th factorial moment transform of N if its probability mass function is given by

$\alpha$

th factorial moment transform of N if its probability mass function is given by

In the sequel, we denote the first factorial moment transform of N by

![]() $\tilde{N}$

.

$\tilde{N}$

.

Definition 2.4. Let

![]() $\mathbf{N}= (N_1,\cdots, N_K)$

be a vector of discrete random variables having probability mass function

$\mathbf{N}= (N_1,\cdots, N_K)$

be a vector of discrete random variables having probability mass function

![]() $p_{\mathbf{N}}(\mathbf{n})$

. A random vector

$p_{\mathbf{N}}(\mathbf{n})$

. A random vector

![]() $\hat{\mathbf{N}}^{[k^{[(\alpha)]}]}$

is said to be a copy of the kth component

$\hat{\mathbf{N}}^{[k^{[(\alpha)]}]}$

is said to be a copy of the kth component

![]() $\alpha$

th factorial moment transform of

$\alpha$

th factorial moment transform of

![]() $\mathbf{N}$

if its probability mass function is given by

$\mathbf{N}$

if its probability mass function is given by

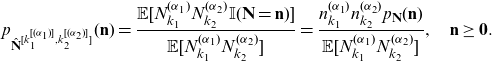

\begin{equation} p_{\hat{\mathbf{N}}^{[k^{[(\alpha)]}]}}(\mathbf{n})=\frac {\mathbb{E}[N_k^{(\alpha)} \mathbb{I}(\mathbf{N}=\mathbf{n})]}{\mathbb{E}[N_k^{(\alpha)}]} =\frac {n_k^{(\alpha)} p_{\mathbf{N}}(\mathbf{n})}{\mathbb{E}[N_k^{(\alpha)}]}, \quad \mathbf{n}\ge \mathbf{0}.\end{equation}

\begin{equation} p_{\hat{\mathbf{N}}^{[k^{[(\alpha)]}]}}(\mathbf{n})=\frac {\mathbb{E}[N_k^{(\alpha)} \mathbb{I}(\mathbf{N}=\mathbf{n})]}{\mathbb{E}[N_k^{(\alpha)}]} =\frac {n_k^{(\alpha)} p_{\mathbf{N}}(\mathbf{n})}{\mathbb{E}[N_k^{(\alpha)}]}, \quad \mathbf{n}\ge \mathbf{0}.\end{equation}

The kth component first moment transform of

![]() $\mathbf{N}$

is denoted as

$\mathbf{N}$

is denoted as

![]() $\hat{\mathbf{N}}^{[k]}$

.

$\hat{\mathbf{N}}^{[k]}$

.

A random vector

![]() $\hat{\mathbf{N}}^{[k_1^{[(\alpha_1)]}, k_2^{[(\alpha_2)]}]}$

is said to be a copy of the

$\hat{\mathbf{N}}^{[k_1^{[(\alpha_1)]}, k_2^{[(\alpha_2)]}]}$

is said to be a copy of the

![]() $(k_1,k_2)$

th component

$(k_1,k_2)$

th component

![]() $(\alpha_1,\alpha_2)$

th order factorial moment transform of

$(\alpha_1,\alpha_2)$

th order factorial moment transform of

![]() $\mathbf{N}$

if its probability mass function is given by

$\mathbf{N}$

if its probability mass function is given by

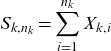

\begin{equation} p_{\hat{\mathbf{N}}^{[k_1^{[(\alpha_1)]},k_2^{[(\alpha_2)]}]}}(\mathbf{n})=\frac {\mathbb{E}[N_{k_1}^{(\alpha_1)} N_{k_2}^{(\alpha_2)} \mathbb{I}(\mathbf{N}=\mathbf{n})]}{\mathbb{E}[N_{k_1}^{(\alpha_1)} N_{k_2}^{(\alpha_2)}]} =\frac {n_{k_1}^{(\alpha_1)} n_{k_2}^{(\alpha_2)} p_{\mathbf{N}}(\mathbf{n})}{\mathbb{E}[N_{k_1}^{(\alpha_1)} N_{k_2}^{(\alpha_2)}]}, \quad \mathbf{n}\ge \mathbf{0}.\end{equation}

\begin{equation} p_{\hat{\mathbf{N}}^{[k_1^{[(\alpha_1)]},k_2^{[(\alpha_2)]}]}}(\mathbf{n})=\frac {\mathbb{E}[N_{k_1}^{(\alpha_1)} N_{k_2}^{(\alpha_2)} \mathbb{I}(\mathbf{N}=\mathbf{n})]}{\mathbb{E}[N_{k_1}^{(\alpha_1)} N_{k_2}^{(\alpha_2)}]} =\frac {n_{k_1}^{(\alpha_1)} n_{k_2}^{(\alpha_2)} p_{\mathbf{N}}(\mathbf{n})}{\mathbb{E}[N_{k_1}^{(\alpha_1)} N_{k_2}^{(\alpha_2)}]}, \quad \mathbf{n}\ge \mathbf{0}.\end{equation}

The reason why we work with the factorial moment transforms for the discrete distributions is that they have simple representations in many cases. See, for example, Table 2 of Patil and Ord (Reference Patil and Ord1976).

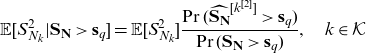

The relationship between risk measures such as TCE and TV and the moment transform of random variables has been studied extensively in the literature. In particular, the relationship

has been introduced and utilized in, for example, Furman and Landsman (Reference Furman and Landsman2005), Furman and Zitikis (Reference Furman and Zitikis2008), Denuit (Reference Denuit2020), and the references therein.

In the multivariate case, the MTCE and MTCOV of a random vector

![]() $\mathbf{X}=(X_1, \cdots, X_K)$

are related to its multivariate moment transform. We state the results in the following.

$\mathbf{X}=(X_1, \cdots, X_K)$

are related to its multivariate moment transform. We state the results in the following.

Lemma 2.1. Let

![]() $\mathcal{K} = \{1,2,\cdots, K\}$

,

$\mathcal{K} = \{1,2,\cdots, K\}$

,

![]() $\mathbf{X}=(X_1,\cdots, X_K)$

and

$\mathbf{X}=(X_1,\cdots, X_K)$

and

![]() $X_{\bullet}=\sum_{i=1}^{K} X_i$

, we have

$X_{\bullet}=\sum_{i=1}^{K} X_i$

, we have

-

(i) For

$k \in \mathcal{K}$

and

$k \in \mathcal{K}$

and

$\alpha\ge 1$

, (2.14)

$\alpha\ge 1$

, (2.14) \begin{equation} \mathbb{E} [X_k^\alpha | \mathbf{X} >\mathbf{x}] = \mathbb{E}[X_k^\alpha] \frac{\Pr\left(\hat{\mathbf{X}}^{[k^{[\alpha]}]}>\mathbf{x}\right)}{\Pr(\mathbf{X} >\mathbf{x})}.\end{equation}

\begin{equation} \mathbb{E} [X_k^\alpha | \mathbf{X} >\mathbf{x}] = \mathbb{E}[X_k^\alpha] \frac{\Pr\left(\hat{\mathbf{X}}^{[k^{[\alpha]}]}>\mathbf{x}\right)}{\Pr(\mathbf{X} >\mathbf{x})}.\end{equation}

-

(ii) For

$k_1, k_2 \in \mathcal{K}$

and

$k_1, k_2 \in \mathcal{K}$

and

$\alpha_1, \alpha_2\ge 1$

, (2.15)

$\alpha_1, \alpha_2\ge 1$

, (2.15) \begin{equation} \mathbb{E} [X_{k_1}^{\alpha_1} X_{k_2}^{\alpha_2} | \mathbf{X} >\mathbf{x}] = \mathbb{E}[X_{k_1}^{\alpha_1}X_{k_2}^{\alpha_2}] \frac{\Pr\left(\hat{\mathbf{X}}^{[k_1^{[\alpha_1]}, k_2^{[\alpha_2]}]}>\mathbf{x}\right)}{\Pr(\mathbf{X} >\mathbf{x})}.\end{equation}

\begin{equation} \mathbb{E} [X_{k_1}^{\alpha_1} X_{k_2}^{\alpha_2} | \mathbf{X} >\mathbf{x}] = \mathbb{E}[X_{k_1}^{\alpha_1}X_{k_2}^{\alpha_2}] \frac{\Pr\left(\hat{\mathbf{X}}^{[k_1^{[\alpha_1]}, k_2^{[\alpha_2]}]}>\mathbf{x}\right)}{\Pr(\mathbf{X} >\mathbf{x})}.\end{equation}

-

(iii) For

$k_1, k_2 \in \mathcal{K}$

and

$k_1, k_2 \in \mathcal{K}$

and

$\alpha_1, \alpha_2\ge 1$

, (2.16)where

$\alpha_1, \alpha_2\ge 1$

, (2.16)where \begin{equation} \mathbb{E} [X_{k_1}^{\alpha_1} X_{k_2}^{\alpha_2} | X_{\bullet} >x] = \mathbb{E}[X_{k_1}^{\alpha_1}X_{k_2}^{\alpha_2}] \frac{\Pr\left(\hat{X}_{\bullet}^{[k_1^{[\alpha_1]}, k_2^{[\alpha_2]}]}>{x}\right)}{\Pr(X_{\bullet} >x)},\end{equation}

and

\begin{equation} \mathbb{E} [X_{k_1}^{\alpha_1} X_{k_2}^{\alpha_2} | X_{\bullet} >x] = \mathbb{E}[X_{k_1}^{\alpha_1}X_{k_2}^{\alpha_2}] \frac{\Pr\left(\hat{X}_{\bullet}^{[k_1^{[\alpha_1]}, k_2^{[\alpha_2]}]}>{x}\right)}{\Pr(X_{\bullet} >x)},\end{equation}

and \begin{equation*}\hat{X}_{\bullet}^{[k_1^{[\alpha_1]}, k_2^{[\alpha_2]}]} = \sum_{k=1}^K \hat{X}_k^{[k_1^{[\alpha_1]}, k_2^{[\alpha_2]}]}\end{equation*}

\begin{equation*}\hat{X}_{\bullet}^{[k_1^{[\alpha_1]}, k_2^{[\alpha_2]}]} = \sum_{k=1}^K \hat{X}_k^{[k_1^{[\alpha_1]}, k_2^{[\alpha_2]}]}\end{equation*}

$\hat{X}_k^{[k_1^{[\alpha_1]}, k_2^{[\alpha_2]}]}$

is the kth element of

$\hat{X}_k^{[k_1^{[\alpha_1]}, k_2^{[\alpha_2]}]}$

is the kth element of

$\;\hat{\mathbf{X}}^{[k_1^{[\alpha_1]}, k_2^{[\alpha_2]}]}$

.

$\;\hat{\mathbf{X}}^{[k_1^{[\alpha_1]}, k_2^{[\alpha_2]}]}$

.

Proof. Statements (i) and (ii) are the direct results of Definition 2.2 of moment transforms. Statement (iii) is similar to Proposition 3.1 of Denuit and Robert (Reference Denuit and Robert2021), to which we refer the readers for more details.

With Lemma 2.1, we are ready to study the tail risk measures of the compound sum vector

![]() $\mathbf{S}_{\mathbf{N}}$

through its moment transforms.

$\mathbf{S}_{\mathbf{N}}$

through its moment transforms.

3. Evaluation of the tail risk measures of multivariate compound variables via moment transforms

In this section, we derive the explicit formulas for moment transforms of the compound sum vector

![]() $\mathbf{S}_{\mathbf{N}}$

. These formulas not only unveil the relationships between the moment transforms of

$\mathbf{S}_{\mathbf{N}}$

. These formulas not only unveil the relationships between the moment transforms of

![]() $\mathbf{S}_{\mathbf{N}}$

and those of

$\mathbf{S}_{\mathbf{N}}$

and those of

![]() $\mathbf{N}$

and

$\mathbf{N}$

and

![]() $X_k$

(for some

$X_k$

(for some

![]() $k\in\mathcal{K}$

) but also provide a method to compute the MTCE and MTCOV of

$k\in\mathcal{K}$

) but also provide a method to compute the MTCE and MTCOV of

![]() $\mathbf{S}_{\mathbf{N}}$

and to perform capital allocations (Equations (2.2)–(2.6)).

$\mathbf{S}_{\mathbf{N}}$

and to perform capital allocations (Equations (2.2)–(2.6)).

We first assume that

![]() $\mathbf{N}$

is a non-random vector, that is

$\mathbf{N}$

is a non-random vector, that is

![]() $\mathbf{N}=\mathbf{n}=(n_1,\cdots, n_K)$

. For

$\mathbf{N}=\mathbf{n}=(n_1,\cdots, n_K)$

. For

![]() $k\in \mathcal{K}$

, let

$k\in \mathcal{K}$

, let

\begin{equation*}S_{k,n_k}=\sum_{i=1}^{n_k} X_{k,i}\end{equation*}

\begin{equation*}S_{k,n_k}=\sum_{i=1}^{n_k} X_{k,i}\end{equation*}

and

Then by the results in Furman and Landsman (Reference Furman and Landsman2005) or Lemmas 2.1 and 2.2 of Ren (Reference Ren2021), for a positive integer

![]() $\alpha$

such that

$\alpha$

such that

![]() $\mathbb{E}[X_k^\alpha]<\infty$

, we have for

$\mathbb{E}[X_k^\alpha]<\infty$

, we have for

![]() $i\in(1,\cdots, n_k)$

that

$i\in(1,\cdots, n_k)$

that

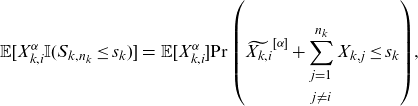

\begin{eqnarray} \mathbb{E}[X_{k,i}^\alpha \mathbb{I}(S_{k,n_k}\le s_k)]&=& \mathbb{E}[X_{k,i}^\alpha] {\Pr\left(\widetilde{X_{k,i}}^{[\alpha]}+\sum_{\substack{j=1 \\[5pt] j\neq i}}^{n_k} X_{k,j}\le s_k\right)},\end{eqnarray}

\begin{eqnarray} \mathbb{E}[X_{k,i}^\alpha \mathbb{I}(S_{k,n_k}\le s_k)]&=& \mathbb{E}[X_{k,i}^\alpha] {\Pr\left(\widetilde{X_{k,i}}^{[\alpha]}+\sum_{\substack{j=1 \\[5pt] j\neq i}}^{n_k} X_{k,j}\le s_k\right)},\end{eqnarray}

where all the variables in the parenthesis are mutually independent. Because

![]() $X_{k,i}$

’s are assumed to be i.i.d., we have

$X_{k,i}$

’s are assumed to be i.i.d., we have

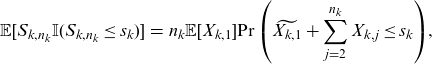

\begin{eqnarray} \mathbb{E}[S_{k,n_k} \mathbb{I}(S_{k,n_k}\le s_k)]&=& n_k \mathbb{E}[X_{k,1}] {\Pr\left(\widetilde{X_{k,1}}+\sum_{\substack{j=2}}^{n_k} X_{k,j}\le s_k\right)},\end{eqnarray}

\begin{eqnarray} \mathbb{E}[S_{k,n_k} \mathbb{I}(S_{k,n_k}\le s_k)]&=& n_k \mathbb{E}[X_{k,1}] {\Pr\left(\widetilde{X_{k,1}}+\sum_{\substack{j=2}}^{n_k} X_{k,j}\le s_k\right)},\end{eqnarray}

and

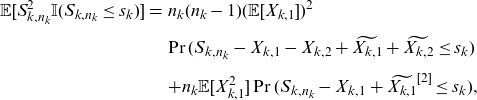

\begin{eqnarray} \mathbb{E}[S_{k,n_k}^2 \mathbb{I}(S_{k,n_k}\le s_k)]&=& n_k(n_k-1) (\mathbb{E}[X_{k,1}])^2 \nonumber \\[5pt] && {\Pr(S_{k,n_k}-X_{k,1}-X_{k,2}+\widetilde{X_{k,1}}+\widetilde{X_{k,2}}\le s_k)} \nonumber\\[5pt] \qquad &&+ n_k \mathbb{E}[X_{k,1}^2]\Pr(S_{k,n_k}-X_{k,1}+\widetilde{X_{k,1}}^{[2]}\le s_k),\end{eqnarray}

\begin{eqnarray} \mathbb{E}[S_{k,n_k}^2 \mathbb{I}(S_{k,n_k}\le s_k)]&=& n_k(n_k-1) (\mathbb{E}[X_{k,1}])^2 \nonumber \\[5pt] && {\Pr(S_{k,n_k}-X_{k,1}-X_{k,2}+\widetilde{X_{k,1}}+\widetilde{X_{k,2}}\le s_k)} \nonumber\\[5pt] \qquad &&+ n_k \mathbb{E}[X_{k,1}^2]\Pr(S_{k,n_k}-X_{k,1}+\widetilde{X_{k,1}}^{[2]}\le s_k),\end{eqnarray}

where all the variables in the parenthesis are mutually independent.

Since

![]() $\{S_{k,n_k}\}_{k=1,\cdots, K}$

are mutually independent, we have

$\{S_{k,n_k}\}_{k=1,\cdots, K}$

are mutually independent, we have

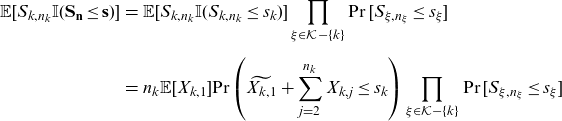

\begin{eqnarray} \mathbb{E}[S_{k,n_k} \mathbb{I}(\mathbf{S}_{\mathbf{n}}\le \mathbf{s})] &=& \mathbb{E}[S_{k,n_k} \mathbb{I}(S_{k,n_k}\le s_k)] \prod_{\xi \in \mathcal{K}-\{k\}} \Pr[S_{\xi,n_\xi}\le s_\xi]\nonumber \\[5pt] &=& n_k \mathbb{E}[X_{k,1}] {\Pr\left(\widetilde{X_{k,1}}+\sum_{j=2}^{n_k} X_{k,j}\le s_k\right)} \prod_{\xi\in \mathcal{K}-\{k\}} \Pr[S_{\xi,n_\xi}\le s_\xi] \nonumber \\[5pt] && \end{eqnarray}

\begin{eqnarray} \mathbb{E}[S_{k,n_k} \mathbb{I}(\mathbf{S}_{\mathbf{n}}\le \mathbf{s})] &=& \mathbb{E}[S_{k,n_k} \mathbb{I}(S_{k,n_k}\le s_k)] \prod_{\xi \in \mathcal{K}-\{k\}} \Pr[S_{\xi,n_\xi}\le s_\xi]\nonumber \\[5pt] &=& n_k \mathbb{E}[X_{k,1}] {\Pr\left(\widetilde{X_{k,1}}+\sum_{j=2}^{n_k} X_{k,j}\le s_k\right)} \prod_{\xi\in \mathcal{K}-\{k\}} \Pr[S_{\xi,n_\xi}\le s_\xi] \nonumber \\[5pt] && \end{eqnarray}

and

where the first term on the right side is given in (3.3).

Further, for

![]() $k_i,k_j \in \mathcal{K}$

,

$k_i,k_j \in \mathcal{K}$

,

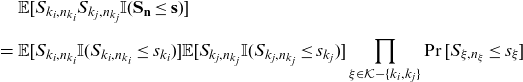

\begin{eqnarray*} &&\mathbb{E}[S_{k_i,n_{k_i}} S_{k_j,n_{k_j}} \mathbb{I}(\mathbf{S}_{\mathbf{n}}\le \mathbf{s})] \nonumber\\[5pt] &=& \mathbb{E}[S_{k_i,n_{k_i}} \mathbb{I}(S_{k_i,n_{k_i}}\le s_{k_i})] \mathbb{E}[S_{k_j,n_{k_j}} \mathbb{I}(S_{k_j,n_{k_j}}\le s_{k_j})] \prod_{\xi \in \mathcal{K}-\{k_i,k_j\}} \Pr[S_{\xi,n_\xi}\le s_\xi] \end{eqnarray*}

\begin{eqnarray*} &&\mathbb{E}[S_{k_i,n_{k_i}} S_{k_j,n_{k_j}} \mathbb{I}(\mathbf{S}_{\mathbf{n}}\le \mathbf{s})] \nonumber\\[5pt] &=& \mathbb{E}[S_{k_i,n_{k_i}} \mathbb{I}(S_{k_i,n_{k_i}}\le s_{k_i})] \mathbb{E}[S_{k_j,n_{k_j}} \mathbb{I}(S_{k_j,n_{k_j}}\le s_{k_j})] \prod_{\xi \in \mathcal{K}-\{k_i,k_j\}} \Pr[S_{\xi,n_\xi}\le s_\xi] \end{eqnarray*}

\begin{eqnarray}&=& n_{k_i} n_{k_j} \mathbb{E}[X_{k_i,1}]\mathbb{E}[X_{k_j,1}] {\prod_{\xi \in \{k_i,k_j\}}\!\Pr\!\left(\!\widetilde{X_{\xi,1}}+\sum_{j=2}^{n_{\xi}} X_{\xi,j}\le s_{\xi}\!\!\right)} \!\prod_{\xi \in \mathcal{K}-\{k_i,k_j\}} \!\!\Pr[S_{\xi,n_\xi}\le s_\xi], \nonumber \\[5pt] && \end{eqnarray}

\begin{eqnarray}&=& n_{k_i} n_{k_j} \mathbb{E}[X_{k_i,1}]\mathbb{E}[X_{k_j,1}] {\prod_{\xi \in \{k_i,k_j\}}\!\Pr\!\left(\!\widetilde{X_{\xi,1}}+\sum_{j=2}^{n_{\xi}} X_{\xi,j}\le s_{\xi}\!\!\right)} \!\prod_{\xi \in \mathcal{K}-\{k_i,k_j\}} \!\!\Pr[S_{\xi,n_\xi}\le s_\xi], \nonumber \\[5pt] && \end{eqnarray}

where all the variables in the parenthesis are mutually independent.

Now we are ready to present the results for the moment transforms of the compound sum vector

![]() $\mathbf{S}_{\mathbf{N}}$

.

$\mathbf{S}_{\mathbf{N}}$

.

Theorem 3.1. For

![]() $k\in \mathcal{K}$

, let

$k\in \mathcal{K}$

, let

![]() $\mathbf{1}^{[k]}$

denote a K dimensional vector with kth element being one and all others are zero. Let

$\mathbf{1}^{[k]}$

denote a K dimensional vector with kth element being one and all others are zero. Let

whose ith element is denoted by

![]() ${L}_i^{[k]}$

and

${L}_i^{[k]}$

and

\begin{equation*}\mathbf{S}_{\mathbf{L}^{[k]}}=\left(\sum_{j=1}^{{L}_i^{[k]}} X_{i,j}, i\in \mathcal{K}\right),\end{equation*}

\begin{equation*}\mathbf{S}_{\mathbf{L}^{[k]}}=\left(\sum_{j=1}^{{L}_i^{[k]}} X_{i,j}, i\in \mathcal{K}\right),\end{equation*}

then

Further, let

then

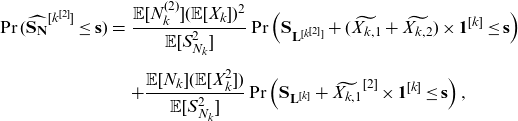

\begin{eqnarray} \Pr(\widehat{\mathbf{S}_{\mathbf{N}}}^{[k^{[2]}]}\le \mathbf{s}) &=& \frac{\mathbb{E}[N_k^{(2)}](\mathbb{E}[X_k])^2}{\mathbb{E}[S_{N_k}^{2}]} \Pr\left(\mathbf{S}_{\mathbf{L}^{[k^{[2]}]}} + (\widetilde{X_{k,1}}+\widetilde{X_{k,2}}) \times \mathbf{1}^{[k]}\le \mathbf{s}\right)\nonumber\\[5pt] &&+ \frac{\mathbb{E}[N_k](\mathbb{E}[X_k^2])}{\mathbb{E}[S_{N_k}^{2}]} \Pr\left({\mathbf{S}_{\mathbf{L}^{[k]}} + \widetilde{X_{k,1}}^{[2]}\times \mathbf{1}^{[k]}}\le \mathbf{s}\right), \end{eqnarray}

\begin{eqnarray} \Pr(\widehat{\mathbf{S}_{\mathbf{N}}}^{[k^{[2]}]}\le \mathbf{s}) &=& \frac{\mathbb{E}[N_k^{(2)}](\mathbb{E}[X_k])^2}{\mathbb{E}[S_{N_k}^{2}]} \Pr\left(\mathbf{S}_{\mathbf{L}^{[k^{[2]}]}} + (\widetilde{X_{k,1}}+\widetilde{X_{k,2}}) \times \mathbf{1}^{[k]}\le \mathbf{s}\right)\nonumber\\[5pt] &&+ \frac{\mathbb{E}[N_k](\mathbb{E}[X_k^2])}{\mathbb{E}[S_{N_k}^{2}]} \Pr\left({\mathbf{S}_{\mathbf{L}^{[k]}} + \widetilde{X_{k,1}}^{[2]}\times \mathbf{1}^{[k]}}\le \mathbf{s}\right), \end{eqnarray}

where

![]() $\widetilde{X_{k,1}}$

and

$\widetilde{X_{k,1}}$

and

![]() $\widetilde{X_{k,2}}$

are two independent copies of the first moment transform of

$\widetilde{X_{k,2}}$

are two independent copies of the first moment transform of

![]() ${X_{k}}$

and

${X_{k}}$

and

![]() $\widetilde{X_{k,1}}^{[2]}$

is a copy of the second moment transform of

$\widetilde{X_{k,1}}^{[2]}$

is a copy of the second moment transform of

![]() ${X_{k}}$

. All random variables in the above are mutually independent.

${X_{k}}$

. All random variables in the above are mutually independent.

In addition, for

![]() $k_1\ne k_2\in \mathcal{K}$

let

$k_1\ne k_2\in \mathcal{K}$

let

then

Proof. The proof of the above three statements is similar. We next prove statement (3.9).

Firstly, by the law of total probability,

which after applying (3.5) becomes

\begin{eqnarray*} &&\mathbb{E} [S_{N_k}^2 \mathbb{I}(\mathbf{S}_{\mathbf{N}}\le \mathbf{s})] \nonumber\\[8pt] &=& \sum_{\mathbf{n}\in (\mathbb{Z}^+)^K} p_{\mathbf{N}}(\mathbf{n}) \left( n_k(n_k-1) (\mathbb{E}[X_{k,1}])^2 \Pr\left(\widetilde{X_{k,1}}+\widetilde{X_{k,2}}+\sum_{j=3}^{n_k}X_{k,j} \right. \right. \nonumber \\[8pt] && \left. \left. \le s_k, \sum_{j=1}^{{n_m}}X_{m,j} \le s_m, m\in \mathcal{K}-\{k\}\right)\right. \nonumber\\[8pt] & & \quad \left. +n_k \mathbb{E}[X_{k,1}^2]\Pr\left(\widetilde{X_{k,1}}^{[2]}+\sum_{j=2}^{n_k}X_{k,j} \le s_k, \sum_{j=1}^{{n_m}}X_{m,j}\le s_m, m\in \mathcal{K}-\{k\}\right) \right) \nonumber\\[8pt] &=& \mathbb{E}[N_k^{(2)}] (\mathbb{E}[X_{k,1}])^2 \Pr\left(\widetilde{X_{k,1}}+\widetilde{X_{k,2}}+\sum_{j=1}^{\hat{N}_k^{[k^{[(2)]}]}-2}X_{k,j} \right. \nonumber \\[8pt] &&\left. \le s_k, \sum_{j=1}^{\hat{N}_m^{[k^{[(2)]}]}} X_{m,j}\le s_m, m\in \mathcal{K}-\{k\}\right) \nonumber\\[8pt] & & \quad + \mathbb{E}[N_k]\mathbb{E}[X_{k,1}^2]\Pr\left(\widetilde{X_{k,1}}^{[2]}+\sum_{j=1}^{\hat{N}_k^{[k]}-1} X_{k,j} \le s_k, \sum_{j=1}^{{\hat{N}_m}^{[k]}}X_{m,j}\le s_m, m\in \mathcal{K}-\{k\}\right). \nonumber \end{eqnarray*}

\begin{eqnarray*} &&\mathbb{E} [S_{N_k}^2 \mathbb{I}(\mathbf{S}_{\mathbf{N}}\le \mathbf{s})] \nonumber\\[8pt] &=& \sum_{\mathbf{n}\in (\mathbb{Z}^+)^K} p_{\mathbf{N}}(\mathbf{n}) \left( n_k(n_k-1) (\mathbb{E}[X_{k,1}])^2 \Pr\left(\widetilde{X_{k,1}}+\widetilde{X_{k,2}}+\sum_{j=3}^{n_k}X_{k,j} \right. \right. \nonumber \\[8pt] && \left. \left. \le s_k, \sum_{j=1}^{{n_m}}X_{m,j} \le s_m, m\in \mathcal{K}-\{k\}\right)\right. \nonumber\\[8pt] & & \quad \left. +n_k \mathbb{E}[X_{k,1}^2]\Pr\left(\widetilde{X_{k,1}}^{[2]}+\sum_{j=2}^{n_k}X_{k,j} \le s_k, \sum_{j=1}^{{n_m}}X_{m,j}\le s_m, m\in \mathcal{K}-\{k\}\right) \right) \nonumber\\[8pt] &=& \mathbb{E}[N_k^{(2)}] (\mathbb{E}[X_{k,1}])^2 \Pr\left(\widetilde{X_{k,1}}+\widetilde{X_{k,2}}+\sum_{j=1}^{\hat{N}_k^{[k^{[(2)]}]}-2}X_{k,j} \right. \nonumber \\[8pt] &&\left. \le s_k, \sum_{j=1}^{\hat{N}_m^{[k^{[(2)]}]}} X_{m,j}\le s_m, m\in \mathcal{K}-\{k\}\right) \nonumber\\[8pt] & & \quad + \mathbb{E}[N_k]\mathbb{E}[X_{k,1}^2]\Pr\left(\widetilde{X_{k,1}}^{[2]}+\sum_{j=1}^{\hat{N}_k^{[k]}-1} X_{k,j} \le s_k, \sum_{j=1}^{{\hat{N}_m}^{[k]}}X_{m,j}\le s_m, m\in \mathcal{K}-\{k\}\right). \nonumber \end{eqnarray*}

Dividing both sides of the above by

![]() $\mathbb{E}[S_{N_k}^2]$

and making use the definitions of

$\mathbb{E}[S_{N_k}^2]$

and making use the definitions of

![]() $\mathbf{L}^{[k]}$

and

$\mathbf{L}^{[k]}$

and

![]() $\mathbf{L}^{[k^{[2]}]}$

leads to (3.9).

$\mathbf{L}^{[k^{[2]}]}$

leads to (3.9).

Similarly, applying the law of total probability to Equations (3.4) and (3.6) respectively yields

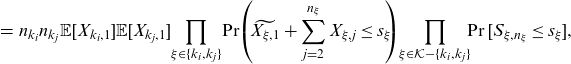

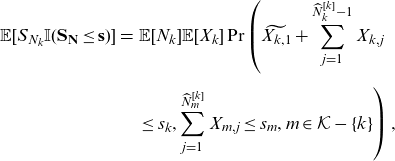

\begin{eqnarray} \mathbb{E}[S_{N_k} \mathbb{I}(\mathbf{S}_{\mathbf{N}}\le \mathbf{s})] &=& \mathbb{E}[N_k]\mathbb{E}[X_k] \Pr\left(\widetilde{X_{k,1}}+\sum_{j=1}^{\widehat{N}_k^{[k]}-1} X_{k,j} \right. \nonumber \\[5pt] && \left. \le s_k, \sum_{j=1}^{\widehat{N}_m^{[k]}}X_{m,j} \le s_m, m\in \mathcal{K}-\{k\} \right), \nonumber\\[5pt] \end{eqnarray}

\begin{eqnarray} \mathbb{E}[S_{N_k} \mathbb{I}(\mathbf{S}_{\mathbf{N}}\le \mathbf{s})] &=& \mathbb{E}[N_k]\mathbb{E}[X_k] \Pr\left(\widetilde{X_{k,1}}+\sum_{j=1}^{\widehat{N}_k^{[k]}-1} X_{k,j} \right. \nonumber \\[5pt] && \left. \le s_k, \sum_{j=1}^{\widehat{N}_m^{[k]}}X_{m,j} \le s_m, m\in \mathcal{K}-\{k\} \right), \nonumber\\[5pt] \end{eqnarray}

and

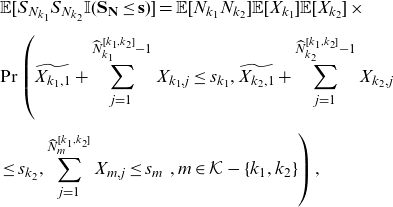

\begin{eqnarray} && \mathbb{E}[S_{N_{k_1}}S_{N_{k_2}} \mathbb{I}(\mathbf{S}_{\mathbf{N}}\le \mathbf{s})] = \mathbb{E}[N_{k_1}N_{k_2}]\mathbb{E}[X_{k_1}]\mathbb{E}[X_{k_2}] \times \nonumber\\[5pt] &&\Pr\left(\widetilde{X_{k_1,1}}+\sum_{j=1}^{\widehat{N}_{k_1}^{[k_1,k_2]}-1} X_{k_1,j}\le s_{k_1}, \widetilde{X_{k_2,1}}+\sum_{j=1}^{\widehat{N}_{k_2}^{[k_1,k_2]}-1} X_{k_2,j} \right. \nonumber \\[5pt] && \left. \le s_{k_2}, \sum_{j=1}^{\widehat{N}_{m}^{[k_1,k_2]}}X_{m,j}\le s_m \,\,, m\in \mathcal{K}-\{k_1,k_2\} \right),\nonumber\\[5pt] \end{eqnarray}

\begin{eqnarray} && \mathbb{E}[S_{N_{k_1}}S_{N_{k_2}} \mathbb{I}(\mathbf{S}_{\mathbf{N}}\le \mathbf{s})] = \mathbb{E}[N_{k_1}N_{k_2}]\mathbb{E}[X_{k_1}]\mathbb{E}[X_{k_2}] \times \nonumber\\[5pt] &&\Pr\left(\widetilde{X_{k_1,1}}+\sum_{j=1}^{\widehat{N}_{k_1}^{[k_1,k_2]}-1} X_{k_1,j}\le s_{k_1}, \widetilde{X_{k_2,1}}+\sum_{j=1}^{\widehat{N}_{k_2}^{[k_1,k_2]}-1} X_{k_2,j} \right. \nonumber \\[5pt] && \left. \le s_{k_2}, \sum_{j=1}^{\widehat{N}_{m}^{[k_1,k_2]}}X_{m,j}\le s_m \,\,, m\in \mathcal{K}-\{k_1,k_2\} \right),\nonumber\\[5pt] \end{eqnarray}

Remark 3.1. Theorem 3.1 generalizes Proposition 1 in Denuit (Reference Denuit2020) and Theorem 2 of Ren (Reference Ren2021), which gave formulas for the moment transforms of univariate compound distributions. In particular, with

![]() $K=1$

and denoting

$K=1$

and denoting

![]() $S_N=\sum_{i=1}^{N} X_i$

, Equation (3.9) becomes

$S_N=\sum_{i=1}^{N} X_i$

, Equation (3.9) becomes

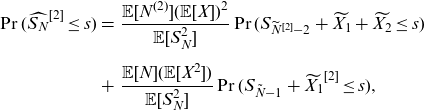

\begin{eqnarray} \Pr(\widehat{S_{N}}^{{[2]}}\le {s}) &=& \frac{\mathbb{E}[N^{(2)}](\mathbb{E}[X])^2}{\mathbb{E}[S_{N}^{2}]} \Pr(S_{\widetilde{N}^{[2]}-2} + \widetilde{X_{1}}+\widetilde{X_{2}} \le s)\nonumber\\[5pt] &+& \frac{\mathbb{E}[N](\mathbb{E}[X^2])}{\mathbb{E}[S_{N}^{2}]} \Pr(S_{\tilde{N}-1} + \widetilde{X_{1}}^{[2]}\le {s}), \end{eqnarray}

\begin{eqnarray} \Pr(\widehat{S_{N}}^{{[2]}}\le {s}) &=& \frac{\mathbb{E}[N^{(2)}](\mathbb{E}[X])^2}{\mathbb{E}[S_{N}^{2}]} \Pr(S_{\widetilde{N}^{[2]}-2} + \widetilde{X_{1}}+\widetilde{X_{2}} \le s)\nonumber\\[5pt] &+& \frac{\mathbb{E}[N](\mathbb{E}[X^2])}{\mathbb{E}[S_{N}^{2}]} \Pr(S_{\tilde{N}-1} + \widetilde{X_{1}}^{[2]}\le {s}), \end{eqnarray}

which is the result in Theorem 2 of Ren (Reference Ren2021).

Theorem 3.1 is different from Theorem 3 of Ren (Reference Ren2021), which is valid for cases with one dimensional claim frequency and multidimensional claim sizes.

Remark 3.2. Theorem 3.1 relates the moment transform of

![]() ${\mathbf{S}_{\mathbf{N}}}$

with those of

${\mathbf{S}_{\mathbf{N}}}$

with those of

![]() $\mathbf{N}$

and X. For example, Equation (3.9) shows that the distribution of

$\mathbf{N}$

and X. For example, Equation (3.9) shows that the distribution of

![]() $\widehat{\mathbf{S}_{\mathbf{N}}}^{[k^{[2]}]}$

is a mixture of

$\widehat{\mathbf{S}_{\mathbf{N}}}^{[k^{[2]}]}$

is a mixture of

and

Loosely speaking, to obtain

![]() $W_1$

, we first obtain

$W_1$

, we first obtain

![]() $\hat{\mathbf{N}}^{[k^{[(2)]}]}$

, which is the second factorial moment transform of

$\hat{\mathbf{N}}^{[k^{[(2)]}]}$

, which is the second factorial moment transform of

![]() $\mathbf{N}$

. Then, we replace two type k claims from

$\mathbf{N}$

. Then, we replace two type k claims from

![]() $\mathbf{S}_{\hat{\mathbf{N}}^{[k^{[(2)]}]}}$

with their independent moment transformed versions

$\mathbf{S}_{\hat{\mathbf{N}}^{[k^{[(2)]}]}}$

with their independent moment transformed versions

![]() $\widetilde{X_{k,1}}$

and

$\widetilde{X_{k,1}}$

and

![]() $\widetilde{X_{k,2}}$

. To obtain

$\widetilde{X_{k,2}}$

. To obtain

![]() $W_2$

, we replace a type k claim from

$W_2$

, we replace a type k claim from

![]() $\mathbf{S}_{\hat{\mathbf{N}}^{[k]}}$

with its independent second moment transformed version,

$\mathbf{S}_{\hat{\mathbf{N}}^{[k]}}$

with its independent second moment transformed version,

![]() $\widetilde{X_{k,1}}^{[2]}$

.

$\widetilde{X_{k,1}}^{[2]}$

.

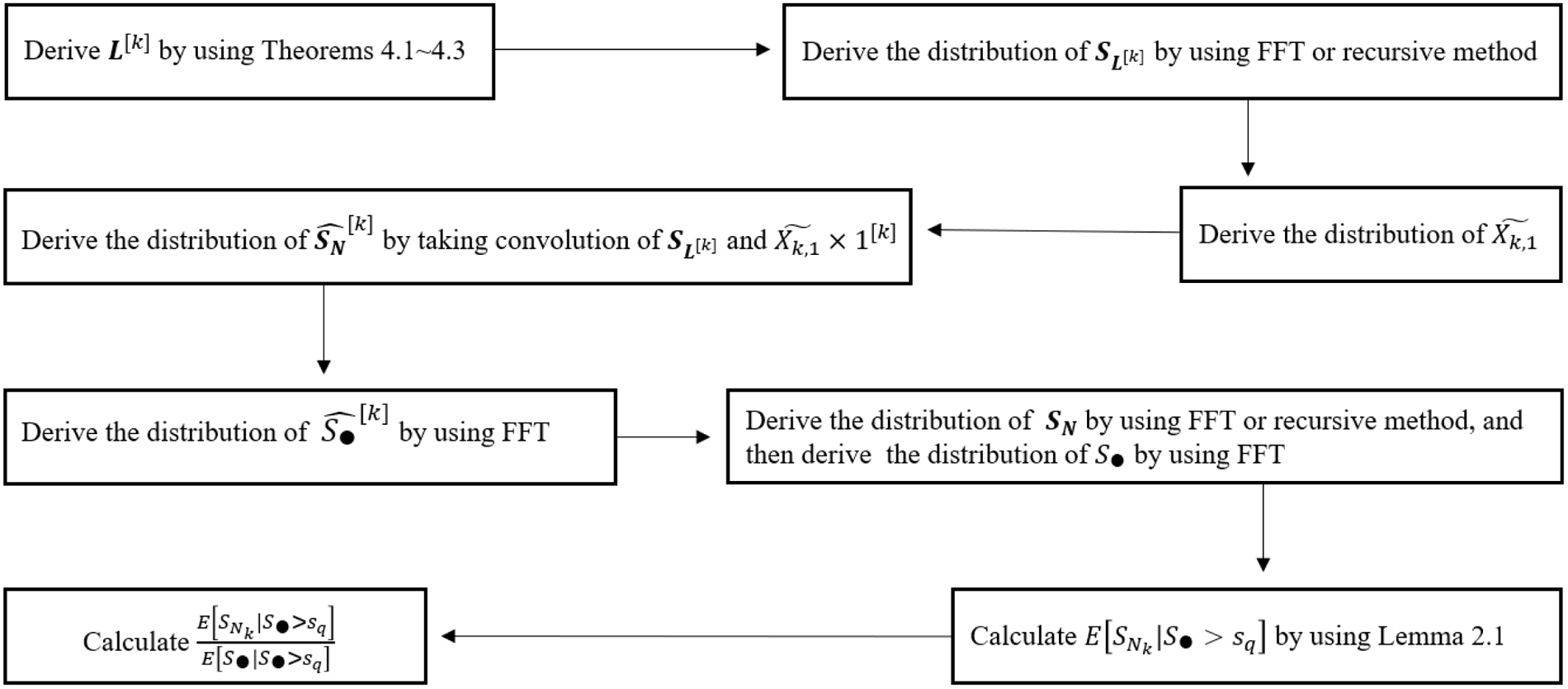

Remark 3.3. Theorem 3.1 provides an approach to calculate the distributions of the moment transformed

![]() $\mathbf{S}_{\mathbf{N}}$

. For example, to compute the distribution of

$\mathbf{S}_{\mathbf{N}}$

. For example, to compute the distribution of

![]() $\widehat{\mathbf{S}_{\mathbf{N}}}^{[k^{[2]}]}$

, we do the following.

$\widehat{\mathbf{S}_{\mathbf{N}}}^{[k^{[2]}]}$

, we do the following.

-

(i) Determine the distribution functions of

$\mathbf{L}^{[k]}$

and

$\mathbf{L}^{[k]}$

and

$\mathbf{L}^{[k^{[2]}]}$

. This is studied in great detail in Section 4 of this paper, where we show that for several commonly used models

$\mathbf{L}^{[k^{[2]}]}$

. This is studied in great detail in Section 4 of this paper, where we show that for several commonly used models

$\mathbf{N}$

studied in Hesselager (Reference Hesselager1996) and Kim et al. (Reference Kim, Jang and Pyun2019), the distribution of the

$\mathbf{N}$

studied in Hesselager (Reference Hesselager1996) and Kim et al. (Reference Kim, Jang and Pyun2019), the distribution of the

$\mathbf{L}$

’s are in fact mixture of some distributions in the same family as

$\mathbf{L}$

’s are in fact mixture of some distributions in the same family as

$\mathbf{N}$

and can be conveniently computed.

$\mathbf{N}$

and can be conveniently computed. -

(ii) Determine the distribution functions of

$\mathbf{S}_{\mathbf{L}^{[k^{[2]}]}}$

and

$\mathbf{S}_{\mathbf{L}^{[k^{[2]}]}}$

and

$\mathbf{S}_{\mathbf{L}^{[k]}}$

. This can be done by either (a) applying the recursive methods introduced in Hesselager (Reference Hesselager1996) and Kim et al. (Reference Kim, Jang and Pyun2019) or (b) applying the FFT method if the characteristic functions of

$\mathbf{S}_{\mathbf{L}^{[k]}}$

. This can be done by either (a) applying the recursive methods introduced in Hesselager (Reference Hesselager1996) and Kim et al. (Reference Kim, Jang and Pyun2019) or (b) applying the FFT method if the characteristic functions of

$\mathbf{N}$

(therefore

$\mathbf{N}$

(therefore

$\mathbf{L}$

’s) and

$\mathbf{L}$

’s) and

$\mathbf{X}$

are known. For details of the FFT method, see for example, Wang (Reference Wang1998) and Embrechts and Frei (Reference Embrechts and Frei2009).

$\mathbf{X}$

are known. For details of the FFT method, see for example, Wang (Reference Wang1998) and Embrechts and Frei (Reference Embrechts and Frei2009). -

(iii) Determine the distribution functions of

$W_1$

and

$W_1$

and

$W_2$

. Since the elements in

$W_2$

. Since the elements in

$W_1$

and

$W_1$

and

$W_2$

are independent, this can be done by applying direct (multivariate) convolution or FFT.

$W_2$

are independent, this can be done by applying direct (multivariate) convolution or FFT. -

(iv) Mixing the distribution functions of

$W_1$

and

$W_1$

and

$W_2$

using the weights in Equation (3.9).

$W_2$

using the weights in Equation (3.9).

With Theorem 3.1, the MTCE and MTCOV of

![]() $\mathbf{S}_{\mathbf{N}}$

, defined in (2.2) and (2.3) respectively, can be computed by applying items (i) and (ii) of Lemma 2.1. We summarize the results as follows.

$\mathbf{S}_{\mathbf{N}}$

, defined in (2.2) and (2.3) respectively, can be computed by applying items (i) and (ii) of Lemma 2.1. We summarize the results as follows.

\begin{equation} \mathbb{E} [S_{N_k} | \mathbf{S}_{\mathbf{N}} > \mathbf{s}_q] = \mathbb{E}[S_{N_k}] \frac{\Pr(\widehat{\mathbf{S}_{\mathbf{N}}}^{[k]}>\mathbf{s}_q)}{\Pr(\mathbf{\mathbf{S}_{\mathbf{N}}} >\mathbf{s}_q)}, \quad k \in \mathcal{K}\end{equation}

\begin{equation} \mathbb{E} [S_{N_k} | \mathbf{S}_{\mathbf{N}} > \mathbf{s}_q] = \mathbb{E}[S_{N_k}] \frac{\Pr(\widehat{\mathbf{S}_{\mathbf{N}}}^{[k]}>\mathbf{s}_q)}{\Pr(\mathbf{\mathbf{S}_{\mathbf{N}}} >\mathbf{s}_q)}, \quad k \in \mathcal{K}\end{equation}

\begin{equation} \mathbb{E} [S_{N_k}^{2} | \mathbf{S}_{\mathbf{N}} > \mathbf{s}_q] = \mathbb{E}[S_{N_k}^2] \frac{\Pr(\widehat{\mathbf{S}_{\mathbf{N}}}^{[k^{[2]}]}>\mathbf{s}_q)}{\Pr(\mathbf{\mathbf{S}_{\mathbf{N}}} >\mathbf{s}_q)}, \quad k \in \mathcal{K}\end{equation}

\begin{equation} \mathbb{E} [S_{N_k}^{2} | \mathbf{S}_{\mathbf{N}} > \mathbf{s}_q] = \mathbb{E}[S_{N_k}^2] \frac{\Pr(\widehat{\mathbf{S}_{\mathbf{N}}}^{[k^{[2]}]}>\mathbf{s}_q)}{\Pr(\mathbf{\mathbf{S}_{\mathbf{N}}} >\mathbf{s}_q)}, \quad k \in \mathcal{K}\end{equation}

and

\begin{equation} \mathbb{E} [S_{N_{k_1}} S_{N_{k_2}} | \mathbf{S}_{\mathbf{N}} > \mathbf{s}_q] = \mathbb{E}[S_{N_{k_1}} S_{N_{k_2}}] \frac{\Pr(\widehat{\mathbf{S}_{\mathbf{N}}}^{[k_1,k_2]}>\mathbf{s}_q)}{\Pr(\mathbf{\mathbf{S}_{\mathbf{N}}} >\mathbf{s}_q)}, \quad k_1, k_2 \in \mathcal{K}.\end{equation}

\begin{equation} \mathbb{E} [S_{N_{k_1}} S_{N_{k_2}} | \mathbf{S}_{\mathbf{N}} > \mathbf{s}_q] = \mathbb{E}[S_{N_{k_1}} S_{N_{k_2}}] \frac{\Pr(\widehat{\mathbf{S}_{\mathbf{N}}}^{[k_1,k_2]}>\mathbf{s}_q)}{\Pr(\mathbf{\mathbf{S}_{\mathbf{N}}} >\mathbf{s}_q)}, \quad k_1, k_2 \in \mathcal{K}.\end{equation}

To determine the quantities defined in (2.4) and (2.6) related to the capital allocation problem, we make use of item (iii) of Lemma 2.1. This yields

where

![]() $\widehat{S}_{\bullet}^{[k]} = \sum_{j=1}^K \widehat{S}_{N_j}^{[k]}$

, and

$\widehat{S}_{\bullet}^{[k]} = \sum_{j=1}^K \widehat{S}_{N_j}^{[k]}$

, and

![]() $\widehat{S}_{N_j}^{[k]}$

is the jth element of

$\widehat{S}_{N_j}^{[k]}$

is the jth element of

![]() $\widehat{\mathbf{S}_{\mathbf{N}}}^{[k]}$

, whose (joint) distribution can be computed using (3.7). Then, the probability

$\widehat{\mathbf{S}_{\mathbf{N}}}^{[k]}$

, whose (joint) distribution can be computed using (3.7). Then, the probability

![]() $\Pr[\widehat{S}_{\bullet}^{[k]} > s]$

can be calculated. For example, in the bivariate case, if the FFT method is used for

$\Pr[\widehat{S}_{\bullet}^{[k]} > s]$

can be calculated. For example, in the bivariate case, if the FFT method is used for

![]() $\widehat{\mathbf{S}_{\mathbf{N}}}^{[k]}$

, then the distribution of

$\widehat{\mathbf{S}_{\mathbf{N}}}^{[k]}$

, then the distribution of

![]() $\widehat{S}_{\bullet}^{[k]} $

can be obtained by the inverse fast Fourier transform of the diagonal terms of the array of the FFT of

$\widehat{S}_{\bullet}^{[k]} $

can be obtained by the inverse fast Fourier transform of the diagonal terms of the array of the FFT of

![]() $\widehat{\mathbf{S}_{\mathbf{N}}}^{[k]}$

.

$\widehat{\mathbf{S}_{\mathbf{N}}}^{[k]}$

.

In addition, for

![]() $k_1,k_2\in \mathcal{K}$

$k_1,k_2\in \mathcal{K}$

where

\begin{equation*}\widehat{S}_{\bullet}^{[k_1,k_2]}= \sum_{j=1}^K \widehat{S}_{N_j}^{[k_1,k_2]}.\end{equation*}

\begin{equation*}\widehat{S}_{\bullet}^{[k_1,k_2]}= \sum_{j=1}^K \widehat{S}_{N_j}^{[k_1,k_2]}.\end{equation*}

These quantities can be computed by applying Equations (3.9) and (3.11).

From Equations (3.15) to (3.19), it is seen that we essentially change the problem of computing tail moments to the problem of computing the tail probabilities of the moment transformed distributions. All the required quantities for computing the risk measures and performing the capital allocations can be determined if the moment transformed distributions of

![]() $\mathbf{S}_{\mathbf{N}}$

can be computed.

$\mathbf{S}_{\mathbf{N}}$

can be computed.

By Theorem 3.1, it is seen that the distribution functions of the moment transforms of

![]() $\mathbf{S}_{\mathbf{N}}$

rely on the distribution functions of

$\mathbf{S}_{\mathbf{N}}$

rely on the distribution functions of

![]() $\mathbf{L}^{[k]}$

,

$\mathbf{L}^{[k]}$

,

![]() $\mathbf{L}^{[k_1,k_2]}$

and

$\mathbf{L}^{[k_1,k_2]}$

and

![]() $\mathbf{L}^{[k^{[2]}]}$

. Therefore, in the next section, we derive formulas for determining them when

$\mathbf{L}^{[k^{[2]}]}$

. Therefore, in the next section, we derive formulas for determining them when

![]() $\mathbf{N}$

follows some commonly used multivariate discrete distributions, as introduced in Hesselager (Reference Hesselager1996) and Kim et al. (Reference Kim, Jang and Pyun2019).

$\mathbf{N}$

follows some commonly used multivariate discrete distributions, as introduced in Hesselager (Reference Hesselager1996) and Kim et al. (Reference Kim, Jang and Pyun2019).

4. Multivariate factorial moment transform of some commonly used discrete distributions

As discussed in Section 4.2 of Denuit and Robert (Reference Denuit and Robert2021), common mixture is a very flexible and useful method for constructing dependence models. It plays a fundamental role in the following derivations. Therefore, we first present a general result on moment transform of a random vector with common mixing variables.

Let

![]() $\mathbf{X}=(X_1,\cdots, X_K)$

. An external environment, described by a random variable

$\mathbf{X}=(X_1,\cdots, X_K)$

. An external environment, described by a random variable

![]() $\Lambda$

, affects each element of

$\Lambda$

, affects each element of

![]() $\mathbf{X}$

such that

$\mathbf{X}$

such that

![]() $\mathbf{X}\stackrel{d}{=} \mathbf{X}(\Lambda)$

and

$\mathbf{X}\stackrel{d}{=} \mathbf{X}(\Lambda)$

and

![]() $X_i \stackrel{d}{=} X_i(\Lambda)$

. Let

$X_i \stackrel{d}{=} X_i(\Lambda)$

. Let

![]() $\mathbf{X}(\lambda)$

be the random vector with the conditional distribution of

$\mathbf{X}(\lambda)$

be the random vector with the conditional distribution of

![]() $\mathbf{X}$

given

$\mathbf{X}$

given

![]() $\Lambda=\lambda$

, and then, we have the following result for the moment transform of

$\Lambda=\lambda$

, and then, we have the following result for the moment transform of

![]() $\mathbf{X}$

.

$\mathbf{X}$

.

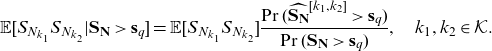

Proposition 4.1. The distribution function of the

![]() $(k_1, k_2)$

th component

$(k_1, k_2)$

th component

![]() $(\alpha_1, \alpha_2)$

th order moment transform of

$(\alpha_1, \alpha_2)$

th order moment transform of

![]() $\mathbf{X}=\mathbf{X}(\Lambda)$

is given by

$\mathbf{X}=\mathbf{X}(\Lambda)$

is given by

where

Proof.

\begin{eqnarray*} F_{\widehat{\mathbf{X}}^{[k_1^{[\alpha_1]},k_2^{[\alpha_2]}]}} (\mathbf{x}) &=& \frac{\mathbb{E}[X_{k_1}(\Lambda)^{\alpha_1}X_{k_2}(\Lambda)^{\alpha_2} \mathbb{I}(\mathbf{X}\le \mathbf{x})] }{\mathbb{E}[X_{k_1}(\Lambda)^{\alpha_1}X_{k_2}(\Lambda)^{\alpha_2}]}\nonumber\\[5pt] &=& \frac{\int \mathbb{E}[X_{k_1}(\lambda)^{\alpha_1}X_{k_2}^{\alpha_2}(\lambda) \mathbb{I}(\mathbf{X}(\lambda)\le \mathbf{x})] \, d F_{\Lambda} (\lambda)}{\mathbb{E}[X_{k_1}(\Lambda)^{\alpha_1}]\mathbb{E}[X_{k_2}(\Lambda)^{\alpha_2}]}\nonumber\\[5pt] &=& \frac{\int \mathbb{E}[X_{k_1}(\lambda)^{\alpha_1}X_{k_2}(\lambda)^{\alpha_2}] \mathbb{E}[\mathbb{I}({\widehat{\mathbf{X}(\lambda)}^{[k_1^{[\alpha_1]},k_2^{[\alpha_2]}]}} \le \mathbf{x})] \, d F_{\Lambda}(\lambda)}{\mathbb{E}[X_{k_1}(\Lambda)^{\alpha_1}]\mathbb{E}[X_{k_2}(\Lambda)^{\alpha_2}]}\nonumber\\[5pt] &=& \int \mathbb{E}[\mathbb{I}({\widehat{\mathbf{X}(\lambda)}^{[k_1^{[\alpha_1]},k_2^{[\alpha_2]}]}} \le \mathbf{x})] \, d F_{\Lambda^*} (\lambda)\nonumber\\[5pt] &=& \int F_{\widehat{\mathbf{X}(\lambda)}^{[k_1^{[\alpha_1]},k_2^{[\alpha_2]}]}} (\mathbf{x}) \, d F_{\Lambda^*} (\lambda). \end{eqnarray*}

\begin{eqnarray*} F_{\widehat{\mathbf{X}}^{[k_1^{[\alpha_1]},k_2^{[\alpha_2]}]}} (\mathbf{x}) &=& \frac{\mathbb{E}[X_{k_1}(\Lambda)^{\alpha_1}X_{k_2}(\Lambda)^{\alpha_2} \mathbb{I}(\mathbf{X}\le \mathbf{x})] }{\mathbb{E}[X_{k_1}(\Lambda)^{\alpha_1}X_{k_2}(\Lambda)^{\alpha_2}]}\nonumber\\[5pt] &=& \frac{\int \mathbb{E}[X_{k_1}(\lambda)^{\alpha_1}X_{k_2}^{\alpha_2}(\lambda) \mathbb{I}(\mathbf{X}(\lambda)\le \mathbf{x})] \, d F_{\Lambda} (\lambda)}{\mathbb{E}[X_{k_1}(\Lambda)^{\alpha_1}]\mathbb{E}[X_{k_2}(\Lambda)^{\alpha_2}]}\nonumber\\[5pt] &=& \frac{\int \mathbb{E}[X_{k_1}(\lambda)^{\alpha_1}X_{k_2}(\lambda)^{\alpha_2}] \mathbb{E}[\mathbb{I}({\widehat{\mathbf{X}(\lambda)}^{[k_1^{[\alpha_1]},k_2^{[\alpha_2]}]}} \le \mathbf{x})] \, d F_{\Lambda}(\lambda)}{\mathbb{E}[X_{k_1}(\Lambda)^{\alpha_1}]\mathbb{E}[X_{k_2}(\Lambda)^{\alpha_2}]}\nonumber\\[5pt] &=& \int \mathbb{E}[\mathbb{I}({\widehat{\mathbf{X}(\lambda)}^{[k_1^{[\alpha_1]},k_2^{[\alpha_2]}]}} \le \mathbf{x})] \, d F_{\Lambda^*} (\lambda)\nonumber\\[5pt] &=& \int F_{\widehat{\mathbf{X}(\lambda)}^{[k_1^{[\alpha_1]},k_2^{[\alpha_2]}]}} (\mathbf{x}) \, d F_{\Lambda^*} (\lambda). \end{eqnarray*}

This proposition is used in several settings in the sequel, where the moment transforms of several commonly used multivariate models for claim frequency are studied.

4.1. Multinomial – (a,b,0) mixture

This is model A of Hesselager (Reference Hesselager1996). Let M be a counting random variable whose probability mass function

![]() $p_M$

is in the (a,b,0) class with parameters a and b. That is,

$p_M$

is in the (a,b,0) class with parameters a and b. That is,

Assume that conditional on

![]() $M=m$

,

$M=m$

,

![]() $\mathbf{N}=(N_1, \cdots, N_K)$

follows a multinomial (MN) distribution with parameters

$\mathbf{N}=(N_1, \cdots, N_K)$

follows a multinomial (MN) distribution with parameters

![]() $(m,q_1, \cdots, q_K)$

. That is

$(m,q_1, \cdots, q_K)$

. That is

for non-negative

![]() $n_1,\cdots, n_K$

. It is understood that

$n_1,\cdots, n_K$

. It is understood that

![]() $\mathbf{N}=\mathbf{0}$

when

$\mathbf{N}=\mathbf{0}$

when

![]() $m=0$

.

$m=0$

.

As illustrated in Hesselager (Reference Hesselager1996), this model has a natural application in claim reserving where M is the total number of claims incurred in a fixed period and

![]() $n_1\sim n_K$

are the number of claims in different stages of settlement (reported, not-reported, paid, and so on).

$n_1\sim n_K$

are the number of claims in different stages of settlement (reported, not-reported, paid, and so on).

In the following, we refer to the unconditional distribution of

![]() $\mathbf{N}$

as the HMN distribution and write

$\mathbf{N}$

as the HMN distribution and write

![]() $\mathbf{N} \sim HMN(M, q_1, \cdots, q_K)$

. To obtain the moment transforms of

$\mathbf{N} \sim HMN(M, q_1, \cdots, q_K)$

. To obtain the moment transforms of

![]() $\mathbf{N}$

, we observe that M can be regarded as a common mixing variable for

$\mathbf{N}$

, we observe that M can be regarded as a common mixing variable for

![]() $(N_1, \cdots, N_K)$

. Thus, we write

$(N_1, \cdots, N_K)$

. Thus, we write

![]() $\mathbf{N} \stackrel{d}{=} \mathbf{N}(M) $

and let

$\mathbf{N} \stackrel{d}{=} \mathbf{N}(M) $

and let

![]() $\mathbf{N}(m)=(N_1(m), \cdots, N_K(m))$

denote the random vector with distribution

$\mathbf{N}(m)=(N_1(m), \cdots, N_K(m))$

denote the random vector with distribution

![]() $MN(m, q_1, \cdots, q_K)$

.

$MN(m, q_1, \cdots, q_K)$

.

By simple substitution, it is easy to verify that

for

$k\in \mathcal{K}$

and

$k\in \mathcal{K}$

and

$m\ge 1$

, (4.3)

$m\ge 1$

, (4.3) \begin{equation} \mathbf{L}^{[k]}(m) = \widehat{\mathbf{N}(m)}^{[k]}- \mathbf{1}^{[k]} \sim MN(m-1, q_1, \cdots, q_K), \end{equation}

\begin{equation} \mathbf{L}^{[k]}(m) = \widehat{\mathbf{N}(m)}^{[k]}- \mathbf{1}^{[k]} \sim MN(m-1, q_1, \cdots, q_K), \end{equation}

for

$k_1\neq k_2\in \mathcal{K}$

and

$k_1\neq k_2\in \mathcal{K}$

and

$m\ge 2$

(4.4)

$m\ge 2$

(4.4) \begin{equation} \mathbf{L}^{[k_1,k_2]}(m) = \widehat{\mathbf{N}(m)}^{[k_1,k_2]} - \mathbf{1}^{[k_1]} - \mathbf{1}^{[k_2]} \sim MN(m-2, q_1, \cdots, q_K),\end{equation}

\begin{equation} \mathbf{L}^{[k_1,k_2]}(m) = \widehat{\mathbf{N}(m)}^{[k_1,k_2]} - \mathbf{1}^{[k_1]} - \mathbf{1}^{[k_2]} \sim MN(m-2, q_1, \cdots, q_K),\end{equation}

for

$k\in \mathcal{K}$

and

$k\in \mathcal{K}$

and

$m\ge 2$

, (4.5)

$m\ge 2$

, (4.5) \begin{equation} \mathbf{L}^{[k^{[2]}]}(m) = \left(\widehat{\mathbf{N}(m)}^{[k^{[(2)]}]} - 2\times \mathbf{1}^{[k]} \right)\sim MN(m-2, q_1, \cdots, q_K)\end{equation}

\begin{equation} \mathbf{L}^{[k^{[2]}]}(m) = \left(\widehat{\mathbf{N}(m)}^{[k^{[(2)]}]} - 2\times \mathbf{1}^{[k]} \right)\sim MN(m-2, q_1, \cdots, q_K)\end{equation}

Combining Proposition 4.1 and the above three points yields the following results.

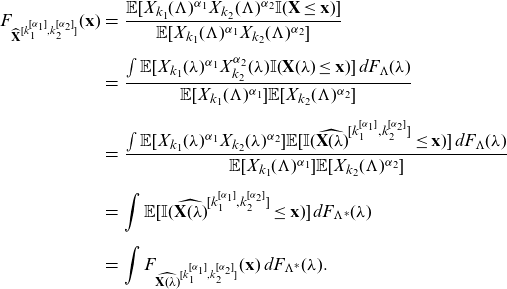

Theorem 4.1. Let

![]() $\mathbf{N}\sim HMN(M, q_1, \cdots, q_k)$

, then

$\mathbf{N}\sim HMN(M, q_1, \cdots, q_k)$

, then

Proof. By Proposition 4.1,

where

\begin{eqnarray*}p_{{M_{k}^*}}(m)&=&\frac{\mathbb{E}[N_k(m)]}{\mathbb{E}[N_k(M)]} p_{{M}}(m)\nonumber\\[5pt]&=&\frac{mq_k}{\mathbb{E}[M]q_k}p_{{M}}(m)\nonumber\\[5pt]&=& p_{\tilde{M}}(m).\end{eqnarray*}

\begin{eqnarray*}p_{{M_{k}^*}}(m)&=&\frac{\mathbb{E}[N_k(m)]}{\mathbb{E}[N_k(M)]} p_{{M}}(m)\nonumber\\[5pt]&=&\frac{mq_k}{\mathbb{E}[M]q_k}p_{{M}}(m)\nonumber\\[5pt]&=& p_{\tilde{M}}(m).\end{eqnarray*}

Therefore,

which by (4.3) indicates (4.6).

Similarly,

where

\begin{eqnarray*} p_{{M_{k_1,k_2}^*}}(m)&=&\frac{\mathbb{E}[N_{k_1}(m)N_{k_2}(m)]}{\mathbb{E}[N_{k_1}(M)N_{k_2}(M)]} p_{{M}}(m)\nonumber\\[5pt] &=&\frac{m(m-1)q_{k_1}q_{k_2}}{\mathbb{E}[M(M-1)]q_{k_1}q_{k_2}}p_{{M}}(m)\nonumber\\[5pt] &=& p_{\tilde{M}^{[(2)]}}(m),\end{eqnarray*}

\begin{eqnarray*} p_{{M_{k_1,k_2}^*}}(m)&=&\frac{\mathbb{E}[N_{k_1}(m)N_{k_2}(m)]}{\mathbb{E}[N_{k_1}(M)N_{k_2}(M)]} p_{{M}}(m)\nonumber\\[5pt] &=&\frac{m(m-1)q_{k_1}q_{k_2}}{\mathbb{E}[M(M-1)]q_{k_1}q_{k_2}}p_{{M}}(m)\nonumber\\[5pt] &=& p_{\tilde{M}^{[(2)]}}(m),\end{eqnarray*}

which leads to (4.7). In addition,

where

\begin{eqnarray*} p_{{M_{k^{[2]}}^*}}(m)&=&\frac{\mathbb{E}[N_{k}(m)(N_{k}(m)-1)]}{\mathbb{E}[N_{k}(M)(N_{k}(M)-1)]} p_{{M}}(m)\nonumber\\[5pt] &=&\frac{m(m-1)q_{k}^2}{\mathbb{E}[M(M-1)]q_{k}^2}p_{{M}}(m)\nonumber\\[5pt] &=& p_{\tilde{M}^{[(2)]}}(m),\end{eqnarray*}

\begin{eqnarray*} p_{{M_{k^{[2]}}^*}}(m)&=&\frac{\mathbb{E}[N_{k}(m)(N_{k}(m)-1)]}{\mathbb{E}[N_{k}(M)(N_{k}(M)-1)]} p_{{M}}(m)\nonumber\\[5pt] &=&\frac{m(m-1)q_{k}^2}{\mathbb{E}[M(M-1)]q_{k}^2}p_{{M}}(m)\nonumber\\[5pt] &=& p_{\tilde{M}^{[(2)]}}(m),\end{eqnarray*}

which leads to (4.8).

It was shown in Ren (Reference Ren2021) that if M is in (a,b,0) class with parameter (a,b), then

![]() $\tilde{M}-1$

is in the (a,b,0) class with parameter

$\tilde{M}-1$

is in the (a,b,0) class with parameter

![]() $(a,a+b)$

, and

$(a,a+b)$

, and

![]() $\tilde{M}^{[(2)]}-2$

is in the (a,b,0) class with parameter

$\tilde{M}^{[(2)]}-2$

is in the (a,b,0) class with parameter

![]() $(a,2a+b)$

. Therefore, the distributions of

$(a,2a+b)$

. Therefore, the distributions of

![]() $\mathbf{L}^{[k]}$

,

$\mathbf{L}^{[k]}$

,

![]() ${\mathbf{L}}^{[k^{[2]}]}$

,

${\mathbf{L}}^{[k^{[2]}]}$

,

![]() $\mathbf{L}^{[k_1,k_2]}$

, and the original

$\mathbf{L}^{[k_1,k_2]}$

, and the original

![]() $\mathbf{N}$

are all in the same HMN family of Binomial-(a,b,0) mixture distributions. Thus, all the nice properties discussed in Hesselager (Reference Hesselager1996) are preserved. A particular important fact is that the corresponding compound distributions of

$\mathbf{N}$

are all in the same HMN family of Binomial-(a,b,0) mixture distributions. Thus, all the nice properties discussed in Hesselager (Reference Hesselager1996) are preserved. A particular important fact is that the corresponding compound distributions of

![]() $\mathbf{S}_{\mathbf{L}^{[k]}}$

,

$\mathbf{S}_{\mathbf{L}^{[k]}}$

,

![]() $\mathbf{S}_{{\mathbf{L}}^{[k^{[2]}]}}$

,

$\mathbf{S}_{{\mathbf{L}}^{[k^{[2]}]}}$

,

![]() $\mathbf{S}_{\mathbf{L}^{[k_1,k_2]}}$

can be evaluated recursively by using Theorem 2.2 of Hesselager (Reference Hesselager1996). Alternatively, the characteristic functions of

$\mathbf{S}_{\mathbf{L}^{[k_1,k_2]}}$

can be evaluated recursively by using Theorem 2.2 of Hesselager (Reference Hesselager1996). Alternatively, the characteristic functions of

![]() $\mathbf{S}_{\mathbf{L}^{[k]}}$

,

$\mathbf{S}_{\mathbf{L}^{[k]}}$

,

![]() $\mathbf{S}_{{\mathbf{L}}^{[k^{[2]}]}}$

,

$\mathbf{S}_{{\mathbf{L}}^{[k^{[2]}]}}$

,

![]() $\mathbf{S}_{\mathbf{L}^{[k_1,k_2]}}$

can be found easily, and their distribution functions can be computed using the FFT method. Consequently, Theorem 3.1 can be applied to compute the distributions of

$\mathbf{S}_{\mathbf{L}^{[k_1,k_2]}}$

can be found easily, and their distribution functions can be computed using the FFT method. Consequently, Theorem 3.1 can be applied to compute the distributions of

![]() $\widehat{\mathbf{S}_{\mathbf{N}}}^{[k]}$

,

$\widehat{\mathbf{S}_{\mathbf{N}}}^{[k]}$

,

![]() $\widehat{\mathbf{S}_{\mathbf{N}}}^{[k^{[2]}]}$

and

$\widehat{\mathbf{S}_{\mathbf{N}}}^{[k^{[2]}]}$

and

![]() $\widehat{\mathbf{S}_{\mathbf{N}}}^{[k_1,k_2]}$

.

$\widehat{\mathbf{S}_{\mathbf{N}}}^{[k_1,k_2]}$

.

4.2. Additive common shock

For

![]() $k\in \{0\}\cup \mathcal{K}$

, let

$k\in \{0\}\cup \mathcal{K}$

, let

![]() $\{M_k\}$

be independent non-negative discrete random variables with distribution functions in the (a,b,0) class with parameters

$\{M_k\}$

be independent non-negative discrete random variables with distribution functions in the (a,b,0) class with parameters

![]() $\{(a_k,b_k)\}$

. For

$\{(a_k,b_k)\}$

. For

![]() $k\in \mathcal{K}$

, let

$k\in \mathcal{K}$

, let

![]() $N_k=M_0+M_k$

. We consider the vector

$N_k=M_0+M_k$

. We consider the vector

![]() $\mathbf{N}=(N_1,\cdots, N_K)$

.

$\mathbf{N}=(N_1,\cdots, N_K)$

.

The moment transforms of

![]() $\mathbf{N}$

could be derived by treating

$\mathbf{N}$

could be derived by treating

![]() $M_0$

as a common mixing random variable. However, we next take a seemingly more direct approach.

$M_0$

as a common mixing random variable. However, we next take a seemingly more direct approach.

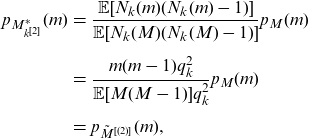

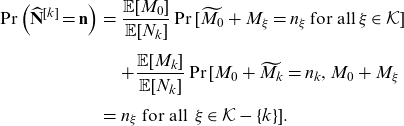

Theorem 4.2. The kth component first moment transform of

![]() $\mathbf{N}$

is given by

$\mathbf{N}$

is given by

\begin{eqnarray} \Pr\left(\widehat{\mathbf{N}}^{[k]}=\mathbf{n}\right)&=& \frac{\mathbb{E}[M_0]}{\mathbb{E}[N_k]} \Pr[\widetilde{M_0}+M_{\xi}=n_\xi \, \text{for all}\, \xi \in \mathcal{K} ]\nonumber\\[5pt] && +\frac{\mathbb{E}[M_k]}{\mathbb{E}[N_k]} \Pr[M_0+\widetilde{M_k}=n_k, M_0+M_\xi \nonumber \\[5pt] &=& n_\xi \text{ for all }\, \xi \in \mathcal{K} -\{k\}]. \end{eqnarray}

\begin{eqnarray} \Pr\left(\widehat{\mathbf{N}}^{[k]}=\mathbf{n}\right)&=& \frac{\mathbb{E}[M_0]}{\mathbb{E}[N_k]} \Pr[\widetilde{M_0}+M_{\xi}=n_\xi \, \text{for all}\, \xi \in \mathcal{K} ]\nonumber\\[5pt] && +\frac{\mathbb{E}[M_k]}{\mathbb{E}[N_k]} \Pr[M_0+\widetilde{M_k}=n_k, M_0+M_\xi \nonumber \\[5pt] &=& n_\xi \text{ for all }\, \xi \in \mathcal{K} -\{k\}]. \end{eqnarray}

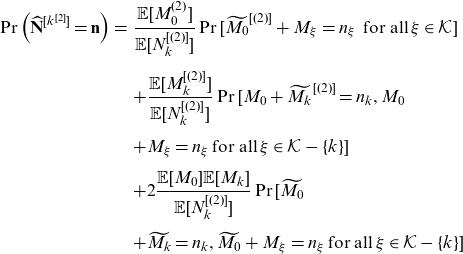

The kth component second factorial moment transform of

![]() $\mathbf{N}$

is given by

$\mathbf{N}$

is given by

\begin{eqnarray} \Pr\left(\widehat{\mathbf{N}}^{[k^{[2]}]} =\mathbf{n}\right)&=& \frac{\mathbb{E}[M_0^{(2)}]}{\mathbb{E}[N_k^{[(2)]}]} \Pr[\widetilde{M_0}^{[(2)]}+M_\xi=n_\xi \, \text{ for all}\, \xi \in \mathcal{K} ]\nonumber\\[5pt] &&+\frac{\mathbb{E}[M_k^{[(2)]}]}{\mathbb{E}[N_k^{[(2)]}]} \Pr[M_0+\widetilde{M_k}^{[(2)]}=n_k, M_0 \nonumber \\[5pt] && +M_\xi=n_\xi \, \text{for all}\,\xi \in \mathcal{K} -\{k\}]\nonumber\\[5pt] && +2\frac{\mathbb{E}[M_0]\mathbb{E}[M_k]}{\mathbb{E}[N_k^{[(2)]}]} \Pr[\widetilde{M_0} \nonumber \\[5pt] &&+\widetilde{M_k}=n_k, \widetilde{M_0}+M_\xi=n_\xi \, \text{for all}\,\xi \in \mathcal{K} -\{k\}] \end{eqnarray}

\begin{eqnarray} \Pr\left(\widehat{\mathbf{N}}^{[k^{[2]}]} =\mathbf{n}\right)&=& \frac{\mathbb{E}[M_0^{(2)}]}{\mathbb{E}[N_k^{[(2)]}]} \Pr[\widetilde{M_0}^{[(2)]}+M_\xi=n_\xi \, \text{ for all}\, \xi \in \mathcal{K} ]\nonumber\\[5pt] &&+\frac{\mathbb{E}[M_k^{[(2)]}]}{\mathbb{E}[N_k^{[(2)]}]} \Pr[M_0+\widetilde{M_k}^{[(2)]}=n_k, M_0 \nonumber \\[5pt] && +M_\xi=n_\xi \, \text{for all}\,\xi \in \mathcal{K} -\{k\}]\nonumber\\[5pt] && +2\frac{\mathbb{E}[M_0]\mathbb{E}[M_k]}{\mathbb{E}[N_k^{[(2)]}]} \Pr[\widetilde{M_0} \nonumber \\[5pt] &&+\widetilde{M_k}=n_k, \widetilde{M_0}+M_\xi=n_\xi \, \text{for all}\,\xi \in \mathcal{K} -\{k\}] \end{eqnarray}

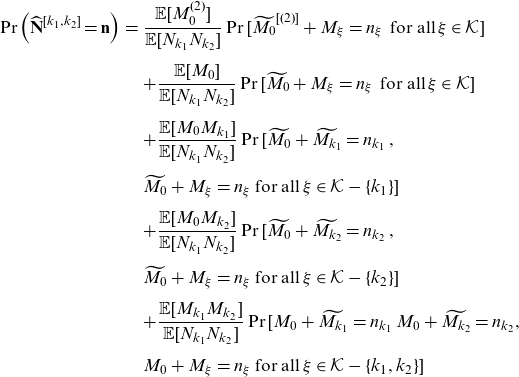

For

![]() $k_1\neq k_2 \in\mathcal{K}$

, the

$k_1\neq k_2 \in\mathcal{K}$

, the

![]() $(k_1,k_2)$

th component first-order moment transform of

$(k_1,k_2)$

th component first-order moment transform of

![]() $\mathbf{N}$

is given by

$\mathbf{N}$

is given by

\begin{eqnarray} \Pr\left(\widehat{\mathbf{N}}^{[k_1,k_2]}=\mathbf{n}\right)&=& \frac{\mathbb{E}[M_0^{(2)}]}{\mathbb{E}[N_{k_1}N_{k_2}]} \Pr[\widetilde{M_0}^{[(2)]}+M_\xi=n_\xi \, \text{ for all}\, \xi \in \mathcal{K} ]\nonumber\\[5pt] &&+ \frac{\mathbb{E}[M_0]}{\mathbb{E}[N_{k_1}N_{k_2}]} \Pr[\widetilde{M_0}+M_\xi=n_\xi \, \text{ for all}\, \xi \in \mathcal{K} ]\nonumber\\[5pt] &&+\frac{\mathbb{E}[M_0 M_{k_1}]}{\mathbb{E}[N_{k_1}N_{k_2}]} \Pr[\widetilde{M_0}+\widetilde{M_{k_1}}=n_{k_1} \,, \nonumber\\[5pt] &&\widetilde{M_0}+M_\xi = n_\xi \text{ for all}\, \xi \in \mathcal{K}-\{k_1\} ]\nonumber\\[5pt] &&+\frac{\mathbb{E}[M_0 M_{k_2}]}{\mathbb{E}[N_{k_1}N_{k_2}]} \Pr[\widetilde{M_0}+\widetilde{M_{k_2}}=n_{k_2} \,, \nonumber\\[5pt] && \widetilde{M_0}+M_\xi = n_\xi \text{ for all}\, \xi \in \mathcal{K}-\{k_2\} ]\nonumber\\[5pt] &&+\frac{\mathbb{E}[M_{k_1} M_{k_2}]}{\mathbb{E}[N_{k_1}N_{k_2}]} \Pr[{M_0}+\widetilde{M_{k_1}}=n_{k_1} \, {M_0}+\widetilde{M_{k_2}}=n_{k_2}, \nonumber\\[5pt] && {M_0}+M_\xi = n_\xi \text{ for all}\, \xi \in \mathcal{K}-\{k_1,k_2\}]\nonumber\\[5pt]\end{eqnarray}

\begin{eqnarray} \Pr\left(\widehat{\mathbf{N}}^{[k_1,k_2]}=\mathbf{n}\right)&=& \frac{\mathbb{E}[M_0^{(2)}]}{\mathbb{E}[N_{k_1}N_{k_2}]} \Pr[\widetilde{M_0}^{[(2)]}+M_\xi=n_\xi \, \text{ for all}\, \xi \in \mathcal{K} ]\nonumber\\[5pt] &&+ \frac{\mathbb{E}[M_0]}{\mathbb{E}[N_{k_1}N_{k_2}]} \Pr[\widetilde{M_0}+M_\xi=n_\xi \, \text{ for all}\, \xi \in \mathcal{K} ]\nonumber\\[5pt] &&+\frac{\mathbb{E}[M_0 M_{k_1}]}{\mathbb{E}[N_{k_1}N_{k_2}]} \Pr[\widetilde{M_0}+\widetilde{M_{k_1}}=n_{k_1} \,, \nonumber\\[5pt] &&\widetilde{M_0}+M_\xi = n_\xi \text{ for all}\, \xi \in \mathcal{K}-\{k_1\} ]\nonumber\\[5pt] &&+\frac{\mathbb{E}[M_0 M_{k_2}]}{\mathbb{E}[N_{k_1}N_{k_2}]} \Pr[\widetilde{M_0}+\widetilde{M_{k_2}}=n_{k_2} \,, \nonumber\\[5pt] && \widetilde{M_0}+M_\xi = n_\xi \text{ for all}\, \xi \in \mathcal{K}-\{k_2\} ]\nonumber\\[5pt] &&+\frac{\mathbb{E}[M_{k_1} M_{k_2}]}{\mathbb{E}[N_{k_1}N_{k_2}]} \Pr[{M_0}+\widetilde{M_{k_1}}=n_{k_1} \, {M_0}+\widetilde{M_{k_2}}=n_{k_2}, \nonumber\\[5pt] && {M_0}+M_\xi = n_\xi \text{ for all}\, \xi \in \mathcal{K}-\{k_1,k_2\}]\nonumber\\[5pt]\end{eqnarray}

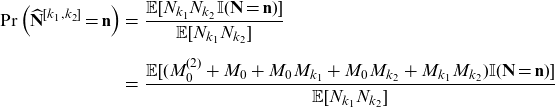

Proof. The proof of three statements is similar. We only prove (4.11) in the following.

\begin{eqnarray*} \Pr\left(\widehat{\mathbf{N}}^{[k_1,k_2]}=\mathbf{n}\right)&=&\frac{\mathbb{E}[N_{k_1}N_{k_2} \mathbb{I}(\mathbf{N}=\mathbf{n})]}{\mathbb{E}[N_{k_1}N_{k_2}]}\nonumber\\[5pt] &=&\frac{\mathbb{E}[(M_0^{(2)}+M_0+M_0M_{k_1}+M_0M_{k_2}+M_{k_1}M_{k_2}) \mathbb{I}(\mathbf{N}=\mathbf{n})]}{\mathbb{E}[N_{k_1}N_{k_2}]} \end{eqnarray*}

\begin{eqnarray*} \Pr\left(\widehat{\mathbf{N}}^{[k_1,k_2]}=\mathbf{n}\right)&=&\frac{\mathbb{E}[N_{k_1}N_{k_2} \mathbb{I}(\mathbf{N}=\mathbf{n})]}{\mathbb{E}[N_{k_1}N_{k_2}]}\nonumber\\[5pt] &=&\frac{\mathbb{E}[(M_0^{(2)}+M_0+M_0M_{k_1}+M_0M_{k_2}+M_{k_1}M_{k_2}) \mathbb{I}(\mathbf{N}=\mathbf{n})]}{\mathbb{E}[N_{k_1}N_{k_2}]} \end{eqnarray*}

Now, because

![]() $M_0, M_1, \cdots, M_K$

are mutually independent, we have for the first multiplication in the above

$M_0, M_1, \cdots, M_K$

are mutually independent, we have for the first multiplication in the above

which results in the first line of (4.11). Other terms can be obtained similarly.

Remark 4.1. Some insights can be gleaned from the results. Firstly, (4.9) indicates that the distribution of

![]() $\hat{\mathbf{N}}^{[k]}$

is a mixture of those of

$\hat{\mathbf{N}}^{[k]}$

is a mixture of those of

![]() $\mathbf{N}_A = \left(\widetilde{M_0}+M_1, \cdots, \widetilde{M_0}+M_k, \cdots, \widetilde{M_0}+M_K\right)$

and

$\mathbf{N}_A = \left(\widetilde{M_0}+M_1, \cdots, \widetilde{M_0}+M_k, \cdots, \widetilde{M_0}+M_K\right)$

and

![]() $\mathbf{N}_B = \left({M_0}+M_1, \cdots, {M_0}+\widetilde{M_k}, \cdots, {M_0}+M_K\right)$

.

$\mathbf{N}_B = \left({M_0}+M_1, \cdots, {M_0}+\widetilde{M_k}, \cdots, {M_0}+M_K\right)$

.

The Poisson case is especially interesting. Suppose that

![]() $M_\xi \sim \text{ Poisson } (\lambda_\xi)$

for

$M_\xi \sim \text{ Poisson } (\lambda_\xi)$

for

![]() $\xi \in \{0\}\cup \mathcal{K}$

. Then,

$\xi \in \{0\}\cup \mathcal{K}$

. Then,

![]() $\widetilde{M_\xi} \stackrel{d}{=} M_\xi+1$

for all

$\widetilde{M_\xi} \stackrel{d}{=} M_\xi+1$

for all

![]() $\xi$

. As a result, the distribution of

$\xi$

. As a result, the distribution of

![]() $\hat{\mathbf{N}}^{[k]}$

is a mixture of those of

$\hat{\mathbf{N}}^{[k]}$

is a mixture of those of

![]() $\mathbf{N}_A = \mathbf{N} + \mathbf{1}$

, where

$\mathbf{N}_A = \mathbf{N} + \mathbf{1}$

, where

![]() $\mathbf{1}$

is a K dimensional vector of ones, and

$\mathbf{1}$

is a K dimensional vector of ones, and

![]() $\mathbf{N}_B = \mathbf{N} + \mathbf{1}^{[k]}$

, with weights

$\mathbf{N}_B = \mathbf{N} + \mathbf{1}^{[k]}$

, with weights

![]() $\frac{\lambda_0}{(\lambda_0+\lambda_k)}$

and

$\frac{\lambda_0}{(\lambda_0+\lambda_k)}$

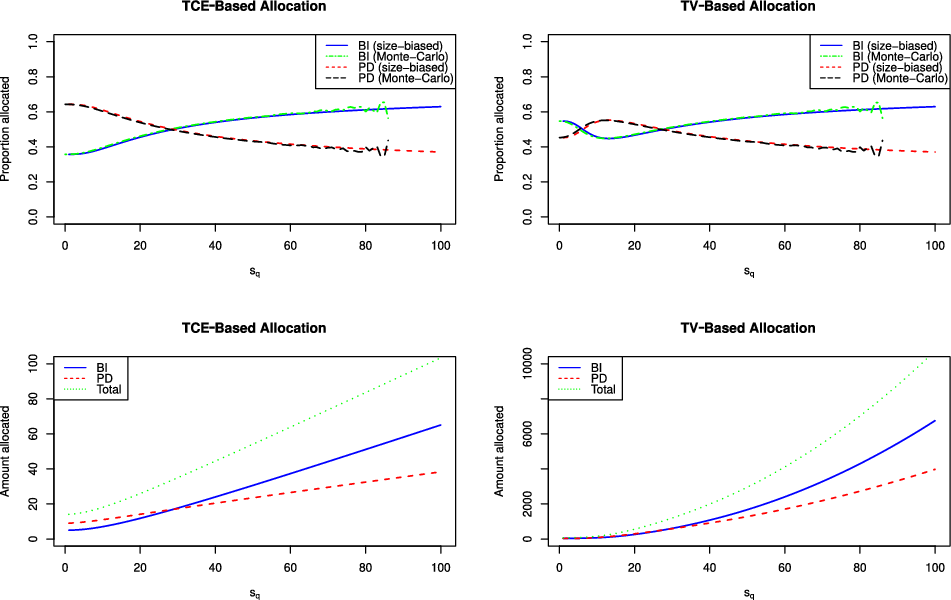

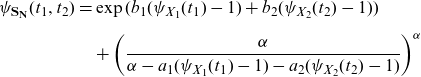

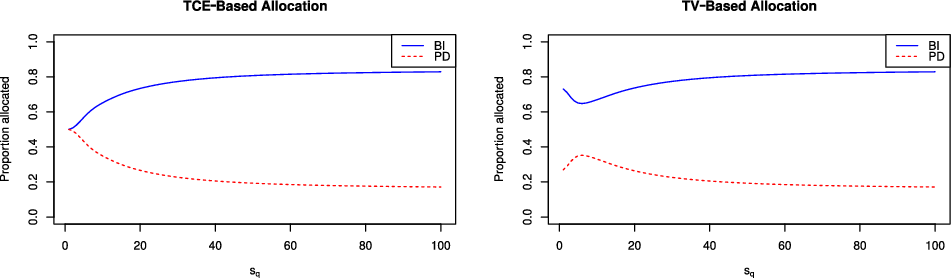

and