1. Introduction

Turbulence is a multiscale nonlinear dynamic phenomenon frequently observed in various flows in nature and industry. Certain deterministic dynamic features such as coherent structures have been found in turbulence (Hussain 1986); however, the behaviour of turbulence in most flows is chaotic. These characteristics make the accurate prediction of turbulence challenging despite the existence of governing equations for turbulence, the Navier–Stokes equations. With the qualitative and quantitative expansion of computing resources over the past decades, various numerical approaches have been proposed. Direct numerical simulation (DNS), a full-resolution approach that can provide the most detailed description, is restricted to low Reynolds numbers. Moreover, the spatial filtering strategy of large-eddy simulation (LES) and the temporal averaging approach of the Reynolds-averaged Navier–Stokes (RANS) model, which provide relatively fast solutions, lack reliable general closure models. Most importantly, these traditional numerical approaches are based on the temporal advancement of the governing partial differential equations, which is still costly in practice, even with ever-increasing computing power, because the small-scale motions, essential in turbulence evolution, need to be properly resolved.

Recently, machine learning (ML) and other data-driven approaches have become popular in many areas of science, engineering and technology owing to their effectiveness and efficiency in dealing with complex systems. Efforts have accordingly been made to apply ML to fluid dynamics, and to turbulence problems in particular (Brenner, Eldredge & Freund 2019; Duraisamy, Iaccarino & Xiao 2019; Brunton, Noack & Koumoutsakos 2020). Other studies have attempted to develop new closure models for turbulence models using ML, such as subgrid-scale models (Gamahara & Hattori 2017; Beck, Flad & Munz 2018; Maulik et al. 2018; Guan et al. 2021; Kim et al. 2022). Moreover, wall models for various flows in LES have been proposed (Yang et al. 2019; Zhou, He & Yang 2021; Bae & Koumoutsakos 2022; Dupuy, Odier & Lapeyre 2023; Lozano-Durán & Bae 2023; Vadrot et al. 2023), and improvements in closure models for RANS have been attempted (Duraisamy, Zhang & Singh 2015; Ling, Kurzawski & Templeton 2016; Parish & Duraisamy 2016; Singh, Medida & Duraisamy 2017; Wu, Xiao & Paterson 2018; Duraisamy et al. 2019; Zhu et al. 2019). Some attempts have yielded promising results; however, more exploration is required to secure reliable general closure models.

Another approach using ML to predict turbulence dynamics based on reduced-order modelling (ROM) has been proposed. With low-dimensional representations obtained through mathematical decomposition, such as proper orthogonal decomposition (Sirovich 1987), the Koopman operator (Mezić 2005, 2013) and dynamic mode decomposition (Schmid 2010), or using recent network architectures such as autoencoders (AEs) and convolutional neural networks (CNNs), the governing dynamics of latent space are trained using dynamic models, such as recurrent neural networks (RNNs). For example, Hennigh (2017) developed a model called Lat-Net based on the lattice Boltzmann method data by applying an AE structure. King et al. (2018) developed a compressed convolutional long short-term memory (LSTM) combining AE and LSTM and showed that dynamic approaches such as Lat-Net and their method are more effective in reflecting turbulence physics, compared with static approaches. Wang et al. (2018) and Mohan & Gaitonde (2018) efficiently predicted the coefficients of basis functions using LSTM after applying proper orthogonal decomposition to various flows. Mohan et al. (2019) extended the range of prediction to the forced homogeneous isotropic turbulence with two passive scalars. Srinivasan et al. (2019) confirmed the applicability of LSTM to wall-bounded near-wall turbulence using the nine-equation shear flow model. A recent study by Nakamura et al. (2021) successfully applied nonlinear mode decomposition to predict the minimal turbulent channel flow using a CNN-based AE. Although ROM-based methods are efficient and easy to analyse, system characteristics such as nonlinear transients and multiscale phenomena can be easily lost during information compression when only the dominant modes are used. However, as the complexity of ROM-based methods increases, models tend to capture most physical phenomena of turbulence. For example, as reported by Nakamura et al. (2021), approximately 1500 modes were found to be sufficient to fully reconstruct turbulence characteristics. The question then arises of how many modes need to be considered, and the number of modes required to properly represent turbulence might not be as small as intended.

The most basic and popular ML models are artificial neural networks (ANNs), also known as multilayer perceptrons, which determine nonlinear functional relationships between the input and output data and are relatively easy to train (Beck & Kurz 2021). However, when the input is in the form of a field, CNNs effectively capture embedded spatial patterns or correlations. In our previous work on the prediction of turbulent heat transfer (Kim & Lee 2020b), a CNN successfully reconstructed the wall heat-flux distribution based on wall shear stresses. However, CNN-based models whose objective function is to minimise the pointwise mean-squared difference between the predicted and target fields sometimes produce blurry outputs (Kim & Lee 2020a; Kim et al. 2021). Conversely, generative adversarial networks (GANs) (Goodfellow et al. 2014), in which a generator ($G$) and discriminator ($D$) network are trained simultaneously in an adversarial manner such that $G$ is trained to generate high-quality data whereas $D$ is trained to distinguish generated data from target data, can produce better output than CNNs (Deng et al. 2019; Kim & Lee 2020a; Kim et al. 2021; Kim, Kim & Lee 2023). Lee & You (2019) also showed that GANs are better in long-term prediction of unsteady flow over a circular cylinder. Ravuri et al. (2021) applied a deep generative model to precipitation nowcasting and reported a much higher accuracy with small-scale features than other ML models and numerical weather-prediction systems. GANs appear to capture the statistical characteristics of fields better than CNNs; we focus on this capability of GANs in this study.

First, we selected two-dimensional (2-D) decaying homogeneous isotropic turbulence (DHIT), which is essential in fields such as weather forecasting (Shi et al. 2015; Rüttgers et al. 2019; Liu & Lee 2020; Ravuri et al. 2021); it is relatively simple, so its prediction can be performed at a reasonable cost. In addition, 2-D turbulence was initially considered as a simplified version of three-dimensional (3-D) turbulence; however, it was studied extensively (Sommeria 1986; Brachet et al. 1988; McWilliams 1990; McWilliams, Weiss & Yavneh 1994; Jiménez, Moffatt & Vasco 1996) after its unique characteristics related to geophysical and astrophysical problems, such as strongly rotating stratified flow, were revealed (Alexakis & Doering 2006). The primary goal of this study was to develop a high-accuracy prediction model for 2-D DHIT, called PredictionNet, based on GANs, which produces the evolution of turbulence by reflecting spatiotemporal statistical characteristics. A successfully trained PredictionNet could predict 2-D turbulence more accurately in various aspects than a baseline CNN. Although proving why GANs are better in the prediction of turbulence statistics than CNNs is prohibitively hard, we performed various quantitative statistical analyses regarding the predictive accuracy depending on time and spatial scales to provide some clues on the working principle of the GAN model. By considering scale decomposition in the analysis of the behaviour of the latent variable, we discovered that the discriminator network of a GAN possesses a scale-selection capability, leading to the successful prediction of small-scale turbulence.

Second, flow control becomes feasible if accurate flow prediction is possible. The application of ML to turbulence control dates back to Lee et al. (1997), who used a neural network for turbulence control to reduce drag in a turbulent channel flow. Recently, various studies that applied ML to flow control for drag reduction in turbulent channel flow (Han & Huang 2020; Park & Choi 2020; Lee, Kim & Lee 2023), drag reduction of flow around a cylinder (Rabault et al. 2019; Rabault & Kuhnle 2019; Tang et al. 2020) and object control (Colabrese et al. 2017; Verma, Novati & Koumoutsakos 2018) have been conducted, yielding successful control results. However, in this study, for a fundamental understanding of the control mechanism in 2-D turbulence, we considered determining the optimum disturbance field, which can modify the flow in the direction of optimising the specified objective function. Thus, we combined PredictionNet and ControlNet for specific purposes. A target where the flow control can be used meaningfully, such as maximising the propagation of the control effect of the time-evolved flow field, was set, and the results were analysed and compared with the results of similar studies (Jiménez 2018; Yeh, Meena & Taira 2021).

Following this introduction, § 2 describes the process of collecting datasets to be used for training and testing. In § 3, ML methodologies such as objective functions and network architectures are explained. The prediction and control results are subdivided and analysed qualitatively and quantitatively in § 4, and a conclusion is drawn in § 5.

2. Data collection

For decaying 2-D turbulence, which is our target for prediction and control, DNS was performed by solving the incompressible Navier–Stokes equations in the form of the vorticity transport equation without external forcing:

$$\frac{\partial \omega}{\partial t} + u_j \frac{\partial \omega}{\partial x_j} = \nu \frac{\partial^2 \omega}{\partial x_j \partial x_j}, \tag{2.1}$$

with

$$\frac{\partial^2 \psi}{\partial x_j \partial x_j} = -\omega, \tag{2.2}$$

where $\omega (x_1,x_2,t)$ is the vorticity field with $x_1=x$ and $x_2=y$ and $\nu$ is the kinematic viscosity. Here $\psi$ denotes the stream function that satisfies $u_1=u=\partial {\psi }/ \partial {y}$ and $u_2=v=-\partial {\psi }/\partial {x}$. A pseudo-spectral method with 3/2 zero padding was adopted for spatial discretisation. The computational domain, to which the biperiodic boundary condition was applied, was a square box of $[0,2{\rm \pi} )^2$, and the number of spatial grid points, $N_x \times N_y$, was $128\times 128$. The Crank–Nicolson method for the viscous term and the second-order Adams–Bashforth method for the convective term were used for temporal advancement. In Appendix A, it is proven that the pseudo-spectral approximation to (2.1) and (2.2) in a biperiodic domain is equivalent to the pseudo-spectral approximation to the rotational form of the 2-D Navier–Stokes equations. For the training, validation and testing of the developed prediction network, 500, 100 and 50 independent simulations with random initialisations were performed, respectively.
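As an illustration of how the stream function relation can be evaluated in a biperiodic domain, the following minimal sketch (in Python with NumPy; it is not the solver used for the DNS) inverts $\nabla ^2\psi =-\omega$, which follows from the definitions of $u$ and $v$ above, in Fourier space and then recovers the velocity from $u=\partial \psi /\partial y$ and $v=-\partial \psi /\partial x$. The function name `velocity_from_vorticity`, the `[y, x]` array layout and the omission of dealiasing are assumptions made for brevity.

```python
import numpy as np

def velocity_from_vorticity(omega):
    """Recover (u, v) from vorticity on a [0, 2*pi)^2 biperiodic grid.

    Solves laplacian(psi) = -omega in Fourier space, then uses
    u = d(psi)/dy and v = -d(psi)/dx. Arrays are indexed as [y, x].
    """
    ny, nx = omega.shape
    # Integer wavenumbers for a domain of length 2*pi.
    kx = np.fft.fftfreq(nx, d=1.0 / nx)
    ky = np.fft.fftfreq(ny, d=1.0 / ny)
    KX, KY = np.meshgrid(kx, ky)
    k2 = KX**2 + KY**2
    k2[0, 0] = 1.0                       # avoid 0/0; the mean mode is zeroed below

    omega_hat = np.fft.fft2(omega)
    psi_hat = omega_hat / k2             # from -k^2 * psi_hat = -omega_hat
    psi_hat[0, 0] = 0.0                  # psi is defined up to a constant

    u = np.real(np.fft.ifft2(1j * KY * psi_hat))
    v = np.real(np.fft.ifft2(-1j * KX * psi_hat))
    return u, v
```

For $\psi =\sin x\sin y$, that is $\omega =2\sin x\sin y$, the sketch returns $u=\sin x\cos y$ and $v=-\cos x\sin y$ to machine precision on a $128\times 128$ grid.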

Training data were collected at discrete times, $t_i (=t_0+i \delta t,\ i=0,1,2, \ldots, 100)$ and $t_i+T$ ($T$ is the target lead time for prediction), where $\delta t (=20 \Delta t)$ is the data time step, and $\Delta t$ denotes the simulation time step. We selected $t_0$ such that the initial transient behaviour due to the prescribed initial condition in the simulation had disappeared sufficiently; thus, the power-law spectrum of enstrophy ($\varOmega (k) \propto k^{-1}$ where $k$ is the wavenumber; Brachet et al. 1988) was maintained. During this period, the root-mean-square (r.m.s.) vorticity magnitude $\omega '$ and the average dissipation rate $\varepsilon$ decay in the form $\sim t^{-0.56}$ and $\sim t^{-1.12}$, respectively, as shown in figure 1(a), whereas the Taylor length scale ($\lambda =u'/\omega '$ where $u'$ is the r.m.s. velocity magnitude; Jiménez 2018) and the Reynolds number based on $\lambda$ ($Re_\lambda = u' \lambda /\nu$), which are ensemble-averaged over 500 independent simulations, linearly increase as shown in figure 1(b). Therefore, 50 000 pairs of flow field snapshots were used in training, and for sufficient iterations of training without overfitting, data augmentation using a random phase shift at each iteration was adopted. Hereafter, the reference time and length scales in all non-dimensionalisations are $t^*=1/\omega '_0$ and $\lambda ^* =u'_0/\omega '_0$, respectively. The non-dimensionalised simulation and data time steps are $\Delta t /t^*= 0.00614$ and $\delta t/t^* = 0.123$, respectively. Here $t_{100}-t_0$ $(=100\delta t)$ is approximately 2.7 times the Eulerian integral timescale of the vorticity field ($36.9\delta t$), as discussed in the following. Therefore, the vorticity fields at $t_0$ and $t_{100}$ are decorrelated as shown in figure 1(c,d). In our training, all of these data spanning from $t_0$ to $t_{100}$ were used without distinction such that the trained network covers diverse characteristics of decaying turbulence.
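The augmentation step can be sketched as follows, assuming that the random phase shift amounts to a translation of the biperiodic field by a whole number of grid points (a Fourier-space shift by a non-integer distance would be the more general case; the text does not specify which was used). The function name `random_periodic_shift` and the random stand-in fields are hypothetical. The key point is that the input and its target receive the same shift, so the pair remains a valid sample of the dynamics.

```python
import numpy as np

def random_periodic_shift(x_field, y_field, rng):
    """Apply one random circular shift to an (input, target) pair.

    Both fields are shifted by the same number of grid points in each
    direction, which is admissible because the domain is biperiodic.
    """
    ny, nx = x_field.shape
    shift = (int(rng.integers(0, ny)), int(rng.integers(0, nx)))
    return (np.roll(x_field, shift, axis=(0, 1)),
            np.roll(y_field, shift, axis=(0, 1)))

rng = np.random.default_rng(0)
omega_t = rng.standard_normal((128, 128))     # stand-in for omega(t)
omega_tT = rng.standard_normal((128, 128))    # stand-in for omega(t + T)
x_aug, y_aug = random_periodic_shift(omega_t, omega_tT, rng)
```

Drawing a new shift at every training iteration presents each of the snapshot pairs to the network at a different position in the domain without changing any of its statistics.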

Figure 1. Range of selected data for training with a time interval of $100\delta t/t^*$ shown in the yellow region in (a) the vorticity r.m.s. and dissipation rate and in (b) Reynolds number and the Taylor length scale. Example vorticity fields at (c) $t_0$ and (d) $t_{100}$ from normalised test data.

To select the target lead time $T$, we investigate the temporal autocorrelation function of the vorticity field, $\rho (s) (= \langle \omega (t)\omega (t+s) \rangle / \langle \omega (t)^2 \rangle ^{1/2} \langle \omega (t+s)^2 \rangle ^{1/2})$ for time lag $s$, as shown in figure 2(a), from which the integral time scale is obtained, $T_L = 4.53 t^* = 36.9 \delta t$. As it is meaningless to predict the flow field much later than one integral time scale, we selected four lead times to develop a prediction network: $10\delta t, 20 \delta t, 40 \delta t$ and $80 \delta t$, which are referred to as $0.25 T_L, 0.5T_L, T_L$ and $2T_L$, respectively, even though $T_L = 36.9 \delta t$. Figure 2(b) shows the correlation function for the scale-decomposed field of vorticity, where three decomposed fields are considered: a large-scale field consisting of the wavenumber components for $k \leq 4$, representing the energy (enstrophy)-containing range; an intermediate-scale field containing the inertial-range wavenumber components for $4< k \leq 20$; and the small-scale field corresponding to the wavenumber components for $k>20$ in the dissipation range. This clearly illustrates that the large-scale field persists longer than the total field with an integral time scale $T^L_L \simeq 1.4 T_L$, whereas the intermediate- and small-scale fields quickly decorrelate with $T_L^I \simeq 0.25 T_L$ and $T_L^S \simeq 0.09 T_L$. These behaviours are responsible for the different prediction capabilities of each scale component, as discussed later.
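The scale decomposition and the correlation coefficient defined above can be sketched as follows. This is a minimal illustration, assuming sharp spectral cut-offs on the wavenumber magnitude and a spatial average in place of $\langle \cdot \rangle$; the function names `band_filter`, `decompose` and `correlation` are hypothetical, and the actual filter used for figure 2(b) may differ.

```python
import numpy as np

def band_filter(field, k_lo, k_hi):
    """Keep Fourier modes with k_lo < |k| <= k_hi on a 2*pi-periodic grid."""
    ny, nx = field.shape
    kx = np.fft.fftfreq(nx, d=1.0 / nx)
    ky = np.fft.fftfreq(ny, d=1.0 / ny)
    KX, KY = np.meshgrid(kx, ky)
    k = np.sqrt(KX**2 + KY**2)
    mask = (k > k_lo) & (k <= k_hi)
    return np.real(np.fft.ifft2(np.fft.fft2(field) * mask))

def decompose(field):
    """Split a field into large (k <= 4), intermediate (4 < k <= 20) and small (k > 20) scales."""
    large = band_filter(field, -1.0, 4.0)     # lower bound -1 so that k = 0 is kept
    inter = band_filter(field, 4.0, 20.0)
    small = band_filter(field, 20.0, np.inf)
    return large, inter, small

def correlation(a, b):
    """<a b> / (<a^2>^(1/2) <b^2>^(1/2)), with <.> taken as the average over the grid."""
    return float(np.mean(a * b) / np.sqrt(np.mean(a**2) * np.mean(b**2)))
```

Evaluating `correlation` between the corresponding bands of $\omega (t)$ and $\omega (t+s)$ for a range of lags $s$, and averaging over realisations, gives scale-wise curves of the kind described for figure 2(b). Because the three bands do not overlap in wavenumber space, they sum to the original field and are mutually uncorrelated.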

Figure 2. Distribution of the temporal autocorrelation function of (a) the whole vorticity field and (b) the scale-decomposed vorticity fields.

The spatial two-point correlation function of vorticity $R_{\omega }(r,t)$ with the corresponding integral length scale $L_t$ at three different times $t=t_0, t_0+T_L$ and $t_0+2T_L$ is shown in figure 3. For the investigated time range, $R_{\omega }(r,t)$ decays sufficiently close to zero at $r={\rm \pi}$ (half domain length), even though $L_t$ tends to increase over time from $0.876\lambda ^*$ at $t_0$ to $1.03\lambda ^*$ at $t_{100}$ because of the suppression of the small-scale motions of 2-D turbulence. This marginally decaying nature in the periodic domain was considered in the design of the training network such that data at all grid points were used in the prediction of vorticity at one point, as discussed in the following section.
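A two-point correlation of this kind can be estimated from a single snapshot as sketched below. The sketch assumes separations taken along $x_1$ only and averaged over $x_2$, uses the Wiener–Khinchin relation for the periodic autocorrelation, and integrates $R_\omega$ over $0\le r\le {\rm \pi}$ to obtain a length scale; the direction, the integration range and the function names are assumptions, since the text does not state how $R_\omega$ and $L_t$ were evaluated.

```python
import numpy as np

def two_point_correlation_x(omega):
    """Normalised two-point correlation R(r) along x, averaged over y.

    Uses the Wiener-Khinchin relation: the circular autocorrelation is
    the inverse FFT of the power spectrum. Arrays are indexed as [y, x].
    """
    nx = omega.shape[1]
    w = omega - omega.mean()
    w_hat = np.fft.fft(w, axis=1)
    acf = np.real(np.fft.ifft(np.abs(w_hat) ** 2, axis=1)).mean(axis=0) / nx
    r = 2 * np.pi * np.arange(nx // 2 + 1) / nx    # separations from 0 to pi
    return r, acf[: nx // 2 + 1] / acf[0]

def integral_length_scale(r, R):
    """Trapezoidal integral of R(r) over the sampled separations."""
    return float(np.sum(0.5 * (R[1:] + R[:-1]) * np.diff(r)))
```

As a check, a field $\omega =\cos x$ gives $R(r)=\cos r$, whose integral over $[0,{\rm \pi} ]$ vanishes. For the ensemble-averaged curves, the same estimate would be averaged over all realisations at a given time.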

Figure 3. Ensemble-averaged two-point correlation functions of vorticity extracted from 500 training data.

3. ML methodology

3.1. ML models and objective functions

Training ANNs is the process of updating weight parameters to satisfy the nonlinear relationships between the inputs and outputs as closely as possible. The weight parameters are optimised to minimise the prescribed loss function (in the direction opposite to the gradient) by reflecting nonlinear mappings. Loss functions are mainly determined by objective functions, and other loss terms are often added to improve training efficiency and model performance. In GANs, a generator ($G$) and discriminator ($D$) are trained simultaneously in an adversarial manner; parameters of $G$ and $D$ are iteratively and alternately updated to minimise $\log (1-D(G(\boldsymbol {z})))$ for $G$ and maximise $\log (D(\boldsymbol {x}))+\log (1-D(G(\boldsymbol {z})))$ for $D$, respectively. This corresponds to a two-player min–max game with a value function $V(G,D)$ given by

$$\min_G \max_D V(G,D) = \mathbb {E}_{\boldsymbol {x}\sim p(\boldsymbol {x})}[\log D(\boldsymbol {x})] + \mathbb {E}_{\boldsymbol {z}\sim p(\boldsymbol {z})}[\log (1-D(G(\boldsymbol {z})))], \tag{3.1}$$

where $\boldsymbol {x}$ and $\boldsymbol {z}$ are real data and random noise vectors, respectively. Operator $\mathbb {E}$ denotes the expectation over some sampled data, and the expressions $\boldsymbol {x}\sim p(\boldsymbol {x})$ and $\boldsymbol {z}\sim p(\boldsymbol {z})$ indicate that $\boldsymbol {x}$ is sampled from the distribution of the real dataset $p(\boldsymbol {x})$ and $\boldsymbol {z}$ from some simple noise distribution $p(\boldsymbol {z})$ such as a Gaussian distribution, respectively. Thus, we can obtain a generator that produces more realistic images. Various GANs have been developed rapidly since their introduction (Mirza & Osindero 2014; Arjovsky, Chintala & Bottou 2017; Gulrajani et al. 2017; Karras et al. 2017; Mescheder, Geiger & Nowozin 2018; Park et al. 2019; Zhu et al. 2020). Among these, a conditional GAN (cGAN) (Mirza & Osindero 2014) provides additional information $\boldsymbol {y}$, which can be any type of auxiliary information, as a condition for the input of the generator and discriminator to improve the output quality of the generator, as follows:

$$\min_G \max_D V(G,D) = \mathbb {E}_{\boldsymbol {x}\sim p(\boldsymbol {x})}[\log D(\boldsymbol {x}|\boldsymbol {y})] + \mathbb {E}_{\boldsymbol {z}\sim p(\boldsymbol {z})}[\log (1-D(G(\boldsymbol {z}|\boldsymbol {y})))]. \tag{3.2}$$
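To make the roles of the two players concrete, the sketch below gives a Monte Carlo estimate of the unconditional value function $V(G,D)=\mathbb {E}[\log D(\boldsymbol {x})]+\mathbb {E}[\log (1-D(G(\boldsymbol {z})))]$ from arrays of discriminator outputs. It is an illustration only: the function name `gan_value` and the clipping constant `eps` are assumptions, and no network is trained here.

```python
import numpy as np

def gan_value(d_real, d_fake, eps=1e-12):
    """Monte Carlo estimate of V(G, D) = E[log D(x)] + E[log(1 - D(G(z)))].

    d_real: discriminator outputs D(x) on real samples, values in (0, 1)
    d_fake: discriminator outputs D(G(z)) on generated samples, values in (0, 1)
    """
    d_real = np.clip(d_real, eps, 1.0 - eps)   # guard against log(0)
    d_fake = np.clip(d_fake, eps, 1.0 - eps)
    return float(np.mean(np.log(d_real)) + np.mean(np.log(1.0 - d_fake)))

# A discriminator that cannot tell real from generated data outputs 0.5 everywhere.
v_confused = gan_value(np.full(8, 0.5), np.full(8, 0.5))
# A confident, correct discriminator pushes V towards its upper bound of 0.
v_sharp = gan_value(np.full(8, 0.99), np.full(8, 0.01))
```

The discriminator is updated to raise this value, whereas the generator is updated to lower it through the second term. When the generator matches the data distribution so that $D=1/2$ everywhere, the value equals $-\log 4$.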

Furthermore, there are two adaptive methods that can stabilise the training process, solve the problem of the vanishing gradient in which the discriminator is saturated, and prevent mode collapse, a phenomenon in which the distribution of generated samples is restricted to a specific small domain, even though the generator does not diverge. First, (3.2) is modified using the earth-mover (EM; or Wasserstein-1) distance combined with the Kantorovich–Rubinstein (KR) duality (Villani 2009), which is called Wasserstein GAN (WGAN) (Arjovsky et al. 2017), as follows:

$$\min_G \max_D V(G,D) = \mathbb {E}_{\boldsymbol {x}\sim p(\boldsymbol {x})}[D(\boldsymbol {x}|\boldsymbol {y})] - \mathbb {E}_{\boldsymbol {z}\sim p(\boldsymbol {z})}[D(G(\boldsymbol {z}|\boldsymbol {y}))]. \tag{3.3}$$

This modification is made based on thorough examinations of various ways of measuring the distance between the real (data) distribution ($p_r$) and the model distribution ($p_g$), including the total variation distance, Kullback–Leibler divergence and Jensen–Shannon divergence. The EM distance can be expressed as the final form of (3.4) by the KR duality:

$$W(p_r,p_g) = \inf_{\gamma \in \varPi (p_r,p_g)} \mathbb {E}_{(\boldsymbol {x},\boldsymbol {x}')\sim \gamma }[\| \boldsymbol {x}-\boldsymbol {x}' \|] = \sup_{\| f \|_L \leq 1} \mathbb {E}_{\boldsymbol {x}\sim p_r}[f(\boldsymbol {x})] - \mathbb {E}_{\boldsymbol {x}\sim p_g}[f(\boldsymbol {x})], \tag{3.4}$$

where the supremum is taken over all the 1-Lipschitz functions for the set of real data $\mathcal {X}$, $f : \mathcal {X} \to \mathbb {R}$. Simply put, the model $g$ that wants to make $p_g$ close to $p_r$ represents the generator, and $f$ corresponds to the discriminator that is optimised to make the distance between $p_r$ and $p_g$ larger. Thus, (3.4) reduces to the form of (3.3), and the discriminator output can be viewed as latent codes of real and generated data. Then, a gradient penalty (GP) loss term is added to obtain the final form of WGAN-GP (Gulrajani et al. 2017). We intend to develop a model capable of predicting the dynamic behaviour of turbulence with high predictive accuracy by reflecting statistical aspects, employing a cGAN with WGAN-GP for PredictionNet and comparing the results with a baseline CNN.
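The KR duality and the role of the gradient penalty can be checked on a one-dimensional toy problem. The sketch below is an illustration under stated assumptions, not the training code of PredictionNet: the generated samples are the real samples shifted by 0.5, the critics are hand-picked linear functions rather than trained networks, and all function names are hypothetical. In one dimension, the EM distance between two equal-size samples is the mean absolute difference of the sorted samples, and the penalty $(|f'(\hat {x})|-1)^2$ is evaluated at random points $\hat {x}$ between real and generated samples, in the manner of WGAN-GP.

```python
import numpy as np

def wasserstein1_1d(a, b):
    """EM (Wasserstein-1) distance between two equal-size 1-D samples."""
    a, b = np.sort(a), np.sort(b)       # optimal 1-D transport pairs sorted samples
    return float(np.mean(np.abs(a - b)))

def kr_dual_estimate(f, real, generated):
    """E_{x~p_r}[f(x)] - E_{x~p_g}[f(x)] for a candidate 1-Lipschitz function f."""
    return float(np.mean(f(real)) - np.mean(f(generated)))

def gradient_penalty(f_prime, real, generated, rng):
    """Mean of (|f'(x_hat)| - 1)^2 at random points between sample pairs."""
    eps = rng.uniform(size=real.shape)
    x_hat = eps * real + (1.0 - eps) * generated
    return float(np.mean((np.abs(f_prime(x_hat)) - 1.0) ** 2))

rng = np.random.default_rng(0)
real = rng.standard_normal(1000)
generated = real + 0.5                  # same distribution, shifted by 0.5

w1 = wasserstein1_1d(real, generated)
# f(x) = -x has unit slope and attains the supremum for a pure shift.
dual_best = kr_dual_estimate(lambda x: -x, real, generated)
gp_best = gradient_penalty(lambda x: -np.ones_like(x), real, generated, rng)
# f(x) = -0.3 x is also 1-Lipschitz but underestimates the distance.
dual_weak = kr_dual_estimate(lambda x: -0.3 * x, real, generated)
gp_weak = gradient_penalty(lambda x: -0.3 * np.ones_like(x), real, generated, rng)
```

Here the unit-slope critic recovers the EM distance of 0.5 with zero penalty, whereas the flatter critic yields only 0.15 and a penalty of 0.49. This is the sense in which the penalty steers the critic towards functions with unit gradient norm, for which the dual estimate is tight.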

PredictionNet (the generator of cGAN) is a network that predicts the vorticity field after a specific lead time $T$ from the input field at $t$ as follows:

where $X$, $Y^*$ and $Y$ represent the real data, prediction results and prediction targets, respectively (here, the prediction target $Y$ is independent of the additional input $\boldsymbol {y}$ in (3.2) and (3.3), which are general descriptions of the value function of each GAN model). Originally, the input of a generator for turbulence prediction would be a noise field, $Z$, conditioned on the previous timestep's vorticity field from (3.3), expressed as ${\rm Pred}(Z,X)=Y^*$. However, the desired prediction network is a deterministic mapping function along the time horizon $T$; thus, the noise input is excluded in (3.5). In our cGAN application, the generator input ($X$) is also used as a condition in the training of the discriminator through concatenation. Therefore, the target $Y$ and the generator output $Y^*$ conditioned on $X$ are used as inputs to the discriminator in the first and second terms of (3.3) as $D(Y,X)$ and $D(Y^*,X)$, respectively. This allows the statistical characteristics of the input ($X$) as well as the target ($Y$) to be reflected in the training of the discriminator and eventually the generator through competitive training. Moreover, the most intuitive and commonly employed optimisation target for a prediction task is the following objective function based on the pointwise difference:

with the trainable parameters of PredictionNet $w_p$, where $\|{\cdot }\|$ represents a distance norm of any type. This data loss can be a powerful regulariser for the generator in GANs at the beginning of the training procedure. Therefore, we formulated the final form of the loss function of cGAN, including the GP and drift loss terms, as follows:

for the generator and

for the discriminator, where

\begin{equation} \left. \begin{gathered} L_{true}=\mathbb{E}_{X\sim p(X)} \left[D(Y,X)\right], \quad L_{false}=\mathbb{E}_{X\sim p(X)} \left[D(Y^*,X)\right], \\ L_{gp}=\mathbb{E}_{X\sim p(X)} \left[\left(\left\|\boldsymbol{\nabla}_{Y'}D(Y',X) \right\|_2-1\right)^2 \right],\quad L_{drift}=\left(\mathbb{E}_{X\sim p(X)} \left[D(Y,X)\right]\right)^2, \end{gathered} \right\} \end{equation}


where $Y'=Y+\delta (Y^*-Y)$ with $\delta$ between zero and one. The simplest L2 norm distance was used for the data loss. The role of $L_{drift}$ was to restrict the order of magnitude of the discriminator outputs (keeping them from drifting too far from zero) with a small weight $\beta$. Its original form in Karras et al. (2017) is similar to the L2 regularisation on $D(Y,X)$ as $L_{drift}=\mathbb {E}_{X\sim p(X)} [(D(Y,X))^2]$, but we modified it to the above form, in which regularisation is applied average-wise during backpropagation, so that the hyperparameters $\alpha$ and $\beta$ can be used robustly regardless of the lead time. Accordingly, $\beta =0.001$ was used equally for all lead times, and $\alpha$ and $\gamma$ were fixed at 10 and $100/(N_x \times N_y)$, respectively, by fine tuning. There exists a separate optimum hyperparameter setting for each lead time; however, we verified that our hyperparameter setting showed no significant difference in performance from the optimum settings. In addition, we verified that it worked properly for lead times ranging from $1\delta t$ to $100\delta t$. On the other hand, the baseline CNN is a network identical to PredictionNet but trained without adversarial learning. For its loss function, an L2 regularisation was added to (3.6) using the L2 norm distance to improve the performance and make the optimisation process efficient, as follows:

where ![]() $\sigma _1$ is set to

$\sigma _1$ is set to ![]() $1/(N_x \times N_y)$, and

$1/(N_x \times N_y)$, and ![]() $\sigma _2$ modulates the strength of regularisation and is fixed at

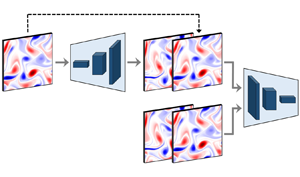

$\sigma _2$ modulates the strength of regularisation and is fixed at ![]() $0.0001$ based on case studies. Simplified configurations of the CNN and cGAN are shown in figure 4(a,b).

$0.0001$ based on case studies. Simplified configurations of the CNN and cGAN are shown in figure 4(a,b).

Figure 4. Simplified network schematics of (a) the baseline CNN, (b) cGAN-based PredictionNet and (c) ControlNet.
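The auxiliary terms of the cGAN loss defined above can be sketched numerically. The following is a minimal illustration rather than the authors' implementation: the function names are hypothetical, the expectations are approximated by batch means, and the gradient norms of the discriminator are assumed to be supplied by an automatic-differentiation framework, which is not shown here.

```python
import numpy as np

def interpolate(Y, Y_star, delta):
    """Y' = Y + delta * (Y* - Y), with delta between zero and one."""
    return Y + delta * (Y_star - Y)

def gradient_penalty(grad_norms):
    """L_gp: batch mean of (||grad_{Y'} D(Y', X)||_2 - 1)^2.

    grad_norms holds one precomputed gradient norm per sample (assumed input).
    """
    grad_norms = np.asarray(grad_norms, dtype=float)
    return float(np.mean((grad_norms - 1.0) ** 2))

def drift_average_wise(d_real):
    """L_drift in the modified form: square of the batch-mean output D(Y, X)."""
    return float(np.mean(d_real) ** 2)

def drift_sample_wise(d_real):
    """Form attributed to Karras et al.: batch mean of the squared output."""
    return float(np.mean(np.square(d_real)))

# Discriminator outputs that are individually large but centred on zero.
d_real = np.array([2.0, -2.0, 1.0, -1.0])
average_wise = drift_average_wise(d_real)   # penalises only the mean drift
sample_wise = drift_sample_wise(d_real)     # penalises every output
```

For outputs that scatter symmetrically around zero, the average-wise form vanishes whereas the sample-wise form does not, which illustrates how the modified term constrains only the mean level of the discriminator output.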

ControlNet uses a pre-trained PredictionNet that contains the dynamics of our data as a surrogate model to generate disturbance fields that change the evolution of the flow field in a direction suitable for a target, as follows:

Here $\Delta X$ represents the disturbance field added to the input field $X(t)$ and $\tilde {Y}$ is the disturbance-added prediction at time $t+T$. An important implication of ControlNet in this study is that it is a model-free method without restrictions, except for the strength of $\Delta X$. The objective function to be maximised includes the change in the vorticity field at a later time, as follows:

where $w_c$ are the weight parameters of ControlNet. During training, the weight parameters of ControlNet are updated in the direction that maximises the change in the vorticity field at the target lead time. The already trained PredictionNet with fixed weight parameters is used as a surrogate model to predict the controlled field during the training of ControlNet. Therefore, once ControlNet has been trained successfully through maximisation of the loss based on the change in the vorticity field, it can produce an optimum disturbance field. However, the generated disturbance field is not guaranteed to be globally optimum. For the final form of the loss function, a spectral gradient loss term is additionally used to remove nonphysical noise (i.e. smoothing effect), and a CNN model is applied:

where $\boldsymbol {\nabla }_{\boldsymbol {x}}$ denotes the gradient with respect to the coordinate directions. The coefficient $\theta$ controls the strength of the smoothing effect, and it is adjusted depending on the order of the data loss (see § 4.4). Figure 4(c) shows a simplified configuration of ControlNet.
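A smoothing term based on the spatial gradient of a periodic field can be evaluated spectrally. The sketch below is only an assumption about how such a term might be computed: the function names, the $2\pi$-periodic domain and the use of the mean squared gradient magnitude as the penalty are illustrative choices and are not taken from the loss function of ControlNet.

```python
import numpy as np

def spectral_gradient(field, length=2.0 * np.pi):
    """Gradient of a doubly periodic 2-D field computed with the FFT."""
    ny, nx = field.shape
    # Angular wavenumbers along each direction (integers when length = 2*pi).
    kx = 2.0 * np.pi * np.fft.fftfreq(nx, d=length / nx)
    ky = 2.0 * np.pi * np.fft.fftfreq(ny, d=length / ny)
    f_hat = np.fft.fft2(field)
    dfdx = np.real(np.fft.ifft2(1j * kx[None, :] * f_hat))
    dfdy = np.real(np.fft.ifft2(1j * ky[:, None] * f_hat))
    return dfdx, dfdy

def smoothness_penalty(field):
    """Mean squared gradient magnitude (hypothetical smoothing term)."""
    dfdx, dfdy = spectral_gradient(field)
    return float(np.mean(dfdx ** 2 + dfdy ** 2))

n = 32
x = 2.0 * np.pi * np.arange(n) / n
disturbance = np.tile(np.sin(x), (n, 1))   # toy disturbance: sin(x), uniform in y
dfdx, dfdy = spectral_gradient(disturbance)
penalty = smoothness_penalty(disturbance)
```

Because such a penalty grows with the square of the wavenumber, it weights fine-scale noise in the disturbance field far more heavily than large-scale structure, which is the smoothing effect referred to above.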

3.2. Network architecture

A typical multiscale architecture of a CNN was used for both PredictionNet and ControlNet, as shown in figure 5(a). The architecture consists of six convolutional blocks, each composed of three convolutional layers with $3 \times 3$ filter kernels (Conv. $3 \times 3$), called Convblk-m, named after their feature maps with different resolutions (${128/2^m}\times {128/2^m} \ (m=0,1,2,3,4,5)$). Here, the average pooled inputs $X^{(m)} (m=1,2,3,4)$ and the original input $X^{(0)}$ are concatenated to the feature map tensor at the beginning of each Convblk-m to be used as feature maps. One node point of a pooled input $X^{(m)}$ contains the compressed information of $2^m$ points of $X^{(0)}$ in each direction. Using such an architecture enables all the spatial grid information of an input field, $X$, to be used to calculate a specific output point, even for the lowest-resolution feature maps. For example, the receptive field of Convblk-5 is $(2^5\times 7)\times (2^5\times 7)$, which means that all the spatial grid points of $X^{(0)}$ are used to calculate a point in the output. In addition, by sequentially using low- to high-resolution feature maps, the network can learn large-scale motion in the early stages, and then fill in the detailed features of small-scale characteristics in the deepest layers. As mentioned in § 2, the purpose of designing networks is to increase the prediction accuracy and determine the global optimum by considering the spectral properties of the flow. However, depending on the flow features or characteristics of the flow variables, we can expect that accurate results can be obtained even using only a small input domain with a size similar to $L$ for cost-effectiveness. Furthermore, periodic padding was used to maintain the size of the feature maps after convolutional operations, because the biperiodic boundary condition was applied spatially. The feature maps generated from Convblk-(m+1) must be extended through upscaling to be concatenated and used with the following pooled input $X^{(m)}$ (i.e. doubling the size via upsampling). In this process, a transposed convolution is used to minimise the non-physical phenomena. After upscaling, $X^{(m)}$ is concatenated to the feature map tensor and then Convblk-m operations are performed. Finally, after the original input field $X^{(0)}$ is concatenated, the operations of Convblk-0 are performed, and the output of resolution ${128}\times {128}$ is generated: depending on the network, this is either the prediction result $\textrm {Pred}(X)=Y^*$ or the disturbance field $\textrm {Control}(X)=\Delta X$. Detailed information on the architectures of PredictionNet and ControlNet, including the number of feature maps used in each Convblk, is presented in table 1. A leaky rectified linear unit (LReLU) is used as an activation function to add nonlinearities after each convolutional layer. This was not applied to the last layer of Convblk-0, to prevent nonlinearity in the final output.
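The pooled inputs and the periodic padding described above can be sketched with NumPy. This is an illustrative sketch, not the network code: the function names are hypothetical, the input is a synthetic $128\times 128$ array, and the padding width of one point is an assumption corresponding to a single $3\times 3$ convolution.

```python
import numpy as np

def periodic_pad(field, width=1):
    """Pad a 2-D field by wrapping values around, as for a biperiodic domain."""
    return np.pad(field, width, mode="wrap")

def average_pool(field, m):
    """Average-pool a square field by a factor of 2**m in each direction."""
    f = 2 ** m
    ny, nx = field.shape
    return field.reshape(ny // f, f, nx // f, f).mean(axis=(1, 3))

X0 = np.arange(128 * 128, dtype=float).reshape(128, 128)  # synthetic input X^(0)
padded = periodic_pad(X0)                                  # 130 x 130
pooled = {m: average_pool(X0, m) for m in range(1, 6)}     # X^(1) ... X^(5)

# Three stacked 3x3 convolutions see 7 points per direction; at the resolution
# of Convblk-5 each point spans 2**5 points of X^(0).
receptive_field = 2 ** 5 * 7
```

With these definitions, `pooled[5]` has a resolution of $4\times 4$ and `receptive_field` exceeds the domain size of 128, consistent with the statement that every grid point of $X^{(0)}$ contributes to each output point.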

Figure 5. Network architecture of (a) PredictionNet (the generator of cGAN) and ControlNet and (b) discriminator of cGAN.

Table 1. Number of feature maps used at each convolutional layer of Convblks and Disblks.

The discriminator of PredictionNet to which cGAN is applied has an architecture symmetric to that of the generator (PredictionNet), as shown in figure 5(b). Here $X^{(0)}$ is concatenated with the target or the prediction result for the input of the discriminator as a condition. In contrast to the generator, convolutional operations are performed from high to low resolutions, with each convolutional block named Disblk-m. Average pooling was used for downsampling to half the size of the feature maps. After the operation of Disblk-5, its output feature maps passed through two fully connected layers (with output dimensions of $1\times 1\times 256$ and $1\times 1\times 1$) to return a scalar value. The numbers of feature maps used for each Disblk are presented in table 1. The baseline CNN model takes the same architecture as PredictionNet but is trained without adversarial training through the discriminator.
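The conditioning of the discriminator and the sequence of resolutions can be summarised in a short sketch. The channel-first layout and the function names are assumptions made for illustration; only the concatenation of the condition and the halving of the resolution between blocks are taken from the description above.

```python
import numpy as np

def discriminator_input(Y_or_Y_star, X):
    """Stack the target (or prediction) and the condition X as two channels."""
    return np.stack([Y_or_Y_star, X], axis=0)

def disblk_resolution(m, n=128):
    """Feature-map resolution processed by Disblk-m after m halvings."""
    return n // 2 ** m

X = np.zeros((128, 128))        # condition: input field X^(0)
Y = np.ones((128, 128))         # target field (or prediction Y*)
d_in = discriminator_input(Y, X)
resolutions = [disblk_resolution(m) for m in range(6)]
```

Here `d_in` has two channels of size $128\times 128$, and `resolutions` runs from 128 down to 4, after which the fully connected layers reduce the feature maps to a scalar.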

4. Results

4.1. PredictionNet: prediction of the vorticity field at a finite lead time

In this section, we discuss the performance of PredictionNet in predicting the target vorticity field at $t+T$ using the field at $t$ as the input. The considered lead times $T$ are $0.25T_L, 0.5T_L, T_L$ and $2T_L$ based on the observation that the autocorrelation of the vorticity at each lead time dropped to 0.71, 0.49, 0.26 and 0.10, respectively (figure 2a). The convergence behaviour of the L2 norm-based data loss of the baseline CNN and cGAN models for various lead times during training is shown for 100 000 iterations with a batch size of 32 in figure 6. Training took around 2 and 5.4 h for the CNN and cGAN, respectively, on an NVIDIA GeForce RTX 3090 GPU. Although the baseline CNN and PredictionNet have exactly the same architecture (around 520 000 trainable parameters based on table 1), the cGAN includes a discriminator comparable to the generator that must also be trained, with many complex loss terms; thus, the training time is almost tripled. Both models yielded good convergence for lead times of up to $0.5T_L$, whereas overfitting was observed for $T=T_L$ and $2T_L$ in both models. Since the flow field at these lead times is hardly correlated with the input field [correlation coefficient (CC) of 0.26 and 0.10], it is naturally more challenging to train a model that can reduce a mean-squared error (MSE)-based data loss, a pointwise metric that is independent of the spatial distribution. One notable observation in figure 6 is that for lead times beyond $T_L$, CNN appears to exhibit better performance than cGAN, as evidenced by its smaller converged data loss. However, as discussed later, the pointwise accuracy alone cannot encompass all the aspects of turbulence prediction due to its various statistical properties. In addition, although the only loss term of the CNN other than the weight regularisation is the pointwise MSE, the magnitude of its converged data loss also remains significant.

Figure 6. Convergence of data loss depending on the lead time of (a) baseline CNN and (b) cGAN. The order of the converged value increases as the lead time increases. In addition, relatively large overfitting was observed in the case of $T=2T_L$.

As an example of testing the trained network on unseen input data, the fields predicted by the cGAN and CNN were compared against the target data for various lead times, as shown in figure 7. In the test of the cGAN, only PredictionNet was used to generate or predict the flow field at the target lead time. Hereinafter, when presenting performance results for the instantaneous field, including figure 7 and all subsequent statistics, we only present the results for input at $t_0$. This choice was made because the task that the models have to learn is much more difficult at earlier times, since the flow contains more small-scale structures than at later times owing to its decaying nature. Therefore, a performance test for input data at $t_0$ is sufficient for testing the trained network. For short lead-time predictions, such as $0.25T_L$, both cGAN and CNN showed good performance. However, for a lead time of $0.5T_L$, the prediction by the CNN started displaying a blurry image, whereas the cGAN's prediction maintained small-scale features better than that of the CNN. This blurriness further degraded the CNN's predictions at $T_L$ and $2T_L$. Although cGAN's prediction also deteriorated as the lead time increased, the overall performance of cGAN, particularly in capturing small-scale variations in the field, was much better than that of the CNN even for $T_L$ and $2T_L$.

Figure 7. Visualised examples of the performance of the cGAN and CNN for one test dataset. (a) Input field at $t_0$, prediction results at the lead time (b) $0.25T_L$, (c) $0.5 T_L$, (d) $T_L$ and (e) $2T_L$. Panels (b i,c i,d i,e i), (b ii,c ii,d ii,e ii) and (b iii,c iii,d iii,e iii) show the target DNS, cGAN predictions and CNN predictions, respectively.

A quantitative comparison of the performances of the cGAN and CNN against the target in the prediction of various statistics is presented in table 2. The CC between the prediction and target fields or, equivalently, the MSE by the cGAN shows a better performance than that by the CNN for lead times of up to $0.5T_L$, even though both the cGAN and CNN predicted the target field well. For $T_L$, the CC by cGAN and CNN was 0.829 and 0.868, respectively, indicating that the predictions by both models were not poor, whereas the predictions by both models were quite poor for $2T_L$. Once again, for lead times of $T_L$ and larger, CNN shows better performance on the pointwise metrics according to the trend of data loss. Conversely, the statistics related to the distribution of vorticity, such as the r.m.s. value or standardised moments ($\hat {\mu }_n=\mu _n/\sigma ^n$, where $\mu _n=\langle (X-\langle X\rangle )^n\rangle$ is the $n$th central moment and $\sigma =\sqrt {\mu _2}$ is the standard deviation), clearly confirm that the cGAN outperforms the CNN for all lead times, and the prediction by the cGAN was much closer to the target than that of the CNN, even for $T_L$ and $2T_L$. Furthermore, the prediction of the Kolmogorov length scale ($\eta =(\nu ^3/\varepsilon )^{1/4}$) and dissipation ($\varepsilon =2\nu \langle S_{ij}S_{ij}\rangle$, $\varepsilon '=\varepsilon t^{*3}/\lambda ^{*2}$, where $S_{ij}$ is the strain rate tensor) by cGAN was more accurate than that by the CNN, as presented in table 2. All these qualitative and quantitative comparisons clearly suggest that the adversarial learning of the cGAN tends to capture the characteristics of turbulence data better than the CNN, which minimises the pointwise MSE only. The superiority of the cGAN is more pronounced for large lead times, for which small-scale turbulence features are difficult to predict.
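The pointwise and distribution-related statistics discussed above can be computed as in the following sketch. The function names are hypothetical, the CC is assumed to be the Pearson correlation coefficient of the two fields, and spatial averaging is used in place of the ensemble average $\langle \cdot \rangle$; the paper's exact evaluation procedure may differ.

```python
import numpy as np

def correlation_coefficient(pred, target):
    """Pearson correlation coefficient between two fields (assumed CC)."""
    p = pred - pred.mean()
    t = target - target.mean()
    return float(np.sum(p * t) / np.sqrt(np.sum(p ** 2) * np.sum(t ** 2)))

def mse(pred, target):
    """Pointwise mean-squared error."""
    return float(np.mean((pred - target) ** 2))

def standardised_moment(field, n):
    """mu_n / sigma^n with mu_n = <(X - <X>)^n> and sigma = sqrt(mu_2)."""
    fluct = field - field.mean()
    sigma = np.sqrt(np.mean(fluct ** 2))
    return float(np.mean(fluct ** n) / sigma ** n)

# Toy field taking the values +1 and -1 equally often.
target = np.array([[1.0, -1.0], [-1.0, 1.0]])
skewness = standardised_moment(target, 3)
flatness = standardised_moment(target, 4)
```

A prediction can match the target closely in CC and MSE and still have the wrong higher-order moments, because a blurred field preserves large-scale agreement while suppressing the extreme values that dominate the third and fourth moments.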

Table 2. Quantitative comparison of the performance of the cGAN and CNN against the target in the prediction of the CC, MSE and r.m.s., and the $n$th standardised moments, Kolmogorov length scale ($\eta$) and dissipation rate ($\varepsilon '$) depending on the lead time for the input at $t_0$. All the dimensional data such as MSE, r.m.s. and $\varepsilon '$ are provided just for the relative comparison.

Detailed distributions of probability density functions (p.d.f.s) of vorticity, two-point correlation functions and enstrophy spectra ($\varOmega (k)={\rm \pi} k\varSigma _{|\boldsymbol {k}|=k}|\hat {\omega }(\boldsymbol {k})|^2$ where $\hat {\omega }(\boldsymbol {k},t)=\mathcal {F}\{\omega (\boldsymbol {x},t)\}$) of the target and prediction fields by the cGAN and CNN are compared for all lead times in figure 8. Both the cGAN and CNN effectively predicted the target p.d.f. distribution for lead times of up to $T_L$, whereas the tail part of the p.d.f. for $2T_L$ was not effectively captured by either model. The difference in performance between the cGAN and CNN is more striking in the prediction of $R_{\omega }(r)$ and $\varOmega (k)$. The values of $R_{\omega }(r)$ and $\varOmega (k)$ obtained by the cGAN almost perfectly match those of the target for all lead times, whereas those predicted by the CNN deviate progressively from those of the target as the lead time increases. In the prediction of the correlation function, the CNN tends to produce a relatively slowly decaying distribution compared with the cGAN and the target, resulting in a much larger integral length scale for all lead times. In particular, it is noticeable that even for a short lead time of $0.25T_L$, the CNN significantly underpredicts $\varOmega (k)$ in the high-wavenumber range, and this poor predictability propagates toward the small-wavenumber range as the lead time increases, whereas the cGAN produces excellent predictions over all scale ranges, even for $2T_L$. This evidence supports the idea that adversarial learning accurately captures the small-scale statistical features of turbulence.
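The shell-summed enstrophy spectrum defined above can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the function name `enstrophy_spectrum`, the $2{\rm \pi}$-periodic square grid, the division of the discrete transform by $N^2$ and the rounding of $|\boldsymbol{k}|$ to the nearest integer shell are all assumptions made here for illustration.

```python
import numpy as np

def enstrophy_spectrum(omega):
    """Shell-summed enstrophy spectrum, Omega(k) = pi * k * sum_{|k|=k} |omega_hat|^2.

    Assumptions (not taken from the paper): omega is an N x N array sampled on a
    2*pi-periodic square domain, Fourier coefficients are normalised by N^2, and
    each mode is assigned to the shell given by its rounded wavenumber magnitude.
    """
    n = omega.shape[0]
    omega_hat = np.fft.fft2(omega) / n**2            # normalised Fourier coefficients
    k1d = np.fft.fftfreq(n, d=1.0 / n)               # integer wavenumbers 0, 1, ..., -1
    kx, ky = np.meshgrid(k1d, k1d, indexing="ij")
    shell = np.rint(np.sqrt(kx**2 + ky**2)).astype(int)
    power = np.abs(omega_hat) ** 2
    k = np.arange(n // 2 + 1)                        # keep shells up to the Nyquist wavenumber
    shell_sum = np.bincount(shell.ravel(), weights=power.ravel(),
                            minlength=k.size)[: k.size]
    return k, np.pi * k * shell_sum
```

Applying the same function to the target field and to each model's prediction gives curves that can be compared in the manner of figure 8; an underprediction at high $k$ then appears as a spectrum that falls below the target in the last shells.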

Figure 8. Comparison of statistics such as the p.d.f., two-point correlation and enstrophy spectrum of the target and prediction results at different lead times (a) $0.25T_L$, (b) $0.5 T_L$, (c) $T_L$ and (d) $2T_L$ for the same input time point $t_0$.

To quantify in more detail the differences in the prediction results by the cGAN and CNN models, scale decomposition was performed by separating the fields in wavenumber space into three regimes: large-, intermediate- and small-scale fields, as in the investigation of the temporal correlation in figure 2(b). The decomposed fields predicted for the lead time of $T_L$ by the cGAN and CNN, for example, were compared with those of the target field, as shown in figure 9. As shown in figure 2(b), the large-scale field $(k\leq 4)$ persists longer than the total field with an integral time scale 1.4 times larger than that of the total field, whereas the intermediate-scale field $(4< k\leq 20)$ and the small-scale field $(20< k)$ decorrelate more quickly than the total field with integral time scales one-fourth and one-twelfth of that of the total field, respectively. Given that the intermediate- and small-scale fields of the target field are almost completely decorrelated from the initial field at a lead time of $T_L$ as shown in figure 2(b), the predictions of those fields by the cGAN shown in figure 9(c,d) are excellent. The cGAN generator generates a small-scale field that not only mimics the statistical characteristics of the target turbulence but is also consistent with the large-scale motion of turbulence through adversarial learning. A comparison of the decomposed fields predicted by the cGAN and CNN for other lead times is presented in Appendix B. For small lead times, such as $0.25T_L$ and $0.5T_L$, the cGAN predicted the small-scale fields accurately, whereas those produced by the CNN contained non-negligible errors. For $2T_L$, prediction is difficult for both models, even for the large-scale field. On the other hand, the CC of decomposed fields between the target and predictions, provided in table 4 (Appendix B), did not demonstrate the superiority of the cGAN over the CNN. This is attributed to the fact that pointwise errors such as the MSE or CC are predominantly determined by the behaviour of large-scale motions. The pointwise MSE is the only error used in the loss function of the CNN, whereas the discriminator loss based on the latent variable is used in the loss function of the cGAN in addition to the MSE. This indicates that the latent variable plays an important role in predicting turbulent fields with multiscale characteristics.
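As a rough illustration of the three-band decomposition described above, the following NumPy sketch splits a periodic field into the ranges $k\leq 4$, $4<k\leq 20$ and $k>20$. The sharp spectral cutoff, the $2{\rm \pi}$-periodic square grid and the helper names `band_filter` and `decompose` are assumptions made here; the text does not specify the filter type.

```python
import numpy as np

def band_filter(field, k_lo, k_hi):
    """Keep only Fourier modes with k_lo < |k| <= k_hi (sharp cutoff, assumed here)."""
    n = field.shape[0]
    k1d = np.fft.fftfreq(n, d=1.0 / n)               # integer wavenumbers
    kx, ky = np.meshgrid(k1d, k1d, indexing="ij")
    kmag = np.sqrt(kx**2 + ky**2)
    mask = (kmag > k_lo) & (kmag <= k_hi)
    # The mask depends only on |k|, so the filtered field stays real up to round-off.
    return np.real(np.fft.ifft2(np.fft.fft2(field) * mask))

def decompose(field):
    """Split a 2-D periodic field into large, intermediate and small scales."""
    large = band_filter(field, -1.0, 4.0)            # k <= 4, including the mean (k = 0)
    inter = band_filter(field, 4.0, 20.0)            # 4 < k <= 20
    small = band_filter(field, 20.0, np.inf)         # k > 20
    return large, inter, small
```

Because the three masks partition wavenumber space, the bands sum back to the original field, so a correlation coefficient computed per band (as in table 4) isolates how much each scale range contributes to the pointwise agreement.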

Figure 9. Prediction results of the scale-decomposed field for lead time $T_L$: (a) original fields, (b) large scales ($k\leq 4$), (c) intermediate scales ($4< k \leq 20$) and (d) small scales ($k>20$). Panels (a i,b i,c i,d i), (a ii,b ii,c ii,d ii) and (a iii,b iii,c iii,d iii) display the target DNS fields, cGAN predictions and CNN predictions, respectively.

Table 4. Correlation coefficient between the target and prediction of the scale-decomposed fields depending on the lead time.

In the prediction of the enstrophy spectrum, the cGAN showed excellent performance, compared with the CNN, by accurately reproducing the decaying behaviour of the spectrum in the small-scale range, as shown in figure 8. The average phase error between the target and predictions by the cGAN and CNN is shown in figure 10. For all lead times, the phase error of the small-scale motion approaches ${\rm \pi} /2$, which is the value for a random distribution. For short lead times $0.25T_L$ and $0.5T_L$, the cGAN clearly suppressed the phase error in the small-scale range compared with the CNN, whereas for $T_L$ and $2T_L$, both the cGAN and CNN poorly predicted the phase of the intermediate- and small-scale motions, even though the cGAN outperformed the CNN in predicting the spectrum.
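The exact definition of the average phase error is not given in this section. One plausible reading, sketched below under that assumption, is the absolute wrapped phase difference between the Fourier coefficients of the prediction and the target, averaged over each wavenumber shell. With this definition, uncorrelated phases give a shell average close to ${\rm \pi}/2$, consistent with the limit quoted above. The function name `shell_phase_error` is hypothetical.

```python
import numpy as np

def shell_phase_error(target, pred):
    """Shell-averaged absolute phase difference between two 2-D periodic fields.

    Assumed definition (not confirmed by the paper): the phase difference of each
    Fourier mode is wrapped to (-pi, pi], its absolute value is taken, and the
    result is averaged over all modes whose rounded |k| falls in the same shell.
    """
    n = target.shape[0]
    t_hat = np.fft.fft2(target)
    p_hat = np.fft.fft2(pred)
    dphi = np.angle(p_hat * np.conj(t_hat))          # wrapped phase difference per mode
    k1d = np.fft.fftfreq(n, d=1.0 / n)               # integer wavenumbers
    kx, ky = np.meshgrid(k1d, k1d, indexing="ij")
    shell = np.rint(np.sqrt(kx**2 + ky**2)).astype(int).ravel()
    k = np.arange(n // 2 + 1)
    total = np.bincount(shell, weights=np.abs(dphi).ravel(),
                        minlength=k.size)[: k.size]
    count = np.bincount(shell, minlength=k.size)[: k.size]
    return k, total / count
```

A value near zero in a shell indicates that the prediction places structures of that scale at the correct locations, whereas a value near ${\rm \pi}/2$ indicates that the phases carry no information about the target, even if the spectrum amplitude is reproduced.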

Figure 10. Prediction results of the phase error of each model depending on $T$.

The performance of PredictionNet in the prediction of velocity fields is presented in Appendix C, where several statistics, including the p.d.f. of velocity, two-point correlation and p.d.f. of the velocity gradient are accurately predicted for all lead times. The superiority of the cGAN over the CNN was also confirmed.

4.2. Role of the discriminator in turbulence prediction

In the previous section, we demonstrated that adversarial learning using a discriminator network effectively captured the small-scale behaviour of turbulence, even when the small-scale field at a large lead time was hardly correlated with that of the initial field. In this section, we investigate the role of the discriminator in predicting turbulence through the behaviour of the latent variable, which is the output of the discriminator. Although several attempts have been made to disclose the manner in which adversarial training affects the performance of the generator and the meaning of the discriminator output (Creswell et al. Reference Creswell, White, Dumoulin, Arulkumaran, Sengupta and Bharath2018; Yuan et al. Reference Yuan, He, Zhu and Li2019; Goodfellow et al. Reference Goodfellow, Pouget-Abadie, Mirza, Xu, Warde-Farley, Ozair, Courville and Bengio2020), there have been no attempts to reveal the implicit role of the latent variable in recovering the statistical nature of turbulence in the process of a prediction.

In the current cGAN framework, the discriminator network is trained to maximise the difference between the expected value of the latent variable of the target field $D(Y,X)$ and that of the prediction $D(Y^*,X)$, whereas the generator network is trained to maximise $D(Y^*,X)$ and to minimise the pointwise MSE between $Y$ and $Y^*$. Therefore, through the adversarial learning process, the discriminator is optimised to distinguish $Y^*$ from $Y$, whereas the generator evolves to produce $Y^*$ by reflecting on the optimised latent variable of the discriminator and minimising the MSE between $Y$ and $Y^*$. The input field $X$ is used as a condition in the construction of the optimal latent variable in the discriminator network. The behaviour of the expected value of the latent variable of the target and generated fields ($L_{true}$ and $L_{false}$) and their difference ($L_{true}-L_{false}$) as learning proceeds is illustrated in figure 11 for all lead times. Because of the regularisation of the latent variable by $L_{drift}(=L_{true}^2)$ in the loss function of the discriminator, both $L_{true}$ and $L_{false}$ remain around zero, although they sometimes oscillate significantly. As the learning proceeds, $L_{true}-L_{false}$ quickly decays initially owing to the suppression of the MSE and continues to decrease to zero for $0.25T_L$ and $0.5T_L$ but to a finite value for $T_L$ and $2T_L$. The intermittent transition of $L_{true}-L_{false}$ between a finite value and zero for $0.25T_L$ and $0.5T_L$ clearly suggests that the generator and discriminator operate competitively in an adversarial manner. As the generator improves, the difference monotonically decreases and occasionally exhibits sudden suppression. When sudden suppression occurs, the discriminator evolves to distinguish $Y^*$ from $Y$ by finding a better criterion latent variable, resulting in a sudden increase in the difference. Ultimately, when the generator can produce