1 INTRODUCTION

Numerical simulations are the ‘third pillar’ of astrophysics, standing alongside observations and analytic theory. Since it is difficult to perform laboratory experiments in the relevant physical regimes and over the correct range of length and timescales involved in most astrophysical problems, we turn instead to ‘numerical experiments’ in the computer for understanding and insight. As algorithms and simulation codes become ever more sophisticated, the public availability of simulation codes has become crucial to ensure that these experiments can be both verified and reproduced.

Phantom is a smoothed particle hydrodynamics (SPH) code developed over the last decade. It has been used widely for studies of turbulence (e.g. Kitsionas et al. Reference Kitsionas2009; Price & Federrath Reference Price and Federrath2010; Price, Federrath, & Brunt Reference Price, Federrath and Brunt2011), accretion (e.g. Lodato & Price Reference Lodato and Price2010; Nixon, King, & Price Reference Nixon, King and Price2012a; Rosotti, Lodato, & Price Reference Rosotti, Lodato and Price2012), star formation including non-ideal magnetohydrodynamics (MHD) (e.g. Wurster et al. Reference Wurster, Price and Bate2016, Wurster, Price, & Bate Reference Wurster, Price and Bate2017), star cluster formation (Liptai et al. Reference Liptai, Price, Wurster and Bate2017), and for studies of the Galaxy (Pettitt et al. Reference Pettitt, Dobbs, Acreman and Price2014; Dobbs et al. Reference Dobbs, Price, Pettitt, Bate and Tricco2016), as well as for simulating dust–gas mixtures (e.g. Dipierro et al. Reference Dipierro, Price, Laibe, Hirsh and Cerioli2015; Ragusa et al. Reference Ragusa, Dipierro, Lodato, Laibe and Price2017; Tricco, Price, & Laibe Reference Tricco, Price and Laibe2017). Although the initial applications and some details of the basic algorithm were described in Price & Federrath (Reference Price and Federrath2010), Lodato & Price (Reference Lodato and Price2010), and Price (Reference Price2012a), the code itself has never been described in detail and, until now, has remained closed source.

One of the initial design goals of Phantom was to have a low memory footprint. A secondary motivation was the need for a public SPH code that is not primarily focused on cosmology, as in the highly successful Gadget code (Springel, Yoshida, & White Reference Springel, Yoshida and White2001; Springel Reference Springel2005). The needs of different communities produce rather different outcomes in the code. For cosmology, the main focus is on simulating the gravitational collapse of dark matter in large volumes of the universe, with gas having only a secondary effect. This is reflected in the ability of the public Gadget-2 code to scale to exceedingly large numbers of dark matter particles, yet neglecting elements of the core SPH algorithm that are essential for stellar and planetary problems, such as the Morris & Monaghan (Reference Morris and Monaghan1997) artificial viscosity switch [c.f. the debate between Bauer & Springel (Reference Bauer and Springel2012) and Price (Reference Price2012b)], the ability to use a spatially variable gravitational force softening (Bate & Burkert Reference Bate and Burkert1997; Price & Monaghan Reference Price and Monaghan2007) or any kind of artificial conductivity, necessary for the correct treatment of contact discontinuities (Chow & Monaghan Reference Chow and Monaghan1997; Price & Monaghan Reference Price and Monaghan2005; Rosswog & Price Reference Rosswog and Price2007; Price Reference Price2008). Almost all of these have since been implemented in development versions of Gadget-3 (e.g. Iannuzzi & Dolag Reference Iannuzzi and Dolag2011; Beck et al. Reference Beck2016; see recent comparison project by Sembolini et al. Reference Sembolini2016) but remain unavailable or unused in the public version. Likewise, the implementation of dust, non-ideal MHD, and other physics relevant to star and planet formation is unlikely to be high priority in a code designed for studying cosmology or galaxy formation.

Similarly, the sphng code (Benz et al. Reference Benz, Cameron, Press and Bowers1990; Bate Reference Bate1995) has been a workhorse for our group for simulations of star formation (e.g. Price & Bate Reference Price and Bate2007, Reference Price and Bate2009; Price, Tricco, & Bate Reference Price, Tricco and Bate2012; Lewis, Bate, & Price Reference Lewis, Bate and Price2015) and accretion discs (e.g. Lodato & Rice Reference Lodato and Rice2004; Cossins, Lodato, & Clarke Reference Cossins, Lodato and Clarke2009), contains a rich array of physics necessary for star and planet formation including all of the above algorithms, but the legacy nature of the code makes it difficult to modify or debug and there are no plans to make it public.

Gasoline (Wadsley, Stadel, & Quinn Reference Wadsley, Stadel and Quinn2004) is another code that has been widely and successfully used for galaxy formation simulations, with its successor, Gasoline 2 (Wadsley et al. Reference Wadsley, Keller and Quinn2017), recently publicly released. Hubber et al. (Reference Hubber, Batty, McLeod and Whitworth2011) have developed Seren with similar goals to Phantom, focused on star cluster simulations. Seren thus presents more advanced N-body algorithms compared to what is in Phantom but does not yet include magnetic fields, dust, or H2 chemistry.

A third motivation was the need to distinguish between the ‘high performance’ code used for 3D simulations from simpler codes used to develop and test algorithms, such as our already-public ndspmhd code (Price Reference Price2012a). Phantom is designed to ‘take what works and make it fast’, rather than containing options for every possible variation on the SPH algorithm. Obsolete options are actively deleted.

The initial release of Phantom has been developed with a focus on stellar, planetary, and Galactic astrophysics, as well as accretion discs. In this first paper, coinciding with the first stable public release, we describe and validate the core algorithms as well as some example applications. Various novel aspects and optimisation strategies are also presented. This paper is an attempt to document in detail what is currently available in the code, which include modules for MHD, dust–gas mixtures, self-gravity, and a range of other physics. The paper is also designed to serve as guide to the correct use of the various algorithms. Stable releases of Phantom are posted on the webFootnote 1, while the development version and wiki documentation are available on the Bitbucket platformFootnote 2.

The paper is organised as follows: We describe the numerical methods in Section 2 with corresponding numerical tests in Section 5. We cover SPH basics (Section 2.1), our implementation of hydrodynamics (Sections 2.2 and 5.1), the timestepping algorithm (Section 2.3), external forces (Sections 2.4 and 5.2), turbulent forcing (Sections 2.5 and 6.1), accretion disc viscosity (Sections 2.6 and 5.3), Navier–Stokes viscosity (Sections 2.7 and 5.4), sink particles (Sections 2.8 and 5.5), stellar physics (Section 2.9), MHD (Sections 2.10 and 5.6), non-ideal MHD (Sections 2.11 and 5.7), self-gravity (Sections 2.12 and 5.8), dust–gas mixtures (Sections 2.13 and 5.9), ISM chemistry and cooling (Sections 2.14 and 5.10), and particle injection (Section 2.15). We present the algorithms for generating initial conditions in Section 3. Our approach to software engineering is described in Section 4. We give five examples of recent applications highlighting different aspects of Phantom in Section 6. We summarise in Section 7.

2 NUMERICAL METHOD

Phantom is based on the SPH technique, invented by Lucy (Reference Lucy1977) and Gingold & Monaghan (Reference Gingold and Monaghan1977) and the subject of numerous reviews (Benz Reference Benz and Buchler1990; Monaghan Reference Monaghan1992, Reference Monaghan2005, Reference Monaghan2012; Rosswog Reference Rosswog2009; Springel Reference Springel2010; Price Reference Price2012a).

In the following, we adopt the convention that a, b, and c refer to particle indices; i, j, and k refer to vector or tensor indices; and n and m refer to indexing of nodes in the treecode.

2.1. Fundamentals

2.1.1. Lagrangian hydrodynamics

SPH solves the equations of hydrodynamics in Lagrangian form. The fluid is discretised onto a set of ‘particles’ of mass m that are moved with the local fluid velocity ![]() ${\bm v}$. Hence, the two basic equations common to all physics in Phantom are

${\bm v}$. Hence, the two basic equations common to all physics in Phantom are

where ![]() ${\bm r}$ is the particle position and ρ is the density. These equations use the Lagrangian time derivative,

${\bm r}$ is the particle position and ρ is the density. These equations use the Lagrangian time derivative, ![]() ${\rm d}/{\rm d}t \equiv \partial / \partial t + {\bm v} \cdot \nabla$, and are the Lagrangian update of the particle position and the continuity equation (expressing the conservation of mass), respectively.

${\rm d}/{\rm d}t \equiv \partial / \partial t + {\bm v} \cdot \nabla$, and are the Lagrangian update of the particle position and the continuity equation (expressing the conservation of mass), respectively.

2.1.2. Conservation of mass in SPH

The density is computed in Phantom using the usual SPH density sum

where a and b are particle labels, m is the mass of the particle, W is the smoothing kernel, h is the smoothing length, and the sum is over neighbouring particles (i.e. those within R kernh, where R kern is the dimensionless cut-off radius of the smoothing kernel). Taking the Lagrangian time derivative of (3), one obtains the discrete form of (2) in SPH

where ![]() $W_{ab}(h_{a})\equiv W(\vert {\bm r}_{a} - {\bm r}_{b}\vert , h_{a})$ and Ωa is a term related to the gradient of the smoothing length (Springel & Hernquist Reference Springel and Hernquist2002; Monaghan Reference Monaghan2002) given by

$W_{ab}(h_{a})\equiv W(\vert {\bm r}_{a} - {\bm r}_{b}\vert , h_{a})$ and Ωa is a term related to the gradient of the smoothing length (Springel & Hernquist Reference Springel and Hernquist2002; Monaghan Reference Monaghan2002) given by

Equation (4) is not used directly to compute the density in Phantom, since evaluating (3) provides a time-independent solution to (2) (see e.g. Monaghan Reference Monaghan1992; Price Reference Price2012a for details). The time-dependent version (4) is equivalent to (3) up to a boundary term (see Price Reference Price2008) but is only used in Phantom to predict the smoothing length at the next timestep in order to reduce the number of iterations required to evaluate the density (see below).

Since (3)–(5) all depend on the kernel evaluated on neighbours within R kern times h a, all three of these summations may be computed simultaneously using a single loop over the same set of neighbours. Details of the neighbour finding procedure are given in Section 2.1.7.

2.1.3. Setting the smoothing length

The smoothing length itself is specified as a function of the particle number density, n, via

where h fact is the proportionality factor specifying the smoothing length in terms of the mean local particle spacing and the second equality holds only for equal mass particles, which are enforced in Phantom. The restriction to equal mass particles means that the resolution strictly follows mass, which may be restrictive for problems involving large density contrasts (e.g. Hutchison et al. Reference Hutchison, Price, Laibe and Maddison2016). However, our view is that the potential pitfalls of unequal mass particles (see e.g. Monaghan & Price Reference Monaghan and Price2006) are currently too great to allow for a robust implementation in a public code.

As described in Price (Reference Price2012a), the proportionality constant h fact can be related to the mean neighbour number according to

however, this is only equal to the actual neighbour number for particles in a uniform density distribution (more specifically, for a density distribution with no second derivative), meaning that the actual neighbour number varies. The default setting for h fact is 1.2, corresponding to an average of 57.9 neighbours for a kernel truncated at 2h (i.e. for R kern = 2) in three dimensions. Table 1 lists the settings recommended for different choices of kernel. The derivative required in (5) is given by

Table 1. Compact support radii, variance, standard deviation, recommended ranges of h fact, and recommended default h fact settings (hd fact) for the kernel functions available in Phantom.

2.1.4. Iterations for h and ρ

The mutual dependence of ρ and h means that a rootfinding procedure is necessary to solve both (3) and (6) simultaneously. The procedure implemented in Phantom follows Price & Monaghan (Reference Price and Monaghan2004b, Reference Price and Monaghan2007), solving, for each particle, the equation

where ρsum is the density computed from (3) and

from (6). Equation (9) is solved with Newton–Raphson iterations:

where the derivative is given by

The iterations proceed until |h a, new − h a|/h a, 0 < εh, where h a, 0 is the smoothing length of particle a at the start of the iteration procedure and εh is the tolerance. The convergence with Newton–Raphson is fast, with a quadratic reduction in the error at each iteration, meaning that no more than 2–3 iterations are required even with a rapidly changing density field. We avoid further iterations by predicting the smoothing length from the previous timestep according to

where dρa/dt is evaluated from (4).

Since h and ρ are mutually dependent, we store only the smoothing length, from which the density can be obtained at any time via a function call evaluating ρ(h). The default value of εh is 10−4 so that h and ρ can be used interchangeably. Setting a small tolerance does not significantly change the computational cost, as the iterations quickly fall below a tolerance of ‘one neighbour’ according to (7), so any iterations beyond this refer to loops over the same set of neighbours which can be efficiently cached. However, it is important that the tolerance may be enforced to arbitrary precision rather than being an integer as implemented in the public version of Gadget, since (9) expresses a mathematical relationship between h and ρ that is assumed throughout the derivation of the SPH algorithm (see discussion in Price Reference Price2012a). The precision to which this is enforced places a lower limit on the total energy conservation. Fortunately, floating point neighbour numbers are now default in most Gadget-3 variants also.

2.1.5. Kernel functions

We write the kernel function in the form

where C norm is a normalisation constant, the factor of h 3 gives the dimensions of inverse volume, and f(q) is a dimensionless function of ![]() $q \equiv \vert {\bm r}_{a} - {\bm r}_{b} \vert / h$. Various relations for kernels in this form are given in Morris (Reference Morris1996a) and in Appendix B of Price (Reference Price2010). Those used in Phantom are the kernel gradient

$q \equiv \vert {\bm r}_{a} - {\bm r}_{b} \vert / h$. Various relations for kernels in this form are given in Morris (Reference Morris1996a) and in Appendix B of Price (Reference Price2010). Those used in Phantom are the kernel gradient

and the derivative of the kernel with respect to h,

Notice that the ∂W/∂h term in particular can be evaluated simply from the functions needed to compute the density and kernel gradient and hence does not need to be derived separately if a different kernel is used.

2.1.6. Choice of smoothing kernel

The default kernel function in SPH for the last 30 yr (since Monaghan & Lattanzio Reference Monaghan and Lattanzio1985) has been the M 4 cubic spline from the Schoenberg (Reference Schoenberg1946) B-spline family, given by

$$\begin{equation}

f(q) = \left\lbrace \arraycolsep5pt\begin{array}{ll}1 - \displaystyle\frac{3}{2} q^{2} + \displaystyle\frac{3}{4} q^{3}, & 0 \le q < 1; \\[3pt]

\displaystyle\frac{1}{4}(2-q)^3, & 1 \le q < 2; \\[3pt]

0. & q \ge 2, \end{array} \right.

\end{equation}$$

$$\begin{equation}

f(q) = \left\lbrace \arraycolsep5pt\begin{array}{ll}1 - \displaystyle\frac{3}{2} q^{2} + \displaystyle\frac{3}{4} q^{3}, & 0 \le q < 1; \\[3pt]

\displaystyle\frac{1}{4}(2-q)^3, & 1 \le q < 2; \\[3pt]

0. & q \ge 2, \end{array} \right.

\end{equation}$$

where the normalisation constant C norm = 1/π in 3D and the compact support of the function implies that R kern = 2. While the cubic spline kernel is satisfactory for many applications, it is not always the best choice. Most SPH kernels are based on approximating the Gaussian, but with compact support to avoid the ![]() $\mathcal {O}(N^{2})$ computational cost. Convergence in SPH is guaranteed to be second order (∝h 2) to the degree that the finite summations over neighbouring particles approximate integrals (e.g. Monaghan Reference Monaghan1992, Reference Monaghan2005; Price Reference Price2012a). Hence, the choice of kernel and the effect that a given kernel has on the particle distribution are important considerations.

$\mathcal {O}(N^{2})$ computational cost. Convergence in SPH is guaranteed to be second order (∝h 2) to the degree that the finite summations over neighbouring particles approximate integrals (e.g. Monaghan Reference Monaghan1992, Reference Monaghan2005; Price Reference Price2012a). Hence, the choice of kernel and the effect that a given kernel has on the particle distribution are important considerations.

In general, more accurate results will be obtained with a kernel with a larger compact support radius, since it will better approximate the Gaussian which has excellent convergence and stability properties (Morris Reference Morris1996a; Price Reference Price2012a; Dehnen & Aly Reference Dehnen and Aly2012). However, care is required. One should not simply increase h fact for the cubic spline kernel because even though this implies more neighbours [via (7)], it increases the resolution length. For the B-splines, it also leads to the onset of the ‘pairing instability’ where the particle distribution becomes unstable to transverse modes, leading to particles forming close pairs (Thomas & Couchman Reference Thomas and Couchman1992; Morris Reference Morris1996a, Reference Morris1996b; Børve, Omang, & Trulsen Reference Børve, Omang and Trulsen2004; Price Reference Price2012a; Dehnen & Aly Reference Dehnen and Aly2012). This is the motivation of our default choice of h fact = 1.2 for the cubic spline kernel, since it is just short of the maximum neighbour number that can be used while remaining stable to the pairing instability.

A better approach to reducing kernel bias is to keep the same resolution lengthFootnote 3 but to use a kernel that has a larger compact support radius. The traditional approach (e.g. Morris Reference Morris1996a, Reference Morris1996b; Børve et al. Reference Børve, Omang and Trulsen2004; Price Reference Price2012a) has been to use the higher kernels in the B-spline series, i.e. the M 5 quartic which extends to 2.5h

$$\begin{equation}

f(q) = \left\lbrace \arraycolsep5pt\begin{array}{ll}

\left(\displaystyle\frac{5}{2} -q\right)^4 - 5\left(\displaystyle\frac{3}{2} -q\right)^4 & 0 \le q < \displaystyle\frac{1}{2}, \\[6pt]

\quad + 10\left(\displaystyle\frac{1}{2}-q\right)^4,\\[6pt]

\left(\displaystyle\frac{5}{2} -q\right)^4 - 5\left(\displaystyle\frac{3}{2} -q\right)^4, & \displaystyle\frac{1}{2} \le q < \displaystyle\frac{3}{2}, \\[6pt]

\left(\displaystyle\frac{5}{2} -q\right)^4, & \displaystyle\frac{3}{2} \le q < \displaystyle\frac{5}{2}, \\[3pt]

0, & q \ge \frac{5}{2}, \end{array} \right.

\end{equation}$$

$$\begin{equation}

f(q) = \left\lbrace \arraycolsep5pt\begin{array}{ll}

\left(\displaystyle\frac{5}{2} -q\right)^4 - 5\left(\displaystyle\frac{3}{2} -q\right)^4 & 0 \le q < \displaystyle\frac{1}{2}, \\[6pt]

\quad + 10\left(\displaystyle\frac{1}{2}-q\right)^4,\\[6pt]

\left(\displaystyle\frac{5}{2} -q\right)^4 - 5\left(\displaystyle\frac{3}{2} -q\right)^4, & \displaystyle\frac{1}{2} \le q < \displaystyle\frac{3}{2}, \\[6pt]

\left(\displaystyle\frac{5}{2} -q\right)^4, & \displaystyle\frac{3}{2} \le q < \displaystyle\frac{5}{2}, \\[3pt]

0, & q \ge \frac{5}{2}, \end{array} \right.

\end{equation}$$

where C norm = 1/(20π), and the M 6 quintic extending to 3h,

$$\begin{equation}

f(q) = \left\lbrace \arraycolsep5pt\begin{array}{ll}(3-q)^5 - 6(2-q)^5 + 15(1-q)^5, & 0 \le q < 1, \\

(3-q)^5 - 6(2-q)^5, & 1 \le q < 2, \\[3pt]

(3-q)^5, & 2 \le q < 3, \\[3pt]

0, & q \ge 3, \end{array} \right.

\end{equation}$$

$$\begin{equation}

f(q) = \left\lbrace \arraycolsep5pt\begin{array}{ll}(3-q)^5 - 6(2-q)^5 + 15(1-q)^5, & 0 \le q < 1, \\

(3-q)^5 - 6(2-q)^5, & 1 \le q < 2, \\[3pt]

(3-q)^5, & 2 \le q < 3, \\[3pt]

0, & q \ge 3, \end{array} \right.

\end{equation}$$

where C norm = 1/(120π) in 3D. The quintic in particular gives results virtually indistinguishable from the Gaussian for most problems.

Recently, there has been tremendous interest in the use of the Wendland (Reference Wendland1995) kernels, particularly since Dehnen & Aly (Reference Dehnen and Aly2012) showed that they are stable to the pairing instability at all neighbour numbers despite having a Gaussian-like shape and compact support. These functions are constructed as the unique polynomial functions with compact support but with a positive Fourier transform, which turns out to be a necessary condition for stability against the pairing instability (Dehnen & Aly Reference Dehnen and Aly2012). The 3D Wendland kernels scaled to a radius of 2h are given by C 2:

$$\begin{equation}

f(q) = \left\lbrace \arraycolsep5pt\begin{array}{@{}ll@{}}\left(1 - \displaystyle\frac{q}{2} \right)^{4} \left(2 q + 1\right), & q < 2, \\[3pt]

0, & q \ge 2, \end{array}\right.

\end{equation}$$

$$\begin{equation}

f(q) = \left\lbrace \arraycolsep5pt\begin{array}{@{}ll@{}}\left(1 - \displaystyle\frac{q}{2} \right)^{4} \left(2 q + 1\right), & q < 2, \\[3pt]

0, & q \ge 2, \end{array}\right.

\end{equation}$$

where C norm = 21/(16π), the C 4 kernel

$$\begin{equation}

f(q) = \left\lbrace \arraycolsep5pt\begin{array}{@{}ll@{}}\left(1 - \displaystyle\frac{q}{2}\right)^{6} \left(\displaystyle\frac{35 q^{2}}{12} + 3 q + 1\right), & q < 2, \\[3pt]

0, & q\ge 2, \end{array}\right.

\end{equation}$$

$$\begin{equation}

f(q) = \left\lbrace \arraycolsep5pt\begin{array}{@{}ll@{}}\left(1 - \displaystyle\frac{q}{2}\right)^{6} \left(\displaystyle\frac{35 q^{2}}{12} + 3 q + 1\right), & q < 2, \\[3pt]

0, & q\ge 2, \end{array}\right.

\end{equation}$$

where C norm = 495/(256π), and the C 6 kernel

$$\begin{equation}

f(q) = \left\lbrace \arraycolsep5pt\begin{array}{@{}ll@{}}\left(1 - \displaystyle\frac{q}{2}\right)^{8} \left(4 q^{3} + \displaystyle\frac{25 q^{2}}{4} + 4 q + 1\right), & q < 2, \\[3pt]

0, & q \ge 2, \end{array}\right.

\end{equation}$$

$$\begin{equation}

f(q) = \left\lbrace \arraycolsep5pt\begin{array}{@{}ll@{}}\left(1 - \displaystyle\frac{q}{2}\right)^{8} \left(4 q^{3} + \displaystyle\frac{25 q^{2}}{4} + 4 q + 1\right), & q < 2, \\[3pt]

0, & q \ge 2, \end{array}\right.

\end{equation}$$

where C norm = 1365/(512π). Figure 1 graphs f(q) and its first and second derivative for each of the kernels available in Phantom.

Figure 1. Smoothing kernels available in Phantom (solid lines) together with their first (dashed lines) and second (dotted lines) derivatives. Wendland kernels in Phantom (bottom row) are given compact support radii of 2, whereas the B-spline kernels (top row) adopt the traditional practice where the support radius increases by 0.5. Thus, use of alternative kernels requires adjustment of h fact, the ratio of smoothing length to particle spacing (see Table 1).

Several authors have argued for use of the Wendland kernels by default. For example, Rosswog (Reference Rosswog2015) found best results on simple test problems using the C 6 Wendland kernel. However, ‘best’ in that case implied using an average of 356 neighbours in 3D (i.e. h fact = 2.2 with R kern = 2.0) which is a factor of 6 more expensive than the standard approach. Similarly, Hu et al. (Reference Hu, Naab, Walch, Moster and Oser2014) recommend the C 4 kernel with 200 neighbours which is 3.5 times more expensive. The large number of neighbours are needed because the Wendland kernels are always worse than the B-splines for a given number of neighbours due to the positive Fourier transform, meaning that the kernel bias (related to the Fourier transform) is always positive where the B-spline errors oscillate around zero (Dehnen & Aly Reference Dehnen and Aly2012). Hence, whether or not this additional cost is worthwhile depends on the application. A more comprehensive analysis would be valuable here, as the ‘best’ choice of kernel remains an open question (see also the kernels proposed by Cabezón, García-Senz, & Relaño Reference Cabezón, García-Senz and Relaño2008; García-Senz et al. Reference García-Senz, Cabezón, Escartín and Ebinger2014). An even broader question regards the kernel used for dissipation terms, for gravitational force softening and for drag in two-fluid applications (discussed further in Section 2.13). For example, Laibe & Price (Reference Laibe and Price2012a) found that double-hump-shaped kernels led to more than an order of magnitude improvement in accuracy when used for drag terms.

A simple and practical approach to checking that kernel bias does not affect the solution that we have used and advocate when using Phantom is to first attempt a simulation with the cubic spline, but then to check the results with a low resolution calculation using the quintic kernel. If the results are identical, then it indicates that the kernel bias is not important, but if not, then use of smoother but costlier kernels such as M 6 or C 6 may be warranted. Wendland kernels are mainly useful for preventing the pairing instability and are necessary if one desires to employ a large number of neighbours.

2.1.7. Neighbour finding

Finding neighbours is the main computational expense to any SPH code. Earlier versions of Phantom contained three different options for neighbour-finding: a Cartesian grid, a cylindrical grid, and a kd-tree. This was because we wrote the code originally with non-self-gravitating problems in mind, for which the overhead associated with a treecode is unnecessary. Since the implementation of self-gravity in Phantom the kd-tree has become the default, and is now sufficiently well optimised that the fixed-grid modules are more efficient only for simulations that do not employ either self-gravity or individual particle timesteps, which are rare in astrophysics.

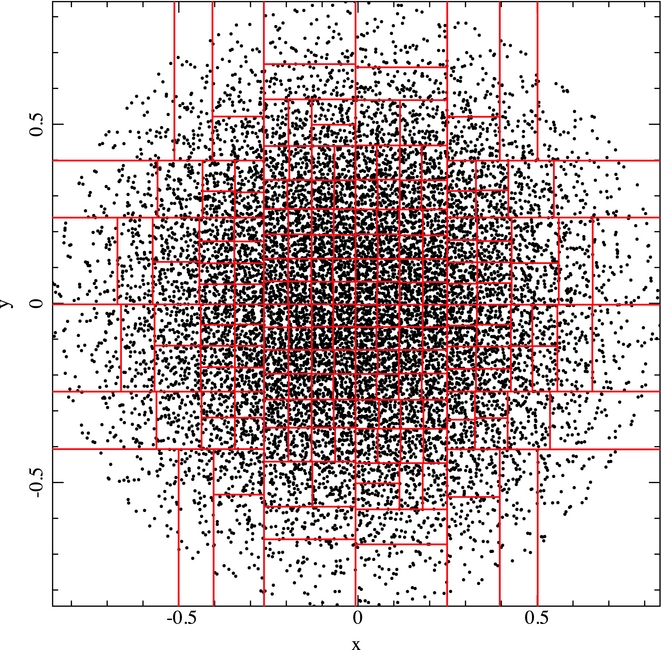

A key optimisation strategy employed in Phantom is to perform the neighbour search for groups of particles. The results of this search (i.e. positions of all trial neighbours) are then cached and used to check for neighbours for individual particles in the group. Our kd-tree algorithm closely follows Gafton & Rosswog (Reference Gafton and Rosswog2011), splitting the particles recursively based on the centre of mass and bisecting the longest axis at each level (Figure 2). The tree build is refined until a cell contains less than N min particles, which is then referred to as a ‘leaf node’. By default, N min = 10. The neighbour search is then performed once for each leaf node. Further details are given in Appendix A.3.1.

Figure 2. Example of the kd-tree build. For illustrative purposes only, we have constructed a 2D version of the tree on the projected particle distribution in the x–y plane of the particle distribution from a polytrope test with 13 115 particles. Each level of the tree recursively splits the particle distribution in half, bisecting the longest axis at the centre of mass until the number of particles in a given cell is <N min. For clarity, we have used N min = 100 in the above example, while N min = 10 by default.

2.2. Hydrodynamics

2.2.1. Compressible hydrodynamics

The equations of compressible hydrodynamics are solved in the form

$$\begin{eqnarray}

\frac{{\rm d}{\bm v}}{{\rm d}t} &=& -\frac{\nabla P}{\rho } + \Pi _{\rm shock} + {\bm a}_{\rm ext}({\bm r}, t) \nonumber \\

&& +\, {\bm a}_{\rm sink-gas} + {\bm a}_{\rm selfgrav},

\end{eqnarray}$$

$$\begin{eqnarray}

\frac{{\rm d}{\bm v}}{{\rm d}t} &=& -\frac{\nabla P}{\rho } + \Pi _{\rm shock} + {\bm a}_{\rm ext}({\bm r}, t) \nonumber \\

&& +\, {\bm a}_{\rm sink-gas} + {\bm a}_{\rm selfgrav},

\end{eqnarray}$$

where P is the pressure, u is the specific internal energy, ![]() ${\bm a}_{\rm ext}$,

${\bm a}_{\rm ext}$, ![]() ${\bm a}_{\rm sink-gas}$ and

${\bm a}_{\rm sink-gas}$ and ![]() ${\bm a}_{\rm selfgrav}$ refer to (optional) accelerations from ‘external’ or ‘body’ forces (Section 2.4), sink particles (Section 2.8), and self-gravity (Section 2.12), respectively. Πshock and Λshock are dissipation terms required to give the correct entropy increase at a shock front, and Λcool is a cooling term.

${\bm a}_{\rm selfgrav}$ refer to (optional) accelerations from ‘external’ or ‘body’ forces (Section 2.4), sink particles (Section 2.8), and self-gravity (Section 2.12), respectively. Πshock and Λshock are dissipation terms required to give the correct entropy increase at a shock front, and Λcool is a cooling term.

2.2.2. Equation of state

The equation set is closed by an equation of state (EOS) relating the pressure to the density and/or internal energy. For an ideal gas, this is given by

where γ is the adiabatic index and the sound speed c s is given by

$$\begin{equation}

c_{\rm s} = \sqrt{\frac{\gamma P}{\rho }}.

\end{equation}$$

$$\begin{equation}

c_{\rm s} = \sqrt{\frac{\gamma P}{\rho }}.

\end{equation}$$

The internal energy, u, can be related to the gas temperature, T, using

giving

where k B is Boltzmann’s constant, μ is the mean molecular weight, and m H is the mass of a hydrogen atom. Thus, to infer the temperature, one needs to specify a composition, but only the internal energy affects the gas dynamics. Equation (25) with γ = 5/3 is the default EOS in Phantom.

In the case where shocks are assumed to radiate away all of the heat generated at the shock front (i.e. Λshock = 0) and there is no cooling (Λcool = 0), (24) becomes simply, using (2)

which, using (25) can be integrated to give

where K is the polytropic constant. Even more simply, in the case where the temperature is assumed constant, or prescribed as a function of position, the EOS is simply

In both of these cases, (30) and (31), the internal energy does not need to be stored. In this case, the temperature is effectively set by the value of K (and the density if γ ≠ 1). Specifically,

2.2.3. Code units

For pure hydrodynamics, physical units are irrelevant to the numerical results since (1)–(2) and (23)–(24) are scale free to all but the Mach number. Hence, setting physical units is only useful when comparing simulations with Nature, when physical heating or cooling rates are applied via (24), or when one wishes to interpret the results in terms of temperature using (28) or (32).

In the case where gravitational forces are applied, either using an external force (Section 2.4) or using self-gravity (Section 2.12), we adopt the standard procedure of transforming units such that G = 1 in code units, i.e.

$$\begin{equation}

u_{\rm time} = \sqrt{\frac{u_{\rm dist}^{3}}{{\rm G}u_{\rm mass}}},

\end{equation}$$

$$\begin{equation}

u_{\rm time} = \sqrt{\frac{u_{\rm dist}^{3}}{{\rm G}u_{\rm mass}}},

\end{equation}$$

where u time, u dist, and u mass are the units of time, length, and mass, respectively. Additional constraints apply when using relativistic terms (Section 2.4.5) or magnetic fields (Section 2.10.3).

2.2.4. Equation of motion in SPH

We follow the variable smoothing length formulation described by Price (Reference Price2012a), Price & Federrath (Reference Price and Federrath2010), and Lodato & Price (Reference Lodato and Price2010). We discretise (23) using

$$\begin{eqnarray}

\frac{{\rm d}{\bm v}_{a}}{{\rm d}t} &= & - \sum _{b} m_{b} \left[ \frac{P_{a} + q^{a}_{ab}}{\rho _{a}^{2}\Omega _{a}}\nabla _a W_{ab}(h_{a}) + \frac{P_{b}+q^{b}_{ab}}{\rho _{b}^{2}\Omega _{b}}\nabla _a W_{ab}(h_{b}) \right] \nonumber \\

&& +\, {\bm a}_{\rm ext} ({\bm r}_{a}, t) + {\bm a}^{a}_{\rm sink-gas} + {\bm a}^{a}_{\rm selfgrav},

\end{eqnarray}$$

$$\begin{eqnarray}

\frac{{\rm d}{\bm v}_{a}}{{\rm d}t} &= & - \sum _{b} m_{b} \left[ \frac{P_{a} + q^{a}_{ab}}{\rho _{a}^{2}\Omega _{a}}\nabla _a W_{ab}(h_{a}) + \frac{P_{b}+q^{b}_{ab}}{\rho _{b}^{2}\Omega _{b}}\nabla _a W_{ab}(h_{b}) \right] \nonumber \\

&& +\, {\bm a}_{\rm ext} ({\bm r}_{a}, t) + {\bm a}^{a}_{\rm sink-gas} + {\bm a}^{a}_{\rm selfgrav},

\end{eqnarray}$$

where the qaab and qbab terms represent the artificial viscosity (discussed in Section 2.2.7).

2.2.5. Internal energy equation

The internal energy equation (24) is discretised using the time derivative of the density sum (c.f. 29), which from (4) gives

where ![]() ${\bm v}_{ab} \equiv {\bm v}_a - {\bm v}_b$. In the variational formulation of SPH (e.g. Price Reference Price2012a), this expression is used as a constraint to derive (34), which guarantees both the conservation of energy and entropy (the latter in the absence of dissipation terms). The shock capturing terms in the internal energy equation are discussed below.

${\bm v}_{ab} \equiv {\bm v}_a - {\bm v}_b$. In the variational formulation of SPH (e.g. Price Reference Price2012a), this expression is used as a constraint to derive (34), which guarantees both the conservation of energy and entropy (the latter in the absence of dissipation terms). The shock capturing terms in the internal energy equation are discussed below.

By default, we assume an adiabatic gas, meaning that PdV work and shock heating terms contribute to the thermal energy of the gas, no energy is radiated to the environment, and total energy is conserved. To approximate a radiative gas, one may set one or both of these terms to zero. Neglecting the shock heating term, Λshock, gives an approximation equivalent to a polytropic EOS (30), as described in Section 2.2.2. Setting both shock and work contributions to zero implies that du/dt = 0, meaning that each particle will simply retain its initial temperature.

2.2.6. Conservation of energy in SPH

Does evolving the internal energy equation imply that total energy is not conserved? Wrong! Total energy in SPH, for the case of hydrodynamics, is given by

Taking the (Lagrangian) time derivative, we find that conservation of energy corresponds to

Inserting our expressions (34) and (35), and neglecting for the moment dissipative terms and external forces, we find

$$\begin{eqnarray}

\frac{{\rm d}E}{{\rm d}t} &=& -\sum _{a} \sum _{b} m_{a} m_{b} \left[ \frac{P_{a}\bm {v}_b}{\rho _{a}^{2}\Omega _{a}}\cdot \nabla _a W_{ab}(h_{a}) \right. \nonumber \\

&& \left. + \frac{P_{b}\bm {v}_a}{\rho _{b}^{2}\Omega _{b}}\cdot \nabla _a W_{ab}(h_{b}) \right] = 0.

\end{eqnarray}$$

$$\begin{eqnarray}

\frac{{\rm d}E}{{\rm d}t} &=& -\sum _{a} \sum _{b} m_{a} m_{b} \left[ \frac{P_{a}\bm {v}_b}{\rho _{a}^{2}\Omega _{a}}\cdot \nabla _a W_{ab}(h_{a}) \right. \nonumber \\

&& \left. + \frac{P_{b}\bm {v}_a}{\rho _{b}^{2}\Omega _{b}}\cdot \nabla _a W_{ab}(h_{b}) \right] = 0.

\end{eqnarray}$$

The double summation on the right-hand side equals zero because the kernel gradient, and hence the overall sum, is antisymmetric. That is, ∇aW ab = −∇bW ba. This means one can relabel the summation indices arbitrarily in one half of the sum, and add it to one half of the original sum to give zero. One may straightforwardly verify that this remains true when one includes the dissipative terms (see below).

This means that even though we employ the internal energy equation, total energy remains conserved to machine precision in the spatial discretisation. That is, energy is conserved irrespective of the number of particles, the number of neighbours or the choice of smoothing kernel. The only non-conservation of energy arises from the ordinary differential equation solver one employs to solve the left-hand side of the equations. We thus employ a symplectic time integration scheme in order to preserve the conservation properties as accurately as possible (Section 2.3.1).

2.2.7. Shock capturing: momentum equation

The shock capturing dissipation terms are implemented following Monaghan (Reference Monaghan1997), derived by analogy with Riemann solvers from the special relativistic dissipation terms proposed by Chow & Monaghan (Reference Chow and Monaghan1997). These were extended by Price & Monaghan (Reference Price and Monaghan2004b, Reference Price and Monaghan2005) to MHD and recently to dust–gas mixtures by Laibe & Price (Reference Laibe and Price2014b). In a recent paper, Puri & Ramachandran (Reference Puri and Ramachandran2014) found this approach, along with the Morris & Monaghan (Reference Morris and Monaghan1997) switch (which they referred to as the ‘Monaghan–Price–Morris’ formulation) to be the most accurate and robust method for shock capturing in SPH when compared to several other approaches, including Godunov SPH (e.g. Inutsuka Reference Inutsuka2002; Cha & Whitworth Reference Cha and Whitworth2003).

The formulation in Phantom differs from that given in Price (Reference Price2012a) only by the way that the density and signal speed in the q terms are averaged, as described in Price & Federrath (Reference Price and Federrath2010) and Lodato & Price (Reference Lodato and Price2010). That is, we use

$$\begin{equation}

\Pi _{\rm shock}^{a} \equiv -\sum _{b} m_{b} \left[ \frac{q^{a}_{ab}}{\rho _{a}^{2}\Omega _{a}}\nabla _a W_{ab}(h_{a}) + \frac{q^{b}_{ab}}{\rho _{b}^{2}\Omega _{b}}\nabla _a W_{ab}(h_{b}) \right],

\end{equation}$$

$$\begin{equation}

\Pi _{\rm shock}^{a} \equiv -\sum _{b} m_{b} \left[ \frac{q^{a}_{ab}}{\rho _{a}^{2}\Omega _{a}}\nabla _a W_{ab}(h_{a}) + \frac{q^{b}_{ab}}{\rho _{b}^{2}\Omega _{b}}\nabla _a W_{ab}(h_{b}) \right],

\end{equation}$$

where

$$\begin{equation}

q^{a}_{ab} = \left\lbrace \arraycolsep5pt\begin{array}{@{}ll@{}}-\displaystyle\frac{1}{2} \rho _{a} v_{{\rm sig}, a} {\bm v}_{ab} \cdot \hat{\bm r}_{ab}, & {\bm v}_{ab} \cdot \hat{\bm r}_{ab} < 0 \\[6pt]

0 & \text{otherwise,} \end{array}\right.

\end{equation}$$

$$\begin{equation}

q^{a}_{ab} = \left\lbrace \arraycolsep5pt\begin{array}{@{}ll@{}}-\displaystyle\frac{1}{2} \rho _{a} v_{{\rm sig}, a} {\bm v}_{ab} \cdot \hat{\bm r}_{ab}, & {\bm v}_{ab} \cdot \hat{\bm r}_{ab} < 0 \\[6pt]

0 & \text{otherwise,} \end{array}\right.

\end{equation}$$

where ![]() ${\bm v}_{ab} \equiv {\bm v}_{a} - {\bm v}_{b}$,

${\bm v}_{ab} \equiv {\bm v}_{a} - {\bm v}_{b}$, ![]() $\hat{\bm r}_{ab} \equiv ({\bm r}_{a} - {\bm r}_{b})/\vert {\bm r}_{a} - {\bm r}_{b} \vert$ is the unit vector along the line of sight between the particles, and v sig is the maximum signal speed, which depends on the physics implemented. For hydrodynamics, this is given by

$\hat{\bm r}_{ab} \equiv ({\bm r}_{a} - {\bm r}_{b})/\vert {\bm r}_{a} - {\bm r}_{b} \vert$ is the unit vector along the line of sight between the particles, and v sig is the maximum signal speed, which depends on the physics implemented. For hydrodynamics, this is given by

where in general αAVa ∈ [0, 1] is controlled by a switch (see Section 2.2.9), while βAV = 2 by default.

Importantly, α does not multiply the βAV term. The βAV term provides a second-order Von Neumann & Richtmyer-like term that prevents particle interpenetration (e.g. Lattanzio et al. Reference Lattanzio, Monaghan, Pongracic and Schwarz1986; Monaghan Reference Monaghan1989), and thus βAV ⩾ 2 is needed wherever particle penetration may occur. This is important in accretion disc simulations where use of a low α may be acceptable in the absence of strong shocks, but a low β will lead to particle penetration of the disc midplane, which is the cause of a number of convergence issues (Meru & Bate Reference Meru and Bate2011, Reference Meru and Bate2012). Price & Federrath (Reference Price and Federrath2010) found that βAV = 4 was necessary at high Mach number (M ≳ 5) to maintain a sharp shock structure, which despite nominally increasing the viscosity was found to give less dissipation overall because particle penetration no longer occurred at shock fronts.

2.2.8. Shock capturing: internal energy equation

The key insight from Chow & Monaghan (Reference Chow and Monaghan1997) was that shock capturing not only involves a viscosity term but involves dissipating the jump in each component of the energy, implying a conductivity term in hydrodynamics and resistive dissipation in MHD (see Section 2.10.5). The resulting contribution to the internal energy equation is given by (e.g. Price Reference Price2012a)

$$\begin{eqnarray}

\Lambda _{\rm shock} & \equiv& - \frac{1}{\Omega _{a}\rho _a} \sum _{b} m_{b} v_{{\rm sig}, a} \frac{1}{2} ({\bm v}_{ab} \cdot \hat{\bm r}_{ab})^{2} F_{ab}(h_{a}) \nonumber \\

&& +\, \sum _{b} m_{b} \alpha _{u} v^{u}_{\rm sig} (u_{a} - u_{b}) \frac{1}{2} \left[ \frac{F_{ab} (h_{a})}{\Omega _{a} \rho _{a}} + \frac{F_{ab} (h_{b})}{\Omega _{b} \rho _{b}} \right] \nonumber \\

&& +\, \Lambda _{\rm artres},

\end{eqnarray}$$

$$\begin{eqnarray}

\Lambda _{\rm shock} & \equiv& - \frac{1}{\Omega _{a}\rho _a} \sum _{b} m_{b} v_{{\rm sig}, a} \frac{1}{2} ({\bm v}_{ab} \cdot \hat{\bm r}_{ab})^{2} F_{ab}(h_{a}) \nonumber \\

&& +\, \sum _{b} m_{b} \alpha _{u} v^{u}_{\rm sig} (u_{a} - u_{b}) \frac{1}{2} \left[ \frac{F_{ab} (h_{a})}{\Omega _{a} \rho _{a}} + \frac{F_{ab} (h_{b})}{\Omega _{b} \rho _{b}} \right] \nonumber \\

&& +\, \Lambda _{\rm artres},

\end{eqnarray}$$

where the first term provides the viscous shock heating, the second term provides an artificial thermal conductivity, F ab is defined as in (15), and Λartres is the heating due to artificial resistivity [Equation (182)]. The signal speed we use for conductivity term differs from the one used for viscosity, as discussed by Price (Reference Price2008, Reference Price2012a). In Phantom, we use

$$\begin{equation}

v^{u}_{\rm sig} = \sqrt{\frac{\vert P_{a} - P_{b} \vert }{\overline{\rho }_{ab}}}

\end{equation}$$

$$\begin{equation}

v^{u}_{\rm sig} = \sqrt{\frac{\vert P_{a} - P_{b} \vert }{\overline{\rho }_{ab}}}

\end{equation}$$

for simulations that do not involve self-gravity or external body forces (Price Reference Price2008), and

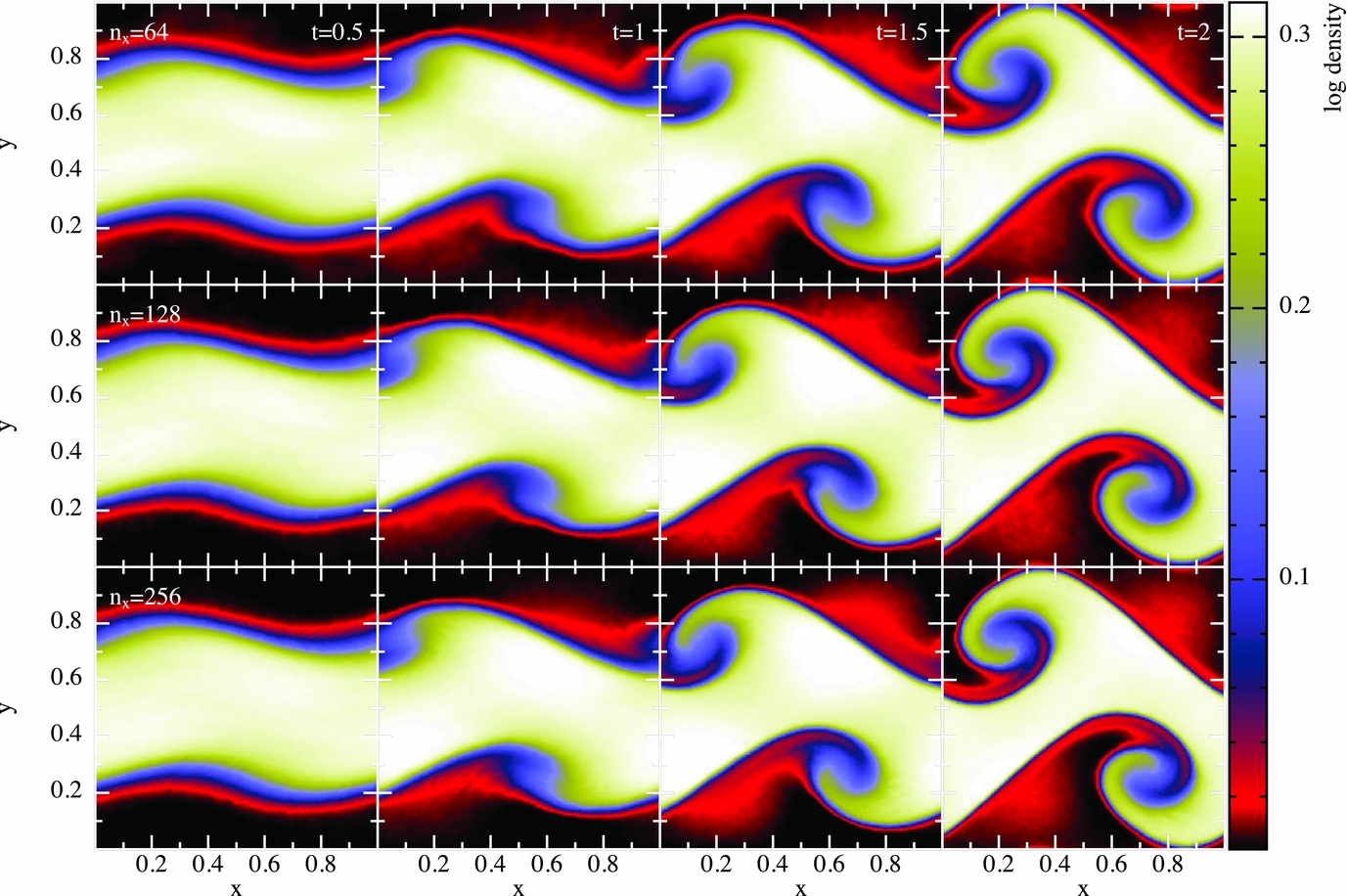

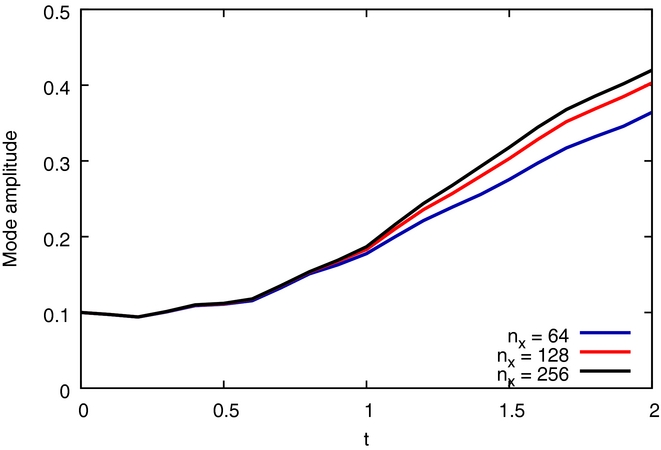

for simulations that do (Wadsley, Veeravalli, & Couchman Reference Wadsley, Veeravalli and Couchman2008). The importance of the conductivity term for treating contact discontinuities was highlighted by Price (Reference Price2008), explaining the poor results found by Agertz et al. (Reference Agertz2007) in SPH simulations of Kelvin–Helmholtz instabilities run across contact discontinuities (discussed further in Section 5.1.4). With (44), we have found there is no need for further switches to reduce conductivity (e.g. Price Reference Price2004; Price & Monaghan Reference Price and Monaghan2005; Valdarnini Reference Valdarnini2016), since the effective thermal conductivity κ is second order in the smoothing length (∝h 2). Phantom therefore uses αu = 1 by default in (42) and we have not yet found a situation where this leads to excess smoothing.

It may be readily shown that the total energy remains conserved in the presence of dissipation by combining (42) with the corresponding dissipative terms in (34). The contribution to the entropy from both viscosity and conductivity is also positive definite (see the appendix in Price & Monaghan Reference Price and Monaghan2004b for the mathematical proof in the case of conductivity).

2.2.9. Shock detection

The standard approach to reducing dissipation in SPH away from shocks for the last 15 yr has been the switch proposed by Morris & Monaghan (Reference Morris and Monaghan1997), where the dimensionless viscosity parameter α is evolved for each particle a according to

where τ ≡ h/(σdecayv sig) and σdecay = 0.1 by default. We set v sig in the decay time equal to the sound speed to avoid the need to store dα/dt, since ![]() $\nabla \cdot {\bm v}$ is already stored in order to compute (4). This is the switch used for numerous turbulence applications with Phantom (e.g. Price & Federrath Reference Price and Federrath2010; Price et al. Reference Price, Federrath and Brunt2011; Tricco et al. Reference Tricco, Price and Federrath2016b) where it is important to minimise numerical dissipation in order to maximise the Reynolds number (e.g. Valdarnini Reference Valdarnini2011; Price Reference Price2012b).

$\nabla \cdot {\bm v}$ is already stored in order to compute (4). This is the switch used for numerous turbulence applications with Phantom (e.g. Price & Federrath Reference Price and Federrath2010; Price et al. Reference Price, Federrath and Brunt2011; Tricco et al. Reference Tricco, Price and Federrath2016b) where it is important to minimise numerical dissipation in order to maximise the Reynolds number (e.g. Valdarnini Reference Valdarnini2011; Price Reference Price2012b).

More recently, Cullen & Dehnen (Reference Cullen and Dehnen2010) proposed a more advanced switch using the time derivative of the velocity divergence. A modified version based on the gradient of the velocity divergence was also proposed by Read & Hayfield (Reference Read and Hayfield2012). We implement a variation on the Cullen & Dehnen (Reference Cullen and Dehnen2010) switch, using a shock indicator of the form

where

is a modification of the Balsara (Reference Balsara1995) viscosity limiter for shear flows. We use this to set α according to

where c s is the sound speed and αmax = 1. We use c s in the expression for αloc also for MHD (Section 2.10) since we found using the magnetosonic speed led to a poor treatment of MHD shocks. If αloc, a > αa, we set αa = αloc, a, otherwise we evolve αa according to

where τ is defined as in the Morris & Monaghan (Reference Morris and Monaghan1997) version, above. We evolve α in the predictor part of the integrator only, i.e. with a first-order time integration, to avoid complications in the corrector step. However, we perform the predictor step implicitly using a backward Euler method, i.e.

which ensures that the decay is stable regardless of the timestep (we do this for the Morris & Monaghan method also).

We use the method outlined in Appendix B3 of Cullen & Dehnen (Reference Cullen and Dehnen2010) to compute ![]() ${\rm d}(\nabla \cdot {\bm v}_{a})/{{\rm d}t}$. That is, we first compute the gradient tensors of the velocity,

${\rm d}(\nabla \cdot {\bm v}_{a})/{{\rm d}t}$. That is, we first compute the gradient tensors of the velocity, ![]() ${\bm v}$, and acceleration,

${\bm v}$, and acceleration, ![]() ${\bm a}$ (used from the previous timestep), during the density loop using an SPH derivative operator that is exact to linear order, that is, with the matrix correction outlined in Price (Reference Price2004, Reference Price2012a), namely

${\bm a}$ (used from the previous timestep), during the density loop using an SPH derivative operator that is exact to linear order, that is, with the matrix correction outlined in Price (Reference Price2004, Reference Price2012a), namely

$$\begin{equation}

R^{ij}_{a} \frac{\partial v^{k}_{a}}{\partial x^{j}_{a}} = \sum _{b} m_{b} \left(v^{k}_{b} - v^{k}_{a}\right) \frac{\partial W_{ab}(h_{a})}{\partial x^{i}} ,

\end{equation}$$

$$\begin{equation}

R^{ij}_{a} \frac{\partial v^{k}_{a}}{\partial x^{j}_{a}} = \sum _{b} m_{b} \left(v^{k}_{b} - v^{k}_{a}\right) \frac{\partial W_{ab}(h_{a})}{\partial x^{i}} ,

\end{equation}$$

where

and repeated tensor indices imply summation. Finally, we construct the time derivative of the velocity divergence according to

where, as previously, repeated i and j indices imply summation. In Cartesian coordinates, the last term in (53) can be written out explicitly using

$$\begin{eqnarray}

\frac{\partial v_{a}^{i}}{\partial x_{a}^{j}} \frac{\partial v_{a}^{j}}{\partial x_{a}^{i}} & =& \left(\frac{\partial v^{x}}{\partial x} \right)^{2} + \left(\frac{\partial v^{y}}{\partial y} \right)^{2} + \left(\frac{\partial v^{z}}{\partial z} \right)^{2} \nonumber \\

& &+\, 2 \left[\frac{\partial v^{x}}{\partial y} \frac{\partial v^{y}}{\partial x} + \frac{\partial v^{x}}{\partial z} \frac{\partial v^{z}}{\partial x} + \frac{\partial v^{z}}{\partial y} \frac{\partial v^{y}}{\partial z}\right].

\end{eqnarray}$$

$$\begin{eqnarray}

\frac{\partial v_{a}^{i}}{\partial x_{a}^{j}} \frac{\partial v_{a}^{j}}{\partial x_{a}^{i}} & =& \left(\frac{\partial v^{x}}{\partial x} \right)^{2} + \left(\frac{\partial v^{y}}{\partial y} \right)^{2} + \left(\frac{\partial v^{z}}{\partial z} \right)^{2} \nonumber \\

& &+\, 2 \left[\frac{\partial v^{x}}{\partial y} \frac{\partial v^{y}}{\partial x} + \frac{\partial v^{x}}{\partial z} \frac{\partial v^{z}}{\partial x} + \frac{\partial v^{z}}{\partial y} \frac{\partial v^{y}}{\partial z}\right].

\end{eqnarray}$$

2.2.10. Cooling

The cooling term Λcool can be set either from detailed chemical calculations (Section 2.14.1) or, for discs, by the simple ‘β-cooling’ prescription of Gammie (Reference Gammie2001), namely

where

with βcool an input parameter to the code specifying the cooling timescale in terms of the local orbital time. We compute Ω in (56) using Ω ≡ 1/(x 2 + y 2 + z 2)3/2, i.e. assuming Keplerian rotation around a central object with mass equal to unity, with G = 1 in code units.

2.2.11. Conservation of linear and angular momentum

The total linear momentum is given by

such that conservation of momentum corresponds to

Inserting our discrete equation (34), we find

$$\begin{eqnarray}

\frac{{\rm d}\bm {P}}{{\rm d}t} &=& \sum _{a} \sum _{b} m_a m_{b} \left[ \frac{P_{a} + q^{a}_{ab}}{\rho _{a}^{2}\Omega _{a}}\nabla _a W_{ab}(h_{a}) \right. \nonumber \\

&& \left. +\, \frac{P_{b}+q^{b}_{ab}}{\rho _{b}^{2}\Omega _{b}}\nabla _a W_{ab}(h_{b}) \right] = 0,

\end{eqnarray}$$

$$\begin{eqnarray}

\frac{{\rm d}\bm {P}}{{\rm d}t} &=& \sum _{a} \sum _{b} m_a m_{b} \left[ \frac{P_{a} + q^{a}_{ab}}{\rho _{a}^{2}\Omega _{a}}\nabla _a W_{ab}(h_{a}) \right. \nonumber \\

&& \left. +\, \frac{P_{b}+q^{b}_{ab}}{\rho _{b}^{2}\Omega _{b}}\nabla _a W_{ab}(h_{b}) \right] = 0,

\end{eqnarray}$$

where, as for the total energy (Section 2.2.6), the double summation is zero because of the antisymmetry of the kernel gradient. The same argument applies to the conservation of angular momentum,

(see e.g. Price Reference Price2012a for a detailed proof). As with total energy, this means linear and angular momentum are exactly conserved by our SPH scheme to the accuracy with which they are conserved by the timestepping scheme.

In Phantom, linear and angular momentum are both conserved to round-off error (typically ~10−16 in double precision) with global timestepping, but exact conservation is violated when using individual particle timesteps or when using the kd-tree to compute gravitational forces. The magnitude of these quantities, as well as the total energy and the individual components of energy (kinetic, internal, potential, and magnetic), should thus be monitored by the user at runtime. Typically with individual timesteps, one should expect energy conservation to ΔE/E ~ 10−3 and linear and angular momentum conservation to ~10−6 with default code settings. The code execution is aborted if conservation errors exceed 10%.

2.3. Time integration

2.3.1. Timestepping algorithm

We integrate the equations of motion using a generalisation of the Leapfrog integrator which is reversible in the case of both velocity dependent forces and derivatives which depend on the velocity field. The basic integrator is the Leapfrog method in ‘Kick–Drift–Kick’ or ‘Velocity Verlet’ form (Verlet Reference Verlet1967), where the positions and velocities of particles are updated from time t n to t n + 1 according to

where Δt ≡ t n + 1 − t n. This is identical to the formulation of Leapfrog used in other astrophysical SPH codes (e.g. Springel Reference Springel2005; Wadsley et al. Reference Wadsley, Stadel and Quinn2004). The Verlet scheme, being both reversible and symplectic (e.g. Hairer, Lubich, & Wanner Reference Hairer, Lubich and Wanner2003), preserves the Hamiltonian nature of the SPH algorithm (e.g. Gingold & Monaghan Reference Gingold and Monaghan1982b; Monaghan & Price Reference Monaghan and Price2001). In particular, both linear and angular momentum are exactly conserved, there is no long-term energy drift, and phase space volume is conserved (e.g. for orbital dynamics). In SPH, this is complicated by velocity-dependent terms in the acceleration from the shock-capturing dissipation terms. In this case, the corrector step, (64), becomes implicit. The approach we take is to notice that these terms are not usually dominant over the position-dependent terms. Hence, we use a first-order prediction of the velocity, as follows:

At the end of the step, we then check if the error in the first-order prediction is less than some tolerance ε according to

where ![]() ${\bm v}^{\rm mag}$ is the mean velocity on all SPH particles (we set the error to zero if

${\bm v}^{\rm mag}$ is the mean velocity on all SPH particles (we set the error to zero if ![]() $\vert {\bm v}^{\rm mag}\vert = 0$) and by default εv = 10−2. If this criterion is violated, then we recompute the accelerations by replacing

$\vert {\bm v}^{\rm mag}\vert = 0$) and by default εv = 10−2. If this criterion is violated, then we recompute the accelerations by replacing ![]() ${\bm v}^{*}$ with

${\bm v}^{*}$ with ![]() ${\bm v}^{n+1}$ and iterating (68) and (69) until the criterion in (70) is satisfied. In practice, this happens rarely, but occurs for example in the first few steps of the Sedov problem where the initial conditions are discontinuous (Section 5.1.3). As each iteration is as expensive as halving the timestep, we also constrain the subsequent timestep such that iterations should not occur, i.e.

${\bm v}^{n+1}$ and iterating (68) and (69) until the criterion in (70) is satisfied. In practice, this happens rarely, but occurs for example in the first few steps of the Sedov problem where the initial conditions are discontinuous (Section 5.1.3). As each iteration is as expensive as halving the timestep, we also constrain the subsequent timestep such that iterations should not occur, i.e.

where e max = max(e) over all particles. A caveat to the above is that velocity iterations are not currently implemented when using individual particle timesteps.

Additional variables such as the internal energy, u, and the magnetic field, ![]() ${\bm B}$, are timestepped with a predictor and trapezoidal corrector step in the same manner as the velocity, following (65), (67) and (69).

${\bm B}$, are timestepped with a predictor and trapezoidal corrector step in the same manner as the velocity, following (65), (67) and (69).

Velocity-dependent external forces are treated separately, as described in Section 2.4.

2.3.2. Timestep constraints

The timestep itself is determined at the end of each step, and is constrained to be less than the maximum stable timestep. For a given particle, a, this is given by (e.g. Lattanzio et al. Reference Lattanzio, Monaghan, Pongracic and Schwarz1986; Monaghan Reference Monaghan1997)

where C cour = 0.3 by default (Lattanzio et al. Reference Lattanzio, Monaghan, Pongracic and Schwarz1986) and v dtsig is taken as the maximum of (41) over the particle’s neighbours assuming αAV = max(αAV, 1). The criterion above differs from the usual Courant–Friedrichs–Lewy condition used in Eulerian codes (Courant, Friedrichs, & Lewy Reference Courant, Friedrichs and Lewy1928) because it depends only on the difference in velocity between neighbouring particles, not the absolute value.

An additional constraint is applied from the accelerations (the ‘force condition’), where

$$\begin{equation}

\Delta t_{{\rm f}, a} \equiv C_{\rm force} \sqrt{\frac{h_{a}}{\vert {\bm a}_{a} \vert }},

\end{equation}$$

$$\begin{equation}

\Delta t_{{\rm f}, a} \equiv C_{\rm force} \sqrt{\frac{h_{a}}{\vert {\bm a}_{a} \vert }},

\end{equation}$$

where C force = 0.25 by default. A separate timestep constraint is applied for external forces

$$\begin{equation}

\Delta t_{{\rm ext},a} \equiv C_{\rm force} \sqrt{\frac{h}{\vert {\bm a}_{{\rm ext},a} \vert }},

\end{equation}$$

$$\begin{equation}

\Delta t_{{\rm ext},a} \equiv C_{\rm force} \sqrt{\frac{h}{\vert {\bm a}_{{\rm ext},a} \vert }},

\end{equation}$$

and for accelerations to SPH particles to/from sink particles (Section 2.8)

$$\begin{equation}

\Delta t_{{\rm sink-gas},a} \equiv C_{\rm force} \sqrt{\frac{h_{a}}{\vert {\bm a}_{{\rm sink-gas},a} \vert }}.

\end{equation}$$

$$\begin{equation}

\Delta t_{{\rm sink-gas},a} \equiv C_{\rm force} \sqrt{\frac{h_{a}}{\vert {\bm a}_{{\rm sink-gas},a} \vert }}.

\end{equation}$$

For external forces with potentials defined such that Φ → 0 as r → ∞, an additional constraint is applied using (Dehnen & Read Reference Dehnen and Read2011)

$$\begin{equation}

\Delta t_{{\Phi },a} \equiv C_{\rm force} \eta _\Phi \sqrt{\frac{\vert \Phi _{a}\vert }{\vert \nabla \Phi \vert _{a}^{2}}},

\end{equation}$$

$$\begin{equation}

\Delta t_{{\Phi },a} \equiv C_{\rm force} \eta _\Phi \sqrt{\frac{\vert \Phi _{a}\vert }{\vert \nabla \Phi \vert _{a}^{2}}},

\end{equation}$$

where ![]() $\eta _\Phi = 0.05$ (see Section 2.8.5).

$\eta _\Phi = 0.05$ (see Section 2.8.5).

The timestep for particle a is then taken to be the minimum of all of the above constraints, i.e.

with possible other constraints arising from additional physics as described in their respective sections. With global timestepping, the resulting timestep is the minimum over all particles

2.3.3. Substepping of external forces

In the case where the timestep is dominated by any of the external force timesteps, i.e. (74)–(76), we implement an operator splitting approach implemented according to the reversible reference system propagator algorithm (RESPA) derived by Tuckerman, Berne, & Martyna (Reference Tuckerman, Berne and Martyna1992) for molecular dynamics. RESPA splits the acceleration into ‘long-range’ and ‘short-range’ contributions, which in Phantom are defined to be the SPH and external/point-mass accelerations, respectively.

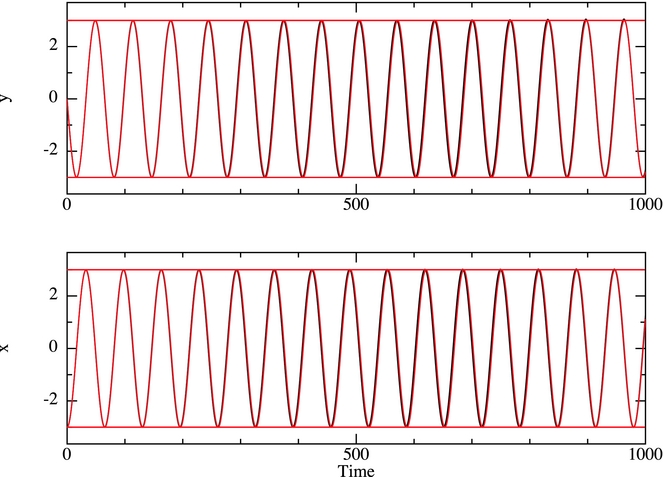

Our implementation follows Tuckerman et al. (Reference Tuckerman, Berne and Martyna1992) (see their Appendix B), where the velocity is first predicted to the half step using the ‘long-range’ forces, followed by an inner loop where the positions are updated with the current velocity and the velocities are updated with the ‘short-range’ accelerations. Thus, the timestepping proceeds according to

\begin{eqnarray*}

{\rm over\ substeps}\left\{\begin{array}{rcl}

\bm v &=& {\bm v} + \displaystyle\frac{\Delta t_{\rm ext}}{2} {\bm a}_{\rm ext}^{m}, \\

{\bm r} &=& {\bm r} + \Delta t_{\rm ext} {\bm v}, \\

&&\qquad\textrm {get } {\bm a}_{\rm ext}({\bm r}),\\

{\bm v} &=& {\bm v} + \frac{\Delta t_{\rm ext}}{2} {\bm a}_{\rm ext}^{m+1},

\end{array}\right.

\end{eqnarray*}

\begin{eqnarray*}

{\rm over\ substeps}\left\{\begin{array}{rcl}

\bm v &=& {\bm v} + \displaystyle\frac{\Delta t_{\rm ext}}{2} {\bm a}_{\rm ext}^{m}, \\

{\bm r} &=& {\bm r} + \Delta t_{\rm ext} {\bm v}, \\

&&\qquad\textrm {get } {\bm a}_{\rm ext}({\bm r}),\\

{\bm v} &=& {\bm v} + \frac{\Delta t_{\rm ext}}{2} {\bm a}_{\rm ext}^{m+1},

\end{array}\right.

\end{eqnarray*}

where ![]() ${\bm a}_{\rm sph}$ indicates the SPH acceleration evaluated from (34) and

${\bm a}_{\rm sph}$ indicates the SPH acceleration evaluated from (34) and ![]() ${\bm a}_{\rm ext}$ indicates the external forces. The SPH and external accelerations are stored separately to enable this. Δt ext is the minimum of all timesteps relating to sink–gas and external forces [equations (74)–(76)], while Δt sph is the timestep relating to the SPH forces [equations (72), (73), and (288)]. Δt ext is allowed to vary on each substep, so we take as many steps as required such that ∑m − 1jΔt ext, j + Δt ext, f = Δt sph, where Δt ext, f < Δt ext, j is chosen so that the sum will identically equal Δt sph. The number of substeps is m ≈ int(Δt ext, min/Δt sph, min) + 1, where the minimum is taken over all particles.

${\bm a}_{\rm ext}$ indicates the external forces. The SPH and external accelerations are stored separately to enable this. Δt ext is the minimum of all timesteps relating to sink–gas and external forces [equations (74)–(76)], while Δt sph is the timestep relating to the SPH forces [equations (72), (73), and (288)]. Δt ext is allowed to vary on each substep, so we take as many steps as required such that ∑m − 1jΔt ext, j + Δt ext, f = Δt sph, where Δt ext, f < Δt ext, j is chosen so that the sum will identically equal Δt sph. The number of substeps is m ≈ int(Δt ext, min/Δt sph, min) + 1, where the minimum is taken over all particles.

2.3.4. Individual particle timesteps

For simulations of stiff problems with a large range in timestep over the domain, it is more efficient to allow each particle to evolve on its own timestep independently (Bate Reference Bate1995; Springel Reference Springel2005; Saitoh & Makino Reference Saitoh and Makino2010). This violates all of the conservation properties of the Leapfrog integrator [see Makino et al. (Reference Makino, Hut, Kaplan and Saygın2006) for an attempt to solve this], but can speed up the calculation by an order of magnitude or more. We implement this in the usual blockstepped manner by assigning timesteps in factor-of-two decrements from some maximum timestep Δt max, which for convenience is set equal to the time between output files.

We implement a timestep limiter where the timestep for an active particle is constrained to be within a factor of 2 of its neighbours, similar to condition employed by Saitoh & Makino (Reference Saitoh and Makino2009). Additionally, inactive particles will be woken up as required to ensure that their timestep is within a factor of 2 of its neighbours.

The practical side of individual timestepping is described in Appendix A.6.

2.4. External forces

2.4.1. Point-mass potential

The simplest external force describes a point mass, M, at the origin, which yields gravitational potential and acceleration:

where ![]() $r_{a} \equiv \vert {\bm r}_{a} \vert \equiv \sqrt{{\bm r}_{a}\cdot {\bm r}_{a}}$. When this potential is used, we allow for particles within a certain radius, R acc, from the origin to be accreted. This allows for a simple treatment of accretion discs where the mass of the disc is assumed to be negligible compared to the mass of the central object. The accreted mass in this case is recorded but not added to the central mass. For more massive discs, or when the accreted mass is significant with respect to the central mass, it is better to model the central star using a sink particle (Section 2.8) where there are mutual gravitational forces between the star and the disc, and any mass accreted is added to the point mass (Section 2.8.2).

$r_{a} \equiv \vert {\bm r}_{a} \vert \equiv \sqrt{{\bm r}_{a}\cdot {\bm r}_{a}}$. When this potential is used, we allow for particles within a certain radius, R acc, from the origin to be accreted. This allows for a simple treatment of accretion discs where the mass of the disc is assumed to be negligible compared to the mass of the central object. The accreted mass in this case is recorded but not added to the central mass. For more massive discs, or when the accreted mass is significant with respect to the central mass, it is better to model the central star using a sink particle (Section 2.8) where there are mutual gravitational forces between the star and the disc, and any mass accreted is added to the point mass (Section 2.8.2).

2.4.2. Binary potential

We provide the option to model motion in binary systems where the mass of the disc is negligible. In this case, the binary motion is prescribed using

where M is the mass ratio in units of the total mass (which is therefore unity). For this potential, G and Ω are set to unity in computational units, where Ω is the angular velocity of the binary. Thus, only M needs to be specified to fix both m 1 and m 2. Hence, the binary remains fixed on a circular orbit at r = 1. The binary potential is therefore

such that the external acceleration is given by

Again, there is an option to accrete particles that fall within a certain radius from either star (R acc, 1 or R acc, 2, respectively). For most binary accretion disc simulations (e.g. planet migration), it is better to use ‘live’ sink particles to represent the binary so that there is feedback between the binary and the disc (we have used a live binary in all of our simulations to date, e.g. Nixon, King, & Price Reference Nixon, King and Price2013; Facchini, Lodato, & Price Reference Facchini, Lodato and Price2013; Martin et al. Reference Martin, Nixon, Armitage, Lubow and Price2014a, Reference Martin, Nixon, Lubow, Armitage, Price, Doğan and King2014b; Doğan et al. Reference Doğan, Nixon, King and Price2015; Ragusa, Lodato, & Price Reference Ragusa, Lodato and Price2016; Ragusa et al. Reference Ragusa, Dipierro, Lodato, Laibe and Price2017), but the binary potential remains useful under limited circumstances—in particular, when one wishes to turn off the feedback between the disc and the binary.

Given that the binary potential is time-dependent, for efficiency, we compute the position of the binary only once at the start of each timestep, and use these stored positions to compute the accelerations of the SPH particles via (90).

2.4.3. Binary potential with gravitational wave decay

An alternative binary potential including the effects of gravitational wave decay was used by Cerioli, Lodato, & Price (Reference Cerioli, Lodato and Price2016) to study the squeezing of discs during the merger of supermassive black holes. Here the motion of the binary is prescribed according to

$$\begin{eqnarray}

{\bm r}_{1} & =& \left[-\frac{m_{2}}{m_{1} + m_{2}} a \cos (\theta ), -\frac{m_{2}}{m_{1} + m_{2}} a \sin (\theta ), 0 \right], \nonumber \\

{\bm r}_{2} & =& \left[\frac{m_{1}}{m_{1} + m_{2}} a \cos (\theta ), \frac{m_{1}}{m_{1} + m_{2}} a \sin (\theta ), 0 \right],

\end{eqnarray}$$

$$\begin{eqnarray}

{\bm r}_{1} & =& \left[-\frac{m_{2}}{m_{1} + m_{2}} a \cos (\theta ), -\frac{m_{2}}{m_{1} + m_{2}} a \sin (\theta ), 0 \right], \nonumber \\

{\bm r}_{2} & =& \left[\frac{m_{1}}{m_{1} + m_{2}} a \cos (\theta ), \frac{m_{1}}{m_{1} + m_{2}} a \sin (\theta ), 0 \right],

\end{eqnarray}$$

where the semi-major axis, a, decays according to

$$\begin{equation}

a(t) = a_{0} \left(1 - \frac{t}{\tau } \right)^{\frac{1}{4}} .

\end{equation}$$

$$\begin{equation}

a(t) = a_{0} \left(1 - \frac{t}{\tau } \right)^{\frac{1}{4}} .

\end{equation}$$

The initial separation is a 0, with τ defined as the time to merger, given by the usual expression (e.g. Lodato et al. Reference Lodato, Nayakshin, King and Pringle2009)

where

The angle θ is defined using

Inserting the expression for a and integrating gives (Cerioli et al. Reference Cerioli, Lodato and Price2016)

$$\begin{equation}

\theta (t) = -\frac{8\tau }{5} \sqrt{\frac{G(m_{1} + m_{2})}{a_{0}^{3}}} \left( 1 - \frac{t}{\tau } \right).

\end{equation}$$

$$\begin{equation}

\theta (t) = -\frac{8\tau }{5} \sqrt{\frac{G(m_{1} + m_{2})}{a_{0}^{3}}} \left( 1 - \frac{t}{\tau } \right).

\end{equation}$$

The positions of the binary, ![]() ${\bm r}_1$ and

${\bm r}_1$ and ![]() ${\bm r}_2$, can be inserted into (89) to obtain the binary potential, with the acceleration as given in (90). The above can be used as a simple example of a time-dependent external potential.

${\bm r}_2$, can be inserted into (89) to obtain the binary potential, with the acceleration as given in (90). The above can be used as a simple example of a time-dependent external potential.

2.4.4. Galactic potentials

We implement a range of external forces representing various galactic potentials, as used in Pettitt et al. (Reference Pettitt, Dobbs, Acreman and Price2014). These include arm, bar, halo, disc, and spheroidal components. We refer the reader to the paper above for the actual forms of the potentials.

For the non-axisymmetric potentials, a few important parameters that determine the morphology can be changed at run time rather than compile time. These include the pattern speed, arm number, arm pitch angle, and bar axis lengths (where applicable). In the case of non-axisymmetric components, the user should be aware that some will add mass to the system, whereas others simply perturb the galactic disc. These potentials can be used for any galactic system, but the various default scale lengths and masses are chosen to match the Milky Way’s rotation curve (Sofue Reference Sofue2012).

The most basic potential in Phantom is a simple logarithmic potential from Binney & Tremaine (Reference Binney and Tremaine1987), which allows for the reproduction of a purely flat rotation curve with steep decrease at the galactic centre, and approximates the halo, bulge, and disc contributions. Also included is the standard flattened disc potential of Miyamoto–Nagai (Miyamoto & Nagai Reference Miyamoto and Nagai1975) and an exponential profile disc, specifically the form from Khoperskov et al. (Reference Khoperskov, Vasiliev, Sobolev and Khoperskov2013). Several spheroidal components are available, including the potentials of Plummer (Reference Plummer1911), Hernquist (Reference Hernquist1990), and Hubble (Reference Hubble1930). These can be used generally for bulges and halos if given suitable mass and scale lengths. We also include a few halo-specific profiles; the NFW (Navarro, Frenk, & White Reference Navarro, Frenk and White1996), Begeman, Broeils, & Sanders (Reference Begeman, Broeils and Sanders1991), Caldwell & Ostriker (Reference Caldwell and Ostriker1981), and the Allen & Santillan (Reference Allen and Santillan1991) potentials.

The arm potentials include some of the more complicated profiles. The first is the potential of Cox & Gómez (Reference Cox and Gómez2002), which is a relatively straightforward superposition of three sinusoidal-based spiral components to damp the potential ‘troughs’ in the inter-arm minima. The other spiral potential is from Pichardo et al. (Reference Pichardo, Martos, Moreno and Espresate2003), and is more complicated. Here, the arms are constructed from a superposition of oblate spheroids whose loci are placed along a standard logarithmic spiral. As the force from this potential is computationally expensive it is prudent to pre-compute a grid of potential/force and read it at run time. The python code to generate the appropriate grid files is distributed with the code.

Finally, the bar components: We include the bar potentials of Dehnen (Reference Dehnen2000a), Wada & Koda (Reference Wada and Koda2001), the ‘S’ shaped bar of Vogt & Letelier (Reference Vogt and Letelier2011), both biaxial and triaxial versions provided in Long & Murali (Reference Long and Murali1992), and the boxy-bulge bar of Wang et al. (Reference Wang, Zhao, Mao and Rich2012). This final bar contains both a small inner non-axisymmetric bulge and longer bar component, with the forces calculated by use of Hernquist–Ostriker expansion coefficients of the bar density field. Phantom contains the coefficients for several different forms of this bar potential.

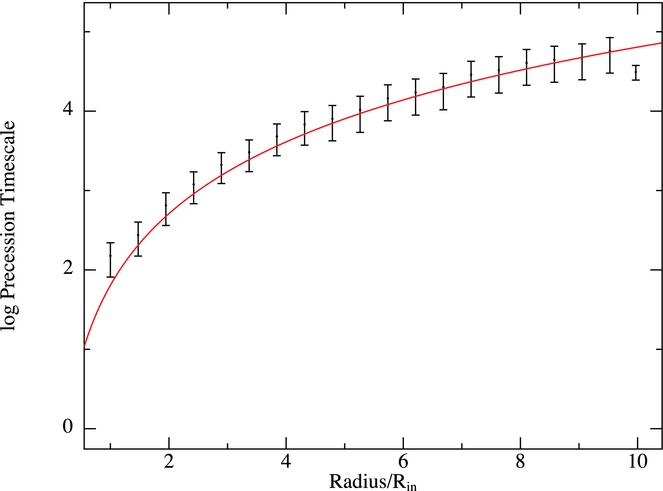

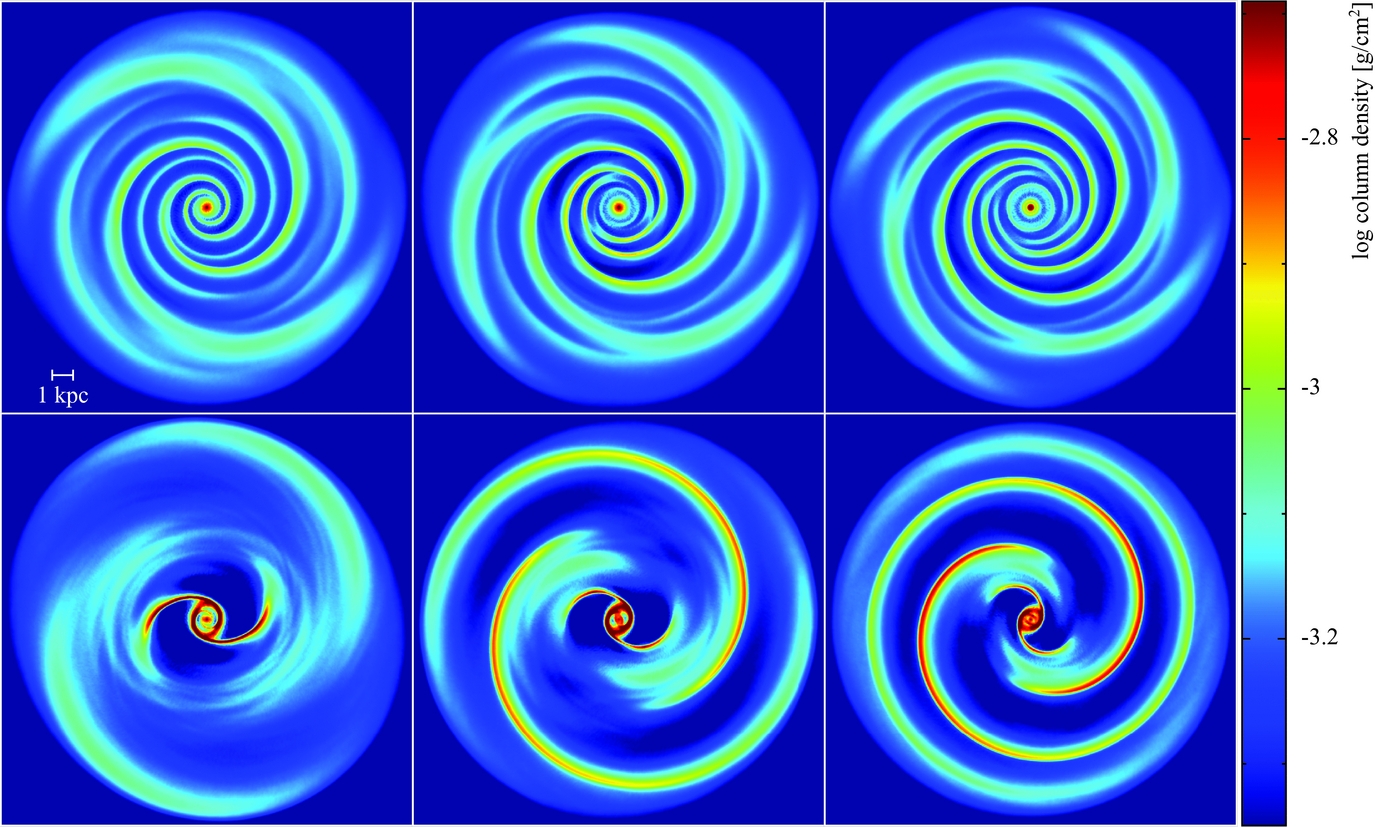

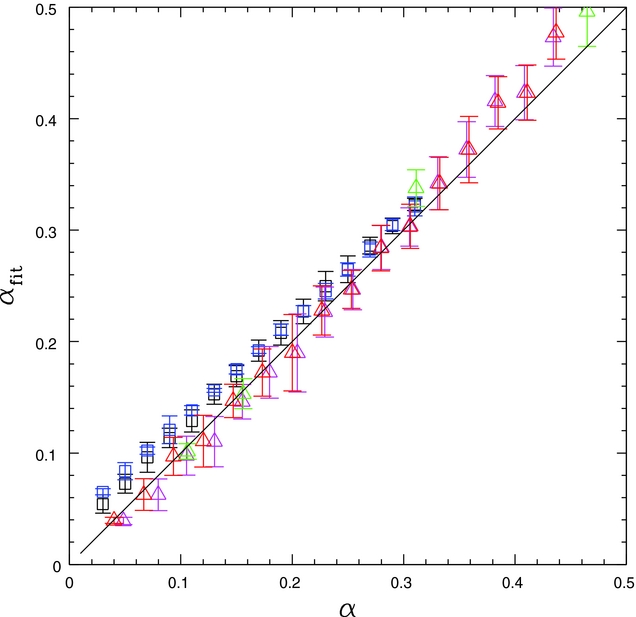

2.4.5. Lense–Thirring precession

Lense–Thirring precession (Lense & Thirring Reference Lense and Thirring1918) from a spinning black hole is implemented in a post-Newtonian approximation following Nelson & Papaloizou (Reference Nelson and Papaloizou2000), which has been used in Nixon et al. (Reference Nixon, King, Price and Frank2012b), Nealon, Price, & Nixon (Reference Nealon, Price and Nixon2015), and Nealon et al. (Reference Nealon, Nixon, Price and King2016). In this case, the external acceleration consists of a point-mass potential (Section 2.4.1) and the Lense–Thirring term:

where Φa is given by (86) and ![]() ${\bm v}_a \times {\bm \Omega }_{p,a}$ is the gravitomagnetic acceleration. A dipole approximation is used, yielding

${\bm v}_a \times {\bm \Omega }_{p,a}$ is the gravitomagnetic acceleration. A dipole approximation is used, yielding

with ![]() ${\bm S} = a_{\rm spin} (GM)^{2} {\bm k}/c^{3}$, where

${\bm S} = a_{\rm spin} (GM)^{2} {\bm k}/c^{3}$, where ![]() ${\bm k}$ is a unit vector in the direction of the black hole spin. When using the Lense–Thirring force, geometric units are assumed such that G = M = c = 1, as described in Section 2.2.3, but with the additional constraints on the unit system from M and c.

${\bm k}$ is a unit vector in the direction of the black hole spin. When using the Lense–Thirring force, geometric units are assumed such that G = M = c = 1, as described in Section 2.2.3, but with the additional constraints on the unit system from M and c.

Since in this case the external force depends on velocity, it cannot be implemented directly into Leapfrog. The method we employ to achieve this is simpler than those proposed elsewhere [c.f. attempts by Quinn et al. (Reference Quinn, Perrine, Richardson and Barnes2010) and Rein & Tremaine (Reference Rein and Tremaine2011) to adapt the Leapfrog integrator to Hill’s equations]. Our approach is to split the acceleration into position and velocity-dependent parts, i.e.

The position-dependent part [i.e. ![]() $-\nabla \Phi ({\bm r})$] is integrated as normal. The velocity-dependent Lense–Thirring term is added to the predictor step, (66)–(67), as usual, but the corrector step, (69), is written in the form

$-\nabla \Phi ({\bm r})$] is integrated as normal. The velocity-dependent Lense–Thirring term is added to the predictor step, (66)–(67), as usual, but the corrector step, (69), is written in the form

where ![]() ${\bm v}^{n + \frac{1}{2}} \equiv {\bm v}^{n} + \frac{1}{2} \Delta t {\bm a}^{n}$ as in (65). This equation is implicit but the trick is to notice that it can be solved analytically for simple forcesFootnote 4. In the case of Lense–Thirring precession, we have

${\bm v}^{n + \frac{1}{2}} \equiv {\bm v}^{n} + \frac{1}{2} \Delta t {\bm a}^{n}$ as in (65). This equation is implicit but the trick is to notice that it can be solved analytically for simple forcesFootnote 4. In the case of Lense–Thirring precession, we have

where ![]() $\tilde{\bm v} \equiv {\bm v}^{n + \frac{1}{2}} + \frac{1}{2} \Delta t ({\bm a}_{\rm sph}^{n+1} + {\bm a}_{\rm ext,x}^{n+1})$. We therefore have a matrix equation in the form

$\tilde{\bm v} \equiv {\bm v}^{n + \frac{1}{2}} + \frac{1}{2} \Delta t ({\bm a}_{\rm sph}^{n+1} + {\bm a}_{\rm ext,x}^{n+1})$. We therefore have a matrix equation in the form

where ![]() ${\bm R}$ is the 3 × 3 matrix given by

${\bm R}$ is the 3 × 3 matrix given by

$$\begin{equation}

{\bm R} \equiv \left[ \arraycolsep5pt\begin{array}{ccc}1 & - \displaystyle\frac{\Delta t}{2} \Omega ^{z}_{p} & \displaystyle\frac{\Delta t}{2} \Omega ^{y}_{p} \\[3pt]

\displaystyle\frac{\Delta t}{2} \Omega ^{z}_{p} & 1 & -\displaystyle\frac{\Delta t}{2} \Omega ^{x}_{p} \\[3pt]

-\displaystyle\frac{\Delta t}{2} \Omega ^{y}_{p} & \displaystyle\frac{\Delta t}{2} \Omega ^{x}_{p} & 1 \\

\end{array} \right].

\end{equation}$$

$$\begin{equation}

{\bm R} \equiv \left[ \arraycolsep5pt\begin{array}{ccc}1 & - \displaystyle\frac{\Delta t}{2} \Omega ^{z}_{p} & \displaystyle\frac{\Delta t}{2} \Omega ^{y}_{p} \\[3pt]

\displaystyle\frac{\Delta t}{2} \Omega ^{z}_{p} & 1 & -\displaystyle\frac{\Delta t}{2} \Omega ^{x}_{p} \\[3pt]

-\displaystyle\frac{\Delta t}{2} \Omega ^{y}_{p} & \displaystyle\frac{\Delta t}{2} \Omega ^{x}_{p} & 1 \\

\end{array} \right].

\end{equation}$$

Rearranging (102), ![]() ${\bm v}^{n+1}$ is obtained by using

${\bm v}^{n+1}$ is obtained by using

where ![]() ${\bm R}^{-1}$ is the inverse of

${\bm R}^{-1}$ is the inverse of ![]() ${\bm R}$, which we invert using the analytic solution.

${\bm R}$, which we invert using the analytic solution.

2.4.6. Generalised Newtonian potential

The generalised Newtonian potential described by Tejeda & Rosswog (Reference Tejeda and Rosswog2013) is implemented, where the acceleration terms are given by

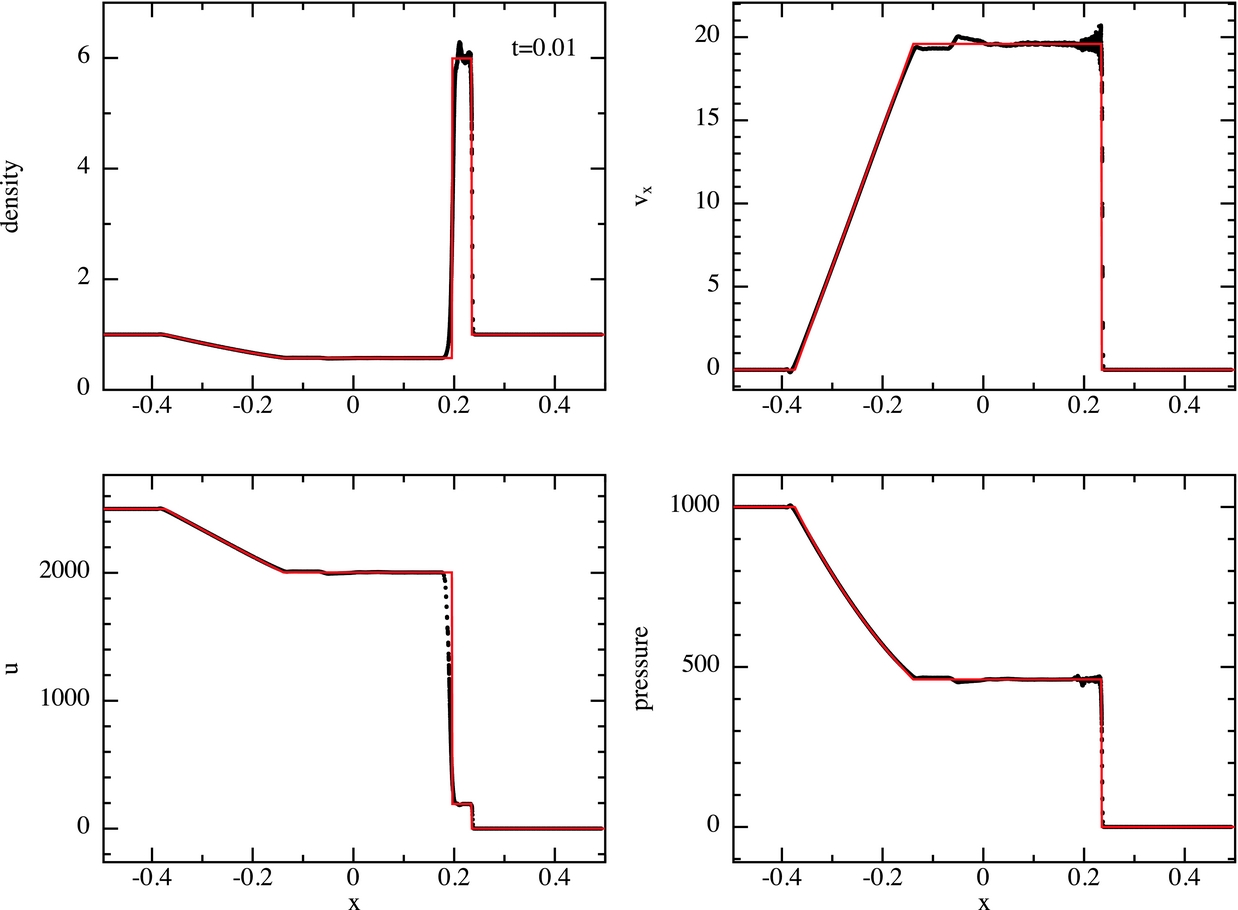

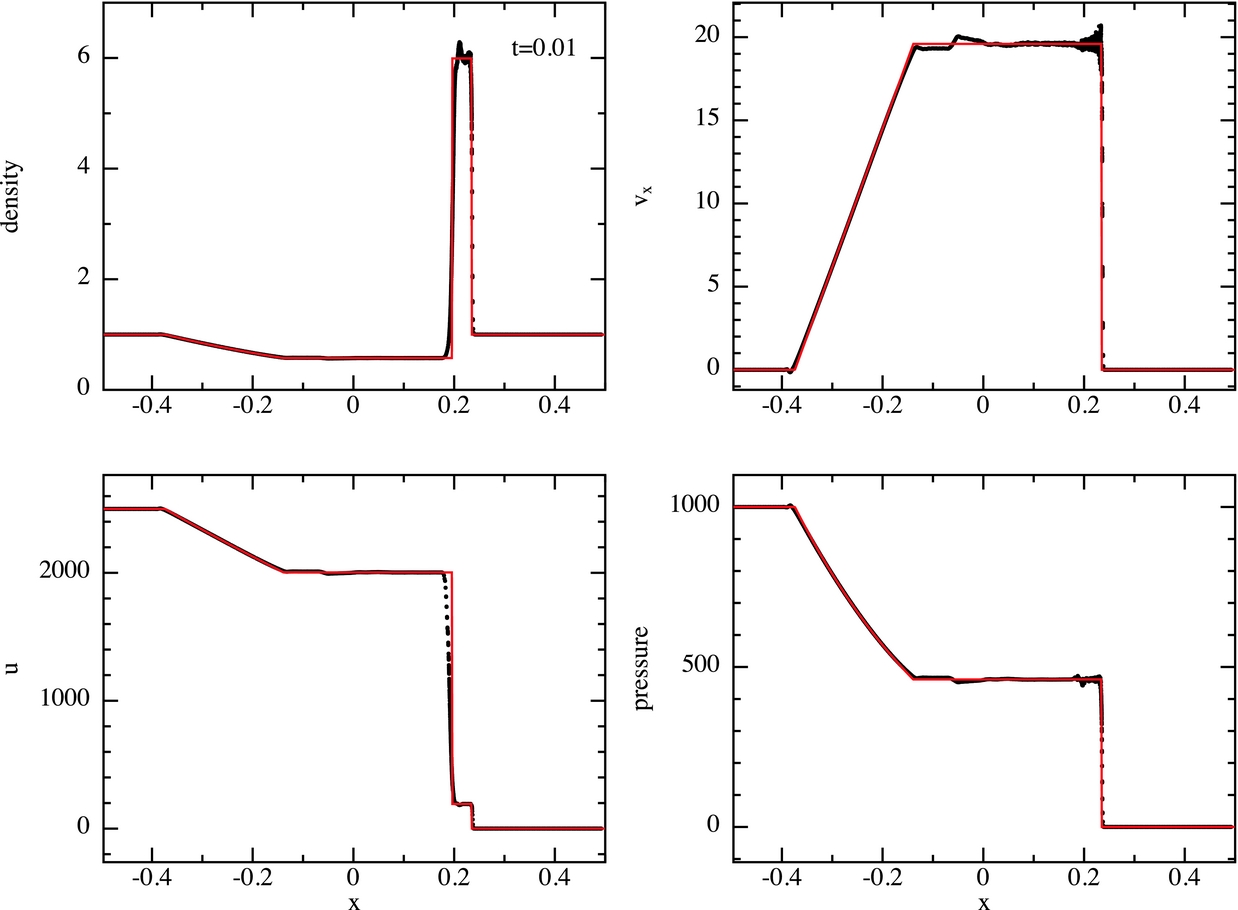

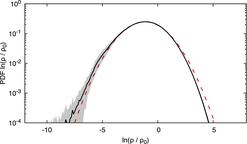

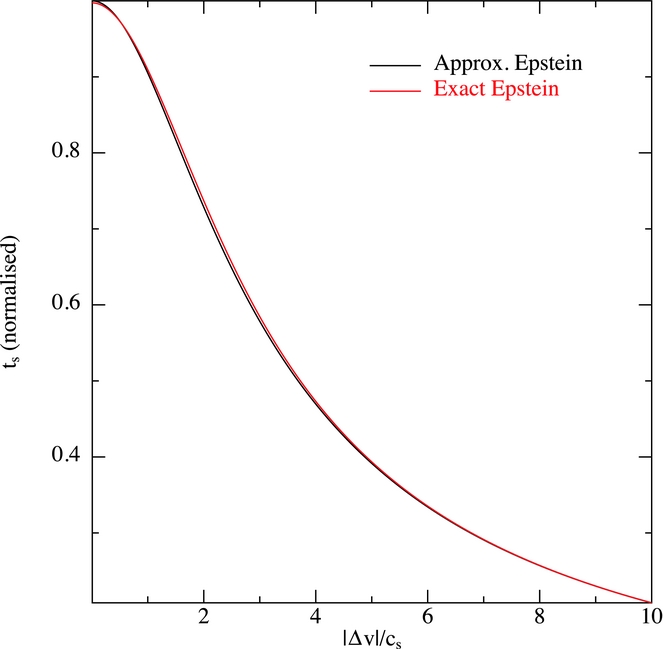

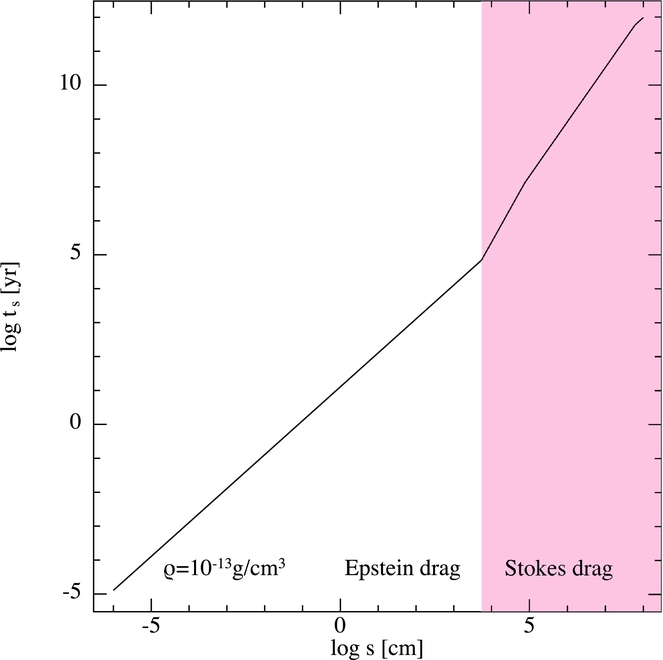

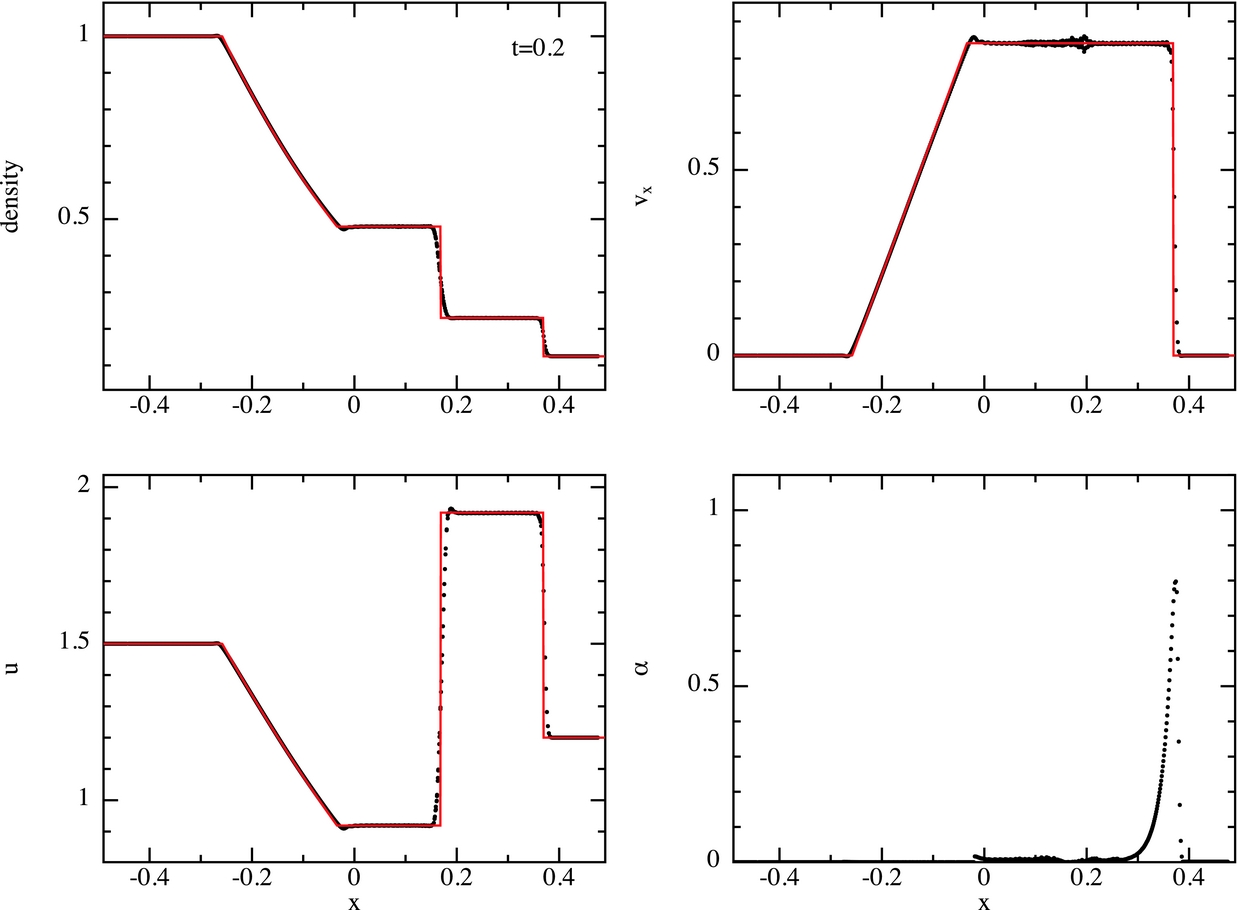

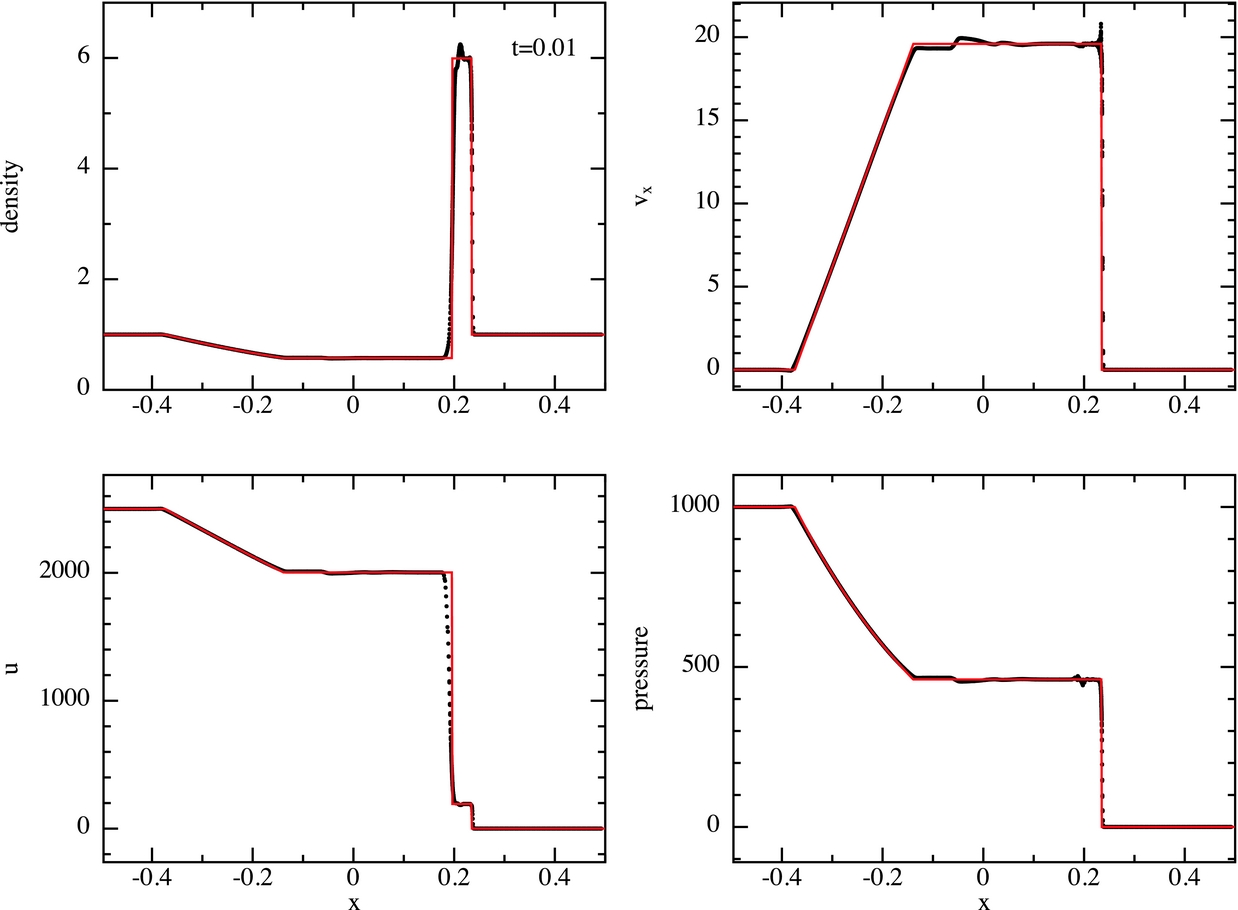

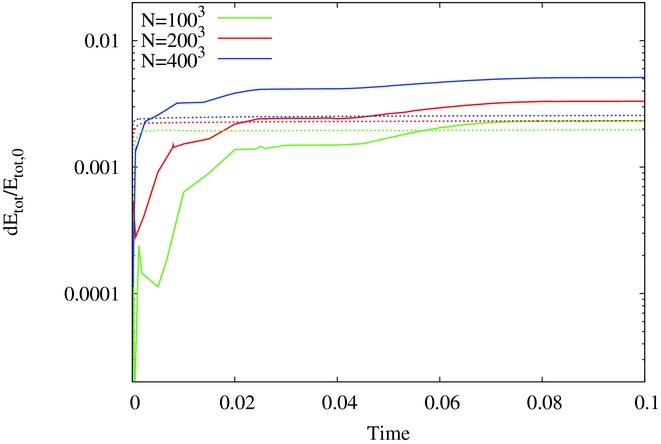

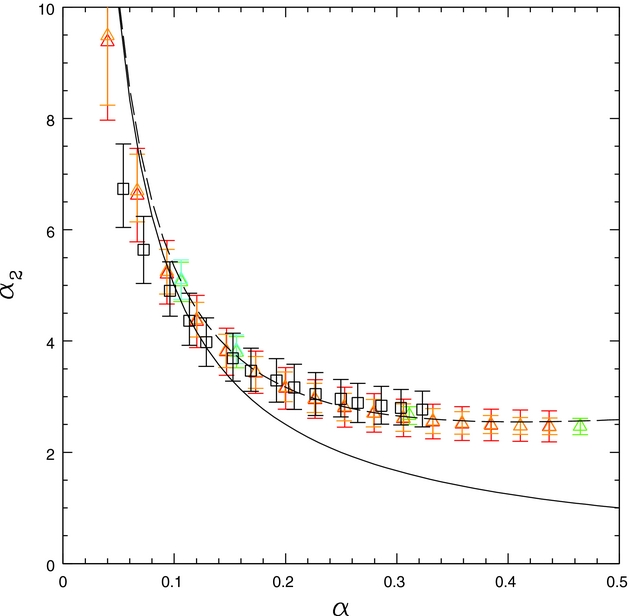

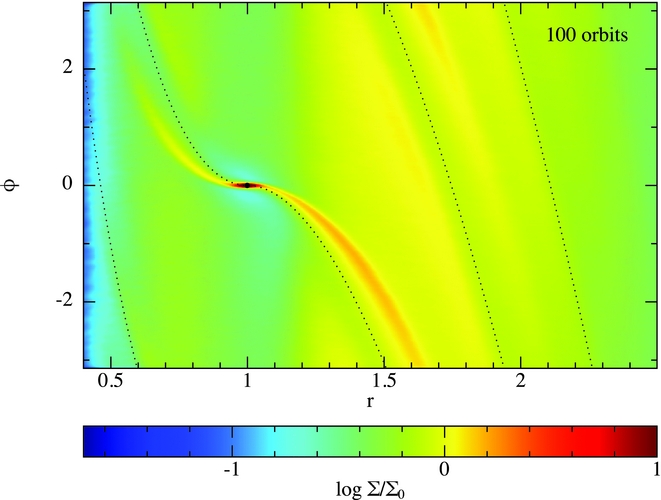

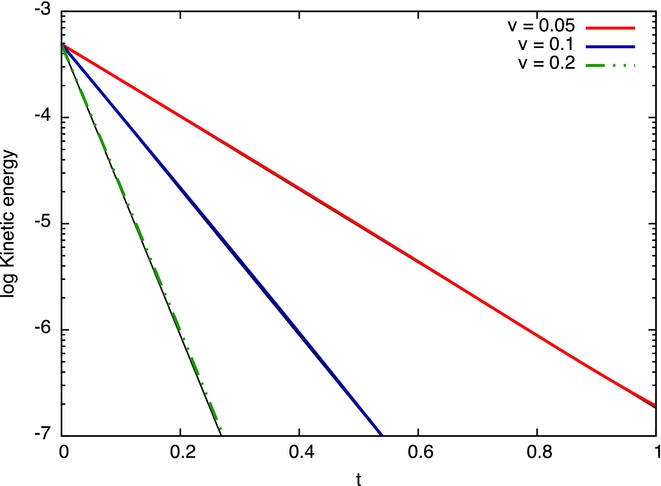

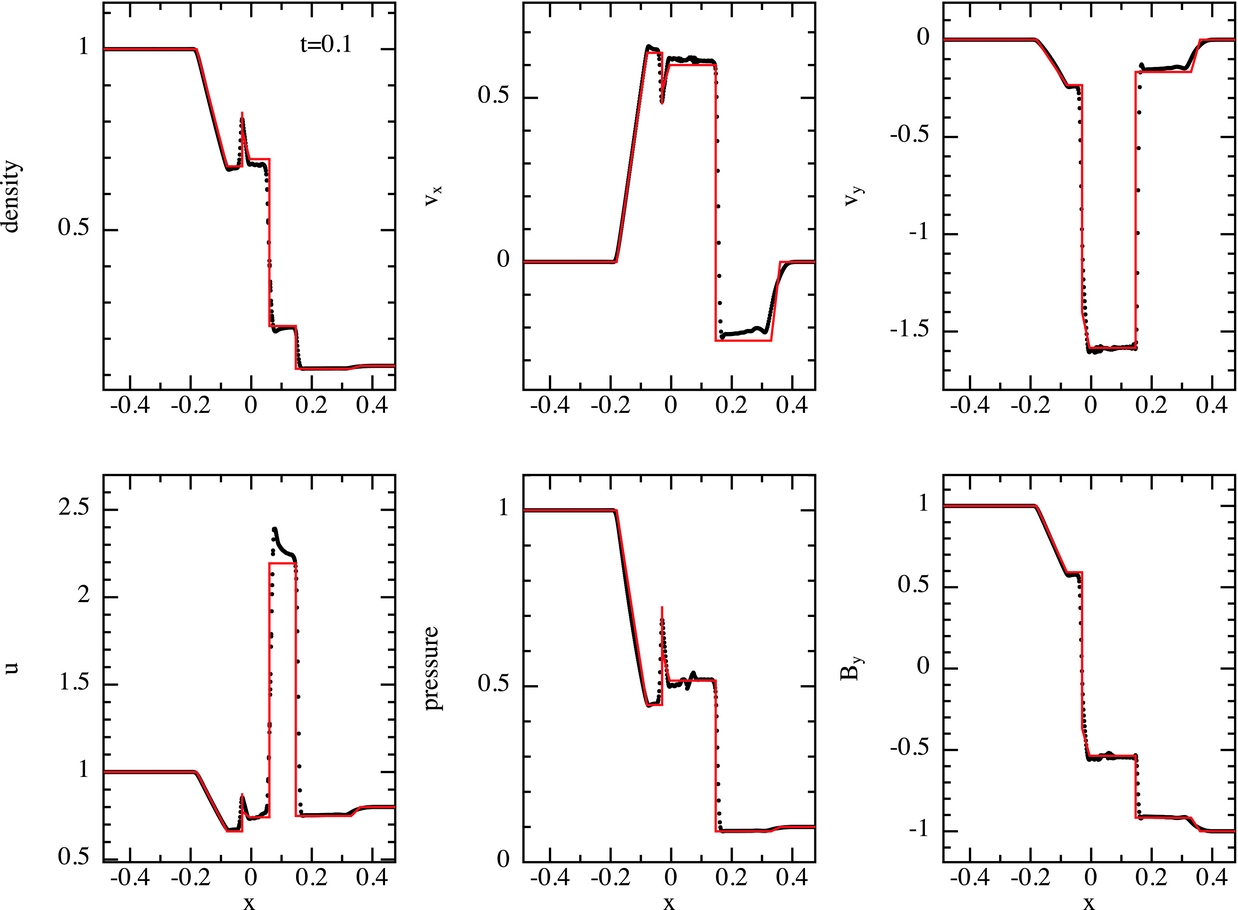

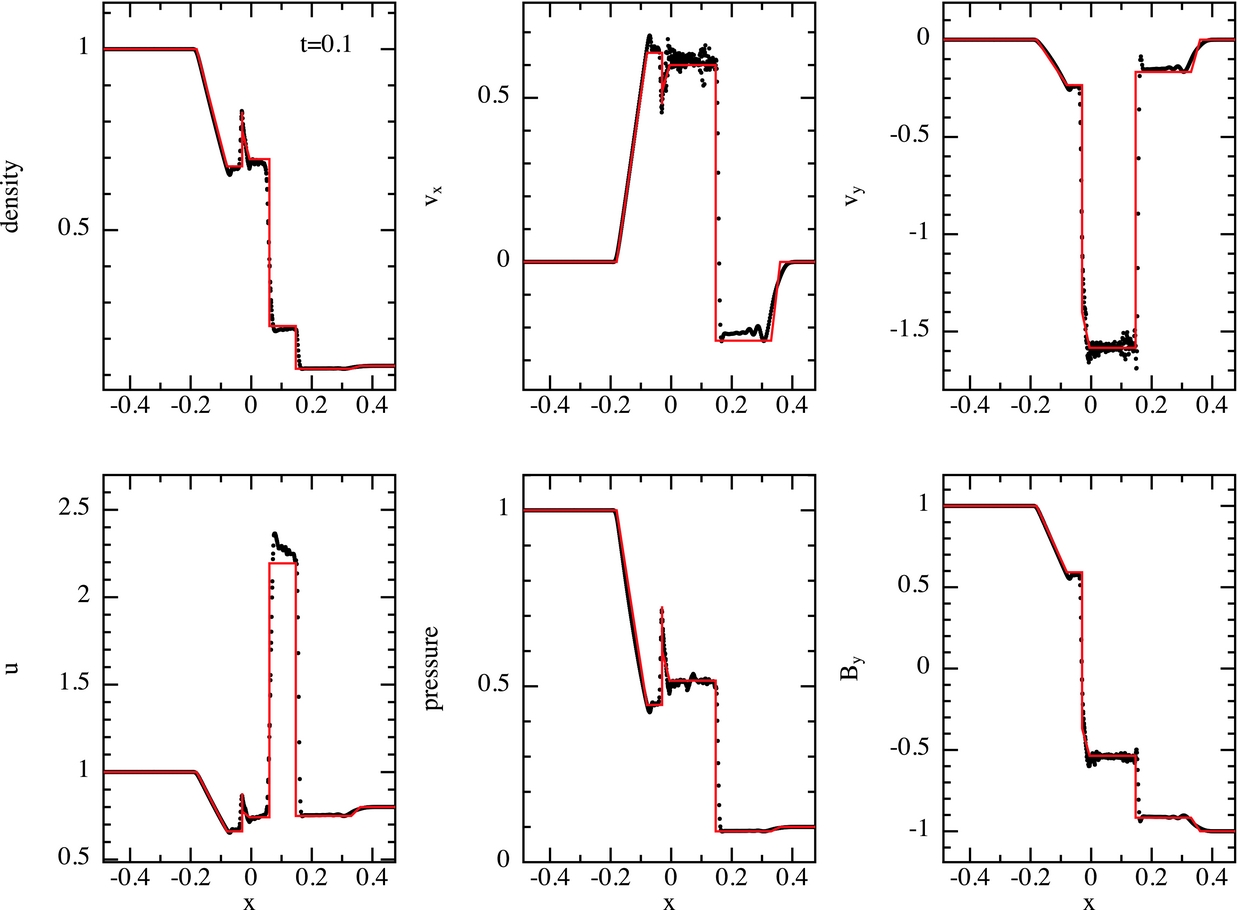

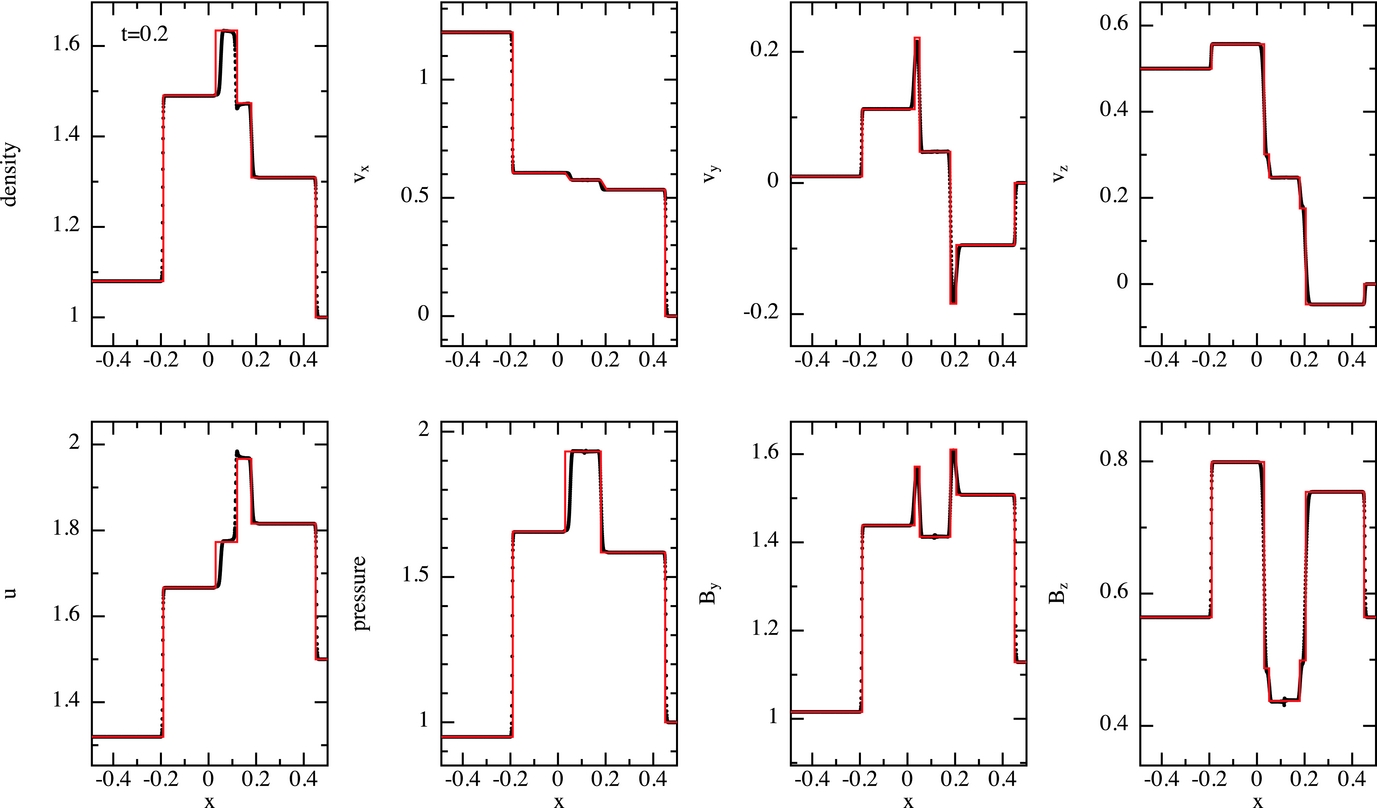

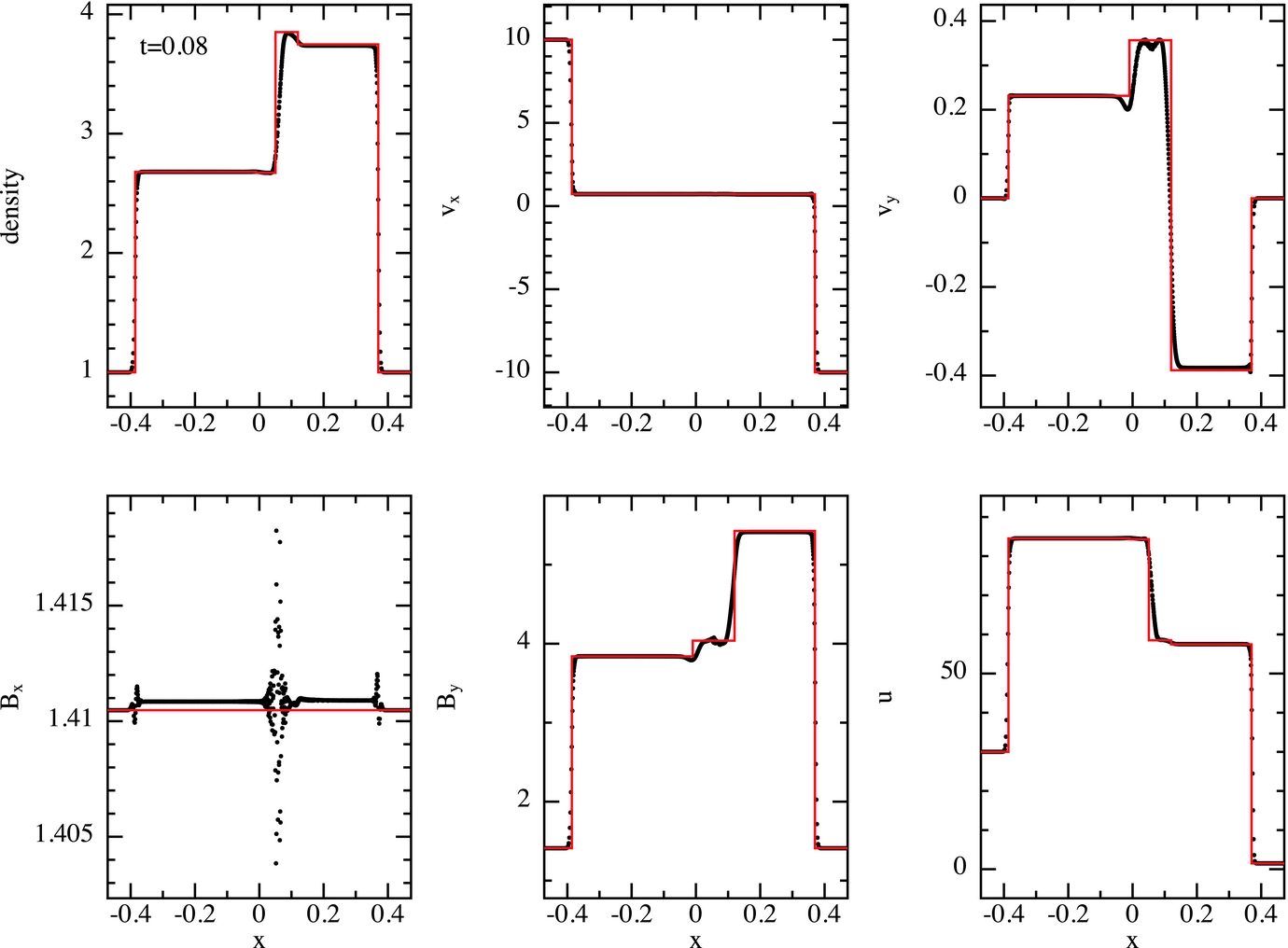

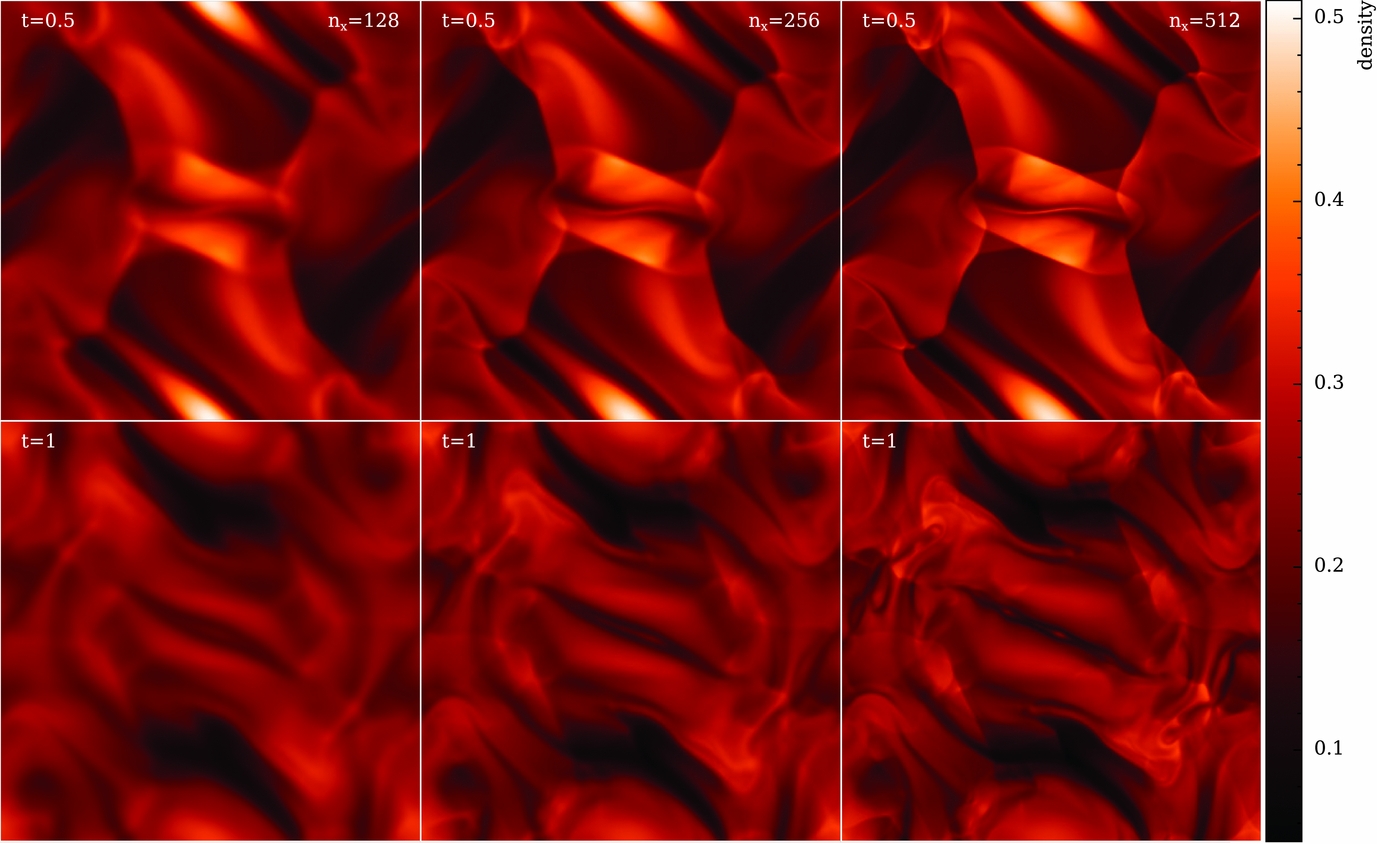

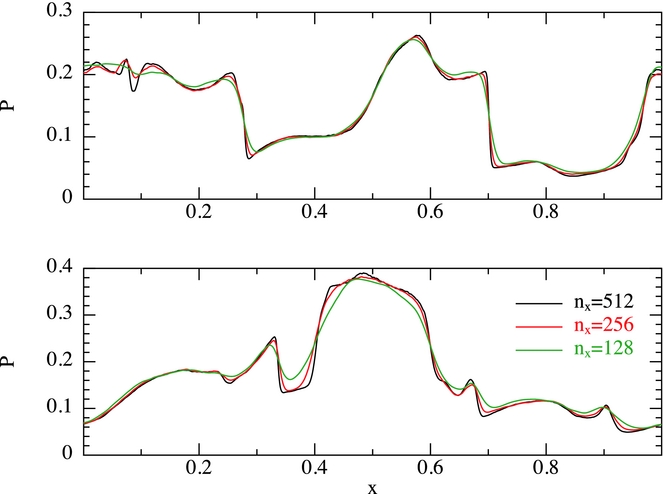

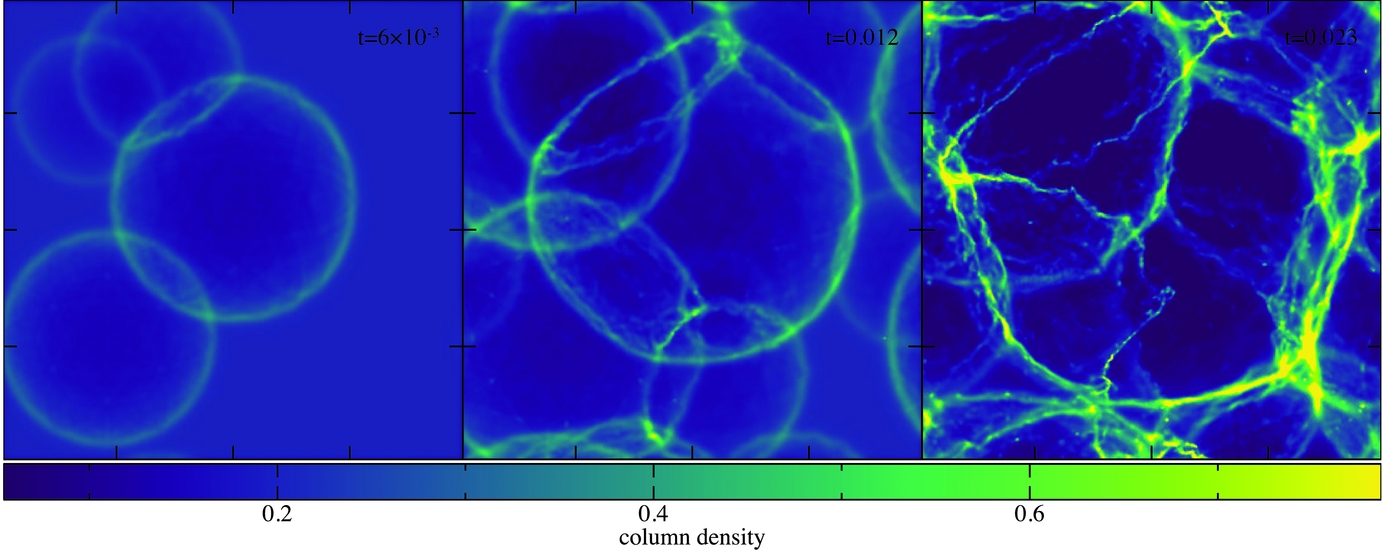

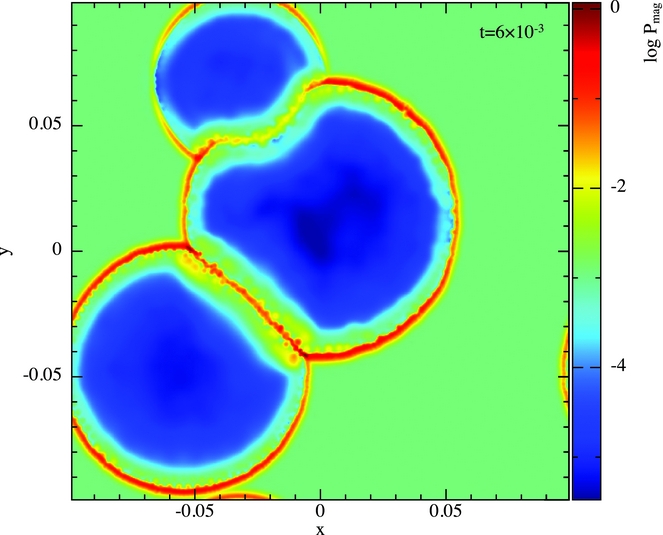

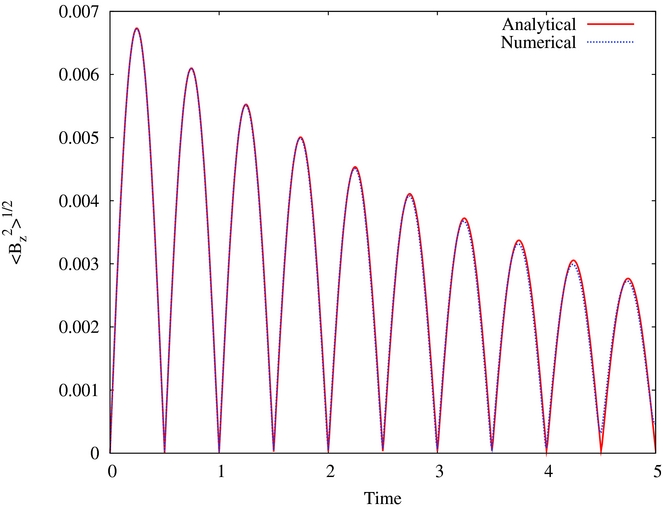

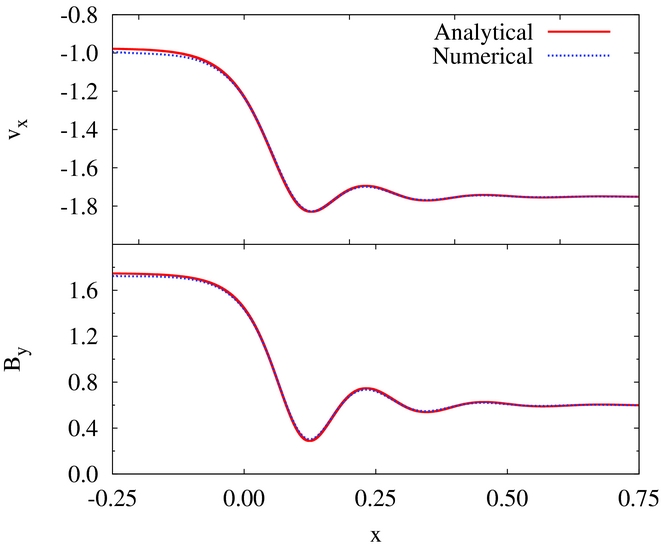

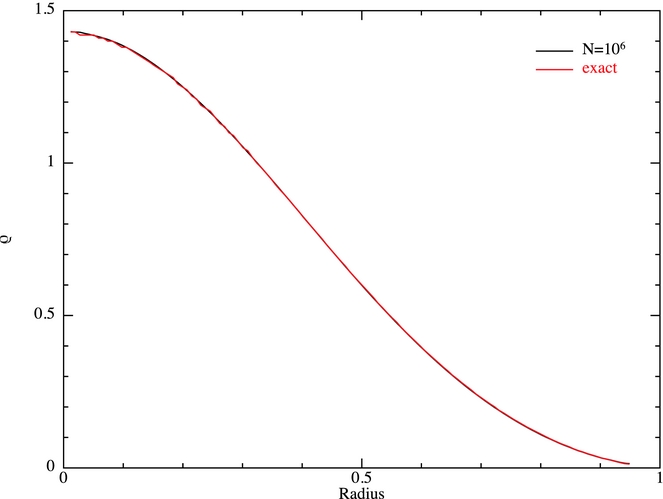

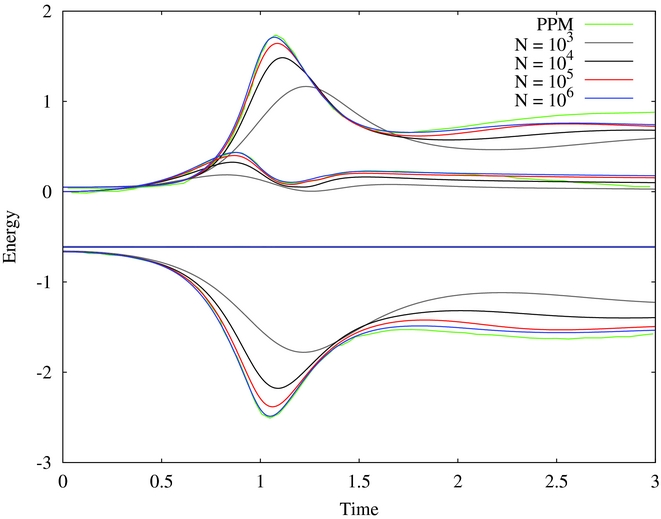

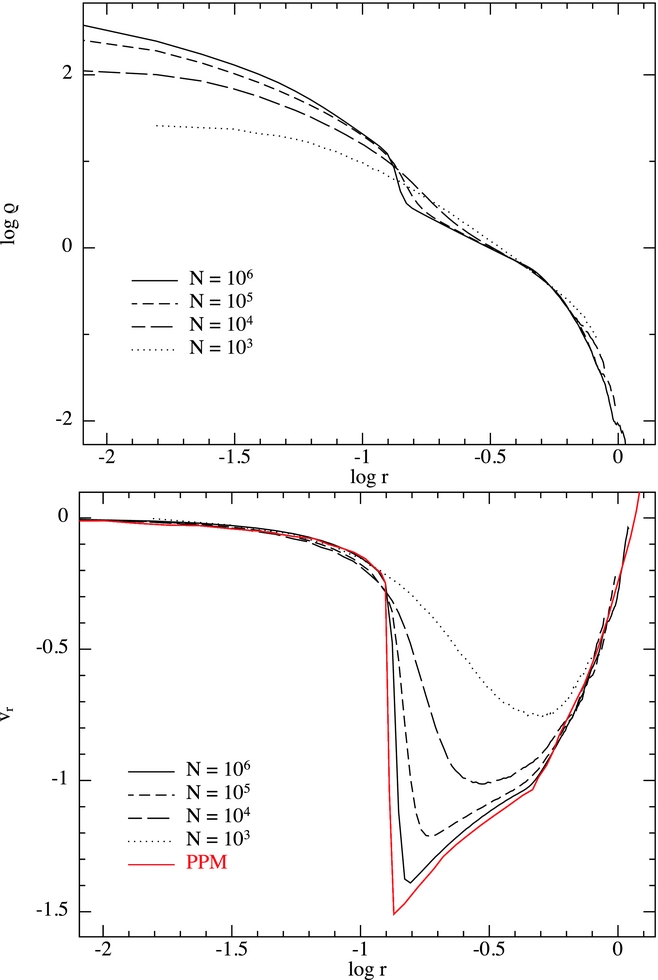

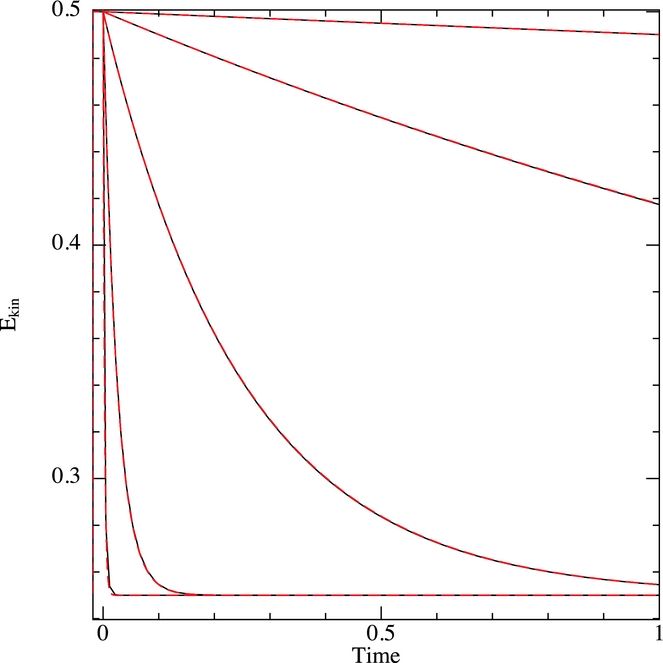

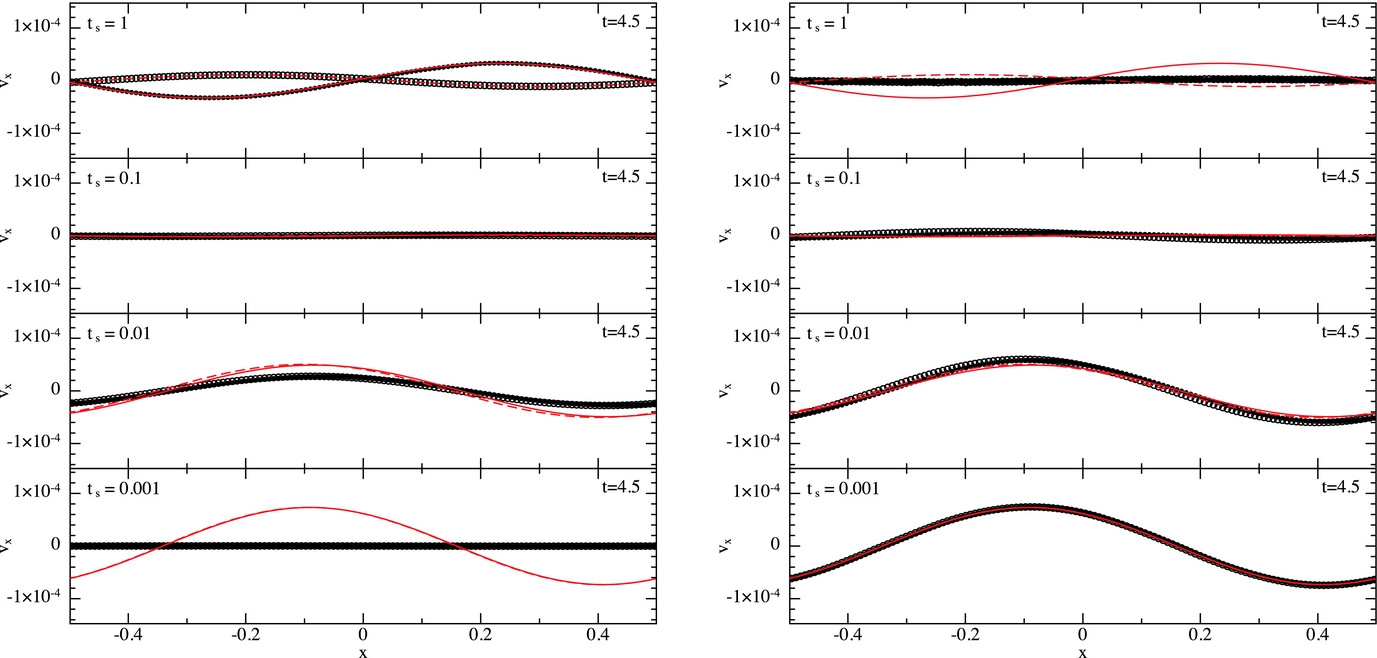

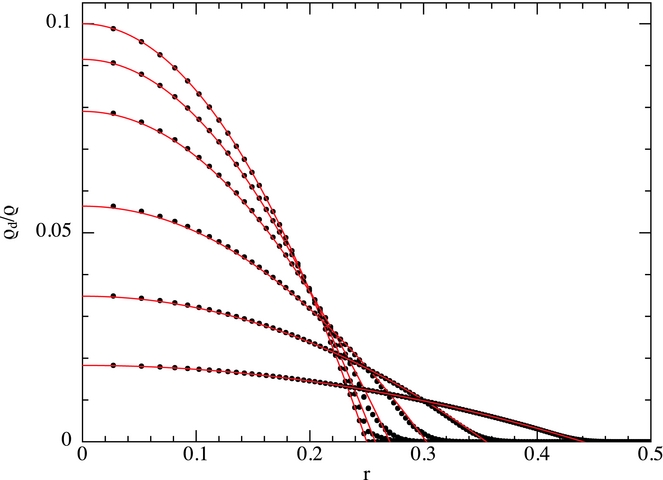

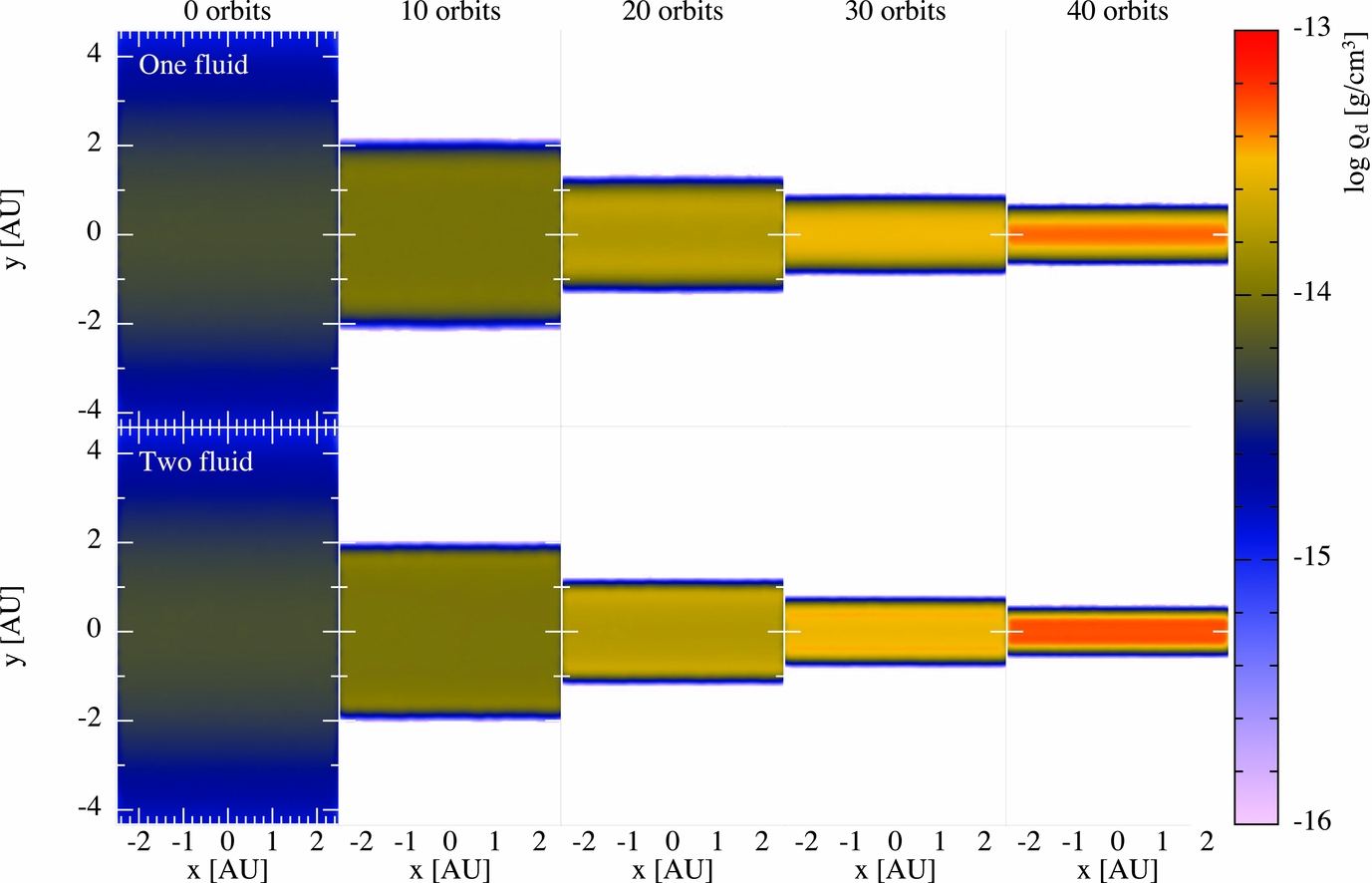

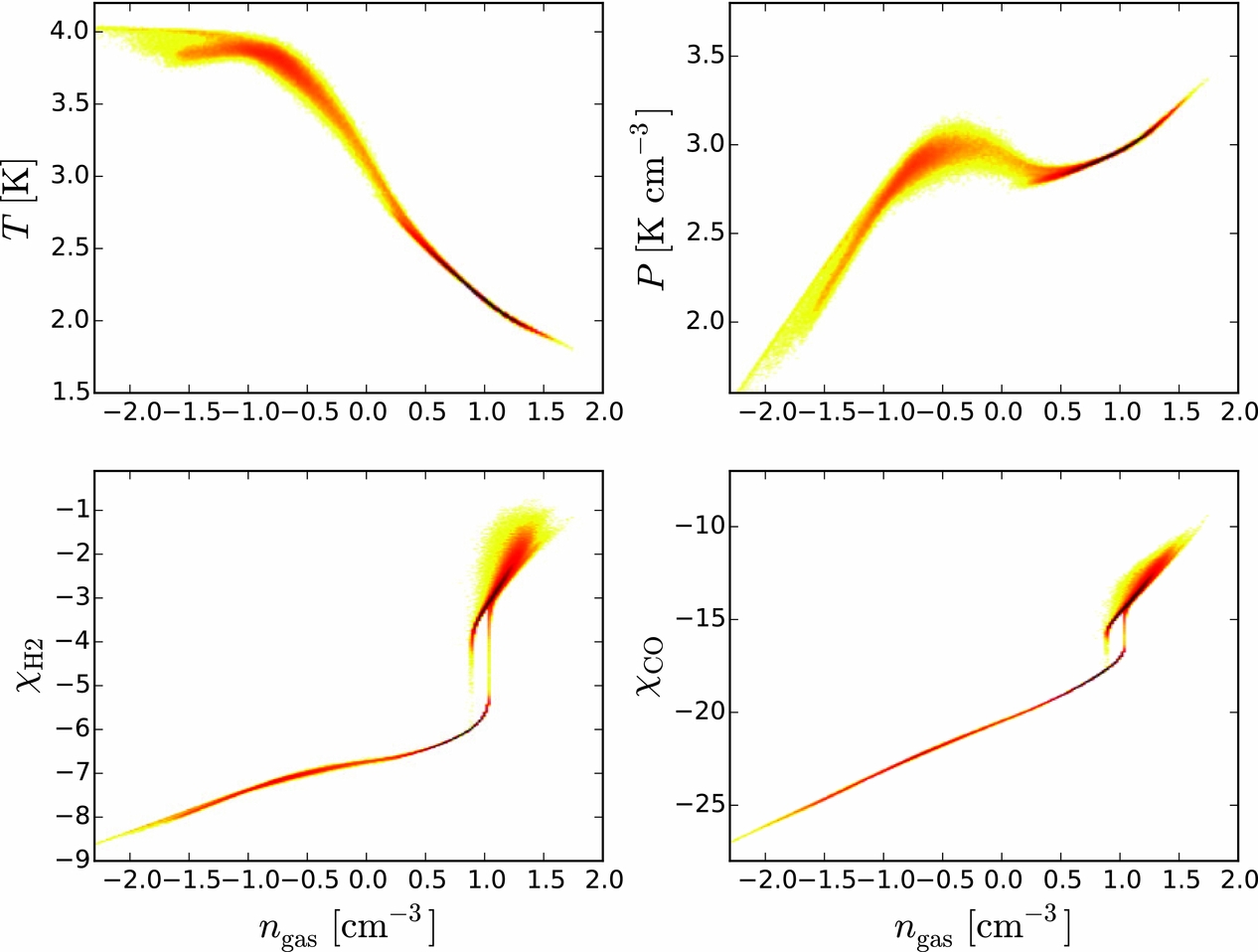

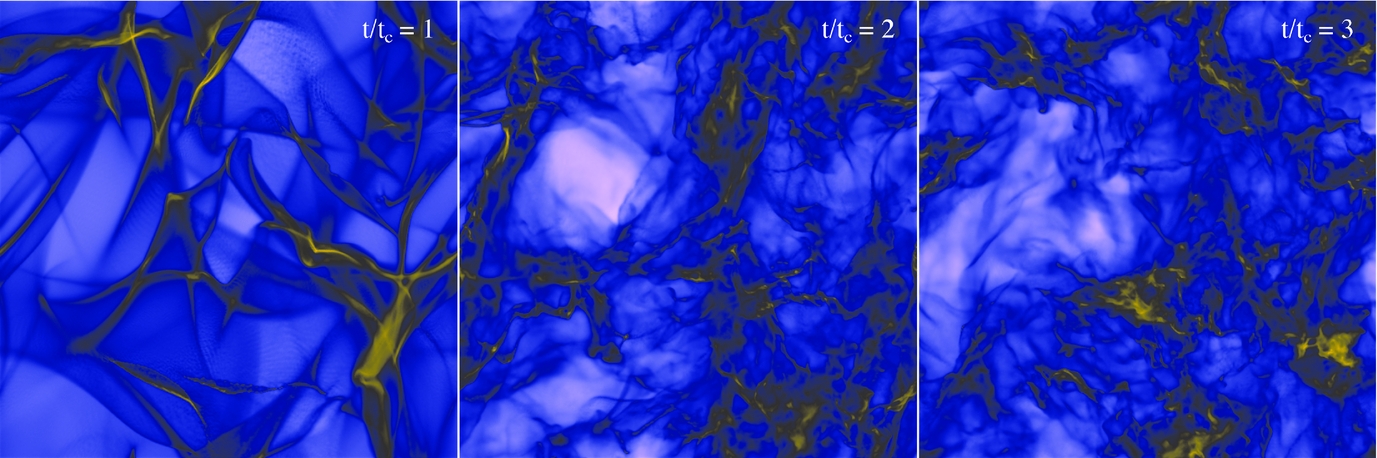

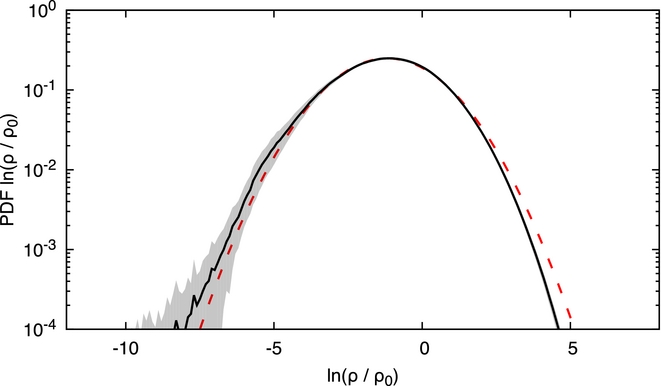

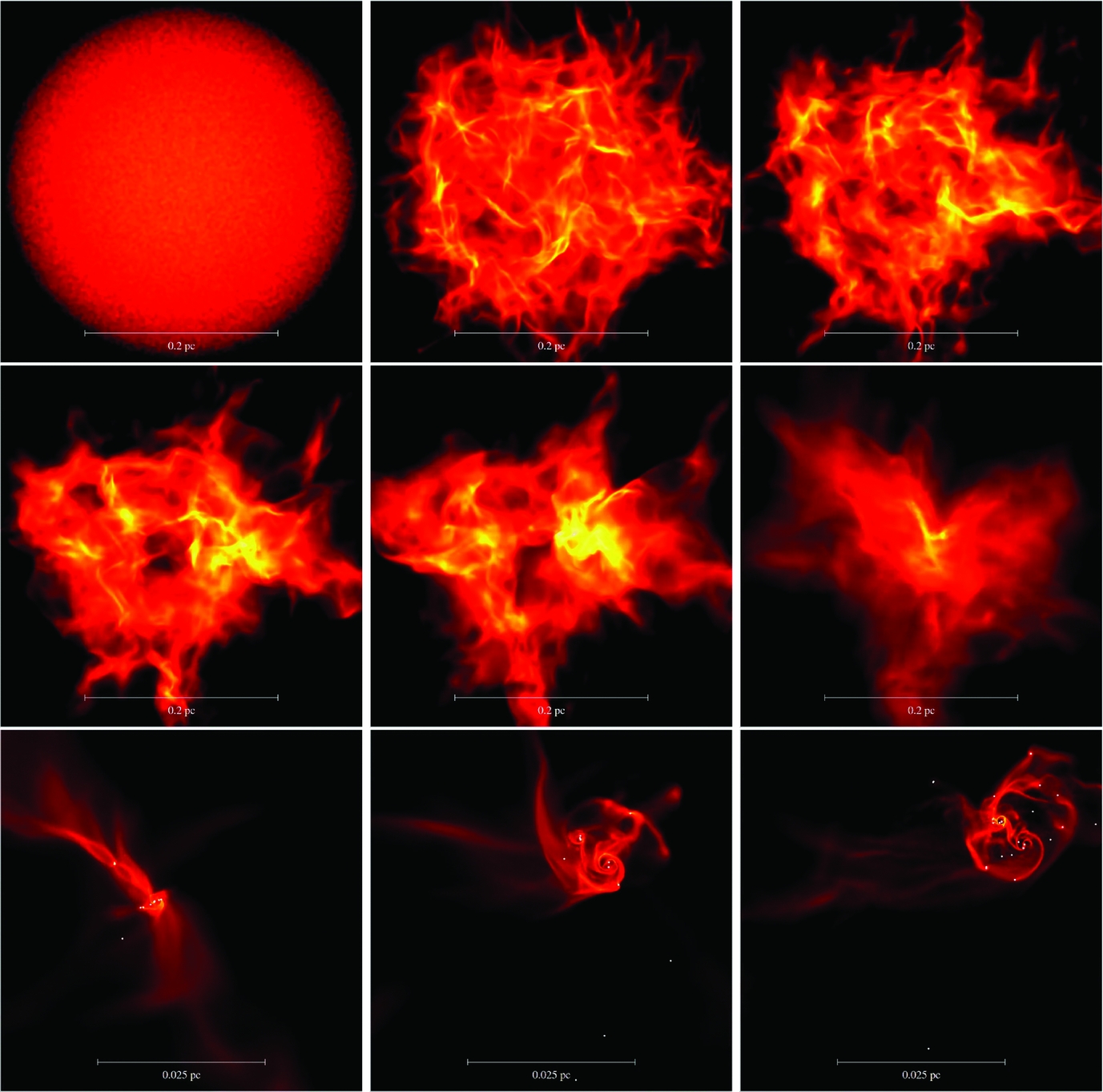

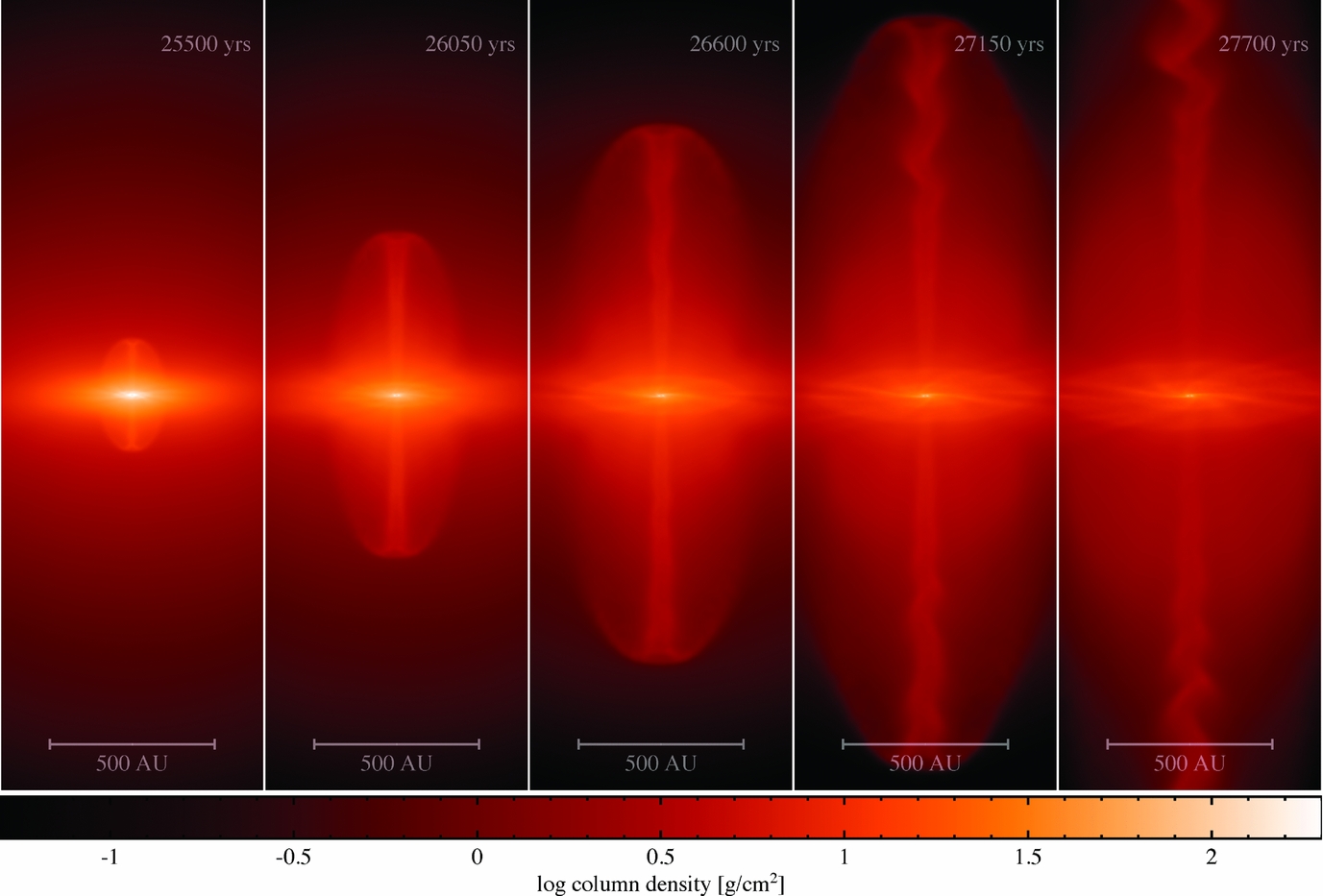

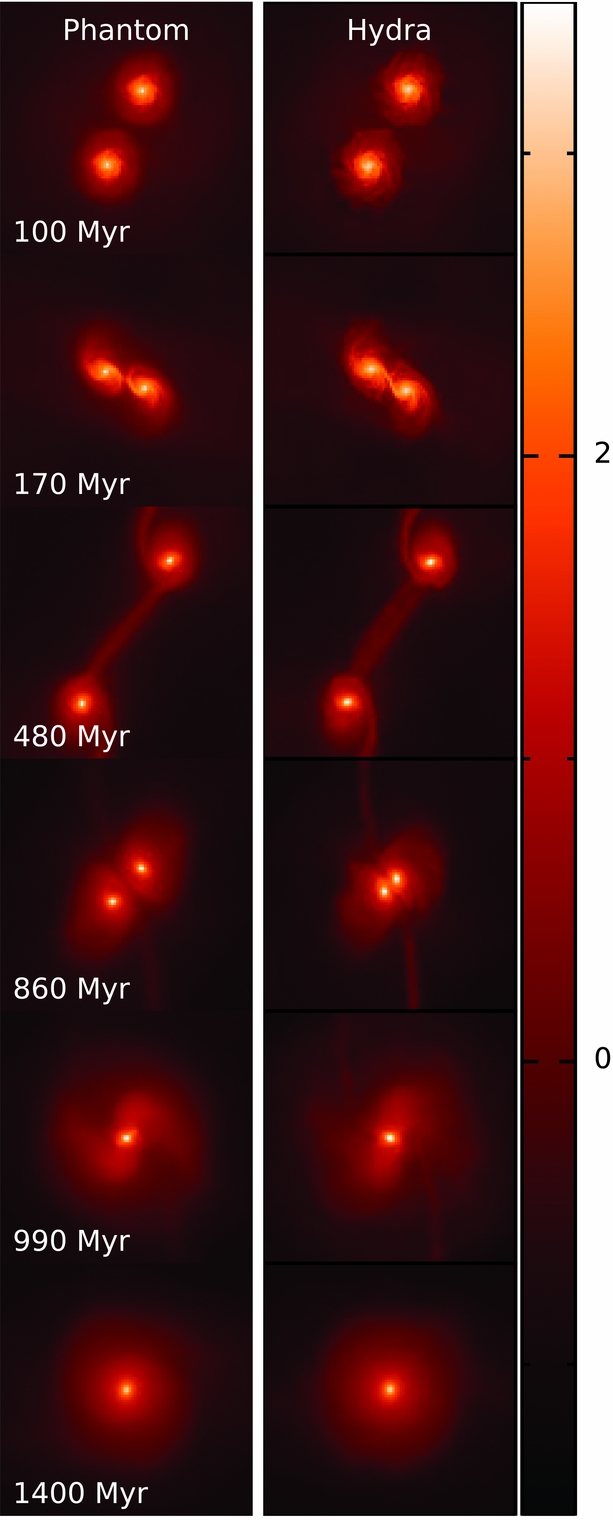

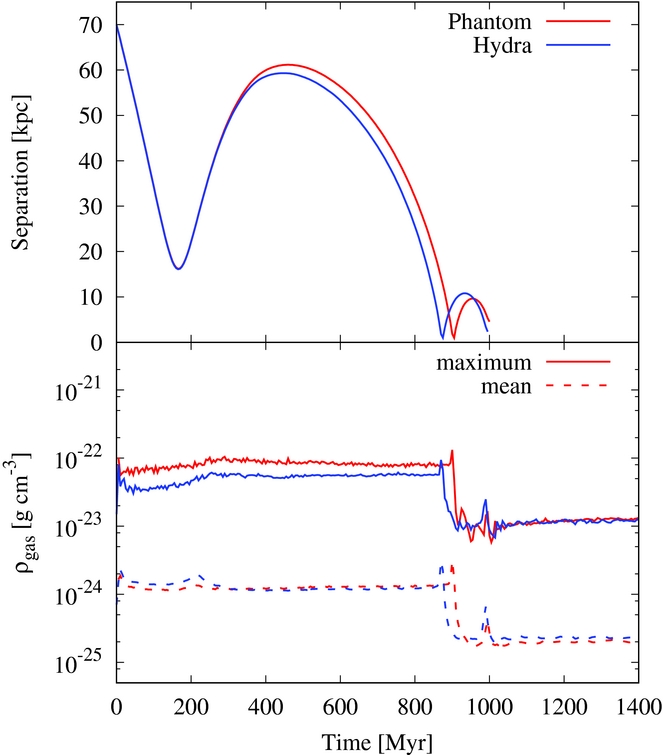

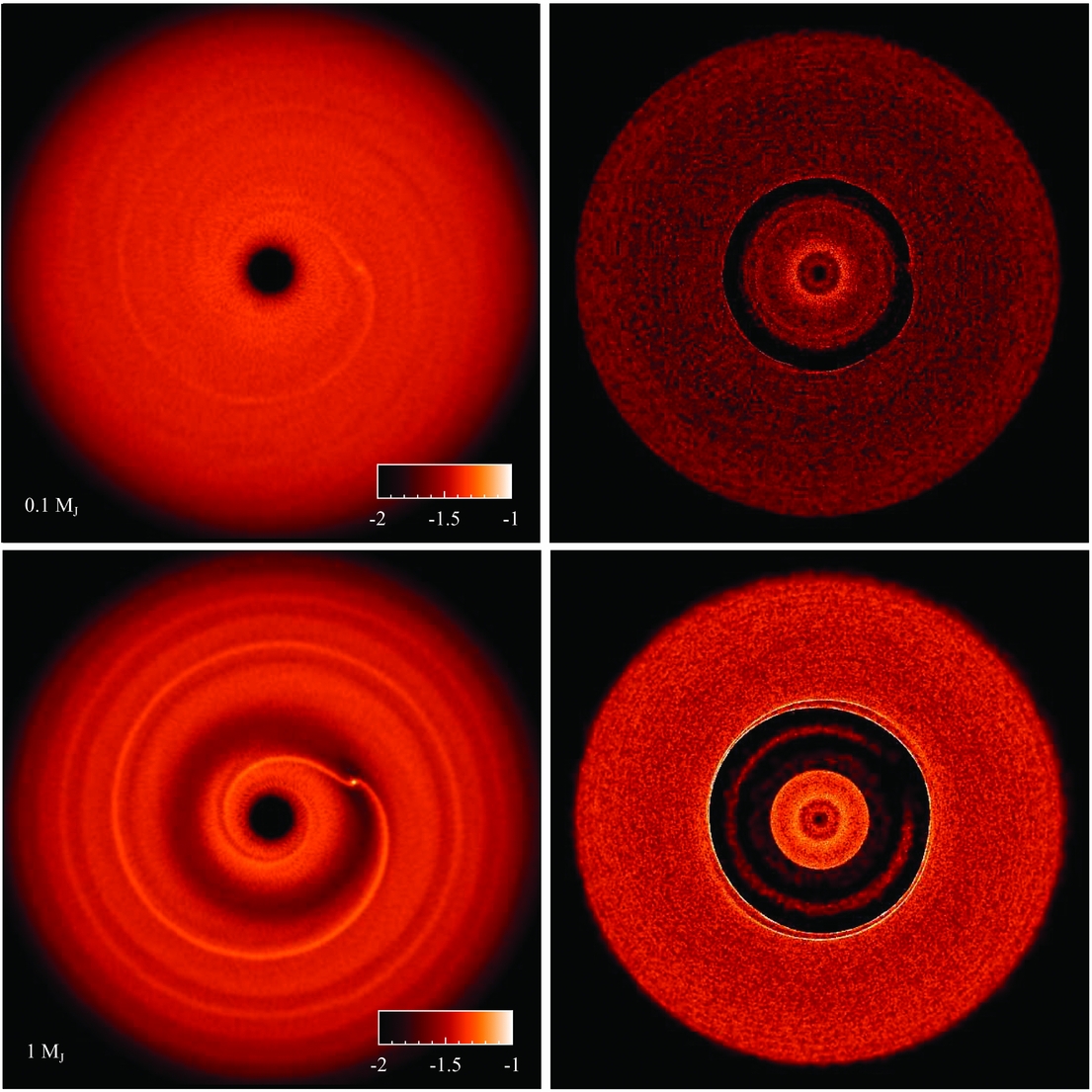

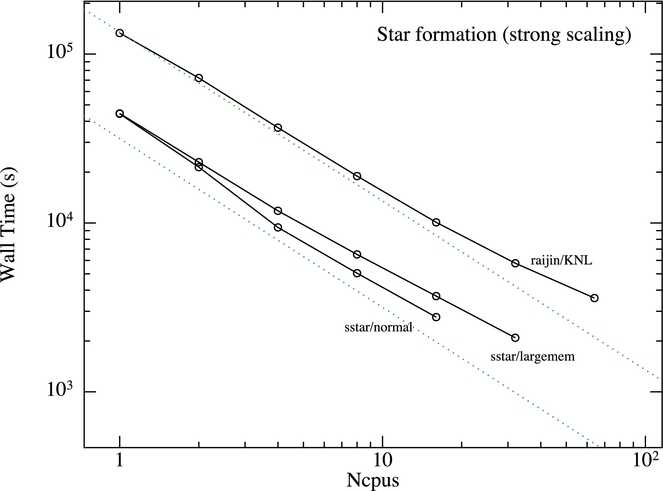

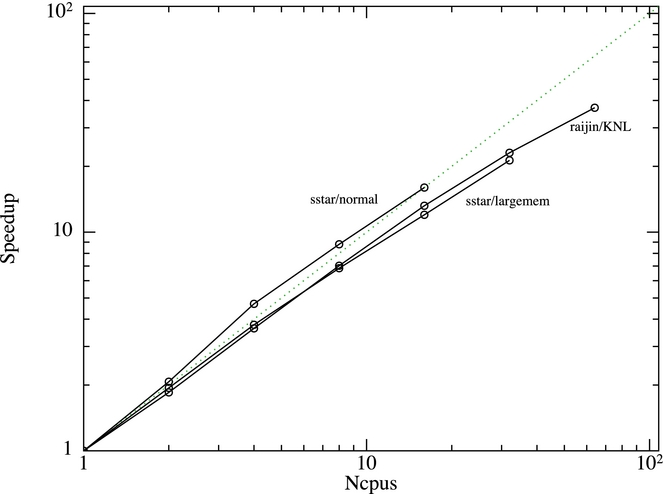

with R g ≡ GM/c 3 and ![]() $f \equiv \left(1 - 2R_{\rm g}/\vert {\bm r}_a \vert \right)$. See Bonnerot et al. (Reference Bonnerot, Rossi, Lodato and Price2016) for a recent application. This potential reproduces several features of the Schwarzschild (Reference Schwarzschild1916) spacetime, in particular, reproducing the orbital and epicyclic frequencies to better than 7% (Tejeda & Rosswog Reference Tejeda and Rosswog2013). As the acceleration involves velocity-dependent terms, it requires a semi-implicit solution like Lense–Thirring precession. Since the matrix equation is rather involved for this case, the corrector step is iterated using fixed point iterations until the velocity of each particle is converged to a tolerance of 1%.