1 Introduction

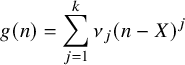

In this paper, we shall study correlations of arithmetic functions

![]() $f \colon \mathbb {N} \to \mathbb {C}$

with arbitrary nilsequences

$f \colon \mathbb {N} \to \mathbb {C}$

with arbitrary nilsequences

![]() $n \mapsto F(g(n) \Gamma )$

in short intervals. For simplicity, we will restrict attention to the following model examples of functions f:

$n \mapsto F(g(n) \Gamma )$

in short intervals. For simplicity, we will restrict attention to the following model examples of functions f:

-

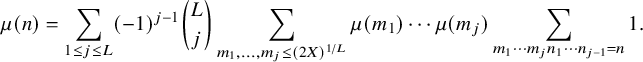

• The Möbius function

$\mu (n)$

, defined to equal

$\mu (n)$

, defined to equal

$(-1)^j$

when n is the product of j distinct primes, and

$(-1)^j$

when n is the product of j distinct primes, and

$0$

otherwise.

$0$

otherwise. -

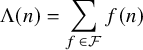

• The von Mangoldt function

$\Lambda (n)$

, defined to equal

$\Lambda (n)$

, defined to equal

$\log p$

when n is a power

$\log p$

when n is a power

$p^j$

of a prime p for some

$p^j$

of a prime p for some

$j \geq 1$

, and

$j \geq 1$

, and

$0$

otherwise.

$0$

otherwise. -

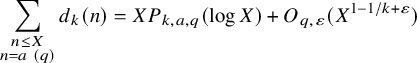

• The

$k^{\mathrm {th}}$

divisor function

$k^{\mathrm {th}}$

divisor function

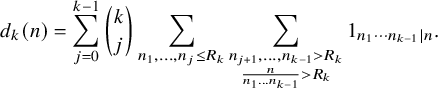

$d_k(n)$

, defined to equal the number of representations of n as the product

$d_k(n)$

, defined to equal the number of representations of n as the product

$n=n_1\dotsm n_k$

of k natural numbers, where

$n=n_1\dotsm n_k$

of k natural numbers, where

$k \geq 2$

is fixed. (In particular, all implied constants in our asymptotic notation are understood to depend on k.)

$k \geq 2$

is fixed. (In particular, all implied constants in our asymptotic notation are understood to depend on k.)
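As a concrete illustration of the three definitions above, here is a minimal Python sketch that evaluates $\mu(n)$, $\Lambda(n)$ and $d_k(n)$ by trial division. The function names (`factorize`, `mobius`, `von_mangoldt`, `divisor_k`) are hypothetical and do not come from the paper. The sketch also assumes the standard identity $d_k(p^a) = \binom{a+k-1}{k-1}$ together with the multiplicativity of $d_k$, neither of which is stated in the text. It is meant only for small $n$.

```python
from math import comb, log


def factorize(n):
    """Return the prime factorization of n >= 1 as a dict {prime: exponent}."""
    factors = {}
    p = 2
    while p * p <= n:
        while n % p == 0:
            factors[p] = factors.get(p, 0) + 1
            n //= p
        p += 1
    if n > 1:
        factors[n] = factors.get(n, 0) + 1
    return factors


def mobius(n):
    """(-1)^j if n is a product of j distinct primes, and 0 otherwise."""
    exponents = factorize(n).values()
    if any(e > 1 for e in exponents):
        return 0
    return (-1) ** len(exponents)


def von_mangoldt(n):
    """log p if n = p^j for a prime p and some j >= 1, and 0 otherwise."""
    factors = factorize(n)
    if len(factors) == 1:
        (p,) = factors.keys()
        return log(p)
    return 0.0


def divisor_k(n, k):
    """Number of ordered representations n = n_1 * ... * n_k in natural numbers.

    Assumption (standard, not from the text): d_k is multiplicative and
    d_k(p^a) = C(a + k - 1, k - 1).
    """
    result = 1
    for a in factorize(n).values():
        result *= comb(a + k - 1, k - 1)
    return result
```

For example, `divisor_k(12, 2)` counts the six divisors of 12, and `mobius(30)` is $(-1)^3$ because $30 = 2 \cdot 3 \cdot 5$.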

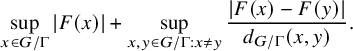

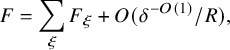

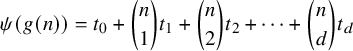

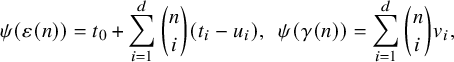

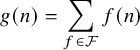

By a ‘nilsequence’, we mean a function of the form

![]() $n \mapsto F(g(n)\Gamma )$

, where

$n \mapsto F(g(n)\Gamma )$

, where

![]() $G/\Gamma $

is a filtered nilmanifold and

$G/\Gamma $

is a filtered nilmanifold and

![]() $F \colon G/\Gamma \to \mathbb {C}$

is a Lipschitz function. The precise definitions of these terms will be given in Section 2.3, but a simple example of a nilsequence to keep in mind for now is

$F \colon G/\Gamma \to \mathbb {C}$

is a Lipschitz function. The precise definitions of these terms will be given in Section 2.3, but a simple example of a nilsequence to keep in mind for now is

![]() $F(g(n) \Gamma ) = e(\alpha n^d)$

for some real number

$F(g(n) \Gamma ) = e(\alpha n^d)$

for some real number

![]() $\alpha $

, some natural number

$\alpha $

, some natural number

![]() $d \geq 0$

and with

$d \geq 0$

and with

![]() .

.

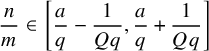

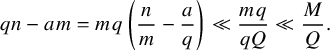

When f is nonnegative and

![]() $F(g(n) \Gamma )$

is a ‘major arc’ in some sense (e.g., if

$F(g(n) \Gamma )$

is a ‘major arc’ in some sense (e.g., if

![]() $F(g(n)\Gamma ) = e(\alpha n^s)$

with

$F(g(n)\Gamma ) = e(\alpha n^s)$

with

![]() $\alpha $

very close to a rational

$\alpha $

very close to a rational

![]() $a/q$

with small denominator q), there is actually correlation between f and

$a/q$

with small denominator q), there is actually correlation between f and

![]() $F(g(n) \Gamma )$

, but we shall deal with this by first subtracting off a suitable approximation

$F(g(n) \Gamma )$

, but we shall deal with this by first subtracting off a suitable approximation

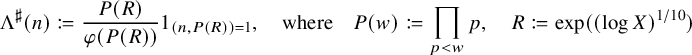

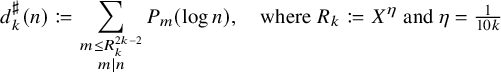

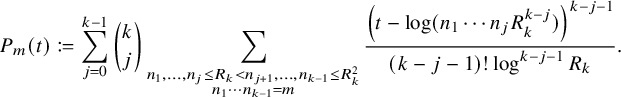

![]() $f^\sharp $

from f. In the case of the Möbius function

$f^\sharp $

from f. In the case of the Möbius function

![]() $\mu $

, we may set

$\mu $

, we may set

![]() $\mu ^\sharp = 0$

. On the other hand, the functions

$\mu ^\sharp = 0$

. On the other hand, the functions

![]() $\Lambda , d_k$

are nonnegative and one therefore needs to construct nontrivial approximants

$\Lambda , d_k$

are nonnegative and one therefore needs to construct nontrivial approximants

![]() $\Lambda ^\sharp , d_k^\sharp $

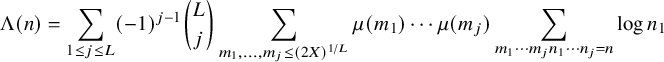

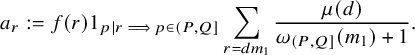

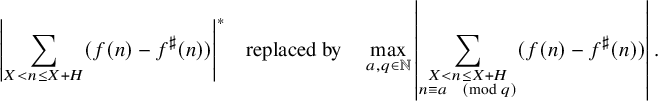

to such functions before one can expect to obtain discorrelation; we shall choose

$\Lambda ^\sharp , d_k^\sharp $

to such functions before one can expect to obtain discorrelation; we shall choose

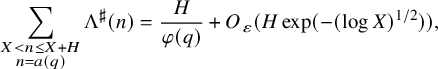

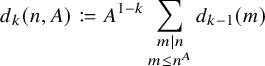

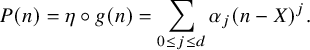

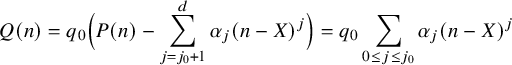

and

and the polynomials $P_m(t)$ (which have degree $k-1$) are given by the formula

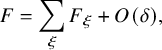

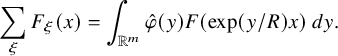

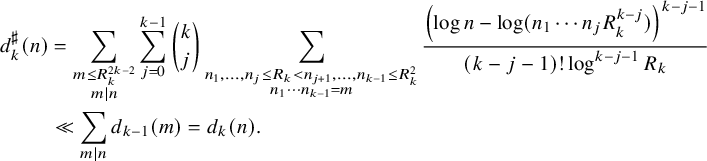

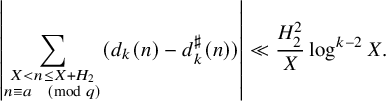

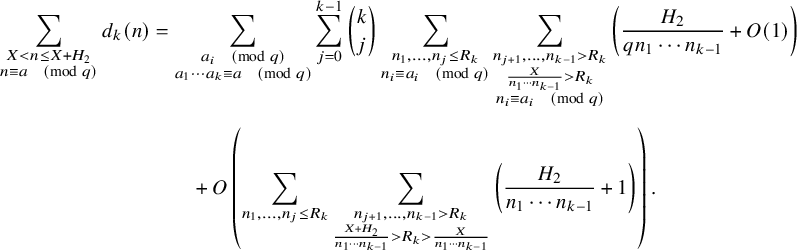

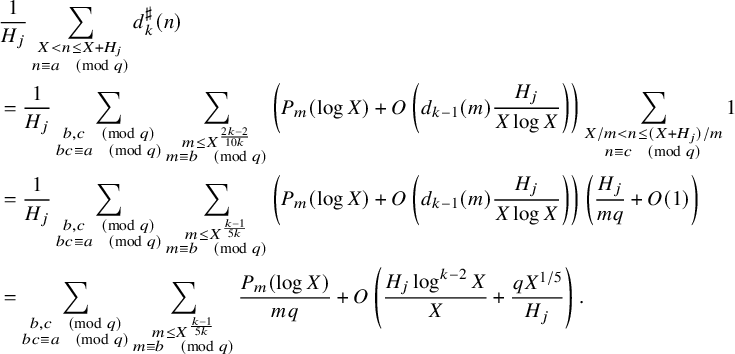

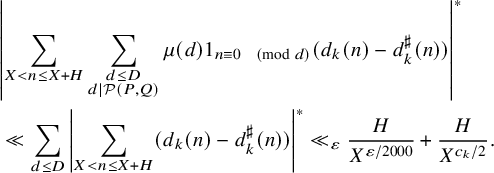

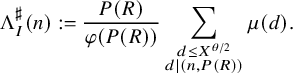

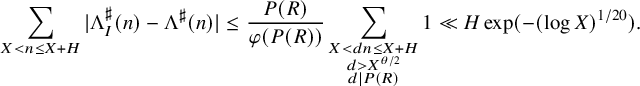

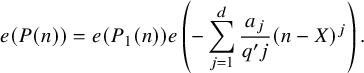

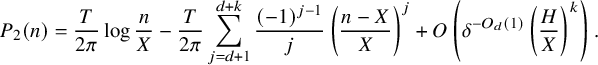

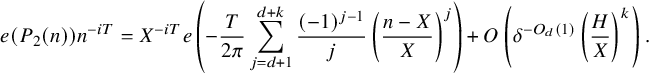

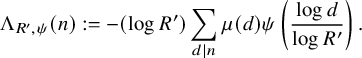

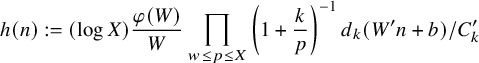

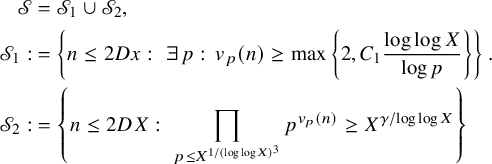

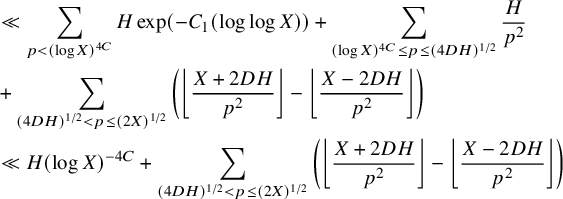

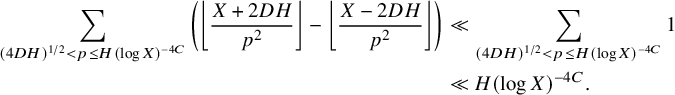

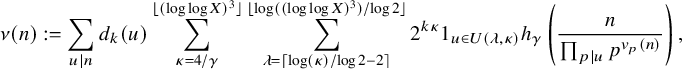

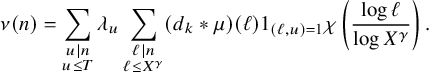

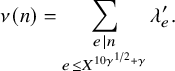

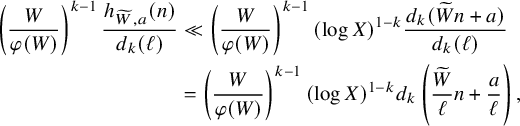

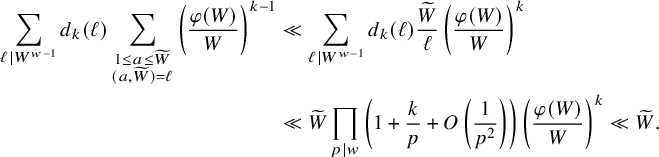

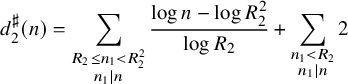

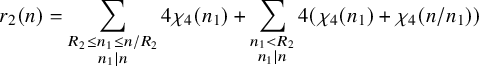

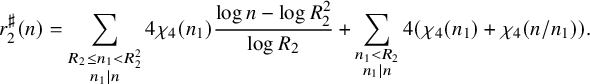

We will discuss these choices of approximants more in Section 3.1 (which can be read independently of the rest of the paper), but let us already here note that the approximants lead to type I sums and are thus easier to handle than the original functions and that the choice of the parameter R in

![]() $\Lambda ^{\sharp }$

allows for an arbitrary power of log saving in equation (1.6) below. Moreover, the approximants are nonnegative, which is helpful for some applications (in particular in the proof of Theorem 1.5 below). For future use, we record the fact that our correlation estimates for

$\Lambda ^{\sharp }$

allows for an arbitrary power of log saving in equation (1.6) below. Moreover, the approximants are nonnegative, which is helpful for some applications (in particular in the proof of Theorem 1.5 below). For future use, we record the fact that our correlation estimates for

![]() $d_k - d_k^{\sharp }$

work for

$d_k - d_k^{\sharp }$

work for

![]() $d_k^{\sharp }$

defined as in equation (1.2) with any fixed

$d_k^{\sharp }$

defined as in equation (1.2) with any fixed

![]() $0 < \eta \leq \frac {1}{10k}$

, as long as we allow implied constants to depend on

$0 < \eta \leq \frac {1}{10k}$

, as long as we allow implied constants to depend on

![]() $\eta $

.

$\eta $

.

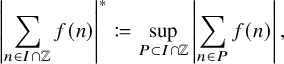

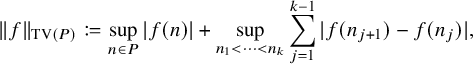

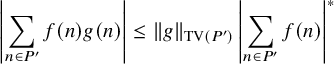

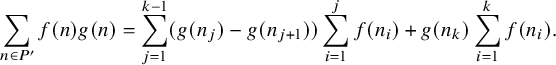

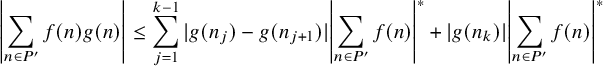

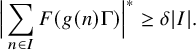

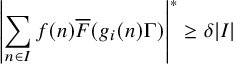

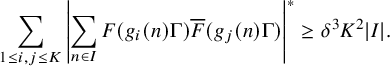

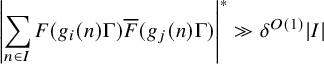

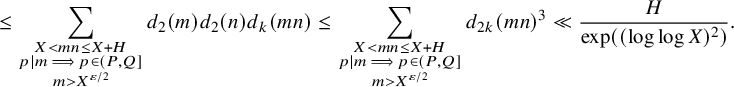

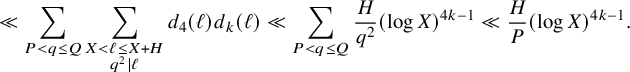

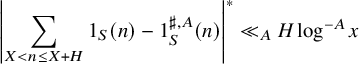

For technical reasons, it can be beneficial to consider ‘maximal discorrelation’ estimates. Loosely following Robert and Sargos [58], we adopt the convention (Footnote 1) that, for an interval $I$,

$$ \left| \sum_{n \in I} f(n) \right|^* := \sup_{P \subset I \cap \mathbb{Z}} \left| \sum_{n \in P} f(n) \right|, $$

where $P$ ranges over all arithmetic progressions in $I \cap \mathbb{Z}$.
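To make the maximal sum convention concrete, the following Python sketch evaluates it by brute force. It reads the convention as the supremum of $|\sum_{n \in P} f(n)|$ over all arithmetic progressions $P$ in $I \cap \mathbb{Z}$, and it assumes an interval of the form $I = (a, b]$ with integer endpoints. The name `maximal_sum` is hypothetical. The running time is roughly quadratic in the length of the interval, so this is an illustration rather than a practical tool.

```python
def maximal_sum(f, a, b):
    """Brute-force maximal sum of f over the integers in (a, b].

    Returns the largest value of |sum_{n in P} f(n)| as P ranges over all
    finite arithmetic progressions contained in (a, b]. The empty
    progression contributes 0.
    """
    best = 0.0
    length = b - a
    for start in range(a + 1, b + 1):
        for step in range(1, length + 1):
            partial = 0
            # Every prefix of start, start + step, ... is itself a progression.
            for n in range(start, b + 1, step):
                partial += f(n)
                best = max(best, abs(partial))
    return best
```

For instance, with $f(n) = (-1)^n$ on $(0, 10]$ the ordinary sum vanishes, whereas the maximal sum equals 5, attained on the progression of even numbers. This shows how the maximal sum detects correlation with residue classes that the plain sum can miss.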

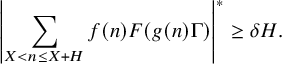

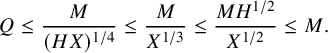

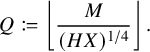

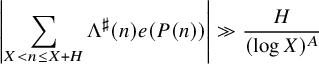

Now, we are ready to state our main theorem.Footnote 2

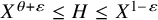

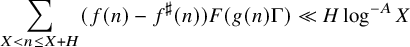

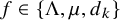

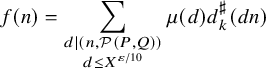

Theorem 1.1 (Discorrelation estimate).

Let $X \geq 3$, $X^{\theta+\varepsilon} \leq H \leq X^{1-\varepsilon}$ for some $0 < \theta < 1$ and $\varepsilon > 0$, and let $\delta \in (0,1)$. Let $G/\Gamma$ be a filtered nilmanifold of some degree $d$ and dimension $D$ and complexity at most $1/\delta$, and let $F \colon G/\Gamma \to \mathbb{C}$ be a Lipschitz function of norm at most $1/\delta$.

-

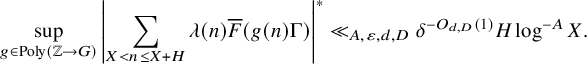

(i) If

$\theta = 5/8$

, then for all

$\theta = 5/8$

, then for all

$A> 0$

, (1.5)

$A> 0$

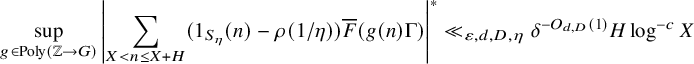

, (1.5) $$ \begin{align} \sup_{g \in {\operatorname{Poly}}(\mathbb{Z} \to G)} {\left| \sum_{X < n \leq X+H} \mu(n) \overline{F}(g(n)\Gamma) \right|}^* &\ll_{A,\varepsilon,d,D} \delta^{-O_{d,D}(1)} H \log^{-A} X \end{align} $$

$$ \begin{align} \sup_{g \in {\operatorname{Poly}}(\mathbb{Z} \to G)} {\left| \sum_{X < n \leq X+H} \mu(n) \overline{F}(g(n)\Gamma) \right|}^* &\ll_{A,\varepsilon,d,D} \delta^{-O_{d,D}(1)} H \log^{-A} X \end{align} $$

-

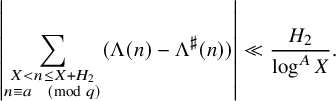

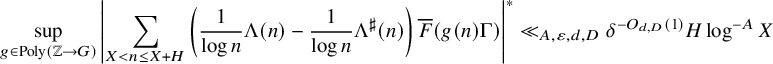

(ii) If

$\theta = 5/8$

, then for all

$\theta = 5/8$

, then for all

$A> 0$

, (1.6)

$A> 0$

, (1.6) $$ \begin{align} \sup_{g \in {\operatorname{Poly}}(\mathbb{Z} \to G)} {\left| \sum_{X < n \leq X+H} (\Lambda(n) - \Lambda^\sharp(n)) \overline{F}(g(n)\Gamma) \right|}^* &\ll_{A,\varepsilon,d,D} \delta^{-O_{d,D}(1)} H \log^{-A} X. \end{align} $$

$$ \begin{align} \sup_{g \in {\operatorname{Poly}}(\mathbb{Z} \to G)} {\left| \sum_{X < n \leq X+H} (\Lambda(n) - \Lambda^\sharp(n)) \overline{F}(g(n)\Gamma) \right|}^* &\ll_{A,\varepsilon,d,D} \delta^{-O_{d,D}(1)} H \log^{-A} X. \end{align} $$

-

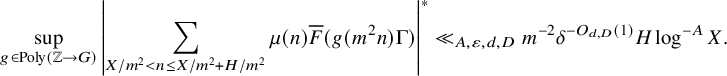

(iii) Let

$k \geq 2$

. Set

$k \geq 2$

. Set

$\theta = 1/3$

for

$\theta = 1/3$

for

$k=2$

,

$k=2$

,

$\theta =5/9$

for

$\theta =5/9$

for

$k=3$

, and

$k=3$

, and

$\theta =5/8$

for

$\theta =5/8$

for

$k \geq 4$

. Then (1.7)for some constant

$k \geq 4$

. Then (1.7)for some constant $$ \begin{align} \sup_{g \in {\operatorname{Poly}}(\mathbb{Z} \to G)}{\left| \sum_{X < n \leq X+H} (d_k(n) - d_k^\sharp(n)) \overline{F}(g(n)\Gamma) \right|}^* \ll_{\varepsilon,d,D} \delta^{-O_{d,D}(1)} H X^{-c_{k,d,D} \varepsilon} \end{align} $$

$$ \begin{align} \sup_{g \in {\operatorname{Poly}}(\mathbb{Z} \to G)}{\left| \sum_{X < n \leq X+H} (d_k(n) - d_k^\sharp(n)) \overline{F}(g(n)\Gamma) \right|}^* \ll_{\varepsilon,d,D} \delta^{-O_{d,D}(1)} H X^{-c_{k,d,D} \varepsilon} \end{align} $$

$c_{k,d,D}>0$

depending only on

$c_{k,d,D}>0$

depending only on

$k,d,D$

.

$k,d,D$

.

-

(iv) If

$\theta = 3/5$

, then (1.8)

$\theta = 3/5$

, then (1.8) $$ \begin{align} \sup_{g \in {\operatorname{Poly}}(\mathbb{Z} \to G)} {\left| \sum_{X < n \leq X+H} \mu(n) \overline{F}(g(n)\Gamma) \right|}^* \ll_{\varepsilon,d,D} \delta^{-O_{d,D}(1)} H \log^{-1/4} X. \end{align} $$

$$ \begin{align} \sup_{g \in {\operatorname{Poly}}(\mathbb{Z} \to G)} {\left| \sum_{X < n \leq X+H} \mu(n) \overline{F}(g(n)\Gamma) \right|}^* \ll_{\varepsilon,d,D} \delta^{-O_{d,D}(1)} H \log^{-1/4} X. \end{align} $$

-

(v) Let

$k \geq 4$

. If

$k \geq 4$

. If

$\theta = 3/5$

, then (1.9)

$\theta = 3/5$

, then (1.9) $$ \begin{align} \sup_{g \in {\operatorname{Poly}}(\mathbb{Z} \to G)}{\left| \sum_{X < n \leq X+H} (d_k(n) - d_k^\sharp(n)) \overline{F}(g(n)\Gamma) \right|}^* \ll_{\varepsilon,d,D} \delta^{-O_{d,D}(1)} H \log^{\frac{3}{4}k-1} X. \end{align} $$

$$ \begin{align} \sup_{g \in {\operatorname{Poly}}(\mathbb{Z} \to G)}{\left| \sum_{X < n \leq X+H} (d_k(n) - d_k^\sharp(n)) \overline{F}(g(n)\Gamma) \right|}^* \ll_{\varepsilon,d,D} \delta^{-O_{d,D}(1)} H \log^{\frac{3}{4}k-1} X. \end{align} $$

The dependency of the implied constants on A in equations (1.5) and (1.6) is ineffective due to the possible existence of Siegel zeros. All the other implied constants are effective.

Remark 1.2. One could extend the theorem to cover the range $X^{1-\varepsilon} \leq H \leq X$ without difficulty; however, this is not the most interesting regime and there are some places in the proof where the restriction to $H \leq X^{1-\varepsilon}$ is convenient. In the cases of equations (1.5), (1.8), the result for $X^{\theta+\varepsilon} \leq H \leq X^{1-\varepsilon}$ directly implies the result for $X^{1-\varepsilon} \leq H \leq X$ by splitting long sums into shorter ones. In the cases of equations (1.6), (1.7), (1.9), it turns out that there is some flexibility in the choice of the approximant (one can certainly vary $R$ in equation (1.1) or $R_k$ in equation (1.2) by a multiplicative factor $\asymp 1$), and then one can make a similar splitting argument. We leave the details to the interested reader.

In applications, $d, D, \delta$ will often be fixed; however, the fact that the constants here depend in a polynomial fashion on $\delta$ will be useful for induction purposes.

Note that polynomial phases $F(g(n)\Gamma) = e(P(n))$, with $P \colon \mathbb{Z} \to \mathbb{R}$ a polynomial of degree $d$, are a special case of nilsequences; in this case the filtered nilmanifold is the unit circle $\mathbb{R}/\mathbb{Z}$ (with $\mathbb{R} = (\mathbb{R},+)$ being the filtered nilpotent group with $\mathbb{R}_i = \mathbb{R}$ for $i \leq d$ and $\mathbb{R}_i = \{0\}$ for $i > d$) and $F(\alpha) = e(\alpha)$ for all $\alpha \in \mathbb{R}/\mathbb{Z}$. In particular, the results of Theorem 1.1 hold for polynomial phases, that is, with $G/\Gamma = \mathbb{R}/\mathbb{Z}$, $D = 1$, and with $\overline{F}(g(n)\Gamma)$ replaced with $e(P(n))$. Before moving on, let us for the convenience of the reader state the following corollary of our theorem in the polynomial phase case.

Corollary 1.3 (Discorrelation of $\mu$ and $\Lambda$ with polynomial phases in short intervals).

Let $X \geq 3$, and let $X^{\theta+\varepsilon} \leq H \leq X^{1-\varepsilon}$ for some $0 < \theta < 1$ and $\varepsilon > 0$. Let $d \geq 1$, and let $P \colon \mathbb{Z} \to \mathbb{R}$ be any polynomial of degree $d$.

(i) If $\theta = 5/8$, then, for all $A > 0$,

$$ \begin{align*} \left|\sum_{X < n\leq X+H}\mu(n)e(P(n))\right|\ll_{d,A,\varepsilon} \frac{H}{\log^A X}. \end{align*} $$

(ii) If $\theta = 5/8$ and $A > 0$, we have

$$ \begin{align*} \left|\sum_{X < n\leq X+H}\Lambda(n)e(P(n))\right|\leq \frac{H}{\log^A X}, \end{align*} $$

unless there exists $1 \leq q \leq (\log X)^{O_{d,A,\varepsilon}(1)}$ such that one has the ‘major arc’ property

$$ \begin{align} \max_{1\leq j\leq d}H^j\|q\alpha_j\|_{\mathbb{R}/\mathbb{Z}}\leq (\log X)^{O_{d,A,\varepsilon}(1)}, \tag{1.10} \end{align} $$

where $\alpha_j$ is the degree $j$ coefficient of the polynomial $n \mapsto P(n+X)$ and $\|y\|_{\mathbb{R}/\mathbb{Z}}$ denotes the distance from $y$ to the nearest integer(s).

(iii) If $\theta = 3/5$, then

$$ \begin{align*} \left|\sum_{X < n\leq X+H}\mu(n)e(P(n))\right|\ll_{d,\varepsilon} \frac{H}{\log^{1/4} X}. \end{align*} $$

The claims (i) and (iii) are immediate from Theorem 1.1, but (ii) requires a short argument, provided in Section 10. One could state an analogous result in the case of $d_k$ (with the same exponents as in Theorem 1.1).
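The quantity bounded in Corollary 1.3(i) and (iii) can be computed directly for small parameters. The following Python sketch does so under stated assumptions: the helper names (`mobius_sieve`, `e`, `twisted_mobius_sum`) are hypothetical, the polynomial $P$ is passed as a list of real coefficients, and floating-point arithmetic limits the accuracy of $e(P(n))$ when $n$ or the degree is large. It only illustrates what is being summed; small numerical experiments say nothing about the asymptotic ranges of $H$ in the corollary.

```python
import cmath


def mobius_sieve(limit):
    """Return a list mu with mu[n] equal to the Möbius function for 1 <= n <= limit."""
    mu = [1] * (limit + 1)
    is_prime = [True] * (limit + 1)
    for p in range(2, limit + 1):
        if is_prime[p]:
            # Each prime divisor flips the sign once.
            for m in range(p, limit + 1, p):
                if m > p:
                    is_prime[m] = False
                mu[m] = -mu[m]
            # Numbers divisible by p^2 are not squarefree.
            for m in range(p * p, limit + 1, p * p):
                mu[m] = 0
    return mu


def e(x):
    """The standard additive character e(x) = exp(2 pi i x)."""
    return cmath.exp(2j * cmath.pi * x)


def twisted_mobius_sum(X, H, coeffs, mu):
    """Compute sum_{X < n <= X+H} mu(n) e(P(n)), where P(n) = sum_j coeffs[j] * n**j."""
    total = 0
    for n in range(X + 1, X + H + 1):
        phase = sum(c * n**j for j, c in enumerate(coeffs))
        total += mu[n] * e(phase)
    return total
```

A possible use is `abs(twisted_mobius_sum(X, H, [0.0, alpha, beta], mobius_sieve(X + H)))`, to be compared with the trivial bound $H$.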

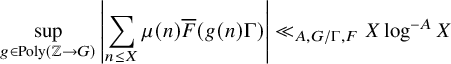

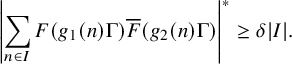

Let us now discuss the literature on the topic, starting with results concerning the Möbius function. A discorrelation estimate such as Theorem 1.1(i) with arbitrary

![]() $F(g(n) \Gamma )$

was previously only known in case of long intervals due to the work of Green and the third author [Reference Green and Tao18, Theorem 1.1]. Namely, they have shown that

$F(g(n) \Gamma )$

was previously only known in case of long intervals due to the work of Green and the third author [Reference Green and Tao18, Theorem 1.1]. Namely, they have shown that

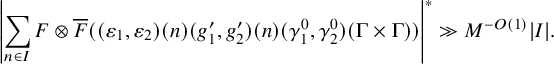

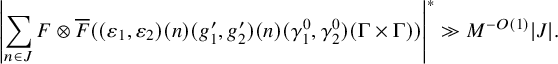

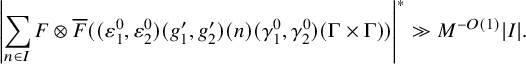

$$ \begin{align} \sup_{g \in {\operatorname{Poly}}(\mathbb{Z} \to G)} \left| \sum_{n \leq X} \mu(n) \overline{F}(g(n)\Gamma) \right| \ll_{A,G/\Gamma,F} X \log^{-A} X \end{align} $$

$$ \begin{align} \sup_{g \in {\operatorname{Poly}}(\mathbb{Z} \to G)} \left| \sum_{n \leq X} \mu(n) \overline{F}(g(n)\Gamma) \right| \ll_{A,G/\Gamma,F} X \log^{-A} X \end{align} $$

for any

![]() $X \geq 2$

,

$X \geq 2$

,

![]() $A>0$

, filtered nilmanifold

$A>0$

, filtered nilmanifold

![]() $G/\Gamma $

and Lipschitz function

$G/\Gamma $

and Lipschitz function

![]() $F \colon G/\Gamma \to \mathbb {C}$

. This result of Green and the third author is a vast generalization of a classical result of Davenport [Reference Davenport6], which states that

$F \colon G/\Gamma \to \mathbb {C}$

. This result of Green and the third author is a vast generalization of a classical result of Davenport [Reference Davenport6], which states that

$$ \begin{align} \sup_{\alpha \in \mathbb{R}} \left| \sum_{n \leq X} \mu(n) e(-\alpha n)\right| \ll_A X \log^{-A} X, \end{align} $$

$$ \begin{align} \sup_{\alpha \in \mathbb{R}} \left| \sum_{n \leq X} \mu(n) e(-\alpha n)\right| \ll_A X \log^{-A} X, \end{align} $$

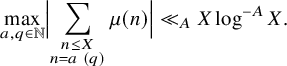

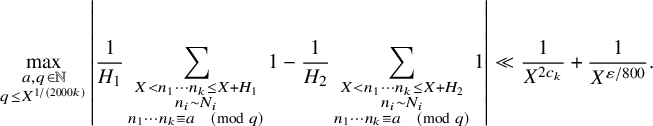

and of the Siegel–Walfisz theorem (see, e.g., [Reference Iwaniec and Kowalski37, Corollary 5.29]), which states that

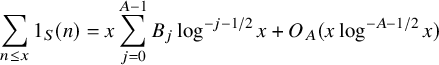

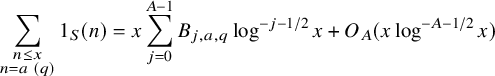

$$ \begin{align} \max_{a, q \in \mathbb{N}} \Bigl|\sum_{\substack{n \leq X\\ n = a\ (q)}} \mu(n) \Bigr| \ll_A X \log^{-A} X. \end{align} $$

$$ \begin{align} \max_{a, q \in \mathbb{N}} \Bigl|\sum_{\substack{n \leq X\\ n = a\ (q)}} \mu(n) \Bigr| \ll_A X \log^{-A} X. \end{align} $$

As is well-known, the bounds of

![]() $O_A(X \log ^{-A} X)$

here cannot be improved unconditionally with current technology, due to the possible existence of Siegel zeroes (unless one subtracts a correction term to account for the contribution of such zero; see [Reference Tao and Teräväinen61, Theorem 2.7]).

$O_A(X \log ^{-A} X)$

here cannot be improved unconditionally with current technology, due to the possible existence of Siegel zeroes (unless one subtracts a correction term to account for the contribution of such zero; see [Reference Tao and Teräväinen61, Theorem 2.7]).

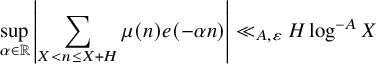

On the other hand, for short intervals there has been a lot of activity in the special case of polynomial phase twists.

Theorem 1.1(i) was previously only known in the linear phase case when

![]() $F(g(n)\Gamma ) = e(\alpha n)$

for any

$F(g(n)\Gamma ) = e(\alpha n)$

for any

![]() $\alpha \in \mathbb {R}$

by work of Zhan [Reference Zhan64]. More precisely, Zhan [Reference Zhan64, Theorem 5] established that

$\alpha \in \mathbb {R}$

by work of Zhan [Reference Zhan64]. More precisely, Zhan [Reference Zhan64, Theorem 5] established that

$$ \begin{align} \sup_{\alpha \in \mathbb{R}} \left|\sum_{X < n \leq X+H} \mu(n) e(-\alpha n)\right| \ll_{A,\varepsilon} H \log^{-A} X \end{align} $$

$$ \begin{align} \sup_{\alpha \in \mathbb{R}} \left|\sum_{X < n \leq X+H} \mu(n) e(-\alpha n)\right| \ll_{A,\varepsilon} H \log^{-A} X \end{align} $$

whenever

![]() $X^{5/8+\varepsilon } \leq H \leq X$

and

$X^{5/8+\varepsilon } \leq H \leq X$

and

![]() $A \geq 1$

. Hence, Theorem 1.1(i) can be seen as a vast extension of Zhan’s work.

$A \geq 1$

. Hence, Theorem 1.1(i) can be seen as a vast extension of Zhan’s work.

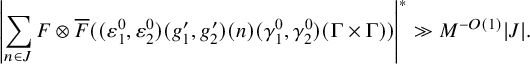

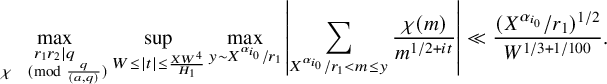

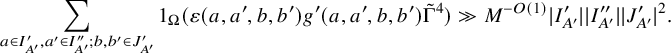

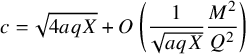

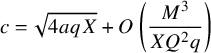

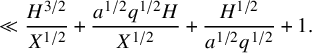

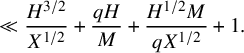

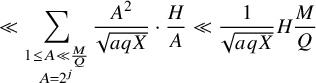

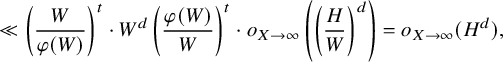

Concerning higher degree polynomials, the most recent result is due to the first two authors [49, Theorem 1.4] giving, for any polynomial $P(n)$ of degree $\leq d$,

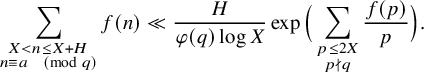

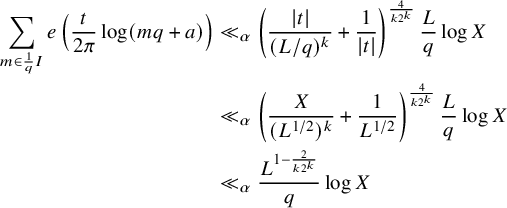

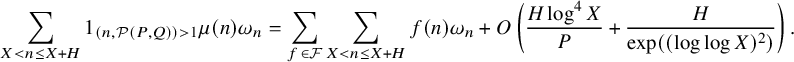

for all $A > 0$ and $X^{2/3+\varepsilon} \leq H \leq X$. In particular, a special case of Theorem 1.1(i) (recorded here as Corollary 1.3(i)) supersedes this result by showing it with the exponent $2/3$ lowered to $5/8$.

All the previous results mentioned so far for the Möbius function exist also for the von Mangoldt function as long as

![]() $F(g(n) \Gamma )$

or

$F(g(n) \Gamma )$

or

![]() $e(-P(n))$

is a ‘minor arc’ in a certain sense (for results corresponding to equations (1.11), (1.12), (1.13), (1.14) and (1.15), see, respectively, [Reference Green and Tao18, Section 7], [Reference Iwaniec and Kowalski37, Theorem 13.6], [Reference Iwaniec and Kowalski37, Corollary 5.29], [Reference Zhan64, Theorems 2–3] and [Reference Matomäki and Shao49, Theorem 1.1]). It is very likely that with our choice of approximant these arguments also extend to cover major arc cases and maximal correlations, although we will not detail this here as such claims follow in any case from Theorem 1.1.

$e(-P(n))$

is a ‘minor arc’ in a certain sense (for results corresponding to equations (1.11), (1.12), (1.13), (1.14) and (1.15), see, respectively, [Reference Green and Tao18, Section 7], [Reference Iwaniec and Kowalski37, Theorem 13.6], [Reference Iwaniec and Kowalski37, Corollary 5.29], [Reference Zhan64, Theorems 2–3] and [Reference Matomäki and Shao49, Theorem 1.1]). It is very likely that with our choice of approximant these arguments also extend to cover major arc cases and maximal correlations, although we will not detail this here as such claims follow in any case from Theorem 1.1.

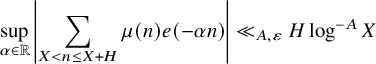

Theorem 1.1(iv) generalizes (albeit with a slightly weaker logarithmic saving) a result of the first and fourth authors [50, Theorem 1.5] that gave, for $0 < A < 1/3$,

$$ \begin{align} \sup_{\alpha \in \mathbb{R}} \left|\sum_{X < n \leq X+H} \mu(n) e(-\alpha n)\right| \ll_{A,\varepsilon} H \log^{-A} X \end{align} $$

in the regime $X \geq H \geq X^{3/5+\varepsilon}$ (actually [50, Remark 5.2] allows one to enlarge the range of $A$ to $0 < A < 1$).

The literature on correlations between $d_k$ and Fourier or higher-order phases is sparse. A variant of the long interval case (1.11) (with a weaker error term) follows from work of Matthiesen [51, Theorem 6.1].

Furthermore, it should be possible to adapt the existing results on polynomial correlations of $\Lambda(n)$ also to the case of $d_k(n)$ but with power savings. More precisely, one should be able to follow the approach of Zhan [64] to obtain discorrelation with linear phases $e(\alpha n)$ for $X \geq H \geq X^{5/8+\varepsilon}$ (for $k=2$ one can replace $5/8$ by $1/2$ and for $k=3$ one can replace $5/8$ by $3/5$) and the work of the first two authors [49] to obtain discorrelation with polynomial phases for $X \geq H \geq X^{2/3+\varepsilon}$ (for $k=2$ one can replace $2/3$ by $1/2$). We omit the details of these extensions of [64, 49] as they follow from our Theorem 1.1.

We note that in the case $k=2$ the exponent $1/3$ in Theorem 1.1(iii) matches the classical Voronoi exponent for the error term in long sums of the divisor function without any twist, and the result seems to be new even in the case of linear phases.

In the most major arc case $F(g(n)\Gamma) = 1$, shorter intervals can be reached than in Theorem 1.1; see Theorem 3.1 below. Furthermore, if one only wants discorrelation in almost all intervals, for instance by seeking to bound

$$\begin{align*}\int_X^{2X} \sup_{g \in {\operatorname{Poly}}(\mathbb{Z} \to G)} {\left| \sum_{x < n \leq x+H} (f(n)-f^\sharp(n)) \overline{F}(g(n)\Gamma) \right|}^* dx, \end{align*}$$

much shorter intervals can be reached with the aid of additional ideas. We will return to this question and its applications in a follow-up paper [46].

Remark 1.4. It should be clear to experts from an inspection of our arguments that the methods used in this paper could also treat other arithmetic functions with similar structure to

![]() $\mu $

,

$\mu $

,

![]() $\Lambda $

or

$\Lambda $

or

![]() $d_k$

. For instance, all of the results for the Möbius function

$d_k$

. For instance, all of the results for the Möbius function

![]() $\mu $

here have counterparts for the Liouville function

$\mu $

here have counterparts for the Liouville function

![]() $\lambda $

; the results for the von Mangoldt function

$\lambda $

; the results for the von Mangoldt function

![]() $\Lambda $

have counterparts (with somewhat different normalizations) for the indicator function

$\Lambda $

have counterparts (with somewhat different normalizations) for the indicator function

![]() $1_{\mathbb {P}}$

of the primes

$1_{\mathbb {P}}$

of the primes

![]() ${\mathbb {P}}$

, and the results for

${\mathbb {P}}$

, and the results for

![]() $d_2$

have counterparts for the function

$d_2$

have counterparts for the function

![]() counting the number of representations of n as the sum of two squares. We sketch the modifications needed to establish these variants in Appendix A. We also conjecture that the methods can be extended to treat the indicator function

counting the number of representations of n as the sum of two squares. We sketch the modifications needed to establish these variants in Appendix A. We also conjecture that the methods can be extended to treat the indicator function

![]() $1_S$

of the set

$1_S$

of the set

![]() of sums of two squares or the indicator

of sums of two squares or the indicator

![]() $1_{S_\eta }$

of

$1_{S_\eta }$

of

![]() $X^\eta $

-smooth numbers, although in those two cases a technical difficulty arises that the construction of a sufficiently accurate approximant to these indicator functions is nontrivial. Again, see Appendix A for further discussion.

$X^\eta $

-smooth numbers, although in those two cases a technical difficulty arises that the construction of a sufficiently accurate approximant to these indicator functions is nontrivial. Again, see Appendix A for further discussion.

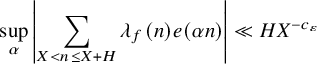

On the other hand, our arguments do not seem to easily extend to the Fourier coefficients

![]() $\lambda _f(n)$

of holomorphic cusp forms. The coefficients

$\lambda _f(n)$

of holomorphic cusp forms. The coefficients

![]() $\lambda _f(n)$

are analogous to

$\lambda _f(n)$

are analogous to

![]() $d_2(n)$

in many ways (though with vanishing approximant

$d_2(n)$

in many ways (though with vanishing approximant

![]() $\lambda ^\sharp _f = 0$

), and it is reasonable to conjecture parallel results for these two functions. For instance, in [Reference Ernvall-Hytönen and Karppinen10] it was established that

$\lambda ^\sharp _f = 0$

), and it is reasonable to conjecture parallel results for these two functions. For instance, in [Reference Ernvall-Hytönen and Karppinen10] it was established that

$$\begin{align*}\sup_\alpha \left| \sum_{X < n \leq X+H} \lambda_f(n) e(\alpha n) \right| \ll HX^{-c_\varepsilon} \end{align*}$$

$$\begin{align*}\sup_\alpha \left| \sum_{X < n \leq X+H} \lambda_f(n) e(\alpha n) \right| \ll HX^{-c_\varepsilon} \end{align*}$$

for

![]() $X^{2/5+\varepsilon } \leq H \leq X$

. See also [Reference He and Wang25] for a result with general nilsequences but long intervals. Unfortunately, the methods we use in this paper rely heavily on the convolution structure of the functions involved and do not obviously extend to give results for

$X^{2/5+\varepsilon } \leq H \leq X$

. See also [Reference He and Wang25] for a result with general nilsequences but long intervals. Unfortunately, the methods we use in this paper rely heavily on the convolution structure of the functions involved and do not obviously extend to give results for

![]() $\lambda _f$

.

$\lambda _f$

.

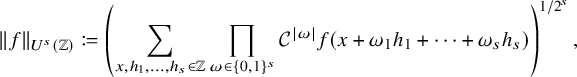

1.1 Gowers uniformity in short intervals

Just as discorrelation estimates with polynomial phases are important for applications of the circle method, discorrelation estimates with nilsequences are important in higher-order Fourier analysis due to the connection with the Gowers uniformity norms that we next discuss.

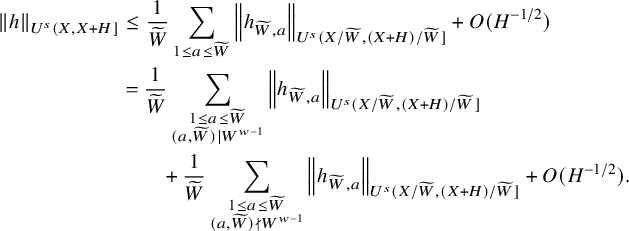

For any nonnegative integer

![]() $s \geq 1$

and any function

$s \geq 1$

and any function

![]() $f \colon \mathbb {Z} \to \mathbb {C}$

with finite support, define the (unnormalized) Gowers uniformity norm

$f \colon \mathbb {Z} \to \mathbb {C}$

with finite support, define the (unnormalized) Gowers uniformity norm

where

![]() $\omega = (\omega _1,\dots ,\omega _{s})$

,

$\omega = (\omega _1,\dots ,\omega _{s})$

,

, and

![]() $\mathcal {C} \colon z \mapsto \overline {z}$

is the complex conjugation map. Then for any interval

$\mathcal {C} \colon z \mapsto \overline {z}$

is the complex conjugation map. Then for any interval

![]() $(X,X+H]$

with

$(X,X+H]$

with

![]() $H \geq 1$

and any

$H \geq 1$

and any

![]() $f \colon \mathbb {Z} \to \mathbb {C}$

(not necessarily of finite support), define the Gowers uniformity norm over

$f \colon \mathbb {Z} \to \mathbb {C}$

(not necessarily of finite support), define the Gowers uniformity norm over

![]() $(X,X+H]$

by

$(X,X+H]$

by

where

![]() $1_{(X,X+H]} \colon \mathbb {Z} \to \mathbb {C}$

is the indicator function of

$1_{(X,X+H]} \colon \mathbb {Z} \to \mathbb {C}$

is the indicator function of

![]() $(X,X+H]$

.

$(X,X+H]$

.

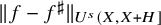

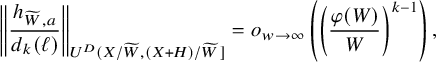
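To make the definition concrete, the following Python sketch evaluates a Gowers norm by brute force on a very small example. It assumes the standard convention that the unnormalized $U^s$ norm is the $2^s$-th root of the sum of $\prod_{\omega \in \{0,1\}^s} \mathcal{C}^{|\omega|} f(x+\omega\cdot h)$ over all $x, h_1, \dots, h_s$, and that the norm over $(X,X+H]$ divides by the same quantity for the indicator function. The function names and the dictionary representation of $f$ are illustrative choices, not notation from the paper, and the running time grows like $H^{s+1}$.

```python
from itertools import product


def gowers_unnormalized(f, s):
    """Brute-force unnormalized U^s norm of a finitely supported function.

    f is a dict {integer: value}; every integer missing from the dict maps to 0.
    """
    support = sorted(f)
    if not support:
        return 0.0
    width = support[-1] - support[0]
    # If some |h_i| exceeds the width of the support, the product vanishes.
    shifts = range(-width, width + 1)
    cube = list(product((0, 1), repeat=s))
    total = 0j
    for x in support:
        for h in product(shifts, repeat=s):
            term = 1 + 0j
            for omega in cube:
                point = x + sum(w * step for w, step in zip(omega, h))
                value = f.get(point, 0)
                if value == 0:
                    term = 0
                    break
                # C^{|omega|}: conjugate exactly when |omega| is odd.
                if sum(omega) % 2 == 1:
                    value = value.conjugate()
                term *= value
            total += term
    # The sum is real and nonnegative up to rounding error.
    return abs(total) ** (1.0 / 2 ** s)


def gowers_interval(f, X, H, s):
    """Normalized U^s norm of a function f on the integers over (X, X+H]."""
    interval = range(X + 1, X + H + 1)
    restricted = {n: f(n) for n in interval if f(n) != 0}
    indicator = {n: 1 for n in interval}
    return gowers_unnormalized(restricted, s) / gowers_unnormalized(indicator, s)
```

For instance, `gowers_interval(lambda n: (-1) ** n, 0, 8, 1)` vanishes because the alternating signs cancel, while the same function has $U^2$ norm equal to $1$ over the same interval, since a linear phase is invisible to the $U^2$ norm.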

Using the inverse theorem for Gowers norms (see Proposition 9.4) we can deduce the following theorem from Theorem 1.1 and a construction of pseudorandom majorants in Section 9.

Theorem 1.5 (Gowers uniformity estimate).

Let $X^{\theta+\varepsilon} \leq H \leq X^{1-\varepsilon}$ for some fixed $0 < \theta < 1$ and $\varepsilon > 0$. Let $s \geq 1$ be a fixed integer. Also, denote $\Lambda_{w}(n) := \frac{W}{\varphi(W)} 1_{(n,W)=1}$, where $W := \prod_{p \leq w} p$ and X is large enough in terms of w.

-

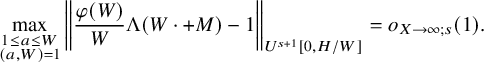

(i) If

$\theta = 5/8$

, then (1.18)

$\theta = 5/8$

, then (1.18) $$ \begin{align} &\|\Lambda-\Lambda_w\|_{U^s(X,X+H]}=o_{w\to \infty}(1), \end{align} $$

$$ \begin{align} &\|\Lambda-\Lambda_w\|_{U^s(X,X+H]}=o_{w\to \infty}(1), \end{align} $$

and for any

$1\leq a\leq W$

with

$1\leq a\leq W$

with

$(a,W)=1$

we have (1.19)

$(a,W)=1$

we have (1.19) $$ \begin{align} &\left\|\frac{\varphi(W)}{W}\Lambda(W\cdot+a)-1\right\|_{U^s(X,X+H]}=o_{w\to \infty}(1). \end{align} $$

$$ \begin{align} &\left\|\frac{\varphi(W)}{W}\Lambda(W\cdot+a)-1\right\|_{U^s(X,X+H]}=o_{w\to \infty}(1). \end{align} $$

-

(ii) Let

$k \geq 2$

. Set

$k \geq 2$

. Set

$\theta = 1/3$

for

$\theta = 1/3$

for

$k=2$

,

$k=2$

,

$\theta =5/9$

for

$\theta =5/9$

for

$k=3$

, and

$k=3$

, and

$\theta =3/5$

for

$\theta =3/5$

for

$k \geq 4$

. Then (1.20)and for any

$k \geq 4$

. Then (1.20)and for any $$ \begin{align} \|d_k-d_k^{\sharp}\|_{U^s(X, X+H]}=o(\log^{k-1} X), \end{align} $$

$$ \begin{align} \|d_k-d_k^{\sharp}\|_{U^s(X, X+H]}=o(\log^{k-1} X), \end{align} $$

$W'$

satisfying

$W'$

satisfying

$W\mid W'\mid W^{\lfloor w\rfloor }$

and for any

$W\mid W'\mid W^{\lfloor w\rfloor }$

and for any

$1\leq a\leq W'$

with

$1\leq a\leq W'$

with

$(a,W')=1$

we have (1.21)

$(a,W')=1$

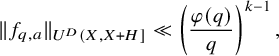

we have (1.21) $$ \begin{align} \|d_k(W'\cdot+a)-d_k^{\sharp}(W'\cdot+a)\|_{U^s(X, X+H]}=o_{w\to \infty}\left(\left(\frac{\varphi(W')}{W'}\right)^{k-1}\log^{k-1} X\right). \end{align} $$

$$ \begin{align} \|d_k(W'\cdot+a)-d_k^{\sharp}(W'\cdot+a)\|_{U^s(X, X+H]}=o_{w\to \infty}\left(\left(\frac{\varphi(W')}{W'}\right)^{k-1}\log^{k-1} X\right). \end{align} $$

-

(iii) If

$\theta = 3/5$

, then (1.22)

$\theta = 3/5$

, then (1.22) $$ \begin{align} \|\mu\|_{U^s(X, X+H]}=o(1). \end{align} $$

$$ \begin{align} \|\mu\|_{U^s(X, X+H]}=o(1). \end{align} $$

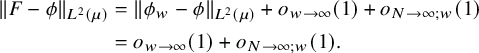

In all these estimates, the $o(1)$ notation is with respect to the limit $X \to \infty$ (holding $s, \varepsilon, k$ fixed).
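As a small numerical illustration of the model $\Lambda_w$ appearing in the theorem, the following sketch computes $W = \prod_{p \leq w} p$ and the function $\Lambda_w(n) = \frac{W}{\varphi(W)} 1_{(n,W)=1}$ for a small value of $w$, using exact rational arithmetic. The helper names are hypothetical. The point of the normalization is that $\Lambda_w$ has mean exactly $1$ over any full period of length $W$.

```python
from fractions import Fraction
from math import gcd


def primes_up_to(w):
    """Primes p <= w by trial division (adequate for the small w used here)."""
    return [p for p in range(2, w + 1)
            if all(p % q for q in range(2, int(p ** 0.5) + 1))]


def lambda_w(w):
    """Return W and the model n -> (W / phi(W)) * 1_{(n, W) = 1}."""
    W = 1
    phi = 1
    for p in primes_up_to(w):
        W *= p
        phi *= p - 1  # W is squarefree, so phi(W) is the product of (p - 1)
    weight = Fraction(W, phi)

    def model(n):
        return weight if gcd(n, W) == 1 else Fraction(0)

    return W, model
```

With `W, model = lambda_w(5)` one gets `W == 30`, the weight $30/8 = 15/4$ on integers coprime to $30$, and `sum(model(n) for n in range(1, 31)) == 30`.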

Remarks.

- The model $\Lambda_w$ with w fixed is simple to work with and arises in various applications of Gowers uniformity (e.g., to ergodic theory). This also motivates our choice of the $\Lambda^{\sharp}$ model in equation (1.1) (although that is defined with a larger value of w to produce better error terms).

- Since the bounds in this theorem (unlike in Theorem 1.1) are qualitative in nature, it should be possible to use Heath-Brown’s trick from [29] to extend the range of H from $X^{\theta+\varepsilon} \leq H \leq X^{1-\varepsilon}$ to $X^{\theta} \leq H \leq X^{1-\varepsilon}$. Also, the range $X^{1-\varepsilon} \leq H \leq X$ could be covered, as in Remark 1.2. We leave the details to the interested reader.

- In the case $s=2$, we obtain significantly stronger estimates thanks to the polynomial nature of the $U^2$ inverse theorem. Specifically, when $\theta = 5/8+\varepsilon$, we have

$$ \|\mu\|_{U^2(X, X+X^{\theta}]}, \|\Lambda-\Lambda^{\sharp}\|_{U^2(X, X+X^{\theta}]} \ll_{A,\varepsilon} \log^{-A} X $$

for all $A > 0$ and

$$ \|d_k-d_k^{\sharp}\|_{U^2(X, X+X^{\theta}]} \ll_\varepsilon X^{-c_{k}\varepsilon} \tag{1.23} $$

for some $c_{k} > 0$, with equation (1.23) also holding when $(k,\theta) = (3,5/9), (2,1/3)$, and finally

$$ \|\mu\|_{U^2(X, X+X^{\theta}]} \ll_\varepsilon \log^{-1/20} X $$

when $\theta = 3/5$. All of these follow directly by combining Theorem 1.1 for $d=1$ (that is, for Fourier phases in place of nilsequences) with the polynomial form of the $U^2$ inverse theorem, which states that if $f:[N]\to \mathbb{C}$ is $1$-bounded and $\|f\|_{U^2[N]} \geq \delta$ for some $\delta > 0$, then $|\sum_{n\leq N} f(n)e(\alpha n)|^{*} \gg \delta^4 N$ for some $\alpha \in \mathbb{R}$. This form of the inverse theorem follows directly from the Fourier representation of the $U^2[N]$ norm and Parseval’s theorem, where the Gowers norm $U^2[N]$ is defined analogously to equation (1.17).
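The Fourier-analytic argument behind the $U^2$ inverse theorem can be checked numerically. The sketch below is an illustration under stated assumptions rather than the argument used in the paper: it zero-pads $f$ to length $2N$ so that the unnormalized $U^2$ sum equals $\frac{1}{2N}\sum_\xi |\hat f(\xi)|^4$, normalizes by the same quantity for the constant function $1$, and compares $\delta$ with the largest Fourier coefficient. For a $1$-bounded $f$, Parseval then gives $\max_\xi |\hat f(\xi)| \geq \sqrt{2/3}\,\delta^2 N$ on this frequency grid, which is at least as strong as a bound of the form $\gg \delta^4 N$. The plain sum is used here, not the maximal sum $|\cdot|^{*}$, and all function names are hypothetical.

```python
import cmath


def dft(values):
    """Naive discrete Fourier transform (quadratic time; fine for small inputs)."""
    M = len(values)
    return [
        sum(v * cmath.exp(-2j * cmath.pi * xi * n / M) for n, v in enumerate(values))
        for xi in range(M)
    ]


def u2_fourth_power(f):
    """Sum of f(a) f(d) conj(f(b)) conj(f(c)) over a + d = b + c, via the DFT."""
    N = len(f)
    padded = list(f) + [0] * N  # length 2N, so a + d = b + c cannot wrap around
    spectrum = dft(padded)
    return sum(abs(c) ** 4 for c in spectrum) / len(padded), spectrum


def u2_inverse_check(f):
    """Return (delta, largest), where delta is the normalized U^2 norm of f on
    its N points and largest is the biggest Fourier coefficient on the grid."""
    N = len(f)
    fourth, spectrum = u2_fourth_power(f)
    fourth_one, _ = u2_fourth_power([1] * N)
    delta = (fourth / fourth_one) ** 0.25
    largest = max(abs(c) for c in spectrum)
    return delta, largest
```

For a pure phase such as `[cmath.exp(2j * cmath.pi * 0.25 * n) for n in range(16)]`, the check returns $\delta \approx 1$ and a largest coefficient of about $16$, the extreme case of the inequality.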

1.2 Applications

1.2.1 Polynomial phases

We already stated Corollary 1.3 concerning polynomial phases. But let us here mention that in a recent work of Kanigowski–Lemańczyk–Radziwiłł [Reference Kanigowski, Lemańczyk and Radziwiłł39] on the prime number theorem for analytic skew products, a key analytic input ([Reference Kanigowski, Lemańczyk and Radziwiłł39, Theorem 9.1]) was that Corollary 1.3(ii) holds for

![]() $H=X^{2/3-\eta }$

(with a weaker error term of

$H=X^{2/3-\eta }$

(with a weaker error term of

![]() $o_{\eta \to 0}(H)$

), thus going just beyond the range of validity of [Reference Matomäki and Shao49, Theorem 1.1]. Corollary 1.3 allows taking

$o_{\eta \to 0}(H)$

), thus going just beyond the range of validity of [Reference Matomäki and Shao49, Theorem 1.1]. Corollary 1.3 allows taking

![]() $\eta <1/24$

with strongly logarithmic savings for the error terms. Similar remarks apply to the recent work of Kanigowski [Reference Kanigowski38].

$\eta <1/24$

with strongly logarithmic savings for the error terms. Similar remarks apply to the recent work of Kanigowski [Reference Kanigowski38].

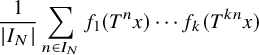

1.2.2 An application to ergodic theory

In a seminal work, Host and Kra [Reference Host and Kra32] showed that, for any measure-preserving system

![]() $(X,\mathcal {X},\mu ,T)$

, any bounded functions

$(X,\mathcal {X},\mu ,T)$

, any bounded functions

![]() $f_1,\ldots , f_k:X\to \mathbb {C}$

and any intervals

$f_1,\ldots , f_k:X\to \mathbb {C}$

and any intervals

![]() $I_N$

whose lengths tend to infinity as

$I_N$

whose lengths tend to infinity as

![]() $N\to \infty $

, the multiple ergodic averages

$N\to \infty $

, the multiple ergodic averages

$$ \begin{align*} \frac{1}{|I_N|}\sum_{n\in I_N}f_1(T^nx)\cdots f_k(T^{kn}x) \end{align*} $$

$$ \begin{align*} \frac{1}{|I_N|}\sum_{n\in I_N}f_1(T^nx)\cdots f_k(T^{kn}x) \end{align*} $$

converge in

![]() $L^2(\mu )$

as

$L^2(\mu )$

as

![]() $N\to \infty $

. Since this work, it has therefore become a natural and active question to determine for which sequences of intervals

$N\to \infty $

. Since this work, it has therefore become a natural and active question to determine for which sequences of intervals

![]() $(I_N)_N$

and weights

$(I_N)_N$

and weights

![]() $w:\mathbb {N}\to \mathbb {C}$

we have the

$w:\mathbb {N}\to \mathbb {C}$

we have the

![]() $L^2$

-convergence of

$L^2$

-convergence of

$$ \begin{align*} \frac{1}{|I_N|}\sum_{n\in I_N}w(n)f_1(T^nx)\cdots f_k(T^{kn}x) \end{align*} $$

$$ \begin{align*} \frac{1}{|I_N|}\sum_{n\in I_N}w(n)f_1(T^nx)\cdots f_k(T^{kn}x) \end{align*} $$

as

![]() $N\to \infty $

. The case of

$N\to \infty $

. The case of

![]() $I_N=[1,N]$

and with the weight being the primes, that is

$I_N=[1,N]$

and with the weight being the primes, that is

![]() $w(n)=1_{\mathbb {P}}(n)$

, was settled in the works of Frantzikinakis–Host–Kra [Reference Frantzikinakis, Host and Kra13] and Wooley–Ziegler [Reference Wooley and Ziegler63] (the results of [Reference Frantzikinakis, Host and Kra13] in the cases

$w(n)=1_{\mathbb {P}}(n)$

, was settled in the works of Frantzikinakis–Host–Kra [Reference Frantzikinakis, Host and Kra13] and Wooley–Ziegler [Reference Wooley and Ziegler63] (the results of [Reference Frantzikinakis, Host and Kra13] in the cases

![]() $k\geq 4$

were originally conditional on the Gowers uniformity of the von Mangoldt function). Analogous results also exist for weights w supported on a sequence given by a Hardy field [Reference Frantzikinakis12] or random sequences [Reference Frantzikinakis, Lesigne and Wierdl14]; see also [Reference Le42] for related results concerning correlation sequences

$k\geq 4$

were originally conditional on the Gowers uniformity of the von Mangoldt function). Analogous results also exist for weights w supported on a sequence given by a Hardy field [Reference Frantzikinakis12] or random sequences [Reference Frantzikinakis, Lesigne and Wierdl14]; see also [Reference Le42] for related results concerning correlation sequences

![]() $n \mapsto \int _X f_1(T^nx)\cdots f_k(T^{kn}x)\ d\mu (x)$

. As an application of Theorem 1.5, we can extend the result on prime weights to short collections of intervals

$n \mapsto \int _X f_1(T^nx)\cdots f_k(T^{kn}x)\ d\mu (x)$

. As an application of Theorem 1.5, we can extend the result on prime weights to short collections of intervals

![]() $(I_N)_N$

.

$(I_N)_N$

.

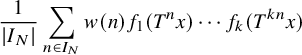

Theorem 1.6 (Multiple ergodic averages over primes in short intervals).

Let $k\geq 1$, $\varepsilon>0$ and $\kappa \in [5/8+\varepsilon, 1-\varepsilon]$. Let $h_1,\ldots, h_k$ be distinct positive integers. Let $(X,\mathcal{X},\mu,T)$ be a measure-preserving system. Let $f_1,\ldots, f_k:X\to \mathbb{C}$ be bounded and measurable. Then the multiple ergodic averages

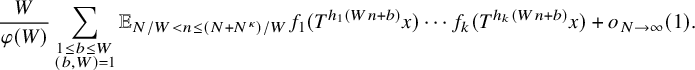

converge in $L^2(\mu)$.

The results of [Reference Frantzikinakis, Host and Kra13] and [Reference Wooley and Ziegler63] correspond to the case

![]() $\kappa =1$

. According to the best of our knowledge, Theorem 1.6 is the first result of its kind with

$\kappa =1$

. According to the best of our knowledge, Theorem 1.6 is the first result of its kind with

![]() $\kappa <1$

.

$\kappa <1$

.

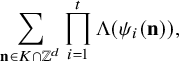

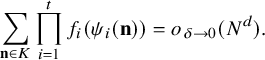

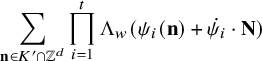

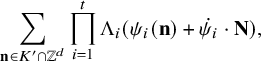

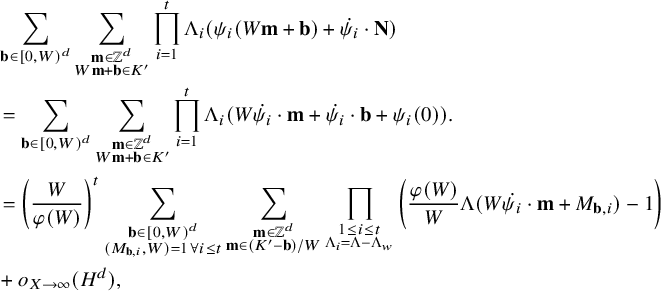

1.2.3 Linear equations in short intervals

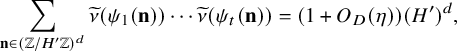

The work of Green and the third author [Reference Green and Tao17] on linear equations in primes (together with [Reference Green and Tao18], [Reference Green, Tao and Ziegler21]) provides for any finite complexity systems of linear forms

![]() $(\psi _1,\ldots , \psi _t):\mathbb {Z}^d\to \mathbb {Z}^t$

an asymptotic formula for

$(\psi _1,\ldots , \psi _t):\mathbb {Z}^d\to \mathbb {Z}^t$

an asymptotic formula for

$$ \begin{align} \sum_{\mathbf{n}\in K\cap \mathbb{Z}^d}\prod_{i=1}^t \Lambda(\psi_i(\mathbf{n})), \end{align} $$

$$ \begin{align} \sum_{\mathbf{n}\in K\cap \mathbb{Z}^d}\prod_{i=1}^t \Lambda(\psi_i(\mathbf{n})), \end{align} $$

whenever

![]() $K\subset [-X,X]^d$

is a convex body containing a positive proportion of the whole cube

$K\subset [-X,X]^d$

is a convex body containing a positive proportion of the whole cube

![]() $[-X,X]^d$

, that is,

$[-X,X]^d$

, that is,

![]() $\text {vol}(K)\gg X^d$

. One may ask if one can establish similar results when K is a smaller region in

$\text {vol}(K)\gg X^d$

. One may ask if one can establish similar results when K is a smaller region in

![]() $[-X,X]^d$

, of volume

$[-X,X]^d$

, of volume

![]() $\asymp X^{\theta d}$

with

$\asymp X^{\theta d}$

with

![]() $\theta <1$

. Note that, for a single linear form, this boils down to asymptotics for primes in short intervals (where the exponent

$\theta <1$

. Note that, for a single linear form, this boils down to asymptotics for primes in short intervals (where the exponent

![]() $\theta =7/12$

from [Reference Huxley33], [Reference Heath-Brown29] is the best one known). Using Theorem 1.5, we can indeed give asymptotics for equation (1.24) in small regions.

$\theta =7/12$

from [Reference Huxley33], [Reference Heath-Brown29] is the best one known). Using Theorem 1.5, we can indeed give asymptotics for equation (1.24) in small regions.

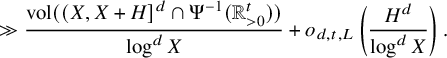

Theorem 1.7 (Generalized Hardy–Littlewood conjecture in small boxes for finite complexity systems).

Let

![]() $X \geq 3$

and

$X \geq 3$

and

![]() $X^{5/8+\varepsilon } \leq H \leq X^{1-\varepsilon }$

for some fixed

$X^{5/8+\varepsilon } \leq H \leq X^{1-\varepsilon }$

for some fixed

![]() $\varepsilon> 0$

. Let

$\varepsilon> 0$

. Let

![]() $d,t,L\geq 1$

. Let

$d,t,L\geq 1$

. Let

![]() $\Psi =(\psi _1,\ldots , \psi _t)$

be a system of affine-linear forms, where each

$\Psi =(\psi _1,\ldots , \psi _t)$

be a system of affine-linear forms, where each

![]() $\psi _i:\mathbb {Z}^d\to \mathbb {Z}$

has the form

$\psi _i:\mathbb {Z}^d\to \mathbb {Z}$

has the form

![]() $\psi _i(\mathbf {x})=\dot {\psi _i}\cdot \mathbf {x}+\psi _i(0)$

with

$\psi _i(\mathbf {x})=\dot {\psi _i}\cdot \mathbf {x}+\psi _i(0)$

with

![]() $\dot {\psi _i}\in \mathbb {Z}^d$

and

$\dot {\psi _i}\in \mathbb {Z}^d$

and

![]() $\psi _i(0)\in \mathbb {Z}$

satisfying

$\psi _i(0)\in \mathbb {Z}$

satisfying

![]() $|\dot {\psi _i}|\leq L$

and

$|\dot {\psi _i}|\leq L$

and

![]() $|\psi _i(0)|\leq LX$

. Suppose that

$|\psi _i(0)|\leq LX$

. Suppose that

![]() $\dot {\psi _i}$

and

$\dot {\psi _i}$

and

![]() $\dot {\psi _j}$

are linearly independent whenever

$\dot {\psi _j}$

are linearly independent whenever

![]() $i\neq j$

. Let

$i\neq j$

. Let

![]() $K\subset (X,X+H]^d$

be a convex body. Then

$K\subset (X,X+H]^d$

be a convex body. Then

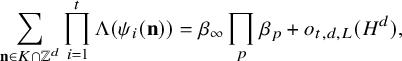

$$ \begin{align} \sum_{\mathbf{n}\in K\cap \mathbb{Z}^d}\prod_{i=1}^t \Lambda(\psi_i(\mathbf{n}))=\beta_{\infty}\prod_p \beta_p+o_{t,d,L}(H^d), \end{align} $$

$$ \begin{align} \sum_{\mathbf{n}\in K\cap \mathbb{Z}^d}\prod_{i=1}^t \Lambda(\psi_i(\mathbf{n}))=\beta_{\infty}\prod_p \beta_p+o_{t,d,L}(H^d), \end{align} $$

where $\Lambda$ is extended as $0$ to the nonpositive integers and the Archimedean factor is given by

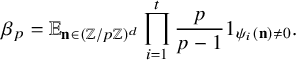

and the local factors are given by

$$ \begin{align*} \beta_p=\mathbb{E}_{\mathbf{n}\in (\mathbb{Z}/p\mathbb{Z})^d}\prod_{i=1}^t\frac{p}{p-1}1_{\psi_i(\mathbf{n})\neq 0}. \end{align*} $$

$$ \begin{align*} \beta_p=\mathbb{E}_{\mathbf{n}\in (\mathbb{Z}/p\mathbb{Z})^d}\prod_{i=1}^t\frac{p}{p-1}1_{\psi_i(\mathbf{n})\neq 0}. \end{align*} $$

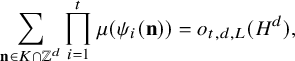
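The local factors $\beta_p$ are finite averages and can be evaluated by direct enumeration. The following sketch does this for a system of affine-linear forms, each encoded (as an assumption made for this illustration) by a pair consisting of its coefficient vector $\dot{\psi_i}$ and its constant term $\psi_i(0)$; the function name is likewise hypothetical.

```python
from fractions import Fraction
from itertools import product


def local_factor(p, forms, d):
    """beta_p for affine-linear forms given as (coefficients, constant) pairs.

    Counts the residues n in (Z/pZ)^d at which no form vanishes mod p and
    weights each of the t forms by p / (p - 1).
    """
    t = len(forms)
    good = 0
    for n in product(range(p), repeat=d):
        if all((sum(c * x for c, x in zip(coeffs, n)) + const) % p != 0
               for coeffs, const in forms):
            good += 1
    return Fraction(good, p ** d) * Fraction(p, p - 1) ** t
```

For the three forms $n_1$, $n_1+n_2$, $n_1+2n_2$ (three-term arithmetic progressions, $d=2$, $t=3$), this gives $\beta_2 = 2$ and $\beta_3 = 3/4$; for a single form $\psi(n) = n$ every $\beta_p$ equals $1$.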

Remark 1.8. From Theorem 1.5 and the proof method of Theorem 1.7, one can also deduce similar correlation results when in equation (1.25) one replaces

![]() $\Lambda $

with

$\Lambda $

with

![]() $\mu $

or

$\mu $

or

![]() $d_k$

(with the value of

$d_k$

(with the value of

![]() $\theta $

as in Theorem 1.5, and with no main term in the case of

$\theta $

as in Theorem 1.5, and with no main term in the case of

![]() $\mu $

, and a different local product in the case of

$\mu $

, and a different local product in the case of

![]() $d_k$

). More specifically, under the assumption of Theorem 1.7, we have

$d_k$

). More specifically, under the assumption of Theorem 1.7, we have

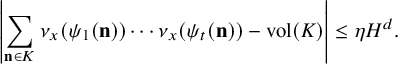

$$ \begin{align} \sum_{\mathbf{n}\in K\cap \mathbb{Z}^d}\prod_{i=1}^t \mu(\psi_i(\mathbf{n}))= o_{t,d,L}(H^d), \end{align} $$

$$ \begin{align} \sum_{\mathbf{n}\in K\cap \mathbb{Z}^d}\prod_{i=1}^t \mu(\psi_i(\mathbf{n}))= o_{t,d,L}(H^d), \end{align} $$

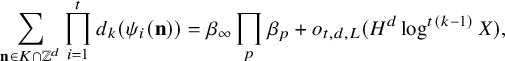

and, for a positive integer k,

$$ \begin{align*} \sum_{\mathbf{n}\in K\cap \mathbb{Z}^d}\prod_{i=1}^t d_k(\psi_i(\mathbf{n}))=\beta_{\infty}\prod_p \beta_p+o_{t,d,L}(H^d\log^{t(k-1)}X), \end{align*} $$

$$ \begin{align*} \sum_{\mathbf{n}\in K\cap \mathbb{Z}^d}\prod_{i=1}^t d_k(\psi_i(\mathbf{n}))=\beta_{\infty}\prod_p \beta_p+o_{t,d,L}(H^d\log^{t(k-1)}X), \end{align*} $$

where

![]() $d_k$

is extended as

$d_k$

is extended as

![]() $0$

to the nonpositive integers and the Archimedean factor is given by

$0$

to the nonpositive integers and the Archimedean factor is given by

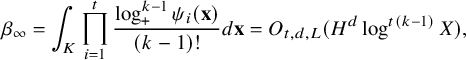

$$ \begin{align*} \beta_{\infty}=\int_K \prod_{i=1}^t \frac{\log_+^{k-1}\psi_i(\mathbf{x})}{(k-1)!} d\mathbf{x} = O_{t,d,L} (H^d \log^{t(k-1)}X), \end{align*} $$

$$ \begin{align*} \beta_{\infty}=\int_K \prod_{i=1}^t \frac{\log_+^{k-1}\psi_i(\mathbf{x})}{(k-1)!} d\mathbf{x} = O_{t,d,L} (H^d \log^{t(k-1)}X), \end{align*} $$

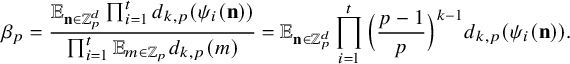

and the local factors are given by

$$ \begin{align*} \beta_p=\frac{\mathbb{E}_{\mathbf{n} \in \mathbb{Z}_p^d} \prod_{i=1}^t d_{k,p}(\psi_i(\mathbf{n}))}{\prod_{i=1}^t \mathbb{E}_{m \in \mathbb{Z}_p} d_{k,p}(m)} = \mathbb{E}_{\mathbf{n} \in \mathbb{Z}_p^d} \prod_{i=1}^t \Big(\frac{p-1}{p}\Big)^{k-1} d_{k,p}(\psi_i(\mathbf{n})). \end{align*} $$

$$ \begin{align*} \beta_p=\frac{\mathbb{E}_{\mathbf{n} \in \mathbb{Z}_p^d} \prod_{i=1}^t d_{k,p}(\psi_i(\mathbf{n}))}{\prod_{i=1}^t \mathbb{E}_{m \in \mathbb{Z}_p} d_{k,p}(m)} = \mathbb{E}_{\mathbf{n} \in \mathbb{Z}_p^d} \prod_{i=1}^t \Big(\frac{p-1}{p}\Big)^{k-1} d_{k,p}(\psi_i(\mathbf{n})). \end{align*} $$

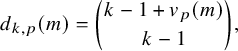

Here,

![]() $\log _+ y := \log \max (y, 1)$

,

$\log _+ y := \log \max (y, 1)$

,

![]() $\mathbb {Z}_p$

is the p-adics (with the usual Haar probability measure),

$\mathbb {Z}_p$

is the p-adics (with the usual Haar probability measure),

$$ \begin{align*}d_{k,p}(m) = \binom{k-1+v_p(m)}{k-1},\end{align*} $$

$$ \begin{align*}d_{k,p}(m) = \binom{k-1+v_p(m)}{k-1},\end{align*} $$

and

![]() $v_p(m)$

is the number of times p divides m. These local factors are natural extensions of the ones defined in [Reference Matomäki, Radziwiłł and Tao47, Remark 1.2] in the special case of two linear forms

$v_p(m)$

is the number of times p divides m. These local factors are natural extensions of the ones defined in [Reference Matomäki, Radziwiłł and Tao47, Remark 1.2] in the special case of two linear forms

![]() $\psi _1(n) = n, \psi _2(n) = n+h$

.

$\psi _1(n) = n, \psi _2(n) = n+h$

.
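The functions $d_{k,p}$ are the local components of the divisor function: for a positive integer $n$ one has $d_k(n) = \prod_{p \mid n} d_{k,p}(n)$. The following sketch (with hypothetical helper names, and valid only for positive integer arguments) checks this identity against a direct recursive count of ordered factorizations.

```python
from math import comb


def p_adic_valuation(m, p):
    """Number of times the prime p divides the positive integer m."""
    v = 0
    while m % p == 0:
        m //= p
        v += 1
    return v


def d_k_local(k, p, m):
    """d_{k,p}(m) = binom(k - 1 + v_p(m), k - 1)."""
    return comb(k - 1 + p_adic_valuation(m, p), k - 1)


def d_k(k, n):
    """Number of ways to write n as an ordered product of k natural numbers."""
    if k == 1:
        return 1
    return sum(d_k(k - 1, n // a) for a in range(1, n + 1) if n % a == 0)


def d_k_from_local(k, n):
    """Product of the local factors d_{k,p}(n) over the primes p dividing n."""
    result = 1
    remaining = n
    p = 2
    while remaining > 1:
        if remaining % p == 0:  # p is prime here: smaller factors were removed
            result *= d_k_local(k, p, n)
            while remaining % p == 0:
                remaining //= p
        p += 1
    return result
```

For example, $12 = 2^2 \cdot 3$ gives $d_3(12) = \binom{4}{2}\binom{3}{2} = 18$, which agrees with `d_k(3, 12)`.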

We have the following immediate corollary to Theorem 1.7.

Corollary 1.9 (Linear equations in primes in short intervals).

Let $X \geq 3$ and $X^{5/8+\varepsilon} \leq H \leq X^{1-\varepsilon}$ for some fixed $\varepsilon > 0$. Let $d,t,L\geq 1$. Let $\Psi=(\psi_1,\ldots, \psi_t):\mathbb{Z}^d\to \mathbb{Z}^t$ be a system of affine-linear forms, where each $\psi_i$ has the form $\psi_i(\mathbf{x})=\dot{\psi_i}\cdot \mathbf{x}+\psi_i(0)$ with $\dot{\psi_i}\in \mathbb{Z}^d$ and $\psi_i(0)\in \mathbb{Z}$ satisfying $|\dot{\psi_i}|\leq L$ and $|\psi_i(0)|\leq LX$. Suppose that $\dot{\psi_i}$ and $\dot{\psi_j}$ are linearly independent whenever $i\neq j$. Suppose that, for every prime p, the system of equations $\Psi(\mathbf{n})=0$ is solvable with $\mathbf{n}\in ((\mathbb{Z}/p\mathbb{Z})\setminus \{0\})^d$. Then the number of solutions to $\Psi(\mathbf{n})=0$ with $\mathbf{n}\in (\mathbb{P}\cap (X,X+H])^d$ is

$$ \gg \frac{\text{vol}((X,X+H]^d\cap \Psi^{-1}(\mathbb{R}_{>0}^t))}{\log^d X}+o_{d,t,L}\left(\frac{H^d}{\log^d X}\right). $$

Thus, for example, for any $\varepsilon>0$ and any large enough odd N there is a solution to

with

$p_i\in [N/3-N^{5/8+\varepsilon},N/3+N^{5/8+\varepsilon}]$. Without the condition $2p_1-p_2 \in \mathbb{P}$, this is due to Zhan [64]. The exponent $5/8$ in Zhan’s result has been improved using sieve methods (see, e.g., [3]) and more recently using the transference principle [43]. It would probably be possible to use a sieve method to improve on Corollary 1.9 as well; it would suffice to find a suitable minorant function for $\Lambda(n)$ that has positive average and is Gowers uniform in shorter intervals. Such a minorant could be constructed with our arithmetic information using Harman’s sieve method [24], but we do not do so here.

1.3 Methods of proof

We now describe (in somewhat informal terms) the general strategy of proof of our main theorems, although for various technical reasons the actual rigorous proof will not quite follow the intuitive plan that is outlined here.

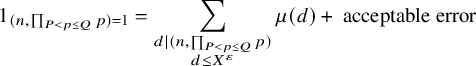

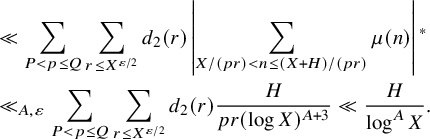

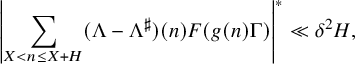

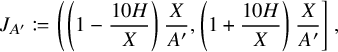

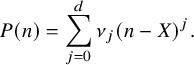

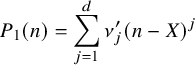

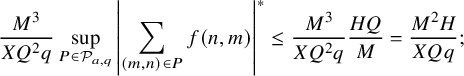

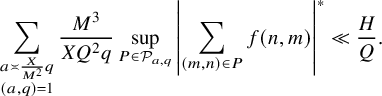

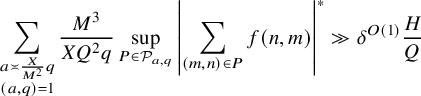

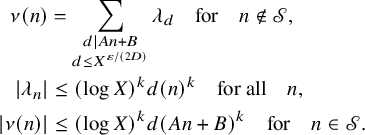

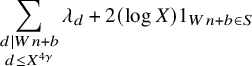

To prove Theorem 1.1, the first step, which is standard, is to apply Heath-Brown’s identity (Lemma 2.16) together with a combinatorial lemma regarding subsums of a finite number of nonnegative reals summing to one (Lemma 2.20) to decompose $\mu, \Lambda, d_k$ (up to small errors) into three standard types of sums:

- (I) Type I sums, which are roughly of the form $\alpha * 1 = \alpha * d_1$ for some arithmetic function $\alpha \colon \mathbb{N} \to \mathbb{C}$ supported on some interval $[1, A_I]$ that is not too large, and with $\alpha$ bounded in an $L^2$ averaged sense.

- ($I_2$) Type $I_2$ sums, which are roughly of the form $\alpha * d_2$ for some arithmetic function $\alpha \colon \mathbb{N} \to \mathbb{C}$ supported on some interval $[1, A_{I_2}]$ that is not too large, and with $\alpha$ bounded in an $L^2$ averaged sense.

- (II) Type $II$ sums, which are roughly of the form $\alpha * \beta$ for some arithmetic functions $\alpha, \beta \colon \mathbb{N} \to \mathbb{C}$ with $\alpha$ supported on some interval $[A_{II}^-, A_{II}^+]$ that is neither too long nor too close to $1$ or X, and with $\alpha, \beta$ bounded in an $L^2$ averaged sense.

This decomposition is detailed in Section 4. The precise ranges of parameters $A_I, A_{I_2}, A_{II}^-$, $A_{II}^+$ that arise in this decomposition depend on the choice of $\theta$ (and, in the case of $d_k$ for small k, on the value of k); this is encoded in the combinatorial lemma given here as Lemma 2.20.
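The three types of sums are all Dirichlet convolutions $(\alpha * \beta)(n) = \sum_{ab = n} \alpha(a)\beta(b)$, distinguished by the second factor ($1$, $d_2$, or a general $\beta$) and by where $\alpha$ is supported. The following sketch is only meant to illustrate this convolution structure on a small range; it does not implement Heath-Brown’s identity or the combinatorial lemma, and the function names are hypothetical. Arithmetic functions are stored as lists indexed by $n$, with index $0$ unused.

```python
from math import log


def dirichlet_convolution(alpha, beta, N):
    """Return the list of (alpha * beta)(n) for 0 <= n <= N (index 0 unused)."""
    result = [0] * (N + 1)
    for a in range(1, N + 1):
        if alpha[a] == 0:
            continue
        for b in range(1, N // a + 1):
            result[a * b] += alpha[a] * beta[b]
    return result


def mobius_table(N):
    """Moebius function on [1, N], filled in using sum_{d | n} mu(d) = 1_{n = 1}."""
    mu = [0] * (N + 1)
    mu[1] = 1
    for d in range(1, N + 1):
        # mu[d] is final here, since all proper divisors of d were processed.
        for multiple in range(2 * d, N + 1, d):
            mu[multiple] -= mu[d]
    return mu
```

With `ones = [0] + [1] * N`, the convolution of `ones` with itself is $d_2$, the convolution of `mobius_table(N)` with `ones` is the indicator of $n = 1$, and convolving `mobius_table(N)` with the table of $\log n$ recovers $\Lambda$. Setting the entries of `alpha` to zero outside $[1, A]$ before convolving with `ones` produces a sum of type I shape with $A_I = A$.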

The treatment of these types of sums (in Theorem 4.2) depends on the behavior of the nilsequence

![]() $F(g(n) \Gamma )$

, in particular whether it is ‘major arc’ or ‘minor arc’. This splitting into different behaviors will be done somewhat differently for different types of sums.

$F(g(n) \Gamma )$

, in particular whether it is ‘major arc’ or ‘minor arc’. This splitting into different behaviors will be done somewhat differently for different types of sums.

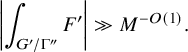

In case of type I and type

![]() $I_2$

sums, one can use the equidistribution theory of nilmanifolds to essentially reduce to two cases, the major arc case in which the nilsequence

$I_2$

sums, one can use the equidistribution theory of nilmanifolds to essentially reduce to two cases, the major arc case in which the nilsequence

![]() $F(g(n) \Gamma )$

behaves like (or ‘pretends to be’) the constant function

$F(g(n) \Gamma )$

behaves like (or ‘pretends to be’) the constant function

![]() $1$

(or some other function of small period), and the minor arc case in which F has mean zero and

$1$

(or some other function of small period), and the minor arc case in which F has mean zero and

![]() $g(n) \Gamma $

is highly equidistributed in the nilmanifold

$g(n) \Gamma $

is highly equidistributed in the nilmanifold

![]() $G/\Gamma $

. The contribution of type I and type

$G/\Gamma $

. The contribution of type I and type

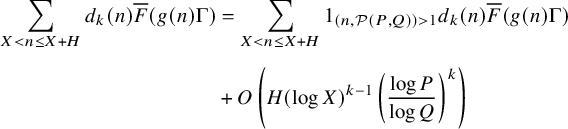

![]() $I_2$

major arc sums can be treated by standard methods, namely an application of Perron’s formula and mean value theorems for Dirichlet series; see Section 3.

$I_2$

major arc sums can be treated by standard methods, namely an application of Perron’s formula and mean value theorems for Dirichlet series; see Section 3.

The contribution of type I minor arc sums can be treated by a slight modification of the arguments in [18], which are based on the ‘quantitative Leibman theorem’ (Theorem 2.7 below) that characterizes when a nilsequence is equidistributed, as well as a classical lemma of Vinogradov (Lemma 2.3 below) that characterizes when a polynomial modulo $1$ is equidistributed. (Actually, it will be convenient to rely primarily on a corollary of Lemma 2.3 that asserts that if typical dilates of a polynomial are equidistributed modulo $1$, then the polynomial itself is equidistributed modulo $1$; see Corollary 2.4 below.)

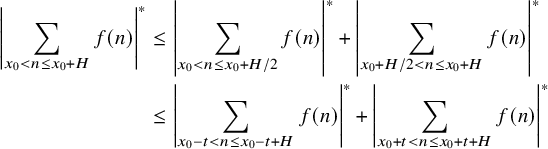

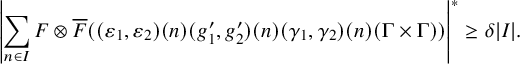

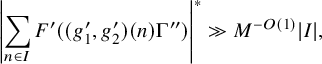

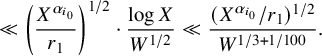

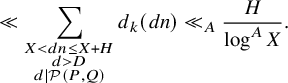

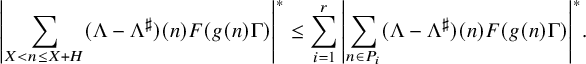

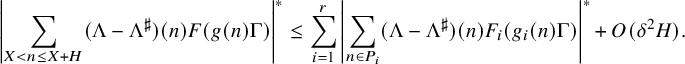

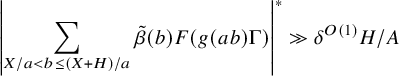

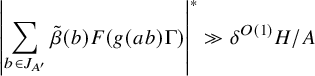

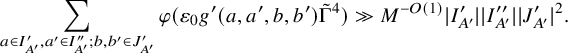

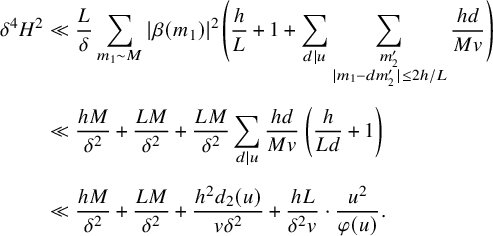

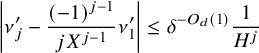

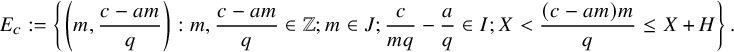

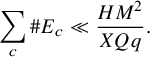

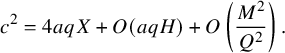

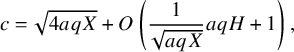

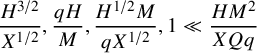

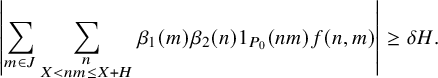

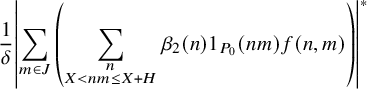

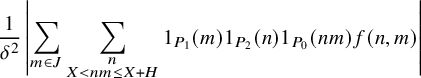

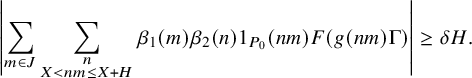

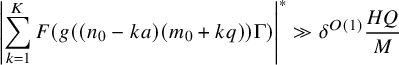

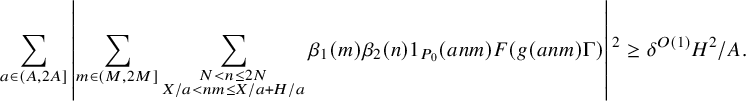

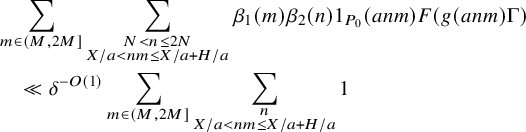

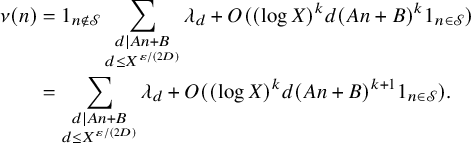

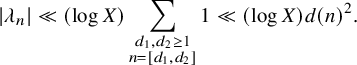

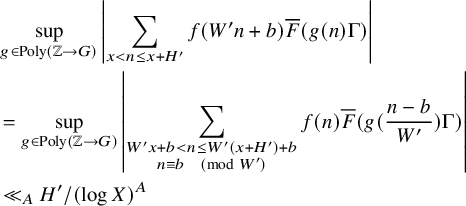

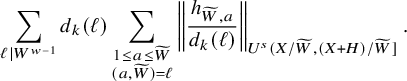

Our treatment of type

![]() $I_2$

minor arc sums is more novel. A model case is that of treating the

$I_2$

minor arc sums is more novel. A model case is that of treating the

![]() $d_2$

-type correlation

$d_2$

-type correlation

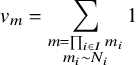

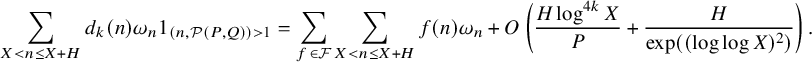

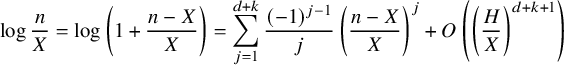

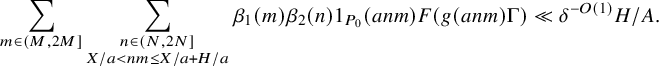

From the definition of the divisor function

![]() $d_2$

, we can expand this sum as a double sum

$d_2$

, we can expand this sum as a double sum

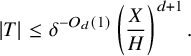

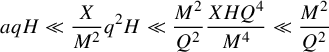

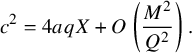

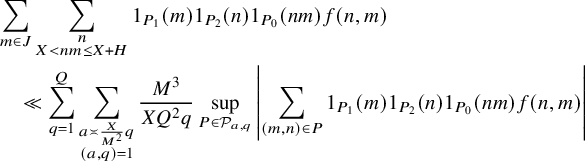

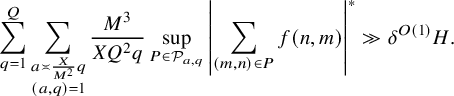

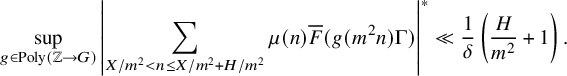
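As a toy sanity check of this expansion, consider the hypothetical values $X = 10$, $H = 3$ and the trivial weight $F \equiv 1$ (chosen only for illustration; they are far from the ranges of interest in this paper). Since $d_2(n)$ counts the pairs $(n_1, n_2)$ of natural numbers with $n_1 n_2 = n$, summing $d_2$ over $X < n \leq X+H$ counts the pairs of natural numbers whose product lies in $(X, X+H]$:

$$ \begin{align*} \sum_{10 < n \leq 13} d_2(n) = d_2(11) + d_2(12) + d_2(13) = 2 + 6 + 2 = 10 = \#\{ (n,m) \in \mathbb{N}^2 : 10 < nm \leq 13 \}. \end{align*} $$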

We are not able to obtain nontrivial estimates on such sums in the regime

![]() $H \leq X^{1/3}$

. However, when

$H \leq X^{1/3}$

. However, when

![]() $H \geq X^{1/3+\varepsilon }$

, it turns out by elementary geometry of numbers that the hyperbola neighborhood

$H \geq X^{1/3+\varepsilon }$

, it turns out by elementary geometry of numbers that the hyperbola neighborhood

![]() $\{ (n,m) \in \mathbb {Z}^2: X < nm \leq X+H\}$

may be partitionedFootnote

3

into arithmetic progressions

$\{ (n,m) \in \mathbb {Z}^2: X < nm \leq X+H\}$

may be partitionedFootnote

3

into arithmetic progressions

![]() $P \subset \mathbb {Z}^2$

that mostly have nontrivial length; see Theorem 8.1 for a precise statement. This decomposition lets us efficiently decompose the sum (1.27) into short sums of the form

$P \subset \mathbb {Z}^2$

that mostly have nontrivial length; see Theorem 8.1 for a precise statement. This decomposition lets us efficiently decompose the sum (1.27) into short sums of the form

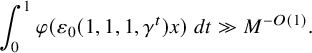

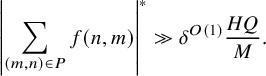

that turn out to exhibit cancellation for most progressions P in the type

![]() $I_2$

minor arc case, mainly thanks to the quantitative Leibman theorem (Theorem 2.7) and a corollary of the Vinogradov lemma (Corollary 2.4); see Section 8.

$I_2$

minor arc case, mainly thanks to the quantitative Leibman theorem (Theorem 2.7) and a corollary of the Vinogradov lemma (Corollary 2.4); see Section 8.

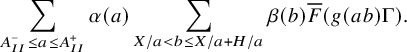

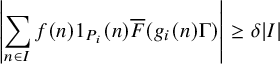

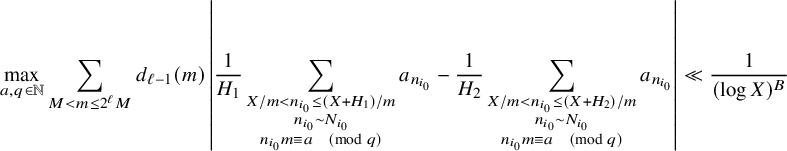

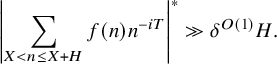

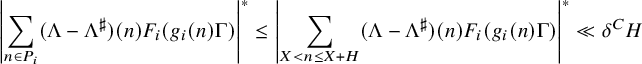

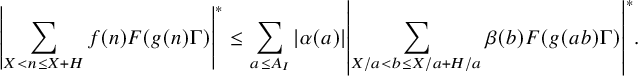

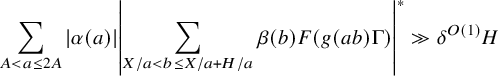

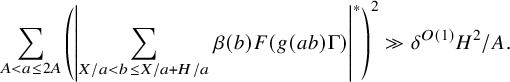

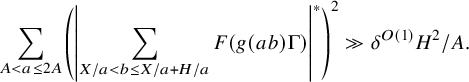

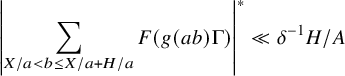

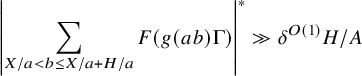

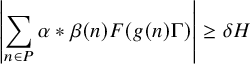

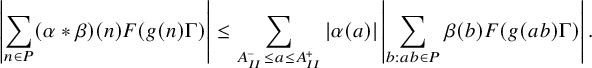

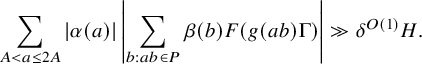

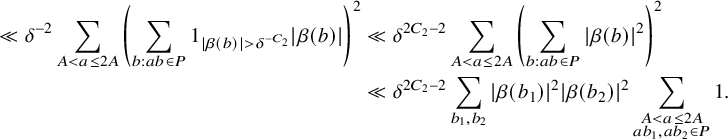

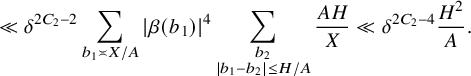

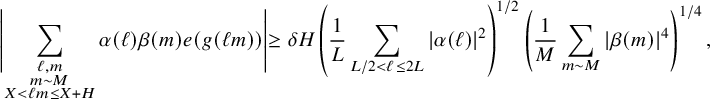

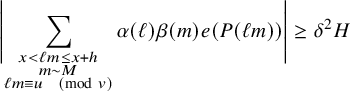

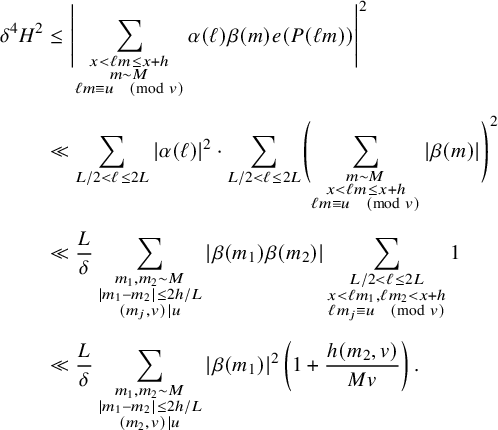

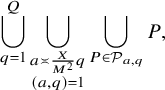

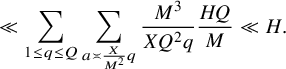

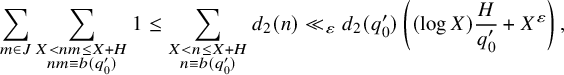

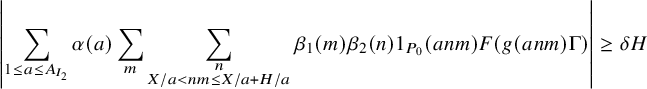

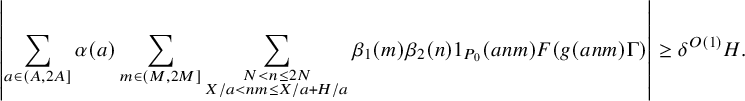

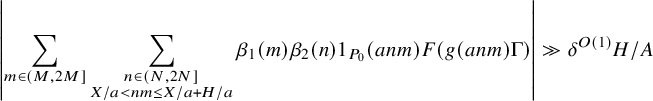

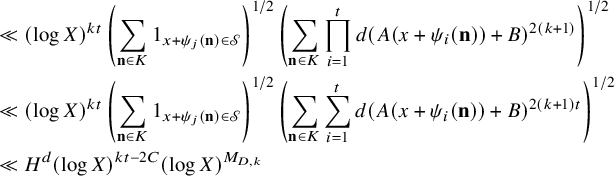

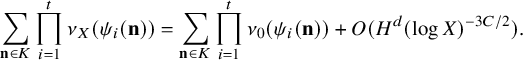

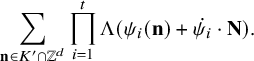

It remains to handle the contribution of type

![]() $II$

sums, which are of the form

$II$

sums, which are of the form

which we can expand as

$$ \begin{align} \sum_{A_{II}^- \leq a \leq A_{II}^+} \alpha(a) \sum_{X/a < b \leq X/a + H/a} \beta(b) \overline{F}(g(ab) \Gamma). \end{align} $$

$$ \begin{align} \sum_{A_{II}^- \leq a \leq A_{II}^+} \alpha(a) \sum_{X/a < b \leq X/a + H/a} \beta(b) \overline{F}(g(ab) \Gamma). \end{align} $$

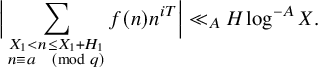
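The expansion can be verified in two steps. The following is a sketch, starting from the correlation of $\alpha*\beta$ with the nilsequence over $(X, X+H]$, assuming that $\alpha*\beta(n) = \sum_{ab = n} \alpha(a) \beta(b)$ denotes Dirichlet convolution, and using that $\alpha$ is supported on $[A_{II}^-, A_{II}^+]$:

$$ \begin{align*} \sum_{X < n \leq X+H} \alpha*\beta(n) \overline{F}(g(n) \Gamma) &= \sum_{a, b \colon X < ab \leq X+H} \alpha(a) \beta(b) \overline{F}(g(ab) \Gamma) \\ &= \sum_{A_{II}^- \leq a \leq A_{II}^+} \alpha(a) \sum_{X/a < b \leq X/a + H/a} \beta(b) \overline{F}(g(ab) \Gamma). \end{align*} $$

The second step only uses that, for $a > 0$, the condition $X < ab \leq X+H$ is equivalent to $X/a < b \leq X/a + H/a$.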

To treat these sums, we can use a Fourier decomposition and the equidistribution theory of nilmanifolds to reduce (roughly speaking) to treating the following three special cases of these sums:

- Type $II$ major arc sums that are essentially of the form

  $$ \begin{align*}\sum_{X < n \leq X+H} \alpha*\beta(n) n^{iT}\end{align*} $$

  for some real number $T = X^{O(1)}$ of polynomial size (one can also consider generalizations of such sums when the $n^{iT}$ factor is twisted by an additional Dirichlet character $\chi$ of bounded conductor).

- Abelian type $II$ minor arc sums in which $F(g(n)\Gamma) = e(P(n))$ is a polynomial phase that does not ‘pretend’ to be a character $n^{iT}$ (or more generally $\chi(n) n^{iT}$ for some Dirichlet character $\chi$ of bounded conductor) in the sense that the Taylor coefficients of $e(P(n))$ around $X$ do not align with the corresponding coefficients of such characters.

- Nonabelian type $II$ minor arc sums, in which $g(n) \Gamma$ is highly equidistributed in a nilmanifold $G/\Gamma$ arising from a nonabelian nilpotent group $G$, and $F$ exhibits nontrivial oscillation in the direction of the center $Z(G)$ of $G$ (which one can reduce to be one-dimensional).
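To make the comparison with the characters $n^{iT}$ in the abelian case more concrete, here is a sketch of their Taylor expansion around $X$. It assumes the standard convention $e(x) = e^{2\pi i x}$ and writes $n = X + h$ with $0 < h \leq H < X$; the variable $h$ is introduced here only for illustration.

$$ \begin{align*} n^{iT} = e\left( \frac{T}{2\pi} \log (X+h) \right) = X^{iT} \, e\left( \sum_{j \geq 1} \frac{(-1)^{j-1} T}{2\pi j X^j} h^j \right). \end{align*} $$

Thus, on the interval $(X, X+H]$, the character $n^{iT}$ is itself a phase whose $j$-th Taylor coefficient in $h$ is $(-1)^{j-1} T / (2\pi j X^j)$. If $H \leq X^{\theta}$ for some fixed $\theta < 1$ (an assumption consistent with the exponents discussed in this section) and $|T| \leq X^{C}$, then $|T| (H/X)^j = o(1)$ once $j > C/(1-\theta)$, so only boundedly many of these coefficients matter. Roughly speaking, the abelian minor arc case is the one in which the coefficients of $P(X+h)$ do not match this pattern; the precise formulation is left to the later sections.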

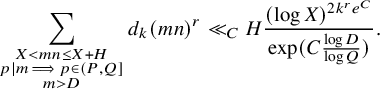

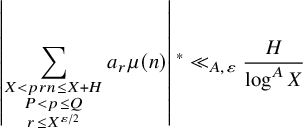

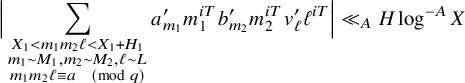

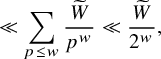

One can treat the contribution of the type

![]() $II$

major arc sums by applying Perron’s formula and Dirichlet polynomial estimates of Baker–Harman–Pintz [Reference Baker, Harman and Pintz4] in the regime, so long as one actually has a suitable triple convolution (with one of the subfactors having well-controlled correlations with

$II$

major arc sums by applying Perron’s formula and Dirichlet polynomial estimates of Baker–Harman–Pintz [Reference Baker, Harman and Pintz4] in the regime, so long as one actually has a suitable triple convolution (with one of the subfactors having well-controlled correlations with

![]() $n^{iT}$

); see Lemma 3.5. As already implicitly observed by Zhan [Reference Zhan64], this case can be treated (with favorable choices of parameters) for any of the three functions

$n^{iT}$

); see Lemma 3.5. As already implicitly observed by Zhan [Reference Zhan64], this case can be treated (with favorable choices of parameters) for any of the three functions

![]() $\mu , \Lambda , d_k$

in the case

$\mu , \Lambda , d_k$

in the case

![]() $\theta = 5/8$

. As observed in [Reference Matomäki and Teräväinen50], in the case of the Möbius function

$\theta = 5/8$

. As observed in [Reference Matomäki and Teräväinen50], in the case of the Möbius function

![]() $\mu $

, it is possible to lower

$\mu $

, it is possible to lower

![]() $\theta $

to

$\theta $

to

![]() $3/5$

and still obtain triple convolution structure after removing a small exceptional error term from

$3/5$

and still obtain triple convolution structure after removing a small exceptional error term from

![]() $\mu $

(which is responsible for the final discorrelation bounds not saving arbitrary powers of

$\mu $

(which is responsible for the final discorrelation bounds not saving arbitrary powers of

![]() $\log X$

); see Lemma 4.5.

$\log X$

); see Lemma 4.5.

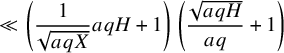

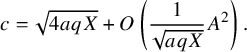

It remains to treat the contribution of nonabelian and abelian type $II$ minor arc sums. It turns out that we will be able to establish good estimates for such sums (1.28) in the regime

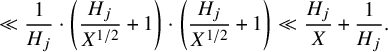

In this regime, the inner intervals $(X/a, X/a+H/a]$ in equation (1.28) have nonnegligible length (at least $X^\varepsilon$), and furthermore they exhibit nontrivial overlap with each other ($(X/a, X/a+H/a]$ will essentially be identical to $(X/a', X/a'+H/a']$ whenever $a' = \left(1 + O\left(X^{-\varepsilon} \frac{H}{X}\right)\right) a$).
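The overlap claim comes down to a short computation. As a sketch, write $a' = (1+\delta) a$ with $|\delta| \ll X^{-\varepsilon} H/X$ (the symbol $\delta$ is introduced here only for illustration) and compare the left endpoints and the lengths of the two intervals:

$$ \begin{align*} \frac{X}{a'} - \frac{X}{a} = -\frac{\delta}{1+\delta} \cdot \frac{X}{a} = O\left( X^{-\varepsilon} \frac{H}{a} \right), \qquad \frac{H}{a'} - \frac{H}{a} = -\frac{\delta}{1+\delta} \cdot \frac{H}{a} = O\left( X^{-\varepsilon} \frac{H}{X} \cdot \frac{H}{a} \right). \end{align*} $$

Assuming $H \leq X$, both quantities are smaller than the interval length $H/a$ by a factor of at least $X^{-\varepsilon}$, so the two intervals agree outside a negligible proportion of their elements.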

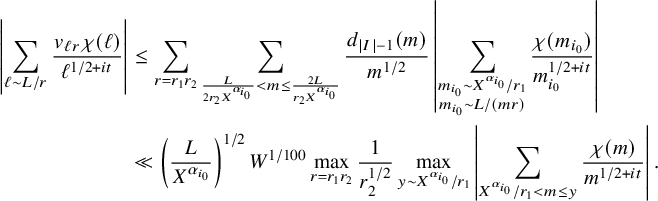

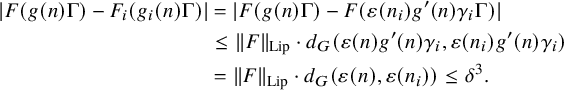

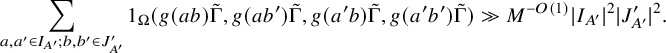

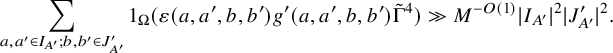

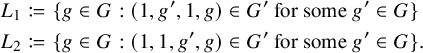

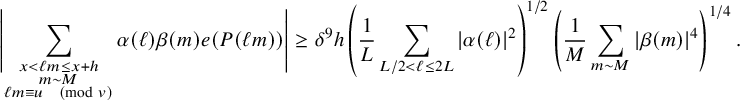

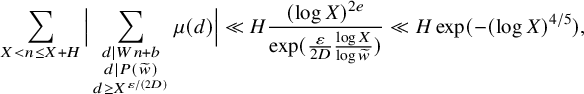

As a consequence, many of the dilated nilsequences

![]() $b \mapsto \overline {F}(g(ab) \Gamma )$

appearing in equation (1.28) will correlate with the same portion of the sequence

$b \mapsto \overline {F}(g(ab) \Gamma )$

appearing in equation (1.28) will correlate with the same portion of the sequence

![]() $\beta $

. To handle this situation, we introduce a nilsequence version of the large sieve inequality in Proposition 2.15, which we establish with the aid of the equidistribution theory for nilsequences, as well as Goursat’s lemma. The upshot of this large sieve inequality is that for many nearby pairs

$\beta $

. To handle this situation, we introduce a nilsequence version of the large sieve inequality in Proposition 2.15, which we establish with the aid of the equidistribution theory for nilsequences, as well as Goursat’s lemma. The upshot of this large sieve inequality is that for many nearby pairs

![]() $a',a$

there is an algebraic relation between the sequences

$a',a$

there is an algebraic relation between the sequences

![]() $b \mapsto g(ab)$

and

$b \mapsto g(ab)$

and

![]() $b \mapsto g(a'b)$

, namely that one has an identity of the form

$b \mapsto g(a'b)$

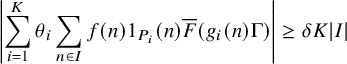

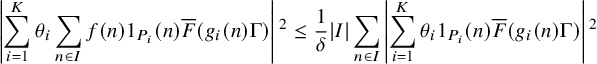

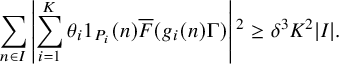

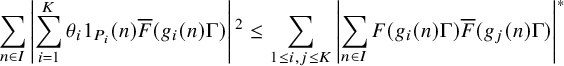

, namely that one has an identity of the form