Series Preface

The Elements in Forensic Linguistics series from Cambridge University Press publishes across four main topic areas: (1) investigative and forensic text analysis; (2) the study of spoken linguistic practices in legal contexts; (3) the linguistic analysis of written legal texts; (4) explorations of the origins, development and scope of the field in various countries and regions. The Language of Fake News by Jack Grieve and Helena Woodfield is situated in the first of these and examines whether there are observable linguistic differences between fake news and genuine news articles.

Jack Grieve is best known for bringing quantitative and corpus methods to a variety of linguistic questions, such as dialectology, language change, and authorship analysis. His quantitative work always brings linguistic insights and understanding to the fore, and here he joins Helena Woodfield, whose principal area of research is fake news, to bring this approach to the natural experiment provided by the Jayson Blair episode at The New York Times.

Jayson Blair was accused of, and admitted to, falsifying a large number of news stories at The New York Times, and a subsequent inquiry by the paper identified the bad, and by implication the good, stories for the relevant period of his employment. For Grieve and Woodfield this creates parallel corpora ripe for exploration. Their principal insight is that as fake news and real news have distinctive communicative functions, respectively to deceive and to inform, the language used to carry these functions will also differ. In this Element they set out to identify and describe those differences. The implication of this approach is that linguistic analysis, independently of fact-checking approaches, can make an important contribution to fake news detection.

This sets up a new research agenda for linguistic fake news detection, which can be further explored, perhaps in future Cambridge Elements.

Series Editor

1 Introduction

1.1 The Problem of Fake News

There is no simple definition of fake news. The term can be used to refer to any news that is suspected to be inaccurate, biased, misleading, or fabricated. This includes news originating from across the news media landscape, from anonymous blogs to mainstream newspapers. The term is often used by the public, politicians, and the news media to attack news, journalists, and news outlets deemed to be problematic. It is even common for allegations of fake news made by one outlet to be labelled as fake news by another. During the 2016 American presidential election, Hillary Clinton, the Democrats, and the mainstream press claimed that fake news from social media accounts, right-wing news outlets, and foreign governments was propelling Donald Trump to victory, while Trump, the Republicans, and the right-wing press claimed that Clinton, the Democrats, and the mainstream press were spreading fake news about these and other scandals to undermine Trump’s campaign (Allcott & Gentzkow 2017). Fake news became the focus of the news, with news organisations arguing over whose news was faker.

Given this situation, how can the public judge what news is real and what news is fake? We cannot trust the news media to lead public inquiry into its own practices, nor can we trust the government or industry to monitor the news media, as they are most often the subject of the news whose validity is being debated. Academic research on fake news is therefore especially important, but it is also difficult to conduct (Lazer et al. 2018). Researchers must define fake news in a specific and meaningful way and then apply this definition to identify instances of real and fake news for analysis. This is a challenging task. Any piece of news communicates a wide range of information, some of which can be true, some of which can be false, and all of which can be an opinion. Often the only way to verify if news is real or fake is to conduct additional independent investigation into the events being covered. Crucially, even if fake news is defined precisely and in a way that is acceptable to most people, researchers must still label individual pieces of news as fake that a substantial proportion of the public believe are real. The study of fake news therefore quickly becomes politicised, further eroding public confidence, and encouraging researchers to define fake news in such a way that data can be collected easily and uncontroversially, often moving research further away from the central problem of fake news.

To understand the central problem of fake news, it is important to consider the history of fake news. Although most current research focuses on the very recent phenomenon of online fake news, reviewing the history of deception in the news media can help researchers understand what communicative events are considered fake news, how these different forms of fake news are related, and which types of fake news should be of greatest scholarly concern. The history of fake news can also point us to specific cases for further analysis, depoliticising the study of fake news by allowing researchers to focus on news coverage of events that are of less immediate consequence.

The history of fake news is almost as old as the history of news itself. In Europe, the precursors to the modern newspaper were the avvisi, handwritten political newsletters from Italy that circulated across the continent during the sixteenth and seventeenth centuries (Infelise 2002). Unlike personal letters, the avvisi were intended to report general information and to be widely read. Unsurprisingly, we can find reports almost immediately of authorities questioning the veracity of the information being presented and the motives of their authors, who were generally anonymous. For example, in 1570, Pope Pius V executed one suspected author, Niccolo Franco, for defaming the church. Alternatively, the Italian scholar Girolamo Frachetta considered whether the avvisi could be used in wartime to spread false information to the enemy in his 1624 political treatise A Seminar on the Governance of the State and of War (Infelise 2002).

Indeed, there are many cases of fake news being used to mislead foreign populations and governments, exactly as Frachetta suggested. Many historical examples come from the Cold War, especially the Soviet use of dezinformatsiya, the purposeful spread of false information, which was often spread via the foreign press (Cull et al. 2017). The word disinformation only entered the English language in the 1980s due to increased awareness of so-called active measures, a wide range of strategies used by the USSR for undermining foreign countries, including fake news. One of the most famous of these initiatives was ‘Project Infektion’, which involved the Soviets spreading rumours that the United States had engineered AIDS, initiated by a letter published in an obscure Indian newspaper in 1983, titled ‘AIDS may invade India: Mystery disease caused by US experiments’ (Boghardt 2009).

It is perhaps more common, however, for fake news during wartime to be directed at one’s own citizens – to encourage support for war and to manage expectations. For example, during World War I, the Committee on Public Information was established in the United States to influence the media and shape popular opinion, especially as President Woodrow Wilson had campaigned on staying out of the war (Hollihan 1984). Similar strategies were used to promote the Vietnam and Iraq wars. Most notably, we now know that reporting on the presence of weapons of mass destruction in Iraq after 9/11 was fabricated to build support for the war, especially to help Tony Blair justify the United Kingdom joining the coalition (Robinson 2017).

Fake news is not new, but the nature of fake news has shifted in recent years due to the growth of digital communication and social media (Lazer et al. 2018). The Internet has changed the medium over which news is published and accessed by the public. Consequently, people now have access to a much wider range of news sources, which disseminate information continuously throughout the day, often from very specific perspectives, while social media provides a platform for people worldwide to share and discuss the news. One important effect of this new approach to the production and consumption of news is that people can focus exclusively on the information they want to hear, leading to what has become known as the echo chamber (Del Vicario et al. 2016). The rise of blogging and social media has also given the opportunity to people from outside the mainstream news media to spread their own message, including potentially fake news.

This type of online fake news has been the focus of much concern in recent years, including in the lead up to the 2016 US Election. Perhaps the most notorious example was the ‘Pizzagate’ conspiracy theory, which went viral in 2016, after Wikileaks published the personal emails of John Podesta, Clinton’s campaign manager (Kang 2016). Extremist websites and social media accounts reported that the emails contained coded messages related to a satanic paedophile ring involving high-ranking officials, which allegedly met at various locations, including the Comet Ping Pong pizzeria in Washington. Provoked by these reports, a man travelled to the nation’s capital from North Carolina, shooting at the pizzeria with a semi-automatic rifle. The Covid pandemic also offers numerous examples of this type of online fake news (see van Der Linden et al. 2020). For example, social media has been used to spread fake news about alternative treatments for Covid that are potentially deadly, including ingesting bleach (World Health Organization 2020).

Although it seems reasonable to assume that the amount of fake news has increased in recent years, we should not assume that the effect of fake news has become worse. Most notably, reporting from the mainstream news media leading up to the second Iraq War, which predates the rise of social media, was arguably far more damaging than anything that has happened since. In some ways, social media has even made it more difficult for certain types of fake news to spread by increasing public scrutiny of the news media and by amplifying alternative perspectives. An important example is coverage of the murder of George Floyd in May 2020. This event was filmed by a teenager named Darnella Frazier, who was walking to the store for groceries. She posted the video on social media, giving rise to widespread public protest against police brutality towards African Americans – a topic often overlooked by mainstream news media, which can be considered an example of fake news by omission (Wenzel 2019). In recognition of the importance of this act, which was only made possible by the existence of these non-traditional platforms for sharing information, Frazier received a Pulitzer Prize in 2021.

Overall, the problem of fake news is long-standing, pervasive, and potentially of great consequence, even leading to war. The study of fake news is therefore of true societal importance. Fake news is also very diverse, driven by a wide range of specific political, social, economic, and individual factors. In addition, it is clear that fake news, at least in its most troubling instantiations, is not simply characterised by inaccurate reporting: it is intentionally dishonest, designed to deceive as opposed to inform the public.

In this Element, we therefore adopt the view that fake news is most productively analysed as deceptive news, in contrast with most academic research on fake news, which focuses on false news. In other words, we define fake news based on the intent of the author: as opposed to real news, whose primary goal is to inform readers about new and important information that the journalist believes to be true, the goal of fake news is to deceive the public, to make them believe information that the journalist believes to be false. This approach not only forces us to concentrate on the most problematic forms of fake news, but, as we argue, it provides a more meaningful basis for the analysis of the language of fake news, which is the subject of this Element.

1.2 Fake News and Linguistics

Understanding the language of fake news is key to understanding the problem of fake news because most cases of fake news are language. Fake news can involve pictures and other media, but usually an instance of fake news consists primarily of a news text – an article in a newspaper, a report on the radio, a post on social media, an interview on television. The news text is the basic communicative unit of journalism and consequently the basic unit of analysis in most research on fake news. The main questions we pursue in this Element are therefore as follows. How can the language of fake news be analysed in a meaningful way? How can we describe linguistic differences between news texts that are real and news texts that are fake? And how can we understand why this variation exists?

Crucially, however, we should not assume that the language of real and fake news differs systematically. There has been considerable research in natural language processing (Oshikawa et al. 2020) where machine learning models are trained to automatically distinguish between real and fake news based on patterns of language use, often achieving relatively high levels of accuracy. This may seem like evidence of variation between the language of real and fake news, but it is important to consider these results with care, especially as this research prioritises the maximisation of classification accuracy over the explanation of patterns of language use. There are two basic reasons for caution. First, the data upon which these systems are trained and evaluated may not isolate variation between real and fake news, especially given the inherent challenges associated with defining these terms and identifying cases of each. Second, these systems often focus more on variation in language content than variation in language structure: the identification of topical trends that tend to distinguish between real and fake news is different from the identification of stylistic variation in the language of real and fake news regardless of topic. In other words, research in natural language processing focuses on the language of fake news, but it does not necessarily focus on the linguistics of fake news.

Although linguistic perspectives on fake news are limited, fake news is fundamentally a linguistic phenomenon, and its analysis should therefore be grounded in linguistic theory. To address this basic limitation with fake news research, we propose a framework for the linguistic analysis of fake news in this Element. Our framework is based on functional theories of language use, drawing especially on research on register variation (Biber & Conrad 2019), which has repeatedly demonstrated that differences in communicative purpose and context are reflected in linguistic structure. In addition, our framework is based on the distinction between misinformation and disinformation (Rubin 2019), which we believe is crucial for understanding what fake news is and why the language of real and fake news should differ. By bringing together these two perspectives for the first time, we provide a basis for the linguistic analysis of fake news – for collecting real and fake news texts, for comparing their grammatical structure, and for understanding why this structure varies depending on whether their author intends to inform or deceive.

To demonstrate how our framework can be used to better understand the language of fake news, in this Element, we focus on one especially famous episode drawn from the history of the news media. This case involves Jayson Blair, a young reporter at The New York Times, who published a series of fabricated news articles in the early 2000s (Hindman 2005). In addition to its notoriety, this case is especially well suited for the linguistic analysis of fake news for three reasons. First, there is a relatively large amount of real and fake news available from one author and from the same time period, which has been validated through an extensive investigation by The New York Times (Barry et al. 2003) and acknowledged by Blair himself (Blair 2004). This gives us a controlled context for the study of fake news, where we have substantial amounts of comparable and valid real and fake news data, allowing us to effectively isolate the effects of deception on the language of one journalist. Second, we know much about why Blair wrote fake news, including from his own account and the account of The New York Times, giving us a basis for explaining differences in language use that we observe. Third, this case is relatively uncontroversial, as it is old enough that everyone can agree that the articles in question were faked, regardless of their political outlook – an important factor that has often limited the societal impact of fake news research. In addition, the case reminds us that fake news can be found across the news media, including in one of the most respected newspapers in the world.

The analysis of the language of fake news grounded in linguistic theories and methods also opens up the possibility for a wide range of applications. Although our goal is not to develop systems for fake news detection, the most obvious application of our research is to support the language-based identification of fake news. Most notably, this includes considerable current research in natural language processing concerned with developing systems for automatically classifying real and fake news at scale via supervised machine learning (Oshikawa et al. 2020). As noted above, these systems can achieve good results, but they are not designed to explain why the language of real and fake news differs, and they appear to focus more on the content of fake news than its linguistic structure (Castelo et al. 2019). Our framework is not intended to supplant these types of systems, but it can offer an explanation for why they work, or why they might appear to work, which is necessary to justify the real-world application of such tools. Furthermore, the identification of a principled set of linguistic features for the analysis of real and fake news can be used to enhance existing machine learning systems, which tend to be based on relatively superficial feature sets like the use of individual words and word sequences. These types of insights can be especially useful to improve performance on more challenging cases, which also seem likely to be the most important cases of fake news. In addition, our framework can directly inform how fake news corpora should be compiled in a principled manner for the robust training and evaluation of fake news detection systems, which is a major limitation in much current research on fake news (Asr & Taboada 2018).
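To make concrete what is meant by feature sets based on individual words and word sequences, the following minimal sketch counts word unigrams and bigrams in a single sentence. It is an illustration only: the tokeniser, the function names, and the sample sentence are assumptions introduced here, not part of any system discussed in the studies cited above.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase a text and split it into word tokens (a deliberately crude tokeniser)."""
    return re.findall(r"[a-z']+", text.lower())


def ngram_counts(text, n):
    """Count every sequence of n consecutive word tokens in a text."""
    tokens = tokenize(text)
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


# Hypothetical sample sentence, invented for illustration.
sample = "The reporter said the source said nothing."
unigrams = ngram_counts(sample, 1)  # individual words
bigrams = ngram_counts(sample, 2)   # two-word sequences

print(unigrams.most_common(2))
print(bigrams.most_common(3))
```

Features of this kind record which words and word pairs occur, and so are closely tied to topic; they say nothing directly about grammatical structure, which is why they are described above as relatively superficial.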

The framework we propose is also of direct value to the detailed discursive analysis of individual cases of fake news of sufficient importance to warrant close attention. For example, in a legal context, empirical analysis presented as evidence in court is often required to be based on accepted scientific theory (Allen 1993). Until now, however, there has been no clear explanation for why the linguistic structure of real and fake news should be expected to differ systematically. Our framework also potentially provides a basis for extending discourse analytic methods for deception detection more generally in forensic linguistics, which is relevant across a wide range of areas, including for police interviews (Picornell 2013). In addition, our framework can be of value for supporting work in investigative linguistics, which is an emerging field of applied linguistics that focuses on the application of methods for the study of language use to make sense of real-world issues currently in the news (Grieve & Woodfield 2020).

More generally, understanding the language of fake news, and how it differs from the language of real news, is important for understanding the language of the news media, and, through this language, the biases and ideologies that underlie any act of journalism. The current fake news crisis reflects a growing and general distrust of the news media that cannot be rectified simply by developing systems for automatically detecting real and fake news with a reasonable degree of accuracy. An article that obliquely expresses the editorial view of a newspaper in a context that appears to be purely informational is not necessarily fake, but it has real societal consequences. Being able to recognise the motivations of journalists and news outlets through the analysis of their language is an important part of reading the news intelligently and holding the news media accountable. Studying the discourse of fake news is therefore part of the greater enterprise of understanding the expression of information, opinion, and prejudice in the news media – understanding how the language of the news media shapes the world around us and our perceptions of it. We therefore hope that our framework will also be valuable for the critical analysis of the news media (van Dijk 1983).

Finally, our framework and its application can also help us better understand the psychology of fake news (Pennycook & Rand 2019, 2021). Why do people create, share, and believe fake news? These are basic questions whose answers are central to understanding the phenomenon of fake news in the modern world. Most notably, as we demonstrate through our analysis of the case of Jayson Blair and The New York Times, variation in the linguistic structure of fake news reflects the specific communicative goals of authors who consciously write fake news and the production circumstances in which fake news is produced. Appreciating the linguistic structure of fake news can also potentially help us understand why some fake news is more likely to be believed and to be shared, which may be especially important for combating the spread of fake news online.

1.3 Overview

Fake news is a long-standing problem, but it is receiving unprecedented attention today due to the rise of online news and social media, as well as growing distrust of the mainstream news media. Although fake news most commonly involves news texts, the study of the language of fake news has been limited, with researchers focusing more on the automated classification of true and false news than on explaining why the structure of real and fake news differs. It is therefore crucial to extend our understanding of the language of fake news, especially through the detailed analysis of real and fake news texts collected in a principled manner and grounded in linguistic theory.

Given this background, the goal of this Element is threefold. First, we introduce a new linguistic framework for the analysis of the language of fake news, focusing on understanding how the linguistic structure of fake news differs from real news, drawing especially on the distinction between misinformation and disinformation and the concept of register variation. Second, based on this framework, we conduct a detailed analysis of the language of fake news in the famous case of Jayson Blair and The New York Times to identify and explain systematic differences in the grammatical structure of his real and fake news. Third, we consider how our results can help address the problem of fake news, including by informing research in natural language processing and psychology.

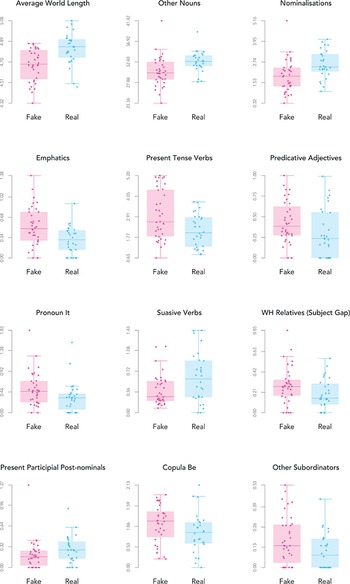

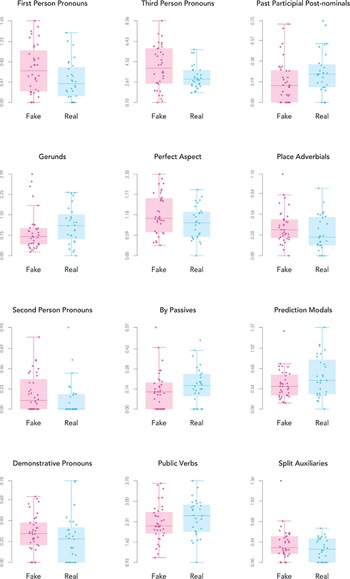

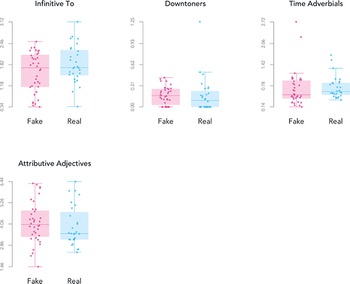

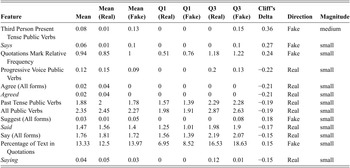

The remainder of this Element is organised as follows. In Section 2, we present a critical review of research on the language of fake news, before presenting our theoretical framework for the linguistic analysis of fake news, which directly addresses limitations with previous research. In Section 3, we review the case of Jayson Blair, including the background, the scandal, the investigation, and the aftermath. In Section 4, we describe the corpus of Jayson Blair’s writings that we collected, which is the basis of this study. In Section 5, we present our main linguistic analysis, discussing a range of grammatical features that vary across Blair’s real and fake news. We find that Blair’s fake news is written in a less dense style than his real news and with less conviction. We then offer explanations for these findings based on specific factors that led Blair to write fake news. Finally, in Section 6, we consider the implications of our research for our understanding of fake news more generally.

2 Analysing the Language of Fake News

The language of fake news has received considerable attention in recent years, especially in natural language processing, where the focus has been on the development of machine learning systems for the automatic classification of real and fake news based on language content. In this section, we critically review recent research on the language of fake news, arguing that it has been limited by the definition of fake news as false news and the lack of control for other sources of linguistic variation. To address these issues, we propose a framework for the linguistic analysis of fake news that is grounded in theories of disinformation and register variation. This framework provides a basis for describing the linguistic differences between real and fake news and explaining why these differences exist.

2.1 Defining Fake News

The first major challenge in the study of the language of fake news is to define fake news in such a way that instances of fake news texts can be identified and collected (Tandoc et al. 2018; Asr & Taboada 2019). There is, however, no simple or standard definition of fake news, which is better understood as the product of a range of practices that are related to the validity of information being shared by the news media. Researchers must therefore define the specific form of fake news they are interested in studying. Any coherent definition of fake news can be the starting point for meaningful empirical research, but researchers naturally tend to focus on certain types of fake news, depending both on the perceived societal importance of that type of fake news and the feasibility of collecting news texts of that type in a reliable and efficient manner – considerations that are often at odds with each other.

The vast majority of research on the language of fake news has been conducted in natural language processing and has focused on the development of tools for automatically distinguishing real and fake news (e.g. Conroy et al. 2015; Rubin et al. 2015; Shu et al. 2017; Asr & Taboada 2018; Bondielli & Marcelloni 2019; Oshikawa et al. 2020; Zhou & Zafarani 2020). In general, this research defines fake news as false news – untrue information disseminated by the news media. This has also been the definition that has been adopted in the very limited amount of linguistic research on this topic in discourse analysis (e.g. Igwebuike & Chimuanya 2021). Crucially, this definition of fake news is based on the underlying truth of the information being conveyed: to study fake news from this perspective, comparable corpora of true and false news must be compiled. For example, to develop a machine learning system capable of distinguishing between true and false news requires that many true and false news texts be collected so that the system can be trained and tested on this dataset.
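The train-and-test workflow implied by this veracity-based definition can be sketched as follows. This is a toy illustration under stated assumptions, not a reconstruction of any cited system: the classifier is a simple multinomial naive Bayes model over word counts with add-one smoothing, and the handful of labelled texts, the labels 'true' and 'false', and all function names are invented for the example.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase a text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def train(labelled_texts):
    """Collect word counts and document counts for each veracity label."""
    word_counts, doc_counts = {}, Counter()
    for text, label in labelled_texts:
        doc_counts[label] += 1
        word_counts.setdefault(label, Counter()).update(tokenize(text))
    return word_counts, doc_counts


def classify(text, word_counts, doc_counts):
    """Return the label with the highest naive Bayes log score (add-one smoothing)."""
    vocab = set().union(*word_counts.values())
    total_docs = sum(doc_counts.values())
    scores = {}
    for label, counts in word_counts.items():
        total_words = sum(counts.values())
        score = math.log(doc_counts[label] / total_docs)
        for word in tokenize(text):
            score += math.log((counts[word] + 1) / (total_words + len(vocab)))
        scores[label] = score
    return max(scores, key=scores.get)


# Hypothetical labelled texts, invented for illustration only.
train_set = [
    ("officials confirmed the budget figures on monday", "true"),
    ("the council published the audited report", "true"),
    ("shocking secret cure that doctors hide", "false"),
    ("secret plot revealed by anonymous insider", "false"),
]
test_set = [
    ("the council confirmed the report", "true"),
    ("anonymous insider reveals shocking secret", "false"),
]

word_counts, doc_counts = train(train_set)
correct = sum(classify(text, word_counts, doc_counts) == label for text, label in test_set)
accuracy = correct / len(test_set)
print(accuracy)
```

Note that the model separates these toy texts purely on vocabulary, and that its labels are only as reliable as whoever assigned them; both points anticipate the limitations of the veracity-based approach discussed in this section.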

A major advantage of this veracity-based approach to fake news research is that it allows fake news to be collected with relative ease. Most commonly this involves drawing on the work of fact-checking organisations and mainstream news media organisations that identify fake news, including both instances of fake news and sources of fake news (Asr & Taboada 2018). This information is then used as a basis for compiling a corpus of fake news texts. These texts most commonly include passages from news articles (e.g. Vlachos & Riedel 2014; Wang 2017), social media posts (e.g. Shu et al. 2017, 2020; Wang 2017; Santia & Williams 2018), and complete news articles (e.g. Rashkin et al. 2017; Horne & Adali 2017; Santia & Williams 2018; Castelo et al. 2019; Lin et al. 2019; Bonet-Jover et al. 2021). Alternatively, some studies have used crowdsourcing to conduct their own fact-checking, having people rate the veracity of social media posts (e.g. Mitra & Gilbert 2015) or news text (e.g. Pérez-Rosas et al. 2018). Crucially, these approaches not only make it possible for large collections of fake news to be compiled, but they maintain a certain degree of researcher objectivity, which is especially important in such a politicised domain. These collections of fake news are then generally contrasted with collections of true news, often collected from mainstream news media (e.g. Horne & Adali 2017; Rashkin et al. 2017; Pérez-Rosas et al. 2018; Castelo et al. 2019; Bonet-Jover et al. 2021).

In addition to the advantages of a veracity-based approach to the study of fake news, there are disadvantages. Most obviously, classifying news based on a true–false distinction inaccurately reduces the veracity of a news text down to a single binary variable. News texts, however, generally convey a large amount of information, some of which can be true and some of which can be false. Some fact-checking organisations and researchers have acknowledged this limitation, classifying fake news on a scale (e.g. Rashkin et al. 2017; Wang 2017), although such an approach still assumes veracity can be reduced to a single quantitative variable.

Analysing the language of fake news based on veracity also requires that someone judges what qualifies as true and false news. Relying on external fact-checking services is a common and convenient solution to this problem, but while it may appear to increase the objectivity of a study, by limiting the involvement of the researcher at this stage of data collection, it actually immediately politicises the study, linking the research directly to the policies of the fact-checking organisation. Furthermore, these policies are not always consistent or accessible, making it difficult for the validity and biases of such research to be assessed, even by the researchers themselves. Fact-checking organisations may even be invested in the dissemination of fake news.

Finally, the veracity-based approach risks taking focus away from the type of deceptive fake news that is generally of greatest societal concern. People are not primarily worried about false news, as inaccuracies might be unintentional or inconsequential, but about deceptive news that is intended to manipulate readers, especially for establishing forms of political, social, and economic control (Gelfert 2018). It is important to consider the full range of practices encompassed by the term fake news, but we must not lose sight of those forms of fake news that are most problematic, even if they are inevitably more difficult to study. We certainly should not assume that natural language processing systems trained to identify false news can be used to identify deceptive news with similar levels of accuracy, especially as deceptive news is presumably the type of fake news that is most difficult for humans to identify.

The distinction between misinformation and disinformation is especially relevant to this discussion, because it helps us better understand the range of phenomena referred to as fake news, and because it helps us better understand what types of fake news should be of greatest concern. Specifically, misinformation is defined as false information, whereas disinformation is defined as deceptive information (Stahl 2006; Rubin 2019). The distinction between information and misinformation is therefore grounded in the concept of veracity, defined independently of the knowledge or intent of the individual, whereas the distinction between information and disinformation is grounded in the concept of deception, defined relative to the knowledge and intent of the individual. Essentially, this terminology reflects the difference between a falsehood and a lie.

It is important to acknowledge that the distinction between misinformation and disinformation pre-dates the rise of modern concerns about online fake news, and traditionally disinformation was recognised as the greater problem (Fallis 2009). For example, Fetzer (2004: 231) wrote that

The distinction between misinformation and disinformation becomes especially important in political, editorial, and advertising contexts, where sources may make deliberate efforts to mislead, deceive, or confuse an audience in order to promote their personal, religious, or ideological objectives.

Fetzer was not referring to fake news directly but highlighting how deception is of far greater concern than inaccuracy. Nevertheless, the veracity-based approach to the study of fake news, which dominates current research, tends to focus implicitly on misinformation, at least in part because it is far easier to identify instances of misinformation than disinformation in the news media, for example, by drawing on the work of fact-checking organisations. This approach allows researchers to work at the scale required to train and test modern machine learning systems, but it has effectively moved focus away from the central problem of fake news – deceptive and dishonest news practices.

In most discussions of misinformation and disinformation, misinformation is presented as the larger category, including both information that is accidentally false and information that is purposely false (Stahl 2006; Tandoc et al. 2018). In other words, disinformation is seen as a type of misinformation – purposeful misinformation. It is clearly necessary to draw a distinction between misinformation that qualifies and does not qualify as disinformation: people can inadvertently communicate falsehoods when they intend to share accurate information, and this should not be confused with lying. For example, a journalist might report false information obtained from a source, who the journalist believed was telling the truth. This qualifies as misinformation, but not disinformation, which only occurs when the journalist lies, reporting information they believe to be false.

It is wrong, however, to insist that all disinformation is misinformation, as people can also state the truth when they intend to deceive, if they are misinformed themselves. If Democritus, who believed the earth was flat, tried to convince his student that the earth was round (e.g. as part of a lesson), he would be deceiving his student, but inadvertently telling the truth. Situations such as these are presumably rare in journalism, although sustained disinformation campaigns would likely result in the production of some truthful disinformation over time. Perhaps a more common form of truthful disinformation in the news media is deception by omission, where the information contained in a news text is true, but important information is purposely excluded to manipulate the reader (Wenzel 2019). Most notably, this type of fake news would include selective and biassed reporting. For example, the American news media has been criticised for reporting mass shootings differently, excluding relevant information, depending on the race of the shooter (Duxbury et al. 2018). Real news could even potentially contain purposeful falsehoods and contradictions, so as to allow true information to be communicated that could not otherwise be published openly (Strauss 1952).

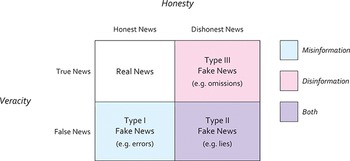

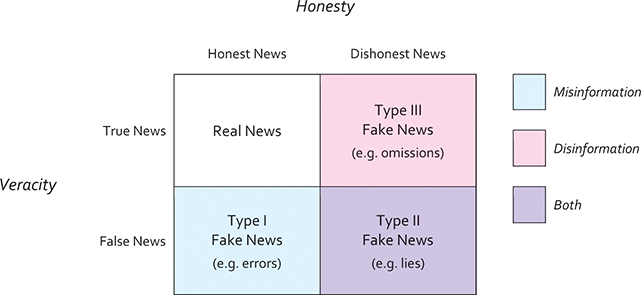

The relationship between misinformation, disinformation, and fake news is illustrated in Figure 1, which shows the overlap between disinformation and misinformation along our two main dimensions of fake news: veracity and honesty (see also Tandoc et al. 2018). Prototypical real news is both honest and true: generally, the goal of journalists is to share information that is true and that they believe is true. However, there are three distinct ways news texts can diverge from this expectation, creating three broad categories of fake news. Type I Fake News is unintentionally false news, which occurs when journalists report information they believe to be true, but which is false. Type I Fake News therefore qualifies as misinformation but not disinformation. Alternatively, Type II Fake News is intentionally false news, which occurs when journalists purposely report information they believe to be false. Type II Fake News therefore qualifies as both misinformation and disinformation. Finally, Type III Fake News is news that is true but is nevertheless intended to deceive, including fake news by omission or selective reporting. Type III Fake News therefore qualifies as disinformation but not misinformation.

Figure 1 A typology of fake news
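The typology in Figure 1 can be read as a simple lookup over its two dimensions. The following is a minimal sketch in Python, offered only as an illustration of the categories described above; the names `NewsType`, `classify_news`, `is_misinformation`, and `is_disinformation` are hypothetical and do not come from any existing tool. It assumes that veracity and honesty are each already known as a yes/no judgement for a given news text, which in practice is the difficult part.

```python
from enum import Enum


class NewsType(Enum):
    """The four cells of the veracity-by-honesty typology (hypothetical names)."""
    REAL = "Real news"
    TYPE_I = "Type I Fake News"      # false but honest
    TYPE_II = "Type II Fake News"    # false and dishonest
    TYPE_III = "Type III Fake News"  # true but dishonest


def classify_news(is_true: bool, is_honest: bool) -> NewsType:
    """Map a (veracity, honesty) pair onto one category of the typology."""
    if is_true and is_honest:
        return NewsType.REAL
    if not is_true and is_honest:
        return NewsType.TYPE_I
    if not is_true and not is_honest:
        return NewsType.TYPE_II
    return NewsType.TYPE_III


def is_misinformation(news_type: NewsType) -> bool:
    """Misinformation is defined by veracity alone: the news is false."""
    return news_type in {NewsType.TYPE_I, NewsType.TYPE_II}


def is_disinformation(news_type: NewsType) -> bool:
    """Disinformation is defined by intent alone: the news is meant to deceive."""
    return news_type in {NewsType.TYPE_II, NewsType.TYPE_III}
```

For example, `classify_news(is_true=True, is_honest=False)` returns `NewsType.TYPE_III`, which counts as disinformation but not as misinformation.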

Our opinion is that research on fake news should primarily focus on disinformation, especially Type II Fake News – news that was intended to deceive its readership into believing information the journalist does not believe is true – as this is the type of fake news that we believe is of greatest societal concern. We also believe it is important not to conflate these different types of fake news: studies should either focus on one type of fake news, and compare it to real news, or distinguish between different types of fake news. In our analysis of the case of Jayson Blair and The New York Times, we therefore focus on comparing Type II Fake News, where Blair purposely published false information, to real news, where he purposely told the truth. Consequently, our framework and our study differ from most research on the language of fake news, which tends to focus on false news, effectively conflating Type I and Type II Fake News, obscuring the difference between misinformation and disinformation.

2.2 Language Variation and Fake News

The second major challenge in the study of the language of fake news is collecting comparable real and fake news texts for analysis. In general, research on the language of fake news, including fake news detection in natural language processing, is based on the comparison of patterns of language variation across texts that have been classified as real and fake. If this research is to identify actual differences between real and fake news, these texts must otherwise be as comparable as possible. We know, however, that there are many other factors that also naturally cause language variation across news texts, including variation in register, author, and dialect. Consequently, once fake news has been defined in a clear and practical manner, it is still necessary to identify real news for comparison that allows for these other sources of linguistic variation to be somehow controlled. The challenge is to build corpora that allow us to isolate variation between real and fake news.

Most importantly, to study the language of fake news, it is necessary to control for register variation, that is, variation based on communicative context and purpose (Biber & Conrad 2019). No matter how fake news is defined, we must contrast otherwise comparable registers of real and fake news. For example, if real news is collected from traditional newspapers and fake news is collected from blogs, it would be unclear if any observed differences in language use are related to differences between real and fake news or to differences between newspapers and blogs, which are associated with different communicative purposes and production circumstances, and which therefore show distinct patterns of grammatical variation (Biber 1988; Grieve et al. 2010). Specifically, blog writing is more informal than newspaper writing for a wide range of reasons, independent of its status as real or fake news. We would also likely find clear topical differences between these two registers of news, especially, for example, if fake news from fringe outlets online is compared to real news from the mainstream press.

This type of register imbalance is common in fake news datasets used in natural language processing. For example, the LIAR dataset (Wang 2017), which has been used in many studies (e.g. Shu et al. 2017; Aslam et al. 2021), consists of news statements that were scored for veracity by a major fact-checking organisation. The register of these statements, however, varies substantially, including statements drawn from news reports, campaign speeches, and social media posts. No information is provided on how these registers were selected or whether the distribution of true and false statements is balanced across these categories, nor is register variation generally taken into account during analysis. Similarly, the Buzzfeed fake news dataset (Silverman et al. 2017), which has also been used in many studies (e.g. Shu et al. 2017; Mangal & Sharma 2021), was designed to allow for real and fake news to be compared between mainstream news outlets and extremist left- and right-wing websites. The dataset, however, is accompanied by descriptive statistics showing that data from mainstream news is rated at over 90 per cent true, whereas data from extremist websites is rated at around 50 per cent true. Fake news identification systems trained on such datasets may achieve high levels of accuracy, but this classification would likely be driven primarily by broad register differences between the texts selected to represent real and fake news as opposed to whether a text was real or fake, especially when fake news is largely concentrated in one register.
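One way to check a dataset for this kind of imbalance before training on it is to tabulate veracity labels by register. The sketch below is illustrative only: the function name `label_share_by_register` is hypothetical, and it assumes each text has already been annotated with a register and a label, which datasets such as those discussed above do not necessarily provide.

```python
from collections import Counter


def label_share_by_register(records, label="fake"):
    """Return, for each register, the share of texts carrying `label`.

    `records` is an iterable of (register, label) pairs. This input format
    is an assumption made for illustration, not a property of any dataset.
    """
    totals = Counter()
    hits = Counter()
    for register, text_label in records:
        totals[register] += 1
        if text_label == label:
            hits[register] += 1
    return {register: hits[register] / totals[register] for register in totals}


# Invented toy records, used only to show the shape of the check.
toy_records = [
    ("newspaper", "real"),
    ("newspaper", "real"),
    ("blog", "fake"),
    ("blog", "real"),
]
shares = label_share_by_register(toy_records)
```

If the shares differ sharply between registers, a classifier trained on the data can score well simply by recognising register, so any reported accuracy should be interpreted with that confound in mind.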

A related issue is that much research on the language of fake news tends to focus only on part of the news media landscape, especially non-traditional online sources, often associated with extreme political viewpoints, as the Buzzfeed fake news dataset clearly illustrates. This type of fake news is convenient to study because these are the sources that most fact-checking organisations target, and because labelling news from such sources as fake is less likely to be questioned, at least within the scientific community. There is no reason to doubt that fake news, including genuine disinformation, originates from these sources, but it is wrong to assume that these are the only sources of fake news, as our case of Jayson Blair and The New York Times and many of the examples we have considered thus far illustrate. In many ways, these are not even the most serious sources of fake news, because texts from non-traditional outlets are often obviously fake to a large proportion of the public, and because their status as potential fake news can often be inferred based entirely on source. Mainstream news media is generally excluded from fake news research, except as the source of true news comparison data. As a result, most fake news research does not generalise to fake news published by the mainstream news media, as fake news detection systems are trained to treat all mainstream news as real, even though this is the type of fake news that has the potential to be far more significant and difficult to detect.

In addition to register variation, it is also important to control other forms of linguistic variation when collecting comparable examples of real and fake news for analysis. For example, research in stylometry has shown that each author has their own style, with different sets of linguistic features distinguishing between the unique varieties of language used by individuals (Grieve 2007). Certain features used by one author to create real or fake news may therefore be different from other authors, introducing possible confounds in large-scale corpus-based research on fake news that does not attempt to control for individual differences.

Furthermore, we know the social background of authors more generally affects their writing style (Grieve 2016), so even taking large random samples of real and fake news written by many authors will not necessarily allow for sociolinguistic variation to be controlled. For example, it seems likely that when fake news is drawn from fringe online sources, and real news is drawn from the mainstream press, there will be clear social differences between the two sets of authors, especially in terms of education level and socioeconomic status, which are well-known correlates of dialect variation (Tagliamonte 2006). The apparent differences observed between real and fake news in previous studies therefore may also reflect differences in the social backgrounds of the authors being compared, as opposed to the status of the news as real or fake. For example, if authors of real news are primarily professional authors, while authors of fake news are primarily amateurs, we would expect broad differences in the language in which they write, regardless of whether or not they are telling the truth. Some research has begun to address these types of issues at least obliquely. For example, Potthast et al. (2017) found that hyper-partisan left- and right-wing news appears to share a style associated with the language of extremism. However, controlling for social variation in the authors of real and fake news is a topic that has received remarkably little attention in the literature, and represents another major limitation of current research on the language of fake news.

2.3 A Framework for the Linguistic Analysis of Fake News

To summarise, there are two key limitations with previous research on the language of fake news: researchers do not generally analyse the most problematic forms of fake news, focusing on misinformation as opposed to disinformation; and researchers do not generally control for other forms of linguistic variation, including variation in register, authorship, and dialect. These issues stem in part from reliance on fact-checking services for identifying fake news, as well as from the lack of a clear and meaningful definition of fake news. Consequently, researchers often miss the central problem of fake news or fail to isolate the distinction between real and fake news. To address these issues, we propose a framework for the linguistic analysis of fake news that is grounded in theories of disinformation and register variation.

We believe the language of fake news can best be understood as a form of register variation between information and disinformation in the news media. If we define fake news based on the communicative purpose of the journalist, as our focus on disinformation demands, the theories and methods of register analysis provide us with a basis for analysing the language of fake news in a meaningful way. Research on register variation has repeatedly shown that the use of a wide range of grammatical features varies systematically across contexts depending on their function (Halliday 1978; Chafe & Tannen 1987; Biber 1988; Biber & Conrad 2019). Because the communicative goals of people change across contexts, along with the inherent communicative affordances and constraints associated with those contexts, the structure of discourse varies systematically across contexts as well. Grammatical differences between registers are not arbitrary, but directly reflect how people vary their language for effective communication in different situations. As Halliday (1978: 31–2) writes, ‘the notion of register is at once very simple and very powerful. It refers to the fact that the language we speak or write varies according to the type of situation’. For example, when people tell stories, they tend to use many past tense verbs, because they are recounting events that took place in the past (Biber 1988). These grammatical differences exist because the communicative needs of people vary across different contexts.

For this reason, we should expect that the language of real and fake news also varies systematically, if subtly, so long as real and fake news are defined based on the intent of the journalist, for instance, to inform or deceive. Register variation can therefore provide a basis for describing and explaining grammatical differences in the language of real and fake news, specifically between information and disinformation. This link between the concepts of disinformation and register variation is the basic theoretical insight that underlies our framework for the linguistic analysis of fake news.

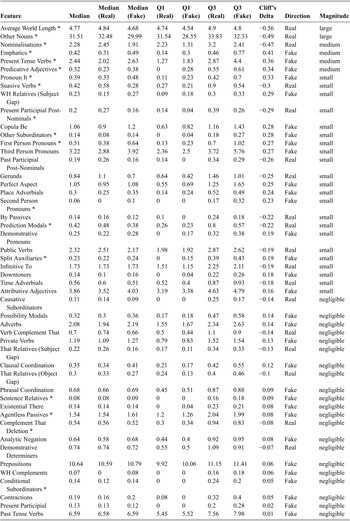

Our framework draws most directly on the type of quantitative corpus-based register analysis developed by Douglas Biber and his colleagues, known as multidimensional analysis (Biber & Conrad 2019). Most notably, Biber (1988) presented an extensive analysis of linguistic variation across registers of written and spoken British English, identifying clear grammatical differences across registers, showing that these patterns derive from variation in communicative purpose and context. In total, six aggregated dimensions of register variation were extracted from the corpus based on a multivariate statistical analysis of sixty-seven grammatical features, whose relative frequencies were measured across each of the individual texts in the corpus. These dimensions were then interpreted functionally based on the most strongly associated features and texts. For example, the first dimension, which accounts for the largest amount of variance across the feature set, was interpreted as reflecting a distinction between more informational and more involved forms of communication. On the one hand, texts from registers like academic and newspaper writing were found to be relatively informational and formal, characterised by frequent use of features like nouns and noun modifiers, which are associated with a relatively dense style of communication. On the other hand, texts from registers like face-to-face and telephone conversations were found to be relatively involved and informal, characterised by frequent use of features like verbs, pronouns, and adverbs, which are associated with relatively casual and spontaneous discourse, and consequently lower levels of informational density. For example, conversations tend to contain many pronouns due to natural communicative constraints on the production of spontaneous speech, which greatly limit opportunities for individuals to compose complex noun phrases. Instead, individuals tend to repeatedly reference entities under discussion using pronouns, adding new information with each reference. Other dimensions of register variation identified in Biber (1988) are related to factors like narrativity, persuasion, and abstractness.
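The basic measurement underlying this kind of analysis, the relative frequency of a feature in an individual text, can be sketched in a few lines of Python. This is a toy illustration and not the tagging procedure used in Biber (1988): the word lists in `FEATURE_LEXICONS` are hypothetical stand-ins for grammatical features, and a real study would rely on a part-of-speech tagger and a much larger feature set. Normalising per 1,000 word tokens is likewise an assumption made here so that texts of different lengths can be compared.

```python
import re

# Hypothetical, deliberately tiny word lists standing in for grammatical
# features; a real analysis would identify features with a tagger.
FEATURE_LEXICONS = {
    "first_person_pronouns": {"i", "me", "my", "mine", "we", "us", "our", "ours"},
    "third_person_pronouns": {"he", "she", "they", "him", "her", "them", "his", "their"},
    "prepositions": {"of", "in", "on", "at", "by", "for", "with", "from"},
}


def tokenize(text):
    """Lowercase the text and split it into alphabetic word tokens."""
    return re.findall(r"[a-z]+", text.lower())


def relative_frequencies(text, per=1000):
    """Return each feature's frequency per `per` word tokens in one text."""
    tokens = tokenize(text)
    if not tokens:
        # An empty text has no measurable rate; report zero for every feature.
        return {name: 0.0 for name in FEATURE_LEXICONS}
    return {
        name: per * sum(token in lexicon for token in tokens) / len(tokens)
        for name, lexicon in FEATURE_LEXICONS.items()
    }
```

Applying `relative_frequencies` to every text in a corpus yields a text-by-feature table, which is the kind of input a multivariate analysis of register variation would then summarise into dimensions.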

The extensive and interpretable set of grammatical features identified in Biber (1988) provides us with a basis for identifying meaningful differences between the linguistic structure of real and fake news that are directly related to variation in communicative function. These features have been validated through a long history of use for the empirical analysis of register variation in the English language, with features and clusters of features having been linked to a wide range of different communicative functions. These functional linguistic patterns can therefore help us understand the motivation for linguistic variation observed when comparing real and fake news. Notably, comparable feature sets have also been compiled for other languages, including Somali, Korean, and Tuvaluan (Biber 1995).

Drawing on insights from register analysis therefore allows us to address another basic limitation with current research on the language of fake news – reliance on relatively superficial feature sets, like the use of individual words and word sequences (i.e. n-grams), which are easy to count, but more difficult to explain, especially from a grammatical as opposed to a topical perspective. By describing differences between real and fake news based on an established set of grammatical features with clear functional relevance, we can overcome this limitation, and identify differences in the linguistic structure of real and fake news that are driven by differences in the communicative intent of journalists – to produce news texts that are intended to inform and news texts that are intended to deceive.

In addition to conceiving of variation between real and fake news as a form of register variation, our framework also acknowledges that there are other sources of linguistic variation in the news media, which might obscure differences between real and fake news if ignored. When compiling fake news corpora, it is especially important to control for other sources of register variation, as there are clear linguistic differences across news registers, reflecting variation in communicative purpose and context. For example, it would seem to be much easier for people to distinguish between different types of news texts, such as newspaper articles and blog posts, than it is to identify whether the journalist is honest or dishonest. This is why fake news identification is such a challenging task. Controlling register variation can be achieved either by focusing on one news register or by factoring register variation into the analysis directly. Controlling register variation also helps control dialect variation, as the social background of people who produce different types of news inevitably differs, especially in terms of education and class. For example, it seems likely that writers at mainstream newspapers are generally more highly educated than social media posters. Such differences will have clear linguistic consequences that must somehow be controlled in any rigorous study of fake news. Finally, it is important to control directly for authorship to account for individual differences of style, ideally by considering the language of one or more authors who are known to have produced both real and fake news.

Overall, by treating disinformation in the news media as a form of register variation, by drawing on a rich and interpretable grammatical feature set, and by controlling for other important sources of linguistic variation, we believe it is possible not only to identify actual differences in the language of real and fake news but to offer explanations for why these differences exist, based on variation in the communicative goals of journalists, as well as the production circumstances in which they write. In this way, we address a final limitation with current research on the language of fake news – the lack of any underlying theory for why real and fake news should differ or any explanation for why observed differences in the language of real and fake news exist. Providing the basis for truly understanding the language of fake news is the primary goal of this Element. Furthermore, our framework should be of direct value to work on fake news detection in natural language processing, because it provides a theoretical basis for detection, which can help improve the performance of these tools, especially in the most challenging cases, and because it provides justification for the application of these tools in real-world settings.

Finally, it is important to acknowledge that while there is good reason to assume systematic linguistic differences exist between news media texts that are intended to inform and deceive, this assumption does not extend to news media texts that are true and false. This is because misinformation may be shared without the knowledge of the journalist, precluding the possibility that differences in language structure arise from differences in communicative purpose. In fact, from this perspective, it is unclear why we should expect systematic linguistic differences between true and false news to exist at all, further calling into question standard approaches to the analysis of fake news based on fact-checked data. Unfortunately, the study of disinformation is more challenging than the study of misinformation, as it requires knowledge of the intent of an author, which is often inaccessible to researchers. Nevertheless, as the case of Jayson Blair and The New York Times demonstrates, such cases do exist and can be identified if we take the time to review the history of the news media. In the next three chapters, we therefore focus on this one important case of fake news, not only to demonstrate the application of our framework but to begin to truly understand the language of fake news.

3 Jayson Blair and the New York Times

The New York Times was established in 1851 and has long been regarded as one of the most important newspapers in the world – for many the newspaper of record in the United States. The Times has won over 130 Pulitzer Prizes, more than any other newspaper, and is ranked in the top 20 newspapers in the world and the top 3 newspapers in the United States by circulation (Cision Media Research 2019). Despite its reputation, like most newspapers, The Times has been subject to criticism for the honesty and accuracy of its reporting. In this Element, we focus on one such case, the Jayson Blair scandal, a famous example of fake news at The Times from the early 2000s. This event was described by a large number of news reports (Barry et al. 2003; Hernandez 2003a, 2003b; Kelley 2003; Kurtz 2003a, 2003b, 2003c, 2003d, 2003e; Leo 2003; Magden 2003; New York Times 2003a, 2003b; Newsweek 2003; Steinberg 2003a, 2003b, 2003c, 2003d; Woo 2003; Keller 2005; Barron 2006; Calame 2006; Scocca 2006), and books (Blair 2004; Mnookin 2004) from this time, as well as limited academic research (Hindman 2005; Patterson & Urbanski 2006). In this section, we introduce this case by presenting a synthesis of these accounts.

Jayson Blair was born in 1976, the son of a federal official and a schoolteacher. He grew up outside Washington D.C., in Centreville, Virginia. As a student, he worked at The Sentinel, the student newspaper at Centreville High School, and The Diamondback, the student newspaper at the University of Maryland, where he pursued a degree in journalism. During his studies, he interned at The Boston Globe, The Washington Post, and, for ten weeks in the summer of 1998, The New York Times. His work during this final internship, over which time he wrote nineteen articles, led to Blair being offered a position at The Times before he graduated. He accepted in June 1999, when he was only twenty-three years old, joining the newspaper’s Police Bureau, instead of finishing his studies.

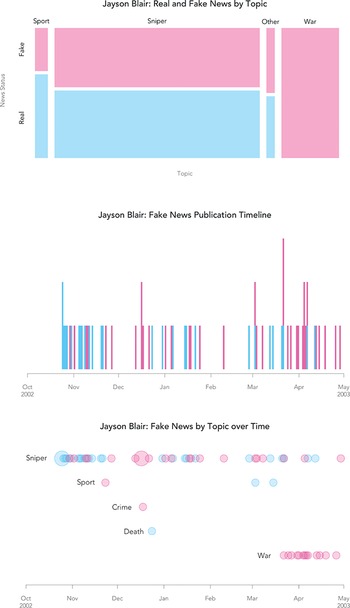

Over the next four years at The Times, Blair published more than 600 articles on a wide range of topics. His career progressed quickly. Soon after joining the newspaper, in November 1999, he was promoted from intern to intermediate reporter, moving to the Metropolitan Desk, where he gained a reputation for being highly productive and charismatic. In January 2001, Blair was promoted again to full-time reporter. After a brief stint on the Sports Desk, he was moved to the prestigious National Desk in October 2002 by the newspaper’s two top editors, Howell Raines and Gerald Boyd. Along with several other reporters, Blair was tasked with covering the D.C. Sniper Attacks, the biggest news story in the nation, which was unfolding near his hometown. Blair appeared to make the most of this opportunity over the next year, including publishing front-page features. In recognition of his success, Blair was assigned to lead coverage on the trial, following the arrest of John Muhammad and his teenage accomplice Lee Malvo. In March 2003, Blair was also assigned to report on the Iraq War from a domestic perspective as part of the newspaper’s Nation at War series. Most notably, he covered the story of Jessica Lynch, an American soldier who had famously been captured and then rescued in Iraq. In recognition of his accomplishments, his editors at The Times were considering promoting him once again in April 2003, but these would be among the last articles Blair would write.

Despite his rapid rise, Blair’s reporting had long been the subject of concern for some of his editors at The Times. Jonathan Landman, who became Blair’s editor at the Metropolitan Desk not long after he joined the newspaper, appears to have been Blair’s most vocal critic. In late 2000, Joseph Lelyveld, the executive editor at The Times, expressed concern over the number of errors being published by the newspaper, prompting Landman to conduct a review of corrections coming from his staff at the Metropolitan Desk. Although Landman had misgivings when Blair was promoted to full-time reporter in January 2001, he did not oppose the promotion. His concerns grew, however, not long after the September 11 attacks, when Blair published an article that was found to contain many factual errors. Blair claimed he was distracted by the loss of his cousin in the attack on the Pentagon. He also wrote a letter of apology to Landman. Nevertheless, in January 2002, Landman submitted a highly critical evaluation of Blair, highlighting his extremely high correction rate, resulting in a two-week leave of absence. By April 2002, Landman had become so concerned that he emailed senior colleagues recommending they terminate Blair’s contract. Instead Blair was asked to take another leave of absence. When he returned, Landman took it upon himself to monitor Blair’s output, leading to a reduction both in his publication and correction rates. Blair, however, soon left the Metropolitan Desk, and Landman’s supervision, eventually being assigned to the National Desk, where he covered the D.C. Sniper Attacks and the Iraq War. The increased attention garnered by these new national assignments would soon lead directly to Blair’s undoing.

Although they made the front page, Blair’s articles on the D.C. Sniper were highly controversial, attracting criticism from both inside and outside The Times. For example, in his first front-page article, citing anonymous sources, Blair implied that tensions between law enforcement agencies had led to the interrogation of Muhammad being cut short just as he was about to confess, a claim that was vehemently denied by government officials. In another front-page article, once again citing anonymous sources, Blair reported that Malvo had been the primary shooter, leading to the prosecutor from Virginia calling a press conference to publicly reject his claims. These issues led to renewed scrutiny of Blair’s work, but Blair continued reporting, transitioning to domestic coverage of the Iraq War starting in March 2003. This time he attracted criticism from the San Antonio Express-News. Another young reporter, Macarena Hernandez, who had interned with Blair at The Times five years earlier, noticed that a front-page article by Blair published on 26 April (‘Family Waits, Now Alone, for a Missing Soldier’) contained details very similar to those in her front-page article published by The Express-News on 18 April (‘Texas soldier; Valley mom awaits news of MIA son’), which recounted an interview with the family of this same missing soldier. In response, the editor of The Express-News, Robert Rivard, sent an email to Blair’s editors at The Times on 29 April alleging plagiarism.

The Times immediately started an investigation, but before Raines and Boyd could assemble their team, The Washington Post broke the story. A few days after Blair’s article was published, Hernandez had returned to Los Fresnos. The family’s son had been discovered dead in Iraq. There she happened to discuss Blair with a reporter from The Post, who had also noticed similarities between the two articles. Later that day, Howard Kurtz, the media reporter for The Post, contacted Hernandez for comment. Kurtz published his first report on the scandal on 30 April (‘New York Times Story Gives Texas Paper Sense of Deja Vu’), quoting both Hernandez and Rivard, presumably greatly increasing pressure on The Times and Blair, who would resign the next day.

Blair’s resignation was announced by The Times in an ‘Editors’ Note’ published on 2 May, which also acknowledged plagiarism in his article from 26 April. The Times also published a second short news article reporting on the case written by Jacques Steinberg (‘Times Reporter Resigns After Questions on Article’). The report quotes Raines apologising to the readers of The Times, as well as the family of the dead soldier, for ‘a grave breach of its journalistic standards’, and assuring them that a full investigation was underway:

We continue to examine the circumstances of Mr. Blair’s reporting about the Texas family. In also reviewing other journalistic work he has done for The Times, we will do what is necessary to be sure the record is kept straight.

The reports also discussed the plagiarised article, highlighting Blair’s transgressions. For example, although the article bore a Los Fresnos dateline and reported details about the family’s home, implying that it was written on site, the family claimed that they had never been interviewed by Blair.

A full investigation of the seventy-three articles published by Blair after joining the National Desk in late October 2002 was completed over the next two weeks. The investigation started officially on 5 May, once Dan Barry, a Pulitzer Prize winner who had been with The Times since 1993, agreed to lead it. In addition to Steinberg, he was joined by David Barstow, Jonathan Glater, and Adam Liptak, who were selected by Raines and Boyd. The reporters immediately demanded independence from the editors, whose management style and relationship with Blair were very much in question. This was a controversial decision, as Boyd in particular was legally obliged to read all reports published by The Times. Fortunately, Al Siegal, the assistant managing editor in charge of corrections, who had worked at the newspaper for decades, agreed to oversee the investigation in their place. The investigation culminated with the publication of three articles on 11 May, approved by Siegal, without having been seen by the two top editors at The Times or by the publisher, Arthur Sulzberger Jr, whose family had run the newspaper since 1896. Sulzberger was quoted, however, by the investigation as saying that the scandal was ‘a huge black eye’ for the newspaper.

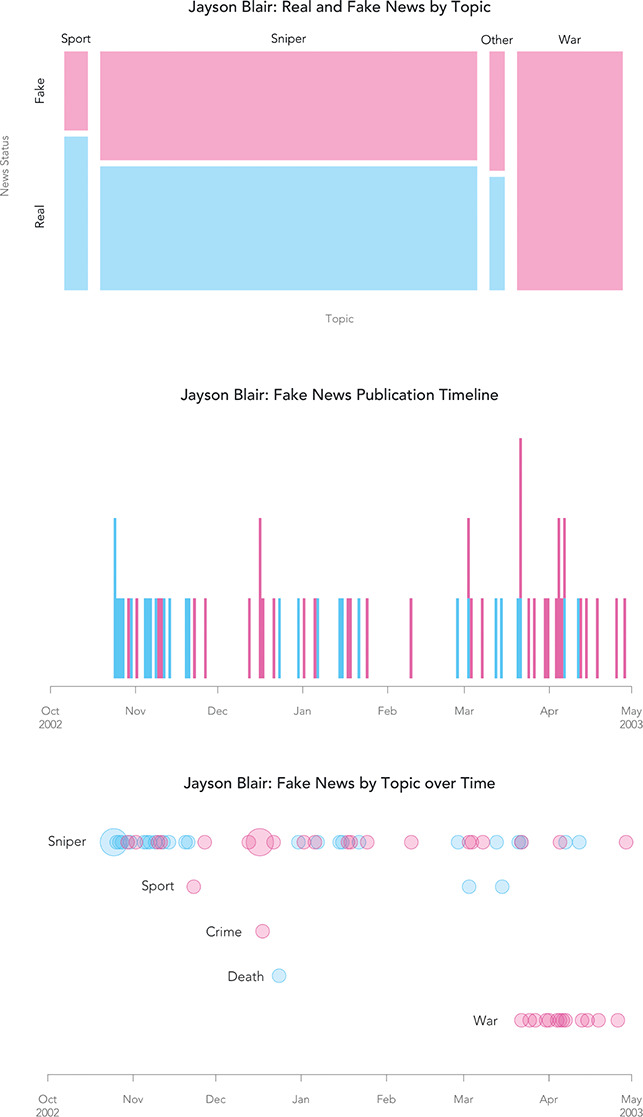

The first article was an ‘Editors’ Note’ (totalling approximately 400 words) acknowledging the extent of the incident, Blair’s resignation, and the investigation, as well as expressing the newspaper’s regret for not identifying Blair’s deceptions sooner. The article claimed that the investigation had found that thirty-seven articles authored by Jayson Blair and published by The Times since October 2002 had been plagiarised or fabricated, including the article from 26 April. The article explained that the investigation focused on Blair’s work over this period because this was when he was moved to the National Desk and consequently given greater freedom – and hence greater opportunity for impropriety. Earlier articles were therefore only being spot-checked. The article also outlined the steps taken by the investigative team, who conducted interviews and examined Blair’s records, including his phone logs and expense reports.

The second article was a report (totalling approximately 7,000 words) written by the five reporters who had led the investigation (‘Times Reporter Who Resigned Leaves Long Trail of Deception’). This article focused especially on describing Blair’s activities since he had joined the National Desk but also contained an extended discussion of Blair’s history at The Times. Notably, the report opened with a frank admission:

A staff reporter for The New York Times committed frequent acts of journalistic fraud while covering significant news events in recent months, an investigation by Times journalists has found. The widespread fabrication and plagiarism represent a profound betrayal of trust and a low point in the 152-year history of the newspaper.

The report then went on to directly acknowledge the extent of Blair’s deception and the range of ways in which he breached the basic ethical standards of journalism.

The reporter, Jayson Blair, 27, misled readers and Times colleagues with dispatches that purported to be from Maryland, Texas and other states, when often he was far away, in New York. He fabricated comments. He concocted scenes. He lifted material from other newspapers and wire services. He selected details from photographs to create the impression he had been somewhere or seen someone, when he had not.

Based on over 150 interviews with Blair’s colleagues and alleged sources, and the examination of a range of business records and emails, as well as reports from other news agencies, the investigation concluded that Blair was responsible for ‘systematic fraud’ over his career at The Times. He had even lied about losing a cousin in the attack on the Pentagon. The report also discussed the circumstances that gave rise to the scandal, primarily identifying issues with Blair’s character, as well as failures by his editors to communicate their concerns about Blair, and a lack of complaints from people who were misrepresented in Blair’s articles.

Most notably, the report found that Blair rarely left New York, including when he was assigned to cover the D.C. Sniper Crisis or to meet families from across the US who had lost sons and daughters in the Iraq War. For example, the report detailed issues with an article from 27 March (‘Relatives of Missing Soldiers Dread Hearing Worse News’), which recounted an interview conducted by Blair with Jessica Lynch’s father in Palestine, West Virginia. Blair described how her father became overwhelmed by emotion as they discussed his missing daughter on the porch of their family home, overlooking tobacco fields and cow pastures. Blair also reported that her brother was in the National Guard, continuing a long tradition of military service in her family. None of this was true. The family home did not overlook such a landscape, her brother was in the Army, and the family did not have a long military record. Blair had never even travelled to West Virginia, despite filing five articles about the Lynch family from the state. Instead, his email and phone records suggested he was in New York all along.

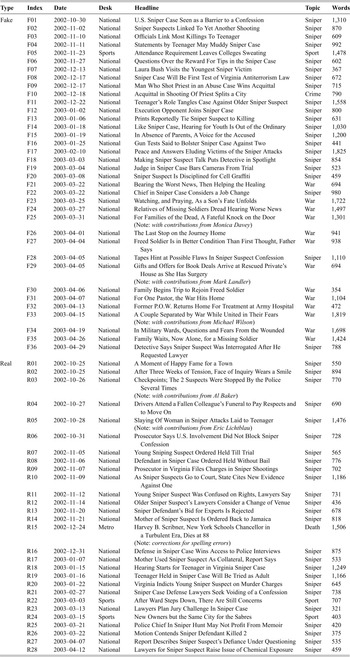

The third article was a report (totalling approximately 7,000 words) presenting the results of the investigation (‘Witnesses and Documents Unveil Deceptions in a Reporter’s Work’). The report focused on thirty-six articles published since late October 2002, excluding the article from 26 April that precipitated the investigation. For each of the thirty-six articles, the date and title were provided as well as a list of inaccuracies, classified into five categories: Denied Reports, Factual Errors, Whereabouts, Plagiarism, and Other Issues (e.g. misattributions, breaches of confidence). In addition, the report listed three articles published before this period in which errors had been identified. This final report provides a detailed record of how the credibility of one of the most important newspapers in the world was tarnished by the acts of one reporter who published a series of articles which today would be considered blatant examples of fake news.

Unsurprisingly, the case also received considerable attention from other news organisations. Reporting by Kurtz, who had broken the story for The Washington Post, was especially influential. On 2 May, the same day The Times publicly acknowledged that Blair had plagiarised The Express-News, Kurtz revealed that Blair had never graduated from the University of Maryland, a fact that was apparently unknown to many of his colleagues at The Times. Then, on 10 May, a day before The Times published the results of its investigation, Kurtz reported that Blair had also fabricated news in 1999 while working as an intern at The Boston Globe. Crucially, this was the first evidence that Blair’s lies were more widespread. Next, on 12 May, Kurtz reported on the reaction of the news media to the scandal, highlighting questions about race and affirmative action – whether Blair had been given preferential treatment for being African American.

Blair had first come to The Times as part of a programme intended to diversify the newsroom, along with three other interns, who notably all went on to have very successful careers in journalism. While Macarena Hernandez would move back to San Antonio, Winnie Hu and Edward Wong still write for The Times. Hu works at the Metropolitan Desk, and Wong, who reported from Baghdad between 2004 and 2007, is now a diplomatic correspondent in Washington.

In their reports, The Times had only addressed the issue of race briefly. Most notably, Boyd, who had led the committee that promoted Blair to full-time reporter, was quoted as saying that race was not an issue:

To say now that his promotion was about diversity in my view doesn’t begin to capture what was going on. He was a young, promising reporter who had done a job that warranted promotion.

Boyd’s relationship with Blair, however, was part of what was being questioned by the news media, especially as Boyd was also African American – as managing editor, the most highly ranked African American in the history of the newspaper at that time. For example, Kurtz quoted John Leo, a columnist from U.S. News and World Report, who had once worked at The Times:

[W]ould this young African-American’s meteoric rise to staff reporter be likely for a white reporter with comparable credentials? It appears as though the Times knew early on that hiring Blair was a dicey proposition.

Similarly, Kurtz quoted an editorial by Newsweek, which claimed that this was also the view of many of his colleagues at The Times:

Internally, reporters had wondered for years whether Blair was given so many chances – and whether he was hired in the first place – because he was a promising, if unpolished, black reporter on a staff that continues to be, like most newsrooms in the country, mostly white.

Race was also addressed by senior columnists at The Times in the coming days, including William Safire, who published an ambiguous editorial titled ‘A Huge Black Eye’, and Bob Herbert, who wrote unambiguously that ‘the race issue in this case is as bogus as some of Jayson Blair’s reporting’.

The Newsweek article also directly questioned why the actions of Raines and Boyd had not been subjected to greater scrutiny by the investigation, especially given rumours that they had lost the confidence of much of the newsroom well before the scandal had come to light. Newsweek implied that Blair’s ‘close mentoring relationship’ with Boyd, including frequent cigarette breaks together, was part of the problem, and that moving a young reporter like Blair to the National Desk was only necessary because Raines and Boyd had driven away so many senior reporters. Part of their strategy was to assign large numbers of reporters to major stories, thereby creating an aggressive environment where journalists competed not only for news but also for the support of their editors. The situation erupted at a staff-wide meeting on 14 May, a few days after the reports were published. Raines took responsibility for the breakdown in journalistic oversight, but staff apparently used the opportunity to attack Raines for how he ran the newsroom. Only a month later, Raines and Boyd would be forced to resign. Bill Keller would take over as executive editor in July 2003, while Raines and Boyd would go on to write books recounting their tenure at The Times. Boyd died of lung cancer in 2006. At his funeral, George Curry, who had worked with Boyd at The St. Louis Post-Dispatch, said that ‘Gerald was a victim of Jayson Blair, not his protector’.

Blair also went on to publish a book in 2004 entitled Burning Down My Masters’ House: My Life at The New York Times. The book opens with a clear admission of guilt:

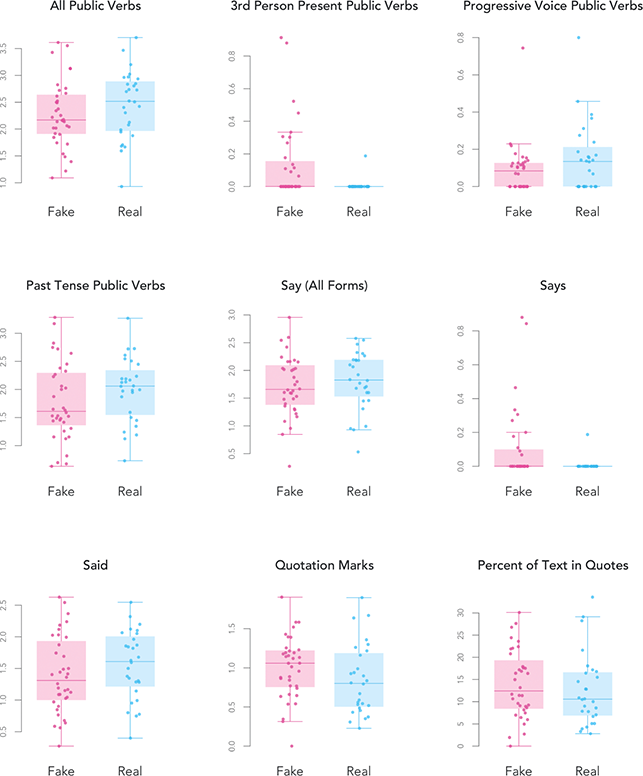

I lied and I lied – and then I lied some more. I lied about where I’d been, I lied about where I’d found information, I lied about how I wrote the story. And these were no everyday little white lies – they were complete fantasies, embellished down to the tiniest detail.