Introduction

One of the primary goals of the Clinical and Translational Science Award (CTSA) program of the National Center for Advancing Translational Sciences is to improve population health by accelerating the translation of scientific discoveries in the laboratory and clinic into practices for the community [1]. As part of this endeavor, every CTSA hub has a pilot project program aimed at funding promising early career investigators and innovative early stage research projects across the translational spectrum. In addition, metrics relating to outcomes of pilot project programs were included in the first wave of National Center for Advancing Translational Sciences-mandated common metrics [2], placing a greater focus on the pilot programs nationwide.

Partnering with other local organizations, the Institute for Integration of Medicine & Science (IIMS), which serves as the academic home of the CTSA at the University of Texas Health Science Center at San Antonio, provides approximately $500,000 in pilot project funding each year to advance the health of our population in South Texas and beyond. From 2009 to 2016, the IIMS and its partners funded 148 pilot projects, including 80 focused on high-impact disease targets and 49 focused on drug, device, or vaccine development. Further, 45% of all funded pilot projects supported research conducted by early stage investigators. As of June 2017, these 148 pilot projects, which typically receive $50,000 in funding, have led to 157 peer-reviewed publications, 67 successfully funded grant applications, and 25 patents or patent applications.

Although the pilot projects program is an important and effective means of promoting the translation of science, the application, review, and selection process is not standardized across CTSA hubs, and processes can vary greatly. As part of our annual evaluation efforts at IIMS, we surveyed previous applicants and pilot project awardees about their experience with the program, eliciting feedback on the application and review process. Applicants and awardees highlighted the timeliness of the funding announcement and the transparency of the review process as concerns. Efficiency was also a concern for the pilot project program leadership and administration, who have reviewed and scored over 750 applications since 2009. We therefore considered a variety of process engineering methods as potential solutions, including lean systems (just-in-time and waste elimination) [3], six sigma (variability reduction and structured problem solving) [4], theory of constraints (capacity optimization) [5], and agile systems (resource coordination) [6]. Given the need for timeliness, standardization, and transparency in this program, we selected the integrated lean six sigma (LSS) approach to streamline and improve the process, an approach that our team has previously employed in a nonlinear research administration setting [7].

Methods and Materials

We used the LSS framework to examine the process flow of our pilot projects program application and review procedures. In general, the “lean” concept aims to streamline processes by reducing waste and nonvalue-added steps, while “six sigma” reduces variability in processes [8]. Integrating the two concepts builds on the strengths of each while minimizing their individual shortcomings. These methodologies were first introduced in manufacturing environments to streamline and improve processes, but more recently they have been successfully applied in other settings such as healthcare [9–11]. Within the LSS framework, we used the “Define, Measure, Analyze, Improve, Control” (DMAIC) approach. Through DMAIC, processes are mapped to identify weak elements and nonvalue-added activities, which are then eliminated. In addition, methods for continuous performance monitoring are established [12], fostering a culture of continuous process evaluation and improvement.
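To make the lean vocabulary of value-added and nonvalue-added steps concrete, the following is a minimal Python sketch, not the authors' tooling, of one common lean metric, process cycle efficiency (value-added time divided by total lead time), computed over a mapped process. The `Step` class, the step names, the durations, and the choice of which steps count as value-added are all hypothetical assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Step:
    """One step in a mapped process; fields are hypothetical, for illustration."""
    name: str
    days: float        # elapsed time attributed to this step
    value_added: bool  # True if the step directly advances the review outcome


def process_cycle_efficiency(steps: list[Step]) -> float:
    """Lean process cycle efficiency: value-added time / total lead time."""
    total = sum(s.days for s in steps)
    if total <= 0:
        raise ValueError("total lead time must be positive")
    value_added = sum(s.days for s in steps if s.value_added)
    return value_added / total


def non_value_added(steps: list[Step]) -> list[str]:
    """Steps flagged as candidates for elimination in the Improve phase."""
    return [s.name for s in steps if not s.value_added]


# Hypothetical steps and durations, NOT measurements from the pilot program.
steps = [
    Step("review applications", 21, True),
    Step("email reviewers for availability", 14, False),
    Step("send reminders / replace reviewers", 10, False),
    Step("compile scores into spreadsheet", 5, False),
]
print(f"PCE = {process_cycle_efficiency(steps):.2f}")  # 21 / 50 -> PCE = 0.42
print(non_value_added(steps))
```

A low efficiency value points the Analyze phase toward the nonvalue-added steps listed by `non_value_added`.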

In addition to LSS and DMAIC, we used our annual “voice of the consumer” survey of all our CTSA core resources to gauge user satisfaction, identify areas for improvement, and gain insight into user needs and concerns. We used these surveys to identify the problems in the pilot program and subsequently to evaluate the effect of the implemented changes on applicants and reviewers. To protect confidentiality within a relatively small group of investigators, we did not collect information about academic rank or research focus. Because we were unable to compare respondents with nonrespondents, our preproject and postproject comparisons may be affected by self-selection bias.

Results

Define

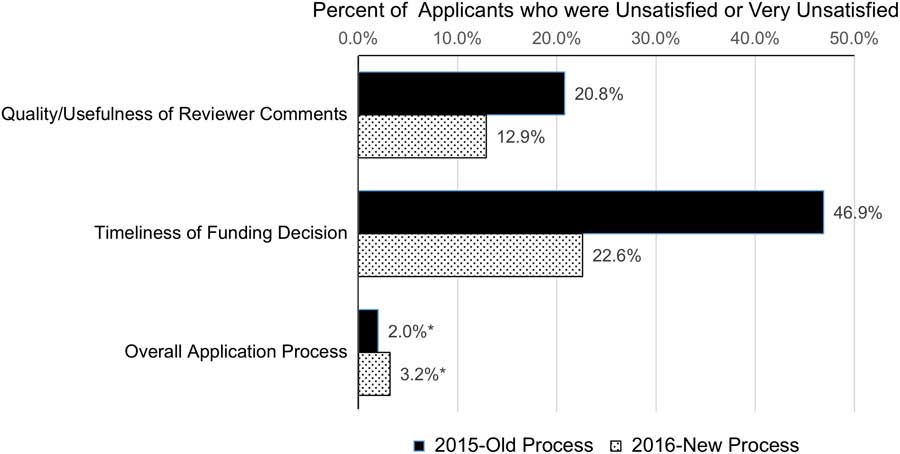

In October 2015, we surveyed pilot project applicants and awardees regarding their experiences with the application and review process. Of 117 applicants and awardees, 50 investigators (42.7%) responded. Most respondents were satisfied with the application process overall; however, a sizable percentage were unsatisfied or very unsatisfied with several aspects of the process. In particular, the timeliness of the funding decision (46.9% unsatisfied) and the transparency of the review process (33.3% unsatisfied) were major concerns (Fig. 1). One survey respondent stated, “The review time is too long. The review process needs to be more transparent.” Another concern was the quality/usefulness of reviewer comments (20.8% unsatisfied). Beyond these applicant concerns, the pilot projects program leadership and administration considered the existing review practices cumbersome and inefficient.

Fig. 1 Changes in satisfaction with pilot projects review process after the lean six sigma project (2015 and 2016 surveys). The chart presents the percent of pilot project applicants in 2015 (n=50) and 2016 (n=31) rating their satisfaction for each item as unsatisfied or very unsatisfied.

Measure and Analyze

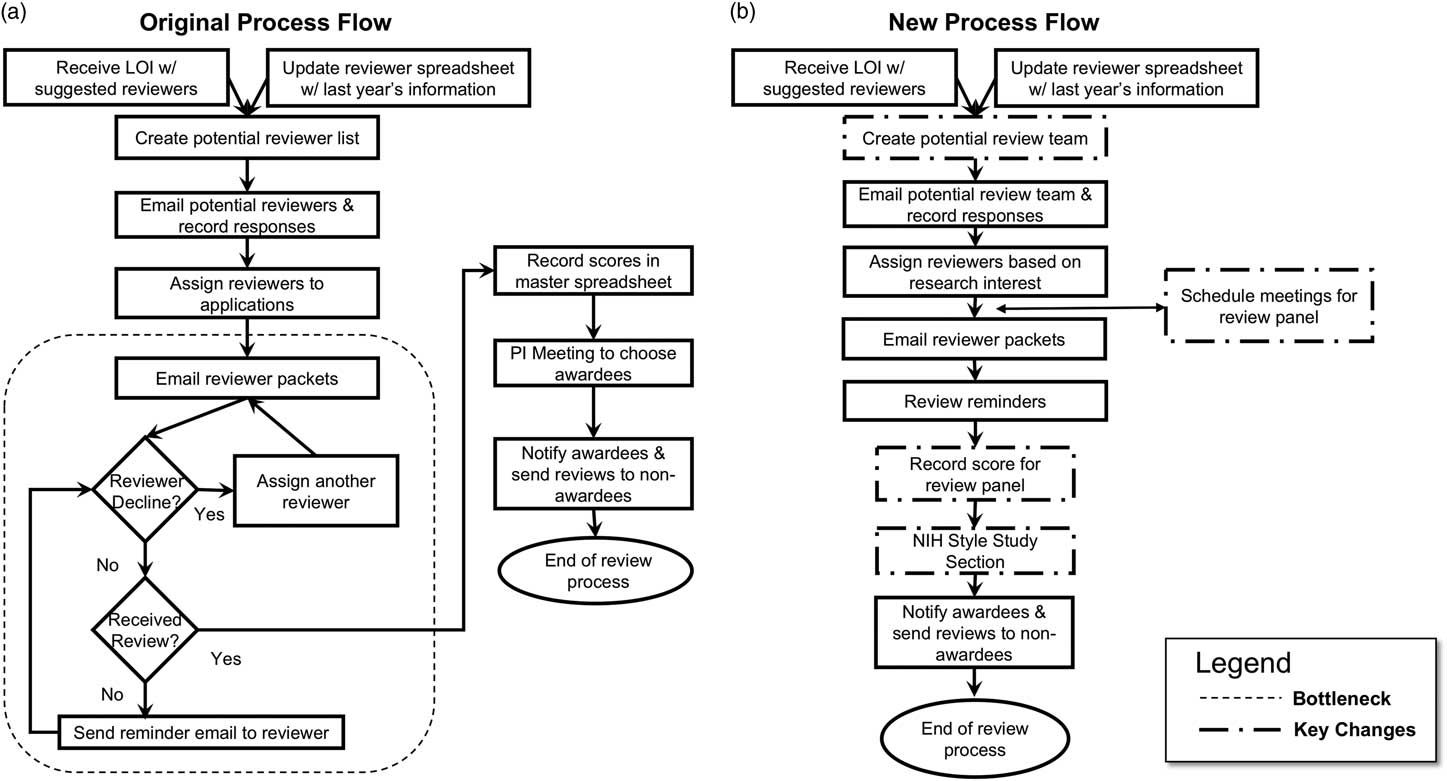

As a result of these concerns, we worked closely with the pilot projects administrative team to map the current process flow, diagramming the entire process from applicant letters of intent through the notice of awards (Fig. 2a). One of the primary bottlenecks was the review process. To obtain reviews from 3 subject matter experts for each application, the administrative team spent considerable time identifying, contacting, and reminding reviewers, mostly by email, to send their critiques by the stated deadlines. Not all reviews arrived on time; some reviewers had conflicts and could not complete their reviews on time or at all, placing additional burden on the administrative team to find new reviewers on short notice. In several cases, no new reviewers could be identified, leaving the program leadership to review additional applications with short turnaround times, which placed major strain on the leadership team and necessitated reviews outside their areas of expertise. Each reviewer scored the application individually and emailed comments and scores to the pilot project program leadership. Once at least 2 reviews for each application had been received, scores were entered into a master spreadsheet, and a PI meeting was scheduled to select awardees. This process was complicated by the fact that the scores for an application sometimes varied widely, often requiring re-review by the leadership team. Based on the team’s experience with past rounds of pilot project reviews, as well as other types of review processes, we identified a lack of incentives and over-commitment as major factors contributing to the difficulty of obtaining reviews.
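One way to surface widely varying scores before a selection meeting is to flag applications whose reviewer scores span more than a chosen number of points, and to rank the rest by mean score. The Python sketch below assumes an NIH-style 1-9 scale (1 = best) and a hypothetical cutoff of 3 points; the text specifies neither, and the application IDs and scores are invented for illustration.

```python
from statistics import mean


def flag_discordant(scores_by_app: dict[str, list[int]], max_range: int = 3) -> list[str]:
    """IDs of applications whose reviewer scores span more than max_range points."""
    flagged = []
    for app_id, scores in scores_by_app.items():
        if len(scores) < 2:
            continue  # spread is undefined with fewer than 2 reviews
        if max(scores) - min(scores) > max_range:
            flagged.append(app_id)
    return flagged


def rank_by_mean(scores_by_app: dict[str, list[int]]) -> list[str]:
    """Application IDs ordered best-first (lower mean is better on a 1-9 scale)."""
    return sorted(scores_by_app, key=lambda app_id: mean(scores_by_app[app_id]))


# Hypothetical application IDs and scores.
scores = {"A01": [2, 3, 2], "A02": [1, 7, 4], "A03": [5, 4]}
print(flag_discordant(scores))  # ['A02']  (range 6 > 3)
print(rank_by_mean(scores))     # ['A01', 'A02', 'A03']
```

Flagged applications would be natural candidates for discussion rather than being settled by averaging alone.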

Fig. 2 Original and revised process flow map. (a) The original process involved emailing potential reviewers to ask about their availability, followed by emailing them applications to review by a specified date. Reviewers often missed the deadline or did not return reviews, so other faculty had to be asked to review the applications on a shortened time frame. Difficulties in obtaining 3 reviews per application were common. The multiple emails required by this process led to inefficient use of pilot project administration staff and a prolonged interval between the application due date and the announcement of funding decisions. (b) A National Institutes of Health (NIH)-style study section replaced the inefficient email review process, resulting in more efficient use of administrative staff time and greater reviewer and applicant satisfaction. LOI, letter of intent.

Improve

We focused on applicant concerns regarding the transparency of the review process and the quality/usefulness of reviewer comments. Using the results from the process mapping, we implemented a National Institutes of Health (NIH)-style study section in 2016 (Fig. 2b). The new process involved recruiting a knowledgeable review team with the expertise to review applications ranging from T1 to T4 research. The new review team consisted of 35 members, compared with over 100 reviewers used in the previous process. In 2016, we received a total of 83 pilot applications, with ~40 applications discussed in each of 2 study section meetings. Each application received 3 reviews, and each reviewer scored a total of eight 4-page narratives. Reviewing faculty from our institution received a funds transfer to their flexible research accounts to compensate for their time commitment and expertise, and reviewers from outside our institution received an honorarium; the amount in both cases was $1500. Under this new process, reviewer dropout was minimal. Further, we reduced the time to funding announcement by 2 months in our first year of using the new process (8 months in 2015 compared with 6 months in 2016).
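The text does not describe how applications were distributed among study section members. As a hypothetical illustration only, the Python sketch below assigns each application 3 distinct reviewers using a simple least-loaded-first rule with a cap of 8 applications per reviewer; it ignores expertise matching and conflicts of interest, which a real assignment would need to handle. With the 2016 figures (83 applications × 3 reviews = 249 reviews; 35 reviewers × 8 = 280 slots), the cap is feasible. The function name and reviewer/application IDs are invented.

```python
def assign_reviewers(
    app_ids: list[str],
    reviewer_ids: list[str],
    per_app: int = 3,
    max_load: int = 8,
) -> tuple[dict[str, list[str]], dict[str, int]]:
    """Give each application `per_app` distinct reviewers, always choosing the
    least-loaded reviewers first so that workloads stay balanced."""
    if len(app_ids) * per_app > len(reviewer_ids) * max_load:
        raise ValueError("panel too small for the required number of reviews")
    load = {r: 0 for r in reviewer_ids}
    assignments: dict[str, list[str]] = {}
    for app in app_ids:
        # sorted() is stable, so ties are broken by roster order.
        available = sorted(
            (r for r in reviewer_ids if load[r] < max_load), key=lambda r: load[r]
        )
        if len(available) < per_app:
            raise ValueError(f"not enough reviewers with capacity for {app}")
        chosen = available[:per_app]
        for r in chosen:
            load[r] += 1
        assignments[app] = chosen
    return assignments, load


# 2016 figures from the text: 83 applications, 3 reviews each, 35 reviewers.
apps = [f"APP{i:02d}" for i in range(1, 84)]
panel = [f"R{j:02d}" for j in range(1, 36)]
assignments, load = assign_reviewers(apps, panel)
print(sum(load.values()), min(load.values()), max(load.values()))  # 249 7 8
```

Under this toy rule, 31 reviewers receive 7 applications and 4 receive 8, roughly in line with the eight narratives per reviewer reported in the text.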

Control

After a full grant application cycle using the new review process was completed, we surveyed reviewers about their experience with the process and potential areas for improvement. The response rate was high, with 26 of 33 reviewers (78.8%) completing the survey. All responding reviewers were satisfied with the improved review process (53.8% very satisfied; 46.2% satisfied), and 96% were satisfied with the use of an in-person study section format for the review (68% very satisfied; 28% satisfied). Most reviewers had prior experience reviewing CTSA pilot applications (84.6%), and all of these (100%) preferred the in-person study section format to the email-based approach for reviewing pilot project applications. To further improve the process, reviewers suggested allowing more time to read other reviewers’ critiques before the study section, allowing more time to discuss each project, letting reviewers choose applications based on their interests, and creating 2 separate study sections focusing on (1) early translational research and (2) population science, to better accommodate the wide range of proposals and use reviewer time more efficiently.

We also surveyed the 2016 applicants and awardees. Of 83 applicants and awardees, 31 investigators responded (response rate, 37.3%). Of the respondents who had applied previously, 31.3% (5/16) rated the new grant process as improved, while 68.8% (11/16) rated it as the same as in previous years. The percentage of respondents who were unsatisfied or very unsatisfied with the quality/usefulness of reviewer comments decreased from 20.8% in 2015 to 12.9% in 2016, and the percentage unsatisfied or very unsatisfied with the timeliness of the funding decision decreased from 46.9% to 22.6% (Fig. 1). Applicants also suggested better communication about timelines and a further reduction in the time from submission to award notification. In addition, applicants echoed the reviewers’ suggestion to create 2 specialized study sections to improve the quality/usefulness of feedback.
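The survey figures in this section can be recomputed from the reported counts. This Python sketch assumes the reported values round halves upward (5/16 = 31.25% is reported as 31.3%), so it uses `decimal.ROUND_HALF_UP`; Python's built-in `round()` rounds halves to even and would give 31.2. For the unsatisfied items it works from the reported percentages, because the item-level denominators are not given (20.8% of 50 respondents is not a whole number). The helper name `pct` is hypothetical.

```python
from decimal import Decimal, ROUND_HALF_UP


def pct(numerator: int, denominator: int) -> Decimal:
    """Percentage rounded to one decimal place, with halves rounded up."""
    value = Decimal(100 * numerator) / Decimal(denominator)
    return value.quantize(Decimal("0.1"), rounding=ROUND_HALF_UP)


# Survey response rates (respondents / invited), 2015 and 2016.
print(pct(50, 117), pct(31, 83))  # 42.7 37.3
# Returning 2016 applicants rating the process as improved vs the same.
print(pct(5, 16), pct(11, 16))    # 31.3 68.8

# Reported % unsatisfied or very unsatisfied, as (2015, 2016).
unsatisfied = {
    "quality/usefulness of reviewer comments": (Decimal("20.8"), Decimal("12.9")),
    "timeliness of funding decision": (Decimal("46.9"), Decimal("22.6")),
}
for item, (before, after) in unsatisfied.items():
    print(f"{item}: -{before - after} percentage points")
```

The decreases are 7.9 and 24.3 percentage points, respectively; using `Decimal` throughout keeps these differences exact rather than subject to binary floating-point error.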

Discussion and Next Steps

Overall, using the LSS DMAIC approach, we restructured our pilot project program review process by implementing an NIH-style study section that also offered incentives for reviewers. These changes led to a 2-month decrease in the time to funding decision and improved applicant satisfaction with the quality/usefulness of reviewer comments. Our surveys also highlighted the need for further improvements in the experiences of reviewers and applicants/awardees alike, which we will implement during our second cycle using the new process. We will continue annual surveys of applicants and reviewers to inform ongoing improvement of our processes and approaches. Moreover, we will measure lead times for each step in the new process flow to identify potential bottlenecks or areas needing improvement. We also plan to compare the publication and grant application success metrics of pilot studies funded under the new process with those of projects funded under the previous review process, to determine whether the process improvement has affected the quality and success of the funded pilot projects.
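The planned lead-time measurement could be as simple as recording a date for each milestone and taking the differences between consecutive milestones; the longest interval is the first bottleneck candidate. The following Python sketch is an assumption about how this might be done, not a description of the program's actual tracking, and the milestone names and dates are hypothetical.

```python
from datetime import date


def step_lead_times(milestones: list[tuple[str, date]]) -> dict[str, int]:
    """Days elapsed between consecutive milestones, keyed 'start -> end'."""
    lead_times = {}
    for (start_name, start), (end_name, end) in zip(milestones, milestones[1:]):
        days = (end - start).days
        if days < 0:
            raise ValueError(f"{end_name} precedes {start_name}")
        lead_times[f"{start_name} -> {end_name}"] = days
    return lead_times


def bottleneck(lead_times: dict[str, int]) -> str:
    """The step with the longest lead time."""
    return max(lead_times, key=lead_times.get)


# Hypothetical milestone dates for one review cycle (illustration only).
cycle = [
    ("LOI due", date(2024, 1, 15)),
    ("full application due", date(2024, 3, 1)),
    ("study section", date(2024, 4, 15)),
    ("award notice", date(2024, 6, 14)),
]
times = step_lead_times(cycle)
print(times)
print(bottleneck(times))  # study section -> award notice (60 days)
```

Tracking the same milestones each cycle would let these lead times feed the Control phase as a running performance measure.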

Our project demonstrated how LSS, a methodology that has not been commonly applied in research administration, can be used to evaluate processes in translational science in academic health centers. More broadly, LSS offers a structured methodology for strategically mapping processes and identifying areas for improvement by highlighting redundancies and bottlenecks.

Acknowledgments

The project described was supported by the National Center for Advancing Translational Sciences, National Institutes of Health, through grant no. UL1 TR001120 (R.A.C., P.K.S., S.S., L.A.S.) and through grant no. KL2 TR001118 (S.S.). The content is solely the responsibility of the authors and does not necessarily represent the official views of the NIH.

Disclosures

The authors have no conflicts of interest to declare.