Deep reinforcement transfer learning of active control for bluff body flows at high Reynolds number

Published online by Cambridge University Press: 20 October 2023

Abstract

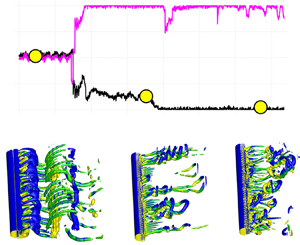

We demonstrate how to accelerate the computationally taxing process of deep reinforcement learning (DRL) in numerical simulations for active control of bluff body flows at high Reynolds number ($Re$) using transfer learning. We consider the canonical flow past a circular cylinder whose wake is controlled by two small rotating cylinders. We first pre-train the DRL agent using data from inexpensive simulations at low $Re$, and subsequently we train the agent with small data from the simulation at high $Re$ (up to $Re=1.4\times 10^5$). We apply transfer learning (TL) to three different tasks, the results of which show that TL can greatly reduce the training episodes, while the control method selected by TL is more stable compared with training DRL from scratch. We analyse for the first time the wake flow at $Re=1.4\times 10^5$ in detail and discover that the hydrodynamic forces on the two rotating control cylinders are not symmetric.

- Type: JFM Papers

- Copyright: © The Author(s), 2023. Published by Cambridge University Press

Footnotes

Equal contribution.
