1. Introduction

Viewing fluid turbulence as a deterministic chaotic dynamical system has revealed new insights beyond what can be achieved through a purely statistical approach (see reviews by Kawahara, Uhlmann & van Veen 2012; Graham & Floryan 2021). The idea of a dynamical description that envisions turbulence as a chaotic trajectory in the infinite-dimensional state space of the Navier–Stokes equations dates back to the seminal work of Hopf (1948). A major step in bridging the gap between ideas from dynamical systems theory and the practical study of turbulence in this framework has been the numerical computation of invariant solutions, an advance that did not happen until the 1990s. Invariant solutions are non-chaotic solutions to the governing equations with simple dependence on time. They include equilibria (Nagata 1990), travelling waves (Faisst & Eckhardt 2003; Wedin & Kerswell 2004), periodic and relative periodic orbits (Kawahara & Kida 2001; Chandler & Kerswell 2013; Budanur et al. 2017), and invariant tori (Suri et al. 2019; Parker, Ashtari & Schneider 2023). In the dynamical description, the chaotic trajectory of the turbulent dynamics transiently, yet recurrently, visits the neighbourhoods of the unstable invariant solutions embedded in the state space of the evolution equations. In this picture, therefore, unstable invariant solutions serve as the building blocks supporting the turbulent dynamics, and extracting them is the key to studying turbulence in the dynamical systems framework.

Equilibria of plane Couette flow (PCF) computed numerically by Nagata (1990) were the first non-trivial invariant solutions discovered in a wall-bounded three-dimensional (3-D) fluid flow. Despite their lack of temporal variation, equilibrium solutions can capture essential features of chaotic flows and play an important role in characterising their chaotic dynamics. In PCF, for instance, Nagata (1990), Clever & Busse (1992), Waleffe (1998), Itano & Toh (2001), Wang, Gibson & Waleffe (2007) and others compute equilibrium solutions. Typically, these equilibria contain wavy streaks together with pairs of staggered counter-rotating streamwise vortices, and thus capture basic structures of near-wall turbulence. Gibson, Halcrow & Cvitanović (2008, 2009) and Halcrow et al. (2009) demonstrate how the chaotic dynamics is organised by coexisting equilibrium solutions together with their stable and unstable manifolds; Schneider, Gibson & Burke (2010) and Gibson & Brand (2014) compute equilibria that capture localisation in the spanwise direction; Eckhardt & Zammert (2018) compute equilibria that capture localisation in the streamwise direction; Brand & Gibson (2014) compute equilibria that capture localisation in both the streamwise and spanwise directions; and Reetz, Kreilos & Schneider (2019) identify an equilibrium solution underlying self-organised oblique turbulent–laminar stripes. While equilibrium solutions have been shown to capture features of the chaotic flow dynamics, their numerical identification in very-high-dimensional fluid flow problems remains challenging.

One approach to computing equilibrium solutions is to consider a root finding problem. Irrespective of their dynamical stability, equilibria of the dynamical system $\partial u/\partial t=r(u)$ are, by definition, roots of the nonlinear operator governing the time evolution, $r(u)=0$. The root finding problem can be solved by Newton(–Raphson) iterations. Newton iterations are popular because of their locally quadratic convergence. However, employing Newton iterations for solving the root finding problem has two principal drawbacks. For a system described by $N$ degrees of freedom, the update vector in each iteration is the solution to a linear system of equations whose coefficient matrix is the $N\times N$ Jacobian. Solving this large system of equations, and the associated quadratically scaling memory requirement, are too costly for very-high-dimensional, strongly coupled fluid flow problems. In addition to poor scaling, Newton iterations typically have a small radius of convergence, meaning that the algorithm needs to be initialised with an extremely accurate initial guess in order to converge successfully. Finding sufficiently accurate guesses is not simple even for weakly chaotic flows close to the onset of turbulence. Newton-GMRES-hookstep is the state-of-the-art matrix-free variant of the Newton method commonly used for computing invariant solutions of fluid flows. This method defeats the $N^2$ memory scaling drawback by employing the generalised minimal residual (GMRES) method and approximating the update vector in a Krylov subspace (Saad & Schultz 1986; Tuckerman, Langham & Willis 2019). In addition, the robustness of the convergence is improved via hookstep trust-region optimisation (Dennis & Schnabel 1996; Viswanath 2007, 2009). Newton-GMRES-hookstep thereby enlarges the basin of convergence of Newton iterations. Yet requiring an accurate initial guess is still a bottleneck of this method, and identifying unstable equilibria remains challenging.
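To make the cost structure of a plain Newton iteration concrete, the following minimal Python sketch applies it to a toy two-dimensional system. The right-hand side `r`, its Jacobian `jac` and the helper `newton` are illustrative assumptions, not part of the methods discussed here. The sketch only shows that each iteration forms and solves a dense $N\times N$ linear system, and that the residual drops roughly quadratically once the iterate is close to a root.

```python
import numpy as np

def r(u):
    # Toy right-hand side (assumption for illustration); roots at (1, 1) and (-1, -1).
    x, y = u
    return np.array([x**2 + y**2 - 2.0, x - y])

def jac(u):
    # Dense N x N Jacobian of r; storing it is the quadratic memory cost.
    x, y = u
    return np.array([[2.0 * x, 2.0 * y], [1.0, -1.0]])

def newton(u0, tol=1e-12, max_iter=20):
    u = np.asarray(u0, dtype=float)
    history = [np.linalg.norm(r(u))]
    for _ in range(max_iter):
        if history[-1] < tol:
            break
        du = np.linalg.solve(jac(u), -r(u))  # one N x N linear solve per iteration
        u = u + du
        history.append(np.linalg.norm(r(u)))
    return u, history

u_star, residuals = newton([2.0, 0.5])
print(u_star)
print(residuals)
```

In a matrix-free variant such as Newton-GMRES, the call to `np.linalg.solve` would be replaced by a Krylov solver that needs only Jacobian-vector products, which is what removes the $N^2$ storage.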

An alternative to the root finding set-up is to view the problem of computing an equilibrium solution as an optimisation problem. Deviation of a flow field from being an equilibrium solution can be penalised by the norm of the to-be-zeroed right-hand-side operator, $\|r(u)\|$. The absolute minima of this cost function, $\|r(u)\|=0$, correspond to equilibrium solutions of the system. Therefore, the problem of finding equilibria can be recast as the minimisation of the cost function. A matrix-free method is crucial for solving this minimisation problem in very-high-dimensional fluid flows. Farazmand (2016) proposed an adjoint-based minimisation technique to find equilibria and travelling waves of a two-dimensional (2-D) Kolmogorov flow. The adjoint calculations allow the gradient of the cost function to be constructed analytically as an explicit function of the current flow field. This results in a matrix-free gradient descent algorithm whose memory requirement scales linearly with the size of the problem. The adjoint-based minimisation method is significantly more robust to inaccurate initial guesses in comparison to its alternatives based on solving a root finding problem using Newton iterations. This improvement, however, is obtained by sacrificing the quadratic convergence of the Newton iterations and exhibiting slow convergence. In the context of fluid mechanics, the variational approach has been applied successfully to 2-D Kolmogorov flows (see Farazmand 2016; Parker & Schneider 2022).
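The following Python sketch illustrates what "matrix-free" means here, using a toy problem rather than the Kolmogorov flow: a 1-D periodic, forced viscous Burgers equation with spectral derivatives. The equation, the parameter values and all function names (`ddx`, `lin`, `lin_adj`, and so on) are assumptions chosen for illustration. The adjoint of the linearised operator is obtained by integration by parts under periodic boundary conditions, so the descent direction is computed from a handful of length-$N$ arrays and no Jacobian matrix is ever formed.

```python
import numpy as np

N, nu = 64, 0.1                      # grid size and viscosity (illustrative values)
x = 2.0 * np.pi * np.arange(N) / N
dx = 2.0 * np.pi / N
k = np.fft.fftfreq(N, d=1.0 / N)     # integer wavenumbers on a 2*pi-periodic domain

def ddx(f):
    # Spectral first derivative on the periodic grid.
    return np.fft.ifft(1j * k * np.fft.fft(f)).real

def d2dx2(f):
    # Spectral second derivative.
    return np.fft.ifft(-(k**2) * np.fft.fft(f)).real

def r(u):
    # Toy right-hand side: forced viscous Burgers equation (an assumption).
    return -u * ddx(u) + nu * d2dx2(u) + np.sin(x)

def lin(u, g):
    # Directional derivative of r at u along g.
    return -g * ddx(u) - u * ddx(g) + nu * d2dx2(g)

def lin_adj(u, w):
    # Adjoint of lin with respect to the L2 inner product (integration by parts).
    return -w * ddx(u) + ddx(u * w) + nu * d2dx2(w)

def inner(a, b):
    # Discrete L2 inner product on the uniform grid.
    return np.sum(a * b) * dx

def cost(u):
    return inner(r(u), r(u))

u = np.cos(x) + 0.5 * np.sin(2.0 * x)   # arbitrary initial guess
g_test = np.sin(3.0 * x)
# Check the defining identity <lin(u; g), r> = <g, lin_adj(u; r)>.
adjoint_gap = abs(inner(lin(u, g_test), r(u)) - inner(g_test, lin_adj(u, r(u))))

costs = [cost(u)]
dtau = 1e-5                              # small explicit step in fictitious time
for _ in range(200):
    u = u - dtau * lin_adj(u, r(u))      # descent direction: minus the adjoint applied to r
    costs.append(cost(u))
print(adjoint_gap, costs[0], costs[-1])
```

The very small explicit step reflects the stiffness of the descent dynamics, which is in line with the slow convergence noted above.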

Despite the robust convergence and favourable scaling properties of the adjoint-based minimisation method, it has not been applied to 3-D wall-bounded flows. Beyond the high dimensionality of 3-D wall-bounded flows, the main challenge in the application of this method lies in handling the wall boundary conditions, which cannot be imposed readily while evolving the adjoint-descent dynamics (see § 3.3). This is in contrast to doubly periodic 2-D (or triply periodic 3-D) flows, where the adjoint-descent dynamics is subject to periodic boundary conditions only, which can be imposed by representing variables in a Fourier basis (Farazmand 2016; Parker & Schneider 2022). To construct a suitable formulation for 3-D flows in the presence of walls, we project the evolving velocity field onto the space of divergence-free fields and constrain pressure so that it satisfies the pressure Poisson equation instead of evolving independently of the velocity (see § 3.4). However, solving the pressure Poisson equation with sufficient accuracy is not straightforward in wall-bounded flows. The challenge in computing the instantaneous pressure associated with a divergence-free velocity field stems from the absence of explicit physical boundary conditions on pressure at the walls (Rempfer 2006). As a result, a successful implementation of the constrained dynamics hinges on resolving the challenge of accurately expressing the pressure in wall-bounded flows.

We propose an algorithm for computing equilibria of wall-bounded flows using adjoint-descent minimisation in the space of divergence-free velocity fields. The proposed algorithm circumvents the explicit construction of pressure, thereby overcoming the inherent challenge of dealing with pressure in the application of the adjoint-descent method to wall-bounded flows. We construct equilibria of PCF, and discuss the application of the introduced method to other wall-bounded flows and other types of invariant solutions where the challenge of dealing with pressure exists analogously. To accelerate the convergence of the algorithm, we propose a data-driven procedure that takes advantage of the almost linear behaviour of the adjoint-descent dynamics in the vicinity of an equilibrium solution. The acceleration technique approximates the linear dynamics using dynamic mode decomposition, and thereby approximates the asymptotic solution of the adjoint-descent dynamics. The large basin of convergence together with the improved convergence properties renders the adjoint-descent method a viable alternative to the state-of-the-art Newton method.

The remainder of the paper is structured as follows. The adjoint-based variational method for constructing equilibrium solutions is introduced in a general setting in § 2. The adjoint-descent dynamics is derived for wall-bounded shear flows in § 3, and an algorithm for numerically integrating the derived dynamics is presented in § 4. The method is applied to PCF in § 5, where the convergence of multiple equilibria is demonstrated. The data-driven procedure for accelerating the convergence is discussed in § 6. Finally, the paper is summarised and concluding remarks are provided in § 7.

2. Adjoint-descent method for constructing equilibrium solutions

Consider a general autonomous dynamical system

\begin{equation} \frac{\partial \boldsymbol{u}}{\partial t}=\boldsymbol{r}(\boldsymbol{u}), \tag{2.1} \end{equation}

where $\boldsymbol{u}$ is an $n$-dimensional real-valued field defined over a $d$-dimensional spatial domain $\boldsymbol{x}\in \varOmega \subseteq \mathbb{R}^d$ and varying with time $t\in \mathbb{R}$. Within the space of vector fields $\mathscr{M}=\{\boldsymbol{u}:\varOmega \to \mathbb{R}^n\}$, the evolution of $\boldsymbol{u}$ is governed by the smooth nonlinear operator $\boldsymbol{r}$ subject to time-independent boundary conditions (BCs) at $\partial \varOmega$, the boundary of $\varOmega$. Equilibrium solutions of this dynamical system are $\boldsymbol{u}^\ast \in \mathscr{M}$ for which

\begin{equation} \boldsymbol{r}(\boldsymbol{u}^\ast)=\boldsymbol{0}. \tag{2.2} \end{equation}

The residual of (2.2) is not zero for non-equilibrium states $\boldsymbol{u}\neq \boldsymbol{u}^\ast$. We thus penalise non-equilibrium states by the non-negative cost function $J^2$ defined as

\begin{equation} J^2=\left\langle \boldsymbol{r}(\boldsymbol{u}),\boldsymbol{r}(\boldsymbol{u})\right\rangle =\|\boldsymbol{r}(\boldsymbol{u})\|^2, \tag{2.3} \end{equation}

where $\left\langle \cdot,\cdot \right\rangle$ denotes an inner product defined on $\mathscr{M}$. The cost function takes zero value if and only if $\boldsymbol{u}=\boldsymbol{u}^\ast$. We thereby recast the problem of finding equilibrium solutions $\boldsymbol{u}^\ast$ as a minimisation problem over $\mathscr{M}$, and look for the global minima of $J^2$ at which $J^2=0$, following the arguments of Farazmand (2016).

In order to find minima of $J^2$, we construct another dynamical system in $\mathscr{M}$ along whose evolution the cost function $J^2$ decreases monotonically. The objective is to define an evolution equation

\begin{equation} \frac{\partial \boldsymbol{u}}{\partial \tau}=\boldsymbol{g}(\boldsymbol{u}), \tag{2.4} \end{equation}

where the choice of the operator $\boldsymbol{g}$ guarantees

\begin{equation} \frac{\partial J^2}{\partial \tau}\leq 0 \quad \text{for all } \tau. \tag{2.5} \end{equation}

Here, $\tau$ is a fictitious time that parametrises the evolution governed by the constructed dynamics. The rate of change of $J^2$ along trajectories of the dynamical system (2.4) is

\begin{equation} \frac{\partial J^2}{\partial \tau}=\frac{\partial}{\partial \tau}\left\langle \boldsymbol{r}(\boldsymbol{u}),\boldsymbol{r}(\boldsymbol{u})\right\rangle =2\left\langle \mathscr{L}(\boldsymbol{u};\boldsymbol{g}),\boldsymbol{r}(\boldsymbol{u})\right\rangle, \tag{2.6} \end{equation}

where $\mathscr{L}(\boldsymbol{u};\boldsymbol{g})$ is the directional derivative of $\boldsymbol{r}(\boldsymbol{u})$ along $\partial \boldsymbol{u}/\partial \tau =\boldsymbol{g}$:

\begin{equation} \mathscr{L}(\boldsymbol{u};\boldsymbol{g})=\lim_{\epsilon \to 0}\frac{\boldsymbol{r}(\boldsymbol{u}+\epsilon \boldsymbol{g})-\boldsymbol{r}(\boldsymbol{u})}{\epsilon}. \tag{2.7} \end{equation}

We can rewrite (2.6) as

\begin{equation} \frac{\partial J^2}{\partial \tau}=2\left\langle \mathscr{L}^{\dagger}(\boldsymbol{u};\boldsymbol{r}),\boldsymbol{g}\right\rangle, \tag{2.8} \end{equation}

where $\mathscr{L}^{\dagger}$ is the adjoint operator of the directional derivative $\mathscr{L}$, with the following definition:

\begin{equation} \left\langle \mathscr{L}(\boldsymbol{v};\boldsymbol{v}'),\boldsymbol{v}''\right\rangle =\left\langle \mathscr{L}^{\dagger}(\boldsymbol{v};\boldsymbol{v}''),\boldsymbol{v}'\right\rangle. \tag{2.9} \end{equation}

To guarantee the monotonic decrease of $J^2$ with $\tau$, we choose

\begin{equation} \boldsymbol{g}(\boldsymbol{u})=-\mathscr{L}^{\dagger}(\boldsymbol{u};\boldsymbol{r}). \tag{2.10} \end{equation}

This choice results in monotonic decrease of $J^2$ along solution trajectories of the adjoint dynamical system (2.4):

\begin{equation} \frac{\partial J^2}{\partial \tau}=-2\left\langle \mathscr{L}^{\dagger}(\boldsymbol{u};\boldsymbol{r}),\mathscr{L}^{\dagger}(\boldsymbol{u};\boldsymbol{r})\right\rangle =-2\,\|\mathscr{L}^{\dagger}(\boldsymbol{u};\boldsymbol{r})\|^2\leq 0. \tag{2.11} \end{equation}
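As a plausibility check, the construction can be written out in finite dimensions. Assume $\boldsymbol{u}\in \mathbb{R}^N$ with the Euclidean inner product, and let $\mathsf{A}(\boldsymbol{u})=\partial \boldsymbol{r}/\partial \boldsymbol{u}$ denote the Jacobian matrix (a symbol introduced here only for illustration). The directional derivative and its adjoint then reduce to matrix products, and choosing $\boldsymbol{g}$ as minus the adjoint applied to $\boldsymbol{r}$ amounts to descending along minus one half of the gradient of $J^2$:

\begin{equation} \begin{aligned} \mathscr{L}(\boldsymbol{u};\boldsymbol{g}) &= \mathsf{A}(\boldsymbol{u})\,\boldsymbol{g}, \qquad \mathscr{L}^{\dagger}(\boldsymbol{u};\boldsymbol{r}) = \mathsf{A}(\boldsymbol{u})^{\mathrm{T}}\boldsymbol{r},\\ \nabla_{\boldsymbol{u}} J^2 &= \nabla_{\boldsymbol{u}}\left(\boldsymbol{r}^{\mathrm{T}}\boldsymbol{r}\right) = 2\,\mathsf{A}^{\mathrm{T}}\boldsymbol{r},\\ \boldsymbol{g} &= -\mathsf{A}^{\mathrm{T}}\boldsymbol{r} = -\tfrac{1}{2}\nabla_{\boldsymbol{u}} J^2, \qquad \frac{\partial J^2}{\partial \tau} = \nabla_{\boldsymbol{u}} J^2 \boldsymbol{\cdot} \boldsymbol{g} = -2\,\|\mathsf{A}^{\mathrm{T}}\boldsymbol{r}\|^2 \leq 0. \end{aligned} \end{equation}

In this setting, $\partial J^2/\partial \tau$ vanishes either where $\boldsymbol{r}=\boldsymbol{0}$ or where $\boldsymbol{r}\neq \boldsymbol{0}$ lies in the null space of $\mathsf{A}^{\mathrm{T}}$, which requires a singular Jacobian.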

In summary, in order to find equilibria of ![]() $\partial \boldsymbol {u}/\partial t=\boldsymbol {r}(\boldsymbol {u})$, the variational approach proposed by Farazmand (Reference Farazmand2016) constructs a globally contracting dynamical system

$\partial \boldsymbol {u}/\partial t=\boldsymbol {r}(\boldsymbol {u})$, the variational approach proposed by Farazmand (Reference Farazmand2016) constructs a globally contracting dynamical system ![]() $\partial \boldsymbol {u}/\partial \tau =\boldsymbol {g}(\boldsymbol {u})$ that is essentially the gradient descent of the cost function

$\partial \boldsymbol {u}/\partial \tau =\boldsymbol {g}(\boldsymbol {u})$ that is essentially the gradient descent of the cost function ![]() $J^2$. Every trajectory of the constructed dynamical system eventually reaches a stable equilibrium corresponding to a minimum of the cost function. Equilibria of the original dynamics are equilibria of the adjoint dynamics at which the cost function takes its global minimum value

$J^2$. Every trajectory of the constructed dynamical system eventually reaches a stable equilibrium corresponding to a minimum of the cost function. Equilibria of the original dynamics are equilibria of the adjoint dynamics at which the cost function takes its global minimum value ![]() $J^2=0$. However, the adjoint dynamics might have other equilibria that correspond to a local minimum of the cost function with

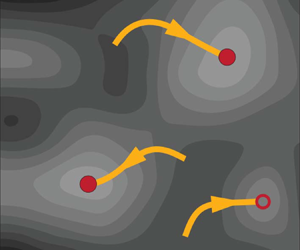

$J^2=0$. However, the adjoint dynamics might have other equilibria that correspond to a local minimum of the cost function with ![]() $J^2>0$, and are not equilibria of the original dynamics. This is illustrated schematically in figure 1. Finding equilibria of

$J^2>0$, and are not equilibria of the original dynamics. This is illustrated schematically in figure 1. Finding equilibria of ![]() $\partial \boldsymbol {u}/\partial t=\boldsymbol {r}(\boldsymbol {u})$ requires integrating the adjoint dynamics

$\partial \boldsymbol {u}/\partial t=\boldsymbol {r}(\boldsymbol {u})$ requires integrating the adjoint dynamics ![]() $\partial \boldsymbol {u}/\partial \tau =\boldsymbol {g}(\boldsymbol {u})$ forwards in the fictitious time

$\partial \boldsymbol {u}/\partial \tau =\boldsymbol {g}(\boldsymbol {u})$ forwards in the fictitious time ![]() $\tau$. The solutions obtained at

$\tau$. The solutions obtained at ![]() $\tau \to \infty$ for which

$\tau \to \infty$ for which ![]() $J^2=0$ are equilibria of the original system. Otherwise, when the trajectory gets stuck in a local minimum of the cost function, the search fails and the adjoint dynamics should be integrated from another initial condition.

$J^2=0$ are equilibria of the original system. Otherwise, when the trajectory gets stuck in a local minimum of the cost function, the search fails and the adjoint dynamics should be integrated from another initial condition.

Figure 1. Replacing the original dynamics with the gradient descent of the cost function $J=\|\boldsymbol{r}(\boldsymbol{u})\|$ by the adjoint-descent method. (a) Schematic of the trajectories and two equilibria of the original system parametrised by the physical time $t$: $\partial \boldsymbol{u}/\partial t=\boldsymbol{r}(\boldsymbol{u})$. (b) Contours of $J$ and sample trajectories of its gradient flow parametrised by the fictitious time $\tau$: $\partial \boldsymbol{u}/\partial \tau =\boldsymbol{g}(\boldsymbol{u})$. Trajectories of the adjoint-descent dynamics converge to a stable fixed point, that is, either an equilibrium of the original dynamics, where the global minimum value of $J=0$ is achieved, or a state at which $J$ takes a local minimum value.
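The two possible outcomes of the adjoint descent can be reproduced with a minimal Python sketch on toy finite-dimensional systems. Everything below is an assumption made for illustration: the two right-hand sides, the forward-Euler integrator `descend`, and the step size. In finite dimensions with the Euclidean inner product, the adjoint of the directional derivative is the transposed Jacobian, so the descent dynamics reads $\partial \boldsymbol{u}/\partial \tau = -\mathsf{A}^{\mathrm{T}}\boldsymbol{r}$. In the first case, the origin is a saddle of the original dynamics, yet it attracts the descent trajectory. In the second case, $r(u)=u^2+1$ has no real root and the descent stalls at a minimum with $J^2=1$.

```python
import numpy as np

def descend(r, jac, u0, dtau=1e-2, steps=5000):
    # Forward-Euler integration of du/dtau = -A(u)^T r(u), with A the Jacobian of r.
    u = np.atleast_1d(np.asarray(u0, dtype=float))
    costs = [float(r(u) @ r(u))]
    for _ in range(steps):
        u = u - dtau * jac(u).T @ r(u)
        costs.append(float(r(u) @ r(u)))
    return u, np.array(costs)

# Case 1: toy system whose origin is an unstable (saddle) equilibrium of du/dt = r(u).
r1 = lambda u: np.array([u[0] + u[1]**2, -u[1] + u[0]**2])
jac1 = lambda u: np.array([[1.0, 2.0 * u[1]], [2.0 * u[0], -1.0]])

# Case 2: r(u) = u^2 + 1 has no real root; J^2 has a minimum with J^2 = 1 at u = 0.
r2 = lambda u: np.array([u[0]**2 + 1.0])
jac2 = lambda u: np.array([[2.0 * u[0]]])

u1, c1 = descend(r1, jac1, [0.3, -0.2])
u2, c2 = descend(r2, jac2, [0.8])
print("case 1: u =", u1, " J^2 =", c1[-1])   # search succeeds, J^2 tends to 0
print("case 2: u =", u2, " J^2 =", c2[-1])   # search fails, J^2 stays at 1
```

In the second case, a practical search would discard the result and restart from a different initial condition.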

3. Application to the wall-bounded shear flows

3.1. Governing equations

We consider the flow in a 3-D rectangular domain $\varOmega$ of non-dimensional size $x\in [0,L_x)$, $y\in [-1,+1]$ and $z\in [0,L_z)$. The domain is bounded in $y$ between two parallel plates, and is periodic in the lateral directions $x$ and $z$. Incompressible isothermal flow of a Newtonian fluid is governed by the Navier–Stokes equations (NSE). The non-dimensional, perturbative form of the NSE reads

\begin{equation} \frac{\partial \boldsymbol{u}}{\partial t}=-\left[(\boldsymbol{u}_b\boldsymbol{\cdot}\boldsymbol{\nabla})\boldsymbol{u}+(\boldsymbol{u}\boldsymbol{\cdot}\boldsymbol{\nabla})\boldsymbol{u}_b+(\boldsymbol{u}\boldsymbol{\cdot}\boldsymbol{\nabla})\boldsymbol{u}\right]-\boldsymbol{\nabla}p+\frac{1}{Re}\,\Delta \boldsymbol{u}=:\mathcal{F}(\boldsymbol{u},p), \tag{3.1} \end{equation}

\begin{equation} \boldsymbol{\nabla}\boldsymbol{\cdot}\boldsymbol{u}=0. \tag{3.2} \end{equation}

Here, $Re$ is the Reynolds number, and $\boldsymbol{u}_b$ is the laminar base flow velocity field. The fields $\boldsymbol{u}$ and $p$ are the deviations of the total velocity and pressure from the base flow velocity and pressure fields, respectively. For common driving mechanisms such as the motion of walls in the $xz$ plane, externally imposed pressure differences, or injection/suction through the walls, the laminar base flow satisfies the inhomogeneous BCs, absorbs body forces, and is known a priori. Consequently, the perturbative NSE (3.1) and (3.2) are subject to the BCs

\begin{equation} \boldsymbol{u}(x,y=\pm 1,z;t)=\boldsymbol{0}, \tag{3.3} \end{equation}

\begin{equation} [\boldsymbol{u},p](x=0,y,z;t)=[\boldsymbol{u},p](x=L_x,y,z;t), \tag{3.4} \end{equation}

\begin{equation} [\boldsymbol{u},p](x,y,z=0;t)=[\boldsymbol{u},p](x,y,z=L_z;t). \tag{3.5} \end{equation}

The canonical wall-bounded shear flows such as PCF, plane Poiseuille flow and asymptotic suction boundary layer flow are governed by the incompressible NSE (3.1)–(3.5), where $\boldsymbol{u}_b$ differentiates them from one another. We derive the adjoint-descent dynamics based on a general base flow velocity field $\boldsymbol{u}_b$, and in § 5 demonstrate the adjoint-based method for the specific case of PCF.

The state space $\mathscr{M}$ of the NSE contains velocity fields $\boldsymbol{u}:\varOmega \to \mathbb{R}^3$ of zero divergence that satisfy the kinematic conditions (3.3)–(3.5). The space $\mathscr{M}$ carries the standard energy-based $L_2$ inner product denoted with $\left\langle \cdot,\cdot \right\rangle_\mathscr{M}$. The pressure $p$ associated with an admissible velocity field $\boldsymbol{u}\in \mathscr{M}$ ensures that under the NSE dynamics, the velocity remains divergence-free,

\begin{equation} \frac{\partial (\boldsymbol{\nabla}\boldsymbol{\cdot}\boldsymbol{u})}{\partial t}=\boldsymbol{\nabla}\boldsymbol{\cdot}\mathcal{F}(\boldsymbol{u},p)=0, \tag{3.6} \end{equation}

while remaining compatible with the no-slip BCs (3.3),

\begin{equation} \frac{\partial \boldsymbol{u}}{\partial t}\bigg|_{y=\pm 1}=\mathcal{F}(\boldsymbol{u},p)\big|_{y=\pm 1}=\boldsymbol{0}, \tag{3.7} \end{equation}

where $\mathcal{F}(\boldsymbol{u},p)$ denotes the right-hand side of the momentum equation (3.1). This requires $p$ to satisfy the Poisson equation with a velocity-dependent source term (Rempfer 2006; Canuto et al. 2007).
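For orientation, the Poisson problem can be sketched as follows, assuming that the momentum equation (3.1) takes the standard perturbative form $\partial \boldsymbol{u}/\partial t=\mathcal{F}(\boldsymbol{u},p)=-\mathcal{N}(\boldsymbol{u})-\boldsymbol{\nabla}p+Re^{-1}\,\Delta \boldsymbol{u}$. The abbreviation $\mathcal{N}(\boldsymbol{u})$ for the advective terms is introduced here only for compactness. Taking the divergence and using $\boldsymbol{\nabla}\boldsymbol{\cdot}\boldsymbol{u}=0$, so that the viscous term drops out, gives the source term, and evaluating the momentum equation at the walls gives a condition on $\boldsymbol{\nabla}p$:

\begin{equation} \begin{aligned} \mathcal{N}(\boldsymbol{u}) &:= (\boldsymbol{u}_b\boldsymbol{\cdot}\boldsymbol{\nabla})\boldsymbol{u}+(\boldsymbol{u}\boldsymbol{\cdot}\boldsymbol{\nabla})\boldsymbol{u}_b+(\boldsymbol{u}\boldsymbol{\cdot}\boldsymbol{\nabla})\boldsymbol{u},\\ \Delta p &= -\boldsymbol{\nabla}\boldsymbol{\cdot}\mathcal{N}(\boldsymbol{u}) \quad \text{in } \varOmega,\\ \boldsymbol{\nabla}p\big|_{y=\pm 1} &= \left[\frac{1}{Re}\,\Delta \boldsymbol{u}-\mathcal{N}(\boldsymbol{u})\right]_{y=\pm 1}. \end{aligned} \end{equation}

In this sketch, the wall condition is expressed through the velocity field rather than prescribed independently, which is consistent with the absence of explicit physical BCs on pressure noted in § 1.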

We could not derive a variational dynamics based on expressing pressure explicitly as the solution to this Poisson equation. Therefore, instead of the state space $\mathscr{M}$ of the NSE, we define the search space such that ‘velocity’ and ‘pressure’ can evolve independently. Accordingly, we define the cost function such that residuals of both (3.1) and (3.2) are included. Otherwise, the derivation follows § 2.

3.2. The search space

We define the inner product space of general flow fields as

\begin{equation} \mathscr{P}=\left\{\begin{bmatrix} \boldsymbol{v}\\ q \end{bmatrix}\left| \begin{array}{@{}c@{}} \boldsymbol{v}:\varOmega \rightarrow \mathbb{R}^3\\ q:\varOmega \rightarrow \mathbb{R}\\ \boldsymbol{v} \text{ and } q \text{ periodic in } x \text{ and } z \end{array}\right.\right\}, \tag{3.8} \end{equation}

where $\boldsymbol{v}$ and $q$ are sufficiently smooth functions of space. Hereafter, the symbols $\boldsymbol{u},p$ indicate physically admissible velocity and pressure, implying $\boldsymbol{u}\in \mathscr{M}$ and $p$ satisfying the relevant Poisson equation. The space of general flow fields $\mathscr{P}$ is endowed with the real-valued inner product

\begin{equation} \left\langle \begin{bmatrix} \boldsymbol{v}_1\\ q_1 \end{bmatrix},\begin{bmatrix} \boldsymbol{v}_2\\ q_2 \end{bmatrix}\right\rangle =\int_{\varOmega}\left(\boldsymbol{v}_1\boldsymbol{\cdot}\boldsymbol{v}_2+q_1q_2\right)\mathrm{d}\boldsymbol{x}. \tag{3.9} \end{equation}

Here, $\boldsymbol{\cdot}$ is the conventional Euclidean inner product in $\mathbb{R}^3$. Physically admissible velocity and pressure fields form the following subset of the general flow fields:

\begin{equation} \mathscr{P}_p=\left\{ \begin{bmatrix} \boldsymbol{u}\\ p \end{bmatrix}\in\mathscr{P}_0\left| \begin{array}{@{}c@{}} \boldsymbol{\nabla}\boldsymbol{\cdot}\boldsymbol{u}=0\\ \boldsymbol{\nabla}\boldsymbol{\cdot}\mathcal{F}(\boldsymbol{u},p) = 0\\ \mathcal{F}(\boldsymbol{u},p)\big|_{y=\pm1} = \boldsymbol{0} \end{array}\right.\right\}, \tag{3.10} \end{equation}

where $\mathscr{P}_0$ is the subset of $\mathscr{P}$ whose vector-valued component satisfies the homogeneous Dirichlet BC at the walls,

\begin{equation} \mathscr{P}_0=\left\{ \begin{bmatrix} \boldsymbol{v}\\ q \end{bmatrix}\in\mathscr{P}\left| \begin{array}{@{}c@{}} \boldsymbol{v}(y=\pm 1)=\boldsymbol{0} \end{array}\right.\right\}. \tag{3.11} \end{equation}

Equilibrium solutions of the NSE are $[\boldsymbol{u}^\ast,p^\ast ]\in \mathscr{P}_p$ for which

\begin{equation} \mathcal{F}(\boldsymbol{u}^\ast,p^\ast)=\boldsymbol{0}. \tag{3.12} \end{equation}

We aim to impose the zero-divergence constraint together with the defining property of an equilibrium solution via the variational minimisation discussed in § 2. To that end, we consider an evolution in the space of general flow fields $\boldsymbol {U}=[\boldsymbol {v},q]\in \mathscr {P}_0$ in which the velocity and the pressure component are evolved independently. A flow field $\boldsymbol {U}\in \mathscr {P}_0$ does not necessarily satisfy either the defining property of an equilibrium solution or the zero-divergence constraint. Therefore, we define the residual field $\boldsymbol {R}\in \mathscr {P}$ associated with a general flow field as

and the cost function $J^2$ as

At the global minima of the cost function, $J^2=0$, the defining property of an equilibrium solution (3.12) and the incompressibility constraint (3.2) are both satisfied. The operator $\boldsymbol {G}=[\boldsymbol {g}_1,g_2]$ acting on general flow fields $\boldsymbol {U}=[\boldsymbol {v},q]\in \mathscr {P}_0$ is constructed such that an equilibrium solution $[\boldsymbol {u}^\ast,p^\ast ]$ is obtained by evolving the variational dynamics

The operator $\boldsymbol {G}$ is derived following the adjoint-based method described in § 2 to guarantee the monotonic decrease of the cost function along trajectories of the variational dynamics (3.15).
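As a finite-dimensional illustration of this guarantee, the following minimal Python sketch replaces the flow field by a vector in $\mathbb{R}^2$ and the adjoint operator by the transpose of the Jacobian of a residual. The function `residual`, its Jacobian, the initial guess and the step size are hypothetical choices made for this example only and are not taken from the NSE formulation. The sketch merely shows that evolving along minus the adjoint applied to the residual lowers the cost at every step, provided the step is small enough.

```python
# Toy analogue of adjoint descent: dU/dtau = -J(U)^T R(U) lowers |R|^2.
# The residual below is an assumed example, not the NSE residual.

def residual(u):
    """Assumed toy residual R(U); its zeros play the role of equilibria."""
    return [u[0] ** 2 + u[1] ** 2 - 1.0, u[0] - u[1]]

def adjoint_direction(u):
    """Return G = -J(U)^T R(U), where J is the Jacobian of the residual."""
    r = residual(u)
    jac = [[2.0 * u[0], 2.0 * u[1]],
           [1.0, -1.0]]
    return [-(jac[0][i] * r[0] + jac[1][i] * r[1]) for i in range(2)]

def cost(u):
    """Cost J^2 = |R(U)|^2."""
    return sum(ri * ri for ri in residual(u))

def descend(u0, dtau=0.05, n_steps=200):
    """Forward Euler integration in the fictitious time; records the cost."""
    u = list(u0)
    costs = [cost(u)]
    for _ in range(n_steps):
        g = adjoint_direction(u)
        u = [ui + dtau * gi for ui, gi in zip(u, g)]
        costs.append(cost(u))
    return u, costs

u_final, costs = descend([1.0, 0.2])
```

Inspecting `costs` shows a non-increasing sequence, and `u_final` approaches a zero of the toy residual. In the infinite-dimensional setting the role of the Jacobian transpose is played by the adjoint operator derived in the next subsection.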

3.3. Adjoint operator for the NSE

The variational dynamics (3.15) must ensure that the flow field $\boldsymbol {U}$ remains within $\mathscr {P}_0$, thus $\boldsymbol {U}$ is periodic in $x$ and $z$, and its velocity component $\boldsymbol {v}$ takes zero value at the walls for all $\tau$. In order for these properties of $\boldsymbol {U}$ to be preserved under the variational dynamics, the operator $\boldsymbol {G}$ must be periodic in $x$ and $z$, and $\boldsymbol {g}_1=\partial \boldsymbol {v}/\partial \tau$ must take zero value at the walls, meaning that $\boldsymbol {G}\in \mathscr {P}_0$. In addition, we choose the residual $\boldsymbol {R}$ to lie within $\mathscr {P}_0$. The periodicity of $\boldsymbol {R}$ in $x$ and $z$ results automatically from the spatial periodicity of $\boldsymbol {U}$ in these two directions. However, at the walls we enforce the condition $\boldsymbol {r}_1(\boldsymbol {v},q)|_{y=\pm 1}=\mathcal {F}(\boldsymbol {v},q)|_{y=\pm 1}=\boldsymbol {0}$. With the choice of $\boldsymbol {U},\boldsymbol {R},\boldsymbol {G}\in \mathscr {P}_0$, the flow field remains within $\mathscr {P}_0$ as desired. Following this choice, all the boundary terms resulting from partial integrations in the derivation of the adjoint operator cancel out (see Appendix A), and the adjoint of the directional derivative of $\boldsymbol {R}(\boldsymbol {U})$ along $\boldsymbol {G}$ is obtained as

Therefore, with $\boldsymbol {G}=-\mathscr {L}^{\dagger} (\boldsymbol {U};\boldsymbol {R})$, the variational dynamics takes the form

Equation (3.18) is fourth order with respect to $\boldsymbol {v}$, and (3.19) is second order with respect to $q$. Therefore, four BCs for each component of $\boldsymbol {v}$ and two BCs for $q$ are required in the inhomogeneous direction $y$. The choice of $\boldsymbol {U}\in \mathscr {P}_0$ implies $\boldsymbol {v}=\boldsymbol {0}$, and the choice of $\boldsymbol {R}\in \mathscr {P}_0$ implies $\boldsymbol {r}_1=\mathcal {F}(\boldsymbol {v},q)=\boldsymbol {0}$ at each wall. Consequently, the adjoint-descent dynamics requires two additional wall BCs in order to be well-posed. As the additional BCs, we impose $\boldsymbol {\nabla }\boldsymbol {\cdot }\boldsymbol {v}=0$ at each wall. Therefore, the adjoint-descent dynamics is subject to the following BCs:

where the BC (3.23) is $\boldsymbol {r}_1=\mathcal {F}(\boldsymbol {v},q)=\boldsymbol {0}$, and the BC (3.24) is $\boldsymbol {\nabla }\boldsymbol {\cdot }\boldsymbol {v}=0$ at the walls obtained by substituting $\boldsymbol {v}(y=\pm 1)=\boldsymbol {0}$ in the definitions of $\mathcal {F}(\boldsymbol {v},q)$ and $\boldsymbol {\nabla }\boldsymbol {\cdot }\boldsymbol {v}$, respectively. The choice of the additional BCs is consistent with the properties of the state space of the NSE, and is physically meaningful. Note, however, that the BC (3.24) does not need to be enforced explicitly during the derivation of the adjoint operator. In the absence of solid walls in a doubly periodic 2-D or a triply periodic 3-D domain, the BCs (3.22)–(3.24) do not apply. Instead, the fields are subject to periodic BCs only.

Numerically imposing the BCs (3.22)–(3.24) while evolving (3.18) and (3.19) forwards in the fictitious time is not straightforward. Consequently, instead of advancing the derived variational dynamics directly, we constrain the adjoint-descent dynamics to the subset of physical flow fields $\mathscr {P}_p$. Within this subset, pressure does not evolve independently but satisfies the pressure Poisson equation. Thereby, we obtain an evolution equation for the velocity within the state space of the NSE. This allows us to employ the influence matrix (IM) method (Kleiser & Schumann 1980) to integrate the constrained adjoint-descent dynamics.

3.4. Variational dynamics constrained to the subset of physical flow fields

To obtain a numerically tractable variational dynamics, we constrain the adjoint-descent dynamics (3.18)–(3.24) to the subset of physical flow fields $\mathscr {P}_p$. Within $\mathscr {P}_p$, the velocity component $\boldsymbol {u}$ is divergence-free over the entire domain. In addition, the pressure component $p$ is no longer governed by an explicit evolution equation, but by a Poisson equation with a velocity-dependent source term. Let $p = \mathcal {P}[\boldsymbol {u}]$ denote the solution to the Poisson equation yielding pressure associated with an instantaneous divergence-free velocity $\boldsymbol {u}$. In order for $\boldsymbol {u}$ to remain divergence-free, $\boldsymbol {g}_1$ needs to be projected onto the space of divergence-free fields, yielding the evolution

where $\mathbb {P}$ denotes the projection operator. The argument of the operator $\mathbb {P}$ is the right-hand side of (3.18) with $r_2=0$ and $\boldsymbol {\nabla } r_2=\boldsymbol {0}$ that result from the zero divergence of $\boldsymbol {u}$. According to Helmholtz's theorem, a smooth 3-D vector field can be decomposed into divergence-free and curl-free components. Thus $\boldsymbol {g}_1=\partial \boldsymbol {u}/\partial \tau$ is decomposed as $\boldsymbol {g}_1=\boldsymbol {f} - \boldsymbol {\nabla } \phi$, where $\boldsymbol {f}=\mathbb {P}\{\boldsymbol {g}_1\}$ is the divergence-free component, and $\phi$ is the scalar potential whose gradient gives the curl-free component. Therefore, the evolution of the divergence-free velocity is governed by

subject to

where $\boldsymbol {r}_1=\boldsymbol {r}_1(\boldsymbol {u},\mathcal {P}[\boldsymbol {u}])$ and thus the BC (3.31) is satisfied automatically. It is necessary to verify that the constrained variational dynamics still guarantees a monotonic decrease of the cost function. For $\boldsymbol {U}\in \mathscr {P}_p$, the scalar component of the steepest descent direction, $g_2$, vanishes (see (3.19)). Therefore, according to the definition of the inner product on $\mathscr {P}_p$ (3.9), it is sufficient to verify that $\int _\varOmega (\boldsymbol {f}\boldsymbol {\cdot }\boldsymbol {g}_1)\,\mathrm {d}\boldsymbol {x}=\left \langle \boldsymbol {f},\boldsymbol {g}_1\right \rangle _\mathscr {M}\geq 0$. The Helmholtz decomposition is an orthogonal decomposition with respect to the $L_2$ inner product defined on the state space of the NSE, $\left \langle \boldsymbol {f},\boldsymbol {\nabla }\phi \right \rangle _\mathscr {M}= 0$. Therefore, $\left \langle \boldsymbol {f},\boldsymbol {g}_1\right \rangle _\mathscr {M}=\left \langle \boldsymbol {f},\boldsymbol {f}\right \rangle _\mathscr {M}-\left \langle \boldsymbol {f},\boldsymbol {\nabla }\phi \right \rangle _\mathscr {M}=\|\boldsymbol {f}\|^2_\mathscr {M}\geq 0$, thus the evolution of $\boldsymbol {u}$ along $\boldsymbol {f}$ guarantees the monotonic decrease of the cost function, as desired.
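The orthogonality argument can be checked on a single Fourier mode. The sketch below assumes a fully periodic domain, in which the projection onto divergence-free fields acts on each wavevector separately; it therefore ignores the wall BCs that are present in the wall-bounded problem. The function names and the sample wavevector and amplitude are hypothetical and chosen for illustration only.

```python
# Helmholtz split of one Fourier amplitude in a fully periodic domain
# (assumption: no walls). g_hat = f_hat + curl-free part, with k . f_hat = 0.

def project_divergence_free(k, g_hat):
    """Remove the component of g_hat parallel to the real wavevector k."""
    k2 = sum(ki * ki for ki in k)
    if k2 == 0.0:
        return list(g_hat)  # the mean mode carries no divergence
    k_dot_g = sum(ki * gi for ki, gi in zip(k, g_hat))
    return [gi - ki * k_dot_g / k2 for ki, gi in zip(k, g_hat)]

def inner(a, b):
    """Hermitian inner product of two complex 3-vectors."""
    return sum(ai * bi.conjugate() for ai, bi in zip(a, b))

k = (1.14, 2.0, 2.5)                      # sample wavevector (illustrative)
g_hat = [1.0 + 2.0j, -0.5j, 0.3 + 0.0j]   # sample amplitude of g_1 (illustrative)

f_hat = project_divergence_free(k, g_hat)
grad_part = [gi - fi for gi, fi in zip(g_hat, f_hat)]  # curl-free remainder

divergence = sum(ki * fi for ki, fi in zip(k, f_hat))  # proportional to k . f_hat
```

Because the curl-free remainder is parallel to the wavevector, `inner(f_hat, grad_part)` vanishes up to round-off and `inner(f_hat, g_hat)` reduces to the squared norm of `f_hat`, which is the mode-wise form of the inequality verified above.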

The variational dynamics (3.26)–(3.31) is equivariant under continuous translations in the periodic directions $x$ and $z$. Furthermore, one can verify through simple calculations that this dynamics is also equivariant under the action of any reflection or rotation permitted by the laminar base velocity field $\boldsymbol {u}_b$. Consequently, the symmetry group generated by translations, reflections and rotations in the obtained variational dynamics is identical to that of the NSE (3.1)–(3.5). Therefore, to construct equilibria within a particular symmetry-invariant subspace of the NSE, one can use initial conditions from the same symmetry-invariant subspace to initialise the variational dynamics, and the variational dynamics preserves the symmetries of the initial condition.

In the variational dynamics, the scalar field $\phi$ plays a role analogous to the pressure $p$ in the incompressible NSE. The scalar fields $\phi$ and $p$ adjust themselves to the instantaneous physical velocity $\boldsymbol {u}$ such that $\boldsymbol {\nabla }\boldsymbol {\cdot }\boldsymbol {u}=0$ and $\boldsymbol {u}(y=\pm 1)=\boldsymbol {0}$ are preserved under the evolution with the fictitious time $\tau$ and the physical time $t$, respectively. Similar to the pressure in the NSE, $\phi$ satisfies a Poisson equation with a velocity-dependent source term. Solving the Poisson equation for $\phi$ and $p$ is a numerically challenging task in the present wall-bounded configuration (Rempfer 2006). Therefore, instead of attempting to compute $p$ and $\phi$ and thereby advancing the variational dynamics (3.26), we formulate the numerical integration scheme based on the IM method (Kleiser & Schumann 1980), where the no-slip BC and zero divergence are satisfied precisely, while the explicit construction of $p$ and $\phi$ is circumvented.

4. Numerical implementation

To advance the variational dynamics (3.26)–(3.31) without explicitly computing $\phi$ and $p$, we take advantage of the structural similarity between the variational dynamics and the NSE. In order to evaluate the right-hand side of (3.26), we consider the following partial differential equation for the residual field $\boldsymbol {r}_1$:

subject to

where $\boldsymbol {N}(\boldsymbol {r}_1)=(\boldsymbol {\nabla }\boldsymbol {r}_1)(\boldsymbol {u}_b+\boldsymbol {u})-(\boldsymbol {\nabla }(\boldsymbol {u}_b+\boldsymbol {u}))^\textrm {T}\boldsymbol {r}_1$, with both $\boldsymbol {u}$ and $\boldsymbol {u}_b$ being treated as constant fields. We use the dummy equation (4.1) to evaluate the right-hand side of (3.26) since the instantaneously evaluated right-hand sides of these two systems are identically equal. For brevity, we omit the periodic BCs in $x$ and $z$ since spatial periodicity can be enforced via spectral representation in an appropriate basis, such as a Fourier basis, that is periodic by construction. Equation (4.1) together with the BC (4.2) and the zero-divergence constraint (4.3) resembles the structure of the incompressible NSE:

which is subject to

with $\boldsymbol {M}(\boldsymbol {u})=-(\boldsymbol {u}_b\boldsymbol {\cdot }\boldsymbol {\nabla })\boldsymbol {u}-(\boldsymbol {u}\boldsymbol {\cdot }\boldsymbol {\nabla })\boldsymbol {u}_b-(\boldsymbol {u}\boldsymbol {\cdot }\boldsymbol {\nabla })\boldsymbol {u}$. The IM algorithm has been developed to numerically advance this particular type of dynamical system, which has a Laplacian linear term and gradient of a scalar on the right-hand side, and is subject to a zero-divergence constraint and homogeneous Dirichlet BCs at the walls. This algorithm enforces zero divergence and the homogeneous Dirichlet BCs within the time-stepping process, while the scalar field is handled implicitly and is not resolved as a separate variable (Kleiser & Schumann 1980; Canuto et al. 2007, § 3.4). We use the IM algorithm, and introduce the following five steps that advance $\boldsymbol {u}$ under the variational dynamics (3.26)–(3.31) for one time step of size $\Delta \tau$.

(i) The current velocity field $\boldsymbol {u}$ that satisfies $\boldsymbol {\nabla }\boldsymbol {\cdot }\boldsymbol {u}=0$ and $\boldsymbol {u}(y=\pm 1)=\boldsymbol {0}$ is advanced under the NSE dynamics for one physical time step $\Delta t$ using the IM algorithm. This yields the updated velocity $\boldsymbol {u}^{\Delta t}$, where the IM algorithm ensures $\boldsymbol {\nabla }\boldsymbol {\cdot }\boldsymbol {u}^{\Delta t}=0$ and $\boldsymbol {u}^{\Delta t}(y=\pm 1)=\boldsymbol {0}$.

(ii) The residual field $\boldsymbol {r}_1$, which is by definition the right-hand side of the NSE (3.1), is approximated via finite differences:

\begin{equation} \boldsymbol{r}_1=\dfrac{\partial\boldsymbol{u}}{\partial t}\approx\dfrac{\boldsymbol{u}^{\Delta t}-\boldsymbol{u}}{\Delta t}. \tag{4.7} \end{equation}

Since both $\boldsymbol {u}$ and $\boldsymbol {u}^{\Delta t}$ are divergence-free and satisfy homogeneous Dirichlet BCs at the walls, $\boldsymbol {\nabla }\boldsymbol {\cdot }\boldsymbol {r}_1=0$ and $\boldsymbol {r}_1(y=\pm 1)=\boldsymbol {0}$.

(iii) The current residual field $\boldsymbol {r}_1$ is advanced under the dummy dynamics (4.1)–(4.3) for one time step $\Delta \hat {\tau }$ using the IM algorithm, which yields $\boldsymbol {r}_1^{\Delta \hat {\tau }}$. The IM algorithm ensures that $\boldsymbol {\nabla }\boldsymbol {\cdot }\boldsymbol {r}_1^{\Delta \hat {\tau }}=0$ and $\boldsymbol {r}_1^{\Delta \hat {\tau }}(y=\pm 1)=\boldsymbol {0}$.

(iv) The right-hand side of (4.1) is approximated via finite differences:

\begin{equation} \boldsymbol{f}=\dfrac{\partial\boldsymbol{r}_1}{\partial\hat{\tau}} \approx \dfrac{\boldsymbol{r}_1^{\Delta \hat{\tau}}-\boldsymbol{r}_1}{\Delta \hat{\tau}}. \tag{4.8} \end{equation}

Since both $\boldsymbol {r}_1$ and $\boldsymbol {r}_1^{\Delta \hat {\tau }}$ are divergence-free and satisfy homogeneous Dirichlet BCs at the walls, $\boldsymbol {\nabla }\boldsymbol {\cdot }\boldsymbol {f}=0$ and $\boldsymbol {f}(y=\pm 1)=\boldsymbol {0}$.

(v) Having approximated $\boldsymbol {f}$, which is the descent direction at the current fictitious time $\tau$, we advance the velocity for one step of size $\Delta \tau$ using

\begin{equation} \boldsymbol{u}^{\Delta\tau} = \boldsymbol{u} + \Delta\tau\,\boldsymbol{f}. \tag{4.9} \end{equation}

Since both $\boldsymbol {u}$ and $\boldsymbol {f}$ are divergence-free and take zero value at the walls, the updated velocity satisfies $\boldsymbol {\nabla }\boldsymbol {\cdot }\boldsymbol {u}^{\Delta \tau }=0$ and $\boldsymbol {u}^{\Delta \tau }(y=\pm 1)=\boldsymbol {0}$.
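The five steps can be sketched on a toy ordinary differential equation. In this hypothetical Python example, `step_physical` and `step_dummy` stand in for the IM time-steppers of the NSE and of the dummy dynamics (4.1); both are plain forward Euler steps here, and the dummy dynamics is assumed to take the finite-dimensional form $\mathrm{d}\boldsymbol{r}/\mathrm{d}\hat{\tau}=-J(\boldsymbol{u})^{\mathrm{T}}\boldsymbol{r}$ with $\boldsymbol{u}$ frozen, where $J$ is the Jacobian of the toy right-hand side. The toy system, step sizes and initial guess are illustrative choices and are unrelated to any actual flow solver.

```python
# Toy version of steps (i)-(v); all functions and parameters are assumptions.

def rhs(u):
    """Toy right-hand side; equilibria at (0, 0) and (+-1, 0), none stable."""
    return [u[0] - u[0] ** 3, u[1]]

def jacobian_T_times(u, r):
    """Product J(u)^T r for the toy system (J is diagonal here)."""
    return [(1.0 - 3.0 * u[0] ** 2) * r[0], r[1]]

def step_physical(u, dt):
    """Stand-in for one time step of the physical dynamics (forward Euler)."""
    return [ui + dt * ri for ui, ri in zip(u, rhs(u))]

def step_dummy(r, u, dtau_hat):
    """Stand-in for one step of the dummy dynamics with u held fixed."""
    g = jacobian_T_times(u, r)
    return [ri - dtau_hat * gi for ri, gi in zip(r, g)]

def adjoint_descent_step(u, dt, dtau_hat, dtau):
    u_dt = step_physical(u, dt)                            # step (i)
    r1 = [(a - b) / dt for a, b in zip(u_dt, u)]           # step (ii)
    r1_new = step_dummy(r1, u, dtau_hat)                   # step (iii)
    f = [(a - b) / dtau_hat for a, b in zip(r1_new, r1)]   # step (iv)
    return [ui + dtau * fi for ui, fi in zip(u, f)]        # step (v)

def find_equilibrium(u0, n_steps=1000, dt=1e-3, dtau_hat=1e-3, dtau=0.05):
    u = list(u0)
    for _ in range(n_steps):
        u = adjoint_descent_step(u, dt, dtau_hat, dtau)
    return u

u_eq = find_equilibrium([0.8, 0.5])
```

Starting from the guess `[0.8, 0.5]`, the iteration approaches the equilibrium at $(1, 0)$, which is unstable under the toy physical dynamics but attracting under the descent. The descent direction is obtained purely from differences of time-stepper outputs, mirroring how steps (ii) and (iv) reuse existing time-stepping machinery.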

The finite differences (4.7) and (4.8) affect the accuracy of time-stepping the variational dynamics, but they do not interfere with imposing the BC $\boldsymbol {u}(y=\pm 1)=\boldsymbol {0}$ and the constraint $\boldsymbol {\nabla }\boldsymbol {\cdot }\boldsymbol {u}=0$ within machine precision. The low accuracy of the first-order finite differences does not affect the accuracy of the obtained equilibrium solution since both $\boldsymbol {r}_1$ and $\boldsymbol {f}$ tend to zero when an equilibrium is approached. We are also not concerned about the low accuracy of the first-order forward Euler update rule (4.9) since the objective is to obtain the attracting equilibria of the adjoint-descent dynamics reached at $\tau \to \infty$. Therefore, the introduced procedure is able to construct equilibrium solutions within machine precision.

We implement this procedure in Channelflow 2.0, an open-source software package for numerical analysis of the incompressible NSE in wall-bounded domains. In this software, an instantaneous divergence-free velocity field is represented by Chebyshev expansion in the wall-normal direction $y$, and Fourier expansion in the periodic directions $x$ and $z$:

\begin{equation} u_j(x,y,z)=\sum_{\substack{m,p\in\mathbb{Z}\\n\in\mathbb{W}}}\hat{u}_{m,n,p,j}\,T_n(y) \exp({2{\rm \pi} {\rm i}\left({mx}/{L_x}+{pz}/{L_z}\right)}),\quad j=1,2,3, \end{equation}

where $T_n(y)$ is the $n$th Chebyshev polynomial of the first kind, $\textrm {i}$ is the imaginary unit, and indices $1,2,3$ specify directions $x$, $y$ and $z$, respectively. Channelflow 2.0 employs the IM algorithm for time-marching the NSE (4.4). With modification for the nonlinear term $\boldsymbol {N}(\boldsymbol {r}_1)$, (4.1) can also be advanced in time.
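To make the spectral expansion concrete, the sketch below evaluates one velocity component at a point from a small set of coefficients. It is a hypothetical, pure-Python illustration: the dictionary layout, the function names and the sample coefficients are assumptions and do not reflect the data structures or API of Channelflow 2.0.

```python
import cmath
import math

def chebyshev_T(n, y):
    """Chebyshev polynomial of the first kind, T_n(y) = cos(n arccos y), |y| <= 1."""
    return math.cos(n * math.acos(y))

def evaluate_component(coeffs, x, y, z, Lx, Lz):
    """Sum c * T_n(y) * exp(2 pi i (m x / Lx + p z / Lz)) over (m, n, p) -> c."""
    total = 0.0 + 0.0j
    for (m, n, p), c in coeffs.items():
        phase = 2.0 * math.pi * (m * x / Lx + p * z / Lz)
        total += c * chebyshev_T(n, y) * cmath.exp(1j * phase)
    return total

# Sample periodic lengths (the values used for PCF in section 5.1).
Lx, Lz = 2.0 * math.pi / 1.14, 2.0 * math.pi / 2.5

# Illustrative coefficients for (T_0 - T_2)(y) * cos(2 pi x / Lx).
# T_0 - T_2 = 2 - 2 y^2 vanishes at y = +-1, so the field obeys the wall BC.
coeffs = {(1, 0, 0): 0.5, (-1, 0, 0): 0.5, (1, 2, 0): -0.5, (-1, 2, 0): -0.5}

mid_value = evaluate_component(coeffs, 0.0, 0.0, 0.0, Lx, Lz)
wall_value = evaluate_component(coeffs, 0.3, 1.0, 0.7, Lx, Lz)
```

Pairing the coefficients of $(m,n,p)$ and $(-m,n,-p)$ as complex conjugates keeps the evaluated field real, and combining Chebyshev modes so that they cancel at $y=\pm 1$ is one simple way to build a test field that respects the homogeneous Dirichlet BC.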

5. Application to plane Couette flow

We apply the introduced variational method to PCF, the flow between two parallel plates moving at equal and opposite velocities, which is governed by the general NSE (3.1)–(3.5) with the laminar base flow $\boldsymbol {u}_b=[y,0,0]^\textrm {T}$. Due to the periodicity in $x$ and $z$, PCF is equivariant under continuous translations in these directions:

where $u$, $v$ and $w$ are the components of $\boldsymbol {u}$ in the $x$, $y$ and $z$ directions, respectively. In addition, PCF is equivariant under two discrete symmetries: rotation around the line ${x=y=0}$,

and reflection with respect to the plane $z=0$,

The variational dynamics (3.26)–(3.31) is verified easily to be equivariant under the same continuous and discrete symmetry operators. Therefore, the variational dynamics preserves these symmetries, if present in the initial condition. In the following, we demonstrate the convergence of multiple equilibrium solutions from guesses both within a symmetry-invariant subspace and outside.
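For orientation, the symmetry operators described in words above can be written out explicitly. The following is only a sketch in a commonly used notation: the shift symbols $\ell_x$ and $\ell_z$ and the sign convention of the translation are assumptions, whereas the action of the rotation by ${\rm \pi}$ about the line ${x=y=0}$ and of the reflection about the plane $z=0$ follows from their geometric description.

\begin{align} \tau(\ell_x,\ell_z) &: [u,v,w](x,y,z)\mapsto[u,v,w](x+\ell_x,\,y,\,z+\ell_z),\\ \sigma_1 &: [u,v,w](x,y,z)\mapsto[-u,-v,w](-x,-y,z),\\ \sigma_2 &: [u,v,w](x,y,z)\mapsto[u,v,-w](x,y,-z). \end{align}

Under $\sigma_1$ the laminar base flow $\boldsymbol {u}_b=[y,0,0]^\textrm {T}$ is mapped onto itself, since both $u$ and $y$ change sign, which is consistent with PCF being equivariant under this rotation.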

5.1. Results

We search for equilibria of PCF at $Re=400$ within a domain of dimensions $L_x=2{\rm \pi} /1.14$ and $L_z=2{\rm \pi} /2.5$ (see § 3.1). This domain was first studied by Waleffe (2002). Several equilibrium solutions of PCF in this domain at $Re=400$ were computed by Gibson et al. (2008, 2009). These are available in the database on channelflow.org. Here, the flow field is discretised with $N_y=31$ collocation points in the wall-normal direction, and ${N_x=N_z=32}$ points in the lateral directions. The adjoint-descent dynamics is integrated numerically by the forward Euler scheme (4.9) with $\Delta \tau =0.03$, and $\boldsymbol {r}_1$ and $\boldsymbol {f}$ are approximated via finite differences (4.7) and (4.8) with step sizes $\Delta t=0.25$ and $\Delta \hat {\tau }=0.25$, respectively (see § 4). An accurate finite-difference approximation of $\boldsymbol {r}_1$ and $\boldsymbol {f}$ suggests choosing $\Delta t$ and $\Delta \hat {\tau }$ as small as possible. However, smaller values for these step sizes result in a less stable forward Euler integration scheme, requiring a smaller value of $\Delta \tau$ to remain stable. Since for an equilibrium solution $\boldsymbol {r}_1=\boldsymbol {f}=\boldsymbol {0}$, larger values of $\Delta t$ and $\Delta \hat {\tau }$ do not diminish the accuracy of the obtained equilibrium solution. Consequently, empirically we choose values for $\Delta t$ and $\Delta \hat {\tau }$ so that a reasonably large value for $\Delta \tau$ can be used.

To verify the scheme and its implementation, we converge the so-called ‘Nagata's lower branch’ equilibrium solution (Nagata 1990) at $Re=400$. As initial guess, we take an equilibrium solution on the same branch but at a significantly different $Re$. Nagata's lower branch solution at $Re=400$, continued from Nagata's original domain dimensions to those considered here, is available in the database on channelflow.org. We continue this equilibrium solution to $Re=230$, and use the resulting solution to initialise both the adjoint-descent variational method and the standard Newton iterations at $Re=400$. The standard Newton iterations, i.e. without optimisations such as hooksteps, fail to converge. However, the adjoint-descent variational method converges successfully to the equilibrium solution at $Re=400$ on the same branch.
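The contrast between plain Newton iterations and a descent on the squared residual can be illustrated on a scalar toy problem. The sketch below is not the PCF computation: the residual $f(x)=\arctan x$, the starting point and the step size are hypothetical choices, and the 'adjoint descent' reduces here to forward Euler integration of $\mathrm{d}x/\mathrm{d}\tau=-f'(x)\,f(x)$, the gradient flow of $J=f^2/2$.

```python
import math


def f(x):
    return math.atan(x)          # toy residual with a single root at x = 0


def df(x):
    return 1.0 / (1.0 + x * x)   # derivative of the residual


def newton(x, n_iter):
    """Plain Newton iterations without damping, line search or hookstep."""
    for _ in range(n_iter):
        x = x - f(x) / df(x)
    return x


def adjoint_descent(x, dtau, n_steps):
    """Forward Euler on dx/dtau = -df(x) * f(x), the gradient flow of J = f(x)**2 / 2."""
    for _ in range(n_steps):
        x = x - dtau * df(x) * f(x)
    return x


x0 = 2.0                                   # guess outside Newton's basin
x_newton = newton(x0, n_iter=5)
x_descent = adjoint_descent(x0, dtau=0.5, n_steps=200)
print(abs(x_newton), abs(x_descent))
```

From this guess the Newton iterates overshoot the root with growing amplitude, whereas the descent approaches $x=0$ monotonically, albeit with many more (cheap) steps. This mirrors, in a much simpler setting, the trade-off between fast local convergence and a large radius of convergence.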

Along the trajectory of the adjoint-descent dynamics, the cost function initially drops rapidly and subsequently decreases with an exponential rate, as shown in figure 2. The exponential decrease of the cost function is explained by the dynamical system picture of the adjoint descent: the adjoint-descent dynamics converges to a stable fixed point, hence the evolution is dominated by the slowest eigenmode of the linearised dynamics in the vicinity of that fixed point. The sharp initial drop and the following exponential decay of the cost function are reflected in fast and slow traversal, respectively, of the trajectory within the state space. Figure 3 presents a 2-D projection of the trajectory, with markers indicating that the majority of the trajectory is traversed quickly in the beginning of the integration, and the majority of the integration time is spent on the remaining, much shorter portion of the trajectory. For instance, the portion of the trajectory traversed during the first $1.2\times 10^6$ fictitious time units, which decreases the cost function from $J=5.9\times 10^{-3}$ to $J=10^{-5}$, is considerably longer than the remaining portion, which takes over $90\,\%$ of the integration time to be traversed. In figure 3, $P_1$ and $P_2$ are the real parts of $\hat {u}_{0,3,0,1}$ and $\hat {u}_{0,5,0,1}$, i.e. the coefficients of the third and fifth Chebyshev polynomials in the expansion of the mean streamwise velocity in $y$ (see (4.10)). The visualisation of the trajectory in different projections of the state space yields a similar observation.
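The two-stage behaviour described above, a sharp initial drop followed by exponential decay governed by the slowest eigenmode, can be reproduced with a small linear model. The sketch below assumes a hypothetical linearised residual $\boldsymbol {f}(\boldsymbol {u})=\boldsymbol {A}\boldsymbol {u}$ with a diagonal $3\times 3$ matrix; the matrix, step size and initial condition are illustrative and unrelated to the PCF computation.

```python
import numpy as np

# Hypothetical linearised residual f(u) = A @ u near an equilibrium at u = 0.
A = np.diag([0.1, 1.0, 3.0])
M = A.T @ A                       # descent operator: du/dtau = -M u
mu_min = np.linalg.eigvalsh(M).min()

dtau = 0.05
n_steps = 4000
u = np.array([1.0, 1.0, 1.0])
J = np.empty(n_steps)
for k in range(n_steps):
    r = A @ u
    J[k] = 0.5 * r @ r            # cost function J = ||f(u)||^2 / 2
    u = u - dtau * (A.T @ r)      # forward Euler step of the gradient flow

# Late-time slope of log J against fictitious time tau
tau = dtau * np.arange(n_steps)
slope = np.polyfit(tau[-1000:], np.log(J[-1000:]), 1)[0]
print(slope, -2.0 * mu_min)
```

The fast modes are damped within the first few fictitious time units, after which $\log J$ decreases linearly with a slope close to $-2\mu_{\min}$, where $\mu_{\min}$ is the smallest eigenvalue of $\boldsymbol {A}^\textrm {T}\boldsymbol {A}$. A widely separated spectrum therefore produces exactly the kind of long, slow tail seen in the convergence history.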

Figure 2. Convergence of the adjoint-descent variational method for constructing an equilibrium solution of the PCF. The minimisation of the cost function $J$ evolves the initial guess towards a true equilibrium solution at which $J=0$.

Figure 3. The trajectory of the adjoint-descent dynamics along which the cost function $J$ decreases monotonically, as shown in figure 2. The projection shows $P_2=\mathrm {Re}\{\hat {u}_{0,5,0,1}\}$ against $P_1=\mathrm {Re}\{\hat {u}_{0,3,0,1}\}$. The majority of the trajectory is traversed rapidly at the beginning, as indicated by a sharp drop of $J$ in figure 2, followed by a slow traversal of the remaining portion towards the asymptotic solution, reflected in figure 2 as an exponential decay of the cost function.

Nagata's lower branch equilibrium solutions are symmetric under shift-and-rotate symmetry $s_1=\tau (L_x/2,L_z/2)\,\sigma _1$,

and shift-and-reflect symmetry $s_2=\tau (L_x/2,0)\,\sigma _2$,

Therefore, the initial guess in the present example, namely Nagata's lower branch solution at $Re=230$, is symmetric under $s_1$ and $s_2$, both of which are preserved by the adjoint-descent dynamics. The velocity field remains symmetric under $s_1$ and $s_2$, without these symmetries being enforced explicitly during the forward integration, until the equilibrium solution on the same branch at $Re=400$ is converged.

To investigate further the robustness of the adjoint-descent variational method in converging successfully from inaccurate guesses, we initialise the method with guesses obtained from a direct numerical simulation. We construct a random divergence-free velocity field with $L_2$-norm $\|\boldsymbol {u}\|=0.2$, and time-march the NSE along a turbulent trajectory until the flow laminarises. The initial condition and therefore the entire trajectory are not symmetric under any of the symmetries allowed by the PCF. We extract the local extrema of $\|\boldsymbol {u}\|$ as a function of time $t$, where $\partial \|\boldsymbol {u}\|/\partial t=0$, as guesses for potential equilibrium solutions. Figure 4 shows $\|\boldsymbol {u}\|$ plotted against $t$, from which $26$ guesses are extracted. The standard Newton iterations do not converge starting from any of the guesses. With hookstep optimisation, five of the searches converge within $50$ Newton-GMRES-hookstep (NGh) iterations. The converged solutions include the trivial laminar solution $\boldsymbol {u}=\boldsymbol {0}$ as well as two non-trivial solutions EQ1 and EQ3 (see tables 1 and 2 for properties of the converged solutions). By integrating the adjoint-descent dynamics, $11$ of the guesses converge to an equilibrium solution. These solutions include the trivial solution as well as five non-trivial equilibria, EQ1 to EQ5 (see tables 1 and 2). Among these solutions, EQ1, EQ4 and EQ5 have been documented in the literature (Gibson et al. 2009). Yet, to the best of our knowledge, the equilibria labelled EQ2 and EQ3 have not been reported previously. Snapshots that lead to a successful search via either NGh iterations or the adjoint-descent algorithm are marked in figure 4.
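The extraction of guesses at the local extrema of $\|\boldsymbol {u}\|$ can be sketched as a search for sign changes in the discrete time derivative of a sampled norm signal. In the sketch below the helper name `local_extrema` is hypothetical, and the signal is a synthetic decaying oscillation standing in for the DNS time series; it is not data from the simulation.

```python
import numpy as np


def local_extrema(values):
    """Return indices of interior samples where the discrete slope changes sign."""
    slope = np.diff(values)
    sign_change = slope[:-1] * slope[1:] < 0.0
    return np.where(sign_change)[0] + 1


# Hypothetical stand-in for ||u||(t): a decaying oscillation, not DNS data.
t = np.linspace(0.0, 10.0, 2001)
norm_u = np.exp(-0.2 * t) * (1.0 + 0.5 * np.sin(2.0 * np.pi * t))
guess_indices = local_extrema(norm_u)
guess_times = t[guess_indices]
print(len(guess_indices))
```

Each returned index marks a stored snapshot at which the norm is momentarily stationary, so the corresponding velocity field would serve as an initial guess for the equilibrium search. On noisy signals some smoothing or a minimum separation between extrema would probably be needed, which this sketch omits.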

Figure 4. The $L_2$-norm of the velocity field against the physical time $t$ in direct numerical simulation from a random initial condition. The snapshots corresponding to the local extrema of