1. Introduction

Astronomers have learned a great deal about the Universe using data from large-scale direct imaging surveys of the sky: from the original photographic sky surveys carried out by dedicated Schmidt telescopes in both the northern and southern hemispheres (Reid & Djorgovski 1993) to the Sloan Digital Sky Survey, made with a 2.5-m telescope with a very wide field to provide high-quality digital data of a significant part of the northern sky (Gunn et al. 2006). With the 4.1-m Visible and Infrared Survey Telescope for Astronomy (VISTA; Emerson & Sutherland 2010) and the 2.5-m VLT Survey Telescope (VST; Schipani et al. 2012), the European Southern Observatory (ESO) began operating two new wide-field survey facilities that are imaging the complete southern sky, introducing a new mode of ESO public surveys that make reduced data and a number of high-level data products available to the community. More recently, the Dark Energy Survey (DES; https://www.darkenergysurvey.org/) uses a modified 4-m class telescope in Chile with a large detector area to take deep images of a substantial part of the southern hemisphere. More and bigger surveys are planned, such as the Large Synoptic Survey Telescope (LSST; Ivezić et al. 2008), which is in an early stage of construction and will use an 8-m class telescope, also located in Chile, with a 3.5° field of view to image large areas of the sky repeatedly with relatively short exposures. Surveying much of the sky every few nights can lead to the detection of exploding supernovae in distant galaxies as well as Earth-approaching objects.

It is the increasing sophistication of digital, principally charge-coupled device (CCD), technology that has enabled these surveys to progress by generating large volumes of data so easily. CCDs provided high-quality, repeatable electronic detectors with detective quantum efficiency approaching 100% at best, broad spectral response, and the capability of integrating signals accurately over long periods of time, as well as being available in large formats. What has not changed in the last 70 yr is the capacity of these surveys to deliver images any sharper than those of the original photographic sky survey. Although the highest redshift probed by wide-field surveys has increased from z ∼ 0.2 to z > 11, the vast majority of the most distant objects are essentially unresolved by ground-based telescopes. Even within our own galaxy there are many regions where stars are so close together on the sky as to be badly confused. Advances in adaptive optics technologies have allowed higher-resolution images to be obtained, but only over very small fields of view, a few arcseconds across at best; they are therefore of no help in delivering sharper images in wide-field surveys. It is this deficiency that GravityCam is intended to overcome. GravityCam is not intended to produce diffraction-limited images, but simply ones that are sharper than can normally be obtained from the ground. This will particularly allow imaging large areas of the inner Milky Way and nearby galaxies such as the Magellanic Clouds with unprecedented angular resolution. These areas are abundant in bright stars, which are necessary for image alignment. Moreover, by operating GravityCam at frame rates > 10 Hz, surveys at very high cadence will be enabled.

There are several key scientific programmes that will benefit substantially from such an instrument and are described below in more detail. They include the detection of extra-Solar planets and satellites down to Lunar mass by surveying tens of millions of stars in the bulge of our own galaxy, and the detection and mapping of the distribution of dark matter in distant clusters of galaxies by looking at the distortions in galaxy images. GravityCam can provide a unique new input by surveying millions of stars with high time resolution to enable asteroseismologists to better understand the structure of the interior of those stars. It can also allow a detailed survey of Kuiper belt objects (KBOs) via stellar occultations.

The GravityCam team is seeking funding for the instrument, following the first international GravityCam workshop, held at the Open University in June 2017.

This paper provides a detailed account of the technology and potential science cases of GravityCam, following and substantially elaborating on the brief overview previously provided by Mackay, Dominik, & Steele (2016). We give a technical overview of the instrument in Section 2, whereas Sections 3–5 present some examples of scientific breakthroughs that will be possible with GravityCam. Sections 6–9 give an in-depth description of the instrument, before we provide a summary and conclusions in Section 10.

2. Technical outline of GravityCam

2.1. High angular resolution with lucky imaging

The image quality of conventional integrating cameras is usually limited by atmospheric turbulence. Such turbulence has a power spectrum that is strongest on the largest scales (Fried 1978), so the largest single contribution to the blurring is the overall tip-tilt of the wavefront, which moves the image as a whole. One of the most straightforward ways to improve the angular resolution of images on a telescope is therefore to take images rapidly (in the 10–30 Hz range) and use the position of a bright object in the field to establish each frame's offset relative to some mean position, so that the frames can be shifted back into alignment before they are added. This technique almost completely eliminates the tip-tilt distortions caused by atmospheric turbulence. The next level of disturbance of the wavefront entering the telescope is defocus. Using the same procedure but adding together only the best and sharpest images is the method known as Lucky Imaging (Mackay et al. 2004). Even more demanding selection can ultimately give even higher improvement factors (e.g. Baldwin, Warner, & Mackay 2008), but the resolution resulting from less strict selection will often meet the requirements of many scientific applications.

Lucky imaging is already well established as an astronomical technique, with over 350 published papers mentioning ‘Lucky Imaging’ in the abstract, including over 40 from the Cambridge group; these include specific examples of results obtained with these systems (Law, Hodgkin, & Mackay 2006; Scardia et al. 2007; Mackay, Law, & Stayley 2008; Law et al. 2009; Faedi et al. 2013; Mackay 2013).

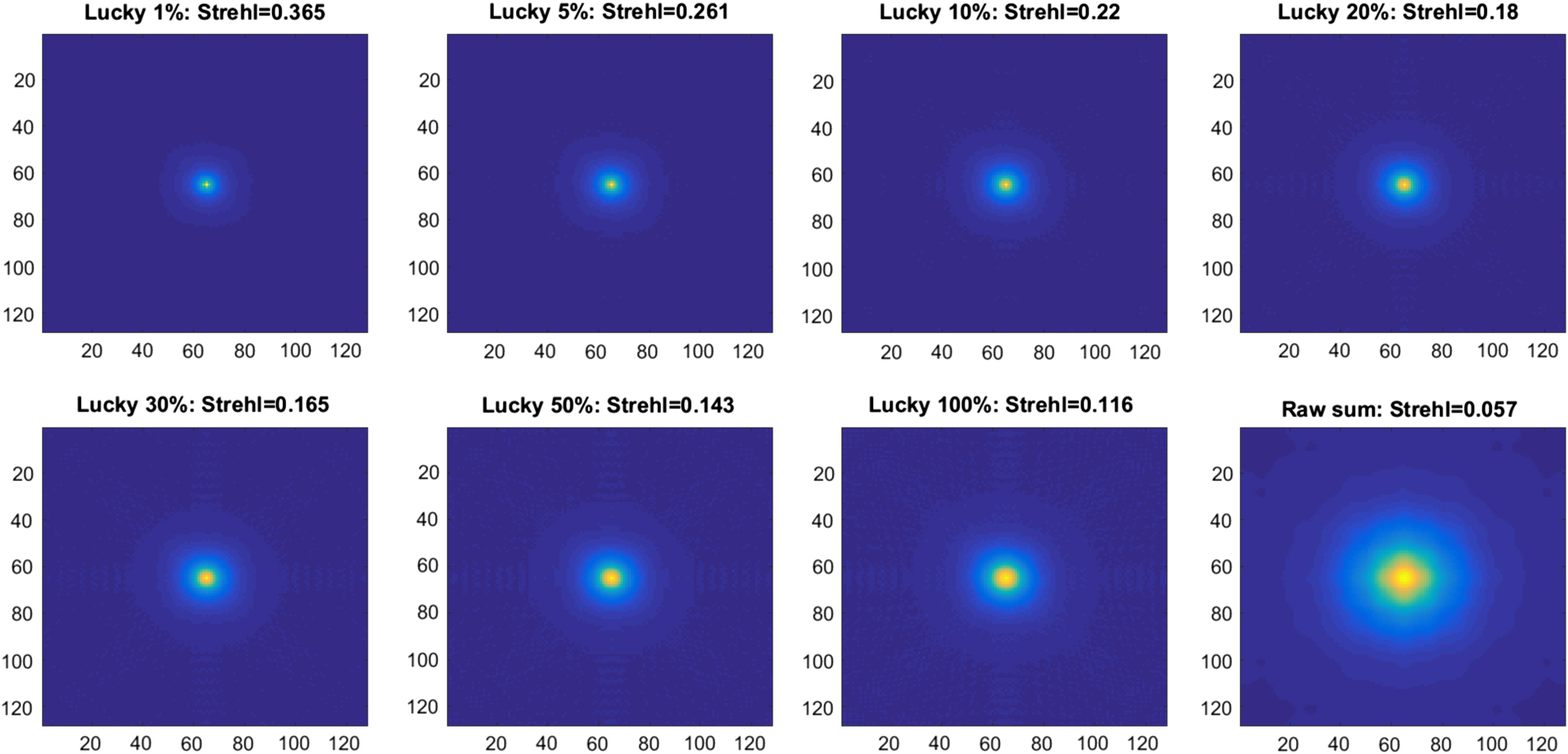

Lucky imaging works very well on small-diameter telescopes, yielding resolution similar to that of Hubble (∼0.1 arcsec) on Hubble-size (∼2.5 m) telescopes. With larger telescopes the chance of obtaining high-resolution images becomes smaller. Although it would be very convenient to achieve even higher resolution on bigger telescopes without any more effort, in practice other techniques, such as combining lucky imaging with low-order adaptive optics, need to be used; these are beyond the scope of this paper (Law et al. 2009). However, we propose siting GravityCam on a somewhat bigger telescope, such as the 3.6-m New Technology Telescope (NTT) at La Silla, Chile (Figure 1). This is an excellent site with median seeing of about 0.75 arcsec. From our experience on the NTT we find that 100% selection yields about 0.3 arcsec resolution, and 50% selection yields better than ∼0.2 arcsec (see Figure 2). The NTT has instrument slots at two Nasmyth foci with rapid switching between them, so that GravityCam can be installed concurrently with the SoXS instrument (Schipani et al. 2016).

Figure 1. Prototype of the GravityCam detector mounted on one of the Nasmyth platforms of the 3.6-m NTT of the European Southern Observatory at La Silla, Chile. This is one example of the instruments used on a number of telescopes to establish the credentials of the technique at good observing sites such as La Palma in the Canary Islands and La Silla in Chile. The system shown here consisted of a single EMCCD behind a simple two-prism atmospheric dispersion corrector (ADC), run in the standard lucky imaging mode. There is considerable space to mount an instrument on the telescope, which has extremely high optical quality and is located at a top astronomical site.

Figure 2. Simulated ESO NTT images about 3.5 arcsec × 3.3 arcsec in size, showing the improvement delivered by GravityCam compared with the equivalent raw image at the median La Silla seeing of 0.75 arcsec FWHM. The images show the result of conventional raw imaging (lower right) together with lucky imaging using a range of selection percentages between 1% and 100% for image sharpness. The point spread function consists of a narrow core with a faint extended halo. Lucky imaging concentrates light from the halo into the central core. We verified that this simulation, run for a 2.5-m telescope, reproduces very closely the results delivered on the Nordic Optical Telescope (NOT) on La Palma (Baldwin et al. 2008).

By removing the tip-tilt components, the phase variance of the wavefront entering the telescope is reduced by a factor of about 7. A theoretical study by Kaiser, Tonry, & Luppino (2000) predicts a resulting improvement factor of ∼1.7 on the seeing with 100% frame selection for a telescope such as the 3.6-m NTT with median seeing of 0.75 arcsec. However, this analysis assumes measuring the mean position of each image, while many years of experience by users of lucky imaging show that better results are obtained if the brightest pixel in each image (usually the centre of the brightest speckle rather than of the full image) is used as the reference position for the shift before addition. By adopting such a procedure, one can achieve a larger improvement factor of ∼2.5 with 100% frame selection on a 3.6-m telescope. As demonstrated by our observations, this increases to at least a factor of 3 with 20% selection and up to a factor of 4 with 1–2% selection. Our ‘sharper’ images are brighter in the core and narrower at half maximum, so that adjacent objects can be separated. With GravityCam we normally expect to operate with 100% selection, although the instrument may be used with smaller percentages to produce higher-resolution images at the cost of reduced efficiency. This performance is predicted to be achievable with significantly fewer than 100 photons per frame from the reference star.
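To make the quoted numbers concrete, the sketch below reproduces the "factor of about 7" from Noll's (1976) residual phase variances for Kolmogorov turbulence (standard values, not given in this text) and converts each improvement factor into an image FWHM by simply dividing the 0.75 arcsec median seeing by it. This is an illustrative calculation under those assumptions; it ignores the core-halo shape of the real PSF.

```python
import math

# Noll (1976) residual phase variance for Kolmogorov turbulence, in units of
# (D/r0)^(5/3) rad^2: with piston removed (Delta_1), and with piston, tip and
# tilt removed (Delta_3).
NOLL_PISTON_REMOVED = 1.0299
NOLL_TIP_TILT_REMOVED = 0.134


def tip_tilt_variance_reduction() -> float:
    """Factor by which removing tip-tilt lowers the wavefront phase variance."""
    return NOLL_PISTON_REMOVED / NOLL_TIP_TILT_REMOVED


def improved_fwhm(seeing_arcsec: float, improvement_factor: float) -> float:
    """Image FWHM after dividing the seeing by a quoted improvement factor."""
    return seeing_arcsec / improvement_factor


print(f"phase variance reduction: {tip_tilt_variance_reduction():.1f}")  # 7.7
for label, factor in [("Kaiser et al. (2000), 100%", 1.7),
                      ("brightest pixel, 100%", 2.5),
                      ("20% selection", 3.0),
                      ("1-2% selection", 4.0)]:
    print(f"{label}: {improved_fwhm(0.75, factor):.2f} arcsec")
```

The factor of ∼2.5 for 100% selection corresponds to the ∼0.3 arcsec resolution quoted for the NTT.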

It is worth noting that lucky imaging is a rather simple technique, while more sophisticated approaches have been proposed and tried in practice. Using a fast autoguider that measures the median position of a reference star and computes a correction, which is then used to adjust the telescope guiding, is relatively unsatisfactory because of the nature of the servo loop that carries this out. The atmospheric phase patterns change on short timescales, typically tens of milliseconds, so that moving the telescope quickly, even by a small amount, is very difficult. Techniques such as speckle imaging (e.g. Carrano 2002; Loktev et al. 2011) are also difficult to implement, particularly with faint reference stars and over a significant field of view. With GravityCam, we are constrained by the amount of image processing that can be carried out in real time, which limits the complexity of the technique that can be adopted.

2.2. Technical requirements

The core requirement for GravityCam is an array of detectors able to run at frame rates > 10 Hz with negligible readout noise, so that very faint targets can be detected. Until recently such detectors did not exist. However, the development of electron-multiplying CCDs (EMCCDs) changed the detector landscape substantially, and they were quickly taken up for astronomy (Mackay et al. 2001). EMCCDs have relatively high readout noise under conventional operation. However, a multiplication register within the EMCCD allows the signal to be amplified before the readout amplifier, so that the effective readout noise, expressed in equivalent photons, is substantially reduced. The gain may be set high enough to allow photon-counting operation. Even if photon counting is not needed, the read noise can be reduced to an acceptable level. More recently still, the development of high-performance Complementary Metal-Oxide-Semiconductor (CMOS) devices has moved forward very rapidly. These devices are capable of very low readout noise levels (∼1 electron RMS) while running at fast frame rates (10–30 Hz). For practical purposes CMOS devices have many of the excellent characteristics of CCDs, such as high quantum efficiency, good cosmetic quality, and high, linear signal capacity. They can also be butted together, allowing a large fraction of the telescope's field of view to be used. In comparison, EMCCDs typically have only about one-sixth of the detector package area sensitive to light. Although EMCCDs can be packed closely, their overall light-gathering capability is fairly limited because of the structures needed for high-speed CCD operation.
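The benefit of amplifying the signal before readout can be seen in a per-pixel signal-to-noise estimate. This is a minimal sketch, assuming the usual detector noise model in which the output read noise is divided by the EM gain and an EMCCD at high gain in analogue (non-photon-counting) mode adds an excess noise factor F² ≈ 2. The read noise of 50 e⁻, gain of 1000 and photon numbers are hypothetical illustrative values, not GravityCam specifications.

```python
import math


def snr(signal_e: float, background_e: float, read_noise_e: float,
        em_gain: float = 1.0, excess_noise_factor_sq: float = 1.0) -> float:
    """Per-pixel signal-to-noise ratio for a single frame.

    signal_e, background_e: mean detected electrons before multiplication.
    read_noise_e: RMS read noise of the output amplifier, in electrons.
    em_gain: electron-multiplication gain (1 for a conventional CCD or CMOS).
    excess_noise_factor_sq: F^2, about 2 for an EMCCD at high gain, else 1.
    """
    shot = excess_noise_factor_sq * (signal_e + background_e)
    read = (read_noise_e / em_gain) ** 2   # read noise referred to the input
    return signal_e / math.sqrt(shot + read)


# Hypothetical faint pixel: 5 e- of signal and 2 e- of sky in one frame.
print(snr(5, 2, read_noise_e=50))                         # EMCCD, gain off
print(snr(5, 2, read_noise_e=50, em_gain=1000,
          excess_noise_factor_sq=2))                      # EMCCD, high gain
print(snr(5, 2, read_noise_e=1))                          # ~1 e- RMS CMOS
```

With these assumed numbers the high-gain EMCCD improves on unamplified readout by more than a factor of 10, while a ∼1 e⁻ CMOS pixel does somewhat better still because it avoids the multiplication excess noise.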

Mounted on the 3.6-m NTT, GravityCam could cover 0.2 deg² in six pointings with EMCCDs or 0.17 deg² in a single pointing with CMOS devices.

Ray tracing of the NTT focal plane indicates field curvature with a radius of curvature of 1 900 mm. While this has no effect on axis, if left uncorrected the induced defocus at the field edge would degrade the image quality to a radius of 1.5 arcsec for 80% encircled energy. This can be partially corrected, to a radius of ∼0.6 arcsec, either by using a curved or stepped focal plane or by a simple single-element field corrector. Correcting the residual field-edge aberrations to the lucky imaging limit will require a more complex corrector (Wynne 1968).
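A rough geometric check of the field-edge defocus is sketched below: a flat detector focused on axis sits in front of the curved focal surface by the sagitta r²/2R, and a converging f/N beam has a footprint of diameter sag/N at that distance. The 3.58-m aperture and f/11 Nasmyth focal ratio are assumptions not stated in this text; the field edge is taken as 15 arcmin from the axis (half the ∼30 arcmin NTT field). The estimate ignores the central obstruction and all other aberrations, so it is only an order-of-magnitude comparison with the ray trace.

```python
import math

ARCSEC_PER_RAD = 206264.806


def edge_defocus_blur_arcsec(field_radius_arcsec: float, aperture_m: float,
                             f_ratio: float, curvature_radius_mm: float) -> float:
    """Geometric blur radius (arcsec) at a field point, for a flat detector
    focused on axis in front of a spherical focal surface.

    Ignores the central obstruction and all other aberrations."""
    focal_length_mm = aperture_m * 1000.0 * f_ratio
    plate_scale = ARCSEC_PER_RAD / focal_length_mm      # arcsec per mm
    r_mm = field_radius_arcsec / plate_scale            # distance from axis
    sag_mm = r_mm ** 2 / (2.0 * curvature_radius_mm)    # paraxial sagitta
    blur_diameter_mm = sag_mm / f_ratio                 # beam footprint on detector
    return 0.5 * blur_diameter_mm * plate_scale


# Assumed: 3.58-m aperture at f/11, field edge 15 arcmin (900 arcsec) off axis.
blur = edge_defocus_blur_arcsec(900.0, 3.58, 11.0, 1900.0)
r80 = math.sqrt(0.8) * blur   # radius enclosing 80% of a uniformly filled disc
print(f"blur radius {blur:.2f} arcsec, 80% EE radius {r80:.2f} arcsec")
```

Under these assumptions the 80% encircled-energy radius comes out at roughly 1.7 arcsec, the same order as the ray-traced 1.5 arcsec. The blur grows as the square of the distance from the axis, which is why the centre of the field is unaffected.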

Another factor to be taken into account is atmospheric dispersion. For example, at airmass 2.0 (60° zenith distance) this effect creates an image spread of ∼0.3 arcsec between 7 000 and 8 000 Å (Filippenko 1982). An ADC (Wynne & Worswick 1986) will therefore also be needed.
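The ∼0.3 arcsec figure can be checked with the refractive index of dry air at sea-level standard conditions (15 °C, 760 mmHg), using the Edlén-type formula quoted by Filippenko (1982) and the plane-parallel approximation for refraction, R ≈ (n − 1) tan z. This is a minimal sketch under those assumptions; it ignores water vapour and does not model the lower pressure at La Silla, which would reduce the result roughly in proportion to the pressure.

```python
import math

ARCSEC_PER_RAD = 206264.806


def refractivity_sea_level(wavelength_um: float) -> float:
    """(n - 1) of dry air at 15 C and 760 mmHg; wavelength in micrometres."""
    s2 = (1.0 / wavelength_um) ** 2
    return 1e-6 * (64.328 + 29498.1 / (146.0 - s2) + 255.4 / (41.0 - s2))


def differential_refraction_arcsec(wl1_um: float, wl2_um: float, airmass: float) -> float:
    """Refraction difference between two wavelengths (plane-parallel model)."""
    z = math.acos(1.0 / airmass)                     # zenith distance, radians
    dn = refractivity_sea_level(wl1_um) - refractivity_sea_level(wl2_um)
    return ARCSEC_PER_RAD * dn * math.tan(z)


print(f"{differential_refraction_arcsec(0.70, 0.80, 2.0):.2f} arcsec")
```

For 7 000 to 8 000 Å at airmass 2.0 this gives about 0.27 arcsec, consistent with the value quoted above; the spread vanishes at the zenith and grows as tan z.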

In concept, GravityCam is very simple: it is a wide-field imager using conventional silicon imaging detectors. By using lucky imaging, we can improve the angular resolution by a factor of 2.5–3 over that which a conventional long-exposure imaging system would give on the same telescope. How to achieve this resolution, how to achieve it over a wide field of view, and what photometric precision can be reached nevertheless require careful thought, further informed by detailed simulations.

Another instrument, the Adaptive Optics Lucky Imager (AOLI), has been under development at Cambridge and the IAC in Tenerife; it removes higher-order turbulence terms to give greater improvements in image quality (Mackay et al. 2012). A paper describing the much more complicated techniques needed for that instrument is currently in preparation, but the same simulation package can be used to predict and optimise the performance of GravityCam more accurately. We are also fortunate to be able to compare our significant observational experience of lucky imaging on a range of telescopes with diameters from 2.5 to 5 m with the outputs of the simulation package, which shows generally very good agreement.

2.3. Wide-field lucky imaging

All the published work on lucky imaging has described studies over a very limited field of view, typically <1 arcmin in diameter. In contrast, the field of view of the NTT is approximately 30 arcmin in diameter. Unfortunately, the further a particular target is from the reference star, the poorer the image quality will be. This is quantified by the isoplanatic patch size, defined as the diameter within which the image Strehl ratio is reduced by less than a factor of e. Both our simulations and observations from many lucky imaging campaigns indicate an isoplanatic patch size of ∼1 arcmin diameter if we are to achieve the highest resolution with small selection percentages, and it is likely to be substantially larger for larger selection percentages.

Given the high stellar density in the vast majority of the fields monitored for our observing programmes (the crowding being in fact one of the drivers for high angular resolution), we do not expect to need reference stars as far apart as 1 arcmin. We can therefore process the images over much smaller areas, typically 30 arcsec × 30 arcsec, with adjacent areas overlapping for cross-referencing purposes. The moments of excellent seeing will often differ from square to square, so frames are selected independently within each square, and the images are accumulated separately for each selection percentage. In this way, the lucky imaging performance that we have already demonstrated on many occasions may be achieved with GravityCam. It turns out that processing these smaller images is also much easier and quicker than working with images many times that size.
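The tile-by-tile processing described above can be sketched as follows. This is a minimal illustration, not the GravityCam pipeline: it assumes the peak pixel value as the sharpness metric and the brightest pixel as the shift reference (the approach favoured earlier in the text), uses integer-pixel shifts with wrap-around at the tile edges, and, for brevity, uses non-overlapping tiles rather than the overlapping squares described above. The function names are hypothetical.

```python
import numpy as np


def lucky_stack_tile(frames: np.ndarray, keep_fraction: float) -> np.ndarray:
    """Shift-and-add lucky imaging for one tile.

    frames: array of shape (n_frames, ny, nx) covering a single tile.
    Frames are ranked by their peak pixel value (a simple sharpness proxy),
    the best `keep_fraction` are kept, and each kept frame is shifted so that
    its brightest pixel lands on the brightest pixel of the summed tile.
    """
    n, ny, nx = frames.shape
    peaks = frames.reshape(n, -1).max(axis=1)
    n_keep = max(1, int(round(keep_fraction * n)))
    best = np.argsort(peaks)[::-1][:n_keep]            # sharpest frames first
    ref_y, ref_x = np.unravel_index(np.argmax(frames.sum(axis=0)), (ny, nx))
    stack = np.zeros((ny, nx))
    for i in best:
        y, x = np.unravel_index(np.argmax(frames[i]), (ny, nx))
        # integer shift; np.roll wraps flux around the tile edges
        stack += np.roll(frames[i], (ref_y - y, ref_x - x), axis=(0, 1))
    return stack


def lucky_stack_tiled(frames: np.ndarray, tile: int, keep_fraction: float) -> np.ndarray:
    """Apply lucky_stack_tile independently to non-overlapping square tiles."""
    _, ny, nx = frames.shape
    out = np.zeros((ny, nx))
    for y0 in range(0, ny, tile):
        for x0 in range(0, nx, tile):
            sub = frames[:, y0:y0 + tile, x0:x0 + tile]
            out[y0:y0 + tile, x0:x0 + tile] = lucky_stack_tile(sub, keep_fraction)
    return out
```

Because each tile makes its own selection, a moment of good seeing in one square is used there even if a neighbouring square was blurred at the same instant. With keep_fraction = 1.0 every frame is added, so the summed flux equals that of a conventional long exposure.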

It is also worth noting that we do not need a single bright reference object in each field: it is enough to use the cross-correlation between the already accumulated image and the image just taken. In sparsely filled fields, where the offsets derived for each frame will be less accurate, every frame is still added, so the photometric accuracy is maintained. As only the tip-tilt correction is in error, the point spread functions (PSFs) are simply smoothed-out versions of the central PSF. This allows them to be corrected for more easily if precise absolute photometry is required.
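Aligning each new frame to the running sum by cross-correlation, rather than to a single reference star, can be sketched with FFTs. This is a minimal illustration under stated assumptions: the correlation is circular (periodic boundaries), shifts are whole pixels with no sub-pixel refinement, and every frame is added without rejection. The names `xcorr_shift` and `accumulate` are hypothetical.

```python
import numpy as np


def xcorr_shift(reference: np.ndarray, frame: np.ndarray) -> tuple:
    """Integer (dy, dx) to pass to np.roll(frame, ..., axis=(0, 1)) so that
    `frame` best matches `reference`, from the peak of their circular
    cross-correlation computed with FFTs."""
    cc = np.fft.ifft2(np.fft.fft2(reference) * np.conj(np.fft.fft2(frame))).real
    dy, dx = np.unravel_index(np.argmax(cc), cc.shape)
    ny, nx = cc.shape
    # peaks beyond half the array correspond to negative shifts
    if dy > ny // 2:
        dy -= ny
    if dx > nx // 2:
        dx -= nx
    return int(dy), int(dx)


def accumulate(frames) -> np.ndarray:
    """Align each new frame to the running sum and add it (no frame rejection)."""
    total = np.array(frames[0], dtype=float)
    for frame in frames[1:]:
        total += np.roll(frame, xcorr_shift(total, frame), axis=(0, 1))
    return total
```

Using the whole accumulated image as the template draws on every star in the tile, which is what makes alignment possible without one dominant reference object.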

2.4. Photometric precision with GravityCam

The lucky imaging technique is a procedure for selecting a subset of images, all of identical exposure length, from a sequence. The subset is chosen on the basis of image sharpness, and any individual image is either chosen or not. Each image properly represents the flux from the area being studied. If 100% of the images are accumulated, then the summed photon flux will be identical to that which would have been recorded using a conventional long-exposure technique. If only 10% are selected, then the photon flux will be precisely 1/10 of that which would be recorded if all the images had been used. This is important because it means that the lucky imaging technique does not compromise photometric accuracy. In practice, photometric precision depends on the photon flux from a target star together with the accuracy with which the light-collecting power of the instrument can be calibrated. Traditionally, only the very best nights for atmospheric clarity would be used for precision photometry. Some of our research programmes, such as asteroseismology studies, require precisions significantly better than 1% to be useful. Low levels of atmospheric attenuation, for example due to high-altitude cirrus, which can be very difficult to detect, will ultimately constrain photometric quality. Different phases of the Moon change the sky background level, that is, the brightness of the sky in the absence of any stars in the field. GravityCam will be used for long-term photometric studies by returning again and again to the same target field. Very quickly the system will establish a very accurate knowledge of the integrated flux from any particular region of the target field. Slight variations will be found because of atmospheric opacity, but when we are trying to measure the brightness of a single target object in such a field, we must remember that there are many other targets in the field which we can be certain are, on average, unchanging.
The detected image from a field may be corrected to bring the instantaneously detected frame into photometric alignment with the accumulated frames. GravityCam therefore offers a system capable of very precise relative photometry. None of the studies we propose requires absolute photometry; we simply seek to measure very small changes in the brightness of our target stars. The photon detection rate for a target star with I ∼ 22.0 in a broad filter band could be as much as ∼500 photons per second, or ∼1.8 million photons per hour. It is well established that such photon statistics would lead to better than 0.1% photometric accuracy with conventional CCDs. Moreover, from fits to microlensing light curves obtained with an EMCCD camera at the Danish 1.54-m telescope at ESO La Silla (Skottfelt et al. 2015b) as part of the MiNDSTEp campaign (Dominik et al. 2010) since 2009, we found that the photometry follows the light curve as accurately as that from a conventional CCD. With the enhanced angular resolution of GravityCam, the photometric accuracy will be better in crowded fields, given that we can resolve the part of the sky background that comes from stars of low luminosity. Therefore, the prospect of achieving photometric precision close to that predicted by photon statistics alone, or at least within a factor of two of it, is realistic, ultimately limited only by the increasing effect of sky brightness as fainter target stars eventually become undetectable. We also established photometric stability with EMCCDs over 2-yr timescales, enabling variability studies over such periods (Skottfelt et al. 2015a). In 2019, we will extensively test these properties with a CMOS chip in a camera installed at the Danish 1.54-m telescope.
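The photon-statistics argument and the frame-by-frame correction against the many unchanging stars can be sketched together. This is a minimal illustration: the precision uses Poisson statistics only (no sky, read noise or scintillation), and the median flux ratio over all stars in the field is one simple, assumed choice of robust estimator, not necessarily the one GravityCam will use. The function names are hypothetical.

```python
import math
import statistics


def photon_limited_precision(rate_per_s: float, exposure_s: float) -> float:
    """Fractional 1-sigma precision from Poisson statistics alone."""
    return 1.0 / math.sqrt(rate_per_s * exposure_s)


def frame_zero_point(frame_fluxes, reference_fluxes) -> float:
    """Multiplicative correction bringing one frame onto the accumulated
    reference, from the median flux ratio over many (mostly constant) stars;
    the median keeps a few genuinely variable stars from biasing it."""
    ratios = [r / f for f, r in zip(frame_fluxes, reference_fluxes) if f > 0]
    return statistics.median(ratios)


print(f"{500 * 3600:.2e} photons per hour")                       # 1.80e+06
print(f"{100 * photon_limited_precision(500, 3600):.3f} %")        # 0.075 %
```

For example, if thin cirrus dims a frame by 5% while one star in the field genuinely brightens, the median ratio still recovers the 1/0.95 correction from the constant stars.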

3. Planet demographics down to Lunar mass through gravitational microlensing

3.1. Assembling the demographics

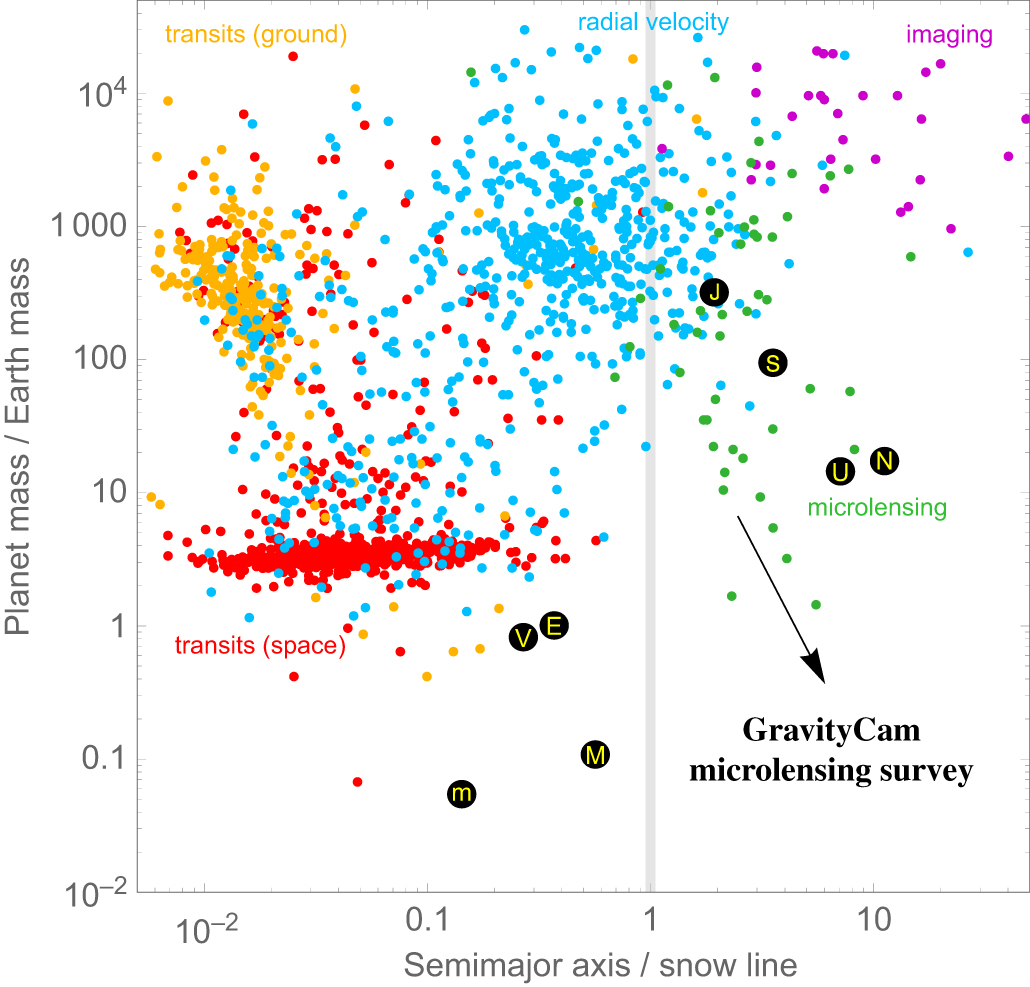

While the first planet orbiting a Sun-like star was only discovered about 20 yr ago (Mayor & Queloz 1995), several thousand planets have now been reported. It has been estimated that the Milky Way could host as many as hundreds of billions of planets (Cassan et al. 2012). As illustrated in Figure 3, the planet parameter space has not been covered uniformly by the various efforts, which rely on different techniques with their specific sensitivities. A comprehensive picture of the planet abundance, essential for gaining proper insight into the formation of planetary systems and the place of the Solar System, can however only arise from exploiting their complementarity. For the foreseeable future, gravitational microlensing (Einstein 1936; Paczyński 1986) remains the only approach suitable for obtaining population statistics of cool low-mass planets throughout the Milky Way, orbiting Galactic disk or bulge stars (two populations with notably different metallicity distributions).

Figure 3. Reported planets by detection technique as a function of mass and orbital separation relative to the snow line, beyond which volatile compounds condense into solid ice grains. Gravitational microlensing is particularly well suited for exploring the regime of cool low-mass planets. A ground-based survey with GravityCam on the ESO NTT will break into hitherto uncharted territory beyond the snow line and down to below Lunar mass. With $M_\star$ denoting the mass of the planet's host star, the position of the snow line has been assumed to be $a_\mathrm{snow} = 2.7\,\mathrm{AU}\,(M_\star/M_\odot)$, while the masses $m_\mathrm{p}$ of transiting planets for which only a radius $R_\mathrm{p}$ has been measured have been assumed to be $m_\mathrm{p}/M_\oplus = 2.7\,(R_\mathrm{p}/R_\oplus)^{1.3}$ (Wolfgang, Rogers, & Ford 2016). The planets of the Solar System are indicated by the letters m-V-E-M-J-S-U-N. Source: http://exoplanet.eu, 19 Jun 2017.

GravityCam will overcome the fundamental limitation resulting from the blurring of images acquired with ground-based telescopes by the turbulence of the Earth's atmosphere, and will therefore be competitive with space-based surveys. A GravityCam microlensing survey could (1) explore uncharted territory of planet and satellite population demographics, beyond the snow line and down to below Lunar mass, (2) provide a statistically well-defined sample of planets orbiting stars in the Galactic disk or bulge across the Milky Way, (3) detect substantially more cool super-Earths than known so far, and (4) obtain a first indication of the abundance of cool sub-Earths. These results would provide unique constraints on models of planet formation and evolution.

The gravitational microlensing effect is characterised by the transient brightening of an observed star due to the gravitational bending of its light by another star that happens to pass in the foreground. This leads to a characteristic symmetric, achromatic light curve, whose duration is an indicator of the mass of the deflector. Gravitational microlensing is a rare transient phenomenon, with only about one in a million stars in the Galactic bulge being magnified by more than 30% at any given time (Kiraga & Paczyński 1994). Surveys therefore need to observe millions of stars in order to find a substantial number of microlensing events, which last about a month.
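The symmetric light curve follows from the standard point-source, point-lens magnification (Paczyński 1986), with the lens-source separation u measured in units of the angular Einstein radius. The sketch below evaluates it for a hypothetical event (impact parameter 0.1, Einstein radius crossing time of 25 days); these parameters are illustrative assumptions. It also shows why "magnified by more than 30%" corresponds to u < 1.

```python
import math


def paczynski_magnification(u: float) -> float:
    """Point-source, point-lens magnification for a lens-source separation u
    in units of the angular Einstein radius (u > 0)."""
    return (u * u + 2.0) / (u * math.sqrt(u * u + 4.0))


def magnification_at(t: float, t0: float, u0: float, t_e: float) -> float:
    """Magnification at time t for uniform rectilinear relative motion:
    closest approach u0 at time t0, Einstein radius crossing time t_e."""
    return paczynski_magnification(math.hypot(u0, (t - t0) / t_e))


# Hypothetical event: u0 = 0.1, t_E = 25 d, peak at t0 = 0.
for t in (-50, -25, 0, 25, 50):
    print(t, round(magnification_at(t, 0.0, 0.1, 25.0), 3))
print(round(paczynski_magnification(1.0), 3))   # 1.342
```

The magnification depends only on |t − t0| and not on wavelength, which is the origin of the symmetric, achromatic shape; a planet around the lens shows up as a short deviation from this curve.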

3.2. The GravityCam microlensing survey

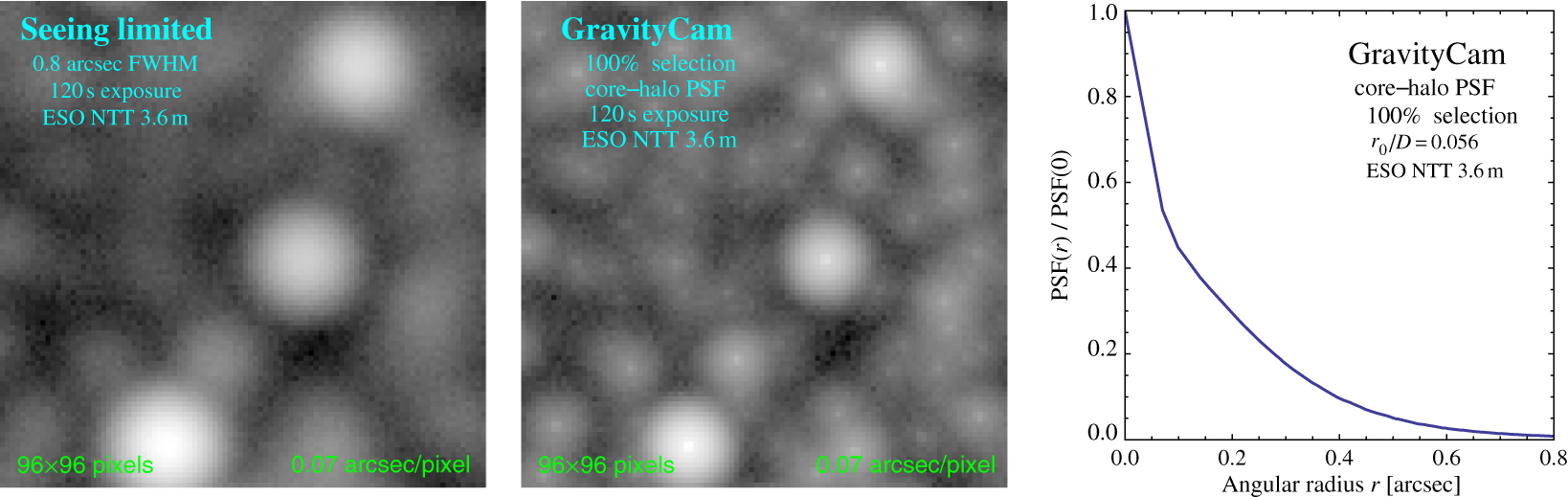

The optimal choice of survey fields arises from a compromise between the number of stars in the field, the crowding, and the extinction. Because most of the Galactic bulge is heavily obscured by dust, the extinction at optical wavelengths can reach levels that make the vast majority of stars practically invisible. However, there are a few ‘windows’ with relatively low extinction, the largest of these being ‘Baade’s window’, with a width of about 1°, centred at Galactic coordinates (l, b) = (1°, −3.9°). Because these fields are very crowded, the high angular resolution achieved with GravityCam will make a crucial difference by dramatically increasing the number of faint (and hence small) resolved stars in the field, as illustrated by the simulated images shown in Figure 4. Even with 100% selection of the incoming images, star images at I ∼ 22 will typically be well separated from their nearest neighbours.

Figure 4. Simulated ESO NTT images about 7 arcsec × 7 arcsec in size for 2-min exposures, showing the improvement resulting from GravityCam compared with images limited by an average seeing of 0.8 arcsec FWHM. For the GravityCam image (right), the core-halo point spread function for 100% frame selection has been adopted, with a scale of 0.07 arcsec/pixel.

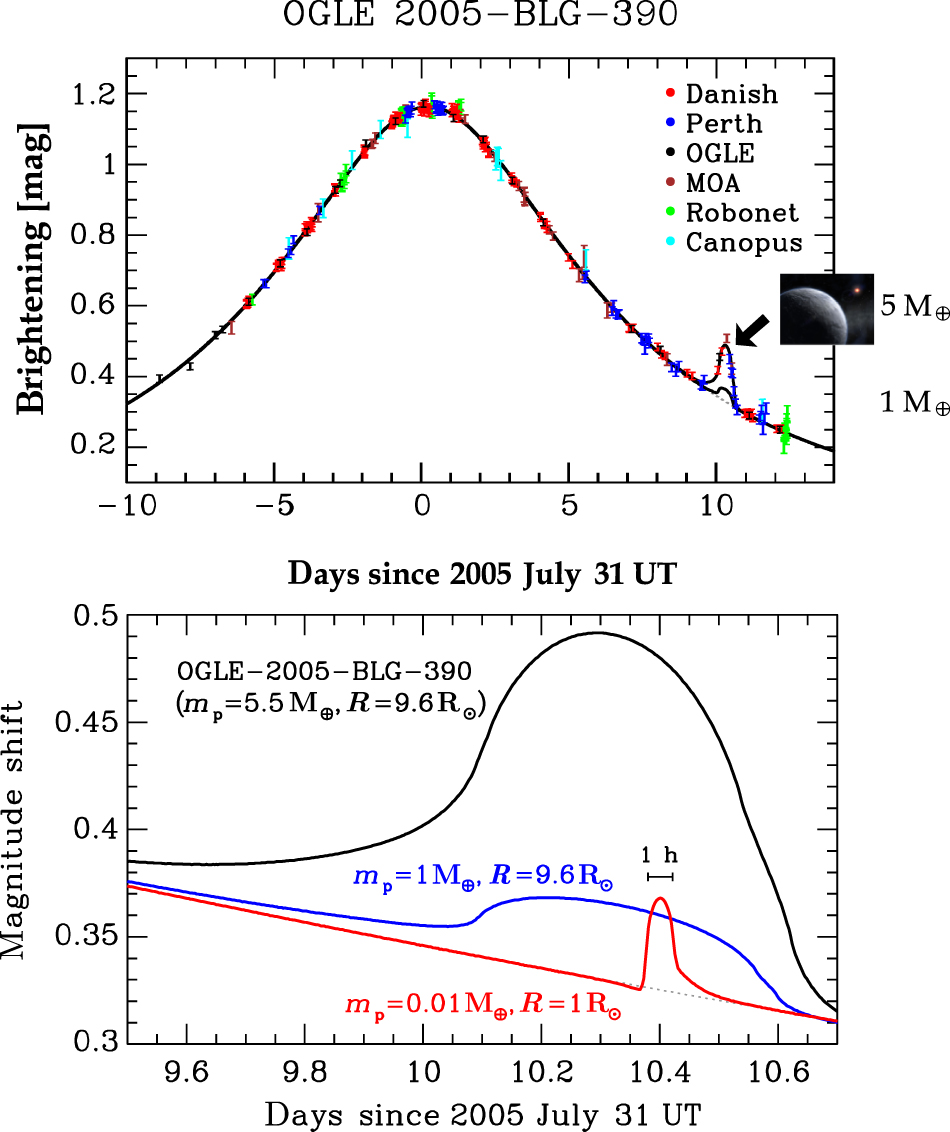

As illustrated in Figure 5, a planet orbiting the foreground (‘lens’) star may reveal its presence by causing a perturbation to the otherwise symmetric light curve (Mao & Paczyński 1991; Gould & Loeb 1992). Its signature lasts from days for Jupiter-mass planets down to hours for planets of Earth mass or below. Shorter signals do not arise because of the finite angular size of the source star: its motion relative to the foreground ‘lens’ star smears out the effect that would arise for a point-like source, limiting the signal amplitude (Bennett & Rhie 1996; Dominik 2010). Extending the sensitivity to less massive planets therefore requires monitoring smaller (and hence fainter) source stars (Bennett & Rhie 2002). While cool super-Earths remain detectable in microlensing events on giant source stars (R ∼ 10 R⊙; Beaulieu et al. 2006), high-quality (few per cent) photometry on main-sequence stars (R ∼ 1 R⊙) enables reaching down to even Lunar mass (Paczyński 1996; Dominik et al. 2007).
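The role of the source size can be quantified by comparing the angular radius of the source with the planet's own angular Einstein radius, √q θ_E, where q is the planet-to-host mass ratio and θ_E = √(κ M π_rel) with κ ≈ 8.144 mas per solar mass (standard values, not given in this text). The sketch assumes a hypothetical 0.3 M⊙ host at 6 kpc and a bulge source at 8.5 kpc; the resulting ratio ρ is a rough indicator of finite-source smearing, not a full detection-efficiency calculation.

```python
import math

KAPPA = 8.144            # 4G/(c^2 AU), in mas per solar mass
M_SUN_KG = 1.989e30
M_EARTH_KG = 5.972e24
M_MOON_KG = 7.342e22
R_SUN_M = 6.957e8
KPC_M = 3.0857e19
MAS_PER_RAD = 206264.806e3


def einstein_radius_mas(mass_msun: float, d_lens_kpc: float, d_source_kpc: float) -> float:
    """Angular Einstein radius theta_E = sqrt(kappa * M * pi_rel)."""
    pi_rel_mas = 1.0 / d_lens_kpc - 1.0 / d_source_kpc
    return math.sqrt(KAPPA * mass_msun * pi_rel_mas)


def rho_planet(m_planet_kg: float, host_msun: float, r_source_rsun: float,
               d_lens_kpc: float = 6.0, d_source_kpc: float = 8.5) -> float:
    """Angular source radius in units of the planet's own Einstein radius,
    sqrt(q) * theta_E; values well above 1 mean strong finite-source smearing."""
    q = m_planet_kg / (host_msun * M_SUN_KG)
    theta_p = math.sqrt(q) * einstein_radius_mas(host_msun, d_lens_kpc, d_source_kpc)
    theta_src = r_source_rsun * R_SUN_M / (d_source_kpc * KPC_M) * MAS_PER_RAD
    return theta_src / theta_p


# Hypothetical lens: 0.3 M_sun host at 6 kpc; bulge source at 8.5 kpc.
for body, m in (("Earth", M_EARTH_KG), ("Moon", M_MOON_KG)):
    for r_src in (10.0, 1.0):
        print(f"{body}, source {r_src:g} R_sun: rho = {rho_planet(m, 0.3, r_src):.1f}")
```

Under these assumptions an Earth-mass planet seen against a 10 R⊙ giant gives ρ ≈ 5, about the same as a Lunar-mass body against a Sun-like source (ρ ≈ 4.5). Since ρ scales as R/√m, a source 10 times smaller compensates for a planet 100 times less massive.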

Figure 5. (left) Model light curve and data acquired with six different telescopes of microlensing event OGLE-2005-BLG-390, showing the small blip that revealed planet OGLE-2005-BLG-390Lb (Beaulieu et al. 2006), of about 5 Earth masses. An Earth-mass planet in the same spot would have led to a 3% deviation. (right) Signature of planet OGLE-2005-BLG-390Lb with $m_\mathrm{p} = 5.5\,M_\oplus$ and a source star with R = 9.6 R⊙ (black), together with those for an Earth-mass planet in the same spot (blue) and a Lunar-mass body with a Sun-like source star (red). Even the latter would be detectable with 2% photometry and 15-min cadence.

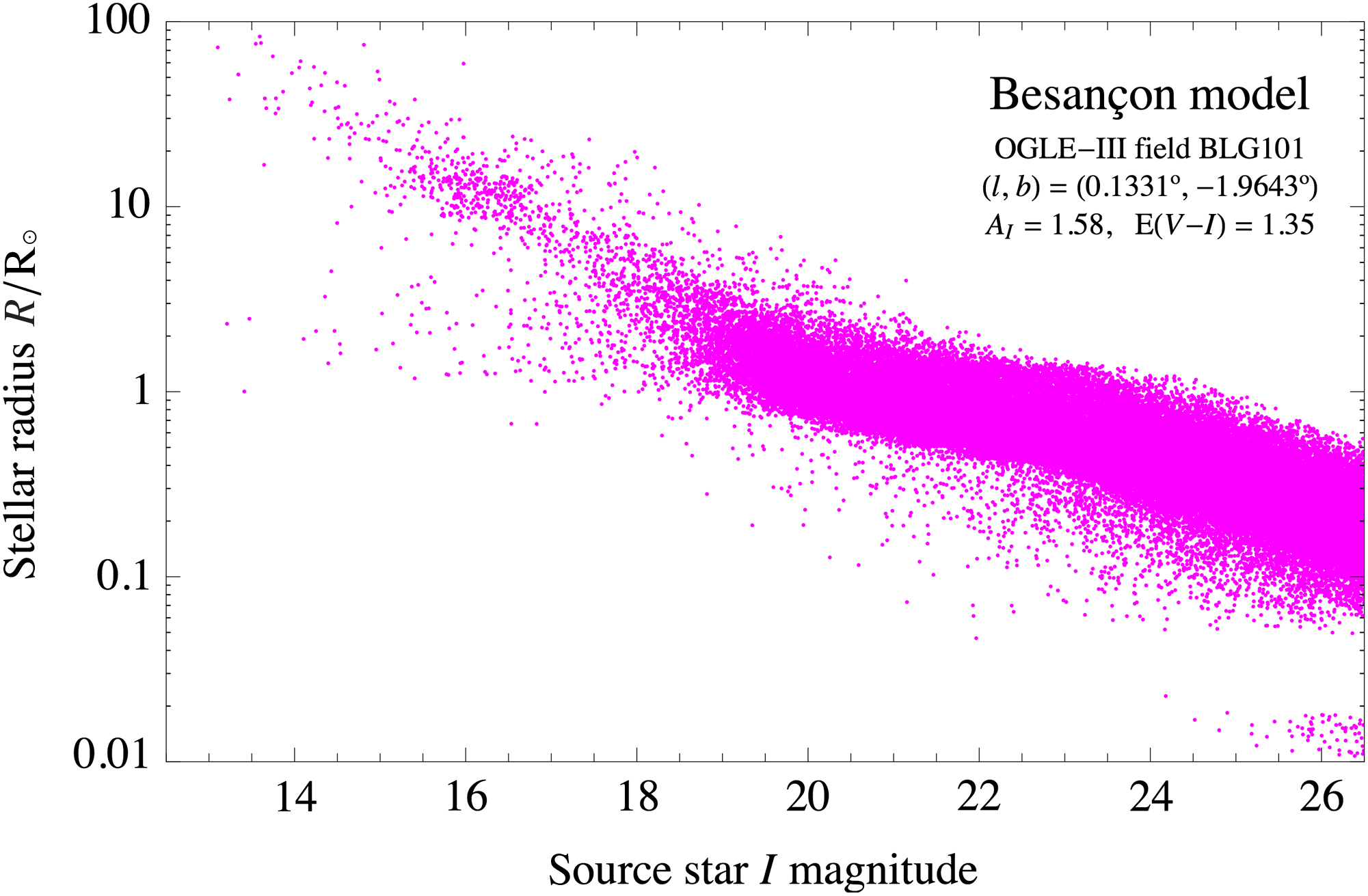

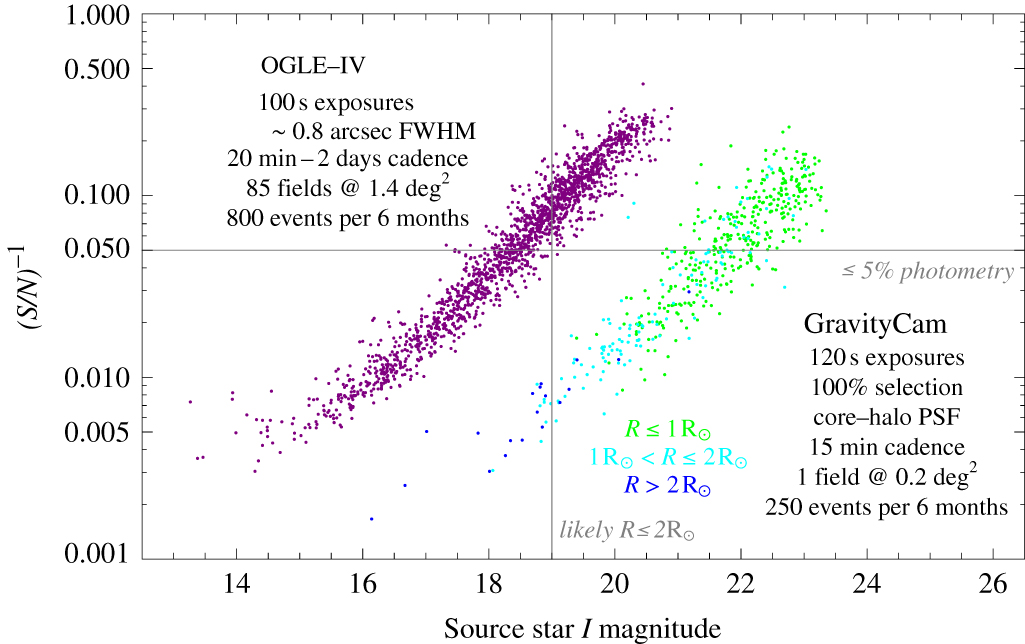

For the fields in the Galactic bulge most favourable to gravitational microlensing, giant stars start branching off the main sequence at about I ∼ 19, with a Solar analogue at 8.5 kpc being at I ∼ 20.3 (Robin et al. 2003; Nataf et al. 2013), as shown in Figure 6. Current microlensing surveys (such as OGLE-IV; http://ogle.astrouw.edu.pl) use small telescopes (1.3–1.8 m in diameter) and are most fundamentally limited by the typical seeing of 0.75 arcsec FWHM. As Figure 7 illustrates, with GravityCam on a 4-m-class telescope we can go about 4 magnitudes deeper than OGLE-IV for the same signal-to-noise ratio and an exposure time of 2 min, achieving ≤5% photometry over the full range 19 < I < 22. Most spectacularly, with stars at I ∼ 16 being about 10 times larger than stars at I ∼ 20, we go further down in planet mass by a factor of 100 at the same sensitivity, since the angular Einstein radius of the planet scales as the square root of its mass.

Figure 6. Stellar radius versus I magnitude for stars in the direction of the Galactic bulge, resulting from a Besançon population synthesis model (Robin et al. 2003) simulation for the OGLE-III BLG101 field (which has the highest event rate), with the extinction and reddening measured from OGLE-III (Nataf et al. 2013).

Figure 7. Comparison of performance between OGLE-IV and a microlensing survey with GravityCam on the ESO NTT using EMCCD detectors, for resolved stars in the observed fields. With an exposure time of 2 min (similar to OGLE-IV), a single field of 0.2 deg² can be monitored at 15-min cadence. While OGLE-IV cannot provide ≤5% photometry on main-sequence source stars, small variations in the brightness of such small stars can be well monitored with GravityCam. Using CMOS detectors with GravityCam would boost the planet yield by a factor of at least ∼10, with the area monitored per pointing being five times as large and the photometric limits shifting by 0.8 mag.

In a single field of 0.2 deg², we can monitor ∼1.0 × 10⁷ resolved stars with 2-min exposures. With an event rate of ∼5 × 10⁻⁵ per star per year (Sumi et al. 2013), we expect ∼250 events over a campaign period of 6 months. A design with CMOS detectors would increase the field of view for a single pointing by a factor of ∼5, and with a fainter effective magnitude limit (by ∼0.8 mag), we would expect to gain a total factor of ∼10 in planet yield. Given that GravityCam provides the opportunity to infer the planetary mass function in a hitherto uncharted region, the detection yield is unknown, and prior optimisation of the survey strategy is not straightforward. The choice of survey area and exposure time determines the survey cadence, the photometric uncertainty as a function of target magnitude, the number of resolved stars monitored, and ultimately the planet detection efficiency as a function of planet mass. Increasing the exposure time at the cost of a lower cadence would lead to losing short planetary signatures (unless further follow-up facilities can complement the survey), but the microlensing event rate would increase as more faint stars are detected. Sticking to a single pointing would provide the opportunity to construct both effective short exposures for high cadence and effective long exposures for monitoring fainter objects and obtaining higher angular resolution by means of lucky imaging.
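The event-count estimate is a simple product of the number of monitored stars, the event rate per star and the campaign length. In the sketch below the CMOS gain is split into the ∼5× larger area per pointing and a further factor of ∼2 attributed to the 0.8 mag deeper limit; that split is an assumption inferred from the quoted total of ∼10, not a figure given in the text. The function name is hypothetical.

```python
def expected_events(n_stars: float, rate_per_star_per_yr: float,
                    campaign_yr: float) -> float:
    """Expected number of microlensing events: stars x rate x duration."""
    return n_stars * rate_per_star_per_yr * campaign_yr


emccd = expected_events(1.0e7, 5e-5, 0.5)      # one 0.2 deg^2 field, 6 months
# CMOS: ~5x the area per pointing; the extra factor of ~2 from the 0.8 mag
# deeper limit is an assumption inferred from the quoted total gain of ~10.
area_gain, depth_gain = 5.0, 2.0
print(f"EMCCD: ~{emccd:.0f} events; CMOS yield gain ~{area_gain * depth_gain:.0f}x")
```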

For monitored source stars of Solar radius, we reach a sensitivity limit for companions to the foreground ‘lens’ star at about a Lunar mass, which means that not only putative planets of such mass but also satellites could be detected. Above this limit, the detection efficiency scales with the square root of the planet mass. If the mass function of cool planets follows the suggested steep increase towards lower masses, dN/d[lg(m_p/M⊕)] ∝ (m_p/M⊕)^(−β) (Cassan et al. 2012), where β ≥ 0.35, we would therefore detect comparable numbers of planets in each of the mass ranges 1–10 M⊕, 0.1–1 M⊕, and 0.01–0.1 M⊕. The distribution of the detected planets (or the lack of detections) will constrain the slope of the mass function.
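
The ‘comparable numbers per decade’ argument can be checked with a short calculation. The sketch below assumes, as stated above, a detection efficiency proportional to m_p^(1/2) above the Lunar-mass limit and a power-law mass function; the β values are illustrative, and the output is a relative count per mass range, not an absolute yield.

```python
import math


def relative_counts(mass_ranges, beta, eff_index=0.5):
    """Detections per mass range, normalised to the first range.

    Assumes dN/dlg(m) is proportional to m**(-beta) and a detection efficiency
    proportional to m**eff_index, so the expected count in each range is the
    integral of 10**((eff_index - beta) * x) over x = lg(m).
    """
    k = eff_index - beta
    counts = []
    for lo, hi in mass_ranges:
        a, b = math.log10(lo), math.log10(hi)
        if abs(k) < 1e-12:
            counts.append(b - a)  # integrand is flat in lg(m)
        else:
            counts.append((10 ** (k * b) - 10 ** (k * a)) / (k * math.log(10)))
    return [c / counts[0] for c in counts]


ranges = [(1.0, 10.0), (0.1, 1.0), (0.01, 0.1)]  # Earth masses
for beta in (0.35, 0.5, 0.73):  # illustrative slopes
    print(beta, [round(r, 2) for r in relative_counts(ranges, beta)])
```

For β = 0.5 the three ranges give equal counts; shallower slopes give fewer low-mass detections, steeper ones more.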

Given that space telescopes are unaffected by image blurring due to the Earth’s atmosphere, a case for a microlensing survey for exoplanet detection has been made both for ESA’s Euclid mission (as potential ‘legacy’ science) and for NASA’s Wide-Field Infrared Survey Telescope (WFIRST), as one of the competing priorities shaping its design. Euclid is currently planned to be launched in 2020, whereas WFIRST is still at a very early stage of definition, with a projected launch towards the end of the 2020s. Euclid provides a 0.55 deg² field of view (FOV) with a 1.2-m mirror, whereas WFIRST would provide a 0.28 deg² FOV with a 2.4-m mirror, compared with a 0.17 deg² FOV with a 3.6-m mirror for GravityCam with CMOS detectors on the ESO NTT. The microlensing campaigns with the space telescopes would be restricted to observing windows lasting only one or two months, substantially reducing their planet detection capabilities, given that the median timescale of microlensing events is around a month (Penny et al. 2013; Barry et al. 2011).
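
One crude way to compare these facilities is the product of collecting area and field of view (étendue). The sketch below uses only the apertures and FOVs quoted above and assumes unobstructed circular mirrors; it ignores throughput, sky background, PSF size and, importantly, the short observing windows of the space missions, so it is illustrative only.

```python
import math

# Aperture diameter (m) and field of view (deg^2), as quoted in the text
instruments = {
    "Euclid": (1.2, 0.55),
    "WFIRST": (2.4, 0.28),
    "GravityCam on NTT (CMOS)": (3.6, 0.17),
}


def etendue(diameter_m, fov_deg2):
    """Collecting area x field of view (m^2 deg^2) for an unobstructed circular aperture."""
    return math.pi * (diameter_m / 2) ** 2 * fov_deg2


for name, (d, fov) in instruments.items():
    print(f"{name:26s} A x FOV = {etendue(d, fov):.2f} m^2 deg^2")
```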

3.3. Crowded-field photometry with GravityCam

CCDs have been used for many years and their characteristics as detectors are well established. Some features are only now becoming better appreciated, but the quality of the photometric work already being done is exceptional. The methods for photometry in relatively uncrowded fields are well known, having been developed on many telescopes and at many observatories throughout the world. When considering crowded-field photometry, we have to distinguish between relative photometry, which is particularly important for microlensing studies in the Galactic bulge, and absolute photometry.

GravityCam will offer much better angular resolution than is usually available from ground-based studies. However, we must recognise that in the crowded fields targeted by GravityCam, there will be stars at the position of virtually every single pixel on the detectors. Measuring the light from any one star is immediately complicated by the contribution from other nearby stars, which may or may not be brighter than the target star. As with any astronomical observation seeking high precision, the effects of variable opacity due to high-level cloud or low-level moisture or fog can be significant. Variability in seeing and sky brightness further complicates matters.

Relative photometry is very much easier in crowded fields because we can be confident that the integrated light across a large patch of the sky will be precisely constant, so any variability in the integrated flux can be immediately calibrated and corrected for. The lucky imaging process will select frames not by a fixed percentage but according to the resolution actually achieved in each frame. Frames with the same resolution will be combined so that the influence of the point spread function can be better understood. Absolute photometry in crowded fields is more complicated because of source confusion in all-sky photometric catalogues and the distance from isolated standard-star fields. Calibrations taken at different zenith distances have to be made with great care and only under the best conditions.
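
The two ideas in this paragraph, calibrating each frame against the whole stellar ensemble and grouping frames by achieved resolution rather than by percentile, can be sketched in a few lines of Python with NumPy. This is a minimal illustration on synthetic data, not the GravityCam pipeline: it assumes the summed flux of all stars is intrinsically constant and uses hypothetical FWHM bin edges; a real pipeline would use robust weighting so that genuinely variable stars do not bias the normalisation.

```python
import numpy as np


def ensemble_normalise(fluxes):
    """Divide each frame by its total flux (relative to the median frame total).

    fluxes has shape (n_frames, n_stars). Assumes the summed light of the
    ensemble is intrinsically constant, so any frame-to-frame change in the
    total is treated as transparency or calibration drift.
    """
    fluxes = np.asarray(fluxes, dtype=float)
    totals = fluxes.sum(axis=1)
    scale = totals / np.median(totals)
    return fluxes / scale[:, None]


def bin_by_fwhm(fwhm, edges):
    """Group frame indices by achieved resolution (FWHM) instead of a fixed percentile."""
    idx = np.digitize(np.asarray(fwhm), edges)
    return [np.flatnonzero(idx == i) for i in range(1, len(edges))]


rng = np.random.default_rng(42)
true_flux = rng.uniform(100.0, 1000.0, size=500)   # 500 constant stars
transmission = rng.uniform(0.7, 1.0, size=50)       # per-frame haze or thin cloud
raw = transmission[:, None] * true_flux[None, :]
clean = ensemble_normalise(raw)                     # each star is now constant in time

fwhm = rng.uniform(0.1, 0.6, size=50)               # arcsec, hypothetical per-frame FWHM
groups = bin_by_fwhm(fwhm, edges=[0.1, 0.2, 0.3, 0.45, 0.6])
print([len(g) for g in groups])
```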

There is substantial experience in making photometric observations in crowded fields with EMCCDs. For example, the Danish 1.54-m telescope at ESO’s La Silla Observatory has played a key role in the follow-up monitoring of gravitational microlensing events since 2003, having provided in particular the crucial data for identifying OGLE-2005-BLG-390Lb, at the time the most Earth-like extra-Solar planet known (Beaulieu et al. 2006). In 2009, the telescope was upgraded with a multi-colour EMCCD camera (Skottfelt et al. 2015b). Harpsøe et al. (2012) made the first investigations of how to optimise the pipeline for photometric accuracy, and found an RMS of order 1% from test observations of the core of Omega Cen for stars where scintillation noise dominated the noise budget (magnitudes < 17), increasing to a few per cent where photon noise, excess noise, and background crowding dominated. The photometric scatter in crowded fields was reduced substantially (Skottfelt et al. 2013, 2015a; Figuera Jaimes et al. 2016a) by selecting the very best resolution images as reference images for the reduction of the rest of the images, and by using image subtraction (Bramich 2008) instead of DAOPHOT point-spread function fitting. These so-called ‘lucky images’ are the sharpest 1% of the images, covering those fractions of a second in which the atmospheric turbulence above the telescope happens to be at a minimum. For example, the photometric RMS of EMCCD observations of the relatively bright microlensing event OGLE-2015-BLG-0966, which revealed the planet OGLE-2015-BLG-0966Lb (Street et al. 2016), is well below 1% for 2-min exposure sequences on the Danish 1.54 m.
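
Image subtraction in the spirit of Bramich (2008) models the new image as the reference image convolved with a kernel whose individual pixels are free parameters (a delta-function basis). The sketch below is a simplified, hypothetical version: a single spatially invariant kernel, a constant sky term, no noise weighting, and a synthetic random ‘reference’ rather than a star field. Real implementations add spatial variation of the kernel and per-pixel weights.

```python
import numpy as np


def fit_delta_kernel(ref, img, half=2):
    """Fit img ~ (K convolved with ref) + b, with K a (2*half+1)^2 pixel grid.

    Each kernel pixel is a free parameter (delta-function basis) and b is a
    constant sky offset. Only the interior, where shifts never wrap around
    the image edge, enters the unweighted least-squares fit.
    """
    ref = np.asarray(ref, dtype=float)
    img = np.asarray(img, dtype=float)
    ny, nx = ref.shape
    h = half
    core = (slice(h, ny - h), slice(h, nx - h))
    columns = []
    for dy in range(-h, h + 1):
        for dx in range(-h, h + 1):
            columns.append(np.roll(ref, (dy, dx), axis=(0, 1))[core].ravel())
    columns.append(np.ones(columns[0].size))         # sky offset term
    design = np.column_stack(columns)
    coeffs, *_ = np.linalg.lstsq(design, img[core].ravel(), rcond=None)
    kernel = coeffs[:-1].reshape(2 * h + 1, 2 * h + 1)
    model = (design @ coeffs).reshape(ny - 2 * h, nx - 2 * h)
    return kernel, coeffs[-1], img[core] - model      # kernel, sky, difference image


# Synthetic check: blur a random 'reference' with a known kernel and add sky
rng = np.random.default_rng(0)
ref = rng.uniform(0.0, 1.0, size=(40, 40))
yy, xx = np.mgrid[-2:3, -2:3]
true_k = np.exp(-(xx ** 2 + yy ** 2) / 2.0)
true_k /= true_k.sum()
img = sum(true_k[dy + 2, dx + 2] * np.roll(ref, (dy, dx), axis=(0, 1))
          for dy in range(-2, 3) for dx in range(-2, 3)) + 3.0
kernel, sky, diff = fit_delta_kernel(ref, img, half=2)
print(np.abs(kernel - true_k).max(), sky, np.abs(diff).max())
```

In real data a variable star would stand out in the difference image as a residual point source, while constant stars subtract to noise.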

Our knowledge of the photometric credentials of CMOS detectors is much less well established. A programme is currently underway at the Open University to calibrate devices very similar to those we are likely to use for GravityCam, in order to check repeatability, stability, quantum efficiency and uniformity, calibration issues, and so on. So far the results are very promising. Indeed, we would not expect very great differences in imaging performance, since CMOS devices are based on the same silicon structures as CCDs. There is also substantial experience in using infrared detectors such as those made by Teledyne and Rockwell, which use CMOS readout electronics with mercury cadmium telluride (MCT) detector elements bump-bonded to the CMOS components. We know from those devices that there are indeed problems, for example, with residual charge (reading out a pixel does not completely empty it), and this will be the subject of future investigation. However, there is a great deal of knowledge about CMOS structures and how they are used, because of the ubiquity of CMOS detectors in devices such as mobile phones and handheld digital cameras, which are required to achieve extraordinarily high imaging quality (Janesick et al. 2014).

4. A unique database for optical variability

4.1. Stellar variability and asteroseismology

Given that many stars belong to classes which are known to be variable, studies of stellar variability are a collateral benefit of virtually any optical survey. Gravitational microlensing surveys with GravityCam described above can produce precision relative photometry on as many as 90 million stars with each measured for extended periods every clear night over an entire observing season. With a limiting magnitude I ∼ 27 for 1 h exposures and the high angular resolution, the sample obtained with GravityCam extends much further towards fainter stars than OGLE-IV, while the cadence is much higher than for LSST.

Moreover, virtually every star will show low levels of variability, simply because of oscillations whose properties reflect the internal structure of the star and how sound waves propagate within it. Helioseismology has used such data to obtain a great deal of information about the internal structure of the Sun (Gough 2012). While photometric studies of more distant objects cannot resolve the surface as is possible with the Sun, they can still provide substantial information about a star’s internal structure. A recent example of the methods and results that may be obtained from such studies is given by Bowman et al. (2016).

Such asteroseismology studies critically rely on precision photometric measurements of the star over a long period of time. The high stellar density in fields towards the Galactic bulge enables such high precision given that in each and every frame the photometric calibration is provided by comparing the target star with the mean flux from all the others. This suppresses very effectively any variations in atmospheric transmission from atmospheric haze or thin cloud cover.

At a photometric accuracy better than 500 μmag in 1 h, GravityCam is expected to provide data on around 5 million stars with I < 19.5 each night over a period of 6 months with EMCCDs, or 25 million stars with I < 20.3 with CMOS chips. Brighter stars will be imaged to even higher photometric accuracy, as this accuracy is determined simply by the relative precision with which the baseline photometry from the field is established. Very high accuracy data will be generated over the critical timescales for stellar oscillations of 1–100 h. It had previously been believed that extracting the full frequency spectrum of a star’s oscillations was extremely difficult if the available data had significant gaps, as is inevitable with a single ground-based instrument. However, new methods have been developed that get around this problem, very much in the way that other disciplines have had to cope with missing data or incomplete sampling (Pires et al. 2015).
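
Gapped, unevenly sampled light curves can still be searched for periodicities. The sketch below uses the classical Lomb–Scargle periodogram, a standard and simpler technique, not the inpainting approach of Pires et al. (2015); the observing pattern, period and amplitudes are hypothetical. The nightly gaps produce alias peaks offset by 1/24 cycles per hour, but in this synthetic case the true frequency remains the strongest.

```python
import numpy as np


def lomb_scargle(t, y, freqs):
    """Classical Lomb-Scargle power for unevenly sampled data (freqs in cycles per unit t)."""
    t = np.asarray(t, dtype=float)
    y = np.asarray(y, dtype=float) - np.mean(y)
    power = np.empty(len(freqs))
    for i, f in enumerate(freqs):
        w = 2.0 * np.pi * f
        # time offset tau makes the sine and cosine terms orthogonal
        tau = np.arctan2(np.sum(np.sin(2 * w * t)), np.sum(np.cos(2 * w * t))) / (2 * w)
        c = np.cos(w * (t - tau))
        s = np.sin(w * (t - tau))
        power[i] = 0.5 * ((y @ c) ** 2 / (c @ c) + (y @ s) ** 2 / (s @ s))
    return power


rng = np.random.default_rng(7)
# Hypothetical campaign: 10 nights, 8 h per night, one point every 2 min (t in hours)
t = np.concatenate([24.0 * n + np.arange(0.0, 8.0, 1.0 / 30.0) for n in range(10)])
period_h = 5.3  # hypothetical oscillation period
y = 5e-4 * np.sin(2 * np.pi * t / period_h) + rng.normal(0.0, 2e-4, t.size)
freqs = np.linspace(0.02, 1.0, 3000)  # cycles per hour
best = freqs[np.argmax(lomb_scargle(t, y, freqs))]
print(f"recovered period ~ {1 / best:.2f} h")
```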

The observing cadence of GravityCam is also well suited for detecting and monitoring eclipsing binaries.

4.2. Sub-second variability from accretion onto compact objects

Massive stars end their lives as compact objects, that is, neutron stars and black holes, and in a binary orbit such a compact object can accrete material from its companion star. The short dynamical timescales make rapid variability a defining characteristic of these accreting compact binary systems, known as ‘X-ray binaries’; such variability in X-rays is well documented (e.g. Belloni & Hasinger 1990; van der Klis 1997, and many others).

However, few studies exist of rapid optical variations in such objects. Optical photons are typically generated as thermal radiation from viscous heating in the outer (cooler) regions of the accretion disc. Alternatively, X-rays from a central hot electron ‘corona’ can irradiate the outer regions and be reprocessed into the optical regime. Both pathways provide a way to map the physical and dynamical state of the outer parts of accretion flows on timescales of order 1–10 s (e.g. O’Brien et al. 2002). Yet at least some observations have shown the presence of other mechanisms at work on faster timescales (see Uttley & Casella 2014 for a review), which argues against irradiated components such as the outer disc, where fluctuations are expected to have longer characteristic timescales. The best evidence for such behaviour exists for XTE J1118+480 (Kanbach et al. 2001), GX 339–4 (Motch, Ilovaisky, & Chevalier 1982; Gandhi et al. 2010), Swift J1753.5–0127 (Durant et al. 2008), and V404 Cyg (Gandhi et al. 2016). An example light curve segment is shown in Figure 8. Similar evidence also exists for some neutron star binaries (Durant et al. 2011), though on somewhat longer characteristic timescales. In at least two cases (GX 339–4 and V404 Cyg), the fastest variations have a red spectrum, further arguing against thermal reprocessing, which is expected to show blue colours.

Figure 8. Example of rapid optical variability from an accreting black hole binary V404 Cyg. The figure shows a short 30 s segment of an ULTRACAM r′ light curve from 2015 June 26. Fast sub-second flares were visible throughout these observations, with complex structure of the flares visible on ∼100 ms timescales and shorter. These sub-second flares are interpreted as non-thermal synchrotron emission from the base of the relativistic jet in this source. The full data set is described by Gandhi et al. (2016).

By cross-correlating the fast optical fluctuations with strictly simultaneous X-ray observations, time delays between the bands have been mapped, revealing a complex mix of components. The shortest delays span the range ∼0.1–0.5 s, with the optical following the X-rays; this is interpreted as the propagation lag between infalling (accreting) material and outflowing plasma at the base of the relativistic jet seen in these systems (Kanbach et al. 2001; Malzac, Merloni, & Fabian 2004; Gandhi et al. 2010). Knowing the location of the jet-base synchrotron emission is critical for constraining jet acceleration and collimation models (e.g. Markoff, Nowak, & Wilms 2005), and the optical delays appear to constrain it to size scales of order 10³ Schwarzschild radii, though this remains to be tested in detail.
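
A lag measurement of this kind can be sketched as a discrete cross-correlation, and a lag can be turned into a rough size scale if the signal propagates at up to the speed of light, which makes the result an upper bound. In the Python sketch below the light curves are synthetic and the 10 M☉ black-hole mass is a hypothetical illustrative value; a real analysis would also need lag uncertainties and would have to handle red-noise light curves with gaps.

```python
import numpy as np

C_KM_S = 299_792.458     # speed of light, km/s
RS_KM_PER_MSUN = 2.953   # Schwarzschild radius 2GM/c^2 per solar mass, km


def ccf_lag(x, y, dt, max_lag):
    """Lag (s) maximising the correlation of y(t) with x(t - lag); > 0 means y lags x."""
    x = (x - x.mean()) / x.std()
    y = (y - y.mean()) / y.std()
    n = len(x)
    lags = np.arange(-max_lag, max_lag + 1)
    cc = [np.mean(x[max(0, -k):n - max(0, k)] * y[max(0, k):n - max(0, -k)])
          for k in lags]
    return lags[int(np.argmax(cc))] * dt


def size_in_rs(lag_s, mass_msun, speed_frac=1.0):
    """Distance travelled at speed_frac * c during lag_s, in Schwarzschild radii."""
    return lag_s * speed_frac * C_KM_S / (RS_KM_PER_MSUN * mass_msun)


# Synthetic test: an 'optical' series that repeats the 'X-ray' series 0.2 s later
rng = np.random.default_rng(3)
dt, n, shift = 0.01, 3000, 20
s = np.convolve(rng.normal(size=n + shift), np.ones(5) / 5, mode="same")
xray = s[shift:shift + n]
optical = s[:n] + rng.normal(0.0, 0.3, n)
lag = ccf_lag(xray, optical, dt, max_lag=50)
print(f"lag = {lag:.2f} s -> <= {size_in_rs(lag, 10.0):.0f} R_s for a 10 M_sun black hole")
```

A 0.1 s lag at light speed corresponds to roughly 10³ R_s for a 10 M☉ black hole, matching the order of magnitude quoted above.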

On slightly longer timescales of order 1–5 s, there is evidence of the optical variability preceding the X-rays in anti-phase (Kanbach et al. 2001; Durant et al. 2008; Gandhi et al. 2010; Pahari et al. 2017), often interpreted as synchrotron self-Compton emission from a geometrically thick and optically thin corona lying within the disc (Hynes et al. 2003; Yuan, Cui, & Narayan 2005; Gandhi et al. 2010; Veledina, Poutanen, & Vurm 2011). Such a medium may also undergo Lense–Thirring precession, resulting in quasi-periodic oscillations (QPOs) in the optical and X-ray light curves that give insight into the coronal dynamics (Hynes et al. 2003; Ingram, Done, & Fragile 2009; Gandhi et al. 2010).

Fast optical timing observations can thus provide quantitative and novel constraints on the origin and geometry of emission components very close to the black hole cores. But with only a handful of observations thus far (the four objects mentioned above), this field remains in its incipient stages, with much degeneracy between the models cited above. Progress has been hindered, in large part, by the limited availability of fast timing instruments with low deadtime. This is now starting to be addressed with instruments such as ULTRACAM (Dhillon et al. 2007) on the NTT and ULTRASPEC (Dhillon et al. 2014) on the Thai National Telescope, which are capable of rapid optical observations, though neither instrument is available throughout the year. SALT is also approaching optimal observing efficiency (following recent mirror alignment corrections) and has a fast imager, SALTICAM, capable of rapid optical studies (O’Donoghue et al. 2003), but the telescope pointing is constrained, so most objects are visible only for short periods during any given night. A few other specialised optical instruments also exist. Another hurdle has been the lack of sensitive X-ray timing missions for coordination with optical timing. This has now also changed with the launch of the AstroSat and Neutron star Interior Composition Explorer (NICER) missions.

GravityCam can play a major role in this emerging field. Its natural advantages include fast sampling on timescales of ∼0.1 s, low deadtime, and a wide field of view allowing simultaneous observations of comparison stars. Typical peak magnitudes of black hole X-ray binaries are V ∼ 15–17 (Vega), which should be well within reach of a 4-m class telescope. Time-tagging frames with GPS is one option that would enable such science.

4.3. Transits of hot planets around cool stars

A further type of variability that will show up in the GravityCam data sets is the dip in light from a star produced by a planet passing in front of it (Struve 1952). Normally, confirming the discovery of a planet requires its transit to be recorded several times. The depth of the dip in the star’s light and the length of the transit give important information about the planet and its orbital parameters. Unlike gravitational microlensing, which favours the detection of cool planets, planetary transits favour the detection of hot planets: planets at larger distances have longer orbital periods, and a larger orbit also makes it less probable that the planet is aligned closely enough with its host star to pass in front of it.

While planet population statistics depend on the properties of the respective host stars, the faintness of M stars at optical wavelengths makes them more difficult survey targets than FGK stars. However, M stars are more favourable for detecting small planets because of their smaller radii.
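
The geometric arguments above can be made concrete with the standard relations: transit depth ≈ (R_p/R_*)², transit probability ≈ R_*/a for a circular orbit, and Kepler’s third law for the period. The sketch assumes a dark planet, circular orbits, and a hypothetical M dwarf with R = 0.2 R☉ and M = 0.2 M☉; the 0.02 AU separation is also illustrative.

```python
import math

R_SUN_KM = 695_700.0
R_EARTH_KM = 6_371.0
AU_KM = 1.495_978_707e8


def transit_depth(rp_km, rstar_km):
    """Fractional flux drop for a dark planet fully inside the stellar disc."""
    return (rp_km / rstar_km) ** 2


def transit_probability(rstar_km, a_km):
    """Geometric transit probability ~ R*/a for a circular orbit (planet radius neglected)."""
    return rstar_km / a_km


def period_days(a_au, mstar_msun):
    """Kepler's third law in solar units, P[yr]^2 = a[AU]^3 / M[M_sun]; planet mass neglected."""
    return 365.25 * math.sqrt(a_au ** 3 / mstar_msun)


# Hypothetical comparison: an Earth-sized planet at 0.02 AU around a Sun-like star
# and around an M dwarf with R = 0.2 R_sun and M = 0.2 M_sun
for name, r_star, m_star in [("Sun-like", 1.0, 1.0), ("M dwarf", 0.2, 0.2)]:
    depth = transit_depth(R_EARTH_KM, r_star * R_SUN_KM)
    prob = transit_probability(r_star * R_SUN_KM, 0.02 * AU_KM)
    print(f"{name}: depth {depth * 1e3:.2f} ppt, P_transit {prob:.3f}, "
          f"period {period_days(0.02, m_star):.2f} d")
```

The transit is 25 times deeper around the 0.2 R☉ star, which is why M dwarfs favour the detection of small planets.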

The characteristics of GravityCam differ substantially from those of other instruments, which will lead to a quite different sample. The Next Generation Transit Survey (NGTS; McCormac et al. 2017) and the Transiting Exoplanet Survey Satellite (TESS; Ricker et al. 2015) use small telescopes with low angular resolution (NGTS: 20 cm, 5 arcsec/pixel; TESS: 10.5 cm, 21 arcsec/pixel), restricting these surveys to bright nearby stars. For MEarth (Nutzman & Charbonneau 2008), the pixel scale is smaller (0.76 arcsec/pixel), but the telescope diameter is small as well (40 cm). In contrast, GravityCam can deliver angular resolutions of ∼0.15 arcsec with a 3.6-m telescope, and with CMOS detectors it would reach S/N ∼ 400 at I ∼ 18 with 2 min of integration. GravityCam thereby addresses the crowding of Galactic bulge fields and enables photometry with the 1–10 mmag precision needed to reliably detect exoplanet transits.
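
An S/N value converts to a magnitude error via σ_m ≈ 1.0857/(S/N), so S/N ∼ 400 corresponds to about 2.7 mmag per 2-min point, inside the 1–10 mmag range quoted. The sketch below also estimates the significance of a single box-shaped transit; it assumes white noise, which is optimistic in crowded fields, and the transit depth and duration are hypothetical.

```python
import math


def mag_error_from_snr(snr):
    """Photometric error in magnitudes, 2.5/ln(10)/SNR ~ 1.0857/SNR (small-error limit)."""
    return 2.5 / math.log(10) / snr


def transit_snr(depth, sigma_per_point, n_in_transit):
    """S/N of a box-shaped transit in white noise: depth / (sigma / sqrt(n))."""
    return depth / sigma_per_point * math.sqrt(n_in_transit)


sigma_mmag = 1e3 * mag_error_from_snr(400)  # S/N ~ 400 at I ~ 18 (from the text)
# Hypothetical transit: 2 ppt deep, 1 h long, sampled every 2 min (30 points)
snr_single = transit_snr(2e-3, 1 / 400, 30)
print(f"sigma ~ {sigma_mmag:.2f} mmag per 2-min point; single-transit S/N ~ {snr_single:.1f}")
```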

Using data from the VISTA Variables in Via Láctea (VVV; Minniti et al. 2010) survey, a preliminary investigation (Rojas-Ayala et al. 2014) estimates about 15,000 objects per square degree with 12 < K_s < 16 and colours consistent with M4–M9 dwarfs, as well as ∼900 such objects per square degree with K_s < 13. These M dwarfs are located relatively nearby, at 0.3–1.2 kpc, and their near-infrared colours are only moderately affected by extinction. With this magnitude range corresponding to about 17 < I < 21 for early/mid-M stars (Pecaut & Mamajek 2013), one therefore finds ∼3,000 potential M dwarfs (or ∼180 brighter M dwarfs with I < 18) in a single 0.2 deg² GravityCam field.

GravityCam moreover provides high time resolution, favourable for studies of transit timing variations (e.g. Sartoretti & Schneider 1999; Cáceres et al. 2009, 2014).

5. Other applications

5.1. Galactic star clusters

It is well known that star clusters host numerous tight binaries. These are involved in the dynamical evolution of globular clusters and may also produce peculiar stars with anomalous colours and/or chemical compositions (Jiang, Han, & Li 2015). It has also been predicted that, in globular clusters with multiple populations, the first population could contain a higher fraction of close binaries than the second generation (Hong et al. 2016). Recently, Carraro & Benvenuto (2017) explained the extreme horizontal branch stars of the open cluster NGC 6791 by invoking tight binaries. These recent results indicate that the detection of tight binaries, with periods from approximately 0.2 to several days, could be important for interpreting some crucial observational issues in open and globular star clusters.

GravityCam is well suited to studying stellar multiplicity thanks to its high angular resolution and, moreover, its accurate photometric measurements in crowded fields can detect the eclipse features of binaries. In addition, high-resolution imaging of clusters can yield proper motion measurements for kinematic studies (e.g. Bellini et al. 2014). A survey with GravityCam of selected open clusters with anomalous horizontal branches, and of the central regions of massive globular clusters, in particular those where multiple populations have been detected, with repeated observations spanning several nights, would therefore be valuable.

The picture of Galactic globular clusters obtained so far from the Hubble Space Telescope (HST) (e.g. Milone et al. 2012; Bellini et al. 2014) is far from complete: many very crowded and reddened clusters at low Galactic latitude, as well as some very distant halo clusters, have never been observed with HST, or only at a single epoch, which does not permit proper motions to be inferred.
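
With two epochs, a proper motion is simply the measured position offset divided by the time baseline, and the standard factor of 4.74 km s⁻¹ per (mas yr⁻¹ × kpc) converts it to a tangential velocity. The pixel scale, offsets, baseline and distance in the sketch below are hypothetical; real measurements also need geometric-distortion correction and a reference frame such as the cluster mean motion.

```python
import math

K_KMS = 4.740_47  # km/s per (mas/yr x kpc), equivalently per (arcsec/yr x pc)


def proper_motion(dx_pix, dy_pix, pixscale_mas, baseline_yr):
    """Proper-motion components and total (mas/yr) from a two-epoch pixel offset."""
    mu_x = dx_pix * pixscale_mas / baseline_yr
    mu_y = dy_pix * pixscale_mas / baseline_yr
    return mu_x, mu_y, math.hypot(mu_x, mu_y)


def tangential_velocity(mu_mas_yr, distance_kpc):
    """Tangential velocity (km/s) for a given proper motion and distance."""
    return K_KMS * mu_mas_yr * distance_kpc


# Hypothetical: a 0.03 x 0.04 pixel offset over 5 yr with a 40 mas pixel scale,
# for a cluster star at 8 kpc
_, _, mu = proper_motion(0.03, 0.04, 40.0, 5.0)
print(f"mu = {mu:.2f} mas/yr, v_t = {tangential_velocity(mu, 8.0):.1f} km/s")
```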

GravityCam could also add very important data on new variable stars (mainly RR Lyrae stars), mostly close to the relatively little-explored central regions of clusters. Their accurate photometry and improved statistics are fundamental in order to remove He–age–metallicity degeneracies and to constrain the He abundance (from the luminosity level of the variable gap in the horizontal branch), a topic of much recent interest in globular cluster studies (e.g. Greggio & Renzini 2011; Kerber et al. 2018).

Successful high-resolution monitoring of the central regions of some globular clusters has already been carried out with the small 45 arcsec × 45 arcsec field of the EMCCD camera on the Danish 1.54-m telescope at La Silla (e.g. Figuera Jaimes et al. 2016a,b), leading to the discovery of variable stars not previously identified in HST images. In particular, because the bright stars in the observed globular cluster fields were not saturated, it became possible to discover variable stars around the top of the red giant branch. This explicitly demonstrates that globular cluster systems still need further study and are not as well understood as one might have thought.

5.2. Tracing the dark matter in the Universe

Most of the matter in the Universe is thought to be cold dark matter, forming the skeleton upon which massive luminous structures such as galaxies and clusters of galaxies are assembled. The nature and distribution of dark matter, and how structures have formed and evolved, are themes central to cosmology. The prediction of Einstein’s general theory of relativity that mass deflects electromagnetic radiation underpins the power of gravitational lensing as a unique tool with which to study matter in the Universe. Galaxies and clusters of galaxies can act as gravitational lenses, forming distorted images of distant cosmic sources. If the alignment between lens and source is close, then multiple, highly magnified images can be formed; this is known as strong lensing. If the alignment is less precise, the result is weakly lensed, single, slightly distorted images that must be studied statistically. Observations and analysis of these gravitational lensing signatures are used to constrain the distribution of mass in galaxies and clusters, and to test and refine our cosmological model and our paradigm for structure formation.

High resolution is crucial both for measuring the small distortions of lensed sources in the weak lensing regime and for studying the details of strongly lensed, highly magnified, and distorted multiple images. In fact, weak shear studies look for distortions much smaller than the seeing size. As shown by Massey et al. (2013), and discussed further by Cropper et al. (2013), inherent biases in the measurement of weak lensing observables, caused by the size of the PSF and by imperfect knowledge of it, scale quadratically with the size of the PSF relative to the background galaxy size. The challenge addressed by GravityCam is to obtain exposures in which the PSF is both small and uniform across the field of view.

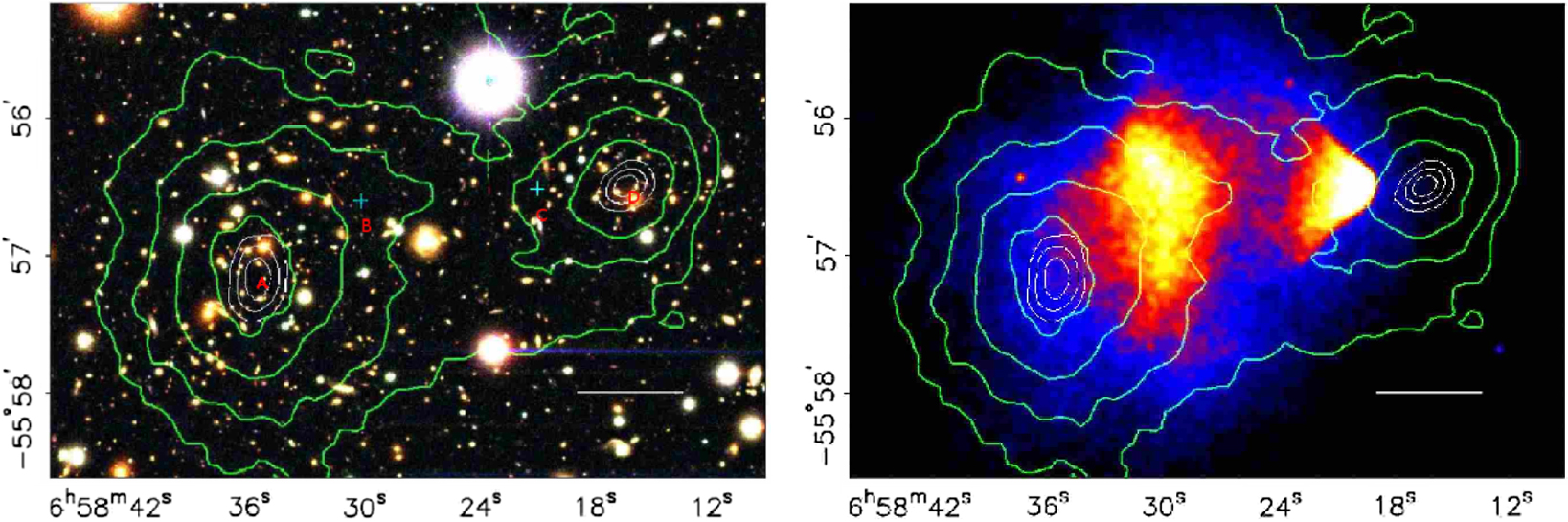

For the Bullet Cluster, Clowe et al. (2006) found that the majority of the total matter density is offset from the stellar and luminous X-ray gas matter densities, and is therefore difficult to explain as anything other than dark matter (Figure 9). In this system, two clusters have collided violently and passed through each other; the hot gas responsible for the X-ray emission in both clusters (the dominant luminous matter component) has been slowed by the collision and remains between the two mass peaks (dominated by dark matter) seen in the lensing mass map (Figure 9). Both the resolution of such observations and the number density of distant background sources are critical to the accuracy with which mass distributions such as this can be mapped.

Given a small and stable PSF, the requirements for accurate mass reconstruction are a large number density of objects (to reduce shot noise in the averaged measured ellipticities of the background galaxies) and a wide field of view (for an efficient observing schedule). The resolution and sensitivity of GravityCam would be comparable to the Advanced Camera for Surveys (ACS) instrument on HST, yet covering a wider field of view, hence excellent for such studies.
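
The shot-noise argument can be quantified: the noise on the mean shear in a cell of area A falls as σ_e/√(nA), where n is the number density of usable background galaxies. The intrinsic ellipticity dispersion σ_e = 0.3 and the two source densities below are assumed, illustrative values, not GravityCam predictions.

```python
import math


def shear_noise(sigma_e, n_per_arcmin2, cell_arcmin2):
    """Shape-noise floor on the mean shear in a cell: sigma_e / sqrt(N_gal)."""
    return sigma_e / math.sqrt(n_per_arcmin2 * cell_arcmin2)


SIGMA_E = 0.3  # assumed intrinsic ellipticity dispersion per component
for label, n_gal in [("ground-like", 10.0), ("space-like", 60.0)]:  # hypothetical densities
    print(f"{label}: sigma_gamma = {shear_noise(SIGMA_E, n_gal, 1.0):.3f} per arcmin^2 cell")
```

Quadrupling the source density halves the shear noise, which is why depth and resolution (more resolvable background galaxies) matter as much as field of view.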

Figure 9. The Bullet Cluster: (left) optical image with contours showing the projected mass derived from lensing; (right) the same lensing mass map contours, now with an X-ray image showing the location of the hot gas (the dominant component of normal matter). Clowe et al. (2006) showed that the mass budget is dominated by dark matter. The projected mass derived from lensing with HST is excellent, but it is extremely hard to recover with any accuracy from ground-based studies.

Observational data and N-body simulations suggest that about 10% of the dark matter in galaxy clusters is in the form of discrete galaxy-scale substructures, the remainder being distributed in a larger-scale dark matter halo. Strong lensing in clusters has been used to investigate the truncation of the dark matter halos of cluster members (e.g. Halkola, Seitz, & Pannella 2007).

Analysis of the distortion signal has been successfully used to determine the distribution of substructure in the galaxy cluster Abell 1689 using both ground-based, wide-field images from Subaru (Figure 10, left panel; Okura, Umetsu, & Futamase 2007) and deep HST/ACS images of the central part of the cluster (Figure 10, right panel; Leonard et al. 2007). GravityCam will combine these characteristics by producing deep, high-resolution, wide-field images.

Figure 10. Central portion of ground-based Subaru image (left) and space-based HST/ACS image (right) of Abell 1689 with contours showing the reconstructed mass distribution from distortion measurements. Note that space-based data are high resolution and typically deeper, whereas ground-based data typically cover a larger area (tens of arc minutes compared to a few arc minutes).

While GravityCam shares several features with the Euclid spacecraft, scheduled for launch in 2020, some characteristics make these instruments complementary for maximising the return on these science drivers. Euclid uses a 1.2-m diameter telescope with an array of CCD detectors covering a field of view about three times larger than that of GravityCam. Its image resolution is almost the same as that of GravityCam, while the NTT, with its larger mirror, has nine times the collecting area. As the spacecraft will be located at the L2 point, communication limitations mean that exposure times will be relatively long. While the Euclid cosmic shear survey will benefit from space-based imaging over a wide field of view, there will be unique systematic effects that must be modelled, for example, charge transfer inefficiency (Holland et al. 1990) and the brighter-fatter effect (Downing et al. 2006). Complementary observations of similar, space-based quality will therefore be valuable for testing and confirming the Euclid measurements. LSST observations will not have sufficient resolution to provide these complementary data, so GravityCam can play a unique role in the context of the Euclid experiment. Furthermore, the Euclid weak lensing measurements are made in a single broad band (RIZ, 500–800 nm) and require space-based narrow-band imaging over a smaller area to calibrate so-called ‘colour gradient’ effects caused by the broad band (see e.g. Semboloni et al. 2013). GravityCam will be able to complement HST by providing such data over large fields of view.

GravityCam also has ideal capabilities for following up background sources strongly lensed by foreground galaxy clusters, with the whole strong lensing region fitting within a dithered GravityCam observation. Euclid is expected to discover ∼5000 galaxy clusters with giant lensing arcs (Laureijs et al. 2011). With angular magnifications of up to ∼100, the stellar populations will be resolvable by GravityCam on scales approaching 25 pc, which is unachievable with the single wide optical passband of Euclid. High magnification events have also been discovered through follow-up of wide-field infrared and sub-mm surveys or of lensing clusters (e.g. Swinbank et al. 2010; Iglesias-Groth et al. 2017; Cañameras et al. 2015; Díaz-Sánchez et al. 2017). Future Stage-IV CMB experiments and proposed missions such as CORE will supply many more high magnification events (e.g. De Zotti et al. 2018). There are already indications in a small number of objects that the entire far-infrared luminosity is dominated by a handful of extreme giant molecular clouds (e.g. Swinbank et al. 2010). The sub-arcsecond angular scales resolved by GravityCam’s optical imaging, tracing stellar populations, will be well matched to the atomic and molecular gas and dust traced by the Atacama Large Millimeter/submillimeter Array (ALMA) in these objects.

5.3. Solar System Objects

Observations of Solar System Objects (SSOs) with GravityCam will take advantage of both the improved spatial resolution compared with natural seeing and the high time resolution of the photometry. The first will be of use in studying binary asteroids, for example, which is currently done with adaptive-optics cameras on 8-m class telescopes (e.g. Margot et al. 2015). By resolving binary pairs, their mutual orbits can be measured, allowing derivation of the mass of the system and, combined with size estimates, its density, the most fundamental parameter for understanding the composition and structure of rocky bodies (Carry 2012). There are 21 such binaries known in the main asteroid belt that could be studied, and about 80 (fainter ones) in the Kuiper Belt. As in all other areas of astronomy, the improved S/N for point sources in the seeing-corrected GravityCam images will also be of use for measuring orbits and light curves (and possibly colours, depending on the availability of multiple filters in the final design) of faint SSOs.
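The mass of a resolved binary follows from Kepler's third law applied to the mutual orbit, M = 4π²a³/(GP²), where a is the semi-major axis of the relative orbit and P the orbital period; the density additionally requires the component sizes. A minimal sketch, assuming spherical components; the Earth–Moon system is used below only as a sanity check, not as an asteroid example.

```python
import math

G = 6.674e-11  # gravitational constant, m^3 kg^-1 s^-2


def system_mass_kg(semi_major_axis_m, period_s):
    """Total mass of a binary from Kepler's third law.

    semi_major_axis_m is the semi-major axis of the *relative* orbit
    (separation of the two components), not of either body about the barycentre.
    """
    return 4.0 * math.pi ** 2 * semi_major_axis_m ** 3 / (G * period_s ** 2)


def bulk_density_kg_m3(total_mass_kg, radii_m):
    """Mean density, treating every component as a sphere of the given radius."""
    volume = sum(4.0 / 3.0 * math.pi * r ** 3 for r in radii_m)
    return total_mass_kg / volume
```

For example, `system_mass_kg(3.844e8, 27.32 * 86400)` returns about 6.0 × 10²⁴ kg for the Earth–Moon system; for an asteroid binary the measured separation and period would be substituted, and the radii would come from, e.g., resolved imaging or occultation chords.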

The primary science case for SSOs, though, is in the time domain. The high readout speed of GravityCam, combined with its large FOV, makes it ideal for studying small bodies via occultations of background stars. Occultation studies are a powerful way to probe small bodies: timing how long the background star disappears gives a direct measurement of the size of a body too small to resolve directly. Multiple chords across the same body, from different observatories that see subtly different occultations, can reconstruct its shape in a way only rivalled by spacecraft visits (Durech et al. 2015). Occultations can also probe atmospheres on larger bodies (e.g. on Pluto: Dias-Oliveira et al. 2015; Sicardy et al. 2016) and discover satellites (Timerson et al. 2013) or even ring systems (Hubbard et al. 1986; Braga-Ribas et al. 2014). For this work, high-speed photometry is a major advantage. In the discovery of the ring system around the small SSO Chariklo, the 10 Hz photometry from the lucky imaging camera (Skottfelt et al. 2015b) on the Danish 1.54-m telescope at La Silla was critical: it not only showed the drop due to the rings to be deep and of short duration (and therefore caused by opaque narrow rings rather than a diffuse coma), but even resolved the two separate rings (Figure 11). Other telescopes with conventional CCD cameras saw only a single partial dip, as the occultation by the rings lasted only a fraction of their few-second integrations.

GravityCam would enable target-of-opportunity observations of occultations by known bodies to probe their size, shape, and surrounding material, with the advantage that the larger primary mirror of the NTT, compared with the 1.54-m Danish telescope, would allow occultations of fainter stars to be observed, greatly increasing the number of potentially observable alignments. With brighter stars, the high frame rate possible with GravityCam would allow the study of fine structure. At the highest readout rate and a typical shadow velocity, we would achieve sub-kilometre spatial resolution across the rings of Chariklo, allowing measurement of the variation of optical depth across their width (Figure 12) and permitting the study of the particle size distribution and of dynamical structures caused by gravity waves and/or oscillation modes driven by Chariklo's mass distribution (Michikoshi & Kokubo 2017).
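The timing arguments above reduce to simple kinematics: a chord length is the shadow velocity multiplied by the duration of the disappearance, the spatial sampling is the distance the shadow moves in one frame, and a short dip that falls inside a long exposure is diluted by the ratio of the crossing time to the exposure time. The sketch below assumes a box-shaped (sharp-edged) dip and ignores diffraction and the finite stellar diameter; the shadow velocity, ring width and depth in the example are hypothetical values.

```python
def chord_length_km(duration_s, shadow_velocity_km_s):
    """Chord length across the occulter: shadow speed times disappearance time."""
    return shadow_velocity_km_s * duration_s


def spatial_sampling_km(frame_rate_hz, shadow_velocity_km_s):
    """Distance the shadow moves past the observer during one frame."""
    return shadow_velocity_km_s / frame_rate_hz


def apparent_drop(true_drop, crossing_s, exposure_s):
    """Fractional flux drop recorded in one exposure when a box-shaped dip of
    length crossing_s falls entirely inside an exposure of length exposure_s.
    If the dip is longer than the exposure, the full depth is recorded."""
    return true_drop * min(1.0, crossing_s / exposure_s)
```

With an assumed shadow velocity of 10 km/s, a hypothetical 7 km wide ring of fractional depth 0.9 is crossed in 0.7 s. At 10 Hz each frame samples 1 km (`spatial_sampling_km(10, 10)`), so the dip is resolved, whereas a 3 s integration records only `apparent_drop(0.9, 0.7, 3.0)` = 0.21, a shallow partial dip.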

Figure 11. Discovery of the rings around Chariklo via occultation of a background star. This high-speed (10 Hz) photometry was collected at the 1.54-m Danish telescope at La Silla with its lucky imaging camera.

Figure 12. Occultation of a bright star by Chariklo's rings, taken at an even faster frame rate (25 Hz) at the SAAO 1.9-m telescope.

Occultations are expected to flourish as a technique in the next few years, as Gaia astrometry vastly improves the accuracy of star positions and minor-body orbits, and therefore the accuracy of event predictions and the hit rate for successful observations (Tanga & Delbo 2007).
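The link between astrometric accuracy and prediction quality can be made concrete: a relative star–body position error δθ, seen at geocentric distance Δ, displaces the predicted shadow path on Earth by roughly Δ·δθ. A minimal sketch using the small-angle approximation; the error and distance values in the example are chosen purely for illustration.

```python
import math

AU_KM = 1.495978707e8                          # astronomical unit, km
MAS_RAD = math.pi / (180.0 * 3600.0 * 1000.0)  # one milliarcsecond in radians


def shadow_path_uncertainty_km(angular_error_mas, distance_au):
    """Approximate cross-track shift of the predicted shadow path on Earth
    caused by a relative star-body position error (small-angle approximation)."""
    return angular_error_mas * MAS_RAD * distance_au * AU_KM
```

For a hypothetical 1 mas error, the predicted path of a body at 40 au shifts by about 29 km (`shadow_path_uncertainty_km(1, 40)`), already a large fraction of the shadow of a body a few tens of kilometres across; errors of tens of mas would make such events effectively unpredictable.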

In addition to such targeted observations, a great strength of GravityCam will be the discovery of unknown minor bodies via occultations caught by chance during other observations. This technique has long been proposed as the best way to detect very small or distant SSOs (Bailey 1976; Nihei et al. 2007). It is the only way to discover Oort cloud objects, which would be far too faint to detect directly even with the largest telescopes, and very small objects in the Kuiper Belt, which are essential for understanding the size distribution down to the size of a typical comet nucleus and below (and therefore for constraining models of comet origins, e.g. Schlichting et al. 2012; Davidsson et al. 2016). The Kuiper Belt size distribution is still poorly understood, even with the latest constraints from counting craters on Pluto in New Horizons images (Greenstreet, Gladman, & McKinnon 2015).

The chance of detecting an occultation depends on many factors (e.g. diffraction effects dependent on the size and relative velocity of the SSO, the finite size and colour of the star, filter choice, S/N, and time sampling; see Nihei et al. 2007), but ultimately it depends mostly on how many stars can be monitored, and for how long. A productive survey should therefore maximise the number of star-hours observed. Pointing at the ecliptic plane is more likely to discover KBOs, but Oort cloud objects could be detected anywhere on the sky. Conveniently, the ecliptic crosses the Galactic plane close to the Galactic Centre, so Baade's window (most suitable for microlensing observations) is by chance also a good place to hunt for SSOs, as it has an ecliptic latitude of only −6.3°.
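Diffraction matters because the occulters of interest are comparable to the Fresnel scale, commonly written F = √(λD/2) for an occulter at distance D, and an event then lasts roughly F divided by the shadow velocity. A minimal sketch; the 500 nm wavelength and the ~25 km/s shadow velocity (roughly Earth's orbital speed minus that of a distant body near opposition) are assumptions for illustration.

```python
import math

AU_M = 1.495978707e11  # astronomical unit, m


def fresnel_scale_km(distance_au, wavelength_nm=500.0):
    """Fresnel scale F = sqrt(lambda * D / 2), in km, for an occulter at distance D."""
    return math.sqrt(wavelength_nm * 1e-9 * distance_au * AU_M / 2.0) / 1e3


def crossing_time_s(length_km, shadow_velocity_km_s):
    """Time for a shadow feature of the given length to sweep past the observer."""
    return length_km / shadow_velocity_km_s
```

At 40 au, `fresnel_scale_km(40)` is about 1.2 km, so with an assumed shadow velocity of 25 km/s a diffraction-dominated event by a sub-kilometre KBO lasts only ~0.05 s, which is why sampling at tens of Hz is needed. F grows as √D, reaching several kilometres at Oort cloud distances.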

When performing the microlensing survey, the combination of the wide field of view with rich star fields will make it possible to obtain fast photometry on many stars at any given time, which can be mined for chance occultations by small bodies. With EMCCD detectors, 5% photometry for individual exposures at 10 and 30 Hz would be possible down to limiting magnitudes of ∼15.5 and ∼14.5, respectively, while the limit would be ∼0.8 mag fainter with CMOS detectors. This gives a fair number of stars per FOV in the Galactic bulge fields that can be followed at good S/N, providing strong constraints on the smallest objects, complemented by the many more stars that will be monitored at S/N comparable to CHIMERA (Harding et al. 2016) or TAOS (Alcock et al. 2003; Lehner et al. 2006). The brightest stars in the bulge fields are likely to be giants, but a reasonable number of main-sequence stars with small apparent diameters, which are more sensitive to occultations by small bodies, will also be included (see Figure 6). Dedicated occultation searches could probably bin detector pixels to increase sensitivity and/or allow faster readout, but the major advantage for GravityCam is that the occultation search will mostly come for ‘free’, as the data from the microlensing searches (or any other observation) can be mined for events. Between 100 billion (with EMCCD detectors) and 1 trillion (with CMOS detectors) star-hours per bulge season at S/N ∼5–10 should be achievable, under fairly conservative assumptions. The only additional requirement is that the data-processing pipeline also records photometry for sources detected in individual readouts, as well as aligning and stacking the frames to produce the seeing-corrected images for deep imaging.
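One plausible contributor to the EMCCD/CMOS difference in limiting magnitude is the EMCCD excess noise factor, which tends to √2 at high multiplication gain and so doubles the shot-noise variance. In the photon-noise-limited regime this is equivalent to halving the detected flux, i.e. 2.5 log₁₀2 ≈ 0.75 mag. The sketch below is a simple single-exposure noise model (5% photometry corresponds to S/N = 20); it is not the calculation behind the figures quoted above, and quantum efficiency, sky level and read noise are left as user-supplied assumptions.

```python
import math


def snr(signal_e, sky_e=0.0, read_noise_e=0.0, excess_noise_factor=1.0):
    """S/N of one exposure, all quantities in electrons. Shot noise from source
    and sky is scaled by the EMCCD excess noise factor; read noise adds in quadrature."""
    shot_var = excess_noise_factor ** 2 * (signal_e + sky_e)
    return signal_e / math.sqrt(shot_var + read_noise_e ** 2)


def limiting_signal_e(target_snr, **noise):
    """Smallest source signal (electrons) reaching target_snr, by bisection.
    Relies on snr() increasing monotonically with signal."""
    lo, hi = 0.0, 1.0
    while snr(hi, **noise) < target_snr:  # bracket the root
        hi *= 2.0
    for _ in range(100):
        mid = 0.5 * (lo + hi)
        if snr(mid, **noise) < target_snr:
            lo = mid
        else:
            hi = mid
    return hi
```

With no sky or read noise, `limiting_signal_e(20.0)` is 400 e⁻, while `limiting_signal_e(20.0, excess_noise_factor=math.sqrt(2.0))` is 800 e⁻, a limiting-magnitude penalty of 2.5 log₁₀(800/400) ≈ 0.75 mag; adding a CMOS read noise of order 1 e⁻ changes this only slightly at these signal levels.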

6. The GravityCam instrument

Large telescopes with wide fields of view are relatively uncommon unless they have been designed with complicated (and often very expensive) corrector optics. The detectors we propose have pixel sizes in the range 10–24 μm, which matches the plate scale of the NTT well. For the purposes of this paper we will assume that GravityCam will be mounted on a Nasmyth focus of the telescope and that the detectors will have 16 μm (86 milliarcsec) pixels. The NTT has a 0.5°-diameter field of view with Ritchey–Chrétien optics and a plate scale of 5.36 arcsec/mm. This allows us to mount GravityCam on the NTT without any reimaging optics apart from a field flattener integrated into the front window of the detector package. The simplest version of GravityCam consists of a close-packed array of detectors, all operating synchronously to minimise inter-detector interference. The light from the telescope passes through an atmospheric dispersion corrector (ADC), which is essential to give good-quality images free from residual atmospheric chromatic dispersion. This is particularly important in crowded-field imaging as well as where accurate measurement of galaxy shapes is critical. Telescopes such as the LSST that hope to avoid using an ADC are likely to have significant problems, particularly with studies such as the weak shear gravitational lensing programme described above. Interchangeable filter units may be mounted in front of the detectors should they be required. It is worth mentioning that gravitational lensing is a completely achromatic process (while occultations are mostly achromatic) and, unless there is a good scientific case for a filter, filters may be dispensed with in order to give the highest sensitivity for the survey in question. However, in some cases it will be important to obtain detailed information on the stars and galaxies being targeted. Asteroseismologists, for example, need to know the colours of stars in order to understand their internal structure, which will require appropriate filters for these measurements.
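Two numbers in this paragraph can be checked directly: the pixel scale (pixel size multiplied by the plate scale) and the size of the atmospheric dispersion that the ADC must remove, compared with that pixel scale. The sketch below uses the Edlén (1953) dispersion formula for standard dry air (15 °C, 760 mmHg), scales it to other conditions with a simple ideal-gas density ratio, and uses the plane-parallel approximation R ≈ (n − 1) tan z. Humidity is ignored, and the site pressure in the example is an assumption.

```python
import math

RAD_ARCSEC = 180.0 * 3600.0 / math.pi  # arcseconds per radian


def pixel_scale_mas(pixel_um, plate_scale_arcsec_per_mm=5.36):
    """Angular size of one pixel in milliarcseconds."""
    return pixel_um * 1e-3 * plate_scale_arcsec_per_mm * 1e3


def refractivity(wavelength_um, pressure_mmhg=760.0, temperature_c=15.0):
    """n - 1 for dry air from the Edlen (1953) formula at 15 C and 760 mmHg,
    scaled to other conditions by an ideal-gas density ratio (approximation)."""
    s2 = (1.0 / wavelength_um) ** 2
    n_minus_1 = 1e-6 * (64.328 + 29498.1 / (146.0 - s2) + 255.4 / (41.0 - s2))
    density_ratio = (pressure_mmhg / 760.0) * (288.15 / (273.15 + temperature_c))
    return n_minus_1 * density_ratio


def differential_refraction_arcsec(blue_um, red_um, zenith_deg, **site):
    """Refraction difference between two wavelengths, plane-parallel R ~ (n-1) tan z."""
    tan_z = math.tan(math.radians(zenith_deg))
    return (refractivity(blue_um, **site) - refractivity(red_um, **site)) * tan_z * RAD_ARCSEC
```

For 16 μm pixels, `pixel_scale_mas(16)` gives about 86 mas. Between 0.5 and 0.8 μm at 45° zenith distance, standard sea-level conditions give about 0.8 arcsec of dispersion, roughly 9–10 pixels; a lower site pressure (e.g. `pressure_mmhg=570.0`, an assumed value for a mountain site) reduces this proportionally but leaves it many pixels long without an ADC.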

The GravityCam instrument enclosure will be mounted on the image rotator that is part of the NTT Nasmyth platform (see Figure 1), and no mechanisms other than a filter changer and the ADC are needed. The detector package will need to be cooled to between −50°C and −100°C to minimise dark current in the detectors. It will therefore need to be contained within a vacuum dewar enclosure, cooled using either a recirculating chiller or liquid nitrogen. Each detector will have its own driver electronics and a direct interface to its host computer. A modular structure is essential so that individual modules can be replaced quickly in case of failure, allowing the entire camera dewar to be pumped down and cooled in good time before the next observing night. The volume of the entire GravityCam package mounted on the Nasmyth platform of the NTT might be about 1 m³. The computer system needed to serve the large number of detectors would be very much bigger, but does not need to be located particularly close to the detector package.
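The size of the computer system follows largely from the raw pixel rate. A rough sketch, assuming the 0.5° field, the 5.36 arcsec/mm plate scale and 16 μm pixels given above; the fill factor of the focal plane, the 16-bit sample size and the 25 Hz frame rate are hypothetical round numbers, not the GravityCam specification.

```python
import math


def focal_plane_diameter_mm(field_deg=0.5, plate_scale_arcsec_per_mm=5.36):
    """Linear diameter (mm) of the focal plane covering the given field."""
    return field_deg * 3600.0 / plate_scale_arcsec_per_mm


def raw_data_rate_bytes_s(pixel_um=16.0, frame_rate_hz=25.0, bytes_per_pixel=2,
                          fill_factor=1.0, **optics):
    """Raw pixel data rate if a fraction fill_factor of the circular field is
    tiled with detectors that are all read out at frame_rate_hz."""
    d_mm = focal_plane_diameter_mm(**optics)
    n_pixels = fill_factor * math.pi / 4.0 * (d_mm / (pixel_um * 1e-3)) ** 2
    return n_pixels * bytes_per_pixel * frame_rate_hz
```

With these assumptions the focal plane is about 336 mm across, and fully tiling it gives a raw rate of roughly 17 GB/s (`raw_data_rate_bytes_s()`); real-time alignment and stacking would reduce what must be stored, but not what must be processed.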

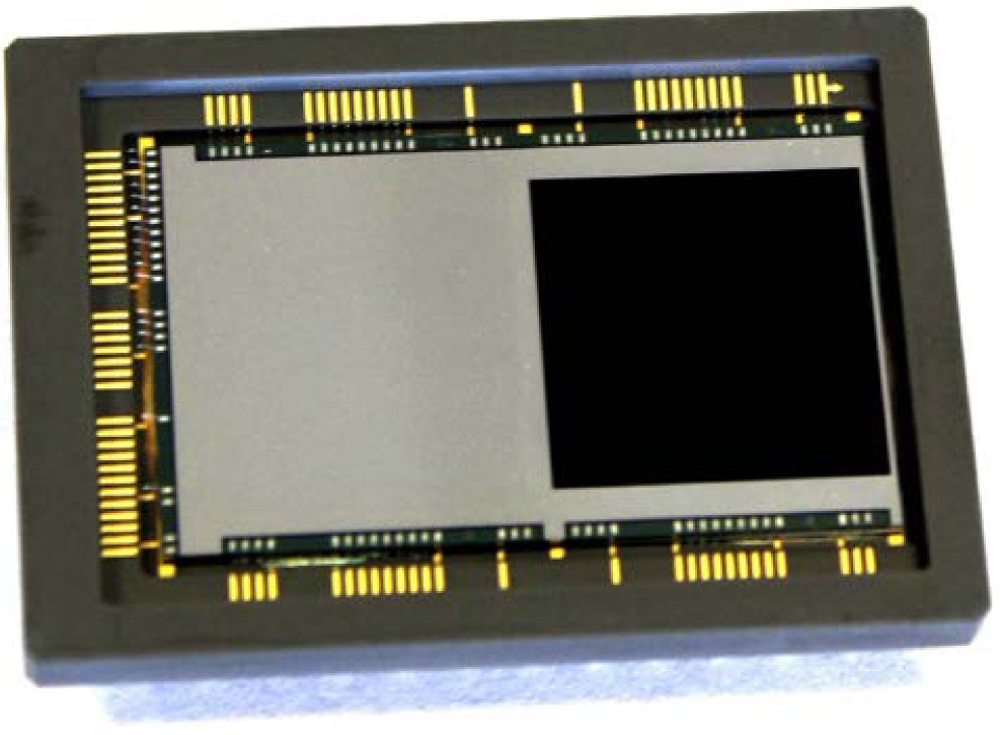

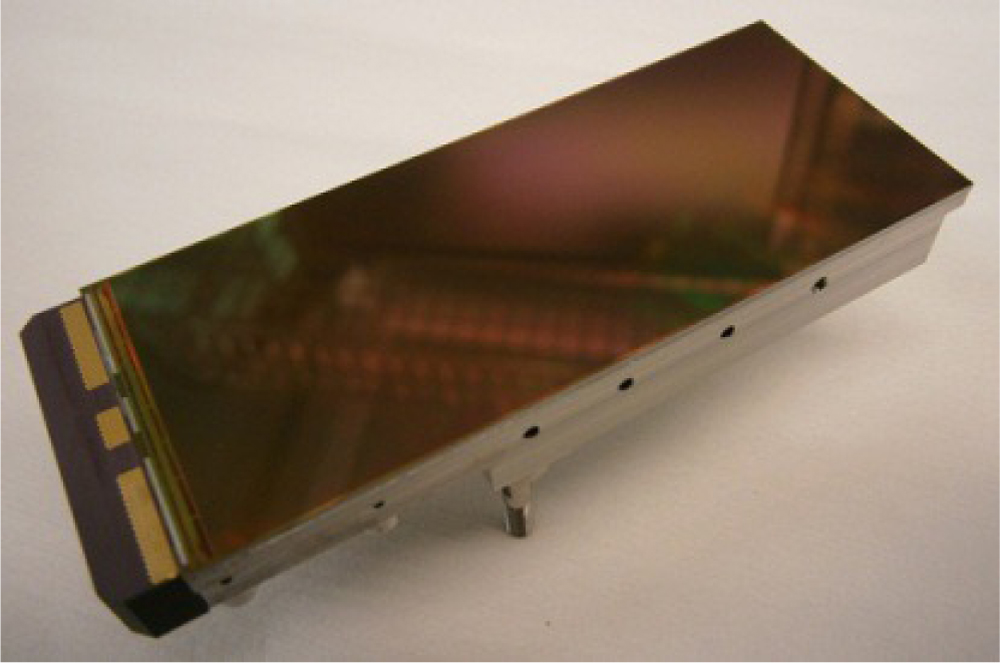

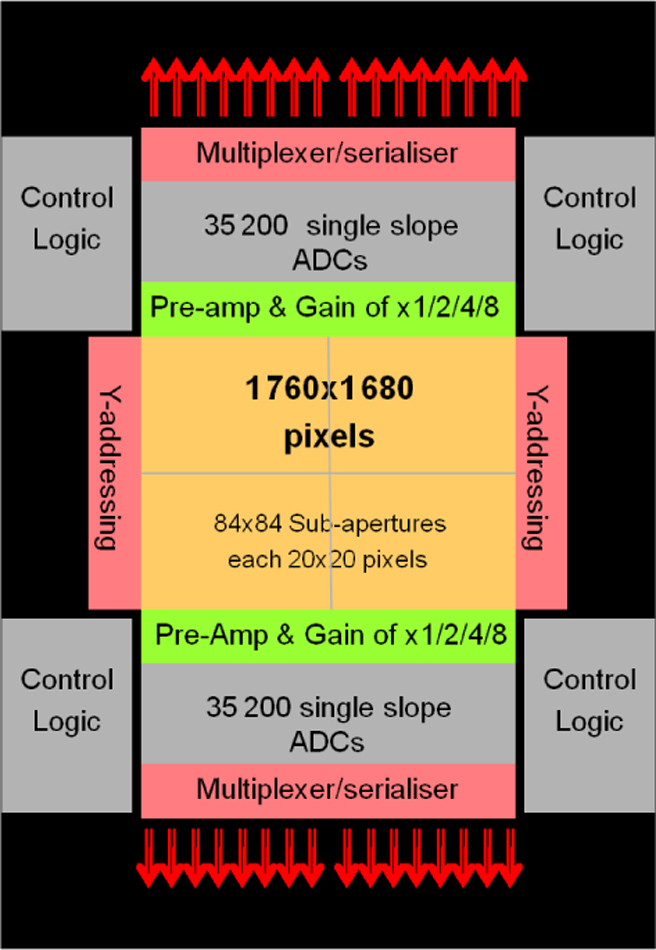

7. GravityCam detector package