CLINICIAN'S CAPSULE

What is known about the topic?

Resuscitation entrustable professional activities (EPAs) are assessed in workplace and simulated environments, but limited validity evidence exists for these assessments in either setting.

What did this study ask?

Do EPA F1 ratings improve over time, and is there an association between ratings in the workplace versus simulation environment?

What did this study find?

EPA ratings improved over time in both environments, but ratings in the two environments were not correlated. Ratings were higher in the workplace setting.

Why does this study matter to clinicians?

There is some validity evidence for EPA assessments in the simulated environment, but further studies are needed.

INTRODUCTION

Postgraduate medical education in Canada is restructuring to a competency-based model of education called Competency by Design, which emphasizes demonstration of competencies required for patient care.1 A cornerstone of Competency by Design is the concept of entrustable professional activities (EPAs), which are tasks specific to a discipline and stage of training.2 Assessment of EPAs requires supervisors to document performance on EPA assessment forms, which use a rating scale that incorporates entrustment anchors.Reference Rekman, Gofton, Dudek, Gofton and Hamstra3 Each EPA is assessed using a different EPA assessment form designed for national use by each discipline's specialty committee.Reference Sherbino, Bandiera and Doyle4 Despite the widespread implementation of these forms across specialties and training programs, validity evidence for their use is lacking.

The Royal College of Physicians and Surgeons of Canada (RCPSC) Emergency Medicine (EM) Specialty Committee indicates that certain EPAs may be assessed in either simulated or workplace environments.Reference Sherbino, Bandiera and Doyle4,5 Simulation provides learners with structured educational experiences to promote deliberate practice and feedback in a safe learning environment without risk to patient safety.Reference Ziv, Wolpe, Small and Glick6 It has been shown to be an effective instructional method in health care education,Reference Cook, Brydges and Hamstra7 and a growing body of literature supports the translational outcomes of simulation-based training. Two recent reviews demonstrated that simulation-based mastery learning can lead to improved patient care practices and outcomes.Reference McGaghie, Issenberg, Barsuk and Wayne8,Reference Brydges, Hatala, Zendejas, Erwin and Cook9 More recently, simulation has increasingly been used for low- and high-stakes assessment of clinical competence across medical specialties,Reference Chiu, Tarshis and Antoniou10 including EM.Reference Hall, Chaplin and McColl11 While there are well-established benefits to learning in the simulation setting, a systematic review by Cook et al. reported that the validity evidence for simulation-based assessment is sparse and concentrated within specific specialties and assessment tools.Reference Cook, Brydges, Zendejas, Hamstra and Hatala12 Additionally, there are few studies directly correlating simulation-based assessments with performance in authentic, workplace environments.Reference Hall, Chaplin and McColl11 A recent multicenter study demonstrated a weak to moderate correlation between simulation-based assessments and in-training rotation evaluations.Reference Hall, Damon Dagnone and Moore13 Another study found a moderately positive correlation between simulation and workplace assessments of resuscitation skills using a locally derived assessment tool with limited validity evidence.Reference Weersink, Hall, Rich, Szulewski and Dagnone14

There is limited validity evidence for the use of EM EPA assessment forms in the simulated and workplace environments, and it remains unclear whether EPA ratings in simulation reflect real-world performance. As Competency by Design curricula increasingly incorporate elements of simulation-based assessment, it is important to begin collecting evidence for the validity of EPA ratings in both settings. Applying modern validity theory using Kane's framework,Reference Brennan15 this study sought evidence to support an extrapolation inference (ratings in the “test world” reflect real-world performance) by examining whether (a) EPA ratings in the simulated and workplace settings correlate and (b) EPA ratings improve with progression through training.

METHODS

Study design and setting

We conducted a prospective observational study to compare ratings of resident resuscitation performance in both the workplace and simulated environments. This study was conducted at The Ottawa Hospital Department of Emergency Medicine. This study was deemed exempt from ethics review by the Ottawa Health Science Network Research Ethics Board.

Population

All first-year residents (n = 9) enrolled in the RCPSC-EM program at the University of Ottawa during the 2018–2019 academic year were included.

Clinical workplace assessments

The EM Foundations of Training EPA F1 focuses on the early stages of resuscitation, including the initial management of patients experiencing shock, dysrhythmias, respiratory distress, altered mental status, and cardiopulmonary arrest.5 When residents complete an assessment of a critically ill patient under direct observation by their supervisor, they are eligible and encouraged to have an assessment of EPA F1 completed. This assessment can be initiated by either the resident or the supervising physician in the resident's electronic portfolio, and details of the case, including patient demographics, case complexity, and clinical presentation, are documented (see the online Supplemental Appendix A). The supervisor assigns a global rating of the observed performance using the 5-point rating scale adopted by the Royal College to rate EPA performance.Reference Gofton, Dudek, Wood, Balaa and Hamstra16,Reference Gofton, Dudek, Barton and Bhanji17 The supervisor also provides and documents targeted feedback to the resident guided by the EPA milestones (the component skills required to perform the EPA). Milestone ratings are not required.

Simulated environment assessments

In the first year of training, the study cohort was scheduled for six high-fidelity simulation sessions. At each session, simulation cases were run in parallel rooms. Three residents per room each led a unique simulated scenario designed to optimize the conceptual, physical, and experiential realism of the case.Reference Rudolph, Simon and Raemer18 Cases included resuscitation of simulated patients presenting with shock, dysrhythmia, respiratory distress, traumatic injury, altered level of consciousness, and cardiopulmonary arrest (Supplemental Appendix B). The team leader for each case was observed and their performance rated at the end of the scenario by two independent assessors (one staff simulation educator, one simulation fellow) in the same manner as in the workplace setting using the EPA F1 form. All ratings were documented before the case debriefing.

The RCPSC-EM Specialty Committee anticipates that residents will progress through the Foundations stage of training during their first year of residency. Therefore, EPA F1 ratings assigned in both the workplace and simulated environments during the 2018–2019 academic year were anonymized and exported into a spreadsheet for analysis.

Data analysis

Data analysis was conducted using SPSS Statistics version 26. Descriptive statistics were calculated including means, standard deviations, and number of assessments per resident.

Reliability of EPA ratings was examined in several ways. First, an intraclass correlation coefficient (ICC) was calculated to examine interrater reliability between EPA simulation ratings across the two raters. Second, generalizability theory (G-theory)Reference Brennan19 was used to estimate the overall reliability of EPA ratings obtained in both workplace and simulation environments. G-theory and the interpretation of G-coefficients are described in Supplemental Appendix C. Given the low-stakes, formative nature of EPAs, a dependability analysis was conducted to determine the number of assessments per resident needed to obtain a reliability of 0.6.
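To illustrate the logic of projecting how many assessments are needed to reach a target reliability, the following is a minimal sketch that assumes a simple one-facet design in which reliability scales with the number of assessments according to the Spearman-Brown relationship. This is a simplification for illustration only and is not the dependability analysis reported in this study; the function names and the example values are hypothetical.

```python
import math


def single_assessment_reliability(g_coefficient: float, n_observed: float) -> float:
    """Back out the reliability of one assessment from a coefficient that
    was estimated on the mean of n_observed assessments (assumed model)."""
    return g_coefficient / (n_observed - (n_observed - 1) * g_coefficient)


def projected_reliability(single_rel: float, n: float) -> float:
    """Reliability of the mean of n assessments (Spearman-Brown)."""
    return n * single_rel / (1 + (n - 1) * single_rel)


def assessments_needed(single_rel: float, target: float = 0.6) -> int:
    """Smallest whole number of assessments whose mean reaches the target."""
    n = target * (1 - single_rel) / (single_rel * (1 - target))
    # Small tolerance so floating-point noise does not add an extra assessment.
    return math.ceil(n - 1e-9)


# Hypothetical example: a coefficient of 0.6 observed with 6 assessments.
r1 = single_assessment_reliability(0.6, 6)
print(round(r1, 3), assessments_needed(r1, target=0.6))
```

Under this assumed model, a lower single-assessment reliability translates into a steeply larger number of assessments required, which is the general pattern a dependability analysis is designed to quantify.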

To examine the relationship between EPA ratings obtained in the workplace and those obtained in simulation environments, we used Lin's concordance correlation coefficient (CCC).Reference Lin20 A detailed description of this analysis is provided in Supplemental Appendix C.
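For readers unfamiliar with the CCC, the sketch below shows how the point estimate is computed from paired values, using the standard definition 2·s_xy / (s_x² + s_y² + (mean_x − mean_y)²) with variances and covariance divided by n. It is an illustration under that assumption rather than the SPSS procedure used in this study, it omits the confidence interval, and the function name and example data are hypothetical.

```python
from typing import Sequence


def concordance_correlation(x: Sequence[float], y: Sequence[float]) -> float:
    """Point estimate of Lin's concordance correlation coefficient."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must be paired and contain at least 2 values")

    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Variances and covariance use the 1/n divisor, as in Lin's definition.
    var_x = sum((a - mean_x) ** 2 for a in x) / n
    var_y = sum((b - mean_y) ** 2 for b in y) / n
    cov_xy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y)) / n

    denominator = var_x + var_y + (mean_x - mean_y) ** 2
    if denominator == 0:
        raise ValueError("CCC is undefined when both series are constant and equal")
    return 2 * cov_xy / denominator


# Hypothetical paired mean ratings (not study data).
workplace = [3.0, 3.5, 3.2, 3.8]
simulation = [2.5, 3.0, 2.7, 3.3]
print(round(concordance_correlation(workplace, simulation), 3))
```

Unlike the Pearson correlation, the CCC penalizes a systematic offset between the two sets of ratings, so two series that rise and fall together but differ in mean will score below 1.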

To determine whether mean EPA ratings from the simulated and workplace environments differed as residents progressed through their training, data were collapsed into three 4-month blocks: months 1–4, 5–8, and 9–12. A within-subjects analysis of variance (ANOVA) was conducted using the mean ratings as the dependent variable and environment (simulation, workplace) and training month (months 1–4, months 5–8, months 9–12) as independent variables. An explanation of this factorial design is provided in Supplemental Appendix C. Bonferroni corrections were applied to all multiple pairwise comparisons. Effect sizes were calculated using partial eta-squared (ηp2) for ANOVAs and Cohen d for t tests. The magnitude of these effect sizes was interpreted using classifications proposed by Cohen.Reference Cohen21,Reference Cohen22
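As an aid to interpreting these effect sizes, the sketch below recovers partial eta-squared from an F statistic and its degrees of freedom using the identity ηp2 = (F × df_effect) / (F × df_effect + df_error), and labels a Cohen d value with the conventional benchmarks of 0.2, 0.5, and 0.8. It assumes that these conventional benchmarks are the classifications referred to above; the function names are hypothetical and the code is not the study's analysis.

```python
def partial_eta_squared(f_value: float, df_effect: float, df_error: float) -> float:
    """Partial eta-squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


def classify_cohen_d(d: float) -> str:
    """Label the magnitude of Cohen d using the conventional benchmarks (assumed)."""
    magnitude = abs(d)
    if magnitude >= 0.8:
        return "large"
    if magnitude >= 0.5:
        return "medium"
    if magnitude >= 0.2:
        return "small"
    return "negligible"


# Example: an effect with F = 7.16 on 1 and 8 degrees of freedom.
print(round(partial_eta_squared(7.16, 1, 8), 2))
print(classify_cohen_d(0.6), classify_cohen_d(1.3))
```

Because partial eta-squared depends only on F and the two degrees of freedom, a reader can use this identity to check a reported ηp2 against its accompanying F statistic.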

RESULTS

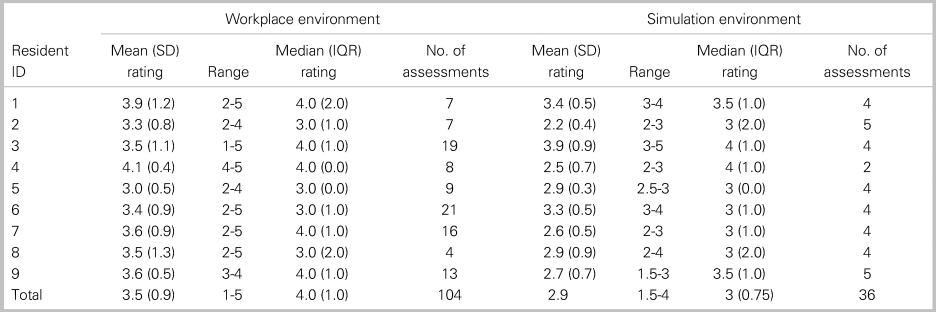

Table 1 reports the mean EPA ratings and number of assessments for each resident in both training environments. A mean of 12 workplace and 4 simulation assessments were collected per resident. Levene's Test for Equality of Variance demonstrated no significant difference in variability of mean EPA ratings across workplace and simulation settings (F = 1.802; p = 0.20). The interrater reliability of simulation assessments was high (ICC = 0.863). Generalizability (G) coefficients for workplace EPA ratings and simulation EPA ratings were 0.35 and 0.75, respectively. Thirty-three workplace EPA assessments and three in the simulated environment would be required to achieve a reliability of 0.6.

Table 1. EPA ratings in each learning environment

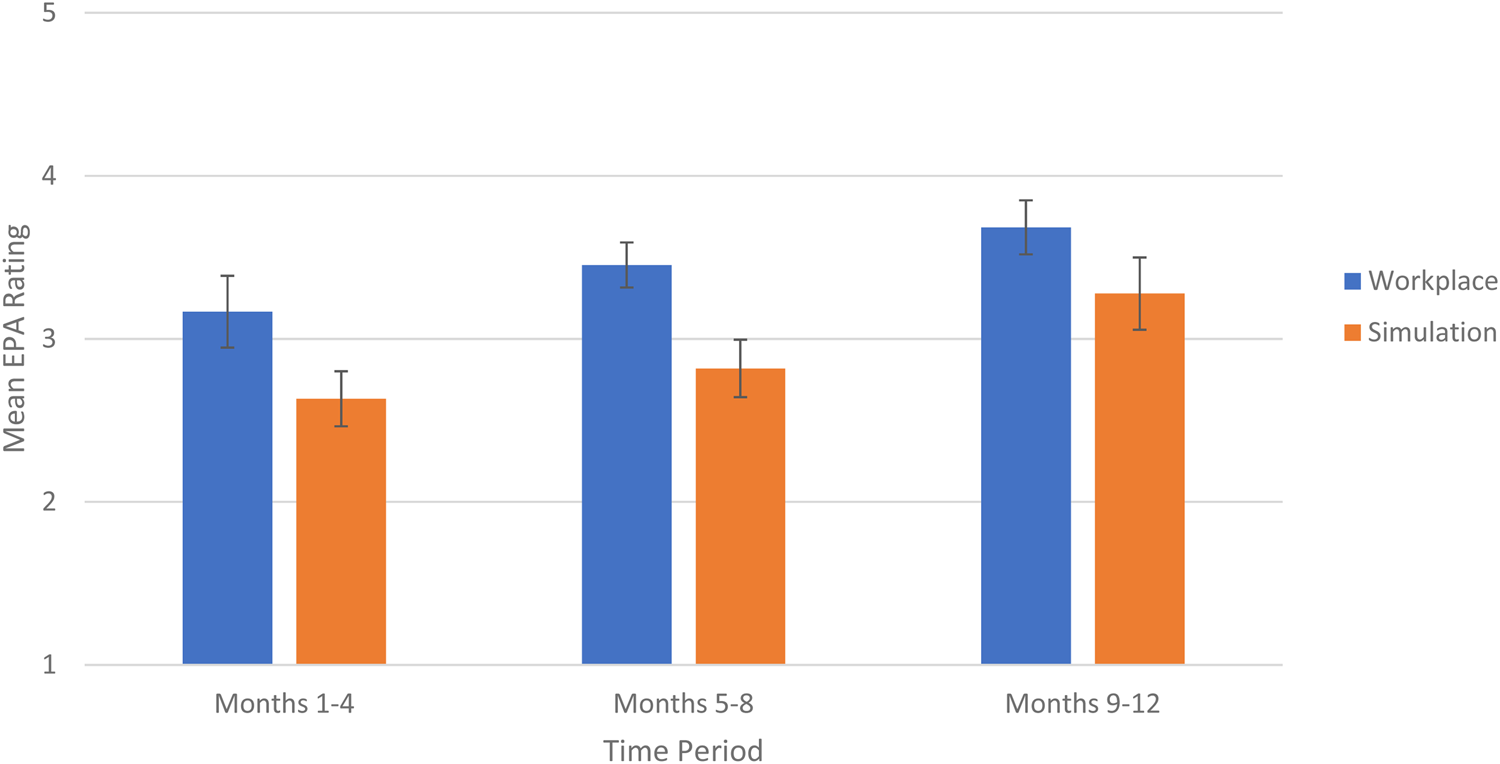

There was no evidence of a relationship between EPA ratings in the simulation and workplace learning environments (CCC(8) = -0.01; 95% CI, -0.31–0.29; p = 0.93). The mean EPA ratings as a function of time and learning environment are shown in Figure 1. There was a main effect of month of training (F(1,8) = 18.79; p < 0.001; ηp2 = 0.70). Subsequent comparisons revealed that mean ratings for months 1–4 (mean(SD) = 2.9(1.2)) were significantly lower than for months 5–8 (3.1(0.1), t(8) = 2.9; p = 0.06; d = 0.6) and months 9–12 (3.5(0.2); t(8) = 5.3; p = 0.002; d = 1.3); similarly, mean ratings for months 5–8 (3.1(0.1)) were significantly lower than for months 9–12 (3.5(0.2); t(8) = 3.0; p = 0.018; d = 0.8). A main effect of environment was also identified (F(1,8) = 7.16; p = 0.028; ηp2 = 0.47), indicating that mean workplace EPA ratings were consistently higher than mean simulation EPA ratings (3.4(0.1) v. 2.9(0.2), respectively). There was no interaction between time and environment (p = 0.80), indicating that the observed difference between workplace and simulation ratings remained constant over time.

Figure 1. Improvement in mean EPA ratings in the workplace v. the simulation environments.

DISCUSSION

We compared resident performance on the initial stages of resuscitation as assessed with EPA F1 in the workplace and simulation environments. Ratings in each environment improved over time and were consistently lower in the simulation setting. There was high interrater reliability among simulation educators. No correlation was observed between ratings of performance in the two environments.

Improvement in ratings over time

Performance ratings in both settings improved over time. This is expected as residents gain knowledge and expertise throughout their training. Applying modern validity theory, this observation supports an extrapolation inference for the validity of the assessment in either setting.Reference Brennan15 Weersink et al. observed a similar improvement in ratings based on resident training year, with more experienced residents scoring higher on assessments in the simulation setting.Reference Weersink, Hall, Rich, Szulewski and Dagnone14 Cheung et al. also observed a significant main effect of training level when residents were assessed in the workplace environment.Reference Cheung, Wood, Gofton, Dewhirst and Dudek23 Our findings suggest that several months of training can yield sufficient data to observe improvements in resuscitation skills and potentially map the trajectory of performance for a given resident cohort. This may facilitate early identification of residents who are falling off the curve and subsequent implementation of modified learning plans.

Rating reliability

The moderate to high reliability of EPA ratings in the simulation setting and the high ICC observed among our simulation assessors further support the validity of assessing resuscitation EPAs in the simulation lab. In contrast, an American study demonstrated poor interrater reliability of milestone ratings among faculty assessors who observed EM resident resuscitation performance in the simulated setting.Reference Wittels, Abboud, Chang, Sheng and Takayesu24 This difference may be partly related to the rating scales used. The Accreditation Council for Graduate Medical Education EM milestones are rated using scales that incorporate descriptive performance anchors unique to each milestone. However, there is a paucity of validity evidence for these scales.Reference Schott, Kedia and Promes25 EPAs in our study were rated using the O-SCORE scale, which incorporates entrustment anchors that reflect increasing levels of independence.Reference Gofton, Dudek, Wood, Balaa and Hamstra16 Several studies have demonstrated multiple sources of validity evidence for the use of this scale in different workplace and simulated contexts, including the emergency department.Reference Gofton, Dudek, Wood, Balaa and Hamstra16,Reference Cheung, Wood, Gofton, Dewhirst and Dudek23,Reference MacEwan, Dudek, Wood and Gofton26–Reference Halman, Rekman, Wood, Baird, Gofton and Dudek29

Ratings in the workplace v. simulation environment

Based on a recent study comparing ratings in the simulated and workplace environment,Reference Weersink, Hall, Rich, Szulewski and Dagnone14 we expected that there would be a positive correlation in ratings. However, our study observed no correlation between EPA F1 ratings in the two settings. One explanation may be the innate variability in workplace exposure experienced by each resident. Cases that residents typically experience in the simulated environment are critically ill patients in extremis or scenarios that are rare or infrequently experienced in the workplace setting.Reference Motola, Devine, Chung, Sullivan and Issenberg30,Reference Petrosoniak, Ryzynski, Lebovic and Woolfrey31 On the other hand, we observed the use of EPA F1 in the workplace setting across a highly variable breadth of acuity, including minor trauma patients, hypotensive patients responsive to fluid administration, as well as critically ill patients with multisystem injuries or cardiac arrest. Therefore, the acuity and complexity of cases reflected in each resident's simulated versus workplace EPA F1 assessments may have differed, making it challenging to detect a correlation in performance between the two settings. If the assessments in each setting truly reflected different case types, the observed differences in mean EPA ratings and the lack of correlation between the two environments in our study may actually represent a form of discriminant validity evidence for EPA assessments.

Similarly, the observed lack of correlation in ratings may have been related to a resident's tendency to select particular cases to be assessed. Ratings in the simulation lab were significantly lower than those in the workplace. Our competence committee observed that most workplace EPA assessments were triggered by residents as opposed to their supervisors. It is possible that residents preferentially ask their supervisors to document assessments of cases in which they performed well, thus systematically biasing workplace case selection and workplace EPA ratings. This form of “gaming the system” has been previously described in the literature, and faculty development resources have been designed to help programs mitigate this assessment bias.Reference Pinsk, Karpinski and Carlisle32,Reference Oswald, Cheung, Bhanji, Ladhani and Hamilton33

Implications for progression through training

By the end of the Foundations stage of training (months 9–12), residents were receiving mean ratings of 3.7 and 3.3 in the workplace and simulated environments, respectively. These ratings suggest that residents were not yet able to perform EPA F1 independently without supervision, a prerequisite for promotion to the next stage of training. Nevertheless, all residents were promoted. Our competence committee observed a discrepancy between EPA ratings and their associated narrative comments. The latter consistently reflected residents’ ability to perform the EPA without supervision, but were associated with ratings that suggested they could not perform the task independently. Based on the narrative comments documented, we suspect the discrepant ratings were due to supervisors rating residents based on their performance of the entire resuscitation, requiring more complex skills and abilities, rather than assessing the specific EPA task of initiating and assisting in the resuscitation. A correlation between ratings in the simulated and workplace settings may not have been observed because faculty were misinterpreting the EPA task. Misinterpretation of an EPA task by supervisors is a potential threat to the validity of these types of assessments and highlights an important ongoing faculty development need within Competency by Design.

Limitations

We observed variation in numbers of workplace assessments between residents. This likely reflects the resident-driven nature of EPA assessments. We attempted to account for this variability by conducting a within-subject analysis and applying G-theory to determine reliability. In a controlled study, all residents would have ideally had similar numbers of workplace and simulated assessments uniformly distributed over the study period. However, our pragmatic, observational design took advantage of real-world implementation of EPAs in a residency program. The observed variation in number of assessments is not unique to our institution and represents a major challenge associated with the implementation of Competency by Design.Reference Chan, Paterson and Hall34 Numbers of workplace EPAs also varied over each study block, while those in simulation remained constant. However, our within-subject analysis of variance was able to account for this difference. Furthermore, this was a single-center study conducted over a short time frame, and results may have been influenced by local cultural norms and assessment patterns, thus limiting the transferability of our findings. Last, all workplace and simulation assessors were unblinded to each participant. Prior experience with each learner carries the risk of biasing current and future assessments,Reference Gauthier, St-Onge and Tavares35 and our methodology did not allow for blinded external assessment of performance.

CONCLUSION

There was no correlation between ratings of resident skills in the initial resuscitation of critically ill patients in the workplace and simulated environments as assessed by EPA F1. Ratings improved over time, and higher ratings were observed in the workplace setting. Factors such as variable case complexity, case selection, and misinterpretation of the assessment task make it challenging to compare ratings of performance in the two environments. Because the results of this study conflict with those of others, it remains unclear whether resuscitation performance in a simulated setting reflects performance in the clinical workplace. As greater emphasis is being placed on simulation as a modality for assessing clinical competence, future studies are needed to clarify these differences and establish an evidence base for the validity of EPA assessments in both environments.

Supplemental material

The supplemental material for this article can be found at https://doi.org/10.1017/cem.2020.388.

Competing interests

There are no conflicts of interest to report.

Author Contributions

N.P., M.O., and W.J.C. conceived the idea. W.J.C. supervised the conduct of the trial and data collection. N.P. collected and managed the data. M.M. conducted statistical analysis of the data. N.P. drafted the manuscript. N.P., M.O., M.M., N.D., and W.J.C. reviewed the manuscript and contributed substantially to its revision. N.P. takes responsibility for the study as a whole.

Financial support

This study was funded by a TOH Department of Emergency Medicine Academic Grant.