Introduction

Nonmonotonic logic (abbreviated as NML) and its domain, defeasible reasoning, are multifaceted areas. In crafting an Element that serves as both an introduction and an overview, we must adopt a specific perspective to ensure coherence and systematic coverage. It is, however, in the nature of illuminating a scenario with a spotlight that certain aspects emerge prominently, while others recede into shadow. The focus of this Element is on unveiling the core ideas and concepts underlying NML. Rather than exhaustively presenting concrete logics from existing literature, we emphasize three fundamental methods: (i) formal argumentation, (ii) consistent accumulation, and (iii) semantic approaches.

An argumentative approach for understanding human reasoning has been proposed both in a philosophical context by Toulmin’s forceful attack on formal logic in Reference Toulmin1958, and more recently in cognitive science by Mercier and Sperber (Reference Mercier and Sperber2011). Pioneers such as Pollock (Reference Pollock1991) and Dung (Reference Dung1995) have provided the foundation for a rich family of systems of formal argumentation.

Consistent accumulation methods are based on the idea that an agent facing possibly conflicting and not fully reliable information is well advised to reason on the basis of only a consistent part of that information. The agent could start with certain information and then stepwise add merely plausible information. In this way they stepwise accumulate a consistent foundation to reason with. Accumulation methods cover, for instance, Reiter’s influential default logic (Reiter, Reference Reiter1980) or methods based on maximal consistent sets, such as early logics by Rescher and Manor (Reference Rescher and Manor1970) and (constrained) input–output logic (Makinson & Van Der Torre, Reference Makinson and Van Der Torre2001).

While the previous two methods are largely based on syntactic or proof-theoretic considerations, interpretation plays the essential role in semantic approaches. The core idea is to order interpretations with respect to normality considerations and then to select sufficiently normal ones. These are used to determine the consequences of a reasoning process or to give meaning to nonmonotonic conditionals. The idea surfaces in the history of NML in many places, among others in Batens (Reference Batens1986), Gelfond and Lifschitz (Reference Gelfond and Lifschitz1988), Kraus et al. (Reference Kraus, Lehman and Magidor1990), McCarthy (Reference McCarthy1980), and Shoham (Reference Shoham and Ginsberg1987).

A central aspect of this Element is its unifying perspective (inspired by works such as Bochman (Reference Bochman2005) and Makinson (Reference Makinson2005). Defeasible reasoning gives rise to a variety of formal models based on different assumptions and approaches. Comparing these approaches can be difficult. The Element presents several translations between NMLs, illustrating that in many cases the same inferences can be validated in terms of diverse formal methods. These translations offer numerous benefits. They enrich our understanding by offering different perspectives: the same underlying inference mechanism may be considered as a form of (formal) argumentation, a way of reasoning with interpretations that are ordered with respect to their plausibility, or as a way of accumulating and reasoning with consistent subsets of a possibly inconsistent knowledge base. They demonstrate the robustness of the underlying inference mechanism, since several intuitive methods give rise to the same result. While the different methodological strands of NML have often been developed with little cross-fertilization, it is remarkable that the resulting systems can often be related with relative ease. Finally, the translations may convince the reader that, despite the fact that the field of NML seems a bit of a rag rug at first sight, there is quite some coherence when taking a deeper dive. In particular, by showcasing formal argumentation’s exceptional ability to represent other NMLs, this Element adds further evidence to the fruitfulness of Dung’s program of utilizing formal argumentation as a unifying perspective on defeasible reasoning (Dung, Reference Dung1995).

The Element is organized in four parts. Part I provides a general introduction to the topic of defeasible reasoning and NML. The three core methods are each introduced in a nutshell. It provides a condensed and self-contained overview of the fundamentals of NML for readers with limited time. Part II to IV deepen on each of the respective methods by providing metatheoretic insights and presenting concrete systems from the literature.

While some short metaproofs that contribute to an improved understanding are left in the body of the Element, two technical appendices are provided for others. In particular, results marked with ‘⋆’ are proven in the appendices.

Many important aspects and systems of NML didn’t get the spotlight and fell victim to the trade-off between systematicity and scope from which an introductory Element of this length will necessarily suffer. Nevertheless, with this Element a reader will grow the wings necessary to maneuver in the lands of nonflying birds, that is, they will be well equipped to understand, say, first-order versions of logics that are discussed here on the propositional level, or systems such as autoepistemic logic.

Part I Logics for Defeasible Reasoning

1 Defeasible Reasoning

1.1 What is Defeasible Reasoning?

We certainly want more than we can get by deduction from our evidence. … So real inference, the inference we need for the conduct of life, must be nonmonotonic.

This Element introduces logical models of defeasible reasoning, so-called NonMonotonic Logics (in short, NMLs). When we reason, we make inferences, that is, we draw conclusions from some given information or basic assumptions. Whenever we reserve the possibility to retract some inferences upon acquiring more information, we reason defeasibly.Footnote 1 Two paradigmatic examples of defeasible inferences are:

| Assumption | Defeasible conclusion | Reason for retraction |

| The streets are wet. | It rained. | The streets have been cleaned. |

| Tweety is a bird. | Tweety can fly. | Tweety is a penguin. |

As the examples highlight, we often reason defeasibly if our available information is incomplete: we lack knowledge of what happened before we observed the wet streets, or we lack knowledge of what kind of bird Tweety is. Defeasible inferences often add new information to our assumptions: while being explanatory of the streets being wet, the fact that it rained is not contained in the fact that the streets are wet, and while being able to fly is a typical property of birds, being a bird does not necessitate being able to fly. In this sense defeasible inferences are ampliative.

Logics that may lose conclusions once more information is acquired are called nonmonotonic. The vast majority of logics the reader will typically encounter in logic textbooks are monotonic, with classical logic (in short, CL) being the celebrity. Whenever the given assumptions are true, an inference sanctioned by CL will securely pass the torch from the assumptions to the conclusion, warranting with absolute certainty the truth of the conclusion. Truth is preserved in inferences sanctioned by CL. No matter how much information we add, how many inferences we chain between our premises and our final conclusion, or how often the torch is passed, truth endures: the flames reach their final destination. Thus, inferences are never retracted in CL, and conclusions accumulate the more assumptions we add. This property, called monotonicity, is highly desirable for certain domains of reasoning such as mathematics, a domain where CL reigns.

However, a key motivation behind the development of NML is that out in the wild of commonsense, expert, or scientific reasoning, good inferences need not be truth preservational: we often change our minds and retract inferences when watching a crime show and wondering who the most likely murderer is; medical doctors may change their diagnosis with the arrival of more evidence, and so do scientists, sometimes resulting in scientific revolutions. In less idealized circumstances than those of purely formal sciences (such as mathematics), we usually need to reason with incomplete, sometimes even conflicting information. As a consequence, our inferences allow for exceptions and/or criticism. They are adaptable: learning or inferring more information may cause retraction, previous inferences may get defeated. Outside the ivory tower of mathematics, in the stormy domain of commonsense reasoning, the torch’s fire may get extinguished.

It is therefore not surprising that examples of defeasible reasoning are abundant. In what follows, we will list some paradigmatic examples.

Example 1. We first imagine a scenario at a student party.Footnote 2

Table 001Long description

The example shows five lines of dialogue set at a student party. 1. Peter: I haven't seen Ruth!. 2. Mary: Me neither. If there's an exam the next day Ruth studies late in the library. 3. Peter: Yes, that's it. The logic exam tomorrow!. 4. Anne: But today is Sunday. Isn't the library closed question mark. 5. Peter: True, and indeed there she is, exclamation mark. [pointing to Ruth entering the room].

In her reply to Peter’s observation concerning Ruth’s absence (1), Mary states a regularity in form of a conditional (2): If there’s an exam the next day, Ruth studies late in the library. She offers an explanation as to why Ruth is not around. The explanation is hypothetical, since she doesn’t offer any insights as to whether there is an exam. Peter supplements the information that, indeed, (3) there is an exam. Were our students to treat information (2) and (3) in the manner of CL as a material implication, they would be able to apply modus ponens to infer that Ruth is currently studying late in the library.Footnote 3 And, indeed, after utterance (3) it is quite reasonable for Mary and Peter to conclude that

(⋆) Ruth is not at the party since she’s studying late at the library.

Anne’s statement (4) casts doubt on the argument (⋆), since the library might be closed today. This does not undermine the regularity stated by Mary, but it points to a possible exception. Anne’s statement may lead to the retraction of (⋆), which is further confirmed when Peter finally sees Ruth (5): this is defeasible reasoning in action!

Defaults. Statements such as “Birds fly.” allow for exceptions. It is therefore not surprising that one of the most frequent characters in papers on NML is Tweety. While the reader may sensibly infer that Tweety can fly when they are told that Tweety is a bird, they might be skeptical when being informed that Tweety lives at the South Pole, and most definitely will retract the inference as soon as they hear that Tweety is a penguin.Footnote 4 As we have also seen in our example, we often express regularities in the form of conditionals – so-called default rules, or simply defaults – that hold typically, mostly, plausibly, and so on, but not necessarily.

Closed-World Assumption. Often, defeasible reasoning is rooted in the fact that communication practices are based on an economic use of information. When making lists such as menus at restaurants or timetables at railway stations, we typically only state positive information. We interpret (and compile) such lists under the assumption that what is not listed is not the case. For instance, if a meal or connection is not listed, we consider it not to be available. This practice is called the closed-world assumption (Reiter, Reference Reiter1981).

Rules with Explicit Exceptions. Before presenting more examples of defeasible reasoning, let us halt for a moment to address a possible objection. Is CL really inadequate as a model of this kind of reasoning? Can’t we simply express all possible exceptions as additional premises? For instance,

(†) If there’s an exam the next day and the library is open late and Ruth is not ill and on her way didn’t get into a traffic jam and …, then Ruth studies late in the library.

There are several problems with this proposal. The first concerns the open-ended nature of the list of exceptions which characterizes most rules that express what typically/usually/plausibly/and so on holds. Even in the (rare) cases in which it is – in principle – possible to compile a complete list of exceptions, the resulting conditional will not adequately represent a reasoning scenario in which our agent may not be aware of all possible exceptions. They may merely be aware of the possibility of exceptions and be able, if asked for it, to list some (such as penguins as nonflying birds). Others may escape them (such as kiwis), but they would readily retract their inference that Tweety flies after learning that Tweety is a kiwi. In other words, the complexities involved in generating explicit lists of exceptions are typically far beyond the capacities of real-life and artificial agents. What is more, in order to apply modus ponens to conditionals such as (†), our reasoner would have to first check for whether each possible exception holds. This may be impossible for some, for others unfeasible, and altogether it would render out of reach the pace of reasoning that is needed to cope with their real-life situation.

In contrast to reasoning from fixed sets of axioms in mathematics, commonsense reasoning needs to cope with incomplete (and possibly conflicting) information. In order to get off the ground, it (a) jumps to conclusions based on regularities that allow for exceptions and (b) adapts to potential problems in the form of exceptional circumstances on the fly, by means of the retraction of previous inferences.

Abductive Inferences. Another type of defeasible reasoning concerns cases in which we infer explanations of a given state of affairs (also called abductive inferences). For instance, upon seeing the wet street in front of her apartment, Susie may infer that it rained, since this explains the wetness of the streets. However, when Mike informs her that the streets have been cleaned a few minutes ago, she will retract her inference. We see this kind of inference often in diagnosis and investigative reasoning (think of Sherlock Holmes or a scientist wondering how to interpret the outcome of an experiment). As both the exciting histories of the sciences and the twisted narratives of Sir Arthur Conan Doyle reveal, abductive inference is defeasible.

Inductive Generalizations. In both scientific and everyday reasoning, we frequently rely on inductive generalizations. Having seen only white swans, a child may infer that all swans are white, only to retract the inference during a walk in the park when a black swan crosses their path.

These are some central, but far from the only types of defeasible inferences. A more exhaustive and systematic overview can be found, for instance, in Walton et al. (Reference Walton, Reed and Macagno2008), where they are informally analyzed in terms of argument schemes.Footnote 5

1.2 Challenges to Models of Defeasible Reasoning

Formal models of defeasible reasoning face various challenges. Let us highlight some.

1.2.1 Human Reasoning and the Richness of Natural Language

As we have seen, defeasible reasoning is prevalent in contexts in which agents are equipped with incomplete and uncertain information. By providing models of defeasible reasoning, NMLs are of interest to both philosophers investigating the rationality underlying human reasoning and computer scientists interested in the understanding and construction of artificially intelligent agents. Human reasoning has a peculiar status in both investigations in that selected instances of it serve as role models of rational and successful artificial reasoning. After all, humans are equipped with a highly sophisticated cognitive system that has evolutionarily adapted to an environment of which it only has incomplete and uncertain information. Therefore, it seems quite reasonable to assume that we can learn a good deal about defeasible reasoning, including the question of what is good defeasible inference, by observing human inference practices.

There are, however, several complications that come with the paradigmatic status of human defeasible reasoning. First, human reasoning is error-prone, which means we have to rely on selected instances of good reasoning. But what are exemplars of good reasoning? In view of this problem, very often nonmonotonic logicians simply rely on their own intuitions. There are good reasons why one should not let expert intuition be the last word on the issue. We may be worried, for instance, about the danger of myside bias (also known as confirmation bias; see Mercier and Sperber (Reference Mercier and Sperber2011)): intuitions may be biased toward satisfying properties of the formal system that is proposed by the respective scholar.

Then, there is the possibility of “déformation professionnelle,” given that the expert’s intuitions have been fostered in the context of a set of paradigmatic examples about penguins with the name Tweety, ex-US presidents (see Examples 2 and 3), and the like.Footnote 6

Another complication is the multifaceted character of defeasible reasoning in human reasoning. First, there is the variety of ways we can express in natural language regularities that allow for exceptions. We have “Birds fly,” “Birds typically fly,” “Birds stereotypically fly,” “Most birds fly,” and so on, none of which are synonymous: for example, while tigers stereotypically live in the wild, most tigers live in captivity. What is more important, the different formulations may give rise to different permissible inferences. Consider the generic “Lions have manes.” While having a mane implies being a male lion, “Lions are males” is not acceptable (Pelletier & Elio, Reference Pelletier and Elio1997). The inference pattern blocked is known as right weakening: if ![]() by default implies

by default implies ![]() , and

, and ![]() follows classically from

follows classically from ![]() , then

, then ![]() follows by default from

follows by default from ![]() as well. It is valid in most NMLs, and it seems adequate for the “typical,” “stereotypical,” and “most” reading of default rules, but not for some generics.Footnote 7 For NMLs this poses the challenge to keep in mind the intended interpretation of defaults and differences in the underlying logical properties that various interpretations give rise to.

as well. It is valid in most NMLs, and it seems adequate for the “typical,” “stereotypical,” and “most” reading of default rules, but not for some generics.Footnote 7 For NMLs this poses the challenge to keep in mind the intended interpretation of defaults and differences in the underlying logical properties that various interpretations give rise to.

Despite these problems, it seems clear that “reasoning in the wild” should play a role in the validation and formation of NMLs.Footnote 8 This pushes NML in proximity to psychology. In practice, nonmonotonic logicians try to strike a good balance by obtaining metatheoretically well-behaved formal systems that are to some degree intuitively and descriptively adequate relative to (selected) human reasoning practices.

1.2.2 Conflicts and Consequences

Defeasible arguments frequently conflict. This poses a challenge for normative theories of defeasible reasoning, which must specify the conditions under which inferences remain permissible in such scenarios.

For this discussion, some terminology and notation will be useful. An argument (in our technical sense) is obtained by either stating basic assumptions or by applying inference rules to the conclusions of other arguments. An argument is defeasible if it contains a defeasible rule (such as a default), symbolized by ![]() . Such an argument may include also truth-preservational strict inference rules (such as the ones from CL), symbolized by

. Such an argument may include also truth-preservational strict inference rules (such as the ones from CL), symbolized by ![]() . A conflict between two arguments arises if they lead to contradictory conclusions

. A conflict between two arguments arises if they lead to contradictory conclusions ![]() and

and ![]() (where

(where ![]() denotes negation).

denotes negation).

Let us now take a look at two paradigmatic examples.

Example 2 Nixon; Reiter and Criscuolo (1981). One of the most well-known examples in NML is the Nixon Diamond (see Fig. 1):Footnote 9

- 1. Nixon is a Dove.

- 2. Nixon is a Quaker.

- 3. By default, Doves are Pacifists.

- 4. By default, Quakers are not Pacifists.

Example 3 (Tweety; Doyle and McDermott (1980)). Another well-known example is Tweety the penguin (see Fig. 2) based on the following information:

- 1. Tweety is a penguin.

- 2. Penguins are birds.

- 3. By default, birds fly.

- 4. By default, penguins don’t fly.

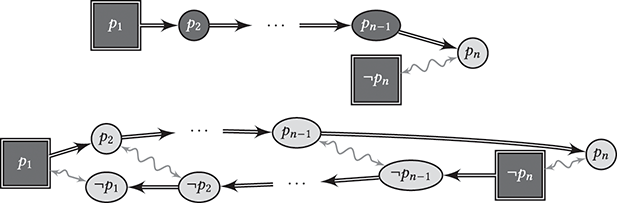

Figure 1 The Nixon Diamond from Example 2. Double arrows symbolize defeasible rules, single arrows strict rules, and wavy arrows conflicts. Black nodes represent unproblematic conclusions, while light nodes represent problematic conclusions. Rectangular nodes represent the starting point of the reasoning process. We use the same symbolism in the following figures.

Figure 2 Tweety and specificity, Example 3.

Figure 2Long description

A single arrow from penguin leads to bird (black node). A double arrow from penguin leads to ¬ fly (black node) and from bird leads to fly (light node). A way arrow is drawn between fly and ¬fly.

Our examples indicate that, first, conflicts between defeasible arguments can occur, and second, the context may determine whether and, if so, how a conflict can be resolved. We now take a look at two further challenges that come with conflicts in defeasible reasoning.

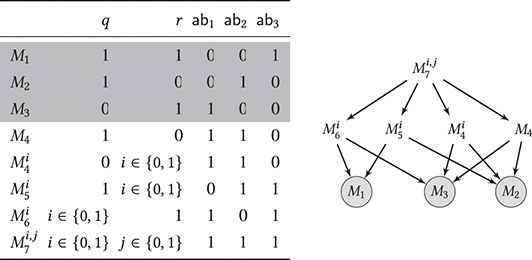

Figure 3 encodes the following information: ![]() ,

, ![]() ,

, ![]() , and

, and ![]() . Should we infer

. Should we infer ![]() ? Nonmonotonic logics that block this inference have been said to suffer from the drowning problem (Benferhat et al., Reference Benferhat, Cayrol, Dubois, Lang and Prade1993). Examples like the following seem to suggest that we should accept

? Nonmonotonic logics that block this inference have been said to suffer from the drowning problem (Benferhat et al., Reference Benferhat, Cayrol, Dubois, Lang and Prade1993). Examples like the following seem to suggest that we should accept ![]() .

.

Figure 3 A drowning scenario.

Example 4. We consider the scenario:

- 1. Micky is a dog.

- 2. Dogs normally (have the ability to) to tag along with a jogger.

- 3. Dogs normally (have the ability to) bark.

- 4. Micky lost a leg and can’t tag along with a jogger.

In this example it seems reasonable to infer, ![]() , Micky has the ability to bark, despite the presence of

, Micky has the ability to bark, despite the presence of ![]() . In other contexts one may be more cautious when jumping to a conclusion.

. In other contexts one may be more cautious when jumping to a conclusion.

Example 5. Take the following scenario.

- 1. It is night.

- 2. During the night, the light in the living room is usually off.

- 3. During the night, the heating in the living room is usually off.

- 4. The light in the living room is on.

In this scenario it seems less intuitive to infer, ![]() , The heating in the living room is off. The fact that we have in (4) an exception to default (2) may have an explanation in the light of which also default (3) is excepted. For example, the inhabitant forgot to check the living room before going to sleep, she is not at home and left the light and heating on before leaving, she is still in the living room, and so on.

, The heating in the living room is off. The fact that we have in (4) an exception to default (2) may have an explanation in the light of which also default (3) is excepted. For example, the inhabitant forgot to check the living room before going to sleep, she is not at home and left the light and heating on before leaving, she is still in the living room, and so on.

These examples show that concrete reasoning scenarios often contain a variety of relevant factors that influence what real-life reasoners take to be intuitive conclusions. Specific NMLs typically only model a few of these factors and omit others. For instance, although Elio and Pelletier (Reference Elio and Pelletier1994) and Koons (Reference Koons and Zalta2017) argue that it is useful to track causal and explanatory relations in the context of drowning problems, systematic research in this direction is lacking.

Another class of difficult scenarios has to do with so-called floating conclusions.Footnote 10

These are conclusions that follow from two opposing arguments. For example, formally the scenario may be as depicted in Fig. 4.

Figure 4 A scenario with the floating conclusion ![]() .

.

Example 6. Suppose two generally reliable weather reports:

- 1. Station 1: The hurricane will hit Louisiana and spare Alabama.

- 2. Station 2: The hurricane will hit Alabama and spare Louisiana.

- 3. If the hurricane hits Louisiana, it hits the South coast.

- 4. If the hurricane hits Alabama, it hits the South coast.

The floating conclusion, (5), The storm will probably hit the South coast, may seem acceptable to a cautious reasoner. The rationale being that both reports agree on the upcoming storm and even roughly where it will hit. The disagreement may be due to different weighing of diverse factors in their respective underlying scientific weather models. But the combined evidence of both stations seems to rather confirm conclusion (5) than dis-confirm it. This is not always the case with partially conflicting expert statements, as the next example shows.

Example 7. Assume two expert reviewers, Reviewer 1 and Reviewer 2, evaluating Anne for a professorship. She sent in two manuscripts, A and B.

- 1. Reviewer 1: Manuscript A is highly original, while manuscript B repeats arguments already known in the literature.

- 2. According to Reviewer 1, one manuscript is highly original.

- 3. Reviewer 2: Manuscript B is highly original, while manuscript A repeats arguments already known in the literature.

(We assume the inconsistency of

with

with  .)

.)- 4. According to Reviewer 2, one manuscript is highly original.

Should we conclude that one manuscript is highly original, since it follows from both reviewers’ evaluations? It seems a more cautious stance is advisable. The disagreement may well be an indication of the sub-optimality of each of the two reviews. Indeed, a possible explanation of their conflicting assessments could be that (a) Reviewer 1 is aware of an earlier article ![]() (by another author than Anne) that already makes the arguments presented in

(by another author than Anne) that already makes the arguments presented in ![]() and which is not known to Reviewer 2, and vice versa, that (b) Reviewer 2 is aware of an earlier article

and which is not known to Reviewer 2, and vice versa, that (b) Reviewer 2 is aware of an earlier article ![]() in which similar arguments to those in

in which similar arguments to those in ![]() are presented. In view of this possibility, it would seem overly optimistic to infer that Anne has a highly original article in her repertoire.

are presented. In view of this possibility, it would seem overly optimistic to infer that Anne has a highly original article in her repertoire.

2 Central Concepts

Nonmonotonic logics are designed to answer the question what are (defeasible) consequences of some available set of information. This gives rise to the notion of a nonmonotonic consequence relation. In this section we explain this central concept and some of its properties from an abstract perspective (Section 2.2). Nonmonotonic consequences are obtained by means of defeasible inferences, which are themselves obtained by applying inference rules. We discuss two ways of formalizing such rules in Section 2.3. Before doing so, we discuss some basic notation in Section 2.1.

2.1 Notation and Basic Formal Concepts

Let us get more formal. We assume that sentences are expressed in a (formal) language ![]() . We denote the standard connectives in the usual way:

. We denote the standard connectives in the usual way: ![]() (negation),

(negation), ![]() (conjunction),

(conjunction), ![]() (disjunction),

(disjunction), ![]() (implication), and

(implication), and ![]() (equivalence). We use lowercase letters

(equivalence). We use lowercase letters ![]() as propositional atoms, collected in the set

as propositional atoms, collected in the set ![]() , and uppercase letters

, and uppercase letters ![]() as metavariables for sentences such as

as metavariables for sentences such as ![]() ,

, ![]() or

or ![]() . We denote the set of sentences underlying

. We denote the set of sentences underlying ![]() by

by ![]() . In the context of classical propositional logic and typically in the context of a Tarski logic (see later), this will simply be the closure of the atoms under the standard connectives.Footnote 11 We denote sets of sentences by the uppercase calligraphic letters

. In the context of classical propositional logic and typically in the context of a Tarski logic (see later), this will simply be the closure of the atoms under the standard connectives.Footnote 11 We denote sets of sentences by the uppercase calligraphic letters ![]() ,

, ![]() , and

, and ![]() . Where

. Where ![]() is a finite nonempty set of sentences, we write

is a finite nonempty set of sentences, we write ![]() and

and ![]() for the conjunction resp. the disjunction over the elements of

for the conjunction resp. the disjunction over the elements of ![]() .Footnote 12

.Footnote 12

A consequence relation, denoted by ![]() , is a relation

, is a relation ![]() between sets of sentences and sentences:

between sets of sentences and sentences: ![]() denotes that

denotes that ![]() is a

is a ![]() -consequence of the assumption set

-consequence of the assumption set ![]() . So, the right side of

. So, the right side of ![]() encodes the given information resp. the assumptions on which the reasoning process is based, while the left side encodes the consequences which are sanctioned by

encodes the given information resp. the assumptions on which the reasoning process is based, while the left side encodes the consequences which are sanctioned by ![]() given

given ![]() .

.

We will often work in the context of Tarski logics ![]() , whose consequence relations

, whose consequence relations ![]() are reflexive (

are reflexive (![]() ), transitive (

), transitive (![]() and

and ![]() implies

implies ![]() ) and monotonic (Definition 2.1). We will also assume compactness (if

) and monotonic (Definition 2.1). We will also assume compactness (if ![]() then there is a finite

then there is a finite ![]() for which

for which ![]() ). The most well-known Tarski logic is, of course, classical logic

). The most well-known Tarski logic is, of course, classical logic ![]() .

.

2.2 An Abstract View on Nonmonotonic Consequence

The following definition introduces one of our key concepts: nonmonotonic consequence relations.

Definition 2.1. A consequence relation ![]() is monotonic iff (“if and only if”) for all sets of sentences

is monotonic iff (“if and only if”) for all sets of sentences ![]() and

and ![]() and every sentence

and every sentence ![]() it holds that

it holds that ![]() if

if ![]() . It is nonmonotonic iff it is not monotonic.

. It is nonmonotonic iff it is not monotonic.

We use ![]() as a placeholder for nonmonotonic consequence relations. Our definition expresses that for nonmonotonic consequence relations

as a placeholder for nonmonotonic consequence relations. Our definition expresses that for nonmonotonic consequence relations ![]() there are sets of sentences

there are sets of sentences ![]() and

and ![]() for which

for which ![]() while

while ![]() (i.e.,

(i.e., ![]() is not a

is not a ![]() -consequence of

-consequence of ![]() ).

).

In the following we will introduce some properties that are often discussed as desiderata for nonmonotonic consequence relations.Footnote 13 A positive account of what kind of logical behavior to expect from these relations is particularly important given the fact that ‘nonmonotonicity’ only expresses a negative property. This immediately raises the question whether there are restricted forms of monotonicity that one would expect to hold even in the context of defeasible reasoning? One proposal is

- Cautious Monotonicity (CM).

, if

, if  and

and  .Footnote 14

.Footnote 14

Whereas nonmonotonicity expresses that adding new information to one’s assumptions may lead to the retraction resp. the defeat of previously inferred conclusions, CM states that some type of information is safe to add: namely, adding a previously inferred conclusion does not lead to the loss of conclusions.

We sketch the underlying rationale. Suppose ![]() and

and ![]() . In view of

. In view of ![]() , the defeasible consequence

, the defeasible consequence ![]() of

of ![]() is sanctioned. So,

is sanctioned. So, ![]() does not contain defeating information for concluding

does not contain defeating information for concluding ![]() . Now, the only reason for

. Now, the only reason for ![]() would be that the addition of

would be that the addition of ![]() to

to ![]() generates defeating information for concluding

generates defeating information for concluding ![]() . However,

. However, ![]() already followed from

already followed from ![]() , since

, since ![]() . Thus, this defeating information should have already been contained in

. Thus, this defeating information should have already been contained in ![]() , before adding

, before adding ![]() . But then

. But then ![]() , a contradiction.

, a contradiction.

One may also demand that adding ![]() -consequences to an assumption set should not lead to more consequences.

-consequences to an assumption set should not lead to more consequences.

- Cautious Transitivity (CT).

, if

, if  and

and  .

.

Combining CM and CT comes down to requiring that ![]() is robust under adding its own conclusions to the set of assumptions.

is robust under adding its own conclusions to the set of assumptions.

- Cumulativity (C).

If

, then

, then  iff

iff  .

.

Instead of considering the dynamics of consequence under additions of new assumptions, one may wonder what happens when assumptions are manipulated. For instance, it seems desirable that a consequence relation is robust under substituting assumptions for equivalent ones.

- Left Logical Equivalence (LLE).

Where

and

and  are classically equivalent sets,Footnote 15

are classically equivalent sets,Footnote 15  iff

iff  .

.

Note that in the context of nonmonotonic consequence it would be too strong to require

- Left Logical Strengthening (LLS).

Where

,

,  implies

implies  .

.

In order to see why LLS is undesirable, consider an example featuring Tweety. If it is only known that Tweety is a bird, it nonmonotonically follows that it can fly, ![]() . The situation changes when it is also known that Tweety is a penguin,

. The situation changes when it is also known that Tweety is a penguin, ![]() .

.

For the right-hand side of ![]() one may also expect a property similar to LLE: if

one may also expect a property similar to LLE: if ![]() is a consequence, so is each equivalent formula

is a consequence, so is each equivalent formula ![]() . The following principle is stronger. It is motivated by the truth-preservational nature of CL-inferences (but recall from Section 1.2 that in the context of generics it may be problematic):

. The following principle is stronger. It is motivated by the truth-preservational nature of CL-inferences (but recall from Section 1.2 that in the context of generics it may be problematic):

- Right Weakening (RW).

Where

,

,  implies

implies  .

.

Finally, if we take our assumptions to express certain information (rather than defeasible assumptions, see Section 4), then one may expect

- Reflexivity (Ref).

.

.

Consequence relations that satisfy RW, LLE, Ref, CT, and CM are called cumulative consequence relations (Kraus et al., Reference Kraus, Lehman and Magidor1990).Footnote 16 The authors consider them “the rockbottom properties without which a system should not be considered a logical system.” (p. 176), a point mirroring Gabbay (Reference Gabbay and Apt1985). Some other intuitive principles hold for a cumulative ![]() .

.

Proposition 2.1. Every cumulative consequence relation ![]() also satisfies:

also satisfies:

1. Equivalence. If

and

and  then:

then:  iff

iff  .

.2. AND. If

and

and  then

then  .

.

Proof. Item 1 follows by CT and CM. To see this suppose (a) ![]() , (b)

, (b) ![]() , and (c)

, and (c) ![]() . We show

. We show ![]() (the inverse direction is analogous). By CM, (a) and (c),

(the inverse direction is analogous). By CM, (a) and (c), ![]() . Thus, by CT and (b),

. Thus, by CT and (b), ![]() .

.

Ad 2. Suppose (a) ![]() and (b)

and (b) ![]() . By Ref,

. By Ref, ![]() and by LLE, (c),

and by LLE, (c), ![]() . By CM, (a) and (b),

. By CM, (a) and (b), ![]() . By CT and (c),

. By CT and (c), ![]() . By (a) and CT,

. By (a) and CT, ![]() . □

. □

Another property of some NMLs is constructive dilemma: given a fixed context represented by ![]() , if

, if ![]() is both a consequence of

is both a consequence of ![]() and of

and of ![]() , it should also be a consequence of

, it should also be a consequence of ![]() .

.

- Constructive Dilemma (OR)

If

and

and  then

then  .

.

Cumulative consequence relations that also satisfy OR are called preferential (Kraus et al., Reference Kraus, Lehman and Magidor1990). We show some derived principles for preferential consequence relations.

Proposition 2.2. Every preferential consequence relation ![]() also satisfies:

also satisfies:

1. Reasoning by Cases (RbC). If

and

and  then

then  .

.2. Resolution. If

then

then  .

.

Proof. (RbC). Suppose ![]() and

and ![]() . By OR,

. By OR, ![]() and by LLE,

and by LLE, ![]() . (Resolution). Suppose now that

. (Resolution). Suppose now that ![]() . By RW, (a),

. By RW, (a), ![]() . By Ref,

. By Ref, ![]() and by RW, (b),

and by RW, (b), ![]() . By RbC, (a) and (b),

. By RbC, (a) and (b), ![]() . □

. □

A more controversial property than CM is rational monotonicity (RM).Footnote 17 The basic intuition is similar to CM: given an assumption set ![]() , we are interested in securing a safe set of sentences under the addition of which

, we are interested in securing a safe set of sentences under the addition of which ![]() is monotonic. While for CM this was the set of the

is monotonic. While for CM this was the set of the ![]() -consequences of

-consequences of ![]() , RM considers the set of all sentences that are consistent with the consequences of

, RM considers the set of all sentences that are consistent with the consequences of ![]() (consistent in the sense that their negation is not a

(consistent in the sense that their negation is not a ![]() -consequence of

-consequence of ![]() ).

).

- Rational Monotonicity (RM)

, if

, if  and

and  .

.

One way to think about RM is as follows. Let us (i) say that ![]() is defeating information for

is defeating information for ![]() if there is an

if there is an ![]() for which

for which ![]() , while

, while ![]() , and (ii)

, and (ii) ![]() is rebutted by

is rebutted by ![]() in case

in case ![]() .Footnote 18 Then, when putting CM and RM in contrapositive form,

.Footnote 18 Then, when putting CM and RM in contrapositive form,

CM expresses that no defeating information for

is derivable from any

is derivable from any  : formally, if

: formally, if  and

and  then

then  ;

;RM expresses the stronger demand that every defeating information for

is rebutted by

is rebutted by  : formally, if

: formally, if  and

and  then

then  .

.

So, RM requires that reasoners take into account potentially defeating information by having rebutting counterarguments at hand. This is quite demanding, since, as we have discussed in Section 1, (a) the reasoner may not be aware of all possibly rebutting information to her previous inferences and (b) it may be counterintuitive to conclude that each and every possible defeater is false.

Poole (Reference Poole1991) points out another problem. Consider the statement that Tweety is a bird. Now, all bird species are exceptional to some defaults about birds: penguins don’t fly, hummingbirds have an unusual size, sandpipers nest on the ground, and so on. But, then RM requires us to infer that Tweety is not a penguin, not a hummingbird, not a sandpiper, and so on, and therefore does not belong to any bird species.

In this section we have seen various properties of nonmonotonic consequence relations, many of which are considered desiderata by nonmonotonic logicians. Their study is therefore of central interest in NML and we will come back to them in the context of many of the methods presented in this Element.

2.3 Plausible and Defeasible Reasoning

A fundamental question underlying the design of NMLs is whether to model defeasible reasoning

1. by means of classical inferences based on defeasible assumptions, or

2. by means of (genuinely) defeasible inference rules.

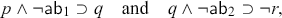

The former is sometimes called Plausible Reasoning, the latter Defeasible Reasoning.Footnote 19 Table 1 provides an overview on which of the two reasoning styles is modeled by various NMLs discussed in this Element. We illustrate with an abstract example. Suppose we want to model that

defeasibly implies

defeasibly implies  , and that

, and that defeasibly implies

defeasibly implies  .

.

In the first approach we encode these two defeasible regularities in terms of classical implications. It can be realized in two ways.

Plausible Reasoning via abnormality assumptions. One way is by formalizing the defeasible rules by

where ![]() and

and ![]() are atomic sentences that encode exceptional circumstances, that is, abnormalities, for the respective rules. These abnormalities are assumed to be false, by default. Suppose that

are atomic sentences that encode exceptional circumstances, that is, abnormalities, for the respective rules. These abnormalities are assumed to be false, by default. Suppose that ![]() is true. Then, by also assuming the falsity of

is true. Then, by also assuming the falsity of ![]() and

and ![]() we can apply modus ponens to both material implications and conclude

we can apply modus ponens to both material implications and conclude ![]() .

.

Table 1Long description

The table consists of four columns: NML, Defeasible Reasoning, Plausible Reasoning, and Blank. It read as follows. Row 1: ASPIC plus; checkmark in Defeasible Reasoning; checkmark in Plausible Reasoning; Section 8. Row 2: Logic-based argumentation; checkmark in Plausible Reasoning; Section 9. Row 3: Rescher and Manor; checkmark in Plausible Reasoning; Section 11 dot 3 dot 1. Row 4: Default Assumptions; checkmark in Plausible Reasoning; Section 11 dot 3 dot 1. Row 5: Adaptive Logic; checkmark in Plausible Reasoning; Section 11 dor 3 dot 1. Row 6: Input-Output Logic; checkmark in Defeasible Reasoning; checkmark in Plausible Reasoning with asterisk; Section 11 dot 3 dot 2. Row 7: Reiter Default Logic; checkmark in Defeasible Reasoning; checkmark in Plausible Reasoning with asterisk; Section 12. Row 8: Logic Programming; checkmark in Plausible Reasoning; Section 16.

Let us see how retraction works in this approach by supposing ![]() . In this case we can classically derive

. In this case we can classically derive ![]() , but neither

, but neither ![]() nor

nor ![]() . Note that contraposition of defeasible rules is available in this approach. For instance,

. Note that contraposition of defeasible rules is available in this approach. For instance, ![]() is CL-equivalent to

is CL-equivalent to ![]() .Footnote 20 So, we know (at least) one of the assumptions must be false, but we don’t know which. Absent any other reason to prefer one over the other, we can’t rely on

.Footnote 20 So, we know (at least) one of the assumptions must be false, but we don’t know which. Absent any other reason to prefer one over the other, we can’t rely on ![]() to derive

to derive ![]() . In view of this,

. In view of this, ![]() should not be considered a nonmonotonic consequence of the given information.

should not be considered a nonmonotonic consequence of the given information.

Plausible Reasoning via naming of defaults. Another way to proceed is by naming defaults (see, e.g., Poole (Reference Poole1988)). Here, we make use of defeasible assumptions ![]() and

and ![]() , which name defeasible inference rules and which are assumed to be true, by default. In our example, we add

, which name defeasible inference rules and which are assumed to be true, by default. In our example, we add

to the (nondefeasible) assumptions. Note that ![]() (resp.

(resp. ![]() ) is classically equivalent to

) is classically equivalent to ![]() (resp.

(resp. ![]() ). So, when substituting

). So, when substituting ![]() for

for ![]() and

and ![]() for

for ![]() the approach based on naming defaults and the approach based on abnormality assumptions boil down to the same.

the approach based on naming defaults and the approach based on abnormality assumptions boil down to the same.

Defeasible Reasoning. In this approach, regularities are expressed as genuinely defeasible rules (written with ![]() ), that is, without additional and explicit defeasible assumptions that are part of the antecedent of the rule. We encode our preceding example by

), that is, without additional and explicit defeasible assumptions that are part of the antecedent of the rule. We encode our preceding example by

Note that ![]() is not classical implication, in particular

is not classical implication, in particular ![]() does, in general, not follow from

does, in general, not follow from ![]() in this approach. In the first scenario, where only

in this approach. In the first scenario, where only ![]() is given, we apply a defeasible modus ponens rule to obtain

is given, we apply a defeasible modus ponens rule to obtain ![]() and then again to obtain

and then again to obtain ![]() . Many NMLs implement a greedy style of reasoning, according to which defeasible modus ponens is applied as much as possible. Now, if

. Many NMLs implement a greedy style of reasoning, according to which defeasible modus ponens is applied as much as possible. Now, if ![]() is also part of the assumptions, we derive

is also part of the assumptions, we derive ![]() from

from ![]() and

and ![]() , but then stop, since inferring

, but then stop, since inferring ![]() from

from ![]() and

and ![]() would result in inconsistency.

would result in inconsistency.

Example 8. For a more general context, we consider an example with defaults ![]() and the (certain) information

and the (certain) information ![]() and

and ![]() depicted in Figure 5. In the greedy style of reasoning underlying Defeasible Reasoning we will be able to apply defeasible modus ponens to derive

depicted in Figure 5. In the greedy style of reasoning underlying Defeasible Reasoning we will be able to apply defeasible modus ponens to derive ![]() ,

, ![]() , …,

, …, ![]() . Only the last application resulting in

. Only the last application resulting in ![]() is blocked by the defeating information

is blocked by the defeating information ![]() . The situation is different for Plausible Reasoning. Since contraposition is available, for each argument

. The situation is different for Plausible Reasoning. Since contraposition is available, for each argument ![]() (where each

(where each ![]() is modeled by

is modeled by ![]() ) there is a defeating argument

) there is a defeating argument ![]() . Altogether, we obtain

. Altogether, we obtain

This means that at least one ![]() cannot be assumed to be false, but we don’t know which one. Thus, no

cannot be assumed to be false, but we don’t know which one. Thus, no ![]() (for

(for ![]() ) is derivable according to Plausible Reasoning.

) is derivable according to Plausible Reasoning.

Figure 5 Top: Defeasible Reasoning giving rise to a greedy reasoning style. Bottom: Plausible Reasoning giving rise to contrapositions of defeasible rules.

3 From Knowledge Bases to Consequences and NMLs

Nonmonotonic logics represent the information relevant for the reasoning process (knowledge representation) and determine what follows defeasibly from the given information (nonmonotonic consequence, see Fig. 6).

Figure 6 The workings of NMLs.

The task of knowledge representation concerns, for instance, the structuring of the starting point of defeasible reasoning processes in terms of knowledge bases (Section 4) in which different types of information are distinguished, such as different types of assumptions and inference rules. Another task is to organize the given information in a way that is conducive of determining its defeasible consequences. As we have seen, this is challenging since the given information may give rise to conflicts and inconsistencies. NMLs provide methods for generating coherent chunks of information. We will highlight several ways of doing so, most roughly distinguished into syntactic and semantic approaches. The following three concepts play essential roles in the ways knowledge is represented in these approaches:Footnote 21

- Extensions

In syntactic approaches, coherent units of information are typically called extensions. What exactly extensions are differs in various NMLs. They may, for example, be sets of defeasible information from the knowledge base, sets of arguments (given a underlying notion of argument), or sets of sentences. In Section 5.1, 5.2 we will introduce two major families of syntactic approaches: argumentation and consistent accumulation. In Parts II and III they will be studied in more detail.

- Arguments

In syntactic approaches, arguments (or proofs) play a central role when building extensions. Arguments are obtained by applications of the given inference rules to the assumptions provided in the knowledge base.

- Models

In semantic approaches the focus is on classes of models provided by a given base logic. In Section 5.3 we will introduce semantic approaches and study some of them in more detail in Part IV.

The attentive reader will have noticed that we did not yet define what exactly NMLs are. In the narrow sense, one may consider them as nonmonotonic consequence relations (see Section 2.2), so a theory of what sentences follow defeasibly in the context of some knowledge base. In the wider sense they are methods for both knowledge representation and for providing nonmonotonic consequence relation(s).

In this Element we minimally assume that every NML ![]() comes with a formal language

comes with a formal language ![]() including a notion of what counts as a formula or sentence (written

including a notion of what counts as a formula or sentence (written ![]() ), an associated class of knowledge bases

), an associated class of knowledge bases ![]() (see Section 4 for details), at least one consequence relation and one of the following two:

(see Section 4 for details), at least one consequence relation and one of the following two:

in syntactic approaches: a notion of (in)consistent sets of sentences, of argument or proof, and a method to generate extensions (see Sections 5.1 and 5.2 and Parts II and III);

in semantic approaches: a notion of model and a method to select models (see Section 5.3 and Part IV).

4 Defeasible Knowledge Bases

Reasoning never starts in a void but it is initiated in a given context. For instance, some information will be factually given and some assumptions may hold by default. Moreover, when we reason we make use of inference rules. Some of these may be truth-preservational (such as the rules provided by ![]() ), others defeasible, allowing for exceptional circumstances. Defeasible knowledge bases structure reasoning contexts into different types of constituents, such as different types of assumptions and inference rules. Most broadly conceived they are tuples of the form:

), others defeasible, allowing for exceptional circumstances. Defeasible knowledge bases structure reasoning contexts into different types of constituents, such as different types of assumptions and inference rules. Most broadly conceived they are tuples of the form:

(4.0.1)

(4.0.1)

We let ![]() be the defeasible part of

be the defeasible part of ![]() consisting of its defeasible assumptions and rules.

consisting of its defeasible assumptions and rules.

A concrete ![]() has an associated fixed class of knowledge bases

has an associated fixed class of knowledge bases ![]() . Its underlying consequence relation(s)

. Its underlying consequence relation(s) ![]() are relations between

are relations between ![]() and

and ![]() .Footnote 22

.Footnote 22

In concrete NMLs, usually not all components of (4.0.1) are utilized or explicitly listed. For example, some NMLs do not consider defeasible rules, some come without defeasible assumptions, some without priorities, many without metarules. Take, for instance, NMLs that model Plausible Reasoning. Here, we omit ![]() since such NMLs do not work with defeasible rules. Moreover, specific components of the knowledge base are fixed for many NMLs, or they are constrained. For instance, only specific preferences relations

since such NMLs do not work with defeasible rules. Moreover, specific components of the knowledge base are fixed for many NMLs, or they are constrained. For instance, only specific preferences relations ![]() may be allowed for, such as transitive ones. Or, often the strict rules are induced by classical logic. (In such cases, the strict rules are often omitted from

may be allowed for, such as transitive ones. Or, often the strict rules are induced by classical logic. (In such cases, the strict rules are often omitted from ![]() .) In some NMLs the strict rules vary over different applications (e.g., in logic programming where strict rules usually represent domain-specific knowledge such as “penguins are birds”). In Table 2 we provide an overview for NMLs presented in this Element.

.) In some NMLs the strict rules vary over different applications (e.g., in logic programming where strict rules usually represent domain-specific knowledge such as “penguins are birds”). In Table 2 we provide an overview for NMLs presented in this Element.

Table 2Long description

The table with seven columns titled: NML, A subscript s, A subscript d, R subscript s, R subscript d, R subscript m, and Section. The rows read as follows: Row 1: ASPIC plus, tick in A subscript s, A subscript d, R subscript s, and R subscript d; Section 8. Row 2: Logic-based argumentation, tick in A subscript s and A subscript d, tick with R subscript L in R subscript s; Section 9. Row 3: Rescher and Manor: tick in A subscript d, tick in R subscript CL in R subscript s; Section 11 dot 3 dot 1. Row 4: Default Assumptions, tick in A subscript s and A subscript d, tick with R subscript CL in R subscript s; Section 11 dot 3 dot 1. Row 5: Input-output logics, tick in A subscript s, tick with R subscript CL in R subscript s; tick in R subscript d and tick mark in R subscript m; Section 11 dot 3 dot 2. Row 6: Reiter Default Logic, tick in A subscript s, tick with R subscript CL in R subscript s, tick in R subscript d; Section 12. Row 7: Logic Programming, tick in R subscript s; Section 16.

We now explain in more detail the components of ![]() .

.

- Strict assumptions

is a set of sentences expressing information that is taken as indisputable or certain.

is a set of sentences expressing information that is taken as indisputable or certain.- Defeasible assumptions

is a set of sentences that are assumed to hold normally/typically/and so on but which may be retracted in case of conflicts.

is a set of sentences that are assumed to hold normally/typically/and so on but which may be retracted in case of conflicts.- Strict rules

is a set of truth-preservational inference rules or relations, written

is a set of truth-preservational inference rules or relations, written  .Footnote 23 There are two types of such rules. On the one hand, we have material inferences, such as “If it is a penguin, it is a bird” which may be encoded by

.Footnote 23 There are two types of such rules. On the one hand, we have material inferences, such as “If it is a penguin, it is a bird” which may be encoded by  . On the other hand, we have inferences that are valid with respect to an underlying logic

. On the other hand, we have inferences that are valid with respect to an underlying logic  , such as classical logic. If such inferences are considered, we let

, such as classical logic. If such inferences are considered, we let  if

if  . If

. If  consist exclusively of such rules, we say that it is induced by the logic

consist exclusively of such rules, we say that it is induced by the logic  and write

and write  for the set containing them. All logics

for the set containing them. All logics  considered in this Element will be Tarski logics. If

considered in this Element will be Tarski logics. If  is induced by a logic (with an implication

is induced by a logic (with an implication  ) one may model the former class of material inferences simply by means of

) one may model the former class of material inferences simply by means of  . For example, in our example one may add

. For example, in our example one may add  to the strict assumptions

to the strict assumptions  . Sometimes we find strict assumptions

. Sometimes we find strict assumptions  being modeled as strict rules with empty bodies

being modeled as strict rules with empty bodies  .

.Given a set of strict rules

and a set of sentences

and a set of sentences  , we write

, we write  to indicate that there is a deduction of

to indicate that there is a deduction of  based on

based on  and

and  . This means that there is a sequence

. This means that there is a sequence  where

where  and for each

and for each  (with

(with  ), either

), either  or there are

or there are  for which

for which  .

.- Defeasible rules

is a set of defeasible inference rules written

is a set of defeasible inference rules written  , often just called defaults. As discussed in Section 2.3, defeasible rules are sometimes “indirectly” modeled as strict rules with defeasible assumptions. In NMLs that adopt this method of Plausible Reasoning,

, often just called defaults. As discussed in Section 2.3, defeasible rules are sometimes “indirectly” modeled as strict rules with defeasible assumptions. In NMLs that adopt this method of Plausible Reasoning,  may be empty. In such cases we are typically dealing with a logic-induced set of strict rules

may be empty. In such cases we are typically dealing with a logic-induced set of strict rules  and defaults are sentences of the type

and defaults are sentences of the type  in

in  where

where  . Defeasible assumptions

. Defeasible assumptions  may be also considered as defaults

may be also considered as defaults  with empty bodies.

with empty bodies.

For reasons of simplicity and following the tradition of many central NMLs, we do not consider ![]() as a defeasible conditional operator in the object language

as a defeasible conditional operator in the object language ![]() , that is, an operator that can be nested within Boolean connectives. Rather, we model

, that is, an operator that can be nested within Boolean connectives. Rather, we model ![]() as representing a defeasible rule that prima facie justifies detaching

as representing a defeasible rule that prima facie justifies detaching ![]() , given

, given ![]() . However, it should be noted that this does impose a limitation on our expressive capabilities. For instance, we cannot “directly” express canceling in the context of specificity, such as

. However, it should be noted that this does impose a limitation on our expressive capabilities. For instance, we cannot “directly” express canceling in the context of specificity, such as ![]() . Many systems have been developed to overcome this limitation, such as Delgrande (Reference Delgrande1987) or conditional logics of normality (Boutilier, Reference Boutilier1994a).Footnote 24

. Many systems have been developed to overcome this limitation, such as Delgrande (Reference Delgrande1987) or conditional logics of normality (Boutilier, Reference Boutilier1994a).Footnote 24

Example 9. In Section 2.3 we presented two ways to model a scenario in which ![]() defeasibly implies

defeasibly implies ![]() and

and ![]() defeasibly imply

defeasibly imply ![]() . Suppose now additionally that

. Suppose now additionally that ![]() and

and ![]() both strictly imply

both strictly imply ![]() and that

and that ![]() defeasibly implies

defeasibly implies ![]() .

.

In Defeasible Reasoning we may work with the knowledge base ![]() consisting of

consisting of ![]() ,

, ![]() ,

, ![]() , and

, and ![]() . Alternatively, one may use the strict assumptions

. Alternatively, one may use the strict assumptions ![]() and

and ![]() and

and ![]() as strict rules.

as strict rules.

In Plausible Reasoning we may utilize ![]() , where

, where ![]() and

and ![]() .

.

- Metarules

is a set of metarules, written

is a set of metarules, written  (where

(where  are strict and defeasible rules and

are strict and defeasible rules and  is a defeasible rule) that allow one to infer new defeasible rules from those in

is a defeasible rule) that allow one to infer new defeasible rules from those in  and

and  . For example, metarules implementing reasoning-by-cases and right weakening are:

. For example, metarules implementing reasoning-by-cases and right weakening are:- OR

- RW

Given a set

, we write

, we write  for the set of defeasible rules that are

for the set of defeasible rules that are  -deducible from

-deducible from  by the metarules in

by the metarules in  (where deductions are defined as in the context of the strict rules

(where deductions are defined as in the context of the strict rules  ).Footnote 25

).Footnote 25- Preferences

is an order on the defeasible elements

is an order on the defeasible elements  of

of  . It encodes that some sources of defeasible information may be more reliable or have more authority than others. This information can be utilized for the purpose of resolving conflicts between defeasible arguments of different strengths. Typically

. It encodes that some sources of defeasible information may be more reliable or have more authority than others. This information can be utilized for the purpose of resolving conflicts between defeasible arguments of different strengths. Typically  is reflexive and transitive, but it may allow for incomparabilities and for equally strong but different defeasible elements. We write

is reflexive and transitive, but it may allow for incomparabilities and for equally strong but different defeasible elements. We write  for the strict version of

for the strict version of  , that is,

, that is,  iff

iff  and

and  .

.

Example 10.

Consider ![]() with

with ![]() and

and ![]() . There is a conflict between the arguments

. There is a conflict between the arguments ![]() and

and ![]() . Absent priorities, there is no way to resolve the conflict on the basis of

. Absent priorities, there is no way to resolve the conflict on the basis of ![]() . If we enhance

. If we enhance ![]() to

to ![]() where

where ![]() it seems reasonable to resolve the conflict in favor of

it seems reasonable to resolve the conflict in favor of ![]() .

.

The situation can get more involved, as the following example shows.

Example 11 (Example 9 cont.). We may extend our knowledge base to ![]() by adding the preference order:

by adding the preference order: ![]() (assuming transitivity).Footnote 26 In this case we have two conflicting arguments,

(assuming transitivity).Footnote 26 In this case we have two conflicting arguments, ![]() and

and ![]() . Comparing their strengths is no longer straightforward, since the former involves both a stronger and a weaker default than the latter. In Part III (Examples 28 and 29) we will see that different methods give rise to different conclusions for

. Comparing their strengths is no longer straightforward, since the former involves both a stronger and a weaker default than the latter. In Part III (Examples 28 and 29) we will see that different methods give rise to different conclusions for ![]() (see also Liao et al. (Reference Liao, Oren, van der Torre, Villata, Cariani, Grossi, Meheus and Parent2016)).

(see also Liao et al. (Reference Liao, Oren, van der Torre, Villata, Cariani, Grossi, Meheus and Parent2016)).

5 Methodologies for Nonmonotonic Logics

We now introduce three central methodologies to obtain nonmonotonic consequence relations and to represent defeasible knowledge, namely: formal argumentation (Section 5.1), consistent accumulation (Section 5.2), and semantic methods (Section 5.3). In this part we explain basic ideas underlying each method based on simplified settings (e.g., without metarules and preferences). More details are presented in the dedicated Parts II to IV.

5.1 The Argumentation Method

The possibility of inconsistency complicates the question as to what follows from a knowledge base ![]() . As described earlier, the idea is to generate coherent sets of information from

. As described earlier, the idea is to generate coherent sets of information from ![]() and to reason on the basis of these. For this, arguments and attacks between them play a key role. Arguments are obtained from

and to reason on the basis of these. For this, arguments and attacks between them play a key role. Arguments are obtained from ![]() by chaining strict and defeasible inference rules. We can define the set of arguments

by chaining strict and defeasible inference rules. We can define the set of arguments ![]() induced by

induced by ![]() , their conclusions, subarguments, and defeasible elements (written

, their conclusions, subarguments, and defeasible elements (written ![]() ,

, ![]() , resp.

, resp. ![]() for some

for some ![]() ) in a bottom-up way.Footnote 27

) in a bottom-up way.Footnote 27

Definition 5.1 (Arguments). Where ![]() is a knowledge base we let

is a knowledge base we let ![]() iff

iff

, where

, where  .

.We let

,

,  ,

,  ,

,  .

. where

where  ,

,  and

and  is a rule in

is a rule in  .

.We let

,

,  ,

,  ,

,  .

.

Where ![]() we let

we let ![]() . Where

. Where ![]() , we let

, we let ![]() be the set of all

be the set of all ![]() for which

for which ![]() .

.

Example 12 (Example 9 cont.). Given our knowledge base ![]() in Example 9 we obtain the arguments depicted in Fig. 7 (left). We have, for instance,

in Example 9 we obtain the arguments depicted in Fig. 7 (left). We have, for instance, ![]() ,

, ![]() , and

, and ![]() .

.

Figure 7 The arguments and the argumentation framework for Example 13 (omitting the nonattacked and nonattacking ![]() and

and ![]() ). We explain the shading in Example 14.

). We explain the shading in Example 14.

There are many ways to define argumentative attacks and subtlety is required to avoid problems with consistency in the context of selecting arguments. We will go into more details in Part II. For now we simply suppose there to be a relation ![]() that determines when two arguments attack each other. We end up with a directed graph

that determines when two arguments attack each other. We end up with a directed graph ![]() , a so-called argumentation framework (Dung, Reference Dung1995).

, a so-called argumentation framework (Dung, Reference Dung1995).

Example 13 (Example 12 cont.). One way to define attacks in our example is to let ![]() attack

attack ![]() if for some

if for some ![]() of the form

of the form ![]() ,

, ![]() or

or ![]() . For instance,

. For instance, ![]() and

and ![]() attack each other. In Fig. 7 (right) we find the underlying argumentation framework.

attack each other. In Fig. 7 (right) we find the underlying argumentation framework.

Argumentation frameworks allow us to select coherent sets of arguments ![]() , which we will call A-extensions (for argumentative extensions). The latter represent argumentative stances of rational reasoners equipped with the knowledge base

, which we will call A-extensions (for argumentative extensions). The latter represent argumentative stances of rational reasoners equipped with the knowledge base ![]() . For this we utilize a number of constraints which represent rational desiderata on these stances. Two such desiderata on sets of arguments

. For this we utilize a number of constraints which represent rational desiderata on these stances. Two such desiderata on sets of arguments ![]() are, for instance (we refer to Part II for a more comprehensive overview):

are, for instance (we refer to Part II for a more comprehensive overview):

- Conflict-freeness:

avoid argumentative conflicts, that is, for all

,

,  ; and

; and- Stability:

additionally, be able to attack arguments that you don’t commit to, that is, for all

there is an

there is an  for which

for which  .

.

Such sets of constraints give rise to so-called argumentation semantics which determine A-extensions of a given argumentation framework (Dung, Reference Dung1995). For instance, according to the stable semantics the set of A-extensions is the set of all sets of arguments that satisfy stability. Once we have settled for an argumentation semantics ![]() (such as the stable semantics) we denote the set of A-extensions of

(such as the stable semantics) we denote the set of A-extensions of ![]() relative to

relative to ![]() by

by ![]() .

.

Example 14 (Example 13 cont.). We have two stable A-extensions, that is, sets of arguments that satisfy the stability requirement (see the shaded sets in the argumentation framework of Fig. 7):

Suppose we select an A-extension ![]() . We then commit to all of the conclusions of the arguments in

. We then commit to all of the conclusions of the arguments in ![]() , that is, to

, that is, to ![]() , where

, where ![]() . This induces another notion of extension, which we dub P-extensions (propositional extensions) which are sets of conclusions associated with A-extensions. We write

. This induces another notion of extension, which we dub P-extensions (propositional extensions) which are sets of conclusions associated with A-extensions. We write ![]() for the set of P-extensions of

for the set of P-extensions of ![]() (relative to a given argumentation semantics

(relative to a given argumentation semantics ![]() ).

).

Example 15 (Example 14 cont.). The following P-extensions are associated with our A-extensions:

Once an argumentation semantics is fixed and the A- and corresponding P-extensions are generated, we can define three different consequence relations for two underlying reasoning styles (see Fig. 8): skeptical and credulous reasoning.

Figure 8 The skeptical and the credulous reasoning style.

Figure 8Long description

The diagram depicts the following: Defeasible knowledge base leads to build extensions which further leads to 1. Sceptical approach: infer A if it is supported in every extension and 2. Credulous approach: infer A if it is supported in some extension by some argument |~ ∪Ext. Sceptical approach further leads to 1. by some argument (conclusion-focused) |~ ∩PExt and 2. by the same argument (argument-focused) |~∩AExt. Both of which further lead to floating conclusion.

Definition 5.2. Where ![]() is a knowledge base,

is a knowledge base, ![]() a sentence, and

a sentence, and ![]() is an argumentation semantics, we define the consequence relations in Table 3.

is an argumentation semantics, we define the consequence relations in Table 3.

Table 3Long description

The table consists of two columns. It read as follows: Row 1: Skeptical 1: K turnstile superscript S subscript intersection P-extensions A iff A element of intersection P-extensions subscript S (K), A is a member of every extension in P-extensions subscript S (K). Row 2: Skeptical 2: K turnstile superscript S subscript intersection A extensions A iff there is an a element A-extensions subscript S (K) s. t. Con (a) equals A, There is an argument a with Con (a) equals A that is contained in every A-extension of K. Row 3: Credulous: K turnstile superscript S subscript union extensions A iff A element of union P extensions subscript S (K), A is a member of some extension in P-extensions subscript S (K).

To avoid clutter in notation, we will omit the super- and subscripts whenever the context disambiguates or the strategy is not essential to a given claim. Note that the definition of the three consequence relations imposes a hierarchy in terms of strength, namely:

Example 16 (Example 15 cont.). Based on our extensions, we have the following consequences:

Table 016Long description

The table consists of five columns: p, q, not r, r, and s. It reads as follows: Row 1: Turnstile intersection P extensions: tick in p, tick in q, blank in not r and r, tick in s. Row 2: Turnstile intersection A extensions: tick in p and q; blank in not r, r, and s. Row 3: Turnstile union extensions: tick in all columns - p, q, not r, r, and s.

The example illustrates that a floating conclusion such as ![]() follows by

follows by ![]() but not by the more cautious

but not by the more cautious ![]() .

.

5.2 Methods based on Consistent Accumulation

Given a knowledge base ![]() , the basic idea behind the accumulation methods is to iteratively build coherent sets of defeasible elements from

, the basic idea behind the accumulation methods is to iteratively build coherent sets of defeasible elements from ![]() .Footnote 28 We will call such sets D-extensions (extensions consisting of defeasible elements). Below we identify two central methods of building D-extensions: the greedy and the temperate method. Once D-extensions have been generated by one of these methods, we can associate each D-extension

.Footnote 28 We will call such sets D-extensions (extensions consisting of defeasible elements). Below we identify two central methods of building D-extensions: the greedy and the temperate method. Once D-extensions have been generated by one of these methods, we can associate each D-extension ![]() with an A-extension

with an A-extension ![]() consisting of all the arguments based on elements in

consisting of all the arguments based on elements in ![]() . Moreover, each A-extension

. Moreover, each A-extension ![]() has the corresponding P-extension

has the corresponding P-extension ![]() as discussed in Section 5.1. Once A- and P-extensions are obtained, we define consequence relations just like in Definition 5.2 (see the overview in Fig. 9). We now discuss the two types of accumulation methods.

as discussed in Section 5.1. Once A- and P-extensions are obtained, we define consequence relations just like in Definition 5.2 (see the overview in Fig. 9). We now discuss the two types of accumulation methods.

Figure 9 Types of nonmonotonic consequence based on syntactic approaches.

Figure 9Long description

Both a. and b. leads to extensions which further leads to 1. Skeptical consequence and 2. Credulous consequence. Skeptical consequence leads to |~ ∩PExt and |~ ∩AExt. Credulous consequence leads to |~ UExt.

5.2.1 The Greedy Method

Given a knowledge base ![]() , methods based on consistent accumulation build iteratively sets of defeasible elements from