Background

What can we learn from the simple fact that we exist where and when we do? The answer may bear on many profound questions, including the nature and size of the cosmos, the existence and types of extraterrestrial intelligence, and the future of humanity.

Attempts to reason about the cosmos from the fact of our existence have been called anthropic reasoning (e.g. Leslie, Reference Leslie1996; Bostrom, Reference Bostrom2013). The anthropic principle argues that our existence is expected even if the events that lead up to it are rare (Carter, Reference Carter and Longair1974). In its weakest form, it simply asserts that humanity's existence is probabilistically likely in a large enough universe (Carter, Reference Carter1983). The strongest versions of the anthropic principle have an opposite premise at heart (Bostrom, Reference Bostrom2013): our existence is not a fluke, but somehow necessary in a logical sense (Barrow, Reference Barrow1983). Anthropic reasoning has been extended to include Copernican principles, which emphasize the typicality of our environment. Weak forms point out our evolution was not a special rupture in the laws of physics, but one possible outcome that can be repeated if given enough ‘trials’ in sufficiently many cosmic environments like ours. Strong forms state that conscious beings (‘observers’) like ourselves are common.

Anthropic reasoning frequently tries to synthesize the anthropic and Copernican principles: we should regard our circumstances as typical of observers like ourselves. Bostrom (Reference Bostrom2013) has formalized this notion as the Self-Sampling Assumption (SSA). The group of observers considered similar enough to us for Copernican reasoning to be valid is our ‘reference class’. It may be as wide as all possible sentient beings or as narrow as people exactly identical to your current self. There is no consensus on a single reference class, or indeed whether we might use a multitude (e.g. Neal, Reference Neal2006; Garriga and Vilenkin, Reference Garriga and Vilenkin2008; Bostrom, Reference Bostrom2013), although the more extreme Copernican formulations apply the universal reference class of all observers. Typicality arguments are often justified in normal experiments to derive conclusions when unusual outcomes are expected given enough ‘trials’. In fact, some kind of typicality assumption seems necessary to reason about large cosmologies, where thanks to the anthropic principle there will exist observers like ourselves with certainty even in Universes distinct from ours – otherwise observations have no power to constrain the nature of the world (Bostrom, Reference Bostrom2002; Bousso et al., Reference Bousso, Freivogel and Yang2008). Typicality is commonly invoked in discussions of cosmology as the ‘principle of mediocrity’ (Vilenkin, Reference Vilenkin1995).

The seemingly reasonable Copernican statement of the SSA has led to controversy, as it can be applied to constrain the cosmological contexts of as-of-yet unobservable intelligences in the Universe. Few applications of the Copernican principle are more contentious than the Doomsday Argument. In its most popular form as presented by Gott (Reference Gott1993), we are most likely ‘typical’ humans and therefore are unlikely to be near humanity's beginning or end (see also Nielsen, Reference Nielsen1989). The Bayesian Doomsday Argument, most strongly defended by Leslie (Reference Leslie1996), has a more robust basis: our birthrank (the number of humans before us) is treated as a uniform random variable drawn from the set of all birthranks of the final human population. A larger human population has more ‘outcomes’, resulting in a smaller likelihood of ‘drawing’ your specific birthrank and a Bayesian shift favouring a short future for humanity (see also Knobe et al., Reference Knobe, Olum and Vilenkin2006; Bostrom, Reference Bostrom2013). A generalized non-ranked variant has been brought to bear to evaluate the existence of beings unlike ourselves in some way, as in the Cosmic Doomsday argument (Olum, Reference Olum2004).

The Doomsday Argument and similar typicality arguments would have profound implications for many fields. For example, in the Search for Extraterrestrial Intelligences (SETI Tarter, Reference Tarter2001) the prevalence of technological societies is critically dependent on the lifespan of societies like our own (e.g. Bracewell, Reference Bracewell1960; Sagan, Reference Sagan1973; Forgan and Nichol, Reference Forgan and Nichol2011). Doomsday would be an extraordinarily powerful argument against prevalent interstellar travel, much less more exotic possibilities of astronomical-scale ‘megastructures’ (Dyson, Reference Dyson1960; Kardashev, Reference Kardashev1964). The instantaneous population of a galaxy-spanning society could be ~1020 (Gott, Reference Gott1993; Olum, Reference Olum2004) while predictions of intergalactic travel and astro-engineering suggest populations greater than 1050 (Bostrom, Reference Bostrom2003; Ćirković, Reference Ćirković2004). Yet the Doomsday Argument applies huge Bayes Factors against the viability of these possibilities or indeed any long future, essentially closing off the entire field of SETI as difficult to futile (Gott, Reference Gott1993). Indeed, this is a straightforward implication of the Cosmic Doomsday argument, which is more well known in cosmology (Olum, Reference Olum2004). Similar Bayesian shifts might drastically cut across theories in other fields.

This sheer power cannot be stressed enough. These likelihood ratios for broad classes of theories (>1040 in some cases!) imply more than mere improbability, they are far more powerful than those resulting from normal scientific observation. If we have any realistic uncertainty in such futures, even the slightest possibility of data being hoaxed or mistaken (say 10−9) results in epistemic closure. If we discovered a galaxy-spanning society, or if we made calculations implying that the majority of observers in the standard cosmology live in realms where the physical constants are different from ours, then the evidence would force us to conclude that scientists are engaged in a diabolical worldwide conspiracy to fake these data. The SSA even can lead to paradoxes where we gain eerie ‘retrocausal’ influence over the probabilities of past events by prolonging humanity's lifespan (Bostrom, Reference Bostrom2001).

Given the unrealistic confidence of the Doomsday Argument's assertions, it is not surprising that there have been many attempts to cut down its power and either tame or refute typicality (e.g. Dieks, Reference Dieks1992; Kopf et al., Reference Kopf, Krtous and Page1994; Korb and Oliver, Reference Korb and Oliver1998; Monton and Roush, Reference Monton and Roush2001; Olum, Reference Olum2002; Neal, Reference Neal2006; Bostrom, Reference Bostrom2013; Benétreau-Dupin, Reference Benétreau-Dupin2015; Garisto, Reference Garisto2020). These include disputing that our self-observation can be compared to a uniformly drawn random sampling (Dieks, Reference Dieks1992; Korb and Oliver, Reference Korb and Oliver1998; Garisto, Reference Garisto2020), arguing for the use of much narrower reference classes (Neal, Reference Neal2006; Bostrom, Reference Bostrom2013; Friederich, Reference Friederich2017; Ćirković and Balbi, Reference Ćirković and Balbi2020), or rejecting the use of a single Bayesian credence distribution (Srednicki and Hartle, Reference Srednicki and Hartle2010; Benétreau-Dupin, Reference Benétreau-Dupin2015). The most common attack is the Self-Indication Assumption (SIA): if we really are drawn randomly from the set of possible observers, then any given individual is more likely to exist in a world with a larger population. The SIA demands that we adopt a prior in which the credence placed on a hypothesis is directly proportional to the number of observers in it, which is then cut down by the SSA using our self-observation (Dieks, Reference Dieks1992; Kopf et al., Reference Kopf, Krtous and Page1994; Olum, Reference Olum2002, see also Neal Reference Neal2006 for further discussion).

The pitfall of these typicality arguments is the Presumptuous Philosopher problem where philosophical arguments giving us ridiculous levels of confidence about otherwise plausible beings in the absence of observations. The problem was first stated for the SIA: the prior posits absurd levels of confidence (say 10100 to 1) in models with a very large number of observers (for example, favouring large universe cosmologies over small universe cosmologies; Bostrom and Ćirković Reference Bostrom and Ćirković2003; Ćirković Reference Ćirković2004). This confidence is ‘corrected’ in the Doomsday Argument, but a prior favouring a galactic future $\gtrsim 10^{20}$![]() to 1 is unlike how we actually reason, and there is no correction when comparing different cosmologies for example (Bostrom and Ćirković, Reference Bostrom and Ćirković2003; Ćirković, Reference Ćirković2004). But the Doomsday Argument is a Presumptuous argument too, just in the opposite direction – one develops extreme certainty about far-off locations without ever observing them.

to 1 is unlike how we actually reason, and there is no correction when comparing different cosmologies for example (Bostrom and Ćirković, Reference Bostrom and Ćirković2003; Ćirković, Reference Ćirković2004). But the Doomsday Argument is a Presumptuous argument too, just in the opposite direction – one develops extreme certainty about far-off locations without ever observing them.

In this paper, I critically examine and deconstruct the Copernican typicality assumptions used in the Doomsday Argument and present a framework for understanding them, Fine Graining with Auxiliary Indexicals (FGAI).

Statement of the central problem

It is important to specify the issue at stake – what is being selected and what we hope to learn. The Doomsday Argument is sometimes presented as a way to prognosticate about our future specifically (Gott, Reference Gott1993; Leslie, Reference Leslie1996; Bostrom, Reference Bostrom2013). This question can become muddled if there are multiple ‘copies’ of humanity because the universe is large. Now, if we already know the fraction of societies that are long-lived versus short-lived, the fraction of observers at the ‘start’ of any society's history follows from a simple counting argument, with no Doomsday-like argument applying (Knobe et al., Reference Knobe, Olum and Vilenkin2006; Garisto, Reference Garisto2020).

The real problem is that we have nearly no idea what this probability distribution is, aside from it allowing our existence. What typicality arguments actually do is attempt to constrain the probability distribution of observers throughout the universe. This is implicit even in Gott (Reference Gott1993), which argues the negative results of SETI are an expected result, because the Doomsday Argument implies long-lived societies are rare and the vast megastructure-building ETIs are nearly non-existent. Knobe et al. (Reference Knobe, Olum and Vilenkin2006) makes this argument explicit with the ‘universal Doomsday argument’, suggesting a large fraction of observers are planetbound like us and not in galaxy-spanning societies ETIs. More generally, typicality arguments have been purported to constrain the distribution of other properties of ETIs, like whether they are concentrated around G dwarfs like the Sun instead of the more numerous red dwarfs (Haqq-Misra et al., Reference Haqq-Misra, Kopparapu and Wolf2018) or if ETIs in elliptical galaxies can greatly outnumber those in spiral galaxies (Zackrisson et al., Reference Zackrisson, Calissendorff, González, Benson, Johansen and Janson2016; Whitmire, Reference Whitmire2020). These all are questions about the distribution of observers.

Even questions about our future can be recast into a question about lifespan distributions. Specifically, what is the lifespan distribution of societies that are exactly like our own at this particular point in our history? There presumably is only one such distribution, even if there are many instantiations of humanity in the Universe. If our future is deterministic, then this distribution is monovalued, with a single lifespan for all humanities out there including ours. More generally, we can imagine there is some mechanism (e.g. nuclear war or environmental collapse) that could cut short the life of societies like ours, and we try to evaluate the probability that it strikes a random society in our reference class.

Thus, what we are comparing are different theories about this distribution, with two important qualities. First, the theories are mutually exclusive in the Garisto (Reference Garisto2020) sense – only one distribution can exist. Even if we suppose there is a multiverse with a panoply of domains, each with their own distribution (e.g. because large-scale travel and expansion is easier under certain physical laws than others), there is presumably only one distribution of these pockets, which in turn gives only one cosmic distribution for the observers themselves. Second, however, there is no ‘draw’ by an external observer when we are doing self-observation. All the observers are realized, none more real than the others. This makes it unlike other cases of exclusive selection.

The central problem, then, is whether given mutually exclusive theories about the distribution of observers, should we automatically prefer theories with narrower distributions, such that we are more typical, closer to the bulk? Should we do this even if the broader distributions have more total observers, so that both predict similar numbers of observers like us? Throughout this paper, I use the terms ‘Small’ (S) and ‘Large’ (L) to broadly group theories about unknown observers (as in Leslie, Reference Leslie1996). In Small theories, the majority of actually existing observers are similar to us and make similar observations of their environment, while Large theories propose additional, numerically dominant populations very dissimilar to us. The version of the Doomsday Argument that I focus on applies this to the distribution of the total final population (or lifespan). In this context, Small theories are ‘Short’, while Large theories are considered ‘Long’ (e.g. N total ≫ 1011 for human history).

I illustrate the Argument with some simple toy models. In the prototypical model, I consider only two distributions, both degenerate:

• In the Small theory, all ‘worlds’ have the same small N total value of 1.

• In the Large theory, all ‘worlds’ have the same large N total value, taken to be 2.

Each ‘world’ consists of an actually existing sequence of observers, ordered by birthrank, the time of their creation (note that world does not mean theory; there can be many ‘worlds’ in this sense but only one correct theory). The observers may be undifferentiated, or may have a distinct label that individuates them. The data each observer can acquire is their own birthrank, and their label if they have one. Numerous variants will be considered, however, including ones where additional data provide additional distinctions in the theory, and ones where N total is fixed but not the distribution. I also consider models where there may be a mixture of actually existing worlds of different sizes, with or without a distribution known to the observer. To distinguish this variance from Small and Large theories, I denote small and large worlds with lowercase letters (s and ℓ), reserving uppercase S and L for mutually exclusive theories.

The Doomsday argument and its terrible conclusion

The Doomsday Argument is arguably the most far-reaching and contentious of the arguments from typicality. It generates enormous Bayes factors against its Large models, despite relatively plausible (though still very uncertain) routes to Large futures. By comparison, we have no specific theory or forecast implying that non-artificial inorganic lifeforms (c.f., Neal, Reference Neal2006) or observers living under very different physical constants elsewhere in a landscape dominate the observer population by a factor of ≫ 1010. Cases where we might test typicality of astrophysical environments, like the habitability of planets around the numerically dominant red dwarfs (Haqq-Misra et al., Reference Haqq-Misra, Kopparapu and Wolf2018), generate relatively tame Bayes factors of ~10 −100 that seem plausible (a relatively extreme value being ~104 for habitable planets in elliptical galaxies from Dayal et al. Reference Dayal, Cockell, Rice and Mazumdar2015; Whitmire Reference Whitmire2020). For this reason, it is worth considering Doomsday as a stringent test of the ‘Copernican Princple’.

Noonday, a parable

A student asked their teacher, how many are yet to be born?

The teacher contemplated, and said: ‘If the number yet to be born equalled the number already born, then we would be in the centre of history. Now, remember the principle of Copernicus: as we are not in the centre of the Universe, we must not be in such a special time. We must evaluate the p-value of a possible final population as the fraction of people who would be closer to the centre of history.

‘Given that 109 billion humans have been born so far,’ continued the teacher, citing Kaneda and Haub (Reference Kaneda and Haub2020), ‘The number of humans yet to be born is not between 98 and 119 billion with 95% confidence. There may be countless trillions yet to be born, or none at all, but if you truly believe in Copernicus's wisdom, you must be sure that the number remaining is not 109 billion.’

And so all who spoke of the future from then on minded the teacher's Noonday Argument.

Noonday, x-day, and the frequentist Doomsday argument

In its popular frequentist form, the Doomsday Argument asks Wouldn't it be strange if we happened to live at the very beginning or end of history? Given some measure of history z, like humanity's lifespan or population, let F ∈ [0, 1] be the fraction z present/z total that has passed so far. If we regard F as a uniform random variable, we construct confidence intervals: F is then between [(1 − p)/2, (1 + p)/2] with probability p. Thus for a current measure z present, our confidence limit on the total measure z total is [2z present/p, 2z present/(1 − p)], allowing us to estimate the likely future lifespan of humanity (Gott, Reference Gott1993). This form does not specifically invoke the SSA: F can parametrize measures like lifespan that have nothing to do with ‘observers’ and require no reference class. It is motivated by analogy to similar reasoning applied to external phenomena. Unlike the Bayesian Doomsday Argument, it penalizes models where F = 1. A more proper Doomsday Argument would constrain the z total distribution, from which the expected F distribution can be derived, although the basic idea of finding the [(1 − p)/2, (1 + p)/2] quantile still applies.

The parable of the Noonday Argument demonstrates the weakness of the frequentist Doomsday Argument: it is not the only confidence interval we can draw. The Noonday Argument gives another, one arguably even more motivated by the Copernican notion of us not being in the ‘centre’ (c.f., Monton and Roush, Reference Monton and Roush2001). An infinite or zero future is maximally compatible with the Noonday Argument. But there is no reason to stop with Noonday either. Wouldn't it be strange if our f happened to be a simple fraction like 1/3, or some other mathematically significant quantity like 1/e, or indeed any random number we pick?

Doomsday and Noonday are just two members of a broad class of x-day Arguments. Given any x in the range [0, 1], the x-day Argument is the observation that it is extremely unlikely that a randomly drawn F will just happen to lie very near x. A confidence interval with probability p is constructed by excluding values of F in between $[ x - ( 1-p) /2]$![]() mod 1 and $[ x + ( 1-p) /2]$

mod 1 and $[ x + ( 1-p) /2]$![]() mod 1, wrapping x as in the frequentist Doomsday Argument. The Doomsday Argument is simply the 0-day Argument and the 1-day Argument, whereas the Noonday Argument is the 1/2-day Argument.

mod 1, wrapping x as in the frequentist Doomsday Argument. The Doomsday Argument is simply the 0-day Argument and the 1-day Argument, whereas the Noonday Argument is the 1/2-day Argument.

By construction, all frequentist x-day Arguments are equally valid frequentist statements if F is truly a uniformly distributed random variable. Any notion that being near the beginning, the end, or the centre is ‘strange’ is just a subjective impression. The confidence intervals are disjoint but their union covers the entire range of possibilities, with an equal density for any p covering any single z total value (Monton and Roush, Reference Monton and Roush2001).

The Noonday Argument demonstrates that not every plausible-sounding Copernican argument is useful, even when technically correct. Confidence intervals merely summarize the effects of likelihood – which is small for Large models, as reflected by the relative compactness of the 0/1-day intervals. A substantive Doomsday-style Argument therefore requires something more than the probability of being near a ‘special’ time.

Bayesian Doomsday and Bayesian Noonday

Bayesian statistics is a model of how our levels of belief, or credences, are treated as probabilities conditionalized by observations. We have a set of some models we wish to constrain, and we start with some prior credence distribution over them. The choice of prior is subjective, but a useful prior when considering a single positive parameter λ of unknown scale is the flat log prior: P prior (λ) ∝ 1/λ. An updated posterior credence distribution is calculated by multiplying prior credences by the likelihood of the observed data D in each model λ i, which is simply the probability ${\scr P}( D\vert {\boldsymbol \lambda _i})$![]() in that model that we observe y:

in that model that we observe y:

where Λ is the set of possible λ.Footnote 1

Bayesian probability provides a more robust basis for the Doomsday Argument, and an understanding of how it supposedly works. In the Bayesian Doomsday argument, we seek to constrain the distribution of N total, the final total population of humanity and its inheritors, using birthranks as the observable. The key assumption is to apply the SSA with all of humanity and its inheritors as our reference class. If we view ourselves as randomly selected from a society with a final total population of N total, our birth rank N past is drawn from a uniform distribution over [0, N total − 1], with a likelihood of

Since we are deciding between different distributions of N total, we must average this over the probability that a randomly selected observer is from a society with N total,

where f(N total = n) is the probability that a randomly selected society has a final population N total. This gives a likelihood for the distribution f of

Here, F is the complementary cumulative mass function. Starting from the uninformative flat prior $P^{{\rm prior}}( {\langle N_{\rm total} \rangle }) \propto 1/ {\langle N_{\rm total} \rangle }$![]() , applying Bayes’ theorem results in the posterior:

, applying Bayes’ theorem results in the posterior:

The posterior in equation (5) is strongly biased against Large models, with P pos(N total ≥ N) ≈ N past/N.

The parable's trick of excluding N total values near 2N past is irrelevant here. Bayesian statistics does define credible intervals containing a fraction p of posterior credence and we could draw Noonday-like intervals that include Large models but exclude a narrow range of Small models. But this is simply sleight-of-hand, using well-chosen integration bounds to hide the fundamental issue that P pos (N total ≫ N past) ≪ 1.

But could there be a deeper x-day Argument beyond simply choosing different credible intervals? Perhaps not in our world, but we can construct a thought experiment where there is one. Define an x-ranking as N x = N past − x N total; allowed values are in the range of [ − xN total, (1 − x)N total]. If through some quirk of physics we only knew our N xFootnote 2, we would treat N x as a uniform random variable, calculate likelihoods proportional to 1/N total and derive a posterior of $1/N_{\rm total}^2$![]() for allowed values of N total (N total ≥ −N x/x and N x < 0 or N total ≥ N x/(1 − x) and N x > 0). Thus all Bayesian x-day Arguments rule out Large worlds, including the Bayesian Noonday Argument, even though the parable's Noonday Argument implies we should be perfectly fine with an infinite history. This is true even if x is not ‘special’ at all: most of the x-day Arguments are perfectly consistent with a location in the beginning, the middle, or the end of history – anything is better than having F ≈ x, even if the maximum likelihood is for a ‘special’ location. For example, the Bayesian Doomsday Argument, which uses our 0-ranking, predicts a maximum likelihood for us being at the very end of history. The oddity is also clear if we knew a cyclic Noonday rank $N_{1/2}^{\prime }$

for allowed values of N total (N total ≥ −N x/x and N x < 0 or N total ≥ N x/(1 − x) and N x > 0). Thus all Bayesian x-day Arguments rule out Large worlds, including the Bayesian Noonday Argument, even though the parable's Noonday Argument implies we should be perfectly fine with an infinite history. This is true even if x is not ‘special’ at all: most of the x-day Arguments are perfectly consistent with a location in the beginning, the middle, or the end of history – anything is better than having F ≈ x, even if the maximum likelihood is for a ‘special’ location. For example, the Bayesian Doomsday Argument, which uses our 0-ranking, predicts a maximum likelihood for us being at the very end of history. The oddity is also clear if we knew a cyclic Noonday rank $N_{1/2}^{\prime }$![]() that wraps from the last to the first human: the most likely N total value would be $N_{1/2}^{\prime } + 1$

that wraps from the last to the first human: the most likely N total value would be $N_{1/2}^{\prime } + 1$![]() , a seemingly anti-Copernican conclusion favouring us being adjacent to the centre of history.

, a seemingly anti-Copernican conclusion favouring us being adjacent to the centre of history.

The Bayesian x-day Arguments provide insight into the heart of the Bayesian Doomsday Argument, which has nothing to do with any particular point in history being ‘special’. What powers all of these arguments is the SSA applied with a broad reference class: the 1/N total factor in the likelihood of equation (2). According to these SSA-based arguments, the reason Large worlds are unlikely is because measuring any particular value of N x whatsoever is more unlikely as N total increases. This is a generic property when one actually is randomly drawing from a population; it does not even depend on numerical rankings at all. Ultimately, the result follows from the Bayesian Occam's Razor effect, in which Bayesian probability punishes hypotheses with many possible outcomes (Jefferys and Berger, Reference Jefferys and Berger1991).

The presumptuous solipsist

The SSA is doing all of the work in the x-day Arguments, but nothing restricts its applications solely to birth rankings or N x in general. In fact, the unrestricted SSA argues against Large models of all kinds. It can be used to derive ‘constraints’ on all kinds of intelligences. One example is the Cosmic Doomsday argument against the existence of interstellar societies, solely by virtue of their large populations regardless of any birthrank (Olum, Reference Olum2004). If thoroughly applied, however, it would lead to shockingly strong evidence on a variety of matters, from cosmology to astrobiology to psychology (as noted by Neal, Reference Neal2006; Hartle and Srednicki, Reference Hartle and Srednicki2007).

The existence of non-human observers is widely debated, and a central question in SETI. The unrestricted SSA rules out large sentient populations that are not humanlike in some way, like having a non-carbon based biology or living in the sea. The Cosmic Doomsday argument notes how ‘unlikely’ it is to be in a planetbound society if some ETIs have founded galaxy-wide societies (Olum, Reference Olum2004). In fact it more generally obliterates the case for SETI even without the Doomsday Argument, for if many aliens existed in the Universe, what would the probability be of being born a human instead any of the panoply of extraterrestrial species that are out there?Footnote 3 despite the many astronomers of past centuries who believed other planets were similar to Earth on typicality grounds (Crowe, Reference Crowe1999). The real problem is that the unrestricted SSA rules against intelligent life everywhere else, even the nearest 10100 Hubble volumes. Animal consciousness may be a better historical example as we have reason to suspect many animals are indeed conscious in some way (e.g. Griffin and Speck, Reference Griffin and Speck2004).

The consequences extend to other fields, where the consequences become harder to accept. Several theories of physics predict a universe with infinite extent (in space, time, or otherwise), including the open and flat universes of conventional cosmology, eternal inflation, cyclic cosmology, and the many-world interpretation of quantum mechanics, with a nearly endless panoply of Earth at least slightly different from ours. But according to the unrestricted SSA, the probability that a randomly selected observer would find themselves in this version of Earth might as well be zero in a sufficiently big universe. It also makes short work of the question of the question of animal consciousness (c.f., Griffin and Speck, Reference Griffin and Speck2004). Isn't it strange that, of all the creatures on the Earth, you happen to be human? The SSA would be a strong argument against the typical mammal or bird being a conscious observer, to say nothing of the trillions of other animals and other organisms (Neal, Reference Neal2006).

Unrestrained application of the SSA leads to a far more radical, and ominous conclusion, however. Why not apply the SSA to other humans? We can do this even if we restrict our reference class to humanity and remain agnostic about aliens, multiverses and animal consciousness. Solipsism, the idea that one is the only conscious being in existence and everything else is an illusion, is an age-old speculation. Obviously, most people do not favour solipsism a priori, but it cannot actually be disproved, only ignored as untenable. Solipsism would imply that scientific investigation is pointless, however. Any method that leads to near certainty in solipsism is self-defeating, indicating a flaw in its use.

What happens when we apply the SSA to our sliver of solipsistic doubts? Let $\epsilon$![]() be the credence you assign to all solipsistic ideas that are constrained by SSA. Although surely small, perhaps 10−9 being reasonable, it should not have to be zero – if only because cosmology predicts scenarios like solipsism could happen (like being a Boltzmann brain in a tiny collapsing cosmos). What are the odds that you are you, according to the SSA? The principle assigns a likelihood of ≤1/1011 for a realist worldview; only the normalization factor in Bayes’ theorem preserves its viability. But the SSA indicates that, according to solipsism, the likelihood that an observer has your data instead of the ‘data’ of one of the hallucinatory ‘observers’ you are imagining is 1. According to Bayes’ theorem, your posterior credence in solipsism is $\epsilon /[ 1/N_{\rm past} + \epsilon ( 1 - 1/N_{\rm past}) ]$

be the credence you assign to all solipsistic ideas that are constrained by SSA. Although surely small, perhaps 10−9 being reasonable, it should not have to be zero – if only because cosmology predicts scenarios like solipsism could happen (like being a Boltzmann brain in a tiny collapsing cosmos). What are the odds that you are you, according to the SSA? The principle assigns a likelihood of ≤1/1011 for a realist worldview; only the normalization factor in Bayes’ theorem preserves its viability. But the SSA indicates that, according to solipsism, the likelihood that an observer has your data instead of the ‘data’ of one of the hallucinatory ‘observers’ you are imagining is 1. According to Bayes’ theorem, your posterior credence in solipsism is $\epsilon /[ 1/N_{\rm past} + \epsilon ( 1 - 1/N_{\rm past}) ]$![]() , or $1 - 1/( N_{\rm past} \epsilon )$

, or $1 - 1/( N_{\rm past} \epsilon )$![]() . If your prior credence is solipsism was above ~10−11, the SSA magnifies it into a virtual certainty. In fact, the problem is arguably much worse than that: Bostrom (Reference Bostrom2013) further proposes a Strong Self-Sampling Assumption (SSSA), wherein individual conscious experiences are the fundamental unit of observations. What are the odds that you happen to make this observation at this point of your life instead of any other? Your credence in extreme solipsism, where only your current observer-moment exists, should be amplified by a factor $\gtrsim 10^{17}$

. If your prior credence is solipsism was above ~10−11, the SSA magnifies it into a virtual certainty. In fact, the problem is arguably much worse than that: Bostrom (Reference Bostrom2013) further proposes a Strong Self-Sampling Assumption (SSSA), wherein individual conscious experiences are the fundamental unit of observations. What are the odds that you happen to make this observation at this point of your life instead of any other? Your credence in extreme solipsism, where only your current observer-moment exists, should be amplified by a factor $\gtrsim 10^{17}$![]() , and more if one believes there is good evidence for an interstellar future, animal consciousness, alien intelligences, or the existence of a multiverse. Unless one is unduly prejudiced against it – Presumptuously invoking the absurdly small prior probabilities that are the problem in the SIA – the principle behind the Doomsday Argument impels one into believing nobody else in existence is conscious, not even your past and future selves.Footnote 4

, and more if one believes there is good evidence for an interstellar future, animal consciousness, alien intelligences, or the existence of a multiverse. Unless one is unduly prejudiced against it – Presumptuously invoking the absurdly small prior probabilities that are the problem in the SIA – the principle behind the Doomsday Argument impels one into believing nobody else in existence is conscious, not even your past and future selves.Footnote 4

One might object that ‘me, now’ is a superficial class, that everyone in the real world is just as unique and unrepresentative, so there is no surprise that you are you instead of everything else. This is invalid according to unrestricted Copernican reasoning. You are forced to grapple with the fundamental problem that your solipsistic model has only one possible ‘outcome’ but realist models have many because there really do exist many different people – in the same way that drawing a royal flush from a stack of cards on the first try is very strong evidence it is rigged even if every possible draw is equally unlikely with a randomly shuffled deck. Indeed, we can use trivial identifying details to validly make inferences about external phenomena, like in the urn problems used as analogies for the Doomsday Argument. The x-day Argument prevent us from saving the Doomsday Argument by appealing to the unlikelihood of being born near a ‘special time’ in the future. There exists an N x that is small for each individual in any population.

A later section will provide a way out of solipsism even if one accepts the SSA. This is to fine-grain the solipsism hypothesis by making distinctions about what the sole observer hallucinates, or which of all humans is the ‘real’ one. The distinctions between specific observers are thus important and cannot be ignored. In the context of the Doomsday Argument, we can ask what is the likelihood that the sole solipsist observer imagines themself to have a birthrank of 1011 instead of 1 or 1050. Indeed, I will argue that fine-graining is an important part of understanding the role of typicality: it imposes constraints that limit its use in Doomsday Arguments.

The anti-Copernican conclusion of the maximal Copernican Principle

The Copernican Principle is inherently unstable when adopted uncritically. Even a small perturbation to one's initial prior, a sliver of doubt about there really being 109 billion people born so far, is magnified to the point where it can completely dominate one's views about the existence of other beings. This in turn leads to epistemic instability, as everything one has learned from the external world is thrown into doubt. Although the SSA started out as a way of formalizing the Copernican Principle, it has led to what may be considered an anti-Copernican conclusion in spirit. Weaker Copernican principles suggest that you consider yourself one of many possible minds, not considering yourself favoured, just as the Earth is one of many planets and not the pivot of the Universe. But this strong formulation suggests that you are the only kind of mind, unique in all of existence. Instead of a panoply of intelligences, we get at most an endless procession of copies of you and no one else. Like the anthropic principle, the most extreme versions of the Copernican principle presumptuously tell you that you are fundamental.

Deconstructing typicality

Why is typicality invoked at all?

Typicality, the principle behind the SSA, has been invoked to explain how we can conclude anything at all in a large Universe. Since the Universe appears to be infinite, all possible observations with nonzero probability will almost surely be made by some observers somewhere in the cosmos by the anthropic principle. That is, the mere fact that there exists an observer who makes some observation has likelihood 1 in every cosmology where it is possible. Furthermore, a wide range of observations is possible in any given world model. Quantum theories grant small but nonzero probabilities for measurements that diverge wildly from the expected value: that a photometer will detect no photons if it is pointed at the Sun, for example, or that every uranium nucleus in the Earth will spontaneously decay within the next ten seconds. Most extreme are Boltzmann brains: any possible observer (for a set of physical laws) with any possible memory can be generated wherever there is a thermal bath. Thus we expect there exist observers who make any possible observation in infinite cosmologies that can sustain cognition. Without an additional principle to evaluate likelihoods, no evidence can ever favour one theory over another and science is impossible (Bostrom, Reference Bostrom2002; Bousso et al., Reference Bousso, Freivogel and Yang2008; Srednicki and Hartle, Reference Srednicki and Hartle2010; Bostrom, Reference Bostrom2013).

The common solution has been to include indexical information in our distributions. Indexicals are statements relating your first-person experience to the outside world. They are not meaningful for a third-person observer standing outside the world and perceiving its entire physical content and structure. The SSA, and the SIA in reply, attempt to harness indexicals to learn things about the world: they convert the first-person statement into a probabilistic objective statement about the world, by treating you as a ‘typical’ observer. Frequently, the physical distinctions between observers are left unspecified, as if they are intrinsically identical and only their environments are different.

When we make an observation, we do at least learn the indexical information that we are an observer with our data D. The idea of these arguments, then, is to construct a single joint distribution for physical theories about the third-person nature of the cosmos and indexical theories about which observer we are. Often this is implicit – rather than having specified theories about which observer we are, indexical information is evaluated with an indexical likelihood, the fraction of observers in our reference class with data D. While all possible observations are consistent with a theory in an infinite Universe, most observations will be clustered around a typical value that indicates the true cosmology (Bostrom, Reference Bostrom2013). Thus the indexical likelihoods result in us favouring models that predict most observers have data similar to ours over those where our observations are a fluke.

Typicality is usually fine for most actual cosmological observations, but it yields problematic conclusions when attempting to choose between theories with different population sizes. These problems will motivate the development of Fine-Graining with Attached Indexicals (FGAI) approach to typicality over the next sections.

Sleeping Beauty as three different thought experiments

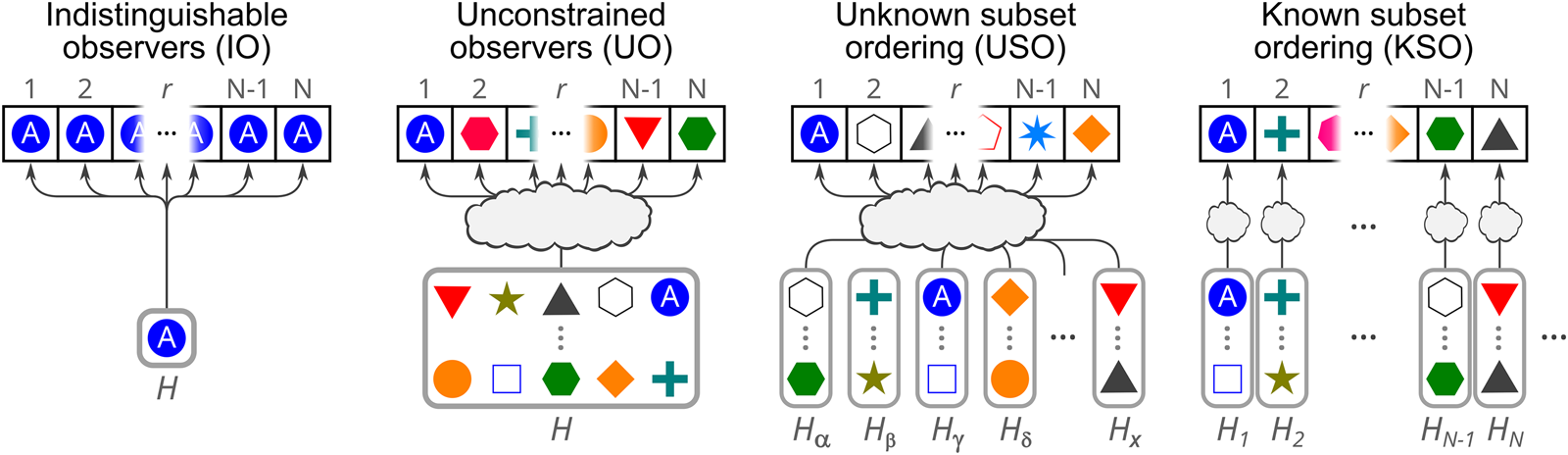

The core of the Doomsday Argument, and many similar ‘Copernican’ arguments, can be modelled with a thought experiment known as the Sleeping Beauty problem. Imagine that you are participating in experiment in which you wake up in a room either on just Monday or on both Monday and Tuesday. Each day your memory of any previous days has been wiped, so you have no sense of which day it is. Now, suppose you knew that the experiment was either Short, lasting for just Monday, or Long, lasting for Monday and Tuesday. In the original formulation of the thought experiment (SB-O), the experimenters flip a coin, running the Short version if it came up Heads and the Long version if it came up Tails (Elga, Reference Elga2000). You wake up in the room, not knowing how the coin landed, ignorant of whether you are in a Short run or a Long run. What probability should you assign to the possibility that the coin landed heads and the run is Short, 1/2 or 1/3 (Fig. 1)?Footnote 5

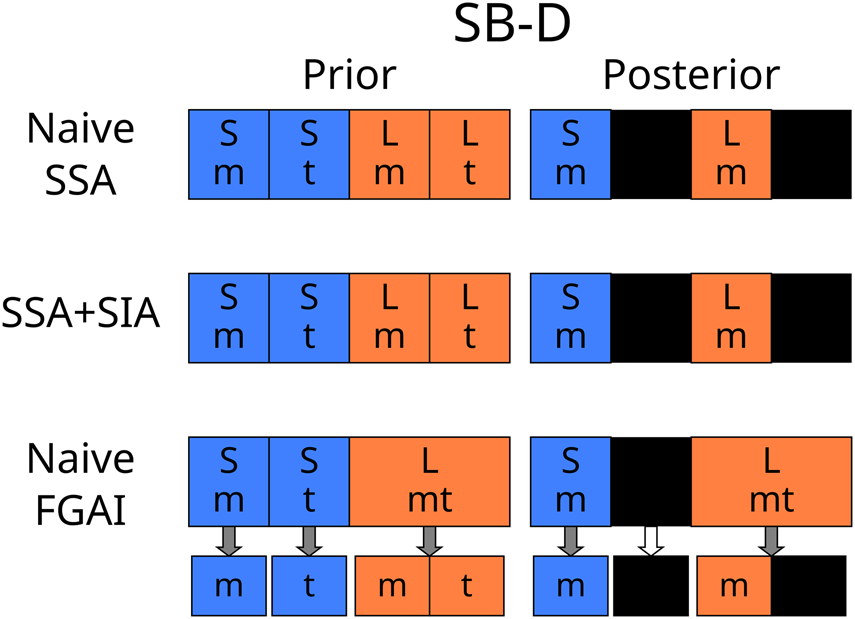

Fig. 1. The Sleeping Beauty variants SB-A, SB-B, and SB-C, illustrating how different theories of typicality handle Bayesian credence, before and after learning it is Monday (m) instead of Tuesday (t). Ruled out hypotheses are coloured in black and do not count towards the normalization. The SSA, with or without the SIA, leads to presumptuous conclusions in SB-B. In FGAI, indexical and physical distributions are not mixed. Instead, there is an overarching physical distribution, and each model has an associated indexical probability distribution (indicated by the arrows).

According to the ‘halfer’ camp, it is obvious that you have absolutely no basis to choose between Short and Long because the coin is fair, and that you should have an uninformative prior assigning weight 1/2 to each possibility.Footnote 6

The ‘thirder’ camp instead argues that it is obvious that you are more likely to wake up on any particular day in the Long experiment. That is, there are three possibilities – Short and Monday, Long and Monday, and Long are Tuesday – so the odds of Short and Monday are 1/3. Treating these hypothetical days with equal weight is not as facetious as it sounds: if the experiment was run a vast number of times with random lengths, 1/3 of all awakenings really would be in Short variations. One could even construct bets that favour odds of 1/3.

And what if the experimenters then tell you it is Monday? In the Short theory, there is a 100% chance that today is Monday, but in the Long theory, there is just a 50% chance. If you initially adopted 1/2 as the probability in Short, you now favour Short with a credence of 2/3. In fact, this the basic principle of the Doomsday Argument. On the other hand, if you initially adopted 1/3 as the probability in Long, you now have even credences in Short and Long. And indeed, if many such experiments were being run, half of the Monday awakenings occur in the Long runs. Still, this leads to the odd situation where we start out fairly confident that we are in a Long experiment. It devolves into the Presumptuous Philosopher problem, forcing us to start out virtually certain that we live in a universe with many inhabitants. Just as in the Doomsday Argument, we are drawing confident conclusions about the Universe without actually looking at anything but ourselves.

The conventional Sleeping Beauty thought experiment has been used to compare the SSA and competing principles (Neal, Reference Neal2006), but it conceals some very different situations. This is why it is not immediately obvious the probability is 1/2 or 1/3. The following versions of the experiment clarify this distinction (see Fig. 1):

-

(SB-A) You know that the experiments proceed with a Short run followed by a Long run of the experiment, and you are participating in both. Today you wake up in the room with no memories of yesterday. With what probability is today one the day you awaken during the Short run?

-

(SB-B) The experimenters have decided, through some unknown deterministic process, to run only either the Short or the Long version of the experiment. You have absolutely no idea which one they have decided upon. Today you wake up in the room with no memories of yesterday. What credence should you assign to the belief that the experimenters have chosen to do the Short run?

-

(SB-C) The experimenters have decided, through some unknown deterministic process, to run only either the Short or a variant of the Long version of the experiment. In the modified Long experiment, they run the experiment on both Monday and Tuesday, but only wake you on one of the two, chosen by another unknown deterministic process. Today you wake up in the room. What credence should you assign to the belief that the experimenters have chosen to do the Short run?

In the Doomsday Argument, we essentially are in SB-B: we know that we are ‘early’ in the possible history and want to know if we can conclude anything about conscious observers at ‘later’ times. Invocations of typicality then presume a similarity between either SB-A or SB-C to SB-B. Yet these analogies are deeply flawed. Both SB-A and SB-C have obvious uninformative priors yielding the same result with or without the SIA, but they point to different resolutions of the Sleeping Beauty problem: 1/3 for SB-A and 1/2 for SB-C.Footnote 7 Thus thought experiments lead to ambiguous conclusions (for example, Leslie Reference Leslie1996's ‘emerald’ thought experiment motivates typicality by noting that we should a priori consider it more likely we are in the ‘Long’ group in a situation like SB-A because they are more typical, but this could be viewed as support for the SIA).

SB-A and SB-B leave us with indexical uncertainty. In SB-A, this is the only uncertainty, with all relevant objective facts of the world known with complete certainty. Because only an indexical is at stake, there can be no Presumptuous Philosopher problem in SB-A – you are already absolutely certain of the ‘cosmology’. But although it has been reduced to triviality in SB-A, there is actually a second set of credences for the third-person physical facts of this world: our 100% credence in this cosmology, with both a Short and Long run. SB-B instead posits that you are not just trying to figure out where you are in the world, but the nature of the world in the first place.

SB-C and SB-B leave us with objective uncertainty about the physical nature of the world itself. The objective frequentist probability that you are in the Short run with SB-B is neither 1/2 nor 1/3 – it is either 0 or 1. Instead the Bayesian prior is solely an internal one, used by you to weigh the relative merits of different theories of the cosmology of the experiment. Thus, it makes no sense to start out implicitly biased against the Short run, so the probability 1/2 is more appropriate for a Bayesian distribution. The big difference between SB-B and SB-C is that there are two possible physical outcomes in SB-C's Long variant, but only one in SB-B's. If we knew whether the experiment was Short or Long, we could predict with 100% certainty what each day's observer will measure. But in SB-C, ‘a participant awakened on Monday’ is not a determined outcome in the SB-C Long theory.

Separating indexical and physical facts

According to typicality arguments, indexical and physical propositions can be mixed, but in this paper I regard them as fundamentally different. Indexicals are like statements about coordinate systems: they can be centred at any arbitrary location, but that freedom does not fundamentally change the way the Universe objectively works. It follows that purely indexical facts cannot directly constrain purely physical world models. The Presumptuous Philosopher paradoxes are a result of trying to force indexical data into working like physical data.

Since Bayesian updating does work in SB-A and SB-C, we can suppose there are in fact two types of credence distributions, physical and indexical. In FGAI there is an overarching physical distribution describing our credences in physical world models. Attached to each physical hypothesis is an indexical distribution (Fig. 1). Each indexical distribution is updated in response to indexical information (c.f., Srednicki and Hartle, Reference Srednicki and Hartle2010). For example, in the Sleeping Beauty thought experiment, when the experimenter announces it is the first day of the experiment, you learn both an physical fact (‘a participant wakes up on Monday’) and an indexical fact (‘I'm the me waking on Monday’). The physical distribution is insulated from changes in the indexical distribution, protecting it from the extremely small probabilities in both the SSA and SIA. Thus, in SB-B, learning ‘today is Monday’ only affects the indexical distribution, invalidating a Doomsday-like argument. Within the context of a particular world-model, one may apply typicality assumptions like the SSA/SIA to the indexical assumption.

A fourth variant of the Sleeping Beauty thought experiment will complicate this attempted reconciliation:

-

(SB-D) The experimenters have decided, through some unknown deterministic process, to run only either a modified Short or the standard (SB-B) Long version of the experiment. This version of the Short experiment is identical to the Long variant in SB-C: the experimenters wake you up on one of Monday or Tuesday, chosen through an unknown process. Today you wake up in the room. What credence should you assign to the belief that the experimenters have chosen to do the Short run?

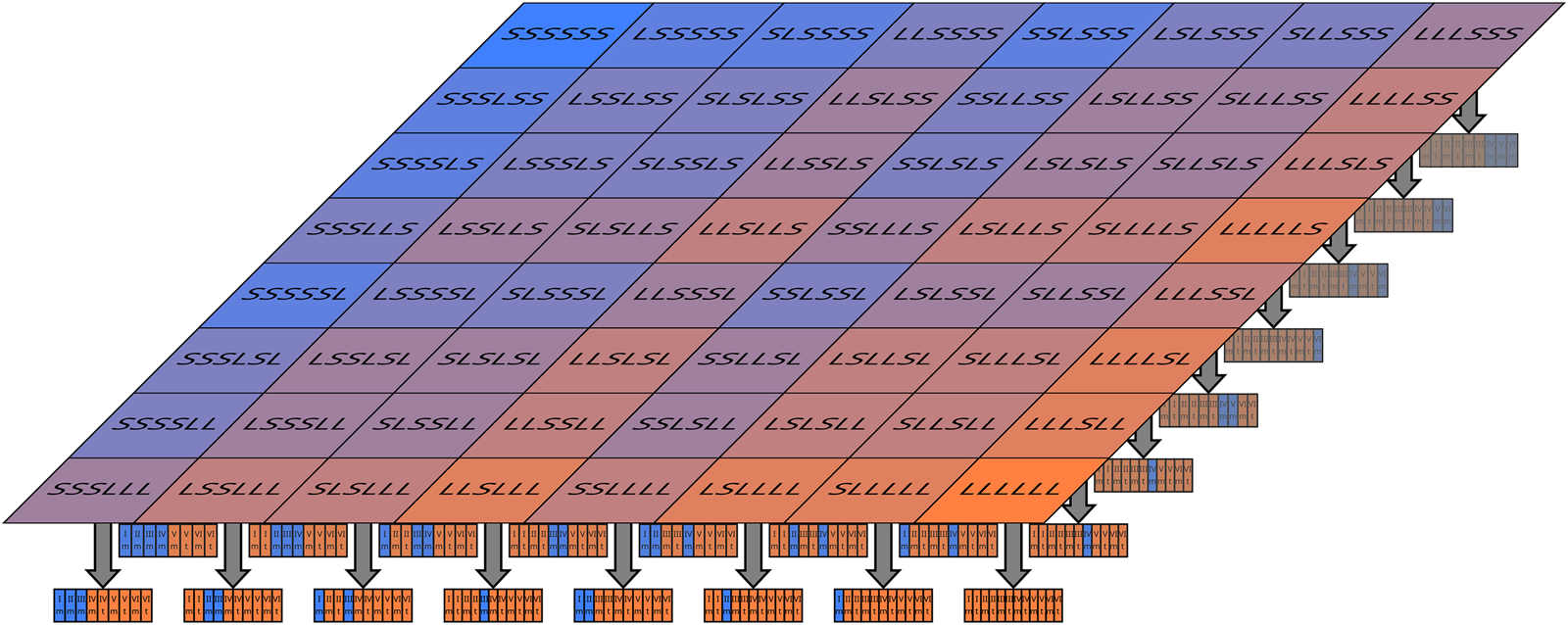

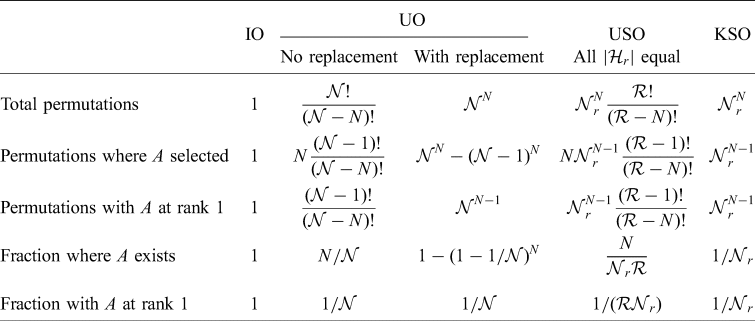

Both the SSA and SIA yield the natural result of equal credences in Short and Long before and after learning it is Monday, while a simple separation of indexical and physical facts favours Long (Fig. 2). This situation is distinct from the Doomsday Argument because it is not possible for only high N x humans to exist without the low N x ones. An attempt to address this issue will be made in the section on Weighted Fine Graining (WFG).

Fig. 2. SB-D is a variant of Sleeping Beauty that is challenging for theories with separate indexical and physical distributions. More outcomes are instantiated in the Long theory than in either Short microhypothesis, thus seemingly favouring the Long theory unless the SSA is applied.

The astrobiological relevance of Sleeping Beauty

The variants of the Sleeping Beauty thought experiment are models of ‘Copernican’ arguments about questions in astrobiology. In all these cases, we are interested in the existence and nature of beings who are unlike us in some way. In these analogies, humanity might be likened to a ‘Monday’ observer, and we are considering ‘Tuesday’ beings like those in the clouds of Jupiter, around red dwarfs, or in different types of galaxies or different cosmological epochs. With that analogy in mind, consider these hypothetical scenarios in astrobiology:

-

(AB-A) We start out already knowing that the Milky Way disk, Milky Way bulge, and M33 disk are inhabited (with M33 lacking a major bulge), and that the number of inhabitants in these three regions is similar. We know we are in one of these three regions, but not which one. Are we more likely to be in the Milky Way or M33? Are we more likely to be in a spiral disk or a bulge? What if we learn we are in a disk?

-

(AB-B) We start out knowing that we live in the Milky Way's disk. We come up with two theories: in theory S, intelligence only evolves in galactic disks, but in theory L, intelligence evolves in equal quantities in galactic disks and bulges. Does the Copernican principle let us favour theory S?

-

(AB-C) We have two theories: in theory S, intelligence only evolves in galactic disks. In theory L, intelligence either evolves only in galactic disks or only in galactic bulges but not both, with equal credence in either hypothesis. We then discover we live in a galactic disk. Do we favour theory S or theory L?

-

(AB-D) We do not know our galactic environment because of our limited observations, but from observations of external galaxies we suspect that galactic disks and bulges are possible habitats for ETIs. Theory S is divided into two hypotheses: in S 1, intelligence only evolves in galactic disks and in S 2, it evolves only in galactic bulges. Theory L proposes that intelligence is evolves in both disks and bulges. How should we apportion our credence in S 1, S 2, and L? Once we learn the Earth is in the galactic disk, how do our beliefs change?

Put this way, it is clear that neither AB-A nor AB-C are like our current astrobiological questions, and thus neither are SB-A nor SB-C. In AB-A, we already have an answer to the question of whether galactic bulges are inhabited, we are merely uncertain of our address! AB-C, meanwhile, is frankly bizarre: we are seemingly convinced that intelligence cannot evolve in both environments, as if the mere existence of intelligence in galactic bulges always prevents it from evolving in galactic disks. Since we start out knowing there is life on Earth and we wonder if there is life in non-Earthly environments, clearly AB-B – and SB-B – is the better model of astrobiology.

AB-D is an interesting case, though, and it too has relevance for astrobiology. In the 18th century, it was not clear whether Earth is in the centre of the Milky Way or near its edge, but the existence of ETIs was already a well-known question (Crowe, Reference Crowe1999). AB-D really did describe our state of knowledge about different regions of the Milky Way being inhabited back then. In other cases, the history is more like AB-B; we discovered red dwarfs and elliptical galaxies relatively late. As the distinction between AB-B and AB-D basically comes down to the order of discoveries in astronomy, it suggests AB-B and AB-D should give similar results in a theory of typicality, with the same true for SB-B and SB-D.

The frequentist limit and microhypotheses

Finally, it's worth noting that the frequentist 1/3 probability slips back in for a frequentist variant:

-

(SB-Bn) You know for certain that the experiment is being run for n times where n ≫ 1. Whether a given run is Short or Long is determined through some deterministic but pseudo-random process, such that any possible sequence of Shorts and Longs is equally credible from your point of view. Each day, you wake up with an identical psychological state. Today you wake up in the room. What credence should you assign to the belief that today is happening during a Short run (c.f., the ‘Three Thousand Weeks’ thought experiment of Bostrom, Reference Bostrom2007)?

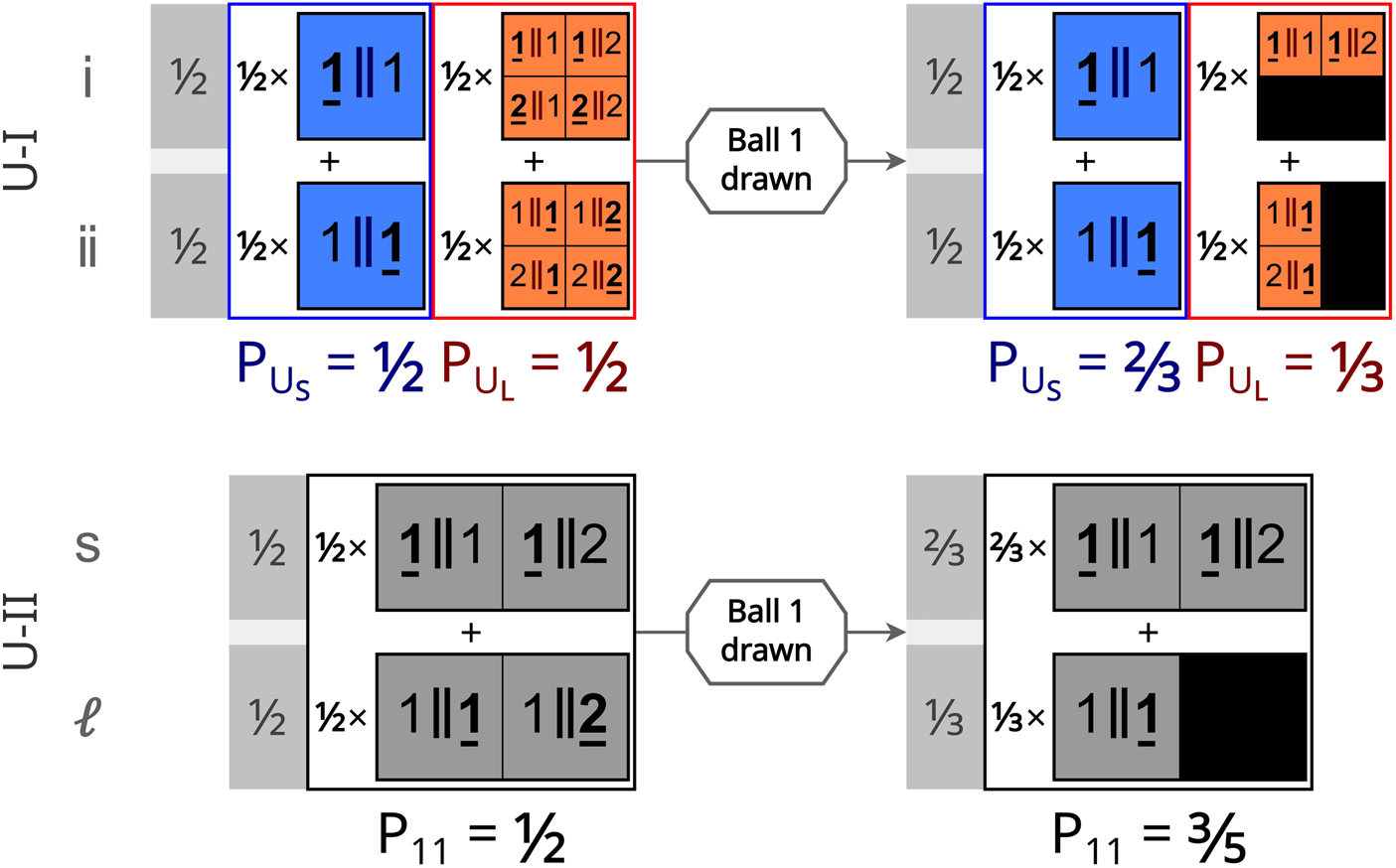

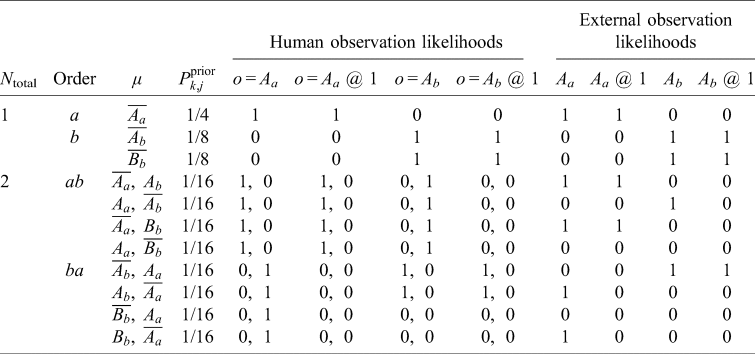

There are now 2n competing physical hypotheses, one for each possible sequence of Shorts and Longs. In most of these hypotheses, however, about half of the runs are Short and Long, and only about one-third of the observers are in the Short runs through simple combinatorics (Fig. 3). Thus, as n tends to infinity, the coarse-grained physical distribution converges to one like SB-A, with unbalanced Short/Long runs being a small outlier. We can then say in any likely scenario, the probability we are in a Short run is ~1/3.

Fig. 3. If the Sleeping Beauty experiment is run many times, with each possible sequence of Long and Short a priori equally likely, a vast number of microhypotheses about the sequence of Short and Long is generated. Shown here are the 64 microhypotheses when there are n = 6 runs. Attached to every single one of these fine models is an indexical distribution.

SB-Bn calls to mind statistical mechanics, where a vast number of physical microstates are grouped into a small number of distinguishable macrostates. By analogy, I call each possible detailed world model a microhypothesis, which are then grouped into macrotheories defined by statistical properties.

Replacing typicality

The fine-grained approach to typicality

How are we to make inferences in a large universe, then, without directly mixing indexicals into the credence distribution? I propose that most of the work performed by typicality can instead be performed by fine-graining. Fine-graining of physical theories is the first principle of FGAI.

The common practice is to treat observers in a fairly large reference class as interchangeable when discussing their observations, but this is merely a convenience. In fact, we can make fine distinctions between observers – between Earth and an inhabited planet in Hubble volume # 239,921, for example, or between me and you, or even between you now and you last Thursday. Macrotheories often cannot predict exactly which specific observer measures a particular datum. Thus, every theory is resolved into myriads of microhypotheses, each of which does make these predictions. Because the distinctions between these observers are physical, statements about a specific observer making a particular measurement are evaluated as purely physical propositions, without invoking indexicals. Some microhypotheses will be consistent with the data, others will not be. The resulting credences in the macrotheories are entirely determined by summing the posterior weight over all microhypotheses, in many cases through simple counting arguments. Typicality then follows from the likelihood values of the microhypotheses – as it indeed does in conventional probability, where specific ‘special’ events like getting a royal flush from a randomly shuffled deck are no more rare than specific mundane outcomes (Laplace, Reference Laplace1902). Thus, in most cases, there is no need to invoke any separate Copernican principle, because it is a demonstrable consequence of our theories.

The other main precept of FGAI is that purely indexical facts do not directly affect third-person propositions about the physical world, rather modifying indexical distributions attached to each world model. Observations must be treated as physical third-person events when constraining the physical distribution. Statements like ‘I picked ball 3 out of the urn’ must be recast into third-person statements like ‘Brian Lacki picked ball 3 out of the urn’. Each microhypothesis requires an observation model, a list of possible observations that each observer may make. Observation models necessarily impose physical constraints on which observations can be made by whom, forbidding impossible observations like ‘Hypatia of Alexandria observed that her peer was Cyborg 550-319447 of Gliese 710’ from being considered as possible outcomes.

The fine-graining is most straightforward when every microhypothesis predicts that all physically indistinguishable experiments lead to the same outcome. This follows when we expect conditionalization to entirely restrict possible observations. For example, the Milky Way could be the product of an indeterministic quantum fluctuation in the Big Bang, but copies of our Earth with its data (e.g. photographs of the Milky Way from inside) do not appear in elliptical galaxies except through inconceivably contrived series of coincidences. More difficult are purely indeterministic cases, when any specific observer can observe any outcome, which is true for most quantum experiments. I will argue that even then we can form microhypotheses by assuming the existence of an appropriate coordinate system.

A more serious difficulty is what to do when different plausible indexical hypotheses would lead to different likelihood evaluations for microhypotheses. That is, we may not know enough about where we are to determine whether a microhypothesis predicts an observation or not. In this section, I will adopt the perspective that I call Naive FGAI: we adopt the maximum possible likelihood over all observers we could be.

Naive FGAI

FGAI constructs the physical probability distributions using the Hierarchical Bayes framework, dividing theories into finer hypotheses about internal parameters, possibly with intermediate levels. Suppose we have M macrotheories ${\Theta }_1,\; \, {\Theta }_2,\; \, \ldots,\; \, {\Theta }_M$![]() . Each macrotheory ${\Theta }_k$

. Each macrotheory ${\Theta }_k$![]() has m k microhypotheses $\mu _{k, 1},\; \, \mu _{k, 2},\; \, \ldots ,\; \, \mu _{k, m_k}$

has m k microhypotheses $\mu _{k, 1},\; \, \mu _{k, 2},\; \, \ldots ,\; \, \mu _{k, m_k}$![]() . Each microhypothesis inherits some portion of its parent macrotheory's credence or weight. Sometimes, when the microhypotheses correspond to exact configurations resulting from a known probabilistic (e.g. flips of an unfair coin), then the prior credence in each $P_{k, j}^{{\rm prior}}$

. Each microhypothesis inherits some portion of its parent macrotheory's credence or weight. Sometimes, when the microhypotheses correspond to exact configurations resulting from a known probabilistic (e.g. flips of an unfair coin), then the prior credence in each $P_{k, j}^{{\rm prior}}$![]() can be calculated by scaling the macrotheory's total prior probability accordingly. In other cases, we have no reason to favour one microhypothesis over another, and by the Principle of Indifference, we assign each microhypothesis in a macrotheory equal prior probability: $P_{k, j}^{{\rm prior}} = P_k^{{\rm prior}} / m_k$

can be calculated by scaling the macrotheory's total prior probability accordingly. In other cases, we have no reason to favour one microhypothesis over another, and by the Principle of Indifference, we assign each microhypothesis in a macrotheory equal prior probability: $P_{k, j}^{{\rm prior}} = P_k^{{\rm prior}} / m_k$![]() . Some macrotheories are instead naturally split into mesohypotheses describing intermediate-level parameters, which in turn are fine-grained further into microhypotheses. Mesohypotheses are natural when different values of these intermediate-level parameters result in differing numbers of outcomes – like if a first coin flip determines the number of further coin flips whose results are reported. Finally, in each μ k,j, we might be found at any of a number of locations. More properly, as observers we follow trajectories through time, following a sequence of observations at particular locations, as we change in response to new data (c.f., Bostrom, Reference Bostrom2007). The set ${\cal O}_{k, j} ( D)$

. Some macrotheories are instead naturally split into mesohypotheses describing intermediate-level parameters, which in turn are fine-grained further into microhypotheses. Mesohypotheses are natural when different values of these intermediate-level parameters result in differing numbers of outcomes – like if a first coin flip determines the number of further coin flips whose results are reported. Finally, in each μ k,j, we might be found at any of a number of locations. More properly, as observers we follow trajectories through time, following a sequence of observations at particular locations, as we change in response to new data (c.f., Bostrom, Reference Bostrom2007). The set ${\cal O}_{k, j} ( D)$![]() is the set of possible observer-trajectories we could be following allowed by the microhypothesis μ k,j and the data D. In Naive FGAI, the set ${\cal O}_{k, j}$

is the set of possible observer-trajectories we could be following allowed by the microhypothesis μ k,j and the data D. In Naive FGAI, the set ${\cal O}_{k, j}$![]() describes our reference class if μ k,j is true.

describes our reference class if μ k,j is true.

In Naive FGAI, prior credences $P_{k, j}^{{\rm prior}}$![]() in μ k,j are updated by data D according to:

in μ k,j are updated by data D according to:

where ${\scr P}( o @ i \to D \vert \mu _{k, j})$![]() is the likelihood of μ k,j if the observer (o) located at position i in ${\cal O}_{k, j} ( D)$

is the likelihood of μ k,j if the observer (o) located at position i in ${\cal O}_{k, j} ( D)$![]() observes data D. Of course if the likelihoods are equal for all observers in the reference class ${\cal O}_{k, j} ( D)$

observes data D. Of course if the likelihoods are equal for all observers in the reference class ${\cal O}_{k, j} ( D)$![]() , equation (6) reduces to Bayes’ formula. The posterior credences in the macrotheories can be found simply as:

, equation (6) reduces to Bayes’ formula. The posterior credences in the macrotheories can be found simply as:

Naive FGAI is sufficient to account for many cases where typicality is invoked. Paradoxes arise when the number of observers itself is in question, as in Doomsday, requiring a more sophisticated treatment.

A simple urn experiment

In some cases, microhypotheses and observations models are nearly trivial. Consider the following urn problem: you are drawing a ball from an urn placed before you that contains a well-mixed collection of balls, numbered sequentially starting from 1. You know the urn contains either one ball (theory A) or ten (theory B), and start with equal credence in each theory. How does drawing a ball and observing its number constrain these theories? Both theory A and theory B have microhypotheses of the form ‘The urn contains N balls and ball j is drawn at the time of experiment’. In theory A, there is only one microhypothesis, which inherits the full 50% of Theory A's prior credence. Theory B has 10 microhypotheses, one for each possible draw and each of equal credence, so its microhypotheses start with 5% credence each. Each microhypotheses about drawing ball j has an observation model containing the proposition that you observe exactly ball j – this observation is a physical event, since you are a physical being.

Then the likelihoods of an observed draw is either 1 (if the ball drawn is that predicted in the microhypothesis) or 0 (if the ball is not the predicted one). If we draw ball 3, for example, the credence in all hypotheses except Theory B's ‘Ball 3 is drawn’ microhypothesis is zero. Then the remaining microhypothesis has 100% credence, and Theory B has 100% credence as well. If instead ball 1 is drawn, Theory A's sole microhypothesis and one of Theory B's microhypotheses survive unscathed, while the other nine microhypotheses of Theory B are completely suppressed. That is, 100% of Theory A's credence survives, while only 10% of Theory B's credence remains; therefore, post-observation, the credence in Theory A is 10/11 and the credence in Theory B is 1/11. This, of course, matches the usual expectation for the thought experiment.

But what if instead you and 99 other attendees at a cosmology conference were drawing from the urn with replacement and you were prevented from telling each other your results? If we regard all one hundred participants as exactly identical observers, the only distinct microhypotheses seem to be the frequency distribution of each ball j being drawn. It would seem that there could be no significant update to Theory B's credence if you draw ball 1: all you know is that you are a participant-observer and there exists an observer-participant who draws ball 1, which is nearly certain to be true. Inference would seem to require something like the SSA. Yet this is not necessary in practice because if this experiment were carried out at an actual cosmology conference, the participants would be distinguishable. We then can fine-grain Theory B further by listing each attendee by name and specifying which ball they draw for each microhypothesis, and forming an observation model where names are matched to drawn balls. For example, we could order the attendees by alphabetical order and each microhypothesis would be a 100-vector of integers from 1 to 10.

With fine-graining, there is only 1100 = 1 microhypothesis in Theory A – all participants draw ball 1 – but 10100 microhypotheses in Theory B. In only 1099 of B's microhypotheses do you specifically draw ball 1 and observe ball 1. Thus only 10% of Theory B's microhypotheses survive your observation that you drew ball 1. The credence in Theory B is again 1/11, but is derived without appealing to typicality. Instead, typicality follows from the combinatorics. The original one-participant version of this thought experiment can be regarded as a coarse-graining of this 100-participant version, after marginalizing over the unknown observations of the other participants.

Implicit microhypotheses: A thought experiment about life on Proxima b

In other cases, the microhypotheses can be treated as abstract, implicit entities in a theory. A theory may predict an outcome has some probability, but provide no further insight into which situations actually lead to the outcome. This happens frequently when we are actually trying to constrain the value of a parameter in some overarching theory that describes the workings of unknown physics. Not only do we not know their values, we have no adequate theories to explicitly predict them. Historical examples include the terms of the Drake equation and basic cosmological parameters like the Hubble constant. Yet these parameters are subject to sampling variance; some observers in a big enough Universe should deduce unusual values far from their expectation values. Naive FGAI can be adapted for such cases by positing there are implicit microhypotheses that we cannot specify yet. The probability that we observe an outcome is then treated as if it is indicating the fraction of microhypotheses where that outcome occurs.

Suppose we have two models about the origin of life, L-A and L-B, both equally plausible a priori. L-A predicts that all habitable planets around red dwarfs have life. L-B predicts that the probability that a habitable zone planet around a red dwarf has life is 10−100.Footnote 8 Despite this, we will suppose that the conditions required for life on the nearest potentially habitable exoplanet Proxima b are not independent of our existence on Earth, and that any copy of us in a large Universe will observe the same result. The butterfly effect could impose this conditionalization – small perturbations induced by or correlated with (un)favourable conditions on Proxima b may have triggered some improbable event on Earth necessary for our evolution.

We wish to constrain L-A and L-B by observing the nearest habitable exoplanet, Proxima b, and we discover that Proxima b does have life on it. The measurement is known to be perfectly reliable. Copernican reasoning suggests that in L-B, only one in 10−100 inhabited G dwarf planets would observe life around the nearest red dwarf, and that as typical observers, we should assign a likelihood of 10−100 to L-B. Thus, L-B is essentially ruled out.

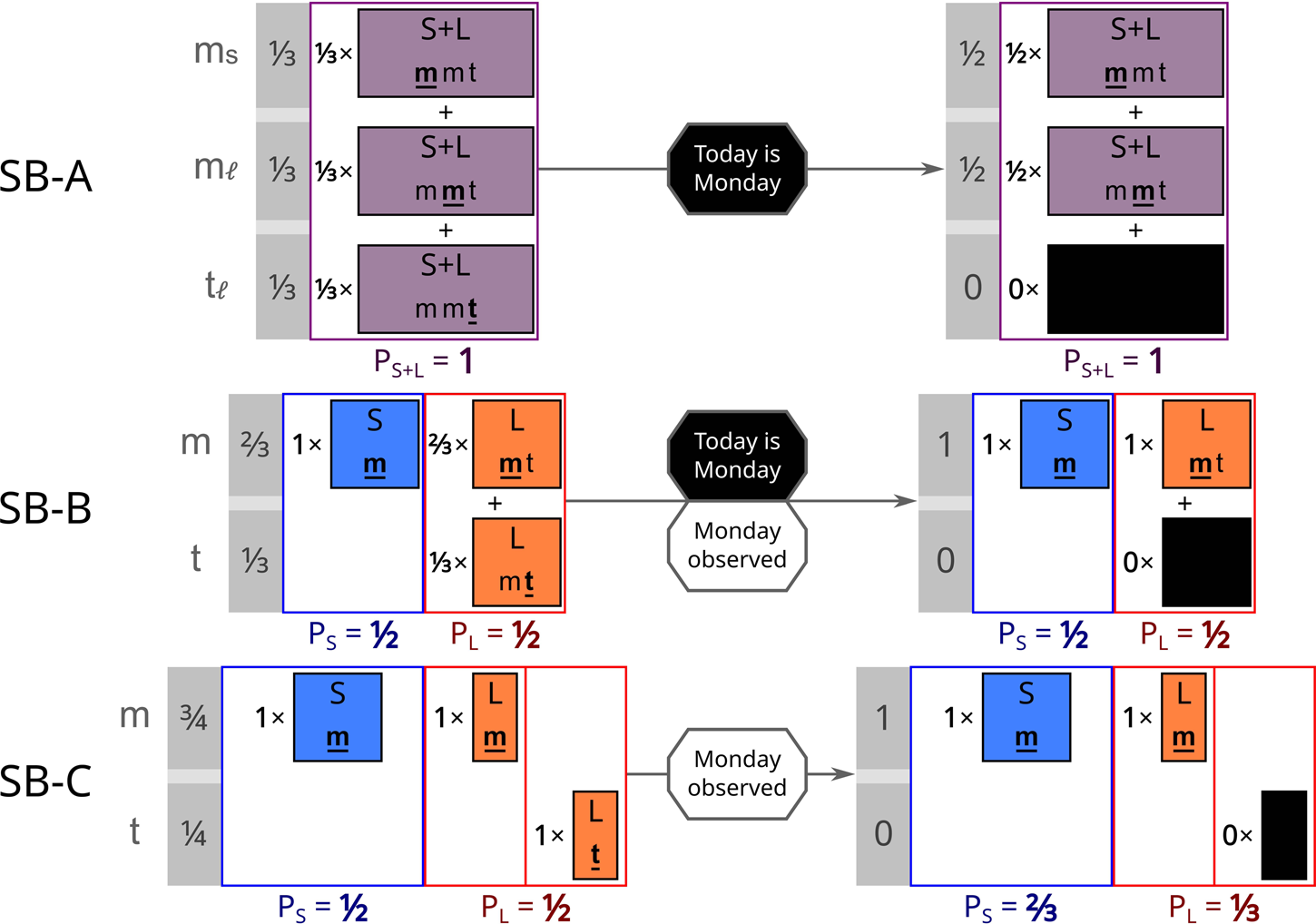

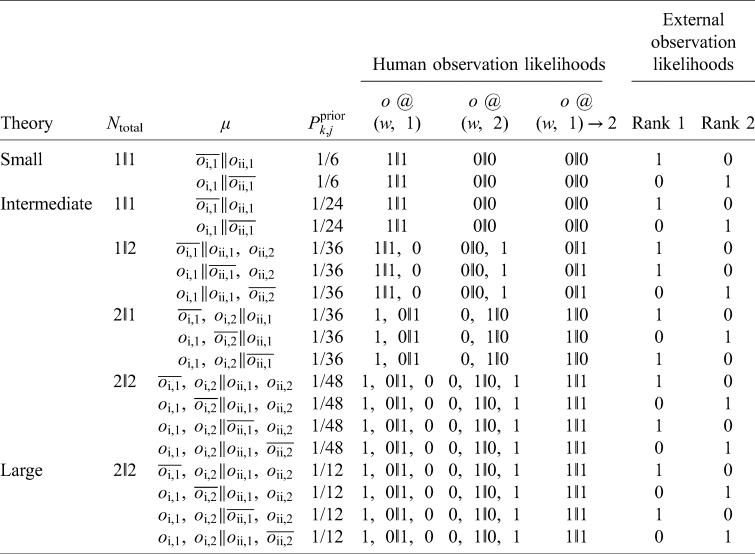

L-B does not directly specify which properties of a red dwarf are necessary for life on its planets; it merely implies that the life is the result of some unknown but improbable confluence of properties. Nonetheless, we can interpret L-B as grouping red dwarfs into 10100 equivalence classes, based on stellar and planetary characteristics. Proxima Centauri would be a member of only one of these. L-B then would assert that only one equivalence class of 10100 bears life. Thus, L-B actually implicitly represents 10100 microhypotheses, each one an implicit statement about which equivalence class is the one that hosts life (Fig. 4). In contrast, L-A has only one microhypothesis since the equivalence class contains all red dwarfs. We then proceed with the calculation as if these microhypotheses were known.

Fig. 4. Extremely simplified representation of how FGAI treats theories like L-B. Probabilities of observing a particular outcome in a macrotheory may be presumed to result from some unknown fine-scale division of parameter space into regions delineating different equivalence classes. Then microhypotheses would be constructed by considering all possible outcomes for all regions. For L-B, we observe the red dwarf nearest to Earth (Proxima $\oplus$![]() ) and see whether it is inhabited (flower symbol). The observation of an inhabited Proxima $\oplus$

) and see whether it is inhabited (flower symbol). The observation of an inhabited Proxima $\oplus$![]() reduces the number of allowed microhypotheses. Left: observers on other planets distinguishable from Earth would observe red dwarfs with different properties (Proxima X, Y, and Z) and probe different classes. Right: many identical Earths observe distinct Proxima $\oplus$

reduces the number of allowed microhypotheses. Left: observers on other planets distinguishable from Earth would observe red dwarfs with different properties (Proxima X, Y, and Z) and probe different classes. Right: many identical Earths observe distinct Proxima $\oplus$![]() (red stars). These are treated by assuming there is some indexing that allows microhypotheses to be constructed, and their likelihoods calculated by symmetry.

(red stars). These are treated by assuming there is some indexing that allows microhypotheses to be constructed, and their likelihoods calculated by symmetry.

If we observe life on Proxima b, then the sole microhypothesis of L-A survives unscathed, but implicitly only one microhypothesis of L-B of the 10100 would survive. Thus, after the observation, L-A has a posterior credence of $100\% /( 1 + 10^{-100})$![]() , while L-B has a posterior credence of 10−100/(1 + 10−100). As we would hope, FGAI predicts that we would be virtually certain that L-A is correct, which is the result we would expect if we assumed we observed a ‘typical’ red dwarf.

, while L-B has a posterior credence of 10−100/(1 + 10−100). As we would hope, FGAI predicts that we would be virtually certain that L-A is correct, which is the result we would expect if we assumed we observed a ‘typical’ red dwarf.

What of the other inhabited G dwarf planets in an infinite Universe? The nearest red dwarfs to these will have different characteristics and most of them will belong to different equivalence classes (Fig. 4). In principle, we could construct microhypotheses that specify what each type of these observers will around their nearest red dwarf, and implicitly we assume they exist. If we failed to develop the capability to determine whether Proxima b has life but trustworthy aliens from 18 Scorpii broadcast to us that their nearest red dwarf has life, the result would be the same.

Fine-graining and implicit coordinate systems

The strictest interpretation of the separation of physical and indexical facts is that we cannot constrain physical models if the observed outcome happens to any observer physically indistinguishable from us. This is untenable, at least in a large enough universe – quantum mechanics predicts that all non-zero probability outcomes will happen to our ‘copies’ in a large universe. But this would mean no measurement of a quantum mechanical parameter can be constraining. Surely if we do not observe any radiodecays in a gram of material over a century, we should be able to conclude that its half-life is more than a nanosecond, even though a falsely stable sample will be observed by some copy of us out there in the infinite universe.Footnote 9

Strict indeterminism is not necessary for this to be a problem, either. In the last section, we might have supposed that the existence of life on Proxima b depends on its exact physical microstate five billion years ago, and that these microstates are scattered in phase space. Yet, Proxima b is more massive than the Earth and has a vaster number of microstates – by the pigeonhole principle, in an infinite Universe, most Earths exactly identical to ours would neighbour a Proxima b that had a different microstate, opening the possibility of varying outcomes (Fig. 4).

But the separation of indexicals and physical theories need not be so strict. Indexicals are regarded in FGAI as propositions about coordinate systems. In physical theories we can and do use coordinate systems, sometimes arbitrary ones, as long as we do not ascribe undue objective significance. We can treat these situations by imposing an implicit indexing, as long as we do not ascribe undue physical significance to it. Thus, in an infinite Universe, we label ‘our’ Earth as Earth 1. The next closest Earth is Earth 2, the third closest Earth is Earth 3, and so on. We might in fact only implicitly use a coordinate system, designating our Earth as Earth 1 without knowing details of all the rest. Our observations then are translated into third-person propositions about observations of Earth 1. The definition of the coordinate system imposes an indexical distribution where we must be on Earth 1. Of course, this particular labelling is arbitrary, but that hardly matters because we would reach the same conclusions if we permuted the labels. If we came into contact with some other Earth that told us that ‘our’ Earth was Earth 3,296 in their coordinate system, that would not change our credence in a theory.

In a Large world, then, the microhypotheses consists of an array listing the outcome observed by each of these implicitly indexed observers. Only those microhypotheses where Earth (or observer) 1 has a matching observation survive. If the observed outcome contradicts the outcome assigned to Earth 1 by the microhypothesis, we cannot then decide we might actually be on Earth 492155 in that microhypothesis because Earth 492155 does observe that outcome. The coordinate system's definition has already imposed the indexical distribution on us.

There are limits to the uses of implicit coordinate systems, however, if we wish to avoid the usual Doomsday argument and its descent into solipsism. In SB-B, could we not assign an implicit coordinate system with today at index 1, and the possible other day of the experiment at index 2? A simplistic interpretation would then carve the Long run theory into two microhypotheses about which day has index 1, and learning ‘today is Monday’ leads us to favour the Short theory.