169 results

Population and contact tracer uptake of New Zealand’s QR-code-based digital contact tracing app for COVID-19

- Journal: Epidemiology & Infection / Volume 152 / 2024
- Published online by Cambridge University Press: 17 April 2024, e66
- Article
- Open access

Severe weather events and cryptosporidiosis in Aotearoa New Zealand: A case series of space–time clusters

- Journal: Epidemiology & Infection / Volume 152 / 2024
- Published online by Cambridge University Press: 15 April 2024, e64
- Article
- Open access

25 Evaluating serum copper and kidney function in a cohort of bariatric surgery patients

- Journal: Journal of Clinical and Translational Science / Volume 8 / Issue s1 / April 2024
- Published online by Cambridge University Press: 03 April 2024, p. 7
- Article
- Open access

We need to talk about values: a proposed framework for the articulation of normative reasoning in health technology assessment

- Journal: Health Economics, Policy and Law, First View
- Published online by Cambridge University Press: 27 September 2023, pp. 1-21
- Article
- Open access

Impact of measurement and feedback on chlorhexidine gluconate bathing among intensive care unit patients: A multicenter study

- Journal: Infection Control & Hospital Epidemiology / Volume 44 / Issue 9 / September 2023
- Published online by Cambridge University Press: 13 September 2023, pp. 1375-1380
- Print publication: September 2023
- Article

Holocene evolution of parabolic dunes, White River Badlands, South Dakota, USA, revealed by high-resolution mapping

- Journal: Quaternary Research / Volume 115 / September 2023
- Published online by Cambridge University Press: 26 January 2023, pp. 46-57
- Article
- Open access

Prefacing unexplored archives from Central Andean surface-to-bedrock ice cores through a multifaceted investigation of regional firn and ice core glaciochemistry

- Journal: Journal of Glaciology / Volume 69 / Issue 276 / August 2023
- Published online by Cambridge University Press: 03 November 2022, pp. 693-707
- Article
- Open access

Improving Pandemic Response With Military Tools: Using Enhanced Intelligence, Surveillance, and Reconnaissance

- Journal: Disaster Medicine and Public Health Preparedness / Volume 17 / 2023
- Published online by Cambridge University Press: 22 September 2022, e254
- Article

An analysis of the Clinical and Translational Science Award pilot project portfolio using data from Research Performance Progress Reports

- Journal: Journal of Clinical and Translational Science / Volume 6 / Issue 1 / 2022
- Published online by Cambridge University Press: 18 August 2022, e113
- Article
- Open access

The prescriber’s guide to classic MAO inhibitors (phenelzine, tranylcypromine, isocarboxazid) for treatment-resistant depression

- Journal: CNS Spectrums / Volume 28 / Issue 4 / August 2023
- Published online by Cambridge University Press: 15 July 2022, pp. 427-440
- Article
- Open access

The association between accessing dental services and nonventilator hospital-acquired pneumonia among 2019 Medicaid beneficiaries

- Journal: Infection Control & Hospital Epidemiology / Volume 44 / Issue 6 / June 2023
- Published online by Cambridge University Press: 11 July 2022, pp. 959-961
- Print publication: June 2023
- Article
- Open access

Survey of coronavirus disease 2019 (COVID-19) infection control policies at leading US academic hospitals in the context of the initial pandemic surge of the severe acute respiratory coronavirus virus 2 (SARS-CoV-2) omicron variant

- Journal: Infection Control & Hospital Epidemiology / Volume 44 / Issue 4 / April 2023
- Published online by Cambridge University Press: 16 June 2022, pp. 597-603
- Print publication: April 2023
- Article

21 - Arguing to Learn

- from Part IV - Learning Together
- Book: The Cambridge Handbook of the Learning Sciences
- Published online: 14 March 2022
- Print publication: 07 April 2022, pp. 428-447
- Chapter

Sell now or later? A decision-making model for feeder cattle selling

- Journal: Agricultural and Resource Economics Review / Volume 51 / Issue 2 / August 2022
- Published online by Cambridge University Press: 09 March 2022, pp. 343-360
- Article
- Open access

Sources of exposure identified through structured interviews of healthcare workers who test positive for severe acute respiratory coronavirus virus 2 (SARS-CoV-2): A prospective analysis at two teaching hospitals

- Journal: Antimicrobial Stewardship & Healthcare Epidemiology / Volume 1 / Issue 1 / 2021
- Published online by Cambridge University Press: 15 December 2021, e65
- Article
- Open access

Finding the balance between overtreatment versus undertreatment for hospital-acquired pneumonia

- Journal: Infection Control & Hospital Epidemiology / Volume 43 / Issue 3 / March 2022
- Published online by Cambridge University Press: 01 December 2021, pp. 376-378
- Print publication: March 2022
- Article

Risk for depression tripled during the COVID-19 pandemic in emerging adults followed for the last 8 years

- Journal: Psychological Medicine / Volume 53 / Issue 5 / April 2023
- Published online by Cambridge University Press: 02 November 2021, pp. 2156-2163
- Article

7 - Obsessive-Compulsive and Related Disorders

- Book: Introduction to Psychiatry
- Published online: 22 July 2021
- Print publication: 12 August 2021, pp. 146-165
- Chapter

Charting elimination in the pandemic: a SARS-CoV-2 serosurvey of blood donors in New Zealand

- Journal: Epidemiology & Infection / Volume 149 / 2021
- Published online by Cambridge University Press: 30 July 2021, e173
- Article
- Open access

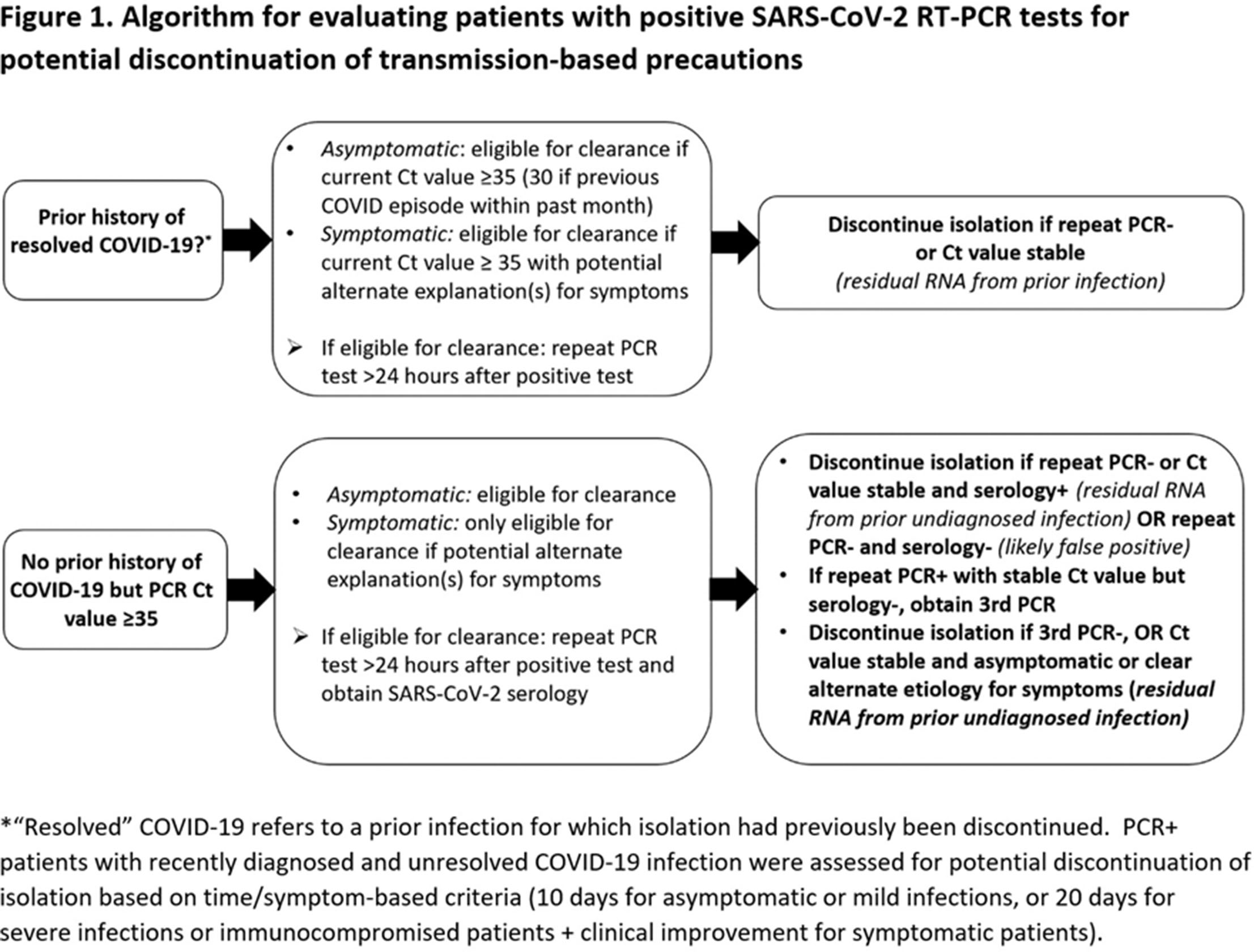

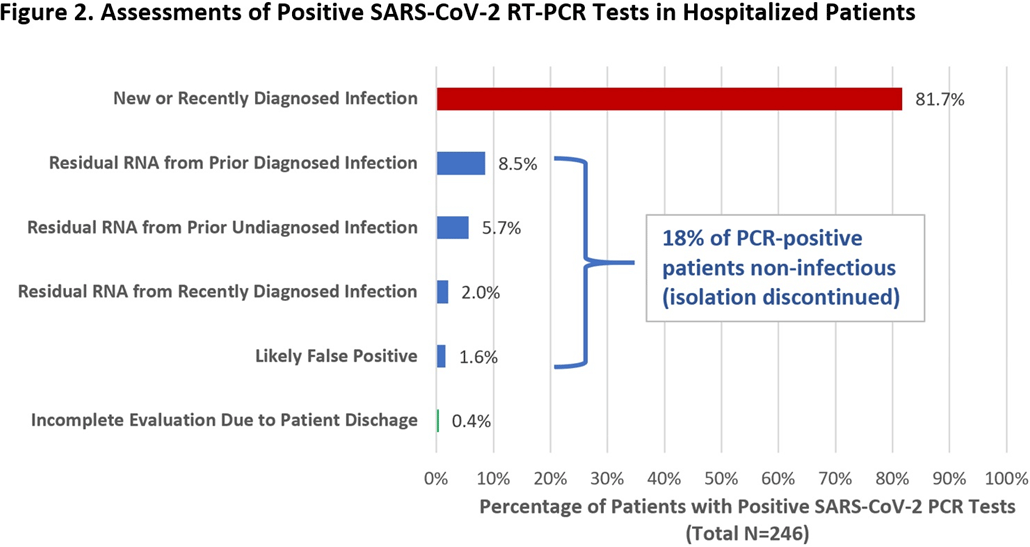

Does Every Patient with a Positive SARS-CoV-2 RT-PCR Test Require Isolation? A Prospective Analysis

- Journal: Antimicrobial Stewardship & Healthcare Epidemiology / Volume 1 / Issue S1 / July 2021
- Published online by Cambridge University Press: 29 July 2021, pp. s8-s9
- Article
- Open access