Advancing health equity through action in antimicrobial stewardship and healthcare epidemiology

- Journal: Infection Control & Hospital Epidemiology / Volume 45 / Issue 4 / April 2024
- Published online by Cambridge University Press: 14 February 2024, pp. 412-419
- Print publication: April 2024

- Article

Impact of primary care triage using the Head and Neck Cancer Risk Calculator version 2 on tertiary head and neck services in the post-coronavirus disease 2019 period

- Journal: The Journal of Laryngology & Otology, First View
- Published online by Cambridge University Press: 22 January 2024, pp. 1-6

- Article

Variations in implementation of antimicrobial stewardship via telehealth at select Veterans Affairs medical centers

- Journal: Antimicrobial Stewardship & Healthcare Epidemiology / Volume 3 / Issue S2 / June 2023
- Published online by Cambridge University Press: 29 September 2023, p. s38

- Article
- Open access

Susceptibility results discrepancy analysis between NHSN antimicrobial resistance (AR) Option and NEDSS Base System in Tennessee, July 2020–December 2021

- Journal: Antimicrobial Stewardship & Healthcare Epidemiology / Volume 3 / Issue S2 / June 2023
- Published online by Cambridge University Press: 29 September 2023, p. s104

- Article
- Open access

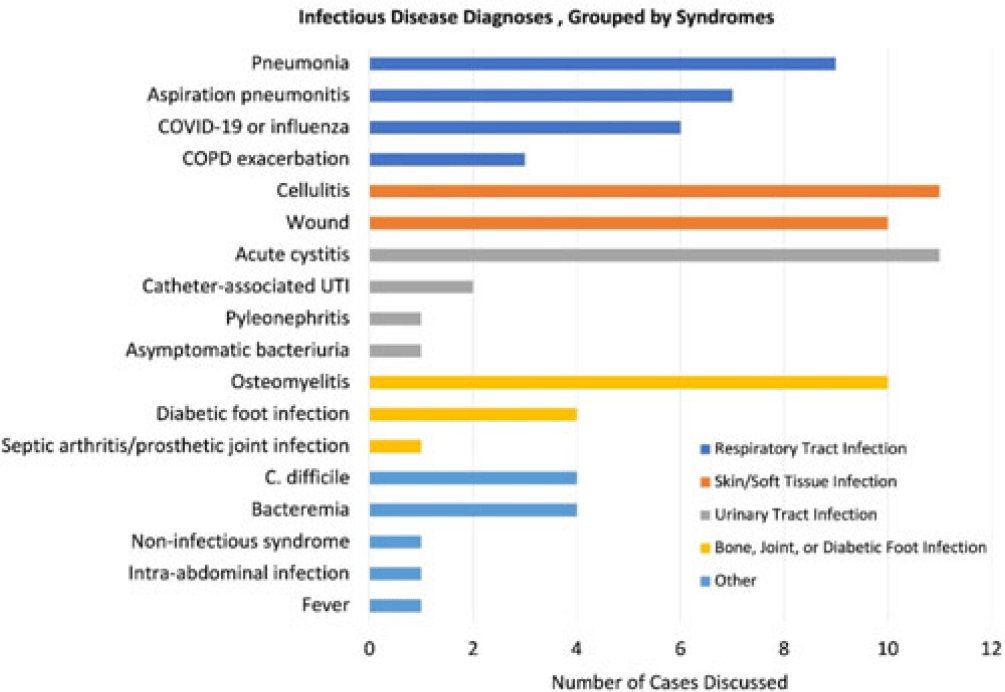

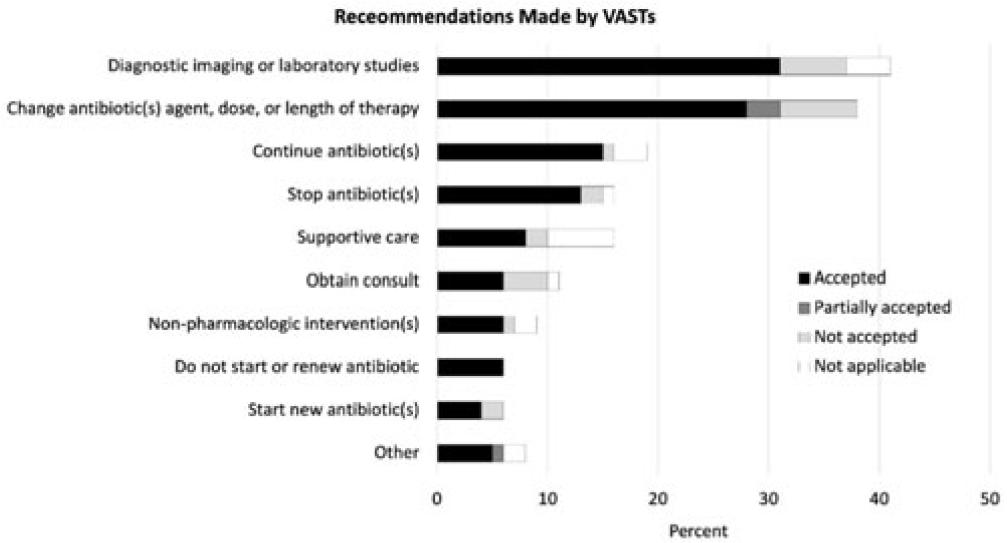

Using telehealth to support antimicrobial stewardship at four rural VA medical centers: Interim analysis

- Journal: Antimicrobial Stewardship & Healthcare Epidemiology / Volume 3 / Issue S2 / June 2023
- Published online by Cambridge University Press: 29 September 2023, p. s110

- Article
- Open access

Determining trends of respiratory tract infections in a long-term care facility pilot surveillance project

- Journal: Antimicrobial Stewardship & Healthcare Epidemiology / Volume 3 / Issue S2 / June 2023
- Published online by Cambridge University Press: 29 September 2023, p. s24

- Article
- Open access

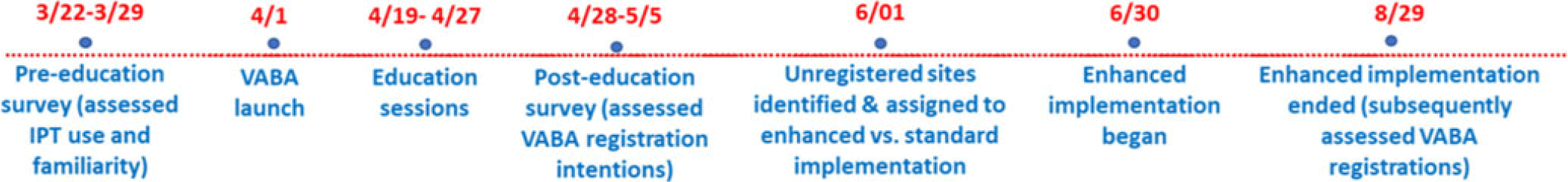

Increasing Registration for a VA Multidrug-Resistant Organism Alert Tool

- Journal: Antimicrobial Stewardship & Healthcare Epidemiology / Volume 3 / Issue S2 / June 2023
- Published online by Cambridge University Press: 29 September 2023, pp. s124-s125

- Article
- Open access

Dense pasts: settlement archaeology after Fox's *The archaeology of the Cambridge region* (1923)

- Article
- Open access

Volatility, Realignment, and Electoral Shocks: Brexit and the UK General Election of 2019

- Journal: PS: Political Science & Politics / Volume 56 / Issue 4 / October 2023
- Published online by Cambridge University Press: 10 August 2023, pp. 537-545
- Print publication: October 2023

- Article
- Open access

Incidence and risk factors for clinically confirmed secondary bacterial infections in patients hospitalized for coronavirus disease 2019 (COVID-19)

- Journal: Infection Control & Hospital Epidemiology / Volume 44 / Issue 10 / October 2023
- Published online by Cambridge University Press: 15 May 2023, pp. 1650-1656
- Print publication: October 2023

- Article
- Open access

No effects of synchronicity in online social dilemma experiments: A registered report

- Journal: Judgment and Decision Making / Volume 16 / Issue 4 / July 2021
- Published online by Cambridge University Press: 01 January 2023, pp. 823-843

- Article
- Open access

Chapter 5 - Financing Health Care

- from Section 1 - Analyzing Health Systems: Concepts, Components, Performance
- Book: Making Health Systems Work in Low and Middle Income Countries
- Published online: 08 December 2022
- Print publication: 29 December 2022, pp. 67-82

- Chapter

The Evolutionary Map of the Universe Pilot Survey – ADDENDUM

- Journal: Publications of the Astronomical Society of Australia / Volume 39 / 2022
- Published online by Cambridge University Press: 02 November 2022, e055

- Article

Examining the Scope of Nuclear Weapons-Related Activities Covered under the Environmental Remediation Obligation of the Treaty on the Prohibition of Nuclear Weapons

- Journal: Asian Journal of International Law / Volume 13 / Issue 2 / July 2023
- Published online by Cambridge University Press: 22 September 2022, pp. 365-390
- Print publication: July 2023

- Article

A Three-Dimensional Reconstruction Algorithm for Scanning Transmission Electron Microscopy Data from a Single Sample Orientation

- Journal: Microscopy and Microanalysis / Volume 28 / Issue 5 / October 2022
- Published online by Cambridge University Press: 24 June 2022, pp. 1632-1640
- Print publication: October 2022

- Article

Increased carbapenemase testing following implementation of national VA guidelines for carbapenem-resistant Enterobacterales (CRE)

- Journal: Antimicrobial Stewardship & Healthcare Epidemiology / Volume 2 / Issue 1 / 2022
- Published online by Cambridge University Press: 02 June 2022, e88

- Article
- Open access

Susceptibility results discrepancy analysis between NHSN Antibiotic Resistance (AR) Option and laboratory instrument data

- Journal: Antimicrobial Stewardship & Healthcare Epidemiology / Volume 2 / Issue S1 / July 2022
- Published online by Cambridge University Press: 16 May 2022, p. s66

- Article
- Open access

Effect of the COVID-19 pandemic on Tennessee hospital antibiotic use

- Journal: Antimicrobial Stewardship & Healthcare Epidemiology / Volume 2 / Issue S1 / July 2022
- Published online by Cambridge University Press: 16 May 2022, p. s20

- Article
- Open access

Determining the effect of COVID-19 on antibiotic use in long-term care facilities across Tennessee

- Journal: Antimicrobial Stewardship & Healthcare Epidemiology / Volume 2 / Issue S1 / July 2022
- Published online by Cambridge University Press: 16 May 2022, pp. s21-s22

- Article
- Open access

Effects of antibiotic suppression on three healthcare systems’ National Healthcare Safety Network Antibiotic Resistance Option data

- Journal: Antimicrobial Stewardship & Healthcare Epidemiology / Volume 1 / Issue 1 / 2021
- Published online by Cambridge University Press: 10 November 2021, e47

- Article
- Open access