
4 Evaluating Plasma GFAP for the Detection of Alzheimer’s Disease Dementia

- Madeline Ally, Henrik Zetterberg, Kaj Blennow, Nicholas J. Ashton, Thomas K. Karikari, Hugo Aparicio, Michael A. Sugarman, Brandon Frank, Yorghos Tripodis, Ann C. McKee, Thor D. Stein, Brett Martin, Joseph N. Palmisano, Eric G. Steinberg, Irene Simkina, Lindsay Farrer, Gyungah Jun, Katherine W. Turk, Andrew E. Budson, Maureen K. O’Connor, Rhoda Au, Wei Qiao Qiu, Lee E. Goldstein, Ronald Killiany, Neil W. Kowall, Robert A. Stern, Jesse Mez, Michael L. Alosco

- Journal: Journal of the International Neuropsychological Society / Volume 29 / Issue s1 / November 2023

- Published online by Cambridge University Press: 21 December 2023, pp. 408-409

- Article

Objective:

Blood-based biomarkers represent a scalable and accessible approach for the detection and monitoring of Alzheimer’s disease (AD). Plasma phosphorylated tau (p-tau) and neurofilament light (NfL) are validated biomarkers for the detection of tau and neurodegenerative brain changes in AD, respectively. There is now an emphasis on expanding beyond these markers to detect and provide insight into the pathophysiological processes of AD. To this end, a reactive astrocytic marker, namely plasma glial fibrillary acidic protein (GFAP), has been of interest. Yet, little is known about the relationship between plasma GFAP and AD. Here, we examined the association between plasma GFAP, diagnostic status, and neuropsychological test performance. Diagnostic accuracy of plasma GFAP was compared with plasma measures of p-tau181 and NfL.

Participants and Methods:

This sample included 567 participants from the Boston University (BU) Alzheimer’s Disease Research Center (ADRC) Longitudinal Clinical Core Registry, including individuals with normal cognition (n=234), mild cognitive impairment (MCI) (n=180), and AD dementia (n=153). The sample included all participants who had a blood draw. Participants completed a comprehensive neuropsychological battery (sample sizes across tests varied due to missingness). Diagnoses were adjudicated during multidisciplinary diagnostic consensus conferences. Plasma samples were analyzed using the Simoa platform. Binary logistic regression analyses tested the association between GFAP levels and diagnostic status (i.e., cognitively impaired due to AD versus unimpaired), controlling for age, sex, race, education, and APOE e4 status. Area under the curve (AUC) statistics from receiver operating characteristics (ROC) using predicted probabilities from binary logistic regression examined the ability of plasma GFAP to discriminate diagnostic groups compared with plasma p-tau181 and NfL. Linear regression models tested the association between plasma GFAP and neuropsychological test performance, accounting for the above covariates.

Results:

The mean (SD) age of the sample was 74.34 (7.54), 319 (56.3%) were female, 75 (13.2%) were Black, and 223 (39.3%) were APOE e4 carriers. Higher GFAP concentrations were associated with increased odds for having cognitive impairment (GFAP z-score transformed: OR=2.233, 95% CI [1.609, 3.099], p<0.001; non-z-transformed: OR=1.004, 95% CI [1.002, 1.006], p<0.001). ROC analyses, comprising GFAP and the above covariates, showed plasma GFAP discriminated the cognitively impaired from unimpaired (AUC=0.75) and was similar, but slightly superior, to plasma p-tau181 (AUC=0.74) and plasma NfL (AUC=0.74). A joint panel of the plasma markers had the greatest discrimination accuracy (AUC=0.76). Linear regression analyses showed that higher GFAP levels were associated with worse performance on neuropsychological tests assessing global cognition, attention, executive functioning, episodic memory, and language abilities (ps<0.001) as well as higher CDR Sum of Boxes (p<0.001).

Conclusions:

Higher plasma GFAP levels differentiated participants with cognitive impairment from those with normal cognition and were associated with worse performance on all neuropsychological tests assessed. GFAP had similar accuracy in detecting those with cognitive impairment compared with p-tau181 and NfL; however, a panel of all three biomarkers was optimal. These results support the utility of plasma GFAP in AD detection and suggest the pathological processes it represents might play an integral role in the pathogenesis of AD.
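As a reader-side consistency check on the odds ratio reported in this abstract, the standard error of the log odds ratio can be recovered from its 95% confidence interval. The sketch below assumes a Wald-type interval (estimate plus or minus about 1.96 standard errors on the log-odds scale); the abstract does not state how the interval was computed, and the function name `wald_summary` is purely illustrative.

```python
import math
from statistics import NormalDist


def wald_summary(odds_ratio, ci_low, ci_high, level=0.95):
    """Recover log-OR, SE, z, and two-sided p from an OR and its CI.

    Assumes a symmetric Wald interval on the log-odds scale
    (an assumption of this sketch, not stated in the abstract).
    """
    z_crit = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for 95%
    log_or = math.log(odds_ratio)
    se = (math.log(ci_high) - math.log(ci_low)) / (2 * z_crit)
    z = log_or / se
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    return {"log_or": log_or, "se": se, "z": z, "p": p}


# z-transformed GFAP estimate as reported in the Results paragraph
summary = wald_summary(2.233, 1.609, 3.099)
print(summary)
```

Under that assumption, the implied two-sided p-value falls below 0.001, in line with the reported p<0.001.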

6 Association Between American Football Play and Parkinson's Disease: Analysis of the Fox Insight Data Set

- Hannah Bruce, Yorghos Tripodis, Michael McClean, Monica Korell, Caroline M Tanner, Brittany Contreras, Joshua Gottesman, Leslie Kirsch, Yasir Karim, Brett Martin, Joseph Palmisano, Thor D Stein, Jesse Mez, Robert A Stern, Charles H Adler, Chris Nowinski, Ann C McKee, Michael L Alosco

- Journal: Journal of the International Neuropsychological Society / Volume 29 / Issue s1 / November 2023

- Published online by Cambridge University Press: 21 December 2023, pp. 415-416

- Article

Objective:

Parkinsonism and Parkinson's disease (PD) have been described as consequences of repetitive head impacts (RHI) from boxing since 1928. Autopsy studies have shown that RHI from other contact sports can also increase risk for neurodegenerative diseases, including chronic traumatic encephalopathy (CTE) and Lewy bodies. In vivo research on the relationship between American football play and PD is scarce, with small samples and equivocal findings. This study leveraged the Fox Insight study to evaluate the association between American football and parkinsonism and/or PD diagnosis and related clinical outcomes.

Participants and Methods:

Fox Insight is an online study of people with and without PD who are 18+ years (>50,000 enrolled). Participants complete online questionnaires on motor function, cognitive function, and general health behaviors. Participants self-reported whether they "currently have a diagnosis of Parkinson's disease, or parkinsonism, by a physician or other health care professional." In November 2020, the Boston University Head Impact Exposure Assessment was launched in Fox Insight for large-scale data collection on exposure to RHI from contact sports and other sources. Data used in this abstract were obtained from the Fox Insight database https://foxinsight-info.michaeljfox.org/insight/explore/insight.jsp on 01/06/2022. The sample includes 2018 men who endorsed playing an organized sport. Because only 1.6% of football players were women, analyses are limited to men. Responses to questions regarding history of participation in organized football were examined. Other contact and/or non-contact sports served as the referent group. Outcomes included PD status (absence/presence of parkinsonism or PD) and Penn Parkinson's Daily Activities Questionnaire-15 (PDAQ-15) for assessment of cognitive symptoms. Binary logistic regression tested associations between history and years of football play with PD status, controlling for age, education, current heart disease or diabetes, and family history of PD. Linear regressions, controlling for these variables, were used for the PDAQ-15.

Results:

Of the 2018 men (mean age=67.67, SD=9.84; 10 [0.5%] Black), 788 (39%) played football (mean years of play=4.29, SD=2.88), including 122 (16.3%) who played youth football, 494 (66.0%) who played high school football, 128 (17.1%) who played college football, and 5 (0.7%) who played at the semi-professional or professional level. A total of 1738 (86.1%) reported being diagnosed with parkinsonism/PD, and 707 of these were football players (40.7%). History of playing any level of football was associated with increased odds of having a reported parkinsonism or PD diagnosis (OR=1.52, 95% CI=1.14-2.03, p=0.004). The OR remained similar among those aged <69 (sample median age) (OR=1.45, 95% CI=0.97-2.17, p=0.07) and 69+ (OR=1.45, 95% CI=0.95-2.22, p=0.09). Among the football players, there was not a significant association between years of play and PD status (OR=1.09, 95% CI=1.00-1.20, p=0.063). History of football play was not associated with PDAQ-15 scores (n=1980) (beta=-0.78, 95% CI=-1.59-0.03, p=0.059) among the entire sample.

Conclusions:

Among 2018 men from a data set enriched for PD, playing organized football was associated with increased odds of having a reported parkinsonism/PD diagnosis. Next steps include examination of the contribution of traumatic brain injury and other sources of RHI (e.g., soccer, military service).
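The counts reported in this abstract are enough to reconstruct an unadjusted 2x2 table, which gives a sense of how the crude association compares with the adjusted OR of 1.52. The sketch below is a reader's illustration only: it computes a crude odds ratio with no covariate adjustment, so it is not the authors' model, and the function name is hypothetical.

```python
def crude_odds_ratio(exposed_cases, exposed_total, all_cases, all_total):
    """Unadjusted odds ratio from marginal counts of a 2x2 table."""
    exposed_noncases = exposed_total - exposed_cases
    unexposed_cases = all_cases - exposed_cases
    unexposed_noncases = (all_total - exposed_total) - unexposed_cases
    odds_exposed = exposed_cases / exposed_noncases
    odds_unexposed = unexposed_cases / unexposed_noncases
    return odds_exposed / odds_unexposed


# Counts taken from the Results paragraph:
# 788 football players, 707 of them with parkinsonism/PD;
# 2018 men overall, 1738 of them with parkinsonism/PD.
crude_or = crude_odds_ratio(707, 788, 1738, 2018)
print(f"crude OR = {crude_or:.2f}")
```

The crude value comes out at about 1.68, somewhat higher than the adjusted estimate, which is consistent with the covariates (age, education, comorbidities, family history) accounting for part of the unadjusted difference.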

64 Neuroimaging Evidence of Neurodegenerative Disease in Former Professional American Football Players Who “Fail” Validity Testing: A Case Series

- Ranjani Shankar, Julia Culhane, Leonardo Iaccarino, Chris Nowinski, Nidhi Mundada, Karen Smith, Jeremy Tanner, Charles Windon, Yorghos Tripodis, Gustavo Mercier, Thor D Stein, Anne C McKee, Robert A Stern, Neil Kowall, Bruce L Miller, Jesse Mez, Ron Killiany, Gil D Rabinovici, Michael L Alosco, Breton M Asken

- Journal: Journal of the International Neuropsychological Society / Volume 29 / Issue s1 / November 2023

- Published online by Cambridge University Press: 21 December 2023, pp. 574-575

- Article

Objective:

Former professional American football players have a high relative risk for neurodegenerative diseases like chronic traumatic encephalopathy (CTE). Interpreting low cognitive test scores in this population is occasionally complicated by performance on validity testing. Neuroimaging biomarkers may help inform whether a neurodegenerative disease is present in these situations. We report three cases of retired professional American football players who completed comprehensive neuropsychological testing, but “failed” performance validity tests, and underwent multimodal neuroimaging (structural MRI, Aβ-PET, and tau-PET).

Participants and Methods:

Three cases were identified from the Focused Neuroimaging for the Neurodegenerative Disease Chronic Traumatic Encephalopathy (FIND-CTE) study, an ongoing multimodal imaging study of retired National Football League players with complaints of progressive cognitive decline conducted at Boston University and the UCSF Memory and Aging Center. Participants were relatively young (age range 55-65), had 16 or more years of education, and two identified as Black/African American. Raw neuropsychological test scores were converted to demographically-adjusted z-scores. Testing included standalone (Test of Memory Malingering; TOMM) and embedded (reliable digit span, RDS) performance validity measures. Validity cutoffs were TOMM Trial 2 < 45 and RDS < 7. Structural MRIs were interpreted by trained neurologists. Aβ-PET with Florbetapir was used to quantify cortical Aβ deposition as global Centiloids (0 = mean cortical signal for a young, cognitively normal, Aβ negative individual in their 20s, 100 = mean cortical signal for a patient with mild-to-moderate Alzheimer’s disease dementia). Tau-PET was performed with MK-6240 and first quantified as a standardized uptake value ratio (SUVR) map. The SUVR map was then converted to a w-score map representing signal intensity relative to a sample of demographically-matched healthy controls.

Results:

All performed in the average range on a word reading-based estimate of premorbid intellect. Contribution of Alzheimer’s disease pathology was ruled out in each case based on Centiloids quantifications < 0. All cases scored below cutoff on TOMM Trial 2 (Case #1=43, Case #2=42, Case #3=19) and Case #3 also scored well below RDS cutoff (2). Each case had multiple cognitive scores below expectations (z < -2.0), most consistently in memory, executive function, and processing speed domains. For Case #1, MRI revealed mild atrophy in dorsal fronto-parietal and medial temporal lobe (MTL) regions and mild periventricular white matter disease. Tau-PET showed MTL tau burden modestly elevated relative to controls (regional w-score=0.59, 72nd percentile). For Case #2, MRI revealed cortical atrophy, mild hippocampal atrophy, and a microhemorrhage, with no evidence of meaningful tau-PET signal. For Case #3, MRI showed cortical atrophy and severe white matter disease, and tau-PET revealed significantly elevated MTL tau burden relative to controls (w-score=1.90, 97th percentile) as well as focal high signal in the dorsal frontal lobe (overall frontal region w-score=0.64, 74th percentile).

Conclusions:

Low scores on performance validity tests complicate the interpretation of the severity of cognitive deficits, but do not negate the presence of true cognitive impairment or an underlying neurodegenerative disease. In the rapidly developing era of biomarkers, neuroimaging tools can supplement neuropsychological testing to help inform whether cognitive or behavioral changes are related to a neurodegenerative disease.
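The w-scores and percentiles reported for the tau-PET results can be related to each other if a w-score is read as a standard-normal deviate relative to the control sample. That reading is an assumption of this sketch (the abstract does not spell out the conversion), and the helper name is illustrative.

```python
from statistics import NormalDist


def w_score_to_percentile(w):
    """Percentile of a w-score under a standard normal reference distribution."""
    return 100 * NormalDist().cdf(w)


# w-scores quoted in the Results paragraph
reported = [
    ("Case #1 MTL", 0.59),
    ("Case #3 MTL", 1.90),
    ("Case #3 frontal", 0.64),
]
for label, w in reported:
    print(f"{label}: w={w:.2f} -> percentile {w_score_to_percentile(w):.0f}")
```

Under this assumption the three values map to roughly the 72nd, 97th, and 74th percentiles, matching the figures given in the abstract.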

5 Antemortem Plasma GFAP Predicts Alzheimer’s Disease Neuropathological Changes

- Madeline Ally, Henrik Zetterberg, Kaj Blennow, Nicholas J. Ashton, Thomas K. Karikari, Hugo Aparicio, Michael A. Sugarman, Brandon Frank, Yorghos Tripodis, Brett Martin, Joseph N. Palmisano, Eric G. Steinberg, Irene Simkina, Lindsay Farrer, Gyungah Jun, Katherine W. Turk, Andrew E. Budson, Maureen K. O’Connor, Rhoda Au, Wei Qiao Qiu, Lee E. Goldstein, Ronald Killiany, Neil W. Kowall, Robert A. Stern, Jesse Mez, Bertran R. Huber, Ann C. McKee, Thor D. Stein, Michael L. Alosco

- Journal: Journal of the International Neuropsychological Society / Volume 29 / Issue s1 / November 2023

- Published online by Cambridge University Press: 21 December 2023, pp. 409-410

- Article

Objective:

Blood-based biomarkers offer a more feasible approach to Alzheimer’s disease (AD) detection, management, and study of disease mechanisms than current in vivo measures. Given their novelty, these plasma biomarkers must be assessed against postmortem neuropathological outcomes for validation. Research has shown utility in plasma markers of the proposed AT(N) framework; however, recent studies have stressed the importance of expanding this framework to include other pathways. There are promising data supporting the usefulness of plasma glial fibrillary acidic protein (GFAP) in AD, but GFAP-to-autopsy studies are limited. Here, we tested the association between plasma GFAP and AD-related neuropathological outcomes in participants from the Boston University (BU) Alzheimer’s Disease Research Center (ADRC).

Participants and Methods:

This sample included 45 participants from the BU ADRC who had a plasma sample within 5 years of death and donated their brain for neuropathological examination. Most recent plasma samples were analyzed using the Simoa platform. Neuropathological examinations followed the National Alzheimer’s Coordinating Center procedures and diagnostic criteria. The NIA-Reagan Institute criteria were used for the neuropathological diagnosis of AD. Measures of GFAP were log-transformed. Binary logistic regression analyses tested the association between GFAP and autopsy-confirmed AD status, as well as with semi-quantitative ratings of regional atrophy (none/mild versus moderate/severe). Ordinal logistic regression analyses tested the association between plasma GFAP and Braak stage and CERAD neuritic plaque score. Area under the curve (AUC) statistics from receiver operating characteristics (ROC) using predicted probabilities from binary logistic regression examined the ability of plasma GFAP to discriminate autopsy-confirmed AD status. All analyses controlled for sex, age at death, years between last blood draw and death, and APOE e4 status.

Results:

Of the 45 brain donors, 29 (64.4%) had autopsy-confirmed AD. The mean (SD) age of the sample at the time of blood draw was 80.76 (8.58) and there were 2.80 (1.16) years between the last blood draw and death. The sample included 20 (44.4%) females, 41 (91.1%) were White, and 20 (44.4%) were APOE e4 carriers. Higher GFAP concentrations were associated with increased odds for having autopsy-confirmed AD (OR=14.12, 95% CI [2.00, 99.88], p=0.008). ROC analysis showed plasma GFAP accurately discriminated those with and without autopsy-confirmed AD on its own (AUC=0.75), and discrimination strengthened as the above covariates were added to the model (AUC=0.81). Increases in GFAP levels corresponded to increases in Braak stage (OR=2.39, 95% CI [0.71, 4.07], p=0.005), but not CERAD ratings (OR=1.24, 95% CI [0.004, 2.49], p=0.051). Higher GFAP levels were associated with greater temporal lobe atrophy (OR=10.27, 95% CI [1.53, 69.15], p=0.017), but this was not observed in any other region.

Conclusions:

The current results show that antemortem plasma GFAP is associated with non-specific AD neuropathological changes at autopsy. Plasma GFAP could be a useful and practical biomarker for assisting in the detection of AD-related changes, as well as for study of disease mechanisms.

Participation in the Georgia Food for Health programme and CVD risk factors: a longitudinal observational study

- Miranda Alonna Cook, Kathy Taylor, Tammy Reasoner, Sarah Moore, Katie Mooney, Cecilia Tran, Carli Barbo, Stacie Schmidt, Aryeh D Stein, Amy Webb Girard

- Journal: Public Health Nutrition / Volume 26 / Issue 11 / November 2023

- Published online by Cambridge University Press: 07 August 2023, pp. 2470-2479

- Article

- Open access

Objective:

To assess the relationship between programme attendance in a produce prescription (PRx) programme and changes in cardiovascular risk factors.

Design:

The Georgia Food for Health (GF4H) programme provided six monthly nutrition education sessions, six weekly cooking classes and weekly produce vouchers. Participants became programme graduates by attending at least 4 of the 6 weekly cooking classes and at least 4 of the 6 monthly education sessions. We used a longitudinal, single-arm approach to estimate the association between the number of monthly programme visits attended and changes in health indicators.

Setting:

GF4H was implemented in partnership with a large safety-net health system in Atlanta, GA.

Participants:

Three hundred thirty-one participants living with or at-risk of chronic disease and food insecurity were recruited from primary care clinics. Over three years, 282 participants graduated from the programme.

Results:

After adjusting for programme site, year, participant sex, age, race and ethnicity, Supplemental Nutrition Assistance Program participation and household size, we estimated that each additional programme visit attended beyond four visits was associated with a 0·06 kg/m² reduction in BMI (95 % CI –0·12, –0·01; P = 0·02), a 0·37 inch reduction in waist circumference (95 % CI –0·48, –0·27; P < 0·001), a 1·01 mmHg reduction in systolic blood pressure (95 % CI –1·45, –0·57; P < 0·001) and a 0·43 mmHg reduction in diastolic blood pressure (95 % CI –0·69, –0·17; P = 0·001).

Conclusions:

Each additional cooking and nutrition education visit attended beyond the graduation threshold was associated with modest but significant improvements in CVD risk factors, suggesting that increased engagement in educational components of a PRx programme improves health outcomes.

The use of a Subjective wellbeing scale as predictor of adherence to neuroleptic treatment to determine poor prognostic factor in African population with Schizophrenia

- J. J. Boshe, D. Stein, M. Campbell

- Journal: European Psychiatry / Volume 66 / Issue S1 / March 2023

- Published online by Cambridge University Press: 19 July 2023, p. S156

- Article

- Open access

Introduction

Subjective well-being when on neuroleptic treatment (SWBN) has been established as a good predictor of adherence, early response and prognosis in patients with schizophrenia. The 20-item subjective well-being under neuroleptic treatment scale (SWN-K 20) is a self-rating scale that has been validated to measure SWBN. However, the SWN-K 20 has not previously been used to explore psychosocial and clinical factors influencing a low SWN-K 20 score in an African population. This study uses the SWN-K 20 scale among Xhosa-speaking African patients with schizophrenia to determine factors associated with SWBN in this population.

Objectives

To investigate and identify demographic and clinical predictors of subjective well-being in a sample of Xhosa people with schizophrenia on neuroleptic treatment.

Methods

As part of a large genetic study, 244 study participants with a confirmed diagnosis of schizophrenia completed the translated SWN-K 20 scale. Internal consistency analysis was performed, and convergent analysis and exploratory analysis were conducted using Principal Component Analysis (PCA). Linear regression methods were used to determine predictors of SWBN in the sample population.

Results

When translated into isiXhosa, the subscales of the SWN-K 20 on their own were less reliable than the scale in its entirety (internal consistency of 0.47-0.59 for the subscales vs 0.86 for the full scale). The subscales were therefore not considered meaningful for measuring specific constructs, but the full scale could be used to measure a single construct of general wellbeing. The validity of the SWN-K 20 was further supported by a moderate correlation with Global Assessment of Functioning (GAF) scores (0.44). Overall subjective well-being score correlated significantly with higher education level, increased illness severity, and GAF scores.

Conclusions

Patients’ perception of well-being while on neuroleptic treatment is an essential area of focus when aiming to improve patient-centred treatment, compliance and overall treatment outcome. Treating individuals with serious mental illness (SMI) is difficult and is made more complex when patients’ treatment experiences and expectations are not elicited. Having a self-reported measurement like the SWN-K 20 available in a validated Xhosa language version provides helpful, possibly broad insights into the subjective well-being experiences of this patient group. Future studies should explore specific symptom domains that are associated with a change in subjective wellbeing instead of general illness severity.

Disclosure of Interest

None Declared

Random variation drives a critical bias in the comparison of healthcare-associated infections

- Kenneth J. Locey, Thomas A. Webb, Robert A. Weinstein, Bala Hota, Brian D. Stein

- Journal: Infection Control & Hospital Epidemiology / Volume 44 / Issue 9 / September 2023

- Published online by Cambridge University Press: 10 March 2023, pp. 1396-1402

- Print publication: September 2023

- Article

Objective:

To evaluate random effects of volume (patient days or device days) on healthcare-associated infections (HAIs) and the standardized infection ratio (SIR) used to compare hospitals.

Design:

A longitudinal comparison between publicly reported quarterly data (2014–2020) and volume-based random sampling using 4 HAI types: central-line–associated bloodstream infections, catheter-associated urinary tract infections, Clostridioides difficile infections, and methicillin-resistant Staphylococcus aureus infections.

Methods:

Using 4,268 hospitals with reported SIRs, we examined relationships of SIRs to volume and compared distributions of SIRs and numbers of reported HAIs to the outcomes of simulated random sampling. We incorporated random expectations into SIR calculations to produce a standardized infection score (SIS).

Results:

Among hospitals with volumes less than the median, 20%–33% had SIRs of 0, compared to 0.3%–5% for hospitals with volumes higher than the median. Distributions of SIRs were 86%–92% similar to those based on random sampling. Random expectations explained 54%–84% of variation in numbers of HAIs. The use of SIRs led hundreds of hospitals with more infections than either expected at random or predicted by risk-adjusted models to rank better than other hospitals. The SIS mitigated this effect and allowed hospitals of disparate volumes to achieve better scores while decreasing the number of hospitals tied for the best score.

Conclusions:

SIRs and numbers of HAIs are strongly influenced by random effects of volume. Mitigating these effects drastically alters rankings for HAI types and may further alter penalty assignments in programs that aim to reduce HAIs and improve quality of care.
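The volume effect described in this abstract can be illustrated with a small calculation. The SIR is the ratio of observed to predicted infections, so a hospital with zero observed infections has an SIR of 0. The sketch below assumes observed counts follow a Poisson distribution with mean equal to the predicted count; that is a simplifying assumption for illustration, not the authors' sampling procedure, and the predicted counts used are hypothetical.

```python
import math


def prob_sir_zero(predicted_infections):
    """P(observed == 0), and hence SIR == 0, under a Poisson model."""
    return math.exp(-predicted_infections)


# Hypothetical predicted infections per reporting period,
# ordered from a low-volume to a high-volume hospital.
for predicted in (0.25, 1.0, 5.0):
    print(f"predicted={predicted:>4}: P(SIR=0)={prob_sir_zero(predicted):.3f}")
```

Under this model, a low-volume hospital with a small predicted count reaches an SIR of 0 by chance far more often than a high-volume one, which is the direction of the difference reported in the Results.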

The thalamus and its subregions – a gateway to obsessive-compulsive disorder

- C. Weeland, C. Vriend, Y. Van Der Werf, C. Huyser, M. Hillegers, H. Tiemeier, T. White, N. De Joode, P. Thompson, D. Stein, O. Van Den Heuvel, S. Kasprzak

- Journal: European Psychiatry / Volume 65 / Issue S1 / June 2022

- Published online by Cambridge University Press: 01 September 2022, pp. S77-S78

- Article

- Open access

Introduction

Higher thalamic volume has been found in children with obsessive-compulsive disorder (OCD) and children with clinical-level symptoms within the general population (Boedhoe et al. 2017, Weeland et al. 2021a). Functionally distinct thalamic nuclei are an integral part of OCD-relevant brain circuitry.

Objectives

We aimed to study thalamic nuclei volume in relation to subclinical and clinical OCD across different age ranges. Understanding the role of thalamic nuclei and their associated circuits in pediatric OCD could lead towards treatment strategies specifically targeting these circuits.

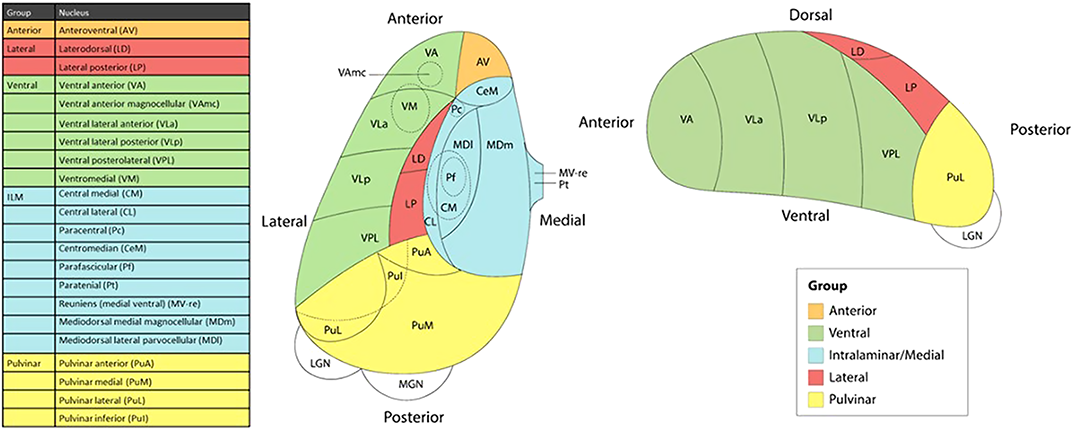

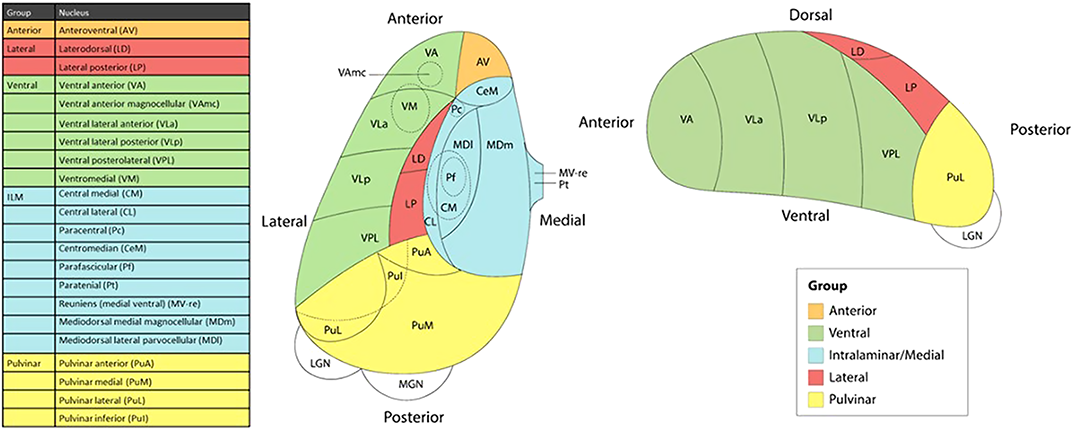

Methods

We studied the relationship between thalamic nuclei and obsessive-compulsive symptoms (OCS) in a large sample of school-aged children from the Generation R Study (N = 2500) (Weeland et al. 2021b). Using the data from the ENIGMA-OCD working group we conducted mega-analyses to study thalamic subregional volume in OCD across the lifespan in 2,649 OCD patients and 2,774 healthy controls across 29 sites (Weeland et al. 2021c). Thalamic nuclei were grouped into five subregions: anterior, ventral, intralaminar/medial, lateral and pulvinar (Figure 1).

Results

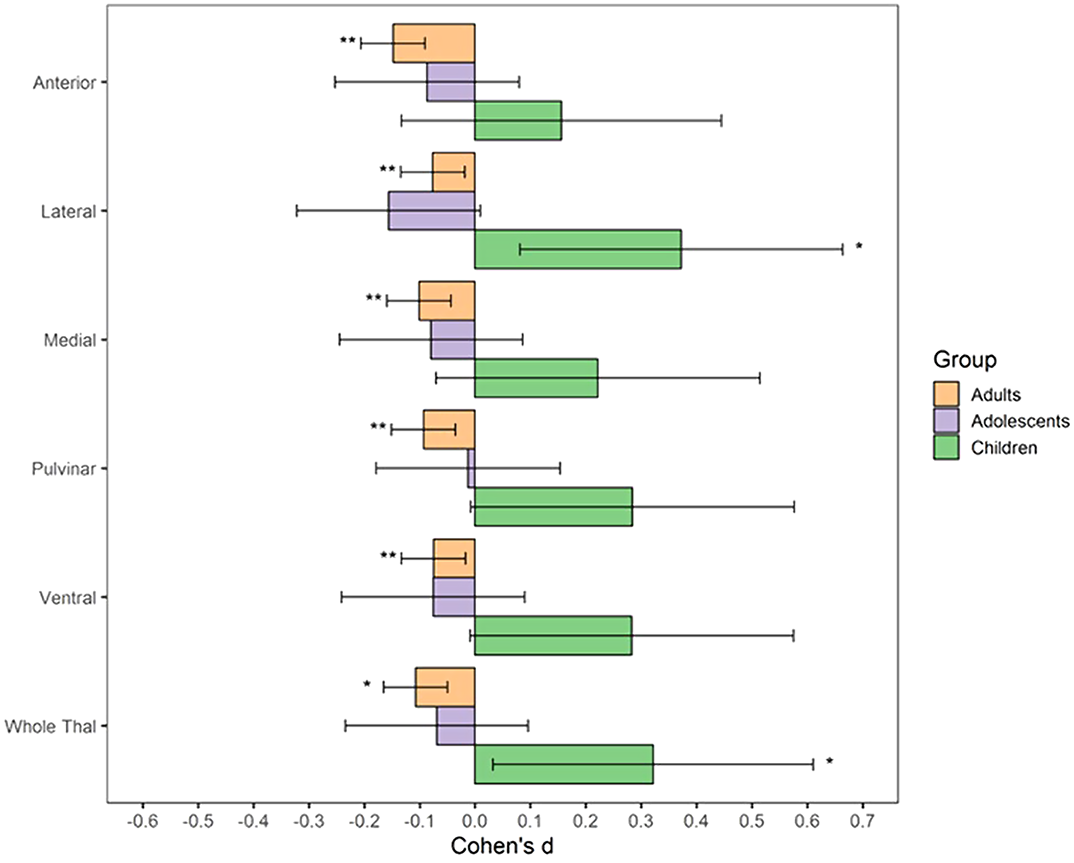

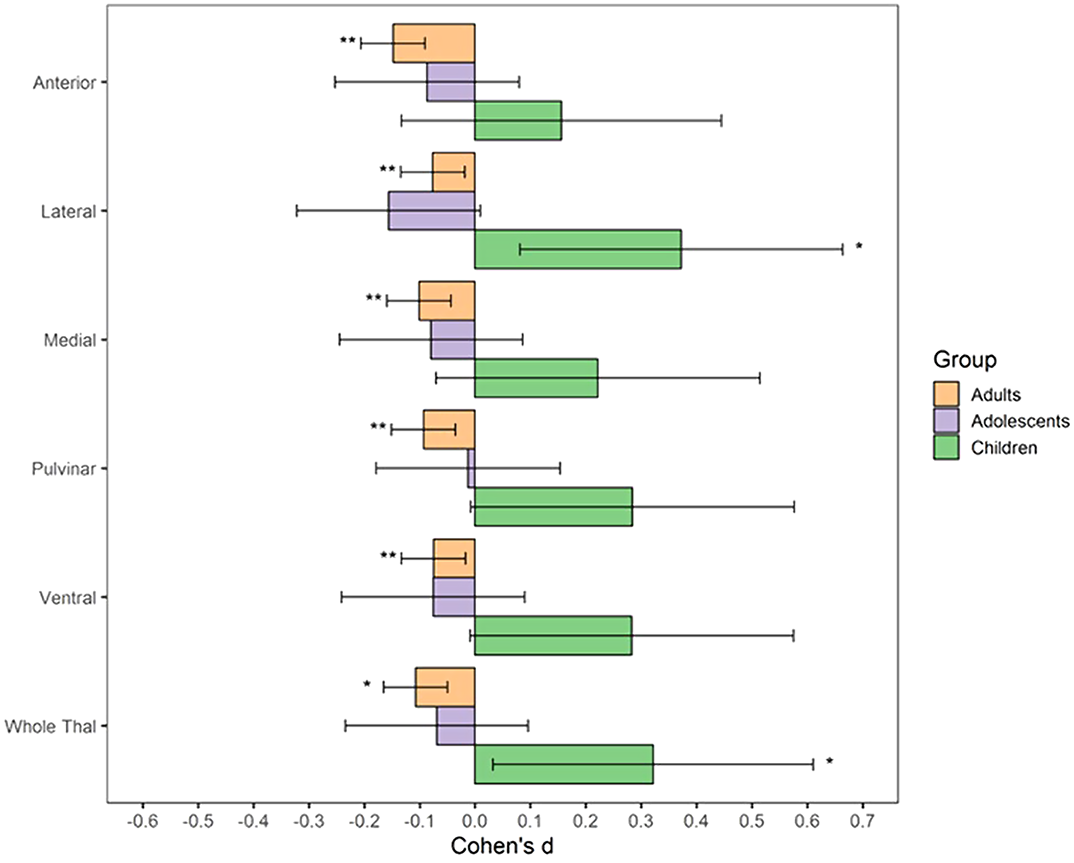

Children with subclinical OCD and children with clinical OCD both show increased volume across multiple thalamic subregions compared with controls. Adult OCD patients have decreased volume across all subregions (Figure 2), which was mostly driven by medicated and adult-onset patients.

Conclusions

Our results suggest that OCD-related thalamic volume differences are global and not driven by particular subregions, and that the direction of effects is driven by both age and medication status.

Disclosure

No significant relationships.

Risk of bacterial bloodstream infection does not vary by central-line type during neutropenic periods in pediatric acute myeloid leukemia

- Caitlin W. Elgarten, William R. Otto, Luke Shenton, Madison T. Stein, Joseph Horowitz, Catherine Aftandilian, Staci D. Arnold, Kira O. Bona, Emi Caywood, Anderson B. Collier, M. Monica Gramatges, Meret Henry, Craig Lotterman, Kelly Maloney, Arunkumar J. Modi, Amir Mian, Rajen Mody, Elaine Morgan, Elizabeth A. Raetz, Anupam Verma, Naomi Winick, Jennifer J. Wilkes, Jennifer C. Yu, Richard Aplenc, Brian T. Fisher, Kelly D. Getz

- Journal: Infection Control & Hospital Epidemiology / Volume 44 / Issue 2 / February 2023

- Published online by Cambridge University Press: 25 April 2022, pp. 222-229

- Print publication: February 2023

- Article

Background:

Bloodstream infections (BSIs) are a frequent cause of morbidity in patients with acute myeloid leukemia (AML), due in part to the presence of central venous access devices (CVADs) required to deliver therapy.

Objective:

To determine the differential risk of bacterial BSI during neutropenia by CVAD type in pediatric patients with AML.

Methods:

We performed a secondary analysis in a cohort of 560 pediatric patients (1,828 chemotherapy courses) receiving frontline AML chemotherapy at 17 US centers. The exposure was CVAD type at course start: tunneled externalized catheter (TEC), peripherally inserted central catheter (PICC), or totally implanted catheter (TIC). The primary outcome was course-specific incident bacterial BSI; secondary outcomes included mucosal barrier injury (MBI)-BSI and non-MBI BSI. Poisson regression was used to compute adjusted rate ratios comparing BSI occurrence during neutropenia by line type, controlling for demographic, clinical, and hospital-level characteristics.

Results:

The rate of BSI did not differ by CVAD type: 11 BSIs per 1,000 neutropenic days for TECs, 13.7 for PICCs, and 10.7 for TICs. After adjustment, there was no statistically significant association between CVAD type and BSI: PICC incident rate ratio [IRR] = 1.00 (95% confidence interval [CI], 0.75–1.32) and TIC IRR = 0.83 (95% CI, 0.49–1.41) compared to TEC. When MBI and non-MBI were examined separately, results were similar.

Conclusions:

In this large, multicenter cohort of pediatric AML patients, we found no difference in the rate of BSI during neutropenia by CVAD type. This may be due to a risk-profile for BSI that is unique to AML patients.
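For orientation, the crude rates quoted in this abstract can be turned into unadjusted rate ratios against the TEC reference group and set beside the adjusted IRRs of 1.00 (PICC) and 0.83 (TIC). The sketch below is a reader's arithmetic only, not the authors' Poisson regression; the dictionary and function names are illustrative.

```python
# Crude BSI rates per 1,000 neutropenic days, as quoted in the Results paragraph
rates_per_1000_days = {"TEC": 11.0, "PICC": 13.7, "TIC": 10.7}


def crude_rate_ratio(rates, group, reference="TEC"):
    """Unadjusted rate ratio of `group` versus `reference`."""
    return rates[group] / rates[reference]


for group in ("PICC", "TIC"):
    ratio = crude_rate_ratio(rates_per_1000_days, group)
    print(f"{group} vs TEC: crude rate ratio = {ratio:.2f}")
```

The crude ratios (about 1.25 for PICC and 0.97 for TIC) differ from the adjusted IRRs, which reflects the adjustment for demographic, clinical, and hospital-level characteristics described in the Methods.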

Pain perception and physiological correlates in body-focused repetitive behavior disorders

- Christine Lochner, Janine Roos, Martin Kidd, Gaironeesa Hendricks, Tara S. Peris, Emily J. Ricketts, Darin D. Dougherty, Douglas W. Woods, Nancy J. Keuthen, Dan J. Stein, Jon E. Grant, John Piacentini

- Journal: CNS Spectrums / Volume 28 / Issue 2 / April 2023

- Published online by Cambridge University Press: 22 March 2022, pp. 197-204

- Article

- Open access

Background

Behaviors typical of body-focused repetitive behavior disorders such as trichotillomania (TTM) and skin-picking disorder (SPD) are often associated with pleasure or relief, and with little or no physical pain, suggesting aberrant pain perception. Conclusive evidence about pain perception and correlates in these conditions is, however, lacking.

Methods

A multisite international study examined pain perception and its physiological correlates in adults with TTM (n = 31), SPD (n = 24), and healthy controls (HCs; n = 26). The cold pressor test was administered, and measurements of pain perception and cardiovascular parameters were taken every 15 seconds. Pain perception, latency to pain tolerance, cardiovascular parameters and associations with illness severity, and comorbid depression, as well as interaction effects (group × time interval), were investigated across groups.

Results

There were no group differences in pain ratings over time (P = .8) or latency to pain tolerance (P = .8). Illness severity was not associated with pain ratings (all P > .05). In terms of diastolic blood pressure (DBP), the main effect of group was statistically significant (P = .01), with post hoc analyses indicating higher mean DBP in TTM (95% confidence intervals [CI], 84.0-93.5) compared to SPD (95% CI, 73.5-84.2; P = .01), and HCs (95% CI, 75.6-86.0; P = .03). Pain perception did not differ between those with and those without depression (TTM: P = .2, SPD: P = .4).

Conclusion

The study findings were mostly negative, suggesting that general pain perception aberration is not involved in TTM and SPD. Other underlying drivers of hair-pulling and skin-picking behavior (eg, abnormal reward processing) should be investigated.

Childhood adversities and mental disorders in first-year college students: results from the World Mental Health International College Student Initiative

- Mathilde M. Husky, Ekaterina Sadikova, Sue Lee, Jordi Alonso, Randy P. Auerbach, Jason Bantjes, Ronny Bruffaerts, Pim Cuijpers, David D. Ebert, Raùl Gutiérrez Garcia, Penelope Hasking, Arthur Mak, Margaret McLafferty, Nancy A. Sampson, Dan J. Stein, Ronald C. Kessler, WHO WMH-ICS collaborators

- Journal:

- Psychological Medicine / Volume 53 / Issue 7 / May 2023

- Published online by Cambridge University Press:

- 11 January 2022, pp. 2963-2973

- Article

Background

This study investigates associations of several dimensions of childhood adversities (CAs) with lifetime mental disorders, 12-month disorder persistence, and impairment among incoming college students.

Methods

Data come from the World Mental Health International College Student Initiative (WMH-ICS). Web-based surveys conducted in nine countries (n = 20 427) assessed lifetime and 12-month mental disorders, 12-month role impairment, and seven types of CAs occurring before the age of 18: parental psychopathology, emotional, physical, and sexual abuse, neglect, bullying victimization, and dating violence. Poisson regressions estimated associations using three dimensions of CA exposure: type, number, and frequency.

Results

Overall, 75.8% of students reported exposure to at least one CA. In multivariate regression models, lifetime onset and 12-month mood, anxiety, and substance use disorders were all associated with either the type, number, or frequency of CAs. In contrast, none of these associations was significant when predicting disorder persistence. Of the three CA dimensions examined, only frequency was associated with severe role impairment among students with 12-month disorders. Population-attributable risk simulations suggest that 18.7–57.5% of 12-month disorders and 16.3% of severe role impairment among those with disorders were associated with these CAs.

Conclusion

CAs are associated with an elevated risk of onset and impairment among 12-month cases of diverse mental disorders but are not involved in disorder persistence. Future research on the associations of CAs with psychopathology should include fine-grained assessments of CA exposure and attempt to trace out modifiable intervention targets linked to mechanisms of associations with lifetime psychopathology and burden of 12-month mental disorders.

Effects of prior deployments and perceived resilience on anger trajectories of combat-deployed soldiers

- Laura Campbell-Sills, Jason D. Kautz, Karmel W. Choi, James A. Naifeh, Pablo A. Aliaga, Sonia Jain, Xiaoying Sun, Ronald C. Kessler, Murray B. Stein, Robert J. Ursano, Paul D. Bliese

- Journal:

- Psychological Medicine / Volume 53 / Issue 5 / April 2023

- Published online by Cambridge University Press:

- 22 November 2021, pp. 2031-2040

- Article

- Open access

Background

Problematic anger is frequently reported by soldiers who have deployed to combat zones. However, evidence is lacking with respect to how anger changes over a deployment cycle, and which factors prospectively influence change in anger among combat-deployed soldiers.

Methods

Reports of problematic anger were obtained from 7298 US Army soldiers who deployed to Afghanistan in 2012. A series of mixed-effects growth models estimated linear trajectories of anger over a period of 1–2 months before deployment to 9 months post-deployment, and evaluated the effects of pre-deployment factors (prior deployments and perceived resilience) on average levels and growth of problematic anger.

Results

A model with random intercepts and slopes provided the best fit, indicating heterogeneity in soldiers' levels and trajectories of anger. First-time deployers reported the lowest anger overall, but the most growth in anger over time. Soldiers with multiple prior deployments displayed the highest anger overall, which remained relatively stable over time. Higher pre-deployment resilience was associated with lower reports of anger, but its protective effect diminished over time. First- and second-time deployers reporting low resilience displayed different anger trajectories (stable v. decreasing, respectively).

Conclusions

Change in anger from pre- to post-deployment varies based on pre-deployment factors. The observed differences in anger trajectories suggest that efforts to detect and reduce problematic anger should be tailored for first-time v. repeat deployers. Ongoing screening is needed even for soldiers reporting high resilience before deployment, as the protective effect of pre-deployment resilience on anger erodes over time.

Neurobiology of subtypes of trichotillomania and skin picking disorder

- Jon E. Grant, Richard A. I. Bethlehem, Samuel R. Chamberlain, Tara S. Peris, Emily J. Ricketts, Joseph O’Neill, Darin D. Dougherty, Dan Stein, Christine Lochner, Douglas W. Woods, John Piacentini, Nancy J. Keuthen

- Journal:

- CNS Spectrums / Volume 28 / Issue 1 / February 2023

- Published online by Cambridge University Press:

- 03 November 2021, pp. 98-103

- Article

Background

Trichotillomania (TTM) and skin picking disorder (SPD) are common and often debilitating mental health conditions, grouped under the umbrella term of body-focused repetitive behaviors (BFRBs). Recent clinical subtyping found that there were three distinct subtypes of TTM and two of SPD. Whether these clinical subtypes map on to any unique neurobiological underpinnings, however, remains unknown.

Methods

Two hundred and fifty-one adults [193 with a BFRB (85.5% [n = 165] female) and 58 healthy controls (77.6% [n = 45] female)] were recruited from the community for a multicenter between-group comparison using structural neuroimaging. Differences in whole brain structure were compared across the subtypes of BFRBs, controlling for age, sex, scanning site, and intracranial volume.

Results

When the subtypes of TTM were compared, low-awareness hair pullers demonstrated increased cortical volume in the lateral occipital lobe relative to controls and sensory-sensitive pullers. In addition, impulsive/perfectionist hair pullers showed relatively decreased volume near the lingual gyrus of the inferior occipital–parietal lobe compared with controls.

Conclusions

These data indicate that the anatomical substrates of particular forms of BFRBs are dissociable, which may have implications for understanding clinical presentations and treatment response.

Antidepressant use in low- middle- and high-income countries: a World Mental Health Surveys report

- Alan E. Kazdin, Chi-Shin Wu, Irving Hwang, Victor Puac-Polanco, Nancy A. Sampson, Ali Al-Hamzawi, Jordi Alonso, Laura Helena Andrade, Corina Benjet, José-Miguel Caldas-de-Almeida, Giovanni de Girolamo, Peter de Jonge, Silvia Florescu, Oye Gureje, Josep M. Haro, Meredith G. Harris, Elie G. Karam, Georges Karam, Viviane Kovess-Masfety, Sing Lee, John J. McGrath, Fernando Navarro-Mateu, Daisuke Nishi, Bibilola D. Oladeji, José Posada-Villa, Dan J. Stein, T. Bedirhan Üstün, Daniel V. Vigo, Zahari Zarkov, Alan M. Zaslavsky, Ronald C. Kessler, the WHO World Mental Health Survey collaborators

- Journal:

- Psychological Medicine / Volume 53 / Issue 4 / March 2023

- Published online by Cambridge University Press:

- 23 September 2021, pp. 1583-1591

- Article

Background

The most common treatment for major depressive disorder (MDD) is antidepressant medication (ADM). Results are reported on frequency of ADM use, reasons for use, and perceived effectiveness of use in general population surveys across 20 countries.

Methods

Face-to-face interviews with community samples totaling n = 49 919 respondents in the World Health Organization (WHO) World Mental Health (WMH) Surveys asked about ADM use anytime in the prior 12 months in conjunction with validated fully structured diagnostic interviews. Treatment questions were administered independently of diagnoses and asked of all respondents.

Results

3.1% of respondents reported ADM use within the past 12 months. In high-income countries (HICs), depression (49.2%) and anxiety (36.4%) were the most common reasons for use. In low- and middle-income countries (LMICs), depression (38.4%) and sleep problems (31.9%) were the most common reasons for use. Prevalence of use was 2–4 times as high in HICs as LMICs across all examined diagnoses. Newer ADMs were proportionally used more often in HICs than LMICs. Across all conditions, ADMs were reported as very effective by 58.8% of users and somewhat effective by an additional 28.3% of users, with both proportions higher in LMICs than HICs. Neither ADM class nor reason for use was a significant predictor of perceived effectiveness.

Conclusion

ADMs are in widespread use for a variety of conditions, including but going beyond depression and anxiety. In a general population sample from multiple LMICs and HICs, ADMs were widely perceived to be either very or somewhat effective by the people who use them.

Direct maxillary irrigation therapy in non-operated chronic sinusitis: a prospective randomised controlled trial

- O Ronen, T Marshak, N Uri, M Gruber, O Haberfeld, D Paz, N Stein, R Cohen-Kerem

- Journal:

- The Journal of Laryngology & Otology / Volume 136 / Issue 3 / March 2022

- Published online by Cambridge University Press:

- 01 September 2021, pp. 229-236

- Print publication:

- March 2022

- Article

Objective

This study aimed to compare the effectiveness of pharmacological therapy with and without direct maxillary sinus saline irrigation for the management of chronic rhinosinusitis without polyps.

Methods

In this prospective randomised controlled trial, 39 non-operated patients were randomly assigned to be treated with direct maxillary sinus saline irrigation in conjunction with systemic antibiotics and topical sprays (n = 24) or with pharmacological therapy alone (n = 15). Endoscopy, Sino-Nasal Outcome Test and Lund–Mackay computed tomography scores were obtained before, six weeks after and one to two years after treatment.

Results

Post-treatment Lund–Mackay computed tomography scores were significantly improved in both cohorts, with no inter-cohort difference identified. Post-treatment nasal endoscopy scores were significantly improved in the study group but were similar to those measured in the control group. The Sino-Nasal Outcome Test-20 results showed improvement in both cohorts, with no difference between treatment arms.

Conclusion

Maxillary sinus puncture and irrigation with saline, combined with pharmacological treatment, improves endoscopic findings in patients with chronic rhinosinusitis without polyps, but has no beneficial effect on symptoms and imaging findings over conservative treatment alone.

Non-suicidal self-injury among first-year college students and its association with mental disorders: results from the World Mental Health International College Student (WMH-ICS) initiative

- Glenn Kiekens, Penelope Hasking, Ronny Bruffaerts, Jordi Alonso, Randy P. Auerbach, Jason Bantjes, Corina Benjet, Mark Boyes, Wai Tat Chiu, Laurence Claes, Pim Cuijpers, David D. Ebert, Arthur Mak, Philippe Mortier, Siobhan O'Neill, Nancy A. Sampson, Dan J. Stein, Gemma Vilagut, Matthew K. Nock, Ronald C. Kessler

- Journal:

- Psychological Medicine / Volume 53 / Issue 3 / February 2023

- Published online by Cambridge University Press:

- 18 June 2021, pp. 875-886

- Article

Background

Although non-suicidal self-injury (NSSI) is an issue of major concern to colleges worldwide, we lack detailed information about the epidemiology of NSSI among college students. The objectives of this study were to present the first cross-national data on the prevalence of NSSI and NSSI disorder among first-year college students and its association with mental disorders.

Methods

Data come from a survey of the entering class in 24 colleges across nine countries participating in the World Mental Health International College Student (WMH-ICS) initiative assessed in web-based self-report surveys (20 842 first-year students). Using retrospective age-of-onset reports, we investigated time-ordered associations between NSSI and Diagnostic and Statistical Manual of Mental Disorders, Fourth Edition (DSM-IV) mood (major depressive and bipolar disorder), anxiety (generalized anxiety and panic disorder), and substance use disorders (alcohol and drug use disorder).

Results

NSSI lifetime and 12-month prevalence were 17.7% and 8.4%, respectively. The rate of positive screens for 12-month DSM-5 NSSI disorder was 2.3%. Of those with lifetime NSSI, 59.6% met the criteria for at least one mental disorder. Temporally primary lifetime mental disorders predicted subsequent onset of NSSI [median odds ratio (OR) 2.4], but these primary lifetime disorders did not consistently predict 12-month NSSI among respondents with lifetime NSSI. Conversely, even after controlling for pre-existing mental disorders, NSSI consistently predicted later onset of mental disorders (median OR 1.8) as well as 12-month persistence of mental disorders among students with generalized anxiety disorder (OR 1.6) and bipolar disorder (OR 4.6).

Conclusions

NSSI is common among first-year college students and is a behavioral marker of various common mental disorders.

The prevalence of mental health problems in sub-Saharan adolescents living with HIV: a systematic review

- A.S. Dessauvagie, A. Jörns-Presentati, A.-K. Napp, D.J. Stein, D. Jonker, E. Breet, W. Charles, R. L. Swart, M. Lahti, S. Suliman, R. Jansen, L.L. van den Heuvel, S. Seedat, G. Groen

- Journal:

- Global Mental Health / Volume 7 / 2020

- Published online by Cambridge University Press:

- 26 October 2020, e29

- Article

- Open access

Despite the progress made in HIV treatment and prevention, HIV remains a major cause of adolescent morbidity and mortality in sub-Saharan Africa. As perinatally infected children increasingly survive into adulthood, the quality of life and mental health of this population has increased in importance. This review provides a synthesis of the prevalence of mental health problems in this population and explores associated factors. A systematic database search (Medline, PsycINFO, Scopus) with an additional hand search was conducted. Peer-reviewed studies on adolescents (aged 10–19), published between 2008 and 2019, assessing mental health symptoms or psychiatric disorders, either by standardized questionnaires or by diagnostic interviews, were included. The search identified 1461 articles, of which 301 were eligible for full-text analysis. Fourteen of these, concerning HIV-positive adolescents, met the inclusion criteria and were critically appraised. Mental health problems were highly prevalent among this group, with around 25% scoring positive for any psychiatric disorder and 30–50% showing emotional or behavioral difficulties or significant psychological distress. Associated factors found by regression analysis were older age, not being in school, impaired family functioning, HIV-related stigma and bullying, and poverty. Social support and parental competence were protective factors. Mental health problems among HIV-positive adolescents are highly prevalent and should be addressed as part of regular HIV care.

Comorbidity within mental disorders: a comprehensive analysis based on 145 990 survey respondents from 27 countries

- J. J. McGrath, C. C. W. Lim, O. Plana-Ripoll, Y. Holtz, E. Agerbo, N. C. Momen, P. B. Mortensen, C. B. Pedersen, J. Abdulmalik, S. Aguilar-Gaxiola, A. Al-Hamzawi, J. Alonso, E. J. Bromet, R. Bruffaerts, B. Bunting, J. M. C. de Almeida, G. de Girolamo, Y. A. De Vries, S. Florescu, O. Gureje, J. M. Haro, M. G. Harris, C. Hu, E. G. Karam, N. Kawakami, A. Kiejna, V. Kovess-Masfety, S. Lee, Z. Mneimneh, F. Navarro-Mateu, R. Orozco, J. Posada-Villa, A. M. Roest, S. Saha, K. M. Scott, J. C. Stagnaro, D. J. Stein, Y. Torres, M. C. Viana, Y. Ziv, R. C. Kessler, P. de Jonge

- Journal:

- Epidemiology and Psychiatric Sciences / Volume 29 / 2020

- Published online by Cambridge University Press:

- 12 August 2020, e153

- Article

- Open access

Aims

Epidemiological studies indicate that individuals with one type of mental disorder have an increased risk of subsequently developing other types of mental disorders. This study aimed to undertake a comprehensive analysis of pair-wise lifetime comorbidity across a range of common mental disorders based on a diverse range of population-based surveys.

Methods

The WHO World Mental Health (WMH) surveys assessed 145 990 adult respondents from 27 countries. Based on retrospectively-reported age-of-onset for 24 DSM-IV mental disorders, associations were examined between all 548 logically possible temporally-ordered disorder pairs. Overall and time-dependent hazard ratios (HRs) and 95% confidence intervals (CIs) were calculated using Cox proportional hazards models. Absolute risks were estimated using the product-limit method. Estimates were generated separately for men and women.

Results

Each prior lifetime mental disorder was associated with an increased risk of subsequent first onset of each other disorder. The median HR was 12.1 (mean = 14.4; range 5.2–110.8, interquartile range = 6.0–19.4). The HRs were most prominent between closely-related mental disorder types and in the first 1–2 years after the onset of the prior disorder. Although HRs declined with time since prior disorder, significantly elevated risk of subsequent comorbidity persisted for at least 15 years. Appreciable absolute risks of secondary disorders were found over time for many pairs.

Conclusions

Survey data from a range of sites confirm that comorbidity between mental disorders is common. Understanding the risks of temporally secondary disorders may help design practical programs for primary prevention of secondary disorders.

Intermittent explosive disorder subtypes in the general population: association with comorbidity, impairment and suicidality

- K. M. Scott, Y. A. de Vries, S. Aguilar-Gaxiola, A. Al-Hamzawi, J. Alonso, E. J. Bromet, B. Bunting, J. M. Caldas-de-Almeida, A. Cía, S. Florescu, O. Gureje, C-Y. Hu, E. G. Karam, A. Karam, N. Kawakami, R. C. Kessler, S. Lee, J. McGrath, B. Oladeji, J. Posada-Villa, D. J. Stein, Z. Zarkov, P. de Jonge

- Journal:

- Epidemiology and Psychiatric Sciences / Volume 29 / 2020

- Published online by Cambridge University Press:

- 23 June 2020, e138

- Article

- Open access

Aims

Intermittent explosive disorder (IED) is characterised by impulsive anger attacks that vary greatly across individuals in severity and consequence. Understanding IED subtypes has been limited by lack of large, general population datasets including assessment of IED. Using the 17-country World Mental Health surveys dataset, this study examined whether behavioural subtypes of IED are associated with differing patterns of comorbidity, suicidality and functional impairment.

Methods

IED was assessed using the Composite International Diagnostic Interview in the World Mental Health surveys (n = 45 266). Five behavioural subtypes were created based on type of anger attack. Logistic regression assessed association of these subtypes with lifetime comorbidity, lifetime suicidality and 12-month functional impairment.

Results

The lifetime prevalence of IED in all countries was 0.8% (s.e.: 0.0). The two subtypes involving anger attacks that harmed people (‘hurt people only’ and ‘destroy property and hurt people’), collectively comprising 73% of those with IED, were characterised by high rates of externalising comorbid disorders. The remaining three subtypes involving anger attacks that destroyed property only, destroyed property and threatened people, and threatened people only, were characterised by higher rates of internalising than externalising comorbid disorders. Suicidal behaviour did not vary across the five behavioural subtypes but was higher among those with (v. those without) comorbid disorders, and among those who perpetrated more violent assaults.

Conclusions

The most common IED behavioural subtypes in these general population samples are associated with high rates of externalising disorders. This contrasts with the findings from clinical studies of IED, which observe a preponderance of internalising disorder comorbidity. This disparity in findings across population and clinical studies, together with the marked heterogeneity that characterises the diagnostic entity of IED, suggests that it is a disorder that requires much more research.

Unit cohesion during deployment and post-deployment mental health: is cohesion an individual- or unit-level buffer for combat-exposed soldiers?

- Laura Campbell-Sills, Patrick J. Flynn, Karmel W. Choi, Tsz Hin H. Ng, Pablo A. Aliaga, Catherine Broshek, Sonia Jain, Ronald C. Kessler, Murray B. Stein, Robert J. Ursano, Paul D. Bliese

- Journal:

- Psychological Medicine / Volume 52 / Issue 1 / January 2022

- Published online by Cambridge University Press:

- 10 June 2020, pp. 121-131

- Article

Background

Unit cohesion may protect service member mental health by mitigating effects of combat exposure; however, questions remain about the origins of potential stress-buffering effects. We examined buffering effects associated with two forms of unit cohesion (peer-oriented horizontal cohesion and subordinate-leader vertical cohesion) defined as either individual-level or aggregated unit-level variables.

Methods

Longitudinal survey data from US Army soldiers who deployed to Afghanistan in 2012 were analyzed using mixed-effects regression. Models evaluated individual- and unit-level interaction effects of combat exposure and cohesion during deployment on symptoms of post-traumatic stress disorder (PTSD), depression, and suicidal ideation reported at 3 months post-deployment (model n's = 6684 to 6826). Given the small effective sample size (k = 89), the significance of unit-level interactions was evaluated at a 90% confidence level.

Results

At the individual level, buffering effects of horizontal cohesion were found for PTSD symptoms [B = −0.11, 95% CI (−0.18 to −0.04), p < 0.01] and depressive symptoms [B = −0.06, 95% CI (−0.10 to −0.01), p < 0.05], while a buffering effect of vertical cohesion was observed for PTSD symptoms only [B = −0.03, 95% CI (−0.06 to −0.0001), p < 0.05]. At the unit level, buffering effects of horizontal (but not vertical) cohesion were observed for PTSD symptoms [B = −0.91, 90% CI (−1.70 to −0.11), p = 0.06], depressive symptoms [B = −0.83, 90% CI (−1.24 to −0.41), p < 0.01], and suicidal ideation [B = −0.32, 90% CI (−0.62 to −0.01), p = 0.08].

Conclusions

Policies and interventions that enhance horizontal cohesion may protect combat-exposed units against post-deployment mental health problems. Efforts to support individual soldiers who report low levels of horizontal or vertical cohesion may also yield mental health benefits.