546 Using Contingency Management to Understand the Cardiovascular, Immune and Psychosocial Benefits of Reduced Cocaine Use: A Protocol for a Randomized Controlled Trial

- Journal:

- Journal of Clinical and Translational Science / Volume 8 / Issue s1 / April 2024

- Published online by Cambridge University Press:

- 03 April 2024, p. 163

- Article

- Open access

Population and hospital-level COVID-19 measures are associated with increased risk of hospital-onset COVID-19

- Journal:

- Infection Control & Hospital Epidemiology, First View

- Published online by Cambridge University Press:

- 18 March 2024, pp. 1-3

- Article

- Open access

The prevalence of gram-negative bacteria with difficult-to-treat resistance and utilization of novel β-lactam antibiotics in the southeastern United States

- Journal:

- Antimicrobial Stewardship & Healthcare Epidemiology / Volume 4 / Issue 1 / 2024

- Published online by Cambridge University Press:

- 18 March 2024, e35

- Article

- Open access

- HTML

- Export citation

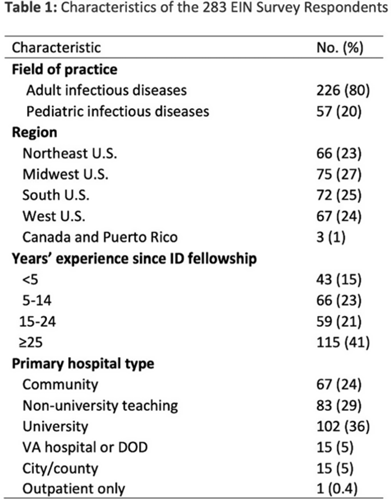

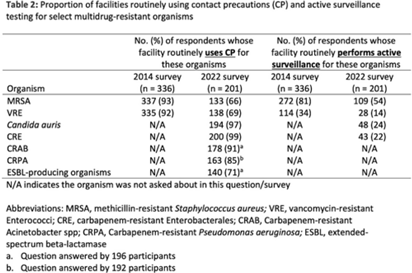

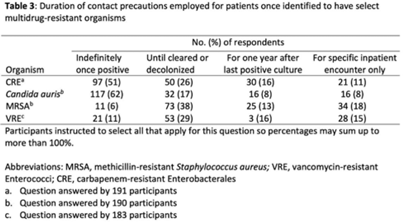

Implementation of contact precautions for multidrug-resistant organisms in the post–COVID-19 pandemic era: An updated national Emerging Infections Network (EIN) survey

- Journal:

- Infection Control & Hospital Epidemiology, First View

- Published online by Cambridge University Press:

- 14 February 2024, pp. 1-6

- Article

- Open access

Simultaneous submission of seven CTSA proposals: UM1, K12, R25, T32-predoctoral, T32-postdoctoral, and RC2: strategies, evaluation, and lessons learned

- Journal:

- Journal of Clinical and Translational Science / Volume 8 / Issue 1 / 2024

- Published online by Cambridge University Press:

- 25 January 2024, e33

- Article

- Open access

A severe acute respiratory coronavirus virus 2 (SARS-CoV-2) nosocomial cluster with inter-facility spread: Lessons learned

- Journal:

- Infection Control & Hospital Epidemiology / Volume 45 / Issue 5 / May 2024

- Published online by Cambridge University Press:

- 04 January 2024, pp. 635-643

- Print publication:

- May 2024

- Article

- Open access

78 Examining the Association Between a Patient's Diagnosis and Occurrence of The First Error on Trails B

- Journal:

- Journal of the International Neuropsychological Society / Volume 29 / Issue s1 / November 2023

- Published online by Cambridge University Press:

- 21 December 2023, p. 280

- Article

Use of contact precautions for multidrug-resistant organisms and the impact of the COVID-19 pandemic: An Emerging Infections Network (EIN) survey

- Journal:

- Antimicrobial Stewardship & Healthcare Epidemiology / Volume 3 / Issue S2 / June 2023

- Published online by Cambridge University Press:

- 29 September 2023, pp. s102-s103

- Article

- Open access

- Export citation

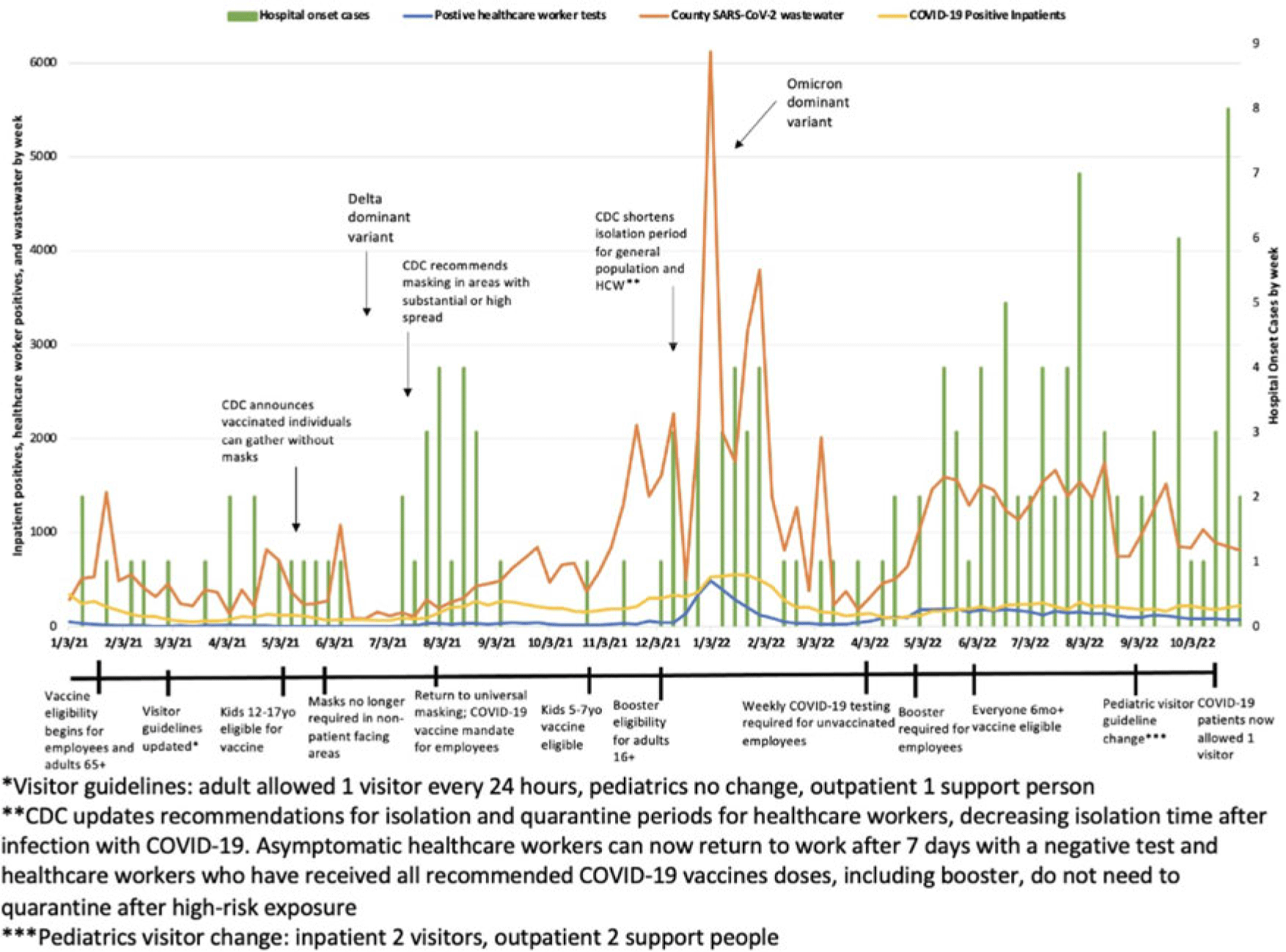

Hospital-onset COVID-19: Associations with population- and hospital-level measures to guide infection prevention efforts

- Journal:

- Antimicrobial Stewardship & Healthcare Epidemiology / Volume 3 / Issue S2 / June 2023

- Published online by Cambridge University Press:

- 29 September 2023, p. s7

- Article

- Open access

Agricultural Research Service Weed Science Research: Past, Present, and Future

- Journal:

- Weed Science / Volume 71 / Issue 4 / July 2023

- Published online by Cambridge University Press:

- 16 August 2023, pp. 312-327

- Article

- Open access

Psychological interventions for cancer-related post-traumatic stress disorder: narrative review

- Journal:

- BJPsych Bulletin / Volume 48 / Issue 2 / April 2024

- Published online by Cambridge University Press:

- 08 June 2023, pp. 100-109

- Print publication:

- April 2024

- Article

- Open access

Risk factors for long coronavirus disease 2019 (long COVID) among healthcare personnel, Brazil, 2020–2022

- Journal:

- Infection Control & Hospital Epidemiology / Volume 44 / Issue 12 / December 2023

- Published online by Cambridge University Press:

- 05 June 2023, pp. 1972-1978

- Print publication:

- December 2023

- Article

- Open access

Cannabis use as a potential mediator between childhood adversity and first-episode psychosis: results from the EU-GEI case–control study

- Journal:

- Psychological Medicine / Volume 53 / Issue 15 / November 2023

- Published online by Cambridge University Press:

- 04 May 2023, pp. 7375-7384

- Article

- Open access

The association between reasons for first using cannabis, later pattern of use, and risk of first-episode psychosis: the EU-GEI case–control study

- Journal:

- Psychological Medicine / Volume 53 / Issue 15 / November 2023

- Published online by Cambridge University Press:

- 02 May 2023, pp. 7418-7427

- Article

- Open access

Detection of severe acute respiratory coronavirus virus 2 (SARS-CoV-2) in the air near patients using noninvasive respiratory support devices

- Journal:

- Infection Control & Hospital Epidemiology / Volume 44 / Issue 5 / May 2023

- Published online by Cambridge University Press:

- 15 March 2023, pp. 843-845

- Print publication:

- May 2023

- Article

Infection prevention and antibiotic stewardship program needs and practices in 2021: A survey of the Society for Healthcare Epidemiology of America Research Network

- Journal:

- Infection Control & Hospital Epidemiology / Volume 44 / Issue 6 / June 2023

- Published online by Cambridge University Press:

- 14 March 2023, pp. 948-950

- Print publication:

- June 2023

- Article

- Open access

Success stories cause false beliefs about success

- Journal:

- Judgment and Decision Making / Volume 16 / Issue 6 / November 2021

- Published online by Cambridge University Press:

- 01 January 2023, pp. 1439-1463

- Article

- Open access

Belief bias and representation in assessing the Bayesian rationality of others

- Journal:

- Judgment and Decision Making / Volume 14 / Issue 1 / January 2019

- Published online by Cambridge University Press:

- 01 January 2023, pp. 1-10

- Article

- Open access

Chapter 16 - Interprofessional Education in Mental Health Services

- from Section 4 - Bridging the Gaps: Foundation Years and Interprofessional Education

- Book:

- Clinical Topics in Teaching Psychiatry

- Published online:

- 24 November 2022

- Print publication:

- 08 December 2022, pp. 187-198

- Chapter

Child maltreatment, migration and risk of first-episode psychosis: results from the multinational EU-GEI study

- Journal:

- Psychological Medicine / Volume 53 / Issue 13 / October 2023

- Published online by Cambridge University Press:

- 28 October 2022, pp. 6150-6160

- Article

- Open access
