90 results

Radiofrequency ice dielectric measurements at Summit Station, Greenland

- Journal: Journal of Glaciology, First View

- Published online by Cambridge University Press: 09 October 2023, pp. 1-12

- Article

- Open access

Stakeholder views on the design of National Health Service perinatal mental health services: 360-degree survey

- Journal: BJPsych Bulletin / Volume 48 / Issue 1 / February 2024

- Published online by Cambridge University Press: 19 May 2023, pp. 18-24

- Print publication: February 2024

- Article

- Open access

PD19 Machine Learning Modelling For Clinical Trial Design Using The National Institute for Health and Care Research Innovation Observatory’s ScanMedicine Database

- Journal: International Journal of Technology Assessment in Health Care / Volume 38 / Issue S1 / December 2022

- Published online by Cambridge University Press: 23 December 2022, p. S96

- Article

Acknowledgments

- Book: Literary Beginnings in the European Middle Ages

- Published online: 11 August 2022

- Print publication: 25 August 2022, pp x-xii

- Chapter

Copyright page

- Book: Literary Beginnings in the European Middle Ages

- Published online: 11 August 2022

- Print publication: 25 August 2022, pp iv-iv

- Chapter

Chapter 1 - Introduction

- Book: Literary Beginnings in the European Middle Ages

- Published online: 11 August 2022

- Print publication: 25 August 2022, pp 12-22

- Chapter

Contributors

- Book: Literary Beginnings in the European Middle Ages

- Published online: 11 August 2022

- Print publication: 25 August 2022, pp vii-ix

- Chapter

Chapter 9 - German

- Book: Literary Beginnings in the European Middle Ages

- Published online: 11 August 2022

- Print publication: 25 August 2022, pp 179-202

- Chapter

Contents

- Book: Literary Beginnings in the European Middle Ages

- Published online: 11 August 2022

- Print publication: 25 August 2022, pp v-vi

- Chapter

Index

- Book: Literary Beginnings in the European Middle Ages

- Published online: 11 August 2022

- Print publication: 25 August 2022, pp 313-339

- Chapter

Literary Beginnings in the European Middle Ages

- Published online: 11 August 2022

- Print publication: 25 August 2022

Similar duration of viral shedding of the severe acute respiratory coronavirus virus 2 (SARS-CoV-2) delta variant between vaccinated and incompletely vaccinated individuals

- Journal: Infection Control & Hospital Epidemiology / Volume 44 / Issue 6 / June 2023

- Published online by Cambridge University Press: 23 May 2022, pp. 1002-1004

- Print publication: June 2023

- Article

Mineral micronutrient status and spatial distribution among the Ethiopian population

- Journal: British Journal of Nutrition / Volume 128 / Issue 11 / 14 December 2022

- Published online by Cambridge University Press: 03 February 2022, pp. 2170-2180

- Print publication: 14 December 2022

- Article

- Open access

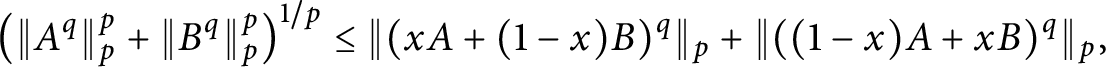

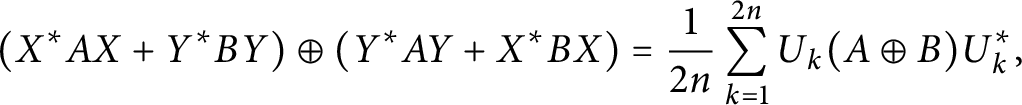

Matrix inequalities and majorizations around Hermite–Hadamard’s inequality

- Journal: Canadian Mathematical Bulletin / Volume 65 / Issue 4 / December 2022

- Published online by Cambridge University Press: 10 January 2022, pp. 943-952

- Print publication: December 2022

- Article

An Indigenous Lens on Priorities for the Canadian Brain Research Strategy

- Journal: Canadian Journal of Neurological Sciences / Volume 50 / Issue 1 / January 2023

- Published online by Cambridge University Press: 01 December 2021, pp. 96-98

- Article

- Open access

Trial to Encourage Adoption and Maintenance of a MEditerranean Diet (TEAM-MED): a randomised pilot trial of a peer support intervention for dietary behaviour change in adults from a Northern European population at high CVD risk

- Journal: British Journal of Nutrition / Volume 128 / Issue 7 / 14 October 2022

- Published online by Cambridge University Press: 04 October 2021, pp. 1322-1334

- Print publication: 14 October 2022

- Article

- Open access

Neurophysiological correlates of disorder-related autobiographical memory in anorexia nervosa

- Journal: Psychological Medicine / Volume 53 / Issue 3 / February 2023

- Published online by Cambridge University Press: 18 June 2021, pp. 844-854

- Article

92438 Symptom Dynamics and Biomarkers of Disease Progression in Older Adult Patient-Caregiver Dyads During Care Transitions after Heart Failure Hospitalization: Study Design and Anticipated Results

- Journal: Journal of Clinical and Translational Science / Volume 5 / Issue s1 / March 2021

- Published online by Cambridge University Press: 30 March 2021, p. 130

- Article

- Open access

Depression and increased risk of non-alcoholic fatty liver disease in individuals with obesity

- Journal: Epidemiology and Psychiatric Sciences / Volume 30 / 2021

- Published online by Cambridge University Press: 12 March 2021, e23

- Article

- Open access

Detection of severe acute respiratory coronavirus virus 2 (SARS-CoV-2) in outpatients: A multicenter comparison of self-collected saline gargle, oral swab, and combined oral–anterior nasal swab to a provider collected nasopharyngeal swab

- Journal: Infection Control & Hospital Epidemiology / Volume 42 / Issue 11 / November 2021

- Published online by Cambridge University Press: 13 January 2021, pp. 1340-1344

- Print publication: November 2021

- Article

- Open access