807 results

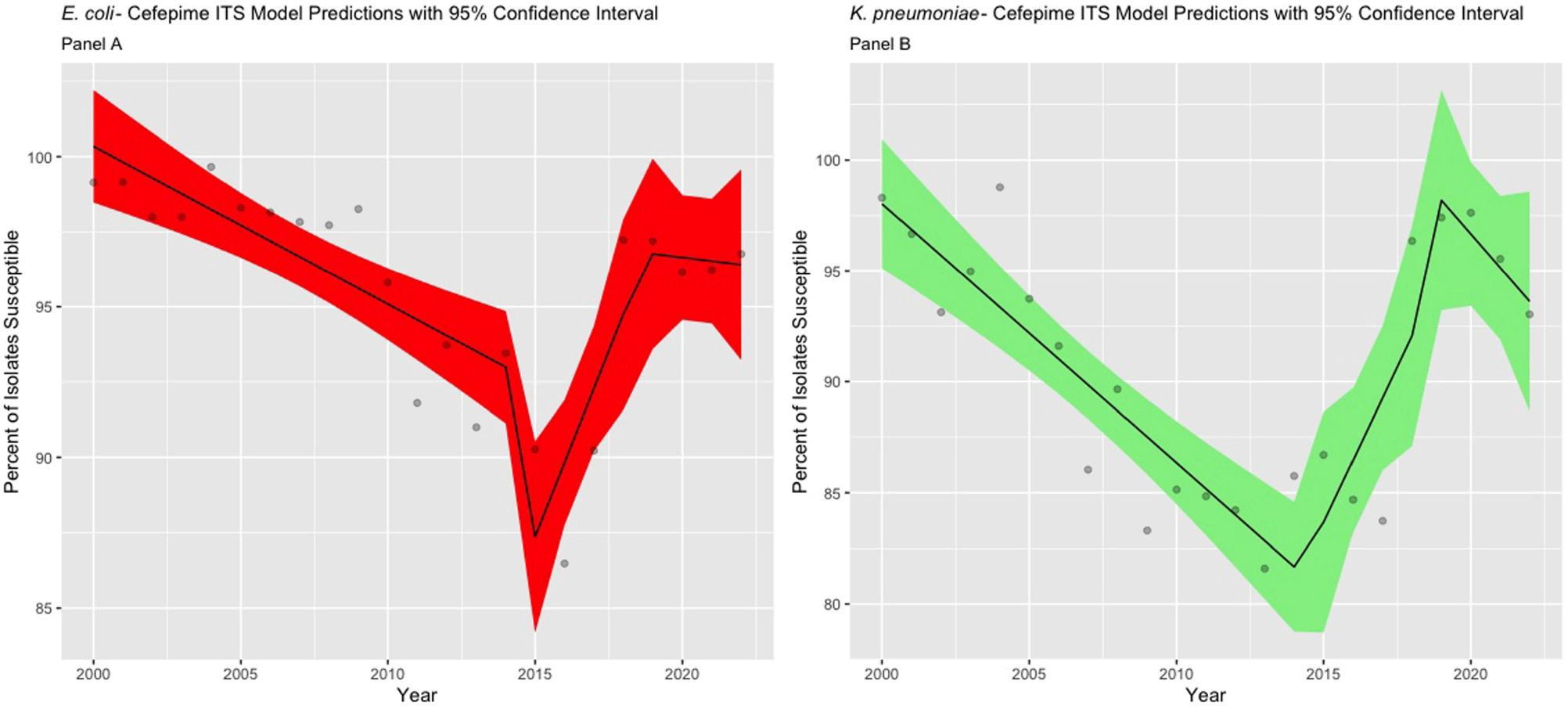

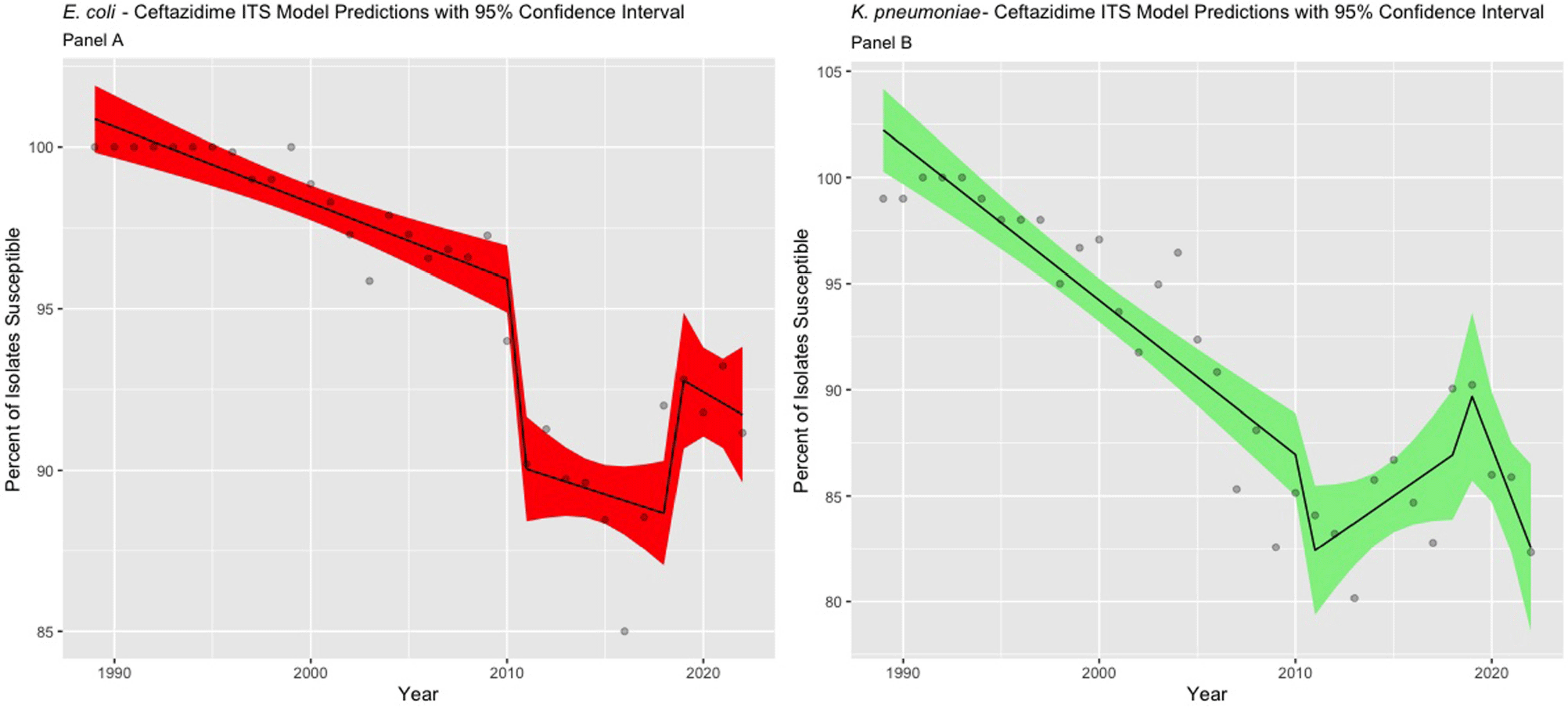

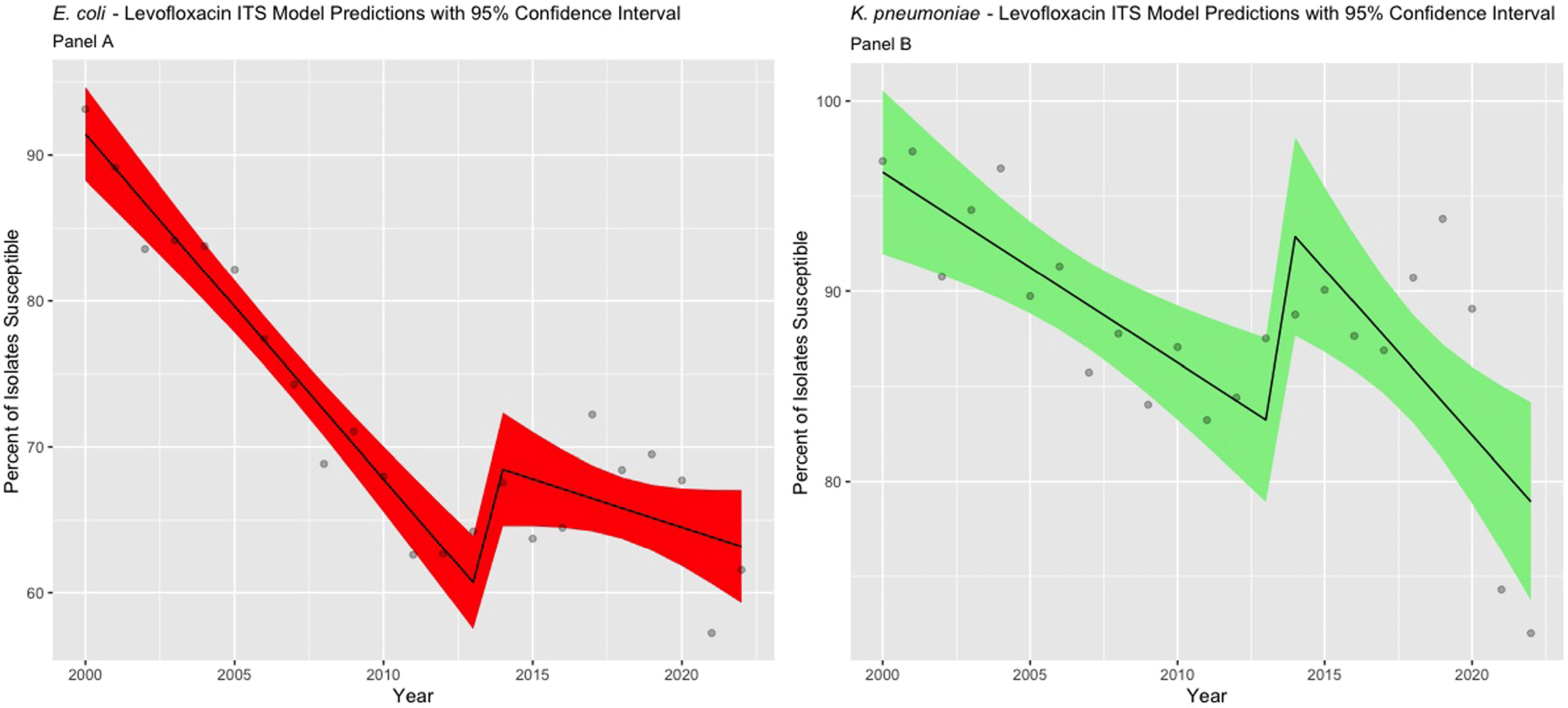

Impact of MIC Breakpoint Changes for Enterobacterales on Trends of Antibiotic Susceptibilities in An Academic Medical Center

- Journal: Antimicrobial Stewardship & Healthcare Epidemiology / Volume 4 / Issue S1 / July 2024

- Published online by Cambridge University Press: 16 September 2024, pp. s54-s56

- Article

- Open access

Detecting suicide risk among U.S. servicemembers and veterans: a deep learning approach using social media data

- Journal: Psychological Medicine, First View

- Published online by Cambridge University Press: 09 September 2024, pp. 1-10

- Article

Development and initial evaluation of a clinical prediction model for risk of treatment resistance in first-episode psychosis: Schizophrenia Prediction of Resistance to Treatment (SPIRIT)

- Journal: The British Journal of Psychiatry, FirstView

- Published online by Cambridge University Press: 05 August 2024, pp. 1-10

- Article

- Open access

SHEA position statement on pandemic preparedness for policymakers: emerging infectious threats

- Journal: Infection Control & Hospital Epidemiology, First View

- Published online by Cambridge University Press: 19 July 2024, pp. 1-3

- Article

SHEA position statement on pandemic preparedness for policymakers: the role of healthcare epidemiologists in communicating during infectious diseases outbreaks

- Journal: Infection Control & Hospital Epidemiology, First View

- Published online by Cambridge University Press: 05 June 2024, pp. 1-5

- Article

Society for Healthcare Epidemiology of America position statement on pandemic preparedness for policymakers: mitigating supply shortages

- Journal: Infection Control & Hospital Epidemiology, First View

- Published online by Cambridge University Press: 05 June 2024, pp. 1-5

- Article

SHEA position statement on pandemic preparedness for policymakers: building a strong and resilient healthcare workforce

- Journal: Infection Control & Hospital Epidemiology, First View

- Published online by Cambridge University Press: 05 June 2024, pp. 1-4

- Article

- Open access

SHEA position statement on pandemic preparedness for policymakers: introduction and overview

- Journal: Infection Control & Hospital Epidemiology, First View

- Published online by Cambridge University Press: 05 June 2024, pp. 1-3

- Article

- Open access

Sex-dependent differences in vulnerability to early risk factors for posttraumatic stress disorder: results from the AURORA study

- Journal: Psychological Medicine, First View

- Published online by Cambridge University Press: 22 May 2024, pp. 1-11

- Article

- Open access

Engaging communities in Sulawesi Island, Indonesia: A collaborative approach to modelling marine plastic debris through open science and online visualization

- Journal: Cambridge Prisms: Plastics / Volume 2 / 2024

- Published online by Cambridge University Press: 16 May 2024, e15

- Article

- Open access

How to co-create content moderation policies: the case of the AutSPACEs project

- Journal: Data & Policy / Volume 6 / 2024

- Published online by Cambridge University Press: 15 May 2024, e28

- Article

- Open access

The Right to Be Protected from Committing Suicide by Jonathan Herring, Hart Publishing, Oxford, 2022, pp xvii+265, £42.99, pbk

- Journal: New Blackfriars

- Published online by Cambridge University Press: 22 April 2024, pp. 1-3

- Article

362 Examining Temporal Links Between Distinct Negative Emotions and Tobacco Lapse During A Cessation Attempt

- Journal: Journal of Clinical and Translational Science / Volume 8 / Issue s1 / April 2024

- Published online by Cambridge University Press: 03 April 2024, p. 109

- Article

- Open access

The prevalence of gram-negative bacteria with difficult-to-treat resistance and utilization of novel β-lactam antibiotics in the southeastern United States

- Journal: Antimicrobial Stewardship & Healthcare Epidemiology / Volume 4 / Issue 1 / 2024

- Published online by Cambridge University Press: 18 March 2024, e35

- Article

- Open access

Evaluating the Current State of Epilepsy Care in the Province of Ontario

- Journal: Canadian Journal of Neurological Sciences, First View

- Published online by Cambridge University Press: 01 March 2024, pp. 1-3

- Article

3 Harmonized Memory and Language Function in the Harmonized Cognitive Assessment Protocol (HCAP) Across the United States and Mexico

- Journal: Journal of the International Neuropsychological Society / Volume 29 / Issue s1 / November 2023

- Published online by Cambridge University Press: 21 December 2023, pp. 87-88

- Article

The Role of Location in the Spread of SARS-CoV-2: Examination of Cases and Exposed Contacts in South Texas, Using Social Network Analysis

- Journal: Disaster Medicine and Public Health Preparedness / Volume 17 / 2023

- Published online by Cambridge University Press: 23 October 2023, e516

- Article

Hypoxia following warden procedure: evaluation and percutaneous treatment

- Journal: Cardiology in the Young / Volume 34 / Issue 3 / March 2024

- Published online by Cambridge University Press: 11 September 2023, pp. 634-636

- Article

A multi-country comparison of jurisdictions with and without mandatory nutrition labelling policies in restaurants: analysis of behaviours associated with menu labelling in the 2019 International Food Policy Study

- Journal: Public Health Nutrition / Volume 26 / Issue 11 / November 2023

- Published online by Cambridge University Press: 04 September 2023, pp. 2595-2606

- Article

- Open access

The alarms should no longer be ignored: survey of the demand, capacity and provision of adult community eating disorder services in England and Scotland before COVID-19

- Journal: BJPsych Bulletin, FirstView

- Published online by Cambridge University Press: 01 August 2023, pp. 1-9

- Article

- Open access