251 The Appalachian Translational Research Network (ATRN) Newsletter: Supporting Communication and Collaboration among Academic and Community Partners to Improve Health in Appalachia

- Journal: Journal of Clinical and Translational Science / Volume 8 / Issue s1 / April 2024

- Published online by Cambridge University Press: 03 April 2024, pp. 75-76

- Article

- Open access

Using latent class analysis to investigate enduring effects of intersectional social disadvantage on long-term vocational and financial outcomes in the 20-year prospective Chicago Longitudinal Study

- Journal: Psychological Medicine, First View

- Published online by Cambridge University Press: 25 March 2024, pp. 1-13

- Article

- Open access

Near-source passive sampling for monitoring viral outbreaks within a university residential setting

- Journal: Epidemiology & Infection / Volume 152 / 2024

- Published online by Cambridge University Press: 08 February 2024, e31

- Article

- Open access

P114: Experiences of nursing home residents with dementia and chronic pain using an interactive social robot: A qualitative study of multiple stakeholders

- Journal: International Psychogeriatrics / Volume 35 / Issue S1 / December 2023

- Published online by Cambridge University Press: 02 February 2024, pp. 171-172

- Article

Parliamentary reaction to the announcement and implementation of the UK Soft Drinks Industry Levy: applied thematic analysis of 2016–2020 parliamentary debates

- Journal: Public Health Nutrition / Volume 27 / Issue 1 / 2024

- Published online by Cambridge University Press: 24 January 2024, e51

- Article

- Open access

Reductive Degradation of p,p′-DDT by Fe(II) in Nontronite NAu-2

- Journal: Clays and Clay Minerals / Volume 58 / Issue 6 / December 2010

- Published online by Cambridge University Press: 01 January 2024, pp. 821-836

- Article

Treatment effectiveness of antibiotic therapy in Veterans with multidrug-resistant Acinetobacter spp. bacteremia

- Journal: Antimicrobial Stewardship & Healthcare Epidemiology / Volume 3 / Issue 1 / 2023

- Published online by Cambridge University Press: 12 December 2023, e230

- Article

- Open access

Population monitoring of a Critically Endangered antelope, the mountain bongo, using camera traps and a novel identification scheme

- Journal: Oryx, First View

- Published online by Cambridge University Press: 13 November 2023, pp. 1-8

- Article

- Open access

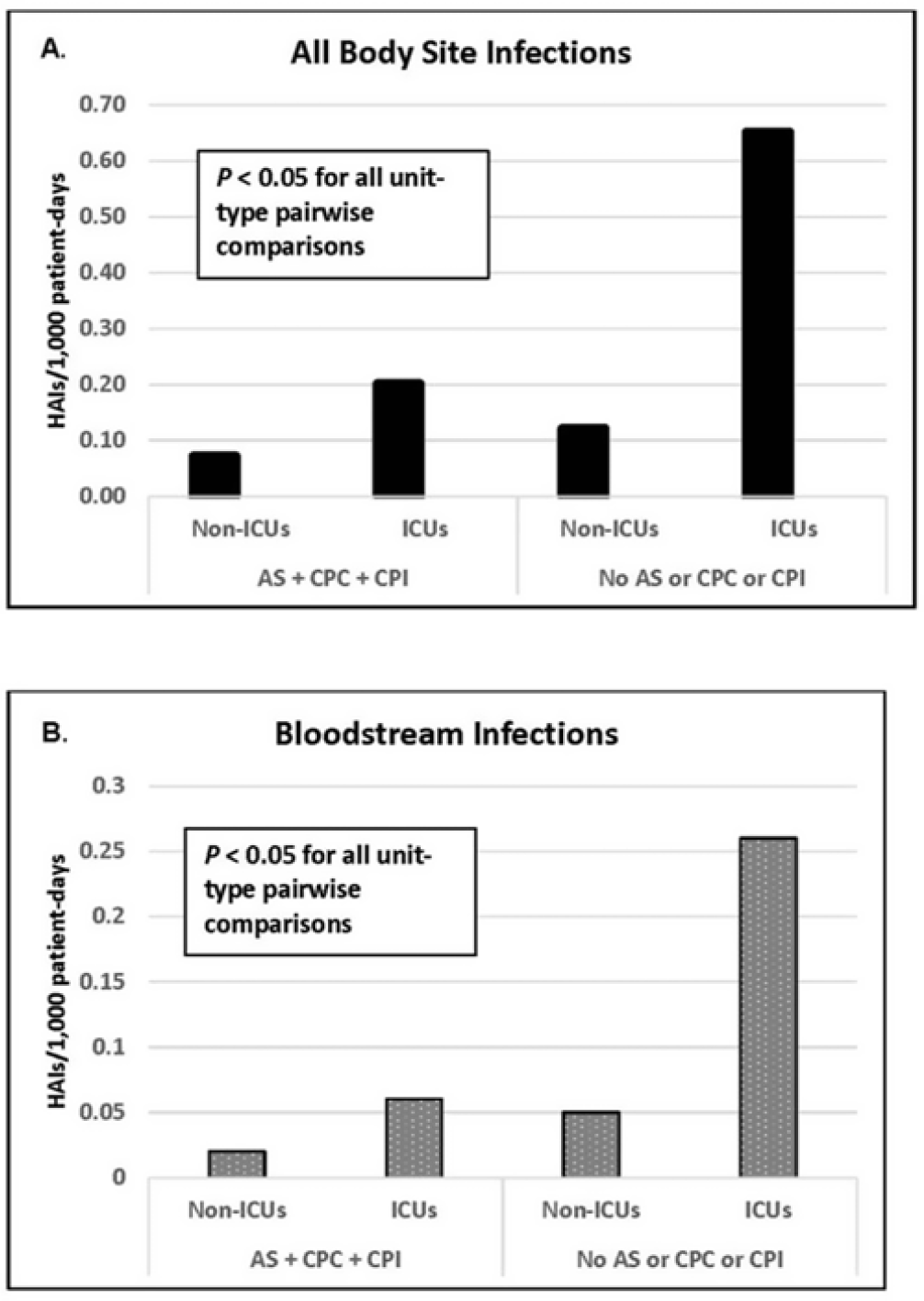

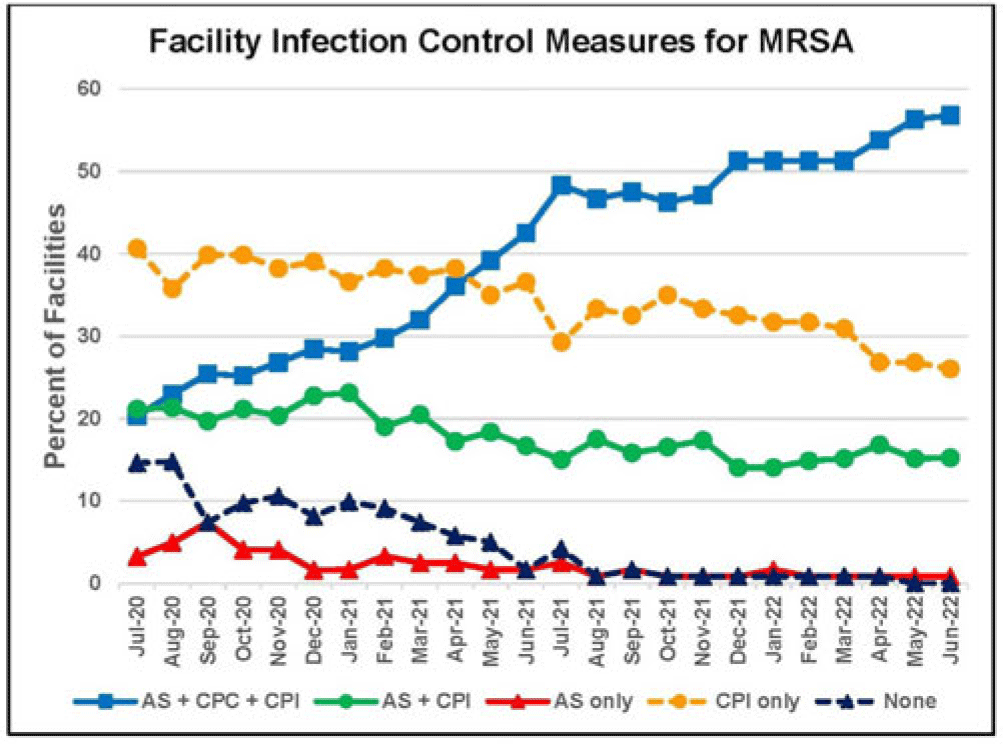

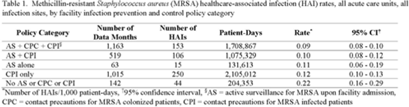

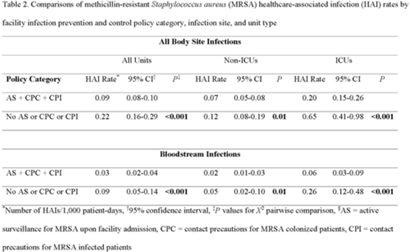

Active surveillance and contact precautions for preventing MRSA healthcare-associated infections during the COVID-19 pandemic

- Journal: Antimicrobial Stewardship & Healthcare Epidemiology / Volume 3 / Issue S2 / June 2023

- Published online by Cambridge University Press: 29 September 2023, pp. s117-s118

- Article

- Open access

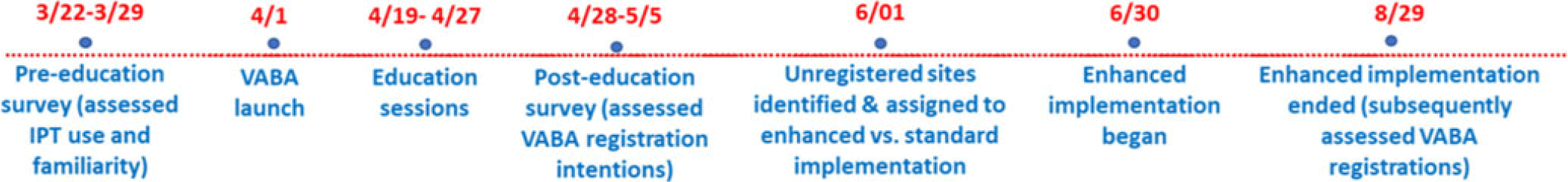

Increasing Registration for a VA Multidrug-Resistant Organism Alert Tool

- Journal: Antimicrobial Stewardship & Healthcare Epidemiology / Volume 3 / Issue S2 / June 2023

- Published online by Cambridge University Press: 29 September 2023, pp. s124-s125

- Article

- Open access

A multi-country comparison of jurisdictions with and without mandatory nutrition labelling policies in restaurants: analysis of behaviours associated with menu labelling in the 2019 International Food Policy Study

- Journal: Public Health Nutrition / Volume 26 / Issue 11 / November 2023

- Published online by Cambridge University Press: 04 September 2023, pp. 2595-2606

- Article

- Open access

CosmoDRAGoN simulations—I. Dynamics and observable signatures of radio jets in cosmological environments

- Journal: Publications of the Astronomical Society of Australia / Volume 40 / 2023

- Published online by Cambridge University Press: 12 April 2023, e014

- Article

- Open access

Extraction and applications of Rayleigh wave ellipticity in polar regions

- Journal: Annals of Glaciology / Volume 63 / Issue 87-89 / September 2022

- Published online by Cambridge University Press: 29 March 2023, pp. 3-7

- Article

- Open access

3 - Political Economy of the Inclusive Labour Market Revisited: Welfare through Work in Denmark

- Book: Employer Engagement

- Published by: Bristol University Press

- Published online: 18 January 2024

- Print publication: 28 February 2023, pp. 34-51

- Chapter

DRAGON-Data: a platform and protocol for integrating genomic and phenotypic data across large psychiatric cohorts

- Journal: BJPsych Open / Volume 9 / Issue 2 / March 2023

- Published online by Cambridge University Press: 08 February 2023, e32

- Article

- Open access

19 - The Return of the Eurasian Beaver to Britain: The Implications of Unplanned Releases and the Human Dimension

- from Part IV - Case Studies

- Book: Conservation Translocations

- Published online: 07 December 2022

- Print publication: 22 December 2022, pp. 449-455

- Chapter

DEI co-mentoring circles for clinical research professionals: A pilot project and toolkit

- Journal: Journal of Clinical and Translational Science / Volume 7 / Issue 1 / 2023

- Published online by Cambridge University Press: 22 December 2022, e25

- Article

- Open access

The Shaqadud Archaeological Project (Sudan): exploring prehistoric cultural adaptations in the Sahelian hinterlands

- Article

- Open access

Lack of correlation between standardized antimicrobial administration ratios (SAARs) and healthcare-facility–onset Clostridioides difficile infection rates in Veterans Affairs medical facilities

- Journal: Infection Control & Hospital Epidemiology / Volume 44 / Issue 6 / June 2023

- Published online by Cambridge University Press: 01 December 2022, pp. 945-947

- Print publication: June 2023

- Article