165 results

Status and influencing factors of nurses’ burnout: A cross-sectional study during COVID-19 regular prevention and control in Jiangsu Province, China

- Journal: Cambridge Prisms: Global Mental Health / Accepted manuscript
- Published online by Cambridge University Press: 15 April 2024, pp. 1-25
- Article
- Open access

Models of mild cognitive deficits in risk assessment in early psychosis

- Journal: Psychological Medicine, First View
- Published online by Cambridge University Press: 04 March 2024, pp. 1-12
- Article

Design Approaches, Functionalization, and Environmental and Analytical Applications of Magnetic Halloysite Nanotubes: A Review

- Journal: Clays and Clay Minerals / Volume 70 / Issue 5 / October 2022
- Published online by Cambridge University Press: 01 January 2024, pp. 660-694
- Article

Late Quaternary glaciations in the Taniantaweng Mountains

- Journal: Quaternary Research / Volume 117 / January 2024
- Published online by Cambridge University Press: 13 November 2023, pp. 3-18
- Article

Tuber development and propagation are inhibited by GA3 effects on the DELLA-dependent pathway in purple nutsedge (Cyperus rotundus) – CORRIGENDUM

- Journal: Weed Science / Volume 71 / Issue 5 / September 2023
- Published online by Cambridge University Press: 03 November 2023, pp. 514-515
- Article

Functional priority of syntax over semantics in Chinese ‘ba’ construction: evidence from eye-tracking during natural reading

- Journal: Language and Cognition, First View
- Published online by Cambridge University Press: 13 September 2023, pp. 1-21
- Article
- Open access

Tuber development and propagation are inhibited by GA3 effects on the DELLA-dependent pathway in purple nutsedge (Cyperus rotundus)

- Journal: Weed Science / Volume 71 / Issue 5 / September 2023
- Published online by Cambridge University Press: 04 September 2023, pp. 453-461
- Article

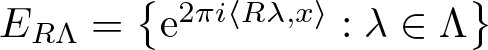

The spectral eigenmatrix problems of planar self-affine measures with four digits

- Journal: Proceedings of the Edinburgh Mathematical Society / Volume 66 / Issue 3 / August 2023
- Published online by Cambridge University Press: 22 August 2023, pp. 897-918
- Article

Association between plasma and dietary trace elements and obesity in a rural Chinese population

- Journal: British Journal of Nutrition / Volume 131 / Issue 1 / 14 January 2024
- Published online by Cambridge University Press: 13 July 2023, pp. 123-133
- Print publication: 14 January 2024
- Article

Neural habituation during acute stress signals a blunted endocrine response and poor resilience

- Journal: Psychological Medicine / Volume 53 / Issue 16 / December 2023
- Published online by Cambridge University Press: 13 June 2023, pp. 7735-7745
- Article

Petrogenesis of Early Cretaceous volcanic rocks from the Rena-Co area in the southern Qiangtang Terrane, central Tibet: evidence from zircon U-Pb geochronology, petrochemistry and Sr-Nd-Pb–Hf isotope characteristics

- Journal: Geological Magazine / Volume 160 / Issue 6 / June 2023
- Published online by Cambridge University Press: 02 May 2023, pp. 1144-1159
- Article

Reduced left hippocampal perfusion is associated with insomnia in patients with cerebral small vessel disease

- Journal: CNS Spectrums / Volume 28 / Issue 6 / December 2023
- Published online by Cambridge University Press: 25 April 2023, pp. 702-709
- Article
- Open access

SG-APSIC1091: Assessment of compliance to cleaning of computers by healthcare workers (HCWs) using adenosine triphosphate (ATP) measurement

- Journal: Antimicrobial Stewardship & Healthcare Epidemiology / Volume 3 / Issue S1 / February 2023
- Published online by Cambridge University Press: 16 March 2023, pp. s12-s13
- Article
- Open access

SG-APSIC1122: Observational study of handwashing sink activities in the inpatient setting

- Journal: Antimicrobial Stewardship & Healthcare Epidemiology / Volume 3 / Issue S1 / February 2023
- Published online by Cambridge University Press: 16 March 2023, pp. s14-s15
- Article
- Open access

SG-APSIC1118: Introduction of carbapenemase-producing Enterobacterales (CPE) in the aqueous environment of the newly built National Centre for Infectious Diseases (NCID) in Singapore

- Journal: Antimicrobial Stewardship & Healthcare Epidemiology / Volume 3 / Issue S1 / February 2023
- Published online by Cambridge University Press: 16 March 2023, pp. s11-s12
- Article
- Open access

SG-APSIC1199: Evaluation of a pooling strategy using Xpert Carba-R assay for screening for carbapenemase-producing organisms in rectal swabs

- Journal: Antimicrobial Stewardship & Healthcare Epidemiology / Volume 3 / Issue S1 / February 2023
- Published online by Cambridge University Press: 16 March 2023, pp. s26-s27
- Article
- Open access

SG-APSIC1203: Detection of carbapenemase genes in donor lungs at the point of care before transplantation reduces the risk of carbapenem-resistant Enterobacteriaceae–associated donor-derived infection in lung-transplant recipients

- Journal: Antimicrobial Stewardship & Healthcare Epidemiology / Volume 3 / Issue S1 / February 2023
- Published online by Cambridge University Press: 16 March 2023, p. s25
- Article
- Open access

Calycosin attenuates Angiostrongylus cantonensis-induced parasitic meningitis through modulation of HO-1 and NF-κB activation

- Journal: Parasitology / Volume 150 / Issue 4 / April 2023
- Published online by Cambridge University Press: 07 November 2022, pp. 311-320
- Article
- Open access

The association between serum folate and gestational diabetes mellitus: a large retrospective cohort study in Chinese population

- Journal: Public Health Nutrition / Volume 26 / Issue 5 / May 2023
- Published online by Cambridge University Press: 12 September 2022, pp. 1014-1021
- Article
- Open access

NEW SAMPLE PREPARATION LINE FOR RADIOCARBON MEASUREMENTS AT THE GXNU LABORATORY

- Journal: Radiocarbon / Volume 64 / Issue 6 / December 2022
- Published online by Cambridge University Press: 30 May 2022, pp. 1501-1511
- Print publication: December 2022
- Article