1 Introduction

We are compelled to understand and intervene in the dynamics of various complex systems in which different elements, such as human individuals, interact with each other. Such complex systems are often modeled by multiagent or network-based models that explicitly dictate how each individual behaves and influences other individuals. Stochastic processes are popular models for the dynamics of multiagent systems when it is realistic to assume random elements in how agents behave or in dynamical processes taking place in the system. For example, random walks have been successfully applied to describe locomotion and foraging of animals (Reference Codling, Plank and BenhamouCodling, Plank, & Benhamou, 2008; Reference Okubo and LevinOkubo & Levin, 2001), dynamics of neuronal firing (Reference Gabbiani and CoxGabbiani & Cox, 2010; Reference TuckwellTuckwell, 1988), and financial market dynamics (Reference Campbell, Lo and MacKinlayCampbell, Lo, & MacKinlay, 1997; Reference Mantegna and StanleyMantegna & Stanley, 2000) to name a few (see Reference Masuda, Porter and LambiotteMasuda, Porter, and Lambiotte [2017] for a review). Branching processes are another major type of stochastic processes that have been applied to describe, for example, information spread (Reference Eugster, Guerraoui, Kermarrec and MassouliéEugster et al., 2004; Reference Gleeson, Onaga and FennellGleeson et al., 2021), spread of infectious diseases (Reference BrittonBritton, 2010; Reference Farrington, Kanaan and GayFarrington, Kanaan, & Gay, 2003), cell proliferation (Reference JagersJagers, 1975), and the abundance of species in a community (Reference McGill, Etienne and GrayMcGill et al., 2007) as well as other ecological dynamics (Reference Black and McKaneBlack & McKane, 2012).

Stochastic processes in which the state of the system changes via discrete events that occur at given points in time are a major class of models for dynamics of complex systems (Reference Andersson and BrittonAndersson & Britton, 2000; Reference Barrat, Barthélemy and VespignaniBarrat, Barthélemy, & Vespignani, 2008; Reference Daley and GaniDaley & Gani, 1999; Reference de Arruda, Rodrigues and Morenode Arruda, Rodrigues, & Moreno, 2018; Reference Kiss, Miller and SimonKiss, Miller, & Simon, 2017a; Reference LiggettLiggett, 2010; Reference Shelton and CiardoShelton & Ciardo, 2014; Reference Singer and SpilermanSinger & Spilerman, 1976; Reference Van MieghemVan Mieghem, 2014). For example, in typical models for infectious disease spread, each infection event occurs at a given time

![]() such that an individual transitions instantaneously from a healthy to an infectious state. Such processes are called Markov jump processes when they satisfy certain independence conditions (Reference HansonHanson, 2007), which we will briefly discuss in Section 2.5. A jump is equivalent to a discrete event. In Markov jump processes, jumps occur according to Poisson processes. In this volume, we focus on how to simulate Markov jump processes. Specifically, we will introduce a set of exact and computationally efficient simulation algorithms collectively known as Gillespie algorithms. In the last technical section of this volume (i.e., Section 5), we will also consider more general, non-Markov, jump processes, in which the events are generated in more complicated manners than by Poisson processes. In the following text, we refer collectively to Markov jump processes and non-Markov jump processes as jump processes.

such that an individual transitions instantaneously from a healthy to an infectious state. Such processes are called Markov jump processes when they satisfy certain independence conditions (Reference HansonHanson, 2007), which we will briefly discuss in Section 2.5. A jump is equivalent to a discrete event. In Markov jump processes, jumps occur according to Poisson processes. In this volume, we focus on how to simulate Markov jump processes. Specifically, we will introduce a set of exact and computationally efficient simulation algorithms collectively known as Gillespie algorithms. In the last technical section of this volume (i.e., Section 5), we will also consider more general, non-Markov, jump processes, in which the events are generated in more complicated manners than by Poisson processes. In the following text, we refer collectively to Markov jump processes and non-Markov jump processes as jump processes.

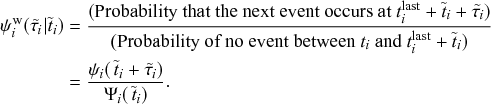

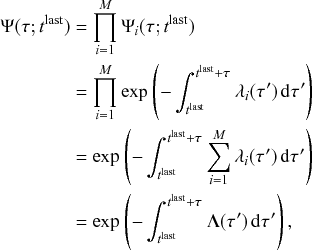

The Gillespie algorithms were originally proposed in their general forms by Daniel Gillespie in 1976 for simulating systems of chemical reactions (Reference GillespieGillespie, 1976), whereas several specialized variants had been proposed earlier; see Section 3.1 for a brief history review. Gillespie proposed two different variants of the simulation algorithm, the direct method, also known as Gillespie’s stochastic simulation algorithm (SSA), or often simply the Gillespie algorithm, and the first reaction method. Both the direct and first reaction methods have found widespread use and in fields far beyond chemical physics. Furthermore, researchers have developed many extensions and improvements of the original Gillespie algorithms to widen the types of processes that we can simulate with them and to improve their computational efficiency.

The Gillespie algorithms are practical algorithms to simulate coupled Poisson processes exactly (i.e., without approximation error). Here “coupled” means that an event that occurs somewhere in the system potentially influences the likelihood of future events’ occurrences in different parts of the same system. For example, when an individual in a population,

![]() , gets infected by a contagious disease, the likelihood that a different healthy individual in the same population,

, gets infected by a contagious disease, the likelihood that a different healthy individual in the same population,

![]() , will get infected in the near future may increase. If interactions were absent, it would suffice to separately consider single Poisson processes, and simulating the system would be straightforward.

, will get infected in the near future may increase. If interactions were absent, it would suffice to separately consider single Poisson processes, and simulating the system would be straightforward.

We believe that the Gillespie algorithms are important tools for students and researchers that study dynamic social systems, where social dynamics are broadly construed and include both human and animal interactions, ecological systems, and even technological systems. While there already exists a large body of references on the Gillespie algorithms and their variants, most are concise, mathematically challenging for beginners, and focused on chemical reaction systems.

Given these considerations, the primary aim of this volume is to provide a detailed tutorial on the Gillespie algorithms, with specific focus on simulating dynamic social systems. We will realize the tutorial in the first part of the Element (Sections 2 and 3). In this part, we assume basic knowledge of calculus and probability. Although we do introduce stochastic processes and explain the Gillespie algorithms and related concepts with much reference to networks, we do not assume prior knowledge of stochastic processes or of networks. To understand the coding section, readers will need basic knowledge of programming. The second part of this Element (Sections 4 and 5) is devoted to a survey of recent advancements of Gillespie algorithms for simulating social dynamics. These advancements are concerned with accelerating simulations and/or increasing the realism of the models to be simulated.

2 Preliminaries

We review in this section mathematical concepts needed to understand the Gillespie algorithms. In Sections 2.1 to 2.3, we introduce the types of models we will be concerned with, namely jump processes, and in particular a simple type of jump process termed Poisson processes. In Sections 2.4 to 2.6, we derive the main mathematical properties of Poisson processes. The concepts and results presented in Sections 2.1 to 2.6 are necessary for understanding Section 3, where we derive the Gillespie algorithms. In Sections 2.7 and 2.8, we review two simple methods for solving the models that predate the Gillespie algorithms and discuss some of their shortcomings. These two final subsections motivate the need for exact simulation algorithms such as the Gillespie algorithms.

2.1 Jump Processes

Before getting into the nitty-gritty of the Gillespie algorithms, we first explore which types of systems they can be used to simulate. First of all, with the Gillespie algorithms, we are interested in simulating a dynamic system. This can be, for example, epidemic dynamics in a population in which the number of infectious individuals varies over time, or the evolution of the number of crimes in a city, which also varies over time in general. Second, the Gillespie algorithms rely on a predefined and parametrized mathematical model for the system to simulate. Therefore, we must have the set of rules for how the system or the individuals in it change their states. Third, Gillespie algorithms simulate stochastic processes, not deterministic systems. In other words, every time one runs the same model starting from the same initial conditions, the results will generally differ. In contrast, in a deterministic dynamical system, if we specify the model and the initial conditions, the behavior of the model will always be the same. Fourth and last, the Gillespie algorithms simulate processes in which changes in the system are primarily driven by discrete events taking place in continuous time. For example, when a chemical reaction obeying the chemical equation A

![]() B

B

![]() C

C

![]() D happens, one unit each of A and of B are consumed, and one unit each of C and of D are produced. This event is discrete in that we can count the event and say when the event happened, but it can happen at any point in time (i.e., time is not discretized but continuous).

D happens, one unit each of A and of B are consumed, and one unit each of C and of D are produced. This event is discrete in that we can count the event and say when the event happened, but it can happen at any point in time (i.e., time is not discretized but continuous).

We refer to the class of mathematical models that satisfy these conditions and may be simulated by a Gillespie algorithm as jump processes. In the remainder of this section, we explore these processes more extensively through motivating examples. Then, we introduce some fundamental mathematical definitions and results that the Gillespie algorithms rely on.

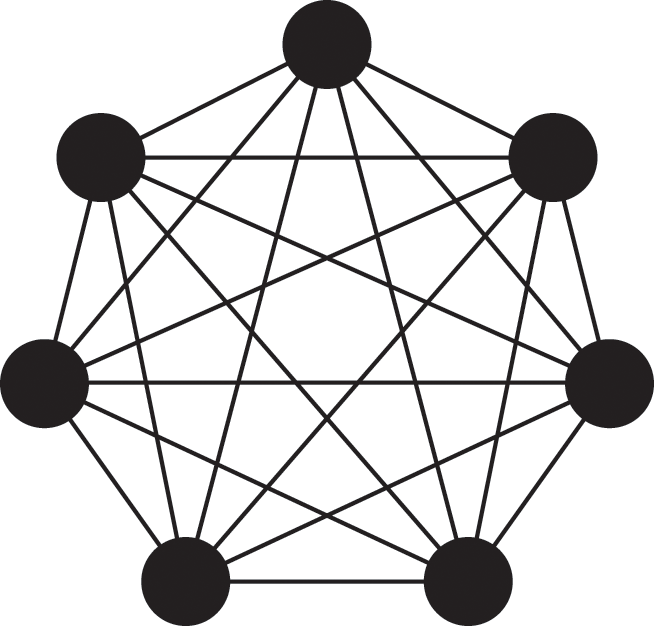

2.2 Representing a Population as a Network

Networks are an extensively used abstraction for representing a structured population, and Gillespie algorithms lend themselves naturally to simulate stochastic dynamical processes taking place in networks. In a network representation, each individual in the population corresponds to a node in the network, and edges are drawn between pairs of individuals that directly interact. What constitutes an interaction generally depends on the context. In particular, for the simulation of dynamic processes in the population, the interaction depends on the nature of the process we wish to simulate. For simulating the spread of an infectious disease, for example, a typical type of relevant interaction is physical proximity between individuals.

Formally, we define a network as a graph

![]() , where

, where

![]() is the set of nodes,

is the set of nodes,

![]() is the set of edges, and each edge

is the set of edges, and each edge

![]() defines a pair of nodes

defines a pair of nodes

![]() that are directly connected. The pairs

that are directly connected. The pairs

![]() may be ordered, in which case edges are directed (by convention from

may be ordered, in which case edges are directed (by convention from

![]() to

to

![]() ), or unordered, in which case edges are undirected (i.e.,

), or unordered, in which case edges are undirected (i.e.,

![]() connects to

connects to

![]() if and only if

if and only if

![]() connects to

connects to

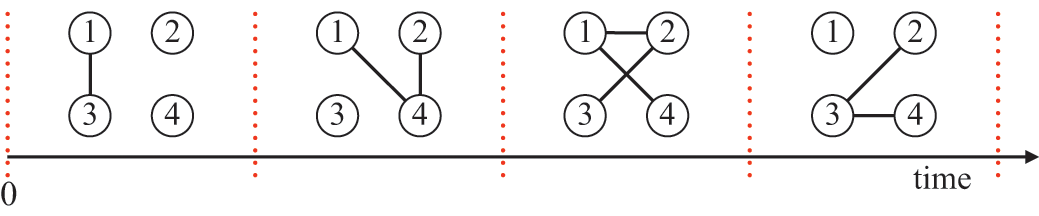

![]() ). We may also add weights to the edges to represent different strengths of interactions, or we may even consider graphs that evolve in time (so-called temporal networks) to account for the dynamics of interactions in a population.

). We may also add weights to the edges to represent different strengths of interactions, or we may even consider graphs that evolve in time (so-called temporal networks) to account for the dynamics of interactions in a population.

We will primarily consider simple (i.e., static, undirected, and unweighted) networks in our examples. However, the Gillespie algorithms apply to simulated jump processes in all kinds of populations and networks. (For temporal networks, we need to extend the classic Gillespie algorithms to cope with the time-varying network structure; see Section 5.4.)

2.3 Example: Stochastic SIR Model in Continuous Time

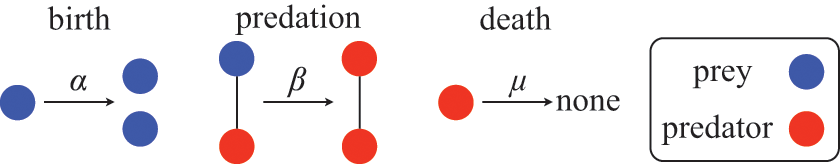

We introduce jump processes and explore their mathematical properties by way of a running example. We show how we can use them to model epidemic dynamics using the stochastic susceptible-infectious-recovered (SIR) model.Footnote 1 For more examples (namely, SIR epidemic dynamics in metapopulation networks, the voter model, and the Lotka–Volterra model for predator–prey dynamics), see Section 3.4.

We examine a stochastic version of the SIR model in continuous time defined as follows. We consider a constant population of

![]() individuals (nodes). At any time, each individual is in one of three states: susceptible (denoted by

individuals (nodes). At any time, each individual is in one of three states: susceptible (denoted by

![]() ; meaning healthy), infectious (denoted by

; meaning healthy), infectious (denoted by

![]() ), or recovered (denoted by

), or recovered (denoted by

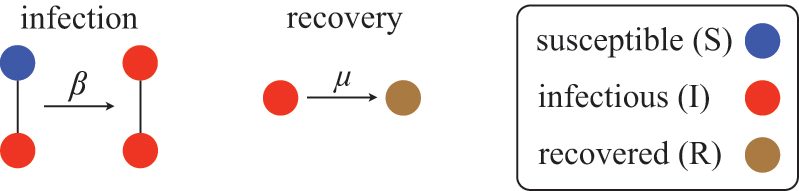

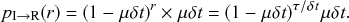

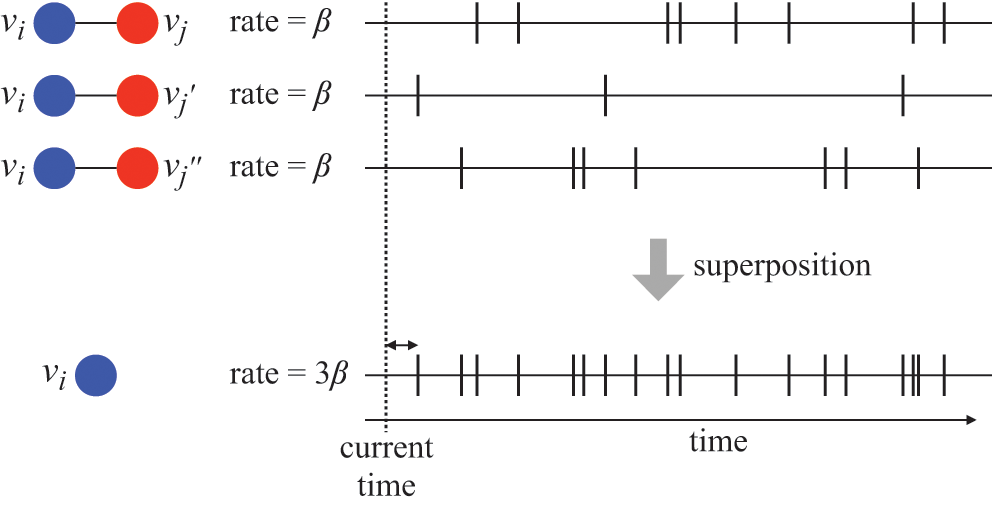

![]() ). The rules governing how individuals change their states are shown schematically in Fig. 1. An infectious individual that is in contact with a susceptible individual infects the susceptible individual in a stochastic manner with a constant infection rate

). The rules governing how individuals change their states are shown schematically in Fig. 1. An infectious individual that is in contact with a susceptible individual infects the susceptible individual in a stochastic manner with a constant infection rate

![]() . Independently of the infection events, an infectious individual may recover at any point in time, with a constant recovery rate

. Independently of the infection events, an infectious individual may recover at any point in time, with a constant recovery rate

![]() . If an infection event occurs, the susceptible individual that has been infected changes its state to I. If an infectious individual recovers, it transits from the I state to the R state. Nobody leaves or joins the population over the course of the dynamics. After reaching the R state, an individual cannot be reinfected or infect others again. Therefore, R individuals do not influence the increase or decrease in the number of S or I individuals. Because R individuals are as if they no longer exist in the system, the R state is mathematically equivalent to having died of the infection; once dead, an individual will not be reinfected or infect others.

. If an infection event occurs, the susceptible individual that has been infected changes its state to I. If an infectious individual recovers, it transits from the I state to the R state. Nobody leaves or joins the population over the course of the dynamics. After reaching the R state, an individual cannot be reinfected or infect others again. Therefore, R individuals do not influence the increase or decrease in the number of S or I individuals. Because R individuals are as if they no longer exist in the system, the R state is mathematically equivalent to having died of the infection; once dead, an individual will not be reinfected or infect others.

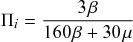

Figure 1 Rules of state changes in the SIR model. An infectious individual infects a susceptible neighbor at a rate

![]() . Each infectious individual recovers at a rate

. Each infectious individual recovers at a rate

![]() .

.

We typically start the stochastic SIR dynamics with a single infectious individual, which we refer to as the source or seed, and

![]() susceptible individuals (and thus no recovered individuals). Then, various infection and recovery events may occur. The dynamics stop when no infectious individuals are left. In this final situation, the population is composed entirely of susceptible and/or recovered individuals. Since both infection and recovery involve an infectious individual, and there are no infectious individuals left, the dynamics are stuck. The final number of recovered nodes, denoted by

susceptible individuals (and thus no recovered individuals). Then, various infection and recovery events may occur. The dynamics stop when no infectious individuals are left. In this final situation, the population is composed entirely of susceptible and/or recovered individuals. Since both infection and recovery involve an infectious individual, and there are no infectious individuals left, the dynamics are stuck. The final number of recovered nodes, denoted by

![]() , is called the epidemic size, also known as the final epidemic size or simply the final size.Footnote 2 The epidemic size tends to increase as the infection rate

, is called the epidemic size, also known as the final epidemic size or simply the final size.Footnote 2 The epidemic size tends to increase as the infection rate

![]() increases or as the recovery rate

increases or as the recovery rate

![]() decreases. Many other measures to quantify the behavior of the SIR model exist (Reference Pastor-Satorras, Castellano, Van Mieghem and VespignaniPastor-Satorras et al., 2015). For example, we may be interested in the time until the dynamics terminate or in the speed at which the number of infectious individuals grows in the initial stage of the dynamics.

decreases. Many other measures to quantify the behavior of the SIR model exist (Reference Pastor-Satorras, Castellano, Van Mieghem and VespignaniPastor-Satorras et al., 2015). For example, we may be interested in the time until the dynamics terminate or in the speed at which the number of infectious individuals grows in the initial stage of the dynamics.

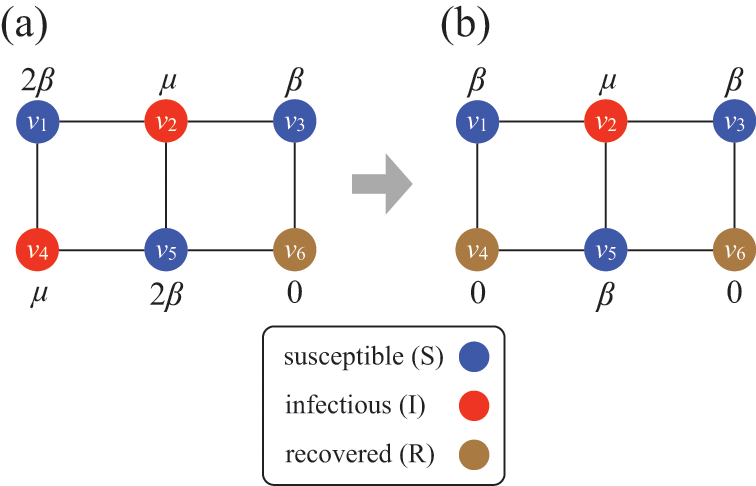

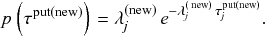

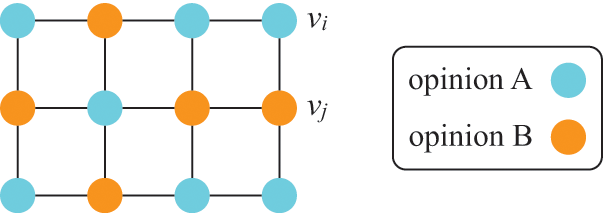

Consider Fig. 2(a), where individuals are connected as a network. We generally assume that infection may only occur between pairs of individuals that are directly connected by an edge (called adjacent nodes). For example, the node

![]() can infect

can infect

![]() and

and

![]() but not

but not

![]() . The network version of the SIR model is fully described by the infection rate

. The network version of the SIR model is fully described by the infection rate

![]() , the recovery rate

, the recovery rate

![]() , the network structure, that is, which node pairs are connected by an edge, and the choice of source node to initialize the dynamics.

, the network structure, that is, which node pairs are connected by an edge, and the choice of source node to initialize the dynamics.

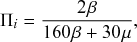

Figure 2 Stochastic SIR process on a square-grid network with six nodes. (a) Status of the network at an arbitrary time

![]() . (b) Status of the network after

. (b) Status of the network after

![]() has recovered. The values attached to the nodes indicate the rates of the events that the nodes may experience next.

has recovered. The values attached to the nodes indicate the rates of the events that the nodes may experience next.

Mathematically, we describe the system by a set of coupled, constant-rate jump processes; constant-rate jump processes are known as Poisson processes (Box 1). Each possible event that may happen is associated to a Poisson process, that is, the recovery of each infectious individual is described by a Poisson process, and so is each pair of infectious and susceptible individuals where the former may infect the latter. The Poisson processes are coupled because an event generated by one process may alter the other processes by changing their rates, generating new Poisson processes, or making existing ones disappear. For example, after a node gets infected, it may in turn infect any of its susceptible neighbors, which we represent mathematically by adding new Poisson processes. This coupling implies that the set of coupled Poisson processes generally constitutes a process that is more complicated than a single Poisson process.

In the following subsections we develop the main mathematical properties of Poisson processes and of sets of Poisson processes. We will rely on these properties in Section 3 to construct the Gillespie algorithms that can simulate systems of coupled Poisson processes exactly. Note that the restriction to Poisson (i.e., constant-rate) processes is essential for the classic Gillespie algorithms to work; see Section 5 for recent extensions to the simulation of non-Poissonian processes.

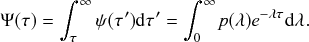

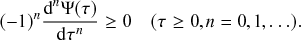

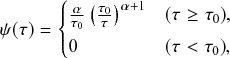

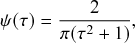

A Poisson process is a jump process that generates events with a constant rate,

![]() .

.

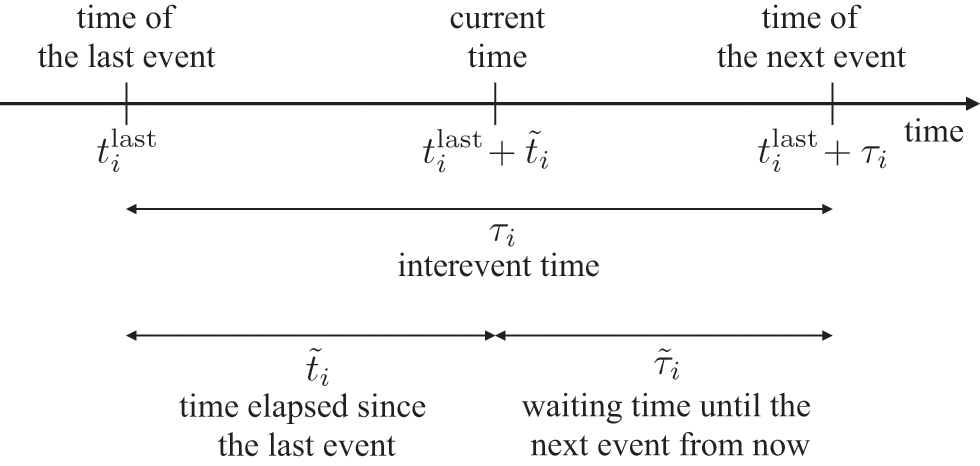

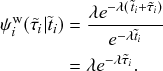

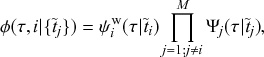

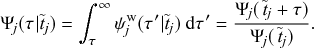

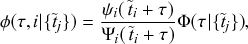

Waiting-Time Distribution

The waiting times

![]() between consecutive events generated by a Poisson process are exponentially distributed. In other words,

between consecutive events generated by a Poisson process are exponentially distributed. In other words,

![]() obeys the probability density

obeys the probability density

(2.1)

(2.1)

Memoryless Property

The waiting time left until a Poisson process generates an event given that a time

![]() has already elapsed since the last event is independent of

has already elapsed since the last event is independent of

![]() . This property is called the memoryless property of Poisson processes and is shown as follows:

. This property is called the memoryless property of Poisson processes and is shown as follows:

(2.2)

(2.2)

where

![]() represents the conditional probability density that the next event occurs a time

represents the conditional probability density that the next event occurs a time

![]() after the last event given that time

after the last event given that time

![]() has already elapsed;

has already elapsed;

![]() is called the survival probability and is the probability that no event takes place for a time

is called the survival probability and is the probability that no event takes place for a time

![]() . The first equality in Eq. (2.2) follows from the definition of the conditional probability. The second equality follows from Eq. (2.1).

. The first equality in Eq. (2.2) follows from the definition of the conditional probability. The second equality follows from Eq. (2.1).

Superposition Theorem

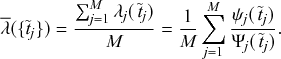

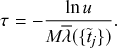

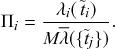

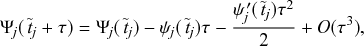

Consider a set of Poisson processes indexed by

![]() . The superposition of the processes is a jump process that generates an event whenever any of the individual processes does. It is another Poisson process whose rate is given by

. The superposition of the processes is a jump process that generates an event whenever any of the individual processes does. It is another Poisson process whose rate is given by

(2.3)

(2.3)

where

![]() is the rate of the

is the rate of the

![]() th Poisson process.

th Poisson process.

Probability of a Given Process Generating an Event in a Superposition of Poisson Processes

Consider any given event generated by a superposition of Poisson processes. The probability

![]() that the

that the

![]() th individual Poisson process has generated this event is proportional to the rate of the

th individual Poisson process has generated this event is proportional to the rate of the

![]() th process. In other words,

th process. In other words,

(2.4)

(2.4)

2.4 Waiting-Time Distribution for a Poisson Process

We derive in this subsection the waiting-time distribution for a Poisson process, which characterizes how long one has to wait for the process to generate an event. It is often easiest to start from a discrete-time description when exploring properties of a continuous-time stochastic process. Therefore, we will follow this approach here. We use the recovery of a single node in the SIR model as an example in our development.

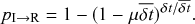

Let us partition time into short intervals of length

![]() . As

. As

![]() goes to zero, this becomes an exact description of the continuous-time process. An infectious individual recovers with probability

goes to zero, this becomes an exact description of the continuous-time process. An infectious individual recovers with probability

![]() after each interval given that it has not recovered before.Footnote 3

after each interval given that it has not recovered before.Footnote 3

Formally, we define the SIR process in the limit

![]() . Then, you might worry that the recovery event is unlikely to ever take place because the probability with which it happens during each time-step, that is,

. Then, you might worry that the recovery event is unlikely to ever take place because the probability with which it happens during each time-step, that is,

![]() , goes toward 0 when the step size

, goes toward 0 when the step size

![]() does so. However, this is not the case; because the number of time-steps in any given finite interval grows inversely proportional to

does so. However, this is not the case; because the number of time-steps in any given finite interval grows inversely proportional to

![]() , the probability to recover in finite time stays finite. For example, if we use a different step size

, the probability to recover in finite time stays finite. For example, if we use a different step size

![]() , which is ten times smaller than the original

, which is ten times smaller than the original

![]() , then the probability of recovery within the short duration of time

, then the probability of recovery within the short duration of time

![]() is indeed 10 times smaller than

is indeed 10 times smaller than

![]() (i.e.,

(i.e.,

![]() ). However, there are

). However, there are

![]() windows of size

windows of size

![]() in one time window of size

in one time window of size

![]() . So, we now have 10 chances for recovery to happen instead of only one chance. The probability for recovery to occur in any of these 10 time windows is equal to one minus the probability that it does not occur. The probability that the individual does not recover in time

. So, we now have 10 chances for recovery to happen instead of only one chance. The probability for recovery to occur in any of these 10 time windows is equal to one minus the probability that it does not occur. The probability that the individual does not recover in time

![]() is equal to

is equal to

![]() . Therefore, the probability that the individual recovers in any of the

. Therefore, the probability that the individual recovers in any of the

![]() windows is

windows is

(2.5)

(2.5)

Equation (2.5) does not vanish as we make

![]() small. In fact, the Taylor expansion of Eq. (2.5) in terms of

small. In fact, the Taylor expansion of Eq. (2.5) in terms of

![]() yields

yields

![]() , where

, where

![]() represents “approximately equal to”. Therefore, to leading order, the recovery probabilities are the same between the case of a single time window of size

represents “approximately equal to”. Therefore, to leading order, the recovery probabilities are the same between the case of a single time window of size

![]() and the case of

and the case of

![]() time windows of size

time windows of size

![]() .

.

In the limit

![]() , the recovery event may happen at any continuous point in time. We denote by

, the recovery event may happen at any continuous point in time. We denote by

![]() the waiting time from the present time until the time of the recovery event. We want to determine the probability density function (probability density or pdf for short) of

the waiting time from the present time until the time of the recovery event. We want to determine the probability density function (probability density or pdf for short) of

![]() , which we denote by

, which we denote by

![]() . By definition,

. By definition,

![]() is equal to the probability that the recovery event happens in the interval

is equal to the probability that the recovery event happens in the interval

![]() for an infinitesimal

for an infinitesimal

![]() (i.e., for

(i.e., for

![]() ). To calculate

). To calculate

![]() , we note that the probability that the event occurs after

, we note that the probability that the event occurs after

![]() time windows, denoted by

time windows, denoted by

![]() , is equal to the probability that it did not occur during the first

, is equal to the probability that it did not occur during the first

![]() time windows and then occurs in the

time windows and then occurs in the

![]() th window. This probability is equal to

th window. This probability is equal to

(2.6)

(2.6)

The first factor on the right-hand side of Eq. (2.6) is the probability that the event has not happened before the

![]() th window; it is simply equal to the probability that the event has not happened during a single window, raised to the power of

th window; it is simply equal to the probability that the event has not happened during a single window, raised to the power of

![]() . The second factor is the probability that the event happens in the

. The second factor is the probability that the event happens in the

![]() th window. By applying the identity

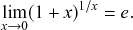

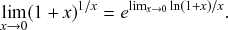

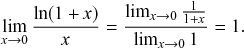

th window. By applying the identity

, known from calculus (see Appendix), with

, known from calculus (see Appendix), with

![]() to Eq. (2.6), we obtain the pdf of the waiting time as follows:

to Eq. (2.6), we obtain the pdf of the waiting time as follows:

(2.7)

(2.7)

Equation (2.7) shows the intricate connection between the Poisson process and the exponential distribution: the waiting time of a Poisson process with rate

![]() (here, specifically the recovery rate) follows an exponential distribution with rate

(here, specifically the recovery rate) follows an exponential distribution with rate

![]() (Box 1). This fact implies that the mean time we have to wait for the recovery event to happen is

(Box 1). This fact implies that the mean time we have to wait for the recovery event to happen is

![]() . The exponential waiting-time distribution actually completely characterizes the Poisson process. In other words, the Poisson process is the only jump process that generates events separated by waiting times that follow a fixed exponential distribution.

. The exponential waiting-time distribution actually completely characterizes the Poisson process. In other words, the Poisson process is the only jump process that generates events separated by waiting times that follow a fixed exponential distribution.

If we consider the infection process between a pair of S and I nodes in complete isolation from the other infection and recovery processes in the population, then exactly the same argument (Eq. (2.7)) holds true. In other words, the time until infection takes place between the two nodes is exponentially distributed with rate

![]() , that is,

, that is,

(2.8)

(2.8)

However, in practice the infection process is more complicated than the recovery process because it is coupled to other processes. Specifically, if another process generates an event before the infection process does, then Eq. (2.8) may no longer hold true for the infection process in question. For example, consider a node

![]() that is currently susceptible and an adjacent node

that is currently susceptible and an adjacent node

![]() that is infectious, as in Fig. 2. For this pair of nodes, two events are possible:

that is infectious, as in Fig. 2. For this pair of nodes, two events are possible:

![]() may infect

may infect

![]() , or

, or

![]() may recover. As long as neither of the events has yet taken place, either of the two corresponding Poisson processes may generate an event at any point in time, following Eqs. (2.8) and (2.7), respectively. However, if

may recover. As long as neither of the events has yet taken place, either of the two corresponding Poisson processes may generate an event at any point in time, following Eqs. (2.8) and (2.7), respectively. However, if

![]() recovers before it infects

recovers before it infects

![]() , then the infection event is no longer possible, and so Eq. (2.8) no longer holds. We explore in the following two subsections how to mathematically deal with this coupling.

, then the infection event is no longer possible, and so Eq. (2.8) no longer holds. We explore in the following two subsections how to mathematically deal with this coupling.

2.5 Independence and Interdependence of Jump Processes

Most models based on jump processes and most simulation methods, including the Gillespie algorithms, implicitly assume that different concurrent jump processes are independent of each other in the sense that the internal state of one process does not influence another. This notion of independence may be a source of confusion because a given process may depend on the events generated earlier by other processes, that is, the processes may be coupled, as we saw is the case for the infection processes in the SIR model. In this section, we sort out the notions of independence and coupling and what they mean for the types of jump processes we want to simulate. We will also explore another type of independence of Poisson processes, which is their independence of the past, called the memoryless property.

We can state the independence assumption as the condition that different processes are only allowed to influence each other by changing the state of the system. In other words, at any point in time each process generates an event at a rate that is independent of all other processes given the current state of the system, that is, the processes are conditionally independent. For example, the rate at which

![]() infects

infects

![]() in Fig. 2(a) depends on

in Fig. 2(a) depends on

![]() being infectious and

being infectious and

![]() being susceptible (corresponding to the system’s current state). However, it does not depend on any internal state of

being susceptible (corresponding to the system’s current state). However, it does not depend on any internal state of

![]() ’s recovery process such as the time left until

’s recovery process such as the time left until

![]() recovers. Given the states of all nodes, the two processes are independent. Poisson processes are always conditionally independent in this sense. The conditional independence property follows directly from the fact that Poisson processes have constant rates by definition and thus are not influenced by other processes. The conditional independence is essential for the Gillespie algorithms to work. Even the recent extensions of the Gillespie algorithms to simulate non-Poissonian processes, which we review in Section 5, rely on an assumption of conditional independence between the jump processes.

recovers. Given the states of all nodes, the two processes are independent. Poisson processes are always conditionally independent in this sense. The conditional independence property follows directly from the fact that Poisson processes have constant rates by definition and thus are not influenced by other processes. The conditional independence is essential for the Gillespie algorithms to work. Even the recent extensions of the Gillespie algorithms to simulate non-Poissonian processes, which we review in Section 5, rely on an assumption of conditional independence between the jump processes.

We underline that the assumption of conditional independence does not imply that the different jump processes are not coupled with each other. Such uncoupled processes would indeed be boring. If the jump processes constituting a given system were all uncoupled, then they would not be able to generate any collective dynamics. On the technical side, there would in this case be no reason to consider the set of processes as one system. It would suffice to analyze each process separately. We would in particular have no need for the specialized machinery of the Gillespie algorithms since we could simply simulate each process by sampling waiting times from the corresponding exponential distribution (Box 1, Eq. (2.1)).

In fact, the conditional independence assumption allows different processes to be coupled, as long as they only do so by changing the physical state of the system. This is a natural constraint in many systems. For example, in chemical reaction systems, the processes (that is, chemical reactions) are coupled through discrete reaction events that use molecules of some chemical species to generate others. Similarly, in the SIR model different processes influence each other by changing the state of the nodes, that is, from S to I in an infection event or from I to R in a recovery event. In the example shown in Fig. 2, when node

![]() recovers, it decreases the probability that its neighboring susceptible node

recovers, it decreases the probability that its neighboring susceptible node

![]() gets infected within a certain time horizon compared with the scenario where

gets infected within a certain time horizon compared with the scenario where

![]() remains infectious. As this example suggests, the probability that a susceptible node gets infected depends on the past states of its neighbors. Therefore, over the course of the entire simulation, the dynamics of a node’s state (e.g.,

remains infectious. As this example suggests, the probability that a susceptible node gets infected depends on the past states of its neighbors. Therefore, over the course of the entire simulation, the dynamics of a node’s state (e.g.,

![]() ) are dependent on those of its neighbors (e.g.,

) are dependent on those of its neighbors (e.g.,

![]() and

and

![]() ).

).

Because of the coupling between jump processes, which is present in most systems of interest, we cannot simply simulate the system by separately generating the waiting times for each process according to Eq. (2.1). Any event that occurs will alter the processes to which it is coupled, thus rendering the waiting times we drew for the affected processes invalid. What the Gillespie algorithms do instead is to successively generate the waiting time until the next event, update the state of the system, and reiterate.

Besides being conditionally independent of each other, Poisson processes also display a temporal independence property, the so-called memoryless property (Box 1). In Poisson processes, the probability of the time to the next event,

![]() , is independent of how long we have already waited since the last event. In this sense, we do not need to worry about what has happened in the past. The only things that matter are the present status of the population (such as

, is independent of how long we have already waited since the last event. In this sense, we do not need to worry about what has happened in the past. The only things that matter are the present status of the population (such as

![]() is susceptible and

is susceptible and

![]() is infectious right now) and the model parameters (such as

is infectious right now) and the model parameters (such as

![]() and

and

![]() ). The memoryless property can be seen as a direct consequence of the exponential distribution of waiting times of Poisson processes (Box 1, Eq.(2.2)).

). The memoryless property can be seen as a direct consequence of the exponential distribution of waiting times of Poisson processes (Box 1, Eq.(2.2)).

2.6 Superposition of Poisson Processes

In this section, we explain a remarkable property of Poisson processes called the superposition theorem. The direct method exploits this theorem. Other methods, such as the rejection sampling algorithm (see Section 2.8) and the first reaction method, can also benefit from the superposition theorem to accelerate the simulations without impacting their accuracy.

Consider a susceptible individual

![]() in the SIR model that is in contact with

in the SIR model that is in contact with

![]() infectious individuals. Any of the

infectious individuals. Any of the

![]() infectious individuals may infect

infectious individuals may infect

![]() . Consider the case shown in Fig. 3(a), where

. Consider the case shown in Fig. 3(a), where

![]() . If we focus on a single edge connecting

. If we focus on a single edge connecting

![]() to one of its neighbors and ignore the other neighbors, the probability that

to one of its neighbors and ignore the other neighbors, the probability that

![]() is infected via this edge exactly in time

is infected via this edge exactly in time

![]() from now, where

from now, where

![]() is small, is given by

is small, is given by

![]() (see Eq. (2.8)). Each of

(see Eq. (2.8)). Each of

![]() ’s

’s

![]() neighbors may infect

neighbors may infect

![]() in the same manner and independently. The neighbor that does infect

in the same manner and independently. The neighbor that does infect

![]() is the one for which the corresponding waiting time is the shortest, provided that it does not recover before it infects

is the one for which the corresponding waiting time is the shortest, provided that it does not recover before it infects

![]() . From this we can intuitively see that the larger

. From this we can intuitively see that the larger

![]() is, the shorter the waiting time before

is, the shorter the waiting time before

![]() gets infected tends to be. To simulate the dynamics of this small system, we need to know, not when each of its neighbors would infect

gets infected tends to be. To simulate the dynamics of this small system, we need to know, not when each of its neighbors would infect

![]() , but rather the time until any of its neighbors infects

, but rather the time until any of its neighbors infects

![]() .

.

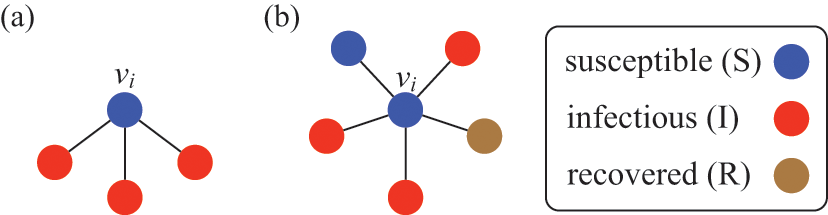

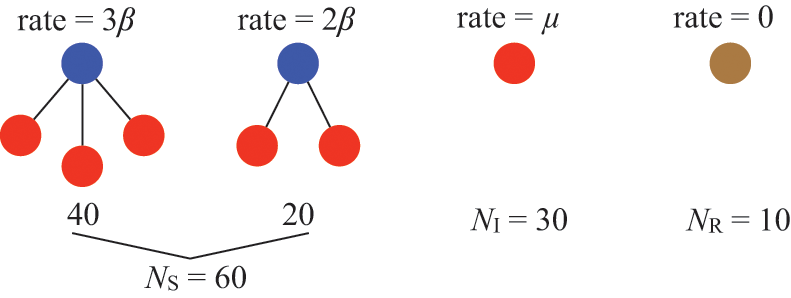

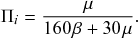

Figure 3 A susceptible node and other nodes surrounding it. (a) A susceptible node

![]() surrounded by three infectious nodes. (b) A susceptible node

surrounded by three infectious nodes. (b) A susceptible node

![]() surrounded by five nodes in different states.

surrounded by five nodes in different states.

To calculate the waiting-time distribution for the infection of

![]() by any of its neighbors, we again resort to the discrete-time view of the infection processes. Because the infection processes are independent, the probability that

by any of its neighbors, we again resort to the discrete-time view of the infection processes. Because the infection processes are independent, the probability that

![]() is not infected by any of its

is not infected by any of its

![]() infectious neighbors in a time window of duration

infectious neighbors in a time window of duration

![]() is given by

is given by

(2.9)

(2.9)

Therefore, the probability that

![]() is infected after a time

is infected after a time

![]() (i.e.,

(i.e.,

![]() gets infected exactly in the

gets infected exactly in the

![]() th time window of length

th time window of length

![]() and not before) is given by

and not before) is given by

(2.10)

(2.10)

Here, the factor

![]() is the survival probability that an infection does not happen for a time

is the survival probability that an infection does not happen for a time

![]() . The factor

. The factor

![]() is the probability that any of

is the probability that any of

![]() ’s infectious neighbors infects

’s infectious neighbors infects

![]() in the next time window,

in the next time window,

![]() .

.

Using the exponential identity

![]() with

with

![]() as we did in Section 2.4, we obtain in the continuous-time limit that

as we did in Section 2.4, we obtain in the continuous-time limit that

(2.11)

(2.11)

where the first equality is obtained by noting that

![]() and rearranging the terms. In the same limit of

and rearranging the terms. In the same limit of

![]() , we obtain from Taylor expansion that

, we obtain from Taylor expansion that

(2.12)

(2.12)

By combining Eqs. (2.10), (2.11), and (2.12), we obtain

![]() . Therefore, the probability density with which

. Therefore, the probability density with which

![]() gets infected at time

gets infected at time

![]() is given by

is given by

(2.13)

(2.13)

that is, the exponential distribution with rate parameter

![]() . By comparing Eqs. (2.8) and (2.13), we see that the effect of having

. By comparing Eqs. (2.8) and (2.13), we see that the effect of having

![]() infectious neighbors (see Fig. 3(a) for the case of

infectious neighbors (see Fig. 3(a) for the case of

![]() ) is the same as that of having just one infectious neighbor with an infection rate of

) is the same as that of having just one infectious neighbor with an infection rate of

![]() .

.

This is a convenient property of Poisson processes, known as the superposition theorem (see Box 1, Eq. (2.3) for the general theorem). To calculate how likely it is that a susceptible node

![]() will be infected in time

will be infected in time

![]() , one does not need to examine when the infection would happen or whether the infection happens for each of the infectious individuals contacting

, one does not need to examine when the infection would happen or whether the infection happens for each of the infectious individuals contacting

![]() . We are allowed to agglomerate all those effects into one infectious supernode as if the supernode infects

. We are allowed to agglomerate all those effects into one infectious supernode as if the supernode infects

![]() with rate

with rate

![]() . We refer to such a superposed Poisson process that induces a particular state transition in the system (in the present case, the transition from the S to the I state for

. We refer to such a superposed Poisson process that induces a particular state transition in the system (in the present case, the transition from the S to the I state for

![]() ) as a reaction channel, following the nomenclature in chemical reaction systems.

) as a reaction channel, following the nomenclature in chemical reaction systems.

This interpretation remains valid even if

![]() is adjacent to other irrelevant individuals. In the network shown in Fig. 3(b), the susceptible node

is adjacent to other irrelevant individuals. In the network shown in Fig. 3(b), the susceptible node

![]() has degree (i.e., number of other nodes that are connected to

has degree (i.e., number of other nodes that are connected to

![]() by an edge)

by an edge)

![]() . Three neighbors of

. Three neighbors of

![]() are infectious, one is susceptible, and one is recovered. In this case,

are infectious, one is susceptible, and one is recovered. In this case,

![]() will be infected at a rate of

will be infected at a rate of

![]() , the same as in the case of

, the same as in the case of

![]() in the network shown in Fig. 3(a).

in the network shown in Fig. 3(a).

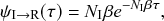

In both cases, we are replacing three instances of the probability density of the time to the next infection event, each given by

![]() , by a single probability density

, by a single probability density

![]() . Representing the three infectious nodes by one infectious supernode, that is, one reaction channel, with three times the infection rate is equivalent to superposing the three Poisson processes into one. Figure 4 illustrates this superposition, showing the putative event times generated by each Poisson process as well as those generated by their superposition. The superposition theorem dictates that the superposition is a Poisson process with a rate of

. Representing the three infectious nodes by one infectious supernode, that is, one reaction channel, with three times the infection rate is equivalent to superposing the three Poisson processes into one. Figure 4 illustrates this superposition, showing the putative event times generated by each Poisson process as well as those generated by their superposition. The superposition theorem dictates that the superposition is a Poisson process with a rate of

![]() . This in particular means that we can draw the waiting time

. This in particular means that we can draw the waiting time

![]() until the first of the events generated by all the three Poisson processes happens (shown by the double-headed arrow in Fig. 4) directly from the exponential distribution

until the first of the events generated by all the three Poisson processes happens (shown by the double-headed arrow in Fig. 4) directly from the exponential distribution

![]() . Note that Poisson processes are defined as generating events indefinitely, and for illustrative purposes we show multiple events in Figure 4. However, in the SIR model only the first event in the superposed process will take place in practice. For example, once the event changes the state of

. Note that Poisson processes are defined as generating events indefinitely, and for illustrative purposes we show multiple events in Figure 4. However, in the SIR model only the first event in the superposed process will take place in practice. For example, once the event changes the state of

![]() from S to I, the node cannot be infected anymore, and therefore none of the three infection processes can generate any more events.

from S to I, the node cannot be infected anymore, and therefore none of the three infection processes can generate any more events.

Figure 4 Superposition of three Poisson processes. The event sequence in the bottom is the superposition of the three event sequences corresponding to each of the three edges connecting

![]() to its neighbors

to its neighbors

![]() ,

,

![]() , and

, and

![]() . The superposed event sequence generates an event whenever one of the three individual processes does. Note that edges (

. The superposed event sequence generates an event whenever one of the three individual processes does. Note that edges (

![]() ,

,

![]() ), (

), (

![]() ,

,

![]() ), and (

), and (

![]() ,

,

![]() ) generally carry different numbers of events in a given time window despite the rate of the processes (i.e., the infection rate,

) generally carry different numbers of events in a given time window despite the rate of the processes (i.e., the infection rate,

![]() ) being the same. This is due to the stochastic nature of Poisson processes.

) being the same. This is due to the stochastic nature of Poisson processes.

Let us consider again the snapshot of the SIR dynamics shown in Fig. 3(a), but this time we consider all the possible infection and recovery events. We can represent all the possible events that may occur by four reaction channels (i.e., Poisson processes). One channel represents the infection of the node

![]() by any of its neighbors, which happens at a rate

by any of its neighbors, which happens at a rate

![]() . We refer to this reaction channel as the first reaction channel. The three other channels each represent the recovery process of one of the infectious nodes. We refer to these three reaction channels as the second to the fourth reaction channels. We can use the same approach as above to obtain the probability density for the waiting time until the first event generated by any of the channels. However, to completely describe the dynamics, it is not sufficient to know when the next event happens. We also need to know which channel generates the event. Precisely speaking, we need to know the probability

. We refer to this reaction channel as the first reaction channel. The three other channels each represent the recovery process of one of the infectious nodes. We refer to these three reaction channels as the second to the fourth reaction channels. We can use the same approach as above to obtain the probability density for the waiting time until the first event generated by any of the channels. However, to completely describe the dynamics, it is not sufficient to know when the next event happens. We also need to know which channel generates the event. Precisely speaking, we need to know the probability

![]() that it is the

that it is the

![]() th reaction channel that generates the event. Using the definition of conditional probability, we obtain

th reaction channel that generates the event. Using the definition of conditional probability, we obtain

(2.14)

(2.14)

In a discrete-time description, the numerator in Eq. (2.14) is simply

![]() , where

, where

![]() and

and

![]() are the rates of the reaction channels. The denominator is equal to

are the rates of the reaction channels. The denominator is equal to

![]() , which in the limit of small

, which in the limit of small

![]() can be Taylor expanded to

can be Taylor expanded to

![]() . Thus, the probability that the

. Thus, the probability that the

![]() th reaction channel has generated an event that has taken place is

th reaction channel has generated an event that has taken place is

(2.15)

(2.15)

that is,

![]() is simply proportional to the rate

is simply proportional to the rate

![]() .

.

The same result holds true for general superpositions of Poisson processes (see Box 1).

2.7 Ignoring Stochasticity: Differential Equation Approach

We have introduced the types of models we are interested in and have explored their basic mathematical properties. We now turn our attention to the problem of how we can solve such models in practice. We consider again the SIR model. One simple strategy to solve it is to forget about the true stochastic nature of infection and recovery and approximate the processes as being deterministic. In this approach, we only track the dynamics of the mean numbers of susceptible, infectious, and recovered individuals. Such deterministic dynamics are described by a system of ordinary differential equations (ODEs). The ODE version of the SIR model has a longer history than the stochastic one, dating back to the seminal work by William Ogilvy Kermack and Anderson Gray McKendrick in the 1920s (Reference Kermack and McKendrickKermack & McKendrick, 1927). For the basic SIR model described previously, the corresponding ODEs are given by

(2.16)

(2.16)

(2.17)

(2.17)

(2.18)

(2.18)

where

![]() ,

,

![]() , and

, and

![]() are the fractions of S, I, and R individuals, respectively. The

are the fractions of S, I, and R individuals, respectively. The

![]() terms in Eqs. (2.16) and (2.17) represent infection events, through which the number of S individuals decreases and the number of I individuals increases by the same amount. The

terms in Eqs. (2.16) and (2.17) represent infection events, through which the number of S individuals decreases and the number of I individuals increases by the same amount. The

![]() terms in Eqs. (2.17) and (2.18) represent recovery events.

terms in Eqs. (2.17) and (2.18) represent recovery events.

One can solve Eqs. (2.16), (2.17), and (2.18) either analytically, to some extent, or numerically using an ODE solver implemented in various programming languages. Suppose that we have coded up Eqs. (2.16), (2.17), and (2.18) into an ODE solver to simulate the infection dynamics (such as time courses of

![]() ) for various values of

) for various values of

![]() and

and

![]() . Does the result give us complete understanding of the original stochastic SIR model? The answer is negative (Reference Mollison, Isham and GrenfellMollison, Isham, & Grenfell, 1994), at least for the following reasons.

. Does the result give us complete understanding of the original stochastic SIR model? The answer is negative (Reference Mollison, Isham and GrenfellMollison, Isham, & Grenfell, 1994), at least for the following reasons.

First, the ODE is not a good approximation when

![]() is small. In Eqs. (2.16), (2.17), and (2.18), the variables are the fraction of individuals in each state. For example,

is small. In Eqs. (2.16), (2.17), and (2.18), the variables are the fraction of individuals in each state. For example,

![]() . The ODE description assumes that

. The ODE description assumes that

![]() can take any real value between 0 and 1 and that

can take any real value between 0 and 1 and that

![]() changes continuously as time goes by. However, in reality

changes continuously as time goes by. However, in reality

![]() is quantized, so it can take only the values

is quantized, so it can take only the values

![]() ,

,

![]() ,

,

![]() ,

,

![]()

![]() , and

, and

![]() , and it changes in steps of

, and it changes in steps of

![]() (e.g., it changes from

(e.g., it changes from

![]() to

to

![]() discontinuously). This discrete nature does not typically cause serious problems when

discontinuously). This discrete nature does not typically cause serious problems when

![]() is large, in which case

is large, in which case

![]() is close to being continuous. By contrast, the ODE model is not accurate when

is close to being continuous. By contrast, the ODE model is not accurate when

![]() is small due to the quantization effect. (Note that the ODE approach is problematic in some cases even when

is small due to the quantization effect. (Note that the ODE approach is problematic in some cases even when

![]() is large, i.e. near critical points, as we discuss in what follows.)

is large, i.e. near critical points, as we discuss in what follows.)

Second, even if

![]() is large, the actual dynamic changes in

is large, the actual dynamic changes in

![]() , for example, are not close to what the ODEs describe when the number of infectious individuals is small. For example, if

, for example, are not close to what the ODEs describe when the number of infectious individuals is small. For example, if

![]() , there are two infectious individuals. If one of them recovers,

, there are two infectious individuals. If one of them recovers,

![]() changes to

changes to

![]() , and this is a 50 percent decrease in

, and this is a 50 percent decrease in

![]() . The ODE assumes that

. The ODE assumes that

![]() changes continuously and is not ready to describe such a change. As another example, suppose that we initially set

changes continuously and is not ready to describe such a change. As another example, suppose that we initially set

![]() ,

,

![]() , and

, and

![]() . In other words, there is a single infectious seed, and all the other individuals are initially susceptible. In fact, the theory of the ODE version of the SIR model shows that

. In other words, there is a single infectious seed, and all the other individuals are initially susceptible. In fact, the theory of the ODE version of the SIR model shows that

![]() increases deterministically, at least initially, if

increases deterministically, at least initially, if

![]() , corresponding to the situation in which an outbreak of infection happens. However, in the stochastic SIR model, the only initially infectious individual may recover before it infects anybody even if

, corresponding to the situation in which an outbreak of infection happens. However, in the stochastic SIR model, the only initially infectious individual may recover before it infects anybody even if

![]() . When this situation occurs, the dynamics terminate once the initially infectious individual has recovered, and no outbreak is observed. Although the probability with which this situation occurs decreases as

. When this situation occurs, the dynamics terminate once the initially infectious individual has recovered, and no outbreak is observed. Although the probability with which this situation occurs decreases as

![]() increases, it is still not negligibly small for many large

increases, it is still not negligibly small for many large

![]() values. This is inconsistent with the prediction of the ODE model. It should be noted that another common way to initialize the system is to start with a small fraction of infectious individuals, regardless of

values. This is inconsistent with the prediction of the ODE model. It should be noted that another common way to initialize the system is to start with a small fraction of infectious individuals, regardless of

![]() . In this case, if we start the stochastic SIR dynamics in a large, well-mixed population and, for example, with 10 percent initially infectious individuals, the ODE version is sufficiently accurate at describing the stochastic SIR dynamics.

. In this case, if we start the stochastic SIR dynamics in a large, well-mixed population and, for example, with 10 percent initially infectious individuals, the ODE version is sufficiently accurate at describing the stochastic SIR dynamics.

Third, ODEs are not accurate at describing the counterpart stochastic dynamics when the system is close to a so-called critical point. For example, in the SIR model, given the value of the infection rate (i.e.,

![]() ), there is a value of the infection rate called the epidemic threshold, which we denote by

), there is a value of the infection rate called the epidemic threshold, which we denote by

![]() . For

. For

![]() , only a small number of the individuals will be infected (i.e., the final epidemic size is of

, only a small number of the individuals will be infected (i.e., the final epidemic size is of

![]() ). For

). For

![]() , the final epidemic size is large (i.e.,

, the final epidemic size is large (i.e.,

![]() ) with a positive probability. In analogy with statistical physics,

) with a positive probability. In analogy with statistical physics,

![]() is termed a critical point of the SIR model. Near criticality the fluctuations of

is termed a critical point of the SIR model. Near criticality the fluctuations of

![]() ,

,

![]() , and

, and

![]() are not negligible compared to their mean values, even for large

are not negligible compared to their mean values, even for large

![]() , and the ODE generally fails.

, and the ODE generally fails.

Fourth, ODEs are not accurate when dynamics are mainly driven by stochasticity rather than by the deterministic terms on the right-hand sides of the ODEs. This situation may happen even far from criticality or in a model that does not show critical dynamics. The voter model (see Section 4.10.3 for details) is such a case. In its simplest version, the voter model describes the tug-of-war between two equally strong opinions in a population of individuals. Because the two opinions are equally strong, the ODE version of the voter model predicts that the fraction of individuals supporting opinion A (and that of individuals supporting opinion B) does not vary over time, that is, one obtains

![]() , where

, where

![]() and

and

![]() are the fractions of individuals supporting opinions A and B, respectively. However, in fact, the opinion of the individuals flips here and there in the population due to stochasticity, and it either increases or decreases over time.

are the fractions of individuals supporting opinions A and B, respectively. However, in fact, the opinion of the individuals flips here and there in the population due to stochasticity, and it either increases or decreases over time.

To summarize, when stochasticity manifests itself, the approximation of the original stochastic dynamics by an ODE model is not accurate.

2.8 Rejection Sampling Algorithm

The most intuitive method to simulate the stochastic SIR model, while accounting for the stochastic nature of the model, is probably to discretize time and simulate the dynamics by testing whether each possible event takes place in each step. This is called the rejection sampling algorithm. Let us consider the stochastic SIR model on a small network composed of

![]() nodes, as shown in Fig. 2, to explain the procedure.

nodes, as shown in Fig. 2, to explain the procedure.

Assume that the state of the network (i.e., the states of the individual nodes) is as shown in Fig. 2(a) at time

![]() ; three nodes are susceptible, two nodes are infectious, and the other node is recovered. In the next time-step, which accounts for a time length of

; three nodes are susceptible, two nodes are infectious, and the other node is recovered. In the next time-step, which accounts for a time length of

![]() and corresponds to the time interval

and corresponds to the time interval

![]() , an infection event may happen in five ways:

, an infection event may happen in five ways:

![]() infects

infects

![]() ,

,

![]() infects

infects

![]() ,

,

![]() infects

infects

![]() ,

,

![]() infects

infects

![]() , and

, and

![]() infects

infects

![]() . Recovery events may happen for

. Recovery events may happen for

![]() and

and

![]() . Therefore, there are seven possible events in total, some of which may simultaneously happen in the next time-step.

. Therefore, there are seven possible events in total, some of which may simultaneously happen in the next time-step.

With the rejection sampling method, we sequentially (called asynchronous updating) or simultaneously (called synchronous updating) check whether or not each of these events happens in each time-step of length

![]() . Note that it is not possible to go to the limit of

. Note that it is not possible to go to the limit of

![]() in rejection sampling. In our example,

in rejection sampling. In our example,

![]() infects

infects

![]() with probability

with probability

![]() in a time-step. With probability

in a time-step. With probability

![]() , nothing occurs along this edge. In practice, to determine whether the event takes place or not, we draw a random number

, nothing occurs along this edge. In practice, to determine whether the event takes place or not, we draw a random number

![]() uniformly from

uniformly from

![]() . If

. If

![]() , the algorithm rejects the proposed infection event (thus the name rejection sampling). If

, the algorithm rejects the proposed infection event (thus the name rejection sampling). If

![]() , we let the infection occur. Then, under asynchronous updating, we change the state of

, we let the infection occur. Then, under asynchronous updating, we change the state of

![]() from S to I and update the set of possible events accordingly right away, and then proceed to check the occurrence of each of the remaining possible events in turn. Under synchronous updating, we first check whether each of the possible state changes takes place and note down the changes that take place. We then implement all the noted changes simultaneously. Regardless of whether we use asynchronous or synchronous updating, the infection event occurs with probability

from S to I and update the set of possible events accordingly right away, and then proceed to check the occurrence of each of the remaining possible events in turn. Under synchronous updating, we first check whether each of the possible state changes takes place and note down the changes that take place. We then implement all the noted changes simultaneously. Regardless of whether we use asynchronous or synchronous updating, the infection event occurs with probability

![]() .

.

If

![]() recovers, which occurs with probability

recovers, which occurs with probability

![]() , and none of the other six possible events occurs in the same time-step, the status of the network at time

, and none of the other six possible events occurs in the same time-step, the status of the network at time

![]() is as shown in Fig. 2(b). Then, in the next time-step,

is as shown in Fig. 2(b). Then, in the next time-step,

![]() may get infected,

may get infected,

![]() may recover,

may recover,

![]() may get infected, and

may get infected, and

![]() may get infected, which occurs with probabilities

may get infected, which occurs with probabilities

![]() ,

,

![]() ,

,

![]() , and

, and

![]() , respectively. In this manner, we carry forward the simulation by discrete steps until no infectious nodes are left.

, respectively. In this manner, we carry forward the simulation by discrete steps until no infectious nodes are left.

There are several caveats to this approach. First, the asynchronous and the synchronous updating schemes of the same stochastic dynamics model may lead to systematically different results (Reference Cornforth, Green and NewthCornforth, Green, & Newth, 2005; Reference Greil and DrosselGreil & Drossel, 2005; Reference Huberman and GlanceHuberman & Glance, 1993).

Second, one should set

![]() such that both

such that both

![]() and

and

![]() always hold true. In fact, the discrete-time interpretation of the original model is justified only when

always hold true. In fact, the discrete-time interpretation of the original model is justified only when

![]() is small enough to yield

is small enough to yield

![]() and

and

![]() .

.

Third, in the case of asynchronous updating, the order of checking the events is arbitrary, but it affects the outcome, particularly if

![]() is not tiny. For example, we can sequentially check whether each of the five infection events occurs and then whether each of the two recovery events occurs, completing one time-step, One can alternatively check the recovery events first and then the infection events. If we do so and

is not tiny. For example, we can sequentially check whether each of the five infection events occurs and then whether each of the two recovery events occurs, completing one time-step, One can alternatively check the recovery events first and then the infection events. If we do so and

![]() recovers in the time-step, then it is no longer possible that

recovers in the time-step, then it is no longer possible that

![]() infects

infects

![]() or

or

![]() in the same time-step because

in the same time-step because

![]() has recovered. If the infection events were checked before the recovery events, it is possible that

has recovered. If the infection events were checked before the recovery events, it is possible that

![]() infects

infects

![]() or

or

![]() before

before

![]() recovers in the same time-step.

recovers in the same time-step.

Fourth, some of the seven types of event cannot occur simultaneously in a single time-step regardless of whether the updating is asynchronous or synchronous, and regardless of the order in which we check the events in the asynchronous updating. For example, if

![]() has infected

has infected

![]() , then

, then

![]() cannot infect

cannot infect

![]() in the same time-step (or anytime later) and vice versa. In fact, from the susceptible node

in the same time-step (or anytime later) and vice versa. In fact, from the susceptible node

![]() ’s point of view, it does not matter which infectious neighbor, either

’s point of view, it does not matter which infectious neighbor, either

![]() or

or

![]() , infects

, infects

![]() . What is primarily important is whether

. What is primarily important is whether

![]() gets infected or not in the given time-step, whereas one wants to know who infected whom in some tasks such as contact tracing.

gets infected or not in the given time-step, whereas one wants to know who infected whom in some tasks such as contact tracing.

A useful method to mitigate the effect of overlapping events of this type is to take a node-centric view. The superposition theorem implies that

![]() will get infected according to a Poisson process with rate

will get infected according to a Poisson process with rate

![]() because it has two infectious neighbors (Section 2.6). By exploiting this observation, let us redefine the list of possible events at time

because it has two infectious neighbors (Section 2.6). By exploiting this observation, let us redefine the list of possible events at time

![]() . The node

. The node

![]() will get infected with probability

will get infected with probability

![]() (and will not get infected with probability

(and will not get infected with probability

![]() ). Nodes

). Nodes

![]() and

and

![]() will get infected with probabilities

will get infected with probabilities

![]() and

and

![]() , respectively. As before,

, respectively. As before,

![]() and

and

![]() recover with probability

recover with probability

![]() each. In this manner, we have reduced the number of possible events from seven to five. We are usually interested in simulating such stochastic processes in much larger networks or populations, where nodes tend to have a degree larger than in the network shown in Fig. 2. For example, if a node

each. In this manner, we have reduced the number of possible events from seven to five. We are usually interested in simulating such stochastic processes in much larger networks or populations, where nodes tend to have a degree larger than in the network shown in Fig. 2. For example, if a node

![]() has 50 infectious neighbors, implementing the rejection sampling using the probability that

has 50 infectious neighbors, implementing the rejection sampling using the probability that

![]() gets infected,

gets infected,

![]() , rather than checking if

, rather than checking if

![]() gets infected with probability

gets infected with probability

![]() along each edge that

along each edge that

![]() has with an infectious neighbor, will confer a fiftyfold speedup of the algorithm.

has with an infectious neighbor, will confer a fiftyfold speedup of the algorithm.

Rejection sampling is a widely used method, particularly in research communities where continuous-time stochastic process thinking does not prevail. In a related vein, many people are confused by being told that the infection and recovery rates

![]() and

and

![]() can exceed 1. They are accustomed to think in discrete time such that they are not trained to distinguish between the rate and probability. They are different; simply put, the rate is for continuous time, and the probability is for discrete time. Here we advocate that we should not use the discrete-time versions in general, despite their simplicity and their appeal to our intuition, for the following reasons (see Reference Gómez, Gómez-Gardeñes, Moreno and ArenasGómez, Gómez-Gardeñes, Moreno, and Arenas [2011] and Reference Fennell, Melnik and GleesonFennell, Melnik, and Gleeson [2016] for similar arguments).

can exceed 1. They are accustomed to think in discrete time such that they are not trained to distinguish between the rate and probability. They are different; simply put, the rate is for continuous time, and the probability is for discrete time. Here we advocate that we should not use the discrete-time versions in general, despite their simplicity and their appeal to our intuition, for the following reasons (see Reference Gómez, Gómez-Gardeñes, Moreno and ArenasGómez, Gómez-Gardeñes, Moreno, and Arenas [2011] and Reference Fennell, Melnik and GleesonFennell, Melnik, and Gleeson [2016] for similar arguments).

First, the use of a small

![]() , which is necessary to assure an accurate approximation of the actual continuous-time stochastic process, implies a large computation time. If the duration of time that one run of simulation needs is

, which is necessary to assure an accurate approximation of the actual continuous-time stochastic process, implies a large computation time. If the duration of time that one run of simulation needs is

![]() , one needs

, one needs

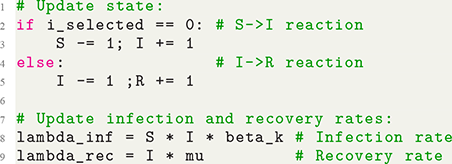

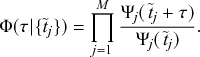

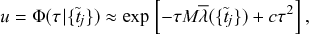

![]() discrete time-steps, which is large when