1. Introduction

With longevity steadily improving, forecasting mortality has become increasingly important for actuaries, pension sponsors and policymakers. Since the seminal work of Lee and Carter (1992), (dynamic) factor models (DFMs), including the Lee–Carter (LC) model, the Cairns–Blake–Dowd (CBD) model (Cairns et al., 2006) and others (see e.g., French and O’Hare, 2013; Chulia et al., 2016; Heinemann, 2017; Gao et al., 2019; He et al., 2021), have become the workhorse of mortality modelling.$^{1}$ Their mathematical simplicity partly explains this success: they assume that a small number of factor processes drive the age-specific mortality rates. For instance, in the LC model, the log-mortality rates $\log m_{x,t}$ at time t are driven by the same factor across different ages. However, depending on the data at hand, this simple factor structure might be too restrictive to describe the mortality dynamics adequately. In particular, empirical studies often find that including a cohort effect, that is, a process indexed by $t-x$, improves the fit of the model significantly, but the identification and estimation of such age-period-cohort models are often difficult (see e.g., Kuang et al., 2008; Hunt and Villegas, 2015).

The downside of such DFMs is also well documented in the time series literature. As Lin and Michailidis (2020) put it, “the identification of factors is often problematic, especially when we wish to give them an economic interpretation…. even when all such ‘common’ factors are taken into account, there will be important residual interdependencies … that remain to be explained”. One popular alternative that has emerged over the past several decades is the Vector Autoregressive (VAR) model. VAR models do not assume a factor structure and are thus much more flexible than DFMs. The mortality modelling community has recently also started to embrace VAR models as a strong competitor to factor models (Lazar and Denuit, 2009). Nevertheless, applying VAR models to high-dimensional time series is not straightforward because of the curse of dimensionality. In particular, while in typical economic applications the dimension of the time series is often less than 10, this number can easily reach 100 or more in mortality modelling, leading to a huge number of parameters (of the order of $100^{2}=10{,}000$ autoregressive coefficients) even in the simplest, first-order [VAR(1)] case. Moreover, mortality data are usually only available annually, with $T<100$. This has forced existing VAR-type mortality papers to introduce parametric constraints. These constraints can be either given a priori or data-driven. For instance, Li and Lu (2017) assume that the only non-zero elements of the coefficient matrix are on the diagonal (corresponding to the regression coefficients of their own lags), or the second (respectively the third) lower diagonal (corresponding to the regression coefficients of their adjacent lags). Although the model of Li and Lu (2017) has the appealing property of ensuring co-integration of the log-mortality rates for any pair of ages, their sparsity constraint on the coefficient matrix is quite restrictive and has been questioned by the subsequent literature (see, e.g., Doukhan et al., 2017; Guibert et al., 2019; Shi, 2020; Feng et al., 2021; Chang and Shi, 2021). Alternative regularisation methods, such as the elastic net and the Lasso, have been proposed to address the curse of dimensionality. These recent contributions, though, also have their limitations.

First, unlike Li and Lu (2017) and the original LC and CBD models, these latter models are not written on the level of the log-mortality rates,$^{2}$ but on their first-order differences, that is, the mortality improvement rates. Even though the dilemma of whether to difference the series before applying VAR-type models arises frequently in both the economic (see, e.g., Williams, 1978) and the actuarial literature (Haberman and Renshaw, 2012; Mitchell et al., 2013; Chulia et al., 2016; Guibert et al., 2019; Jarner and Jallbjørn, 2020), we believe that the differencing approach has several downsides. Indeed, models written on the differences (implicitly) assume that mortality rates are integrated of order one (i.e., I(1)). This assumption is usually made out of mathematical convenience, without being tested against alternative hypotheses. Moreover, while the theory of unit root tests for univariate time series is well documented, their performance in a small-T context is known to be often unsatisfactory. In addition, when such tests are applied to the $(\kappa_{t})$ process estimated from the LC model, their results should be interpreted very carefully, since $\kappa_{t}$ is not directly observed but can only be estimated, and is hence subject to estimation error. For instance, the unit root hypothesis in the LC model has recently been questioned by Leng and Peng (2016). Furthermore, even if the I(1) assumption holds true, a model written on the improvement rates is not able to detect potential co-integration relationships. In some cases, such properties might be desirable, since they ensure biological reasonableness, that is, mortality rates at different ages do not diverge. Interestingly, this assumption is not imposed in the original LC model, but was merely an empirically plausible specification when the model was applied to the US data.

Second, Guibert et al. (2019) report that when fitting large VAR(p) models to mortality improvement rates, a large p typically leads to a better fit. This may be an indication of over-differencing. It also raises further concerns about the curse of dimensionality (especially in a small-T context), as well as about the choice of the optimal order. Guibert et al. (2019) propose to fix p a priori, and they acknowledge that “by increasing the lag order (and by using regularisation techniques), some non-null coefficients can be forced to zero in favour of other coefficients in autoregressive matrices of higher lag order.”

A potential alternative to VAR(p) models is the family of Vector Autoregressive Moving Average (VARMA) models. The VARMA model is, roughly speaking, the multivariate analogue of the ARMA process and is hence a legitimate, parsimonious competitor of VAR(p). However, its application to time series data is still in its infancy due to (i) identification difficulties that are specific to (multivariate) VARMA models (see e.g., Gouriéroux et al., 2020); and (ii) the much more complicated, non-linear estimation procedure (see e.g., Litterman, 1986; Chan and Eisenstat, 2017), compared to the Ordinary Least Squares (OLS)-type estimators frequently employed for VAR models.

This paper proposes to address the aforementioned challenges through a new approach, the Factor-Augmented VAR (FAVAR) model. This model, first introduced by Bernanke et al. (2005), is a trade-off between standalone DFM and VAR models and has since received much attention in (macro-)econometrics (see e.g., Dufour and Stevanović, 2013; Bai et al., 2016). The original FAVAR formulation has also evolved and given rise to several variants depending on the specific application. In particular, Chan and Eisenstat (2015) recently proposed a new specification, which, roughly speaking, is obtained from the VAR(1) model by adding one unobserved factor process. This factor allows for a natural, systemic (longevity) risk factor interpretation, similar to the $(\kappa_{t})$ process in the LC model. This feature is especially instructive because the model nests both the DFM and the VAR model as special cases, and hence naturally enjoys many of the desirable properties of these two elementary models, both of which are well known to actuaries. On the one hand, the FAVAR model inherits the VAR’s flexibility in capturing serial correlation. On the other hand, the common factor extends the VAR(1) model, while being much more parsimonious than the aforementioned alternative extensions such as VAR(p) and VARMA models. Finally, by gathering the LC and the VAR approaches within the same framework, the FAVAR model also makes model comparison much more convenient.

We propose to estimate the FAVAR model in a Bayesian fashion, using the state-of-the-art shrinkage prior (or Minnesota prior) developed in the Bayesian VAR (BVAR) literature. This approach, introduced by Litterman (1986), is instrumental in dealing with the curse of dimensionality through Bayesian shrinkage. More precisely, the prior distribution has the effect of “pushing” the posterior distribution towards some benchmark model. This benchmark is chosen by the user of the model, according to their prior beliefs as well as any other constraints they want to put in place, such as biological reasonableness, which rules out long-term divergence of log-mortality rates at different ages. For instance, in the empirical section, we let the prior distribution of the parameters be concentrated around a value corresponding to the sparse VAR proposed in Li and Lu (2017). Our choice of the prior can be viewed as the Bayesian analogue of the frequentist regularisation techniques already known in the mortality literature. This interpretation is particularly interesting, given the Bayesian posterior mode interpretation of the Lasso estimate in a linear regression setting (see Tibshirani, 1996; Park and Casella, 2008). Note that recently, Bayesian Lasso (Billio et al., 2019) and elastic net models (Gefang, 2014) have also gained popularity and are thus possible alternatives to the Minnesota prior. Nevertheless, in light of the recent debate on sparsity versus density (Giannone et al., 2021), we opt for the Minnesota-type shrinkage prior over Lasso-type methods.
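To make the “pushing” effect of a shrinkage prior concrete, here is a minimal sketch for a single linear regression with Gaussian errors of known variance and independent Gaussian priors on the coefficients. It is not the Minnesota prior used in this paper; the function name `posterior_mean`, the benchmark vector and all numerical values are assumptions chosen purely for illustration.

```python
import numpy as np

def posterior_mean(X, y, prior_mean, prior_var, noise_var=1.0):
    """Posterior mean of beta in y = X beta + e, e ~ N(0, noise_var * I),
    under independent priors beta_k ~ N(prior_mean[k], prior_var[k])."""
    prior_prec = np.diag(1.0 / np.asarray(prior_var, dtype=float))
    post_prec = X.T @ X / noise_var + prior_prec
    rhs = X.T @ y / noise_var + prior_prec @ prior_mean
    return np.linalg.solve(post_prec, rhs)

# Synthetic data (illustrative only).
rng = np.random.default_rng(0)
n, k = 30, 5
X = rng.normal(size=(n, k))
beta_true = np.array([0.9, 0.3, 0.0, 0.0, 0.0])
y = X @ beta_true + rng.normal(scale=0.5, size=n)

# Hypothetical benchmark model towards which the prior shrinks.
benchmark = np.array([1.0, 0.0, 0.0, 0.0, 0.0])

ols = np.linalg.lstsq(X, y, rcond=None)[0]
tight = posterior_mean(X, y, benchmark, np.full(k, 1e-4), noise_var=0.25)
loose = posterior_mean(X, y, benchmark, np.full(k, 1e4), noise_var=0.25)

print("OLS        :", np.round(ols, 3))
print("tight prior:", np.round(tight, 3))  # close to the benchmark
print("loose prior:", np.round(loose, 3))  # close to OLS
```

With a small prior variance the posterior mean stays near the benchmark, while a large prior variance lets the data dominate and the estimate approaches OLS. The prior variance therefore plays a role comparable to a regularisation penalty.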

Compared to the existing frequentist regularisation approaches in the mortality literature, our Bayesian approach has several distinctive advantages. First, it naturally accounts for parameter uncertainty, which is essential for stress testing and risk management in the pension and insurance industries. In particular, because pension and annuity products are typically indexed to macroeconomic and financial indicators, both mortality and these indicators need to be forecast or simulated by the financial economist or pension sponsor. Consequently, a unified modelling framework would be highly welcome. BVAR-type models are natural candidates given their popularity in macroeconomic forecasting, especially for quantifying forecast uncertainty. Second, because the FAVAR model (as well as the LC and CBD models) involves an unobserved factor, it belongs to the family of state-space models. In the actuarial literature, such models are typically estimated using a two-step approach. First, the path of the latent factor(s) is estimated from the raw mortality time series, as if it were deterministically given. The estimated path is then used in the second stage to estimate the dynamics of the factor process. This approach induces an efficiency loss (see Bernanke et al., 2005 for a discussion) and, to make things worse, Leng and Peng (2016) show that the resulting estimators are inconsistent, even in the plain LC model. A more rigorous, but more cumbersome, way is to compute the likelihood function in one step by integrating out all possible paths of the latent factor. This can be done in either a frequentist or a Bayesian way. Under the frequentist approach, the model parameter is first estimated, then the path of the latent factor is filtered. If the modeller also wants to measure parameter uncertainty, the filtering exercise needs to be conducted for each draw of the parameter value, making the computation formidable. The strength of the Bayesian approach here is that it combines the parameter estimation and factor filtering tasks in one step. This is the approach we take, and we rely on state-of-the-art Markov chain Monte Carlo (MCMC) techniques to minimise the computational burden.

Despite these benefits, the use of Bayesian methods in mortality modelling is still sparse and has been largely confined to DFMs (Czado et al., 2005; Pedroza, 2006; Reichmuth and Sarferaz, 2008; Cairns et al., 2011; Antonio et al., 2015; Li et al., 2015; van Berkum et al., 2017; Alexopoulos et al., 2019; Li et al., 2019; Wang et al., 2021).$^{3}$ To our knowledge, the only paper applying BVAR to mortality data is Njenga and Sherris (2020). These authors first fit the eight-parameter Heligman–Pollard model to the age-specific, cross-sectional mortality table of each year t to obtain an eight-dimensional process of parameters $\theta_{t}$, to which they then fit the BVAR model. Besides the fact that our FAVAR model includes an extra latent factor, our approach further differs from theirs in several respects. First, Njenga and Sherris (2020) adopt the “sum of coefficient” prior (Sims and Zha, 1998). This prior, which is also an extension of the Minnesota prior, assumes a priori the existence of (co)-integration. Even though we could also follow this route, in this paper we take a different approach and construct our prior by drawing directly on the original Minnesota prior, without imposing non-stationarity (see our discussion above). Second, as in the LC model, it is unclear how the estimation error induced when fitting the Heligman–Pollard model affects the estimation at the second, BVAR stage. Third, the BVAR model fitted to $(\theta_{t})$ does not guarantee the positivity of its components, leaving room for potentially implausible scenarios. Fourth, since the Heligman–Pollard model involves a non-linear transformation from $\theta_{t}$ to the vector of age-specific mortality rates, a potential linear (co)-integration property of the process $(\theta_{t})$ typically does not translate into linear (co)-integration relationships between the log-mortality rates. In other words, it is difficult to compare this specification with existing approaches, especially when it comes to the resulting long-run dynamics of the mortality rate process. Fifth, since the parameter $\theta_{t}$ is low-dimensional, the BVAR’s advantage of parameter shrinkage is less pronounced. Finally, Njenga and Sherris (2020) do not describe the estimation of their model. Given that our model has a large number (around 6000) of parameters and differs from the BVAR by the introduction of an extra factor, we feel it is helpful to provide the actuarial community with a step-by-step introduction to the estimation of BVAR and FAVAR models.

Our model is illustrated using data from two populations: US males and French males. These two datasets differ in several key aspects. First, the US data have a very small time series dimension ($T<100$), whereas observations start as early as 1816 in the French case. This difference in sample size affects the ability of the Bayesian model to “learn” from the data. In particular, the difference between our estimated FAVAR model and the Li and Lu model is far larger for French males than for US males. This result demonstrates the need for more flexible models, especially when the sample size of a dataset is large enough to warrant such an extension. Second, the model of Li and Lu (2017) works well only on the US data, and much less so on the French data. In the empirical section, we explain how this preliminary finding can be used to guide the specification of our prior distribution. Overall, we show that the FAVAR model performs significantly better than the LC and the sparse VAR models.

The paper is organised as follows. Section 2 introduces the FAVAR model. Section 3 describes the Bayesian estimation approach, which is inspired by and adapted from the BVAR literature. Section 4 applies the methodology to US and French male mortality data and compares it with the benchmark models of Lee and Carter (1992) and Li and Lu (2017). Section 5 concludes.

2. The model

2.1. Review of existing mortality models

Let us denote by $m_{x,t}$ the mortality rate at age x and date t; stacked across ages, these rates form a high-dimensional time series. The mortality modelling literature has recently focused on two strands of models, namely the dynamic factor model and the VAR-type model.

The original LC model specifies that the log-mortality rates $\log m_{x,t}$ are driven by the same factor across different ages:

\begin{equation*}\log m_{x,t}=a_{x}+b_{x}\kappa_{t}+\epsilon_{x,t},\end{equation*}

where $a_{x}$ and $b_{x}$ are the age-specific intercept and slope, respectively, and $\epsilon_{x,t}$ is a normally distributed i.i.d. error term. To project the processes forward, the standard trick is to specify a time series model for the factor $(\kappa_{t})$, classically a random walk with drift (Lee and Carter, 1992).

Several variants of this dynamic factor model, including the two-factor CBD model (Cairns et al., 2006) and others (see, e.g., Heinemann, 2017), have been introduced in the mortality literature.
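As an illustration of the two-step estimation commonly used for the LC model, the sketch below fits the age effects and the period factor by a rank-one singular value decomposition and then extrapolates the factor with a random walk with drift. The data are synthetic, the function names `fit_lee_carter` and `forecast_kappa` are hypothetical, and the normalisation (slopes summing to one, factor summing to zero) is the classical LC convention rather than the identification used for the FAVAR model in this paper.

```python
import numpy as np

def fit_lee_carter(log_m):
    """Two-step LC fit on an (ages x years) array of log-mortality rates.
    Returns (a, b, kappa) with sum(b) = 1 and sum(kappa) = 0."""
    a = log_m.mean(axis=1)                      # age-specific intercepts
    centred = log_m - a[:, None]                # each row now has mean zero
    U, s, Vt = np.linalg.svd(centred, full_matrices=False)
    b, kappa = U[:, 0], s[0] * Vt[0]            # leading rank-one component
    scale = b.sum()                             # rescale so that sum(b) = 1
    return a, b / scale, kappa * scale

def forecast_kappa(kappa, horizon):
    """Central forecast of kappa under a random walk with drift."""
    drift = (kappa[-1] - kappa[0]) / (len(kappa) - 1)
    return kappa[-1] + drift * np.arange(1, horizon + 1)

# Synthetic example (all values are illustrative assumptions).
rng = np.random.default_rng(1)
n_ages, n_years = 20, 40
a_true = np.linspace(-8.0, -2.0, n_ages)
b_true = np.linspace(1.0, 2.0, n_ages)
b_true /= b_true.sum()
kappa_true = np.cumsum(-1.0 + 0.5 * rng.normal(size=n_years))
kappa_true -= kappa_true.mean()
noise = 0.01 * rng.normal(size=(n_ages, n_years))
log_m = a_true[:, None] + np.outer(b_true, kappa_true) + noise

a_hat, b_hat, kappa_hat = fit_lee_carter(log_m)
kappa_fc = forecast_kappa(kappa_hat, horizon=10)
log_m_fc = a_hat[:, None] + np.outer(b_hat, kappa_fc)  # projected log rates
```

The second step treats the estimated factor path as if it were observed, so the sketch makes no attempt to propagate the first-step estimation error into the forecast.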

The VAR(p) model, instead of using low-dimensional dynamic factors to explain the high-dimensional mortality movements, assumes that

\begin{equation*}\log m_{x,t}=\alpha_{x,0}+\sum_{i=1}^{p}\sum_{j=0}^{d-1}\alpha_{x,x_{0}+j}^{i}\log(m_{x_{0}+j,t-i})+\epsilon_{x,t}\;\forall x,t,\end{equation*}

where $d$ is the total number of ages for which mortality data are available. The model is so heavily parameterised that the mortality literature has largely restricted attention to the one-lag case. Even then, the number of parameters is $(d+1)d$, and Li and Lu (2017) specify a sparse version of it as

subject to the constraints:

Li and Lu show that the additional constraints above ensure the co-integration of these processes.
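To give a sense of the orders of magnitude involved, the following sketch compares the $(d+1)d$ parameters of a dense VAR(1) with the number of free autoregressive coefficients under a banded sparsity pattern. The band (main diagonal plus the two diagonals below it) is an assumption based on the verbal description of the Li and Lu (2017) pattern in the Introduction. The sketch does not reproduce their parameter constraints, and the helper names are hypothetical.

```python
import numpy as np

def dense_var1_param_count(d):
    """Intercepts (d) plus a full d x d coefficient matrix."""
    return (d + 1) * d

def lower_band_mask(d, n_sub=2):
    """Boolean d x d mask, True on the main diagonal and on the
    first n_sub diagonals below it (assumed sparsity pattern)."""
    i, j = np.indices((d, d))
    return (i - j >= 0) & (i - j <= n_sub)

d = 100
mask = lower_band_mask(d)
print(dense_var1_param_count(d))  # 10100
print(int(mask.sum()))            # 297 = d + (d - 1) + (d - 2)
```

With annual data and $T<100$, a dense coefficient matrix has more unknowns per equation than there are observations, which is why some form of constraint or shrinkage is unavoidable.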

2.2. The FAVAR model

The two base models of the previous section motivate us to consider the following model written on the log-mortality rates:

\begin{equation}\log(m_{x,t})=a_{x}+\sum_{j=0}^{d-1}a_{x,x_{0}+j}\log(m_{x_{0}+j,t-1})+b_{x}\kappa_{t}+\epsilon_{x,t},\end{equation}

where $x_{0}$ is the lowest age for which mortality rates are observable, $(\epsilon_{t})$ is an i.i.d. sequence of random vectors satisfying:

and the factor $(\kappa_{t})$ is unobservable, following the dynamics:

where $(\eta_{t})$ is another i.i.d. sequence, independent of $(\epsilon_{t})$, following:

Equation (2) implies, among other things, that the $\epsilon_{x,t},x=1,2,...$ are mutually independent. This assumption, borrowed from the LC model, has recently been relaxed in VAR-type mortality models such as Li and Lu (2017) and Guibert et al. (2019), using a two-stage approach. The first stage is a Seemingly Unrelated Regression (SUR), that is, the coefficient matrix of the VAR model is estimated as if the different components of $\epsilon_{t}$ were independent. Then the pseudo-residuals are recovered and used to compute the empirical estimator of the covariance matrix. However, in this paper, we stick with the independence assumption for four reasons. First, this is the assumption retained in Litterman’s Minnesota prior and is also adopted by Njenga and Sherris (2020). Second, restricting the covariance matrix to be diagonal leads to an important dimension reduction and makes the MCMC computation much simpler (see Section 3 for details). Third, since the process $(y_{t})$ likely features non-stationarity, the impact of a mis-specification of the covariance matrix could be much smaller than that of a potential mis-specification of the coefficient matrix. Fourth, it is well known that the unconstrained (frequentist) estimation of a covariance matrix is highly unreliable in large dimensions (see e.g., Ledoit and Wolf, 2003), and this is especially the case in the SUR framework, given the efficiency loss induced during the first stage. As we will explain in detail in the next section, even though there are standard Bayesian tools to handle a non-diagonal covariance matrix, the curse of dimensionality remains acute.

Note that in the above model, the factor $\kappa_{t}$ is only identified up to an affine transformation. Thus, for identification purposes, we shall let

We treat the initial value $\kappa_{0}$ of the factor as a model parameter. This identification condition is slightly different from the standard one used in the LC model, but it is much more common in the literature on factor models and is also mentioned as an alternative in Section 6 of Lee and Carter's paper.

The proposed approach is similar in spirit to, yet different from, the so-called Global Vector Autoregressive (GVAR) model. The GVAR, introduced by Pesaran et al. (2004) in macro-econometrics to model the dependence of economic variables across different countries, has recently been applied to mortality forecasting by Li and Shi (2021). There are several major differences between our model and the GVAR. First, the GVAR model is mostly appropriate in a multiple-population framework, in which the mortality rates of different countries are first modelled separately with a VAR model, and then the different countries are linked together through the GVAR. In the case of single-population mortality, it is less natural to separate ex ante the set of all ages into different groups. Our model, on the other hand, is mainly motivated by a single-population framework.$^{4}$ Second, although the GVAR specification also adds a factor to a VAR model, this factor is usually assumed observable, such as a linear combination of the observed time series of interest with pre-fixed weights (Li and Shi, 2021). Put differently, the GVAR model does not nest the LC or other factor models as special cases.$^{5}$

Our approach of combining VAR and factor models is also different from the approach of Bernanke et al. (2005), Biffis and Millossovich (2006), Debón et al. (2008), and Mavros et al. (2017). This latter literature proposes to first fit a factor model, recover the residuals and then estimate a VAR-type model on these residuals. Even though it would be interesting to compare these two approaches in the future, we note that in these latter models, the status of the DFM differs from that of the VAR, since the DFM is used as the benchmark, whereas the VAR is merely used as a means to improve the fit of the DFM.

Factor-augmented VAR representation. The above model can be more conveniently represented in a matrix, factor VAR form:

\begin{equation*}y_{t}=a+Ay_{t-1}+b\kappa_{t}+\epsilon_{t},\qquad\kappa_{t}=\gamma_{1}+\gamma_{2}\kappa_{t-1}+\eta_{t},\end{equation*}

where $y_{t}=\left\{ \log(m_{x_{0},t}),\ldots,\log(m_{x_{0}+d-1,t})\right\}^{\prime},a=\left\{ a_{x_{0}},\ldots,a_{x_{0}+d-1}\right\}^{\prime},b=\left\{ b_{x_{0}},\ldots,b_{x_{0}+d-1}\right\}^{\prime}$ are d-dimensional column vectors and the $d\times d$ matrix A is given by:

\begin{equation*}A=\left[\begin{array}{c@{\quad}c@{\quad}c@{\quad}c@{\quad}c}a_{x_{0},x_{0}} & \cdots & a_{x_{0},x_{0}+j} & \cdots & a_{x_{0},x_{0}+d-1}\\[5pt]\vdots & & \vdots & & \vdots\\[5pt]a_{x_{0}+i,x_{0}} & \cdots & a_{x_{0}+i,x_{0}+j} & \cdots & a_{x_{0}+i,x_{0}+d-1}\\[5pt]\vdots & & \vdots & & \vdots\\[5pt]a_{x_{0}+d-1,x_{0}} & \cdots & a_{x_{0}+d-1,x_{0}+j} & \cdots & a_{x_{0}+d-1,x_{0}+d-1}\end{array}\right].\end{equation*}

In particular, if matrix $A=0$, we get the one-factor LC model; if instead vector $b=0$, then we have a pure VAR model. It has been argued that VAR models provide a better fit to mortality data than factor models (Guibert et al., 2019). However, the LC model, as well as its extensions such as the CBD models, has the great advantage of ensuring co-integration between mortality rates at different ages, a property not satisfied by most VAR-based mortality models. To our knowledge, only the model of Li and Lu (2017) allows for such an a priori co-integration relationship, but their model requires a very restrictive specification of the coefficient matrix A. In the general case, when both A and b are non-zero, the above model is a FAVAR model (Bernanke et al., 2005; Chan and Eisenstat, 2015). To motivate this terminology, let us consider the factor-augmented vector $\tilde{y}_{t}=[y_{t}',\kappa_{t}]'$. Substituting the dynamics of $\kappa_{t}$ into the equation of $y_{t}$, we can rewrite the system as a VAR:

\begin{equation*}\tilde{y}_{t}=\left[\begin{array}{c}a+\gamma_{1}b\\[5pt]\gamma_{1}\end{array}\right]+\left[\begin{array}{c@{\quad}c}A & \gamma_{2}b\\[5pt]\mathbf{0}_{1\times d} & \gamma_{2}\end{array}\right]\tilde{y}_{t-1}+\left[\begin{array}{c@{\quad}c}\mathbf{I}_{d} & b\\[5pt]\mathbf{0}_{1\times d} & 1\end{array}\right]\tilde{\epsilon}_{t},\end{equation*}

where the new error term is $\tilde{\epsilon}_{t}=(\epsilon'_{t},\eta_{t})'$.
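
The stacked representation can be checked numerically. The following minimal Python sketch, for a toy case with d = 2, assumes the two-equation form $y_{t}=a+Ay_{t-1}+b\kappa_{t}+\epsilon_{t}$, $\kappa_{t}=\gamma_{1}+\gamma_{2}\kappa_{t-1}+\eta_{t}$ implied by the joint likelihood of Section 3.4. All numerical values and function names are hypothetical and serve only as an illustration. Substituting the factor dynamics into the first equation gives the intercept $a+\gamma_{1}b$ for the y-block, and the sketch verifies that one step of the stacked recursion coincides with one step of the two-equation recursion.

```python
import random


def matvec(M, v):
    """Multiply a matrix (list of rows) by a vector (list)."""
    return [sum(m_ij * v_j for m_ij, v_j in zip(row, v)) for row in M]


# Hypothetical toy parameters for d = 2 ages (illustration only, not estimates).
d = 2
a = [-0.05, -0.03]
A = [[0.6, 0.0],
     [0.3, 0.5]]
b = [1.0, 0.7]
gamma1, gamma2 = -0.1, 0.9

# Stacked VAR(1) for y_tilde = [y', kappa]'.
# Intercept of the y-block is a + gamma1 * b (from substituting kappa_t).
c_tilde = [a[i] + gamma1 * b[i] for i in range(d)] + [gamma1]
# Autoregressive matrix [[A, gamma2 * b], [0, gamma2]].
Phi = [A[i] + [gamma2 * b[i]] for i in range(d)] + [[0.0] * d + [gamma2]]
# Noise loading matrix [[I_d, b], [0, 1]].
G = [[1.0 if i == j else 0.0 for j in range(d)] + [b[i]] for i in range(d)]
G.append([0.0] * d + [1.0])


def step_two_equations(y_prev, kappa_prev, eps, eta):
    """One step of the factor equation followed by the measurement equation."""
    kappa = gamma1 + gamma2 * kappa_prev + eta
    Ay = matvec(A, y_prev)
    y = [a[i] + Ay[i] + b[i] * kappa + eps[i] for i in range(d)]
    return y, kappa


def step_stacked(y_tilde_prev, eps_tilde):
    """One step of the stacked VAR(1) recursion."""
    lin = matvec(Phi, y_tilde_prev)
    noise = matvec(G, eps_tilde)
    return [c_tilde[i] + lin[i] + noise[i] for i in range(d + 1)]


rng = random.Random(0)
y, kappa = [-4.0, -3.5], 0.0
max_gap = 0.0
for _ in range(50):
    eps = [rng.gauss(0.0, 0.02) for _ in range(d)]
    eta = rng.gauss(0.0, 0.05)
    stacked = step_stacked(y + [kappa], eps + [eta])   # uses the previous state
    y, kappa = step_two_equations(y, kappa, eps, eta)  # then advance the state
    gap = max(abs(u - v) for u, v in zip(stacked, y + [kappa]))
    max_gap = max(max_gap, gap)

print(max_gap)  # difference between the two recursions, at rounding-error level
```

Setting `A` to a zero matrix in this sketch reduces the recursion to a one-factor LC-type model, while setting `b` to zero gives a pure VAR.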

3. Bayesian estimation

Since VAR models are parameter-rich, their estimation can be challenging. Without prior information, the parameter estimates are likely to be erratic, rendering the resulting impulse response functions and forecasts unreliable. Hence, a Bayesian approach is often called upon to address the curse of dimensionality through the specification of a shrinkage prior. Roughly speaking, this shrinkage approach can be viewed as the Bayesian analogue of frequentist regularisation techniques. Indeed, whereas in the frequentist regression context, Lasso and elastic net algorithms force most of the regression parameters to be exactly zero, Bayesian shrinkage priors more closely resemble ridge regression, in the sense that they are usually concentrated around a vector of parameter values $\theta_{0}$ corresponding to a simpler model. This way, the posterior distribution of the unknown parameters will also concentrate around $\theta_{0}$, but the parameters are not required to be sparse.

This section starts with a quick reminder of the BVAR model and explains the standard (dependent or independent) conjugate priors. Then we introduce a popular shrinkage prior, also called the Minnesota prior. The latter, however, cannot be applied directly in our context for several reasons. First, the Minnesota prior is designed for plain VAR models rather than for FAVAR models. Moreover, it is concentrated around the central scenario in which the log-mortality rates are random walks and not co-integrated. Thus, we will adapt the Minnesota prior by changing the mean of its prior distribution to a new vector corresponding to a co-integrated VAR model à la Li and Lu (2017), and we will specify an independent prior for the augmented factor part of the FAVAR model.

3.1. Specification of the prior

“Conjugate” priors for BVAR. Consider the baseline VAR model:

\begin{equation*}y_{t}=a+Ay_{t-1}+\epsilon_{t},\qquad\epsilon_{t}\sim N(0,\Sigma).\end{equation*}

If $\Sigma$ is fixed, then the standard conjugate prior of the $d+d^{2}$ dimensional vector parameter $vec[a';\;A']$ is Gaussian,Footnote 6 whereas for fixed a and A, the standard conjugate prior for the $d\times d$ matrix parameter $\Sigma$ is Inverse-Wishart (IW) with dispersion parameter $\nu$ and scale matrix S [henceforth $IW(\nu,S)$]. In the case where both (a, A) and $\Sigma$ are unknown and stochastic, there are two usual prior specifications, depending on whether $vec[a';\;A']$ and $\Sigma$ are assumed independent:

- dependent conjugate prior:

\begin{equation}vec[a';\;A']|\Sigma\sim N(\mu_{a},\Sigma\otimes\tilde{\Sigma}_{a}),\qquad\Sigma\sim IW(\nu,S),\tag{3.1}\end{equation}

where the $(d+1)\times(d+1)$ matrix $\tilde{\Sigma}_{a}$ is symmetric positive semi-definite, and the symbol $\otimes$ denotes the Kronecker product;

- independent Gaussian-Inverse Wishart prior:

\begin{equation}vec[a';\;A']\sim N(\mu_{a},\Sigma_{a}),\quad\Sigma\sim IW(\nu,S),\tag{3.2}\end{equation}

where the $(d^{2}+d)\times(d^{2}+d)$ matrix $\Sigma_{a}$ is symmetric positive definite, and $vec[a';\;A']$ and $\Sigma$ are independent.

Prior specification (3.1) is commonly called the “natural conjugate prior” (Zellner, 1971). Its main advantage is that the associated posterior and the one-step-ahead predictive density are Normal-Wishart, with closed-form density. This prior, however, also has serious downsides. Most notably, the Kronecker product implies cross-equation restrictions on the covariance matrix. In particular, this structure requires, for each component of $y_{t}$, a symmetric treatment of its own lags and the lags of other variables. This is at odds with existing mortality VAR models, in which the weight of a component’s own lag is typically more important.

By comparison, the independent prior (3.2) is much more flexible. However, unlike the natural conjugate prior, it does not lead to a closed-form posterior distribution. Nevertheless, this prior still has the nice property that the conditional posteriors of each block of parameters given the other, namely $\ell(\Sigma|a,A)$ and $\ell(a,A|\Sigma)$, are of known classes (IW and Gaussian, respectively). This suggests straightforward, Gibbs sampling-type MCMC algorithms. Note, however, that in high dimensions, the sampling of vector or matrix parameters can still be very costly, and further restrictions are needed. Hence the introduction of the Minnesota prior below.
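
To illustrate this two-block structure, here is a minimal sketch of a Gibbs sampler in the scalar case d = 1, where the Inverse-Wishart distribution reduces to an inverse gamma. It is an illustration under stated assumptions rather than the sampler used in this paper: the function name `gibbs_ar1`, the hyperparameters (`mu0`, `v0`, `alpha0`, `s0`) and the simulated data are all hypothetical.

```python
import math
import random


def gibbs_ar1(y, n_iter=2000, burn_in=500, mu0=(0.0, 1.0), v0=10.0,
              alpha0=2.0, s0=0.01, seed=1):
    """Gibbs sampler for y_t = a + A*y_{t-1} + eps_t, eps_t ~ N(0, sigma2).

    Assumed independent prior: (a, A) ~ N(mu0, v0 * I_2) and
    sigma2 ~ inverse gamma with shape alpha0 and scale s0.
    """
    rng = random.Random(seed)
    x, z = y[:-1], y[1:]                    # regressor y_{t-1}, response y_t
    n = len(z)
    sx, sxx = sum(x), sum(v * v for v in x)
    sz, sxz = sum(z), sum(u * v for u, v in zip(x, z))
    sigma2 = 1.0                            # arbitrary starting value
    draws = []
    for it in range(n_iter):
        # Block 1: (a, A) | sigma2, data is bivariate Gaussian.
        p11 = 1.0 / v0 + n / sigma2         # posterior precision matrix
        p12 = sx / sigma2
        p22 = 1.0 / v0 + sxx / sigma2
        r1 = mu0[0] / v0 + sz / sigma2      # precision-weighted mean
        r2 = mu0[1] / v0 + sxz / sigma2
        det = p11 * p22 - p12 * p12
        v11, v12, v22 = p22 / det, -p12 / det, p11 / det
        m1 = v11 * r1 + v12 * r2
        m2 = v12 * r1 + v22 * r2
        l11 = math.sqrt(v11)                # Cholesky factor of the covariance
        l21 = v12 / l11
        l22 = math.sqrt(max(v22 - l21 * l21, 0.0))
        e1, e2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
        a = m1 + l11 * e1
        A = m2 + l21 * e1 + l22 * e2
        # Block 2: sigma2 | a, A, data is inverse gamma.
        ssr = sum((zt - a - A * xt) ** 2 for xt, zt in zip(x, z))
        shape = alpha0 + n / 2.0
        scale = s0 + ssr / 2.0
        sigma2 = 1.0 / rng.gammavariate(shape, 1.0 / scale)
        if it >= burn_in:
            draws.append((a, A, sigma2))
    return draws


# Simulated toy data with hypothetical true values a = -0.2, A = 0.8, sigma = 0.1.
data_rng = random.Random(0)
y = [-1.0]
for _ in range(300):
    y.append(-0.2 + 0.8 * y[-1] + data_rng.gauss(0.0, 0.1))

draws = gibbs_ar1(y)
mean_A = sum(dr[1] for dr in draws) / len(draws)
mean_sigma2 = sum(dr[2] for dr in draws) / len(draws)
print(round(mean_A, 2), round(mean_sigma2, 4))
```

In the multivariate case, the first block becomes a draw from a Gaussian of dimension $d+d^{2}$ and the second a draw from a $d\times d$ Inverse-Wishart distribution, which is where the computational cost mentioned above arises.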

The Minnesota prior for VAR. Introduced by Litterman (1986), the shrinkage, or Minnesota, prior is one of the most popular prior specifications for VAR models. This prior can be viewed as a simplified version of the aforementioned independent prior (3.2). For the prior mean $\mu_{a}$ of $vec[a';\;A']$, the Minnesota prior involves setting $\mathbb{E}[a]=0$ and $\mathbb{E}[A]=Id$. As for the covariance matrix parameters S and $\Sigma_{a}$, Litterman assumes that:

- (a) The $d\times d$ symmetric positive definite matrix $\Sigma$ is diagonal almost surely. Thus, each of its diagonal entries follows an inverse gamma distribution $IG(\nu,s_{i})$, where $s_{i}$ is the i-th diagonal entry of S. Litterman further fixes $s_{i}$ at the OLS estimate of the error variance in the i-th equation of the VAR model.

- (b) The $(d+d^{2})\times(d+d^{2})$ symmetric positive definite matrix $\Sigma_{a}$ is also diagonal, and its entries are related to those of S through a $d\times(d+1)$ matrix V given by:

\begin{equation}V=\begin{cases}c_{1}s_{i} & i=1\\[5pt]c_{2} & j=i-1\\[9pt]\dfrac{c_{3}s_{i+1}}{s_{j}} & j\neq i-1\end{cases}\end{equation}

for some constants $c_{1},c_{2},c_{3}$ to be chosen by the modeller. Here, the degree of shrinkage is controlled by the parameters $c_{1},c_{2},c_{3}$: the smaller the c’s, the stronger the belief one has in the benchmark model. This ad hoc specification is mainly motivated by computational reasons. Indeed, first, for $d=100$, sampling from a $d\times d$ Inverse-Wishart distribution is computationally very intensive, not to mention the potential numerical instability that may result if the stochastic matrix is close to singular.Footnote 7

Secondly, without the diagonal assumption, the matrix $\Sigma_{a}$ involves roughly $5\times10^{7}$ parameters.
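
This order of magnitude can be verified with a short computation. The sketch below counts the free entries of a symmetric $(d+d^{2})\times(d+d^{2})$ covariance matrix, with and without the diagonal restriction; the helper name `n_free_covariance_params` is hypothetical.

```python
def n_free_covariance_params(d: int, diagonal: bool) -> int:
    """Number of free entries in the prior covariance of vec[a'; A']."""
    n = d + d * d                      # length of the coefficient vector
    # A symmetric n x n matrix has n(n+1)/2 free entries, a diagonal one has n.
    return n if diagonal else n * (n + 1) // 2


full = n_free_covariance_params(100, diagonal=False)
diag = n_free_covariance_params(100, diagonal=True)
print(full, diag)  # 51010050 10100
```

For d = 100, the diagonal assumption therefore reduces the number of prior variance parameters from about $5\times10^{7}$ to about $10^{4}$.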

Adapting the Minnesota prior to accommodate a baseline model with co-integration. Under the Minnesota prior, the prior mean of the coefficient matrix A is the identity: $\mathbb{E}[A]=Id$. In other words, the dynamics of the process $(y_{t})$ are assumed to be centred around the benchmark model of a random walk without drift, instead of being co-integrated. While this feature is widely accepted for economic variables, the lack of co-integration might be undesirable for mortality forecasting, since it is synonymous with long-term divergence between mortality rates at different ages. One natural alternative is to replace the identity matrix by the coefficient matrix estimated from the sparse VAR model of Li and Lu (2017). Indeed, the authors show that their model significantly outperforms the LC model. Note, however, that since A has a Gaussian prior distribution, even though its mean matrix is sparse, draws from its distribution have non-zero entries. Therefore, this specification is much more flexible than the model of Li and Lu (2017). Moreover, instead of setting all prior means of the vector a to zero as in the Minnesota prior (corresponding to the popular random walk without drift assumption in economics), we set them to be 1 to reflect the decreasing trend of the mortality rates over time, that is the longevity phenomenon:

\begin{equation*}\mathbb{E}[a]=(1,\ldots,1)',\qquad\mathbb{E}[A]=A_{0},\end{equation*}

where $A_{0}$ denotes the coefficient matrix estimated from the model of Li and Lu (2017).

Then, for the diagonal elements of $\Sigma$, we slightly modify Litterman’s specification by assuming that they are i.i.d.:

\begin{equation*}\sigma_{x}^{2}\overset{i.i.d.}{\sim}IG(\nu,s_{0}),\qquad x=x_{0},\ldots,x_{0}+d-1,\end{equation*}

where $\sigma_{x}^{2}$ denotes the diagonal entry of $\Sigma$ for age x and $s_{0}$ is fixed, rather than estimated using OLS. Finally, we retain the same specification for $\Sigma_{a}$ as in the Minnesota prior.

Extending to a FAVAR model. It now suffices to set the prior distribution of the parameters characterising the factor $(\kappa_{t})$ of the model, namely the age-specific loading vector b, the scalars $\gamma_{1}$ (intercept) and $\gamma_{2}$ (autoregressive coefficient), as well as the variance $\sigma_{\eta}^{2}$ of the residuals $(\eta_{t})$. We assume that they are mutually independent and are also independent of all the above parameters, with marginal distributions:

\begin{align*}(b_{x_{0}+1},...,b_{x_{0}+d-1})' & \sim N(\mu_{b},\Sigma_{b}),{\qquad}\textrm{where} \qquad\mu_{b}\in\mathbb{R}^{d-1}\\[5pt] (\gamma_{1},\gamma_{2})' & \sim N\Big((\mu_{\gamma},\mu_{\gamma})',\sigma^{2}\mathbf{I}_{2}\Big),\\[5pt] \sigma_{\eta}^{2} & \sim IG(\nu_{1},s_{1})\\[5pt] \kappa_{1} & \sim N(0,\sigma_{k}^{2}).\end{align*}

Note that here, the vector $(b_{x_{0}+1},...,b_{x_{0}+d-1})'$ is of dimension $d-1$ since $b_{x_{0}}$ is set to 1 for identification purposes.

3.2. Potential alternative prior specifications

We have now completely specified the prior we will use in the empirical part of the paper. Before moving forward, let us mention that this prior is very flexible. While being less general, several of its submodels might have a more straightforward interpretation and thus could also be of interest to the mortality forecaster.

For instance, we could fix $\gamma_{2}$ to 1 so that the factor $(\kappa_{t})$ is constrained to be a random walk. Second, instead of shrinking the matrix A towards $A_{0}$ with unit eigenvalue, we can shrink it towards a matrix whose spectral radius is smaller than 1. This way, the factor $(\kappa_{t})$ will be solely responsible for the common longevity phenomenon as in the LC model, whereas the VAR part of the model captures the remaining (stationary) dynamics. In particular, if we let the only non-zero entries of the matrix $A_{0}$ be those on the first subdiagonal (which captures the cohort effect, see Li and Lu, 2017), then we get a competitor of the LC model with cohort effect, which has been shown to suffer from identification issues (see e.g., Hunt and Villegas, 2015).

Another intuitive submodel is obtained when we force the spectral radius of A to be equal to 1,Footnote 8 while at the same time restricting $\gamma_{2}$ to lie between 0 and 1. This way, our FAVAR model is tilted more towards the spatial VAR model of Li and Lu (2017), but the extra common factor $(\kappa_{t})$ will be able to better capture common, extreme mortality shocks such as COVID and heat waves. The modelling of such mortality shocks is essential for the pricing of mortality-related derivatives (see e.g., Chen and Cox, 2009; Zhou et al., 2013; Bauer and Kramer, 2016). Here, one question of fundamental importance that has long been debated is whether the effect of such a shock on the mortality rates is permanent (see Cox et al., 2006) or transitory (see Chen and Cox, 2009). In our model, since A has eigenvalues that are either equal to or smaller than 1, the effect of a (transitory) shock to $(\kappa_{t})$ on (linear combinations of) $y_{t}$ is decomposed into one transitory part and one permanent part. In other words, this kind of model would be very appropriate for comparing the relative importance of the transitory and permanent effects of past extreme mortality shocks. This is an alternative to the aforementioned pricing-related literature, which usually makes a priori assumptions on the nature of the shocks.

3.3. Other regularisation methods

Several authors have tested regularisation methods such as elastic net and Lasso to address the curse of dimensionality in the mortality modelling space. These recent contributions, though, also have their own limitations.

First, unlike Li and Lu (2017) and the original LC and CBD models, these latter models are not written on the level of the log-mortality rates, but on their first-order difference, that is the mortality improvement rate. Even though the dilemma of whether to difference the series before applying VAR-type models arises frequently in both the economic (see e.g., Williams, 1978) and the actuarial literature (see e.g., Guibert et al., 2019), we believe that the differencing approach has several downsides. Indeed, models written on the mortality improvement rate (implicitly) assume that mortality rates are integrated of order one (i.e., I(1)). This assumption is usually made out of mathematical convenience, without being tested against alternative hypotheses. Interestingly, this assumption is not imposed in the original LC model, but was merely an empirically plausible specification when the model was applied to the US data. Moreover, while the theory of unit root tests for univariate time series is well documented, their performance in a small-T context is known to be often unsatisfactory. In addition, when applied to the $(\kappa_{t})$ process estimated from the LC model, the result of the tests should be interpreted very carefully, since $\kappa_{t}$ is not directly observed, but can only be indirectly estimated and is hence subject to estimation error. For instance, the unit root hypothesis in the LC model has recently been questioned by Leng and Peng (2016) and Liu et al. (2019a, 2019b). Furthermore, even if the I(1) assumption holds true, a model written on the improvement rates is not able to detect potential co-integration relationships. In some cases, such properties might be desirable, since they ensure biological reasonableness, that is, the mortality rates at different ages do not diverge.

Second, Guibert et al. (2019) report that for such models, a large lag order p typically leads to a better fit. This may be an indication of over-differencing. Moreover, this issue raises further concerns about the curse of dimensionality, as well as the choice of the optimal order. Guibert et al. (2019) propose to fix p a priori, and they acknowledge that “by increasing the lag order (and by using regularisation techniques), some non-null coefficients can be forced to zero in favour of other coefficients in autoregressive matrices of higher lag order.” Moreover, it is well known that a d-dimensional VAR(p) model is equivalent to a VAR(1) model of dimension pd. For large p, this dimension is much larger than the time series dimension T, rendering the estimation of the coefficient matrices of the VAR(p) problematic (see a further discussion in Section 4.6).
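
The equivalence between a d-dimensional VAR(p) and a pd-dimensional VAR(1) can be made concrete through the companion matrix. The sketch below is a minimal illustration for a toy VAR(2) with d = 2 and without intercept or noise; the function name `companion_matrix` and the coefficient values are hypothetical.

```python
def companion_matrix(coef_mats):
    """Stack lag matrices A_1, ..., A_p (each d x d) into the pd x pd companion matrix."""
    p = len(coef_mats)
    d = len(coef_mats[0])
    F = [[0.0] * (p * d) for _ in range(p * d)]
    for k, A_k in enumerate(coef_mats):      # top block row: [A_1 ... A_p]
        for i in range(d):
            for j in range(d):
                F[i][k * d + j] = A_k[i][j]
    for i in range(d, p * d):                # identity blocks shifting the lags down
        F[i][i - d] = 1.0
    return F


# Toy VAR(2) coefficients (hypothetical values).
A1 = [[0.5, 0.1], [0.0, 0.4]]
A2 = [[0.2, 0.0], [0.1, 0.3]]
F = companion_matrix([A1, A2])

y_prev, y_prev2 = [1.0, 2.0], [0.5, -1.0]
# Direct VAR(2) recursion: y_t = A1 y_{t-1} + A2 y_{t-2}.
direct = [sum(A1[i][j] * y_prev[j] + A2[i][j] * y_prev2[j] for j in range(2))
          for i in range(2)]
# Stacked VAR(1) recursion on the state [y_{t-1}; y_{t-2}].
state = y_prev + y_prev2
stacked = [sum(F[i][j] * state[j] for j in range(4)) for i in range(4)]

same_forecast = all(abs(u - v) < 1e-12 for u, v in zip(stacked[:2], direct))
lag_shifted = all(abs(u - v) < 1e-12 for u, v in zip(stacked[2:], y_prev))
print(len(F), same_forecast, lag_shifted)
```

With d = 100 ages and p lags, each equation of the stacked system involves pd regressors, so the number of regressors exceeds T as soon as p is larger than T/100.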

Compared to the existing frequentist regularisation approaches in the mortality literature, our Bayesian approach has several distinctive advantages. First, it is more convenient to account for parameter uncertainty and evaluate its impact on forecasting (see e.g., Czado et al., 2005), that is,

\begin{equation*}\ell(y_{T+h}|y_{1},...,y_{T})=\int\ell(y_{T+h}|y_{1},...,y_{T},\theta)\,\pi(\theta|y_{1},...,y_{T})\,\mathrm{d}\theta,\end{equation*}

where $h\geq1$ is the forecast horizon and $\pi(\theta|y_{1},...,y_{T})$ denotes the posterior distribution of the model parameters given the observed dataset. This is crucial in mortality modelling for several reasons. First, in a small-T context, standard large sample theory may break down, as evidenced by, for example, Leng and Peng (2016). As a result, given the huge sensitivity of long-term pension projections to the presence or absence of a unit root, it would be preferable if we could provide probabilistic answers to questions such as: (i) given the data, what is the probability that the log-mortality process is (co-)integrated? (ii) What is the impact of parameter uncertainty on the evaluation of future pension liabilities? The proposed Bayesian approach can efficiently address these questions by assigning an appropriate prior distribution to the parameters and computing the posterior distribution numerically. In particular, instead of assuming a priori that the log-mortality rates are integrated and estimating a VAR model on the differenced series, our model will be fitted to the level of the log-mortality rates.
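
The impact of parameter uncertainty on forecasts can be illustrated by Monte Carlo averaging over posterior draws. The sketch below is a toy example and not the FAVAR model itself: it assumes a univariate random walk with drift, a known innovation standard deviation `sigma`, and hypothetical posterior draws of the drift that stand in for MCMC output. It compares the predictive variance obtained by averaging over the draws with the plug-in variance obtained by fixing the drift at its posterior mean.

```python
import random
import statistics


def predictive_draws(y_last, h, drift_draws, sigma, rng):
    """Simulate one value of y_{T+h} per draw of the drift parameter."""
    out = []
    for mu in drift_draws:
        shock = sum(rng.gauss(0.0, sigma) for _ in range(h))
        out.append(y_last + h * mu + shock)
    return out


rng = random.Random(42)
h, sigma, y_last = 30, 0.02, -4.0          # hypothetical horizon and values
n_draws = 5000

# Hypothetical posterior draws of the drift (stand-ins for MCMC output).
posterior_drift = [rng.gauss(-0.015, 0.005) for _ in range(n_draws)]
# Plug-in alternative: every simulation uses the posterior mean of the drift.
plug_in_drift = [statistics.mean(posterior_drift)] * n_draws

var_full = statistics.variance(
    predictive_draws(y_last, h, posterior_drift, sigma, rng))
var_plug = statistics.variance(
    predictive_draws(y_last, h, plug_in_drift, sigma, rng))
print(var_full > var_plug)
```

In this toy setting the drift uncertainty contributes a term of order $h^{2}$ to the predictive variance, whereas the innovation noise contributes a term of order h, which is why ignoring parameter uncertainty matters most for long-horizon projections.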

Second, because the FAVAR model (as well as the LC and CBD models) involves an unobserved factor, it belongs to the family of state-space models. In the actuarial literature, such models are typically estimated using a two-step approach. First, the path of the latent factor(s) is estimated from the raw mortality time series. These estimated paths are then used in the second stage, as if they were deterministically given, to estimate the dynamics of the factor process. This approach induces an efficiency loss and, to make things worse, Leng and Peng (2016) show that the resulting estimates are inconsistent, even in the plain LC model. A more rigorous, but cumbersome, way is to compute the likelihood function in one step by integrating out all possible paths of the latent factor, as in Equation (A2) in the next section. This can be done in both a frequentist and a Bayesian way. Under the frequentist approach, the model parameter is first estimated, then the path of the latent factor is filtered out. If the modeller were to further measure parameter uncertainty, the filtering exercise would need to be conducted for each draw of the parameter value, making the computation formidable. The strength of the Bayesian approach here is that it combines the parameter estimation and factor filtering tasks in one step, within the MCMC, as detailed in the next section.

3.4. Sampling from the posterior distribution using MCMC

The likelihood function and the factor-augmented likelihood function. Let us first compute the likelihood function of the observed process $(y_{t})$, for a given value of the parameter vector $\theta$. By integrating out the factor path, this likelihood function is equal to:

\begin{align}\ell(\mathbf{Y}|\theta)=\int\ell(\mathbf{Y},\boldsymbol{\kappa}|\theta)\,\mathrm{d}\boldsymbol{\kappa},\end{align}

where the integral is of dimension T, and $\ell(\mathbf{Y},\boldsymbol{\kappa}|\theta)$ is the joint likelihood function of the observation $\mathbf{Y}$ and the latent process $\boldsymbol{\kappa}$, with $\mathbf{Y}=[y_{2},...,y_{T}]'$, $\boldsymbol{\kappa}=[\kappa_{2},...,\kappa_{T}]'$. We have

\begin{align}\log\ell(\mathbf{Y},\boldsymbol{\kappa}|\theta) & =-\frac{T-1}{2}\sum_{x=x_{0}}^{d-1}\log(2\pi\sigma_{x}^{2})-\frac{1}{2}\sum_{t=2}^{T}(y_{t}-a-Ay_{t-1}-b\kappa_{t})'\Sigma^{-1}\left(y_{t}-a-Ay_{t-1}-b\kappa_{t}\right)\nonumber \\[5pt] & \quad-\frac{1}{2}\sum_{t=2}^{T}\frac{(\kappa_{t}-\gamma_{1}-\gamma_{2}\kappa_{t-1})^{2}}{\sigma_{\eta}^{2}}-\frac{T-1}{2}\log(2\pi\sigma_{\eta}^{2}),\end{align}

where the first line on the right-hand side (RHS) is the complete (or augmented) log-likelihood function given the path of the latent factor, whereas the second line is the log-likelihood function of the latent process. Note that under the Gaussian assumption on the error term $\epsilon_{t}$, we have a linear, Gaussian state-space model (see Durbin and Koopman, 2012). Therefore, the likelihood function in Equation (3.7) admits a closed-form formula (see Appendix). This closed-form likelihood function can be used to conduct alternative, frequentist maximum likelihood estimation. From a Bayesian perspective, however, since the integrated likelihood function (3.7) depends on the parameter $\theta$ in a highly complex manner, no suitable MCMC algorithm exists to sample from the posterior distribution $\ell(\theta|\mathbf{Y})$. As a consequence, in the following, we do $\textit{not}$ use this function directly, but work with the joint, factor-augmented likelihood function (3.8).
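For a given factor path, the factor-augmented log-likelihood (3.8) is cheap to evaluate. The following is a minimal sketch rather than the authors' implementation. It assumes that $\Sigma$ is diagonal with entries $\sigma_{x}^{2}$ (as the first term of (3.8) suggests) and that the initial values $y_{1}$ and $\kappa_{1}$ are treated as given; the function name and argument layout are hypothetical.

```python
import numpy as np

def augmented_loglik(Y, kappa, a, A, b, sigma2_x, gamma1, gamma2, sigma2_eta):
    """Factor-augmented log-likelihood log l(Y, kappa | theta), cf. (3.8).

    Y        : (T, d) array, row t-1 holds y_t for t = 1, ..., T
    kappa    : (T,) array holding kappa_1, ..., kappa_T (kappa_1 taken as given)
    a, b     : (d,) arrays; A : (d, d) array
    sigma2_x : (d,) array, diagonal of Sigma (assumed diagonal)
    """
    Y = np.asarray(Y, dtype=float)
    kappa = np.asarray(kappa, dtype=float)
    T = Y.shape[0]

    # Measurement residuals y_t - a - A y_{t-1} - b kappa_t for t = 2, ..., T
    resid = Y[1:] - a - Y[:-1] @ np.asarray(A).T - np.outer(kappa[1:], b)
    ll_obs = (-0.5 * (T - 1) * np.sum(np.log(2 * np.pi * sigma2_x))
              - 0.5 * np.sum(resid ** 2 / sigma2_x))

    # AR(1) innovations kappa_t - gamma1 - gamma2 kappa_{t-1} for t = 2, ..., T
    eta = kappa[1:] - gamma1 - gamma2 * kappa[:-1]
    ll_fac = (-0.5 * (T - 1) * np.log(2 * np.pi * sigma2_eta)
              - 0.5 * np.sum(eta ** 2) / sigma2_eta)

    return ll_obs + ll_fac
```

Both pieces are sums of univariate Gaussian log-densities, so no filtering or numerical integration is needed once $\boldsymbol{\kappa}$ is fixed. This is what makes the augmented likelihood convenient inside a Gibbs sampler.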

By the Bayes formula, the joint posterior distribution of $\theta$ and $\boldsymbol{\kappa}$ given $\mathbf{Y}$ is

Let us now sample from this distribution. Because it is high-dimensional and the normalisation constant in the above equation is intractable, we resort to MCMC. We remark that on the one hand, for fixed $\boldsymbol{\kappa}$, the RHS reduces to the posterior distribution of the parameters of a BAR-type model (with a common, $\textit{observed}$ factor $\kappa_{t}$); on the other hand, for fixed $\boldsymbol{\theta}$, the RHS reduces to the posterior distribution of a Gaussian AR(1) process. To take advantage of this nice feature, we will use the block Gibbs sampler, by sampling alternately (Carter and Kohn, 1994; Chan and Jeliazkov, 2009) from the two conditional distributions:

That is, we first sample a path of $\boldsymbol{\kappa}$, then go on to sample a realisation of $\theta$, and so on. Let us now explain how each of these two conditional distributions is sampled.
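The alternating scheme can be sketched generically. The code below is only an illustration of two-block Gibbs sampling, not the sampler used in this paper: the two conditional samplers are passed in as hypothetical callables, and the demonstration uses a toy target (a standard bivariate normal with correlation `rho`) in place of the actual conditionals of $\boldsymbol{\kappa}$ and $\theta$.

```python
import numpy as np

def block_gibbs(sample_kappa, sample_theta, theta0, n_iter, rng):
    """Alternate draws from kappa | theta, Y and theta | kappa, Y."""
    theta = theta0
    draws = []
    for _ in range(n_iter):
        kappa = sample_kappa(theta, rng)  # first block, given the current theta
        theta = sample_theta(kappa, rng)  # second block, given the new kappa
        draws.append((kappa, theta))
    return draws

# Toy stand-in: for a standard bivariate normal with correlation rho,
# each full conditional is N(rho * other, 1 - rho^2).
rho = 0.8
sd = np.sqrt(1.0 - rho ** 2)
rng = np.random.default_rng(0)
draws = np.array(block_gibbs(
    lambda theta, r: rho * theta + sd * r.standard_normal(),
    lambda kappa, r: rho * kappa + sd * r.standard_normal(),
    theta0=0.0, n_iter=20000, rng=rng))
sample_corr = np.corrcoef(draws[:, 0], draws[:, 1])[0, 1]
```

In the toy example the empirical correlation of the draws should be close to `rho`, which gives a quick sanity check that alternating the two conditionals targets the joint distribution.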

Sampling $\boldsymbol\theta$. Again, due to the dimension of $\theta$, instead of sampling it directly from the distribution $\theta|\boldsymbol{\kappa},\mathbf{Y}$, we use the block Gibbs sampler. More precisely, we regroup its components into five blocks that are:

Then, we update each of these blocks one by one, by drawing from the following conditional distributions:

- sample vector $a$ and matrix $A$ from $\mathrm{vec}[a',A']'|\mathbf{Y},\boldsymbol{\kappa},b,\gamma_{1},\gamma_{2},\Sigma,\sigma_{\eta}^{2}\sim N(\tilde{\mu}_{a},\tilde{K}_{a}^{-1})$, where:

\begin{align*}\tilde{K}_{a} & =\Sigma_{a}^{-1}+\mathbf{X}'\mathbf{X}\otimes\Sigma^{-1},\qquad\textrm{with} \quad\mathbf{X}=[(1,y_{1}')',\ldots,(1,y_{T-1}')']'\\[5pt]\tilde{\mu}_{a} & =\tilde{K}_{a}^{-1}\left(\mathrm{vec}\left(\Sigma^{-1}\left(\mathbf{Y}-\boldsymbol{\kappa'}b\right)'\mathbf{X}\right)+\Sigma_{a}^{-1}\mu_{a}\right)\end{align*}

- sample $(b_{x_{0}+1},\ldots,b_{x_{0}+d-1})$ from $(b_{x_{0}+1},\ldots,b_{x_{0}+d-1})'|\mathbf{Y},a,A,\boldsymbol{\kappa},\gamma_{1},\gamma_{2},\Sigma,\sigma_{\eta}^{2}\sim N(\tilde{\mu}_{b},\tilde{K}_{b}^{-1})$, where:

\begin{align*}\tilde{K}_{b} & =(\Sigma^{-1})_{2:d}\,\boldsymbol{\kappa}'\boldsymbol{\kappa}+\Sigma_{b}^{-1}\\[5pt]\tilde{\mu}_{b} & =\tilde{K}_{b}^{-1}\left(\Sigma^{-1}\left(\mathbf{Y}-\mathbf{X}\alpha\right)^{\prime}\boldsymbol{\kappa}\right)_{2:d}\end{align*}

Here, $(\Sigma^{-1})_{2:d}$ denotes the $\left(d-1\right)\times\left(d-1\right)$ matrix obtained by excluding the first column and row of the $d\times d$ matrix $\Sigma^{-1}$. Similarly, $\left(\Sigma^{-1}\left(\mathbf{Y}-\mathbf{X}\alpha\right)^{\prime}\boldsymbol{\kappa}\right)_{2:d}$ is the column vector of dimension $d-1$ obtained by excluding the first component of $\Sigma^{-1}\left(\mathbf{Y}-\mathbf{X}\alpha\right)^{\prime}\boldsymbol{\kappa}$.

-

sample vector

$(\gamma_{1},\gamma_{2})'$

from

$(\gamma_{1},\gamma_{2})'$

from

$(\gamma_{1},\gamma_{2})'|\mathbb{\mathbf{Y}},a,A,b,\boldsymbol{\kappa},\Sigma,\sigma_{\eta}^{2}\sim N(\tilde{\mu}_{\gamma},\tilde{K}_{\gamma}^{-1})$

, where:

$(\gamma_{1},\gamma_{2})'|\mathbb{\mathbf{Y}},a,A,b,\boldsymbol{\kappa},\Sigma,\sigma_{\eta}^{2}\sim N(\tilde{\mu}_{\gamma},\tilde{K}_{\gamma}^{-1})$

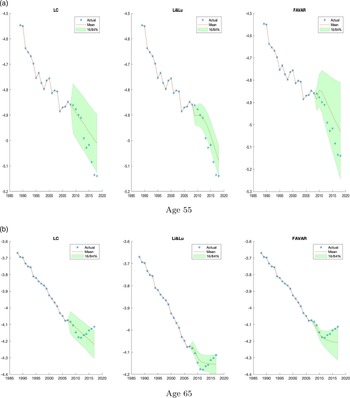

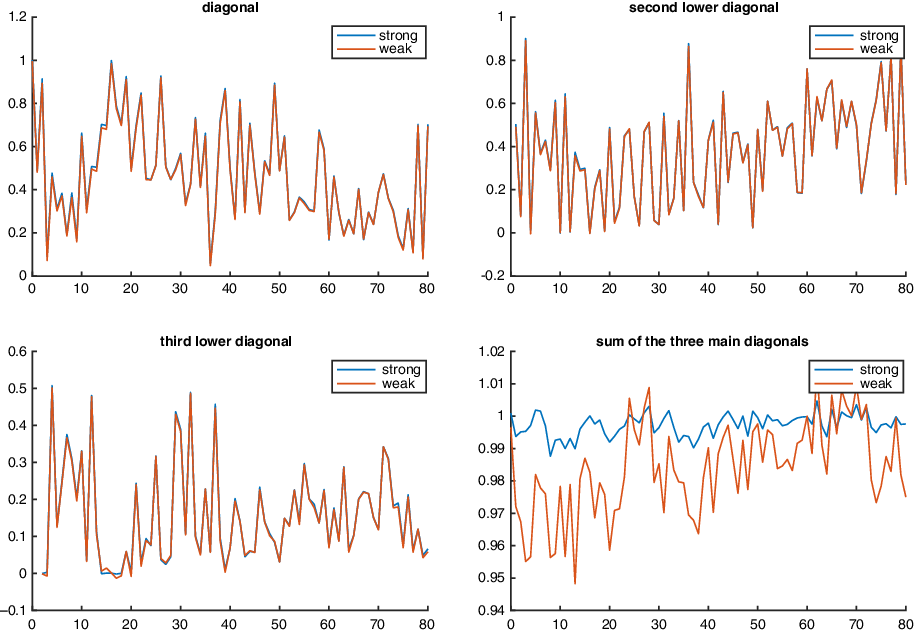

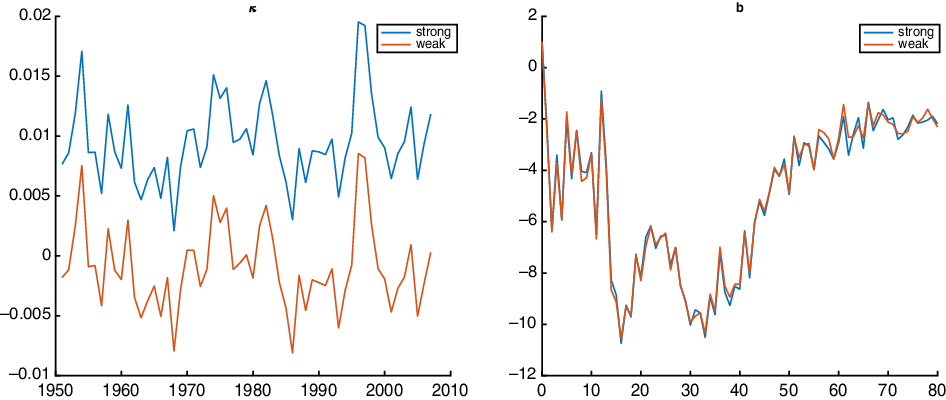

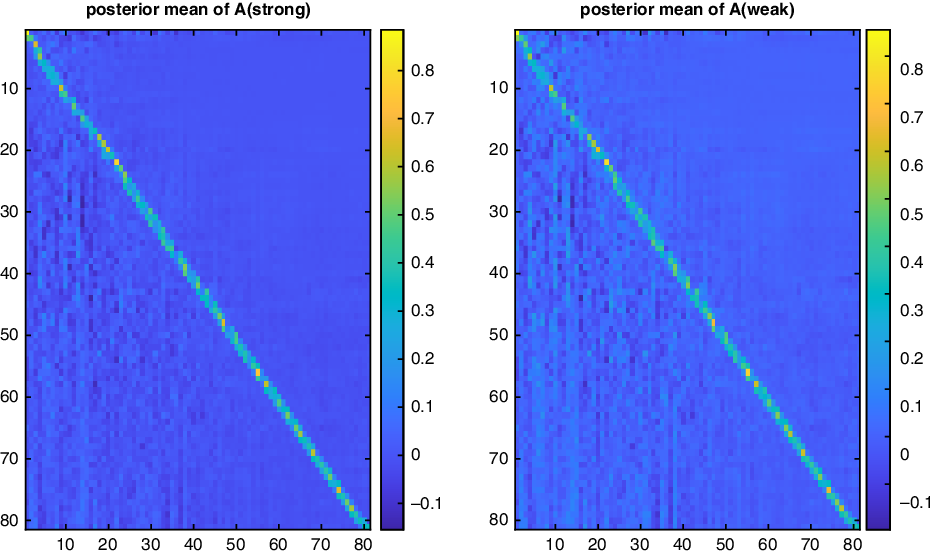

, where:  \begin{align*}\tilde{K}_{\gamma} & =\sum_{t=1}^{T-1}\left[\begin{array}{c@{\quad}c}1 & \kappa_{t}\\[5pt] \kappa_{t} & \kappa_{t}^{2}\end{array}\right]\left(\sigma_{\eta}^{2}\right)^{-1}+\left(\sigma^{2}\right)^{-1}\mathbb{I}_{2}\\[5pt]\tilde{\mu}_{\gamma} & =\tilde{K}_{\gamma}^{-1}\left(\sum_{t=1}^{T-1}\left[\begin{array}{c}\kappa_{t+1}\\[5pt]\kappa_{t}\kappa_{t+1}\end{array}\right]\left(\sigma_{\eta}^{2}\right)^{-1}+\left[\begin{array}{c}\left(\sigma^{2}\right)^{-1}\mu_{\gamma}\\[5pt]\left(\sigma^{2}\right)^{-1}\mu_{\gamma}\end{array}\right]\right)\end{align*}
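As an illustration of how such a Gaussian conditional can be drawn, the sketch below builds $\tilde{K}_{\gamma}$ and $\tilde{\mu}_{\gamma}$ from the formulas above and samples from $N(\tilde{\mu}_{\gamma},\tilde{K}_{\gamma}^{-1})$ via a Cholesky factor of the precision matrix. It is a minimal sketch under stated assumptions: the function and argument names are hypothetical, `kappa` is taken to hold $\kappa_{1},\ldots,\kappa_{T}$, and `mu_gamma` and `sigma2_prior` stand for the prior mean $\mu_{\gamma}$ and prior variance $\sigma^{2}$.

```python
import numpy as np

def draw_gamma(kappa, sigma2_eta, mu_gamma, sigma2_prior, rng):
    """Draw (gamma_1, gamma_2)' from N(mu_tilde, K_tilde^{-1})."""
    kappa = np.asarray(kappa, dtype=float)
    # Rows (1, kappa_t) for t = 1, ..., T-1, so Z'Z is the sum of the 2x2 blocks
    Z = np.column_stack([np.ones(kappa.size - 1), kappa[:-1]])

    # Posterior precision: data term plus prior precision (1 / sigma^2) I_2
    K = Z.T @ Z / sigma2_eta + np.eye(2) / sigma2_prior
    # Z' kappa_{2:T} stacks sum(kappa_{t+1}) and sum(kappa_t * kappa_{t+1})
    rhs = Z.T @ kappa[1:] / sigma2_eta + np.full(2, mu_gamma / sigma2_prior)
    mu = np.linalg.solve(K, rhs)

    # With K = L L', the vector L'^{-1} z has covariance K^{-1} for z ~ N(0, I)
    L = np.linalg.cholesky(K)
    z = rng.standard_normal(2)
    return mu + np.linalg.solve(L.T, z), mu, K
```

Working with the precision matrix avoids forming $\tilde{K}_{\gamma}^{-1}$ explicitly. The same pattern applies to the other Gaussian blocks, where the precision matrices are much larger.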