Introduction

Anxiety and depression are common and impactful in epilepsy, yet under-recognized and undertreated. Individuals with epilepsy have 2–5 times higher risk of lifetime anxiety or depression than the general population [Reference Fiest, Dykeman and Patten1,Reference Rai, Kerr, McManus, Jordanova, Lewis and Brugha2], with increased risk before and after epilepsy diagnosis [Reference Hesdorffer, Ishihara, Mynepalli, Webb, Weil and Hauser3]. Among epilepsy samples, up to 50% have clinically relevant anxiety or depression symptoms on screeners at a given time [Reference Munger Clary, Snively and Hamberger4,Reference Fox, Wood and Phillips5]. Anxiety and depression are greater independent predictors of poor quality of life than seizure frequency and are associated with more severe epilepsy, medication side effects, cognitive concerns, increased healthcare costs, and mortality [Reference Hamilton, Anderson and Dahodwala6–Reference Kwan, Yu, Leung, Leon and Mychaskiw11]. Despite this, surveys distributed to leading epileptologists by the American Epilepsy Society and international care professionals by the International League Against Epilepsy indicated only 10–23% screen using validated measures, and limited time is a key barrier [Reference Gandy, Modi and Wagner12,Reference Bermeo-Ovalle13]. Without standardized instruments, symptoms are often unrecognized [Reference Scott, Sharpe, Hunt and Gandy14], and substantial literature indicates most people with mental health problems and epilepsy are not treated [Reference Scott, Sharpe and Thayer15].

The American Academy of Neurology (AAN) recognized the importance of screening with validated instruments by introducing the depression and anxiety screening for patients with epilepsy measure, requiring anxiety and depression screening at every visit [Reference Patel, Baca and Franklin16]. This is still recommended as a quality measure for epilepsy care. While this and other consensus statements support anxiety and depression screening [Reference Kerr, Mensah and Besag17,Reference Valente, Reilly and Carvalho18], there remains a paucity of literature on implementation strategies for epilepsy clinics. Implementation science utilizing behavior change theories and evaluation frameworks to develop and assess strategies can support implementation success and provide generalizable practical knowledge. Electronic health record (EHR)-based strategies involving support staff and patient screening self-completion may increase uptake and overcome time-related barriers [Reference Satre, Anderson and Leibowitz19].

In the present study, a strategy for implementing clinic support staff-facilitated, EHR-based anxiety and depression screening in an epilepsy clinic was developed using the Capability, Opportunity, Motivation-Behavior (COM-B) behavior change wheel framework [Reference Michie, van Stralen and West20]. This strategy was incorporated into a comprehensive epilepsy clinic and a pre-post evaluation was conducted using RE-AIM (Reach, Effectiveness, Adoption, Implementation, and Maintenance) [Reference Glasgow, Harden and Gaglio21], with primary outcome effectiveness (visit proportion meeting depression and anxiety quality measure).

Materials and methods

Ethics and design

This is a single-site, pre-post implementation study using EHR-based data. IRB approval was granted with waiver of informed consent for the implementation evaluation, which was an exploratory objective of a parent study (NCT03879525). Additional pre-implementation timeframes were evaluated by analyzing data collected under preexisting IRB-approved protocols, also with waiver of informed consent. Waivers were approved because data collection posed minimal risk, involved no research-specific patient interactions (only retrospective collection of routine care data), and obtaining consent was impracticable. Data handling followed procedures designed to maintain confidentiality.

Setting

This study was conducted in the adult-focused clinic of an academic tertiary care epilepsy center in the Southeastern United States with six epileptologists and one epilepsy-focused physician assistant. Support staff included: certified medical assistants (CMAs) with primary responsibility to room patients before visits; telephone triage nurses who sometimes roomed patients; and float pool staff (primarily CMAs) from other departments intermittently assigned to room neurology patients. The epilepsy clinic was a designated section of a large multispecialty tertiary neurology clinic with shared staff. Pre-implementation clinic rooming involved calling patients from the waiting room, obtaining vitals, moving to a visit room for medication/allergy verification, mandated screening such as fall risk, and alerting providers that a patient is ready before departing the clinic room, where the patient awaited provider arrival.

Screening instruments (evidence-based intervention)

The Generalized Anxiety Disorder-7 (GAD-7) and Neurological Disorders Depression Inventory-Epilepsy (NDDI-E) are freely available, validated in epilepsy and multiple languages, widely recommended for use in epilepsy, and meet the AAN depression and anxiety screening epilepsy quality measure [Reference Bermeo-Ovalle13,Reference Patel, Baca and Franklin16,Reference Munger Clary and Salpekar22–Reference Gilliam, Barry, Hermann, Meador, Vahle and Kanner24]. The original GAD-7 validation suggested scores ≥10 detect generalized anxiety disorder; scores 0–4 are considered normal, with 5–9, 10–14, and 15–21 indicating mild, moderate, and severe anxiety, respectively [Reference Spitzer, Kroenke, Williams and Lowe25]. Scores on the NDDI-E, an epilepsy-specific depression scale, range from 6 to 24, with an original validation cutoff of >15 for detecting major depressive episodes [Reference Gilliam, Barry, Hermann, Meador, Vahle and Kanner24]; recent meta-analyses suggest >13 may be optimal [Reference Kim, Kim, Yang and Kwon26]. The NDDI-E item addressing passive suicidality (“I’d be better off dead”) is validated as a suicidality screener, with responses of 3 (sometimes) or 4 (always or often) screening positive [Reference Mula, McGonigal, Micoulaud-Franchi, May, Labudda and Brandt27]. Quality of life was assessed using the Quality of Life in Epilepsy-10 (QOLIE-10), with scores ranging 0–100 (higher scores indicate better quality of life) [Reference Jones, Ezzeddine, Herman, Buchhalter, Fureman and Moura28]. This instrument is feasible in practice and meets the AAN quality of life assessment for patients with epilepsy quality measure [Reference Patel, Baca and Franklin16,Reference Moura, Schwamm and Moura Junior29].
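
The cutoffs described above can be expressed as a short scoring sketch. This is illustrative only and is not the EHR logic used in the study: the function names are hypothetical, the default NDDI-E cutoff of >15 is the original validation value (>13 can be passed instead), and the 1–4 item range is assumed from the 6-item, 6–24 total score.

```python
def gad7_severity(score: int) -> str:
    """Map a GAD-7 total (0-21) to the severity bands from the original validation."""
    if not 0 <= score <= 21:
        raise ValueError("GAD-7 total must be between 0 and 21")
    if score <= 4:
        return "normal"
    if score <= 9:
        return "mild"
    if score <= 14:
        return "moderate"
    return "severe"


def nddie_positive(score: int, cutoff: int = 15) -> bool:
    """Return True when an NDDI-E total (6-24) is strictly above the chosen cutoff."""
    if not 6 <= score <= 24:
        raise ValueError("NDDI-E total must be between 6 and 24")
    return score > cutoff


def passive_suicidality_positive(item_response: int) -> bool:
    """Responses of 3 (sometimes) or 4 (always or often) screen positive."""
    if not 1 <= item_response <= 4:
        raise ValueError("NDDI-E item responses are assumed to run from 1 to 4")
    return item_response >= 3


if __name__ == "__main__":
    # Hypothetical patient: GAD-7 total 11, NDDI-E total 14, suicidality item 2.
    print(gad7_severity(11))                # moderate band
    print(nddie_positive(14))               # original cutoff (>15)
    print(nddie_positive(14, cutoff=13))    # meta-analysis cutoff (>13)
    print(passive_suicidality_positive(2))
```

Keeping the NDDI-E cutoff as a parameter reflects that the text cites two candidate thresholds rather than a single agreed value.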

Implementation strategy

The implementation strategy was developed using the Capability, Opportunity, Motivation-Behavior system (COM-B), Behavior Change Wheel Framework [Reference Michie, van Stralen and West20]. Preliminary decisions to focus on enhanced EHR features and incorporating them into existing support staff-driven rooming/check-in were informed by the epilepsy center’s prior experience using research staff to conduct screening outside the EHR (during a pilot study involving 3 physicians), existing survey data on time-related barriers to depression and anxiety screening [Reference Bermeo-Ovalle13], and stakeholder input from epilepsy providers, psychiatrists, clinic staff and administration. Strategies such as using iPads upon arrival with front desk staff were considered but not compatible with existing clinic policies. Epilepsy provider stakeholder input was also informed by experience using existing EHR tools, including flowsheet-based versions of validated anxiety, depression, and quality of life instruments obtained via practice-based research network participation [Reference Narayanan, Dobrin and Choi30]. These required manual entry by the provider during interview-based instrument administration or following patient self-completion on paper.

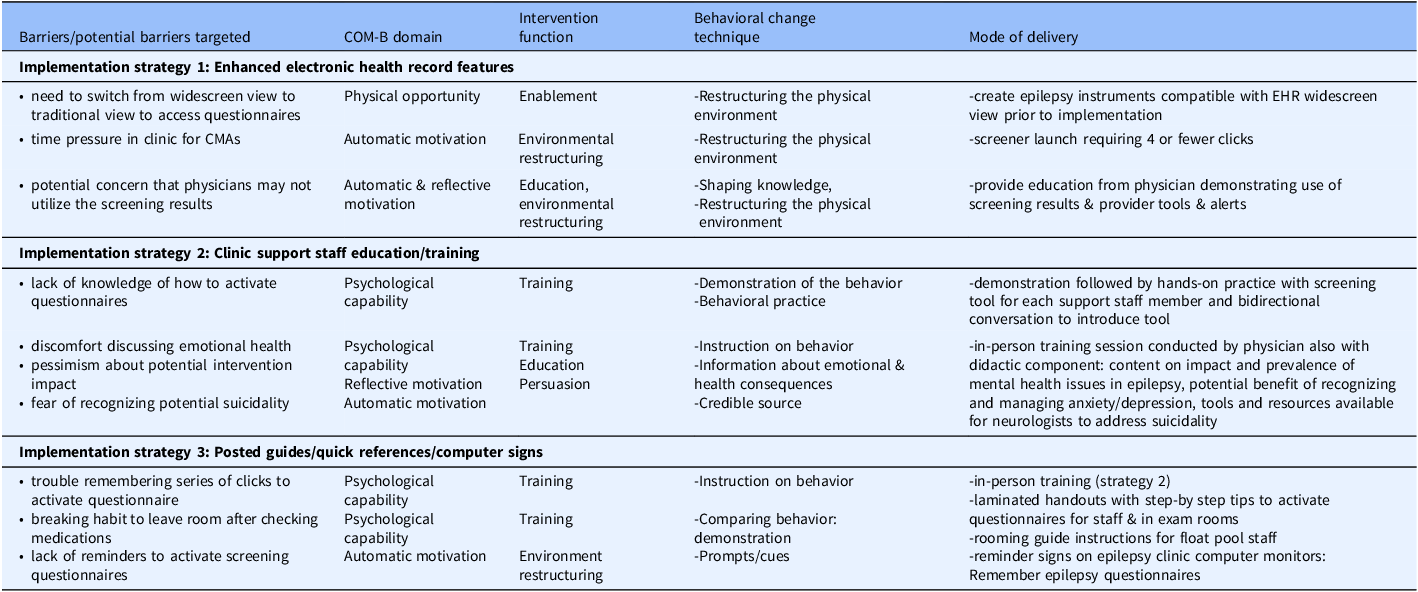

The implementation strategy focused on support staff/CMA behavior and was developed by mapping barriers to the COM-B framework and identifying aligned intervention functions of the behavior change wheel for strategy development (Table 1). Strategy 1, Enhanced EHR features, included enabling patient self-completion of questionnaires on clinic computers at the end of check-in while awaiting provider arrival. Questionnaire activation involved support staff clicking a link to activate secure patient portal questionnaire entry on clinic computers, with results filing directly into the EHR. Using these electronic questionnaires required a step to attach them to clinic encounters prior to visits. This was accomplished manually by a graduate student during the post-implementation evaluation, but there was potential for future automation. The order of questionnaire presentation was dictated by EHR system settings, which the study team and collaborating EHR analysts were unable to change. Questionnaires were presented in the following sequence: QOLIE-10, GAD-7, NDDI-E. The other implementation strategy components were Strategy 2, Clinic support staff education/training, and Strategy 3, Posted guides and reference materials (Table 1). The hands-on support staff education session (Strategy 2) was delivered to core CMAs with primary patient rooming responsibility and nurses who worked in the same area, while Strategy 3 features were available to other support staff intermittently rooming epilepsy patients.

Table 1. Implementation strategy for anxiety and depression screening

Note: COM-B: Capability, Opportunity, Motivation-Behavior framework [Reference Michie, van Stralen and West20].

While the implementation strategy’s primary focus was support staff behavior to facilitate screening, tools and education were also delivered to epilepsy providers, who all agreed to the support staff-focused implementation strategy in clinic. Providers attended a brief live education session and received printed reference materials on relevant epilepsy quality measures and how to activate EHR questionnaires, SmartLinks to pull anxiety and depression scores into notes, bright coloring to highlight positive screens or passive suicidality, a pop-up alert for positive suicidality screen, and training and tools for responding to suicidality. Suicidality tools included a handout with scripting and a process to evaluate for active suicidality and respond, along with a smart phrase for developing action plans for passive suicidality [Reference Giambarberi and Munger Clary31]. Because the strategy focused on support staff, providers did not receive specific instructions regarding what to do if patients had not completed screening instruments when the provider was ready to see them.

Evaluation

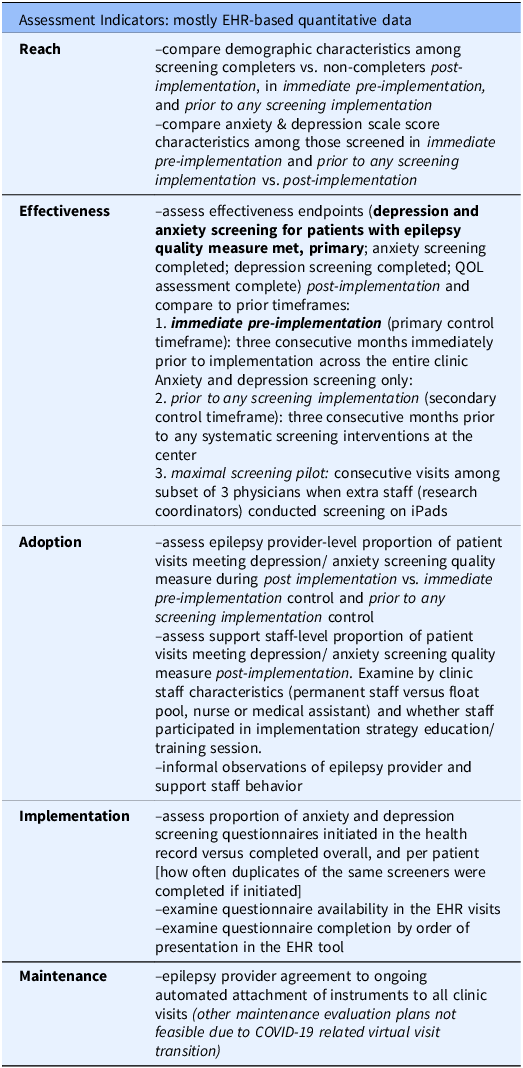

A RE-AIM-based [Reference Glasgow, Harden and Gaglio21] evaluation plan was developed (Table 2). When relevant, 3 months before implementation (immediate pre-implementation) was compared with 5 months after implementation (post-implementation). To assess effectiveness, reach, and provider-level adoption, an additional timeframe of 3 consecutive months was also examined; this preceded any dedicated screening intervention (only paper screeners were available in clinic). All completed visits in the adult-focused epilepsy clinic were included for each timeframe.

Table 2. Evaluation plan

Note: QOL, Quality of life.

Reach was evaluated by characterizing demographics of individuals who completed both anxiety and depression screeners and testing for differences between screening completers and non-completers. Anxiety and depression scores among screened individuals were calculated. The primary outcome (Effectiveness) was the proportion of completed clinic visits with both anxiety and depression screening completed, thus meeting the depression and anxiety screening for patients with epilepsy quality measure [Reference Patel, Baca and Franklin16]. The proportions of visits with anxiety, depression, and quality of life instruments completed were also calculated separately. Each of these endpoints was calculated for immediate pre-implementation and post-implementation, and all but quality of life were calculated for the timeframe prior to any screening intervention (quality of life data was not collected under the relevant protocol). The primary effectiveness outcome was also calculated during a limited pilot of maximal screening attempts for consecutive visits among 3 epileptologists (maximal screening pilot). The maximal screening pilot was conducted by research assistants for pragmatic trial recruitment and clinical care [Reference Munger Clary, Croxton and Allan32,Reference Ongchuan Martin, Sadeghifar and Snively33], concluding >3 months before immediate pre-implementation.
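
The primary outcome definition above (both instruments completed at a completed visit) can be sketched as a small calculation. The record structure, field names, and example visits below are hypothetical and are not drawn from the study data or its EHR extract.

```python
from dataclasses import dataclass


@dataclass
class Visit:
    """One completed clinic visit (hypothetical record layout)."""
    gad7_done: bool
    nddie_done: bool
    qolie10_done: bool = False


def meets_quality_measure(visit: Visit) -> bool:
    """The measure is met only when BOTH GAD-7 and NDDI-E were completed."""
    return visit.gad7_done and visit.nddie_done


def attainment_proportion(visits: list[Visit]) -> float:
    """Proportion of completed visits meeting the screening quality measure."""
    if not visits:
        raise ValueError("at least one completed visit is required")
    return sum(meets_quality_measure(v) for v in visits) / len(visits)


if __name__ == "__main__":
    # Four made-up visits: two with both screeners, one partial, one with none.
    example = [
        Visit(gad7_done=True, nddie_done=True, qolie10_done=True),
        Visit(gad7_done=True, nddie_done=True),
        Visit(gad7_done=True, nddie_done=False),
        Visit(gad7_done=False, nddie_done=False, qolie10_done=True),
    ]
    print(attainment_proportion(example))
```

A visit with only one screener, or only the QOLIE-10, does not count toward the measure in this sketch, which mirrors the "both completed" definition in the text.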

Adoption was evaluated at provider and support staff levels, as the proportion of visits meeting the depression and anxiety screening quality measure post-implementation, and for providers during immediate pre-implementation and prior to any screening implementation. While the main implementation strategy focus was on support staff, provider adoption was important, both because provider behavior had potential to impact screening completion, and because quality measures are calculated at the provider level. Process measures included grouping support staff by neurology staff vs. float pool, by profession (CMA vs. nursing), by rooming volume, and by attendance at hands-on training (Strategy 2). Informal observations and retrospective reflections regarding provider and support staff behavior were also collected from participating study authors. Implementation was evaluated as the proportion of questionnaires initiated in clinic/left incomplete compared to completed. Process measures included whether duplicate instances of instruments were completed, proportion of visits with questionnaires attached to encounters, and method of questionnaire completion (manual EHR entry versus electronic questionnaires). Although at the time of project conception, there was intention to evaluate Maintenance over one year following post-implementation, this was not done due to transition to nearly 100% virtual visits during post-implementation, because of COVID-19. These virtual visits had no support staff role in the workflow and thus disrupted support-staff-based elements of the implementation strategy. However, the questionnaires attached to visits for the clinic-based implementation strategy were available in the patient portal where patients logged in for video visits. These questionnaires could be completed before visits by patients who noticed the questionnaire section in patient portal.

Data collection, implementation timeline

Data for immediate pre-implementation and post-implementation was extracted from the Epic Clarity database by experienced programmers and verified by the study team. The implementation strategy was developed in 2019, with some limited piloting of electronic screening questionnaires available across some providers prior to full implementation (overlapping in part with immediate pre-implementation). Support staff and provider training was completed the first week of December 2019 and questionnaire in-clinic launch strategy was initiated on December 12, 2019, with questionnaire tools attached to EHR encounters and prompts present in epilepsy clinic rooms. Resources for float pool staff were disseminated on December 16, 2019 and in-person support for clinic team members was offered on December 17, 2019 (first high-volume clinic during post-implementation). For analysis, post-implementation spanned December 12, 2019–May 14, 2020. Within post-implementation, by March 24, 2020 nearly all clinic visits became virtual due to COVID-19. While support staff questionnaire activation was no longer possible and support staff had no role in virtual visit workflow, questionnaires were still attached to virtual visits and were accessible to patients previsit within the patient portal, and other EHR tools remained available.

Preliminary implementation monitoring conducted in 2020 focused on effectiveness, implementation, and adoption during post-implementation. Duration of post-implementation monitoring and data analysis was determined by parent study duration [Reference Munger Clary, Snively and Topaloglu34], and an immediate pre-implementation timeframe lasting 3 months was felt to be sufficient to account for month-to-month variability in individual provider clinic volumes and provide an adequate sample size. Final data extraction and full analysis including immediate pre-implementation (Sept 12, 2019–Dec 11, 2019) was completed in 2023–2024. Data had been manually collected from the EHR for additional pre-implementation comparison timeframes and deposited in REDCap databases. Specifically, data was collected on consecutive completed epilepsy clinic visits during 3 months in 2017 prior to any screening implementation, and among 3 physicians during the 2018–2019 maximal screening pilot. Some analyses for prior to any screening implementation were conducted in 2025.

Statistical analysis

Distributions were examined and descriptive statistics were generated for pre-implementation and post-implementation timeframes using SAS version 9.4. Post-implementation was further subdivided into clinic post-implementation (December 12, 2019–March 23, 2020) and virtual post-implementation (March 24, 2020–May 14, 2020). Chi-square and Wilcoxon rank-sum tests were conducted to compare demographics among individuals completing quality measure-satisfying depression and anxiety screening versus non-completers and to compare quality measure attainment rates pre- and post-implementation. Two-sample t-tests were used to compare mean GAD-7 and NDDI-E scores during immediate pre-implementation and prior to any screening implementation with post-implementation. P values <0.05 were considered statistically significant.
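
As a rough illustration of the chi-square comparison of quality measure attainment, the sketch below computes a Pearson chi-square statistic for a 2×2 table in plain Python. It is not the SAS procedure used in the study, and it assumes no continuity correction. The counts are assumptions: 69 of 546 visits for immediate pre-implementation is consistent with the reported 12.6% and the 26/69 figure in the Implementation results, and 275 of 943 is back-calculated from the reported 29.2% rather than stated in the text.

```python
import math


def chi_square_2x2(a: int, b: int, c: int, d: int) -> tuple[float, float]:
    """Pearson chi-square (1 df, no continuity correction) for the table [[a, b], [c, d]].

    Returns the statistic and its p value. For 1 degree of freedom the upper-tail
    probability equals erfc(sqrt(chi2 / 2)).
    """
    n = a + b + c + d
    row1, row2 = a + b, c + d
    col1, col2 = a + c, b + d
    if min(row1, row2, col1, col2) == 0:
        raise ValueError("every row and column total must be positive")
    chi2 = n * (a * d - b * c) ** 2 / (row1 * row2 * col1 * col2)
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


if __name__ == "__main__":
    # Rows: timeframe; columns: measure met / not met (assumed counts, see lead-in).
    pre_met, pre_total = 69, 546
    post_met, post_total = 275, 943
    chi2, p = chi_square_2x2(pre_met, pre_total - pre_met,
                             post_met, post_total - post_met)
    print(f"chi-square = {chi2:.2f}, p = {p:.2e}")
```

Under these assumed counts the p value falls well below the 0.05 threshold, in line with the p < 0.0001 reported for this comparison.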

Results

Sample characteristics

Immediate pre-implementation included 546 completed visits (1258 scheduled, 632 canceled, 78 no-shows, 2 initiated but incomplete visits). Post-implementation included 943 completed visits (2335 scheduled, 1276 canceled, 113 no-shows, and 3 incomplete). Canceled visits include those canceled weeks to months ahead of time due to provider inpatient service and cancelations due to pandemic-related shutdowns. Of 943 completed post-implementation visits, 631 occurred during clinic post-implementation, with 312 during virtual post-implementation. The other comparison timeframes had 573 consecutive visits over 3 months prior to any screening implementation, then 1152 consecutive visits across 3 epileptologists in the maximal screening pilot. There were 30 support staff who roomed patients during post-implementation, including 4 core CMAs primarily rooming epilepsy patients, 8 neurology CMAs with other primary responsibilities, 3 nurses, and 15 float pool staff.

Reach

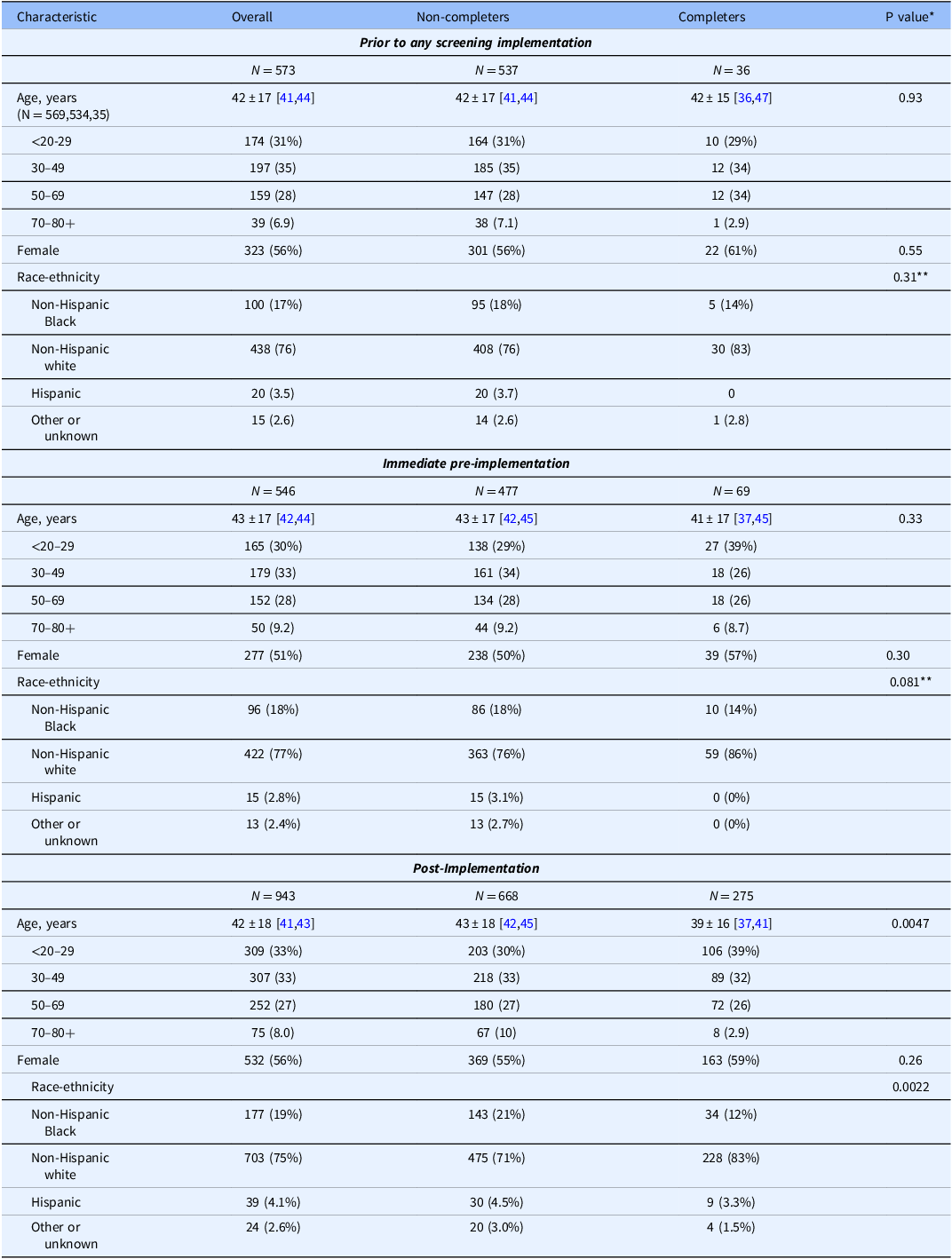

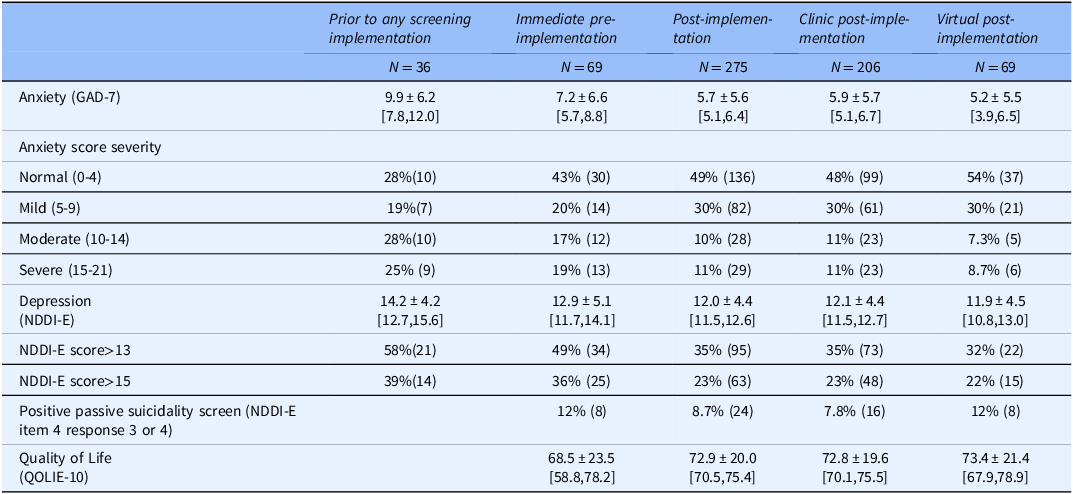

Table 3 shows demographics of individuals who completed anxiety and depression screening versus those who were not screened during each timeframe (prior to any screening implementation, immediate pre-implementation, and post-implementation). Post-implementation, older individuals and non-white or Hispanic patients were significantly less likely to be screened than younger or non-Hispanic white individuals. While not statistically significant, the age and race/ethnicity patterns were similar during immediate pre-implementation (Table 3). Anxiety and depression scores were higher among individuals screened during both pre-implementation timeframes than post-implementation (Table 4), but differences were only statistically significant for the timeframe prior to any screening implementation (GAD-7: p < 0.001, NDDI-E: p = 0.0053; immediate pre-implementation GAD-7: p = 0.058, NDDI-E: p = 0.16).

Table 3. Reach: pre- and post-implementation instrument completion by patient demographics

Note: GAD-7 and NDDI-E completion status: both completed (quality measure met) versus not both. Count (column %), mean±SD, and [95% Confidence Interval]. *Wilcoxon rank sum and chi-square tests for comparison of completer and non-completer groups. **Non-Hispanic white versus all other groups.

Table 4. Reach: pre- and post-implementation group-level depression and anxiety scores among instrument completers

Note: Mean±SD [95% Confidence Interval] or % (N). Instrument completers were defined as individuals having completed both the GAD-7 and the NDDI-E. Timeframes: prior to any screening implementation, March 1, 2017–May 31, 2017; immediate pre-implementation, Sept 12, 2019–Dec 11, 2019; post-implementation, Dec 12, 2019–May 14, 2020; clinic post-implementation, Dec 12, 2019–March 24, 2020; virtual post-implementation, March 25, 2020–May 14, 2020. Total N for the QOLIE-10: Immediate pre-implementation, 25; Post-implementation, 265; Clinic post-implementation, 206; Virtual post-implementation, 61. QOLIE-10 and item-level responses on NDDI-E were not collected during the timeframe prior to any screening implementation.

Effectiveness

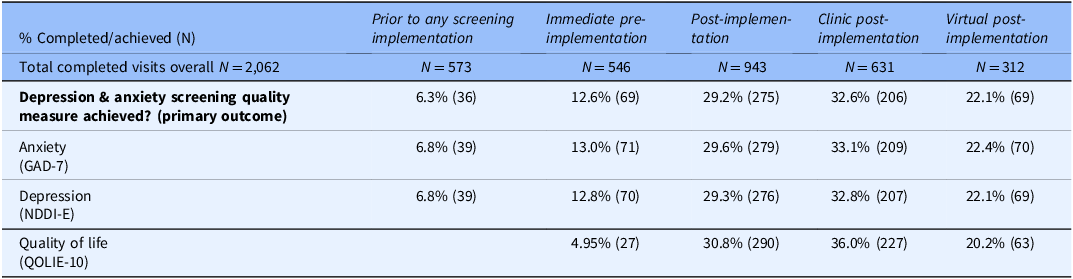

During immediate pre-implementation, 12.6% of completed visits (95% CI 10.1%–15.7%) met the depression and anxiety screening quality measure, with both GAD-7 and NDDI-E completed (hereafter screening completion, Table 5). Screening completion increased significantly to 29.2% post-implementation (CI 26.4%–32.1%, p < 0.0001), and clinic post-implementation had higher screening completion than virtual post-implementation (32.6% vs. 22.1%). Quality of life measurement increased substantially post-implementation compared to immediate pre-implementation (Table 5). Among the post-implementation visits with questionnaires successfully attached to the EHR and thus fully available for support staff-initiated screening (878 visits), screening completion was 31.3%.
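
The text does not state which method was used for the 95% confidence intervals. As one possibility, the sketch below computes a Wilson score interval in plain Python. The count of 69 completed screenings among 546 visits is an assumption: it is consistent with the reported 12.6% and with the 26/69 figure in the Implementation results. With these inputs the Wilson interval appears to agree, to rounding, with the 10.1%–15.7% reported above, but this does not confirm the method the authors used.

```python
import math
from statistics import NormalDist


def wilson_interval(successes: int, n: int, confidence: float = 0.95) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion (illustrative sketch)."""
    if n <= 0 or not 0 <= successes <= n:
        raise ValueError("need n > 0 and 0 <= successes <= n")
    # Two-sided critical value, about 1.96 for 95% confidence.
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    p_hat = successes / n
    denom = 1 + z * z / n
    center = (p_hat + z * z / (2 * n)) / denom
    half_width = z * math.sqrt(p_hat * (1 - p_hat) / n + z * z / (4 * n * n)) / denom
    return center - half_width, center + half_width


if __name__ == "__main__":
    # Assumed count for immediate pre-implementation: 69 of 546 completed visits.
    low, high = wilson_interval(69, 546)
    print(f"{69 / 546:.1%} (95% CI {low:.1%} to {high:.1%})")
```

The Wilson interval is often preferred over the simple normal approximation when proportions are far from 50%, which is the case for the pre-implementation rates here.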

Table 5. Effectiveness of implementation strategy: instrument completion, quality measure attainment

Note: depression & anxiety screening quality measure was achieved if both the anxiety and depression screening instruments were completed. QOLIE-10 was not collected during prior to any screening implementation.

During the timeframe prior to any screening implementation, GAD-7 and NDDI-E were both completed for 36 of 573 consecutive visits (6.28%, CI 4.57%–8.58%). Post-implementation screening completion was significantly higher than in this alternative control period (p < 0.0001). During the maximal screening pilot (a research staff member dedicated to approaching patients for screening right after clinic staff check-in), of 1152 completed visits, staff approached 1012 individuals to attempt screening, and 884 completed anxiety and depression screening (76.7% of completed visits). Of the 128 individuals approached but not screened, 9 refused screening (0.89% of those approached) and 119 (11.8%) were not screened due to cognitive impairment. Both are allowable exclusions for the quality measure; removing them from the 1152 completed visits yields measure attainment of 884/1024 (86.3%).
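
The maximal screening pilot figures can be checked with a short calculation that contrasts the raw completion rate with the rate after removing allowable exclusions from the denominator. The function and parameter names are hypothetical; the counts are those reported in the paragraph above.

```python
def measure_attainment(completed_visits: int, screened: int,
                       refused: int, cognitive_exclusions: int) -> tuple[float, float]:
    """Return (raw completion rate, rate after removing allowable exclusions).

    Refusals and cognitive impairment are treated as allowable exclusions,
    so both are subtracted from the denominator for the adjusted rate.
    """
    eligible = completed_visits - refused - cognitive_exclusions
    if completed_visits <= 0 or eligible <= 0:
        raise ValueError("denominators must be positive")
    if screened > eligible:
        raise ValueError("screened count cannot exceed eligible visits")
    return screened / completed_visits, screened / eligible


if __name__ == "__main__":
    raw, adjusted = measure_attainment(
        completed_visits=1152, screened=884, refused=9, cognitive_exclusions=119
    )
    print(f"raw: {raw:.1%}, with exclusions removed: {adjusted:.1%}")
```

The adjusted denominator is 1152 - 9 - 119 = 1024, which matches the 884/1024 figure in the text.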

Adoption

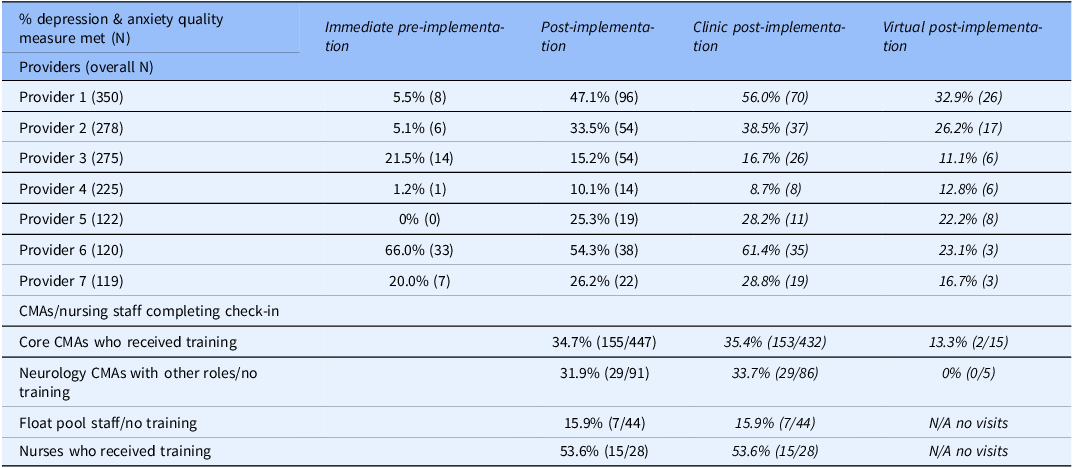

At both individual provider and support staff levels, there was substantial variability in screening completion. Provider-level screening completion is summarized in Table 6, with immediate pre-implementation screening completion rates ranging from 0% to 66%. The 4 providers with the highest immediate pre-implementation screening rates had some screenings completed via EHR questionnaires (thus in part reflecting pre-piloting of the EHR questionnaire component of the implementation strategy). Post-implementation, individual rates ranged from 10% to 54%, with most providers having higher screening completion post-implementation than during immediate pre-implementation. Also, nearly all epilepsy providers had higher screening rates during clinic post-implementation than virtual post-implementation. During the timeframe prior to any screening implementation, individual provider screening completion varied from 0% to 18%, with three providers having screening completion of 0%, two <5%, and two over 10%. In this timeframe, five of seven providers were the same as during immediate pre-implementation and post-implementation, but two were distinct individuals.

Table 6. Adoption: provider and certified medical assistant (CMA)/Nurse-level instrument completion

Note: CMA/nursing staff level data during virtual post-implementation reflects the small number of in-person clinic visits conducted during that timeframe.

Post-implementation screening completion was highest for nurses and lowest for float pool (Table 6), and completion among individual support staff ranged 0–80%. Considering individual completion rates and check-in visit volume, nurses had 44–75% completion (4–16 visits per nurse), core CMAs had 32–40% completion (45–174 visits per CMA), other neurology CMAs had 0–46% completion (5–39 visits per CMA), and float pool had 0–80% completion (1–16 visits per staff member). The support staff-directed training session (Strategy 2) was attended by all nurses and core CMAs, with overall screening completion rate of 35.8% versus 26.7% among those who did not receive training but had access to posted guides/reminders and the float pool rooming document (Strategy 3).

Distribution of support staff type was examined across providers. Among different providers, 36.0%–60.5% of visits were roomed by core CMAs, 6.7%–14% by other neurology CMAs, 1.0%–5.7% by neurology nurses, and 1.3%–7.2% by float pool staff. Providers with high and low screening completion were represented at either end of these ranges for different support staff groups. Questionnaire attachment to visits also varied by provider, with GAD-7/NDDI-E attachment ranging 87.8%–97.1% across providers. The two providers having highest provider-level post-implementation screening completion had the two highest proportions of assigned questionnaires, and the provider with lowest quality measure attainment had the lowest proportion of questionnaire-assigned visits.

Informal observations of provider and support staff behavior during post-implementation included varied provider instruction directly to support staff: instructing them not to activate screeners for their patients, or not to activate screeners if the provider is ready to see a patient, or asking support staff to activate screeners for patients if screening had been missed. Some providers asked for electronic tools to indicate if a patient declined screening or screening was not appropriate due to cognitive limitations. Modification of EHR tools and support staff re-training to enable this was not feasible before COVID-19-related virtual visit transition. Some providers attached questionnaires to visits if they found this had not been done before a visit. In a retrospective post-implementation discussion among providers regarding factors they recall influencing screening completion, providers varied in their expectations for screening completion. For example, multiple providers stated if patients were roomed late relative to their scheduled visit time, they would interrupt patients who had initiated questionnaires and start the provider portion of the visit, and some stated they would interrupt if it seemed patients were taking a long time to complete screeners, while others indicated they would wait for screener completion regardless of timing. Some providers recalled support staff would often ask if they desired screening to be done for individual patients, regardless of whether arrival was on-time or delayed.

Implementation

During immediate pre-implementation, 40% of visits had questionnaires assigned (instrument pre-piloting), with 37.7% of screening completed via EHR questionnaire (26/69). The remainder were documented via manual provider entry into the EHR. All post-implementation screening completions were via EHR questionnaires, except one duplicate entry described below.

During post-implementation, 878 of 943 completed visits (93.1%) had GAD-7 and NDDI-E questionnaires attached, while 873 had QOLIE-10 attached (92.6%). Of these attached questionnaires, only 2 visits (0.23%) had initiated but incomplete GAD-7, and 0 NDDI-Es were initiated but incomplete. Five visits (0.5%) had initiated but incomplete QOLIE-10. Fourteen visits had QOLIE-10 completion only, with neither GAD-7 nor NDDI-E completed. Duplicate instrument completion was observed for one visit during immediate pre-implementation and one post-implementation. In each case, the provider manually entered the second score.

Maintenance

While evaluation of maintenance was not formally conducted because it would not be meaningful after COVID-related transition to virtual visits with no support staff role in visit check-in, at end of post-implementation all epilepsy center providers (100%, 7/7) agreed to ongoing automated attachment of GAD-7, NDDI-E, and QOLIE-10 for all adult epilepsy clinic visits. This practice has been sustained for more than 4 years.

Discussion

This theory-based implementation strategy for anxiety and depression screening using existing staff in an epilepsy clinic significantly increased quality measure attainment overall and for most providers, but a large screening gap remained. Not surprisingly, the strategy did not achieve screening levels transiently attained in a subset of the practice via a labor-intensive maximal screening pilot (using an extra research staff member, not sustainable for practical use). Reach of anxiety and depression screening was biased toward younger patients and whites/non-Hispanics post-implementation. Significant provider and support staff-level variability occurred, with better performance observed among support staff with highest implementation strategy exposure.

This work is a notable addition to the epilepsy mental health screening literature in employing a theory-based implementation strategy and framework-based evaluation, and by incorporating the strategy using only existing clinical staff, requiring minimal staff time (≤4 clicks to activate questionnaires) and using scalable automatable EHR features. While a notable screening gap remained, screening rates more than doubled post-implementation compared to immediate pre-implementation (12.6% to 29.2%), even though the latter likely had artificially elevated screening due to electronic tool pre-piloting. This increase in screening is clinically relevant, as it would result in >200 additional screenings per year in this clinic, and thus increase opportunities to close treatment gaps for numerous individuals with anxiety and/or depression. Further, screening more than quadrupled compared to the timeframe prior to any screening implementation, so the potential impact of this strategy may be higher in some settings.

This COM-B, behavior change wheel-based implementation strategy represents a more realistic real-world clinical care circumstance than prior epilepsy screening publications, and screening completion of close to one-third of visits during clinic post-implementation is similar to some prior publications when accounting for all epilepsy visits. Previously published work on anxiety and depression screening or quality of life assessment in epilepsy required additional staff time (usually research staff) or resources such as iPads or external apps, and these studies reported anxiety/depression screening or quality of life completion rates of 31.6%, 44.8%, and 62.7% [Reference Fox, Wood and Phillips5,Reference Moura, Schwamm and Moura Junior29,Reference Syed, Marawar, Basha and Zutshi35]. Further, most prior epilepsy efforts involved screeners completed outside the EHR, requiring provider review on paper/subsequent scanning into the EHR [Reference Moura, Schwamm and Moura Junior29,Reference Ongchuan Martin, Sadeghifar and Snively33,Reference Syed, Marawar, Basha and Zutshi35–Reference Lim, Wong and Chee37]. The most successful EHR-based screening effort of these involved sending screening questionnaires in the EHR portal 48 hours before a visit, followed by a reminder call with a screening-specific reminder [Reference Fox, Wood and Phillips5]. Layered approaches such as this and others [Reference Satre, Anderson and Leibowitz19], in which a series of methods is used to screen individuals who do not initially complete screening, may be needed to close screening gaps.

Our analysis demonstrated screening completers post-implementation were younger and more likely to be white/non-Hispanic than non-completers, which highlights the importance of future approaches to enhance equity in screening implementation strategies. This finding is consistent with prior general population literature indicating older adults were less likely to be assessed [Reference Willborn, Barnacle, Maack, Petry, Werremeyer and Strand38] and may align with literature suggesting mental health stigma may impact minoritized populations more than white populations [Reference Sanchez, Eghaneyan and Trivedi39], contributing to reduced screening. Unconscious biases of providers and clinical staff could also play a role, along with age-aligned preferences/comfort with electronic interfaces for screening. Another potential contributor that could not be assessed in our analysis is distribution of cognitive impairment by age and race/ethnicity, as health disparities may be associated with more severe neurological disease and higher chance of cognitive impairment that would obviate screening. Future work should include data collection on inability to complete screening due to cognitive impairment. Future efforts to enhance equity could include briefer scales which have been evaluated in epilepsy [Reference Munger Clary, Wan and Conner40] and may enhance reach to elderly patients [Reference Pomeroy, Clark and Philp41]. Requiring all staff and providers to take implicit bias tests for race and age, such as the Implicit Association Test (https://implicit.harvard.edu/implicit/takeatest.html), and participate in a facilitated debriefing session could provide the opportunity to reflect on how staff/providers can take responsibility for mitigating bias. Also, efforts to address social or cultural barriers to care [Reference Lee-Tauler, Eun, Corbett and Collins42] and incorporating collaborative care or other integrated mental health care models could be beneficial for future work [Reference Jackson-Triche, Unutzer and Wells43,Reference Alves-Bradford, Trinh, Bath, Coombs and Mangurian44].

Provider-level variability in screening was observed in prior literature [Reference Beaulac, Edwards and Steele45,Reference Mello, Becker, Bromberg, Baird, Zonfrillo and Spirito46] and is not surprising here, given variability across providers in this group dating back to at least 2017 and given that the implementation strategy focused primarily on support staff. Variability across providers likely reflects varied practice styles and individual provider-level barriers, and may partly reflect implementation factors such as provider overlap with higher-versus-lower-completing support staff and questionnaire attachment proportion. This implementation strategy did not address literature-documented provider barriers to screening such as provider knowledge around screening and mental health management, or lack of referral resources (other than for suicidality) [Reference Gandy, Modi and Wagner12,Reference Bermeo-Ovalle13,Reference Giambarberi and Munger Clary31,Reference Willborn, Barnacle, Maack, Petry, Werremeyer and Strand38,Reference Henry, Schmidt and M.47,Reference Phoosuwan and Lundberg48].

Informal observations during post-implementation, questionnaire completion patterns, and provider retrospective reflection on the implementation experience also suggest clinic visit timing-related factors contribute to provider variability (such as whether a given provider’s clinic flow accommodates time for screener completion between support staff check-in and provider arrival). While the implementation strategy attempted to address provider time-related barriers to screening via support-staff-initiated screening, it did require time for patients to answer questionnaires after visit check-in, and some providers stated that this time for screening was a prominent barrier if visits were already running late. Further, informal observations and higher rates of completion for the first questionnaire in the series (QOLIE-10) suggest delay to complete instruments likely influenced screening. While data specifically on timing of patient arrival relative to scheduled visit time and time from check-in to provider portion of the visit were not available for this analysis, future studies would benefit from this type of data collection. The potential need for providers to spend additional visit time addressing positive screens and initiating management was not addressed by this implementation strategy, nor was potential concern that screening results might reflect falsely elevated symptoms if completed on the clinic computer, akin to elevated blood pressure readings due to “white coat syndrome.” Future implementation strategies would benefit from more comprehensive attention to provider-level barriers, targeting providers more directly in implementation and incorporating successful strategies from non-neurology settings [Reference D’Amico, Hanania and Lee49,Reference Powell, Proctor and Glass50]. Further, data collection and analysis related to provider tools such as use of smart phrases for managing suicidality and provider action in response to passive suicidality screening alerts would be beneficial in future work.

Support staff variability in screener completion was most marked among staff who did not receive the Strategy 2 education/training session (0–80% among those who did not receive Strategy 2 versus 32–75% among those who did). Mean screening completion was higher among staff based in neurology, who presumably had the most exposure to Strategy 3 and who may have prior knowledge regarding anxiety and depression screening in epilepsy and its importance. These patterns likely suggest some impact of implementation strategy components but highlight a need for refined strategies incorporating more support staff input. Provider preferences and their communication with support staff as identified via informal observation and provider reflection may have influenced support staff behavior; this is important to explore in refining future strategies. Future work would likely benefit from additional COM-B/behavior change wheel-aligned strategy components, including monitoring and feedback, which were considered for this implementation strategy but not feasible (technical limitations on timing of data availability preventing rapid feedback, and COVID-19 disruption).

The purpose of scientifically evaluating implementation is to reduce the gap between what we know works (or fails to work) and what we do in routine practice. The key clinical implications of this study are: (1) Screening rates can increase through simple implementation strategies using existing staff and automatable EHR features. (2) To close the screening gap more fully, it is important to enable iterative enhancements in the implementation strategy targeting additional barriers and facilitators identified during initial implementation and to reinforce strategy components. (3) Strategies to comprehensively address provider time-based barriers to screening are needed, including workflow considerations such as promoting pre-visit screener completion and time-saving tools and resources for providers to address positive screens.

Limitations

This study had limitations, including COVID-19-pandemic-related disruption in clinic scheduling and workflow, which limited evaluation of Maintenance, prevented implementation strategy refresher training, and interrupted plans to add feedback and otherwise refine the implementation strategy. One benefit of COVID-19 disruptions was the observation that a substantial portion of patients self-completed screeners in the patient portal prior to virtual visits. The transition to portal-based video visits also likely increased patient engagement with the patient portal prior to visits, facilitating portal-based screening. This suggests some of the screening gap may be addressed by facilitating patient self-completion of questionnaires prior to visits, aligned with subsequent published epilepsy data [Reference Fox, Wood and Phillips5].

Additional limitations include single epilepsy center design, which may limit generalizability, though a strength of this study setting is providers representing a full spectrum of perspectives on mental health management of epilepsy, ranging from antidepressant nonprescribing to advocating for neurologists to manage mental health. The provider-level data indeed demonstrated significant variability across epilepsy providers, potentially reflecting these varied perspectives and strengthening generalizability. Variability at provider and support staff levels is likely driven by multiple factors (measured and unmeasured) that could not be fully controlled, including distribution of support staff across providers, patient arrival time/rooming time relative to visit time, visit type (new vs. follow-up) and questionnaire attachment. The distribution of support staff and questionnaire attachments were reviewed, and while these factors may partially explain provider-level variability, they are unlikely to fully account for the observed differences. Finally, this implementation strategy and analysis were limited to individuals who completed their epilepsy clinic visits, though patients in need of mental health screening and management may be more likely to miss visits [Reference Ongchuan Martin, Sadeghifar and Snively33].

Conclusion and future directions

This theory-informed implementation strategy for anxiety and depression screening in an epilepsy center and RE-AIM-based evaluation demonstrated increased screening using EHR-based tools and clinic support staff questionnaire activation. However, future work to address time-related barriers to screening/disruption of clinic workflow, enhance equity of screening reach, and evaluate and address persistent barriers to screening is needed. Strategies utilizing ultra-brief screening instruments, fostering pre-visit screening self-completion, and integrated care strategies addressing both screening and management are promising future approaches to address some of the key lessons from this evaluation.

Acknowledgements

The authors would like to acknowledge Jianyi Li and Rachel Croxton for data collection support, Nancy Lawlor for study regulatory support, Amy Ayler for electronic health record technical support, and Paneeni Lohana for preliminary abstract copyediting.

Author contributions

Conception and design of the work: HMC, BS, and SG; collection or contribution of data: HBA, KC, COD, JB, MS, CR, MW, and HMC; contributions of analysis tools or expertise: BS, JC, and SG; conduct and interpretation of analysis: BS, JC, HMC, SG, JB, and HBA; and drafting of the manuscript: HMC, CM, and BS, among other critical intellectual contributions. Corresponding author HMC takes responsibility for the manuscript as a whole.

Funding statement

This work was supported by the National Institutes of Health grants KL2TR001421, UL1TR001420, and R25NS088248. Early development of some EHR-based tools adapted for this study was supported by the Agency for Healthcare Research and Quality under award number R01HS24057. The content is solely the responsibility of the authors and does not necessarily represent the official views of the NIH.

Competing interests

Heidi M. Munger Clary has received research grants from NIH, Department of Defense, and Duke Endowment for projects on anxiety and depression screening and integrated care models in epilepsy; she serves on the American Academy of Neurology Epilepsy Quality Measurement Workgroup and as Co-Chair of the International League Against Epilepsy’s Integrated Mental Healthcare Pathways Task Force. Jane Boggs reports research support from Jazz, Biohaven, UCB, and Neurona. The other authors have no conflicts of interest to disclose.