1 Introduction

Reactive systems perform an ongoing interaction with their environment, receiving inputs from the outside, changing their internal state, and producing output. Examples of such systems include GUIs, web applications, video games, and robots. Programming such systems with traditional general-purpose imperative languages can be challenging: the components of the reactive system are put together via a complex and often confusing web of callbacks and shared mutable state. As a consequence, individual components cannot be easily understood in isolation, which makes building and maintaining reactive systems in this manner difficult and error-prone (Parent, Reference Parent2006; Järvi et al., Reference Järvi, Marcus, Parent, Freeman and Smith2008).

Functional reactive programming (FRP), introduced by Elliott and Hudak (Reference Elliott and Hudak1997), tries to remedy this problem by introducing time-varying values (called behaviours or signals) and events as a means of communication between components in a reactive system instead of shared mutable state and callbacks. Crucially, signals and events are first-class values in FRP and can be freely combined and manipulated. These high-level abstractions not only provide a rich and expressive programming model but also make it possible for us to reason about FRP programs by simple equational methods.

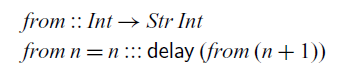

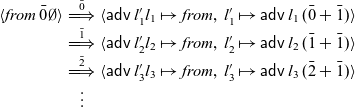

Elliott and Hudak’s original conception of FRP is an elegant idea that allows for direct manipulation of time-dependent data but also immediately raises the question of what the interface for signals and events should be. A naive approach would be to model discrete signals as streams defined by the following Haskell data type: Footnote 1

A stream of type

![]() $Str\,a$

thus consists of a head of type a and a tail of type

$Str\,a$

thus consists of a head of type a and a tail of type

![]() $Str\,a$

. The type

$Str\,a$

. The type

![]() $Str\,a$

encodes a discrete signal of type a, where each element of a stream represents the value of that signal at a particular time.

$Str\,a$

encodes a discrete signal of type a, where each element of a stream represents the value of that signal at a particular time.

Combined with the power of higher-order functional programming, we can easily manipulate and compose such signals. For example, we may apply a function to the values of a signal:

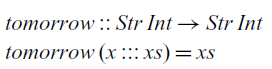

However, this representation is too permissive and allows the programmer to write non-causal programs, that is, programs where the present output depends on future input such as the following:

At each time step, this function takes the input of the next time step and returns it in the current time step. In practical terms, this reactive program cannot be effectively executed since we cannot compute the current value of the signal that it defines.

Much of the research in FRP has been dedicated to addressing this problem by adequately restricting the interface that the programmer can use to manipulate signals. This can be achieved by exposing only a carefully selected set of combinators to the programmer or by using a more sophisticated type system. The former approach has been very successful in practice, not least because it can be readily implemented as a library in existing languages. This library approach also immediately integrates the FRP language with a rich ecosystem of existing libraries and inherits the host language’s compiler and tools. The most prominent example of this approach is Arrowised FRP (Nilsson et al., Reference Nilsson, Courtney and Peterson2002), as implemented in the Yampa library for Haskell (Hudak et al., Reference Hudak, Courtney, Nilsson and Peterson2004), which takes signal functions as primitive rather than signals themselves. However, this library approach forfeits some of the simplicity and elegance of the original FRP model as it disallows direct manipulation of signals.

More recently, an alternative to this library approach has been developed (Jeffrey, Reference Jeffrey2014; Krishnaswami and Benton, Reference Krishnaswami and Benton2011; Krishnaswami et al., Reference Krishnaswami, Benton and Hoffmann2012; Krishnaswami Reference Krishnaswami2013; Jeltsch, Reference Jeltsch2013; Bahr et al., Reference Bahr, Graulund and Møgelberg2019, Reference Bahr, Graulund and Møgelberg2021) that uses a modal type operator

![]() $\bigcirc$

, which captures the notion of time. Following this idea, an element of type

$\bigcirc$

, which captures the notion of time. Following this idea, an element of type

![]() $\bigcirc a$

represents data of type a arriving in the next time step. Signals are then modelled by the type of streams defined instead as follows:

$\bigcirc a$

represents data of type a arriving in the next time step. Signals are then modelled by the type of streams defined instead as follows:

That is, a stream of type

![]() $Str\,a$

is an element of type a now and a stream of type

$Str\,a$

is an element of type a now and a stream of type

![]() $Str\,a$

later, thus separating consecutive elements of the stream by one time step. Combining this modal type with guarded recursion (Nakano, Reference Nakano2000) in the form of a fixed point operator of type

$Str\,a$

later, thus separating consecutive elements of the stream by one time step. Combining this modal type with guarded recursion (Nakano, Reference Nakano2000) in the form of a fixed point operator of type

![]() $(\bigcirc a\to a)\to a$

gives a powerful type system for reactive programming that guarantees not only causality, but also productivity, that is, the property that each element of a stream can be computed in finite time.

$(\bigcirc a\to a)\to a$

gives a powerful type system for reactive programming that guarantees not only causality, but also productivity, that is, the property that each element of a stream can be computed in finite time.

Causality and productivity of an FRP program means that it can be effectively implemented and executed. However, for practical purposes, it is also important whether it can be implemented with given finite resources. If a reactive program requires an increasing amount of memory or computation time, it will eventually run out of resources to make progress or take too long to react to input. It will grind to a halt. Since FRP programs operate on a high level of abstraction, it is typically quite difficult to reason about their space and time cost. A reactive program that exhibits a gradually slower response time, that is, its computations take longer and longer as time progresses, is said to have a time leak. Similarly, we say that a reactive program has a space leak, if its memory use is gradually increasing as time progresses, for example, if it holds on to memory while continually allocating more.

Within both lines of work – the library approach and the modal types approach – there has been an effort to devise FRP languages that avoid implicit space leaks. We say that a space leak is implicit if it is caused not by explicit memory allocations intended by the programmer but rather by the implementation of the FRP language holding on to old data. This is difficult to prevent in a higher-order language as closures may capture references to old data, which consequently must remain in memory for as long as the closure might be invoked. In addition, the language has to carefully balance eager and lazy evaluation: While some computations must necessarily be delayed to wait for input to arrive, we run the risk of needing to keep intermediate values in memory for too long unless we perform computations as soon as all required data have arrived. To avoid implicit space leaks, Ploeg and Claessen (2015) devised an FRP library for Haskell that avoids implicit space leaks by carefully restricting the API to manipulate events and signals. Based on the modal operator

![]() $\bigcirc$

described above, Krishnaswami (Reference Krishnaswami2013) has devised a modal FRP calculus that permits an aggressive garbage collection strategy that rules out implicit space leaks.

$\bigcirc$

described above, Krishnaswami (Reference Krishnaswami2013) has devised a modal FRP calculus that permits an aggressive garbage collection strategy that rules out implicit space leaks.

Contributions.

In this paper, we present

![]() $\mathsf{Rattus}$

, a practical modal FRP language that takes its ideas from the modal FRP calculi of Krishnaswami (Reference Krishnaswami2013) and Bahr et al. (Reference Bahr, Graulund and Møgelberg2019, Reference Bahr, Graulund and Møgelberg2021) but with a simpler and less restrictive type system that makes it attractive to use in practice. Like the Simply RaTT calculus of Bahr et al., we use a Fitch-style type system (Clouston, Reference Clouston2018), which extends typing contexts with tokens to avoid the syntactic overhead of the dual-context-style type system of Krishnaswami (Reference Krishnaswami2013). In addition, we further simplify the typing system by (1) only requiring one kind of token instead of two, (2) allowing tokens to be introduced without any restrictions, and (3) generalising the guarded recursion scheme. The resulting calculus is simpler and more expressive, yet still retains the operational guarantees of the earlier calculi, namely productivity, causality, and admissibility of an aggressive garbage collection strategy that prevents implicit space leaks. We have proved these properties by a logical relations argument formalised using the Coq theorem prover (see supplementary material and Appendix B).

$\mathsf{Rattus}$

, a practical modal FRP language that takes its ideas from the modal FRP calculi of Krishnaswami (Reference Krishnaswami2013) and Bahr et al. (Reference Bahr, Graulund and Møgelberg2019, Reference Bahr, Graulund and Møgelberg2021) but with a simpler and less restrictive type system that makes it attractive to use in practice. Like the Simply RaTT calculus of Bahr et al., we use a Fitch-style type system (Clouston, Reference Clouston2018), which extends typing contexts with tokens to avoid the syntactic overhead of the dual-context-style type system of Krishnaswami (Reference Krishnaswami2013). In addition, we further simplify the typing system by (1) only requiring one kind of token instead of two, (2) allowing tokens to be introduced without any restrictions, and (3) generalising the guarded recursion scheme. The resulting calculus is simpler and more expressive, yet still retains the operational guarantees of the earlier calculi, namely productivity, causality, and admissibility of an aggressive garbage collection strategy that prevents implicit space leaks. We have proved these properties by a logical relations argument formalised using the Coq theorem prover (see supplementary material and Appendix B).

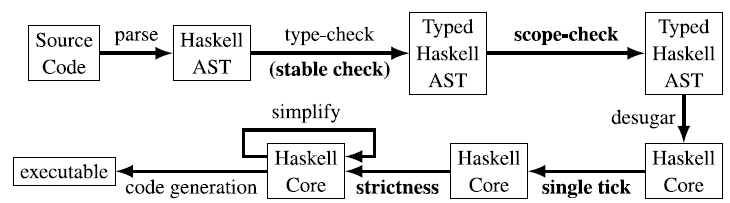

To demonstrate its use as a practical programming language, we have implemented

![]() $\mathsf{Rattus}$

as an embedded language in Haskell. This implementation consists of a library that implements the primitives of the language along with a plugin for the GHC Haskell compiler. The latter is necessary to check the more restrictive variable scope rules of

$\mathsf{Rattus}$

as an embedded language in Haskell. This implementation consists of a library that implements the primitives of the language along with a plugin for the GHC Haskell compiler. The latter is necessary to check the more restrictive variable scope rules of

![]() $\mathsf{Rattus}$

and to ensure the eager evaluation strategy that is necessary to obtain the operational properties. Both components are bundled in a single Haskell library that allows the programmer to seamlessly write

$\mathsf{Rattus}$

and to ensure the eager evaluation strategy that is necessary to obtain the operational properties. Both components are bundled in a single Haskell library that allows the programmer to seamlessly write

![]() $\mathsf{Rattus}$

code alongside Haskell code. We further demonstrate the usefulness of the language with a number of case studies, including an FRP library based on streams and events as well as an arrowised FRP library in the style of Yampa. We then use both FRP libraries to implement a primitive game. The two libraries implemented in

$\mathsf{Rattus}$

code alongside Haskell code. We further demonstrate the usefulness of the language with a number of case studies, including an FRP library based on streams and events as well as an arrowised FRP library in the style of Yampa. We then use both FRP libraries to implement a primitive game. The two libraries implemented in

![]() $\mathsf{Rattus}$

also demonstrate different approaches to FRP libraries: discrete time (streams) versus continuous time (Yampa); and first-class signals (streams) versus signal functions (Yampa). The

$\mathsf{Rattus}$

also demonstrate different approaches to FRP libraries: discrete time (streams) versus continuous time (Yampa); and first-class signals (streams) versus signal functions (Yampa). The

![]() $\mathsf{Rattus}$

Haskell library and all examples are included in the supplementary material.

$\mathsf{Rattus}$

Haskell library and all examples are included in the supplementary material.

Overview of paper.

Section 2 gives an overview of the

![]() $\mathsf{Rattus}$

language introducing the main concepts and their intuitions. Section 3 presents a case study of a simple FRP library based on streams and events, as well as an arrowised FRP library. Section 4 presents the underlying core calculus of

$\mathsf{Rattus}$

language introducing the main concepts and their intuitions. Section 3 presents a case study of a simple FRP library based on streams and events, as well as an arrowised FRP library. Section 4 presents the underlying core calculus of

![]() $\mathsf{Rattus}$

including its type system, its operational semantics, and our main metatheoretical results: productivity, causality, and absence of implicit space leaks. We then reflect on these results and discuss the language design of

$\mathsf{Rattus}$

including its type system, its operational semantics, and our main metatheoretical results: productivity, causality, and absence of implicit space leaks. We then reflect on these results and discuss the language design of

![]() $\mathsf{Rattus}$

. Section 5 gives an overview of the proof of our metatheoretical results. Section 6 describes how

$\mathsf{Rattus}$

. Section 5 gives an overview of the proof of our metatheoretical results. Section 6 describes how

![]() $\mathsf{Rattus}$

has been implemented as an embedded language in Haskell. Section 7 reviews related work and Section 8 discusses future work.

$\mathsf{Rattus}$

has been implemented as an embedded language in Haskell. Section 7 reviews related work and Section 8 discusses future work.

2 Introduction to Rattus

To illustrate

![]() $\mathsf{Rattus}$

, we will use example programs written in the embedding of the language in Haskell. The type of streams is at the centre of these example programs:

$\mathsf{Rattus}$

, we will use example programs written in the embedding of the language in Haskell. The type of streams is at the centre of these example programs:

The annotation with bangs (!) ensures that the constructor ::: is strict in both its arguments. We will have a closer look at the evaluation strategy of

![]() $\mathsf{Rattus}$

in Section 2.2.

$\mathsf{Rattus}$

in Section 2.2.

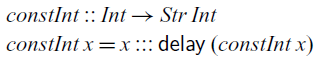

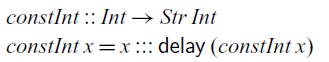

The simplest stream one can define just repeats the same value indefinitely. Such a stream is constructed by the

![]() $\textit{constInt}$

function below, which takes an integer and produces a constant stream that repeats that integer at every step:

$\textit{constInt}$

function below, which takes an integer and produces a constant stream that repeats that integer at every step:

Because the tail of a stream of integers must be of type

![]() $\bigcirc(Str\;Int)$

, we have to use

$\bigcirc(Str\;Int)$

, we have to use

![]() $\mathsf{delay}$

, which is the introduction form for the type modality

$\mathsf{delay}$

, which is the introduction form for the type modality

![]() $\bigcirc$

. Intuitively speaking,

$\bigcirc$

. Intuitively speaking,

![]() $\mathsf{delay}$

moves a computation one time step into the future. We could think of

$\mathsf{delay}$

moves a computation one time step into the future. We could think of

![]() $\mathsf{delay}$

having type

$\mathsf{delay}$

having type

![]() $a\to \bigcirc a$

, but this type is too permissive as it can cause space leaks. It would allow us to move arbitrary computations – and the data they depend on – into the future. Instead, the typing rule for

$a\to \bigcirc a$

, but this type is too permissive as it can cause space leaks. It would allow us to move arbitrary computations – and the data they depend on – into the future. Instead, the typing rule for

![]() $\mathsf{delay}$

is formulated as follows:

$\mathsf{delay}$

is formulated as follows:

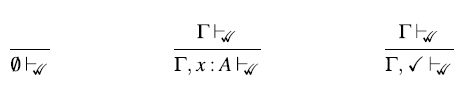

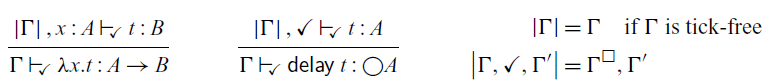

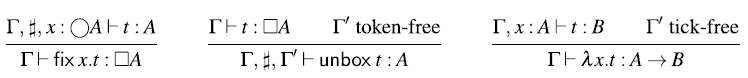

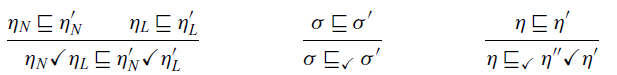

This is a characteristic example of a Fitch-style typing rule (Clouston, Reference Clouston2018): it introduces the token

![]() $\checkmark$

(pronounced ‘tick’) in the typing context

$\checkmark$

(pronounced ‘tick’) in the typing context

![]() $\Gamma$

. A typing context consists of type assignments of the form

$\Gamma$

. A typing context consists of type assignments of the form

![]() $x::A$

, but it can also contain several occurrences of

$x::A$

, but it can also contain several occurrences of

![]() $\checkmark$

. We can think of

$\checkmark$

. We can think of

![]() $\checkmark$

as denoting the passage of one time step, that is, all variables to the left of

$\checkmark$

as denoting the passage of one time step, that is, all variables to the left of

![]() $\checkmark$

are one time step older than those to the right. In the above typing rule, the term t does not have access to these ‘old’ variables in

$\checkmark$

are one time step older than those to the right. In the above typing rule, the term t does not have access to these ‘old’ variables in

![]() $\Gamma$

. There is, however, an exception: if a variable in the typing context is of a type that is time-independent, we still allow t to access them – even if the variable is one time step old. We call these time-independent types stable types, and in particular all base types such as Int and Bool are stable. We will discuss stable types in more detail in Section 2.1.

$\Gamma$

. There is, however, an exception: if a variable in the typing context is of a type that is time-independent, we still allow t to access them – even if the variable is one time step old. We call these time-independent types stable types, and in particular all base types such as Int and Bool are stable. We will discuss stable types in more detail in Section 2.1.

Formally, the variable introduction rule of

![]() $\mathsf{Rattus}$

reads as follows:

$\mathsf{Rattus}$

reads as follows:

That is, if x is not of a stable type and appears to the left of a

![]() $\checkmark$

, then it is no longer in scope.

$\checkmark$

, then it is no longer in scope.

Turning back to our definition of the

![]() ${\textit{constInt}}$

function, we can see that the recursive call

${\textit{constInt}}$

function, we can see that the recursive call

![]() ${\textit{constInt}\;\textit{x}}$

must be of type

${\textit{constInt}\;\textit{x}}$

must be of type

![]() ${\textit{Str}\;\textit{Int}}$

in the context

${\textit{Str}\;\textit{Int}}$

in the context

![]() $\Gamma,\checkmark$

, where

$\Gamma,\checkmark$

, where

![]() $\Gamma$

contains

$\Gamma$

contains

![]() ${\textit{x}\mathbin{::}\textit{Int}}$

. So x remains in scope because it is of type Int, which is a stable type. This would not be the case if we were to generalise

${\textit{x}\mathbin{::}\textit{Int}}$

. So x remains in scope because it is of type Int, which is a stable type. This would not be the case if we were to generalise

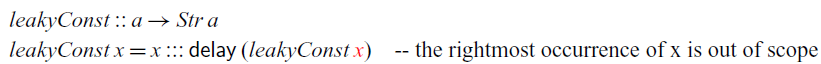

![]() $\textit{constInt}$

to arbitrary types:

$\textit{constInt}$

to arbitrary types:

In this example, x is of type a and therefore goes out of scope under

![]() $\mathsf{delay}$

: since a is not necessarily stable,

$\mathsf{delay}$

: since a is not necessarily stable,

![]() $x::a$

is blocked by the

$x::a$

is blocked by the

![]() $\checkmark$

introduced by

$\checkmark$

introduced by

![]() $\mathsf{delay}$

. We can see that

$\mathsf{delay}$

. We can see that

![]() $\textit{leakyConst}$

would indeed cause a space leak by instantiating it to the type

$\textit{leakyConst}$

would indeed cause a space leak by instantiating it to the type

![]() $\textit{leakyConst}::\textit{Str}\;\textit{Int}\to \textit{Str}\;(\textit{Str}\;\textit{Int})$

: at each time step n, it would have to store all previously observed input values from time step 0 to

$\textit{leakyConst}::\textit{Str}\;\textit{Int}\to \textit{Str}\;(\textit{Str}\;\textit{Int})$

: at each time step n, it would have to store all previously observed input values from time step 0 to

![]() $n- 1$

, thus making its memory usage grow linearly with time. To illustrate this on a concrete example, assume that

$n- 1$

, thus making its memory usage grow linearly with time. To illustrate this on a concrete example, assume that

![]() $\textit{leakyConst}$

is fed the stream of numbers

$\textit{leakyConst}$

is fed the stream of numbers

![]() $0, 1, 2, \dots$

as input. Then, the resulting stream of type

$0, 1, 2, \dots$

as input. Then, the resulting stream of type

![]() $\textit{Str}\;(\textit{Str}\;\textit{Int})$

contains at each time step n the same stream

$\textit{Str}\;(\textit{Str}\;\textit{Int})$

contains at each time step n the same stream

![]() $0, 1, 2, \dots$

. However, the input stream arrives one integer at a time. So at time n, the input stream would have advanced to

$0, 1, 2, \dots$

. However, the input stream arrives one integer at a time. So at time n, the input stream would have advanced to

![]() $n, n+1, n+2, \dots$

, that is, the next input to arrive is n. Consequently, the implementation of

$n, n+1, n+2, \dots$

, that is, the next input to arrive is n. Consequently, the implementation of

![]() $\textit{leakyConst}$

would need to have stored the previous values

$\textit{leakyConst}$

would need to have stored the previous values

![]() $0,1, \dots n-1$

of the input stream.

$0,1, \dots n-1$

of the input stream.

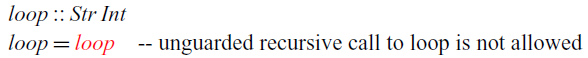

The definition of constInt also illustrates the guarded recursion principle used in

![]() $\mathsf{Rattus}$

. For a recursive definition to be well-typed, all recursive calls have to occur in the presence of a

$\mathsf{Rattus}$

. For a recursive definition to be well-typed, all recursive calls have to occur in the presence of a

![]() $\checkmark$

– in other words, recursive calls have to be guarded by

$\checkmark$

– in other words, recursive calls have to be guarded by

![]() $\mathsf{delay}$

. This restriction ensures that all recursive functions are productive, which means that each element of a stream can be computed in finite time. If we did not have this restriction, we could write the following obviously unproductive function:

$\mathsf{delay}$

. This restriction ensures that all recursive functions are productive, which means that each element of a stream can be computed in finite time. If we did not have this restriction, we could write the following obviously unproductive function:

The recursive call to

![]() $\textit{loop}$

does not occur under a delay and is thus rejected by the type checker.

$\textit{loop}$

does not occur under a delay and is thus rejected by the type checker.

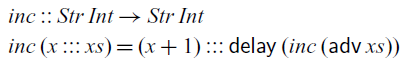

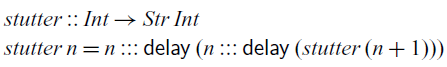

Let’s consider an example program that transforms streams. The function

![]() $\textit{inc}$

below takes a stream of integers as input and increments each integer by 1:

$\textit{inc}$

below takes a stream of integers as input and increments each integer by 1:

Here we have to use ![]() , the elimination form for

, the elimination form for

![]() $\bigcirc$

, to convert the tail of the input stream from type

$\bigcirc$

, to convert the tail of the input stream from type

![]() $\bigcirc(Str\;Int)$

into type

$\bigcirc(Str\;Int)$

into type

![]() ${\textit{Str}\;\textit{Int}}$

. Again we could think of

${\textit{Str}\;\textit{Int}}$

. Again we could think of ![]() having type

having type

![]() $\bigcirc a\to a$

, but this general type would allow us to write non-causal functions such as the

$\bigcirc a\to a$

, but this general type would allow us to write non-causal functions such as the

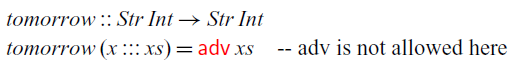

![]() $\textit{tomorrow}$

function we have seen in the introduction:

$\textit{tomorrow}$

function we have seen in the introduction:

This function looks one time step ahead so that the output at time n depends on the input at time

![]() $n+1$

.

$n+1$

.

To ensure causality, ![]() is restricted to contexts with a

is restricted to contexts with a

![]() $\checkmark$

:

$\checkmark$

:

Not only does ![]() require a

require a

![]() $\checkmark$

, but it also causes all bound variables to the right of

$\checkmark$

, but it also causes all bound variables to the right of

![]() $\checkmark$

to go out of scope. Intuitively speaking

$\checkmark$

to go out of scope. Intuitively speaking

![]() $\mathsf{delay}$

looks ahead one time step and

$\mathsf{delay}$

looks ahead one time step and ![]() then allows us to go back to the present. Variable bindings made in the future are therefore not accessible once we returned to the present.

then allows us to go back to the present. Variable bindings made in the future are therefore not accessible once we returned to the present.

Note that

![]() $\mathsf{adv}$

causes the variables to the right of

$\mathsf{adv}$

causes the variables to the right of

![]() $\checkmark$

to go out of scope forever, whereas it brings variables back into scope that were previously blocked by the

$\checkmark$

to go out of scope forever, whereas it brings variables back into scope that were previously blocked by the

![]() $\checkmark$

. That is, variables that go out of scope due to

$\checkmark$

. That is, variables that go out of scope due to

![]() $\mathsf{delay}$

can be brought back into scope by

$\mathsf{delay}$

can be brought back into scope by

![]() $\mathsf{adv}$

.

$\mathsf{adv}$

.

2.1 Stable types

We haven’t yet made precise what stable types are. To a first approximation, types are stable if they do not contain

![]() $\bigcirc$

or function types. Intuitively speaking,

$\bigcirc$

or function types. Intuitively speaking,

![]() $\bigcirc$

expresses a temporal aspect and thus types containing

$\bigcirc$

expresses a temporal aspect and thus types containing

![]() $\bigcirc$

are not time-invariant. Moreover, functions can implicitly have temporal values in their closure and are therefore also excluded from stable types.

$\bigcirc$

are not time-invariant. Moreover, functions can implicitly have temporal values in their closure and are therefore also excluded from stable types.

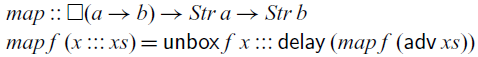

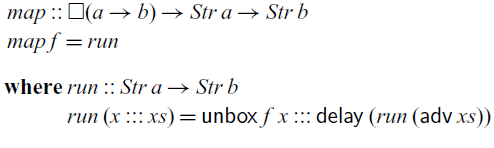

However, as a consequence, we cannot implement the

![]() $\textit{map}$

function that takes a function

$\textit{map}$

function that takes a function

![]() $f::a\to b$

and applies it to each element of a stream of type

$f::a\to b$

and applies it to each element of a stream of type

![]() $Str\,a$

, because it would require us to apply the function f at any time in the future. We cannot do this because

$Str\,a$

, because it would require us to apply the function f at any time in the future. We cannot do this because

![]() $a\to b$

is not a stable type (even if a and b were stable) and therefore f cannot be transported into the future. However,

$a\to b$

is not a stable type (even if a and b were stable) and therefore f cannot be transported into the future. However,

![]() $\mathsf{Rattus}$

has the type modality

$\mathsf{Rattus}$

has the type modality

![]() $\square$

, pronounced ‘box’, that turns any type A into a stable type

$\square$

, pronounced ‘box’, that turns any type A into a stable type

![]() $\square A$

. Using the

$\square A$

. Using the

![]() $\square$

modality, we can implement

$\square$

modality, we can implement

![]() $\textit{map}$

as follows:

$\textit{map}$

as follows:

Instead of a function of type

![]() $a\to b$

,

$a\to b$

,

![]() $\textit{map}$

takes a boxed function f of type

$\textit{map}$

takes a boxed function f of type

![]() $\square(a\to b)$

as its argument. That means, f is still in scope under the delay because it is of a stable type. To use f, it has to be unboxed using

$\square(a\to b)$

as its argument. That means, f is still in scope under the delay because it is of a stable type. To use f, it has to be unboxed using

![]() $\mathsf{unbox}$

, which is the elimination form for the

$\mathsf{unbox}$

, which is the elimination form for the

![]() $\square$

modality and simply has type

$\square$

modality and simply has type

![]() $\square a \to a$

, without any restrictions.

$\square a \to a$

, without any restrictions.

The corresponding introduction form for

![]() $\square$

does come with some restrictions. It has to make sure that boxed values only refer to variables of a stable type:

$\square$

does come with some restrictions. It has to make sure that boxed values only refer to variables of a stable type:

Here,

![]() $\Gamma^{\square}$

denotes the typing context that is obtained from

$\Gamma^{\square}$

denotes the typing context that is obtained from

![]() $\Gamma$

by removing all variables of non-stable types and all

$\Gamma$

by removing all variables of non-stable types and all

![]() $\checkmark$

tokens:

$\checkmark$

tokens:

Thus, for a well-typed term

![]() $\mathsf{box}\;t$

, we know that t only accesses variables of stable type.

$\mathsf{box}\;t$

, we know that t only accesses variables of stable type.

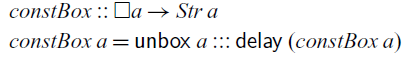

For example, we can implement the

![]() $\textit{inc}$

function using

$\textit{inc}$

function using

![]() $\textit{map}$

as follows:

$\textit{map}$

as follows:

Using the

![]() $\square$

modality, we can also generalise the constant stream function to arbitrary boxed types:

$\square$

modality, we can also generalise the constant stream function to arbitrary boxed types:

Alternatively, we can make use of the

![]() $\textit{Stable}$

type class, to constrain type variables to stable types:

$\textit{Stable}$

type class, to constrain type variables to stable types:

Since the type of streams is not stable, the restriction to stable types disallows the instantiation of the

![]() $\textit{const}$

function to the type

$\textit{const}$

function to the type

![]() $\textit{Str}\;\textit{Int}\to \textit{Str}\;(\textit{Str}\;\textit{Int})$

, which as we have seen earlier would cause a memory leak. By contrast,

$\textit{Str}\;\textit{Int}\to \textit{Str}\;(\textit{Str}\;\textit{Int})$

, which as we have seen earlier would cause a memory leak. By contrast,

![]() $\textit{constBox}$

can be instantiated to the type

$\textit{constBox}$

can be instantiated to the type

![]() $\square(\textit{Str}\;\textit{Int})\to \textit{Str}\;(\textit{Str}\;\textit{Int})$

. This is unproblematic since a value of type

$\square(\textit{Str}\;\textit{Int})\to \textit{Str}\;(\textit{Str}\;\textit{Int})$

. This is unproblematic since a value of type

![]() $\square(\textit{Str}\;\textit{Int})$

is a suspended, time-invariant computation that produces an integer stream. In other words, this computation is independent of any external input and can thus be executed at any time in the future without keeping old temporal values in memory.

$\square(\textit{Str}\;\textit{Int})$

is a suspended, time-invariant computation that produces an integer stream. In other words, this computation is independent of any external input and can thus be executed at any time in the future without keeping old temporal values in memory.

So far, we have only looked at recursive definitions at the top level. Recursive definitions can also be nested, but we have to be careful how such nested recursion interacts with the typing environment. Below is an alternative definition of

![]() $\textit{map}$

that takes the boxed function f as an argument and then calls the

$\textit{map}$

that takes the boxed function f as an argument and then calls the

![]() $\textit{run}$

function that recurses over the stream:

$\textit{run}$

function that recurses over the stream:

Here,

![]() $\textit{run}$

is type-checked in a typing environment

$\textit{run}$

is type-checked in a typing environment

![]() $\Gamma$

that contains

$\Gamma$

that contains

![]() $f::\square(a\to b)$

. Since

$f::\square(a\to b)$

. Since

![]() $\textit{run}$

is defined by guarded recursion, we require that its definition must type-check in the typing context

$\textit{run}$

is defined by guarded recursion, we require that its definition must type-check in the typing context

![]() $\Gamma^{\square}$

. Because f is of a stable type, it remains in

$\Gamma^{\square}$

. Because f is of a stable type, it remains in

![]() $\Gamma^{\square}$

and is thus in scope in the definition of

$\Gamma^{\square}$

and is thus in scope in the definition of

![]() $\textit{run}$

. That is, guarded recursive definitions interact with the typing environment in the same way as

$\textit{run}$

. That is, guarded recursive definitions interact with the typing environment in the same way as

![]() $\mathsf{box}$

, which ensures that such recursive definitions are stable and can thus safely be executed at any time in the future. As a consequence, the type checker will prevent us from writing the following leaky version of

$\mathsf{box}$

, which ensures that such recursive definitions are stable and can thus safely be executed at any time in the future. As a consequence, the type checker will prevent us from writing the following leaky version of

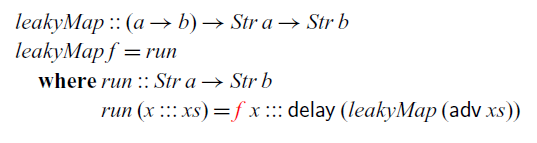

![]() $\textit{map}$

:

$\textit{map}$

:

The type of f is not stable, and thus it is not in scope in the definition of

![]() $\textit{run}$

.

$\textit{run}$

.

Note that top-level defined identifiers such as

![]() $\textit{map}$

and

$\textit{map}$

and

![]() $\textit{const}$

are in scope in any context after they are defined regardless of whether there is a

$\textit{const}$

are in scope in any context after they are defined regardless of whether there is a

![]() $\checkmark$

or whether they are of a stable type. One can think of top-level definitions being implicitly boxed when they are defined and implicitly unboxed when they are used later on.

$\checkmark$

or whether they are of a stable type. One can think of top-level definitions being implicitly boxed when they are defined and implicitly unboxed when they are used later on.

2.2 Operational semantics

As we have seen in the examples above, the purpose of the type modalities

![]() $\bigcirc$

and

$\bigcirc$

and

![]() $\square$

is to ensure that

$\square$

is to ensure that

![]() $\mathsf{Rattus}$

programs are causal, productive and without implicit space leaks. In simple terms, the latter means that temporal values, that is, values of type

$\mathsf{Rattus}$

programs are causal, productive and without implicit space leaks. In simple terms, the latter means that temporal values, that is, values of type

![]() $\bigcirc{A}$

, are safe to be garbage collected after two time steps. In particular, input from a stream can be safely garbage collected one time step after it has arrived. This memory property is made precise later in Section 4 along with a precise definition of the operational semantics of

$\bigcirc{A}$

, are safe to be garbage collected after two time steps. In particular, input from a stream can be safely garbage collected one time step after it has arrived. This memory property is made precise later in Section 4 along with a precise definition of the operational semantics of

![]() $\mathsf{Rattus}$

.

$\mathsf{Rattus}$

.

To obtain this memory property,

![]() $\mathsf{Rattus}$

uses an eager evaluation strategy except for

$\mathsf{Rattus}$

uses an eager evaluation strategy except for

![]() $\mathsf{delay}$

and

$\mathsf{delay}$

and

![]() $\mathsf{box}$

. That is, arguments are evaluated to values before they are passed on to functions, but special rules apply to

$\mathsf{box}$

. That is, arguments are evaluated to values before they are passed on to functions, but special rules apply to

![]() $\mathsf{delay}$

and

$\mathsf{delay}$

and

![]() $\mathsf{box}$

. In addition, we only allow strict data types in

$\mathsf{box}$

. In addition, we only allow strict data types in

![]() $\mathsf{Rattus}$

, which explains the use of strictness annotations in the definition of

$\mathsf{Rattus}$

, which explains the use of strictness annotations in the definition of

![]() $\textit{Str}$

. This eager evaluation strategy ensures that we do not have to keep intermediate values in memory for longer than one time step.

$\textit{Str}$

. This eager evaluation strategy ensures that we do not have to keep intermediate values in memory for longer than one time step.

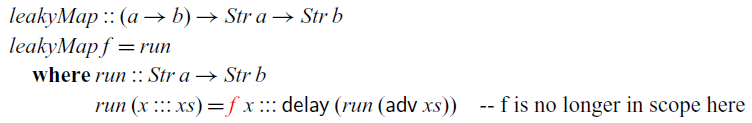

Following the temporal interpretation of the

![]() $\bigcirc$

modality, its introduction form

$\bigcirc$

modality, its introduction form

![]() $\mathsf{delay}$

does not eagerly evaluate its argument since we may have to wait until input data arrives. For example, in the following function, we cannot evaluate

$\mathsf{delay}$

does not eagerly evaluate its argument since we may have to wait until input data arrives. For example, in the following function, we cannot evaluate

![]() ${\rm adv}\;x+1$

until the integer value of

${\rm adv}\;x+1$

until the integer value of

![]() $x::\bigcirc\textit{Int}$

arrives, which is one time step from now:

$x::\bigcirc\textit{Int}$

arrives, which is one time step from now:

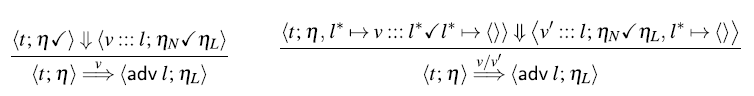

However, evaluation is only delayed by one time step, and this delay is reversed by adv. For example,

![]() ${\rm adv}\;(\mathsf{delay}\;(1+1))$

evaluates immediately to 2.

${\rm adv}\;(\mathsf{delay}\;(1+1))$

evaluates immediately to 2.

Turning to

![]() $\mathsf{box}$

, we can see that it needs to lazily evaluate its argument in order to maintain the memory property of

$\mathsf{box}$

, we can see that it needs to lazily evaluate its argument in order to maintain the memory property of

![]() $\mathsf{Rattus}$

: in the expression

$\mathsf{Rattus}$

: in the expression

![]() $\mathsf{box}\;(\mathsf{delay}\;1)$

of type

$\mathsf{box}\;(\mathsf{delay}\;1)$

of type

![]() $\square(\bigcirc\textit{Int})$

, we should not evaluate

$\square(\bigcirc\textit{Int})$

, we should not evaluate

![]() ${\mathsf{delay}\;1}$

right away. As mention above, values of type

${\mathsf{delay}\;1}$

right away. As mention above, values of type

![]() ${\bigcirc\textit{Int}}$

should be garbage collected after two time steps. However, boxed types are stable and can thus be moved arbitrarily into the future. Hence, by the time this boxed value is unboxed in the future, we might have already garbage collected the value of type

${\bigcirc\textit{Int}}$

should be garbage collected after two time steps. However, boxed types are stable and can thus be moved arbitrarily into the future. Hence, by the time this boxed value is unboxed in the future, we might have already garbage collected the value of type

![]() ${\bigcirc\textit{Int}}$

it contains.

${\bigcirc\textit{Int}}$

it contains.

The modal FRP calculi of Krishnaswami (Reference Krishnaswami2013) and Bahr et al. (Reference Bahr, Graulund and Møgelberg2019, Reference Bahr, Graulund and Møgelberg2021) have a similar operational semantics to achieve same memory property that

![]() $\mathsf{Rattus}$

has. However,

$\mathsf{Rattus}$

has. However,

![]() $\mathsf{Rattus}$

uses a slightly more eager evaluation strategy for

$\mathsf{Rattus}$

uses a slightly more eager evaluation strategy for

![]() $\mathsf{delay}$

: recall that

$\mathsf{delay}$

: recall that

![]() $\mathsf{delay}\,t$

delays the computation t by one time step and that

$\mathsf{delay}\,t$

delays the computation t by one time step and that

![]() $\mathsf{adv}$

reverses such a delay. The operational semantics of

$\mathsf{adv}$

reverses such a delay. The operational semantics of

![]() $\mathsf{Rattus}$

reflects this intuition by first evaluating every term t that occurs as

$\mathsf{Rattus}$

reflects this intuition by first evaluating every term t that occurs as

![]() $\mathsf{delay}\,(\dots \mathsf{adv}\,t \dots)$

before evaluating

$\mathsf{delay}\,(\dots \mathsf{adv}\,t \dots)$

before evaluating

![]() $\mathsf{delay}$

. In other words,

$\mathsf{delay}$

. In other words,

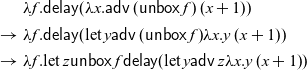

![]() $\mathsf{delay}\,(\dots \mathsf{adv}\,t \dots)$

is equivalent to

$\mathsf{delay}\,(\dots \mathsf{adv}\,t \dots)$

is equivalent to

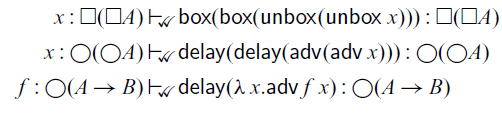

This adjustment of the operational semantics of

![]() $\mathsf{delay}$

is important, as it allows us to lift the restrictions present in previous calculi (Krishnaswami Reference Krishnaswami2013; Bahr et al., Reference Bahr, Graulund and Møgelberg2019, Reference Bahr, Graulund and Møgelberg2021) that disallow guarded recursive definitions to ‘look ahead’ more than one time step. In the Fitch-style calculi of Bahr et al. (Reference Bahr, Graulund and Møgelberg2019, Reference Bahr, Graulund and Møgelberg2021), this can be seen in the restriction to allow at most one

$\mathsf{delay}$

is important, as it allows us to lift the restrictions present in previous calculi (Krishnaswami Reference Krishnaswami2013; Bahr et al., Reference Bahr, Graulund and Møgelberg2019, Reference Bahr, Graulund and Møgelberg2021) that disallow guarded recursive definitions to ‘look ahead’ more than one time step. In the Fitch-style calculi of Bahr et al. (Reference Bahr, Graulund and Møgelberg2019, Reference Bahr, Graulund and Møgelberg2021), this can be seen in the restriction to allow at most one

![]() $\checkmark$

in the typing context. For the same reason, these two calculi also disallow function definitions in the context of a

$\checkmark$

in the typing context. For the same reason, these two calculi also disallow function definitions in the context of a

![]() $\checkmark$

. As a consequence, terms like

$\checkmark$

. As a consequence, terms like

![]() $\mathsf{delay}(\mathsf{delay}\, 0)$

and

$\mathsf{delay}(\mathsf{delay}\, 0)$

and

![]() $\mathsf{delay}(\lambda x. x)$

do not type-check in the calculi of Bahr et al. (Reference Bahr, Graulund and Møgelberg2019); Bahr et al. (Reference Bahr, Graulund and Møgelberg2021).

$\mathsf{delay}(\lambda x. x)$

do not type-check in the calculi of Bahr et al. (Reference Bahr, Graulund and Møgelberg2019); Bahr et al. (Reference Bahr, Graulund and Møgelberg2021).

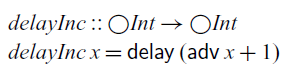

The extension in expressive power afforded by

![]() $\mathsf{Rattus}$

’s slightly more eager evaluation strategy has immediate practical benefits: most importantly, there are no restrictions on where one can define functions. Secondly, we can write recursive functions that look several steps into the future:

$\mathsf{Rattus}$

’s slightly more eager evaluation strategy has immediate practical benefits: most importantly, there are no restrictions on where one can define functions. Secondly, we can write recursive functions that look several steps into the future:

Applying

![]() $\textit{stutter}$

to 0 would construct a stream of numbers

$\textit{stutter}$

to 0 would construct a stream of numbers

![]() $0, 0, 1, 1, 2, 2, \ldots$

. In order to implement

$0, 0, 1, 1, 2, 2, \ldots$

. In order to implement

![]() $\textit{stutter}$

in the more restrictive language of Krishnaswami (Reference Krishnaswami2013) and Bahr et al. (Reference Bahr, Graulund and Møgelberg2019, Reference Bahr, Graulund and Møgelberg2021), we would need to decompose it into two mutually recursive functions (Bahr et al., Reference Bahr, Graulund and Møgelberg2021). A more detailed comparison of the expressive power of these calculi can be found in Section 4.5

$\textit{stutter}$

in the more restrictive language of Krishnaswami (Reference Krishnaswami2013) and Bahr et al. (Reference Bahr, Graulund and Møgelberg2019, Reference Bahr, Graulund and Møgelberg2021), we would need to decompose it into two mutually recursive functions (Bahr et al., Reference Bahr, Graulund and Møgelberg2021). A more detailed comparison of the expressive power of these calculi can be found in Section 4.5

At first glance, one might think that allowing multiple ticks could be accommodated without changing the evaluation of

![]() $\mathsf{delay}$

and instead extending the time one has to keep temporal values in memory accordingly, that is, we may safely garbage collect temporal values after

$\mathsf{delay}$

and instead extending the time one has to keep temporal values in memory accordingly, that is, we may safely garbage collect temporal values after

![]() $n+1$

time steps, if we allow at most n ticks. However, as we will demonstrate in Section 4.3.3, this is not enough. Even allowing just two ticks would require us to keep temporal values in memory indefinitely, that is, it would permit implicit space leaks. On the other hand, given the more eager evaluation strategy for

$n+1$

time steps, if we allow at most n ticks. However, as we will demonstrate in Section 4.3.3, this is not enough. Even allowing just two ticks would require us to keep temporal values in memory indefinitely, that is, it would permit implicit space leaks. On the other hand, given the more eager evaluation strategy for

![]() $\mathsf{delay}$

, we can still garbage collect all temporal values after two time steps, no matter how many ticks were involved in type-checking the program.

$\mathsf{delay}$

, we can still garbage collect all temporal values after two time steps, no matter how many ticks were involved in type-checking the program.

3 Reactive programming in Rattus

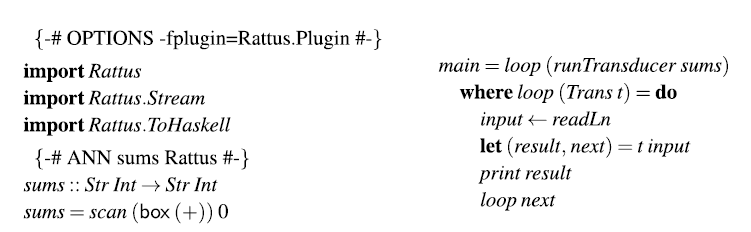

In this section, we showcase how

can be used for reactive programming. To this end, we implement a small library of combinators for programming with streams and events. We then use this library to implement a simple game. Finally, we implement a Yampa-style library and re-implement the game using that library instead. The full sources of both implementations of the game are included in the supplementary material along with a third variant that uses a combinator library based on monadic streams (Perez et al., Reference Perez, Bärenz and Nilsson2016).

3.1 Programming with streams and events

To illustrate how

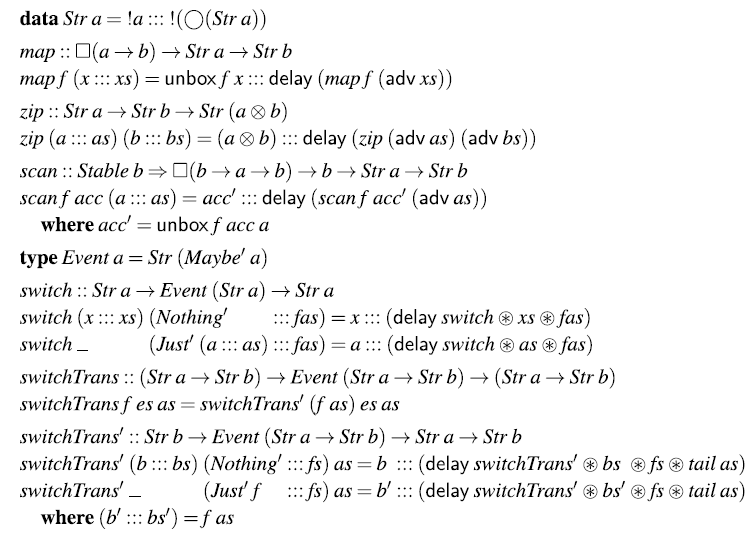

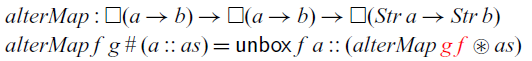

facilitates working with streams and events, we have implemented a small set of combinators, as shown in Figure 1. The

![]() $\textit{map}$

function should be familiar by now. The

$\textit{map}$

function should be familiar by now. The

![]() $\textit{zip}$

function combines two streams similarly to Haskell’s

$\textit{zip}$

function combines two streams similarly to Haskell’s

![]() $\textit{zip}$

function defined on lists. Note however that instead of the normal pair type, we use a strict pair type:

$\textit{zip}$

function defined on lists. Note however that instead of the normal pair type, we use a strict pair type:

Fig. 1. Small library for streams and events.

It is like the normal pair type (a,b), but when constructing a strict pair

![]() ${s\otimes t}$

, the two components s and t are evaluated to weak head normal form.

${s\otimes t}$

, the two components s and t are evaluated to weak head normal form.

The

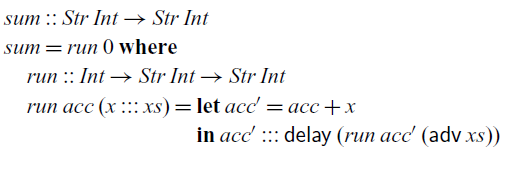

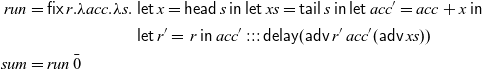

![]() $\textit{scan}$

function is similar to Haskell’s

$\textit{scan}$

function is similar to Haskell’s

![]() $\textit{scanl}$

function on lists: given a stream of values

$\textit{scanl}$

function on lists: given a stream of values

![]() $\textit{v}_{{\rm 0}},v_{1},v_{2},\ldots$

, the expression

$\textit{v}_{{\rm 0}},v_{1},v_{2},\ldots$

, the expression

![]() $\textit{scan}\;(\mathsf{box}\;f)\;{v}$

computes the stream

$\textit{scan}\;(\mathsf{box}\;f)\;{v}$

computes the stream

If one would want a variant of

![]() $\textit{scan}$

that is closer to Haskell’s

$\textit{scan}$

that is closer to Haskell’s

![]() $\textit{scanl}$

, that is, the result starts with the value v instead of

$\textit{scanl}$

, that is, the result starts with the value v instead of

![]() ${f\;v\;v_{0}}$

, one can simply replace the first occurrence of

${f\;v\;v_{0}}$

, one can simply replace the first occurrence of

![]() $\textit{acc}'$

in the definition of

$\textit{acc}'$

in the definition of

![]() $\textit{scan}$

with

$\textit{scan}$

with

![]() $\textit{acc}$

. Note that the type b has to be stable in the definition of

$\textit{acc}$

. Note that the type b has to be stable in the definition of

![]() $\textit{scan}$

so that

$\textit{scan}$

so that

![]() $\textit{acc}'::b$

is still in scope under

$\textit{acc}'::b$

is still in scope under

![]() $\mathsf{delay}$

.

$\mathsf{delay}$

.

A central component of FRP is that it must provide a way to react to events. In particular, it must support the ability to switch behaviour in response to the occurrence of an event. There are different ways to represent events. The simplest representation defines events of type a as streams of type

![]() $\textit{Maybe}\;a$

. However, we will use the strict variant of the

$\textit{Maybe}\;a$

. However, we will use the strict variant of the

![]() ${\textit{Maybe}}$

type:

${\textit{Maybe}}$

type:

We can then devise a simple

![]() ${\textit{switch}}$

combinator that reacts to events. Given an initial stream xs and an event e that may produce a stream, swith xs e initially behaves as xs but changes to the new stream provided by the occurrence of an event. In this implementation, the behaviour changes every time an event occurs, not only the first time. For a one-shot variant of

${\textit{switch}}$

combinator that reacts to events. Given an initial stream xs and an event e that may produce a stream, swith xs e initially behaves as xs but changes to the new stream provided by the occurrence of an event. In this implementation, the behaviour changes every time an event occurs, not only the first time. For a one-shot variant of

![]() ${\textit{switch}}$

, we would just have to change the second equation to

${\textit{switch}}$

, we would just have to change the second equation to

In the definition of

![]() ${\textit{switch}}$

, we use the applicative operator

${\textit{switch}}$

, we use the applicative operator

![]() ${\mathop{\circledast}}$

defined as follows:

${\mathop{\circledast}}$

defined as follows:

Instead of using

![]() ${\mathop{\circledast}}$

, we could have also written

${\mathop{\circledast}}$

, we could have also written

![]() ${\mathsf{delay}\;(\textit{switch}\;(\mathsf{adv}\;xs)\;(\mathsf{adv}\;\textit{fas}))}$

instead.

${\mathsf{delay}\;(\textit{switch}\;(\mathsf{adv}\;xs)\;(\mathsf{adv}\;\textit{fas}))}$

instead.

Finally,

![]() ${\textit{switchTrans}}$

is a variant of

${\textit{switchTrans}}$

is a variant of

![]() ${\textit{switch}}$

that switches to a new stream function rather than just a stream. It is implemented using the variant

${\textit{switch}}$

that switches to a new stream function rather than just a stream. It is implemented using the variant

![]() $\textit{switchTrans}'$

, which takes just a stream as its first argument instead of a stream function.

$\textit{switchTrans}'$

, which takes just a stream as its first argument instead of a stream function.

3.2 A simple reactive program

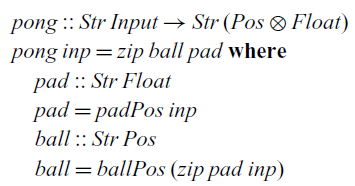

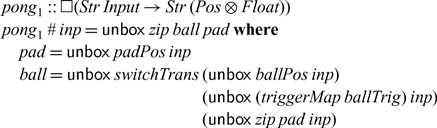

To put our bare-bones FRP library to use, let’s implement a simple single-player variant of the classic game Pong: the player has to move a paddle at the bottom of the screen to bounce a ball and prevent it from falling. Footnote 2 The core behaviour is described by the following stream function:

It receives a stream of inputs (button presses and how much time has passed since the last input) and produces a stream of pairs consisting of the 2D position of the ball and the x coordinate of the paddle. Its implementation uses two helper functions to compute these two components. The position of the paddle only depends on the input, whereas the position of the ball also depends on the position of the paddle (since it may bounce off it):

Both auxiliary functions follow the same structure. They use a

![]() $\textit{scan}$

to compute the position and the velocity of the object, while consuming the input stream. The velocity is only needed to compute the position and is therefore projected away afterward using

$\textit{scan}$

to compute the position and the velocity of the object, while consuming the input stream. The velocity is only needed to compute the position and is therefore projected away afterward using

![]() $\textit{map}$

. Here,

$\textit{map}$

. Here,

![]() ${\textit{fst}'}$

is the first projection for the strict pair type. We can see that the ball starts at the centre of the screen (at coordinates (0,0)) and moves toward the upper right corner (with velocity (20,50)).

${\textit{fst}'}$

is the first projection for the strict pair type. We can see that the ball starts at the centre of the screen (at coordinates (0,0)) and moves toward the upper right corner (with velocity (20,50)).

This simple game only requires a static dataflow network. To demonstrate the ability to express dynamically changing dataflow networks in

![]() $\mathsf{Rattus}$

, we briefly discuss three refinements of the pong game, each of which introduces different kinds of dynamic behaviours. In each case, we reuse the existing stream functions that describe the basic behaviour of the ball and the paddle, but we combine them using different combinators. Thus, these examples also demonstrate the modularity that

$\mathsf{Rattus}$

, we briefly discuss three refinements of the pong game, each of which introduces different kinds of dynamic behaviours. In each case, we reuse the existing stream functions that describe the basic behaviour of the ball and the paddle, but we combine them using different combinators. Thus, these examples also demonstrate the modularity that

![]() $\mathsf{Rattus}$

affords.

$\mathsf{Rattus}$

affords.

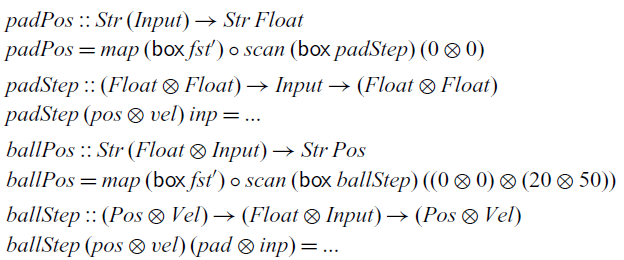

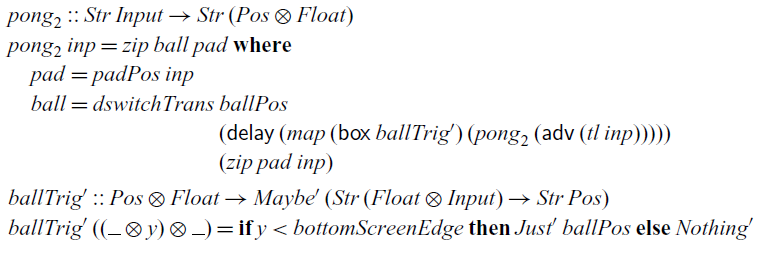

First refinement.

We change the implementation of

![]() $\textit{pong}$

so that it allows the player to reset the game, for example, after the ball has fallen off the screen:

$\textit{pong}$

so that it allows the player to reset the game, for example, after the ball has fallen off the screen:

To achieve this behaviour, we use the

![]() ${\textit{switchTrans}}$

combinator, which we initialise with the original behaviour of the ball. The event that will trigger the switch is constructed by mapping

${\textit{switchTrans}}$

combinator, which we initialise with the original behaviour of the ball. The event that will trigger the switch is constructed by mapping

![]() ${\textit{ballTrig}}$

over the input stream, which will create an event of type

${\textit{ballTrig}}$

over the input stream, which will create an event of type

![]() ${\textit{Event}\;(\textit{Str}\;(\textit{Float}\otimes\textit{Input})\to

\textit{Str}\;\textit{Pos})}$

, which will be triggered every time the player hits the reset button.

${\textit{Event}\;(\textit{Str}\;(\textit{Float}\otimes\textit{Input})\to

\textit{Str}\;\textit{Pos})}$

, which will be triggered every time the player hits the reset button.

Second refinement.

Footnote 3

We can further refine this behaviour of the game so that it automatically resets once the ball has fallen off the screen. This requires a feedback loop since the behaviour of the ball depends on the current position of the ball. Such a feedback loop can be constructed using a variant of the

![]() ${\textit{switchTrans}}$

combinator that takes a delayed event as argument:

${\textit{switchTrans}}$

combinator that takes a delayed event as argument:

Thus, the event we pass on to

![]() ${\textit{dswitchTrans}}$

can be constructed from the output of

${\textit{dswitchTrans}}$

can be constructed from the output of

![]() ${\textit{dswitchTrans}}$

by using guarded recursion, which closes the feedback loop:

${\textit{dswitchTrans}}$

by using guarded recursion, which closes the feedback loop:

Final refinement.

Footnote 4

Finally, we change the game a bit so as to allow the player to spawn new balls at any time and remove balls that are currently in play. To this end, we introduce a type

![]() ${\textit{ObjAction}\;a\;b}$

that represents events that may remove or add objects defined by a stream function of type

${\textit{ObjAction}\;a\;b}$

that represents events that may remove or add objects defined by a stream function of type

![]() ${\textit{Str}\;a\to \textit{Str}\;b}$

:

${\textit{Str}\;a\to \textit{Str}\;b}$

:

To keep it simple, we only have two operations: we may add an object by giving its stream function, or we may remove the oldest object. Given the strict list type

we can implement a combinator that can react to such events:

This combinator takes three arguments: an event that may trigger operations to manipulate the list of active objects, the initial list of active objects, and the input stream. The result is a stream that at each step contains a list with the current value of each active object.

To use this combinator, we revise the

![]() ${\textit{ballTrig}}$

function so that it produces events to add or remove balls in response to input by the player, and we initialise the list of objects as the empty list:

${\textit{ballTrig}}$

function so that it produces events to add or remove balls in response to input by the player, and we initialise the list of objects as the empty list:

Instead of a stream of type

![]() ${\textit{Str}\;\textit{Pos}}$

to describe a single ball, we now have a stream of type

${\textit{Str}\;\textit{Pos}}$

to describe a single ball, we now have a stream of type

![]() ${\textit{Str}\;(\textit{List}\;\textit{Pos})}$

to describe multiple balls.

${\textit{Str}\;(\textit{List}\;\textit{Pos})}$

to describe multiple balls.

The

![]() ${\textit{objTrans}}$

combinator demonstrates that

${\textit{objTrans}}$

combinator demonstrates that

![]() $\mathsf{Rattus}$

can accommodate dataflow networks that dynamically grow and shrink. But more realistic variants of this combinator can be implemented as well. For example, by replacing

$\mathsf{Rattus}$

can accommodate dataflow networks that dynamically grow and shrink. But more realistic variants of this combinator can be implemented as well. For example, by replacing

![]() ${\textit{List}}$

with a

${\textit{List}}$

with a

![]() ${\textit{Map}}$

data structure, objects may have identifiers and can be removed or manipulated based on that identifier. Orthogonal to that, we may also allow objects to destroy themselves and spawn new objects. This can be achieved by describing objects using trees instead of streams:

${\textit{Map}}$

data structure, objects may have identifiers and can be removed or manipulated based on that identifier. Orthogonal to that, we may also allow objects to destroy themselves and spawn new objects. This can be achieved by describing objects using trees instead of streams:

A variant of

![]() ${\textit{objTrans}}$

can then be implemented where the type

${\textit{objTrans}}$

can then be implemented where the type

![]() ${\textit{Str}\;b}$

in

${\textit{Str}\;b}$

in

![]() ${\textit{objTrans}}$

and

${\textit{objTrans}}$

and

![]() ${\textit{ObjAction}\;a\;b}$

is replaced by

${\textit{ObjAction}\;a\;b}$

is replaced by

![]() ${\textit{Tree}\;b}$

. For example, this can be used in the pong game to make balls destroy themselves once they disappear from screen or make a ball split into two balls when it hits a certain surface.

${\textit{Tree}\;b}$

. For example, this can be used in the pong game to make balls destroy themselves once they disappear from screen or make a ball split into two balls when it hits a certain surface.

3.3 Arrowised FRP

The benefit of a modal FRP language is that we can directly interact with signals and events in a way that guarantees causality. A popular alternative to ensure causality is arrowised FRP (Nilsson et al., Reference Nilsson, Courtney and Peterson2002), which takes signal functions as primitive and uses Haskell’s arrow notation (Paterson, Reference Paterson2001) to construct them. By implementing an arrowised FRP library in

![]() $\mathsf{Rattus}$

instead of plain Haskell, we can not only guarantee causality but also productivity and the absence of implicit space leaks. As we will see, this forces us to slightly restrict the API of arrowised FRP compared to Yampa. Furthermore, this exercise demonstrates that

$\mathsf{Rattus}$

instead of plain Haskell, we can not only guarantee causality but also productivity and the absence of implicit space leaks. As we will see, this forces us to slightly restrict the API of arrowised FRP compared to Yampa. Furthermore, this exercise demonstrates that

![]() $\mathsf{Rattus}$

can also be used to implement a continuous-time FRP library, in contrast to the discrete-time FRP library from Section 3.1.

$\mathsf{Rattus}$

can also be used to implement a continuous-time FRP library, in contrast to the discrete-time FRP library from Section 3.1.

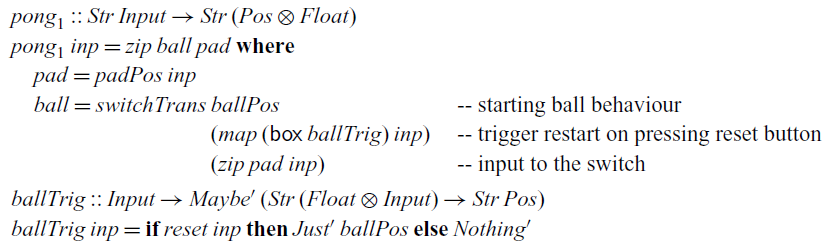

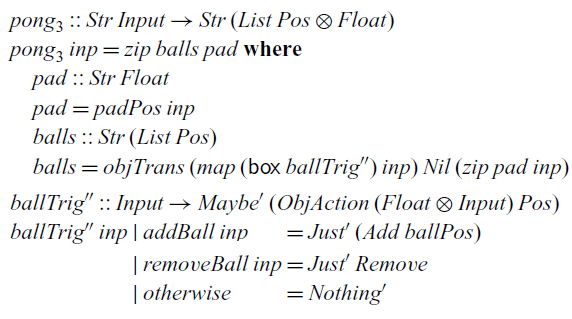

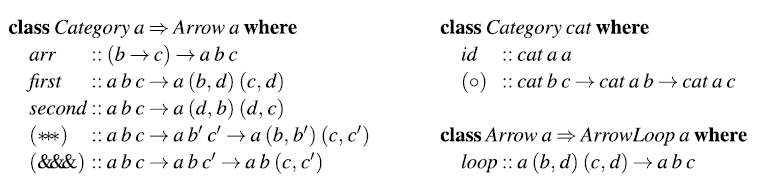

At the centre of arrowised FRP is the

![]() ${\textit{Arrow}}$

type class shown in Figure 2. If we can implement a signal function type

${\textit{Arrow}}$

type class shown in Figure 2. If we can implement a signal function type

![]() ${\textit{SF}\;a\;b}$

that implements the

${\textit{SF}\;a\;b}$

that implements the

![]() ${\textit{Arrow}}$

class, we can benefit from the convenient notation Haskell provides for it. For example, assuming we have signal functions

${\textit{Arrow}}$

class, we can benefit from the convenient notation Haskell provides for it. For example, assuming we have signal functions

![]() ${\textit{ballPos}::\textit{SF}\;(\textit{Float}\otimes\textit{Input})\;\textit{Pos}}$

and padPos::SF Input Float describing the positions of the ball and the paddle from our game in Section 3.2, we can combine these as follows:

${\textit{ballPos}::\textit{SF}\;(\textit{Float}\otimes\textit{Input})\;\textit{Pos}}$

and padPos::SF Input Float describing the positions of the ball and the paddle from our game in Section 3.2, we can combine these as follows:

Fig. 2. Arrow type class.

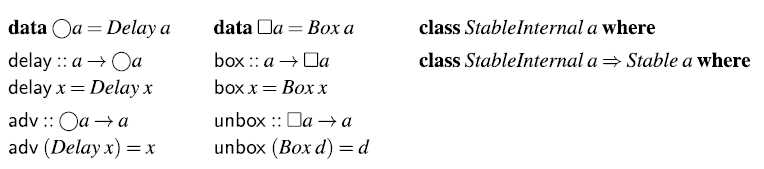

The

![]() $\mathsf{Rattus}$

definition of

$\mathsf{Rattus}$

definition of

![]() ${\textit{SF}}$

is almost identical to the original Haskell definition from Nilsson et al. (Reference Nilsson, Courtney and Peterson2002). The only difference is the use of strict types and the insertion of the

${\textit{SF}}$

is almost identical to the original Haskell definition from Nilsson et al. (Reference Nilsson, Courtney and Peterson2002). The only difference is the use of strict types and the insertion of the

![]() $\bigcirc$

modality to make it a guarded recursive type:

$\bigcirc$

modality to make it a guarded recursive type:

This implements a continuous-time signal function using sampling, where the additional argument of type

![]() ${\textit{Float}}$

indicates the time passed since the previous sample.

${\textit{Float}}$

indicates the time passed since the previous sample.

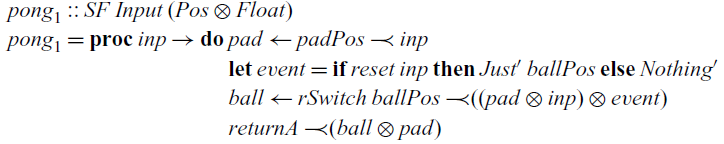

Implementing the methods of the

![]() ${\textit{Arrow}}$

type class is straightforward with the exception of the

${\textit{Arrow}}$

type class is straightforward with the exception of the

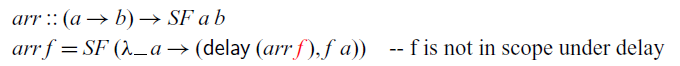

![]() ${\textit{arr}}$

method. In fact, we cannot implement

${\textit{arr}}$

method. In fact, we cannot implement

![]() ${\textit{arr}}$

in

${\textit{arr}}$

in

![]() $\mathsf{Rattus}$

at all. Because the first argument is not stable, it falls out of scope in the recursive call:

$\mathsf{Rattus}$

at all. Because the first argument is not stable, it falls out of scope in the recursive call:

The situation is similar to the

![]() $\textit{map}$

function, and we must box the function argument so that it remains available at all times in the future:

$\textit{map}$

function, and we must box the function argument so that it remains available at all times in the future:

That is, the

![]() ${\textit{arr}}$

method could be a potential source for space leaks. However, in practice,

${\textit{arr}}$

method could be a potential source for space leaks. However, in practice,

![]() ${\textit{arr}}$

does not seem to cause space leaks and thus its use in conventional arrowised FRP libraries should be safe. Nonetheless, in

${\textit{arr}}$

does not seem to cause space leaks and thus its use in conventional arrowised FRP libraries should be safe. Nonetheless, in

![]() $\mathsf{Rattus}$

, we have to replace

$\mathsf{Rattus}$

, we have to replace

![]() ${\textit{arr}}$

with the more restrictive variant

${\textit{arr}}$

with the more restrictive variant

![]() ${\textit{arrBox}}$

. But fortunately, this does not prevent us from using the arrow notation:

${\textit{arrBox}}$

. But fortunately, this does not prevent us from using the arrow notation:

![]() $\mathsf{Rattus}$

treats

$\mathsf{Rattus}$

treats

![]() ${\textit{arr}\;f}$

as a short hand for

${\textit{arr}\;f}$

as a short hand for

![]() ${\textit{arrBox}\;(\mathsf{box}\;f)}$

, which allows us to use the arrow notation while making sure that

${\textit{arrBox}\;(\mathsf{box}\;f)}$

, which allows us to use the arrow notation while making sure that

![]() ${\mathsf{box}\;f}$

is well-typed, that is, f only refers to variables of stable type.

${\mathsf{box}\;f}$

is well-typed, that is, f only refers to variables of stable type.

The

![]() ${\textit{Arrow}}$

type class only provides a basic interface for constructing static signal functions. To permit dynamic behaviour, we need to provide additional combinators, for example, for switching signals and for recursive definitions. The

${\textit{Arrow}}$

type class only provides a basic interface for constructing static signal functions. To permit dynamic behaviour, we need to provide additional combinators, for example, for switching signals and for recursive definitions. The

![]() ${\textit{rSwitch}}$

combinator corresponds to the

${\textit{rSwitch}}$

combinator corresponds to the

![]() ${\textit{switchTrans}}$

combinator from Figure 1:

${\textit{switchTrans}}$

combinator from Figure 1:

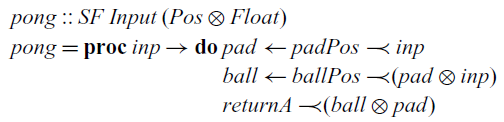

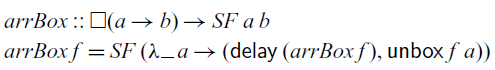

This combinator allows us to implement our game so that it resets to its start position if we hit the reset button: Footnote 5

Arrows can be endowed with a very general recursion principle by instantiating the

![]() $\textit{loop}$

method in the

$\textit{loop}$

method in the

![]() ${\textit{ArrowLoop}}$

type class shown in Figure 2. However,

${\textit{ArrowLoop}}$

type class shown in Figure 2. However,

![]() $\textit{loop}$

cannot be implemented in

$\textit{loop}$

cannot be implemented in

![]() $\mathsf{Rattus}$

as it would break the productivity property. Moreover,

$\mathsf{Rattus}$

as it would break the productivity property. Moreover,

![]() $\textit{loop}$

depends crucially on lazy evaluation and is thus a source for space leaks.

$\textit{loop}$

depends crucially on lazy evaluation and is thus a source for space leaks.

Instead of

![]() $\textit{loop}$

, we implement a different recursion principle that corresponds to guarded recursion:

$\textit{loop}$

, we implement a different recursion principle that corresponds to guarded recursion:

Intuitively speaking, this combinator constructs a signal function from b to c with the help of an internal state of type d. The first argument initialises the state, and the second argument is a signal function that turns input of type b into output of type c while also updating the internal state. Apart from the addition of the

![]() $\bigcirc$

modality and strict pair types, this definition has the same type as Yampa’s

$\bigcirc$

modality and strict pair types, this definition has the same type as Yampa’s

![]() ${\textit{loopPre}}$

. Alternatively, we could drop the

${\textit{loopPre}}$

. Alternatively, we could drop the

![]() $\bigcirc$

modality and constrain d to be stable. The use of

$\bigcirc$

modality and constrain d to be stable. The use of

![]() ${\textit{loopPre}}$

instead of

${\textit{loopPre}}$

instead of

![]() $\textit{loop}$

introduce a delay by one sampling step (as indicated by the

$\textit{loop}$

introduce a delay by one sampling step (as indicated by the

![]() $\bigcirc$

modality) and thus ensures productivity. However, in practice, such a delay can be avoided by refactoring the underlying signal function.

$\bigcirc$

modality) and thus ensures productivity. However, in practice, such a delay can be avoided by refactoring the underlying signal function.

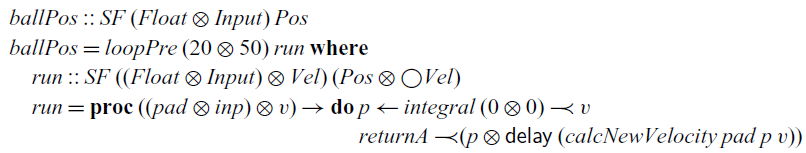

Using the

![]() ${\textit{loopPre}}$

combinator, we can implement the signal function of the ball:

${\textit{loopPre}}$

combinator, we can implement the signal function of the ball:

Here, we also use the

![]() ${\textit{integral}}$

combinator that computes the integral of a signal using a simple approximation that sums up rectangles under the curve:

${\textit{integral}}$

combinator that computes the integral of a signal using a simple approximation that sums up rectangles under the curve:

The signal function for the paddle can be implemented in a similar fashion. The complete code of the case studies presented in this section can be found in the supplementary material.

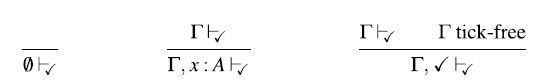

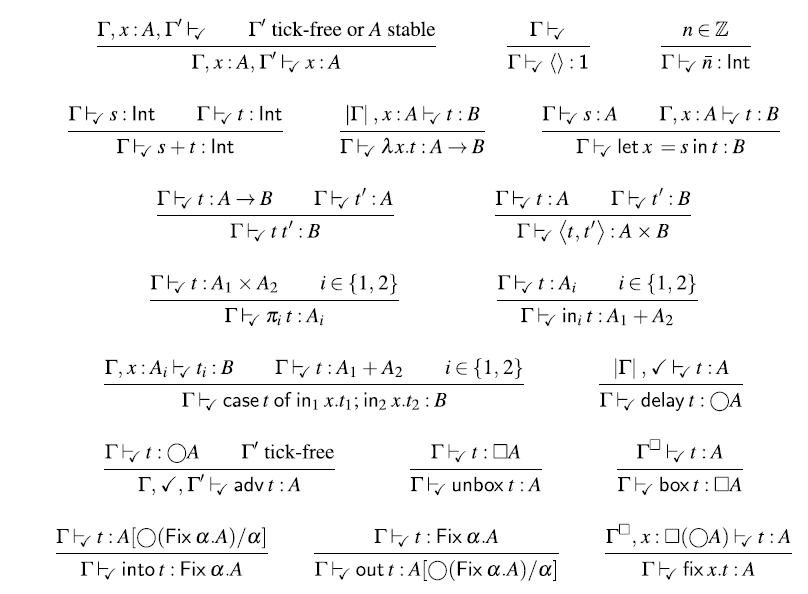

4 Core calculus

In this section, we present the core calculus of

![]() $\mathsf{Rattus}$

, which we call

$\mathsf{Rattus}$

, which we call

![]() $\lambda_{\checkmark\!\!\checkmark}$

. The purpose of

$\lambda_{\checkmark\!\!\checkmark}$

. The purpose of

![]() $\lambda_{\checkmark\!\!\checkmark}$

is to formally present the language’s Fitch-style typing rules, its operational semantics, and to formally prove the central operational properties, that is, productivity, causality, and absence of implicit space leaks. To this end, the calculus is stripped down to its essence: a simply typed lambda calculus extended with guarded recursive types

$\lambda_{\checkmark\!\!\checkmark}$

is to formally present the language’s Fitch-style typing rules, its operational semantics, and to formally prove the central operational properties, that is, productivity, causality, and absence of implicit space leaks. To this end, the calculus is stripped down to its essence: a simply typed lambda calculus extended with guarded recursive types

![]() $\mathsf{Fix}\,\alpha. A$

, a guarded fixed point combinator, and the two type modalities

$\mathsf{Fix}\,\alpha. A$

, a guarded fixed point combinator, and the two type modalities

![]() $\square$

and

$\square$

and

![]() $\bigcirc $

. Since general inductive types and polymorphic types are orthogonal to the issue of operational properties in reactive programming, we have omitted these for the sake of clarity.

$\bigcirc $

. Since general inductive types and polymorphic types are orthogonal to the issue of operational properties in reactive programming, we have omitted these for the sake of clarity.

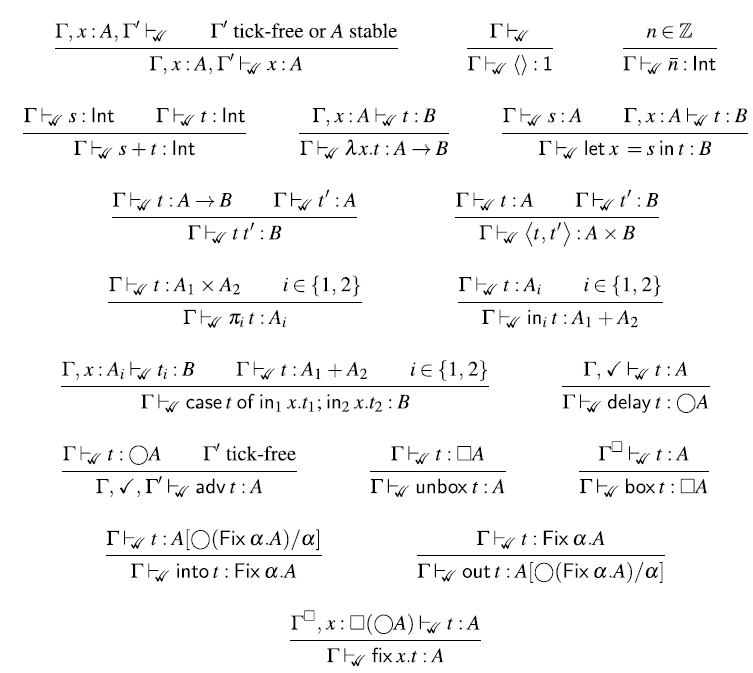

4.1 Type system

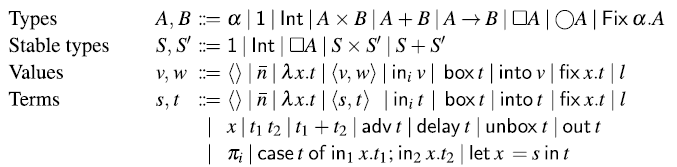

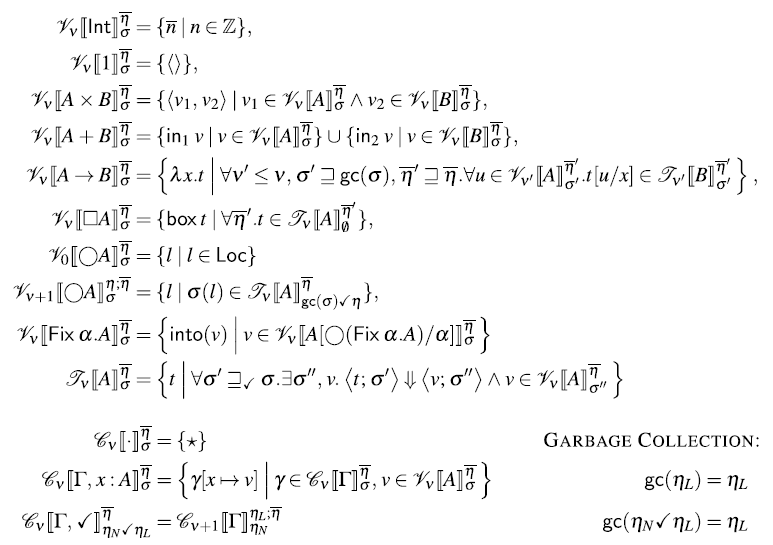

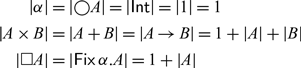

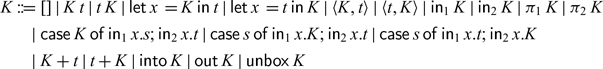

Figure 3 defines the syntax of

![]() $\lambda_{\checkmark\!\!\checkmark}$

. Besides guarded recursive types, guarded fixed points, and the two type modalities, we include standard sum and product types along with unit and integer types. The type

$\lambda_{\checkmark\!\!\checkmark}$

. Besides guarded recursive types, guarded fixed points, and the two type modalities, we include standard sum and product types along with unit and integer types. The type

![]() ${\textit{Str}\;\textit{A}}$

of streams of type A is represented as the guarded recursive type

${\textit{Str}\;\textit{A}}$

of streams of type A is represented as the guarded recursive type

![]() $\mathsf{Fix}\,\alpha. A \times \alpha$

. Note the absence of

$\mathsf{Fix}\,\alpha. A \times \alpha$

. Note the absence of

![]() $\bigcirc $

in this type. When unfolding guarded recursive types such as

$\bigcirc $

in this type. When unfolding guarded recursive types such as

![]() $\mathsf{Fix}\,\alpha. A \times \alpha$

, the

$\mathsf{Fix}\,\alpha. A \times \alpha$

, the

![]() $\bigcirc$

modality is inserted implicitly:

$\bigcirc$

modality is inserted implicitly:

![]() $\mathsf{Fix}\,\alpha . A \times \alpha \cong A \times \bigcirc (\mathsf{Fix}\,\alpha. A

\times \alpha)$

. This ensures that guarded recursive types are by construction always guarded by the

$\mathsf{Fix}\,\alpha . A \times \alpha \cong A \times \bigcirc (\mathsf{Fix}\,\alpha. A

\times \alpha)$

. This ensures that guarded recursive types are by construction always guarded by the

![]() $\bigcirc$

modality.

$\bigcirc$

modality.

Fig. 3. Syntax of (stable) types, terms, and values. In typing rules, only closed types are considered (i.e., each occurrence of a type variable

![]() $\alpha$

is in the scope of a

$\alpha$

is in the scope of a

![]() $\mathsf{Fix}\,\alpha$

).

$\mathsf{Fix}\,\alpha$

).

For the sake of the operational semantics, the syntax also includes heap locations 1. However, as we shall see, heap locations cannot be used by the programmer directly, because there is no typing rule for them. Instead, heap locations are allocated and returned by

![]() $\mathsf{delay}$