1 Introduction

Invertible computation, also known as reversible computation in physics and more hardware-oriented contexts, is a fundamental concept in computing. It involves computations that run both forward and backward so that the forward/backward semantics form a bijection. (In this paper, we do not concern ourselves with the totality of functions. We call a function a bijection if it is bijective on its actual domain and range, instead of its formal domain and codomain.) Early studies of invertible computation arise from the effort to reduce heat dissipation caused by information-loss in the traditional (unidirectional) computation model (Landauer, Reference Landauer1961). More modern interpretations of the problem include software concerns that are not necessarily connected to the physical realization. Examples of such include developing pairs of programs that are each other’s inverses: serializer and deserializer (Kennedy & Vytiniotis, Reference Kennedy and Vytiniotis2012), parser and printer (Rendel & Ostermann, Reference Rendel and Ostermann2010; Matsuda & Wang, Reference Matsuda and Wang2013, Reference Matsuda and Wang2018b), compressor and decompressor (Srivastava et al., Reference Srivastava, Gulwani, Chaudhuri and Foster2011), and also auxiliary processes in other program transformations such as bidirectionalization (Matsuda et al., Reference Matsuda, Hu, Nakano, Hamana and Takeichi2007).

Invertible (reversible) programming languages are languages that offer primitive support to invertible computations. Examples include Janus (Lutz, Reference Lutz1986; Yokoyama et al.,

2008), Frank’s R (Frank, Reference Frank1997),

![]() $\Psi$

-Lisp (Baker, Reference Baker1992), RFun (Yokoyama et al., Reference Yokoyama, Axelsen and Glück2011),

$\Psi$

-Lisp (Baker, Reference Baker1992), RFun (Yokoyama et al., Reference Yokoyama, Axelsen and Glück2011),

![]() $\Pi$

/

$\Pi$

/

![]() $\Pi^o$

(James & Sabry, Reference James and Sabry2012), and Inv (Reference Mu, Hu and TakeichiMu et al., 2004b

). The basic idea of these programming languages is to support deterministic forward and backward computation by local inversion: if a forward execution issues (invertible) commands

$\Pi^o$

(James & Sabry, Reference James and Sabry2012), and Inv (Reference Mu, Hu and TakeichiMu et al., 2004b

). The basic idea of these programming languages is to support deterministic forward and backward computation by local inversion: if a forward execution issues (invertible) commands

![]() $c_1$

,

$c_1$

,

![]() $c_2$

, and

$c_2$

, and

![]() $c_3$

in this order, a backward execution issues corresponding inverse commands in the reverse order, as

$c_3$

in this order, a backward execution issues corresponding inverse commands in the reverse order, as

![]() $c_3^{-1}$

,

$c_3^{-1}$

,

![]() $c_2^{-1}$

, and

$c_2^{-1}$

, and

![]() $c_1^{-1}$

. This design has a strong connection to the underlying physical reversibility and is known to be able to achieve reversible Turing completeness (Bennett, Reference Bennett1973); i.e., all computable bijections can be defined.

$c_1^{-1}$

. This design has a strong connection to the underlying physical reversibility and is known to be able to achieve reversible Turing completeness (Bennett, Reference Bennett1973); i.e., all computable bijections can be defined.

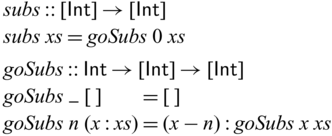

However, this requirement of local invertibility does not always match how high-level programs are naturally expressed. As a concrete example, let us see the following toy program that computes the difference of two adjacent elements in a list, where the first element in the input list is kept in the output. For example, we have

![]() ${subs} ~ [1,2,5,2,3] = [1,1,3,-3,1]$

.

${subs} ~ [1,2,5,2,3] = [1,1,3,-3,1]$

.

Despite being simple, these kind of transformations are nevertheless useful. For example, a function similar to subs can be found in the preprocessing step of image compression algorithms such as those used for PNG.Footnote 1 Another example is the encoding of bags (multisets) of integers, where subs can be used to convert sorted lists to lists of integers without any constraints (Kennedy & Vytiniotis, Reference Kennedy and Vytiniotis2012).

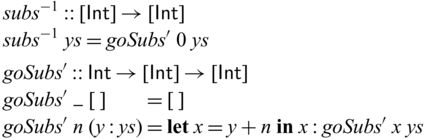

The function subs is invertible. We can define its inverse as below.

However, subs cannot be expressed directly in existing reversible programming languages. The problem is that, though subs is perfectly invertible, its subcomponent goSubs is not (its first argument is discarded in the empty-list case, and thus the function is not injective). Similar problems are also common in adaptive compression algorithms, where the model (such as a Huffman tree or a dictionary) grows in the same way in both compression and decompression, and the encoding process itself is only invertible after fixing the model at the point.

In the neighboring research area of program inversion, which studies program transformation techniques that derive

![]() $f^{-1}$

from f’s defintion, functions like goSubs are identified as partially invertible. Note that this notion of partiality is inspired by partial evaluation, and partial inversion (Romanenko, Reference Romanenko1991; Nishida et al., Reference Nishida, Sakai and Sakabe2005; Almendros-Jiménez & Vidal,

$f^{-1}$

from f’s defintion, functions like goSubs are identified as partially invertible. Note that this notion of partiality is inspired by partial evaluation, and partial inversion (Romanenko, Reference Romanenko1991; Nishida et al., Reference Nishida, Sakai and Sakabe2005; Almendros-Jiménez & Vidal,

2006) allows static (or fixed) parameters whose values are known in inversion and therefore not required to be invertible (for example the first argument of goSubs). (To avoid potential confusion, in this paper, we avoid the use of “partial” when referring to totality, and use the phrase “not-necessarily-total” instead.) However, program inversion by itself does not readily give rise to a design of invertible programming language. Like most program transformations, program inversion may fail, and often for reasons that are not obvious to users. Indeed, the method by Nishida et al. (Reference Nishida, Sakai and Sakabe2005) fails for subs, and for some other methods (Almendros-Jiménez & Vidal, Reference Almendros-Jiménez and Vidal2006; Kirkeby & Glück, Reference Kirkeby and Glück2019, Reference Kirkeby and Glück2020), success depends on the (heuristic) processing order of the expressions.

In this paper, we propose a novel programming language Sparcl

Footnote

2

that for the first time addresses the practical needs of partially invertible programs. The core idea of our proposal is based on a language design that allows invertible and conventional unidirectional computations, which are distinguished by types, to coexist and interact in a single definition. Specifically, inspired by Reference Matsuda and WangMatsuda & Wang (2018c

), our type system contains a special type constructor ![]() (pronounced as “invertible”), where

(pronounced as “invertible”), where ![]() represents A-typed values that are subject to invertible computation. However, having invertible types like

represents A-typed values that are subject to invertible computation. However, having invertible types like ![]() only solves half of the problem. For the applications we consider, exemplified by subs, the unidirectional parts (the first argument of goSubs) may depend on the invertible part (the second argument of goSubs), which complicates the design. (This is the very reason why Nishida et al. (Reference Nishida, Sakai and Sakabe2005)’s partial inversion fails for subs. In other words, a binding-time analysis (Gomard & Jones, Reference Gomard and Jones1991) is not enough (Almendros-Jiménez & Vidal, Reference Almendros-Jiménez and Vidal2006).) This interaction demands conversion from invertible values of type

only solves half of the problem. For the applications we consider, exemplified by subs, the unidirectional parts (the first argument of goSubs) may depend on the invertible part (the second argument of goSubs), which complicates the design. (This is the very reason why Nishida et al. (Reference Nishida, Sakai and Sakabe2005)’s partial inversion fails for subs. In other words, a binding-time analysis (Gomard & Jones, Reference Gomard and Jones1991) is not enough (Almendros-Jiménez & Vidal, Reference Almendros-Jiménez and Vidal2006).) This interaction demands conversion from invertible values of type ![]() to ordinary ones of type A, which only becomes feasible when we leverage the fact that such values can be seen as static (in the sense of partial inversion (Almendros-Jiménez & Vidal, Reference Almendros-Jiménez and Vidal2006)) if the values are already known in both forward and backward directions. The nature of reversibility suggests linearity or relevance (Walker, Reference Walker2004), as discarding of inputs is intrinsically irreversible. In fact, reversible functional programming languages (Baker, Reference Baker1992; Mu et al.,

to ordinary ones of type A, which only becomes feasible when we leverage the fact that such values can be seen as static (in the sense of partial inversion (Almendros-Jiménez & Vidal, Reference Almendros-Jiménez and Vidal2006)) if the values are already known in both forward and backward directions. The nature of reversibility suggests linearity or relevance (Walker, Reference Walker2004), as discarding of inputs is intrinsically irreversible. In fact, reversible functional programming languages (Baker, Reference Baker1992; Mu et al.,

2004b; Yokoyama et al., Reference Yokoyama, Axelsen and Glück2011; James & Sabry, Reference James and Sabry2012; Matsuda &Wang, Reference Matsuda and Wang2013) commonly assume a form of linearity or relevance, and in Sparcl this assumption is made explicit by a linear type system based on

![]() $\lambda^q_\to$

(the core system of Linear Haskell (Bernardy et al., Reference Bernardy, Boespflug, Newton, Peyton Jones and Spiwack2018)).

$\lambda^q_\to$

(the core system of Linear Haskell (Bernardy et al., Reference Bernardy, Boespflug, Newton, Peyton Jones and Spiwack2018)).

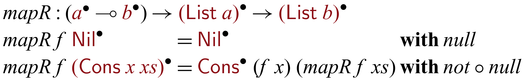

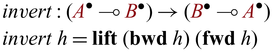

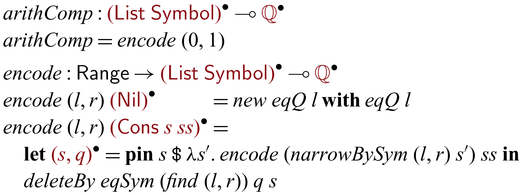

As a teaser, an invertible version of subs in Sparcl is shown in Figure 1.Footnote

3

In Sparcl, invertible functions from A to B are represented as functions of type ![]() , where

, where

![]() $\multimap$

is the constructor for linear functions. Partial invertibility is conveniently expressed by taking additional parameters as in

$\multimap$

is the constructor for linear functions. Partial invertibility is conveniently expressed by taking additional parameters as in ![]() . The

. The ![]() operator converts invertible objects into unidirectional ones. It captures a snapshot of its invertible argument and uses the snapshot as a static value in the body to create a safe local scope for the recursive call. Both the invertible argument and evaluation result of the body are returned as the output to preserve invertibility. The

operator converts invertible objects into unidirectional ones. It captures a snapshot of its invertible argument and uses the snapshot as a static value in the body to create a safe local scope for the recursive call. Both the invertible argument and evaluation result of the body are returned as the output to preserve invertibility. The

![]() $\mathrel{{{{{\mathbf{with}}}}}}$

conditions associated with the branches can be seen as postconditions which will be used for invertible case branching. We leave the detailed discussion of the language constructs to later sections, but would like to highlight the fact that looking beyond the surface syntax, the definition is identical in structure to how subs is defined in a conventional language: goSubs has the same recursive pattern with two cases for empty and nonempty lists. This close resemblance to the conventional programming style is what we strive for in the design of Sparcl.

$\mathrel{{{{{\mathbf{with}}}}}}$

conditions associated with the branches can be seen as postconditions which will be used for invertible case branching. We leave the detailed discussion of the language constructs to later sections, but would like to highlight the fact that looking beyond the surface syntax, the definition is identical in structure to how subs is defined in a conventional language: goSubs has the same recursive pattern with two cases for empty and nonempty lists. This close resemblance to the conventional programming style is what we strive for in the design of Sparcl.

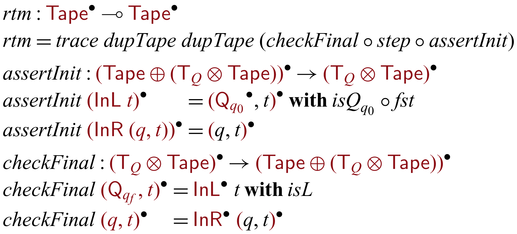

Fig. 1. Invertible subs in Sparcl.

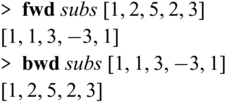

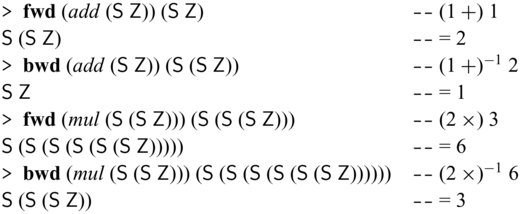

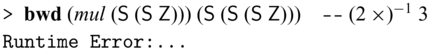

What Sparcl brings to the table is bijectivity guaranteed by construction (potentially with partially invertible functions as auxiliary functions). We can run Sparcl programs in both directions, for example as below, and it is guaranteed that

![]() ${{{{\mathbf{fwd}}}}} ~ e ~ v$

results in v’ if and only if

${{{{\mathbf{fwd}}}}} ~ e ~ v$

results in v’ if and only if

![]() ${{{{\mathbf{bwd}}}}} ~ e ~ v'$

results in v (

${{{{\mathbf{bwd}}}}} ~ e ~ v'$

results in v (

![]() ${{{{\mathbf{fwd}}}}}$

and

${{{{\mathbf{fwd}}}}}$

and

![]() ${{{{\mathbf{bwd}}}}}$

are primitives for forward and backward executions).

${{{{\mathbf{bwd}}}}}$

are primitives for forward and backward executions).

This guarantee of bijectivity is clearly different from the case of (functional) logic programming languages such as Prolog and Curry. Those languages rely on (lazy) generate-and-test (Antoy et al., Reference Antoy, Echahed and Hanus2000) to find inputs corresponding to a given output, a technique that may be adopted in the context of inverse computation (Abramov et al., Reference Abramov, Glück, Klimov, Papers, Virbitskaite and Voronkov2006). However, the generate-and-test strategy has the undesirable consequence of making reversible programming less apparent: it is unclear to programmers whether they are writing bijective programs that may be executed deterministically. Moreover, lazy generation of inputs may cause unpredictable overhead, whereas in reversible languages (Lutz, Reference Lutz1986; Baker,

1992; Frank, Reference Frank1997; Reference Mu, Hu and TakeichiMu et al., 2004b ; Yokoyama et al., Reference Yokoyama, Axelsen and Glück2008, Reference Yokoyama, Axelsen and Glück2011; James & Sabry, Reference James and Sabry2012) including Sparcl, the forward and backward executions of a program take the same steps.

[2]

One might notice from the type of

![]() ${{{{\mathbf{pin}}}}}$

that Sparcl is a higher-order language, in the sense that it contains the simply-typed

${{{{\mathbf{pin}}}}}$

that Sparcl is a higher-order language, in the sense that it contains the simply-typed

![]() $\lambda$

-calculus (more precisely, the simple multiplicity fragment of

$\lambda$

-calculus (more precisely, the simple multiplicity fragment of

![]() $\lambda^q_\to$

(Bernardy et al., Reference Bernardy, Boespflug, Newton, Peyton Jones and Spiwack2018)) as a subsystem. Thus, we can, for example, write an invertible map function in Sparcl as below.

$\lambda^q_\to$

(Bernardy et al., Reference Bernardy, Boespflug, Newton, Peyton Jones and Spiwack2018)) as a subsystem. Thus, we can, for example, write an invertible map function in Sparcl as below.

Ideally, we want to program invertible functions by using higher-order functions. But it is not possible. It is known that there is no higher-order invertible languages where

![]() $\multimap$

always denotes (not-necessarily-total) bijections. In contrast, there is no issue on having first-order invertible languages as demonstrated by existing reversible languages (see, e.g., RFun (Yokoyama et al., Reference Yokoyama, Axelsen and Glück2011)). Thus, the challenge of designing a higher-order invertible languages lies in finding a sweet spot such that a certain class of functions denote (not-necessarily-total) bijections and programmers can use higher-order functions to abstract computation patterns. Partial invertibility plays an important role here, as functions can be used as static inputs or outputs without violating invertibility. Though this idea has already been considered in the literature (Almendros-Jiménez & Vidal, Reference Almendros-Jiménez and Vidal2006; Mogensen, Reference Mogensen2008; Jacobsen et al., Reference Jacobsen, Kaarsgaard and Thomsen2018) while with restrictions (specifically, no closures), and the advantage is inherited from Reference Matsuda and WangMatsuda &Wang (2018c

) from which Sparcl is inspired, we claim that Sparcl is the first invertible programming language that achieved a proper design for higher-order programming.

$\multimap$

always denotes (not-necessarily-total) bijections. In contrast, there is no issue on having first-order invertible languages as demonstrated by existing reversible languages (see, e.g., RFun (Yokoyama et al., Reference Yokoyama, Axelsen and Glück2011)). Thus, the challenge of designing a higher-order invertible languages lies in finding a sweet spot such that a certain class of functions denote (not-necessarily-total) bijections and programmers can use higher-order functions to abstract computation patterns. Partial invertibility plays an important role here, as functions can be used as static inputs or outputs without violating invertibility. Though this idea has already been considered in the literature (Almendros-Jiménez & Vidal, Reference Almendros-Jiménez and Vidal2006; Mogensen, Reference Mogensen2008; Jacobsen et al., Reference Jacobsen, Kaarsgaard and Thomsen2018) while with restrictions (specifically, no closures), and the advantage is inherited from Reference Matsuda and WangMatsuda &Wang (2018c

) from which Sparcl is inspired, we claim that Sparcl is the first invertible programming language that achieved a proper design for higher-order programming.

In summary, our main contributions are as follows:

• We design Sparcl, a novel higher-order invertible programming language that captures the notion of partial invertibility. It is the first language that handles both clear separation and close integration of unidirectional and invertible computations, enabling new ways of structuring invertible programs. We formally specify the syntax, type system, and semantics of its core system named

![]() ${{{{\lambda^{\mathrm{PI}}_{\to}}}}}$

(Section 3).

${{{{\lambda^{\mathrm{PI}}_{\to}}}}}$

(Section 3).

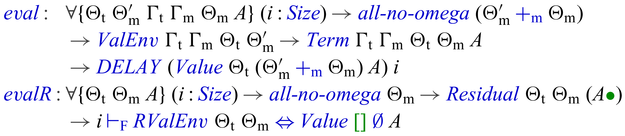

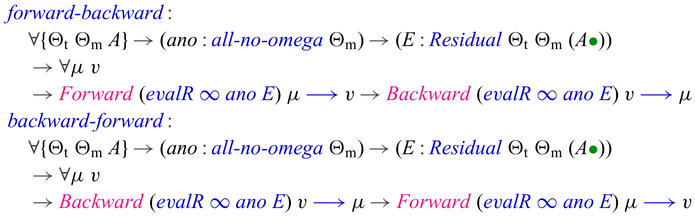

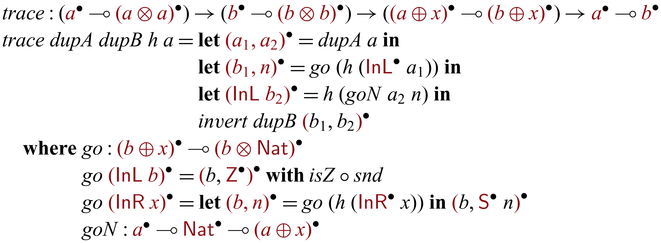

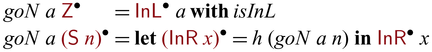

• We state and prove several properties about

![]() ${{{{\lambda^{\mathrm{PI}}_{\to}}}}}$

(Section 3.6), including subject reduction, bijectivity, and reversible Turing completeness (Bennett, Reference Bennett1973). We do not state the progress property directly, which is implied by our definitional (Reynolds, Reference Reynolds1998) interpreter written in AgdaFootnote

4

(Section 4).

${{{{\lambda^{\mathrm{PI}}_{\to}}}}}$

(Section 3.6), including subject reduction, bijectivity, and reversible Turing completeness (Bennett, Reference Bennett1973). We do not state the progress property directly, which is implied by our definitional (Reynolds, Reference Reynolds1998) interpreter written in AgdaFootnote

4

(Section 4).

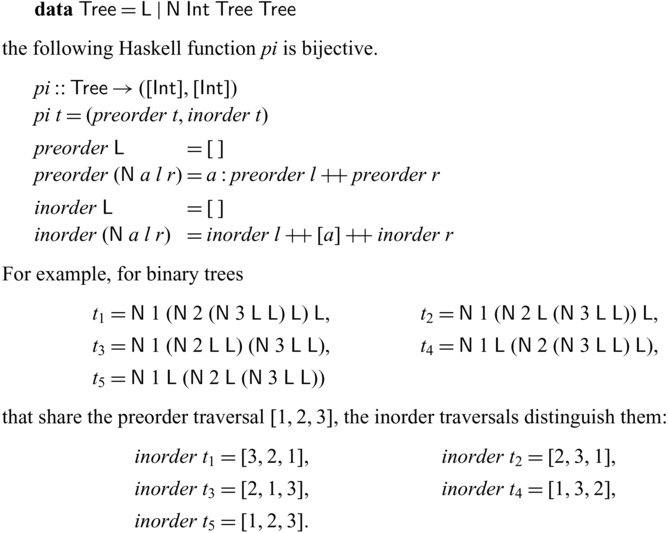

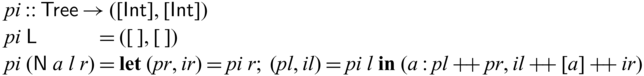

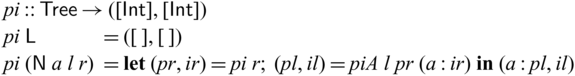

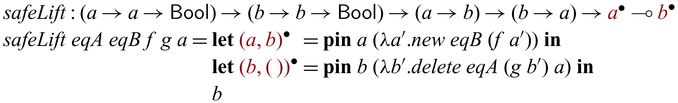

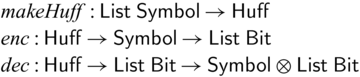

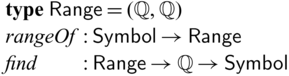

• We demonstrate the utility of Sparcl with nontrivial examples: tree rebuilding from inorder and preoder traversals (Mu & Bird, Reference Mu and Bird2003) and simplified versions of compression algorithms including Huffman coding, arithmetic coding, and LZ77 (Ziv & Lempel, Reference Ziv and Lempel1977) (Section 5).

In addition, a prototype implementation of Sparcl is available from https://github.com/kztk-m/sparcl,which also contains more examples. All the artifacts are linked from the Sparcl web page (https://bx-lang.github.io/EXHIBIT/sparcl.html).Footnote 5

A preliminary version of this paper appeared in ICFP20 (Matsuda & Wang, Reference Matsuda and Wang2020) with the same title. The major changes include a description of our Agda implementation in Section 4 and the arithmetic coding and LZ77 examples in Sections 5.3 and 5.4. Moreover, the related work section (Section 6) is updated to include work published after the preliminary version (Matsuda & Wang, Reference Matsuda and Wang2020).

2 Overview

In this section, we informally introduce the essential constructs of Sparcl and demonstrate their use with small examples.

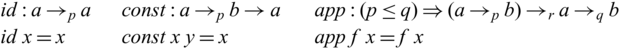

2.1 Linear-typed programming

Linearity (or weaker relevance) is commonly adopted in reversible functional languages (Baker, Reference Baker1992; Reference Mu, Hu and TakeichiMu et al., 2004b

; Yokoyama et al., Reference Yokoyama, Axelsen and Glück2011; James & Sabry, Reference James and Sabry2012; Matsuda & Wang, Reference Matsuda and Wang2013) to exclude noninjective functions such as constant functions. Sparcl is no exception (we will revisit its importance in Section 2.3) and adopts a linear type system based on

![]() $\lambda^q_{\to}$

(the core system of Linear Haskell (Bernardy et al., Reference Bernardy, Boespflug, Newton, Peyton Jones and Spiwack2018)). A feature of the type system is its function type

$\lambda^q_{\to}$

(the core system of Linear Haskell (Bernardy et al., Reference Bernardy, Boespflug, Newton, Peyton Jones and Spiwack2018)). A feature of the type system is its function type

![]() $A \to_{p} B$

, where the arrow is annotated by the argument’s multiplicity (1 or

$A \to_{p} B$

, where the arrow is annotated by the argument’s multiplicity (1 or

![]() $\omega$

). Here,

$\omega$

). Here,

![]() $A \to_{1} B$

denotes linear functions that use the input exactly once, while

$A \to_{1} B$

denotes linear functions that use the input exactly once, while

![]() $A \to_{\omega} B$

denotes unrestricted functions that have no restriction on the use of its input. The following are some examples of linear and unrestricted functions.

$A \to_{\omega} B$

denotes unrestricted functions that have no restriction on the use of its input. The following are some examples of linear and unrestricted functions.

Observe that the double used x twice and const discards y; hence, the corresponding arrows must be annotated by

![]() $\omega$

. The purpose of the type system is to ensure bijectivity. But having linearity alone is not sufficient. We will come back to this point after showing invertible programming in Sparcl. Readers who are familiar with linear-type systems that have the exponential operator

$\omega$

. The purpose of the type system is to ensure bijectivity. But having linearity alone is not sufficient. We will come back to this point after showing invertible programming in Sparcl. Readers who are familiar with linear-type systems that have the exponential operator

![]() $!$

(Wadler, Reference Wadler1993) may view

$!$

(Wadler, Reference Wadler1993) may view

![]() $A \to_{\omega} B$

as

$A \to_{\omega} B$

as

![]() $!A \multimap B$

. A small deviation from the (simply-typed fragment of)

$!A \multimap B$

. A small deviation from the (simply-typed fragment of)

![]() $\lambda^q_{\to}$

is that Sparcl is equipped with rank-1 polymorphism with qualified typing (Jones, Reference Jones1995) and type inference (Matsuda, Reference Matsuda2020). For example, the system infers the following types for the following functions.

$\lambda^q_{\to}$

is that Sparcl is equipped with rank-1 polymorphism with qualified typing (Jones, Reference Jones1995) and type inference (Matsuda, Reference Matsuda2020). For example, the system infers the following types for the following functions.

In first two examples, p is arbitrary (i.e., 1 or

![]() $\omega$

); in the last example, the predicate

$\omega$

); in the last example, the predicate

![]() $p \le q$

states an ordering of multiplicity, where

$p \le q$

states an ordering of multiplicity, where

![]() $1 \le \omega$

.Footnote

6

This predicate states that if an argument is linear then it cannot be passed to an unrestricted function, as an unrestricted function may use its argument arbitrary many times. A more in-depth discussion of the surface type system is beyond the scope of this paper, but note that unlike the implementation of Linear Haskell as of GHC 9.0.XFootnote

7

which checks linearity only when type signatures are given explicitly, Sparcl can infer linear types thanks to the use of qualified typing.

$1 \le \omega$

.Footnote

6

This predicate states that if an argument is linear then it cannot be passed to an unrestricted function, as an unrestricted function may use its argument arbitrary many times. A more in-depth discussion of the surface type system is beyond the scope of this paper, but note that unlike the implementation of Linear Haskell as of GHC 9.0.XFootnote

7

which checks linearity only when type signatures are given explicitly, Sparcl can infer linear types thanks to the use of qualified typing.

For simplicity, we sometimes write

![]() $\multimap$

for

$\multimap$

for

![]() $\to_{1}$

and simply

$\to_{1}$

and simply

![]() $\to$

for

$\to$

for

![]() $\to_{\omega}$

when showing programming examples in Sparcl.

$\to_{\omega}$

when showing programming examples in Sparcl.

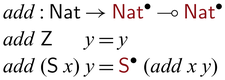

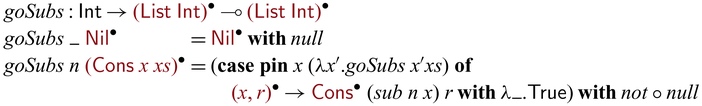

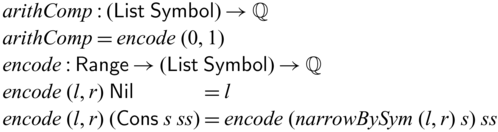

2.2 Multiplication

One of the simplest examples of partially invertible programs is multiplication (Nishida et al., 2005). Suppose that we have a datatype of natural numbers defined as below.

In Sparcl, constructors have linear types: ![]() .

.

We define multiplication in term of addition, which is also partially invertible.Footnote 8

As mentioned in the introduction, we use the type constructor ![]() to distinguish data that are subject to invertible computation (such as

to distinguish data that are subject to invertible computation (such as ![]() ) and those that are not (such as

) and those that are not (such as ![]() ): when the latter is fixed, a partially invertible function is turned into a (not-necessarily-total) bijection, for example,

): when the latter is fixed, a partially invertible function is turned into a (not-necessarily-total) bijection, for example, ![]() . (For those who read the paper with colors, the arguments of

. (For those who read the paper with colors, the arguments of ![]() are highlighted in

are highlighted in ![]() .) Values of

.) Values of ![]() -types are constructed by lifted constructors such as

-types are constructed by lifted constructors such as ![]() . In the forward direction,

. In the forward direction, ![]() to the input, and in the backward direction, it strips one

to the input, and in the backward direction, it strips one

![]() $\mathsf{S}$

(and the evaluation gets stuck if

$\mathsf{S}$

(and the evaluation gets stuck if

![]() $\mathsf{Z}$

is found). In general, since constructors by nature are always bijective (though not necessarily total in the backward direction), every constructor

$\mathsf{Z}$

is found). In general, since constructors by nature are always bijective (though not necessarily total in the backward direction), every constructor

![]() $\mathsf{C} : \sigma_1 \multimap \dots \multimap \sigma_n \multimap \tau$

automatically give rise to a corresponding lifted version

$\mathsf{C} : \sigma_1 \multimap \dots \multimap \sigma_n \multimap \tau$

automatically give rise to a corresponding lifted version ![]() .

.

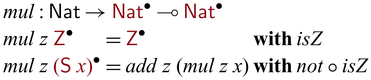

A partially invertible multiplication function can be defined by using add as below.Footnote 9

An interesting feature in the mul program is the invertible pattern matching (Yokoyama

et al., 2008) indicated by patterns ![]() (here, we annotate patterns instead of constructors). Invertible pattern matching is a branching mechanism that executes bidirectionally: the forward direction basically performs the standard pattern matching, the backward direction employs an additional

(here, we annotate patterns instead of constructors). Invertible pattern matching is a branching mechanism that executes bidirectionally: the forward direction basically performs the standard pattern matching, the backward direction employs an additional

![]() $\mathrel{{{{{\mathbf{with}}}}}}$

clause to determine the branch to be taken. For example,

$\mathrel{{{{{\mathbf{with}}}}}}$

clause to determine the branch to be taken. For example, ![]() , in the forward direction, values are matched against the forms

, in the forward direction, values are matched against the forms

![]() $\mathsf{Z}$

and

$\mathsf{Z}$

and

![]() $\mathsf{S} ~ x$

; in the backward direction, the

$\mathsf{S} ~ x$

; in the backward direction, the

![]() $\mathrel{{{{{\mathbf{with}}}}}}$

conditions are checked upon an output of the function

$\mathrel{{{{{\mathbf{with}}}}}}$

conditions are checked upon an output of the function

![]() ${mul} ~ n$

: if

${mul} ~ n$

: if

![]() ${isZ}: {\mathsf{Nat}} \to \mathsf{Bool}$

returns

${isZ}: {\mathsf{Nat}} \to \mathsf{Bool}$

returns

![]() $\mathsf{True}$

, the first branch is chosen, otherwise the second branch is chosen. When the second branch is taken, the backward computation of

$\mathsf{True}$

, the first branch is chosen, otherwise the second branch is chosen. When the second branch is taken, the backward computation of

![]() ${add} ~ n$

is performed, which essentially subtracts n, followed by recursively applying the backward computation of

${add} ~ n$

is performed, which essentially subtracts n, followed by recursively applying the backward computation of

![]() ${mul} ~ {n}$

to the result. As the last step, the final result is enclosed with

${mul} ~ {n}$

to the result. As the last step, the final result is enclosed with

![]() $\mathsf{S}$

and returned. In other words, the backward behavior of

$\mathsf{S}$

and returned. In other words, the backward behavior of

![]() ${mul} ~ n$

recursively tries to subtract n and returns the count of successful subtractions.

${mul} ~ n$

recursively tries to subtract n and returns the count of successful subtractions.

In Sparcl,

![]() $\mathrel{{{{{\mathbf{with}}}}}}$

conditions are provided by programmers and expected to be exclusive; the conditions are enforced at run-time: the

$\mathrel{{{{{\mathbf{with}}}}}}$

conditions are provided by programmers and expected to be exclusive; the conditions are enforced at run-time: the

![]() $\mathrel{{{{{\mathbf{with}}}}}}$

conditions are asserted to be postconditions on the branches’ values. Specifically, the branch’s

$\mathrel{{{{{\mathbf{with}}}}}}$

conditions are asserted to be postconditions on the branches’ values. Specifically, the branch’s

![]() $\mathrel{{{{{\mathbf{with}}}}}}$

condition is a positive assertion while all the other branches’ ones are negative assertions. Thus, the forward computation fails when the branch’s

$\mathrel{{{{{\mathbf{with}}}}}}$

condition is a positive assertion while all the other branches’ ones are negative assertions. Thus, the forward computation fails when the branch’s

![]() $\mathrel{{{{{\mathbf{with}}}}}}$

condition is not satisfied, or any of the other

$\mathrel{{{{{\mathbf{with}}}}}}$

condition is not satisfied, or any of the other

![]() $\mathrel{{{{{\mathbf{with}}}}}}$

conditions is also satisfied. This exclusiveness enables the backward computation to uniquely identify the branch (Lutz, Reference Lutz1986; Yokoyama et al., Reference Yokoyama, Axelsen and Glück2008). Sometimes we may omit the

$\mathrel{{{{{\mathbf{with}}}}}}$

conditions is also satisfied. This exclusiveness enables the backward computation to uniquely identify the branch (Lutz, Reference Lutz1986; Yokoyama et al., Reference Yokoyama, Axelsen and Glück2008). Sometimes we may omit the

![]() $\mathrel{{{{{\mathbf{with}}}}}}$

condition of the last branch, as it can be inferred as the negation of the conjunction of all the others. For example, in the definition of goSubs the second branch’s

$\mathrel{{{{{\mathbf{with}}}}}}$

condition of the last branch, as it can be inferred as the negation of the conjunction of all the others. For example, in the definition of goSubs the second branch’s

![]() $\mathrel{{{{{\mathbf{with}}}}}}$

condition is

$\mathrel{{{{{\mathbf{with}}}}}}$

condition is ![]() . One could use sophisticated types such as refinement types to infer

. One could use sophisticated types such as refinement types to infer

![]() $\mathrel{{{{{\mathbf{with}}}}}}$

-conditions and even statically enforce exclusiveness instead of assertion checking. However, we stick to simple types in this paper as our primal goal is to establish the basic design of Sparcl.

$\mathrel{{{{{\mathbf{with}}}}}}$

-conditions and even statically enforce exclusiveness instead of assertion checking. However, we stick to simple types in this paper as our primal goal is to establish the basic design of Sparcl.

An astute reader may wonder what bijection

![]() ${mul} ~ \mathsf{Z}$

defines, as zero times n is zero for any n. In fact, it defines a non-total bijection that in the forward direction the domain of the function contains only

${mul} ~ \mathsf{Z}$

defines, as zero times n is zero for any n. In fact, it defines a non-total bijection that in the forward direction the domain of the function contains only

![]() $\mathsf{Z}$

, i.e., the trivial bijection between

$\mathsf{Z}$

, i.e., the trivial bijection between

![]() ${\mathsf{Z}}$

and

${\mathsf{Z}}$

and

![]() ${\mathsf{Z}}$

.

${\mathsf{Z}}$

.

2.3 Why linearity itself is insufficient but still matters

The primal role of linearity is to prohibit values from being discarded or copied, and Sparcl is no exception. However, linearity itself is insufficient for partially invertible programming.

To start with, it is clear that

![]() $\multimap$

is not equivalent to not-necessarily-total bijections. For example, the function

$\multimap$

is not equivalent to not-necessarily-total bijections. For example, the function

![]() $\lambda x.x ~ (\lambda y.y) ~ (\lambda z.z) : ((\sigma \multimap \sigma) \multimap (\sigma \multimap \sigma)\multimap (\sigma \multimap \sigma)) \multimap \sigma \multimap \sigma$

returns

$\lambda x.x ~ (\lambda y.y) ~ (\lambda z.z) : ((\sigma \multimap \sigma) \multimap (\sigma \multimap \sigma)\multimap (\sigma \multimap \sigma)) \multimap \sigma \multimap \sigma$

returns

![]() $\lambda y.y$

for both

$\lambda y.y$

for both

![]() $\lambda f.\lambda g.\lambda x.f ~ (g ~ x)$

and

$\lambda f.\lambda g.\lambda x.f ~ (g ~ x)$

and

![]() $\lambda f.\lambda g.\lambda x.g ~ (f ~ x)$

. Theoretically, this comes from the fact that the category of (not-necessarily-total) bijections is not (monoidal) closed. Thus, as discussed above, the challenge is to find a sweet spot where a certain class of functions denote (not-necessarily-total) bijections.

$\lambda f.\lambda g.\lambda x.g ~ (f ~ x)$

. Theoretically, this comes from the fact that the category of (not-necessarily-total) bijections is not (monoidal) closed. Thus, as discussed above, the challenge is to find a sweet spot where a certain class of functions denote (not-necessarily-total) bijections.

It is known that a linear calculus concerning tensor products (

![]() $\otimes$

) and linear functions

$\otimes$

) and linear functions

![]() $(\multimap)$

(even with exponentials (

$(\multimap)$

(even with exponentials (

![]() $!$

)) can be modeled in the Int-construction (Joyal et al., Reference Joyal, Street and Verity1996) of the category of not-necessarily-total bijections (Abramsky et al., Reference Abramsky, Haghverdi and Scott2002; Abramsky, Reference Abramsky2005. Here, roughly speaking, first-order functions on base types can be understood as not-necessarily-total bijections. However, it is also known that such a system cannot be easily extended to include sum-types nor invertible pattern matching (Abramsky, Reference Abramsky2005, Section 7).

$!$

)) can be modeled in the Int-construction (Joyal et al., Reference Joyal, Street and Verity1996) of the category of not-necessarily-total bijections (Abramsky et al., Reference Abramsky, Haghverdi and Scott2002; Abramsky, Reference Abramsky2005. Here, roughly speaking, first-order functions on base types can be understood as not-necessarily-total bijections. However, it is also known that such a system cannot be easily extended to include sum-types nor invertible pattern matching (Abramsky, Reference Abramsky2005, Section 7).

Moreover, linearity does not express partiality as in partially invertible computations. For example, without the ![]() types, function add can have type

types, function add can have type

![]() $\mathsf{Nat} \multimap \mathsf{Nat} \multimap \mathsf{Nat}$

(note the linear use of the first argument), which does not specify which parameter is a fixed one. It even has type

$\mathsf{Nat} \multimap \mathsf{Nat} \multimap \mathsf{Nat}$

(note the linear use of the first argument), which does not specify which parameter is a fixed one. It even has type

![]() $\mathsf{Nat} \otimes \mathsf{Nat} \multimap \mathsf{Nat}$

after uncurrying though addition is clearly not fully invertible. These are the reasons why we separate the invertible world and the unidirectional world by using

$\mathsf{Nat} \otimes \mathsf{Nat} \multimap \mathsf{Nat}$

after uncurrying though addition is clearly not fully invertible. These are the reasons why we separate the invertible world and the unidirectional world by using ![]() , inspired by staged languages (Nielson & Nielson, Reference Nielson and Nielson1992; Moggi, Reference Moggi1998; Davies & Pfenning, Reference Davies and Pfenning2001). Readers familiar with staged languages may see

, inspired by staged languages (Nielson & Nielson, Reference Nielson and Nielson1992; Moggi, Reference Moggi1998; Davies & Pfenning, Reference Davies and Pfenning2001). Readers familiar with staged languages may see ![]() as residual code of type A, which will be executed forward or backward at the second stage to output or input A-typed values.

as residual code of type A, which will be executed forward or backward at the second stage to output or input A-typed values.

On the other hand, ![]() does not replace the need for linearity either. Without linearity,

does not replace the need for linearity either. Without linearity, ![]() -typed values may be discarded or duplicated, which may lead to non-bijectivity. Unlike discarding, the exclusion of duplication is debatable as the inverse of duplication can be given as equality check (Glück and Kawabe, Reference Glück and Kawabe2003). So it is our design choice to exclude duplication (contraction) in addition to discarding (weakening) to avoid unpredictable failures that may be caused by the equality checks. Without contraction, users are still able to implement duplication for datatypes with decidable equality (see Section 5.1.3). However, this design requires duplication (and the potential of failing) to be made explicit, which improves the predictability of the system. Having explicit duplication is not uncommon in this context (Reference Mu, Hu and TakeichiMu et al., 2004b

; Yokoyama et al., Reference Yokoyama, Axelsen and Glück2011).

-typed values may be discarded or duplicated, which may lead to non-bijectivity. Unlike discarding, the exclusion of duplication is debatable as the inverse of duplication can be given as equality check (Glück and Kawabe, Reference Glück and Kawabe2003). So it is our design choice to exclude duplication (contraction) in addition to discarding (weakening) to avoid unpredictable failures that may be caused by the equality checks. Without contraction, users are still able to implement duplication for datatypes with decidable equality (see Section 5.1.3). However, this design requires duplication (and the potential of failing) to be made explicit, which improves the predictability of the system. Having explicit duplication is not uncommon in this context (Reference Mu, Hu and TakeichiMu et al., 2004b

; Yokoyama et al., Reference Yokoyama, Axelsen and Glück2011).

Another design choice we made is to admit types like ![]() to simplify the formalization; otherwise, kinds will be needed to distinguish types that can be used in

to simplify the formalization; otherwise, kinds will be needed to distinguish types that can be used in ![]() from general types, and subkinding to allow running and importing bijections (Sections 2.4 and 2.5). Such types are not very useful though, as function- or invertible-typed values cannot be inspected during invertible computations.

from general types, and subkinding to allow running and importing bijections (Sections 2.4 and 2.5). Such types are not very useful though, as function- or invertible-typed values cannot be inspected during invertible computations.

2.4 Running reversible computation

Sparcl provides primitive functions to execute invertible functions in either directions: ![]() . For example, we have:

. For example, we have:

Of course, the forward and backward computations may not be total. For example, the following expression (legitimately) fails.

The guarantee Sparcl offers is that derived bijections are total with respect to the functions’ actual domains and ranges; i.e.,

![]() ${{{{\mathbf{fwd}}}}} ~ e ~ v$

results in u, then

${{{{\mathbf{fwd}}}}} ~ e ~ v$

results in u, then

![]() ${{{{\mathbf{bwd}}}}} ~ e ~ u$

results in v, and vice versa (Section 3.6.2).

${{{{\mathbf{bwd}}}}} ~ e ~ u$

results in v, and vice versa (Section 3.6.2).

Linearity plays a role here. Linear calculi are considered resource-aware in the sense that linear variables will be lost once used. In our case, resources are ![]() -typed values, as

-typed values, as ![]() represents (a part of) an input or (a part of) an output of a bijection being constructed, which must be retained throughout the computation. This is why the first argument of

represents (a part of) an input or (a part of) an output of a bijection being constructed, which must be retained throughout the computation. This is why the first argument of

![]() ${{{{\mathbf{fwd}}}}}$

/

${{{{\mathbf{fwd}}}}}$

/

![]() ${{{{\mathbf{bwd}}}}}$

is unrestricted rather than linear. Very roughly speaking, an expression that can be passed to an unrestricted function cannot contain linear variables, or “resources”. Thus, a function of type

${{{{\mathbf{bwd}}}}}$

is unrestricted rather than linear. Very roughly speaking, an expression that can be passed to an unrestricted function cannot contain linear variables, or “resources”. Thus, a function of type ![]() passed to

passed to

![]() ${{{{\mathbf{fwd}}}}}$

/

${{{{\mathbf{fwd}}}}}$

/

![]() ${{{{\mathbf{bwd}}}}}$

cannot use any resources other than one value of type

${{{{\mathbf{bwd}}}}}$

cannot use any resources other than one value of type ![]() to produce one value of type

to produce one value of type ![]() . In other words, all and only information from

. In other words, all and only information from ![]() is retained in

is retained in ![]() , guaranteeing bijectivity. As a result, Sparcl’s type system effectively rejects code like

, guaranteeing bijectivity. As a result, Sparcl’s type system effectively rejects code like ![]() as x’s multiplicity is

as x’s multiplicity is

![]() $\omega$

in both cases. In the former case, x is discarded and multiplicity in our system is either 1 or

$\omega$

in both cases. In the former case, x is discarded and multiplicity in our system is either 1 or

![]() $\omega$

. In the latter case, x appears in the first argument of

$\omega$

. In the latter case, x appears in the first argument of

![]() ${{{{\mathbf{fwd}}}}}$

, which is unrestricted.

${{{{\mathbf{fwd}}}}}$

, which is unrestricted.

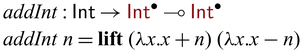

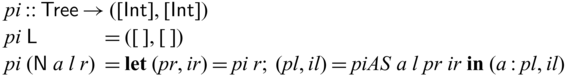

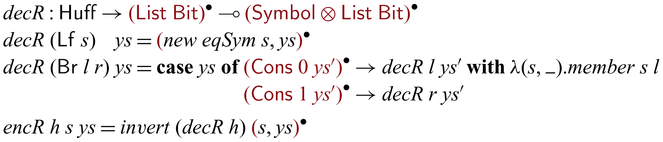

2.5 Importing existing invertible functions

Bijectivity is not uncommon in computer science or mathematics, and there already exist many established algorithms that are bijective. Examples include nontrivial results in number theory or category theory, and manipulation of primitive or sophisticated data structures such as Burrows-Wheeler transformations on suffix arrays.

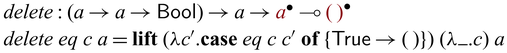

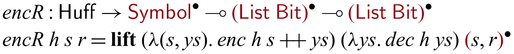

Instead of (re)writing them in Sparcl, the language provides a mechanism to directly import existing bijections (as a pair of functions) to construct valid Sparcl programs: ![]() converts a pair of functions into a function on

converts a pair of functions into a function on ![]() -typed values, expecting that the pair of functions form mutual inverses. For example, by

-typed values, expecting that the pair of functions form mutual inverses. For example, by

![]() ${{{{\mathbf{lift}}}}}$

, we can define addInt as below

${{{{\mathbf{lift}}}}}$

, we can define addInt as below

The use of

![]() ${{{{\mathbf{lift}}}}}$

allows one to create primitive bijections to be composed by the various constructs in Sparcl. Another interesting use of

${{{{\mathbf{lift}}}}}$

allows one to create primitive bijections to be composed by the various constructs in Sparcl. Another interesting use of

![]() ${{{{\mathbf{lift}}}}}$

is to implement in-language inversion.

${{{{\mathbf{lift}}}}}$

is to implement in-language inversion.

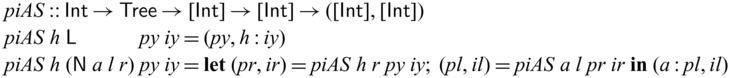

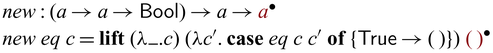

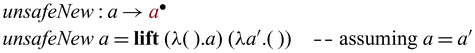

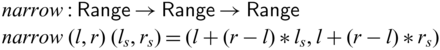

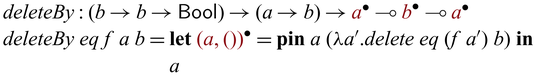

2.6 Composing partially invertible functions

Partially invertible functions in Sparcl expect arguments of both ![]() and

and ![]() types, which sometimes makes the calling of such functions interesting. This phenomenon is particularly noticeable in recursive calls where values of type

types, which sometimes makes the calling of such functions interesting. This phenomenon is particularly noticeable in recursive calls where values of type ![]() may need to be fed into function calls expecting values of type A. In this case, it becomes necessary to convert

may need to be fed into function calls expecting values of type A. In this case, it becomes necessary to convert ![]() -typed values to A-typed one. To avoid the risk of violating invertibility, such conversions are carefully managed in Sparcl through a special function

-typed values to A-typed one. To avoid the risk of violating invertibility, such conversions are carefully managed in Sparcl through a special function ![]()

![]() , inspired by the depGame function in Kennedy & Vytiniotis (Reference Kennedy and Vytiniotis2012) and reversible updates (Axelsen et al., Reference Axelsen, Glück and Yokoyama2007) in reversible imperative languages (Lutz, Reference Lutz1986; Frank, Reference Frank1997; Yokoyama et al., Reference Yokoyama, Axelsen and Glück2008; Glück & Yokoyama, Reference Glück and Yokoyama2016). The function

, inspired by the depGame function in Kennedy & Vytiniotis (Reference Kennedy and Vytiniotis2012) and reversible updates (Axelsen et al., Reference Axelsen, Glück and Yokoyama2007) in reversible imperative languages (Lutz, Reference Lutz1986; Frank, Reference Frank1997; Yokoyama et al., Reference Yokoyama, Axelsen and Glück2008; Glück & Yokoyama, Reference Glück and Yokoyama2016). The function

![]() ${{{{\mathbf{pin}}}}}$

creates a static snapshot of its first argument (

${{{{\mathbf{pin}}}}}$

creates a static snapshot of its first argument (![]() ) and uses the snapshot (A) in its second argument. Bijectivity of a function involving

) and uses the snapshot (A) in its second argument. Bijectivity of a function involving

![]() ${{{{\mathbf{pin}}}}}$

is guaranteed as the original

${{{{\mathbf{pin}}}}}$

is guaranteed as the original ![]() value is retained in the output

value is retained in the output ![]() together with the evaluation result of the second argument (

together with the evaluation result of the second argument (![]() ). For example,

). For example, ![]() , which defines the mapping between (n,m) and

, which defines the mapping between (n,m) and

![]() $(n,n+m)$

, is bijective. We will define the function

$(n,n+m)$

, is bijective. We will define the function

![]() ${{{{\mathbf{pin}}}}}$

and formally state the correctness property in Section 3.

${{{{\mathbf{pin}}}}}$

and formally state the correctness property in Section 3.

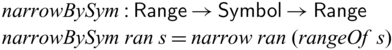

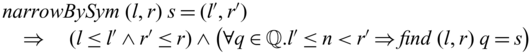

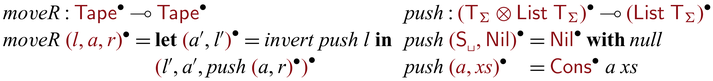

Let us revisit the example in Section 1. The partially invertible version of goSubs can be implemented via

![]() ${{{{\mathbf{pin}}}}}$

as below.

${{{{\mathbf{pin}}}}}$

as below.

Here, we used

![]() ${{{{\mathbf{pin}}}}}$

to convert

${{{{\mathbf{pin}}}}}$

to convert ![]() to

to

![]() $x':{\mathsf{Int}}$

in order to pass it to the recursive call of goSubs. In the backward direction,

$x':{\mathsf{Int}}$

in order to pass it to the recursive call of goSubs. In the backward direction,

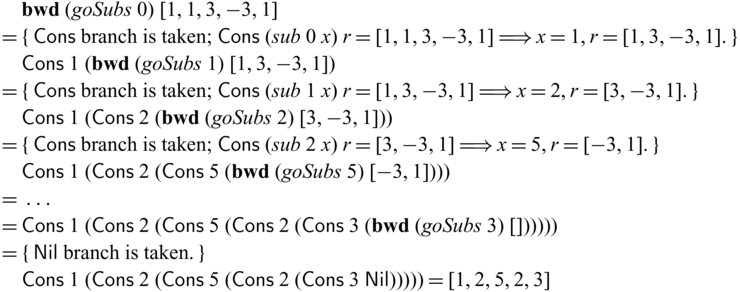

![]() ${goSubs} ~ n$

executes as follows.Footnote

10

${goSubs} ~ n$

executes as follows.Footnote

10

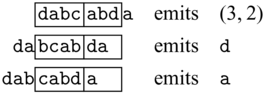

Note that the first arguments of (recursive) calls of goSubs (which are static) have the same values (1, 2, 5, 2, and 3) in both forward/backward executions, distinguishing their uses from those of the invertible arguments. As one can see,

![]() ${goSubs} ~ {n}$

behaves exactly like the hand-written goSubs’ in

${goSubs} ~ {n}$

behaves exactly like the hand-written goSubs’ in

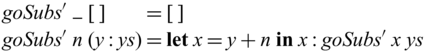

![]() ${subs}^{-1}$

which is reproduced below.

${subs}^{-1}$

which is reproduced below.

The use of

![]() ${{{{\mathbf{pin}}}}}$

commonly results in an invertible

${{{{\mathbf{pin}}}}}$

commonly results in an invertible

![]() ${{{{\mathbf{case}}}}}$

with a single branch, as we see in goSubs above. We capture this pattern with an invertible

${{{{\mathbf{case}}}}}$

with a single branch, as we see in goSubs above. We capture this pattern with an invertible

![]() ${{{{\mathbf{let}}}}}$

as a shorthand notation, which enables us to write

${{{{\mathbf{let}}}}}$

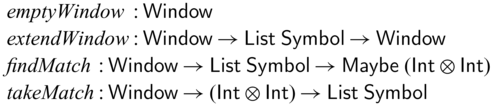

as a shorthand notation, which enables us to write ![]() . The definition of goSubs shown in Section 1 uses this shorthand notation, which is reproduced in Figure 2(a).

. The definition of goSubs shown in Section 1 uses this shorthand notation, which is reproduced in Figure 2(a).

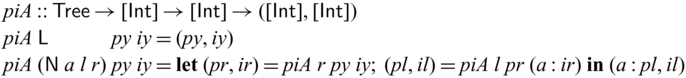

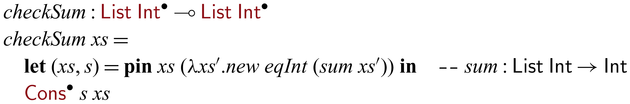

Fig. 2. Side-by-side comparison of partially invertible (a) and fully invertible (b) versions of subs.

We would like to emphasize that partial invertibility, as supported in Sparcl, is key to concise function definitions. In Figure 2, we show side-by-side two versions of the same program written in the same language: the one on the left allows partial invertibility whereas the one on the right requires all functions (include the intermediate ones) to be fully invertible (note the different types in the two versions of goSubs and sub). As a result, goSubsF is much harder to define and the code becomes fragile and error-prone. This advantage of Sparcl, which is already evident in this small example, becomes decisive when dealing with larger programs, especially those requiring complex manipulation of static values (for example, the Huffman coding in Section 5.2).

We end this section with a theoretical remark. One might wonder why ![]() is not a monad. This intuitively comes from the fact that the first and second stages are in different languages (the standard one and an invertible one, respectively) with different semantics. More formally,

is not a monad. This intuitively comes from the fact that the first and second stages are in different languages (the standard one and an invertible one, respectively) with different semantics. More formally, ![]() , which brings a type in the second stage into the first stage, forms a functor, but the functor is not endo. Recall that

, which brings a type in the second stage into the first stage, forms a functor, but the functor is not endo. Recall that ![]() represents residual code in an invertible system of type A; that is,

represents residual code in an invertible system of type A; that is, ![]() and its component A belong to different categories (though we have not formally described them).Footnote

11

One then might wonder whether

and its component A belong to different categories (though we have not formally described them).Footnote

11

One then might wonder whether ![]() is a relative monad (Altenkirch et al., Reference Altenkirch, Chapman and Uustalu2010). To form a relative monad, one needs to find a functor that has the same domain and codomain as (the functor corresponding to)

is a relative monad (Altenkirch et al., Reference Altenkirch, Chapman and Uustalu2010). To form a relative monad, one needs to find a functor that has the same domain and codomain as (the functor corresponding to) ![]() . It is unclear whether there exists such a functor other than

. It is unclear whether there exists such a functor other than ![]() itself; in this case, the relative monad operations do not provide any additional expressive power.

itself; in this case, the relative monad operations do not provide any additional expressive power.

2.7 Implementations

We have implemented a proof-of-concept interpreter for Sparcl including the linear type system, which is available from https://github.com/kztk-m/sparcl. The implementation adds two small but useful extensions to what is presented in this paper. First, the implementation allows nonlinear constructors, such as

![]() $\mathsf{MkUn} : a \to \mathsf{Un} ~ a$

which serves as

$\mathsf{MkUn} : a \to \mathsf{Un} ~ a$

which serves as

![]() $!$

and helps us to write a function that returns both linear and unrestricted results. Misusing such constructors in invertible pattern matching is guarded against by the type system (otherwise it may lead to discarding or copying of invertible values). Second, the implementation uses the first-match principle for both forward and backward computations. That is, both patterns and

$!$

and helps us to write a function that returns both linear and unrestricted results. Misusing such constructors in invertible pattern matching is guarded against by the type system (otherwise it may lead to discarding or copying of invertible values). Second, the implementation uses the first-match principle for both forward and backward computations. That is, both patterns and

![]() $\mathrel{{{{{\mathbf{with}}}}}}$

conditions are examined from top to bottom. Recall also that the implementation uses a non-indentation-sensitive syntax for simplicity as mentioned in Section 1.

$\mathrel{{{{{\mathbf{with}}}}}}$

conditions are examined from top to bottom. Recall also that the implementation uses a non-indentation-sensitive syntax for simplicity as mentioned in Section 1.

It is worth noting that the implementation uses Matsuda (Reference Matsuda2020)’s type inference to infer linear types effectively without requiring any annotations. Hence, the type annotations in this paper are more for documentation purposes.

As part of our effort to prove type safety (subject reduction and progress), we also produced a parallel implementation in Agda to serve as proofs (Section 3.6), available from https://github.com/kztk-m/sparcl-agda.

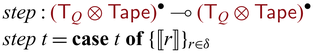

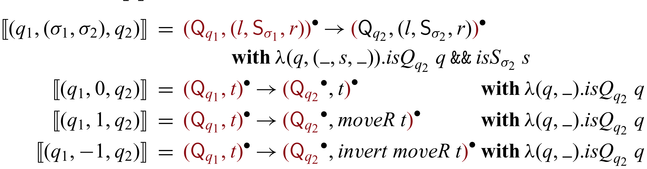

3 Core system:

${{{{\lambda^{\mathrm{PI}}_{\to}}}}}$

${{{{\lambda^{\mathrm{PI}}_{\to}}}}}$

This section introduces

![]() ${{{{\lambda^{\mathrm{PI}}_{\to}}}}}$

, the core system that Sparcl is built on. Our design mixes ideas of linear-typed programming and meta-programming. As mentioned in Section 2.1, the language is based on (the simple multiplicity fragment of)

${{{{\lambda^{\mathrm{PI}}_{\to}}}}}$

, the core system that Sparcl is built on. Our design mixes ideas of linear-typed programming and meta-programming. As mentioned in Section 2.1, the language is based on (the simple multiplicity fragment of)

![]() $\lambda^q_{\to}$

(Bernardy et al., Reference Bernardy, Boespflug, Newton, Peyton Jones and Spiwack2018), and, as mentioned in Section 2.3, it is also two-staged (Nielson & Nielson, Reference Nielson and Nielson1992; Moggi, Reference Moggi1998) with different meta and object languages. Specifically, the meta stage is a usual call-by-value language (i.e., unidirectional), and the object stage is an invertible language. By having the two stages, partial invertibility is made explicit in this formalization.

$\lambda^q_{\to}$

(Bernardy et al., Reference Bernardy, Boespflug, Newton, Peyton Jones and Spiwack2018), and, as mentioned in Section 2.3, it is also two-staged (Nielson & Nielson, Reference Nielson and Nielson1992; Moggi, Reference Moggi1998) with different meta and object languages. Specifically, the meta stage is a usual call-by-value language (i.e., unidirectional), and the object stage is an invertible language. By having the two stages, partial invertibility is made explicit in this formalization.

In what follows, we use a number of notational conventions. A vector notation ![]() denotes a sequence such as

denotes a sequence such as

![]() $t_1,\dots,t_n$

or

$t_1,\dots,t_n$

or

![]() $t_1; \dots ; t_n$

, where each

$t_1; \dots ; t_n$

, where each

![]() $t_i$

can be of any syntactic category and the delimiter (such as “,” and “;”) can differ depending on the context; we also refer to the length of the sequence by

$t_i$

can be of any syntactic category and the delimiter (such as “,” and “;”) can differ depending on the context; we also refer to the length of the sequence by ![]() . In addition, we may refer to an element in the sequence

. In addition, we may refer to an element in the sequence ![]() as

as

![]() $t_i$

. A simultaneous substitution of

$t_i$

. A simultaneous substitution of

![]() $x_1,\dots,x_n$

in t with

$x_1,\dots,x_n$

in t with

![]() $s_1,\dots,s_n$

is denoted as

$s_1,\dots,s_n$

is denoted as

![]() $t[s_1/x_1,\dots,s_n/x_n]$

, which may also be written as

$t[s_1/x_1,\dots,s_n/x_n]$

, which may also be written as ![]() .

.

3.1 Central concept: Bijections at the heart

The surface language of Sparcl is designed for programming partially invertible functions, which are turned into bijections (by fixing the static arguments) for execution. This fact is highlighted in the core system

![]() ${{{{\lambda^{\mathrm{PI}}_{\to}}}}}$

where we have a primitive bijection type

${{{{\lambda^{\mathrm{PI}}_{\to}}}}}$

where we have a primitive bijection type

![]() ${A \rightleftharpoons B}$

, which is inhabited by bijections constructed from functions of type

${A \rightleftharpoons B}$

, which is inhabited by bijections constructed from functions of type ![]() . Technically, having a dedicated bijection type facilitates reasoning. For example, we may now straightforwardly state that “values of a bijection type

. Technically, having a dedicated bijection type facilitates reasoning. For example, we may now straightforwardly state that “values of a bijection type

![]() ${A \rightleftharpoons B}$

are bijections between A and B” (Corollary 3.4).

${A \rightleftharpoons B}$

are bijections between A and B” (Corollary 3.4).

Accordingly, the

![]() ${{{{\mathbf{fwd}}}}}$

and

${{{{\mathbf{fwd}}}}}$

and

![]() ${{{{\mathbf{bwd}}}}}$

functions for execution in Sparcl are divided into application operators

${{{{\mathbf{bwd}}}}}$

functions for execution in Sparcl are divided into application operators

![]() $ \triangleright $

and

$ \triangleright $

and

![]() $ \triangleleft $

that apply bijection-typed values and an

$ \triangleleft $

that apply bijection-typed values and an

![]() ${{{{\mathbf{unlift}}}}}$

operator for constructing bijections from functions of type

${{{{\mathbf{unlift}}}}}$

operator for constructing bijections from functions of type ![]() . For example, we have

. For example, we have

![]() ${{{{\mathbf{unlift}}}}} ~ ({add} ~ \mathsf{(S Z))} : {\mathsf{Nat} \rightleftharpoons \mathsf{Nat}}$

(where

${{{{\mathbf{unlift}}}}} ~ ({add} ~ \mathsf{(S Z))} : {\mathsf{Nat} \rightleftharpoons \mathsf{Nat}}$

(where ![]() is defined in Section 2), and the bijection can be executed as

is defined in Section 2), and the bijection can be executed as

![]() $ {{{{\mathbf{unlift}}}}} ~ ({add} ~ \mathsf{(S Z))} \triangleright \mathsf{S Z} $

resulting in

$ {{{{\mathbf{unlift}}}}} ~ ({add} ~ \mathsf{(S Z))} \triangleright \mathsf{S Z} $

resulting in

![]() $\mathsf{S (S Z)}$

and

$\mathsf{S (S Z)}$

and

![]() $ {{{{\mathbf{unlift}}}}} ~ ({add} ~ \mathsf{(S Z))} \triangleleft \mathsf{S (S Z)} $

resulting in

$ {{{{\mathbf{unlift}}}}} ~ ({add} ~ \mathsf{(S Z))} \triangleleft \mathsf{S (S Z)} $

resulting in

![]() $S Z$

. In fact, the operators

$S Z$

. In fact, the operators

![]() ${{{{\mathbf{fwd}}}}}$

and

${{{{\mathbf{fwd}}}}}$

and

![]() ${{{{\mathbf{bwd}}}}}$

are now derived in

${{{{\mathbf{bwd}}}}}$

are now derived in

![]() ${{{{\lambda^{\mathrm{PI}}_{\to}}}}}$

, as

${{{{\lambda^{\mathrm{PI}}_{\to}}}}}$

, as

![]() ${{{{\mathbf{fwd}}}}} = \lambda_\omega h. \lambda_\omega x. {{{{\mathbf{unlift}}}}} ~ h \triangleright x$

and

${{{{\mathbf{fwd}}}}} = \lambda_\omega h. \lambda_\omega x. {{{{\mathbf{unlift}}}}} ~ h \triangleright x$

and

![]() ${{{{\mathbf{bwd}}}}} = \lambda_\omega h. \lambda_\omega x. {{{{\mathbf{unlift}}}}} ~ h \triangleleft x$

.

${{{{\mathbf{bwd}}}}} = \lambda_\omega h. \lambda_\omega x. {{{{\mathbf{unlift}}}}} ~ h \triangleleft x$

.

[2] Here,

![]() $\omega$

of

$\omega$

of

![]() $\lambda_\omega$

indicates that the bound variable can be used arbitrary many. In contrast,

$\lambda_\omega$

indicates that the bound variable can be used arbitrary many. In contrast,

![]() $\lambda_1$

indicates that the bound variable must be used linearly. Hence, for example,

$\lambda_1$

indicates that the bound variable must be used linearly. Hence, for example,

![]() $\lambda_1 x.\mathsf{Z}$

and

$\lambda_1 x.\mathsf{Z}$

and

![]() $\lambda_1 x.(x,x)$

are ill-typed, while

$\lambda_1 x.(x,x)$

are ill-typed, while

![]() $\lambda_1 x.x$

,

$\lambda_1 x.x$

,

![]() $\lambda_\omega x.\mathsf{Z}$

and

$\lambda_\omega x.\mathsf{Z}$

and

![]() $\lambda_\omega x. (x,x)$

are well-typed. Similarly, we also annotate (unidirectional)

$\lambda_\omega x. (x,x)$

are well-typed. Similarly, we also annotate (unidirectional)

![]() ${{{{\mathbf{case}}}}}$

s with the multiplicity of the variables bound by pattern matching. Thus, for example,

${{{{\mathbf{case}}}}}$

s with the multiplicity of the variables bound by pattern matching. Thus, for example,

![]() ${{{{\mathbf{case}}}}}_1~\mathsf{S} ~ \mathsf{Z}~{{{{\mathbf{of}}}}}~\{ \mathsf{S} ~ x \to (x,x) \}$

and

${{{{\mathbf{case}}}}}_1~\mathsf{S} ~ \mathsf{Z}~{{{{\mathbf{of}}}}}~\{ \mathsf{S} ~ x \to (x,x) \}$

and

![]() $\lambda_1 x. {{{{\mathbf{case}}}}}_\omega~x~{{{{\mathbf{of}}}}} \{ \mathsf{S} ~ y \to \mathsf{Z} \}$

are ill-typed.

$\lambda_1 x. {{{{\mathbf{case}}}}}_\omega~x~{{{{\mathbf{of}}}}} \{ \mathsf{S} ~ y \to \mathsf{Z} \}$

are ill-typed.

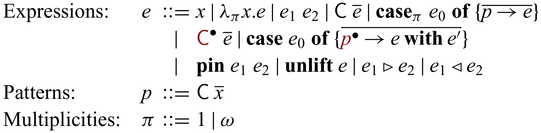

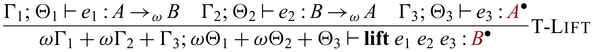

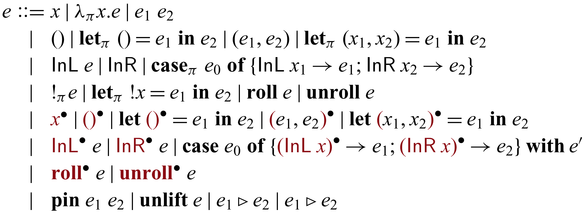

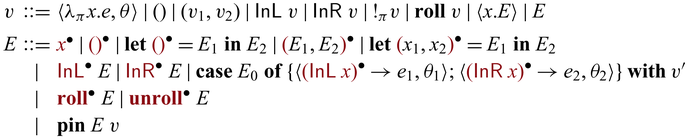

3.2 Syntax

The syntax of

![]() ${{{{\lambda^{\mathrm{PI}}_{\to}}}}}$

is given as below.

${{{{\lambda^{\mathrm{PI}}_{\to}}}}}$

is given as below.

There are three lines for the various constructs of expressions. The ones in the first line are standard except the annotations in

![]() $\lambda$

and

$\lambda$

and

![]() ${{{{\mathbf{case}}}}}$

that determine the multiplicity of the variables introduced by the binders:

${{{{\mathbf{case}}}}}$

that determine the multiplicity of the variables introduced by the binders:

![]() $\pi = 1$

means that the bound variable is linear, and

$\pi = 1$

means that the bound variable is linear, and

![]() $\pi = \omega$

means there is no restriction. These annotations are omitted in the surface language as they are inferred. The second and third lines consist of constructs that deal with invertibility. As mentioned above,

$\pi = \omega$

means there is no restriction. These annotations are omitted in the surface language as they are inferred. The second and third lines consist of constructs that deal with invertibility. As mentioned above,

![]() ${{{{\mathbf{unlift}}}}} ~ e$

,

${{{{\mathbf{unlift}}}}} ~ e$

,

![]() $e_1 \triangleright e_2$

, and

$e_1 \triangleright e_2$

, and

![]() $e_1 \triangleleft e_2$

handles bijections which can be used to encode

$e_1 \triangleleft e_2$

handles bijections which can be used to encode

![]() ${{{{\mathbf{fwd}}}}}$

and

${{{{\mathbf{fwd}}}}}$

and

![]() ${{{{\mathbf{bwd}}}}}$

in Sparcl. We have already seen lifted constructors, invertible

${{{{\mathbf{bwd}}}}}$

in Sparcl. We have already seen lifted constructors, invertible

![]() ${{{{\mathbf{case}}}}}$

, and

${{{{\mathbf{case}}}}}$

, and

![]() ${{{{\mathbf{pin}}}}}$

in Section 2. For simplicity, we assume that

${{{{\mathbf{pin}}}}}$

in Section 2. For simplicity, we assume that

![]() ${{{{\mathbf{pin}}}}}$

,

${{{{\mathbf{pin}}}}}$

,

![]() $\mathsf{C}$

and

$\mathsf{C}$

and ![]() are fully applied. Lifted constructor expressions

are fully applied. Lifted constructor expressions ![]() and invertible

and invertible

![]() ${{{{\mathbf{case}}}}}$

s are basic invertible primitives in

${{{{\mathbf{case}}}}}$

s are basic invertible primitives in

![]() ${{{{\lambda^{\mathrm{PI}}_{\to}}}}}$

. They are enough to make our system reversible Turing complete Bennett (Reference Bennett1973) (Theorem 3.5); i.e., all bijections can be implemented in the language. For simplicity, we assume that patterns are nonoverlapping both for unidirectional and invertible

${{{{\lambda^{\mathrm{PI}}_{\to}}}}}$

. They are enough to make our system reversible Turing complete Bennett (Reference Bennett1973) (Theorem 3.5); i.e., all bijections can be implemented in the language. For simplicity, we assume that patterns are nonoverlapping both for unidirectional and invertible

![]() ${{{{\mathbf{case}}}}}$

s. We do not include

${{{{\mathbf{case}}}}}$

s. We do not include

![]() ${{{{\mathbf{lift}}}}}$

, which imports external code into Sparcl, as it is by definition unsafe. Instead, we will discuss it separately in Section 3.7.

${{{{\mathbf{lift}}}}}$

, which imports external code into Sparcl, as it is by definition unsafe. Instead, we will discuss it separately in Section 3.7.

Different from conventional reversible/invertible programming languages, the constructs

![]() ${{{{\mathbf{unlift}}}}}$

(together with

${{{{\mathbf{unlift}}}}}$

(together with

![]() $ \triangleright $

and

$ \triangleright $

and

![]() $ \triangleleft $

) and

$ \triangleleft $

) and

![]() ${{{{\mathbf{pin}}}}}$

support communication between the unidirectional world and the invertible world. The

${{{{\mathbf{pin}}}}}$

support communication between the unidirectional world and the invertible world. The

![]() ${{{{\mathbf{unlift}}}}}$

construct together with

${{{{\mathbf{unlift}}}}}$

construct together with

![]() $ \triangleright $

and

$ \triangleright $

and

![]() $ \triangleleft $

runs invertible computation in the unidirectional world. The

$ \triangleleft $

runs invertible computation in the unidirectional world. The

![]() ${{{{\mathbf{pin}}}}}$

operator is the key to partiality; it enables us to temporarily convert a value in the invertible world into a value in the unidirectional world.

${{{{\mathbf{pin}}}}}$

operator is the key to partiality; it enables us to temporarily convert a value in the invertible world into a value in the unidirectional world.

3.3 Types

Types in

![]() ${{{{\lambda^{\mathrm{PI}}_{\to}}}}}$

are defined as below.

${{{{\lambda^{\mathrm{PI}}_{\to}}}}}$

are defined as below.

Here,

![]() $\alpha$

denotes a type variable,

$\alpha$

denotes a type variable,

![]() $\mathsf{T}$

denotes a type constructor,

$\mathsf{T}$

denotes a type constructor,

![]() $A \to_\pi B$

is a function type annotated with the argument’s multiplicity

$A \to_\pi B$

is a function type annotated with the argument’s multiplicity

![]() $\pi$

,

$\pi$

, ![]() marks invertibility, and

marks invertibility, and

![]() ${A \rightleftharpoons B}$

is a bijection type.

${A \rightleftharpoons B}$

is a bijection type.

Each type constructor

![]() $\mathsf{T}$

comes with a set of constructors

$\mathsf{T}$

comes with a set of constructors

![]() $\mathsf{C}$

of type

$\mathsf{C}$

of type

with ![]() for any i.Footnote

12

Type variables

for any i.Footnote

12

Type variables

![]() $\alpha$

are only used for types of constructors in the language. For example, the standard multiplicative product

$\alpha$

are only used for types of constructors in the language. For example, the standard multiplicative product

![]() $\otimes$

and additive sum

$\otimes$

and additive sum

![]() $\oplus$

Wadler (Reference Wadler1993) are represented by the following constructors.

$\oplus$

Wadler (Reference Wadler1993) are represented by the following constructors.

We assume that the set of type constructors at least include

![]() $\otimes$

and

$\otimes$

and

![]() $\mathsf{Bool}$

, where

$\mathsf{Bool}$

, where

![]() $\mathsf{Bool}$

has the constructors

$\mathsf{Bool}$

has the constructors

![]() $\mathsf{True} : \mathsf{Bool}$

and

$\mathsf{True} : \mathsf{Bool}$

and

![]() $\mathsf{False} : \mathsf{Bool}$

. Types can be recursive via constructors; for example, we can have a list type

$\mathsf{False} : \mathsf{Bool}$

. Types can be recursive via constructors; for example, we can have a list type

![]() $\mathsf{List} ~ \alpha$

with the following constructors.

$\mathsf{List} ~ \alpha$

with the following constructors.

We may write ![]() for

for

![]() $A_1 \multimap A_2 \multimap \cdots \multimap A_n \multimap B$

(when n is zero,

$A_1 \multimap A_2 \multimap \cdots \multimap A_n \multimap B$

(when n is zero, ![]() is B). We shall also instantiate constructors implicitly and write

is B). We shall also instantiate constructors implicitly and write ![]() when there is a constructor

when there is a constructor ![]() for each i. Thus, we assume all types in our discussions are closed.

for each i. Thus, we assume all types in our discussions are closed.

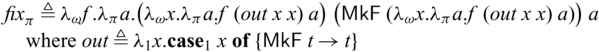

Negative recursive types are allowed in our system, which, for example, enables us to define general recursions without primitive fixpoint operators. Specifically, via

![]() $\mathsf{F}$

with the constructor

$\mathsf{F}$

with the constructor

![]() $ \mathsf{MkF} : (\mathsf{F} ~ \alpha \to \alpha) \multimap \mathsf{F} ~ \alpha$

, we have a fixpoint operator as below.

$ \mathsf{MkF} : (\mathsf{F} ~ \alpha \to \alpha) \multimap \mathsf{F} ~ \alpha$

, we have a fixpoint operator as below.

Here, out has type

![]() $\mathsf{F} ~ C \multimap \mathsf{F} ~ C \to C$

for any C (in this case

$\mathsf{F} ~ C \multimap \mathsf{F} ~ C \to C$

for any C (in this case

![]() $C = A \to_{\pi} B$

), and thus

$C = A \to_{\pi} B$

), and thus

![]() ${fix}_\pi$

has type

${fix}_\pi$

has type

![]() $((A \to_\pi B) \to (A \to_\pi B)) \to A \to_\pi B$

.

$((A \to_\pi B) \to (A \to_\pi B)) \to A \to_\pi B$

.

The most special type in the language is ![]() , which is the invertible version of A. More specifically, the invertible type

, which is the invertible version of A. More specifically, the invertible type ![]() represents residual code in an invertible system that are executed forward and backward at the second stage to output and input A-typed values. Values of type

represents residual code in an invertible system that are executed forward and backward at the second stage to output and input A-typed values. Values of type ![]() must be treated linearly and can only be manipulated by invertible operations, such as lifted constructors, invertible pattern matching, and

must be treated linearly and can only be manipulated by invertible operations, such as lifted constructors, invertible pattern matching, and

![]() ${{{{\mathbf{pin}}}}}$

. To keep our type system simple, or more specifically single-kinded, we allow types like

${{{{\mathbf{pin}}}}}$

. To keep our type system simple, or more specifically single-kinded, we allow types like ![]() , while the category of (not-necessarily-total) bijections are not closed and

, while the category of (not-necessarily-total) bijections are not closed and

![]() ${{{{\lambda^{\mathrm{PI}}_{\to}}}}}$

has no third stage. These types do not pose any problem, as such components cannot be inspected in invertible computation by any means (except in

${{{{\lambda^{\mathrm{PI}}_{\to}}}}}$

has no third stage. These types do not pose any problem, as such components cannot be inspected in invertible computation by any means (except in

![]() $\mathrel{{{{{\mathbf{with}}}}}}$

conditions, which are unidirectional, i.e., run at the first stage).

$\mathrel{{{{{\mathbf{with}}}}}}$

conditions, which are unidirectional, i.e., run at the first stage).

Note that we consider the primitive bijection types

![]() ${A \rightleftharpoons B}$

as separate from

${A \rightleftharpoons B}$

as separate from

![]() $(A \to B) \otimes (B \to A)$

. This separation is purely for reasoning; in our theoretical development, we will show that

$(A \to B) \otimes (B \to A)$

. This separation is purely for reasoning; in our theoretical development, we will show that

![]() ${A \rightleftharpoons B}$

denotes pairs of functions that are guaranteed to form (not-necessarily-total) bijections (corollary:correctness).

${A \rightleftharpoons B}$

denotes pairs of functions that are guaranteed to form (not-necessarily-total) bijections (corollary:correctness).

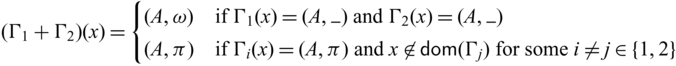

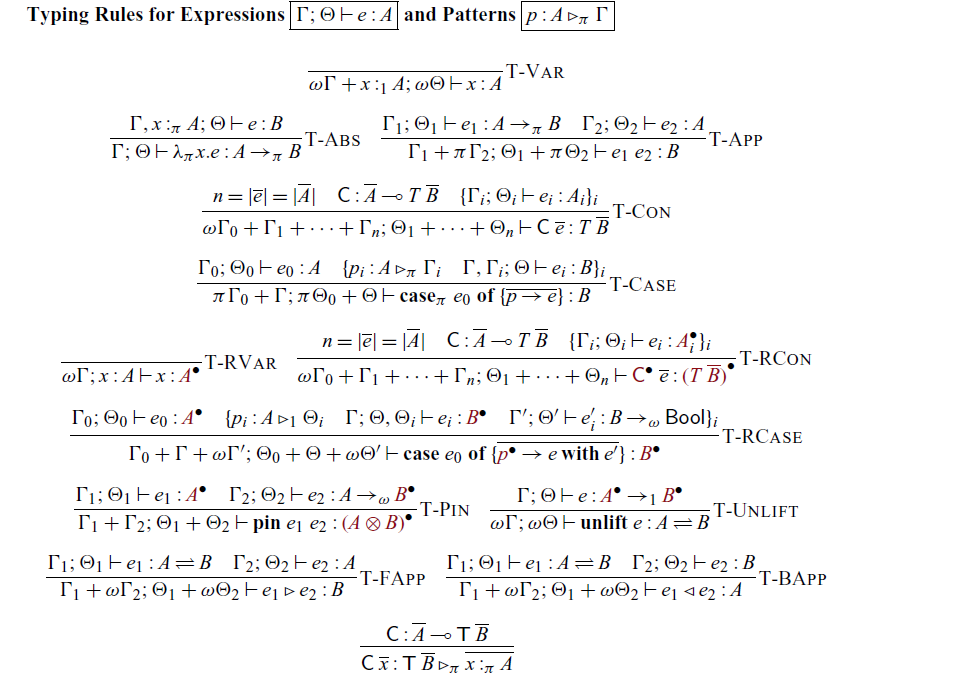

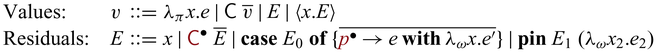

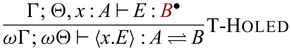

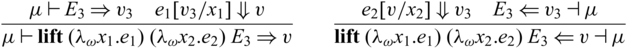

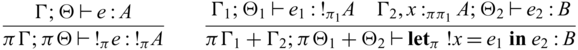

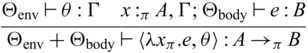

3.4 Typing relation

A typing environment is a mapping from variables x to pairs of type A and its multiplicity

![]() $\pi$

, meaning that x has type A and can be used

$\pi$

, meaning that x has type A and can be used

![]() $\pi$

-many times. We write

$\pi$

-many times. We write

![]() $x_1:_{\pi_1} A_1, \dots, x_n:_{\pi_n} B_n$

instead of

$x_1:_{\pi_1} A_1, \dots, x_n:_{\pi_n} B_n$

instead of

![]() $\{ x_1 \mapsto (A_1 , \pi_1), \dots, x_n \mapsto (B_n, \pi_n) \}$

for readability and write

$\{ x_1 \mapsto (A_1 , \pi_1), \dots, x_n \mapsto (B_n, \pi_n) \}$

for readability and write

![]() ${epsilon}$

for the empty environment. Reflecting the two stages, we adopt a dual context system (Davies & Pfenning, Reference Davies and Pfenning2001), which has unidirectional and invertible environments, denoted by

${epsilon}$

for the empty environment. Reflecting the two stages, we adopt a dual context system (Davies & Pfenning, Reference Davies and Pfenning2001), which has unidirectional and invertible environments, denoted by

![]() $\Gamma$

and

$\Gamma$

and

![]() $\Theta$

respectively. This separation of the two is purely theoretical, for the purpose of facilitating reasoning when we interpret

$\Theta$

respectively. This separation of the two is purely theoretical, for the purpose of facilitating reasoning when we interpret ![]() -typed expressions that are closed in unidirectional variables but may have free variables in

-typed expressions that are closed in unidirectional variables but may have free variables in

![]() $\Theta$

as bijections. In fact, our prototype implementation does not distinguish the two environments. For all invertible environments

$\Theta$

as bijections. In fact, our prototype implementation does not distinguish the two environments. For all invertible environments

![]() $\Theta$

, without the loss of generality we assume that the associated multiplicities must be 1, i.e.,