1. Introduction

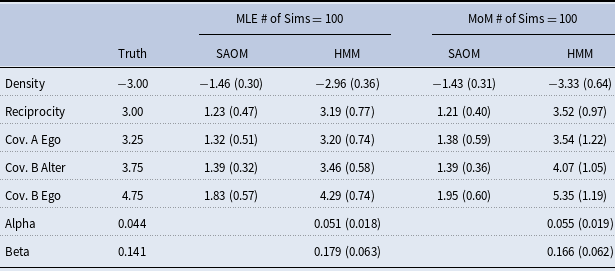

Networks have been used broadly in biology, the social sciences, and many other fields to model and analyze the relational structure of individual units in a complex system. In a network model, nodes or vertices represent the units of the system, and edges connect the vertices if the corresponding units share a relationship. It is desirable, in many applications, to study the change in connections among the vertices of a network over time. Stochastic actor-oriented models (SAOMs), designed specifically for longitudinally observed networks (i.e. network panel data), are a class of models that were developed for this purpose in the social network setting by Snijders et al., Snijders (Reference Snijders1996); Snijders et al. (Reference Snijders, Van de Bunt and Steglich2010); Snijders (Reference Snijders2017).

The SAOM framework revolves around the notion that the vertices control the connections they make to other vertices. This approach is different from other models, such as the temporal exponential random graph model (TERGM) developed by Hanneke et al., Hanneke et al. (Reference Hanneke, Fu and Xing2010). The assumption is that the network evolves as a continuous time Markov chain and that the networks one has observed are snapshots of this stochastic process. Network changes are assumed to happen by one vertex making a change in one of its connections at a time. Vertices seek to change these connections such that their “personal satisfaction” with the network configuration is maximized. This “satisfaction” is captured by an objective function, in the form of a linear combination of effects, which can be both endogenous (i.e. functions of the network itself) and exogenous (i.e. functions of vertex characteristics). Parameters indicating the strength of each effect are estimated using either a method of moments or maximum likelihood simulation-based approach, and hypotheses associated with each effect can be tested, similar to a linear regression framework Snijders (Reference Snijders2017); Snijders et al. (Reference Snijders, Koskinen and Schweinberger2010).

SAOMs have been shown to be useful in a variety of applications, but a key assumption is that the networks one has observed are error-free. In other words, one is assuming that the vertices present in the network and the relationships observed among them are all accurate at the time the network data were measured. However, what if this is not the case? Network analysis has long been plagued by issues of measurement error Wang et al. (Reference Wang, Shi, McFarland and Leskovec2012). For instance, survey respondents may not report the correct spellings of their friends’ names. This not only leads to erroneous vertices, but also to an absence of an edge to the correct vertex in the social network. Furthermore, even if everyone reports the correct spellings of their friends’ names, the understanding of what qualifies as a friendship tie can vary by respondent. Other settings, such as co-authorship networks, which represent collaborative relationships, can also contain false positive and false negative edges Wuchty et al. (Reference Wuchty, Jones and Uzzi2007). In this case, false edges may exist because of failure to account for edge decay. One can deal with this issue by setting a pre-specified time window under which the established relationship is thought to be meaningful Fleming and Frenken (Reference Fleming and Frenken2007). However, setting too narrow of a window might overlook important relationships and introduce false negatives, while setting too wide of a window can introduce false positives Wang et al. (Reference Wang, Shi, McFarland and Leskovec2012).

In addition to SAOMs being used extensively in a social network context, in our own work we have recently adapted these models to resting-state fMRI complex brain networks. We sought to answer questions such as, “If two brain regions are in the same cortical lobe, are they more likely to connect?” In this case, a connection represents a similar pattern of brain activity. In the neuroscience setting, functional network edges are almost always defined based on some measure of association between patterns of activation between distinct brain regions Simpson et al. (Reference Simpson, Bowman and Laurienti2013). Therefore, it is unreasonable to assume that the observed networks are always the truth. Instead, we expect some level of type I error (false positives) and type 2 error (false negatives) to exist in the inferred networks.

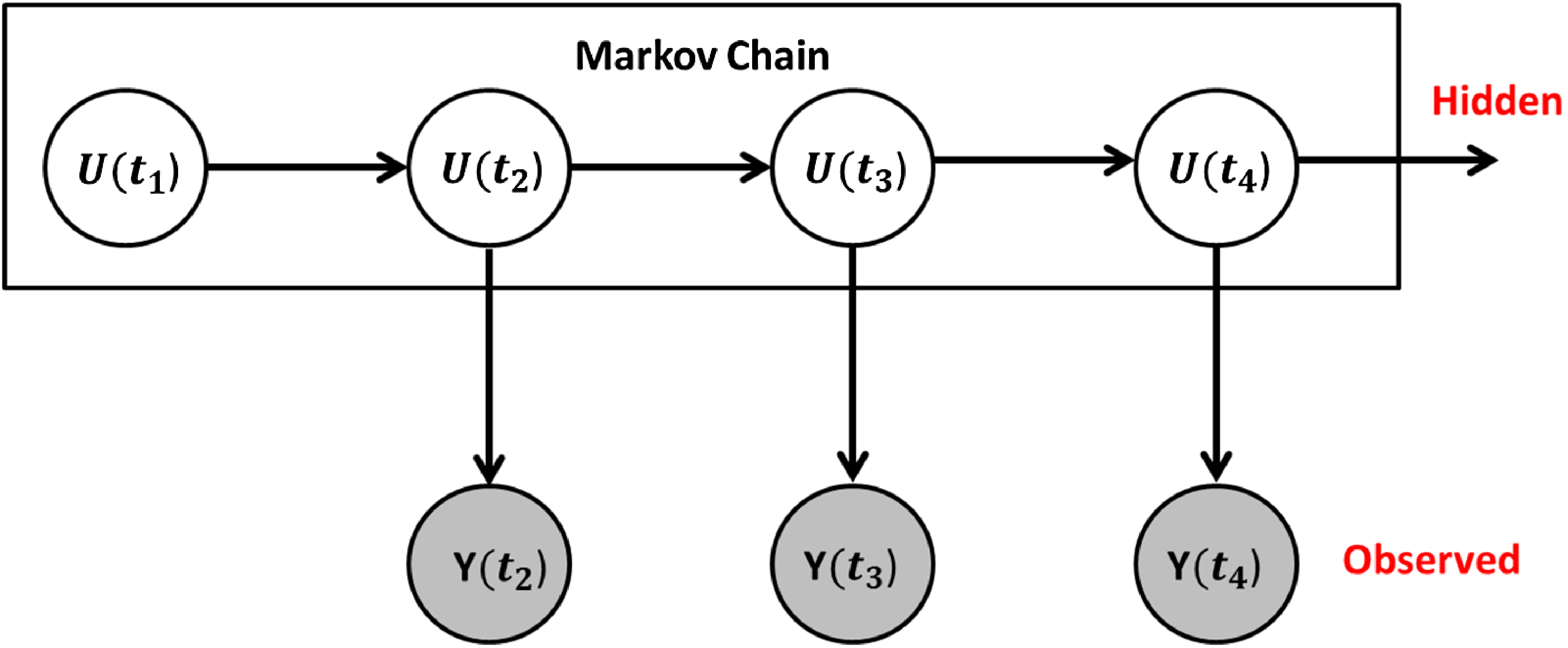

Motivated by scenarios such as these, our goal is to account for false positive and false negative edges while analyzing observed networks with SAOMs. To capture the notion of false positive and false negative rates, along with the parameters in the SAOM, we propose a hidden Markov model (HMM) based approach. This modeling approach consists of two components - the latent Markov model and the measurement model. The latent Markov model specifies that the unobserved hidden networks evolve according to a Markov process, as they did in the original SAOM framework. The measurement model describes the conditional distribution of the observed networks given the true networks.

HMMs, developed by Baum and colleagues in the 1960s Baum et al. (Reference Baum, Petrie, Soules and Weiss1970), are a natural modeling approach to take given that we have an observable sequence of a system in which the hidden state is governed by a Markov process. They have been widely studied in statistics Ephraim and Merhav (Reference Ephraim and Merhav2002) and have been applied extensively in many applications, such as in speech recognition and biological sequence analysis Gales and Young (Reference Gales and Young2008); Yoon (Reference Yoon2009). While HMMs have been used much less frequently in the dynamic network analysis literature, there is some work where they have been incorporated. For example, Guo et al., developed the hidden TERGM, which utilizes a hidden Markov process to model and recover temporally rewiring networks from time series of node characteristics Guo et al. (Reference Guo, Hanneke, Fu and Xing2007). Dong et al., present the Graph-Coupled Hidden Markov Model, a discrete-time model for analyzing the interactions between individuals in a dynamic social network Dong et al. (Reference Dong, Pentland and Heller2012). Their method incorporates dynamic social network structure into a hidden Markov Model to predict how the spread of illness occurs and can be avoided on an individual level. Similarly, Raghavan et al., propose a coupled Hidden Markov Model, where each user’s activity in a social network evolves according to a Markov chain with a hidden state that is influenced by the collective activity of the friends of the user Raghavan et al. (Reference Raghavan, Ver Steeg, Galstyan and Tartakovsky2014).

HMMs for SAOMs have not been published in a peer-reviewed journal, but they have been published in a dissertation Lospinoso and Lospinoso (Reference Lospinoso and Lospinoso2012). Lospinoso worked to tackle this same problem of accounting for error on the observed networking edges in the SAOM setting. We take a similar approach in this paper, but instead of using a full MCMC algorithm for performing maximum likelihood estimation of the model parameters, we take an MCMC within an Expectation-Maximization approach to parameter estimation. We also focus on a brain network application, whereas Lospinoso applies the methodology to social networks. We touch further on the similarities and differences to Lospinoso’s approach in the Discussion section.

The remainder of this paper is organized as follows. In section 2, we introduce our HMM-SAOM set-up. Section 3 provides necessary background information on the SAOM model set-up, as well as the estimation routine traditionally used to fit the SAOM parameters. Our framework incorporates the methods described in this section. Section 4 describes our Expectation-Maximum algorithm for maximum likelihood estimation of the false positive and false negative error rates, along with the SAOM parameters. We assess the performance of our method on a series of simulated dynamic networks in Section 5, comparing it to the case of fitting only a standard SAOM to noisy networks. In Section 6, we apply our method to functional brain networks inferred from electroencephalogram (EEG) data. We conclude with a discussion of our method and open directions for future research.

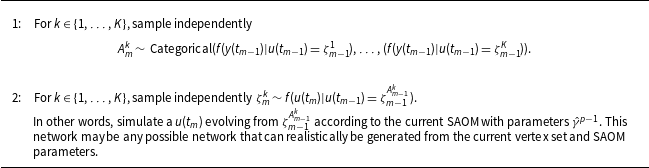

Figure 1. Hidden Markov Model Set-Up. The unobserved hidden networks evolve according to a Markov process, as they did in the original stochastic actor-oriented models framework. The true networks are then observed with measurement error.

2. The SAOM hidden Markov model set-up

We consider repeated observations of a directed network on a given set of vertices

![]() $\mathcal{N}={1,\ldots ,N}$

, observed according to a panel design. The observations are represented as a sequence of digraphs

$\mathcal{N}={1,\ldots ,N}$

, observed according to a panel design. The observations are represented as a sequence of digraphs

![]() $y(t_{m})$

for

$y(t_{m})$

for

![]() $m=1,\ldots ,M$

, where

$m=1,\ldots ,M$

, where

![]() $t_{1} \lt \ldots \lt t_{M}$

are the observation times and the node set is the same for all observation times. A digraph is defined as a subset

$t_{1} \lt \ldots \lt t_{M}$

are the observation times and the node set is the same for all observation times. A digraph is defined as a subset

![]() $y$

of

$y$

of

![]() $\big \{(i,j) \in \mathcal{N}^2|i \neq j\big \}$

Snijders et al. (Reference Snijders, Koskinen and Schweinberger2010); Snijders (Reference Snijders2017). When

$\big \{(i,j) \in \mathcal{N}^2|i \neq j\big \}$

Snijders et al. (Reference Snijders, Koskinen and Schweinberger2010); Snijders (Reference Snijders2017). When

![]() $(i,j) \in y$

, there is an edge from vertex

$(i,j) \in y$

, there is an edge from vertex

![]() $i$

to vertex

$i$

to vertex

![]() $j$

. The observations

$j$

. The observations

![]() $y(t_{m})$

are realizations of random digraph/network variables

$y(t_{m})$

are realizations of random digraph/network variables

![]() $Y(t_{m})$

. This random vector of observed network variables

$Y(t_{m})$

. This random vector of observed network variables

![]() $Y(t_{1}),\ldots , Y(t_{M})$

is denoted by

$Y(t_{1}),\ldots , Y(t_{M})$

is denoted by

![]() $\tilde {Y}$

. We represent a true/hidden network variable underlying the observed network at a particular observation time by

$\tilde {Y}$

. We represent a true/hidden network variable underlying the observed network at a particular observation time by

![]() $U(t_{m})$

. The vector of true network variables,

$U(t_{m})$

. The vector of true network variables,

![]() $U(t_{1}),\ldots , U(t_{M})$

is denoted by

$U(t_{1}),\ldots , U(t_{M})$

is denoted by

![]() $\tilde {U}$

. See Figure 1 for a visual representation. We also assume that

$\tilde {U}$

. See Figure 1 for a visual representation. We also assume that

-

1. The vector of true networks follows a first order Markov chain.

\begin{equation*}f(\tilde {u})=f(u(t_{1}))\prod \limits _{m=2}^M f(u(t_{m})|u(t_{m-1}))\end{equation*}

\begin{equation*}f(\tilde {u})=f(u(t_{1}))\prod \limits _{m=2}^M f(u(t_{m})|u(t_{m-1}))\end{equation*}

-

2. The observed networks are conditionally independent given the latent process.

\begin{equation*}f(y(t_{m})|u(t_{1:M}), y(t_{1:(m-1)}), y(t_{(m+1):M}))=f(y(t_{m})|u(t_{m}))\end{equation*}

\begin{equation*}f(y(t_{m})|u(t_{1:M}), y(t_{1:(m-1)}), y(t_{(m+1):M}))=f(y(t_{m})|u(t_{m}))\end{equation*}

-

3. We condition on the first observed network

$y(t_{1})$

, and we assume the first true network

$y(t_{1})$

, and we assume the first true network

$u(t_{1})$

is observed error-free.

$u(t_{1})$

is observed error-free. \begin{equation*} f(u(t_{1}))= \begin{cases} 1 & u(t_{1})=y(t_{1}) \\ 0 & otherwise \end{cases} \end{equation*}

\begin{equation*} f(u(t_{1}))= \begin{cases} 1 & u(t_{1})=y(t_{1}) \\ 0 & otherwise \end{cases} \end{equation*}

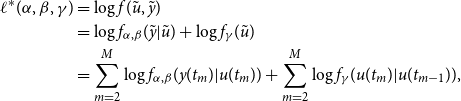

Therefore, the complete data log-likelihood, conditional on

![]() $y(t_{1})$

, can be written as:

$y(t_{1})$

, can be written as:

\begin{align} \ell ^{*}(\alpha , \beta , \gamma )&=\log f(\tilde {u},\tilde {y}) \nonumber \\ &=\log f_{\alpha ,\beta }(\tilde {y}|\tilde {u}) + \log f_{\gamma }(\tilde {u}) \nonumber \\ &=\sum _{m=2}^{M} \log f_{\alpha ,\beta }(y(t_{m})|u(t_{m})) + \sum _{m=2}^{M} \log f_{\gamma }(u(t_{m})|u(t_{m-1})), \end{align}

\begin{align} \ell ^{*}(\alpha , \beta , \gamma )&=\log f(\tilde {u},\tilde {y}) \nonumber \\ &=\log f_{\alpha ,\beta }(\tilde {y}|\tilde {u}) + \log f_{\gamma }(\tilde {u}) \nonumber \\ &=\sum _{m=2}^{M} \log f_{\alpha ,\beta }(y(t_{m})|u(t_{m})) + \sum _{m=2}^{M} \log f_{\gamma }(u(t_{m})|u(t_{m-1})), \end{align}

where

![]() $\alpha$

is the false positive rate,

$\alpha$

is the false positive rate,

![]() $\beta$

is the false negative rate, and

$\beta$

is the false negative rate, and

![]() $\gamma$

consists of the objective function parameters and rate parameters in the SAOM. Additional details on the SAOM and its parameters are provided in Section 3.

$\gamma$

consists of the objective function parameters and rate parameters in the SAOM. Additional details on the SAOM and its parameters are provided in Section 3.

The first term in

![]() $\ell ^{*}(\alpha , \beta , \gamma )$

derives from the conditional distribution of the observed networks given the true networks and takes the form

$\ell ^{*}(\alpha , \beta , \gamma )$

derives from the conditional distribution of the observed networks given the true networks and takes the form

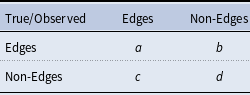

where

![]() $a_{km},b_{km},c_{km},$

and

$a_{km},b_{km},c_{km},$

and

![]() $d_{km}$

(for a given true network

$d_{km}$

(for a given true network

![]() $u_{m}$

at observation time

$u_{m}$

at observation time

![]() $t_{m}$

) represent counts of false positive and false negative edges (see Table 1).

$t_{m}$

) represent counts of false positive and false negative edges (see Table 1).

Table 1. Counts corresponding to false positive and false negative edges

The second term in

![]() $\ell ^{*}(\alpha , \beta , \gamma )$

, deriving from the network transition probability distribution, cannot be calculated in closed form. Additional details will be provided in the Section 3.

$\ell ^{*}(\alpha , \beta , \gamma )$

, deriving from the network transition probability distribution, cannot be calculated in closed form. Additional details will be provided in the Section 3.

3. Background on SAOM framework

3.1 Modeling framework

We adopt the SAOM framework of Snijders et al., Snijders et al. (Reference Snijders, Van de Bunt and Steglich2010) for the evolution of our true networks, which are underlying the sequence of our observed networks,

![]() $y(t_{m})$

. In this framework, it is assumed that the changing network is the outcome of a continuous time Markov process with time parameter

$y(t_{m})$

. In this framework, it is assumed that the changing network is the outcome of a continuous time Markov process with time parameter

![]() $t \in T$

where the

$t \in T$

where the

![]() $u(t_{m})$

, underlying the

$u(t_{m})$

, underlying the

![]() $y(t_{m})$

, are realizations of stochastic digraphs

$y(t_{m})$

, are realizations of stochastic digraphs

![]() $U(t_{m})$

embedded in a continuous-time stochastic process

$U(t_{m})$

embedded in a continuous-time stochastic process

![]() $U(t)$

,

$U(t)$

,

![]() $t_{1} \le t \le t_{M}$

. The totality of possible networks is the state space and the discrete set of true networks are snapshots of the true network state during the continuous period of time. In other words, many changes are assumed to happen in the true networks between observation times, and the process unfolds in time steps of potentially varying lengths Snijders (Reference Snijders1996); Snijders et al. (Reference Snijders, Van de Bunt and Steglich2010); Snijders (Reference Snijders2017).

$t_{1} \le t \le t_{M}$

. The totality of possible networks is the state space and the discrete set of true networks are snapshots of the true network state during the continuous period of time. In other words, many changes are assumed to happen in the true networks between observation times, and the process unfolds in time steps of potentially varying lengths Snijders (Reference Snijders1996); Snijders et al. (Reference Snijders, Van de Bunt and Steglich2010); Snijders (Reference Snijders2017).

Each

![]() $U(t)$

is made up of

$U(t)$

is made up of

![]() $N\times (N-1)$

possible edge status variables

$N\times (N-1)$

possible edge status variables

![]() $u_{ij}$

, where

$u_{ij}$

, where

![]() $u_{ij}=1$

if there exists a directed edge from vertex

$u_{ij}=1$

if there exists a directed edge from vertex

![]() $i$

to vertex

$i$

to vertex

![]() $j$

and

$j$

and

![]() $u_{ij}=0$

otherwise. At a given moment, one probabilistically selected vertex may change an edge, where the decision is modeled according to a random utility model, requiring the specification of a utility function (i.e., objective function) depending on a set of explanatory variables and parameters. Therefore, we are reduced to modeling the change of one edge status variable

$u_{ij}=0$

otherwise. At a given moment, one probabilistically selected vertex may change an edge, where the decision is modeled according to a random utility model, requiring the specification of a utility function (i.e., objective function) depending on a set of explanatory variables and parameters. Therefore, we are reduced to modeling the change of one edge status variable

![]() $u_{ij}$

by one vertex at a time (a network micro step) and modeling the occurrence of all these micro steps over time. The first true network

$u_{ij}$

by one vertex at a time (a network micro step) and modeling the occurrence of all these micro steps over time. The first true network

![]() $u(t_{1})$

serves as a starting value of the evolution process. At any time point

$u(t_{1})$

serves as a starting value of the evolution process. At any time point

![]() $t$

with current network

$t$

with current network

![]() $u(t) = u$

, each of the vertices has a rate function

$u(t) = u$

, each of the vertices has a rate function

![]() $\lambda _{i}(\delta ,u)$

, where

$\lambda _{i}(\delta ,u)$

, where

![]() $\delta$

is a parameter. Therefore, the waiting time until occurrence of the next micro step by any vertex is exponentially distributed with parameter

$\delta$

is a parameter. Therefore, the waiting time until occurrence of the next micro step by any vertex is exponentially distributed with parameter

\begin{align} \lambda (\delta , u)=\sum _{i=1}^{N}\lambda _{i}(\delta , u). \end{align}

\begin{align} \lambda (\delta , u)=\sum _{i=1}^{N}\lambda _{i}(\delta , u). \end{align}

Given that an opportunity for change occurs, the probability that it is vertex

![]() $i$

who gets the opportunity is given by

$i$

who gets the opportunity is given by

The micro step that vertex

![]() $i$

takes is determined probabilistically by a linear combination of effects. For example, let’s assume that

$i$

takes is determined probabilistically by a linear combination of effects. For example, let’s assume that

![]() $u$

is the current network and vertex

$u$

is the current network and vertex

![]() $i$

has the opportunity to make a network change. The next network state

$i$

has the opportunity to make a network change. The next network state

![]() $u'$

then must be either equal to

$u'$

then must be either equal to

![]() $u$

or deviate from

$u$

or deviate from

![]() $u$

by one edge. Vertex

$u$

by one edge. Vertex

![]() $i$

chooses the value of

$i$

chooses the value of

![]() $u'$

for which

$u'$

for which

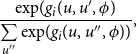

is maximal, where

![]() $\epsilon _{i}(u,u')$

is a Gumbel-distributed random disturbance that captures the uncertainty stemming from unknown factors, and

$\epsilon _{i}(u,u')$

is a Gumbel-distributed random disturbance that captures the uncertainty stemming from unknown factors, and

where

![]() $\phi _{e}$

represent parameters and

$\phi _{e}$

represent parameters and

![]() $W_{e}(i,u,u')$

represent the corresponding effects. There are many types of effects one can place in the model. See Ripley et al., Ripley et al. (Reference Ripley, Snijders, Boda, Vörös and Preciado2024) for a full list. Some are purely structural effects, such as triangle formation and reciprocity. Other effects may involve vertex traits, such as gender or smoking status of the individuals in a social network.

$W_{e}(i,u,u')$

represent the corresponding effects. There are many types of effects one can place in the model. See Ripley et al., Ripley et al. (Reference Ripley, Snijders, Boda, Vörös and Preciado2024) for a full list. Some are purely structural effects, such as triangle formation and reciprocity. Other effects may involve vertex traits, such as gender or smoking status of the individuals in a social network.

Equation 3.4 is the objective function. It can be thought of as a function of the network perceived by the focal vertex. Probabilities are higher for moving towards network states with a high value of the objective function. The objective function depends on the personal network position of vertex

![]() $i$

, vertex

$i$

, vertex

![]() $i$

’s exogenous covariates, and the exogenous covariates of all of the vertices in

$i$

’s exogenous covariates, and the exogenous covariates of all of the vertices in

![]() $i$

’s personal network. Due to distributional assumptions placed on

$i$

’s personal network. Due to distributional assumptions placed on

![]() $\epsilon _{i}(u,u')$

, the probability of choosing

$\epsilon _{i}(u,u')$

, the probability of choosing

![]() $u'$

can be expressed in multinomial logit form as

$u'$

can be expressed in multinomial logit form as

\begin{align} \frac {\exp\!(g_{i}(u,u',\phi )}{\sum \limits _{u''}\exp\!(g_{i}(u,u'',\phi ))}, \end{align}

\begin{align} \frac {\exp\!(g_{i}(u,u',\phi )}{\sum \limits _{u''}\exp\!(g_{i}(u,u'',\phi ))}, \end{align}

where the sum of the denominator extends over all possible next network states

![]() $u''$

.

$u''$

.

For each set of model parameters, there exists a stationary distribution of probabilities over the state space of all possible network configurations for the Markov process that governs the SAOM. The complexity of the model does not allow for the equilibrium distribution (nor the likelihood of the network “snapshots”) to be calculated in closed form. Therefore, parameter estimates need to be obtained via an iterative stochastic approximation version of a maximum likelihood approach based on data augmentation.

3.2 SAOM maximum likelihood framework

The distribution of the true networks

![]() $U(t_{1}),\ldots , U(t_{M})$

conditional on

$U(t_{1}),\ldots , U(t_{M})$

conditional on

![]() $U(t_{1})$

cannot generally be expressed in closed form. Therefore, the true networks are augmented with data such that an easily computable likelihood is obtained Snijders et al. (Reference Snijders, Koskinen and Schweinberger2010). The data augmentation can be done for each period

$U(t_{1})$

cannot generally be expressed in closed form. Therefore, the true networks are augmented with data such that an easily computable likelihood is obtained Snijders et al. (Reference Snijders, Koskinen and Schweinberger2010). The data augmentation can be done for each period

![]() $(U(t_{m-1}),U(t_{m}))$

separately, and therefore, it is explained below only for

$(U(t_{m-1}),U(t_{m}))$

separately, and therefore, it is explained below only for

![]() $U(t_{1})$

and

$U(t_{1})$

and

![]() $U(t_{2})$

.

$U(t_{2})$

.

Denote the time points of an opportunity for change by

![]() $T_{r}$

and their total number between

$T_{r}$

and their total number between

![]() $t_{1}$

and

$t_{1}$

and

![]() $t_{2}$

by

$t_{2}$

by

![]() $R$

, the time points being ordered increasingly so that

$R$

, the time points being ordered increasingly so that

![]() $t_{1}= T_{0} \lt T_{1} \lt T_{2} \lt \ldots \lt T_{R} \leq t_{2}$

. The model assumptions imply that at each time

$t_{1}= T_{0} \lt T_{1} \lt T_{2} \lt \ldots \lt T_{R} \leq t_{2}$

. The model assumptions imply that at each time

![]() $T_{r}$

, there is one vertex, denoted

$T_{r}$

, there is one vertex, denoted

![]() $I_{r}$

, who gets an opportunity for change at this time moment. Define

$I_{r}$

, who gets an opportunity for change at this time moment. Define

![]() $J_{r}$

as the vertex toward whom the edge status variable is changed, and define

$J_{r}$

as the vertex toward whom the edge status variable is changed, and define

![]() $J_{r}=I_{r}$

if there is no change. Given

$J_{r}=I_{r}$

if there is no change. Given

![]() $u(t_{1})$

, the outcome of the stochastic process

$u(t_{1})$

, the outcome of the stochastic process

![]() $(T_{r},I_{r},J_{r})$

,

$(T_{r},I_{r},J_{r})$

,

![]() $r=1,\ldots ,R$

completely determines

$r=1,\ldots ,R$

completely determines

![]() $u(t)$

,

$u(t)$

,

![]() $t_{1}\lt t \leq t_{2}$

.

$t_{1}\lt t \leq t_{2}$

.

The stochastic process

![]() $V=((I_{r},J_{r}),r=1,\ldots ,R$

) will be referred to as the sample path. Define

$V=((I_{r},J_{r}),r=1,\ldots ,R$

) will be referred to as the sample path. Define

![]() $u^{(r)}=u(T_{r})$

. The graphs

$u^{(r)}=u(T_{r})$

. The graphs

![]() $u^{(r)}$

and

$u^{(r)}$

and

![]() $u^{(r-1)}$

differ in element

$u^{(r-1)}$

differ in element

![]() $(I_{r},J_{r})$

, provided

$(I_{r},J_{r})$

, provided

![]() $I_{r}\neq J_{r}$

and in no other elements. Snijders et al., show that in the case where the vertex-level rates of change

$I_{r}\neq J_{r}$

and in no other elements. Snijders et al., show that in the case where the vertex-level rates of change

![]() $\lambda _{i}(\delta , u)$

are constant (which is an assumption typically recommended in using SAOMs), denoted by

$\lambda _{i}(\delta , u)$

are constant (which is an assumption typically recommended in using SAOMs), denoted by

![]() $\delta _{1}$

, the probability function of the sample path, conditional on

$\delta _{1}$

, the probability function of the sample path, conditional on

![]() $u(t_{1})$

, is given by

$u(t_{1})$

, is given by

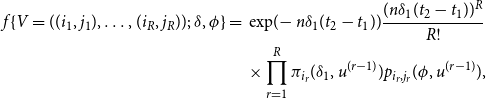

\begin{align} f\{V=((i_{1},j_{1}),\ldots ,(i_{R},j_{R}));\;\delta ,\phi \}= {} & \exp\!{(\!-n\delta _{1}(t_{2}-t_{1}))}\frac {(n\delta _{1}(t_{2}-t_{1}))^{R}}{R!} \nonumber \\ & \times \prod \limits _{r=1}^R \pi _{i_{r}}(\delta _{1},u^{(r-1)})p_{i_{r},j_{r}}(\phi ,u^{(r-1)}), \end{align}

\begin{align} f\{V=((i_{1},j_{1}),\ldots ,(i_{R},j_{R}));\;\delta ,\phi \}= {} & \exp\!{(\!-n\delta _{1}(t_{2}-t_{1}))}\frac {(n\delta _{1}(t_{2}-t_{1}))^{R}}{R!} \nonumber \\ & \times \prod \limits _{r=1}^R \pi _{i_{r}}(\delta _{1},u^{(r-1)})p_{i_{r},j_{r}}(\phi ,u^{(r-1)}), \end{align}

where

![]() $\pi _{i}$

is defined in (3.2), and

$\pi _{i}$

is defined in (3.2), and

where the summation extends over all possible next network states

![]() $\acute {u}$

.

$\acute {u}$

.

Therefore, for two possible true networks

![]() $(u(t_{1}),u(t_{2}))$

augmented by a sample path, the likelihood conditional on

$(u(t_{1}),u(t_{2}))$

augmented by a sample path, the likelihood conditional on

![]() $u(t_{1})$

can be expressed exactly. An MCMC algorithm is used to find the maximum likelihood estimator based on the augmented data. The algorithm Snijders et al., implements, proposed by Gu and Kong Gu and Kong (Reference Gu and Kong1998), is based on the missing information principle, which can be summarized as follows. Let the rate and objective function parameters

$u(t_{1})$

can be expressed exactly. An MCMC algorithm is used to find the maximum likelihood estimator based on the augmented data. The algorithm Snijders et al., implements, proposed by Gu and Kong Gu and Kong (Reference Gu and Kong1998), is based on the missing information principle, which can be summarized as follows. Let the rate and objective function parameters

![]() $(\delta , \phi )$

be denoted by

$(\delta , \phi )$

be denoted by

![]() $\gamma$

. Suppose

$\gamma$

. Suppose

![]() $\tilde {u}$

is given and having probability density

$\tilde {u}$

is given and having probability density

![]() $f(\tilde {u}; \;\gamma )$

. Then, suppose it is augmented by extra data

$f(\tilde {u}; \;\gamma )$

. Then, suppose it is augmented by extra data

![]() $v$

, such that the joint density is

$v$

, such that the joint density is

![]() $f(\tilde {u}, v;\; \gamma )$

. Denote the incomplete data score function

$f(\tilde {u}, v;\; \gamma )$

. Denote the incomplete data score function

![]() $\frac {\partial }{\partial \gamma }\log f(\tilde {u};\; \gamma )$

by

$\frac {\partial }{\partial \gamma }\log f(\tilde {u};\; \gamma )$

by

![]() $S(\gamma ;\;\tilde {u})$

and the complete data score function

$S(\gamma ;\;\tilde {u})$

and the complete data score function

![]() $\frac {\partial }{\partial \gamma }\log f(\tilde {u}, v;\; \gamma )$

by

$\frac {\partial }{\partial \gamma }\log f(\tilde {u}, v;\; \gamma )$

by

![]() $S(\gamma ;\;\tilde {u}, v)$

. It can be shown

$S(\gamma ;\;\tilde {u}, v)$

. It can be shown

which implies that maximum likelihood estimates can be determined as the solution to

In the SAOM context,

![]() $U(t_{1})$

is treated as fixed, and data are augmented between the true networks at each observed time point

$U(t_{1})$

is treated as fixed, and data are augmented between the true networks at each observed time point

![]() $m=1,\ldots ,M$

by a sample path that could have brought each true network to the next. Each period

$m=1,\ldots ,M$

by a sample path that could have brought each true network to the next. Each period

![]() $(t_{m-1}, t_{m})$

is treated separately, and draws from the probability distribution of the sample path,

$(t_{m-1}, t_{m})$

is treated separately, and draws from the probability distribution of the sample path,

![]() $v_{m}$

, conditional on

$v_{m}$

, conditional on

![]() $U(t_{m})=u(t_{m})$

,

$U(t_{m})=u(t_{m})$

,

![]() $U(t_{m-1})=u(t_{m-1})$

, are generated by the Metropolis Hastings Algorithm. These sample paths for each period combined constitute

$U(t_{m-1})=u(t_{m-1})$

, are generated by the Metropolis Hastings Algorithm. These sample paths for each period combined constitute

![]() $v$

. The complete data score function can be written as

$v$

. The complete data score function can be written as

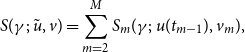

\begin{align} S(\gamma ;\;\tilde {u}, v)=\sum _{m=2}^{M}S_{m}(\gamma ;\;u(t_{m-1}),v_{m}), \end{align}

\begin{align} S(\gamma ;\;\tilde {u}, v)=\sum _{m=2}^{M}S_{m}(\gamma ;\;u(t_{m-1}),v_{m}), \end{align}

and (3.8) can now be written as

\begin{align} \mathbb{E}[S(\gamma ;\;\tilde {u}, V)|\tilde {U}=\tilde {u}]=\sum _{m=2}^{M}E_{\gamma }[S_{m}(\gamma ;\;u(t_{m-1}),V_{m})|U(t_{m-1})=u(t_{m-1}), U(t_{m})=u(t_{m})]. \end{align}

\begin{align} \mathbb{E}[S(\gamma ;\;\tilde {u}, V)|\tilde {U}=\tilde {u}]=\sum _{m=2}^{M}E_{\gamma }[S_{m}(\gamma ;\;u(t_{m-1}),V_{m})|U(t_{m-1})=u(t_{m-1}), U(t_{m})=u(t_{m})]. \end{align}

The maximum likelihood estimate is the value of

![]() $\gamma$

for which (3.11) equals 0. The solution is obtained by stochastic approximation via a Robbins Monro Algorithm with updating step

$\gamma$

for which (3.11) equals 0. The solution is obtained by stochastic approximation via a Robbins Monro Algorithm with updating step

where

![]() $v^{l}$

is generated according to the conditional distribution of

$v^{l}$

is generated according to the conditional distribution of

![]() $V$

, given

$V$

, given

![]() $\tilde {U}=\tilde {u}$

, with parameter value

$\tilde {U}=\tilde {u}$

, with parameter value

![]() $\hat {\gamma }^{l}$

.

$\hat {\gamma }^{l}$

.

![]() $a_{l}$

is a sequence of positive numbers tending to 0, and

$a_{l}$

is a sequence of positive numbers tending to 0, and

![]() $D$

is a positive definite matrix. See Snijders et al., Snijders et al. (Reference Snijders, Koskinen and Schweinberger2010) and https://www.stats.ox.ac.uk/∼snijders/siena/Siena_algorithms.pdf[stats.ox.ac.uk] for additional details on this algorithm.

$D$

is a positive definite matrix. See Snijders et al., Snijders et al. (Reference Snijders, Koskinen and Schweinberger2010) and https://www.stats.ox.ac.uk/∼snijders/siena/Siena_algorithms.pdf[stats.ox.ac.uk] for additional details on this algorithm.

4. Maximum likelihood estimation for the SAOM-HMM

In this section we present an algorithm to calculate the maximum likelihood estimates of the parameters

![]() $\alpha$

,

$\alpha$

,

![]() $\beta$

, and

$\beta$

, and

![]() $\gamma$

in our SAOM-HMM described in Section 2. Let

$\gamma$

in our SAOM-HMM described in Section 2. Let

![]() $\Gamma$

consist of

$\Gamma$

consist of

![]() $(\alpha , \beta , \gamma )$

. We develop a variation of the EM algorithm, an iterative method which alternates between performing an expectation (E) step and a maximization (M) step Dempster et al. (Reference Dempster, Laird and Rubin1977). We first find the expected value of the complete data log-likelihood

$(\alpha , \beta , \gamma )$

. We develop a variation of the EM algorithm, an iterative method which alternates between performing an expectation (E) step and a maximization (M) step Dempster et al. (Reference Dempster, Laird and Rubin1977). We first find the expected value of the complete data log-likelihood

![]() $\ell ^{*}(\Gamma )=\log f(\tilde {u},\tilde {y})$

, with respect to the unknown, true networks

$\ell ^{*}(\Gamma )=\log f(\tilde {u},\tilde {y})$

, with respect to the unknown, true networks

![]() $\tilde {u}$

, given the observed networks

$\tilde {u}$

, given the observed networks

![]() $\tilde {y}$

and the current parameter estimates for

$\tilde {y}$

and the current parameter estimates for

![]() $\Gamma$

. We then maximize the expected log-likelihood found in the E-step. Our M-step framework embeds the SAOM estimation routine described in Section 3.2. The overall schematic of our estimation routine (that we will describe in detail in the remainder of this section) is as follows:

$\Gamma$

. We then maximize the expected log-likelihood found in the E-step. Our M-step framework embeds the SAOM estimation routine described in Section 3.2. The overall schematic of our estimation routine (that we will describe in detail in the remainder of this section) is as follows:

Given a series of observed networks

![]() $\tilde {y}$

and a number of effects one wishes to include in the SAOM, a SAOM is fit (via the maximum likelihood estimation routine described in Section 3.2) to get initial estimates of the parameters associated with each effect. We also make the choice, for this current implementation, to assume a constant rate parameter across all vertices. This is a fairly standard choice and is recommended in the SAOM literature, unless one has strong reason to believe otherwise. Set

$\tilde {y}$

and a number of effects one wishes to include in the SAOM, a SAOM is fit (via the maximum likelihood estimation routine described in Section 3.2) to get initial estimates of the parameters associated with each effect. We also make the choice, for this current implementation, to assume a constant rate parameter across all vertices. This is a fairly standard choice and is recommended in the SAOM literature, unless one has strong reason to believe otherwise. Set

![]() $\hat {\gamma }^{1}$

equal to these estimates. The

$\hat {\gamma }^{1}$

equal to these estimates. The

![]() $p^{th}$

updating step of our EM algorithm then proceeds as follows:

$p^{th}$

updating step of our EM algorithm then proceeds as follows:

-

1. Using

$\hat {\Gamma }^{p-1}$

, perform Algorithms1, 2, and 3 described in Section 1.3 to sample a series of true networks. This step corresponds to the E-step in the EM algorithm and is necessary due to the incredibly large state space present in our Expectation.

$\hat {\Gamma }^{p-1}$

, perform Algorithms1, 2, and 3 described in Section 1.3 to sample a series of true networks. This step corresponds to the E-step in the EM algorithm and is necessary due to the incredibly large state space present in our Expectation. -

2. Perform the maximization step using the formulas in section 4.2.1 to obtain

$\hat {\alpha }^{p}$

and

$\hat {\alpha }^{p}$

and

$\hat {\beta }^{p}$

.

$\hat {\beta }^{p}$

. -

3. Perform the maximization routine outlined in section 4.2.2 to obtain

$\hat {\gamma }^{p}$

.

$\hat {\gamma }^{p}$

. -

4. Repeat steps 1-3 until convergence.

Additional details are presented below.

4.1 E-step

The expected value of the complete data log-likelihood (2.1) with respect to the true networks

![]() $\tilde {u}$

given the observed networks

$\tilde {u}$

given the observed networks

![]() $\tilde {y}$

is:

$\tilde {y}$

is:

\begin{align} Q(\Gamma , \Gamma ^{p-1})&=\mathbb{E}[\!\log f(\tilde {u},\tilde {y}|\Gamma )|\tilde {y},\Gamma ^{p-1}] \nonumber \\ &=\sum _{\tilde {u} \in \tilde {U}}^{}\bigg [\sum _{m=2}^{M}\log f_{\alpha ,\beta }(y(t_{m})|u(t_{m}))\bigg ]f(\tilde {u}|\tilde {y},\Gamma ^{p-1}) + \sum _{\tilde {u} \in \tilde {U}}^{}\log f_{\gamma }(\tilde {u})f(\tilde {u}|\tilde {y},\Gamma ^{p-1}), \end{align}

\begin{align} Q(\Gamma , \Gamma ^{p-1})&=\mathbb{E}[\!\log f(\tilde {u},\tilde {y}|\Gamma )|\tilde {y},\Gamma ^{p-1}] \nonumber \\ &=\sum _{\tilde {u} \in \tilde {U}}^{}\bigg [\sum _{m=2}^{M}\log f_{\alpha ,\beta }(y(t_{m})|u(t_{m}))\bigg ]f(\tilde {u}|\tilde {y},\Gamma ^{p-1}) + \sum _{\tilde {u} \in \tilde {U}}^{}\log f_{\gamma }(\tilde {u})f(\tilde {u}|\tilde {y},\Gamma ^{p-1}), \end{align}

where

![]() $p$

is the iteration number,

$p$

is the iteration number,

![]() $\Gamma ^{p-1}$

are the current parameter estimates that we use to evaluate the expectation, and

$\Gamma ^{p-1}$

are the current parameter estimates that we use to evaluate the expectation, and

![]() $\Gamma ^{p}$

are the new parameters that we want to optimize to increase

$\Gamma ^{p}$

are the new parameters that we want to optimize to increase

![]() $Q(\Gamma , \Gamma ^{p-1})$

. This expectation is difficult to calculate due to the magnitude of the state space. In order to address this computational challenge, we employ a particle filtering based sampling scheme (i.e. a sequential Monte Carlo method), which will be described in detail in Section 4.3.

$Q(\Gamma , \Gamma ^{p-1})$

. This expectation is difficult to calculate due to the magnitude of the state space. In order to address this computational challenge, we employ a particle filtering based sampling scheme (i.e. a sequential Monte Carlo method), which will be described in detail in Section 4.3.

4.2 M-step

The second step of the EM algorithm is to maximize the expectation, i.e. to calculate

![]() $\Gamma ^p = \arg \max _{\Gamma } Q(\Gamma , \Gamma ^{p-1})$

. Since the parameters we wish to optimize are independently split into two terms in

$\Gamma ^p = \arg \max _{\Gamma } Q(\Gamma , \Gamma ^{p-1})$

. Since the parameters we wish to optimize are independently split into two terms in

![]() $Q(\Gamma , \Gamma ^{p-1})$

, we can optimize each separately.

$Q(\Gamma , \Gamma ^{p-1})$

, we can optimize each separately.

4.2.1 Maximizing in

$\alpha$

and

$\alpha$

and

$\beta$

$\beta$

We write the first term in

![]() $Q(\Gamma , \Gamma ^{p-1})$

as:

$Q(\Gamma , \Gamma ^{p-1})$

as:

\begin{align} \sum _{\tilde {u} \in \tilde {U}}^{}\bigg [\sum _{m=2}^{M} & \log f_{\alpha ,\beta }(y(t_{m})|u(t_{m}))\bigg ]f(\tilde {u}|\tilde {y},\Gamma ^{p-1})= \nonumber \\ & \sum _{k=1}^{K}\sum _{m=2}^{M}\log \big [ \alpha ^{c_{km}}(1-\alpha )^{d_{km}}\beta ^{b_{km}}(1-\beta )^{a_{km}}\big ] f(u(t_{m})=u_{k}|\tilde {y},\Gamma ^{p-1}), \end{align}

\begin{align} \sum _{\tilde {u} \in \tilde {U}}^{}\bigg [\sum _{m=2}^{M} & \log f_{\alpha ,\beta }(y(t_{m})|u(t_{m}))\bigg ]f(\tilde {u}|\tilde {y},\Gamma ^{p-1})= \nonumber \\ & \sum _{k=1}^{K}\sum _{m=2}^{M}\log \big [ \alpha ^{c_{km}}(1-\alpha )^{d_{km}}\beta ^{b_{km}}(1-\beta )^{a_{km}}\big ] f(u(t_{m})=u_{k}|\tilde {y},\Gamma ^{p-1}), \end{align}

where

![]() $a,b,c,$

and

$a,b,c,$

and

![]() $d$

for a given true network

$d$

for a given true network

![]() $u_{k}$

at observation time

$u_{k}$

at observation time

![]() $t_{m}$

are defined in Table 1. Let

$t_{m}$

are defined in Table 1. Let

![]() $A,B,C,$

and

$A,B,C,$

and

![]() $D$

correspond to the random variables for each. Maximizing in

$D$

correspond to the random variables for each. Maximizing in

![]() $\alpha$

and

$\alpha$

and

![]() $\beta$

yields the following:

$\beta$

yields the following:

The formula for

![]() $\hat {\alpha }$

is the expected number of false edges in the observed network divided by the expected number of non-edges in the true network, given the observed networks

$\hat {\alpha }$

is the expected number of false edges in the observed network divided by the expected number of non-edges in the true network, given the observed networks

![]() $\tilde {y}$

and current parameter estimates

$\tilde {y}$

and current parameter estimates

![]() $\Gamma ^{p-1}$

. The formula for

$\Gamma ^{p-1}$

. The formula for

![]() $\hat {\beta }$

is the expected number of false non-edges in the observed network divided by the expected number of edges in the true network, given the observed networks

$\hat {\beta }$

is the expected number of false non-edges in the observed network divided by the expected number of edges in the true network, given the observed networks

![]() $\tilde {y}$

and current parameter estimates

$\tilde {y}$

and current parameter estimates

![]() $\Gamma ^{p-1}$

.

$\Gamma ^{p-1}$

.

4.2.2 Maximizing in

$\gamma$

$\gamma$

The second term in our

![]() $Q$

function is

$Q$

function is

where

![]() $\gamma$

consists of the SAOM parameters. We adopt the SAOM framework of Snijders et al., Snijders et al. (Reference Snijders, Koskinen and Schweinberger2010) described in Section 2. Note that taking the derivative of the second term in our

$\gamma$

consists of the SAOM parameters. We adopt the SAOM framework of Snijders et al., Snijders et al. (Reference Snijders, Koskinen and Schweinberger2010) described in Section 2. Note that taking the derivative of the second term in our

![]() $Q$

function yields

$Q$

function yields

Since

![]() $f_{\gamma }(\tilde {u})$

cannot be calculated in closed form, neither can

$f_{\gamma }(\tilde {u})$

cannot be calculated in closed form, neither can

![]() $\sum _{\tilde {u} \in \tilde {U}}^{}S(\gamma ;\;\tilde {u})f(\tilde {u}|\tilde {y},\Gamma ^{p-1})$

. To aid in maximization, we augment each true network series

$\sum _{\tilde {u} \in \tilde {U}}^{}S(\gamma ;\;\tilde {u})f(\tilde {u}|\tilde {y},\Gamma ^{p-1})$

. To aid in maximization, we augment each true network series

![]() $\tilde {u}$

with a possible sample path that could have led one true network to the next in the series. We define the random variable

$\tilde {u}$

with a possible sample path that could have led one true network to the next in the series. We define the random variable

![]() $V$

to be a sample path associated with a true network series

$V$

to be a sample path associated with a true network series

![]() $\tilde {u}$

. Drawing upon the missing information principle and the work of Snijders et al., Snijders et al. (Reference Snijders, Koskinen and Schweinberger2010) and Gu et al., Gu and Kong (Reference Gu and Kong1998), we write the equation above as

$\tilde {u}$

. Drawing upon the missing information principle and the work of Snijders et al., Snijders et al. (Reference Snijders, Koskinen and Schweinberger2010) and Gu et al., Gu and Kong (Reference Gu and Kong1998), we write the equation above as

where the expectation on the right-hand side of the equation is with respect to

![]() $V$

. Maximum likelihood estimates for

$V$

. Maximum likelihood estimates for

![]() $\gamma$

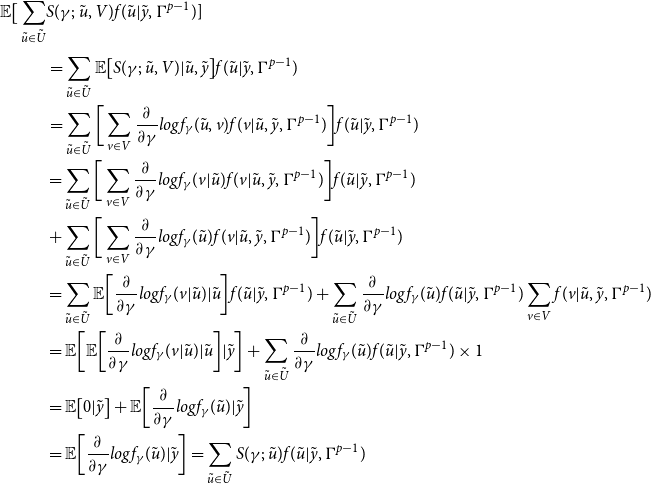

can be determined, under regularity conditions, as the solution for (4.4) equaling 0. The proof of (4.4) is shown in Appendix A.

$\gamma$

can be determined, under regularity conditions, as the solution for (4.4) equaling 0. The proof of (4.4) is shown in Appendix A.

In our approach, the solution to (4.4) is obtained by stochastic approximation via a Robbins Monro algorithm, which is similar to that used in Snijders et al., Snijders et al. (Reference Snijders, Koskinen and Schweinberger2010) and described in Section 3.2. At each iteration of the Robbins Monro algorithm, a possible

![]() $V$

, i.e. a path connecting each possible true network, in each possible latent network series, is sampled. This sampling is done via a Metropolis Hastings algorithm Snijders et al. (Reference Snijders, Koskinen and Schweinberger2010). Next,

$V$

, i.e. a path connecting each possible true network, in each possible latent network series, is sampled. This sampling is done via a Metropolis Hastings algorithm Snijders et al. (Reference Snijders, Koskinen and Schweinberger2010). Next,

![]() $\sum _{\tilde {u} \in \tilde {U}}^{}S(\gamma ;\;\tilde {u}, v)f(\tilde {u}|\tilde {y},\Gamma ^{p-1})$

is calculated and used in an updating step in the algorithm. It is unreasonable, given the prohibitively large state space of true network series, to sum over every possible true network series in this calculation. Therefore, we note that

$\sum _{\tilde {u} \in \tilde {U}}^{}S(\gamma ;\;\tilde {u}, v)f(\tilde {u}|\tilde {y},\Gamma ^{p-1})$

is calculated and used in an updating step in the algorithm. It is unreasonable, given the prohibitively large state space of true network series, to sum over every possible true network series in this calculation. Therefore, we note that

![]() $\sum _{\tilde {u} \in \tilde {U}}^{}S(\gamma ;\;\tilde {u}, v)f(\tilde {u}|\tilde {y},\Gamma ^{p-1})$

is an expectation, and we sample a smaller number (denoted by

$\sum _{\tilde {u} \in \tilde {U}}^{}S(\gamma ;\;\tilde {u}, v)f(\tilde {u}|\tilde {y},\Gamma ^{p-1})$

is an expectation, and we sample a smaller number (denoted by

![]() $H$

) of true network series from

$H$

) of true network series from

![]() $f(\tilde {u}|\tilde {y},\Gamma ^{p-1})$

. The average of

$f(\tilde {u}|\tilde {y},\Gamma ^{p-1})$

. The average of

![]() $S(\gamma ;\;\tilde {u}, v)$

for this smaller sample is what is used in the updating step of the Robbins Monro algorithm in place of

$S(\gamma ;\;\tilde {u}, v)$

for this smaller sample is what is used in the updating step of the Robbins Monro algorithm in place of

![]() $\sum _{\tilde {u} \in \tilde {U}}^{}S(\gamma ;\;\tilde {u}, v)f(\tilde {u}|\tilde {y},\Gamma ^{p-1})$

. The updating step is

$\sum _{\tilde {u} \in \tilde {U}}^{}S(\gamma ;\;\tilde {u}, v)f(\tilde {u}|\tilde {y},\Gamma ^{p-1})$

. The updating step is

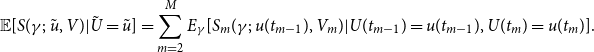

\begin{align} \hat {\gamma }^{l+1}= \hat {\gamma }^{l} + a_{l}D^{-1}\sum _{p=1}^{H}S_{p}(\hat {\gamma }^{l};\;\tilde {u},v^{l}) , \end{align}

\begin{align} \hat {\gamma }^{l+1}= \hat {\gamma }^{l} + a_{l}D^{-1}\sum _{p=1}^{H}S_{p}(\hat {\gamma }^{l};\;\tilde {u},v^{l}) , \end{align}

where the sum is over the total number of sampled true network series

![]() $\tilde {u}$

,

$\tilde {u}$

,

![]() $D$

is the matrix of partial derivatives, and

$D$

is the matrix of partial derivatives, and

![]() $a_{l}$

is a sequence of positive numbers tending to 0.

$a_{l}$

is a sequence of positive numbers tending to 0.

Our actual implementation of the above algorithm again borrows from that of Snijders et al., Snijders et al. (Reference Snijders, Koskinen and Schweinberger2010), which follows directly from the work of Gue and Kong Gu and Kong (Reference Gu and Kong1998). The algorithm performed consists of two phases. In the first phase a small number of simulations are used to obtain a rough estimate of the matrix of partial derivatives (defined as D in our updating step), which are estimated by a score-function method Schweinberger and Snijders (Reference Schweinberger and Snijders2007). The second phase determines the estimate of

![]() $\gamma$

by simulating

$\gamma$

by simulating

![]() $V$

and performing the updating step.

$V$

and performing the updating step.

4.3 Particle filtering

The expectation in the E-step of our E-M algorithm is difficult to calculate largely due to the magnitude of the state space. There are

![]() $2^{N*(N-1)}$

possible true networks at each observation time, and to calculate

$2^{N*(N-1)}$

possible true networks at each observation time, and to calculate

![]() $f(u(t_{m})|\tilde {y}, \Gamma ^{p-1})$

for a given observation moment

$f(u(t_{m})|\tilde {y}, \Gamma ^{p-1})$

for a given observation moment

![]() $m$

, one needs to sum over all possible combinations of

$m$

, one needs to sum over all possible combinations of

![]() $u$

at the previous

$u$

at the previous

![]() $m-1$

observation times. The forward-backward algorithm can in principle be used to compute these posterior marginals of all hidden state variables given a sequence of observations Rabiner and Juang (Reference Rabiner and Juang1986). The algorithm makes use of the principle of dynamic programing to efficiently compute the values in two passes. The first pass goes forward in time, while the second goes backward in time. However, given the magnitude of our network space, direct use of this approach is not computationally feasible in any but the smallest of problems. Also, as described in the previous section, the transition probabilities,

$m-1$

observation times. The forward-backward algorithm can in principle be used to compute these posterior marginals of all hidden state variables given a sequence of observations Rabiner and Juang (Reference Rabiner and Juang1986). The algorithm makes use of the principle of dynamic programing to efficiently compute the values in two passes. The first pass goes forward in time, while the second goes backward in time. However, given the magnitude of our network space, direct use of this approach is not computationally feasible in any but the smallest of problems. Also, as described in the previous section, the transition probabilities,

![]() $f_{\gamma }(u(t_{m})|u(t_{m-1}))$

, cannot be calculated in closed form.

$f_{\gamma }(u(t_{m})|u(t_{m-1}))$

, cannot be calculated in closed form.

In order to address this computational challenge, we employ a particle filtering based sampling scheme (i.e. a Sequential Monte Carlo method) Doucet and Lee (Reference Doucet and Lee2018). Particle filtering methods provide a way to sample from an approximation of

![]() $f(u(t_{m})|\tilde {y}, \Gamma ^{p-1})$

through time. Our adaptation of particle filtering principles to the current content is described in Algorithms1– 3 and follows almost exactly from Doucet and Lee (Reference Doucet and Lee2018). In this context, we let the particle

$f(u(t_{m})|\tilde {y}, \Gamma ^{p-1})$

through time. Our adaptation of particle filtering principles to the current content is described in Algorithms1– 3 and follows almost exactly from Doucet and Lee (Reference Doucet and Lee2018). In this context, we let the particle

![]() $\zeta ^{k}$

be a provisional, hypothetical value of true network

$\zeta ^{k}$

be a provisional, hypothetical value of true network

![]() $u$

, with respective elements

$u$

, with respective elements

![]() $\zeta ^{k}_{m}$

and

$\zeta ^{k}_{m}$

and

![]() $u(t_{m})$

. Algorithm1 mirrors the forward portion of the forward-backward algorithm referred to above. Algorithm2 describes the ancestral simulation of

$u(t_{m})$

. Algorithm1 mirrors the forward portion of the forward-backward algorithm referred to above. Algorithm2 describes the ancestral simulation of

![]() $\star$

in 1.1, and Algorithm3 is used to sample full sequences of latent network variables, i.e., to obtain an approximate sample from the conditional distribution of the latent networks given the observed networks (

$\star$

in 1.1, and Algorithm3 is used to sample full sequences of latent network variables, i.e., to obtain an approximate sample from the conditional distribution of the latent networks given the observed networks (

![]() $f(\tilde {u}|\tilde {y}, \Gamma ^{p-1}))$

. For more details on the particle filtering method we have applied in our algorithm, refer to Doucet and Lee (Reference Doucet and Lee2018).

$f(\tilde {u}|\tilde {y}, \Gamma ^{p-1}))$

. For more details on the particle filtering method we have applied in our algorithm, refer to Doucet and Lee (Reference Doucet and Lee2018).

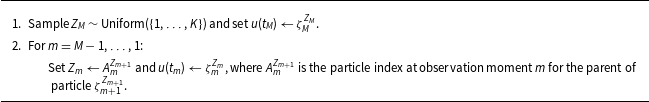

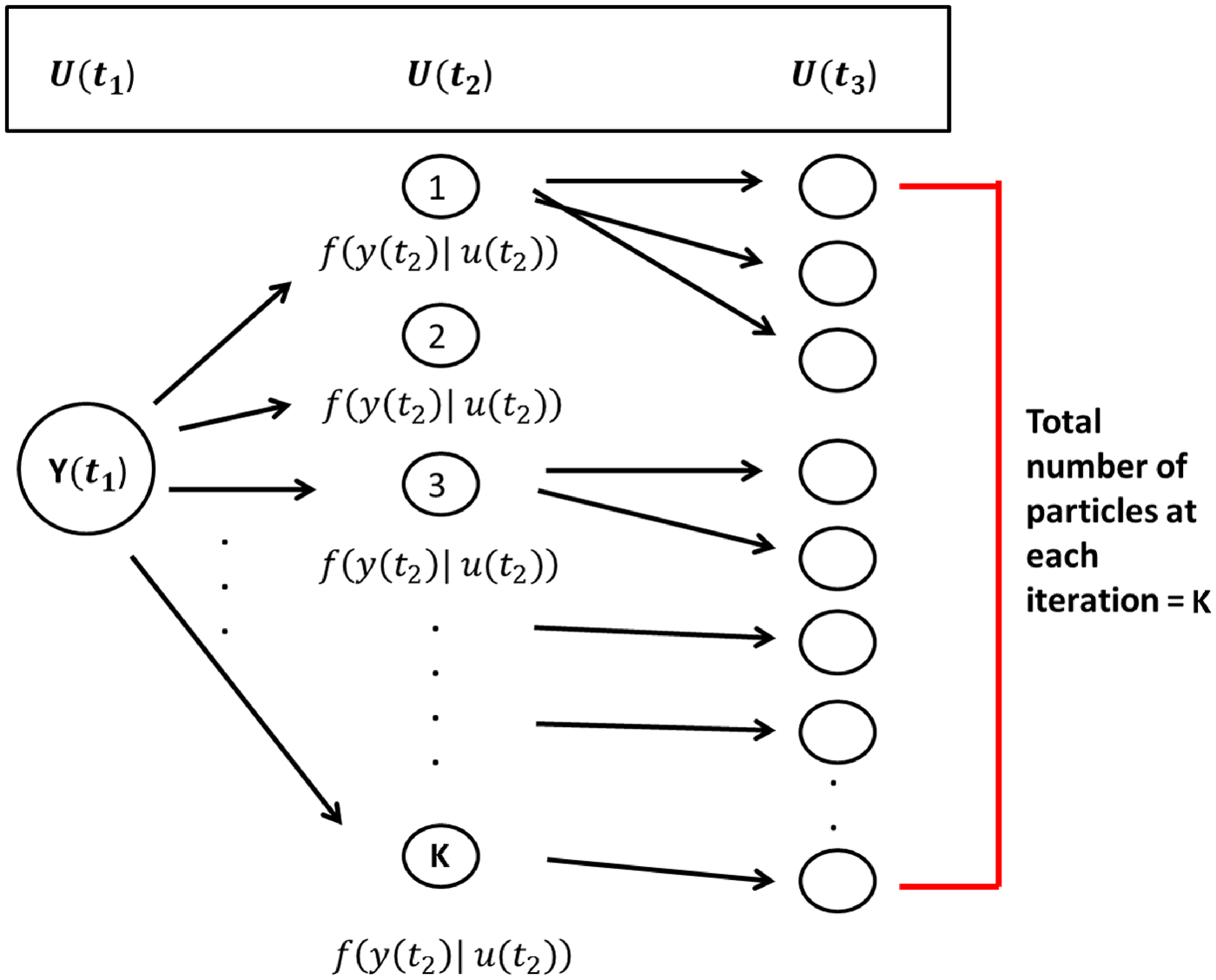

Algorithm 1. A mirror of the Forward Algorithm

Algorithm 2. Ancestral simulation of (⋆)

Algorithm 3. Sampling an ancestral line (i.e., a true network series)

Of special note is that

![]() $(\!\star\!)$

in step 2 is sampled in two stages and is outlined in Algorithm2. The first stage samples a mixture parameter,

$(\!\star\!)$

in step 2 is sampled in two stages and is outlined in Algorithm2. The first stage samples a mixture parameter,

![]() $\zeta ^{k}_{m-1}$

, with probability proportional to

$\zeta ^{k}_{m-1}$

, with probability proportional to

![]() $f(y(t_{m-1})|u(t_{m-1})=\zeta _{m-1})$

. The second stage samples from

$f(y(t_{m-1})|u(t_{m-1})=\zeta _{m-1})$

. The second stage samples from

![]() $f(u(t_{m})|u(t_{m-1})=\zeta _{m-1})$

. This two-stage process provides a genealogical interpretation of the particles that are produced by the algorithm. For

$f(u(t_{m})|u(t_{m-1})=\zeta _{m-1})$

. This two-stage process provides a genealogical interpretation of the particles that are produced by the algorithm. For

![]() $m \in \{1,\ldots ,M \}$

and

$m \in \{1,\ldots ,M \}$

and

![]() $k \in \{1,\ldots ,K \}$

, we denote by

$k \in \{1,\ldots ,K \}$

, we denote by

![]() $A^{k}_{m-1}$

the ancestor index of particle

$A^{k}_{m-1}$

the ancestor index of particle

![]() $\zeta ^{k}_{m}$

.

$\zeta ^{k}_{m}$

.

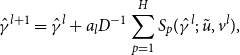

One can view Algorithms1 and 2 as a kind of evolutionary system where at observation moment

![]() $m \lt M$

each particle has exactly one parent, and at each observation moment

$m \lt M$

each particle has exactly one parent, and at each observation moment

![]() $m \gt 1$

, each particle has some number of offspring. Algorithms1 and 2 create a collection of possible true networks at each observation moment. Figure 2 provides a visual description of the scheme. The total number of observation moments

$m \gt 1$

, each particle has some number of offspring. Algorithms1 and 2 create a collection of possible true networks at each observation moment. Figure 2 provides a visual description of the scheme. The total number of observation moments

![]() $M$

are fixed. At each observation moment, particles from the previous observation moment

$M$

are fixed. At each observation moment, particles from the previous observation moment

![]() $m-1$

are assigned probabilities that are proportional to

$m-1$

are assigned probabilities that are proportional to

![]() $f(y(t_{m-1})|u(t_{m-1}))$

(i.e., parameterized by the false positive and false negative error rate estimates). An independent random sample of these particles is drawn according to these probabilities. For each of these particles, a next true network

$f(y(t_{m-1})|u(t_{m-1}))$

(i.e., parameterized by the false positive and false negative error rate estimates). An independent random sample of these particles is drawn according to these probabilities. For each of these particles, a next true network

![]() $u(t_{m})$

(i.e., particle

$u(t_{m})$

(i.e., particle

![]() $\zeta _{m}^{k}$

) is simulated as evolving from the current SAOM with parameter estimates

$\zeta _{m}^{k}$

) is simulated as evolving from the current SAOM with parameter estimates

![]() $\hat {\gamma }^{p-1}$

, conditional on

$\hat {\gamma }^{p-1}$

, conditional on

![]() $u(t_{m-1})$

(i.e., particle

$u(t_{m-1})$

(i.e., particle

![]() $\zeta _{m-1}^{k}$

). However, we ultimately would like a sample of possible true network

$\zeta _{m-1}^{k}$

). However, we ultimately would like a sample of possible true network

![]() $\it{series}$

. Algorithm3 describes how to sample such a series. By sampling an ancestral line, we are effectively sampling from

$\it{series}$

. Algorithm3 describes how to sample such a series. By sampling an ancestral line, we are effectively sampling from

![]() $f(\tilde {u}|\tilde {y}, \Gamma ^{p-1})$

.

$f(\tilde {u}|\tilde {y}, \Gamma ^{p-1})$

.

Figure 2. Particle filtering sampling scheme.

![]() $K$

particles are sampled at each observation moment following a two-stage process. First, particles are selected from the previous observation moment with probability proportional to the conditional distribution of the true network given the observed network at that time point. Then, a true network (i.e., particle) at the next observation moment is sampled/simulated, starting from the current selected particle network, and according to the parameter estimates in the stochastic actor-oriented models.

$K$

particles are sampled at each observation moment following a two-stage process. First, particles are selected from the previous observation moment with probability proportional to the conditional distribution of the true network given the observed network at that time point. Then, a true network (i.e., particle) at the next observation moment is sampled/simulated, starting from the current selected particle network, and according to the parameter estimates in the stochastic actor-oriented models.

4.4 Putting it all together

We now combine the elements presented in the previous sections to define a complete algorithm for the estimation of

![]() $\alpha$

,

$\alpha$

,

![]() $\beta$

, and

$\beta$

, and

![]() $\gamma$

in our SAOM-HMM.

$\gamma$

in our SAOM-HMM.

Given a series of observed networks

![]() $\tilde {y}$

and a number of effects one wishes to include in the SAOM, a SAOM is fit (via maximum likelihood estimation) to get initial estimates of the parameters associated with each effect. We also make the choice, for this current implementation, to assume a constant rate parameter across all vertices. This is a fairly standard choice and is recommended in the SAOM literature, unless one has strong reason to believe otherwise. Set the initial SAOM parameter estimates,

$\tilde {y}$

and a number of effects one wishes to include in the SAOM, a SAOM is fit (via maximum likelihood estimation) to get initial estimates of the parameters associated with each effect. We also make the choice, for this current implementation, to assume a constant rate parameter across all vertices. This is a fairly standard choice and is recommended in the SAOM literature, unless one has strong reason to believe otherwise. Set the initial SAOM parameter estimates,

![]() $\hat {\gamma }^{1}$

, equal to these estimates.

$\hat {\gamma }^{1}$

, equal to these estimates.

The

![]() $p^{th}$

updating step of our EM algorithm then proceeds as follows:

$p^{th}$

updating step of our EM algorithm then proceeds as follows:

-

1. Using

$\hat {\Gamma }^{p-1}$

, perform Algorithm2 to get

$\hat {\Gamma }^{p-1}$

, perform Algorithm2 to get

$K$

possible true networks at each observation moment.

$K$

possible true networks at each observation moment. -

2. Sample

$H$

number of true network series from an approximation of

$H$

number of true network series from an approximation of

$f(\tilde {u}|\tilde {y}, \Gamma ^{p-1})$

using the ancestral sampling scheme in Algorithm3.

$f(\tilde {u}|\tilde {y}, \Gamma ^{p-1})$

using the ancestral sampling scheme in Algorithm3. -

3. Perform the maximization step using Equations 4.3 to obtain

$\hat {\alpha }^{p}$

and

$\hat {\alpha }^{p}$

and

$\hat {\beta }^{p}$

. This step obtains estimates of the false positive and false negative rates.

$\hat {\beta }^{p}$

. This step obtains estimates of the false positive and false negative rates. -

4. Perform the maximization routine outlined in section 4.2.2 to obtain

$\hat {\gamma }^{p}$

. This step provides estimates of the SAOM parameters and can be done using the multi-group option of RSiena Ripley et al. (Reference Ripley, Snijders, Boda, Vörös and Preciado2024).

$\hat {\gamma }^{p}$

. This step provides estimates of the SAOM parameters and can be done using the multi-group option of RSiena Ripley et al. (Reference Ripley, Snijders, Boda, Vörös and Preciado2024). -

5. Repeat steps 1-4 until convergence. The convergence criteria we have used in our simulation study is based on a moving average and is the following:

where \begin{equation*} \frac {\mid \mid \hat {\Gamma }^{*p} - \hat {\Gamma }^{*p-1} \mid \mid _{1}}{\mid \mid \hat {\Gamma }^{*p-1} \mid \mid _{1}}\lt 0.01 , \end{equation*}

\begin{equation*} \frac {\mid \mid \hat {\Gamma }^{*p} - \hat {\Gamma }^{*p-1} \mid \mid _{1}}{\mid \mid \hat {\Gamma }^{*p-1} \mid \mid _{1}}\lt 0.01 , \end{equation*}

$\hat {\Gamma }^{*p} = \frac {\sum _{i=p-4}^{p} \hat {\Gamma _{i}}}{5}$

and

$\hat {\Gamma }^{*p} = \frac {\sum _{i=p-4}^{p} \hat {\Gamma _{i}}}{5}$

and

$\hat {\Gamma }^{*p-1} = \frac {\sum _{i=p-5}^{p-1} \hat {\Gamma _{i}}}{5}$

.

$\hat {\Gamma }^{*p-1} = \frac {\sum _{i=p-5}^{p-1} \hat {\Gamma _{i}}}{5}$

.

However, in other instances, some parameter coordinates may have a different scale than others, and the true estimate may be close to 0. Therefore, the convergence criterion may need to be adapted.

The choice of

![]() $K$

in step 1 (i.e. the number of particles sampled at each observation moment) and the choice of

$K$

in step 1 (i.e. the number of particles sampled at each observation moment) and the choice of

![]() $H$

(i.e. the sample of true network series to sample) in step 2 should depend on the size of the network, the number of observation moments, and the amount of noise and strength of the SAOM parameter signals one suspects to be present. For example, when working with network sizes of 10 vertices, 4 observation moments, and 5 parameters in our SAOM, we have used a K of 50,000 and an

$H$

(i.e. the sample of true network series to sample) in step 2 should depend on the size of the network, the number of observation moments, and the amount of noise and strength of the SAOM parameter signals one suspects to be present. For example, when working with network sizes of 10 vertices, 4 observation moments, and 5 parameters in our SAOM, we have used a K of 50,000 and an

![]() $H$

of 3000 for the maximization of

$H$

of 3000 for the maximization of

![]() $\alpha$

and

$\alpha$

and

![]() $\beta$

. For the maximization of

$\beta$

. For the maximization of

![]() $\gamma$

, we have worked with an

$\gamma$

, we have worked with an

![]() $H$

of 50.

$H$

of 50.

We deliberately keep

![]() $H$

relatively small (in this case, 50) because this step involves finding

$H$

relatively small (in this case, 50) because this step involves finding

![]() $\hat {\gamma }$

that maximizes data from

$\hat {\gamma }$

that maximizes data from

![]() $H$

number of network series. As has often been remarked by the developers of SAOMs, even estimating

$H$

number of network series. As has often been remarked by the developers of SAOMs, even estimating

![]() $\gamma$

for one series of networks can be time consuming, depending on the size of the network and the number of parameters in the model. This step of our algorithm consumes much of the run time, and to try and estimate

$\gamma$

for one series of networks can be time consuming, depending on the size of the network and the number of parameters in the model. This step of our algorithm consumes much of the run time, and to try and estimate

![]() $\gamma$

for many more

$\gamma$

for many more

![]() $H$

will require significantly more time.

$H$

will require significantly more time.

4.5 Calculation of standard errors

The algorithm outlined thus far only produces the parameter estimates. Additional work is required if one wants the standard errors associated with the estimates. Since inference is likely the end goal in practice, a method for calculating standard errors of the estimates is needed. We propose performing a parametric bootstrap where the maximum likelihood estimates from the above algorithm are collected and a number of network series from the estimated model are sampled. The proposed algorithm is then run on each of the sampled network series to obtain a new collection of parameter estimates, which are then used to calculate the standard errors. The more samples we take, the more accurate the estimates of the standard error will be. However, again, there is a computational trade-off, since our algorithm needs to be run for each of the sampled network series.

4.6 Algorithm modifications

The focus of the framework discussed thus far has been on directed networks since SAOMs were initially developed for directed networks. It should be noted, though, that SAOMs are now capable of handling undirected networks, and so is our methodology. The only caveat is that one needs to define how edges are assumed to form in the SAOM model Snijders and Pickup (Reference Snijders, Pickup, Victor, Montgomery and Lubell2017). For example, does one vertex unilaterally impose that an edge is created or dissolved? Or, do both vertices have to “agree”? We present an example of an application to undirected networks in Section 6.

Another modification one may choose to make is with regards to how

![]() $\gamma$

is estimated in the maximization step in the algorithm we propose. In section 4.2.2, we outline a Robbins Monro algorithm to maximize the complete data log likelihood. Our approach borrows from the algorithm Snijders et al., use for the maximum likelihood estimation of the SAOM parameters for the evolution of one observed network series Snijders et al. (Reference Snijders, Koskinen and Schweinberger2010). The computation for this step is time consuming. As a way to reduce the maximization time for

$\gamma$

is estimated in the maximization step in the algorithm we propose. In section 4.2.2, we outline a Robbins Monro algorithm to maximize the complete data log likelihood. Our approach borrows from the algorithm Snijders et al., use for the maximum likelihood estimation of the SAOM parameters for the evolution of one observed network series Snijders et al. (Reference Snijders, Koskinen and Schweinberger2010). The computation for this step is time consuming. As a way to reduce the maximization time for

![]() $\gamma$

, one could instead perform a method of moments based estimation routine. This approach also utilizes a Robbins Monro algorithm. In the maximum likelihood based routine, at each iteration of the Robbins Monro algorithm, possible paths leading from one network to the next are augmented for each of our sampled true network series. A complete data score function is then calculated and used in the updating step. The method of moments based routine instead simulates the SAOM evolution process, calculates statistics corresponding to each parameter in the model on both the observed and simulated networks, and then takes the difference of these to be used in the updating step of the Robbins Monro algorithm. In other words, parameter estimates are determined as the parameter value for which the expected value of the statistics equals the observed value at each observation point and for each sampled true network series Snijders et al. (Reference Snijders, Van de Bunt and Steglich2010).

$\gamma$

, one could instead perform a method of moments based estimation routine. This approach also utilizes a Robbins Monro algorithm. In the maximum likelihood based routine, at each iteration of the Robbins Monro algorithm, possible paths leading from one network to the next are augmented for each of our sampled true network series. A complete data score function is then calculated and used in the updating step. The method of moments based routine instead simulates the SAOM evolution process, calculates statistics corresponding to each parameter in the model on both the observed and simulated networks, and then takes the difference of these to be used in the updating step of the Robbins Monro algorithm. In other words, parameter estimates are determined as the parameter value for which the expected value of the statistics equals the observed value at each observation point and for each sampled true network series Snijders et al. (Reference Snijders, Van de Bunt and Steglich2010).

Although the method of moment based estimation for

![]() $\gamma$

does not produce formal maximum likelihood estimates, it it still a viable estimation method and has been shown to provide similar results in our setting. We demonstrate this in section 5.2 through a small simulation study. In larger networks, the savings in run time may justify the use of method of moments as an approximation.

$\gamma$

does not produce formal maximum likelihood estimates, it it still a viable estimation method and has been shown to provide similar results in our setting. We demonstrate this in section 5.2 through a small simulation study. In larger networks, the savings in run time may justify the use of method of moments as an approximation.

4.7 Algorithm implementation

The algorithm outlined in this paper is implemented in R. We have written code for steps 1–3 outlined in Section 4.4. For step 4, we call upon the RSiena package Ripley et al. (Reference Ripley, Snijders, Boda, Vörös and Preciado2024), which was developed by R. Ripley, K. Boitmanis, and T.A. Snijders for the implementation of SAOMs. Version 1.1-232 was used for our modeling framework. Minor modifications were made to the source files of this package (saved locally) to allow for our specific algorithm outlined in Section 4.2.2 to be implemented.

One iteration of our E-M algorithm for the networks used in our simulation study explained in the following section takes approximately 45 minutes to run on a high performance Linux computing cluster using 5 cores. The algorithm converged, on average, after 15-20 iterations.

5. Simulation study

5.1 Study design

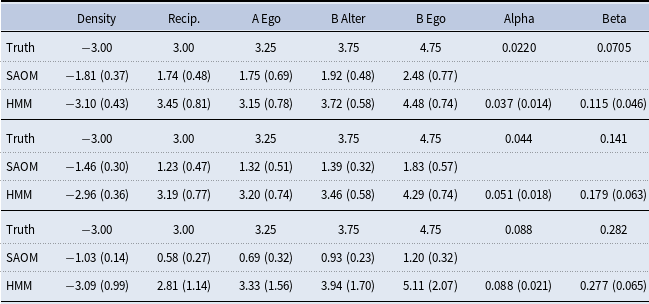

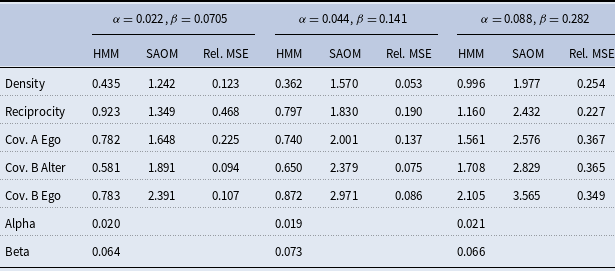

We present a small simulation study to demonstrate the accuracy of our method and draw a comparison between the behavior of our HMM-SAOM ML estimator and the ML estimator obtained from only fitting a SAOM (i.e. the naive approach). For this study, we simulate 10 node directed networks at 4 observation moments, referred to as

![]() $t_{1}$

,

$t_{1}$

,

![]() $t_{2}$

,

$t_{2}$

,

![]() $t_{3}$

, and

$t_{3}$

, and

![]() $t_{4}$

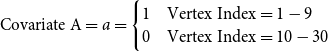

. We also create 2 vertex covariates, called Covariate A and Covariate B. Both are indicator variables and are defined as:

$t_{4}$

. We also create 2 vertex covariates, called Covariate A and Covariate B. Both are indicator variables and are defined as:

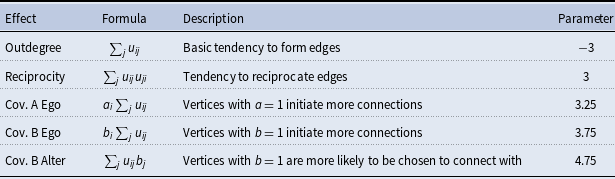

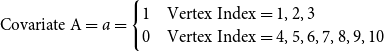

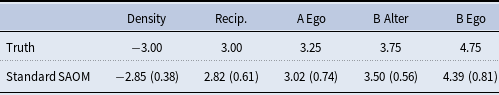

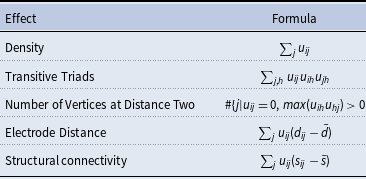

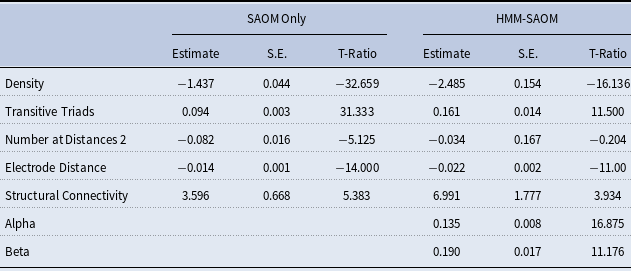

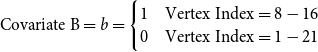

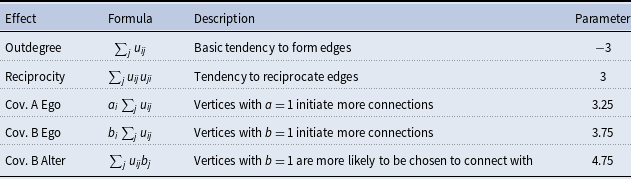

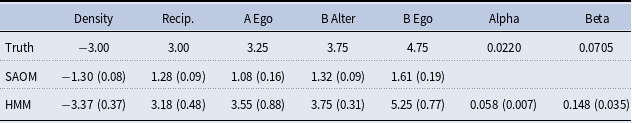

Table 2. Simulation model effects and parameters

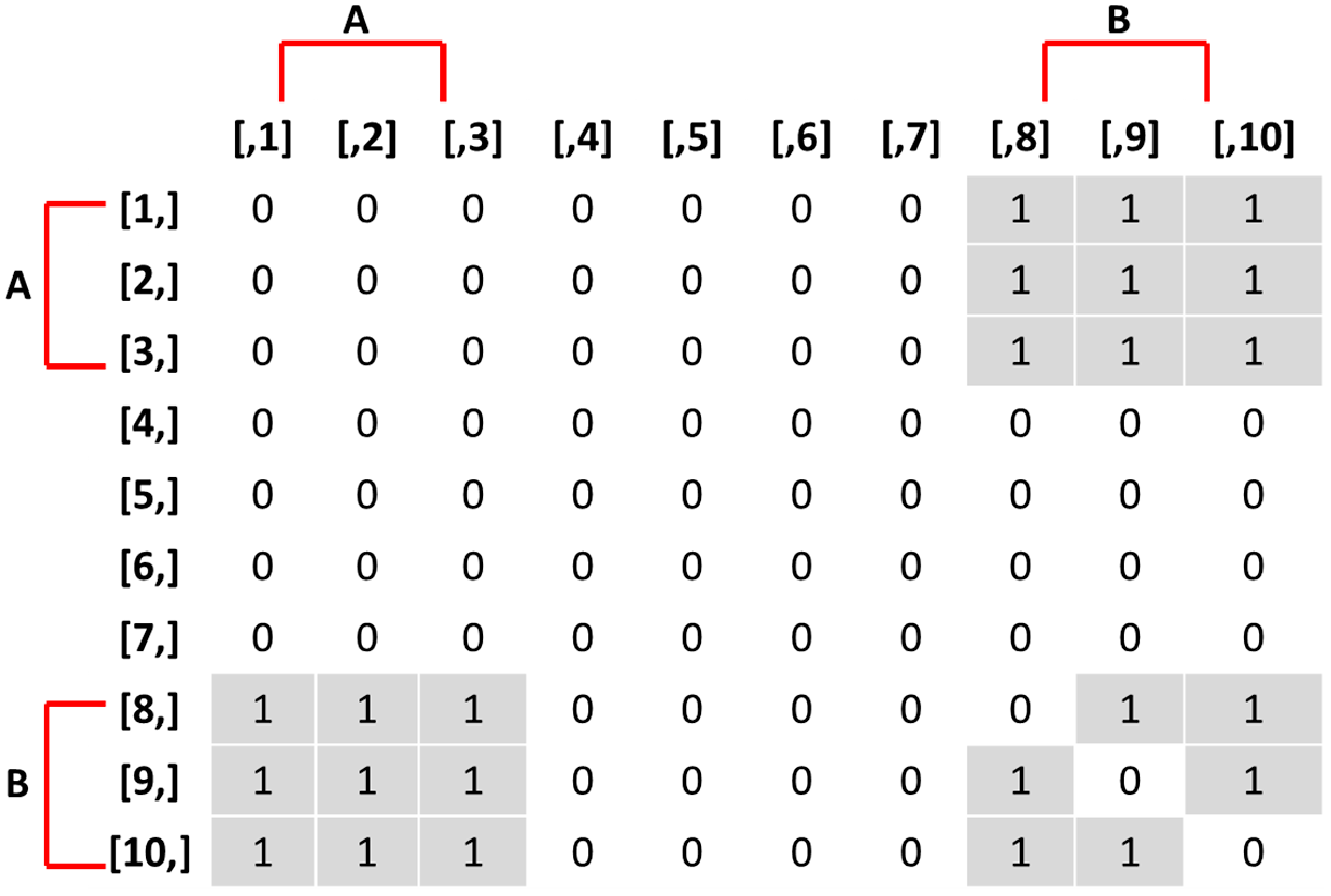

Figure 3. Adjacency matrix of true networks encouraged by the stochastic actor-oriented models in the simulation study. An entry of 1 in row

![]() $i$

and column

$i$

and column

![]() $j$

represents a directed edge from vertex

$j$

represents a directed edge from vertex

![]() $i$

to vertex

$i$

to vertex

![]() $j$

.

$j$

.

\begin{equation*} \text{Covariate A}= a = \begin{cases} 1 & \text{Vertex Index} = 1,2,3 \\ 0 & \text{Vertex Index} = 4,5,6,7,8,9,10 \end{cases} \end{equation*}

\begin{equation*} \text{Covariate A}= a = \begin{cases} 1 & \text{Vertex Index} = 1,2,3 \\ 0 & \text{Vertex Index} = 4,5,6,7,8,9,10 \end{cases} \end{equation*}

\begin{equation*} \text{Covariate B}= b = \begin{cases} 1 & \text{Vertex Index} = 8,9,10 \\ 0 & \text{Vertex Index} = 1,2,3,4,5,6,7 \end{cases} \end{equation*}

\begin{equation*} \text{Covariate B}= b = \begin{cases} 1 & \text{Vertex Index} = 8,9,10 \\ 0 & \text{Vertex Index} = 1,2,3,4,5,6,7 \end{cases} \end{equation*}

The objective function in the SAOM for the evolution of the true networks,

![]() $u(t_{m})$

, contains 5 effects (2 endogenous and 3 exogenous). Table 2 lists these effects, their mathematical definitions, and their descriptions. The large, negative value for outdegree, keeps our simulated true networks fairly sparse. It sets the probability of connections forming low, unless the other parameters in the function influence specific vertices in a more positive way. For example, the large positive value for reciprocated edges encourages a directed edge to form if one already exists in the reverse direction. These parameters, in conjunction with the parameters assigned to the three covariate related effects, promote the network structure demonstrated in Figure 3. In other words, the Covariate A Ego effect makes it highly likely that vertices 1, 2, and 3 will initiate out-connections to other vertices, outweighing the negative density parameter, as long as these connections form with vertices 8, 9, and 10 since the Covariate B Alter effect parameter is high. Furthermore, the Covariate B Ego effect parameter is large, encouraging vertices 8, 9, and 10 to connect to other vertices, but they will mainly choose to connect amongst each other and to vertices 1, 2, and 3 due to the large reciprocity parameter.

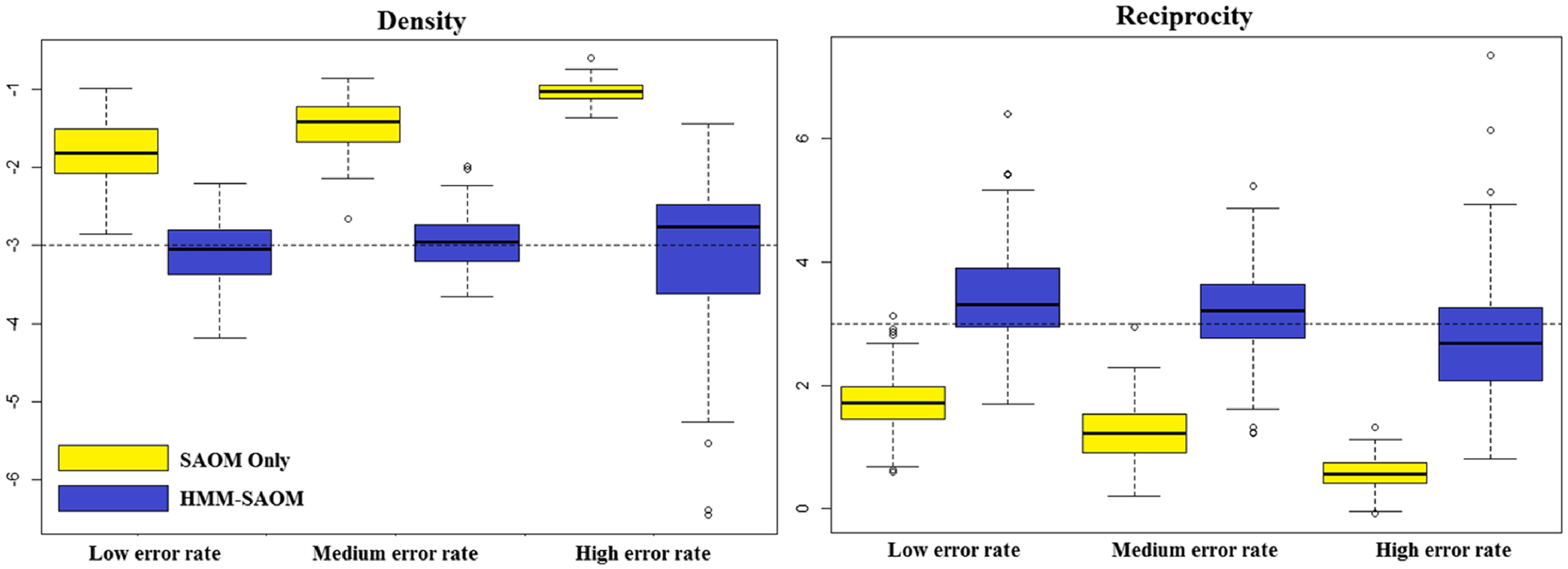

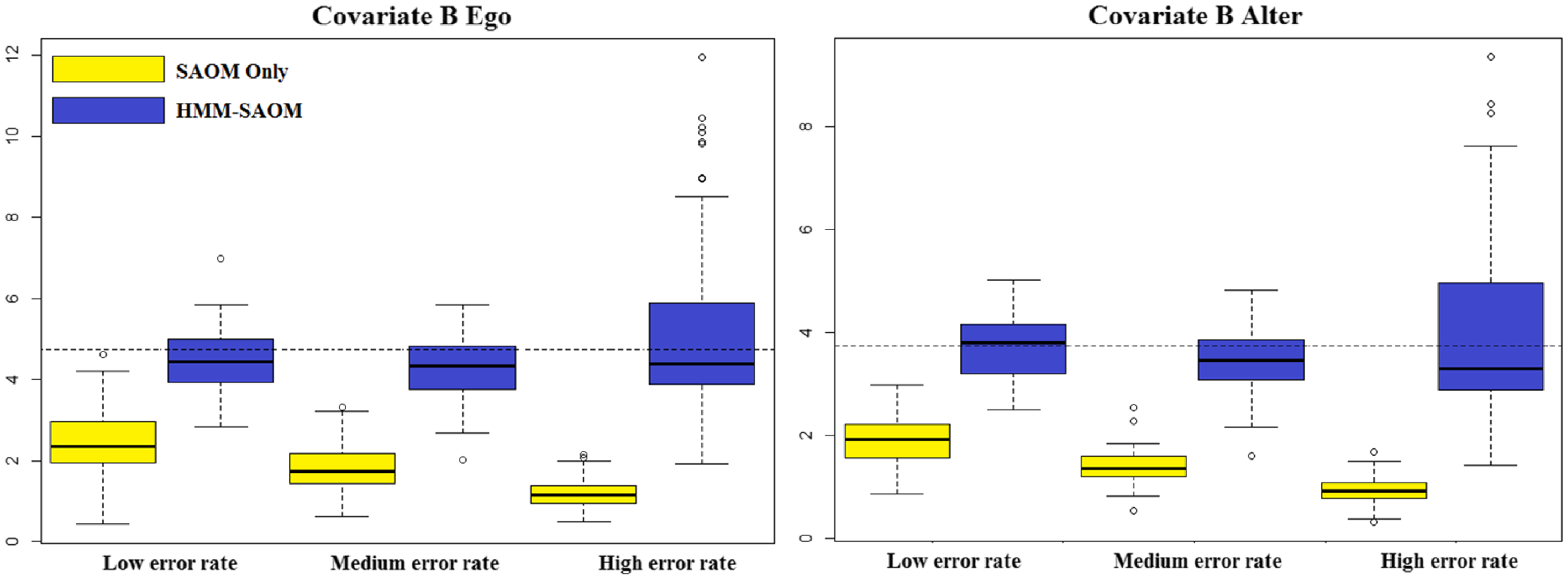

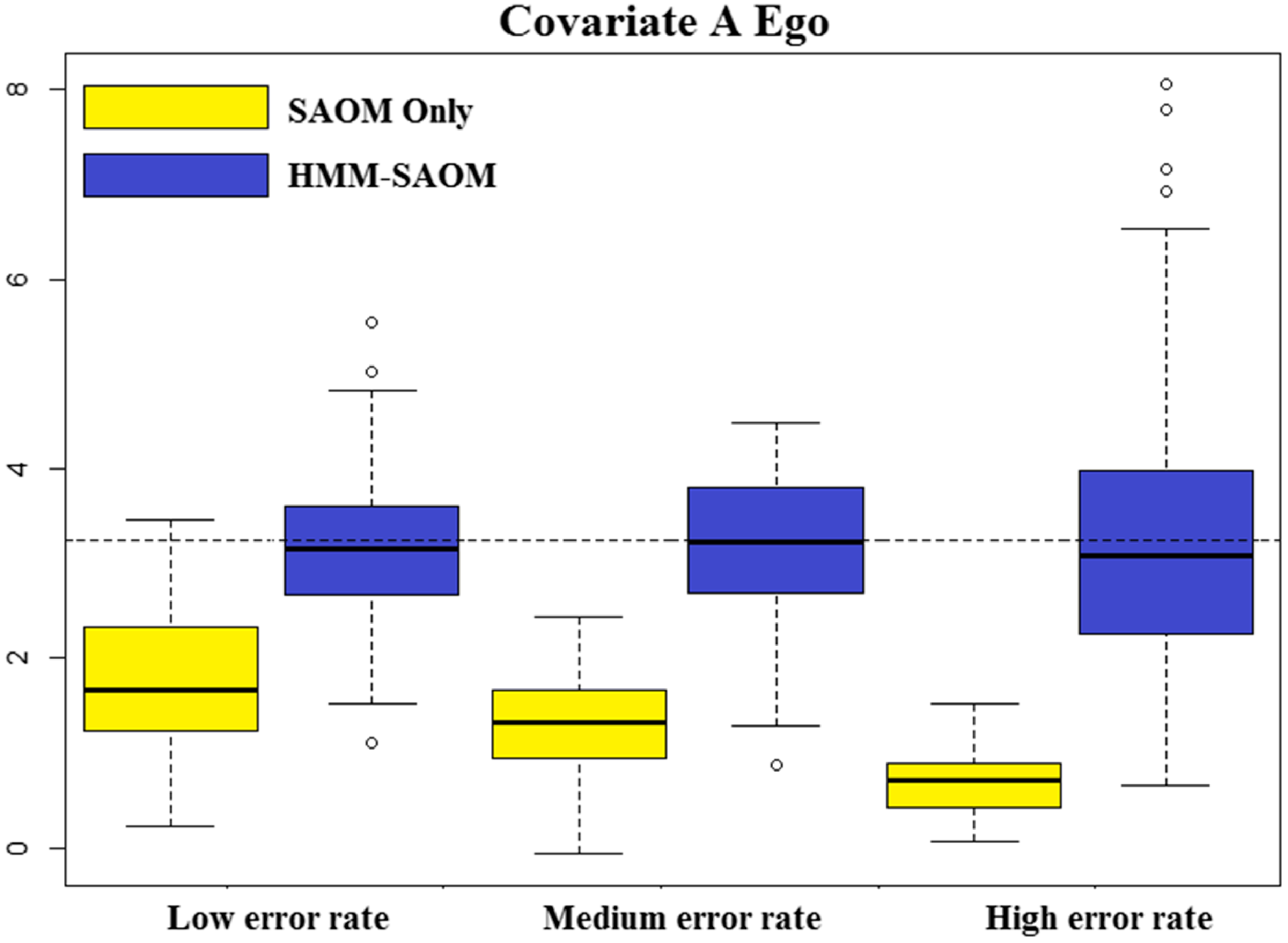

$u(t_{m})$