1. INTRODUCTION

Aesthetically, the return of the composer/performer demands new ways to ‘play’ music. (Ryan 1991: 4)

The use of game structures in music is not new; composers have been using such strategies in various contexts for many years. From the Musikalisches Würfelspiel of the eighteenth century and John Zorn’s Cobra (Zorn 1987), to Terry Riley’s In C (Riley 1968) and Iannis Xenakis’s battle pieces Duel (Xenakis 1959), Stratégie (Xenakis 1966) and Linaia-Agon (Xenakis 1972) (Sluchin and Malt 2011), there have been several different approaches to rule-based strategies in improvisation and composition, spanning radically different performance practices. While such approaches have produced a wide range of musical output, there is a clear common theme: the blurring of established boundaries between composers and performers, instrumental practices and compositions. Such notions quickly become hard to define in this context, as such pieces transcend the established Western hierarchical music-making paradigms. With the musical goal being not necessarily the linear fixed configuration of musical ideas in time, which is often not a priority at all, musical agency shifts from composers to performers, with each manifestation of the piece being only an instance of the blueprint set by the designer. This improvisatory approach to music-making has often presented new opportunities for musical expression. Given space to operate within their own performance environments and a larger amount of agency within the piece’s rule-space, performers are able to respond to each system’s calls to action within their own idiosyncratic vocabularies.
While Zorn used cards as visual cues to structure Cobra, today it is possible to implement a wide range of digital computational systems for improvisational suggestion (Hayes and Michalakos 2012; Gifford, Knotts, McCormack, Kalonaris, Yee-King and d’Inverno 2018).

The rise and democratisation of real-time prototyping and programming environments such as Max/MSP, Pure Data and SuperCollider, as well as circuit bending and embedded computing platforms such as Arduino and Bela, have given birth to a breadth of bespoke performance environments and decentralised communities of musical expression in the past decades. Today, game design is on a similar trajectory. With several game engines such as Unity and Unreal Engine being widely documented and freely available, independent game designers can explore unique ideas in terms of narrative, mechanics, visual and sonic aesthetics, without requiring significant resources, as would have been the case only a few years ago. This opens up a wide range of possibilities for the design of experiences that do not necessarily need to be commercially viable by appealing to large audiences.

Just as the wide range of new instruments for musical expression was born out of different motivations and musical goals situated in disparate artistic milieus, from free jazz to live coding, the goals of game-based music-making can also vary greatly. Ricardo Climent has been exploring works for virtual instruments in virtual spaces using game engines (Climent 2016). Adriana Sa, in her piece AG#3, has experimented with the audio input of a zither controlling a digital environment (Sa 2014). Henrique Roscoe’s DOT is a videogame with no winner; an audiovisual performance with synchronised sounds and images (Roscoe 2016). Martin Parker’s gruntCount (2012) is a computer music system likened to a computer game, where the performer steps through a landscape of possibilities using performer sounds (Parker and Furniss 2014). From experimental games with no winners to competitive pieces such as EvoMusic and Chase (Studley, Drummond, Scott and Nesbitt 2020) and smartphone musical apps such as Ocarina (Smule 2008) affording musical expression (Wang 2014), game-based music today is one of the most exciting and diverse areas of performance practice.

2. WHERE DOES THE GAME END AND THE INSTRUMENT BEGIN?

Conversations related to composer/performer agency and interface design, in relation to player engagement and expression, are particularly relevant in the games industry. With a plethora of commercially available musical games such as Guitar Hero (Harmonix 2005), Rocksmith (Ubisoft 2011) and Magic Piano (Smule 2010), designers need to constantly address such questions, which feed directly into the design of their products. At the Game Developers Conference of 2015 in San Francisco, a panel titled Where Does the Game End and the Instrument Begin? took place. Smule’s Tod Moldover observed that not fixing a strict tempo at which notes should be triggered in the Magic Piano app, and instead waiting for players to tap on the screen in their own time, gave them a greater sense of authorship of the produced music. This possibility of expression made a significant difference in terms of user engagement, providing a heightened feeling of musical control. On the other hand, Guitar Hero’s fixed musical grid presents timing and synchronisation challenges to keep players engaged. While the player definitely develops a skill throughout the game, it is less clear that the game affords musical expression, as it is not possible to deviate from the timeline of events.

Using acquired skills expressively through an interface is often discussed outside the realm of music, in game design. To illustrate this, let us consider a simple Super Mario Bros (Nintendo 1985) game level. First, there are certain actions for the Mario avatar to perform through the gamepad: walk left or right, jump, duck and shoot a projectile. Then, as part of the level there are platforms, power-ups, hazards and a flag where the level ends. The goal for each level is for the avatar to reach the flag; however, the player is free to use their avatar in any way they want in order to achieve this. Additionally, points are added to the score for extra actions performed, such as collecting coins. The challenge for the game designer is to provide a compelling experience for the player: a mixture of tension build-up, release and interwoven narrative, with the configuration of the platforms and enemies becoming harder and harder as the game progresses and the player becomes more skilled in controlling their avatar.

A few parallels here could be drawn from music. Instead of using an avatar navigating through a level, in an orchestral context the violin player uses a violin to navigate through a linear piece of music by looking at notation. In a sense, the notation becomes the platforms and hazards of the videogame that the player needs to skilfully navigate through. Expressive control emerges as player and instrumentalist both navigate through that landscape and reach the end of the designed experience. For both the highly skilled gamer and virtuoso violinist, the physical interaction with their respective interface eventually becomes frictionless. As such, the composer/game designer’s success is measured by the ability to design an appropriate expressive experience using existing and acquired skills, providing opportunities for risk-taking, tension, growth and reward.

One can easily observe more similarities between musical performance and game play. For example, the use of extended gameplay techniques such as speed-running could be seen as an equivalent to extended instrumental techniques in contemporary composition, compared with the intentions of the game designer or instrument designer. Speed-running in games is the attempt of players to complete the game as quickly as possible by mastering gameplay techniques or by finding new affordances within the game, outside the intentions of the designer. Similarly, extended techniques are instrumental techniques that performers have mastered, without necessarily being in the instrument’s established or orthodox vocabulary. Just as circular breathing can in some sense break the rules of what a saxophone can do, mastering extended gameplay techniques such as wall-jumping in the game Super Metroid (Nintendo 1994) can give the player access to areas earlier than the designer intended. In other games, such as Braid (Number None 2009), the player can rewind time so death becomes irrelevant, while in open world games such as the Grand Theft Auto series (Take-Two Interactive 1997), the player is free to explore and improvise with their avatar, while also being able to follow a narrative if they choose to do so. From the linear level design of Super Mario, to the open world of Grand Theft Auto, there is a plethora of skills to be acquired and gameplay expression to be performed, just as the role of a violinist varies across a wide spectrum of expression, from an orchestral performance to free improvisation.

While there are a number of games attempting to recreate existing instrumental practices for non-expert audiences through instrument-like interfaces, these only tend to be lower resolution versions of their analogue counterparts, despite their value in a social context (Miller 2009). To that end, perhaps one needs to look for musical expression in games elsewhere. By harnessing emergent gameplay scenarios from existing game genres, such as platform, racing or action games, one might be able to repurpose expressive gameplay for musical purposes. An example of this approach is the action game Ape Out (Devolver 2019) where the player controls an avatar running through a maze while evading enemies. The game’s four chapters essentially represent four free jazz albums with each level representing one track. All the avatar’s gestures and actions are represented in the procedurally generated soundtrack, with the player essentially performing free jazz music through a conventional gamepad. Additionally, the levels are randomised at the start of each game, which makes the experience non-repetitive. Other games exploring different approaches to musical expression are Spore (Electronic Arts 2008), Hohokum (Sony Computer Entertainment 2014), Rez (Sega 2001) and Thumper (Drool 2016), each using dynamically generated soundtracks emerging from gameplay.

3. CONTROL

Conventional game controllers are designed to fit nearly all possible gaming scenarios and to be used by a wide range of users. They are cheap, accessible and easy to become familiar with, and in a short time they become transparent interfaces between game and player. While the design of a game and the player objectives can be significantly complicated and considerably varying even within the same game, the controllers remain simple in principle, relying on dynamic multimapping depending on each interactive experience in order to provide an accessible physical interface.

This of course does not mean that the game controllers themselves cannot be musically expressive under the right circumstances, as seen in some of the aforementioned games. Additionally, as they are designed to be versatile, they are frequently used in the New Interfaces for Musical Expression (NIME) community as physical interfaces to complex sound synthesis and interactive music systems. Notable examples include Robert van Heumen, who uses a game controller in his project Shackle with Anne La Berge (La Berge and van Heumen 2006), and Alex Nowitz, who uses Nintendo Wii controllers for his vocal performance processing environment (Nowitz 2008).

In contrast to the game controller, mastering an acoustic musical instrument requires a lifetime of continuous practice and engagement. Physical resistance from the instrument plays an integral role in this. While there are already commercially available game controllers making use of haptic feedback, such as the Novint Falcon and the Sony DualShock, most of the designed haptic relationships between players and game events are one-way. The intention of this feedback is to either reinforce established game mechanics, or to contribute to game immersion. For instance, haptic feedback synchronised with key events in horror games or weapon recoil in first-person shooters helps further ground the player within the game world. This type of physical resistance is not an inherent difficulty to be mastered but only an extra dimension to the existing gameplay experience.

In the case of the instrumental performer on the other hand, it is exactly overcoming the instrument’s inherent or designed resistances that leads to skill and expressive range. As Joel Ryan observes, NIME controllers should be effortful as effort is closely related to expression (Ryan 1992). In time, the skilled instrumental performer is able to use an instrument with the same intuition and agility as a gamer does a game controller. The difference between a player making music through a game such as Ape Out and a virtuoso violinist improvising, however, is that in the first case the player has only a few hours to master the combined affordances of their game controller and digital avatar, while the violinist has acquired skill over decades, having been through a much longer and steeper learning curve. While they both lead to an expressive scenario in the short term of one particular piece or game level, the instrumentalist is then able to reach significantly wider ranges of nuance, precision and control, affording musical expression and improvisation over several pieces and repertoire. There could therefore be significant musical opportunities if one were to harness the skills of the agile instrumentalist and combine them with real-time emergent gameplay and performance into a hybrid gameplay-performance medium.

4. PERFORMANCE PRACTICE WITH THE AUGMENTED DRUM KIT

As a composer/improviser, the author has always been more comfortable in perceiving his performance practice not as a process towards an end product, a musical piece in a finished state either in the form of musical notation or recording, but as a blueprint of musical potentiality where opportunities for musical expression are built into the system. The exploration of the ‘contiguities between composition, performance and improvisation which can be seen to be afforded by current technologies’ (Waters 2007) strongly resonates with this work. Also, each performance is only seen as one particular instance of the piece within the space of possibilities that each piece affords; one particular point of view to the designed sonic landscape, prepared and positioned at a point where interesting observations can be made. As each performance with the same musical potential could take a different direction, it is only after several performances that the shape of the piece starts to become apparent (Figure 1).

Figure 1. Left: Pathfinder performance path at NIME in Brisbane, 2016. Right: overlaid paths of all conference and festival performances.

4.1. Instrument design

The author’s performance practice is centred on the augmented drum kit, which consists of a traditional drum kit mounted with sensors, contact microphones, speakers, DMX-controlled lights and bespoke software programmed in Max/MSP (Michalakos 2012a). The acoustic kit is in essence the control interface of the electronics. There is minimal interaction with the computer during performances – all control of the electronic sound is carried out through the acoustic instrument with the computer serving only as the mediator for all assembled pieces of digital and analogue technology. The instrument has been used in various contexts, from solo improvised performances, such as in Frrriction (Michalakos 2012b), to structured pieces and group performance such as Elsewhere (Edwards, Hayes, Michalakos, Parker and Svoboda 2017). The instrument’s digital system comprises a series of presets and musical parameters that the performer can navigate through. This can be done either linearly when the performance has a predetermined course, or non-linearly, when the performance is open-ended. Different presets are mapped onto different processing routings, parameter control values and mappings from the acoustic sound to the electronics, and thus provide distinct sound worlds. The transition between presets could either be abrupt or gradual through parameter interpolation, depending on the requirements of the performance. At the heart of the instrument is the real-time signal analysis of the acoustic sound. Sound descriptors such as periodicity, noisiness, transient detection, specific drums being hit and the sequence of the hits are all used to shape the processing and synthesis parameters.
At each point during a performance, all extracted control parameters work on two levels: first, attempting to determine how to progress or not through the timeline of the performance; and second, used for sound synthesis and live-audio processing parameter control. The interpolation between different states can be driven by different inputs, including the time elapsed, number of drum hits performed, or performance intensity. Where a series of presets is set for the piece, one rarely has to interact with the computer as it is the type of musical gestures on the drum kit that determine how quickly the piece needs to progress. In order to make these unsupervised decisions about the next actions, the software constantly analyses musical gestures, extracting meaningful parameter information. To this extent, the piece is controlled organically through the acoustic instrument, with the performer being able to choose either to explore a particular sonority further or to quickly progress through it by performing the appropriate musical gestures, much as in Martin Parker’s work gruntCount (Parker 2013).
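
The gradual transition between presets can be illustrated with a minimal sketch. It assumes that a preset is a flat dictionary of numeric parameters and that the transition is driven by a running drum-hit count; the function names, parameter names and values are hypothetical and are not taken from the author's Max/MSP software.

```python
def interpolate_presets(preset_a, preset_b, position):
    """Linearly blend two presets; 0.0 returns preset_a, 1.0 returns preset_b."""
    t = min(max(position, 0.0), 1.0)  # clamp so overshooting input cannot extrapolate
    return {name: (1.0 - t) * preset_a[name] + t * preset_b[name]
            for name in preset_a}


def position_from_hits(hits_so_far, hits_needed):
    """Map a running drum-hit count onto a 0..1 interpolation position."""
    return min(hits_so_far / hits_needed, 1.0)


# Hypothetical presets: the parameter names and values are illustrative only.
dry = {"delay_feedback": 0.0, "filter_cutoff_hz": 200.0}
wet = {"delay_feedback": 0.8, "filter_cutoff_hz": 4000.0}

# Halfway through an assumed 100-hit transition.
halfway = interpolate_presets(dry, wet, position_from_hits(50, 100))
```

Replacing `position_from_hits` with a function of elapsed time or of a measured intensity would give the other two drivers mentioned in the text without changing the blending step.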

4.2. Instrument-specific game design

Given that the augmented drum kit’s software is already a rule-based performance framework controlled by physical gestures, using a game engine can be seen as an organic extension of the instrument. Two of the author’s electroacoustic game-pieces, Pathfinder and ICARUS, were designed and implemented using the Unity game engine and Jorge Garcia’s UnityOSC interoperability API to communicate with the Max/MSP patch. The electroacoustic sound of the drum kit itself becomes the control interface of the games, while the games become in some sense a digital visual representation of the instrument’s sound world, projected behind the drum kit (Figure 2). This creates an additional layer of engagement, as performer and audience are looking at the same visual information, in all transparency, much as in the field of live coding where audiences can see in real time the code that produces the music. The game acts as a structure and interface for the sound engine of the instrument where both performer and audience perceive a set of objectives and implied narrative.
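
To make the link between the analysis software and the game engine more concrete, the following sketch shows what a single Open Sound Control (OSC) message carrying one descriptor value looks like on the wire. It uses only the Python standard library rather than UnityOSC or Max/MSP, and it assumes UDP transport; the address `/drumkit/periodicity`, the host and the port are hypothetical.

```python
import socket
import struct


def osc_pad(data: bytes) -> bytes:
    """Null-terminate a string and pad it to a multiple of four bytes (OSC 1.0)."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_float_message(address: str, value: float) -> bytes:
    """Encode an OSC message carrying a single 32-bit big-endian float."""
    return osc_pad(address.encode("ascii")) + osc_pad(b",f") + struct.pack(">f", value)


def send_descriptor(value: float, host: str = "127.0.0.1", port: int = 9000) -> None:
    """Send one descriptor value over UDP (address, host and port are assumptions)."""
    packet = osc_float_message("/drumkit/periodicity", value)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet, (host, port))
```

A receiver in the game engine would listen on the same port and route each address to the relevant game parameter.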

Figure 2. Left: Pathfinder open world movement. Right: Improvisation area.

5. PATHFINDER

Pathfinder (2016) was premiered at the International Conference on Live Interfaces in 2016 in Brighton. It is a musical game that takes advantage of the augmented drum kit’s bespoke musical vocabulary and turns it into an expressive game controller where all extracted information, including timbral, spectral, dynamic and temporal qualities, informs the progress of the piece. While there is a definite beginning and end to the narrative and a series of events to complete the game, all decisions about the time and order are left to the performer. As the main aim for the game is to take advantage of the established performance practice with the instrument, there were certain concrete design principles from the start:

1. There should not be a predetermined linear game sequence. The performer should be able to move freely and explore the world in any direction on the plane.
2. The game should encourage improvisation, nuance and experimentation.
3. Nothing should happen unless the performer uses the instrument: lack of musical input results in inactivity in the game.
4. There are no timed cues or onscreen events that the performer has to synchronise to, and no penalty for being silent or inactive for any period of time.

5.1. Game design

These principles aimed to give the performer as much freedom as possible, while attempting to create the potential for musically engaging scenarios through visual feedback shown to both performer and audience. While Guitar Hero could be described as a timing and synchronisation game, Pathfinder could be described as a musical puzzle game. The player needs to be actively observing the relationships between the sonic/gestural input and visual output and understand which parts of the instrument’s musical vocabulary are required for each section in order to progress. The difference from a conventional puzzle game, where false actions simply delay the game’s completion or lead to a fail state, is that in Pathfinder erroneous gestures contribute to the musical performance even though they do not contribute to the game’s completion. In fact, it is often the case that the performer wanting to prolong a section will not perform the right gestures until the musical moment for progression is right. The performer has to navigate between four primary improvisation areas and three secondary objectives. All the primary areas need to be completed successfully in order for the game to finish. The secondary objectives could be seen as side-quests in conventional role-playing games, where their completion has some benefit to the experience but is not necessary in order to finish the game.

5.2. Movement

The game puts the performer in first-person perspective within an open world. A bird’s eye perspective of the real-time path and position in the game-space is also available (Figure 1, left). Switching between the two perspectives is possible through a foot pedal. At the start of the game the player has 555 available steps (similarly to the main mechanic of the infamous Atari game E.T. the Extra-Terrestrial (Atari 1982)). Each step is triggered whenever the microphone detects a transient above a certain threshold from the drum kit, which happens roughly each time the performer hits the snare drum with a drumstick. This means that the player first has to choose how to spend the remaining hits and in which direction to move. The steps are replenished to maximum each time one of the improvisation areas is completed successfully.
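
The step mechanic can be sketched as a counter that decrements on each detected transient. This is a simplified illustration, not the author's implementation: it assumes the input is a stream of amplitude-envelope values and treats an upward crossing of a fixed threshold as a transient; the class name, the threshold value and the envelope data are hypothetical, while the 555-step budget comes from the text.

```python
class StepCounter:
    """Spend one step per detected transient; refill when an area is completed."""

    def __init__(self, max_steps=555, threshold=0.3):
        self.max_steps = max_steps
        self.remaining = max_steps
        self.threshold = threshold  # assumed amplitude threshold (0..1 scale)
        self._above = False         # whether the previous value was above threshold

    def process(self, amplitude):
        """Feed one envelope value; return True if it triggered a step."""
        rising = amplitude >= self.threshold and not self._above
        self._above = amplitude >= self.threshold
        if rising and self.remaining > 0:
            self.remaining -= 1
            return True
        return False

    def replenish(self):
        """Restore the full step budget after a completed improvisation area."""
        self.remaining = self.max_steps

    @property
    def out_of_steps(self):
        return self.remaining == 0


# Hypothetical envelope containing two separate hits.
counter = StepCounter()
envelope = [0.0, 0.5, 0.6, 0.1, 0.4, 0.0]
steps_taken = sum(counter.process(value) for value in envelope)
```

Counting only upward crossings means that a single sustained loud sound spends one step rather than one step per analysis frame.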

5.3. Improvisation areas

When one of these areas is found, the screen changes from the main view to the objective progression bar (Figure 2, right). Correct gestural input results in the bar progressing forward to the right, while inactivity pushes the bar back to the beginning. After repeatedly performing acceptable sonic gestures, the progress bar reaches the end and the objective is considered met. The steps are replenished to the maximum number and the player continues exploring the open world. To illustrate, one of the areas requires sounds with periodicity above a certain value. Once the type of sonic input for an area is identified, the performer needs to produce sounds above a certain periodicity (bowing cymbals or hitting pitched bells would work, for example) until the bar reaches the end. In the case that the player remains idle during an area where a certain action is required, the bar will start moving backwards and eventually return to its starting position. It is only by a continuous push through particular sonic gestures that the progress bar can reach the end and the step number be replenished. Experimentation plays a significant role here: because the areas are randomised every time the game starts, the performer does not initially know which area they are in, so improvisation is necessary in order to discover the type of sonority or gesture that is required. At the start of the improvisation, the bar will probably not progress much as different types of gestures are performed in succession. When a cymbal or bell is hit, the bar will start progressing slightly more because of the periodic spectral content. The improviser will then realise that pitched material is required in order to progress, so it is up to them either to feed the system solely with pitched content and finish with this area promptly or to play around that fact in order to stay in this state for a longer period of time.
It needs to be noted that at the same time all these gestures need to make sense musically, so the performer is in a constant state of friction between playing the game and performing a coherent piece of music, creating an interesting tension that is perceived by the audience. This tension is quite possibly where the essence of this piece lies.

The progress bar behaves differently according to each area. While in most cases it pushes back if inactive, in certain cases it requires silence. This is indicated by different bar colours that can change at any time: when the bar is white and the background is black, the correct input will cause the bar to move forward; however, if the bar changes to black and the background to white, opposite rules apply and the bar moves backwards when the correct input is performed, undoing any progress made thus far.
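
The behaviour of the progress bar, including the colour inversion, can be sketched as follows. This is only an illustration under stated assumptions: the area is taken to require periodicity above a threshold, non-matching sound is assumed to leave the bar unchanged, and the inverted state is modelled as a simple sign flip (so that silence advances the bar). The class name and all numeric values are hypothetical.

```python
class ProgressBar:
    """Progress bar for one improvisation area (periodicity-based, as an example)."""

    def __init__(self, periodicity_threshold=0.6, gain=0.05, decay=0.02):
        self.periodicity_threshold = periodicity_threshold  # assumed descriptor range 0..1
        self.gain = gain      # forward movement per matching analysis frame
        self.decay = decay    # backward movement per silent frame
        self.value = 0.0      # 0.0 is the start of the bar, 1.0 is the end
        self.inverted = False # True models the black bar on a white background

    def update(self, periodicity, is_silent):
        """Advance one analysis frame; return True once the bar is full."""
        matches = (not is_silent) and periodicity >= self.periodicity_threshold
        if matches:
            delta = self.gain
        elif is_silent:
            delta = -self.decay
        else:
            delta = 0.0  # assumption: wrong but audible gestures neither help nor hurt
        if self.inverted:
            delta = -delta  # opposite rules: correct input now undoes progress
        self.value = min(max(self.value + delta, 0.0), 1.0)
        return self.value >= 1.0
```

In this model a performer who wants to prolong a section can keep playing non-matching material, which holds the bar in place without triggering the decay caused by silence.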

5.4. Secondary objectives

The secondary objectives are represented by multicoloured polygons spawned in the open-world area and make use of the drum kit’s embedded DMX-controlled lights. If a polygon is found and collected, its colour is added to the instrument’s light palette, providing an additional layer of visual connection between colours seen on the projected screen and the physical performance space. The mapping of the lights to the performance gestures varies according to the area of the game and is dynamic, similar to the live electronics. By collecting the polygons, the drum kit acquires multiple light colour functionality, just as a player can acquire multiple types of power-ups over time in a conventional role-playing game.

5.5. Fail state

In the case that the performer has used all the available steps without completing the four areas, the game is over and the performance needs to restart. Quite early and before the piece’s premiere, the decision was made to actively introduce the fail state into the performance. While failure was always a possibility and did occur organically on many occasions, it was decided that the audience should know immediately what the player is trying to avoid (which is the step count reaching 0 without having completed the game’s four improvisation areas). This is why it was decided that when performed live, the first playthrough should be a failed one. After the start of the performance the player performs a snare drum solo, moving in a straight line without any area exploration and quickly exhausting the available steps. The bird’s eye view map (as seen in Figure 1) showing the path until that moment is shown to the audience along with a ‘game over’ prompt. The game resets to the main menu, and the process starts again, with the audience now knowing what the implication is if the steps are misspent.

5.6. Visual feedback

While the augmented drum kit’s software has its own bespoke performance interface, which is not visible to the audience, in Pathfinder the visual feedback for both player and audience is the game’s projected digital space itself. The information displayed is clear and minimal and the relationships to the performance gestures apparent. The game’s simple display helps to further contextualise the instrument’s sound world to non-specialised audiences. The displayed information comprises three series of numbers. On the top left (Figure 3), the performer can see the objectives completed, a total of seven (four primary and three secondary). On the bottom right, three numbers signify the x, y, z position of the player in the game world. At the centre of the screen the performer can see the remaining available steps, here 277. The number of the remaining steps is positioned at the centre so that the performer can simultaneously aim towards the desired direction via the centre cross, while also being constantly aware of the number. This allows for the player to perform undistracted by unnecessary visual elements but to be helped by useful information, in the same way that a car driver quickly accesses information and rules about the road through clear and non-ambiguous road signs.

Figure 3. Pathfinder HUD.

5.7. Discussion

The performance itself can take many forms: from a very efficient and quick playthrough lasting only a few minutes, to a sparse and slow performance spending a lot of time within and between each area, to a risky one where only a few steps are left before entering an improvisation area. As a result, the perception of each performance can vary significantly. For example, a riskier performance would mean a more exciting experience for performer and audience, while a longer performance would mean a more meditative experience, exploring the three-dimensional digital landscape to a greater extent. Combinations of all these approaches can be used within the same performance and this was the most common approach to the piece. The aim is for game, music and instrument to feel as one unified hybrid performance work where the audience does not differentiate between its individual technological components.

6. ICARUS

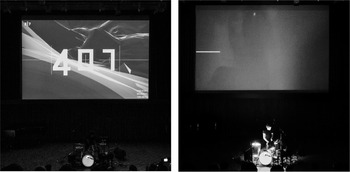

ICARUS (2019) is the author’s second game for the augmented drum kit. It was premiered at the ACM Creativity and Cognition ’19 conference in San Diego (Figure 4). Unlike Pathfinder, where the player navigates within an open world in a three-dimensional space, ICARUS comprises five distinct mini-game chapters presenting different challenges. Each of these defines a particular set of musical interactions, sonorities, and modalities through distinct mappings and level design. The performer is free to improvise, fail, explore, and through trial and error understand what the game rules are and complete each chapter. The game’s chapter sequence can be seen in Figure 5.

Figure 4. ICARUS chapter structure.

Figure 5. ICARUS setup and performance at the ACM Creativity and Cognition conference in San Diego, 2019.

6.1. Game chapters

The chapters are described in the following sections.

6.1.1. Title screen

Making a reference to commercial games where one needs to push a gamepad button repeatedly in order to start a process (such as pulling an in-game lever or turning a wheel), the player needs to repeatedly perform hits on the floor tom in order to turn a circular dial; the moment that the dial reaches the end, the game starts.

6.1.2. Chapter 1: MACH333

The game’s introductory chapter requires the player to perform 333 hits on the snare drum before the time elapses. Failure to do so results in a fail state, after which the game needs to start again. Successfully performing the task leads to the next level.

6.1.3. Chapter 2: VEGA

The player’s avatar rotates randomly through 360 degrees. Each hit performed while the red light is visible subtracts one from the number on the screen, while each hit performed while the blue light is visible adds one. If the number reaches 0 the game is over, while if it reaches 555 the game moves on to the next chapter. The player must pay close attention to the light’s state: it might be tempting, for example, to perform as many drum hits as possible when the light is blue, but if the light suddenly turns red and the hits carry on for an equivalent duration, all progress will be undone.
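
The counting rule of this chapter is simple enough to state as a small function. The sketch below follows the rule as described (blue adds one, red subtracts one, 0 ends the game, 555 advances); the function name, the state labels and the starting value used in the example are hypothetical, since the text does not state where the counter begins.

```python
def vega_hit(counter, light, target=555):
    """Apply one drum hit under the current light; return (counter, state)."""
    counter += 1 if light == "blue" else -1
    if counter <= 0:
        return 0, "game_over"
    if counter >= target:
        return target, "next_chapter"
    return counter, "playing"


# Twenty hits under blue followed by twenty under red cancel out exactly.
counter, state = 100, "playing"  # assumed starting value
for light in ["blue"] * 20 + ["red"] * 20:
    counter, state = vega_hit(counter, light)
```

The loop illustrates the risk described in the text: a burst of hits that carries on after the light changes undoes the progress it has just made.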

6.1.4. Chapter 3: Lagrangian

This chapter is inspired by Lagrange points, which are the points of gravitational balance between planetary objects, where a smaller object will maintain its position relative to the large orbiting bodies. A sphere pulls the player’s avatar towards it, while the player uses audio input to resist the pull and maintain a stable position, neither too far from nor too close to the sphere. There is no fail state in this stage: if the player manages to stay in an area of balance, the game moves into a Secret Chapter, while if the sphere overpowers the player’s efforts, the game simply moves to Chapter 4: RIGEL.

6.1.5. Chapter 4: RIGEL

RIGEL recreates an old coin-flipping logic game, in which the goal is to switch all lights to the same colour. The lights are controlled through hits on different drums, and each correct musical gesture switches two lights at a time. The player therefore has to choose which two lights to flip at each step so that all of them eventually share the same colour. As a twist on the existing puzzle game, the lights reset to randomised positions if the player performs notes above a certain amplitude threshold. The player thus needs to solve the puzzle without playing louder than roughly pianissimo, making this chapter a softer section of the piece.
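The puzzle logic of this chapter can be illustrated with a minimal sketch. The number of lights, the amplitude threshold value, the way a gesture selects a pair of lights, and all names (`RigelPuzzle`, `hit`, `loud_threshold`) are assumptions made for the example; the text specifies only that two lights flip per gesture and that loud notes randomise the lights.

```python
import random


class RigelPuzzle:
    """Hypothetical model of the RIGEL light puzzle (all values illustrative)."""

    def __init__(self, n_lights=5, loud_threshold=0.2, seed=None):
        self.rng = random.Random(seed)
        self.loud_threshold = loud_threshold  # assumed normalised amplitude
        self.lights = [self.rng.choice([True, False]) for _ in range(n_lights)]

    def solved(self):
        """The puzzle is solved when every light shows the same colour."""
        return len(set(self.lights)) == 1

    def hit(self, i, j, amplitude):
        """Flip lights i and j, or randomise everything if the hit is too loud."""
        if i == j:
            raise ValueError("a gesture must address two different lights")
        if amplitude > self.loud_threshold:
            # A loud note discards all progress and re-randomises the lights.
            self.lights = [self.rng.choice([True, False]) for _ in self.lights]
            return False
        self.lights[i] = not self.lights[i]
        self.lights[j] = not self.lights[j]
        return self.solved()
```

In this sketch a loud hit never counts as a solution, even if the randomised lights happen to match; whether the game behaves the same way is not stated in the text.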

6.1.6. Secret Chapter

Following Pathfinder’s improvisation areas, this chapter comprises a progress bar that moves upwards when the performer produces the correct musical gestures, which are selected randomly every time the game restarts. Thus, through improvisation, one finds the desired sonic input that leads to the filling of the bar and the progress to the next section.

6.1.7. Chapter 5: ICARUS

The final chapter is a game of stamina. The performer needs to play continuously on all drums, as quickly and loudly as possible, until the progress bar reaches the top of the screen. Even short periods of inaction cause the progress bar to drop significantly, so if the improviser stops before the level is over, the task becomes increasingly difficult: the player’s physical stamina falls the longer the level is played. In theory, it is entirely possible for the performer to get stuck in this level during a performance, unable to proceed to the end. As inactivity does not lead to a fail state and a game over, the performer would simply have to abandon the game, which in the author’s view is a riskier situation than reaching a game over screen.
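The asymmetry between slow gains and fast losses can be sketched as a simple update rule. The function name `update_progress`, the rates and the reduction of the performer’s playing to a single `active` flag are assumptions for illustration; the text states only that playing raises the bar and that short pauses lower it significantly.

```python
def update_progress(progress, active, dt, rise_per_s=0.05, fall_per_s=0.25):
    """Return the new bar height in [0, 1] after dt seconds.

    The rates are illustrative: the bar falls five times faster during
    inaction than it rises during playing.
    """
    rate = rise_per_s if active else -fall_per_s
    return min(1.0, max(0.0, progress + rate * dt))
```

With these assumed rates, one second of rest costs five seconds of playing. Because the bar is clamped at zero rather than triggering a fail state, a tired performer can remain in the level indefinitely.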

6.2. Discussion

This game’s chapters are designed to be relatively short, with each section exploring particular attributes of the electroacoustic vocabulary of the instrument. While in Pathfinder the open-world design lends itself to a wider set of options that could all be valid towards the completion of the game, in ICARUS each chapter presents very specific challenges. Additionally, as failure happens quite often, quick transitions between the ‘game over’ screen, the title screen and the five chapters are all part of the performance’s aesthetic. The same chapters might need to be performed numerous times, more efficiently on each attempt, so as to return quickly to the chapter where the player last failed. This is inspired by conventional games, where players can fail multiple times at a certain challenging point of the game until they develop the necessary skills to survive it and move on to the next challenge. Points that were initially challenging then fall within the player’s abilities, which leads to quick, repetitive playthroughs until the new challenge is surpassed. In both Pathfinder and ICARUS, the electronic sound of the instrument plays an equally important role in the performance. All acoustic sound is used both as a game controller and as input to the instrument’s live electronics processing environment; in turn, the avatar’s position in the game world becomes an input parameter for the control of the electronics, which have been designed according to each section’s musical goals. To put it simply, in terms of live electronics processing, Pathfinder’s avatar position in the 3D space is the preset interpolation point between sonic states that have been positioned in the digital landscape, while in ICARUS each chapter is essentially a distinct Max/MSP preset of the augmented drum kit’s electronic sound. As such, game and electroacoustic sound world are merged into a hybrid performance medium.
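To make the idea of position-driven preset interpolation concrete, the following sketch blends parameter sets placed at points in a 3D space according to the avatar’s distance from each. Inverse-distance weighting is an assumption chosen for simplicity, not necessarily the method used in the piece’s Max/MSP environment, and the function and parameter names (`interpolate_presets`, `reverb`) are hypothetical.

```python
import math


def interpolate_presets(avatar, presets, power=2.0):
    """Blend preset parameters by inverse distance to the avatar.

    presets is a list of (position, params) pairs, where position is an
    (x, y, z) tuple and params maps parameter names to numeric values.
    """
    weights = []
    for position, params in presets:
        distance = math.dist(avatar, position)
        if distance == 0.0:
            return dict(params)  # avatar sits exactly on a preset
        weights.append(1.0 / distance ** power)
    total = sum(weights)
    names = presets[0][1].keys()
    return {
        name: sum(w * p[name] for w, (_, p) in zip(weights, presets)) / total
        for name in names
    }
```

For example, an avatar halfway between a dry preset and a fully reverberant one receives a `reverb` value of 0.5, and the value moves smoothly towards either extreme as the avatar approaches it.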

7. FUTURE DIRECTIONS

The two pieces were performed on several occasions, most notably at the International Conference on Live Interfaces (ICLI) 2016, New Interfaces for Musical Expression (NIME) 2016, Digital Games Research Association (DiGRA) 2016, Foundations of Digital Games (FDG) 2016, Sonorities Festival 2016, the Edinburgh Science Festival 2016, the International Computer Music Conference (ICMC) 2017 and ACM Creativity and Cognition 2019. Pathfinder won the best performance award at the NIME 2016 conference in Brisbane, Australia. At the time of writing, ICARUS is planned to be performed at an online version of xCoAx ’20 in Graz, Austria and NIME ’20 in Birmingham, UK.

Using game engines to structure electroacoustic improvisation has proved to be extremely rewarding. By harnessing an established performance practice through instrument-specific musical games, very particular expressive aspects of the instrument are explored and employed in diverse gameplay scenarios. Projecting the game behind the performer enables the audience to experience the progression of each piece, leading to further engagement with the performance. Audiences have commented positively on this, emphasising that the visual information is used not as a private means for the performer to monitor the instrument but as a transparent and accessible common visual medium for performer and audience. While it is good to know that the visual elements of the pieces help make the narrative of the electroacoustic improvisation more transparent, it is important to note that this is not necessarily a high priority by design. All elements of the pieces are designed to complement each other equally in one gameplay-performance medium, rather than using the projected visuals simply to provide additional insights into the musical performance. The playful character of the performances also adds a layer of humour and empathy with the performer, particularly when failing or reaching game over screens.

In the future, the author will be exploring multiplayer games for several performers, either in cooperation mode or as duels. In the game-piece Death Ground (Michalakos and Wærstad Reference Michalakos and Wærstad2019) some of the musical implications of a one-versus-one combat game have already been explored. In this case the digital avatars are controlled through conventional game controllers rather than instruments; however, the piece will be further explored using musical instruments as control inputs. There are also many lessons to be learned from online gameplay communities, where emergent gameplay can lead to complex interactions. These could be used as a point of departure for real-time improvised performance structures involving zero-sum scenarios or resource management games. Such frameworks could be used not only to facilitate short-term improvisation but also to encourage longer-term strategies between groups of performers engaging in musical battles, the overall outcome of which could be determined over several performances.

Acknowledgments

The author wishes to acknowledge the support of Abertay University and the Abertay Game Lab in Dundee, Scotland.