1. Introduction

Temporal logic (TL) is arguably the primary language for the formal specification and reasoning about system correctness and safety. It has been successfully applied to the analysis of a wide range of systems, including cyber-physical systems (Bartocci et al., Reference Bartocci, Deshmukh, Donzé, Fainekos, Maler, Nickovic and Sankaranarayanan2018), programs (Manna and Pnueli, Reference Manna and Pnueli2012), and stochastic models (Kwiatkowska et al., Reference Kwiatkowska, Norman and Parker2007). In cyber-physical systems, TLs are especially useful for expressing and verifying critical properties of these systems, to ensure systems meet performance and safety criteria. For example, (probabilistic) TLs can express safety and reachability properties (e.g., ‘will the system eventually reach the goal state(s) while avoiding unsafe states?”) and fault-tolerance properties (e.g., ‘will the system return to some desired service level after a fault?”).

However, a limitation of existing TLs is that TL specifications must be evaluated on a fixed configuration of the system, for example a fixed choice of control policy, communication protocol, or system dynamics. That is, they cannot express queries like ‘what is the probability that the system throughput will stay above a certain threshold if we switch to a high-performance controller?”, or ‘what would have been the probability that the signal would have stayed below a given threshold if we had used a different policy in the past?” This kind of reasoning about different system conditions falls under the realm of causal inference (Pearl, Reference Pearl2009), by which the first query is called an intervention and the second a counterfactual. Even though both causal inference and TL-based verification are well-established on their own, their combination hasn’t been sufficiently explored in past literature (see Section 7 for a more complete account of the related work). With this paper, we contribute to bridging these two fields.

We introduce PCFTL (Probabilistic CounterFactual Temporal Logic), the first probabilistic temporal logic that explicitly includes causal operators to express interventional properties (‘what will happen if…”), counterfactual properties (‘what would have happened if…”), and so-called causal effects, defined as the difference of interventional or counterfactual probabilities between two different configurations. In particular, in this paper we focus on the analysis of Markov Decision Processes (MDPs), which are capable of modeling sequential decision-making processes under uncertainty, a key aspect in many cyber-physical systems applications. MDPs provide a useful framework for a variety of applications, such as reinforcement learning, planning, and probabilistic verification. For MDPs, arguably the most relevant kind of causal reasoning concerns evaluating how a change in the MDP policy affects some outcome. The outcome of interest for us is the satisfaction probability of a temporal-logic formula.

Interventions are ‘forward-looking” (Oberst and Sontag, Reference Oberst and Sontag2019), as they allow us to evaluate the probability of a TL property ϕ after applying a particular change X ← X′ to the system. Counterfactuals are instead ‘retrospective” (Oberst and Sontag, Reference Oberst and Sontag2019), telling us what might have happened under a different condition: having observed an MDP path τ, they allow us to evaluate ϕ on the what-if version of τ, that is the path that we would have observed if we had applied X ← X′ at some point in the past, provided that the random factors that yielded τ remain fixed. Causal effects (Guo et al., Reference Guo, Cheng, Li, Hahn and Liu2020) allow us to establish the impact of a given change at the level of the individual path or overall, and they quantify the increase in the probability of ϕ induced by a manipulation X ← X′. Causal and counterfactual reasoning has gained a lot of attention in recent years due to its power in observational data studies: with counterfactuals, one can answer what-if questions relative to an observed path, that is without having to intervene on the real system (which might jeopardize safety) but using observational data only. Our PCFTL logic enables this kind of reasoning in the context of formal verification.

Our approach to incorporating causal inference in temporal logic involves only a minimal extension of traditional probabilistic logics. PCFTL is an extension of PCTL⋆ (Baier et al., 1997; Baier, 1998) where the probabilistic operator P ⋈ p (ϕ), which checks whether the probability of ϕ satisfies threshold ⋈ p (where ⋈ ∈ {≤, <, >, ≥ }), is replaced with a counterfactual operator I @t .P ⋈ p (ϕ), which concerns the probability of ϕ if we had applied intervention I at t time steps in the past. Albeit minimal, such an extension provides great expressive power: if t > 0, then the operator corresponds to a counterfactual query; if t = 0, it represents an interventional probability; if both t = 0 and I is empty, then we retrieve the traditional P ⋈ p (ϕ) operator.

Motivating example: To better grasp interventions and counterfactuals, consider an example of a robot in a 2D space, modeled by the equation S

t + 1 = S

t

+ A

t

+ U

t

, where S

t

∈ ℝ2 and A

t

∈ ℝ2 are the state and action at time t, and U

t

∈ ℝ2 is an unobserved random exogenous input (e.g., white Gaussian noise). The robot must satisfy a bounded safety property ϕ = ¬ℱ[1,4](S

t

≥ [1, 2]), which specifies that the robot must avoid entering the unsafe region S

t

≥ [1, 2] on all paths (up to length 3) that it takes. Suppose we observe a path τ under some policy π, given by

![]() $\tau = [0, 0]{ [0, 1]\atop{\longrightarrow}} [0.1, 0.5] {[1,1]\atop{\longrightarrow}} [0.8, 1.75] {[0, 0]\atop{\longrightarrow}}[1.3, 2.1]$

, where

$\tau = [0, 0]{ [0, 1]\atop{\longrightarrow}} [0.1, 0.5] {[1,1]\atop{\longrightarrow}} [0.8, 1.75] {[0, 0]\atop{\longrightarrow}}[1.3, 2.1]$

, where

![]() $s{ a\atop{\longrightarrow}}s'$

denotes a step from state s to s′ through action a. This path, and hence policy π, is unsafe because it violates the safety property in its final state. A question then arises: given τ, if we had intervened in the past by changing the policy from π to some π′, could have we prevented this violation? Define the intervention

$s{ a\atop{\longrightarrow}}s'$

denotes a step from state s to s′ through action a. This path, and hence policy π, is unsafe because it violates the safety property in its final state. A question then arises: given τ, if we had intervened in the past by changing the policy from π to some π′, could have we prevented this violation? Define the intervention

![]() $I = \pi \leftarrow \pi^\prime$

. Then, the counterfactual PCFTL query I

@3.P

⋈ p

(ϕ) allows us to evaluate the probability of the safety property ϕ in a what-if version of τ where we apply I (i.e., policy π′ instead of π) at 3 steps back from the last state of τ, that is from the beginning of the path in this case.Footnote

1

In particular, the counterfactual path is obtained by applying I but by keeping the same values of the random exogenous factors U

t

that led to τ. These factors cannot be directly observed, but, given the above Equation, they can be readily determined as U

t

= S

t + 1 − S

t

− A

t

, leading to U

1 = [0.1,−0.5], U

2 = [−0.3,0.25], and U

3 = [0.5,0.35]. Then, suppose the alternative policy π′ chooses actions A′1 = [0,0.5] and A′3 = [−0.4,−0.2] (but keeps A′2 = A

2), then this induces the counterfactual path

$I = \pi \leftarrow \pi^\prime$

. Then, the counterfactual PCFTL query I

@3.P

⋈ p

(ϕ) allows us to evaluate the probability of the safety property ϕ in a what-if version of τ where we apply I (i.e., policy π′ instead of π) at 3 steps back from the last state of τ, that is from the beginning of the path in this case.Footnote

1

In particular, the counterfactual path is obtained by applying I but by keeping the same values of the random exogenous factors U

t

that led to τ. These factors cannot be directly observed, but, given the above Equation, they can be readily determined as U

t

= S

t + 1 − S

t

− A

t

, leading to U

1 = [0.1,−0.5], U

2 = [−0.3,0.25], and U

3 = [0.5,0.35]. Then, suppose the alternative policy π′ chooses actions A′1 = [0,0.5] and A′3 = [−0.4,−0.2] (but keeps A′2 = A

2), then this induces the counterfactual path

![]() $\tau '= [0,0]{ [0, 0.5]\atop{\longrightarrow}} [0.1, 0] {[1, 1]\atop{\longrightarrow}} [0.8, 1.25] {[-0.4, -0.2]\atop{\longrightarrow}} [0.9, 1.4]$

. Notably, now τ′ satisfies the safety property.

$\tau '= [0,0]{ [0, 0.5]\atop{\longrightarrow}} [0.1, 0] {[1, 1]\atop{\longrightarrow}} [0.8, 1.25] {[-0.4, -0.2]\atop{\longrightarrow}} [0.9, 1.4]$

. Notably, now τ′ satisfies the safety property.

Despite the simplicity of this example, counterfactual reasoning becomes challenging when dealing with discrete-state probabilistic models like MDPs. Indeed, the state of an MDP evolves according to a categorical distribution, for which the identification and inference of the exogenous factors are non-trivial.

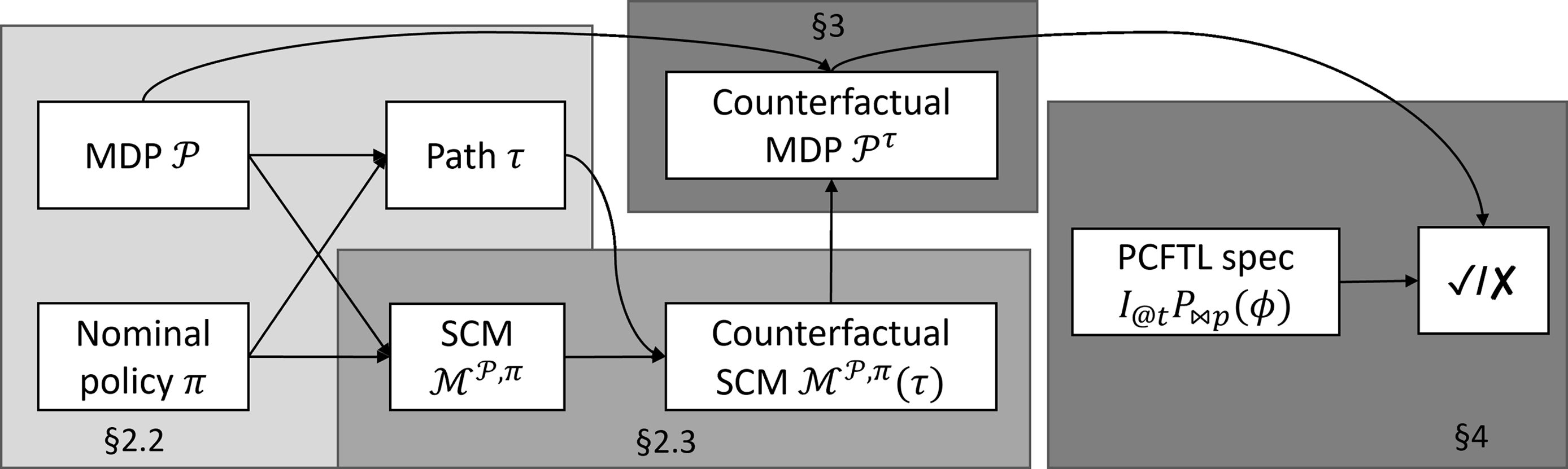

Contributions: In this paper, we introduce the syntax and semantics of PCFTL and present a statistical model-checking approach for verifying PCFTL properties in MDP environments. Our approach, summarized in Figure 1, relies on translating the MDP into a so-called structural causal model (SCM), a fundamental model in causal inference that enables computation of counterfactual distributions. We use a particular form of SCMs (Oberst and Sontag, Reference Oberst and Sontag2019) suitable for encoding categorical counterfactuals (arising with discrete-state MDPs). After performing counterfactual inference, the SCM model is then translated back into an MDP amenable for PCFTL model checking. Unlike existing logics, PCFTL formulas are interpreted with respect to an observed MDP path τ, rather than a single MDP state, as we must keep track of the past to perform counterfactual reasoning.

Using efficient statistical model checking procedures, we evaluate PCFTL on a reinforcement learning benchmark (Chevalier-Boisvert et al., Reference Chevalier-Boisvert, Willems and Pal2018) involving multiple 2D grid-world environments, goal-oriented tasks, and interventional and counterfactual properties under various policies learned through neural-network-based reinforcement learning methods. These results demonstrate the usefulness of PCFTL in AI safety, but our approach could enhance the verification of probabilistic models in a variety of domains, from distributed systems to security and biology.

The paper covers background about SCMs, MDPs, and SCM-based encoding of MDPs in Section 2, construction of counterfactual MDPs in Section 3, definition of PCFTL syntax, semantics, and decision procedures in Section 4, experimental results in Section 6, related work in Section 7, and conclusions in Section 8.

2. Background

2.1. Causal inference with structural causal models

Structural Causal Models (SCMs) (Pearl, Reference Pearl2009; Glymour et al., Reference Glymour, Pearl and Jewell2016) are equation-based models to specify and reason about causal relationships involving some variables of interest.

Figure 1. Overview of our approach to PCFTL verification, with section pointers.

Definition 1 (Structural Causal Model (SCM))

An SCM is a tuple

![]() ${{\cal {M}}} =({\bf {U}},{\bf {V}}, {\cal {F}}, P({\bf {U}}))$

where

${{\cal {M}}} =({\bf {U}},{\bf {V}}, {\cal {F}}, P({\bf {U}}))$

where

-

${\bf {U}}$

is a set of (mutually independent) exogenous variables.

${\bf {U}}$

is a set of (mutually independent) exogenous variables.

-

${\bf {V}}$

is a set of endogenous variables, where the value of each

${\bf {V}}$

is a set of endogenous variables, where the value of each

$V \in {\bf {V}}$

is determined by a function

$V \in {\bf {V}}$

is determined by a function

$V = f_V({\bf {PA}}_V, U_V)$

. Here,

$V = f_V({\bf {PA}}_V, U_V)$

. Here,

${\bf {PA}}_V \subseteq {\bf {V}}$

are the set of direct causes of V, and

${\bf {PA}}_V \subseteq {\bf {V}}$

are the set of direct causes of V, and

$U_V\in {\bf {U}}$

.

$U_V\in {\bf {U}}$

. -

ℱ is the set of functions

$\{\,f_V\}_{V \in {\bf {V}}}$

.

$\{\,f_V\}_{V \in {\bf {V}}}$

. -

$P({\bf {U}})=\bigotimes _{U \in {\bf {U}}} P(U)$

is the joint distribution of the (mutually independent) exogenous variables.

$P({\bf {U}})=\bigotimes _{U \in {\bf {U}}} P(U)$

is the joint distribution of the (mutually independent) exogenous variables.

Assignments in ℱ must be acyclic, to ensure that no variable can be a direct or indirect cause of itself. Because of this, the causal relationships in an SCM can be represented by a directed acyclic graph (DAG), called a causal diagram.

In an SCM, the values of the exogenous variables

![]() ${\bf {U}}$

are determined by factors outside the model, which is modelled by some distribution

${\bf {U}}$

are determined by factors outside the model, which is modelled by some distribution

![]() $P({\bf {U}})$

. Exogenous variables are unobserved variables which act as the source of randomness in the system. Indeed, for a fixed realization

$P({\bf {U}})$

. Exogenous variables are unobserved variables which act as the source of randomness in the system. Indeed, for a fixed realization

![]() $\mathbf {u}$

of

$\mathbf {u}$

of

![]() ${\bf {U}}$

, that is a concrete unfolding of the system’s randomness, the values of

${\bf {U}}$

, that is a concrete unfolding of the system’s randomness, the values of

![]() ${\bf {V}}$

become deterministic, as they are uniquely determined by

${\bf {V}}$

become deterministic, as they are uniquely determined by

![]() $\mathbf {u}$

and the causal processes ℱ. A concrete value

$\mathbf {u}$

and the causal processes ℱ. A concrete value

![]() $\mathbf {u}$

of

$\mathbf {u}$

of

![]() ${\bf {U}}$

is also called context (or unit). We denote by

${\bf {U}}$

is also called context (or unit). We denote by

![]() $P_{{\cal {M}}}({\bf {V}})$

the so-called observational distribution of

$P_{{\cal {M}}}({\bf {V}})$

the so-called observational distribution of

![]() ${\bf {V}}$

, that is, the data-generating distribution entailed by the SCM ℱ and

${\bf {V}}$

, that is, the data-generating distribution entailed by the SCM ℱ and

![]() $P({\bf {U}})$

.

$P({\bf {U}})$

.

Interventions. With SCMs, one can establish the causal effect of some input variable X on some output variable Y by evaluating Y after ‘forcing” some specific values x on X, an operation called intervention. Applying X←x means replacing the RHS of

![]() $X = f_X({\bf {PA}}_X, U_X)$

with x. Interventions allow to establish the true causal effect of X on Y by comparing the so-called post-interventional distribution P

ℳ[X←x](Y) at different values x, where ℳ[X←x] is the SCM obtained from ℳ by applying X ← x.Footnote

2

By ‘disconnecting” X from any of its possible causes, interventions prevent any source of spurious association between X and Y (Glymour et al., Reference Glymour, Pearl and Jewell2016) (i.e., caused by variables other than X and that are not descendants of X).Footnote

3

In the following we will use the notation I (and ℳ[I]) to denote a set of interventions I = {V

i

← v

i

}

i

.

$X = f_X({\bf {PA}}_X, U_X)$

with x. Interventions allow to establish the true causal effect of X on Y by comparing the so-called post-interventional distribution P

ℳ[X←x](Y) at different values x, where ℳ[X←x] is the SCM obtained from ℳ by applying X ← x.Footnote

2

By ‘disconnecting” X from any of its possible causes, interventions prevent any source of spurious association between X and Y (Glymour et al., Reference Glymour, Pearl and Jewell2016) (i.e., caused by variables other than X and that are not descendants of X).Footnote

3

In the following we will use the notation I (and ℳ[I]) to denote a set of interventions I = {V

i

← v

i

}

i

.

Counterfactuals. Upon observing a particular realization

![]() ${\bf {v}}$

of the SCM variables

${\bf {v}}$

of the SCM variables

![]() ${\bf {V}}$

, counterfactuals answer the following question: what would have been the value of some variable Y for observation

${\bf {V}}$

, counterfactuals answer the following question: what would have been the value of some variable Y for observation

![]() ${\bf {v}}$

if we had applied intervention I on our model ℳ? This corresponds to evaluating

${\bf {v}}$

if we had applied intervention I on our model ℳ? This corresponds to evaluating

![]() ${\bf {V}}$

in a hypothetical world characterized by the same context (i.e., same realization of random factors) that generated the observation

${\bf {V}}$

in a hypothetical world characterized by the same context (i.e., same realization of random factors) that generated the observation

![]() ${\bf {v}}$

but under a different causal process.

${\bf {v}}$

but under a different causal process.

Computing counterfactuals involves three steps (Glymour et al., Reference Glymour, Pearl and Jewell2016):

-

1. abduction: estimate the context given the observation, that is derive

${P({\bf {U}} \mid {\bf {V}}=\bf {v}})$

;

${P({\bf {U}} \mid {\bf {V}}=\bf {v}})$

; -

2. action: modify the SCM by applying the intervention of interest, for example ℳ[I]; and

-

3. prediction: evaluate

${\bf {V}}$

under the manipulated model ℳ[I] and the inferred context.

${\bf {V}}$

under the manipulated model ℳ[I] and the inferred context.

We denote by

![]() ${{\cal {M}}({\bf {v}}})[I]$

the counterfactual model obtained by replacing

${{\cal {M}}({\bf {v}}})[I]$

the counterfactual model obtained by replacing

![]() $P({\bf {U}})$

with

$P({\bf {U}})$

with

![]() ${P({\bf {U}} \mid {\bf {V}}=\bf {v}})$

in the SCM ℳ and then applying intervention I. Note that here

${P({\bf {U}} \mid {\bf {V}}=\bf {v}})$

in the SCM ℳ and then applying intervention I. Note that here

![]() ${\bf {v}}$

is a realization of

${\bf {v}}$

is a realization of

![]() ${\bf {V}}$

under ℳ and not under ℳ[I].

${\bf {V}}$

under ℳ and not under ℳ[I].

As explained above, each observation

![]() ${{\bf {V}}=\bf {v}}$

can be seen as a deterministic function of a particular value

${{\bf {V}}=\bf {v}}$

can be seen as a deterministic function of a particular value

![]() $\mathbf {u}$

of

$\mathbf {u}$

of

![]() ${\bf {U}}$

. Therefore, the counterfactual model is deterministic too, assuming that such

${\bf {U}}$

. Therefore, the counterfactual model is deterministic too, assuming that such

![]() $\mathbf {u}$

can be identified from

$\mathbf {u}$

can be identified from

![]() ${{\bf {V}}=\bf {v}}$

. However, inferring

${{\bf {V}}=\bf {v}}$

. However, inferring

![]() $\mathbf {u}$

precisely is often not possible (as discussed later), resulting in a (non-Dirac) posterior distribution of contexts

$\mathbf {u}$

precisely is often not possible (as discussed later), resulting in a (non-Dirac) posterior distribution of contexts

![]() ${P({\bf {U}} \mid {\bf {V}}=\bf {v}})$

and thus, a stochastic counterfactual value.

${P({\bf {U}} \mid {\bf {V}}=\bf {v}})$

and thus, a stochastic counterfactual value.

2.1.1. Causal effects

Estimating a causal effect amounts to comparing some variable Y (outcome, output) under different values of some other variable X (treatment, input). Interventions and counterfactuals enable this task by ruling out spurious association between X and Y, as discussed above. There are three main estimators of causal effects:

Individual Treatment Effect (ITE). For a context

![]() $\mathbf {u}$

, the ITE of

$\mathbf {u}$

, the ITE of

![]() $Y\in {\bf {V}}$

between interventions I

1 and I

0 is defined as

$Y\in {\bf {V}}$

between interventions I

1 and I

0 is defined as

![]() $Y_{I_1}(\mathbf {u})-Y_{I_0}(\mathbf {u})$

, where

$Y_{I_1}(\mathbf {u})-Y_{I_0}(\mathbf {u})$

, where

![]() $Y_{I_i}(\mathbf {u})$

is the counterfactual value of Y induced by

$Y_{I_i}(\mathbf {u})$

is the counterfactual value of Y induced by

![]() $\mathbf {u}$

under the post-intervention model ℳ[I

i

]. As explained above, we don’t have direct access to the exogenous values

$\mathbf {u}$

under the post-intervention model ℳ[I

i

]. As explained above, we don’t have direct access to the exogenous values

![]() $\mathbf {u}$

but only to realizations

$\mathbf {u}$

but only to realizations

![]() ${\bf {v}} \sim P_{{\cal {M}}}({\bf {V}})$

. Thus, below we define the ITE as a function of

${\bf {v}} \sim P_{{\cal {M}}}({\bf {V}})$

. Thus, below we define the ITE as a function of

![]() ${\bf {v}}$

(rather than

${\bf {v}}$

(rather than

![]() $\mathbf {u}$

) by plugging in the average counterfactual value of Y w.r.t. the posterior

$\mathbf {u}$

) by plugging in the average counterfactual value of Y w.r.t. the posterior

![]() ${P({\bf {U}} \mid {\bf {V}}=\bf {v}})$

:

${P({\bf {U}} \mid {\bf {V}}=\bf {v}})$

:

Average Treatment Effect (ATE). ATE is used to estimate causal effects at the population level and is defined as the expected value (w.r.t.

![]() $P({\bf {U}})$

) of the individual treatment effect, or equivalently, as the difference of post-interventional expectations:

$P({\bf {U}})$

) of the individual treatment effect, or equivalently, as the difference of post-interventional expectations:

Conditional Average Treatment Effect (CATE). The CATE is the conditional version of ATE. This estimator is useful when the treatment effect may vary across the population depending on the value of some variables V:

2.2. Markov Decision Processes (MDPs)

MDPs are a class of stochastic models to describe sequential decision making processes, where at each step t, an agent in state s i performs some action a i determined by a policy π ending up in state s i + 1 ∼ P(⋅ ∣ s i , a i ). The agent receives some reward ℛ(s i , a i ) for performing a i at s i . Here, we focus on MDPs with finite state and action spaces. Without loss of generality, we restrict the policy class to deterministic policies (Puterman, Reference Puterman2014). Moreover, each MDP state satisfies a (possibly empty) set of atomic propositions, with AP being the set of atomic propositions.

Definition 2 (Markov Decision Process (MDP))

An MDP is a tuple 𝒫 = (𝒮, 𝒜, P 𝒫, P I , ℛ, L) where 𝒮 is the state space, 𝒜 is the set of actions, P 𝒫 : (𝒮 × 𝒜 × 𝒮)→[0,1] is the transition probability function, P I : 𝒮 → [0,1] is the initial state distribution, ℛ : (𝒮 × 𝒜) → ℝ is the reward function, and L : 𝒮 → 2 AP is a labelling function, which assigns to each state s ∈ 𝒮 the set of atomic propositions that are valid in s. A (deterministic) policy π for 𝒫 is a function π : 𝒮 → 𝒜.

An agent acting under policy π in an MDP environment will induce an MDP path τ, as follows:

Definition 3 (MDP path)

A path τ = (s

1, a

1), (s

2, a

2), … of an MDP 𝒫 = (𝒮, 𝒜, P

𝒫, P

I

, ℛ, L) induced by a policy π is a sequence of state-action pairs where s

i

∈ 𝒮 and a

i

= π(s

i

) for all i ≥ 1. The probability of a path τ is given by

![]() $P_{\cal {P}}(\tau ) = P_I(s_1)\cdot \prod _{i\geq 1} P_{\cal {P}}(s_{i+1}\mid s_i, a_i)$

. For a finite path τ = (s

1, a

1), …, (s

$P_{\cal {P}}(\tau ) = P_I(s_1)\cdot \prod _{i\geq 1} P_{\cal {P}}(s_{i+1}\mid s_i, a_i)$

. For a finite path τ = (s

1, a

1), …, (s

![]() $ {\text k} $

, a

$ {\text k} $

, a

![]() $ {\text k} $

), we denote by Paths

𝒫, π

(τ) the set of all (infinite) paths with prefix τ induced by MDP 𝒫 and policy π, which has probability P

𝒫(Paths

𝒫, π

(τ)) = P

𝒫(τ).

$ {\text k} $

), we denote by Paths

𝒫, π

(τ) the set of all (infinite) paths with prefix τ induced by MDP 𝒫 and policy π, which has probability P

𝒫(Paths

𝒫, π

(τ)) = P

𝒫(τ).

We denote by |τ| the length of the path, by τ[i] the i-th element of τ (for 0 < i ≤ |τ|), by τ[i :] the suffix of τ starting at position i (inclusive), and by τ[i : i + j] the subsequence spanning positions i to i + j (inclusive). Even though τ[i] denotes the pair (s i , a i ) of the path, we will often use it, when the context is clear, to denote only the state s i . We slightly abuse notation and write Paths 𝒫, π (s) to denote the set of paths induced by π and starting with s.

Usually, an MDP is stationary, meaning that its transition probability function and/or reward function remain fixed over time. However, there exists a variant, called a non-stationary MDP (Lecarpentier and Rachelson, Reference Lecarpentier, Rachelson, Wallach, Larochelle, Beygelzimer, d’Alché-Buc, Fox and Garnett2019), where the transition probability function and/or reward function may change over time. A non-stationary MDP can be converted to a stationary MDP by augmenting its state space with a variable that keeps track of the time.

An MDP under a fixed policy can be described as a deterministic-time Markov Chain (DTMC), as follows.

Definition 4 (Induced DTMC)

An MDP 𝒫 = (𝒮, 𝒜, P 𝒫 P I ,R, L) and a policy π : 𝒮 → 𝒜 induce a discrete-time Markov Chain (DTMC) 𝒟𝒫, π = (𝒮, P 𝒟𝒫, π , P I , R 𝒫, π , L) where for s, s′ ∈ 𝒮, P 𝒟𝒫, π (s′∣s) = P 𝒫(s′ ∣ s, π(s)), and for s ∈ 𝒮, R 𝒫, π (s) = R(s, π(s)). Paths of 𝒟𝒫, π are sequences of states, and their probabilities are defined similarly to Def. 3 .

2.3. SCM-based encoding of MDPs

We now present the SCM-based encoding of MDPs introduced in (Oberst and Sontag, Reference Oberst and Sontag2019). For a given path length T, the SCM ℳ𝒫, π, T induced by an MDP 𝒫 and a policy π characterizes the unrolling of paths of 𝒫 of length T, that is it has endogenous variables S t and A t describing the MDP’s state and action at each time step t, where t = 1, …, T. These are defined by the structural equations:

where the probabilistic state transition at t, P 𝒫(S t + 1 ∣ S t , A t ), is encoded as a deterministic function f of S t , A t , and the (random) exogenous variables U t , while the random choice of the initial state, P I (S 1), as a deterministic function f 0 of U 0.

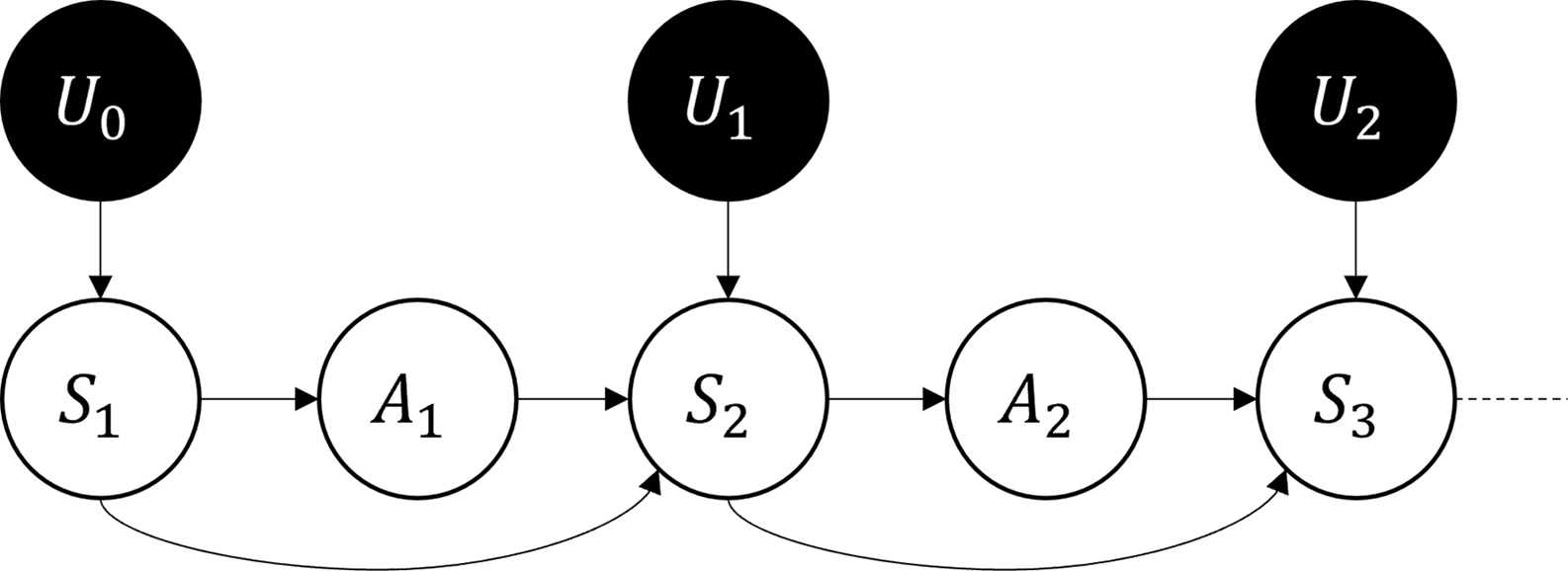

We stress that the SCM encoding does not require any assumptions about the structure of the MDP: such encoding results in an acyclic graph, while the original MDP need not be. Figure 2 shows the causal diagram resulting from this SCM encoding.

Figure 2. Causal diagram for the SCM encoding of an MDP. Black circles represents exogenous variables, while white circles represent endogenous ones.

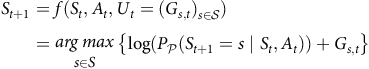

Note that both P 𝒫(S t + 1 ∣ S t , A t ) and P I (S 1) are categorical distributions and encoding them in the above SCM form (i.e., as functions of a random variable) is not obvious. Oberst and Sontag (Reference Oberst and Sontag2019) proposed a solution termed Gumbel-Max SCM, as given by:

$$\begin{gathered}{S_{t + 1}} = f({S_t},{A_t},{U_t} = {({G_{s,t}})_{s \in S}}) \\ \,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,= \begin{array}{*{20}{c}}{arg{\mkern 1mu} max} \\ \small{s \in S} \\ \end{array} \left\{ {\log \left( {{P_P}({S_{t + 1}} = s\mid {S_t},{A_t})} \right) + {G_{s,t}}} \right\} \\ \end{gathered}$$

$$\begin{gathered}{S_{t + 1}} = f({S_t},{A_t},{U_t} = {({G_{s,t}})_{s \in S}}) \\ \,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,= \begin{array}{*{20}{c}}{arg{\mkern 1mu} max} \\ \small{s \in S} \\ \end{array} \left\{ {\log \left( {{P_P}({S_{t + 1}} = s\mid {S_t},{A_t})} \right) + {G_{s,t}}} \right\} \\ \end{gathered}$$

where, for s ∈ 𝒮 and t ∈ 1 = …, T, G

s, t

∼ Gumbel. This is based on the Gumbel-Max trick, by which one can sample from a categorical distribution with

![]() $ {\cal k} $

categories (corresponding to the |S| MDP states in our case) by first drawing realizations g

1, …, g

$ {\cal k} $

categories (corresponding to the |S| MDP states in our case) by first drawing realizations g

1, …, g

![]() $ {\cal k} $

of a standard Gumbel distribution and then by setting the outcome to arg max

j

{log(P(Y = j))+g

j

}. By using the Gumbel-Max trick, the assignment S

t + 1 = f(S

t

, A

t

, (G

s, t

)

s ∈ 𝒮) in (5) will be equivalent to sampling S

t + 1 ∼ P

𝒫(S ∣ S

t

, A

t

):

$ {\cal k} $

of a standard Gumbel distribution and then by setting the outcome to arg max

j

{log(P(Y = j))+g

j

}. By using the Gumbel-Max trick, the assignment S

t + 1 = f(S

t

, A

t

, (G

s, t

)

s ∈ 𝒮) in (5) will be equivalent to sampling S

t + 1 ∼ P

𝒫(S ∣ S

t

, A

t

):

Proposition 1 (Gumbel-Max SCM correctness)

Given an MDP 𝒫, policy π, and time bound T, then for any path τ of 𝒫 induced by π of length T, we have P ℳ𝒫, π, T (τ) = P 𝒫(τ), where ℳ𝒫, π, T is the Gumbel-Max SCM for 𝒫, π, and T.

Importantly, the Gumbel-Max SCM encoding enjoys a desirable property called counterfactual stability:

Definition 5 (Counterfactual stability (Oberst and Sontag, Reference Oberst and Sontag2019))

An SCM ℳ satisfies counterfactual stability relative to a categorical variable Y of ℳ if whenever we observe Y = i under some intervention I, then the counterfactual value of Y under I′ ≠ I remains Y = i unless I′ increases the relative likelihood of an alternative outcome j ≠ i, that is unless P ℳ[I′](Y=j)/P ℳ[I](Y=j) > P ℳ[I′](Y=i)/P ℳ[I](Y=i).

Intuitively, the above definition tells us that, in a counterfactual scenario, we would observe the same outcome Y = i unless the intervention increases the relative likelihood of an alternative outcome Y = j, that is, unless

![]() $ {{p_j'}\over {p_j}} \gt {{p_i'}\over {p_i}}$

holds for some j.

$ {{p_j'}\over {p_j}} \gt {{p_i'}\over {p_i}}$

holds for some j.

Gumbel-Max SCMs are the most prominent encoding that can express categorical variables as functions of independent random variables and that satisfy counterfactual stability.Footnote 4 However, there also exists methods that generalise to other causal mechanisms with the counterfactual stability property (see Section 7).

Counterfactual inference. Given we observed an MDP path τ = (s 1,a 1), …, (s |τ|,a |τ|), counterfactual inference in this setting entails deriving P((G s, t ) s ∈ 𝒮 t = 1, …, |τ| − 1 ∣τ). Essentially, this means finding values for the Gumbel exogenous variables compatible with τ. By the Markov property, the above can be factorized as follows:

However, the mechanism of (5) is non-invertible, i.e., given s t and a t , there might be multiple values of (G s, t ) s ∈ 𝒮 leading to the same s t + 1. This implies that MDP counterfactuals can’t be uniquely identified, a problem that affects categorical counterfactuals in general and not just Gumbel-Max SCMs (Oberst and Sontag, Reference Oberst and Sontag2019).

As suggested by Oberst and Sontag (Reference Oberst and Sontag2019), we can perform (approximate) posterior inference of P((G s, t ) s ∈ 𝒮∣s t ,a t ,s t + 1) through rejection sampling. This involves sampling from the prior P((G s, t ) s ∈ 𝒮), and rejecting all the realizations (g s, t ) s ∈ 𝒮 for which f(s t ,a t ,(g s, t ) s ∈ 𝒮) ≠ s t + 1.

Interventions in MDPs. In principle, we can consider any kind of intervention I over the SCM encoding of an MDP. Arguably, the most relevant case is when I affects the MDP policy π. For instance, in some applications, we might want to replace π with a more conservative or aggressive policy. Hence, in the following, we assume interventions of the form I = {(π←π′)} for some policy π′ (i.e., we change the RHS of the equation for A t in the SCM (4)).

Example 1 (MDP counterfactuals)

Consider an MDP model of a light switch. The MDP has two states,

![]() ${\cal S} = \{ \mathsf On, Off \}$

, and we can take two actions,

${\cal S} = \{ \mathsf On, Off \}$

, and we can take two actions,

![]() ${\cal A} = \{ \mathsf Switch, Nop \}$

. If we take action

${\cal A} = \{ \mathsf Switch, Nop \}$

. If we take action

![]() $\mathsf Switch$

, the state of the MDP changes (from

$\mathsf Switch$

, the state of the MDP changes (from

![]() $\mathsf On$

, to

$\mathsf On$

, to

![]() $\mathsf Off$

, or vice versa) with probability 0.9, and it remains the same with probability 0.1. If we take action

$\mathsf Off$

, or vice versa) with probability 0.9, and it remains the same with probability 0.1. If we take action

![]() $\mathsf Nop$

, with probability 0.9 the MDP’s state does not change, and with probability 0.1 the state changes. We fix the following policy: π(

$\mathsf Nop$

, with probability 0.9 the MDP’s state does not change, and with probability 0.1 the state changes. We fix the following policy: π(

![]() $\mathsf On$

) =

$\mathsf On$

) =

![]() $\mathsf NOP$

and π(

$\mathsf NOP$

and π(

![]() $\mathsf Off$

) =

$\mathsf Off$

) =

![]() $\mathsf Switch$

.

$\mathsf Switch$

.

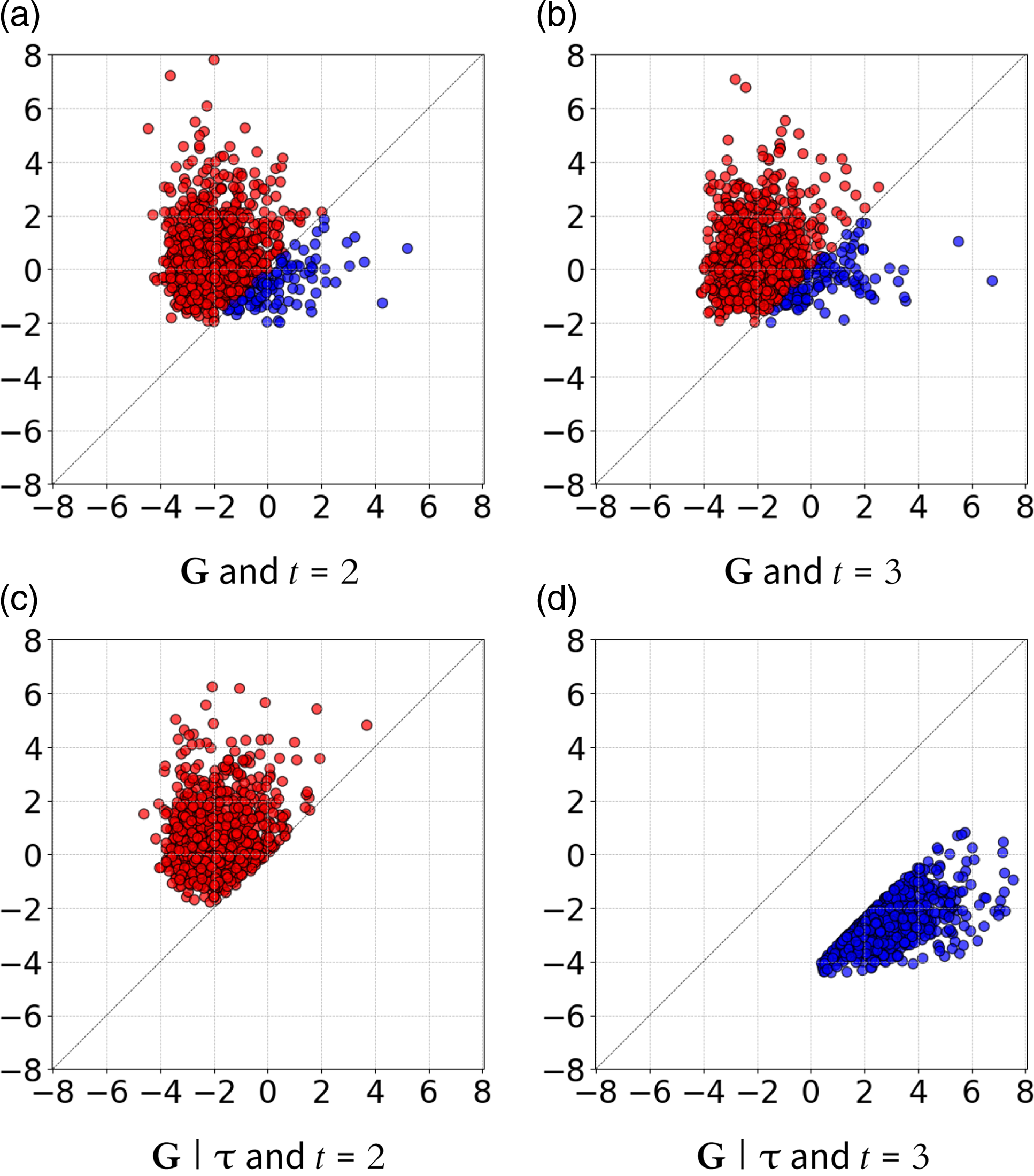

Assume we observe the path

![]() $\tau = {\mathsf Off} {{\mathsf {\ Switch\ }}\atop{\longrightarrow}}\mathsf {On\ } {{\mathsf {\ NOP\ }}\atop{\longrightarrow}} \mathsf {Off}$

, where the first step has probability 0.9 and the second step 0.1. First, we want to show that the Gumbel-max SCM formulation

(5)

yields the same probability values, modulo sampling variability. In Figure 3a and Figure 3b we show the values of log (P

𝒫(

$\tau = {\mathsf Off} {{\mathsf {\ Switch\ }}\atop{\longrightarrow}}\mathsf {On\ } {{\mathsf {\ NOP\ }}\atop{\longrightarrow}} \mathsf {Off}$

, where the first step has probability 0.9 and the second step 0.1. First, we want to show that the Gumbel-max SCM formulation

(5)

yields the same probability values, modulo sampling variability. In Figure 3a and Figure 3b we show the values of log (P

𝒫(

![]() $\mathsf Off\,$

∣S

t

,A

t

)) + G

$\mathsf Off\,$

∣S

t

,A

t

)) + G

![]() $\mathsf Off$

, t (x-axis) and log (P

𝒫(

$\mathsf Off$

, t (x-axis) and log (P

𝒫(

![]() $\mathsf On\,$

∣S

t

,A

t

)) + G

$\mathsf On\,$

∣S

t

,A

t

)) + G

![]() $\mathsf On$

, t (y-axis) obtained by sampling 1000 realizations of the Gumbel variables

$\mathsf On$

, t (y-axis) obtained by sampling 1000 realizations of the Gumbel variables

![]() $\bf {G}$

. We see indeed that, at t = 2, 89.7% of these points lie above the identity line, that is they yield

$\bf {G}$

. We see indeed that, at t = 2, 89.7% of these points lie above the identity line, that is they yield

![]() $\mathsf On$

as the next state. At t = 3, we find that 10.9% of the points yield

$\mathsf On$

as the next state. At t = 3, we find that 10.9% of the points yield

![]() $\mathsf Off$

as the next state.

$\mathsf Off$

as the next state.

In Figure 3c and Figure 3d, we show the computation of counterfactuals. Assume an intervention that changes the policy into one that constantly performs action

![]() $\mathsf Switch$

. Now, we want to see what is the probability of path

$\mathsf Switch$

. Now, we want to see what is the probability of path

![]() $\tau ' = {\mathsf Off} {{\mathsf {\ Switch\ }}\atop{\longrightarrow}}\mathsf {On\ } {{\mathsf {\ Switch\ }}\atop{\longrightarrow}} \mathsf {Off}$

given that we observed τ. That is, we compute the probability of τ′ in the counterfactual SCM model where the (prior) Gumbel variables are replaced by

$\tau ' = {\mathsf Off} {{\mathsf {\ Switch\ }}\atop{\longrightarrow}}\mathsf {On\ } {{\mathsf {\ Switch\ }}\atop{\longrightarrow}} \mathsf {Off}$

given that we observed τ. That is, we compute the probability of τ′ in the counterfactual SCM model where the (prior) Gumbel variables are replaced by

![]() ${\bf {G}'=\mathbf {G}}\mid \tau$

, that is those inferred from τ.

${\bf {G}'=\mathbf {G}}\mid \tau$

, that is those inferred from τ.

First note that τ and τ′ perform the same first step. Hence, this step has probability 1 under

![]() ${\bf {G}}'$

because

${\bf {G}}'$

because

![]() ${\bf {G}}'$

is defined such that it assigns probability 1 to the observed path (see also Proposition 3 for a similar statement). In the second step, the observed path τ transitioned into

${\bf {G}}'$

is defined such that it assigns probability 1 to the observed path (see also Proposition 3 for a similar statement). In the second step, the observed path τ transitioned into

![]() $\mathsf Off$

after performing Nop, despite a probability of 0.9 of jumping into

$\mathsf Off$

after performing Nop, despite a probability of 0.9 of jumping into

![]() $\mathsf On$

. This means that

$\mathsf On$

. This means that

![]() ${\bf {G}}'$

strongly favours

${\bf {G}}'$

strongly favours

![]() $\mathsf Off$

(over

$\mathsf Off$

(over

![]() $\mathsf On$

) to happen in the second step. Hence, we expect that the probability of

$\mathsf On$

) to happen in the second step. Hence, we expect that the probability of

![]() ${\mathsf On} {{\mathsf {\ Switch\ }}\atop{\longrightarrow}}\mathsf {Off}$

in the counterfactual world will be higher than the nominal probability P

𝒫(

${\mathsf On} {{\mathsf {\ Switch\ }}\atop{\longrightarrow}}\mathsf {Off}$

in the counterfactual world will be higher than the nominal probability P

𝒫(

![]() $\mathsf Off$

∣

$\mathsf Off$

∣

![]() $\mathsf On$

,

$\mathsf On$

,

![]() $\mathsf Switch$

). In particular, by counterfactual stability (Def. 5), such probability should be 1 because the intervention doesn’t make state

$\mathsf Switch$

). In particular, by counterfactual stability (Def. 5), such probability should be 1 because the intervention doesn’t make state

![]() $\mathsf On$

more likely to happen (rather the opposite: the relative likelihood of

$\mathsf On$

more likely to happen (rather the opposite: the relative likelihood of

![]() $\mathsf On$

is indeed 0.1/0.9, while it is 0.9/0.1 for

$\mathsf On$

is indeed 0.1/0.9, while it is 0.9/0.1 for

![]() $\mathsf Off$

). This can be proven also by showing that, by rejection sampling, we have that:

$\mathsf Off$

). This can be proven also by showing that, by rejection sampling, we have that:

Since 0.9 = P

𝒫(

![]() $\mathsf Off$

∣

$\mathsf Off$

∣

![]() $\mathsf On, Switch$

) > P

𝒫(

$\mathsf On, Switch$

) > P

𝒫(

![]() $\mathsf Off$

∣

$\mathsf Off$

∣

![]() $\mathsf On, NOP$

) = 0.1 and 0.1 = P

𝒫(

$\mathsf On, NOP$

) = 0.1 and 0.1 = P

𝒫(

![]() $\mathsf On$

∣

$\mathsf On$

∣

![]() $\mathsf On, Switch$

) < P

𝒫(

$\mathsf On, Switch$

) < P

𝒫(

![]() $\mathsf On$

∣

$\mathsf On$

∣

![]() $\mathsf On, NOP$

) = 0.9, it follows that

$\mathsf On, NOP$

) = 0.9, it follows that

i.e., performing action

![]() $\mathsf Switch$

at state

$\mathsf Switch$

at state

![]() $\mathsf On$

has probability 1 of leading into state

$\mathsf On$

has probability 1 of leading into state

![]() $\mathsf Off$

in the counterfactual world. In particular, since P

𝒫(

$\mathsf Off$

in the counterfactual world. In particular, since P

𝒫(

![]() $\mathsf Off$

∣

$\mathsf Off$

∣

![]() $\mathsf On, Switch$

) > P

𝒫(

$\mathsf On, Switch$

) > P

𝒫(

![]() $\mathsf Off$

∣

$\mathsf Off$

∣

![]() $\mathsf On, NOP$

), the points in Figure 3d (corresponding to the counterfactual step) are shifted to the right compared to Figure 3b (observed step).

$\mathsf On, NOP$

), the points in Figure 3d (corresponding to the counterfactual step) are shifted to the right compared to Figure 3b (observed step).

Figure 3. Light switch MDP (example 1). X-axis: log (P

𝒫(

![]() $\rm\mathsf Off $

∣S

t

, A

t

)) + G

$\rm\mathsf Off $

∣S

t

, A

t

)) + G

![]() $\rm\mathsf Off$

, t; Y-axis: log (P

𝒫(

$\rm\mathsf Off$

, t; Y-axis: log (P

𝒫(

![]() $\rm\mathsf On$

∣S

t

, A

t

)) + G

$\rm\mathsf On$

∣S

t

, A

t

)) + G

![]() $\rm\mathsf On$

, t. Plots (a) and (b) are relative to the prior Gumbel G and the observed path τ (using 1000 realizations for G). Plots (c) and (d) are relative to the posterior Gumbel G τ and the counterfactual path τ′. Points leading to state

$\rm\mathsf On$

, t. Plots (a) and (b) are relative to the prior Gumbel G and the observed path τ (using 1000 realizations for G). Plots (c) and (d) are relative to the posterior Gumbel G τ and the counterfactual path τ′. Points leading to state

![]() $\rm\mathsf On$

are in red, while those for

$\rm\mathsf On$

are in red, while those for

![]() $\rm\mathsf Off$

are in blue.

$\rm\mathsf Off$

are in blue.

3. Construction of counterfactual MDP

Consider a Gumbel-max SCM ℳ𝒫 for an MDP 𝒫 under policy π, and a (finite) path τ of ℳ𝒫. Let

![]() ${\bf {G}}'=(G'_{s,i})_{s\in \cal {S}}^{i=1,\ldots, |\tau |-1}$

be the set of posterior Gumbel variables, where, for i = 1, …, |τ| − 1, G′

s, i

∼ P

ℳ𝒫

(G

s, i

∣τ) and G

s, i

∼ Gumbel. That is, G′

s, i

is the value of the exogenous variable (associated to position i and state s) inferred from τ. Then, for i = 1, …, |τ| − 1, we have the following transition probability function, which directly follows from the SCM (5):

${\bf {G}}'=(G'_{s,i})_{s\in \cal {S}}^{i=1,\ldots, |\tau |-1}$

be the set of posterior Gumbel variables, where, for i = 1, …, |τ| − 1, G′

s, i

∼ P

ℳ𝒫

(G

s, i

∣τ) and G

s, i

∼ Gumbel. That is, G′

s, i

is the value of the exogenous variable (associated to position i and state s) inferred from τ. Then, for i = 1, …, |τ| − 1, we have the following transition probability function, which directly follows from the SCM (5):

See also (Tsirtsis, De, and Rodriguez, Reference Tsirtsis, De and Rodriguez2021) for a similar definition. Then, we can express this non-stationary MDP as a stationary one by augmenting its state space as follows.

Definition 6 (Counterfactual MDP)

Given an MDP 𝒫, policy π, and a finite path τ of 𝒫 under π, the corresponding (stationary) counterfactual MDP 𝒫τ = (𝒮τ, 𝒜, P 𝒫τ, P I τ, ℛ′, L′). Here, 𝒮τ = 𝒮 × {1, …, |τ|} is an augmented state space where each state s′ ∈ 𝒮τ corresponds to a tuple s′ = (s, i), where each state s ∈ 𝒮 from the nominal MDP 𝒫 has been augmented with a timestep i, ℛ′ : (𝒮τ × 𝒜) → ℝ is a reward function such that ℛ′((s, i), a) = ℛ(s, a), L′ : 𝒮τ → 2 AP is a labelling function such that L′((s, i)) = L(s), P I τ(τ[1],1) = 1, and for any (s, i), (s′, i′) ∈ 𝒮τ and a ∈ 𝒜,

In other words, in 𝒫τ we introduce an extra variable to track the position i of the observed path τ. Then, for i < |τ|, 𝒫τ behaves according to the transition probabilities of the counterfactual model, as per Eq. 6. For i = |τ|, 𝒫τ is equivalent to the original MDP model 𝒫, because we do not have an observation on which we can condition our Gumbel exogenous variables. Also, P I τ is defined such that 𝒫τ admits only one initial state, that is, the first state of τ. The following proposition shows that the counterfactual MDP reduces to the original MDP in the special case when |τ| = 1.

Proposition 2. If |τ| = 1, then the counterfactual MDP 𝒫τ of an MDP 𝒫 is equivalent to 𝒫(τ[1]).

Proof. It is easy to see that, by applying Def. 6, we recover the definition of the original MDP 𝒫 (with the provision that 𝒮τ = 𝒮 × {1}) initialised at τ[1], the only state of τ. Indeed, if τ contains only one state, then we do not have any observed transitions to perform posterior inference of the Gumbel exogenous variables.□

Another useful property is that if we do not perform any interventions, that is we maintain the original policy π, then the counterfactual MDP induces the observed path τ with probability 1, as expected.

Proposition 3. Given 𝒫, π, and τ as per Definition 6 , then the resulting counterfactual MDP 𝒫τ is such that P 𝒫τ (τ) = 1.

Proof. It is enough to show that, for any 1 ≤ i < |τ|, it holds that

This is true because the posterior Gumbel variables G′ s″, i are inferred in order to be consistent with the observed path. This holds also for (approximate) inference via rejection sampling: since we discard all the Gumbel realizations incompatible with the observation, we have that

which proves the above equality.□

In the following, for simplicity, we will use policies π defined over 𝒮 (the state space of the original MDP 𝒫) also for the augmented state space 𝒮τ of the counterfactual MDP, by assuming π(s, i) = π(s) for any i.

4. PCFTL: a probabilistic temporal logic with interventions, Counterfactuals, and Causal Effects

In this section, we formally define PCFTL (Probabilistic CounterFactual Temporal Logic). A PCFTL formula is interpreted over an MDP 𝒫, a policy π, and an observed path τ resulting from 𝒫 and π.

PCFTL extends PCTL⋆ (Baier et al., 1997; Baier, 1998) with a counterfactual operator I

@t

.P

⋈ p

(ϕ), a counterfactual reward operator I

@t

.R

⋈ r

≤

![]() $ {\cal k} $

, and two causal effect operators,

$ {\cal k} $

, and two causal effect operators,

![]() $ \Delta_{@t}^{{I_{1}}, {I_{0}}}$

.P

⋈ p

(ϕ) and

$ \Delta_{@t}^{{I_{1}}, {I_{0}}}$

.P

⋈ p

(ϕ) and

![]() $ \Delta_{@t}^{{I_{1}}, {I_{0}}}$

.R

⋈ r

≤

$ \Delta_{@t}^{{I_{1}}, {I_{0}}}$

.R

⋈ r

≤

![]() $ {\cal k} $

. The latter two formulas are defined as the difference of counterfactual probabilities (resp., cumulative rewards) between interventions I

1 and I

0, in line with the definition of treatment effects in Section 2.1.

$ {\cal k} $

. The latter two formulas are defined as the difference of counterfactual probabilities (resp., cumulative rewards) between interventions I

1 and I

0, in line with the definition of treatment effects in Section 2.1.

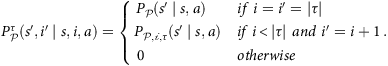

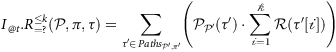

PCFTL syntax. The syntax of PCFTL is as follows:

where I, I

0, I

1 are (possibly empty) interventions, t ∈ ℤ ≥ 0, ρ ∈ AP, p ∈ [0,1], r ∈ ℝ, p′ ∈ [−1, 1], ⋈ ∈ {<, ≤, ≥, > },

![]() $ \cal k $

∈ ℤ ≥ 1, and [a, b] is an interval with a ∈ ℤ ≥ 0 and b ∈ ℤ ≥ 0 ∪ {∞}. State formulas Φ can be atomic propositions, counterfactual or causal effect formulas, or logical combinations of them. Path formula ϕ1𝒰[a,b]ϕ2 is satisfied by paths where ϕ2 holds at some time point within the (potentially unbounded) interval [a, b] and ϕ1 always holds before that point. Other standard bounded temporal operators are derived as: ℱ[a,b]ϕ

$ \cal k $

∈ ℤ ≥ 1, and [a, b] is an interval with a ∈ ℤ ≥ 0 and b ∈ ℤ ≥ 0 ∪ {∞}. State formulas Φ can be atomic propositions, counterfactual or causal effect formulas, or logical combinations of them. Path formula ϕ1𝒰[a,b]ϕ2 is satisfied by paths where ϕ2 holds at some time point within the (potentially unbounded) interval [a, b] and ϕ1 always holds before that point. Other standard bounded temporal operators are derived as: ℱ[a,b]ϕ

![]() $ \equiv$

⊤𝒰[a,b]ϕ (eventually), 𝒢[a, b]ϕ

$ \equiv$

⊤𝒰[a,b]ϕ (eventually), 𝒢[a, b]ϕ

![]() $ \equiv$

¬ℱ[a,b]¬ϕ (always), and 𝒳ϕ

$ \equiv$

¬ℱ[a,b]¬ϕ (always), and 𝒳ϕ

![]() $ \equiv$

ℱ[1,1]ϕ (next).

$ \equiv$

ℱ[1,1]ϕ (next).

Before introducing the semantics of PCFTL, we define the quantitative counterfactual operators I

@t

.P

= ?(ϕ)(𝒫, π, τ) and I

@t

.R

= ?

≤

![]() $ {\cal k} $

(𝒫, π, τ). These quantify the probability of a path formula ϕ (resp., the expected cumulative reward up to step

$ {\cal k} $

(𝒫, π, τ). These quantify the probability of a path formula ϕ (resp., the expected cumulative reward up to step

![]() $ \cal k $

) in the counterfactual model obtained from MDP 𝒫, given that we observed path τ under policy π, and by applying I from t steps back in the past (we emphasise that t is a local indexing).

$ \cal k $

) in the counterfactual model obtained from MDP 𝒫, given that we observed path τ under policy π, and by applying I from t steps back in the past (we emphasise that t is a local indexing).

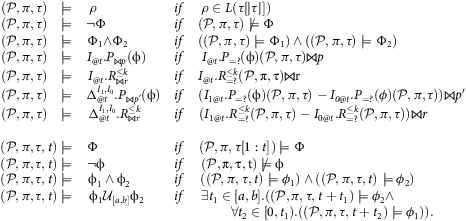

$$\small{{I}_{@ t}.R^{\leq k}_{=?}(\cal {P}, \pi, \tau ) = \& \sum _{\tau ' \in \,\it {Paths}_{\cal {P}',\pi '}} \left (P_{\cal {P}'}(\tau ')\cdot \sum _{i=\text 1}^k \cal {R}(\tau '[i])\right )}$$

$$\small{{I}_{@ t}.R^{\leq k}_{=?}(\cal {P}, \pi, \tau ) = \& \sum _{\tau ' \in \,\it {Paths}_{\cal {P}',\pi '}} \left (P_{\cal {P}'}(\tau ')\cdot \sum _{i=\text 1}^k \cal {R}(\tau '[i])\right )}$$

where 𝒫′ = 𝒫τ[|τ|−t:] is the counterfactual MDP derived from 𝒫 and τ[|τ|−t :], i.e., the path suffix starting at the time of intervention, and π′ is the intervention policy (corresponding to π if I =

![]() $ {0/} $

). Note that the probability of ϕ is evaluated in the counterfactual model starting from the time of intervention, not from the last state of the path (to do so, one can simply replace ϕ with ℱ[t,t]ϕ). The satisfaction relation for path formulae is as follows.

$ {0/} $

). Note that the probability of ϕ is evaluated in the counterfactual model starting from the time of intervention, not from the last state of the path (to do so, one can simply replace ϕ with ℱ[t,t]ϕ). The satisfaction relation for path formulae is as follows.

Definition 7 (Semantics of PCFTL)

Given a PCFTL formula Φ, an MDP 𝒫, and a path τ of 𝒫 under some policy π, the PCFTL satisfaction relation ⊨ is defined by the following rules:

Remark 1. A main difference compared to existing temporal logics like PCTL⋆ is that a PCFTL formula Φ is evaluated over a path of observed states and actions rather than the current state only. Keeping track of the past allows us to perform counterfactual reasoning; see Equations 7 and 8 . Without counterfactuals, there would be no need to carry over the path, but only the current state because the system is Markovian. Footnote 5 Also, PCTL⋆ formulas evaluated over a DTMC model, while in our logic, it is convenient to keep 𝒫 and π separated rather than working with the DTMC induced by 𝒫 and π.

Remark 2. Normally, probabilistic model checking of MDPs is concerned with computing the maximum or minimum satisfaction probability across the policy space (Baier and Katoen, 2008). In this work, we instead want to compute probabilities w.r.t. given nominal and interventional/counterfactual policies, not across the entire policy space.

Building on the intuition that our counterfactual operator generalizes PCTL⋆’s probabilistic operator, we demonstrate below that our logic subsumes PCTL⋆.

Proposition 4. Every PCTL⋆ formula is a PCFTL formula, but not viceversa.

Proof. It suffices to prove that PCTL⋆’s probabilistic operator (see Baier and Katoen, 2008) is a special case of our counterfactual operator. Path formulas and their semantics are indeed equivalent between the two logics, with the only difference being that in PCFTL we keep track of the point t in the path at which ϕ is evaluated.

In particular, we show that, for s ∈ 𝒮, P

= ?(ϕ)(𝒫, π, s) =

![]() $ \emptyset $

@0.P

= ?(ϕ)(𝒫, π, (s)), where P

= ?(ϕ)(𝒫, π, s) = P

𝒫(s)({τ′ ∈ Paths

𝒫(s), π

∣(𝒫(s), π, τ′,1)⊨ϕ}) is the quantitative probabilistic operator. By applying (7), we have that

$ \emptyset $

@0.P

= ?(ϕ)(𝒫, π, (s)), where P

= ?(ϕ)(𝒫, π, s) = P

𝒫(s)({τ′ ∈ Paths

𝒫(s), π

∣(𝒫(s), π, τ′,1)⊨ϕ}) is the quantitative probabilistic operator. By applying (7), we have that

where π′ = π (the intervention is empty), and 𝒫′ = 𝒫(s). By Proposition 2, we have that 𝒫(s) = 𝒫(s).□

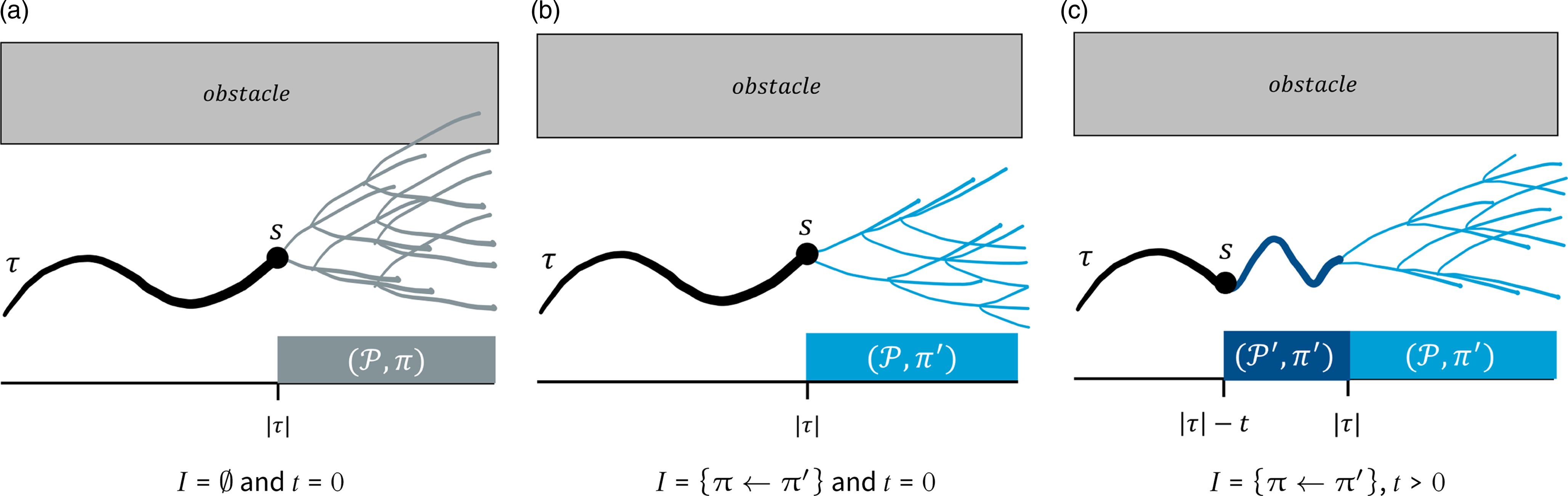

Expressiveness. We discuss the counterfactual operator I

@t

.P

⋈ p

(ϕ) (a similar reasoning holds for I

@t

.R

⋈ r

≤

![]() $ {\cal k} $

). When t = 0, our operator captures the post-interventional probability; that is, the probability of a path formula ϕ after we apply intervention I at the current state. In this case, no counterfactuals need to be inferred because, trivially, we don’t have any observed MDP states beyond the time of intervention (see Figure 4b). Indeed, by Proposition 2, we have that 𝒫τ[|τ|−0:] = 𝒫(τ[|τ|−0]) = 𝒫(τ[|τ|]), that is the counterfactual MDP conditioned on the last state of τ corresponds to the original MDP 𝒫 initialized at that state. For this reason, as also shown in the proof of Propositon 4, our operator subsumes PCTL⋆’s probabilistic formula (which is indeed omitted in PCFTL): when t = 0 and I =

$ {\cal k} $

). When t = 0, our operator captures the post-interventional probability; that is, the probability of a path formula ϕ after we apply intervention I at the current state. In this case, no counterfactuals need to be inferred because, trivially, we don’t have any observed MDP states beyond the time of intervention (see Figure 4b). Indeed, by Proposition 2, we have that 𝒫τ[|τ|−0:] = 𝒫(τ[|τ|−0]) = 𝒫(τ[|τ|]), that is the counterfactual MDP conditioned on the last state of τ corresponds to the original MDP 𝒫 initialized at that state. For this reason, as also shown in the proof of Propositon 4, our operator subsumes PCTL⋆’s probabilistic formula (which is indeed omitted in PCFTL): when t = 0 and I =

![]() $ \emptyset $

, I

@t

.P

⋈ p

(ϕ) corresponds to evaluating P

⋈ p

(ϕ) w.r.t. the original MDP 𝒫 initialized at τ[|τ|−0] = τ[|τ|] and under the original policy π (see Figure 4a). Thus,

$ \emptyset $

, I

@t

.P

⋈ p

(ϕ) corresponds to evaluating P

⋈ p

(ϕ) w.r.t. the original MDP 𝒫 initialized at τ[|τ|−0] = τ[|τ|] and under the original policy π (see Figure 4a). Thus,

![]() $ \emptyset $

@0.P

⋈ p

(ϕ) ≡ P

⋈ p

(ϕ).

$ \emptyset $

@0.P

⋈ p

(ϕ) ≡ P

⋈ p

(ϕ).

When t > 0, our operator expresses a counterfactual query, which answers the question: given that we observed τ, what would have been the probability of ϕ if we had applied a particular intervention I at t steps back in the past (but under the same random circumstances that led to τ)? A common choice is to apply I at the beginning of τ (t = |τ| − 1) but other options are possible, for example intervening before some violation has happened in τ. We stress, however, that our operator goes beyond the usual notion of counterfactuals, by which the outcomes of interest are obtained only from the observed (or counterfactual) path. Indeed, depending on the bounds in the temporal operators of ϕ, evaluating ϕ might require paths that extend beyond τ. Hence, up to the length of τ, ϕ is evaluated on counterfactual paths; beyond that point, paths follow the original MDP model 𝒫 (which is precisely how our counterfactual MDP is constructed, see Def. 6) because there are no observations to condition on. We show why this matters in Example 2 below.

Figure 4. Three scenarios for the evaluation of I @t .P ⋈ p (ϕ). The observed path τ is in black. The counterfactual path (induced by the counterfactual MDP 𝒫′ = 𝒫τ[|τ|−t:] and the intervention policy π′) is in dark blue (in general we have a distribution of such paths, but here we show only one for simplicity). Paths extensions under the nominal policy π are in gray, and those under π′ in light blue. The horizontal axis represents time (or path positions), and the vertical axis the MDP state (continuous and one-dimensional for illustration purposes). While none of the three examples hit the obstacle within the observed/counterfactual path, moving forward, π yields a higher probability of this happening.

Example 2. Consider an MDP 𝒫 and an obstacle avoidance property φ H = 𝒢[0,H]¬obstacle for some horizon H > 0. Let τ be an observed path of 𝒫 under some policy π. Let τ I , with |τ I | = |τ|, denote the counterfactual path obtained from τ by applying some intervention I = {π ← π′} at the start. (For simplicity, we assume that only one counterfactual path is possible.) Now suppose that no obstacle is hit in τ or τ I . So, in usual counterfactual analysis, one would conclude that the nominal policy and the intervention policy are equivalent relative to property φ H and observation τ. However, if the safety property bound H extends beyond the length of τ, then it is necessary to reason about the future evolution of the MDP beyond τ (or τ I ): in one case, starting from the last state of τ and under the nominal policy; in the other, from the last state of the counterfactual path τ I and under I’s policy. At this point, it is entirely possible that going forward from the counterfactual world yields a higher probability of obstacle avoidance than remaining with the nominal policy, as illustrated in Figures 4 a and 4c. Thus, limiting the analysis to outcomes within the observed/counterfactual past, as done in previous work (Oberst and Sontag, Reference Oberst and Sontag2019; Tsirtsis, De, and Rodriguez, Reference Tsirtsis, De and Rodriguez2021), would lead to the wrong conclusion that the two policies are equivalent safety-wise.

Encoding treatment effects. We explain how the introduced causal effect operators

![]() $ \Delta_{@t}^{{I_{1}}, {I_{0}}}$

.P

⋈ p′(ϕ) and

$ \Delta_{@t}^{{I_{1}}, {I_{0}}}$

.P

⋈ p′(ϕ) and

![]() $ \Delta_{@t}^{{I_{1}}, {I_{0}}}$

.R

⋈ r

≤

$ \Delta_{@t}^{{I_{1}}, {I_{0}}}$

.R

⋈ r

≤

![]() $ {\cal k} $

can be used to express the traditional CATE and ITE estimators (defined in Section 2.1). We saw that CATE is the difference of post-interventional probabilities, conditioned on a particular value V = v of some variable V. In reinforcement learning with MDPs, one sensible choice is to condition on the first state of the post-interventional path (Oberst and Sontag, Reference Oberst and Sontag2019). Therefore, for the same argument made above about defining post-interventional probabilities with I

@0.P

⋈ p

(ϕ) formulas, we can express this notion of CATE in PCFTL with the formula

$ {\cal k} $

can be used to express the traditional CATE and ITE estimators (defined in Section 2.1). We saw that CATE is the difference of post-interventional probabilities, conditioned on a particular value V = v of some variable V. In reinforcement learning with MDPs, one sensible choice is to condition on the first state of the post-interventional path (Oberst and Sontag, Reference Oberst and Sontag2019). Therefore, for the same argument made above about defining post-interventional probabilities with I

@0.P

⋈ p

(ϕ) formulas, we can express this notion of CATE in PCFTL with the formula

![]() $ \Delta_{@t}^{{I_{1}}, {I_{0}}}$

.P

⋈ p

(ϕ). The latter indeed is the effect in the probability of ϕ between interventions I

1 and I

0, conditioned on paths starting with τ[|τ|−0] = τ[|τ|] (the last state of τ).

$ \Delta_{@t}^{{I_{1}}, {I_{0}}}$

.P

⋈ p

(ϕ). The latter indeed is the effect in the probability of ϕ between interventions I

1 and I

0, conditioned on paths starting with τ[|τ|−0] = τ[|τ|] (the last state of τ).

ATE, the unconditional version of CATE, cannot be directly expressed in PCFTL because our semantics is defined over a non-empty path τ, and hence, probabilities are implicitly conditional on the last state τ[|τ|]. An equivalent of ATE can be defined as the expected value of the CATE formula

![]() $ \Delta_{@t}^{{I_{1}}, {I_{0}}}$

.P

⋈ p

(ϕ) evaluated at the initial states S ∼ P

I

(S) of the MDP.

$ \Delta_{@t}^{{I_{1}}, {I_{0}}}$

.P

⋈ p

(ϕ) evaluated at the initial states S ∼ P

I

(S) of the MDP.

Finally, akin to how I

@t

.P

⋈ p

(ϕ) with t > 0 expresses a counterfactual probability (as discussed previously), the operator

![]() $ \Delta_{@t}^{{I_{1}}, {I_{0}}}$

.P

⋈ p

(ϕ) with t > 0 provides a notion of ITE, because, like ITE, our operator is defined as the difference of the counterfactual probabilities I

1@t

.P

= ?(ϕ) and I

0@t

.P

= ?(ϕ).

$ \Delta_{@t}^{{I_{1}}, {I_{0}}}$

.P

⋈ p

(ϕ) with t > 0 provides a notion of ITE, because, like ITE, our operator is defined as the difference of the counterfactual probabilities I

1@t

.P

= ?(ϕ) and I

0@t

.P

= ?(ϕ).

4.1. Example properties

Below, we provide examples of useful properties that can be expressed with the newly introduced counterfactual and causal effect operators of PCFTL, for the verification of cyber-physical systems.

Example 3. Let τ denote an observed path (of length τ) in an arbitrary MDP 𝒫 under policy π. Let π′ represent an alternative policy that we can intervene on, defined by I′ = π ← π′, and let ϕ be a path formula describing some requirement of interest. Using PCFTL, we can express many interventional and counterfactual properties related to cyber-physical systems, such as:

-

Safety:

-

– I @|τ| − 1.P ≥ 0.99(𝒢[0,20]signal < threshold): ‘If we had replaced the nominal policy π with π′ at the beginning, would the probability of the signal remaining below a specified safety threshold over the next 20 steps have been at least 99%?”

-

– Δ @0 I′,

$ \emptyset $

. P > 0(𝒢[a,b]ϕ): “Is π′ safer than π moving forward from the current state (between bounds a and b)?” (this is a CATE-like query)

$ \emptyset $

. P > 0(𝒢[a,b]ϕ): “Is π′ safer than π moving forward from the current state (between bounds a and b)?” (this is a CATE-like query)

-

–

$ \emptyset $

@t

.P

< p

(𝒢[a,b]ϕ) → I′@t

.P

≥ p

(𝒢[a,b]ϕ): “Had we deployed π′ t steps in the past, would we have observed a safety probability of at least p if π failed to achieve so?”

$ \emptyset $

@t

.P

< p

(𝒢[a,b]ϕ) → I′@t

.P

≥ p

(𝒢[a,b]ϕ): “Had we deployed π′ t steps in the past, would we have observed a safety probability of at least p if π failed to achieve so?”

-

– I′@t .P = ?(ℱ[t′,t′](¬ϕ∧Δ @0 I″,

$ \emptyset $

. P > 0 ℱ[1,H]ϕ)), where I″ = {(π←π″)} and H ≥ 1 : “What would have been the probability, had we applied π′ t steps in the past, of observing a violation after time t′, and subsequently, of a different policy π″ yielding a better recovery probability than π′?”

$ \emptyset $

. P > 0 ℱ[1,H]ϕ)), where I″ = {(π←π″)} and H ≥ 1 : “What would have been the probability, had we applied π′ t steps in the past, of observing a violation after time t′, and subsequently, of a different policy π″ yielding a better recovery probability than π′?”

-

-

Liveness:

-

– I @|τ| − 1.P ≥ 0.99(𝒢[0,20]waiting_for_resource < ℱ acquired_resource): ‘If we had replaced the nominal policy π with π′ at the beginning, would the probability of avoiding resource starvation over the next 20 steps have been at least 99%?”

-

-

Reachability:

-

– I @0.P ≥ 0.95(ℱ[0,10]goal): “If we apply the intervention I′ = {(π←π′)} in the current state, will the probability of reaching the goal state(s) within 10 steps be at least 95%?”

-

– Δ @0 I′,

$ \emptyset $

. P

≥ 0(ℱ[0,10]goal): “If we replaced the nominal policy π with π′ at the current time step, would this increase the likelihood of reaching the goal state(s) within the next 10 steps?”

$ \emptyset $

. P

≥ 0(ℱ[0,10]goal): “If we replaced the nominal policy π with π′ at the current time step, would this increase the likelihood of reaching the goal state(s) within the next 10 steps?”

-

-

Reward-based properties:

-

– I @10.R ≥ 200 ≤ |τ| : “If we replaced the nominal policy π with π′ in the last 10 time steps, would the expected reward be over 200?”

-

– Δ @|τ| − 1 I′,

$ \emptyset $

. R ≥ 30 ≤ |τ|: “If we replaced the nominal policy π with π′ at the beginning, would the expected reward over τ steps under π′ have been at least 30 higher than the expected reward under π”

$ \emptyset $

. R ≥ 30 ≤ |τ|: “If we replaced the nominal policy π with π′ at the beginning, would the expected reward over τ steps under π′ have been at least 30 higher than the expected reward under π”

-

4.2. Decidability

Despite the added expressiveness, PCFTL remains decidable. First, we note that the transition probability function of a counterfactual MDP, defined in (6), is a well-defined probability measure. Therefore, the set of paths induced by a counterfactual MDP 𝒫′ and some policy is also measurable (Baier and Katoen, 2008), which ensures that we can quantify the probability of a path formula.

A decision procedure for PCFTL can be adapted from the standard model checking algorithm for a DTMC 𝒟 = (𝒮, P

𝒟, P

I

, R, L) and a PCTL* formula Φ (Baier and Katoen, 2008), which we summarise next. The procedure traverses the parse tree of Φ bottom-up. For each node, representing a subformula

![]() $ \Psi $

, the satisfaction set Sat(

$ \Psi $

, the satisfaction set Sat(

![]() $ \Psi $

) = {s ∈ 𝒮 ∣ s ⊨

$ \Psi $

) = {s ∈ 𝒮 ∣ s ⊨

![]() $ \Psi $

} is computed. When

$ \Psi $

} is computed. When

![]() $ \Psi $

is a simple Boolean formula, computing Sat(

$ \Psi $

is a simple Boolean formula, computing Sat(

![]() $ \Psi $

) is straightforward, so we focus on the case

$ \Psi $

) is straightforward, so we focus on the case

![]() $ \Psi $

= P

⋈ p′(ϕ). Here, all maximal state subformulas of ϕ are replaced with new atomic propositions representing their satisfaction sets. This step is possible because the satisfaction sets are precomputed during the bottom-up traversal. This operation effectively transforms ϕ into an LTL property, which enables the computation of P

𝒟(s ⊨ ϕ) using a standard automata-based approach (Baier and Katoen, 2008). Hence, we can compute the satisfaction set of

$ \Psi $

= P

⋈ p′(ϕ). Here, all maximal state subformulas of ϕ are replaced with new atomic propositions representing their satisfaction sets. This step is possible because the satisfaction sets are precomputed during the bottom-up traversal. This operation effectively transforms ϕ into an LTL property, which enables the computation of P

𝒟(s ⊨ ϕ) using a standard automata-based approach (Baier and Katoen, 2008). Hence, we can compute the satisfaction set of

![]() $ \Psi $

as Sat(

$ \Psi $

as Sat(

![]() $ \Psi $

) = {s ∈ 𝒮 ∣P

𝒟(s ⊨ ϕ) ⋈ p′}.

$ \Psi $

) = {s ∈ 𝒮 ∣P

𝒟(s ⊨ ϕ) ⋈ p′}.

Model-checking PCTL* has double-exponential time complexity in |ϕ| due to the transformation of ϕ′ into a deterministic Rabin automaton and polynomial complexity in the size of the DTMC. Moreover, as demonstrated by Kwiatkowska et al. (Reference Kwiatkowska, Norman and Parker2007), determining reward properties does not impact the decidability or time complexity of the model-checking procedure, so we will not discuss this case here.

The decision procedure for PCFTL follows a similar approach. We do not discuss Boolean and reward properties and cover the case when

![]() $ \Psi $

= I

@t

.P

⋈ p

(ϕ) (from which a procedure for

$ \Psi $

= I

@t

.P

⋈ p

(ϕ) (from which a procedure for

![]() $ \Delta_{@t}^{{I_{1}}, {I_{0}}}$

.P

⋈ p′(ϕ) can be easily derived). The key difference here is that the satisfaction set for Sat(

$ \Delta_{@t}^{{I_{1}}, {I_{0}}}$

.P

⋈ p′(ϕ) can be easily derived). The key difference here is that the satisfaction set for Sat(

![]() $ \Psi $

) cannot include states, but it must include paths because the satisfaction of I

@t

.P

⋈ p

(ϕ) depends on an (observed) path. It is important to note that this set will include paths of at most length T where T is the largest t offset of an intervention appearing in any state subformula. Indeed, it is easy to see that the satisfaction of I

@t

.P

⋈ p

(ϕ) w.r.t. a path τ (with |τ| ≥ t) depends only on the t-length suffix of τ (which is the suffix used to construct the counterfactual MDP, see (7)). To transform the path formula ϕ into an equivalent LTL formula (as done above), we now need to express these satisfaction sets (defined over finite paths, i.e., sequences of states) as atomic propositions (defined over states). This is possible by augmenting the MDP with memory to keep track of the last T − 1 visited states.Footnote

6

In this way, there is a direct correspondence between the elements of Sat(

$ \Psi $

) cannot include states, but it must include paths because the satisfaction of I

@t

.P

⋈ p

(ϕ) depends on an (observed) path. It is important to note that this set will include paths of at most length T where T is the largest t offset of an intervention appearing in any state subformula. Indeed, it is easy to see that the satisfaction of I

@t

.P

⋈ p

(ϕ) w.r.t. a path τ (with |τ| ≥ t) depends only on the t-length suffix of τ (which is the suffix used to construct the counterfactual MDP, see (7)). To transform the path formula ϕ into an equivalent LTL formula (as done above), we now need to express these satisfaction sets (defined over finite paths, i.e., sequences of states) as atomic propositions (defined over states). This is possible by augmenting the MDP with memory to keep track of the last T − 1 visited states.Footnote

6

In this way, there is a direct correspondence between the elements of Sat(

![]() $ \Psi $

) and the states of the augmented MDP, as desired. So, we can now construct our sets as done for the PCTL* case above, as Sat(

$ \Psi $

) and the states of the augmented MDP, as desired. So, we can now construct our sets as done for the PCTL* case above, as Sat(

![]() $ \Psi $

) = {τ ∈ ⋃1 ≤ i ≤ T

𝒮

i

∣ P

𝒟τ, π′

(τ[1]⊨ϕ)} where 𝒟τ, π′ is the (counterfactual) DTMC induced by the interventional policy π′ and by the counterfactual MDP associated to the original MDP and path τ. Having shown that PCFTL model checking reduces to PCTL* model checking, its complexity is still polynomial in the size of the induced (counterfactual) DTMC, as the state space size of the augmented model is polynomial in that of the induced DTMC.

$ \Psi $

) = {τ ∈ ⋃1 ≤ i ≤ T

𝒮

i

∣ P

𝒟τ, π′

(τ[1]⊨ϕ)} where 𝒟τ, π′ is the (counterfactual) DTMC induced by the interventional policy π′ and by the counterfactual MDP associated to the original MDP and path τ. Having shown that PCFTL model checking reduces to PCTL* model checking, its complexity is still polynomial in the size of the induced (counterfactual) DTMC, as the state space size of the augmented model is polynomial in that of the induced DTMC.

5. PCFTL verification with statistical model checking

We use statistical model checking (SMC) (Younes and Simmons, Reference Younes and Simmons2006; Legay et al., Reference Legay, Delahaye and Bensalem2010) to determine whether our properties are satisfied, that is by sampling finite paths of the (counterfactual) MDP model. We leave the study of numerical-symbolic algorithms for future work.

Since we deal with finite paths, we consider a fragment of the logic with bounded temporal operators. Also, we restrict to non-nested properties, that is those where path sub-formulas ϕ do not contain in turn counterfactual operators (even though we allow for arbitrary nesting of temporal operators in ϕ). The complication with nested formulas is that we require multiple executions to determine the satisfaction of ϕ, leading to a sample size that is exponential in the depth of the nested operator (Younes and Simmons, Reference Younes and Simmons2006; Legay et al., Reference Legay, Delahaye and Bensalem2010). Nevertheless, the fragment we consider is rich enough to express a variety of reinforcement learning tasks (see Section 6) and subsumes Probabilistic Bounded LTL (Zuliani, Platzer, and Clarke, Reference Zuliani, Platzer and Clarke2013) (because our counterfactual formulas generalize probabilistic ones).

In short, with SMC we reduce the problem of checking I @t .P ≥ p (ϕ) to one of hypothesis testing, given a sample of MDP realizations. As in (Younes and Simmons, Reference Younes and Simmons2006; Legay et al., Reference Legay, Delahaye and Bensalem2010), we employ a sequential scheme that allows sampling only the number of paths necessary to ensure a priori probabilities α and β of type-1 errors (wrongly concluding that the property is false) and type-2 errors (wrongly concluding that it is true), respectively. Our approach builds on (Younes and Simmons, Reference Younes and Simmons2006; Legay et al., Reference Legay, Delahaye and Bensalem2010) and extends it to handle reward and causal effect properties, by defining a suitable sequential test for T-distributed outcomes (rather than Bernoulli ones as done in Younes and Simmons (Reference Younes and Simmons2006) and Legay et al. (Reference Legay, Delahaye and Bensalem2010)).

5.1. Computation of counterfactuals and causal effects

SMC relies on sampling paths of the (counterfactual) MDP model under some policy. We choose to sample these paths using the Gumbel-Max trick (see (5)) as it facilitates inference for the causal effect operator, as we will explain next. Using this formulation, we can express the counterfactual probability of (7) as the expectation of a function

![]() $f(\bf {G})$

of (prior) Gumbel variables

$f(\bf {G})$

of (prior) Gumbel variables

![]() ${\bf {G}}\sim \rm {Gumbel}$

, as follows:

${\bf {G}}\sim \rm {Gumbel}$

, as follows:

where

![]() $\bf {1}$

is the indicator function, 𝒫′ = 𝒫τ[|τ|−t:] is the counterfactual MDP, π′ is intervention I’s policy, and

$\bf {1}$

is the indicator function, 𝒫′ = 𝒫τ[|τ|−t:] is the counterfactual MDP, π′ is intervention I’s policy, and

![]() $\tau '(\bf {G})$

is the path of 𝒫′ under π′ which is uniquely determined by

$\tau '(\bf {G})$

is the path of 𝒫′ under π′ which is uniquely determined by

![]() $\bf {G}$

.Footnote

7

$\bf {G}$

.Footnote

7

The corresponding formulation for the counterfactual reward of (8) is readily obtained as:

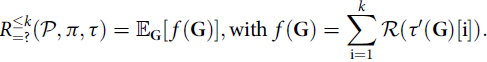

$$ R^{\leq \it k}_{=?}({\cal {P}}, \pi, \tau ) = {\mathbb {E}}_{{\bf {G}}}[\,f({\bf {G}})], \text { with } {\it \ f}({\bf {G}})=\sum _{i=1}^{\it k} {\cal {R}}(\tau '({\bf {G}})[i]). $$

$$ R^{\leq \it k}_{=?}({\cal {P}}, \pi, \tau ) = {\mathbb {E}}_{{\bf {G}}}[\,f({\bf {G}})], \text { with } {\it \ f}({\bf {G}})=\sum _{i=1}^{\it k} {\cal {R}}(\tau '({\bf {G}})[i]). $$

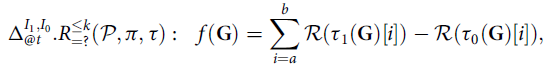

We proceed similarly for causal effect operators, with one important difference. While in Definition 7, we formulated the causal effect as the difference of two independent probabilities (or expected rewards), we here express it as the mean of paired differences between individual outcomes. This will allow us to reduce a two-sample inference problem into a one-sample problem.

$$\Delta ^{I_1,I_0}_{@t}.R^{\leq k}_{=?}({\cal {P}}, \pi, \tau ) : \ \ f({\bf {G}})=\sum _{i=a}^b {\cal {R}}(\tau _1({\bf {G}})[i])-{\cal {R}}(\tau _0({\bf {G}})[i]),$$

$$\Delta ^{I_1,I_0}_{@t}.R^{\leq k}_{=?}({\cal {P}}, \pi, \tau ) : \ \ f({\bf {G}})=\sum _{i=a}^b {\cal {R}}(\tau _1({\bf {G}})[i])-{\cal {R}}(\tau _0({\bf {G}})[i]),$$

where for i = 0, 1, π

i

is I

i

’s policy, and

![]() $\tau _i({\bf {G}})$

is the path of the counterfactual MDP 𝒫′ under π

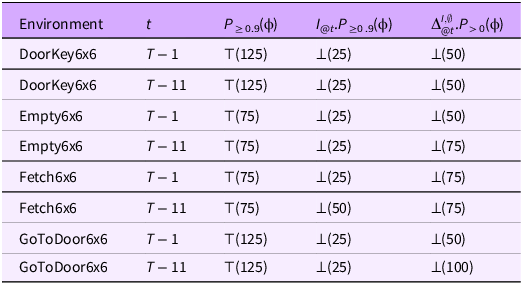

i