Highlights

What is already known?

Traditional methods for assessing calibration in clinical prediction models often assume independence between observations. However, when performing an external validation where data are clustered, this assumption is violated. Ignoring clustering can impair the reliability of calibration assessments, potentially leading to misguided clinical decisions.

What is new?

We introduce three novel methodologies that explicitly account for clustering in calibration assessments during clinical prediction model validation. These methods generate an overall calibration curve with PI, representing expected calibration curves in hypothetical new clusters. Among them, the MIX-C method is particularly recommended, as it provides cluster-specific calibration curves, as well as the 2MA-C with splines, as it provides good overall calibration and PI with decent coverage. To facilitate adoption, we provide ready-to-use R functions as part of the CalibrationCurves R package.

Potential impact for RSM readers

External validations aim to assess how clinical prediction models perform across diverse external settings. Neglecting the clustered nature of data in multicenter validations or meta-analyses of validation studies undermines calibration analysis, overlooking cluster-specific insights. The methodologies we propose address these limitations by estimating overall calibration curves and offering PI for hypothetical new clusters. This approach enables more refined and reliable calibration assessments, directly supporting informed decision making in clinical research.

1 Introduction

Clinical prediction models (CPMs) are evidence-based tools that estimate the probability of health-related events either at the time of evaluation (diagnosis) or at some point in the future (prognosis).Reference Steyerberg1, Reference van Smeden, Reitsma, Riley, Collins and Moons2 These risk estimates play a crucial role in evidence-based decision making and can be of great value when counseling patients. However, to ensure the reliability of risk estimates, CPMs must exhibit good calibration.Reference Van Calster and Vickers3 Calibration assesses how well the predicted risks correspond to observed risks.Reference Van Calster, Nieboer, Vergouwe, De Cock, Pencina and Steyerberg4 In recent years, there has been a notable increase in studies involving multiple clusters. The term “cluster” can refer to different groupings within the population under study, depending on the context. For example, in multicenter studies, a cluster commonly refers to different centers, whereas in meta-analysis, a cluster may pertain to each study or to centers within the study where possible (e.g., individual patient data (IPD) meta-analysisReference Riley, Stewart and Tierney5). In this work, the term “cluster” will specifically denote a center in a multicenter study, but the methods proposed apply more generally as well. An analysis of CPMs included in Tufts PACE (Predictive Analytics + Comparative Effectiveness), developed after the year 2000 revealed that 64% of the models utilized multicenter (clustered) data, indicating a growing trend in such studies.Reference Wynants, Kent, Timmerman, Lundquist and Van Calster6, Reference Wessler, Paulus and Lundquist7 TRIPOD (Transparent Reporting of a Multivariable Prediction Model for Individual Prognosis or Diagnosis) has addressed this development by publishing an extension known as TRIPOD-Cluster.Reference Debray, Collins and Riley8 This extension underscores the importance of taking clustering into account when developing or evaluating prediction models.

Miscalibration can have a harmful influence on medical decision making.Reference Van Calster and Vickers3 It is common to observe heterogeneity in model performance across centers or studies, most notably in model calibration.Reference Van Calster, Valentin and Froyman9– Reference WAC12 It can thus be misleading to generalize performance results from single-cluster data.Reference Wynants, Vergouwe, Van Huffel, Timmerman and Van Calster13– Reference Moher, Schulz, Simera and Altman16 For these reasons, it is crucial to collect clustered data to investigate and quantify heterogeneity in calibration performance between clusters. The presence of clustering introduces challenges, as individuals are no longer independent due to correlations among patients within the same cluster.Reference Snijders and Bosker17, Reference Finch, Bolin and Kelley18 Traditional (non-clustered) methodologies should therefore be adapted for use with clustered data.

The most informative assessment of calibration performance for a prediction model is a calibration plot, which is used to assess whether, among patients with the same estimated risk of the event, the observed proportion of events equals the estimated risk.Reference Van Calster, Nieboer, Vergouwe, De Cock, Pencina and Steyerberg4,

Reference Van Calster, Collins and Vickers19 A calibration plot presents estimated risks on the x-axis and the observed proportion on the y-axis. For binary outcomes, the observed outcome values are

![]() $Y=0$

(no event) or

$Y=0$

(no event) or

![]() $Y=1$

(event). The observed proportion of individuals with the event conditional on the estimated risk is not observed directly, but is estimated. One approach consists of creating

$Y=1$

(event). The observed proportion of individuals with the event conditional on the estimated risk is not observed directly, but is estimated. One approach consists of creating

![]() $Q$

groups (usually based on quantiles of estimated risks) and estimating the observed proportion in each of the groups as the proportion of individuals with the condition. We refer to this approach throughout the text as grouped calibration. The calibration plot then has

$Q$

groups (usually based on quantiles of estimated risks) and estimating the observed proportion in each of the groups as the proportion of individuals with the condition. We refer to this approach throughout the text as grouped calibration. The calibration plot then has

![]() $Q$

dots representing the mean risk (value on the x-axis) and observed proportion (value on the y-axis). Another approach is using a flexible model.Reference Austin and Steyerberg20 The observed outcome is regressed on the estimated probabilities using a smoother such as local regression (LOESS) or splines. The smoothed relation or

$Q$

dots representing the mean risk (value on the x-axis) and observed proportion (value on the y-axis). Another approach is using a flexible model.Reference Austin and Steyerberg20 The observed outcome is regressed on the estimated probabilities using a smoother such as local regression (LOESS) or splines. The smoothed relation or

![]() $Q$

groups are plotted together with a line of identity, which represents perfect calibration. The grouped approach loses information by categorizing the estimated risks and depends on the value of

$Q$

groups are plotted together with a line of identity, which represents perfect calibration. The grouped approach loses information by categorizing the estimated risks and depends on the value of

![]() $Q$

and the grouping approach. Flexible models are dependent on smoothing parameters. Flexible calibration curves have been presented for different problems like survival modelsReference Austin, Harrell and Van Klaveren21, competing risk modelsReference Austin, Putter, Giardiello and van Klaveren22, or multiclass models.Reference Van Hoorde, Van Huffel, Timmerman, Bourne and Van Calster23 Flexible calibration curves that consider clustering have been understudied. Although summarizing statistics of calibration (e.g., O:E) can be meta-analyzed across clusters, such summarizing statistics are by definition less informative than the calibration plot.Reference Van Calster, Collins and Vickers19,

Reference Debray, Damen and Snell24 We present three different approaches to construct flexible calibration curves from clustered data, provide cluster-specific curves, and quantify heterogeneity using prediction intervals (PI). We illustrate the methodologies with a real case example on ovarian cancer data and compare the performance of the methodology using simulated data and synthetic data. Section 2 presents the motivating example, the general notation used throughout the article, and introduces the three approaches to obtain calibration plots accounting for clustering. Sections 3 and 4 present the methodology and results of the simulation study and synthetic data analysis, respectively, and in Section 5, we conclude by discussing our findings. This study is an initial assessment of methods for clustered calibration analysis that we classify as a phase 2 methodological study in the four-phase framework from Heinze et al.Reference Heinze, Boulesteix, Kammer, Morris and White25

$Q$

and the grouping approach. Flexible models are dependent on smoothing parameters. Flexible calibration curves have been presented for different problems like survival modelsReference Austin, Harrell and Van Klaveren21, competing risk modelsReference Austin, Putter, Giardiello and van Klaveren22, or multiclass models.Reference Van Hoorde, Van Huffel, Timmerman, Bourne and Van Calster23 Flexible calibration curves that consider clustering have been understudied. Although summarizing statistics of calibration (e.g., O:E) can be meta-analyzed across clusters, such summarizing statistics are by definition less informative than the calibration plot.Reference Van Calster, Collins and Vickers19,

Reference Debray, Damen and Snell24 We present three different approaches to construct flexible calibration curves from clustered data, provide cluster-specific curves, and quantify heterogeneity using prediction intervals (PI). We illustrate the methodologies with a real case example on ovarian cancer data and compare the performance of the methodology using simulated data and synthetic data. Section 2 presents the motivating example, the general notation used throughout the article, and introduces the three approaches to obtain calibration plots accounting for clustering. Sections 3 and 4 present the methodology and results of the simulation study and synthetic data analysis, respectively, and in Section 5, we conclude by discussing our findings. This study is an initial assessment of methods for clustered calibration analysis that we classify as a phase 2 methodological study in the four-phase framework from Heinze et al.Reference Heinze, Boulesteix, Kammer, Morris and White25

2 Methods

2.1 Motivating example: ADNEX model and ovarian cancer data

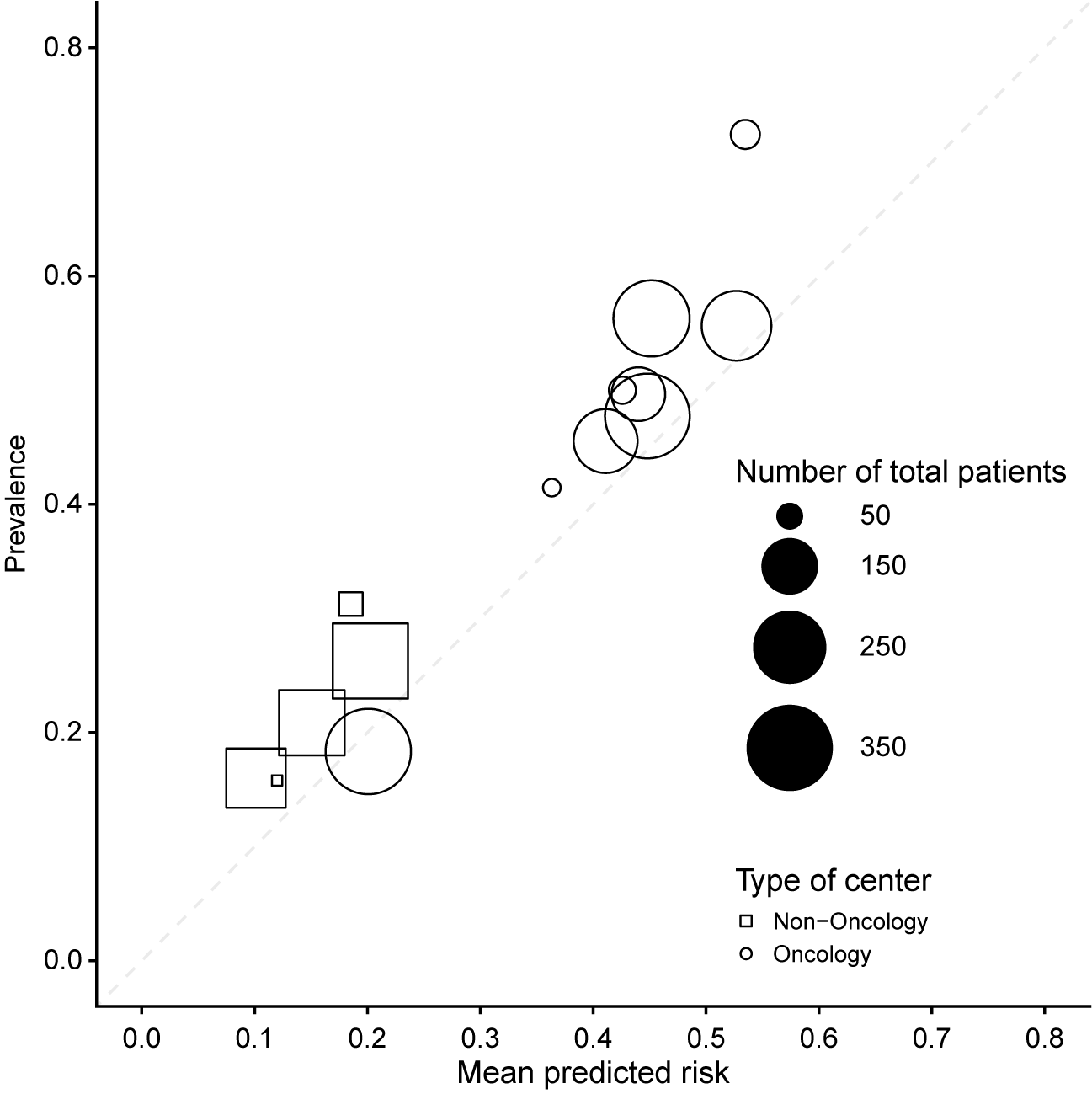

The methods will be illustrated using the ADNEX model for ovarian tumor discrimination, which was developed with data from the International Ovarian Tumor Analysis (IOTA) group.Reference Van Calster, Hoorde and Valentin26, Reference Timmerman, Ledger and Bourne27 The ADNEX model is a multinomial regression mixed model with random intercepts per center to estimate the probability that an ovarian mass is malignant. Predicting the malignancy of ovarian masses prior to surgery is important because benign masses can be managed conservatively, and malignant masses require different surgical approaches depending on the malignant subtype. ADNEX estimates the risk of five outcomes: benign tumor, borderline tumor, stage I invasive cancer, stage II–IV invasive cancer, and secondary metastasis. This work will focus on the overall risk of malignancy, which is obtained by adding the risks for the malignant subtypes. ADNEX has three clinical and six ultrasound predictors: age, serum CA125 level, type of center (oncology center or other hospital type), maximal diameter of the lesion, proportion of solid tissue, number of papillary projections, presence of more than10 locules, acoustic shadows, and ascites. CA125 is optional, and in this work, we focus on ADNEX without CA125. ADNEX coefficients already capture some of the between-center heterogeneity through the type of center. To illustrate multicenter calibration of the ADNEX model, we use an external validation dataset of 2489 patients recruited in 17 hospitals.Reference Kaijser28 Previous external validation of ADNEX on this dataset suggested that there was important heterogeneity between hospitals (Supplementary Figure S1).Reference Van Calster, Valentin and Froyman9 To avoid computation errors, three small non-oncology centers in Italy were combined, as well as two small non-oncology centers in the United Kingdom. The dataset then contains 14 clusters, with a median number of 189 patients per cluster (range 38 to 360) (Figure 1). The mean estimated probabilities (range 0.10 to 0.53) and the prevalence of malignancy (range 0.16 to 0.72) varied between clusters. The intra-cluster correlation (ICC) in (logit) malignancy risk based on the null random intercept model was 15%.Reference Snijders and Bosker17 An ICC of 0 would mean that all clusters are similar, and all variance in the logit malignancy risk is due to individual-level variability.

Figure 1 Prevalence and mean predicted ADNEX risk by center across the 14 centers in the dataset. The dashed diagonal line indicates perfect calibration.

2.2 Notation

We assume a dataset with a total of

![]() ${J}$

clusters and use

${J}$

clusters and use

![]() $j=\left(1,\dots, J\right)$

to index the clusters. In each cluster, we have

$j=\left(1,\dots, J\right)$

to index the clusters. In each cluster, we have

![]() ${n}_j$

patients, and we index them using

${n}_j$

patients, and we index them using

![]() $i=\left(1,\dots, {n}_j\right)$

. The total sample size is

$i=\left(1,\dots, {n}_j\right)$

. The total sample size is

![]() ${N={\sum}_{j=1}^J{n}_j}$

. We use

${N={\sum}_{j=1}^J{n}_j}$

. We use

![]() ${y}_{ij}\in 0,1$

to denote the outcome of patient

${y}_{ij}\in 0,1$

to denote the outcome of patient

![]() $i$

in cluster

$i$

in cluster

![]() $j$

, which takes on the value 0 in the case of a non-event and 1 in the case of an event. We assume that

$j$

, which takes on the value 0 in the case of a non-event and 1 in the case of an event. We assume that

![]() ${y}_{ij}$

follows a Bernoulli distribution

${y}_{ij}$

follows a Bernoulli distribution

![]() ${y}_{ij}\sim \mathrm{Bern}\left({\pi}_{ij}\right)$

, where

${y}_{ij}\sim \mathrm{Bern}\left({\pi}_{ij}\right)$

, where

![]() ${\pi}_{ij}$

denotes the probability of experiencing the event. Typically,

${\pi}_{ij}$

denotes the probability of experiencing the event. Typically,

![]() ${\pi}_{ij}$

is expressed as a function of a set of risk characteristics, captured in the covariate vector

${\pi}_{ij}$

is expressed as a function of a set of risk characteristics, captured in the covariate vector

![]() ${\boldsymbol{x}}_{ij}$

, and we assume that there exists an unknown regression function

${\boldsymbol{x}}_{ij}$

, and we assume that there exists an unknown regression function

![]() $r\left({\boldsymbol{x}}_{ij}\right)=P\left({y}_{ij}=1|{\boldsymbol{x}}_{ij}\right)$

. We approximate this function using a risk prediction model, where we model the outcome as a function of the observed risk characteristics and employ statistical or machine learning techniques to estimate this model. A general expression that encompasses both types is

$r\left({\boldsymbol{x}}_{ij}\right)=P\left({y}_{ij}=1|{\boldsymbol{x}}_{ij}\right)$

. We approximate this function using a risk prediction model, where we model the outcome as a function of the observed risk characteristics and employ statistical or machine learning techniques to estimate this model. A general expression that encompasses both types is

where

![]() $f\left(\cdotp \right)$

denotes the predicted risk given covariate vector

$f\left(\cdotp \right)$

denotes the predicted risk given covariate vector

![]() ${\boldsymbol{x}}_{ij}$

. In a logistic regression model, we have that

${\boldsymbol{x}}_{ij}$

. In a logistic regression model, we have that

$$\begin{align*}\unicode{x3c0} \left({\boldsymbol{x}}_{ij}\right)=\frac{e^{{\boldsymbol{x}}_{ij}^T\boldsymbol{\beta}}}{1+{e}^{{\boldsymbol{x}}_{ij}^T\boldsymbol{\beta}}}\end{align*}$$

$$\begin{align*}\unicode{x3c0} \left({\boldsymbol{x}}_{ij}\right)=\frac{e^{{\boldsymbol{x}}_{ij}^T\boldsymbol{\beta}}}{1+{e}^{{\boldsymbol{x}}_{ij}^T\boldsymbol{\beta}}}\end{align*}$$

where

![]() $\boldsymbol{\beta}$

denotes the parameter vector. We can rewrite the above equation as

$\boldsymbol{\beta}$

denotes the parameter vector. We can rewrite the above equation as

$$\begin{align*}\kern0.36em \log \left(\frac{\unicode{x3c0} \left({\boldsymbol{x}}_{ij}\right)}{1-\unicode{x3c0} \left({\boldsymbol{x}}_{ij}\right)}\right)={\boldsymbol{x}}_{ij}^T\boldsymbol{\beta}\end{align*}$$

$$\begin{align*}\kern0.36em \log \left(\frac{\unicode{x3c0} \left({\boldsymbol{x}}_{ij}\right)}{1-\unicode{x3c0} \left({\boldsymbol{x}}_{ij}\right)}\right)={\boldsymbol{x}}_{ij}^T\boldsymbol{\beta}\end{align*}$$

We can employ splines to allow for a non-linear relationship between the covariates and the outcome.Reference Harrell29 To estimate equation (1), we fit a statistical or machine learning model to the training data. The resulting model fit then provides us with the predicted probability

![]() $\widehat{\unicode{x3c0}}\left({\boldsymbol{x}}_{ij}\right)$

.

$\widehat{\unicode{x3c0}}\left({\boldsymbol{x}}_{ij}\right)$

.

Using calibration curves, we assess how well the predicted probabilities correspond to the actual event probabilities.Reference Steyerberg1,

Reference Van Calster, Nieboer, Vergouwe, De Cock, Pencina and Steyerberg4,

Reference Dimitriadis, Dümbgen, Henzi, Puke and Ziegel30,

Reference De Cock Campo31 A calibration plot maps the predicted probabilities

![]() $\unicode{x3c0}\left({\boldsymbol{x}}_{ij}\right)$

to the actual event probabilities

$\unicode{x3c0}\left({\boldsymbol{x}}_{ij}\right)$

to the actual event probabilities

![]() $\mathrm{P}\left({y}_{ij}=1\mid \unicode{x3c0}\left({\boldsymbol{x}}_{ij}\right)\right)$

and hereby provides a visual representation of the alignment between the model’s estimated risks and the true probabilities. For a perfectly calibrated model, the calibration curve follows the diagonal as

$\mathrm{P}\left({y}_{ij}=1\mid \unicode{x3c0}\left({\boldsymbol{x}}_{ij}\right)\right)$

and hereby provides a visual representation of the alignment between the model’s estimated risks and the true probabilities. For a perfectly calibrated model, the calibration curve follows the diagonal as

![]() $\mathrm{P}\left({y}_{ij}=1\mid \unicode{x3c0}\left({\boldsymbol{x}}_{ij}\right)\right)=\pi \left({\boldsymbol{x}}_{ij}\right)$

for all

$\mathrm{P}\left({y}_{ij}=1\mid \unicode{x3c0}\left({\boldsymbol{x}}_{ij}\right)\right)=\pi \left({\boldsymbol{x}}_{ij}\right)$

for all

![]() $i$

and all

$i$

and all

![]() $j$

.

$j$

.

2.3 Flexible calibration plots ignoring clustering

We can estimate the calibration curve using a logistic regression modelReference De Cock Campo31– Reference Cox33

where we estimate the observed proportions as a function of the (logit-transformed) predicted probabilities. Using the fitted model, we create a calibration curve by plotting

![]() $\widehat{\unicode{x3c0}}\left({\boldsymbol{x}}_{ij}\right)$

against the observed proportions

$\widehat{\unicode{x3c0}}\left({\boldsymbol{x}}_{ij}\right)$

against the observed proportions

![]() $\widehat{P}\left({y}_{ij}=1\mid \widehat{\unicode{x3c0}}\left({\boldsymbol{x}}_{ij}\right)\right)$

. This model, however, only allows for a linear relationship and, as such, does not adequately capture moderate calibration. To allow for a non-linear relationship between

$\widehat{P}\left({y}_{ij}=1\mid \widehat{\unicode{x3c0}}\left({\boldsymbol{x}}_{ij}\right)\right)$

. This model, however, only allows for a linear relationship and, as such, does not adequately capture moderate calibration. To allow for a non-linear relationship between

![]() $\widehat{\unicode{x3c0}}\left({\boldsymbol{x}}_{ij}\right)$

and

$\widehat{\unicode{x3c0}}\left({\boldsymbol{x}}_{ij}\right)$

and

![]() ${y}_{ij}$

, we can rely on non-parametric smoothers, such as locally estimated scatterplot smoothing (LOESS) or restricted cubic splines

${y}_{ij}$

, we can rely on non-parametric smoothers, such as locally estimated scatterplot smoothing (LOESS) or restricted cubic splines

Here,

![]() $s\left(\cdotp \right)$

denotes the smooth function applied to the logit-transformed predicted probability. This results in a flexible calibration plot, which is implemented in R packages such as CalibrationCurves (val.prob.ci function), rms (val.prob function), or tidyverse (cal_plot_logistic function).Reference Van Calster, Nieboer, Vergouwe, De Cock, Pencina and Steyerberg4(p. 20),

Reference Harrell29,

Reference Wickham, Averick and Bryan34,

35

$s\left(\cdotp \right)$

denotes the smooth function applied to the logit-transformed predicted probability. This results in a flexible calibration plot, which is implemented in R packages such as CalibrationCurves (val.prob.ci function), rms (val.prob function), or tidyverse (cal_plot_logistic function).Reference Van Calster, Nieboer, Vergouwe, De Cock, Pencina and Steyerberg4(p. 20),

Reference Harrell29,

Reference Wickham, Averick and Bryan34,

35

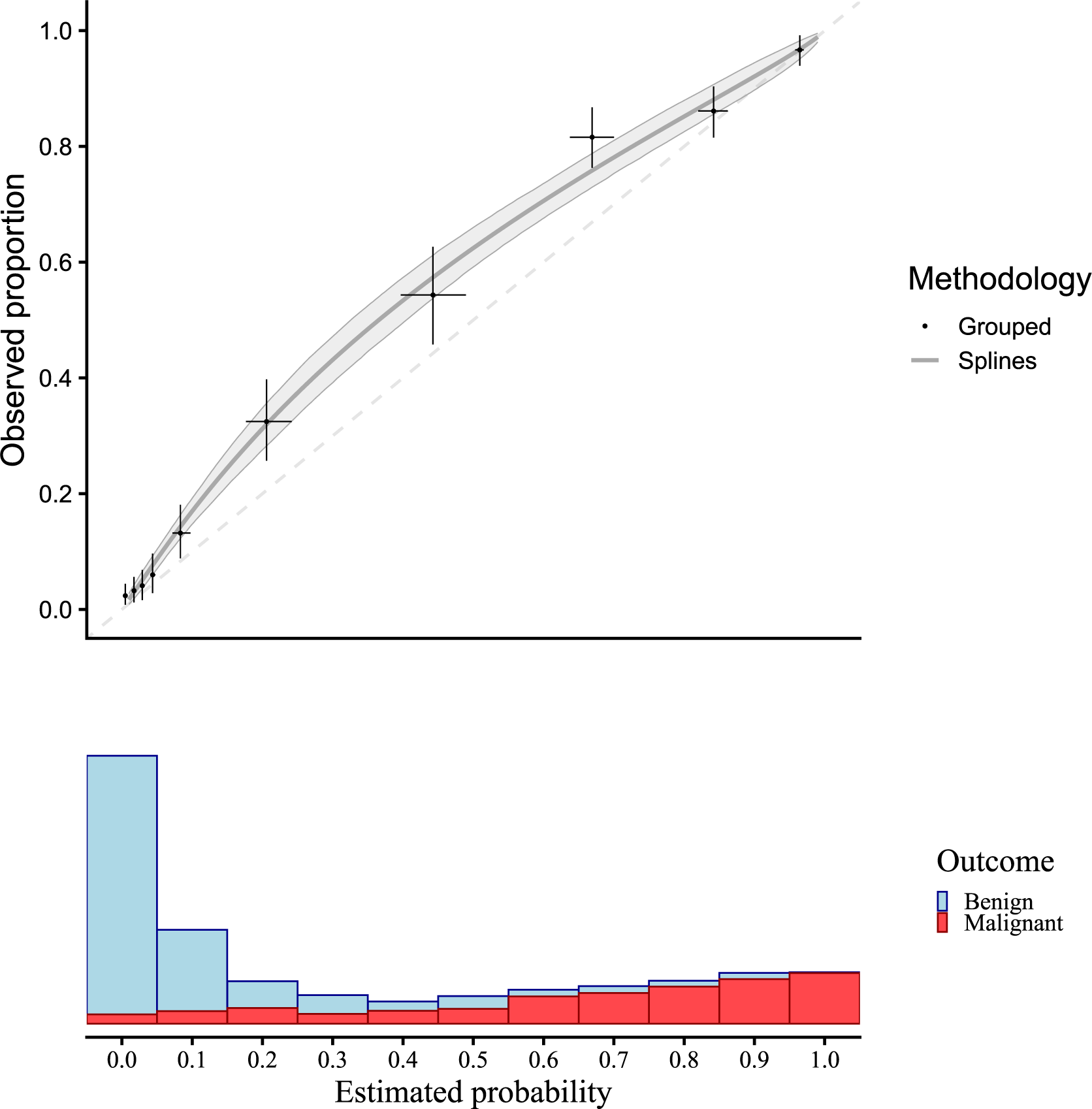

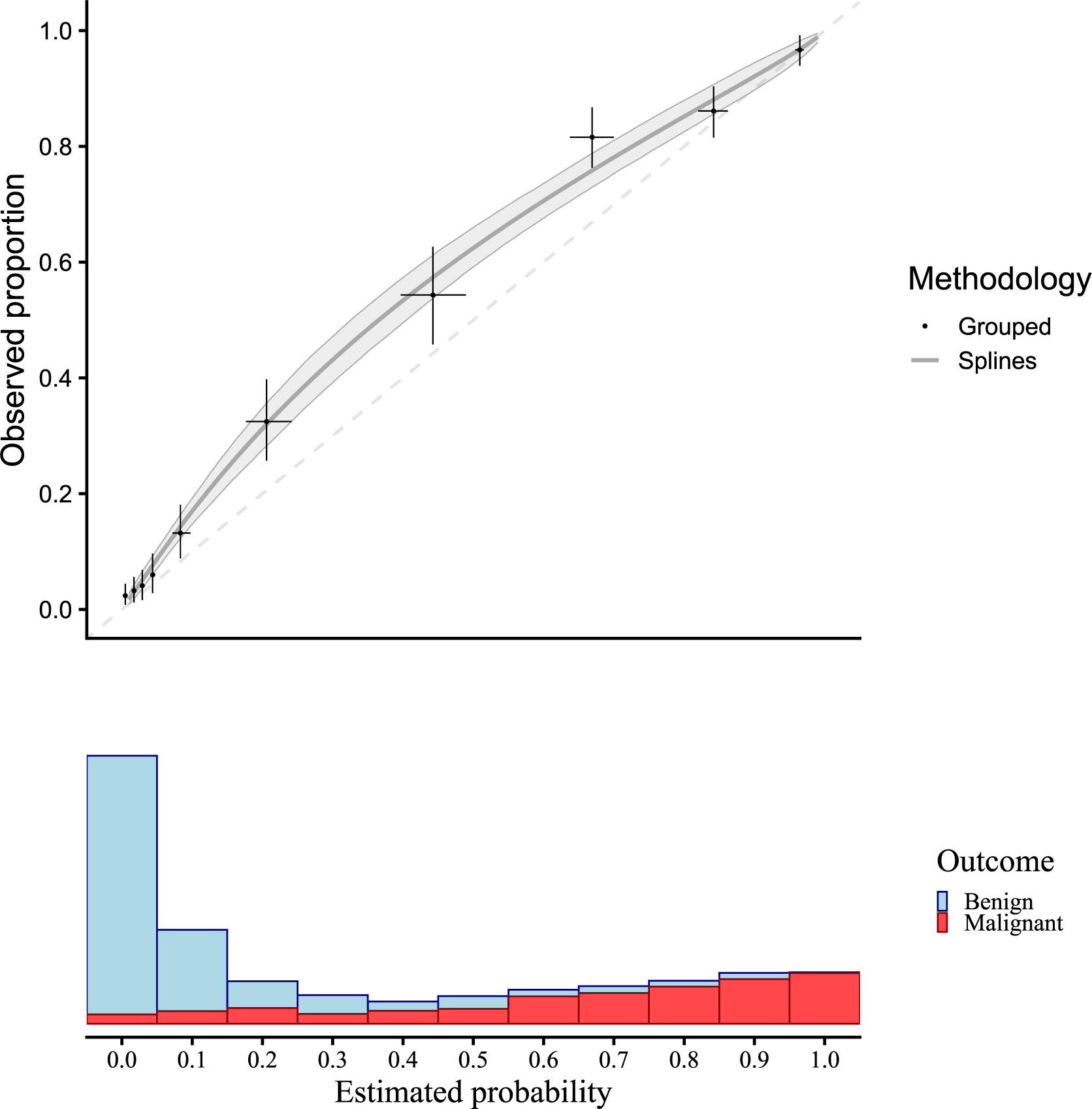

Figure 2 presents a flexible calibration curve (estimated using a restricted cubic spline) based on the motivating example with pooled data, and the results of a grouped calibration assessment for ovarian malignancy prediction. The panel below shows the sample distribution of the estimated risks for benign cases (blue) and malignant cases (red). The plots suggest that risks were estimated too low.

Figure 2 Traditional flexible calibration curves for the ADNEX model in the motivating example. Observed proportion is estimated with a logistic model with restricted cubic splines to model non-linear effects, and estimated risks are grouped in 10 groups. Confidence intervals are shown for 1000 bootstraps with a shaded area for splines and a + for grouped calibration. The dashed diagonal line indicates perfect calibration.

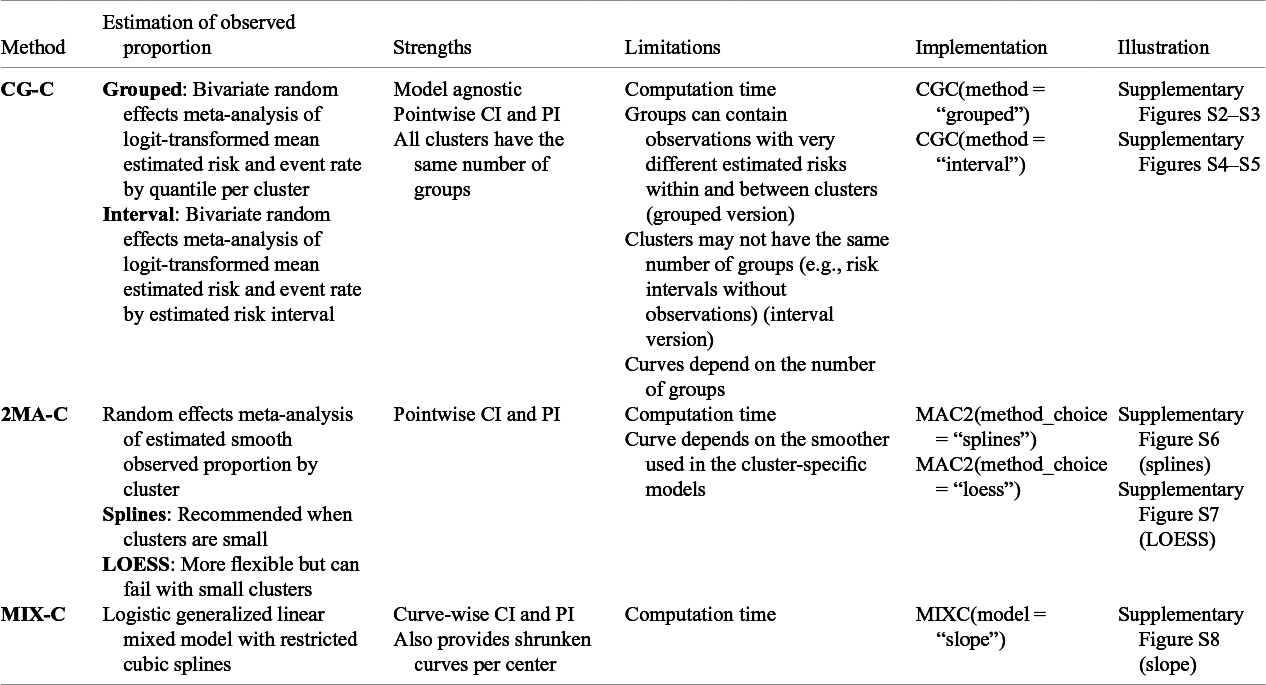

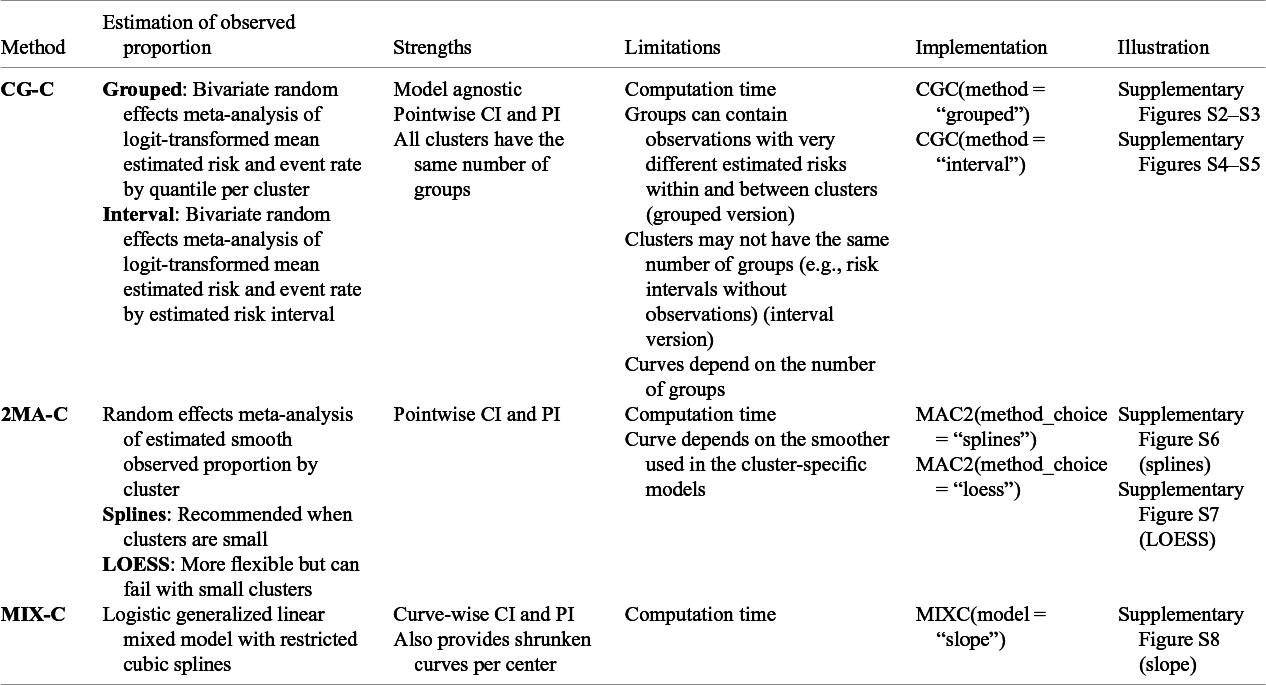

Since there is no commonly accepted way to estimate calibration curves for prediction models in clustered data, we present three approaches covering different statistical methodologies. First, we present a grouped clustered calibration plot based on a bivariate random effects meta-analysis model (clustered group calibration, CG-C). Second, we introduce a two-stage univariate random effects meta-analytical approach for the estimation of the observed events (two-stage meta-analysis calibration, 2MA-C). Finally, we provide a one-step approach where we fit a random effects model with smooth effects to obtain individual calibration slopes per cluster (mixed model calibration, MIX-C). We present the summary of the three proposed approaches in Table 1.

Table 1 Overview of introduced methodologies for creating flexible calibration curves accounting for clustering

Note: Implementation in the code included in the OSF. For the implementation in CalibrationCurves package go to the package documentation.

2.4 Clustered group calibration (CG-C)

The CG-C is a two-stage approach that extends the traditional grouped calibration to clustered data. In the traditional approach, data are pooled and groups are created based on quantiles (often deciles). In CG-C, we create

![]() $Q$

quantiles in every cluster (based on the estimated risk distribution per cluster) with

$Q$

quantiles in every cluster (based on the estimated risk distribution per cluster) with

![]() $q=\left(1,\dots, Q\right)$

. Hereafter, we pool the estimated risks and observed proportions with a bivariate random effects meta-analysis at each quantile

$q=\left(1,\dots, Q\right)$

. Hereafter, we pool the estimated risks and observed proportions with a bivariate random effects meta-analysis at each quantile

![]() $q$

. The logit-transformed observed proportion and average estimated risk are the response variables, and we capture the cluster-specific deviation by including a random intercept. The equation of this model is given by

$q$

. The logit-transformed observed proportion and average estimated risk are the response variables, and we capture the cluster-specific deviation by including a random intercept. The equation of this model is given by

$$\begin{align}{\displaystyle \left[\genfrac{}{}{0pt}{}{\mathrm{logit}\left({\overline{y}}_{qj}\right)}{\mathrm{logit}\left({\overline{\widehat{\pi}}}_{qj}\right)}\right]=\left[\genfrac{}{}{0pt}{}{{}_{\overline{y}}{\mu}_q+{u}_{qj}+{\varepsilon}_{qj}}{{{}_{\overline{\widehat{\unicode{x3c0}}}}{}\unicode{x3bc}}_q+{\unicode{x3bd}}_{qj}+{\epsilon}_{qj}}\right]}\end{align}$$

$$\begin{align}{\displaystyle \left[\genfrac{}{}{0pt}{}{\mathrm{logit}\left({\overline{y}}_{qj}\right)}{\mathrm{logit}\left({\overline{\widehat{\pi}}}_{qj}\right)}\right]=\left[\genfrac{}{}{0pt}{}{{}_{\overline{y}}{\mu}_q+{u}_{qj}+{\varepsilon}_{qj}}{{{}_{\overline{\widehat{\unicode{x3c0}}}}{}\unicode{x3bc}}_q+{\unicode{x3bd}}_{qj}+{\epsilon}_{qj}}\right]}\end{align}$$

where

![]() ${\overline{y}}_{qj}$

denotes the prevalence of cluster

${\overline{y}}_{qj}$

denotes the prevalence of cluster

![]() $j$

within quantile

$j$

within quantile

![]() $q$

and

$q$

and

![]() ${\overline{\widehat{\pi}}}_{qj}$

the average estimated risk in cluster

${\overline{\widehat{\pi}}}_{qj}$

the average estimated risk in cluster

![]() $j$

within quantile

$j$

within quantile

![]() $q$

. We use the subscript

$q$

. We use the subscript

![]() $q$

in the quantities to indicate that this refers to quantile

$q$

in the quantities to indicate that this refers to quantile

![]() $q$

.

$q$

.

![]() ${u}_{qj}$

and

${u}_{qj}$

and

![]() ${\unicode{x3bd}}_{qj}$

represent the random intercepts for cluster

${\unicode{x3bd}}_{qj}$

represent the random intercepts for cluster

![]() $j$

, capturing the between-cluster heterogeneity, whereas

$j$

, capturing the between-cluster heterogeneity, whereas

![]() ${\varepsilon}_{qj}$

and

${\varepsilon}_{qj}$

and

![]() ${\epsilon}_{qj}$

account for the within-cluster error. In this model, we assume that

${\epsilon}_{qj}$

account for the within-cluster error. In this model, we assume that

![]() ${}_{\overline{y}}{\mu}_q+{u}_{qj}$

and

${}_{\overline{y}}{\mu}_q+{u}_{qj}$

and

![]() ${{}_{\overline{\widehat{\unicode{x3c0}}}}{}\unicode{x3bc}}_q+{\unicode{x3bd}}_{qj}$

are randomly drawn from a distribution with mean (or pooled)

${{}_{\overline{\widehat{\unicode{x3c0}}}}{}\unicode{x3bc}}_q+{\unicode{x3bd}}_{qj}$

are randomly drawn from a distribution with mean (or pooled)

![]() ${}_{\overline{y}}{\mu}_q$

and

${}_{\overline{y}}{\mu}_q$

and

![]() ${{}_{\overline{\widehat{\unicode{x3c0}}}}{}\unicode{x3bc}}_q$

, respectively. Additionally, we assume that

${{}_{\overline{\widehat{\unicode{x3c0}}}}{}\unicode{x3bc}}_q$

, respectively. Additionally, we assume that

$$\begin{align*}\left[\begin{array}{c}{u}_{qj}\\ {}{\unicode{x3bd}}_{qj}\end{array}\right]\sim \mathcal{N}\left(\left[\genfrac{}{}{0pt}{}{0}{0}\right],{\Omega}_q\right)\;\mathrm{and}\;\left[\begin{array}{c}{\varepsilon}_{qj}\\ {}{\epsilon}_{qj}\end{array}\right]\sim \mathcal{N}\left(\left[\genfrac{}{}{0pt}{}{0}{0}\right],{\Sigma}_{qj}\right)\end{align*}$$

$$\begin{align*}\left[\begin{array}{c}{u}_{qj}\\ {}{\unicode{x3bd}}_{qj}\end{array}\right]\sim \mathcal{N}\left(\left[\genfrac{}{}{0pt}{}{0}{0}\right],{\Omega}_q\right)\;\mathrm{and}\;\left[\begin{array}{c}{\varepsilon}_{qj}\\ {}{\epsilon}_{qj}\end{array}\right]\sim \mathcal{N}\left(\left[\genfrac{}{}{0pt}{}{0}{0}\right],{\Sigma}_{qj}\right)\end{align*}$$

where

![]() ${\Omega}_{\mathrm{q}}$

and

${\Omega}_{\mathrm{q}}$

and

![]() ${\Sigma}_{qj}$

represent the between-cluster and within-cluster covariance matrices within quantile

${\Sigma}_{qj}$

represent the between-cluster and within-cluster covariance matrices within quantile

![]() $q$

, respectively

$q$

, respectively

$$\begin{align*}{\varOmega}_q&=\left[\begin{array}{cc}{}_{\overline{y}}{\tau}_q^2& {{}_b{}\rho}_q \ \ {}_{\overline{y}}{\tau}_q \ \ {}_{\overline{\widehat{\pi}}}{}{\tau}_q\\ {}{{}_b{}\rho}_q \ \ {}_{\overline{y}}{\tau}_q \ \ {}_{\overline{\widehat{\pi}}}{}{\tau}_q& {}_{\overline{\widehat{\pi}}}{}{\tau}_q^2\end{array}\right]\\[6pt]{\varSigma}_{qj}&=\left[\begin{array}{cc}{}_{\overline{y}}{\sigma}_{qj}^2& {}_w{\rho}_q \ \ {{}_{\overline{y}}{\sigma}_{qj}} \ \ _{\overline{\widehat{\pi}}}{\sigma}_{qj}\\ {}{}_w{\rho}_q \ \ {{}_{\overline{y}}{\sigma}_{qj}} \ \ _{\overline{\widehat{\pi}}}{\sigma}_{qj}& {}_{\overline{\widehat{\pi}}}{\sigma}_{qj}^2\end{array}\right].\end{align*}$$

$$\begin{align*}{\varOmega}_q&=\left[\begin{array}{cc}{}_{\overline{y}}{\tau}_q^2& {{}_b{}\rho}_q \ \ {}_{\overline{y}}{\tau}_q \ \ {}_{\overline{\widehat{\pi}}}{}{\tau}_q\\ {}{{}_b{}\rho}_q \ \ {}_{\overline{y}}{\tau}_q \ \ {}_{\overline{\widehat{\pi}}}{}{\tau}_q& {}_{\overline{\widehat{\pi}}}{}{\tau}_q^2\end{array}\right]\\[6pt]{\varSigma}_{qj}&=\left[\begin{array}{cc}{}_{\overline{y}}{\sigma}_{qj}^2& {}_w{\rho}_q \ \ {{}_{\overline{y}}{\sigma}_{qj}} \ \ _{\overline{\widehat{\pi}}}{\sigma}_{qj}\\ {}{}_w{\rho}_q \ \ {{}_{\overline{y}}{\sigma}_{qj}} \ \ _{\overline{\widehat{\pi}}}{\sigma}_{qj}& {}_{\overline{\widehat{\pi}}}{\sigma}_{qj}^2\end{array}\right].\end{align*}$$

We use

![]() ${}_{\overline{y}}{\sigma}_{qj}^2$

and

${}_{\overline{y}}{\sigma}_{qj}^2$

and

![]() ${}_{\overline{\widehat{\pi}}}{\sigma}_{qj}^2$

to denote the variance of

${}_{\overline{\widehat{\pi}}}{\sigma}_{qj}^2$

to denote the variance of

![]() ${\overline{y}}_{qj}$

and,

${\overline{y}}_{qj}$

and,

![]() ${\overline{\widehat{\pi}}}_{qj}$

${\overline{\widehat{\pi}}}_{qj}$

![]() ${}_w{\rho}_q$

represents the within-cluster correlation and

${}_w{\rho}_q$

represents the within-cluster correlation and

![]() ${{}_b{}\unicode{x3c1}}_q$

the between-cluster correlation in quantile q. The between-cluster variance is denoted as

${{}_b{}\unicode{x3c1}}_q$

the between-cluster correlation in quantile q. The between-cluster variance is denoted as

![]() ${}_{\overline{y}}{\tau}_q^2$

and

${}_{\overline{y}}{\tau}_q^2$

and

![]() ${}_{\overline{\widehat{\unicode{x3c0}}}}{}{\unicode{x3c4}}_q^2$

. For each quantile

${}_{\overline{\widehat{\unicode{x3c0}}}}{}{\unicode{x3c4}}_q^2$

. For each quantile

![]() $q$

, we use a bivariate meta-analysis model to account for the strong dependence between the average estimated risk and observed proportion. The calibration plot is then created by plotting the

$q$

, we use a bivariate meta-analysis model to account for the strong dependence between the average estimated risk and observed proportion. The calibration plot is then created by plotting the

![]() $Q$

values of

$Q$

values of

![]() ${{}_{\overline{\widehat{\unicode{x3c0}}}}{}\hat{\unicode{x3bc}}}_q$

on the x-axis and

${{}_{\overline{\widehat{\unicode{x3c0}}}}{}\hat{\unicode{x3bc}}}_q$

on the x-axis and

![]() ${}_{\overline{y}}{\hat{\mu}}_q$

on the y-axis. This approach has the advantage of obtaining an observed proportion per cluster in a model-agnostic way. In addition, it provides uncertainty measures in the cluster with the average random effect with confidence intervals (CI) for that effect and in a hypothetical new cluster (PI). It also has some limitations. First, it has the same drawback as the traditional grouped calibration because the curve is dependent on the number of quantiles selected, especially when the sample size is limited. Second, and linked to the previous limitation, the arbitrary selection of

${}_{\overline{y}}{\hat{\mu}}_q$

on the y-axis. This approach has the advantage of obtaining an observed proportion per cluster in a model-agnostic way. In addition, it provides uncertainty measures in the cluster with the average random effect with confidence intervals (CI) for that effect and in a hypothetical new cluster (PI). It also has some limitations. First, it has the same drawback as the traditional grouped calibration because the curve is dependent on the number of quantiles selected, especially when the sample size is limited. Second, and linked to the previous limitation, the arbitrary selection of

![]() $Q$

quantiles might create groups that are too heterogeneous between clusters because the estimated risk distributions differ (e.g., a high-risk setting vs a low-risk setting). Third, the meta-analysis does not provide cluster-specific curves using empirical Bayes. We used the rma.mv function of the metafor package.Reference Viechtbauer36

$Q$

quantiles might create groups that are too heterogeneous between clusters because the estimated risk distributions differ (e.g., a high-risk setting vs a low-risk setting). Third, the meta-analysis does not provide cluster-specific curves using empirical Bayes. We used the rma.mv function of the metafor package.Reference Viechtbauer36

We include an extension to the proposed methodology that we call “interval” grouping. The methodology is the same except for the way the groups are created. Per cluster, we create

![]() $I$

groups by dividing the probability space (0–1) into

$I$

groups by dividing the probability space (0–1) into

![]() $I$

equally spaced intervals. This way, we will create

$I$

equally spaced intervals. This way, we will create

![]() $I$

groups of different sizes based on the estimated risks. By doing this, we reduce the within-group variability at the expense of not necessarily having all clusters present in all

$I$

groups of different sizes based on the estimated risks. By doing this, we reduce the within-group variability at the expense of not necessarily having all clusters present in all

![]() $I$

groups. The algorithm, additional details on the implementation, and illustration are available in the Supplementary Material (Appendix A1, Figures S2–S5), and code for the implementation in the OSF repository.Reference Barreñada, Wynants and van Calster37

$I$

groups. The algorithm, additional details on the implementation, and illustration are available in the Supplementary Material (Appendix A1, Figures S2–S5), and code for the implementation in the OSF repository.Reference Barreñada, Wynants and van Calster37

2.5 Two-stage meta-analysis calibration (2MA-C)

For the second approach, we use a two-stage random effects meta-analysis to estimate the calibration plot. First, we fit a flexible curve per cluster with a smoother of choice. Currently, we have implemented restricted cubic splines and LOESS. For LOESS, in each cluster, the span parameter with the lowest bias-corrected AIC is selected. For restricted cubic splines, the number of knots per cluster is selected by performing a likelihood ratio test between all combinations of models with three, four, and five knots, selecting the model with the fewest knots that provides the best fit. We train a flexible curve per cluster and estimate the observed proportion for a fixed grid of estimated risks from 0.01 to 0.99 (

![]() $G$

, default is 100 points). Hereafter, we use a random effects meta-analysis model per point in the grid to combine the logit-transformed predictions across clusters, fitting the following univariate model per point

$G$

, default is 100 points). Hereafter, we use a random effects meta-analysis model per point in the grid to combine the logit-transformed predictions across clusters, fitting the following univariate model per point

![]() $g$

in the grid

$g$

in the grid

![]() ${}_s{\widehat{\unicode{x3c0}}}_{gj}$

denotes the predicted proportion of point

${}_s{\widehat{\unicode{x3c0}}}_{gj}$

denotes the predicted proportion of point

![]() $g$

for cluster

$g$

for cluster

![]() $j$

,

$j$

,

![]() ${{}_{\widehat{\unicode{x3c0}}}{}\unicode{x3bc}}_g$

the overall mean for point

${{}_{\widehat{\unicode{x3c0}}}{}\unicode{x3bc}}_g$

the overall mean for point

![]() $g$

,

$g$

,

![]() ${\unicode{x3bd}}_{gj}$

the cluster-specific deviation, and

${\unicode{x3bd}}_{gj}$

the cluster-specific deviation, and

![]() ${\epsilon}_{gj}$

the error. We assume that

${\epsilon}_{gj}$

the error. We assume that

![]() ${\unicode{x3bd}}_{gj}\sim \mathcal{N}\left(0,{{}_{\widehat{\unicode{x3c0}}}{}\unicode{x3c4}}_g^2\right)$

and

${\unicode{x3bd}}_{gj}\sim \mathcal{N}\left(0,{{}_{\widehat{\unicode{x3c0}}}{}\unicode{x3c4}}_g^2\right)$

and

![]() ${\epsilon}_{gj}\sim \mathcal{N}\left(0,{{}_{\widehat{\unicode{x3c0}}}\unicode{x3c3}}_{gj}^2\right)$

. Furthermore, we include the inverse of the variance of

${\epsilon}_{gj}\sim \mathcal{N}\left(0,{{}_{\widehat{\unicode{x3c0}}}\unicode{x3c3}}_{gj}^2\right)$

. Furthermore, we include the inverse of the variance of

![]() $\mathrm{logit}\left({}_s{\widehat{\unicode{x3c0}}}_{gj}\right)$

as weight and thus take both the cluster-specific (

$\mathrm{logit}\left({}_s{\widehat{\unicode{x3c0}}}_{gj}\right)$

as weight and thus take both the cluster-specific (

![]() ${{}_{\widehat{\unicode{x3c0}}}{}\unicode{x3c3}}_{gj}^2$

) and between-cluster variability (

${{}_{\widehat{\unicode{x3c0}}}{}\unicode{x3c3}}_{gj}^2$

) and between-cluster variability (

![]() ${{}_{\widehat{\unicode{x3c0}}}{}\unicode{x3c4}}_g^2$

) into account. As such, clusters with more precise estimates are assigned greater weights. This approach has the strength of being easy to compute and providing heterogeneity measures for the cluster with average calibration. This allows us to plot CI and PI, which help visually assess the certainty of the average curve (CI) and the heterogeneity between hypothetical new clusters (PI) in the whole range of predicted probabilities. The main limitation is that it is based on the smoothing technique used in each individual cluster, so the curves will vary depending on the smoother selected. Additionally, the technique treats each point in the grid as independent, leading to pointwise CI and PI. Cluster-specific curves are obtained in the first stage independently of the rest of the clusters. The algorithm, additional details on the implementation, and illustration are available in the Supplementary Material (Appendix A2, Figures S6–S7), and code for the implementation in the OSF repository.

${{}_{\widehat{\unicode{x3c0}}}{}\unicode{x3c4}}_g^2$

) into account. As such, clusters with more precise estimates are assigned greater weights. This approach has the strength of being easy to compute and providing heterogeneity measures for the cluster with average calibration. This allows us to plot CI and PI, which help visually assess the certainty of the average curve (CI) and the heterogeneity between hypothetical new clusters (PI) in the whole range of predicted probabilities. The main limitation is that it is based on the smoothing technique used in each individual cluster, so the curves will vary depending on the smoother selected. Additionally, the technique treats each point in the grid as independent, leading to pointwise CI and PI. Cluster-specific curves are obtained in the first stage independently of the rest of the clusters. The algorithm, additional details on the implementation, and illustration are available in the Supplementary Material (Appendix A2, Figures S6–S7), and code for the implementation in the OSF repository.

2.6 Mixed model calibration (MIX-C)

In the third approach, we employ a one-stage logistic generalized linear mixed model (GLMM) to model the outcome as a function of the logit-transformed predictions. To allow for a non-linear effect, we employ restricted cubic splines with three knots for both the fixed and random effects

Here,

![]() $\overset{\sim }{s_j}$

denotes the smooth random effect for cluster

$\overset{\sim }{s_j}$

denotes the smooth random effect for cluster

![]() $j$

. The model is fit using lme4

Reference Bates, Mächler, Bolker and Walker38 and splines are added with rms package.Reference Harrell39 This approach estimates the calibration per cluster and the variance of the random effects in a single step. As opposed to the random effects in 2MA-C, the MIX-C model takes all observations across the entire spectrum of predicted risk into account when predicting the realized values of the random effects and cluster-specific calibration curves. The main limitation is the computation time needed when the number of clusters is large. The algorithm, additional details on the implementation, and illustration are available in the Supplementary Material (Appendix A3, Figure S8), and code for the implementation is available in the OSF repository.

$j$

. The model is fit using lme4

Reference Bates, Mächler, Bolker and Walker38 and splines are added with rms package.Reference Harrell39 This approach estimates the calibration per cluster and the variance of the random effects in a single step. As opposed to the random effects in 2MA-C, the MIX-C model takes all observations across the entire spectrum of predicted risk into account when predicting the realized values of the random effects and cluster-specific calibration curves. The main limitation is the computation time needed when the number of clusters is large. The algorithm, additional details on the implementation, and illustration are available in the Supplementary Material (Appendix A3, Figure S8), and code for the implementation is available in the OSF repository.

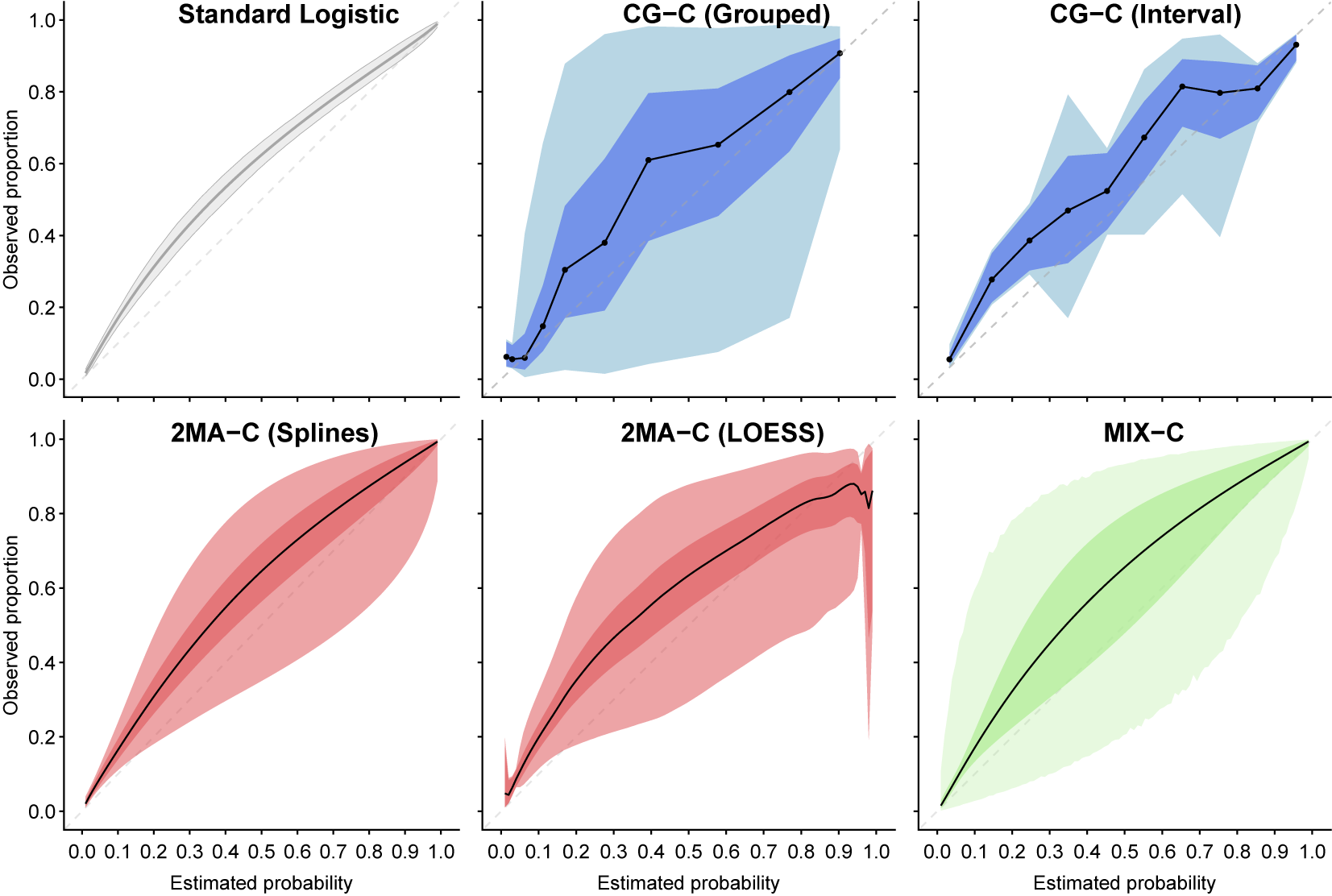

2.7 Results for the case example

A visual analysis of the different curves shows that all the approaches present similar results on the case example, in line with the previously obtained results ignoring clustering (Figure 3). All plots suggest that ADNEX underestimated risks. Nevertheless, there are important differences in the estimated uncertainty and heterogeneity. Details, visualizations, and variations of each method are shown in Supplementary Figures S2–S8. The methods are available in the CalibrationCurves package.Reference De Cock, Nieboer, Van Calster, Steyerberg and Vergouwe45

Figure 3 Comparison of the standard logistic regression with splines and the three introduced methodologies with CI (bright shaded) and PI (light shaded). Number of quantiles for CG-C were 10, 2MA-C fitted center-specific curves with splines or LOESS, and MIX-C used random intercept and slopes with restricted cubic splines and three knots. The dashed diagonal line indicates perfect calibration.

3 Simulation study

The motivating example showcases the applicability of the methods. However, with real data, it is not possible to know the true underlying observed proportion. Therefore, we designed a simulation study to explore the performance of the introduced approaches and compare it with cluster ignorant methodologies.

3.1 Methods

The data-generating models were based on logistic regression with a random intercept per cluster; hence, the formula to obtain the true probabilities was of the form

![]() $\mathrm{logit}\left(\unicode{x3c0} \left({x}_{ij}\right)\right)={\unicode{x3b2}}_0+{x}_{ij}{\unicode{x3b2}}_1+{u}_j$

.

$\mathrm{logit}\left(\unicode{x3c0} \left({x}_{ij}\right)\right)={\unicode{x3b2}}_0+{x}_{ij}{\unicode{x3b2}}_1+{u}_j$

.

![]() ${\unicode{x3b2}}_0$

represents the intercept,

${\unicode{x3b2}}_0$

represents the intercept,

![]() ${\unicode{x3b2}}_1$

is the corresponding effect of covariate

${\unicode{x3b2}}_1$

is the corresponding effect of covariate

![]() $x$

, and

$x$

, and

![]() ${u}_j$

is the cluster-specific deviation. We first obtained the true models according to a full factorial design where two factors were varied: little vs strong clustering (ICC 5% or 20%) and lower vs higher true area under the receiver operating characteristic curve (AUC;0.75 and 0.9). Event rate was fixed at 30% for the whole population but varied between clusters according to the variance of the random intercept

${u}_j$

is the cluster-specific deviation. We first obtained the true models according to a full factorial design where two factors were varied: little vs strong clustering (ICC 5% or 20%) and lower vs higher true area under the receiver operating characteristic curve (AUC;0.75 and 0.9). Event rate was fixed at 30% for the whole population but varied between clusters according to the variance of the random intercept

![]() ${u}_j$

. For each data-generating mechanism, we generated data for 200 clusters and 2,000,000 patients (10,000 observations per cluster) and a single normally distributed linear predictor (

${u}_j$

. For each data-generating mechanism, we generated data for 200 clusters and 2,000,000 patients (10,000 observations per cluster) and a single normally distributed linear predictor (

![]() $x$

, which can be seen as a combination of several predictors) with mean 0 and variance 1. Random effects

$x$

, which can be seen as a combination of several predictors) with mean 0 and variance 1. Random effects

![]() $({u}_j)$

were also normally distributed with variance according to the desired ICC. ICC is calculated as the variance of a null random intercept model divided by the total variance, calculated as the sum of the variances of the null random intercept model and the standard logistic distribution.Reference Hosmer, Lemeshow and Sturdivant40 AUC was controlled by varying the coefficient (

$({u}_j)$

were also normally distributed with variance according to the desired ICC. ICC is calculated as the variance of a null random intercept model divided by the total variance, calculated as the sum of the variances of the null random intercept model and the standard logistic distribution.Reference Hosmer, Lemeshow and Sturdivant40 AUC was controlled by varying the coefficient (

![]() ${\unicode{x3b2}}_1$

), and the desired event rate was controlled by modifying the intercept

${\unicode{x3b2}}_1$

), and the desired event rate was controlled by modifying the intercept

![]() ${\unicode{x3b2}}_0$

. We obtained four superpopulations by trial and error (Supplementary Table S1). The code to obtain the superpopulations is available in the OSF repository.

${\unicode{x3b2}}_0$

. We obtained four superpopulations by trial and error (Supplementary Table S1). The code to obtain the superpopulations is available in the OSF repository.

In each of the superpopulations, we drew four scenarios, inspired by real validation or development studies, varying events per cluster (EPC) (20 vs 200) and number of clusters (5 vs 30). These scenarios refer to the dataset on which hypothetical prediction models are developed or validated. This results in 16 scenarios overall, by combining all values for ICC, AUC, EPC, and number of clusters. We then applied the methods for varying development or varying validation sample size.

3.1.1 Varying development size

The clusters and patients within each cluster were selected randomly, and the number of patients was selected according to the prevalence and the desired EPC (

![]() ${n}_j$

= EPC * 1.15 divided by cluster prevalence; we multiplied EPC to ensure that the desired EPC is obtained across all clusters). We developed logistic regression models with restricted cubic splines and three knots in these training datasets. Then, we externally validated the calibration of the model in patients from clusters not used for model development in an ideal situation with a high number of clusters and high EPC. Therefore, we validated models on a random selection of 100,000 observations from a random selection of 30 clusters that were not sampled for model development. On average, each cluster contained 1,000 events and 3,333 observations, but the number of events varied depending on the cluster-specific event rate.

${n}_j$

= EPC * 1.15 divided by cluster prevalence; we multiplied EPC to ensure that the desired EPC is obtained across all clusters). We developed logistic regression models with restricted cubic splines and three knots in these training datasets. Then, we externally validated the calibration of the model in patients from clusters not used for model development in an ideal situation with a high number of clusters and high EPC. Therefore, we validated models on a random selection of 100,000 observations from a random selection of 30 clusters that were not sampled for model development. On average, each cluster contained 1,000 events and 3,333 observations, but the number of events varied depending on the cluster-specific event rate.

3.1.2 Varying validation size

Additionally, we validated the models with varying validation sample sizes. In this case, we fixed the model to be validated to a logistic regression model with restricted cubic splines and three knots trained with a sufficiently large sample size according to Riley’s criteria (N = 1711) from a cluster with average event rate in each of the superpopulations.Reference Riley, Debray and Collins41 Then, we validated those models varying EPC (20 vs 200) and the number of clusters (5 vs 30) in the validation sample.

We applied the CG-C (grouped and interval), 2MA-C (splines and LOESS), and MIX-C approaches to the validation sample varying development and validation sample size. For comparison, we also obtained a standard flexible logistic calibration model (i.e., ignoring clustering, see Section 2.3) using restricted cubic splines and three knots. True risks are obtained using the formula in Supplementary Table S1, and the true risks in the cluster with the average effect are obtained by setting the random intercept to 0. These true risks can be used to generate true calibration curves per cluster and for the cluster with the average effect. To numerically compare the deviation of the estimated calibration curve from the true one, we calculated the mean squared calibration error (MSCE) as the mean squared difference between the estimated observed proportions and the true observed proportions (based on the true risks) in the cluster with the average effect (setting

![]() ${u}_j=0$

) over a fixed grid of 100 estimated risks (100 evenly spaced points from 0.01 to 0.99).Reference Van Hoorde, Van Huffel, Timmerman, Bourne and Van Calster23 For CG-C, the grid contains 10 points by definition. The process was repeated 100 times per scenario.

${u}_j=0$

) over a fixed grid of 100 estimated risks (100 evenly spaced points from 0.01 to 0.99).Reference Van Hoorde, Van Huffel, Timmerman, Bourne and Van Calster23 For CG-C, the grid contains 10 points by definition. The process was repeated 100 times per scenario.

3.1.3 Heterogeneity

We evaluated the coverage of 95% PI. For each of the three methods, using the same grid of values that we used for the MSCE, we evaluated whether the true cluster-specific risk (including random effects) fell within the PI at each of the grid points. This means that we obtained the true cluster-specific probabilities using the formula in Supplementary Table S1 and compared for each clustered calibration approach whether the PI contained the true values. Coverage was calculated as the proportion over the 200 clusters in the superpopulations.

3.2 Results

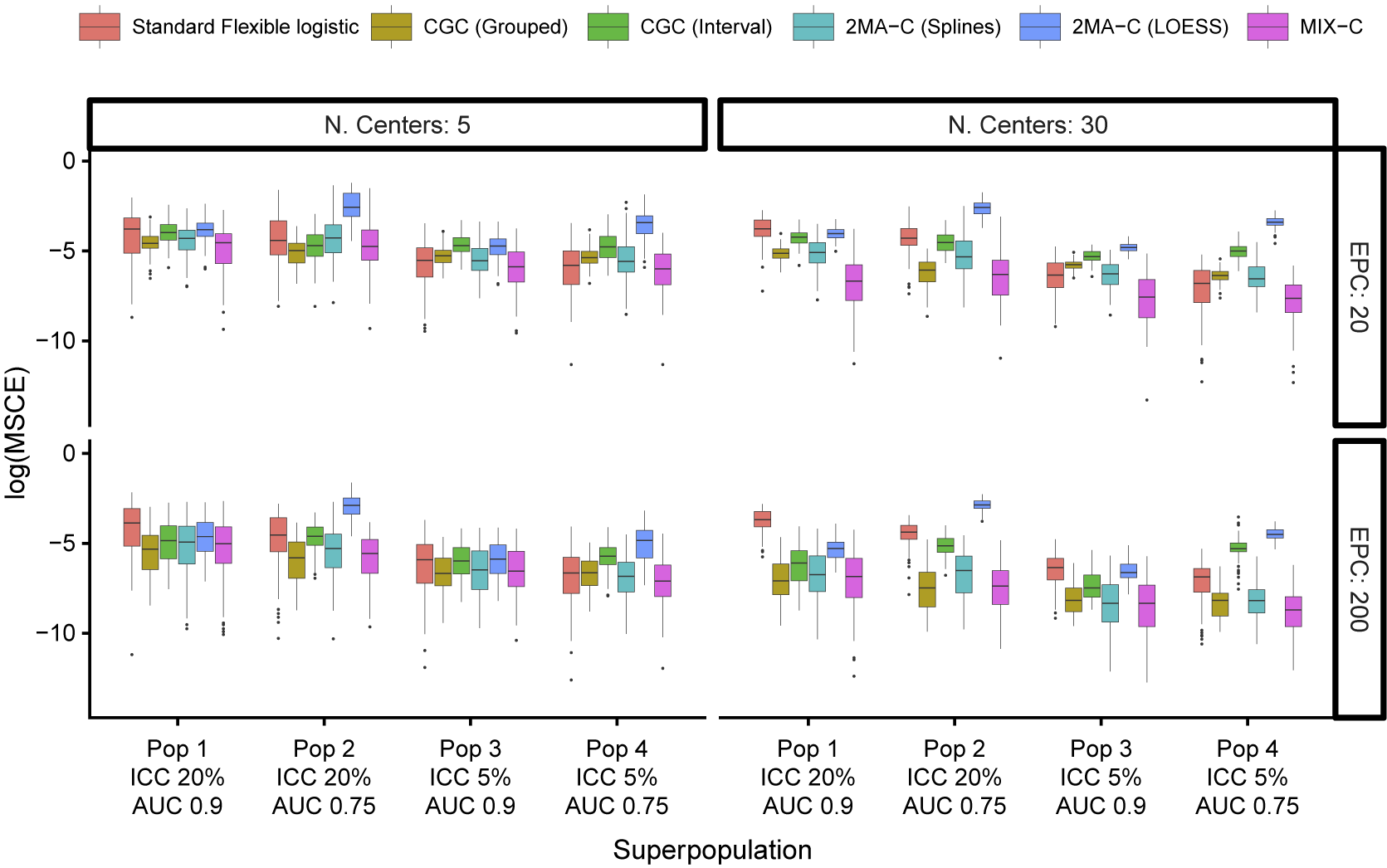

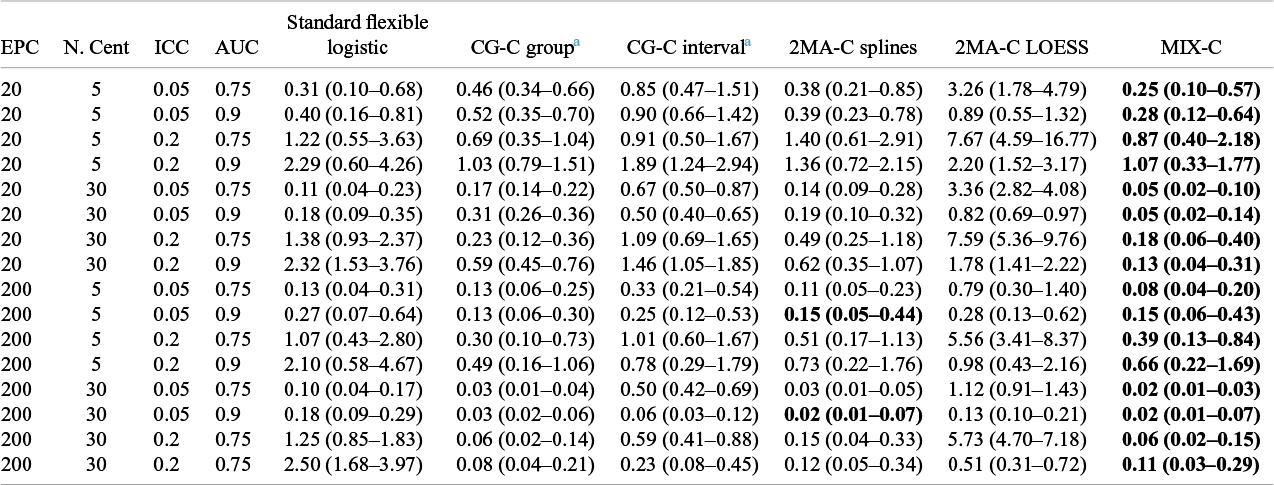

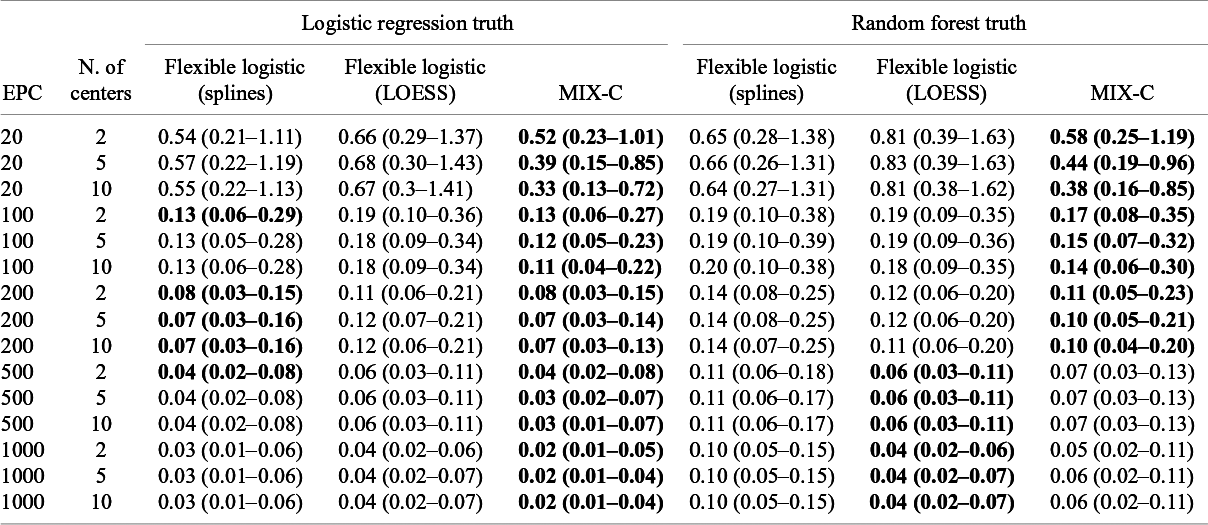

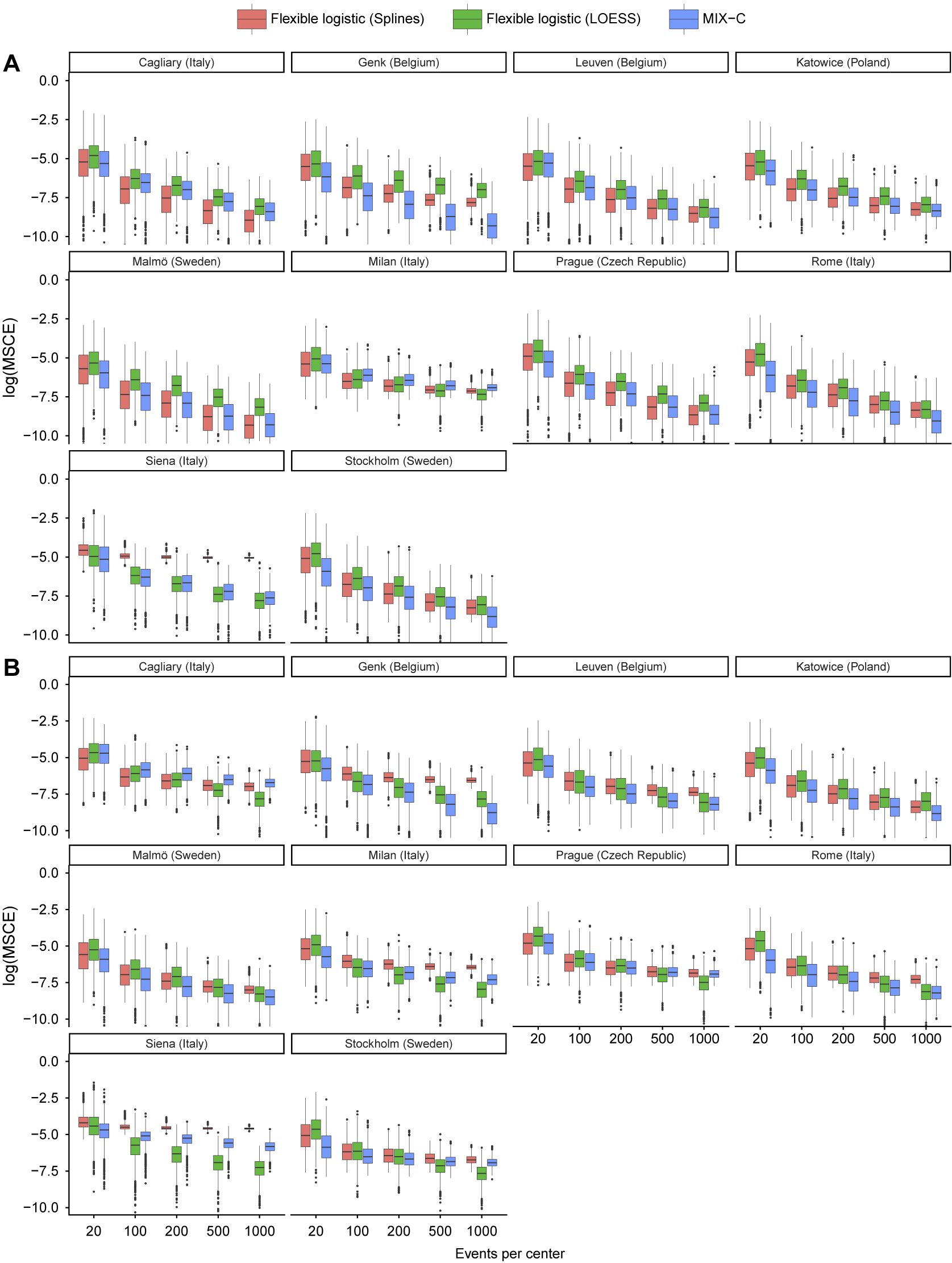

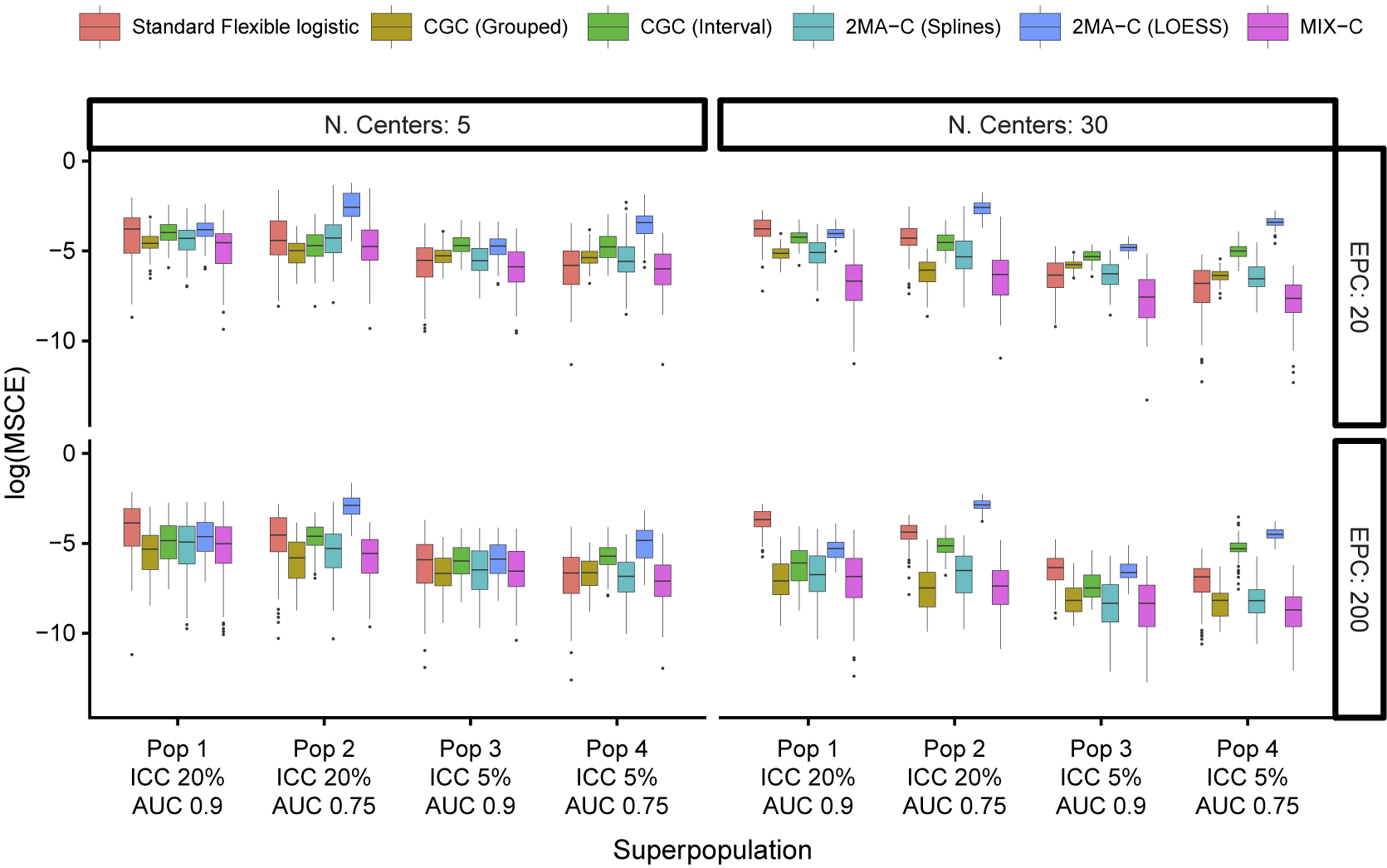

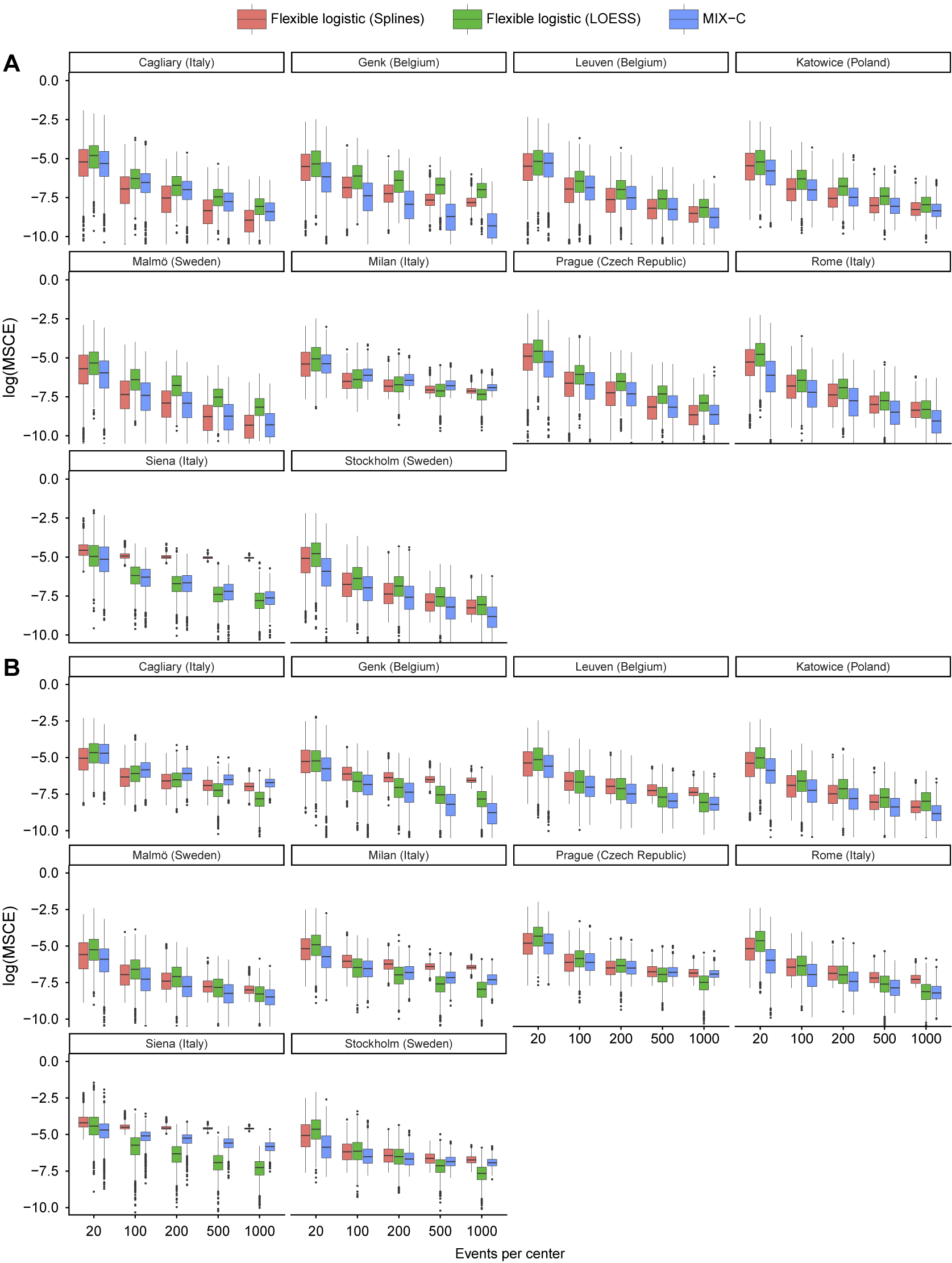

The median MSCE results for varying validation sample sizes are displayed in Table 2 (multiplied by 100) and Figure 4. Note that CG-C focuses on 10 points, whereas the other approaches focus on a grid of 100 points, so CG-C and the other approaches are not directly comparable. MIX-C was the best performing approach in all cases. 2MA-C (splines) also performed well in scenarios with high validation sample size. In general, the MSCE was better with high EPC, low ICC, and more clusters in the validation. Standard flexible logistic calibration performed considerably worse in all scenarios, in particular when ICC was high. When evaluated on a large validation sample with varying development size, the results also showed that 2MA-C (splines) and MIX-C were the best methods across all scenarios (Table 3 and Supplementary Figure S9).

Figure 4 Boxplots of mean squared calibration error (log) for fixed prediction models varying validation events per center (EPC) and the number of centers in the validation.

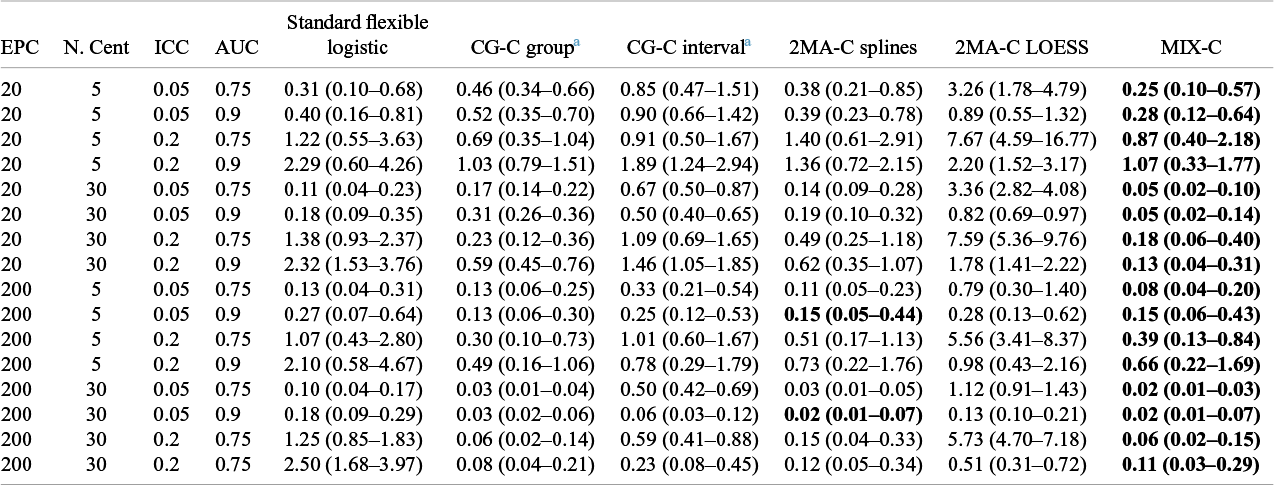

Table 2 Median (IQR) squared difference between true average probabilities and estimated observed proportion (MSCE) with logistic calibration, CG-C (10 groups), 2MA-C, and MIX-C methods for a logistic model varying validation events per center and number of centers. Bold numbers indicate best performance across approaches

Note: MSCE is multiplied by 100. The lower the number, the closer the estimated summary calibration curve is to the true calibration curve in a cluster with an average effect. Rounded to two decimals.In bold are the best working approach(es) for estimating observed proportions excluding CG-C methods.

Abbreviations: EPC: Event per center in the validation sample; N.Cent: Number of centers in the validation sample.

a Deciles is calculated only with 10 points instead of 100.

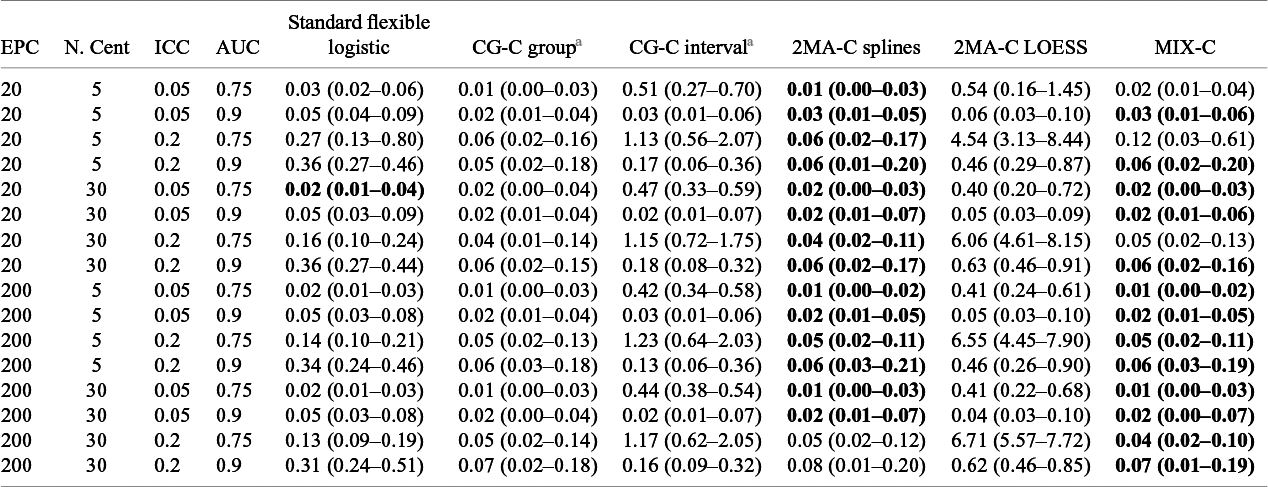

Table 3 Median (IQR) squared difference between true average probabilities and estimated observed proportion (MSCE) with logistic calibration, CG-C (10 groups), 2MA-C, and MIX-C methods for a logistic model varying training sample events per center and number of centers. Bold numbers indicate best performance across approaches

Note: MSCE is multiplied by 100. The lower the number, the closer the estimated summary calibration curve is to the true calibration curve in a cluster with an average effect. Rounded to two decimals. In bold are the best working approach(es) for estimating observed proportions excluding CG-C methods.

EPC: Event per center in the training sample; N.Cent: Number of centers in the training sample.

a Deciles is calculated only with 10 points instead of 100.

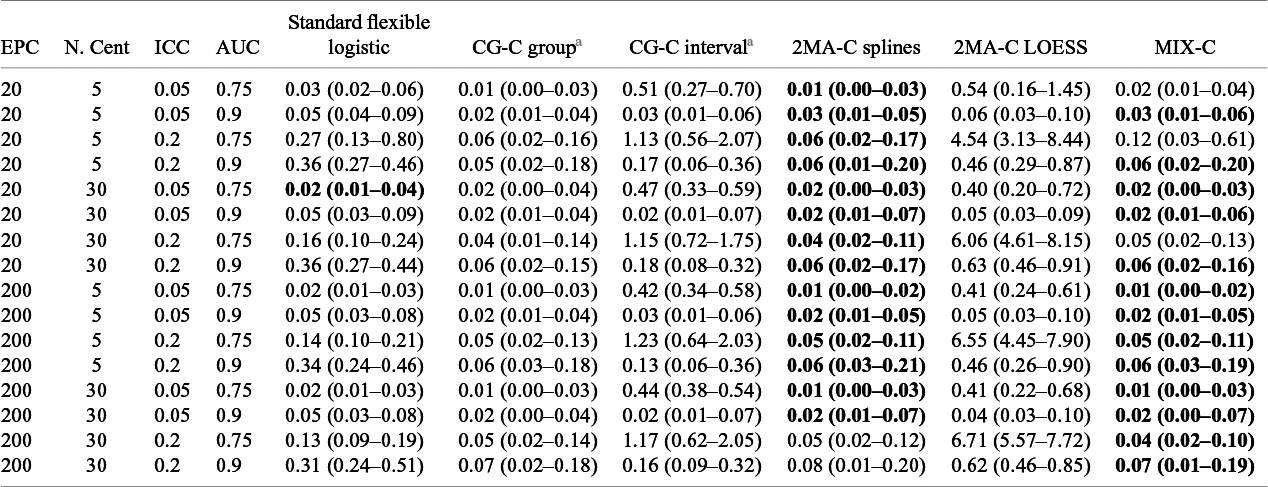

The PI coverage with varying validation sample sizes was close to nominal with high EPC and low ICC and when using the 2MA-C (splines) approach (Figure 5). When EPC was high, the coverage was close to nominal in the region where validation data were available but very poor at the tails (i.e., regions with few validation data) except for the MIX-C approach. MIX-C had correct coverage when ICC was high and AUC was low, too wide PI when ICC was low, and too narrow PI when ICC and AUC were high. When EPC was low, increasing the number of clusters reduced the coverage notably, especially for the grouped approaches and at the tails. The coverage in the large validation dataset was poor at the tails except for MIX-C (Supplementary Figure S10). High AUC and low ICC in development tended to have better PI coverage. Code and data to reproduce the simulation study and analysis are available in the OSF repository.

Figure 5 Pointwise prediction interval coverage varying the validation sample size. The model validated is the same in each superpopulation, and it was trained from a center with average event rate and with adequate sample size. The black dotted line indicates nominal coverage (95%).

4 Synthetic data

The simulation study showed that the methods correctly estimate observed proportions under different logistic DGMs. In the real world, the link between outcome and predictors is often not perfectly defined by a linear or logistic function. Therefore, we aimed to test the methods in a more flexible situation using a synthetically generated dataset based on the data used in the motivating example.

4.1 Methods

To generate synthetic data, we used data from the International Ovarian Tumor Analysis (IOTA) consortium that was used to develop prediction models to estimate the risk of malignancy in patients with an ovarian tumor.Reference Van Calster, Valentin and Froyman9, Reference Van Calster, Hoorde and Valentin26 We used the synthpop package in R, which learns the structure of the data and generates a new dataset where IPD are masked, but the underlying structure is preserved.Reference Nowok, Raab and synthpop42 We generated synthetic data for 1 million individuals for each of the 10 hospitals separately and used these synthetic patients as cluster-specific populations (retaining the clustered structure of the original dataset). We generated two true models per center: one based on a logistic regression model with splines for continuous variables and the other based on a random forest model with the number of randomly selected parameters per split (mtry) set to three and the depth of the individual trees set to 10 (minimum node size).Reference Wright and ranger43 Both models were trained on the real data from that center, using the nine ADNEX predictors listed above, excluding type of center. The true models were applied to the 1 million synthetic patients, and the outcome was generated with Bernoulli trials based on the true risks of malignancy from the applied models. This means that the same synthetic patient has two true risks and can have two different outcomes. The code to generate the synthetic data is available in the OSF repository, but the original data or the synthetic data are not publicly available. The comparison of the real and synthetic data for quality check in one center is presented in Supplementary Figure S11.

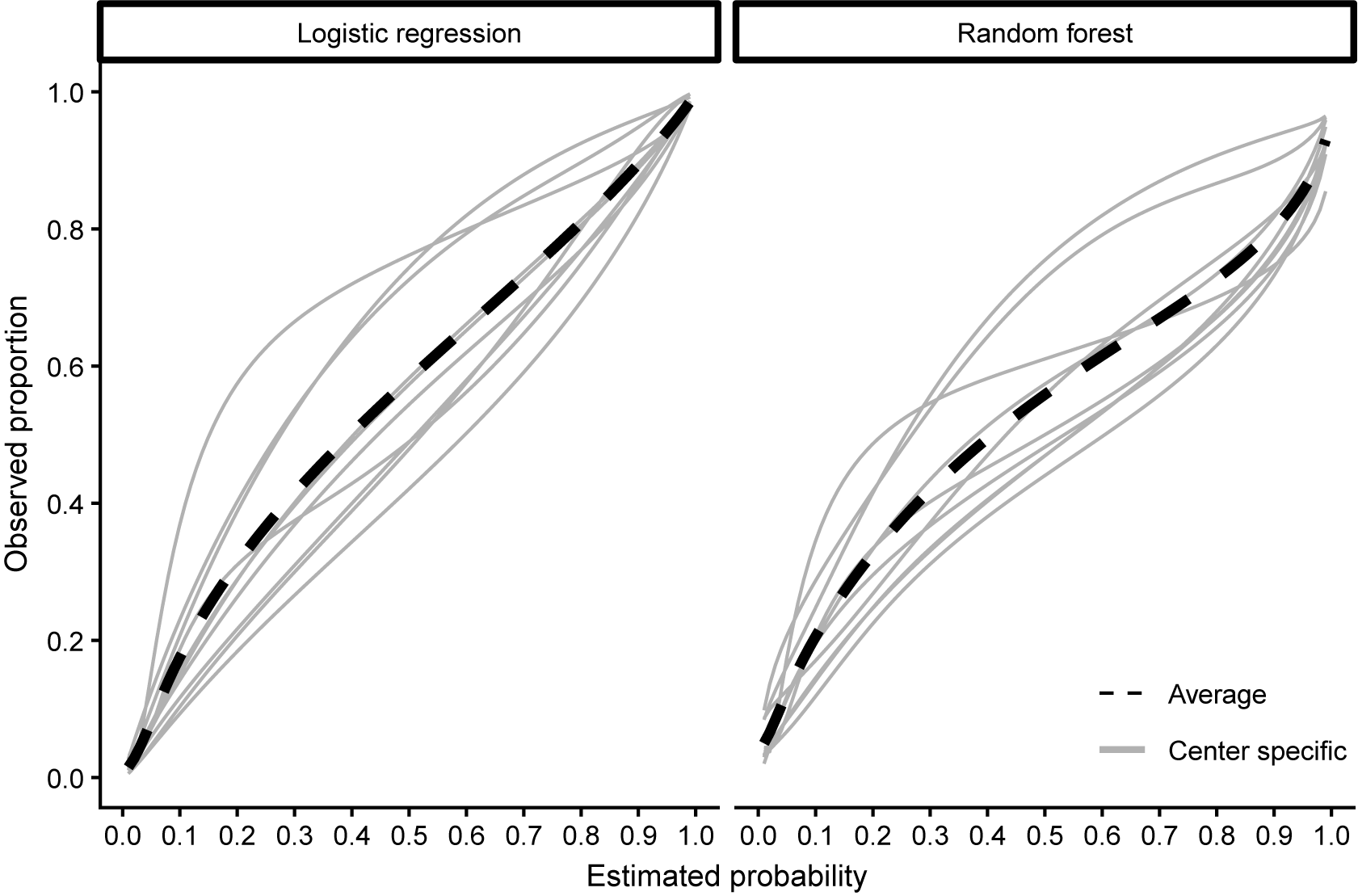

We used the synthetic data to validate the calibration of the published ADNEX model without CA125 in each center.Reference Van Calster, Hoorde and Valentin26 We defined 15 scenarios based on the number of centers (2, 5, 10) and validation data EPC (20, 100, 200, 500, 1,000). We repeated this 1,000 times by randomly drawing validation datasets. If the number of centers was 2 or 5, the centers were randomly chosen as well. In each repetition, we calculated MSCE for the two true risks. True center-specific curves were obtained by training flexible calibration models (splines with five knots) on all 1 million synthetic patients from that center (Figure 6). We then compared the center-specific estimated observed proportions and the true probabilities per center in a fixed grid of 100 values. The only method that obtained center-specific curves borrowing information from other clusters is the MIX-C method, and we compare this to standard flexible calibration with LOESS and restricted cubic splines with three knots (stage 1 of 2MA-C).

Figure 6 Center-specific (grey) and average true calibration plots for the synthetic data with 1000000 observations per center.

4.2 Results

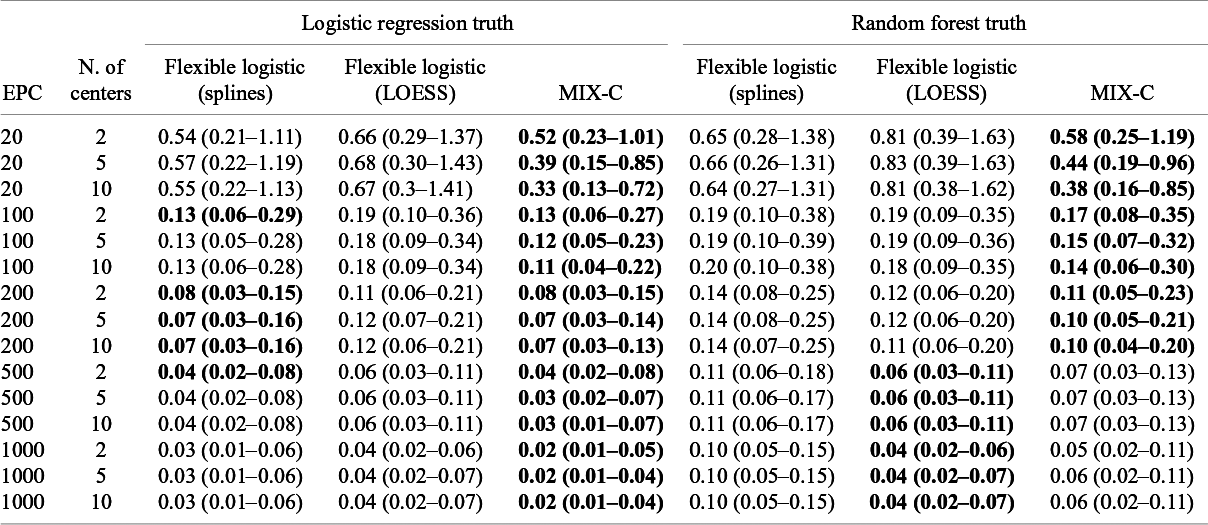

Median MSCE for the synthetic data analysis with 1,000 iterations is shown in Table 4 and by center in Figure 7. MIX-C was the best-performing method in all scenarios when the truth was based on a logistic regression, with splines working equally well in five scenarios. When the truth was based on a random forest model, MIX-C was the best with small validation samples (EPC < 500) and LOESS when the sample size was above 500 events per center. Borrowing information from other clusters is an example of a bias–variance trade-off and leads to improved accuracy for the estimated cluster-specific curves for clusters with modest sample sizes. Additionally, the performance by approach varied considerably between centers (Figure 7); that is, the best approach varied by center.

Table 4 Median MSCE for the synthetic data study for center-specific results. MSCE is presented multiplied by 100. Bold numbers indicate best performance across approaches

Note: The center-specific true curves are based on a flexible logistic model with restricted cubic splines. The lower the number, the closer the estimated center-specific calibration curve is to the true calibration curve in each cluster. Rounded to two decimals. In bold are the best working approach(es) for estimating observed proportions excluding CG-C methods.

Figure 7 Center-specific results of log(MSCE) by the number of events per center for the logistic truth (a) and the random forest (b) truth.

5 Discussion

Calibration of CPMs is crucial since it is related to the usefulness of the recommended clinical decisions provided by the model.Reference Van Calster and Vickers3 Evaluation of calibration performance is best done with flexible calibration plots.Reference Van Calster, Nieboer, Vergouwe, De Cock, Pencina and Steyerberg4, Reference Austin and Steyerberg20 In this work, we introduced three methods for obtaining flexible calibration plots that account for clustering in the dataset. The first method extended the grouped calibration plot using bivariate random effects meta-analysis (CG-C method); the second method was a two-step approach in which flexible cluster-specific plots were combined through random effects meta-analysis. We generated cluster-specific plots in two ways: using restricted cubic splines (2MA-C splines) or LOESS (2MA-C LOESS). The third method used a mixed effects logistic calibration model using restricted cubic splines and random intercepts and slopes per cluster (MIX-C). Through a simulation study and a study using synthetic data from patients with an ovarian tumor, we observed that MIX-C and 2MA-C (splines) worked best to obtain the calibration plot in the cluster with the average effect (simulation study) and MIX-C for the cluster-specific calibration plots (synthetic data). The coverage probability of the PI was suboptimal for all methods and all scenarios with 2MA-C (splines) standing out as the best across scenarios. A disadvantage of 2MA-C (splines) is that the estimated calibration curves per cluster deviated more from the true curves per cluster than those obtained by MIX-C, which uses shrinkage to obtain better cluster-specific calibration curves. While CG-C may work well too to obtain a calibration plot in the cluster with the average effect when using 10 groups based on deciles, it worked poorly when using 10 groups based on equal intervals of the estimated risk. However, the grouped approach tends to depend on the number and types of groups, which is undesirable.Reference Van Calster, Collins and Vickers19, Reference Arrieta-Ibarra, Gujral, Tannen, Tygert and Xu44 Obviously, the other methods depend on the level of smoothing of the spline or LOESS, but we automatically selected these parameters based on statistical goodness of fit to reduce the modeler’s choices. We recommend using 2MA-C (splines) to estimate the curve with the average effect and 95% PI and MIX-C for the cluster-specific curves, especially when the sample size per cluster is limited. In our simulation, 2MA-C (LOESS) had the worst performance, possibly due to the logistic data generation mechanism. In real-world scenarios, the association of outcome and predictors tends to be more complex; therefore, a more flexible approach could be useful, as shown in the synthetic data with a non-linear data-generating mechanism and large validation sample sizes per cluster. In this work, we used the method only for external validation, but the methods also apply to model development with clustered data to explore between-cluster calibration heterogeneity during internal–external validation. We provide ready-to-use R functions to plot the curves and obtain numerical results in the OSF repository, and they are incorporated into the CalibrationCurves package in CRAN.Reference De Cock, Nieboer, Van Calster, Steyerberg and Vergouwe45

Munoz et al.Reference Munoz, Moons, Debray and de Jong46 developed similar methodologies to derive calibration plots in large clustered datasets (preprint published after our initial submission). In their work, they presented four approaches to obtain overall calibration plots. Although their rationale is similar to ours in some cases, the implementation is different. The stacking approach is similar to what we define as flexible curve ignoring clustering (Section 2.3), where all the data from different clusters is combined into a single dataset and a calibration model is fitted. Their “one-step meta-analysis” approach corresponds to our MIX-C method where a mixed model is fitted. Their “two-step meta-analysis” method is similar to 2MA-C, but they also present a variant where instead of fitted probabilities the model parameters are aggregated. Finally, they introduced an approach where the calibration model is a generalized estimating equation. None of the methodologies include the estimation of PI. The work is illustrated with an evaluation of a model to diagnose deep vein thrombosis, but no simulation study is included to evaluate the performance of the methodologies or to compare them to our results.

The main strength of our methodologies is the inclusion of a heterogeneity measure through the PI. These intervals indicate, for every estimated risk, the range within which the observed proportion may fall in a new cluster. It is crucial to differentiate the calibration in the cluster with the average effect with the cluster-specific calibration represented in the PI. While a model can be well calibrated in the cluster with the average effect, this does not necessarily imply that the model is perfectly calibrated in every individual cluster. Statements such as “the model was well calibrated” based on the cluster with the average effect calibration plot should be avoided or at least accompanied by a clarification that this only applies to the summary curve. Using the interpretation of a calibration curve with the average effect for a specific cluster might lead to incorrect decision making. For example, the curve with average effect suggests overestimation of risks, but in some clusters, the model might be underestimating them. Although no method provided PI with correct coverage in all investigated simulation scenarios (Figure 5 and Supplementary Figure S10), they show a more realistic picture than the cluster-ignorant CI. In general, all methods underestimate the heterogeneity between clusters, therefore yielding too narrow PI, especially for estimated risks far from prevalence and when the validation sample size is small. PI of MIX-C had an odd behavior where it tended to have too narrow or too wide PI across the calibration curve. For the rest of the approaches, increasing the number of clusters in the validation when the number of events per cluster was low caused the PI to be too narrow (Supplementary Figures S12–S16). More research to fix the suboptimal performance of the PI is needed where Bayesian-based PI derivation could be a solution.Reference Partlett and Riley47– Reference Higgins, Thompson and Spiegelhalter49 Another limitation concerning CG-C and 2MA-C is that they present calibration plots based on meta-analysis of independent points. These approaches create the plot by joining each pointwise estimate of the observed proportion instead of creating a model for the whole plot and therefore are dependent on the number of points. Each of these points represents the calibration in the cluster with the average effect conditional on the estimated risks. We assume that all pointwise estimates form the calibration plot in the cluster with the average effect, but in the modeling process, each point is independent.

This study is a phase two methodological study that focused on the presentation of the methodology and assessments in a limited number of settings.Reference Heinze, Boulesteix, Kammer, Morris and White25 Further research should therefore evaluate the methods in more extended settings and applications. For example, simulations could focus on more complex truths, such as multiple predictors with random slopes for their effects on the outcome, continuous predictors with non-linear associations with the outcome under study, and prediction models based on flexible machine learning methods such as random forests, boosting approaches, or neural networks. Instead of performing a larger simulation study, we decided to focus on synthetic data based on real data from 10 hospitals on women with an ovarian tumor with the aim of building diagnostic prediction models to estimate the risk of malignancy. These data were used to evaluate an existing prediction model, ADNEX, which is a multinomial logistic regression model using random intercepts and uniform shrinkage of model coefficients.Reference Van Calster, Hoorde and Valentin26 In this synthetic data analysis, we focused on the cluster-specific performance of MIX-C by varying the validation sample. MIX-C showed good results, especially when the sample size is limited, while non-clustered approaches generally performed worse.

Author contributions

Contributions were based on the CRediT taxonomy. Conceptualization: LB, LW, and BVC. Funding acquisition: LW and BVC. Project administration: LB. Supervision: LW and BVC. Methodology: LB, LW, BVC, and BDCC. Resources: LB. Investigation: LB, LW, and BVC. Validation: LB, LW, BVC, and BDCC. Data curation: BVC. Software: LB. Formal analysis: LB, LW, and BVC. Visualization: LB, LW, and BVC. Writing—original draft: LB, LW, and BVC. Writing—review and editing: LB, LW, BVC, and BDCC. All authors have read the manuscript, share final responsibility for the decision to submit it for publication, and agree to be accountable for all aspects of the work.

Competing interest statement

The authors declare that no competing interests exist.

Data availability statement

Data and code to reproduce results and figures are available in a public, open-access repository (https://osf.io/aj8ew/). All data relevant to the study are included in the article or uploaded as supplementary information, except the motivating example data and the synthetic data due to privacy concerns.

Funding statement

This research was supported by the Research Foundation—Flanders (FWO) under grant G097322N with BVC as supervisor. LW and BVC were supported by Internal Funds KU Leuven (grant C24M/20/064). LB was supported by a long stay abroad grant from Research Foundation—Flanders (FWO) under grant V457024N.

Supplementary material

To view supplementary material for this article, please visit http://doi.org/10.1017/rsm.2025.10046.