Introduction

While there is a clear consensus on the importance of encouraging interdisciplinary team science as a way to generate innovative solutions to pressing scientific and societal challenges, real-world programs to advance this goal have generally been confined to offering incentives for team formation. The assumption appears to be some variation on, “if you pay them, they will come.” Researchers may be more than willing to accept funding to embark on interdisciplinary endeavors, but simply bringing intelligent, hard-working, and well-intentioned scholars and scientists together is no guarantee of success [Reference Hughes, Zajac, Woods and Salas1]. Thus, providing other kinds of support for interdisciplinary teams may well be a wise (and modest) investment relative to direct support for research, yielding a higher rate of return. Many universities and institutes provide infrastructure to help incubate interdisciplinary teams, including physical spaces for collaborative teams [Reference Harris and Holley2,Reference Fiore, Gabelica, Wiltshire, Stokols, Hall, Vogel and Croyle3] and administrative support for project management, including scheduling and coordination of team activities and help with the development of large external grant proposals (e.g., UC San Francisco, University of Michigan, University of Florida). Support for the unique needs of interdisciplinary teams can include identification of appropriate team members across disciplines, provision of space in discipline-neutral locations, conflict mediation services, and specialized development interventions designed to help team members develop collaboration skills and knowledge of best communication practices [Reference Harris and Holley2–Reference Lotrecchiano, Mallinson, Leblanc-Beaudoin, Schwartz and Falk-Krzesinski4].

In this study, we focus on the importance of team development and offer an empirical analysis of the effects of a multimodule team science workshop offered to intact, novel teams (i.e., teams of individuals who have not worked together before) tackling grand challenges to society. Any institution investing substantial resources to cultivate interdisciplinary research will want to maximize the return on investment; however, few organizations offer team development opportunities as a way to maximize the chances of team success. This study (1) provides important information on how to create a team development program and (2) provides preliminary evidence of the effectiveness of such a program.

The Importance of Team Development Interventions

Recognition of the importance of team development for the success of interdisciplinary teams is relatively recent, with rigorous empirical evaluations being conducted only within the last decade [Reference Hughes, Zajac, Woods and Salas1,Reference Fiore, Gabelica, Wiltshire, Stokols, Hall, Vogel and Croyle3]. The fact that there are still few studies on the effects of interventions is likely due to the lack of resources dedicated to programs within universities, research centers, and institutes. This may be driven by a widespread assumption that if scholars are successful in their own disciplines, they will have little difficulty acquiring the skills required to collaborate with other successful scholars, even if they work in other fields. Unfortunately, technical expertise in a specific field is a poor predictor of a researcher’s ability to function as a member of a team [Reference Lacerenza and Salas5]. As the recognition of this fact has grown, team development has been identified as a priority area for empirical work in the science of team science (SciTS) [Reference Falk-Krzesinski, Contractor and Fiore6]. This is particularly true for cognitively diverse teams, where team members are drawn from widely divergent fields [Reference Edmondson and Harvey7]. There are many funding initiatives that prioritize the union of STEM and non-STEM fields, for example, but while the difficulties of collaboration are well-documented, practical solutions to this problem have been slow to emerge [Reference Hinrichs, Seager, Tracy and Hannah8,Reference Bennett and Gadlin9].

Challenges associated with fostering collaboration are often linked to the ways in which researchers are trained and socialized. Discipline-specific training rarely even acknowledges the importance of learning how to collaborate across fields [Reference Cooke and Hilton10]; opportunities to learn collaborative skills and cross-disciplinary perspectives on a problem are rare [Reference Baldwin and Chang11]. However, these skills can be acquired at any point in a researcher’s career, which means that improving competencies related to team science is not only a means of enhancing the likelihood of success for the team, but constitutes a route for meaningful faculty development. An empirically based development program, if well-executed, is highly likely to have important long-term outcomes that are favorable to both team scientists and the institutions that employ them. There are indications that faculty who successfully collaborate on an interdisciplinary team publish more and generate higher impact publications [Reference Wuchty, Jones and Uzzi12] and are more likely to engage in future collaborations [Reference Jeong and Choi13]. Effective team development programs help to overcome challenges associated with team science, including challenges created by large team size, geographic dispersion, cognitive diversity, task interdependence, and the need to integrate knowledge across diverse disciplines [Reference Cooke and Hilton10]. Thus, universities that seek to increase their impact and to elevate their profiles may do well to create or support programs that provide these critical skills.

There is growing evidence that interdisciplinary team development programs help teams and individual researchers generate higher-impact outcomes [Reference Wildman and Bedwell14]. Generally, team building involves exercises that help teams set goals, develop interpersonal relationships among members, engage in productive problem-solving, and clarify individual roles on the team [Reference Klein, DiazGranados and Salas15]. These processes overlap with many of the competencies that are the focus of interventions designed to improve collaboration. Additionally, team development activities constitute a set of shared experiences, particularly when teams participate in development modules as a group. In turn, these shared experiences support the development of relationships within teams, which is critical to the processes that support team success [Reference Cheruvelil, Soranno and Weathers16].

Team Science Competencies

Empirical work on team development interventions focuses on a wide range of competencies. The competencies that have been identified in these studies are generally derived from interview, focus group, and survey studies of leaders of scientific teams (especially principal investigators/PIs) [Reference Bennett and Gadlin9,Reference Bishop, Huck, Ownley, Richards and Skolits17–Reference Salazar and Lant22] and leaders of Clinical and Translational Science Institutes (CTSIs) [Reference Begg, Crumley and Fair23–Reference DuBois, Schilling and Heitman25]. While researchers focused on the science of team science are still working toward general agreement on a single set of team science competencies that should be part of an intervention (for example, see the work of Begg [Reference Begg, Crumley and Fair23]), there is some notable overlap in the knowledge, skills, and abilities that have been the subject of previous studies, which indicates that there is a set of priorities that can serve as a starting point. 
These include knowledge about best practices in interdisciplinary research [Reference Nash, Collins and Loughlin26–Reference Wooten, Calhoun and Bhavnani28], communication skills [Reference Fiore, Gabelica, Wiltshire, Stokols, Hall, Vogel and Croyle3,Reference Falk-Krzesinski, Contractor and Fiore6,Reference Cooke and Hilton10,Reference Lotrecchiano20,Reference Salazar and Lant22,Reference Begg, Crumley and Fair23,Reference Nash, Collins and Loughlin26,Reference Marlow, Lacerenza, Paoletti, Burke and Salas29–Reference Verderame, Freedman and Kozlowski33], interpersonal relationship development and maintenance [Reference Fiore, Gabelica, Wiltshire, Stokols, Hall, Vogel and Croyle3,Reference Falk-Krzesinski, Contractor and Fiore6,Reference Lotrecchiano20,Reference Marlow, Lacerenza, Paoletti, Burke and Salas29,Reference Antes, Mart and DuBois30], meeting management and task coordination [Reference Lotrecchiano20,Reference Antes, Mart and DuBois30,Reference Stevens and Campion32], goal setting [Reference Lotrecchiano, Mallinson, Leblanc-Beaudoin, Schwartz and Falk-Krzesinski4,Reference Lotrecchiano20,Reference Wooten, Calhoun and Bhavnani28,Reference Stevens and Campion32], conflict management [Reference Falk-Krzesinski, Contractor and Fiore6,Reference Stevens and Campion32], and leadership skills [Reference Falk-Krzesinski, Contractor and Fiore6,Reference Cooke and Hilton10,Reference Lotrecchiano20,Reference Begg, Crumley and Fair23,Reference Wooten, Calhoun and Bhavnani28,Reference Antes, Mart and DuBois30,Reference Verderame, Freedman and Kozlowski33]. Modifiable individual traits that support team science include positive attitudes toward collaboration [Reference Nash, Collins and Loughlin26,Reference Wooten, Calhoun and Bhavnani28] and cognitive openness, which includes a willingness to learn about the approaches of other disciplines [Reference Begg, Crumley and Fair23,Reference Belkhouja and Yoon34]. 
In addition to several of the competencies listed above, Verderame and colleagues [Reference Verderame, Freedman and Kozlowski33] recommend that mentors or program sponsors assess specific skills and knowledge of early career scientists in order to evaluate their preparedness for interdisciplinary collaborations, including deep knowledge of one’s own field, critical thinking skills, computational skills, and the ability to conduct sound research in an ethical, responsible, and reproducible manner.

In a comprehensive review of programs designed to support team scientists and a report of an impressive initiative developed by the University of Texas Medical Branch’s CTSI, Wooten and colleagues [Reference Wooten, Calhoun and Bhavnani28] recommend that interventions for interdisciplinary teams focus on the development of team science knowledge, collaboration skills, and positive attitudes toward collaboration. Additionally, team building is supported through activities that focus on goal setting, the creation of interpersonal relationships, and the clarification of individual members’ roles. Further, they posit that programs help teams to develop a shared mental model by helping them engage in productive knowledge management and cognitive integration. The careful structure and design of programs supporting interdisciplinary team science also help teams achieve their goals by fostering broad collaborative networks spanning diverse disciplines. While Wooten et al. [Reference Wooten, Calhoun and Bhavnani28] also identify leadership skills as a potential area for professional development, they found it the most resistant to change, indicating that improving leadership abilities may be more about the timing of faculty development programs relative to the stage of a person’s career and individual personalities than skills training.

A central question, of course, is whether a team science development program focused on any set of competencies related to interdisciplinary collaboration will be well-received by participants, and even more importantly, can create meaningful change [Reference Begg, Crumley and Fair23]. Fortunately, the small body of existing empirical evidence suggests that interdisciplinary team development improves attitudes toward interdisciplinary collaborations [Reference Cooke and Hilton10,Reference Spring, Klyachko and Rak35], enhances team trust [Reference Cooke and Hilton10], increases knowledge relevant to the team’s work [Reference Cooke and Hilton10,Reference Spring, Klyachko and Rak35], improves role clarity [Reference Cooke and Hilton10], enhances interpersonal communication skills [Reference Cooke and Hilton10,Reference Vogel, Feng and Oh36], and ultimately, increases team productivity, as measured by publications and citations [Reference Vogel, Feng and Oh36,Reference Keck, Sloane and Liechty37]. Meta-analyses indicate that interventions focused on collaboration skills improve team performance as well as more proximal outcomes including cognitive, affective, and process-related outcomes [Reference Falk-Krzesinski, Contractor and Fiore6,Reference Delise, Allen Gorman and Brooks38]. This is highly encouraging for any organization seeking to invest in the success of teams beyond the provision of pilot funding.

While the empirical literature supporting the efficacy of team development interventions is promising, the body of evidence is still small. We designed an evaluation to examine the impacts of an interdisciplinary team development workshop on individual and team outcomes, collecting data from two sources: a pre/post (T1/T2) intervention survey and a questionnaire about participants’ reactions to individual sessions during the workshop itself. Combined, the data sets allow us to address the following research question and hypotheses:

RQ: Which workshop program modules were regarded the most favorably by participants?

H1: Individuals who attend a team development workshop will score higher on readiness to collaborate than individuals who did not attend the workshop.

H2: Individuals who attend a team development workshop will score higher on goal clarity than individuals who did not attend the workshop.

H3: Individuals who attend a team development workshop will score higher on process clarity than individuals who did not attend the workshop.

H4: Individuals who attend a team development workshop will exhibit less role ambiguity than individuals who did not attend the workshop.

H5: Individuals who attend a team development workshop will score higher on behavioral trust than individuals who did not attend the workshop.

H6: Teams with a higher percentage of members who attend a team development workshop will report higher levels of team satisfaction.

Focusing on the outcomes of a team development intervention allows for more precision in the development and refinement of programs for interdisciplinary research teams [Reference Cooke and Hilton10]. Toward this end, we will present information about a team science workshop and evaluations from participants as well as individual- and team-based outcomes that resulted from the workshop.

Methods

U-LINK Program Description

The University of Miami Laboratory for INtegrative Knowledge (U-LINK) program is an interdisciplinary pilot research funding program designed to incentivize highly innovative work to identify solutions to grand societal challenges. There are a number of features that the program shares with other pilot funding programs at other universities and institutes. However, although the U-LINK program is grounded in the most common recommendations that appear in the SciTS literature, we know of no other programs that include all of these research-related features. These features include: (1) focus on a grand challenge to society rather than a discrete scientific problem; (2) a Phase I/Phase II structure, where the first 9-month phase is devoted to the process of “teaming” and the second (1-year, renewable) phase is designed to support teams in pilot data collection and feasibility testing; (3) support from multiple campus stakeholders, including the university’s CTSI (co-sponsorship of an additional Phase I team), the Graduate School (sponsorship of three full-time graduate fellows for Phase II teams); and the Libraries, which provide an embedded librarian for each team (please see Miller et al. [Reference Miller, Ben-Knaan and Clark Hughes39] for details on this innovative part of the U-LINK program); (4) the requirement for all team members to attend an annual team science workshop; and (5) the requirement for teams to engage with community-based stakeholders who directly participate in the work of the team and provide an internal check on the likely viability of the solutions proposed by the teams. The activities of U-LINK, including review of applications and renewals, were guided by an internal advisory board of interdisciplinary faculty, as well as a representative of the university’s development office and an administrative representative from the Libraries (herself a humanities scholar). 
The U-LINK program was directed by the Vice Provost and Associate Provost for Research from 2017 to 2020.

Team Development Intervention Description and Content

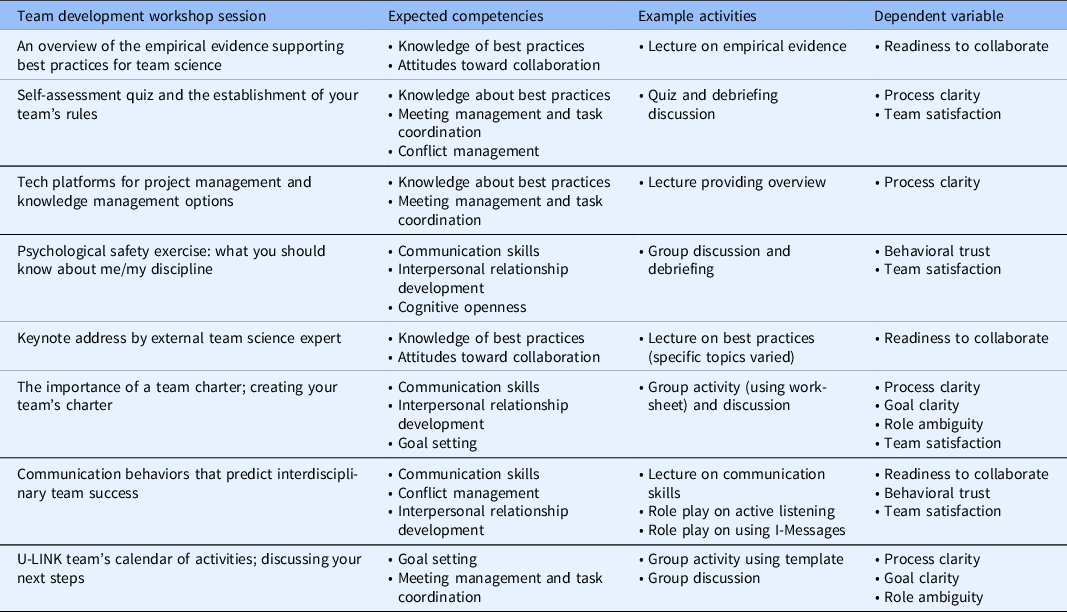

Teams awarded U-LINK funding were required to attend a team development workshop on team science within a month of the start of the funding period. Because the workshop varied in content and format for each year it was offered, we have focused on the 2019 iteration. The workshop, which spanned two consecutive half-days, was a combination of didactic presentations that provided an overview of empirical evidence, and opportunities to practice skills in communication and to conduct collaborative work designed to support long-term team functioning. We adopted a team development intervention approach that combines skill acquisition with team building (see Lacerenza and colleagues [Reference Lacerenza, Marlow, Tannenbaum and Salas40] for more information about types of team development interventions). As per recommendations from the empirical literature [Reference Falk-Krzesinski, Contractor and Fiore6,Reference Lacerenza, Marlow, Tannenbaum and Salas40], we varied modes of delivery, including lectures, demonstrations, and role plays, targeting a variety of competencies related to team science, including communication, the ability to establish psychological safety, and a focus on team processes. In particular, we asked each team to develop a team charter (colloquially termed a “research prenup”) in which members agree to a process for team meetings, adding and removing team members, determining the order of co-authorship of publications, and other vital functions. This intensive intervention also served as an opportunity for U-LINK teams to engage both with their own team members and other teams, some of which may have a shared focus (e.g., threats from climate change, computation/big data). The workshop also featured a distinguished keynote speaker with expertise in the “science of team science” who offered advice to teams based on their own empirical work. See Table 1 for the relationship between workshop content and expected competencies.

Table 1. Team development workshop content, expected competencies, example activities, and study variables

Development of Workshop Content

The U-LINK workshop was developed in the same way that the U-LINK pilot funding program itself was developed. First, a careful review of the literature on the key competencies related to interdisciplinary scientific team collaborations was performed. Second, this list of competencies was examined further to assess whether (1) the empirical literature linked team outcomes to these competencies and (2) evidence existed that these competencies were sensitive to training. For example, there is a near-consensus that communication skills are central to the success of teams and there is ample evidence that training programs can improve communication skills [Reference Marlow, Lacerenza, Paoletti, Burke and Salas29]. Conversely, however, while much has been made about the importance of competent leadership for the success of teams [Reference Antes, Mart and DuBois30], there is little evidence that leadership skills can be acquired through brief interventions [Reference Wooten, Calhoun and Bhavnani28]. Thus, for our workshop, we focused on improving competencies which were most likely to respond to our intervention(s). These competencies appear in Table 1. The workshop agenda appears in Appendix A; an internal report on the workshop that includes additional details about its content can be found in the University of Miami’s scholarly repository: https://doi.org/10.33596/ovprrs-19. Figure 1 represents the balance of workshop content. The content categories include lectures, discussions/role plays, and social interactions. Lectures presented during the workshop were designed to be understandable and useful to a broad audience (i.e. no undefined jargon, avoiding the presentation of academic theories, or detailed analyses). 
Lectures were alternated with group discussions and role plays designed to give teams the opportunity to interact with each other to practice behavioral skills and to perform recommended team-based tasks, such as addressing key questions necessary to complete a team charter (i.e. authorship order considerations, agreements about meeting frequency, and format). These activities were balanced with opportunities for informal interactions among team members, including coffee breaks, meals, and a happy hour with drinks and hors d’oeuvres. It is worth noting that we also hosted a “team launch” dinner for each individual team 1–3 weeks prior to the workshop to help establish the importance of personal interactions outside of the work environment. This created another opportunity for bonding within the team and for establishing the team’s “origin story” and identity. (See Bennett & Gadlin [Reference Bennett and Gadlin9] for more on the importance of team processes for team success and how social elements support this success.)

Fig. 1. Team science workshop content.

Participants

Participants in this study are members of U-LINK teams who received a Phase I and/or Phase II funding award in 2018 and 2019. Participants were faculty members and/or library staff members at the University of Miami and represent a variety of disciplines and ranks. Each team had members from at least three disciplines and ranged in size from 5 to 9 members. Disciplines represented include engineering, architecture, art, history, English, medicine, communication, public health, education, educational psychology, nursing, and psychology. While most team members were tenured (25.3% associate professors; 20.3% full professors), a substantial minority were assistant professors (20.3%) and several were nontenure track academic professionals (n = 11, 13.9%). While most respondents were White (n = 47, 59.5%), there were respondents reporting from other racial/ethnic backgrounds (n = 9, 11.4% Asian; n = 6, 8% Hispanic; n = 5, 6.3% African American/Black; n = 12, 15% other or did not specify). See Table 2 for characteristics of participants and nonparticipants; Table 3 provides information about the characteristics of teams.

Table 2. Characteristics of participants (n = 79) and nonparticipants (n = 13)

Table 3. Characteristics of teams (k = 11)

Each survey and two follow-up requests were sent to all team members. Participants in the team development workshop were asked to complete an evaluation form at the end of each day: 69.2% responded to the survey after Day 1 and 86.5% completed the Day 2 survey. Presumably because attendance was designated as “mandatory,” participation in the workshop was high (approximately 75%) for most elements of the workshop. (Faculty with teaching conflicts moved in and out.)

Data Collection Procedures

Data for this study were drawn from two different sources. First, participants filled out a paper-based questionnaire at the end of each day, which was intended to measure the individual session’s overall effectiveness, usefulness/applicability, and impacts on the team’s work. Second, we collected survey data (see measures below) from grant awardees via Qualtrics. The pretest survey was emailed to all awardees 2 weeks prior to the workshop; a follow-up reminder prior to the workshop was also sent. A post-test survey was sent approximately 2 weeks after the workshop, along with a follow-up request. No incentives were provided in exchange for completing the survey. This study was determined to be exempt from IRB review because it qualifies as “process improvement” rather than human subjects research. Measures described below reflect the final items used for all analyses.

Measures

Evaluation of the team development workshop

A researcher-created three-item questionnaire was used to measure participants’ perception of each session’s overall effectiveness, usefulness/applicability, and likely impacts on the team’s work. These items were “What is your overall assessment of this session?” for overall effectiveness, “Please indicate the extent to which the session will be useful/applicable to your team’s work” for usefulness/applicability, and “Please indicate the likelihood that the session will influence my team’s work” for impacts on the team’s work. Items were rated on a 5-point Likert scale that ranged from “Terrible” (1) to “Excellent” (5) for overall effectiveness; “Not at all useful” (1) to “extremely useful” (5) for usefulness/applicability; and “Not at all likely” (1) to “extremely likely” (5) for impacts on the team’s work.

Readiness to collaborate

Readiness to collaborate was measured using a three-item instrument that was revised from Hall et al. [Reference Hall, Stokols and Moser41]. These items were “I believe the benefits of collaboration among scientists from different disciplines usually outweigh the inconveniences and costs of such work,” “There is so much work to be done within my field that it is important for me to focus my research efforts working with others in my own discipline,” and “My own work can benefit from incorporating the perspectives of disciplines that are different from my own.” Items were rated on a 5-point Likert scale that ranged from “Strongly Disagree” (1) to “Strongly Agree” (5). A composite score was created by averaging responses on the three items, with a higher score indicating a higher level of readiness to collaborate. The internal consistency measured by Cronbach’s alpha was low at both time points ($\alpha$ = .51 for time 1; $\alpha$ = .56 for time 2).
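As an illustration of how a composite score and Cronbach’s alpha of the kind reported in this section can be computed, the following is a minimal sketch in Python. It is not the procedure used in this study (the analyses were run in SPSS); the function names and the `ratings` table are hypothetical and exist only for the example. Alpha is computed with the standard formula, $\alpha = \frac{k}{k-1}\left(1 - \frac{\sum_i s_i^2}{s_T^2}\right)$, where $k$ is the number of items, $s_i^2$ is the sample variance of item $i$, and $s_T^2$ is the sample variance of respondents’ summed scores.

```python
from statistics import mean, variance


def composite_scores(responses):
    """Average each respondent's item ratings into one composite score."""
    return [mean(row) for row in responses]


def cronbach_alpha(responses):
    """Cronbach's alpha for a respondents-by-items table of ratings."""
    k = len(responses[0])                       # number of items
    items = list(zip(*responses))               # one tuple of ratings per item
    item_var_sum = sum(variance(col) for col in items)
    total_var = variance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - item_var_sum / total_var)


# Hypothetical 5-point Likert ratings: 5 respondents x 3 items (not study data).
ratings = [
    [4, 5, 4],
    [3, 3, 4],
    [5, 5, 5],
    [2, 3, 2],
    [4, 4, 5],
]

print(composite_scores(ratings))
print(round(cronbach_alpha(ratings), 3))
```

Any negatively worded item would need to be reverse-coded before both steps; the sketch assumes that has already been done.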

Behavioral trust disclosure

Behavioral trust disclosure was measured only at time 2 using a five-item instrument that was developed by Gillespie [Reference Gillespie42]. Sample items include “Share your personal feelings with your team,” “Confide in your team about personal issues that are affecting your work,” and “Discuss how you honestly feel about your work, even negative feelings and frustration.” Items were rated on a 5-point Likert scale that ranged from “Not at all willing” (1) to “Completely willing” (5). A composite score was created by averaging responses on five items, with a higher score indicating a higher level of willingness to share personal feelings and issues related to the work. Internal consistency was high, $\alpha$ = .90.

Goal clarity

This four-item measure was revised from Sawyer’s [Reference Sawyer43] measurement of goal and process clarity. Sample items include “I am clear about my responsibilities on this U-LINK team,” “I am confident that I know what the goals are for my U-LINK team,” and “I know how my work relates to the overall objectives of my U-LINK team.” Items were rated on a 5-point Likert scale that ranged from “Strongly Disagree” (1) to “Strongly Agree” (5). A composite score was created by averaging responses on three items, with a higher score indicating a higher level of goal clarity. The internal consistency measured by Cronbach’s alpha was good at each time point ($\alpha$ = .90 for time 1; $\alpha$ = .93 for time 2).

Process clarity

This three-item measure was revised from Sawyer’s [Reference Sawyer43] measurement of goal and process clarity. Sample items include “I know how to go about my work on my U-LINK team,” “I know how my team will move forward with its work on our U-LINK project,” and “I am confident that my U-LINK team is using the right processes to move forward with its work.” Items were rated on a 5-point Likert scale that ranged from “Strongly Disagree” (1) to “Strongly Agree” (5). A composite score was created by averaging responses on three items, with a higher score indicating a higher level of process clarity. The internal consistency measured by Cronbach’s alpha was poor at time 1 ($\alpha$ = .56) but was good at time 2 ($\alpha$ = .87).

Role ambiguity

This two-item measure was drawn from Peterson and colleagues’ measurement of role ambiguity, conflict, and overload [Reference Peterson, Smith and Akande44]. The items were “I know exactly what is expected of me on my U-LINK team” and “I know what my responsibilities are on my U-LINK team.” Items were rated on a 5-point Likert scale that ranged from “Strongly Disagree” (1) to “Strongly Agree” (5). A composite score was created by averaging responses on two items, with a higher score indicating less uncertainty about one’s role on the U-LINK team. The internal consistency measured by Cronbach’s alpha was good at time 1 ($\alpha$ = .90) and was acceptable at time 2 ($\alpha$ = .77).

Team satisfaction

Team satisfaction was measured using three items that were revised from Hackman and Oldham’s job satisfaction survey questionnaire [Reference Hackman and Oldham45]. Sample items include “I enjoy the kind of work we do on this U-LINK team,” “Working on this U-LINK team is an exercise in frustration” (reverse-coded), and “Generally speaking, I am very satisfied with this U-LINK team.” Items were rated on a 5-point Likert scale that ranged from “Strongly Disagree” (1) to “Strongly Agree” (5). A composite score was created by averaging responses on three items, with a higher score indicating a higher level of satisfaction with the U-LINK team. The internal consistency measured by Cronbach’s alpha was acceptable ($\alpha$ = .82).
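Because one of the team satisfaction items is reverse-coded, its ratings must be flipped before averaging. The sketch below shows one common way to do this on a 5-point scale (a rating $x$ becomes $6 - x$). The function names, the item order, and the position of the reverse-coded item are assumptions made for illustration, not details taken from the study instrument.

```python
from statistics import mean

SCALE_MIN, SCALE_MAX = 1, 5  # 5-point Likert scale


def reverse_code(rating):
    """Flip a rating so that 1 <-> 5, 2 <-> 4, and 3 stays 3."""
    return SCALE_MIN + SCALE_MAX - rating


def satisfaction_score(item_ratings, reverse_items=(1,)):
    """Average item ratings after flipping the reverse-coded positions.

    `reverse_items` holds zero-based positions of negatively worded items;
    position 1 (the second item) is a hypothetical choice for this example.
    """
    adjusted = [
        reverse_code(r) if i in reverse_items else r
        for i, r in enumerate(item_ratings)
    ]
    return mean(adjusted)


# A respondent who enjoys the work (5), strongly disagrees that the team is
# "an exercise in frustration" (1), and is very satisfied (5).
print(satisfaction_score([5, 1, 5]))
```

Without the flip, this highly satisfied respondent would receive a misleadingly low average of about 3.67.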

Analytic Strategies

We used SPSS to first summarize participants’ rating on the three proximal outcomes of each session numerically and graphically. Second, a series of repeated-measures Analysis of Variance (ANOVA) tests were performed to explore how specific sessions were perceived by participants, followed by a post-hoc analysis using Bonferroni adjustment [Reference Gamst, Meyers and Guarino46]. ANOVA was conducted separately for Day 1 and Day 2 sessions. Third, a series of independent samples t-tests was conducted to see whether individuals who attended at least one team science workshop session scored higher on some outcomes than those who did not attend any. None of the variables measured before the team development intervention was significantly different, and thus they were not controlled in the analyses. Lastly, using the Mann–Whitney U test, team-level outcomes (team satisfaction, behavioral trust, goal clarity and process clarity, and role ambiguity) were compared based on the percentage of team members who attended or did not attend at least one day of the 2-day team development workshop (i.e., Group 1 consisted of teams with >75% attendance; Group 2 had ≤ 75% attendance). The Mann–Whitney U test was used due to our small sample size (n = 11 teams), and its effect size was computed based on the formula provided by Kerby [Reference Kerby47].
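To make the team-level comparison concrete, the following is a small Python sketch of the Mann–Whitney U statistic and of a rank-biserial effect size computed with Kerby’s simple difference formula, $r = f - u$, where $f$ is the proportion of cross-group pairs favoring the hypothesis and $u$ is the proportion of pairs that do not. It is an illustration only: the study analyses were run in SPSS, the function names are hypothetical, the team scores are invented, and no p-value is computed.

```python
def mann_whitney_u(group1, group2):
    """Return (U1, U2), counting for every cross-group pair which value is larger.

    U1 counts pairs where the group1 value is larger; ties count one half.
    """
    u1 = 0.0
    for x in group1:
        for y in group2:
            if x > y:
                u1 += 1.0
            elif x == y:
                u1 += 0.5
    u2 = len(group1) * len(group2) - u1
    return u1, u2


def rank_biserial(group1, group2):
    """Simple difference formula: favorable minus unfavorable pair proportions."""
    u1, u2 = mann_whitney_u(group1, group2)
    return (u1 - u2) / (len(group1) * len(group2))


# Hypothetical team-level satisfaction means for 11 teams (not study data).
high_attendance = [4.7, 4.3, 4.0, 4.5, 3.8]       # teams with >75% attendance
low_attendance = [3.7, 4.1, 3.3, 3.9, 3.5, 4.4]   # teams with <=75% attendance

print(mann_whitney_u(high_attendance, low_attendance))
print(round(rank_biserial(high_attendance, low_attendance), 2))
```

In this invented example, 24 of the 30 cross-group pairs favor the high-attendance teams and 6 do not, so $r = 24/30 - 6/30 = .60$.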

Results

Team Characteristics

The number of team members ranged from 5 to 9 (M = 7.18, SD = 1.25). The percentage of male team members varied from 0 to 75% (M = 50.69%, SD = 26.84%). Six teams were composed of more females and five teams of fewer females. Years of U-LINK funding ranged from 1 to 2. The percentage of team members who participated in the 2-day team development workshop varied from 0% (n = 1) to 100% (n = 1), with a total of 52 participants on Day 1 and 52 on Day 2. Some participants attended both days, while some attended only one or the other. Of those who attended, 36 on Day 1 and 45 on Day 2 filled out a paper-format survey questionnaire that measured the three proximal outcomes of each workshop session: overall effectiveness, usefulness/applicability, and likely impacts on the team’s work.

Teams’ Evaluation of Workshop Sessions

The first research question focused on how participants evaluated the team science workshop sessions. Figures 2–7 present the means of the evaluations for each of the workshop topics. Repeated-measures ANOVA indicated that there were no group differences in the change score. Participants’ mean ratings of the team development intervention at time 2 varied by individual session: 3.94–4.72 for overall effectiveness, 3.74–4.32 for usefulness/applicability, and 3.91–4.18 for impacts on the team’s work. Most participants perceived all of the sessions to be effective, useful/applicable, and impactful on their ability to work as a team, with a very small number of participants giving low ratings to a couple of sessions on both days. However, responses to individual sessions varied significantly. Results from a repeated-measures ANOVA indicate that there were significant differences in usefulness/applicability across the four workshop sessions given on Day 1 (F(3,99) = 4.11, p = .009) as well as across the five sessions given on Day 2 (F(4,148) = 6.75, p < .05). As shown in Figures 2–7, participants perceived the session on technological platforms for project management and knowledge management options to be the least useful/applicable for their work, while the keynote address delivered by a prominent scholar was perceived to be the most effective and useful/applicable.
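For readers who want to see how the F ratios and degrees of freedom reported above arise, the following is a minimal sketch of a one-way repeated-measures ANOVA. It is not the SPSS procedure used in this study: it assumes complete cases only, applies no sphericity correction, and omits the Bonferroni-adjusted post-hoc comparisons. The function name and the ratings are hypothetical.

```python
def repeated_measures_anova(scores):
    """One-way repeated-measures ANOVA.

    scores: one row per participant, one column per session
            (complete cases only).
    Returns (F, df_sessions, df_error).
    """
    n = len(scores)      # number of participants
    k = len(scores[0])   # number of sessions
    grand_mean = sum(sum(row) for row in scores) / (n * k)
    session_means = [sum(row[j] for row in scores) / n for j in range(k)]
    subject_means = [sum(row) / k for row in scores]

    # Partition the total sum of squares into session, subject, and error parts.
    ss_sessions = n * sum((m - grand_mean) ** 2 for m in session_means)
    ss_subjects = k * sum((m - grand_mean) ** 2 for m in subject_means)
    ss_total = sum((x - grand_mean) ** 2 for row in scores for x in row)
    ss_error = ss_total - ss_sessions - ss_subjects

    df_sessions = k - 1
    df_error = (k - 1) * (n - 1)
    f_stat = (ss_sessions / df_sessions) / (ss_error / df_error)
    return f_stat, df_sessions, df_error


# Hypothetical ratings from 4 participants across 4 sessions (NOT the study data).
ratings = [
    [4, 5, 3, 4],
    [5, 5, 4, 4],
    [3, 4, 2, 4],
    [4, 5, 3, 5],
]
f_stat, df1, df2 = repeated_measures_anova(ratings)
print(f"F({df1},{df2}) = {f_stat:.2f}")
```

Under these assumptions the error degrees of freedom equal (k − 1)(n − 1), so F(3,99) for four sessions would correspond to 34 complete cases and F(4,148) for five sessions to 38 complete cases.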

Fig. 2. Participants’ ratings on Day 1 sessions: overall assessments.

Session 1: An overview of the empirical evidence supporting best practices for team science

Session 2: Self-assessment quiz and the establishment of your team’s rules

Session 3: Tech platforms for project management and knowledge management options

Session 4: Psychological safety exercise: What you should know about me/my discipline

Fig. 3. Participants’ ratings on Day 1 sessions: perceived usefulness.

Session 1: An overview of the empirical evidence supporting best practices for team science

Session 2: Self-assessment quiz and the establishment of your team’s rules

Session 3: Tech platforms for project management and knowledge management options

Session 4: Psychological safety exercise: What you should know about me/my discipline

Fig. 4. Participants’ ratings on Day 1 sessions: influence on teams’ work.

Session 1: An overview of the empirical evidence supporting best practices for team science

Session 2: Self-assessment quiz and the establishment of your team’s rules

Session 3: Tech platforms for project management and knowledge management options

Session 4: Psychological safety exercise: What you should know about me/my discipline

Fig. 5. Participants’ ratings on Day 2 sessions: overall assessments.

Session 1: Keynote address by external team science expert

Session 2: The importance of a team charter; creating your team’s charter

Session 3: Communication behaviors that predict interdisciplinary team success; role play

Session 4: U-LINK team’s calendar of activities; discussing your next steps

Session 5: Presentation of U-LINK Resources

Fig. 6. Participants’ ratings on Day 2 sessions: perceived usefulness.

Session 1: Keynote address by external team science expert

Session 2: The importance of a team charter; creating your team’s charter

Session 3: Communication behaviors that predict interdisciplinary team success; role play

Session 4: U-LINK team’s calendar of activities; discussing your next steps

Session 5: Presentation of U-LINK Resources

Fig. 7. Participants’ ratings on Day 2 sessions: influence on teams’ work.

Session 1: Keynote address by external team science expert

Session 2: The importance of a team charter; creating your team’s charter

Session 3: Communication behaviors that predict interdisciplinary team success; role play

Session 4: U-LINK team’s calendar of activities; discussing your next steps

Session 5: Presentation of U-LINK Resources

Impact of Team Development Intervention on Outcomes

Individual-level outcome measures

No significant difference was found at T1 between those who attended the workshop and those who did not, suggesting that significant differences at posttest are attributable to participation in the team science workshop. Individuals who participated in at least one workshop session (M = 4.63, SD = .47, n = 28) scored higher at posttest on readiness to collaborate than those who did not (M = 4.06, SD = .65, n = 11), t(37) = 3.04, p = .004, supporting H1. Cohen’s d of 1.08 (95% CI: 0.36 to 1.82) suggests a substantial difference between the two groups in individuals’ perceived readiness to collaborate. This indicates that approximately 86% of individuals who attended a workshop session scored above the mean readiness to collaborate of those who did not. Figure 8 provides a visual representation of these results.

Fig. 8. Impact of team development intervention on readiness to collaborate.
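The effect size for readiness to collaborate can be approximately recomputed from the summary statistics reported above. The sketch below assumes a pooled-standard-deviation Cohen’s d and interprets the 86% figure as Cohen’s U3 (the share of attendees expected to score above the non-attendee mean under normality). The source does not state which formulas were used, so both are assumptions, and the function names are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def pooled_sd(sd1, n1, sd2, n2):
    """Pooled standard deviation of two independent groups."""
    return sqrt(((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2))


def cohens_d(m1, sd1, n1, m2, sd2, n2):
    """Standardized mean difference using the pooled standard deviation."""
    return (m1 - m2) / pooled_sd(sd1, n1, sd2, n2)


def t_from_d(d, n1, n2):
    """Independent-samples t statistic implied by d and the group sizes."""
    return d / sqrt(1 / n1 + 1 / n2)


def cohens_u3(d):
    """Share of group 1 expected to exceed the group 2 mean (normality assumed)."""
    return NormalDist().cdf(d)


# Reported summary statistics for readiness to collaborate.
d = cohens_d(4.63, 0.47, 28, 4.06, 0.65, 11)
t = t_from_d(d, 28, 11)
u3 = cohens_u3(d)
print(f"d = {d:.2f}, t(37) = {t:.2f}, U3 = {u3:.0%}")
```

Because the inputs are rounded to two decimals, the recomputed d and t can differ slightly from the reported values (d = 1.08, t(37) = 3.04).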

In addition, individuals who participated in at least one workshop session scored significantly higher on behavioral trust (M = 4.22, SD = .50, n = 30) than those who did not (M = 3.77, SD = .68, n = 11), t(39) = 2.33, p = .03, which supports H5. Cohen’s d of 0.82 (95% CI: 0.10 to 1.53) indicates a large difference between the two groups in individuals’ behavioral trust, suggesting that approximately 81% of individuals who attended a workshop session scored above the mean behavioral trust of those who did not. Table 4 presents the results of individual-level hypotheses.

Table 4. Results of tests of individual-level hypotheses

Results from independent samples t-tests did not find differences between individuals who participated in at least one team science workshop and those who did not on the other three distal outcome measures (goal clarity, process clarity, and role ambiguity); thus, H2, H3, and H4 were not supported. Results from the Mann–Whitney U test suggest that teams were not different on team satisfaction as a result of workshop participation. The Cohen’s d effect size calculated from the Mann–Whitney U statistic was small (0.04), likely because the study was underpowered. This means that H6 was not supported.

Discussion

In this study, we evaluated the impact of a team development workshop for novel, intact interdisciplinary teams on key outcomes. The U-LINK workshop included didactic lectures on empirically based best practices for collaborative teams, as well as skills-based modules on communication skills and creating psychological safety. Participants had the opportunity to enact their team science knowledge and skills during sessions on creating team rules, establishing a team charter, and planning a calendar of activities. These topics were selected based on the empirical literature on the science of team science indicating that the topics (1) represent knowledge, skills, and behaviors supporting collaboration that can be improved, and (2) are associated with team success.

Our evaluation drew upon both workshop evaluation forms and a pretest (T1)/posttest (T2) survey of participants on constructs related to team science. The results of the post-workshop evaluation indicate that nearly all of the workshop sessions were favorably evaluated. In particular, participants were enthusiastic about the keynote speaker’s presentation that focused on empirical research on effective teams; the remaining sessions scored between 4.2 and 4.4 on a 5-point scale, with just one exception. While liking and valuing material is no guarantee of positive outcomes in terms of individual attitudes and behaviors, the positive evaluations of these sessions indicate that participants value the material and find it useful.

Analyses of the survey results indicate that development interventions for interdisciplinary teams can improve some of the outcomes that are predictive of team success. Specifically, our data indicate that the team science workshop resulted in meaningful improvements in readiness to collaborate and team trust. The importance of this finding cannot be overstated; the influential work of Bennett and Gadlin [Reference Bennett and Gadlin9] on predictors of the success of interdisciplinary teams emphasizes that of the key elements that are critical for team success, the “most important of these is trust” (p. 768) because all team interactions and communication behaviors suffer without it. However, we were unable to demonstrate improvements in other variables, including goal clarity, process clarity, and role ambiguity. Nonetheless, we believe that ongoing interactions within teams during the course of regular meetings may improve these outcomes over time; we are hopeful that these outcomes will have been enhanced because of participants’ engagement in workshop activities. It should be noted, however, that some measures suffered from low reliability and require additional instrument development efforts from researchers.

Questions about the format, content, and timing of team development programs remain. For example, our program was mandatory for all awardees and was scheduled within a month of the start date of the grant award. As a matter of design, this was intended to ensure that team members had an understanding of collaborative best practices before meaningful work began, rather than as a way to remedy entrenched problems with collaboration. Additionally, the program was offered to intact teams to maximize the potential for immediate real-world skill transference as well as to create a shared experience that could serve to foster team bonds [Reference Fernandez, Shah and Rosenman48,Reference Liang, Moreland and Argote49]. There are almost infinite permutations of content, even when focused on key competencies identified in the empirical literature on best practices in supporting team science. Other programs may be intended to provide skills for individual researchers to enhance their ability to join interdisciplinary teams or to improve their performance in (or comfort with) interdisciplinary collaborations.

The most effective timing for team development programs is another issue worth consideration. Many argue that interventions that support more effective interdisciplinary collaborations should be offered in graduate school or even during undergraduate education [Reference Cooke and Hilton10,Reference Nash, Collins and Loughlin26,Reference Klein50]. While some researchers attend to the issue of whether it is preferable to intervene with intact teams versus individuals [Reference Fernandez, Shah and Rosenman48,Reference Liang, Moreland and Argote49], there has been little attention to the “perfect time” to offer interventions, likely because documenting differences in outcomes would require a large number of teams operating under similar conditions, all receiving the same interventions. Because the U-LINK program is designed for shared leadership rather than a traditional top-down “PI model” of responsibility and delegation, it was important that teams attended the workshop at the very beginning of their work, thus precluding options for providing initial workshops at other points in time. However, it should be noted that the U-LINK program provided at least three additional professional development workshops, lectures, and mixers during each academic year.

Additionally, questions remain about the intensity of the interventions needed to improve team outcomes. The duration of team development programs varies widely, from regular 1-hour seminars spaced every month or so [Reference Cooke and Hilton10,Reference Begg, Crumley and Fair23] to full-day short courses 2–3 times per year [Reference Gibert, Tozer and Westoby51]. Our program consisted of two consecutive half-days at the beginning of teams’ funding period. This is another design issue that warrants consideration by future program organizers, but there are scant data to indicate that there is a monotonic relationship between the number of hours of team development and improved team outcomes. However, a general consensus in the literature indicates that it is important to improve specific knowledge, skills, and attitudes, which would be difficult to accomplish in less than a full day.

While the content of the U-LINK team science workshop was grounded in the recommendations that have emerged from the empirical literature on the science of team science, there is not enough of a body of evidence to link specific content to specific outcomes. Based on our experience and prior findings reported in the literature on the science of team science [Reference Klein, DiazGranados and Salas15,Reference Wooten, Calhoun and Bhavnani28,Reference Vogel, Feng and Oh36,Reference Read, O’Rourke and Hong52] we recommend that team development workshops include the following components:

- One-day program to maximize participation
- Combination of didactic lectures presenting the evidence for best practices, hands-on skills development, and role plays to build communication skills and conflict management skills
- In-person participation of intact teams who experience the program together
- Inclusion of informal components where team members can enjoy each other’s company: shared meals, team-building exercises, and a post-workshop happy hour can be helpful

Additionally, we would recommend that future programs focus more heavily on the in-person development of a team charter than our own program did. A separate half-day retreat following a team science workshop might be in order. Experienced team moderators may help to ensure that all voices and concerns are heard, particularly from those with less seniority, experience, and power. Teams that are required to complete a team charter near the beginning of their project work would likely report greater goal and process clarity and less role ambiguity than teams that do not create a charter.

There are other emerging strategies for supporting the development and function of interdisciplinary teams, including structural interventions. In particular, creating coordination centers can help scientific teams take innovative discoveries through to a translational stage [Reference Gehlert, Hall and Vogel53,Reference Rolland, Lee and Potter54]. This type of structural intervention provides recognition [Reference Rolland, Lee and Potter54].

There are, of course, limitations to this study. While we had hoped for a higher response rate among our attendees, our study ultimately had unavoidable issues linked to the relatively small number of teams that received grant funding through our internal program. Additionally, most of our hypotheses require analyses that acknowledge that our data are nested within teams, further compromising our statistical power. It is our hope, however, that the data presented here can contribute to further meta-analyses and thoughtful discussions about the ideal design for team development programs.

Conclusion

This study was designed to assess the efficacy of an interdisciplinary team development program that was part of a larger effort to support the success of novel teams (i.e., those with no previous experience working together) who received pilot funding to work toward developing viable solutions for grand challenges to society. It was our hope to contribute to the body of evidence that institutions can increase the chances that an interdisciplinary team will succeed by providing a brief team development program. Indeed, data collected before, during, and after the program indicate that the program was well received and produced meaningful improvements in readiness to collaborate and behavioral trust. While we were not able to demonstrate increases in long-term outcomes, our ability to thoroughly assess the impacts of this program was limited by a small sample size of teams and the nesting of individual survey responses within teams, which compromises statistical power. Overall, however, the pattern of findings is consistent with the larger body of research that indicates that a team development workshop constitutes a worthwhile investment for universities and institutes. This area of research would be advanced further by incorporating innovative mechanisms to support team development (like simulations that would prepare teams for common obstacles to effective collaboration) and cutting-edge measurement techniques, like electronic badges, that can provide evidence of the impact of development interventions on actual real-world behavior [Reference Pentland55]. It is our hope that the description of this program and its evaluation provide a blueprint that will prove useful for others as they design interdisciplinary research programs to address grand challenges to society.

Supplementary Material

To view supplementary material for this article, please visit https://doi.org/10.1017/cts.2021.831.

Acknowledgements

The authors are grateful to all of the U-LINK awardees who responded to the surveys. Without their participation, this evaluation would not have been possible.

Disclosures

The authors have no conflicts of interest to declare.