Given the scale of the global mental health burden, we need time- and resource-efficient methods for the development, testing, and optimization of psychological interventions. However, this need stands in contrast to the present reality: The standard route for treatment development is slow and inefficient; typically proceeding via initial single case or cohort designs followed by a series of 2- or 3-arm randomized controlled trials (RCTs), this process is essentially the same as it was a few decades ago and is unable to keep pace with the demand for improved outcomes in mental health. In other areas of health, such as cancer, recognition of the need for more rapid treatment development has led to the creation of a new generation of alternative research designs (e.g. Hobbs, Chen, and Lee, Reference Hobbs, Chen and Lee2018; Wason and Trippa, Reference Wason and Trippa2014). Building on these methodological innovations, a new research method has recently been proposed to accelerate the development and optimization of psychological interventions in mental health: the ‘leapfrog’ trial (Blackwell, Woud, Margraf, & Schönbrodt, Reference Blackwell, Woud, Margraf and Schönbrodt2019).

The leapfrog design can be considered a simple form of an ‘adaptive platform trial’ (Angus et al., Reference Angus, Alexander, Berry, Buxton, Lewis and Paoloni2019). These trials aim to offer an efficient means to test multiple interventions simultaneously, with interim analyses used to adjust the trial design. For example, in the platform design described by Hobbs et al. (Reference Hobbs, Chen and Lee2018), multiple new treatments are tested against a control arm (e.g. an established treatment), with repeated Bayesian analyses based on posterior predictive probability used to rapidly eliminate those treatments judged to have a low chance of reaching a pre-specified success criterion. Other platform designs use Bayesian analyses to make adjustments to the randomization weights for individual arms based on their performance, such that participants are preferentially allocated to better-performing arms and those performing poorly are eventually eliminated (e.g. Wason and Trippa, Reference Wason and Trippa2014). The leapfrog design was conceived as a type of adaptive platform trial that would lend itself easily to the kinds of comparisons typical in psychological treatment development (e.g. differences in symptom change between treatments) using a relatively simple analytic method, and that additionally included a mechanism for continuous treatment development and optimization via replacement of the control condition over time (Blackwell et al., Reference Blackwell, Woud, Margraf and Schönbrodt2019).

How does a leapfrog trial work? In such a trial, multiple treatment arms can be tested simultaneously, with one designated as the comparison arm or control. As the trial proceeds and data accumulates, sequential Bayes Factors (BFs; e.g. Schönbrodt, Wagenmakers, Zehetleitner, and Perugini, Reference Schönbrodt, Wagenmakers, Zehetleitner and Perugini2017) are calculated on an ongoing basis, enabling repeated comparison of each arm to the control for the chosen outcome without compromising the statistical inferences. These BFs continuously quantify the strength of evidence for or against superiority of a treatment arm over the control, allowing poorly performing arms to be dropped from the trial as soon as there is sufficient evidence to do so. Further, new treatment arms informed by the latest research findings can be incorporated directly into an ongoing trial. Finally, if sufficient evidence accumulates to suggest a treatment arm is better than the control, the control arm is dropped and replaced by this superior arm. These design features provide a route for accelerated treatment development: they substantially reduce the sample sizes needed, they enable a close link between basic and applied research, reducing the time needed for innovation, and they provide mechanisms for continuous treatment optimization consolidated within one trial infrastructure. However, for any new methodology a crucial step is a first demonstration, to show that the method works in practice and not just on paper, and that the promised advantages are not just theoretical, but are also borne out in reality.

We therefore aimed to conduct a leapfrog trial to provide a concrete demonstration from pre-registration to final reporting, as well as to ascertain the design's feasibility and identify any potential problems or adjustments needed. We applied the design to an internet-delivered cognitive training intervention, imagery cognitive bias modification (imagery CBM; Blackwell et al., Reference Blackwell, Browning, Mathews, Pictet, Welch, Davies and Holmes2015). Imagery CBM was originally developed in the context of depression, with negative interpretation biases and deficits in positive mental imagery as the target mechanisms (Everaert, Podina, & Koster, Reference Everaert, Podina and Koster2017; Holmes, Blackwell, Burnett Heyes, Renner, & Raes, Reference Holmes, Blackwell, Burnett Heyes, Renner and Raes2016). More recently applications have focused specifically on anhedonia (e.g. Blackwell et al., Reference Blackwell, Browning, Mathews, Pictet, Welch, Davies and Holmes2015; Westermann et al., Reference Westermann, Woud, Cwik, Graz, Nyhuis, Margraf and Blackwell2021), a challenging clinical target where there is great need for treatment innovation (e.g. Craske, Meuret, Ritz, Treanor, and Dour, Reference Craske, Meuret, Ritz, Treanor and Dour2016; Dunn et al., Reference Dunn, German, Kazanov, Zu, Hollon and DeRubeis2020). Imagery CBM currently stands at a critical stage of clinical translation, needing to make the crucial transition from early-phase trials to tests of real-world implementations. Further, while research suggests promise for reducing anhedonia, it also indicates the need for further development work (Blackwell et al., Reference Blackwell, Browning, Mathews, Pictet, Welch, Davies and Holmes2015). Imagery CBM therefore made a particularly suitable exemplar for a leapfrog trial.

In applying the leapfrog design to imagery CBM as a low-intensity intervention to reduce anhedonia, our primary aim in this study was to implement and thus demonstrate the core features of a leapfrog trial: Sequential Bayesian analyses based on Bayes Factors (BF), updated continuously with each new participant completing the trial; removal of a trial arm when it hit a prespecified threshold for failure; introduction of new trial arms into an ongoing trial; and replacement of the control arm with a different arm when that intervention arm reached a pre-specified threshold for success.

Methods

Design

The study was a randomized controlled leapfrog trial with multiple parallel arms. Allocation was on an equal ratio between those arms currently in the trial at the time of an individual participant's randomization (e.g. 1:1:1 ratio when there were three arms in the trial; 1:1 ratio when there were two arms in the trial). The trial was prospectively registered on clinicaltrials.gov (NCT04791137) and the Open Science Framework (https://osf.io/6k48m; including full study protocol). The study was conducted entirely in German.

Participants and recruitment

Participants were recruited primarily online, e.g. via social media posts and paid adverts, with adverts indicating that the study involved an online training program that could help with depressed or low mood (see online Supplementary Methods for details). Adverts directed participants to the study website, where they could provide informed consent and register for the study.

Inclusion criteria were: Aged ≥ 18; scoring ≥ 6 on the Quick Inventory of Depressive Symptomatology (QIDS; Rush et al., Reference Rush, Trivedi, Ibrahim, Carmody, Arnow, Klein and Keller2003), indicating at least mild levels of depression symptomsFootnote *Footnote 1; fluent German; willing and able to complete all study procedures (including having a suitable device/internet access); and interested in monitoring mood over the study time-period (one month). The depression criterion was assessed during the baseline assessment; all others were self-verified by the participant via the online consent form. There were no exclusion criteria. No financial or other incentives were offered for participation.

The study was conducted entirely online. Study procedures (assessments, interventions, participant tracking) were implemented via a web-based platform and were entirely automated and unguided, with contact from researchers only in response to queries from the participants or to provide technical information (e.g. website unavailability).

Interventions

Initial control arm: monitoring

The control arm at study initiation comprised weekly completion of symptom questionnaires (see below for details). This was chosen as the initial control arm primarily to increase the likelihood of another arm reaching BFsuccess, thus enabling us to fulfill our aim of implementing replacement of the control arm. To reduce attrition, once participants had completed the post-intervention assessment they could try out one of the other intervention arms.

Intervention arms: imagery cognitive bias modification (imagery CBM)

General overview. All intervention arms were variants of imagery CBM, adapted from previous experimental (e.g. Holmes, Lang, and Shah, Reference Holmes, Lang and Shah2009) and clinical (e.g. Blackwell et al., Reference Blackwell, Browning, Mathews, Pictet, Welch, Davies and Holmes2015; Westermann et al., Reference Westermann, Woud, Cwik, Graz, Nyhuis, Margraf and Blackwell2021) studies. The interventions comprised a series of training sessions (e.g. 5–20 min each, depending on the arm). Training stimuli were brief audio recordings of everyday scenarios, which always started ambiguous but then resolved positively. Participants were instructed to listen to and imagine themselves in the scenarios as they unfolded; via repeated practice imagining positive outcomes for ambiguous scenarios during the training sessions, imagery CBM aims to instill a bias to automatically imagine positive outcomes for ambiguous situations in daily life. Participants also completed weekly questionnaires, as in the monitoring arm. The different intervention variants are here designated version 1 (v1), version 2 (v2) etc. Fuller descriptions are provided in the online Supplementary Methods.

CBMv1. CBMv1 started with an initial introductory session comprising an extended introduction to mental imagery followed by 20 training scenarios (as per Westermann et al., Reference Westermann, Woud, Cwik, Graz, Nyhuis, Margraf and Blackwell2021). The first two training weeks then included four sessions with 40 scenarios each, and the final two weeks included two sessions of 40 scenarios.

CBMv2. CBMv2 had an identical schedule to CBMv1, but a more extended rationale for the training was provided, as well as further instructions and suggestions to recall and rehearse the scenarios in daily life, for example when encountering a similar situation, with the aim of enhancing training transfer (see Blackwell, Reference Blackwell2020; Blackwell and Holmes, Reference Blackwell and Holmes2017).

CBMv3. CBMv3 tested a schedule of more frequent, briefer training sessions. Following the introductory session, on five days of each training week there were two brief training sessions scheduled for completion (15 scenarios, approx. 5 min). It was planned that if CBMv2 had a higher Bayes Factor v. control than CBMv1 when CBMv3 was introduced, the additional instructions from CBMv2 would also be used for CBMv3.

CBMv4. The specifications of CBMv4 were decided upon during the trial, based on data and feedback from participants completing CBMv1 and CBMv2, in particular the higher than expected attrition rates (see Fig. 1 and Table 1). Instead of implementing our original plan for a fourth CBM arm, we designed CBMv4 to increase adherence and acceptability, by including shorter sessions (32 instead of 40 scenarios per session, only 10 in the introductory session), fewer sessions in the first training weeks (2 in the first week, 3 in subsequent weeks), and more engaging task instructions (building on those used in CBMv2) including encouragement to keep training even if participants felt they were not yet benefitting.

Fig. 1. Flow of participants through the study. Note that not all trial arms were included simultaneously in the trial (see Fig. 2).

Table 1. Final outcomes for each training arm v. the relevant comparison arm, including all participants randomized

Note: Comparisons between arms include only participants who were concurrently randomized (i.e. who could have been randomized to either arm); hence sample sizes, % outcome data provided and model estimates vary depending on which arms are being compared. Estimated means, CIs, effect size v. comparison arm (d), and Bayes Factor v. comparison arm (BF) derived from the constrained longitudinal data analysis model. DARS, Dimensional Anhedonia Rating Scale. Higher scores on the DARS indicate lower levels of anhedonia. CBM v1/2/3/4, Cognitive Bias Modification version 1/2/3/4.

Measures

Primary outcome

The primary outcome was anhedonia at post-intervention (controlling for baseline scores), measured via the total score on the Dimensional Anhedonia Rating Scale (DARS; Rizvi et al., Reference Rizvi, Quilty, Sproule, Cyriac, Bagby and Kennedy2015; Wellan, Daniels, and Walter, Reference Wellan, Daniels and Walter2021). Higher scores indicate lower levels of anhedonia.

Secondary outcomes

The QIDS (Roniger, Späth, Schweiger, & Klein, Reference Roniger, Späth, Schweiger and Klein2015; Rush et al., Reference Rush, Trivedi, Ibrahim, Carmody, Arnow, Klein and Keller2003), GAD-7 (Lowe et al., Reference Lowe, Decker, Muller, Brahler, Schellberg, Herzog and Herzberg2008; Spitzer, Kroenke, Williams, & Lowe, Reference Spitzer, Kroenke, Williams and Lowe2006), and Positive Mental Health Scale (PMH; Lukat, Margraf, Lutz, van der Veld, and Becker, Reference Lukat, Margraf, Lutz, van der Veld and Becker2016) were administered as weekly measures of depression, anxiety, and positive mental health respectively. The Ambiguous Scenarios Test for Depression (AST; Rohrbacher and Reinecke, Reference Rohrbacher and Reinecke2014) and Prospective Imagery Test (PIT; Morina, Deeprose, Pusowski, Schmid, and Holmes, Reference Morina, Deeprose, Pusowski, Schmid and Holmes2011) were administered at baseline and post-intervention as measures of putative mechanisms (interpretation bias and vividness of prospective imagery). The short (20-item) form of the Negative Effects Questionnaire (NEQ; Rozental et al., Reference Rozental, Kottorp, Forsström, Månsson, Boettcher, Andersson and Carlbring2019) was administered at post-intervention to assess self-reported negative effects (see online Supplementary Methods for further information including details of internal consistency).

Other measures

The Credibility/Expectancy Questionnaire (CEQ; Devilly and Borkovec, Reference Devilly and Borkovec2000; Riecke, Holzapfel, Rief, and Glombiewski, Reference Riecke, Holzapfel, Rief and Glombiewski2013) was administered at baseline to measure credibility of the intervention rationale and expectancy for symptom improvement. A feedback questionnaire was administered at the end of the study (see online Supplementary Methods for more details).

Adverse events

The following Adverse Events were pre-specified: reliable deterioration on the QIDS or GAD-7 from pre- to post-intervention, indicated by an increase greater than a reliable change index calculated from normative data (see online Supplementary Methods); other potential adverse events (e.g. suicidal ideation/acts, self-harm) communicated to the researchers by messages sent by participants during the trial, or via the NEQ.

Procedure

After registering and logging in to the study platform, participants provided demographic and background clinical information, then completed a sound test to check device compatibility. Next they completed the DARS, QIDS, GAD-7, PMH, AST, SUIS, and PIT, with the baseline QIDS used to determine eligibility. After confirming readiness to start the next phase, participants were randomized to condition (see below). Participants then read a brief description of their assigned condition and completed the CEQ.

Over the next four weeks, participants were scheduled to complete a set of questionnaires (QIDS, GAD-7, PMH) each week, as well as training sessions according to their allocated condition. Automated emails were sent to remind participants about scheduled training sessions or questionnaires, and participants could see a graphical display of their scores within the online system. At four weeks post-baseline, participants were requested to complete the final set of questionnaires, the DARS, QIDS, GAD-7, PMH, AST, and PIT, followed by the NEQ and feedback questionnaire. They then received debriefing information and were given the option of starting a new training schedule of their choice. Participants had one week to complete the final questionnaires, after which they were no longer available and counted as missing data.

Randomization, allocation concealment, and blinding

Randomization was stratified by gender (female v. not female) and baseline score on the QIDS (≤9 v. >9, i.e. mild v. moderate-to-severe). Randomization was in blocks equal to the number of arms in the trial at the time (e.g. block lengths of 3 when there were 3 arms in the trial). Each time a trial arm was added or removed a new set of randomization sequences were generated, such that participants were only randomized between those arms currently in the trial at that timepoint (see online Supplementary Methods for further details).

Participants were not blind as to whether they were receiving an intervention or monitoring only, but otherwise were blind to the nature of the different versions of the intervention being tested. The research team were not blind to participant allocation, although only had minimal contact with participants (e.g. responding to messages). One researcher (SEB) monitored performance of the arms as data accumulated, via the sequential analyses, and shared outcome data with other researchers (FDS, MLW, AW, JM) for the purpose of decision-making. These researchers were therefore not blind to outcomes as the trial progressed.

Statistical analyses

All analyses were conducted in RStudio (RStudio, Inc., 2016), running R version 4.1.2 for the analyses in this paper (R Development CoreTeam, 2011).

Sequential analyses, repeated each time new outcome data was provided, were based on computation of directional Bayes factors (BFs) comparing each trial arm individually to the control arm for the primary outcome (DARS at post-intervention, controlling for pre-intervention scores). BFs (e.g. Wagenmakers, Reference Wagenmakers2007) are essentially the probability of the observed data if one hypothesis were true (in this case, the alternative hypothesis that a trial arm was superior to the control arm in reducing anhedonia) divided by the probability of the observed data if another hypothesis were true (in this case, the null hypothesis that a trial arm was not superior to the control arm in reducing anhedonia). Sequential analyses were conducted as intention-to-treat (including all participants randomized to condition). To handle missing data, analyses were conducted via constrained longitudinal data analysis (cLDA; Coffman, Edelman, and Woolson, Reference Coffman, Edelman and Woolson2016) using nlme (Pinheiro, Bates, DebRoy, Sarkar, & R Core Team, Reference Pinheiro, Bates, DebRoy and Sarkar2019), and an approximate BF calculated via the t-statistic for the Time × Group effect using BayesFactor (Morey & Rouder, Reference Morey and Rouder2015) with a directional default Cauchy prior (rscale parameter = √2/2).

As elaborated by Blackwell et al. (Reference Blackwell, Woud, Margraf and Schönbrodt2019), how a leapfrog trial proceeds is determined by a pre-specified set of analysis parameters: A minimal sample size per arm, N min, at which sequential analyses are initiated; a maximum sample size, N max, at which an arm is removed from the trial (this prevents a trial continuing indefinitely); a BF threshold for failure, BFfail, reaching of which results in an arm being removed from the trial; and a BF threshold for success, BFsuccess, reaching of which results in an arm becoming the new control arm, with the current control arm being dropped. The specific parameters chosen for any particular trial can be determined by simulation to achieve the desired false-positive and false-negative error rates (at a pairwise or whole trial level) in the context of feasible sample sizes. For this specific trial, as the primary aim was to demonstrate the leapfrog trial features, rather than to draw confident conclusions about the efficacy of the intervention being used as an exemplar, the parameters were chosen to provide lenient thresholds, therefore facilitating this demonstration without requiring large sample sizes. The parameters for this trial were pre-specified as follows: N min = 12; N max = 40; BFfail = 1/3; and BFsuccess = 3. Simulations with up to 25% missing data suggested that these parameters provided a pairwise false-positive rate of 9% and power of ~25%, ~55%, and ~85% respectively for small (d = 0.2), medium (d = 0.5) and large (d = 0.8) between-group effect sizes (see the protocol and associated simulation scripts at https://osf.io/8mxda/ for full details). When an arm was dropped from the trial, any participants still completing the trial in that arm finished their intervention as usual. Their data did not inform further sequential analyses but was used in calculating the final BF and effect sizes v. the relevant control arm.

Effect sizes (approximate Cohen's d, including an adjustment for sample size) and estimated means for the primary outcome, as well as their 95% confidence intervals (CIs), were derived from the cLDA model using the packages effectsize (Ben-Shachar, Lüdecke, & Makowski, Reference Ben-Shachar, Lüdecke and Makowski2020) and emmeans (Lenth, Reference Lenth2022). Data and analysis scripts can be found at https://osf.io/8mxda/.

Results

A total of 188 participants were randomized between 14 April 2021 and 12 December 2021, with the last outcome data provided on 12 January 2022. Participant characteristics are shown in Table 2, with more detailed information in online Supplementary Table S1 in the Supplementary Results. Figure 1 shows the flow of participants through the study. While adherence rates were high in the initial control arm, drop-out was higher across the training arms (see Fig. 1 and Table 1).

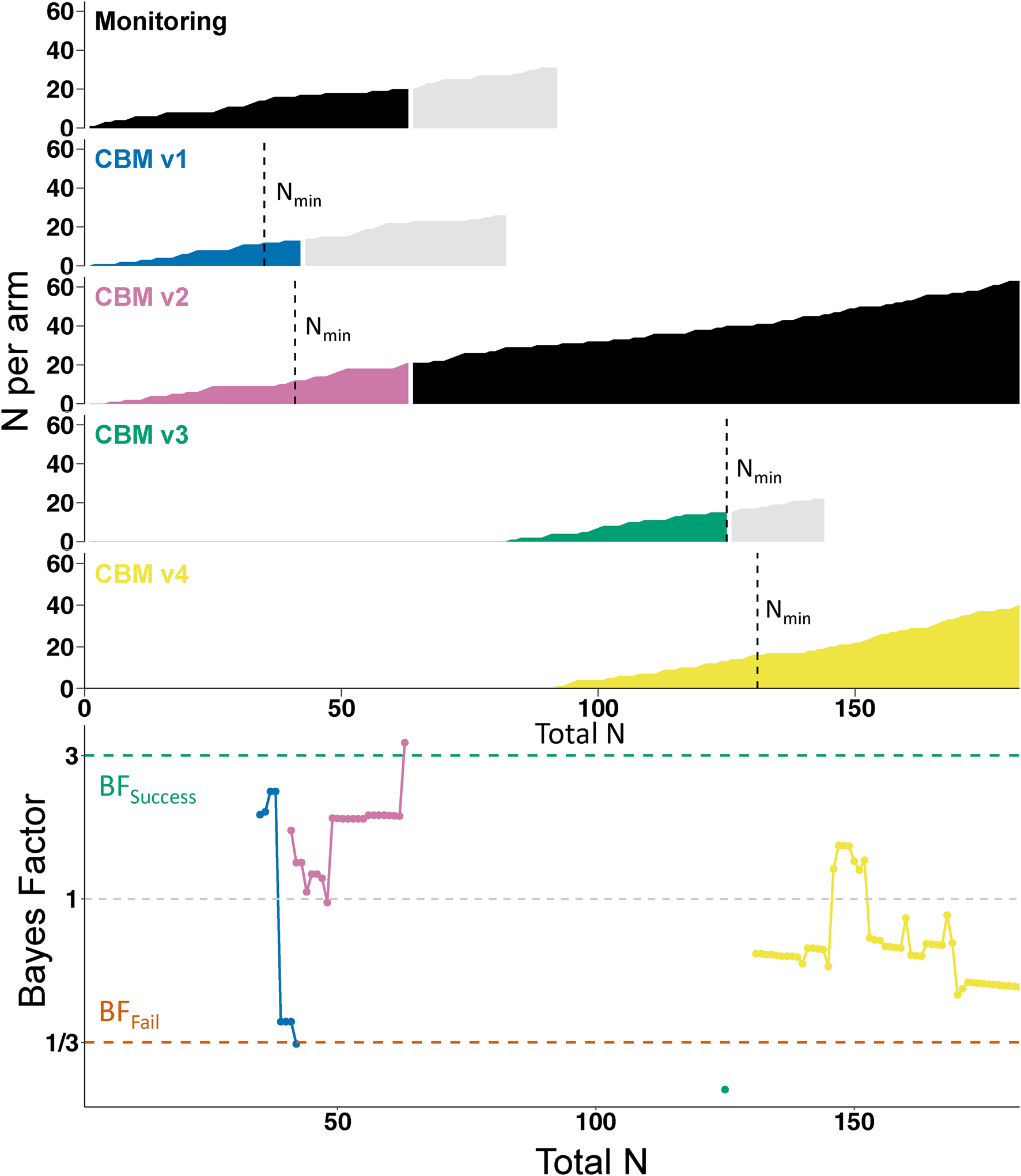

Fig. 2. Course of the trial. The top part of the figure shows the accumulation of participant data in each individual arm, plotted against the total number of participants who had completed the trial. Vertical dashed lines indicate when the arm reached the minimum sample size (Nmin) for starting sequential analyses. Black shading indicates the control condition (i.e. initially Monitoring, later CBM v2). Light gray shading indicates participants who had been randomized to an arm but had not yet completed the trial when their allocated arm was dropped; they completed the trial as normal but their data was not used in the sequential analyses. However, their data did inform the final analyses as presented in Table 1. The lower part of the figure shows how the Bayes Factors developed in each arm (identifiable by color).

Table 2. Participant characteristics at baseline across trial arms, including all participants randomized

CBM v1/2/3/4, Cognitive Bias Modification version 1/2/3/4; DARS, Dimensional Anhedonia Rating Scale; QIDS, Quick Inventory of Depressive Symptomatology; PMH, Positive Mental Health Scale; CEQ, Credibility/Expectancy Scale.

Note: A total of 32 participants were randomized into the Monitoring arm, but one withdrew their data from the trial, hence data for only 31 participants are presented here.

Sequential analyses (primary outcome: anhedonia)

Sequential Bayesian analyses, based on the primary outcome of anhedonia (DARS) at post-intervention, started on 27 May 2021 when both monitoring and CBMv1 hit N min. Figure 2 shows the progress of the trial and sequential BFs, which were calculated as the trial proceeded and used to make decisions (e.g. removal of an arm) while the trial was ongoing. CBMv1 hit BFfail (BF = 0.3297) once 13 participants had provided outcome data (v. 17 in the control condition); CBMv1 was therefore dropped from the trial and CBMv3 was introduced as a new arm. To increase the trial's efficiency, CBMv4 was introduced shortly afterwardsFootnote 2. CBMv2 hit BFsuccess (BF = 3.31) after outcome data had been provided for 21 participants (v. 20 in the control condition) and therefore became the new control condition, with monitoring (the initial control condition) dropped from the trial. CBMv3 hit BFfail (BF = 0.23) after 15 participants (v. 12 participants in CBMv2) and was therefore dropped from the trial. The trial ended with CBMv4 hitting N max (N = 40, BF = 0.51), at which point CBMv2 was the remaining armFootnote 3.

Final outcomes

Table 1 shows final outcomes for each arm v. their relevant control, as calculated at the end of the trial including all participants randomized. This indicates that the superiority of CBMv2 over monitoring in reducing anhedonia corresponded to a medium effect size (d = 0.55), and a BF providing strong evidence of superiority (BF = 11.676).

Secondary outcomes

Details of secondary outcomes are provided in the online Supplementary Results.

Adverse events

No Serious Adverse Events were recorded. We recorded the following Adverse Events: Two participants showed reliable deterioration on the QIDS (one in monitoring, one in CBMv1), and four participants showed reliable deterioration on the GAD7 (two in monitoring, one in CBMv2, one in CBMv3). For further details of negative effects (NEQ) see the online Supplementary Results.

Discussion

This study provides a successful first demonstration of a ‘leapfrog’ trial applied to the development of a psychological treatment, in this case an internet-delivered cognitive training intervention targeting anhedonia, and illustrates its central design features: sequential Bayesian analyses, dropping or promotion of a trial arm on the basis of these analyses, and introduction of new arms into an ongoing trial. These design features could all be successfully implemented, indicating that at least in the context of simple internet-delivered interventions the leapfrog design is a feasible option for treatment development and optimization.

How can we interpret the results of such a trial and what would be the next steps? We started with an automated, unguided web-based self-help intervention that had never previously been tested in this format, and tested four possible versions of how to implement it; we ended with one ‘winning’ version that was found to be better at reducing anhedonia than an initial control condition, and whose effectiveness was not surpassed by any other version tested. One option would then be to take this winning arm further forwards in the research process, for example testing it in a definitive (superiority or non-inferiority) RCT against established similar low-intensity unguided web-based interventions to see how it compared to these in reducing anhedonia; if pre-planned, such as step could in fact be added seamlessly to the end of the initial leapfrog trial (Blackwell et al., Reference Blackwell, Woud, Margraf and Schönbrodt2019). Alternatively, if we felt that when taking into account all outcome measures (primary and secondary) another arm appeared potentially superior (e.g. CBMv4; see online Supplementary Fig. S2 in the Supplementary Results) we could of course choose this as the arm to take forwards. We could have also decided to continue testing new variants until a certain pre-set threshold in improvement in anhedonia had been achieved (e.g. reflecting a specific level of clinically meaningful improvement; see Table 3), rather than stopping the trial when we did, which was based on the pragmatic rationale of having fulfilled our aim of demonstrating the leapfrog trial features. It is important to note that the current trial was conducted with a lenient set of analysis parameters in order to demonstrate the core leapfrog features; drawing confident conclusions about the efficacy of the interventions tested would require a stricter set of analysis parameters (e.g. higher N min, N max, and BFsuccess values, and a lower BFfail) with lower associated error rates (see Blackwell et al., Reference Blackwell, Woud, Margraf and Schönbrodt2019, for examples). However, the principles would remain the same, such that the trial would provide a streamlined version of what would normally be a much longer and more drawn-out treatment development process.

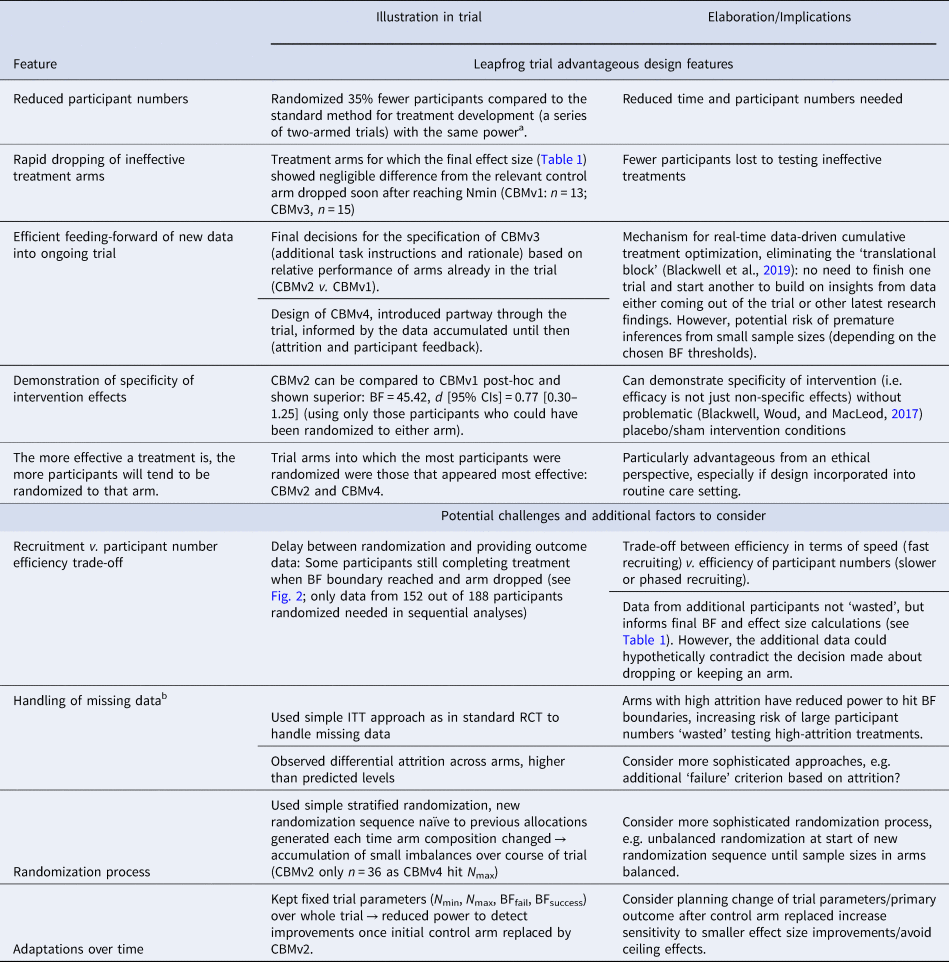

Table 3. Selected leapfrog trial advantageous design features and potential challenges illustrated in the current trial

a 55% power to find d = 0.5 at p < 0.09 with 25% missing data requires 36 participants per arm. For a series of four 2-arm trials this means 36 × 2 × 4 = 288 participants in total. See online Supplementary Results for full details.

b See Blackwell et al. (Reference Blackwell, Woud, Margraf and Schönbrodt2019) for further discussion of missing data.

The current trial illustrates some of the advantageous features of the leapfrog design for treatment development, as well as some additional challenges and factors researchers may wish to consider in optimizing its application in their own research, summarized in Table 3. However, there are limitations to what we can infer about the leapfrog design from the current trial. For example, the design was applied to a simple internet-based intervention, and there will be additional practical issues to consider in other contexts, such as face-to-face therapy delivery (Blackwell et al., Reference Blackwell, Woud, Margraf and Schönbrodt2019). We also used relatively loose inclusion criteria to facilitate recruitment as our main aim was demonstrating the trial design and its implementation, but for full-scale trials it may be preferable to achieve a more precisely-specified sample. Further, the trial was designed and conducted by developers of the leapfrog design, which introduces a potential bias in assessing its success. Finally, as the trial was conducted on small scale over a limited time frame, additional planning considerations may be required if it is used over longer periods of time, for example as a ‘perpetual trial’ (e.g. Hobbs et al., Reference Hobbs, Chen and Lee2018), such as adjustments to the analysis parameters or primary outcome (see Table 3).

In the current study we demonstrated one specific application of the leapfrog design: a treatment development process aiming to find an efficacious version of a new intervention via testing several different variants. However, via application of the basic principles as illustrated here the design could be applied in many ways across the translational spectrum, from pre-clinical work (e.g. developing optimal versions of experimental paradigms or measures) to large clinical trials testing treatment selection algorithms; the principles could also be extended to non-inferiority or equivalence testing and more complex treatment outcomes such as rate of change or cost-effectiveness (see Blackwell et al., Reference Blackwell, Woud, Margraf and Schönbrodt2019, for further discussion). Despite the advantages provided by the design, there are caveats to its use, for example that sequential analyses can lead to biased effect size estimates (Schönbrodt et al., Reference Schönbrodt, Wagenmakers, Zehetleitner and Perugini2017). Further, a researcher needs to be extremely cautious about making simple indirect comparisons between arms that were not in the trial concurrently, as interpretation may be confounded by e.g. seasonal or history effects (see Blackwell et al., Reference Blackwell, Woud, Margraf and Schönbrodt2019, for further discussion; and Marschner and Schou, Reference Marschner and Schou2022, for one potential approach to such comparisons). Additionally, although the use of Bayes factors, which are relatively straightforward to compute, makes the design simpler to implement than approaches using more complex analytic approaches such as Bayesian posterior predictive probability (Hobbs et al., Reference Hobbs, Chen and Lee2018) or continuous adjustment of randomization weights (Wason & Trippa, Reference Wason and Trippa2014), there is still the need for researchers to familiarize themselves with what might be a new statistical approach before they might feel comfortable to use the design. Similar designs may also be possible using null hypothesis significance testing (i.e. p value based) approaches (see Hills and Burnett, Reference Hills and Burnett2011, for one example), if ad-hoc adjustments to the p value thresholds are incorporated to control for the impact of repeated statistical testing.

The leapfrog design was conceived and developed in the context of a changing landscape for psychological treatment development: For many disorders the most crucial questions are no longer about whether we can develop an efficacious psychological intervention at all, but rather how we can address the need to increase efficacy and accessibility still further, for example via improved targeting of refractory symptoms, tailoring of treatments to individuals, reducing relapse, and innovating in our basic conceptions of psychological interventions and methods of treatment delivery (Blackwell & Heidenreich, Reference Blackwell and Heidenreich2021; Cuijpers, Reference Cuijpers2017; Holmes et al., Reference Holmes, Ghaderi, Harmer, Ramchandani, Cuijpers, Morrison and Craske2018). Addressing these kinds of questions requires moving beyond the standard RCTs that have been the main driver of efficacy research to date, and the last few years have accordingly seen increasing application of more sophisticated trial methodologies (e.g. Collins, Murphy, and Strecher, Reference Collins, Murphy and Strecher2007; Kappelmann, Müller-Myhsok, and Kopf-Beck, Reference Kappelmann, Müller-Myhsok and Kopf-Beck2021; Nelson et al., Reference Nelson, Amminger, Yuen, Wallis, Kerr, Dixon and McGorry2018; Watkins et al., Reference Watkins, Newbold, Tester-Jones, Javaid, Cadman, Collins and Mostazir2016) in psychological treatment research. Within this context, the leapfrog design offers a means to streamline treatment development and optimization, enabling more rapid and resource-efficient progress. The core features of the leapfrog design provide a simple flexible framework that can be built upon to address a wide range of research questions, from simple head-to-head comparisons of treatment variants to more sophisticated questions about sequencing, tailoring, or targeting of interventions (Blackwell et al., Reference Blackwell, Woud, Margraf and Schönbrodt2019). Building on this foundation provides the opportunity to move into a new phase of psychological treatment research, one in which the pace of treatment development can start to match the need for improved outcomes in mental health.

Supplementary material

The supplementary material for this article can be found at https://doi.org/10.1017/S0033291722003294.

Acknowledgements

We would like to thank Amelie Requardt and Julia Grönwald for their help in preparing for the study and recruitment, Felix Würtz for his help in preparing study materials, and Christian Leson for setting up and maintaining the study server.

Financial support

Marcella L. Woud is funded by the Deutsche Forschungsgemeinschaft (DFG) via the Emmy Noether Programme (WO 2018/3-1), a DFG research grant (WO 2018/2-1), and the SFB 1280 ‘Extinction Learning’. The funding body had no role in the preparation of the manuscript.

Conflict of interest

None.

Ethical standards

The authors assert that all procedures contributing to this work comply with the ethical standards of the relevant national and institutional committees on human experimentation and with the Helsinki Declaration of 1975, as revised in 2008.