In 2013, American Sign Language (ASL) was the third most frequently taught second language (L2) in US schools of higher education (Goldberg, Looney, & Lusin, 2015). Despite this, there exists a paucity of research on the cognitive processes involved in L2 sign language learning by hearing individuals (or deaf individuals for that matter; for the state of the field, see Pichler & Koulidobrova, 2016). Given the popularity of ASL as an L2, the practical importance of investigating L2 sign learning is evident; however, this kind of research is also important for theory development, as researching the processes involved in learning an L2 in a second modality can provide insight into those processes that are universal to all languages and those that are unique to a particular language modality.

Because the journey that is learning an L2 often begins with lexical learning, we chose to begin our own line of investigations by identifying predictors of sign learning in hearing nonsigners. As a component of language acquisition, lexical learning is related to grammar acquisition (for a review, see Bates & Goodman, 1997), L2 class performance (Cooper, 1964; Krug, Shafer, Dardick, Magalis, & Parenté, 2002), and language learning aptitude (Cooper, 1964; Li, 2015). Moreover, there is a large body of research on word learning in spoken languages that can be drawn upon.

One factor that figures prominently in the prediction of word learning is phonological short-term memory (STM). In the multicomponent model of working memory, phonological STM is served by the phonological loop, a system composed of a temporary phonological store and an articulatory rehearsal mechanism that aids in maintaining phonological representations (Baddeley, 2012). Because the phonological store deals with abstract phonological information, it is further theorized as amodal, that is, capable of maintaining phonological information from any language, whether spoken or signed (Baddeley, 2015; Baddeley, Gathercole, & Papagno, 1998). However, the literature also suggests that modality-specific processes play a significant role. Below, we consider this evidence and provide our own interpretation, which guided the research described herein.

PHONOLOGICAL STM AND LEXICAL LEARNING (IN SPOKEN LANGUAGES)

In spoken language research, phonological STM is typically operationalized as the number of phonological items (e.g., digits, letters, words, or nonsense syllables) that one can recall after a brief retention interval. Despite the simplicity of these measures (Marshalek, Lohman, & Snow, 1983), a number of studies have found that they serve as predictors of native and L2 word learning in children (Gathercole & Baddeley, 1989; Gathercole, Hitch, Service, & Martin, 1997; Gathercole, Willis, Baddeley, & Emslie, 1994; Masoura & Gathercole, 1999, 2005; Masoura, Gathercole, & Bablekou, 2004) as well as adults (Atkins & Baddeley, 1998; Gupta, 2003; Hummel & French, 2016; Martin & Ellis, 2012; O'Brien, Segalowitz, Collentine, & Freed, 2006; O'Brien, Segalowitz, Freed, & Collentine, 2007).

Gathercole (2006) hypothesized that the relationship between phonological STM and word learning exists because both rely on similar processes, namely, auditory, phonological, and speech-motor processes. She cautioned, however, that the relationship is strongest when items in the memory task consist of unfamiliar phonological structures such as pseudowords or words from an unknown L2; the more unfamiliar the phonologic material, the less long-term memory, in the form of lexical and phonetic knowledge, can mediate the relationship between phonological STM and word learning (Gathercole, 1995; Gathercole, Pickering, Hall, & Peaker, 2001; Hulme, Maughan, & Brown, 1991; Thorn & Frankish, 2005). Accordingly, nonword repetition is generally viewed as a better predictor of word learning than digit span (Baddeley et al., 1998; Gathercole et al., 1994), and the relationship between L2 word learning and phonological STM tasks employing L2 words as stimuli attenuates as individuals become proficient in the L2 (Masoura & Gathercole, 2005).

PERCEPTUAL-MOTOR PROCESSES IN PHONOLOGICAL STM AND LEXICAL LEARNING

The caveat that the relationship between phonological STM and word learning is attenuated to the degree that linguistic knowledge can be utilized suggests that phonological processing does not drive the relationship between phonological STM and word learning but rather that it acts as a nuisance variable. Converging evidence from behavioral and neuroimaging studies suggests that beyond general mnemonic and attentional processes, the relationship between phonological STM and word learning is due to common perceptual-motor processes.

PERCEPTUAL-MOTOR PROCESSES IN PHONOLOGICAL STM AND WORD LEARNING

In hearing individuals, phonological STM is disrupted by sound similarity (Baddeley, 1966; Conrad & Hull, 1964), item length (Baddeley, Thomson, & Buchanan, 1975), articulatory suppression (Baddeley, 1986), and irrelevant speech (Colle & Welsh, 1976) and sounds (such as tones and instrumental music; Jones & Macken, 1993; Salamé & Baddeley, 1989), that is, by perceptual and motor manipulations (for similar arguments, see Jones, Hughes, & Macken, 2006; Wilson, 2001). Briefly, the similarity effect occurs when to-be-remembered stimuli sound similar (sets of similar sounding items [e.g., B, E, G, P, T] are not remembered as well as dissimilar items [e.g., D, X, I, L, Q]), suggesting that linguistic material is encoded in such a way that information about the surface form is retained. The length effect is assumed to occur because the stimuli are being rehearsed (overtly or covertly); items that take longer to articulate take more time to rehearse and therefore cannot be refreshed before they decay from a memory buffer. Articulatory suppression, when one is asked to repeat a short word or syllable during encoding, prevents articulatory rehearsal, and as a result, performance is lower compared to a nonsuppressed condition. Finally, irrelevant speech and nonspeech sounds affect performance on phonological STM tasks, possibly because, as Neath (2000) theorizes, some features of the irrelevant sounds are encoded during a STM task and serve as cues during recall; these cues are erroneous and therefore disrupt performance.

These same perceptual and motor manipulations have also been found to disrupt word learning, but only when it involves stimuli sufficiently different from the first language (L1; Papagno, Valentine, & Baddeley, 1991; Papagno & Vallar, 1992). What this suggests is that word learning in a language that is sufficiently different from the L1 heavily relies on perceptual-motor processes, and hence perceptual-motor manipulations negatively affect learning. When to-be-learned stimuli are known or derived from a language that is similar to the L1, then individuals can make use of lexical knowledge and associative-semantic processes, which are not affected by perceptual-motor manipulations.

PERCEPTUAL-MOTOR PROCESSES IN PHONOLOGICAL STM AND SIGN LEARNING

In the realm of signed languages, a series of studies by Wilson and colleagues revealed that STM for signs is also affected by similarity, length, suppression, and irrelevant stimuli (Wilson & Emmorey, 1997, 1998, 2003; Wilson & Fox, 2007). While the effects were similar, the means of eliciting them were different.

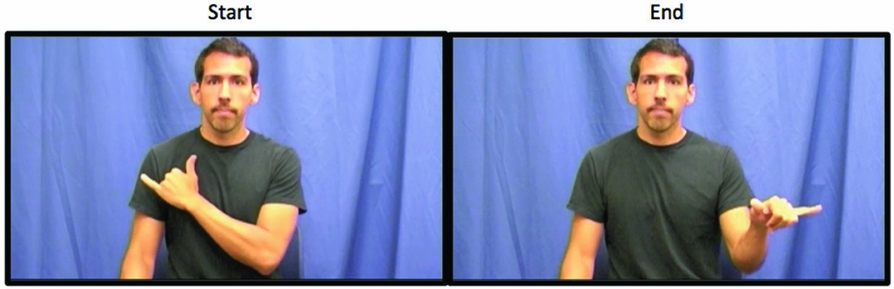

Signed languages, as visuospatial-manual languages, are composed of the simultaneous presentation of the major phonological parameters of location (i.e., place of articulation), handshape, orientation, and movement (see Figure 1; Brentari, 1998; Klima & Bellugi, 1979). Accordingly, rather than elicit the similarity effect by presenting similar sounding stimuli, Wilson and Emmorey (1997) presented visually similar signs to deaf individuals. Analogously, the suppression effect was evoked by asking deaf participants to produce a pseudosign during encoding (Wilson & Emmorey, 1997); the length effect by using signs with repetitive or relatively long movements (Wilson & Emmorey, 1998); and the irrelevant stimuli effect by displaying irrelevant pseudosigns and unnamable rotating figures (Wilson & Emmorey, 2003). Finally, Wilson and Fox (2007) showed that the similarity, suppression, and length effects could be elicited in hearing nonsigners tasked with remembering pseudosigns.

Figure 1. Example of a pseudosign depicting the major phonological parameters of handshape, location, movement, and orientation. The sign begins with the right, dominant hand holding a “Y” handshape, oriented with the palm facing the body, and in contact with the chest. Next, the dominant hand arcs away from the body and toward the right while simultaneously rotating the hand so that the palm faces the ground. The pseudosign ends in front of the model, in neutral space.

With regard to sign learning, research is scant; however, two studies are germane. First, Williams and Newman (2016a) investigated the effect of perceptual similarity. Analogous to the similarity effect in word learning, visually distinct signs were learned more rapidly than visually similar signs. Second, Williams, Darcy, and Newman (2016a) investigated the role that, among other factors, a phonological STM task, digit span, would play in the prediction of ASL vocabulary growth. A multiple linear regression analysis with ASL vocabulary growth as the outcome variable revealed that neither forward nor backward digit span was predictive. Williams et al. (2016a) theorized that digit span was not predictive because in nonsigners, this task would not assess critical modality-specific, that is, perceptual-motor, processes. They cautioned, however, that due to the small sample size (n = 25), their results might not generalize.

PERCEPTUAL-MOTOR PROCESSES: EVIDENCE FROM NEUROIMAGING

Neuroimaging studies corroborate the behavioral studies reported above (Bavelier et al., 2008; Campbell, MacSweeney, & Waters, 2008; Pa, Wilson, Pickell, Bellugi, & Hickok, 2008; Rönnberg, Rudner, & Ingvar, 2004; Rudner, 2015; Rudner, Andin, & Rönnberg, 2009; Williams, Darcy, & Newman, 2015, 2016b). In general, researchers find differences in modality-specific areas, such that hearing individuals show greater activation of areas associated with auditory processing while deaf individuals exhibit greater activation of visual and motor areas. Both deaf and hearing individuals, however, show similarities in areas associated with language processing, such as the inferior temporal gyrus and posterior superior temporal gyrus.

The finding that deaf and hearing individuals show similar patterns of activation in language processing areas provides evidence of amodal language processing and possibly of an amodal phonological loop (Baddeley, 2012; Vallar, 2006); however, the results of two longitudinal neuroimaging studies suggest that linguistic processing is only possible after a significant amount of L2 learning has occurred (Newman-Norlund, Frey, Petitto, & Grafton, 2006; Williams et al., 2016b). Across the two studies, the evidence indicated that initially, individuals processed L2 stimuli in a nonlinguistic fashion, with significant activation located primarily in respective sensorimotor areas; as learning progressed, however, there was increased left lateralization and activation of classic language processing areas, namely, the inferior temporal gyrus and posterior superior temporal gyrus. This transition from nonlinguistic to linguistic processing occurred in hearing individuals learning either a spoken or a signed L2, though the transition did occur more rapidly in spoken L2 learning (Newman-Norlund et al., 2006).

The slower transition from nonlinguistic to linguistic processing observed in signed L2 learning may have occurred because, as Williams (2017) posits, hearing L2 sign learners face an additional hurdle in transitioning from nonlinguistic to linguistic processing, in that they first must “acquire the unique aspects of their new visual language modality before amodal linguistic representations can be accurately acquired” (p. v). In contrast, a hearing individual already has finely tuned auditory-perceptual and speech-motor skills to aid them in their learning of a spoken L2 (see Rosen, 2004). In sum, initial L2 learning, especially in an L2 that is significantly different from the L1, appears to rely heavily on modality-specific perceptual-motor processes.

Taken together, behavioral and neuroimaging studies indicate that individuals confronted with either a sign or a spoken language engage, when possible, similar linguistic-semantic processes but differ in the perceptual and motor processes employed: sign languages make use of visual perception and sign-motor processes while spoken languages make use of auditory perception and speech-motor processes. When it is not possible to rely on linguistic knowledge, such as during a phonological STM task where stimuli are drawn from an unknown language that is quite different from the L1, then the onus falls on modality-specific processes. Moreover, an abundance of research on word learning reveals that the same perceptual and motor manipulations that disrupt phonological STM also disrupt word learning. Thus, the relationship between phonological STM and lexical learning appears to be driven by common mnemonic, attentional, and critically, perceptual-motor processes.

THE PRESENT STUDY

The primary objective of the present study was to identify predictors of sign learning in hearing nonsigners. Predictors were selected based on the theory that the relationship between phonological STM and lexical learning is due in part to common perceptual-motor, not phonological, processes. Thus, we hypothesized that in hearing nonsigners, STM for movements (movement STM), whether signlike (nominally phonological STM) or not, would be related to sign learning, as both movement STM and sign learning involve encoding and binding biological motion and visuospatial features such as limb configurations (Moulton & Kosslyn, 2009; Porro et al., 1996; Stankov, Seizova-Cajić, & Roberts, 2001; Vicary, Robbins, Calvo-Merino, & Stevens, 2014; Vicary & Stevens, 2014). In addition, as a subcomponent of movement STM, visuospatial STM should be related to sign learning, albeit to a lesser extent, as it shares fewer processing components with sign learning than movement STM does. In order to test these hypotheses, three movement STM and two visuospatial STM tasks varying on a number of dimensions (e.g., response format, set size, and scoring procedure) were used. By ensuring that tasks varied in a number of ways, we attempted to reduce the likelihood that any relationship found was due to superficial similarities (i.e., common method variance).

A secondary objective was to identify which of the measures best predict sign learning. We did not have predictions about specific tasks, but we hypothesized that visuospatial STM would account for variance in sign learning performance over and above movement STM. Though observing human body movements necessarily engages visuospatial processing, a number of studies have reported a dissociation between visuospatial and biological motion processing (Ding et al., 2015; Peelen & Downing, 2007; Seemüller, Fiehler, & Rösler, 2011; Urgolites & Wood, 2013a, 2013b; Zihl & Heywood, 2015). Studies investigating movement STM have found that memory for static-visual features (e.g., color and body configurations) is suppressed when biological motion processing is engaged (Ding et al., 2015; Vicary et al., 2014; Vicary & Stevens, 2014). It stands to reason that it would be difficult for one to encode and bind all of the features that distinguish one sign from another after a single exposure. In naturalistic settings, as well as in the paradigm used here (paired associate learning with multiple learning trials), however, individuals can shift their attention to different aspects as they are repeatedly exposed to target signs; movement STM tasks, by definition, do not offer this opportunity. Consequently, we expected that a more direct assessment of visuospatial STM would improve measurement accuracy and therefore account for a greater proportion of variance in sign learning than movement STM alone.

METHOD

Participants

One hundred and seven participants between the ages of 18 and 33 (M = 21.7, SD = 4.1, 55% female) were recruited from our university subject pool (55%) and surrounding area (45%), including other universities and local colleges. Participants recruited from the university subject pool were compensated with course credit; all others received up to $25. All participants were hearing, right-handed, fluent in English, had normal or corrected-to-normal vision, and were free of upper-body movement disorders. One participant reported having attended an ASL course but stated she was not fluent or currently enrolled; no other participant reported experience with ASL. We did not inquire about participants’ familiarity with fingerspelling.

Design and procedure

All tasks were administered in a single, private session lasting no more than 2 hr, including an optional break. Tasks were programmed in PsychoPy (Peirce, 2007) and presented on a MacBook Pro laptop. Two tasks required reproducing movements and were filmed using a Canon video camera mounted on a tripod so they could be scored later.

Participants completed three measures of movement STM and two visuospatial STM tasks; a pseudosign-word paired associate learning task; a questionnaire asking for demographic information and achievement test scores; and for those participants recruited from the university subject pool, a record release form to access achievement test records. Unfortunately, few participants self-reported achievement scores, and those that we were able to access were generally in the top 10th percentile, resulting in a highly restricted range of scores; as a result, achievement score data will not be reported here.

Written consent to participate in the study was always obtained at the beginning of the session, and the questionnaire and, when applicable, the record release form were always completed at the end. The remaining tasks were administered using a Latin-square design, initially ordered as: sign learning task, Corsi block tapping task, nonsign paired task, movement span, pattern span, and nonsign repetition (see below for task descriptions). One to three practice items with feedback were provided for all tasks.
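As a rough illustration of the counterbalancing described above, the following Python sketch builds a cyclic Latin square from the initial task order. The cyclic rotation scheme and the function name `latin_square_orders` are assumptions made for illustration; the text does not specify how the square was constructed or how participants were assigned to its rows.

```python
def latin_square_orders(tasks):
    """Return a cyclic Latin square: row i is the task list rotated left by i.

    Assumption: a simple cyclic square; other Latin squares are possible.
    """
    n = len(tasks)
    return [[tasks[(i + j) % n] for j in range(n)] for i in range(n)]


# Initial order as listed in the text.
initial_order = [
    "sign learning task",
    "Corsi block tapping task",
    "nonsign paired task",
    "movement span",
    "pattern span",
    "nonsign repetition",
]

orders = latin_square_orders(initial_order)
for row in orders:
    print(" -> ".join(row))
```

In such a square, every task appears exactly once in each serial position across the six orders, which is the property that makes the design useful for balancing order effects.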

Instruments

Movement STM

MOVEMENT SPAN (MOVESPAN)

The MoveSpan task (Wu & Coulson, 2014) is a movement STM task requiring free recall of manual gestures that are difficult to verbally recode and do not necessarily follow the phonotactics of any particular sign language (e.g., there is no dominant hand and a number of movements are asymmetric, disyllabic, and/or place one of the hands fully behind the back; see Brentari, 2006).

In the MoveSpan, individuals are presented with three sets at each set size, from one to five movements. After viewing a set, participants freely recall movements at their own pace by mirroring them. Raters, trained to a 0.80 intraclass correlation consistency (2,1; Shrout & Fleiss, 1979) criterion, later scored participants’ recorded responses, awarding 1 point for every movement correctly recalled and 0.5 point for a movement that deviated from the target by one criterion (see Appendix A for scoring instructions). Movements within a set were fixed; however, sets were presented randomly. MoveSpan score was calculated as the total number of points earned across all sets, and thus the maximum score was 45 points.

NONSIGN REPETITION TASK (NSRT)

The NSRT (Mann, Marshall, Mason, & Morgan, 2010) was designed to be analogous to nonword repetition. It consists of 40 pseudosigns that obey British Sign Language phonotactics but are themselves meaningless (Mann et al., 2010, p. 15).

In the NSRT, participants view video clips of pseudosigns produced by a deaf fluent British Sign Language user, one at a time, and are expected to mirror the items immediately after presentation. Requiring participants to mirror the items rather than to reverse perspective deviates from the protocol followed by Mann et al. (2010); this change was made to maintain consistency with the MoveSpan task and therefore to curtail errors due to participants confounding instructions across tasks. Because all single-handed signs appeared to be performed with the left hand by the model, mirroring these signs required participants to use their right hand.

Items were presented randomly, and participant performance was recorded and scored offline by raters trained to a 0.80 intraclass correlation consistency criterion. Scoring was dichotomous, with 1 point awarded for correctly mirrored pseudosigns and no points for reproductions that differed from the target pseudosign by one or more parameters (see Appendix B for scoring instructions). Participant scores on the NSRT were calculated by summing the total points awarded, and the maximum score was 40.

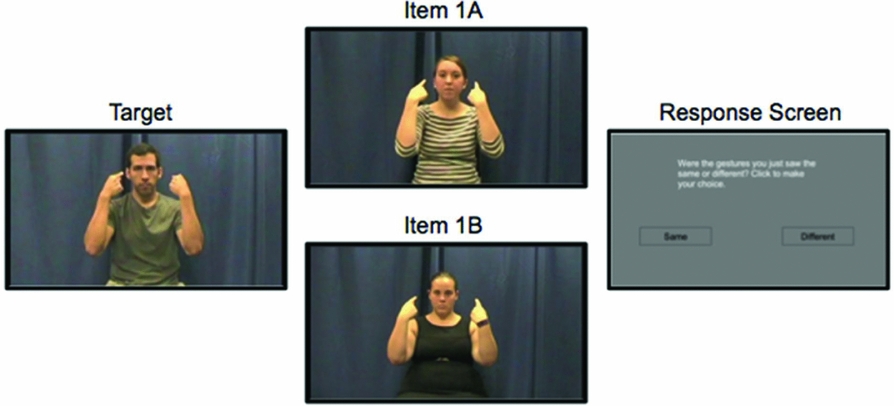

NONSIGN PAIRED TASK (NSPT; FIGURE 2)

The NSPT was designed similarly to Bochner and colleagues’ ASL Discrimination Test (ASL-DT; Bochner, Christie, Hauser, & Searls, 2011; Bochner et al., 2016); however, our tasks differ in that the ASL-DT is intended as an assessment of ASL proficiency while the NSPT is used here as a movement STM task similar to nonword recognition. In spoken language research, nonword recognition correlates with nonword repetition and vocabulary development (Martin & Ellis, 2012; O'Brien et al., 2006, 2007).

Figure 2. An example from the nonsign paired task. After seeing the full video of the target and either item 1A in the first block or 1B in the second, the response screen appears: “Were the gestures you just saw the same or different? Click to make your choice.” Pictures display the final position of a pseudosign.

In the NSPT, participants view a target pseudosign and must judge whether a reproduction was the same as or different from the target according to specified criteria, similar to the criteria used to score the NSRT (see Appendix B). Reproductions were designed to either faithfully reproduce the target or differ in one of the following parameters: movement, orientation, or handshape. A parametric approach, with the previously named parameters as categories, was used to create all pseudosigns, which were later judged phonotactically permissible by a native ASL signer (the second author). A parametric approach to pseudosign construction has been used previously (e.g., Orfanidou, Adam, McQueen, & Morgan, 2009; Pa et al., 2008; Wilson & Fox, 2007) and allows for a great degree of control in manipulating item characteristics (see Mann et al., 2010). Approximately 60% of reproductions were classified as different, with about 40% of those involving a change in movement and the remaining 60% equally divided between orientation and handshape.

Participants began the NSPT by viewing a brief (2 min, 44 s) instructional video. The video introduced participants to the task, instructed them on the judging criteria, and provided examples. Next, participants completed three practice items with a researcher providing feedback. After completing the practice items and receiving feedback, the critical trials began.

There were two blocks. In both blocks, participants viewed a target pseudosign produced by a hearing male nonsigner, immediately followed by one of two hearing female nonsigners “attempting” to copy the target pseudosign. The same target pseudosigns were used across the two blocks; however, a different female nonsigner performed the reproductions in each block. This was done to focus the participants’ attention on the intended parameters and to limit the degree to which slight variations in production may lead to erroneous decisions.

Next, a response screen prompted the participant to judge the reproduction as same or different from the target. As in Bochner et al.’s ASL-DT, for an individual to receive a point, both reproductions of a particular target (across the two blocks) needed to be correct. In this way, the chance of guessing was reduced from 50% to 25%. There were 55 paired-comparisons and so the maximum possible score was 55.
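The paired scoring rule described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `nspt_score`, the boolean response format, and the example responses are hypothetical and are not taken from the study materials.

```python
def nspt_score(block1_correct, block2_correct):
    """Award 1 point per target only when the judgments in BOTH blocks were correct.

    Each argument is a list of booleans, one per target pseudosign, in the
    same target order (an assumed data format).
    """
    if len(block1_correct) != len(block2_correct):
        raise ValueError("both blocks must cover the same targets")
    return sum(1 for a, b in zip(block1_correct, block2_correct) if a and b)


# Probability of earning a point by guessing on two independent
# same/different judgments: 0.5 * 0.5.
chance_per_target = 0.5 * 0.5

# Hypothetical responses for four targets.
example_block1 = [True, True, False, True]
example_block2 = [True, False, False, True]
example_score = nspt_score(example_block1, example_block2)

print(example_score)       # 2
print(chance_per_target)   # 0.25
```

Requiring both judgments to be correct trades some sensitivity for a lower guessing rate, which is the rationale the text attributes to the ASL-DT scoring scheme.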

Visuospatial STM Tasks

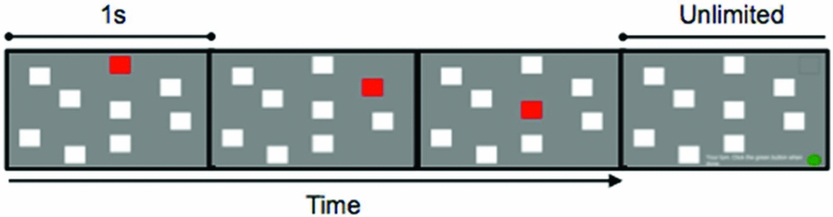

CORSI BLOCK TAPPING TASK (CORSI; FIGURE 3)

The Corsi task (Milner, 1971) is a dynamic visuospatial STM task that has been shown to load more heavily on spatial processing (Della Sala, Gray, Baddeley, Allamano, & Wilson, 1999). Items consist of 4–9 rectangles flashing sequentially on the computer screen for 1000 ms each. After presentation of an item, participants were to immediately click the rectangles in the same order they had flashed. There were three blocks; each set length was presented once, in random order, within a block of trials, and therefore each set length was presented three times. Participant scores were calculated using a partial scoring method in which a single point was awarded for each rectangle correctly recalled in its serial position (Conway et al., 2005). The maximum possible score was 117.
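To make the partial scoring method and the maximum score concrete, here is a minimal Python sketch. The function name `partial_credit` and the integer rectangle identifiers are hypothetical; the sketch assumes a response is scored position by position against the presented sequence.

```python
def partial_credit(presented, response):
    """Count rectangles recalled in their correct serial position."""
    return sum(1 for p, r in zip(presented, response) if p == r)


# Set lengths 4 through 9, each presented once per block, three blocks.
set_lengths = range(4, 10)
n_blocks = 3
max_score = n_blocks * sum(set_lengths)  # 3 * (4 + 5 + 6 + 7 + 8 + 9)

# Hypothetical trial: the second and third rectangles are transposed.
example_points = partial_credit([2, 5, 1, 7], [2, 1, 5, 7])

print(max_score)       # 117
print(example_points)  # 2
```

Under this rule a transposition costs both affected positions, whereas an all-or-nothing rule would score the whole trial as zero.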

Figure 3. An example of a practice Corsi trial, set size three.

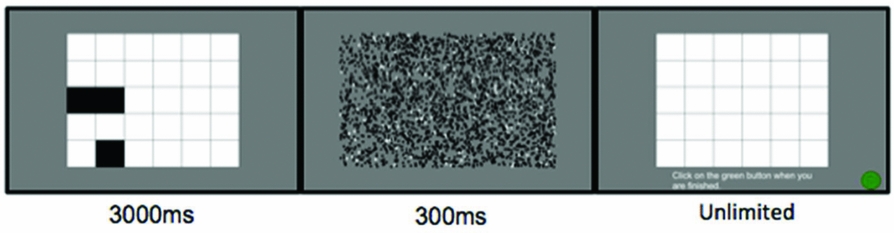

PATTERN SPAN (PATSPAN; FIGURE 4)

The PatSpan task is an adaptation of the Visual Pattern Test, which has been shown to load more heavily on static-visual processing (Della Sala et al., 1999). Items in the PatSpan consisted of a 5 × 6 array of rectangles with 4 to 13 of them shaded black for 3000 ms. After presentation, a visual mask was presented for 300 ms followed by a blank 5 × 6 array. Participants reproduced the pattern of shaded rectangles they had just viewed by using the computer track pad to click on the rectangles presented in the array. There were three different items for each set length; items were the same for all participants though presentation was randomized. PatSpan scores were calculated by awarding a single point for every pattern correctly recalled; thus the maximum score was 30.

Figure 4. An example of a practice PatSpan trial, set size three. The final frame depicts the response screen, instructing participants to “click on the green button when you are finished.”

Sign Learning

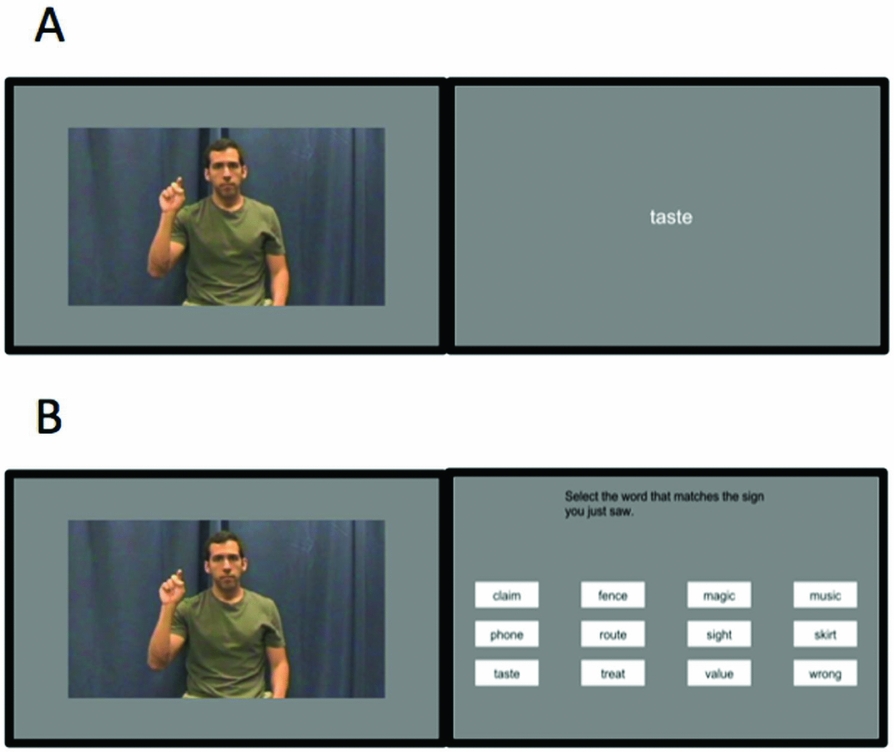

SIGN LEARNING TASK (SLT; FIGURE 5)

The criterion variable, the SLT, is a paired-associate learning task employing a study-test learning procedure. Such tasks have been shown to result in long-term retention (Seibert, 1930; Thorndike, 1908) and correlate with verbal ability and language aptitude (Cooper, 1964; Hundal & Horn, 1977; Kyllonen & Tirre, 1988; Kyllonen & Woltz, 1989). Moreover, utilizing paired-associate learning in the lab, as opposed to assessing vocabulary growth in ASL students, confers a greater degree of control, for example, in the amount of time and method of study.

Figure 5. Depiction of the sign learning task. (a) A pseudosign–word pair from the study portion of a trial. (b) An item from the test portion: the pseudosign (cue) is presented followed by the response screen showing all words from this set, in alphabetical order.

The task consisted of two sets of 12 visually presented pseudosign–English word pairs. Pseudosigns were used for the following reasons: as detailed above, doing so allows us to easily manipulate item characteristics; pseudosigns and English words can be paired randomly (for experimental purposes); and the task could be used in a future study with proficient signers. As with the NSPT, pseudosigns were created using a parametric approach and deemed phonotactically permissible by a fluent ASL signer (the second author); a hearing nonsigner produced all pseudosigns. All English words were five-letter high-frequency nouns selected from the SUBTLEX-US corpus (Brysbaert & New, 2009).

The SLT began with instructions introducing the task followed by a single example. Critical trials consisted of two blocks, each with 12 different pseudosign–English word pairs, for a total of 24 pseudosign–English pairs. Within each block, there were two study-test trials. During the study portion, each pseudosign was presented for an average duration of 3500 ms immediately followed by its randomly associated English word for 1000 ms. During testing, participants viewed a randomly selected pseudosign immediately followed by the response screen showing all 12 possible English response words. After making a selection by mouse click, the next pseudosign was shown and so on; feedback was never provided, and all pairs were studied and tested again during the second study-test trial, regardless of prior performance. The dependent variable was the total number of words correctly recalled across the two blocks; thus the maximum score was 48.

RESULTS

The data were assessed for univariate outliers using a cutoff z score of 3.29 (Field, 2013) and by graphical examination. Four participants achieved z scores at or above the cutoff on at least one variable, and evidence from a number of scatter plots indicated that these participants completed the study in a perfunctory manner or were not representative of the intended population. As a result, scores from these 4 individuals were removed from further analysis, leaving the final sample size at 103.
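As an illustration of the z-score screening step, the following sketch flags scores whose absolute z value meets or exceeds 3.29. It assumes the sample standard deviation and a two-sided criterion, neither of which is stated in the text, and the function name `flag_univariate_outliers` is hypothetical. The graphical examination the authors also relied on is not represented here.

```python
from statistics import mean, stdev


def flag_univariate_outliers(scores, cutoff=3.29):
    """Return indices of scores whose |z| is at or above the cutoff.

    Assumes the sample standard deviation (n - 1 denominator) and a
    two-sided criterion; both are assumptions for this sketch.
    """
    m, s = mean(scores), stdev(scores)
    return [i for i, x in enumerate(scores) if abs((x - m) / s) >= cutoff]


# Hypothetical scores: 19 identical values and one extreme value.
example_scores = [10.0] * 19 + [100.0]
flagged = flag_univariate_outliers(example_scores)
```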

Descriptive statistics and internal reliability coefficients (Cronbach α) are provided in Table 1. The items used to calculate Cronbach α were derived as follows: MoveSpan, Corsi, and PatSpan reliabilities were each calculated by forming three subscores composed of one instance of each set length (see Engle, Tuholski, Laughlin, & Conway, 1999); NSPT and NSRT reliabilities were calculated using each item as a score (i.e., as is typical); for the SLT, the “items” consisted of subscores derived by summing the points awarded for correctly identifying each instance of a particular word. All internal reliability coefficients were near or above 0.80, indicating acceptable reliabilities. In addition, the correlation between the two SLT blocks was strong (r = .617), providing further evidence of reliability.

Table 1. Descriptive statistics for all tasks

a Expressed as percent of score possible.

Note: MoveSpan = movement span; NSRT = nonsign repetition; NSPT = nonsign paired task; Corsi = Corsi block tapping task; PatSpan = pattern span; SLT = sign learning task.
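For readers unfamiliar with the reliability coefficient reported in Table 1, the standard formula for Cronbach α can be sketched as follows. The function name `cronbach_alpha` and the toy data are hypothetical; in the study, the "items" would be the subscores or item scores described in the text above.

```python
from statistics import variance


def cronbach_alpha(item_scores):
    """Cronbach's alpha from a participants-by-items list of lists.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    """
    k = len(item_scores[0])
    items = list(zip(*item_scores))  # transpose: one tuple per item
    item_var_sum = sum(variance(col) for col in items)
    total_var = variance([sum(row) for row in item_scores])
    return (k / (k - 1)) * (1 - item_var_sum / total_var)


# Hypothetical data: three participants, three perfectly consistent subscores.
toy_items = [[1, 2, 3], [2, 3, 4], [3, 4, 5]]
toy_alpha = cronbach_alpha(toy_items)
```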

Next, correlations were analyzed to assess the degree to which predictors correlated with the outcome variable and, as we were concerned with both observed and latent variables, to assess construct validity. Table 2 shows bivariate correlations among all tasks and SLT trials and, because there may have been an effect of task administration order, partial correlations controlling for variance due to order effects.

Table 2. Bivariate (bottom half) and partial (controlling for order; upper half) correlations

Note: Lower half shows bivariate correlations; upper half shows partial correlations controlling for task administration order. All correlations significant at .01 level. MoveSpan = movement span; NSRT = nonsign repetition; NSPT = nonsign paired task; Corsi = Corsi block tapping task; PatSpan = pattern span; SLT = sign learning task.

All predictors were positively related to the SLT, with bivariate correlations ranging between .400 and .535. Construct validity was supported by the strong correlations between the visuospatial STM tasks. With regard to the movement STM tasks, we found that these tasks positively correlated with each other; however, they were also moderately to strongly correlated with the visuospatial STM tasks. Comparison of the bivariate and partial correlations provided in Table 2 indicated that administration order did not significantly affect the pattern of results.

After noting the relationships between visuospatial STM and all other tasks, we felt it prudent to conduct a partial correlation analysis to investigate the degree to which visuospatial processing drove these relationships (see Table 3). Despite the movement STM tasks varying in a number of ways (e.g., response format, set size, and scoring procedure), after partialing out the variance shared with the two visuospatial STM tasks, all movement STM tasks remained positively correlated with each other, indicating a significant amount of shared variance over and above that which is shared with visuospatial STM. The positive relationship between the movement STM tasks and SLT also remained significant. Further controlling for task order did not substantially change the pattern of results. Thus, in line with our expectations, these results indicated that the predictors could be classified as measures of two related but distinct constructs, namely, movement STM and visuospatial STM.

Table 3. Partial correlations controlling for visuospatial STM and order

Note: Lower half shows partial correlations, controlling for variance shared with Corsi and PatSpan tasks; partial correlations in upper half also control for task administration order. All correlations significant at .01 level. MoveSpan = movement span; NSRT = nonsign repetition; NSPT = nonsign paired task; Corsi = Corsi block tapping task; PatSpan = pattern span; SLT = sign learning task.
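The logic of partialing out shared variance can be illustrated with the standard recursive formula for partial correlations. This is a generic sketch, not the authors' analysis script; the function names and the toy vectors are hypothetical. Passing two control variables (e.g., Corsi and PatSpan scores) applies the formula twice.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def partial_corr(x, y, controls):
    """Correlation of x and y after removing variance shared with controls.

    Uses the recursion
    r_xy.z = (r_xy - r_xz * r_yz) / sqrt((1 - r_xz^2) * (1 - r_yz^2)),
    applied once per control variable.
    """
    if not controls:
        return pearson(x, y)
    z, rest = controls[0], controls[1:]
    r_xy = partial_corr(x, y, rest)
    r_xz = partial_corr(x, z, rest)
    r_yz = partial_corr(y, z, rest)
    return (r_xy - r_xz * r_yz) / sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))


# Toy data (hypothetical): x and y are related only through z.
z = [1, 1, -1, -1]
x = [2, 0, 0, -2]
y = [2, 0, -2, 0]
r_raw = pearson(x, y)
r_partial = partial_corr(x, y, [z])
```

In this toy case the raw correlation is moderate but vanishes once z is controlled, which is the pattern the analysis above was designed to rule out for the movement STM tasks.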

Finally, regression analyses were conducted to (a) test the hypothesis that visuospatial STM accounts for variance in sign learning over and above movement STM and (b) assess which predictor or set of predictors accounted for the greatest variance in the SLT. Note, because the previous two analyses indicated that task order did not substantially affect the results, we chose to disregard it for subsequent analyses.

For the first analysis, in order to more accurately assess the contribution of each construct, composite scores, derived by standardizing and summing construct-relevant scores (e.g., Corsi and PatSpan scores were standardized and summed together to form the visuospatial composite), were used in place of raw scores (see Footnote 2). The movement STM composite (MoveScore) was predictive of SLT, F(1, 101) = 54.054, p < .001, accounting for 34.9% of the variance in SLT performance. Adding the visuospatial composite (VisuoScore) to the model significantly increased R², F(2, 100) = 7.177, p = .009, accounting for an additional 4.4% of the variance (see Table 4).

Table 4. Hierarchical regression analysis with SLT as the outcome variable

Note: β = standardized coefficient; pr = partial correlation; SLT = sign learning task; MoveScore = composite score formed by standardizing and summing movement-based scores (viz., movement span, nonsign repetition, and nonsign paired task); VisuoScore = composite score formed by standardizing and summing scores on visuospatial tasks (viz., pattern span and Corsi block tapping task).
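The composite scores entered into this regression were formed by standardizing and summing task scores. A minimal sketch of that step follows; the function names and the example values are hypothetical, and the use of the sample standard deviation is an assumption.

```python
from statistics import mean, stdev


def zscores(xs):
    """Standardize scores using the sample standard deviation (assumed)."""
    m, s = mean(xs), stdev(xs)
    return [(x - m) / s for x in xs]


def composite(*tasks):
    """Sum z scores across tasks, participant by participant."""
    standardized = [zscores(t) for t in tasks]
    return [sum(vals) for vals in zip(*standardized)]


# Hypothetical Corsi and PatSpan scores for three participants.
corsi = [1, 2, 3]
patspan = [10, 20, 30]
visuo_score = composite(corsi, patspan)
```

Standardizing first puts tasks with different scales on a common metric, so that no single task dominates the sum.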

Next, because we did not have specific predictions about which task or set of tasks would best predict sign learning, a forward stepwise regression analysis using the Akaike information criterion was conducted with SLT performance as the outcome variable. The best fitting model utilized the NSPT, PatSpan, and MoveSpan as predictors, F(3, 99) = 21.728, p < .001, and accounted for 39.7% of the variance in SLT performance. All predictors were significant (see Table 5).

Table 5. Forward stepwise regression analysis with SLT as the outcome variable

Note: β = standardized coefficient; pr = partial correlation; AIC = Akaike information criterion; NSPT = nonsign paired task; PatSpan = pattern span; MoveSpan = movement span.
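The general procedure of forward selection by AIC can be sketched as below. This is an illustrative implementation under stated assumptions, not the software or exact criterion the authors used: it fits ordinary least squares by solving the normal equations, uses the Gaussian form AIC = n ln(RSS/n) + 2k (constant terms dropped), and stops when no remaining predictor lowers the AIC. All function names and the toy data are hypothetical.

```python
from math import log


def ols_rss(columns, y):
    """Residual sum of squares for y regressed on an intercept plus columns."""
    n = len(y)
    X = [[1.0] + [col[i] for col in columns] for i in range(n)]
    p = len(X[0])
    # Augmented normal equations [X'X | X'y], solved by Gauss-Jordan elimination.
    A = [
        [sum(X[r][i] * X[r][j] for r in range(n)) for j in range(p)]
        + [sum(X[r][i] * y[r] for r in range(n))]
        for i in range(p)
    ]
    for i in range(p):
        pivot = max(range(i, p), key=lambda r: abs(A[r][i]))
        A[i], A[pivot] = A[pivot], A[i]
        for r in range(p):
            if r != i:
                f = A[r][i] / A[i][i]
                A[r] = [a - f * b for a, b in zip(A[r], A[i])]
    beta = [A[i][p] / A[i][i] for i in range(p)]
    return sum(
        (y[r] - sum(b * x for b, x in zip(beta, X[r]))) ** 2 for r in range(n)
    )


def aic(rss, n, n_params):
    """Gaussian AIC up to an additive constant (undefined for a perfect fit)."""
    return n * log(rss / n) + 2 * n_params


def forward_stepwise(predictors, y):
    """Add the predictor that most lowers AIC until no addition helps."""
    n = len(y)
    selected = []
    best = aic(ols_rss([], y), n, 1)  # intercept-only model
    while True:
        scored = [
            (aic(ols_rss([predictors[s] for s in selected + [name]], y),
                 n, len(selected) + 2), name)
            for name in predictors if name not in selected
        ]
        if not scored or min(scored)[0] >= best:
            return selected
        best, chosen = min(scored)
        selected.append(chosen)


# Toy data (hypothetical): y depends on x1 only; x2 is unrelated noise.
x1 = [-1, -1, -1, -1, 1, 1, 1, 1]
x2 = [-1, -1, 1, 1, -1, -1, 1, 1]
noise = [1, -1, -1, 1, 1, -1, -1, 1]
y_toy = [3 * a + e for a, e in zip(x1, noise)]
chosen_predictors = forward_stepwise({"x1": x1, "x2": x2}, y_toy)
```

Because stepwise procedures capitalize on sample-specific variance, the selected set is best read as descriptive of this sample rather than as a confirmed model.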

DISCUSSION

Perceptual-motor and phonological processing in sign learning

The primary objective of this study was to identify predictors of sign learning. In order to do so, we worked under the theory that, in addition to general mnemonic and attentional processes, the observed relationship between phonological STM and L2 lexical learning is due to similarities in perceptual-motor, not phonological, processing. Based on this theory and the fact that sign languages are visuospatial-manual, we identified predictors that varied along a number of dimensions but that we believed could nonetheless be classified as movement STM and visuospatial STM—constructs we reasoned were relevant to sign learning. In line with our predictions, predictors could be categorized as measures of the aforementioned constructs, and all predictors positively correlated with the SLT, indicating that movement STM and visuospatial STM are related to sign learning.

What then is the role of phonological processing in L2 sign learning by hearing nonsigners? We interpret our results as suggesting that phonological processing played little if any role in the relationships observed. To review, bivariate and partial correlation analyses revealed that all tasks classified as movement STM shared a large proportion of variance; however, this group of predictors included two tasks that used pseudosigns and can nominally be classified as phonological STM measures (the NSRT and NSPT) along with one nonlinguistic measure of STM for movement (the MoveSpan). Visuospatial STM was assessed with tasks using stimuli that bore no resemblance to signs, and these tasks were also significantly related to all other variables.

There are, of course, a number of potential counterpoints. Here we address three. First, one can look at the results of our regression analyses and note that the movement STM task that elicited the most attention to the phonological features of sign languages, the NSPT, was the best predictor of sign learning performance, suggesting that the phonological component assessed by this task was critical. This may be the case; however, it is important to note that beyond assessing memory for signs, the NSPT was the only predictor with a clear learning component: all participants watched an instructional video explaining the judgment criteria. Thus, the strong relationship between NSPT and SLT performance may be partially explained by a shared learning, or long-term memory, component. In support of this view, note that the other task that used pseudosigns, the NSRT, did not correlate as highly with sign learning as either of the other two movement STM tasks.

A second counterpoint is that a nonsigner performed the SLT and NSPT pseudosigns. Beginning sign learners are more variable in production (Hilger, Loucks, Quinto-Pozos, & Dye, 2015), produce larger signs than native signers (Mirus, Rathmann, & Meier, 2001), and take longer to sign (Cull, 2014). These differences may have affected the rhythmic-temporal patterns that characterize all languages (Petitto, 2005; Petitto et al., 2012, 2016), and that may have triggered phonological processing. Pseudosigns in the NSRT, however, were performed by a deaf native signer, and as discussed above, performance on this task shared a significant proportion of variance with the other two movement STM tasks and with sign learning. This suggests that in the present study, the effect of having a nonsigner perform pseudosigns was negligible or, more generally, that to the nonsigner, signs are processed in a nonlinguistic fashion.

Finally, a third counterpoint is that we did not provide discriminant evidence: our case would be stronger had we shown that phonological STM assessed via an auditory-verbal measure was more weakly correlated with sign learning than movement or visuospatial STM tasks. Recall, however, that at least one study has found that in hearing nonsigners, digit span did not correlate with ASL vocabulary growth (Williams et al., 2016a). Still, these counterpoints warrant further investigation.

If our conclusions are substantiated, then they raise questions about the nature of the phonological loop and its relationship to lexical learning. As currently conceptualized, the phonological loop is a STM system that is distinct from long-term memory and, because it is specialized for abstract phonological representations, is critical for lexical learning in any language, signed or spoken (Baddeley, 2012; Baddeley et al., 1998). Our results, in conjunction with prior research (e.g., Newman-Norlund et al., 2006; Williams et al., 2016a, 2016b), however, raise the possibility either that the phonological loop does not come online until after experience with a particular language modality or that it does not deal with phonological information per se but with perceptual-motor events (see Jones, Hughes, & Macken, 2006; Jones et al., 2007; Wilson, 2001).

The role of visuospatial STM in sign learning

A secondary objective was to identify which task or set of tasks would best predict sign learning. A priori, we hypothesized that visuospatial STM would account for variance in sign learning over and above movement STM; however, we made no predictions about specific tasks. To test our hypothesis, we created composite scores by standardizing and summing relevant predictors and then submitted these composites to a hierarchical regression analysis with the SLT as the outcome variable. Both composite scores were significant predictors, indicating that movement and visuospatial STM account for independent portions of variance in sign learning performance. Next, we performed a forward stepwise regression analysis to identify the best predictors. This analysis revealed that the NSPT, PatSpan, and MoveSpan accounted for the greatest unique proportions of variance in overall sign learning performance.

It is important to note that in formulating our hypothesis, we took into account two features that make signs, and movements in general, quite different from spoken words: rather than the sequential presentation of sounds, signs are composed of the simultaneous presentation of static and dynamic visuospatial features. Based on the nature of signs and research showing that it is difficult to process visual and motion information in parallel (Ding et al., 2015; Vicary et al., 2014; Vicary & Stevens, 2014), we reasoned that it would be difficult for one to encode the disparate features that distinguish one sign from another after only a single exposure but that multiple exposures would allow one to shift attention to features that are encoded by different STM subsystems. In line with this reasoning, we viewed movement STM tasks as faulty measures of their component processes and so hypothesized that including more direct assessments of visuospatial STM in a battery of tests would improve measurement accuracy.

This line of reasoning appears to have been supported: visuospatial STM did account for variance in sign learning over and above movement STM. We were surprised, however, by the magnitude of the relationship between visuospatial STM tasks, particularly the PatSpan, and sign learning, as we expected that movement STM tasks would generally be the strongest predictors.

We suspect that the relative equality between visuospatial and movement STM was due in part to a strategy that utilized memory for key configurations to aid in the recall of movements. To illustrate, consider the pseudosign depicted in Figure 1. A large amount of information can be gleaned by simply referring to the two static images; all that is left to know or guess is the intervening motion. In a similar fashion, memory for key configurations is likely used to redintegrate entire movement patterns. Note, this example implies that one can correctly recall a movement by encoding two configurations; however, it is likely that as the complexity of a pseudosign, and human body movements in general, increases, so too does the load on visuospatial memory (Vicary et al., 2014).

Whether the magnitude of this relationship generalizes should be investigated, as others have reported a dissociation between visuospatial and movement STM, evident, for example, by a lack of interference in dual-task paradigms (Smyth, Pearson, & Pendleton, 1988; Smyth & Pendleton, 1989, 1990) and insignificant correlations between movement and visuospatial STM tasks (Wu & Coulson, 2014). On the one hand, it may be the case that some idiosyncrasy in our sample, tasks, or methods resulted in the observed relationships. On the other hand, individuals asked to recall signs and other body movements might generally rely on visuospatial memory to aid recall. In support of the latter view, participants in our study were not instructed on whether they should rehearse any of the movements, and few spontaneously chose to do so. Moreover, research on the perception and production of signs by adult signers and nonsigners typically finds that the movement parameter is the most error prone, followed by handshape and orientation, and finally location (Bochner et al., 2011, 2016; Mann et al., 2010; Ortega & Morgan, 2015; Williams & Newman, 2016b). Handshape, orientation, and location are features that can be represented in static visual imagery.

Concerning the tasks identified as “best” predicting sign learning, the result of the forward stepwise regression analysis indicates that sign learning is best assessed by a battery of movement and visuospatial STM tasks. This further supports our view that movement STM tasks, which require visuospatial and motion processing, are faulty measures of their component processes. Consequently, researchers interested in sign learning may wish to include assessments of movement and visuospatial STM in their battery of tasks.

Conclusion and future directions

The results of this study suggest that sign learning is strongly dependent on movement and visuospatial STM and that both make unique contributions to its prediction. Although this study was not explicitly intended to test the role that phonological processing plays in the relationship between phonological STM and lexical learning, it does raise questions about its involvement. In this way, we have illustrated how investigating L2 sign language acquisition can inform theories about memory and language learning.

In order to substantiate the conclusions drawn here, future studies should continue to investigate the possibility that phonological processing is an important component of sign learning in beginning signers, for example, by including both spoken and signed measures of phonological STM as well as other language and perceptual-motor tasks. It is also important to investigate the ecological validity of our findings: are movement STM and visuospatial STM as important to learning real signs performed by native signers as they are to learning pseudosigns performed by nonsigners? Are they predictive of learning in a classroom as well as in a lab? Finally, we chose to focus exclusively on STM measures; however, there are certainly other important factors to investigate.

APPENDIX A MOVEMENT SPAN (MOVESPAN) SCORER DIRECTIONS

Scoring

Each movement will be scored individually and can be awarded 0, 0.5, or 1 point.

• Full point: all parameters of the movement were reproduced.

• Half point: the movement differed by one parameter from the target OR the movement was reproduced correctly but not mirrored.

• Zero: the movement differed by more than one parameter.
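The point rules above can be expressed as a small function. This is a sketch only: the function name and arguments are hypothetical, and the handling of a movement that both differs by one parameter and is not mirrored is an assumption (treated here as two deviations), since the directions do not address that case.

```python
def score_movement(n_param_errors, mirrored=True):
    """Return 1, 0.5, or 0 points for a single reproduced movement.

    n_param_errors: number of parameters that differed from the target.
    mirrored: whether the movement was mirrored as required.
    Assumption: a failure to mirror counts as one additional deviation.
    """
    deviations = n_param_errors + (0 if mirrored else 1)
    if deviations == 0:
        return 1.0
    if deviations == 1:
        return 0.5
    return 0.0


# Cases covered explicitly by the scorer directions.
full_point = score_movement(0)
half_point_param = score_movement(1)
half_point_mirror = score_movement(0, mirrored=False)
zero_points = score_movement(2)
```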

Comments

All movements that are scored less than 1 must have comments. Use the following along with your own comments.

• O = omission = item was not performed

• I = intrusion = an item not part of the current set (or even task) was performed (use the other comments section)

• S = substitution = an incorrect movement, handshape, location, or orientation was used in an item

• A = addition = an extra movement was added to an item

• D = deletion = a movement was deleted from an item

Parameters

Handshape

• There are three handshapes in this task: spread hand (ASL 5), flat hand (ASL flat B), and fist (ASL A).

• Do not deduct points for small deviations—for example, if the handshape was supposed to be a flat hand but there is a little spread, judge it on whether it looks more like a spread hand or more like a flat hand. Similarly, do not deduct for slight extensions of the thumb or pinky or any other digit.

Orientation

• Any deviation of about 75 degrees or greater is considered incorrect.

Location

• Movements done along or referencing a specific part of the body (not including fingers, see below) should be judged correct if they were in the general area (think of easily nameable areas). For example, if the right hand should be touching the knuckles of the open left hand and the participant is instead touching the middle portion of the back of the hand, that is okay. In contrast, touching the tips of the fingers or touching closer to the wrist is incorrect.

• Movements pointing to or between specific fingers must be reproduced to those specific locations. If hand orientation is reversed, the location could be correct even if pointing to an incorrect finger. For example, if the model showed their palm and pointed to the ring finger but the subject showed the back of their hand and pointed to the middle finger, then, assuming all other aspects were correct, this item would be given half a point.

• Movements done around the body should be judged using the NSRT criteria for location: use general zones such as near the head, near the body, in front of the head, in front of the body, and so on.

Path movement—movement beginning at the shoulder joint

• Path movement will be considered incorrect if path movement was added, deleted, performed in the wrong direction, or used a completely different movement.

• Regarding repetitions: do not count! Simply distinguish between “once” and “more than once,” meaning if a movement has repetitions but the subject only does the movement once, then this is incorrect; or if the movement has no repetition but the subject adds one, this is also incorrect.

• Regarding length of path: only consider the length of the movement when it extends from within the person's frame to outside of it and vice versa.

• Regarding trajectory: any deviation of ~30 degrees or more is considered incorrect.

Internal movement—movement of the wrist or fingers

• The only internal movement in this task is wrist rotation; judge this parameter incorrect if the orientation of the hand is off by about 75 degrees or more.

• This parameter should be considered wrong if any other internal movement is added.

APPENDIX B NONSIGN REPETITION TASK (NSRT) SCORING DIRECTIONS

Scoring

Nonsigns will be scored dichotomously (0 or 1) on the following: handshape, path movement, and internal movement. Location errors will be noted but will not be used in calculating scores.

Besides scoring, you will also provide comments for each column.

Handshape

• An incorrect handshape may add or delete a finger/thumb or use a different handshape (5 instead of B or V instead of K).

• A small deviation in handshape is allowed, for example, if the pinky sticks out a bit while doing a B handshape.

• Deviations in handshape will be considered incorrect when a finger/thumb is in a different position or configuration.

• Orientation: any deviation of 75 degrees or greater will be considered an error in handshape.

Path movement

• Path movement will be considered incorrect if path movement was added, deleted, performed in the wrong direction, or used a completely different movement.

• Regarding repetitions: do not count! Simply distinguish between “once” and “more than once,” meaning if a movement has repetitions but the subject only does the movement once, then this is a 0; or if the movement has no repetition but the subject adds one, this is also a 0.

• Regarding length of path: only consider the length of the movement when it extends from within the person's frame to outside of it and vice versa. (Remember [lead author's] example.)

• Regarding trajectory: any deviation of ~30 degrees or more is considered incorrect.

• Regarding size of the movement if a shape is outlined: two sizes, large and small (e.g., a circular path movement that forms a circle).

Internal movement

Internal movement will be considered incorrect if aperture or trill was added or deleted; if wrist rotation, deviation, or nodding [extension/flexion] was added or deleted; if a second handshape's orientation is off by 75 degrees or greater; or if the second handshape is otherwise incorrect.

Location

• Location will be considered incorrect . . .

○ If a movement or handshape is incorrectly occluded or exposed

○ For unidirectional movements:

▪ If the location of the gesture begins or ends in the wrong general area

• Around the head/around the body, in front/to the side of the person, etc.

• Again, judge distance grossly

○ For alternating movements or movements with repetition:

▪ The movement should be contained within the same general space, but do NOT count off if the individual begins and ends the movement in the opposite place (e.g., if the movement alternated between right and left but the participant began the movement as left-right, that is fine).

▪ Since we are not counting how many times a movement was performed, location may be off here as well and that is okay, so long as the movement required repetition and the participant did more than 1 cycle.

Comments

• Comments must be made anytime

○ A “0” is given for any parameter

○ A difficult decision was made

○ The wrong hand was used

▪ If the wrong hand is used and this affects the movement or location, make sure to score and comment accordingly.

▪ If the wrong hand was used but movement or location was unaffected, then simply note it here.

○ Use the following codes:

▪ O = omission = item was not performed

▪ I = intrusion = an item not part of the current set (or even task) was performed (use the other comments section)

▪ S = substitution = an incorrect movement, handshape, location, or orientation was used

▪ A = addition = an extra movement was added

▪ D = deletion = a movement was deleted

ACKNOWLEDGMENTS

This research was supported by the National Science Foundation Science of Learning Center Grant on Visual Language and Visual Learning (VL2) at Gallaudet University, under cooperative agreement number SBE-0541953, and by a Goizueta Foundation Fellowship awarded to the first author. We thank Maya Berinhout, Rachel Monahan, Natalie Pittman, and Angelique Soulakos for their help throughout the project. We also thank Dr. Randy Engle and Dr. Daniel Spieler for helpful suggestions.