1 Introduction

In this article, we address the following question: Is it possible to determine the non-linear law of a reaction-diffusion process by applying sources and measuring the corresponding flux on the boundary of the domain of diffusion? Mathematically, the question can be stated as a problem of determination of a general semilinear term appearing in a semilinear parabolic equation from boundary measurements.

Let us explain the problem precisely. Let

![]() $T>0$

and

$T>0$

and

![]() $\Omega \subset {\mathbb R}^n$

, with

$\Omega \subset {\mathbb R}^n$

, with

![]() $n\geqslant 2$

, be a bounded and connected domain with a smooth boundary. Let

$n\geqslant 2$

, be a bounded and connected domain with a smooth boundary. Let

![]() $a:=(a_{ik})_{1 \leqslant i,k \leqslant n} \in C^\infty ([0,T]\times \overline {\Omega };{\mathbb R}^{n\times n})$

be symmetric matrix field,

$a:=(a_{ik})_{1 \leqslant i,k \leqslant n} \in C^\infty ([0,T]\times \overline {\Omega };{\mathbb R}^{n\times n})$

be symmetric matrix field,

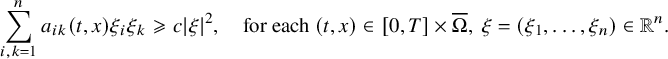

which fulfills the following ellipticity condition: there exists a constant

![]() $c>0$

such that

$c>0$

such that

$$ \begin{align} \sum_{i,k=1}^n a_{ik}(t,x) \xi_i \xi_k \geqslant c |\xi|^2, \quad \mbox{for each } (t,x)\in [0,T]\times\overline{\Omega},\ \xi=(\xi_1,\ldots,\xi_n) \in {\mathbb R}^n. \end{align} $$

$$ \begin{align} \sum_{i,k=1}^n a_{ik}(t,x) \xi_i \xi_k \geqslant c |\xi|^2, \quad \mbox{for each } (t,x)\in [0,T]\times\overline{\Omega},\ \xi=(\xi_1,\ldots,\xi_n) \in {\mathbb R}^n. \end{align} $$

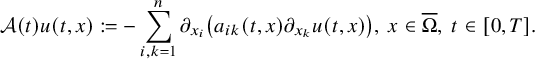

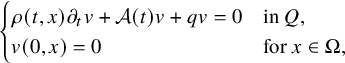

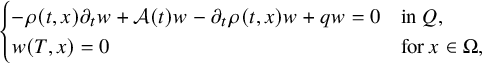

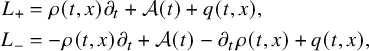

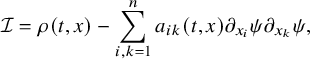

We define elliptic operators $\mathcal A(t)$, $t\in [0,T]$, in divergence form by

$$ \begin{align*}\mathcal A(t) u(t,x) :=-\sum_{i,k=1}^n \partial_{x_i} \left( a_{ik}(t,x) \partial_{x_k} u(t,x) \right),\ x\in\overline{\Omega},\ t\in[0,T]. \end{align*} $$

Throughout the article, we set

and we refer to

![]() $\Sigma $

as the lateral boundary. Let us also fix

$\Sigma $

as the lateral boundary. Let us also fix

![]() $\rho \in C^\infty ([0,T]\times \overline {\Omega };{\mathbb R}_+)$

and

$\rho \in C^\infty ([0,T]\times \overline {\Omega };{\mathbb R}_+)$

and

![]() $b\in C^\infty ([0,T]\times \overline {\Omega }\times {\mathbb R})$

. Here,

$b\in C^\infty ([0,T]\times \overline {\Omega }\times {\mathbb R})$

. Here,

![]() ${\mathbb R}_+=(0,+\infty )$

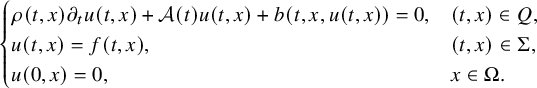

. Then, we consider the following initial boundary value problem (IBVP in short):

${\mathbb R}_+=(0,+\infty )$

. Then, we consider the following initial boundary value problem (IBVP in short):

$$ \begin{align} \begin{cases} \rho(t,x) \partial_t u(t,x)+\mathcal A(t) u(t,x)+ b(t,x,u(t,x)) = 0, & (t,x)\in Q,\\ u(t,x) = f(t,x), & (t,x) \in \Sigma, \\ u(0,x) = 0, & x \in \Omega. \end{cases} \end{align} $$

$$ \begin{align} \begin{cases} \rho(t,x) \partial_t u(t,x)+\mathcal A(t) u(t,x)+ b(t,x,u(t,x)) = 0, & (t,x)\in Q,\\ u(t,x) = f(t,x), & (t,x) \in \Sigma, \\ u(0,x) = 0, & x \in \Omega. \end{cases} \end{align} $$

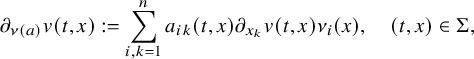

The parabolic Dirichlet-to-Neumann map (DN map in short) is formally defined by

$$ \begin{align*} \mathcal N_b: f\mapsto \partial_{\nu(a)}u|_{\Sigma}, \end{align*} $$

where $u$ is the solution to (1.2). Here, the conormal derivative $\partial _{\nu (a)}$ associated to the coefficient $a$ is defined by

$$ \begin{align*}\partial_{\nu(a)}v(t,x):=\sum_{i,k=1}^na_{ik}(t,x)\partial_{x_k}v(t,x)\nu_i(x),\quad (t,x)\in\Sigma,\end{align*} $$

where $\nu =(\nu _1,\ldots ,\nu _n)$ denotes the outward unit normal vector of $\partial \Omega $ with respect to the Euclidean metric on ${\mathbb R}^n$. The solution $u$ used in the definition of the DN map $\mathcal {N}_b$ is unique in a specific sense so that there is no ambiguity in the definition of $\mathcal {N}_b$. For this fact and a rigorous definition of the DN map, we refer to Section 2.1. We write simply $\partial _{\nu }=\partial _{\nu (a)}$ in the case where $a$ is the $n\times n$ identity matrix $\mathrm {Id}_{{\mathbb R}^{n\times n}}$. The inverse problem we study is the following.

- Inverse problem (IP): Can we recover the semilinear term $b$ from the knowledge of the parabolic Dirichlet-to-Neumann map $\mathcal N_{b}$?

Physically, reaction-diffusion equations of the form (1.2) describe several classes of diffusion processes with applications in chemistry, biology, geology, physics and ecology. This includes the spreading of biological populations [Fis37], the Rayleigh-Bénard convection [NW87] and models appearing in combustion theory [Vol14, ZFK38]. The inverse problem (IP) is equivalent to the determination of an underlying physical law of a diffusion process, described by the nonlinear expression $b$ in (1.2), by applying different sources (e.g., heat sources) and measuring the corresponding flux at the lateral boundary $\Sigma $. The information extracted in this way is encoded into the DN map $\mathcal N_{b}$.

In recent decades, problems of parameter identification in nonlinear partial differential equations have generated considerable interest in the mathematical community. Among the different formulations of these inverse problems, the determination of a nonlinear law is one of the most challenging, owing to the severe ill-posedness and nonlinearity of the problem. For diffusion equations, one of the first results in that direction can be found in [Isa93]. Later on, this result was improved by [CK18b], where the stability issue was also considered. To the best of our knowledge, the most general and complete result known so far about the determination of a semilinear term of the form $b(t,x,u)$, depending simultaneously on the time variable $t$, the space variable $x$ and the solution $u$ of the equation, from knowledge of the parabolic DN map $\mathcal N_{b}$ can be found in [KU23]. Without being exhaustive, we mention the works of [Isa01, CY88, COY06] devoted to the determination of semilinear terms depending only on the solution and the determination of quasilinear terms addressed in [CK18a, EPS17, FKU22]. Finally, we mention the works of [FO20, KLU18, LLLS21, LLLS20, LLST22, KU20b, KU20a, FLL23, HL23, KKU23, CFK+21, FKU22, LL22a, Lin22, KU23, LL23, LL19] devoted to similar problems for elliptic and hyperbolic equations. Moreover, in the recent works [LLLZ22, LLL21], the authors investigated simultaneous determination problems of coefficients and initial data for both parabolic and hyperbolic equations.

Most of the above-mentioned results concern the inverse problem (IP) under the assumption that the semilinear term $b$ in (1.2) satisfies the condition

$$ \begin{align} b(t,x,0)=0,\quad (t,x)\in Q. \end{align} $$

This condition implies that (1.2) has at least one known solution, the trivial solution $u\equiv 0$ corresponding to $f=0$. In the same spirit, for any constant $\lambda \in {\mathbb R}$, the condition $ b(t,x,\lambda )=0$ for $(t,x)\in Q$ implies that the constant function $(t,x)\mapsto \lambda $ is a solution of the equation $\rho (t,x) \partial _t u+\mathcal A(t) u+ b(t,x,u) = 0$ in $Q$. In the present article, we treat the determination of a general class of semilinear terms that might not satisfy the condition (1.3). In this case, the inverse problem (IP) is even more challenging since no solutions of (1.2) may be known a priori. In fact, as observed in [Sun10] for elliptic equations, there is an obstruction to the determination of $b$ from the knowledge of $\mathcal N_b$ in the form of a gauge symmetry. We demonstrate the gauge symmetry first in the form of an example.

Example 1.1. Let us consider the inverse problem (IP) for the simplest linear case

$$ \begin{align} \begin{cases} \partial_t u -\Delta u =b_0 &\text{ in }Q, \\ u=f& \text{ on }\Sigma,\\ u(0,x)=0 &\text{ in }\Omega, \end{cases} \end{align} $$

where the aim is to recover an unknown source term $b_0=b_0(x,t)$. Here, $\Delta $ is the Laplacian, but it could also be replaced by a more general second-order elliptic operator whose coefficients are known. Let us consider a function $\varphi \in C^{\infty }([0,T]\times \overline {\Omega })$, which satisfies $\varphi \not \equiv 0$, $\varphi (0,x)=0$ for $x\in \Omega $ and $\varphi =\partial _{\nu } \varphi =0$ on $\Sigma $, where $\partial _\nu \varphi $ denotes the Neumann derivative of $\varphi $ on $\Sigma $.

Then the function $\tilde u:=u+\varphi $ satisfies

$$ \begin{align} \begin{cases} \partial_t \tilde u -\Delta \tilde u =b_0+(\partial_t -\Delta)\varphi &\text{ in }Q, \\ \tilde u=f& \text{ on }\Sigma,\\ \tilde u(0,x)=0 &\text{ in }\Omega. \end{cases} \end{align} $$

Since $u$ and $\tilde u$ have the same initial data at $t=0$ and the same boundary data on $\Sigma $, and since $\partial _{\nu } \varphi =0$ on $\Sigma $ gives $\partial _{\nu } \tilde u=\partial _{\nu } u$ on $\Sigma $, we see that the DN maps of (1.4) and (1.5) are the same. However, by unique continuation properties for parabolic equations (see, for example, [SS87, Theorem 1.1]), the conditions $\varphi =\partial _{\nu } \varphi =0$ on $\Sigma $ and $\varphi \not \equiv 0$ imply that $(\partial _t -\Delta )\varphi \not \equiv 0$, and it follows that $b_0+(\partial _t -\Delta )\varphi \neq b_0$. Consequently, the inverse problem (IP) cannot be uniquely solved.
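
The non-uniqueness in Example 1.1 can also be observed numerically. The following is only an illustrative sketch under simplifying assumptions that are not part of the article: one space dimension ($\Omega=(0,1)$, whereas the article assumes $n\geqslant 2$), an implicit Euler finite-difference scheme, and the hypothetical choices $\varphi(t,x)=t\sin^2(\pi x)$, $b_0(t,x)=1+x$ and $f(t)=t^2$. The function `boundary_flux` and all parameter names are introduced here for illustration only.

```python
import numpy as np

def boundary_flux(source, dirichlet, T=0.1, nx=50, nt=200):
    """Solve u_t - u_xx = source on (0, 1) with u(0, x) = 0 and
    u(t, 0) = u(t, 1) = dirichlet(t), using implicit Euler in time and
    central differences in space. Returns an (nt, 2) array holding the
    outward normal derivative at x = 0 and x = 1 after each time step."""
    x = np.linspace(0.0, 1.0, nx + 1)
    dx, dt = x[1] - x[0], T / nt
    m = nx - 1  # number of interior grid nodes
    lap = (np.diag(-2.0 * np.ones(m))
           + np.diag(np.ones(m - 1), 1)
           + np.diag(np.ones(m - 1), -1)) / dx**2
    step = np.eye(m) - dt * lap  # matrix of one implicit Euler step
    u = np.zeros(nx + 1)
    flux = np.zeros((nt, 2))
    for k in range(1, nt + 1):
        t = k * dt
        g = dirichlet(t)
        rhs = u[1:-1] + dt * source(t, x[1:-1])
        rhs[0] += dt * g / dx**2   # Dirichlet value at x = 0
        rhs[-1] += dt * g / dx**2  # Dirichlet value at x = 1
        u[1:-1] = np.linalg.solve(step, rhs)
        u[0] = u[-1] = g
        # Second-order one-sided differences; the outward normal is
        # -d/dx at x = 0 and +d/dx at x = 1.
        flux[k - 1, 0] = -(-3.0 * u[0] + 4.0 * u[1] - u[2]) / (2.0 * dx)
        flux[k - 1, 1] = (3.0 * u[-1] - 4.0 * u[-2] + u[-3]) / (2.0 * dx)
    return flux

def b0(t, x):
    # Hypothetical source term chosen for the illustration.
    return 1.0 + x

def gauge_term(t, x):
    # (d/dt - d^2/dx^2) applied to phi(t, x) = t * sin(pi x)^2, which
    # vanishes at t = 0 and, with its x-derivative, at x = 0 and x = 1.
    return np.sin(np.pi * x)**2 - 2.0 * np.pi**2 * t * np.cos(2.0 * np.pi * x)

def dirichlet(t):
    # Hypothetical Dirichlet data f, compatible with u(0, x) = 0.
    return t**2

flux_b0 = boundary_flux(b0, dirichlet)
flux_gauged = boundary_flux(lambda t, x: b0(t, x) + gauge_term(t, x), dirichlet)
flux_gap = np.max(np.abs(flux_b0 - flux_gauged))
source_gap = np.max(np.abs(gauge_term(0.05, np.linspace(0.0, 1.0, 51))))
print(f"largest flux discrepancy: {flux_gap:.2e}")
print(f"largest source discrepancy at t = 0.05: {source_gap:.2e}")
```

In this sketch the two source terms differ by an amount of order one, while the computed boundary fluxes agree up to discretization error, which is the discrete counterpart of the two problems (1.4) and (1.5) sharing the same DN map.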

In what follows, we assume that $\alpha \in (0,1)$ and refer to Section 2.1 for the definitions of various function spaces that will show up. Let us describe the gauge symmetry, or gauge invariance, of the inverse problem (IP) in detail. For this, let a function $\varphi \in C^{1+\frac {\alpha }{2},2+\alpha }([0,T]\times \overline {\Omega })$ again satisfy

$$ \begin{align} \begin{cases} \varphi(0,x)=0, & x\in\Omega,\\ \varphi(t,x)=\partial_{\nu(a)}\varphi(t,x)=0, & (t,x)\in\Sigma, \end{cases} \end{align} $$

and consider the mapping $S_\varphi $ from $ C^\infty ({\mathbb R};C^{\frac {\alpha }{2},\alpha }([0,T]\times \overline {\Omega }))$ into itself defined by

$$ \begin{align} S_\varphi b(t,x,\mu):=b(t,x,\mu+\varphi(t,x))+\rho(t,x)\partial_t\varphi(t,x)+\mathcal A(t)\varphi(t,x),\quad (t,x,\mu)\in[0,T]\times\overline{\Omega}\times{\mathbb R}. \end{align} $$

As in the example above, one can easily check that $\mathcal N_{b}=\mathcal N_{S_\varphi b}$.
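
The verification can be sketched as follows, taking $S_\varphi b(t,x,\mu)=b(t,x,\mu+\varphi(t,x))+\rho(t,x)\partial_t\varphi(t,x)+\mathcal A(t)\varphi(t,x)$ as the working form of (1.7) (an assumption of this sketch; the opposite sign convention for $\varphi$ leads to the same conclusion). If $u$ solves (1.2) with nonlinearity $b$ and Dirichlet data $f$, set $\tilde u:=u-\varphi$. Then, in $Q$,

$$ \begin{align*} \rho\,\partial_t\tilde u+\mathcal A(t)\tilde u+S_\varphi b(t,x,\tilde u) &=\rho\,\partial_t u+\mathcal A(t)u-\rho\,\partial_t\varphi-\mathcal A(t)\varphi+b(t,x,\tilde u+\varphi)+\rho\,\partial_t\varphi+\mathcal A(t)\varphi\\ &=\rho\,\partial_t u+\mathcal A(t)u+b(t,x,u)=0. \end{align*} $$

Moreover, since $\varphi(0,\cdot)=0$ in $\Omega$ and $\varphi=\partial_{\nu(a)}\varphi=0$ on $\Sigma$, we have $\tilde u(0,\cdot)=0$ in $\Omega$, $\tilde u=f$ on $\Sigma$ and $\partial_{\nu(a)}\tilde u=\partial_{\nu(a)}u$ on $\Sigma$. Hence, at least formally, $\mathcal N_{S_\varphi b}f=\partial_{\nu(a)}\tilde u|_{\Sigma}=\partial_{\nu(a)}u|_{\Sigma}=\mathcal N_{b}f$.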

In view of this obstruction, the inverse problem (IP) should be reformulated as a problem about determining the semilinear term up to the gauge symmetry described by (1.7). We note that (1.7) induces an equivalence relation on functions in $ C^\infty ({\mathbb R};C^{\frac {\alpha }{2},\alpha }([0,T]\times \overline {\Omega }))$. The corresponding equivalence classes will be called gauge classes:

Definition 1.1 (Gauge class).

We say that two nonlinearities $b_1,b_2\in C^\infty ({\mathbb R};C^{\frac {\alpha }{2},\alpha }([0,T]\times \overline {\Omega }))$ are in the same gauge class, or equivalent up to a gauge, if there is $\varphi \in C^{1+\frac {\alpha }{2},2+\alpha }([0,T]\times \overline {\Omega })$ satisfying (1.6) such that

$$ \begin{align} b_1=S_\varphi b_2. \end{align} $$

Here, $S_\varphi $ is as in (1.7). In the case where we consider a partial data inverse problem, where the normal derivative of solutions is assumed to be known only on $(0,T)\times \tilde \Gamma $, with $\tilde \Gamma $ open in $\partial \Omega $, we assume $\partial _{\nu (a)} \varphi =0$ only on $(0,T)\times \tilde \Gamma $ in (1.6).

Using the above definition of the gauge class and taking into account the obstruction described above, we reformulate the inverse problem (IP) as follows.

- Inverse problem (IP1): Can we determine the gauge class of the semilinear term $b$ from the full or a partial knowledge of the parabolic DN map $\mathcal N_{b}$?

There is a natural additional question raised by (IP1) – namely,

- Inverse problem (IP2): When does the gauge invariance break, leading to a full resolution of the inverse problem (IP)?

One can easily check that it is possible to give a positive answer to problem (IP2) when (1.3) is fulfilled. Nevertheless, as observed in the recent work [LL22b], the resolution of problem (IP2) is not restricted to such situations. The work [LL22b] provided the first examples (in an elliptic setting) of how to use nonlinearity as a tool to break the gauge invariance of (IP).

Let us also remark that, following Example 1.1, when $u\mapsto b(\,\cdot \,,u)$ is affine, corresponding to the case where the equation (1.2) is linear and has a source term, there is no hope of breaking the gauge invariance (1.7) and the answer to (IP2) is, in general, negative. As will be observed in this article, this is no longer the case for various classes of nonlinear terms $b$. We will present cases where we will be able to solve (IP) uniquely. These cases present new instances where nonlinear interaction can be helpful in inverse problems. Nonlinearity has earlier been observed to be a helpful tool by many authors in different situations such as in partial data inverse problems and in anisotropic inverse problems on manifolds; see, for example, [KLU18, FO20, LLLS21, LLLS20, LLST22, KU20a, KU20b, FLL23].

In the present article, we will address both problems (IP1) and (IP2). We will start by considering the problem (IP1) for nonlinear terms, which are quite general. Then, we will exhibit several general situations where the gauge invariance breaks and give an answer to (IP2).

We mainly restrict our analysis to semilinear terms $b$ subjected to the condition that the map $u\mapsto b(\,\cdot \,,u)$ is analytic (the $(t,x)$-dependence of $b(t,x,\mu )$ will not be assumed to be analytic). The restriction to this class of nonlinear terms is motivated by the study of the challenging problems (IP1) and (IP2) in this article. Indeed, even when condition (1.3) is fulfilled, the problem (IP) is still open, in general, for semilinear terms that are not subjected to our analyticity condition (see [KU23] for the most complete results known so far for this problem). Results for semilinear elliptic equations have also been proven for cases when the nonlinear terms are, roughly speaking, globally Lipschitz (see, for example, [IN95, IS94]). For this reason, our assumptions seem reasonable for tackling problems (IP1) and (IP2) and giving the first answers to these challenging problems. Note also that the linear part of (1.2) will be associated with a general class of linear parabolic equations with variable time-dependent coefficients. Consequently, we will present results also for linear equations with full and partial data measurements.

2 Main results

In this section, we will first introduce some preliminary definitions and results required for the rigorous formulation of our problem (IP). Then, we will state our main results for problems (IP1) and (IP2).

2.1 Preliminary properties

From now on, we fix

![]() $\alpha \in (0,1)$

, and we denote by

$\alpha \in (0,1)$

, and we denote by

![]() $C^{\frac {\alpha }{2},\alpha }([0,T]\times X)$

, with

$C^{\frac {\alpha }{2},\alpha }([0,T]\times X)$

, with

![]() $X=\overline {\Omega }$

or

$X=\overline {\Omega }$

or

![]() $X=\partial \Omega $

, the set of functions h lying in

$X=\partial \Omega $

, the set of functions h lying in

![]() $ C([0,T]\times X)$

satisfying

$ C([0,T]\times X)$

satisfying

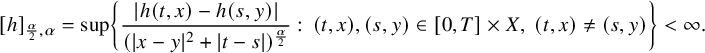

$$ \begin{align*}[h]_{\frac{\alpha}{2},\alpha}=\sup\left\{\frac{|h(t,x)-h(s,y)|}{(|x-y|^2+|t-s|)^{\frac{\alpha}{2}}}:\, (t,x),(s,y)\in [0,T]\times X,\ (t,x)\neq(s,y)\right\}<\infty.\end{align*} $$

$$ \begin{align*}[h]_{\frac{\alpha}{2},\alpha}=\sup\left\{\frac{|h(t,x)-h(s,y)|}{(|x-y|^2+|t-s|)^{\frac{\alpha}{2}}}:\, (t,x),(s,y)\in [0,T]\times X,\ (t,x)\neq(s,y)\right\}<\infty.\end{align*} $$
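
To make the seminorm concrete, here is a minimal sketch that evaluates the quotient above over all pairs of points of a finite grid. It assumes a one-dimensional interval in place of $X$ (the article takes $X=\overline{\Omega}$ or $X=\partial\Omega$ with $n\geqslant 2$), and the function name `parabolic_holder_seminorm` is hypothetical. Since the supremum is taken over grid points only, the returned value is a lower bound for $[h]_{\frac{\alpha}{2},\alpha}$.

```python
import numpy as np

def parabolic_holder_seminorm(h, t, x, alpha):
    """Grid approximation of the parabolic Hoelder seminorm.

    h     : array of shape (len(t), len(x)) with h[i, j] = h(t[i], x[j])
    t, x  : one-dimensional grids in time and space
    alpha : Hoelder exponent in (0, 1)
    """
    T, X = np.meshgrid(t, x, indexing="ij")
    tt, xx = T.ravel(), X.ravel()
    hh = np.asarray(h, dtype=float).ravel()
    # Parabolic distance |x - y|^2 + |t - s| between all pairs of grid points.
    dist = (xx[:, None] - xx[None, :])**2 + np.abs(tt[:, None] - tt[None, :])
    diff = np.abs(hh[:, None] - hh[None, :])
    mask = dist > 0  # exclude the pairs with (t, x) = (s, y)
    return np.max(diff[mask] / dist[mask]**(alpha / 2.0))

# Usage: h(t, x) = x on [0, 1] x [0, 1] with alpha = 1/2.
t_grid = np.linspace(0.0, 1.0, 11)
x_grid = np.linspace(0.0, 1.0, 11)
h_lin = np.tile(x_grid, (t_grid.size, 1))
seminorm_lin = parabolic_holder_seminorm(h_lin, t_grid, x_grid, 0.5)
print(seminorm_lin)
```

The exponent $\frac{\alpha}{2}$ applied to $|x-y|^2+|t-s|$ reflects the parabolic scaling, in which one time derivative is weighted like two space derivatives.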

Then we define the space $ C^{1+\frac {\alpha }{2},2+\alpha }([0,T]\times X)$ as the set of functions $h$ lying in

such that

We consider these spaces with their usual norms, and we refer to [Cho09, p. 4] for more details. Let us also introduce the space

If $r>0$ and $h\in \mathcal K_0$, we denote by

the ball centered at $h$ and of radius $r$ in the space $\mathcal K_0$. We assume also that $b$ fulfills the following condition:

In this article, we assume that there exists $f_0\in \mathcal K_0$ such that (1.2) admits a unique solution $u$ lying in $C^{1+\frac {\alpha }{2},2+\alpha }([0,T]\times \overline {\Omega })$ when $f=f_0$. We note that the existence of $u$ requires the condition (2.2) in order to have compatibility with the initial data at time $t=0$ and the Dirichlet data on the lateral boundary $\Sigma $ (see [LSU88, pp. 319 and 449] for more details).

According to [LSU88, Theorem 6.1, p. 452], [LSU88, Theorem 2.2, p. 429], [LSU88, Theorem 4.1, p. 443], [LSU88, Lemma 3.1, p. 535] and [LSU88, Theorem 5.4, p. 448], the problem (1.2) is well-posed for any $f=f_0\in \mathcal K_0$ if, for instance, there exist $c_1,c_2\geqslant 0$ such that the semilinear term $b$ satisfies the following sign condition

The unique existence of solutions of (1.2) for some

![]() $f\in \mathcal K_0$

is not restricted to such a situation. Indeed, assume that there exists

$f\in \mathcal K_0$

is not restricted to such a situation. Indeed, assume that there exists

![]() $\psi \in C^{1+\frac {\alpha }{2},2+\alpha }([0,T]\times \overline {\Omega })$

satisfying

$\psi \in C^{1+\frac {\alpha }{2},2+\alpha }([0,T]\times \overline {\Omega })$

satisfying

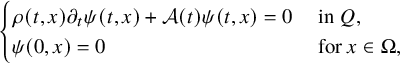

$$ \begin{align*} \begin{cases} \rho(t,x) \partial_t \psi(t,x)+\mathcal A(t) \psi(t,x) = 0 & \text{ in } Q,\\ \psi(0,x) = 0 & \text{ for }x \in \Omega, \end{cases} \end{align*} $$

$$ \begin{align*} \begin{cases} \rho(t,x) \partial_t \psi(t,x)+\mathcal A(t) \psi(t,x) = 0 & \text{ in } Q,\\ \psi(0,x) = 0 & \text{ for }x \in \Omega, \end{cases} \end{align*} $$

such that

![]() $b(t,x,\psi (t,x))=0$

for

$b(t,x,\psi (t,x))=0$

for

![]() $(t,x)\in [0,T]\times \overline {\Omega }$

. Then, one can easily check that (1.2) admits a unique solution when

$(t,x)\in [0,T]\times \overline {\Omega }$

. Then, one can easily check that (1.2) admits a unique solution when

![]() $f=\psi |_{\Sigma }$

. Moreover, applying Proposition 3.1, we deduce that (1.2) will be well-posed when

$f=\psi |_{\Sigma }$

. Moreover, applying Proposition 3.1, we deduce that (1.2) will be well-posed when

![]() $f\in \mathcal K_0$

is sufficiently close to

$f\in \mathcal K_0$

is sufficiently close to

![]() $\psi |_{\Sigma }$

in the sense of

$\psi |_{\Sigma }$

in the sense of

![]() $C^{1+\frac {\alpha }{2},2+\alpha }([0,T]\times \partial \Omega )$

.

$C^{1+\frac {\alpha }{2},2+\alpha }([0,T]\times \partial \Omega )$

.

As will be shown in Proposition 3.1, the existence of $f_0\in \mathcal K_0$ such that (1.2) admits a solution when $f=f_0$ implies that there exists $\epsilon>0$, depending on $a$, $\rho $, $b$, $f_0$, $\Omega $, $T$, such that, for all $f\in \mathbb B(f_0,\epsilon )$, the problem (1.2) admits a solution $u_f\in C^{1+\frac {\alpha }{2},2+\alpha }([0,T]\times \overline {\Omega })$, which is unique in a sufficiently small neighborhood of the solution of (1.2) with boundary value $f=f_0$. Using these properties, we can define the parabolic DN map

$$ \begin{align*} \mathcal N_b:\mathbb B(f_0,\epsilon)\ni f\mapsto \partial_{\nu(a)}u_f|_{\Sigma}. \end{align*} $$

2.2 Resolution of (IP1)

We present our first main results about recovering the gauge class of a semilinear term from the corresponding DN map.

We consider $\Omega _1$ to be an open bounded, smooth and connected subset of ${\mathbb R}^n$ such that $\overline {\Omega }\subset \Omega _1$. We extend $a$ and $\rho $ into functions defined smoothly on $[0,T]\times \overline {\Omega }_1$ satisfying $\rho>0$ and condition (1.1) with $\Omega $ replaced by $\Omega _1$. For all $t\in [0,T]$, we set also

and we consider the compact Riemannian manifold with boundary $(\overline {\Omega }_1,g(t))$.

Assumption 2.1. Throughout this article, we assume that $ (\overline {\Omega }_1,g(t) )$ is a simple Riemannian manifold for all $t\in [0,T]$. That is, we assume that for any point $x\in \overline {\Omega }_1$, the exponential map $\exp _x$ is a diffeomorphism from some closed neighborhood of $0$ in $T_x\hspace {0.5pt} \overline {\Omega }_1$ onto $\overline {\Omega }_1$ and $\partial \Omega _1$ is strictly convex.

From now on, for any Banach space $X$, we denote by $\mathbb A({\mathbb R};X)$ the set of analytic functions on ${\mathbb R}$ as maps taking values in $X$. That is, for any $b\in \mathbb A({\mathbb R};X)$ and $\mu \in {\mathbb R}$, $b$ has a convergent $X$-valued Taylor series on a neighborhood of $\mu$.

Theorem 2.1. Let $a:=(a_{ik})_{1 \leqslant i,k \leqslant n} \in C^\infty ([0,T]\times \overline {\Omega };{\mathbb R}^{n\times n})$ satisfy (1.1) and $\rho \in C^\infty ([0,T]\times \overline {\Omega };{\mathbb R}_+)$. Let $b_j\in \mathbb A({\mathbb R};C^{\frac {\alpha }{2},\alpha }([0,T]\times \overline {\Omega }))$ satisfy (2.2) with $b=b_j$, for $j=1,2$. We also assume that there exists $f_0\in \mathcal K_0$ such that problem (1.2), with $f=f_0$ and $b=b_j$, admits a unique solution for $j=1,2$. Then, the condition

implies that there exists $\varphi \in C^{1+\frac {\alpha }{2},2+\alpha }([0,T]\times \overline {\Omega })$ satisfying (1.6) such that

with $S_\varphi$ the map defined by (1.7).

Remark 2.1. Let us list the data that can be recovered by our methods without the assumption of analyticity of $b$ in the $\mu$-variable. In this case, we can only recover the Taylor series of $b$ in the $\mu$-variable at shifted points. Indeed, make the assumptions of Theorem 2.1, except that the nonlinearities $b_1$ and $b_2$ are not analytic in the $\mu$-variable, and fix $(t,x)$. In this case, by inspecting the proof of Theorem 2.1 (see Section 4), we can show that

where $u_{j,0}(t,x)$, for $j=1,2$, is the solution to (1.2) with coefficient $b=b_j$ and boundary value $f=f_0\in \mathcal {K}_0$. Thus, we see that the formal Taylor series of $b_1(t,x,\,\cdot \,)$ at $u_{1,0}(t,x)$ is that of $b_2(t,x,\,\cdot \,)$ shifted by $u_{2,0}(t,x)-u_{1,0}(t,x)$, which is typically nonzero as we do not assume that we know any solutions to (1.2) a priori.

By assuming analyticity in Theorem 2.1, we are able to connect the Taylor series of $b_1$ and $b_2$ in the $\mu$-variable at different points, which leads to (2.5) in the end. This is one motivation for the analyticity assumption. Note that we do not assume analyticity in the other variables.

For our second result, let us consider a partial data result when $\mathcal A(t)=-\Delta$ (that is, $a=\text {Id}_{\hspace {0.5pt} {\mathbb R}^{n\times n}}$ is the $n\times n$ identity matrix) and $\rho \equiv 1$. More precisely, consider the front and back sets of $\partial \Omega$

with respect to a source $x_0\in {\mathbb R}^n\setminus \overline {\Omega }$. Then, our second main result is stated as follows:

Theorem 2.2. For $n\geqslant 3$ and $\Omega$ simply connected, let $a=(a_{ik})_{1 \leqslant i,k \leqslant n}= \mathrm {Id}_{\,{\mathbb R}^{n\times n}}$ and $\rho \equiv 1$. Let $b_j\in \mathbb A({\mathbb R};C^{\frac {\alpha }{2},\alpha }([0,T]\times \overline {\Omega }))$ satisfy (2.2) with $b=b_j$, for $j=1,2$. We also assume that there exists $f_0\in \mathcal K_0$ such that problem (1.2), with $f=f_0$ and $b=b_j$, admits a unique solution, for $j=1,2$. Fix $x_0\in {\mathbb R}^n\setminus \overline {\Omega }$ and consider $\tilde {\Gamma }$ a neighborhood of $\Gamma _-(x_0)$ on $\partial \Omega$. Then, the condition

implies that there exists $\varphi \in C^{1+\frac {\alpha }{2},2+\alpha }([0,T]\times \overline {\Omega })$ satisfying

such that

We will be able to break the gauge condition $b_1=S_\varphi b_2$ in Theorems 2.1 and 2.2 in various cases. We present these results separately in the next section.

2.3 Breaking the gauge in the sense of (IP2)

In several situations, the gauge class (2.9) can be broken, and one can fully determine the semilinear term $b$ in (1.2) from its parabolic DN map. Below we present classes of nonlinearities for which this phenomenon occurs. We start by considering general elements $b\in \mathbb A({\mathbb R};C^{\frac {\alpha }{2},\alpha }([0,T]\times \overline {\Omega }))$ for which the gauge invariance (2.9) breaks.

Corollary 2.1. Let the conditions of Theorem 2.1 be fulfilled and assume that there exists $\kappa \in C^{\frac {\alpha }{2},\alpha }([0,T]\times \overline {\Omega })$ such that

Then, the condition (2.4) implies that $b_1=b_2$. In the same way, assuming that the conditions of Theorem 2.2 are fulfilled, the condition (2.6) implies that $b_1=b_2$.

Corollary 2.2. Let the conditions of Theorem 2.1 be fulfilled, and assume that there exist $h\in C^{\alpha }(\overline {\Omega })$, $G\in C^{\frac {\alpha }{2},\alpha }([0,T]\times \overline {\Omega })$ and $\theta \in (0,T]$ satisfying the condition

such that

Assume also that the solutions $u_{j,0}$ of (1.2), $j=1,2$, with $f=f_0$ and $b=b_j$, satisfy the condition

Then, the condition (2.4) implies that $b_1=b_2$. Moreover, by assuming that the conditions of Theorem 2.2 are fulfilled, the condition (2.6) implies that $b_1=b_2$.

Now let us consider elements

![]() $b\in \mathbb A({\mathbb R};C^{\frac {\alpha }{2},\alpha }([0,T]\times \overline {\Omega }))$

which are polynomials of the form

$b\in \mathbb A({\mathbb R};C^{\frac {\alpha }{2},\alpha }([0,T]\times \overline {\Omega }))$

which are polynomials of the form

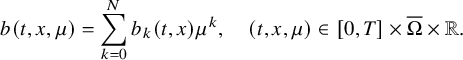

$$ \begin{align} b(t,x,\mu)=\sum_{k=0}^N b_k(t,x)\mu^k,\quad (t,x,\mu)\in[0,T]\times\overline{\Omega}\times{\mathbb R}. \end{align} $$

$$ \begin{align} b(t,x,\mu)=\sum_{k=0}^N b_k(t,x)\mu^k,\quad (t,x,\mu)\in[0,T]\times\overline{\Omega}\times{\mathbb R}. \end{align} $$

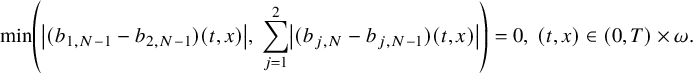

For this class of nonlinear terms, we can prove the following.

Theorem 2.3. Let the condition of Theorem 2.1 be fulfilled, and assume that, for

![]() $j=1,2$

, there exists

$j=1,2$

, there exists

![]() $N_j\geqslant 2$

such that

$N_j\geqslant 2$

such that

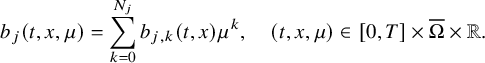

$$ \begin{align} b_j(t,x,\mu)=\sum_{k=0}^{N_j} b_{j,k}(t,x)\mu^k,\quad (t,x,\mu)\in[0,T]\times\overline{\Omega}\times{\mathbb R}. \end{align} $$

$$ \begin{align} b_j(t,x,\mu)=\sum_{k=0}^{N_j} b_{j,k}(t,x)\mu^k,\quad (t,x,\mu)\in[0,T]\times\overline{\Omega}\times{\mathbb R}. \end{align} $$

Let

![]() $\omega $

be an open subset of

$\omega $

be an open subset of

![]() ${\mathbb R}^n$

such that

${\mathbb R}^n$

such that

![]() $\omega \subset \Omega $

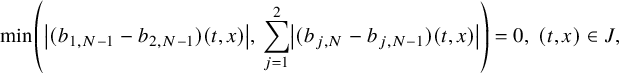

and J is a dense subset of

$\omega \subset \Omega $

and J is a dense subset of

![]() $(0,T)\times \omega $

. We assume also that, for

$(0,T)\times \omega $

. We assume also that, for

![]() $N=\min (N_1,N_2)$

, the conditions

$N=\min (N_1,N_2)$

, the conditions

$$ \begin{align} \min\kern1pt \left(\left|(b_{1,N-1}-b_{2,N-1})(t,x)\right|, \, \sum_{j=1}^2\left|(b_{j,N}-b_{j,N-1})(t,x)\right|\right)=0,\ (t,x)\in J, \end{align} $$

$$ \begin{align} \min\kern1pt \left(\left|(b_{1,N-1}-b_{2,N-1})(t,x)\right|, \, \sum_{j=1}^2\left|(b_{j,N}-b_{j,N-1})(t,x)\right|\right)=0,\ (t,x)\in J, \end{align} $$

hold true. Then the condition (2.4) implies that

![]() $b_1=b_2$

. In addition, assuming that the conditions of Theorem 2.2 are fulfilled, condition (2.6) implies that

$b_1=b_2$

. In addition, assuming that the conditions of Theorem 2.2 are fulfilled, condition (2.6) implies that

![]() $b_1=b_2$

.

$b_1=b_2$

.
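To see why information on the coefficient of $\mu^{N-1}$ can break the gauge for polynomial nonlinearities, the following computation may help. It is only a sketch under two assumptions that are not restated in this section: that the gauge map (1.7) has the form $S_\varphi b(t,x,\mu)=b(t,x,\mu+\varphi)+\rho\,\partial_t\varphi+\mathcal A(t)\varphi$, and that $N_1=N_2=N$. Expanding $b_1=S_\varphi b_2$ with the binomial formula and comparing the coefficients of $\mu^{N}$ and $\mu^{N-1}$ gives

$$ \begin{align*} b_1(t,x,\mu)&=\sum_{k=0}^{N} b_{2,k}(t,x)\,(\mu+\varphi(t,x))^k+\rho\,\partial_t\varphi+\mathcal A(t)\varphi \quad\text{(assumed form of } S_\varphi b_2\text{)},\\ b_{1,N}&=b_{2,N},\\ b_{1,N-1}&=b_{2,N-1}+N\,b_{2,N}\,\varphi. \end{align*} $$

Since $N\geqslant 2$, the terms $\rho\,\partial_t\varphi+\mathcal A(t)\varphi$, which do not depend on $\mu$, only affect the coefficient of $\mu^0$ and play no role in the last two identities. Hence, at any point $(t,x)$ where $b_{1,N-1}=b_{2,N-1}$ and $b_{2,N}\neq 0$, the last identity forces $\varphi(t,x)=0$.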

We make the following remark.

Remark 2.2.

- (i) The preceding theorem, in particular, says that the inverse source problem of recovering a source function $F$ from the DN map of

  $$\begin{align*}\partial_t u-\Delta u + u^2=F \end{align*}$$

  is uniquely solvable. This is in strict contrast to the inverse source problem for the linear equation $\partial _t u-\Delta u =F$, which always has the gauge invariance explained in Example 1.1: the sources $F$ and $\widetilde {F}:=F+\partial _t\varphi -\Delta \varphi $ in $Q$ have the same DN map. Here, the only restrictions on $\varphi$ are given in (1.6), and thus typically $F\neq \widetilde F$.

- (ii) Inverse source problems for semilinear elliptic equations were studied in [LL22b]. There it was shown that if, in the notation of the corollary, $b_{1,N-1}=b_{2,N-1}$ and $b_{1,N}\neq 0$ in $\Omega$ (so that (2.16) and (2.17) hold), then the gauge breaks. With natural replacements, the corollary generalizes [LL22b, Corollary 1.3] in the elliptic setting. This can be seen by inspecting its proof.
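The gauge invariance mentioned in item (i) of Remark 2.2 can be checked by a direct computation. The sketch below assumes that (1.6) requires $\varphi(0,\cdot)=0$ in $\Omega$ and $\varphi=\partial_\nu\varphi=0$ on the lateral boundary; this is an assumption, since (1.6) is not restated in this section. Writing $\tilde u:=u+\varphi$ for a solution $u$, we get

$$ \begin{align*} \partial_t\tilde u-\Delta\tilde u&=(\partial_t u-\Delta u)+(\partial_t\varphi-\Delta\varphi)=F+\partial_t\varphi-\Delta\varphi=\widetilde F,\\ \partial_t\tilde u-\Delta\tilde u+\tilde u^2&=(\partial_t u-\Delta u+u^2)+(\partial_t\varphi-\Delta\varphi)+2u\varphi+\varphi^2. \end{align*} $$

In the linear case, $\tilde u$ has the same initial, Dirichlet and Neumann data as $u$ under the assumed conditions on $\varphi$, so $F$ and $\widetilde F$ cannot be distinguished by boundary measurements. With the quadratic term, the extra contribution $2u\varphi+\varphi^2$ depends on the solution $u$, and hence on the boundary data, so it cannot be absorbed into a single modified source. This is consistent with, but does not by itself prove, the uniqueness statement in item (i).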

Let us then consider nonlinear terms $b\in \mathbb A({\mathbb R};C^{\frac {\alpha }{2},\alpha }([0,T]\times \overline {\Omega }))$ of the form

We start by considering nonlinear terms of the form (2.19) with $b_2\equiv 1$.

Theorem 2.4. Let the conditions of Theorem 2.1 be fulfilled, and assume that, for $j=1,2$, there exists $h_j\in \mathbb A({\mathbb R};C^{\frac {\alpha }{2}}([0,T]))$ such that

Assume also that, for all $t\in (0,T)$, there exist $\mu _t\in {\mathbb R}$ and $n_t\in \mathbb N$ such that

Moreover, we assume that

and that for all $t\in (0,T)$, there exists $x_t\in \partial \Omega$ such that

Then, the condition (2.4) implies that $b_1=b_2$. Moreover, assuming that the conditions of Theorem 2.2 are fulfilled, the condition (2.6) implies that $b_1=b_2$.

Under a stronger assumption imposed on the expression $h$, we can also consider nonlinear terms of the form (2.19) with $b_2\not \equiv 1$.

Theorem 2.5. Let the conditions of Theorem 2.1 be fulfilled, and assume that, for $j=1,2$, there exists $h_j\in \mathbb A({\mathbb R};C^{\frac {\alpha }{2}}([0,T]))$ such that

Assume also that, for all $t\in (0,T)$, there exists $n_t\in \mathbb N$ such that

Moreover, we assume that

and that for all $t\in (0,T)$, there exists $x_t\in \partial \Omega$ such that

Then, the condition (2.4) implies that $b_1=b_2$. Moreover, assuming that the conditions of Theorem 2.2 are fulfilled, the condition (2.6) implies that $b_1=b_2$.

Finally, we consider nonlinear terms $b\in \mathbb A({\mathbb R};C^{\frac {\alpha }{2},\alpha }([0,T]\times \overline {\Omega }))$ satisfying

Corollary 2.3. Let the conditions of Theorem 2.1 be fulfilled, and assume that, for $j=1,2$, there exists $G\in \mathbb A({\mathbb R};C^{\alpha }(\overline {\Omega }))$ such that

Assume also that one of the following conditions is fulfilled:

- (i) We have $b_{1,2}=b_{2,2}$, and for all $x\in \Omega$, there exist $n_x\in \mathbb N$ and $\mu _x\in {\mathbb R}$ such that

  $$ \begin{align} \partial_\mu^{n_x} G(x,\,\cdot\,)\not\equiv0 \text{ and } \partial_\mu^{n_x} G(x,\mu_x)=0,\quad x\in\Omega. \tag{2.30} \end{align} $$

- (ii) For all $x\in \Omega$, there exists $n_x\in \mathbb N$ such that

  $$ \begin{align} \partial_\mu^{n_x} G(x,\,\cdot\,)\not\equiv0\text{ and } \partial_\mu^{n_x} G(x,0)=0,\quad x\in\Omega. \tag{2.31} \end{align} $$

Moreover, we assume that condition (2.26) is fulfilled. Then, the condition (2.4) implies that $b_1=b_2$. In addition, by assuming that the conditions of Theorem 2.2 are fulfilled, condition (2.6) implies that $b_1=b_2$.

Remark 2.3. Let us observe that the results of Theorems 2.4 and 2.5 and Corollary 2.3 are mostly based on the conditions (2.21) and (2.26) imposed on nonlinear terms of the form (2.19), and on the conditions (2.30) and (2.31) imposed on nonlinear terms of the form (2.28). These conditions are rather general, and they will be fulfilled in various situations for different classes of functions. For instance, assuming that the function $h_1$ takes the form

with $P,Q\in \mathbb A({\mathbb R};C^{\frac {\alpha }{2}}([0,T]))$, condition (2.21) will be fulfilled if we assume that there exists $\sigma \in C^{\alpha /2}([0,T])$ such that

Such a condition will, of course, be fulfilled when $h_1(t,\mu )=\mu e^\mu $, $(t,\mu )\in [0,T]\times {\mathbb R}$.

More generally, let

![]() $\sigma \in C^{\frac {\alpha }{2}}([0,T])$

be arbitrary chosen, and for each

$\sigma \in C^{\frac {\alpha }{2}}([0,T])$

be arbitrary chosen, and for each

![]() $t\in [0,T]$

, consider

$t\in [0,T]$

, consider

![]() $N_t\in \mathbb N$

. Assuming that the function

$N_t\in \mathbb N$

. Assuming that the function

![]() $h_1$

satisfies the following property

$h_1$

satisfies the following property

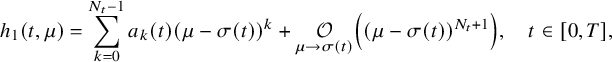

$$ \begin{align}h_1(t,\mu)=\sum_{k=0}^{N_t-1}a_k(t)(\mu-\sigma(t))^k+ \underset{\mu\to\sigma(t)}{\mathcal O}\left((\mu-\sigma(t))^{N_t+1}\right),\quad t\in[0,T],\end{align} $$

$$ \begin{align}h_1(t,\mu)=\sum_{k=0}^{N_t-1}a_k(t)(\mu-\sigma(t))^k+ \underset{\mu\to\sigma(t)}{\mathcal O}\left((\mu-\sigma(t))^{N_t+1}\right),\quad t\in[0,T],\end{align} $$

with

![]() $a_{k}\in C^{\frac {\alpha }{2}}([0,T])$

,

$a_{k}\in C^{\frac {\alpha }{2}}([0,T])$

,

![]() $k\in \mathbb N\cup \{0\}$

, one can easily check that condition (2.21) will be fulfilled since we have

$k\in \mathbb N\cup \{0\}$

, one can easily check that condition (2.21) will be fulfilled since we have

Condition (2.26) will be fulfilled under the same condition provided that

![]() $h_1$

satisfies (2.32) with

$h_1$

satisfies (2.32) with

![]() $\sigma \equiv 0$

. Moreover, let

$\sigma \equiv 0$

. Moreover, let

![]() $g\in C^{\alpha }(\overline {\Omega })$

, and for each

$g\in C^{\alpha }(\overline {\Omega })$

, and for each

![]() $x\in \Omega $

, consider

$x\in \Omega $

, consider

![]() $N_x\in \mathbb N$

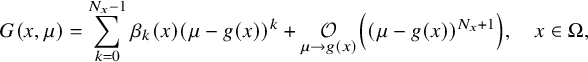

. Assuming that the function G in (2.30) satisfies the property

$N_x\in \mathbb N$

. Assuming that the function G in (2.30) satisfies the property

$$ \begin{align}G(x,\mu)=\sum_{k=0}^{N_x-1}\beta_k(x)(\mu-g(x))^k+ \underset{\mu\to g(x)}{\mathcal O}\left((\mu-g(x))^{N_x+1}\right),\quad x\in \Omega,\end{align} $$

$$ \begin{align}G(x,\mu)=\sum_{k=0}^{N_x-1}\beta_k(x)(\mu-g(x))^k+ \underset{\mu\to g(x)}{\mathcal O}\left((\mu-g(x))^{N_x+1}\right),\quad x\in \Omega,\end{align} $$

with functions

![]() $\beta _{k}\in C^{\alpha }(\overline {\Omega })$

,

$\beta _{k}\in C^{\alpha }(\overline {\Omega })$

,

![]() $k\in \mathbb N\cup \{0\}$

, it is clear that condition (2.30) will be fulfilled since we have

$k\in \mathbb N\cup \{0\}$

, it is clear that condition (2.30) will be fulfilled since we have

The same is true for condition (2.31) when G satisfies (2.33) with

![]() $g\equiv 0$

.

$g\equiv 0$

.
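As a concrete check of the type of condition appearing in (2.30), consider the hypothetical choice $G(x,\mu)=\mu e^{\mu}$, independent of $x$, which mirrors the example $h_1(t,\mu)=\mu e^\mu$ mentioned above. This choice is made here only for illustration and is not taken from the statements of the results. An induction on $n$, using $\partial_\mu\left((\mu+n)e^{\mu}\right)=(\mu+n+1)e^{\mu}$, gives

$$ \begin{align*} \partial_\mu^{n}\left(\mu e^{\mu}\right)=(\mu+n)e^{\mu},\quad n\in\mathbb N,\qquad\text{so that}\qquad \partial_\mu G(x,\,\cdot\,)\not\equiv 0\ \text{ and }\ \partial_\mu G(x,-1)=0,\quad x\in\Omega. \end{align*} $$

Thus the two requirements displayed in (2.30) hold for this $G$ with $n_x=1$ and $\mu_x=-1$ for every $x\in\Omega$.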

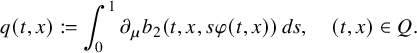

Finally, via previous observations, we can also determine the order coefficients for linear parabolic equations.

Corollary 2.4 (Global uniqueness with partial data). Adopting all notations in Theorem 2.2, let $q_j=q_j(t,x)\in C^\infty ([0,T]\times \overline {\Omega })$ and $b_j(t,x,\mu )=q_j(t,x)\mu $ for $j=1,2$. Then (2.4) implies $q_1=q_2$ in $Q$.

We mention that we could also prove that the assumptions of Theorem 2.1 and $b_j(t,x,\mu )=q_j(t,x)\mu $, $j=1,2$, imply $q_1=q_2$.
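The linear case of Corollary 2.4 can be read off from the gauge relation by comparing coefficients. The following sketch assumes that, for $a=\mathrm{Id}$ and $\rho\equiv 1$, the gauge map (1.7) takes the form $S_\varphi b(t,x,\mu)=b(t,x,\mu+\varphi)+\partial_t\varphi-\Delta\varphi$, and that the conclusion of Theorem 2.2 yields $b_1=S_\varphi b_2$; neither displayed relation is restated in this section. With $b_j(t,x,\mu)=q_j(t,x)\mu$, this reads

$$ \begin{align*} q_1\mu=q_2(\mu+\varphi)+\partial_t\varphi-\Delta\varphi\ \text{ for all } \mu\in{\mathbb R}\quad\Longrightarrow\quad q_1=q_2\ \text{ and }\ \partial_t\varphi-\Delta\varphi+q_2\varphi=0. \end{align*} $$

Comparing the coefficients of $\mu$ gives $q_1=q_2$ at once, while the terms independent of $\mu$ give a linear homogeneous parabolic equation for $\varphi$.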

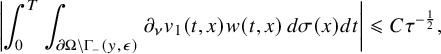

2.4 Comments about our results

To the best of our knowledge, Theorems 2.1 and 2.2 give the first positive answer to the inverse problem (IP1) for semilinear parabolic equations. In addition, the results of Theorems 2.1 and 2.2 extend the analysis of [LL22b] that considered a problem similar to (IP1) for elliptic equations, but which did not fully answer the question raised by (IP1). In that sense, Theorems 2.1 and 2.2 give the first positive answer to (IP1) for a class of elliptic PDEs as well. While Theorem 2.1 is stated for a general class of parabolic equations, Theorem 2.2 gives a result with measurements restricted to a neighborhood of the back set with respect to a source $x_0\in {\mathbb R}^n\setminus \overline {\Omega }$ in the spirit of the most precise partial data results stated for linear elliptic equations such as [KSU07]. Note that in contrast to [KSU07], the source $x_0$ is not necessarily outside the convex hull of $\overline {\Omega }$ and, as observed in [KSU07], when $\Omega$ is convex, the measurements of Theorem 2.2 can be restricted to any open set of $\partial \Omega$. Even for linear equations, Theorems 2.1 and 2.2 improve in precision and generality the earlier works of [CK18b, Isa91] dealing with the determination of time-dependent coefficients appearing in linear parabolic equations.

We give a positive answer to the problem (IP2) and show that the gauge breaks for three different classes of semilinear terms:

- 1) Semilinear terms with prescribed information in Corollaries 2.1 and 2.2,

- 2) Polynomial semilinear terms in Theorem 2.3,

- 3) Semilinear terms with separated variables of the form (2.19) or (2.28) in Theorems 2.4 and 2.5 and in Corollary 2.3.

This seems to be the most complete overview of situations where one can give a positive answer to problem (IP2). While [Reference Liimatainen and LinLL22b] considered also such phenomena for polynomial nonlinear terms and some specific examples, the conditions of Corollary 2.1 and 2.2, Theorems 2.4 and 2.5 and Corollary 2.3, leading to a positive answer for (IP2), seem to be new. In Remark 2.3, we gave several concrete and general examples of semilinear terms satisfying the conditions of Theorems 2.4, 2.5 and Corollary 2.3.

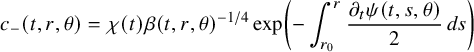

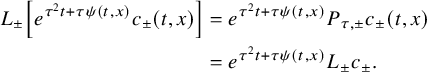

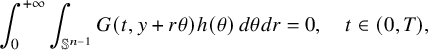

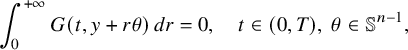

The proof of our results is based on a combination of the higher-order linearization technique, the application of a suitable class of geometric optics solutions for parabolic equations, Carleman estimates, properties of holomorphic functions and different properties of parabolic equations. Theorem 2.1 is deduced from the linearized result of Proposition 4.1 that we prove by using geometric optics solutions for parabolic equations. These solutions are built by using the energy estimate approach introduced in the recent work [Fei23].

We mention that Assumption 2.1 is used in this work mainly for two purposes. Our construction of geometric optics solutions is based on the use of global polar normal coordinates on the manifolds

![]() $(\overline {\Omega }_1, g(t) )$

,

$(\overline {\Omega }_1, g(t) )$

,

![]() $t\in [0,T]$

. In addition, Assumption 2.1 guarantees the injectivity of the geodesic ray transform on the manifolds

$t\in [0,T]$

. In addition, Assumption 2.1 guarantees the injectivity of the geodesic ray transform on the manifolds

![]() $ ( \overline {\Omega }_1, g(t))$

,

$ ( \overline {\Omega }_1, g(t))$

,

![]() $t\in [0,T]$

, which is required in our proofs of Theorems 2.1 and 2.2. It is not clear at the moment how Assumption 2.1 can be relaxed in the construction of geometric optics solutions for parabolic equations of the form considered in this paper.

$t\in [0,T]$

, which is required in our proofs of Theorems 2.1 and 2.2. It is not clear at the moment how Assumption 2.1 can be relaxed in the construction of geometric optics solutions for parabolic equations of the form considered in this paper.

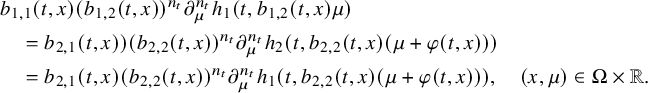

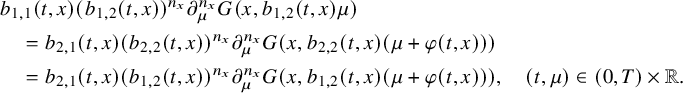

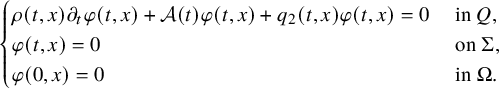

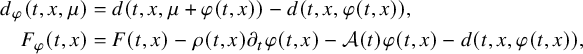

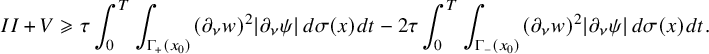

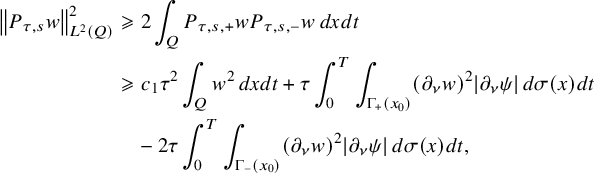

This allows us to consider problem (IP1) for a general class of semilinear parabolic equations with variable coefficients. In Theorem 2.2, we combine this class of geometric optics solutions with a Carleman estimate with boundary terms stated in Lemma 6.1 in order to restrict the boundary measurements to a part of the boundary. Note that the weight under consideration in Lemma 6.1 is not a limiting Carleman weight for parabolic equations. This is one reason why we cannot apply such Carleman estimates to also restrict the support of the Dirichlet input in Theorem 2.2.

It is worth mentioning that our results for problem (IP1) and (IP2) can be applied to inverse source problems for nonlinear parabolic equations. This important application is discussed in Section 8. There we show how the nonlinear interaction allows to solve this problem for general classes of source terms, depending simultaneously on the time and space variables. Corresponding problems for linear equations cannot be solved uniquely (see Example 1.1 or, for example, [Reference Kian, Soccorsi, Xue and YamamotoKSXY22, Appendix A]). In that sense, our analysis exhibits a new consequence of the nonlinear interaction, already considered for examples in [Reference Feizmohammadi and OksanenFO20, Reference Kurylev, Lassas and UhlmannKLU18, Reference Lassas, Liimatainen, Lin and SaloLLLS21, Reference Lassas, Liimatainen, Lin and SaloLLLS20, Reference Liimatainen, Lin, Salo and TyniLLST22, Reference Krupchyk and UhlmannKU20b, Reference Krupchyk and UhlmannKU20a, Reference Feizmohammadi, Liimatainen and LinFLL23]), by showing how nonlinearity can help for the resolution of inverse source problems for parabolic equations.

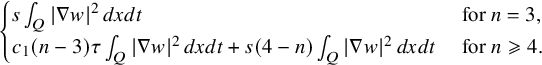

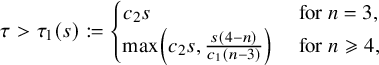

We remark that while Theorem 2.1 is true for $n\geqslant 2$, we can only prove Theorem 2.2 for dimension $n\geqslant 3$. The fact that we cannot prove Theorem 2.2 for $n=2$ is related to the Carleman estimate of Lemma 6.1 that we can only derive for $n\geqslant 3$. Since this Carleman estimate is a key ingredient in the proof of Theorem 2.2, we need to exclude the case $n=2$ in the statement of this result.

Finally, let us observe that, under the assumption of simplicity stated in Assumption 2.1, the result of Theorem 2.1 can be applied to the determination of a semilinear term for reaction-diffusion equations on a Riemannian manifold with boundary equipped with a time-dependent metric.

2.5 Outline of the paper

This article is organized as follows. In Section 3, we consider the forward problem by proving the well-posedness of (1.2) under suitable conditions, and we recall some properties of the higher-order linearization method for parabolic equations. Section 4 is devoted to the proof of Theorem 2.1, while in Section 5, we prove Proposition 4.1. In Section 6, we prove Theorem 2.2, and in Section 7, we consider our results related to problem (IP2). Finally, in Section 8, we discuss the applications of our results to inverse source problems for parabolic equations. In the Appendix A, the outline of the proof of the Carleman estimates of Lemma 6.1 is presented.

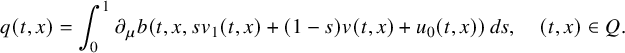

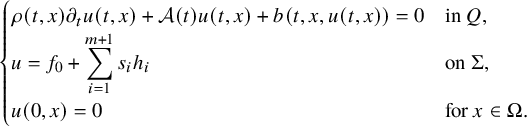

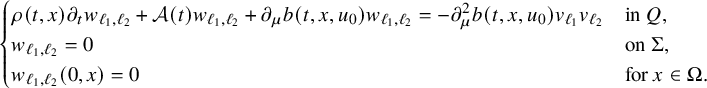

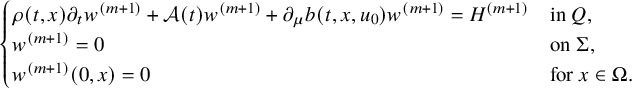

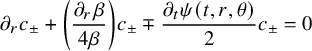

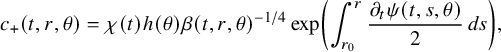

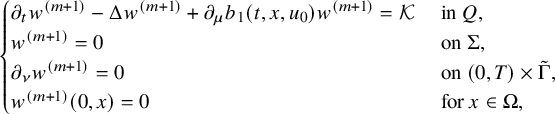

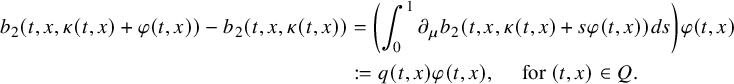

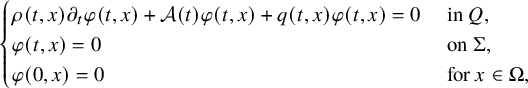

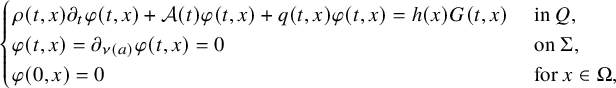

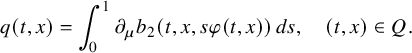

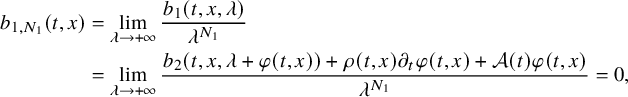

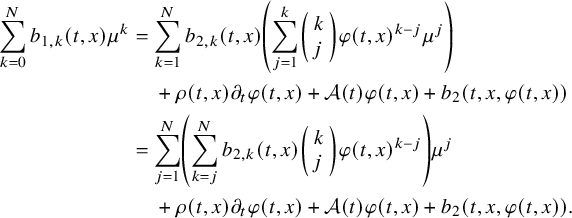

3 The forward problem and higher-order linearization

Recall that in this article we assume that there is a solution $u_0$ to (1.2) corresponding to a lateral boundary datum $f_0$.

3.1 Well-posedness for Dirichlet data close to $f_0$

In this subsection, we consider the well-posedness for the problem (1.2), whenever the boundary datum $f$ is sufficiently close to $f_0$ with respect to $C^{1+\frac {\alpha }{2},2+\alpha }([0,T]\times \partial \Omega )$. For this purpose, we consider the Banach space $\mathcal {K}_0$ with the norm of the space $C^{1+\frac {\alpha }{2},2+\alpha }([0,T]\times \partial \Omega )$. Our local well-posedness result is stated as follows.

Proposition 3.1. Let $a:=(a_{ik})_{1 \leqslant i,k \leqslant n} \in C^\infty ([0,T]\times \overline {\Omega };{\mathbb R}^{n\times n})$ satisfy (1.1), $\rho \in C^\infty ([0,T]\times \overline {\Omega };{\mathbb R}_+)$ and $b\in C^\infty ({\mathbb R};C^{\frac {\alpha }{2},\alpha }([0,T]\times \overline {\Omega }))$. We assume also that there exists a boundary value $f_0\in \mathcal K_0$ such that the problem (1.2) with $f=f_0$ admits a unique solution $u_0\in C^{1+\frac {\alpha }{2},2+\alpha }([0,T]\times \overline {\Omega })$. Then there exists $\epsilon>0$ depending on $a$, $\rho $, $b$, $f_0$, $\Omega $, $T$, such that, for all $f\in \mathbb B(f_0,\epsilon )$, the problem (1.2) admits a unique solution $u_f\in C^{1+\frac {\alpha }{2},2+\alpha }([0,T]\times \overline {\Omega })$ satisfying

Moreover, the map $\mathbb B(f_0,\epsilon )\ni f\mapsto u_f\in C^{1+\frac {\alpha }{2},2+\alpha }([0,T]\times \overline {\Omega })$ is $C^\infty $ in the Fréchet sense.
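As an informal numerical illustration of this statement (not part of the proof), one may check on a toy problem that a small perturbation of the Dirichlet data produces a proportionally small perturbation of the solution. The sketch below makes several assumptions that are not in the article: one space dimension instead of $n\geqslant 2$, $\rho \equiv 1$, $\mathcal A(t)=-\partial_x^2$, $b(t,x,\mu)=\mu^3$, and an explicit finite-difference scheme. The names `solve`, `f0`, `h` and `interior_gap` are hypothetical.

```python
def solve(f, n=20, T=0.1, cfl=0.4):
    """Explicit finite differences for u_t - u_xx + u**3 = 0 on (0,T) x (0,1),
    with u(t,0) = f(t), u(t,1) = 0 and u(0,x) = 0 (toy 1D assumption).
    Returns the grid values at the final time T."""
    dx = 1.0 / n
    steps = int(round(T / (cfl * dx * dx)))  # dt/dx^2 = cfl <= 1/2 for stability
    dt = T / steps
    u = [0.0] * (n + 1)
    for k in range(1, steps + 1):
        new = u[:]
        for i in range(1, n):
            lap = (u[i - 1] - 2.0 * u[i] + u[i + 1]) / (dx * dx)
            new[i] = u[i] + dt * (lap - u[i] ** 3)
        new[0] = f(k * dt)  # lateral Dirichlet datum at x = 0
        new[n] = 0.0        # homogeneous datum at x = 1
        u = new
    return u


def f0(t):
    # Reference datum, compatible with the zero initial condition.
    return t * t


def h(t):
    # Direction of the perturbation f - f0 = eps * h.
    return t * t


def interior_gap(eps):
    """Sup norm over interior nodes of v = u_f - u_0 at time T, for f = f0 + eps*h."""
    u0 = solve(f0)
    uf = solve(lambda t: f0(t) + eps * h(t))
    return max(abs(a - b) for a, b in zip(uf[1:-1], u0[1:-1]))


gap1 = interior_gap(0.1)
gap2 = interior_gap(0.05)
ratio = gap1 / gap2  # close to 2: v depends almost linearly on eps here
```

Halving the size of the perturbation roughly halves $u_f-u_0$, which is the discrete counterpart of the first-order behavior of the smooth map $f\mapsto u_f$ near $f_0$.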

Proof. Let us first observe that we may look for a solution $u_f$ of the form $u_f=u_0+v$, where $v$ solves

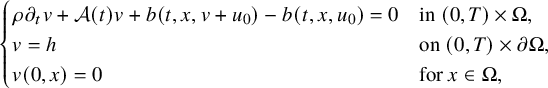

$$ \begin{align} \begin{cases} \rho\hspace{0.5pt} \partial_t v+\mathcal A(t) v+ b(t,x,v+u_0)-b(t,x,u_0)=0 & \mbox{in}\ (0,T)\times\Omega , \\ v=h &\mbox{on}\ (0,T)\times\partial\Omega,\\ v(0,x)=0 &\text{for } x\in\Omega, \end{cases} \end{align} $$

with $h:=f-f_0$. Therefore, it is enough for our purpose to show that there exists $\epsilon>0$ depending on $a$, $\rho $, $b$, $f_0$, $\Omega $, $T$, such that for $h\in \mathbb B(0,\epsilon )$, the problem (3.2) admits a unique solution $v_h\in C^{1+\frac {\alpha }{2},2+\alpha }([0,T]\times \overline {\Omega })$ satisfying

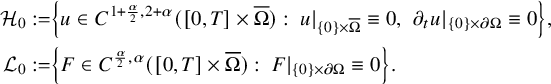

We introduce the spaces

$$ \begin{align*} \begin{aligned} \mathcal H_0:=&\left\{u\in C^{1+\frac{\alpha}{2},2+\alpha}([0,T]\times\overline{\Omega}):\ u|_{\{0\}\times\overline{\Omega}}\equiv 0,\ \left. \partial_tu\right|{}_{\{0\}\times\partial\Omega}\equiv 0\right\}, \\ \mathcal L_0:=&\left\{F\in C^{\frac{\alpha}{2},\alpha}([0,T]\times\overline{\Omega}):\ F|_{\{0\}\times\partial\Omega}\equiv 0\right\}. \end{aligned} \end{align*} $$

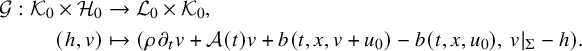

Then, let us introduce the map $\mathcal G$ defined by

$$ \begin{align*} \mathcal G:\mathcal K_0\times\mathcal H_0 &\to \mathcal L_0\times\mathcal K_0 ,\\ (h,v) &\mapsto\left(\rho \hspace{0.5pt}\partial_t v+\mathcal A(t) v+ b(t,x,v+u_0)-b(t,x,u_0), \, v|_{\Sigma}-h\right). \end{align*} $$

We will find a solution to (1.2) by applying the implicit function theorem to the map $\mathcal G$. Using the fact that $b\in C^\infty ({\mathbb R};C^{\frac {\alpha }{2},\alpha }([0,T]\times \overline {\Omega }))$, it follows that the map $\mathcal G$ is $C^\infty $ on $\mathcal K_0\times \mathcal H_0$ in the Fréchet sense. Moreover, we have $\mathcal G(0,0)=(0,0)$ and

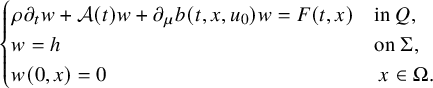

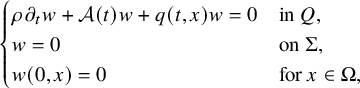

In order to apply the implicit function theorem, we will prove that the map $\partial _v\mathcal G(0,0)$ is an isomorphism from $\mathcal H_0$ to $\mathcal L_0\times \mathcal K_0$. For this purpose, let us fix $(F,h)\in \mathcal L_0\times \mathcal K_0$, and let us consider the linear parabolic problem

$$ \begin{align} \begin{cases} \rho \partial_t w+\mathcal A(t) w+ \partial_\mu b(t,x,u_0)w=F(t,x) & \mbox{in}\ Q , \\ w=h &\mbox{on}\ \Sigma,\\ w(0,x)=0 &\text{for } x\in\Omega. \end{cases} \end{align} $$

Applying [LSU88, Theorem 5.2, Chapter IV, page 320], we deduce that problem (3.4) admits a unique solution $w\in \mathcal H_0$ satisfying

for some constant $C>0$ independent of $w$, $F$ and $h$. From this result, we deduce that $\partial _v\mathcal G(0,0)$ is an isomorphism from $\mathcal H_0$ to $\mathcal L_0\times \mathcal K_0$.
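The link between the linear problem (3.4) and the map $\partial _v\mathcal G(0,0)$ can be checked by differentiating $\mathcal G$ in the direction $w\in \mathcal H_0$ at $(0,0)$. The following computation is a sketch: the auxiliary parameter $s\in {\mathbb R}$ is introduced here for illustration, and we read $\partial _\mu b$ as the derivative of $b$ with respect to its last argument, as in (3.4).

$$ \begin{align*} \partial_v\mathcal G(0,0)w &=\left.\frac{d}{ds}\,\mathcal G(0,sw)\right|_{s=0} \\ &=\left.\frac{d}{ds}\Big(\rho\hspace{0.5pt}\partial_t (sw)+\mathcal A(t)(sw)+b(t,x,u_0+sw)-b(t,x,u_0),\ sw|_{\Sigma}\Big)\right|_{s=0} \\ &=\left(\rho\hspace{0.5pt}\partial_t w+\mathcal A(t) w+\partial_\mu b(t,x,u_0)w,\ w|_{\Sigma}\right). \end{align*} $$

Solving $\partial _v\mathcal G(0,0)w=(F,h)$ for $w\in \mathcal H_0$ is therefore the same as solving (3.4), so the unique solvability of (3.4) gives the bijectivity of $\partial _v\mathcal G(0,0)$.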

Therefore, applying the implicit function theorem (see, for example, [RR06, Theorem 10.6]), we deduce that there exists $\epsilon>0$ depending on $a$, $b$, $\rho $, $f_0$, $\Omega $, $T$, and a smooth map $\psi $ from $\mathbb B(0,\epsilon )$ to $\mathcal H_0$, such that, for all