Introduction

A trained workforce helps ensure the implementation of high-quality clinical trials. For clinical trials that involve drugs or devices, principles of good clinical practice (GCP) have been established for clinical research professionals (investigators and research staff) to protect human subjects and promote scientific integrity [1]. These GCP principles, while applicable to any clinical trial, have traditionally been geared towards clinical research professionals who develop and test drugs, devices, and biologics and are required to adhere to Food and Drug Administration regulations. Thus, most training courses in GCP have been designed with biomedical clinical trials in mind and course content has not traditionally covered issues specific to conduct of social and behavioral clinical trials.

The need for training social and behavioral clinical research professionals in GCP became evident when the National Institutes of Health (NIH) revised its definition of a clinical trial to include social and behavioral intervention development and testing [2]. Investigators who receive NIH funding for social and behavioral clinical trials must now complete training in GCP [3]. In collaboration with members of multiple Clinical and Translational Science Award (CTSA)-funded institutions nationwide, we recently developed the Best Practices in Social and Behavioral Research (SBR) e-Learning Course for social and behavioral clinical research professionals [4]. Developed under the auspices of the National Center for Advancing Translational Sciences, the course is intended to enhance training for clinical research professionals [5]. Course content was developed by first mapping potential topics to competency domains identified through the parent project [5], then determining module topics through a review of several resources on GCP and social and behavioral research training, and finally assigning content experts to supply content in conjunction with an instructional designer; this content was vetted by the work group and outside consultants. The course, supported by the use of social and behavioral research examples, provides tailored GCP training to highlight the most salient issues in research conduct. After the course was completed, the lead course development team at the University of Michigan worked with volunteer CTSA sites to install the course at their institutions, recruit learners, and track learner outcomes.

The purpose of this study was to evaluate the feasibility and effectiveness of the Best Practices in SBR e-Learning Course. We hypothesized that the course would be feasible to administer and that participants would rate the course as relevant, engaging, and impactful to their work after the initial survey and at 2 months follow-up.

Materials and Methods

A collaborative network was formed after the University of Michigan reached out to other CTSA hubs via the National Center for Advancing Translational Sciences Workforce Development Committee. Sites interested in participating in the evaluation of this pilot online training course were invited to participate if they had the capability to load the course onto a customized site-based learning management system (LMS) and could share course data gathered (e.g., attempt, duration, and passing rates of the embedded knowledge checks after each course module). Also, collaborating sites needed to obtain human subjects approval from their institution for this study, recruit participants to take the course, and establish a system of administering an online survey at the 2 time points. Initially 5 sites agreed to participate in the initiative; however, one site dropped out due to lack of staff effort to devote to the project. The remaining institutions that participated in this study included: the University of Michigan, University of Florida, Boston University, and the University at Buffalo. The course was integrated into each institution’s LMS and all people who were interested at each institution could take the course whether or not they consented to be in the research study. Those interested in taking the course were asked to participate in the research to help the study team gain insight into how the content was received and to guide any future refinements to the course.

Participant Recruitment

University of Michigan

Coinciding with the January 2017 NIH requirement for GCP training for all clinical trials study personnel, the pilot study commenced at the University of Michigan. Many individuals enrolled in the course to fulfill the training requirement and this information was shared while advertising the course. In addition, we worked with the University’s Office of Research, which agreed to accept the Best Practices in SBR Course as one of several options to fulfill the new training requirement. The Office of Research maintained a Frequently Asked Questions webpage that listed the GCP trainings available, including this course. Also, the University of Michigan’s Medical School had recently formed several clinical trial support offices around specific research areas, one of which was for behavioral research. This office disseminated information about the availability of this course to faculty and staff who had already been identified as conducting behavioral research.

Information about the course was disseminated through emails, newsletters, social media, flyers, and in-person communications to faculty, postdocs, staff, graduate and undergraduate students, and Michigan Institute for Clinical and Health Research scholars in the Medical School, School of Public Health, Nursing School, Kinesiology, Institute for Healthcare Policy and Innovation, the Institute for Research on Women and Gender, Institute for Social Research, Center for Bioethics & Social Sciences in Medicine, and at the Flint and Dearborn campuses, reaching ~18,500 people. All communications included information stating that the course would satisfy the NIH requirement, a course weblink, and an email address where questions about the course could be sent. A consent form was provided on the LMS when people registered to take the course. Participants could opt in or out of the study without affecting their ability to take the course. If they opted into the study by indicating consent, they were emailed surveys immediately following the course and 2 months after the course was taken.

University of Florida

Participants in the study were recruited from the population of staff who are involved in SBR or clinical research currently working in the research enterprise. Using university email lists targeting the research workforce, individuals were sent an email invitation, encouraging individuals to participate by taking the Best Practices in SBR Course. Several flyers and posters were used across the Academic Health Center. Research leaders at the Academic Health Center were debriefed on the study and asked to disseminate the recruitment email and links to their stakeholders. Messages included information about using the course to satisfy the NIH requirement, a link directly to the course, and an email address to send questions about the course. Contact information for the study team was provided to participants within the consent form which was presented via secure online hyperlink embedded in the recruitment emails linking to the consent and the survey in Qualtrics. The study commenced early in 2017 coinciding with efforts to accommodate NIH’s requirement for all clinical trials study personnel to undertake GCP training. Though it was hoped that many people would see taking this training as an opportunity to fulfill NIH’s requirement, a majority of potential participants had already taken a GCP course elsewhere by the time the course was advertised. In addition, we contacted the University of Florida’s Clinical and Translational Science Institute (CTSI) Clinical Research Professionals Council, a network of ~1000 research coordinators and professionals who had received CTSI’s professional training to disseminate in-person communications and information about the course. 
Information was also circulated to research faculty, clinical fellows, junior faculty, postdocs, and research fellows as well as doctoral students through the CTSI Translational Workforce Development Program which includes: TL1 Training Grant, Career and Professional Development Programs for Graduate Students and Postdocs with Clinical and Translational Science Interdisciplinary Concentrations; Training and Research Academy for Clinical and Translational Science; KL2 Training Grant; and Mentor Academy.

University at Buffalo

The University’s CTSI, the University’s Clinical Research Office, and the SBR Support Office collaborated to develop and implement a recruitment strategy. An email was sent through the CTSI listserv (which spans all of the University’s health science schools and affiliated institutes, reaching 8400 people) to reach faculty, staff, and students affiliated with the health sciences. All marketing emails included an attached flyer, a link to register for the course, and an email address for any inquiries. Participants consented to participate in the survey on the LMS and, if they consented, took the surveys on that system.

Boston University

The Boston Medical Center/Boston University Medical Campus Office of Human Research Affairs agreed to accept the Best Practices in SBR Course as one of several options to fulfill the new GCP training requirement from NIH. The requirement and the option to fulfill it by taking this course were communicated through the Office of Human Research Affairs Web site and mentioned at monthly seminars and in newsletters to clinical researchers. In addition, emails were sent to the newly formed Research Professionals Network announcing the course. All learners reviewed an online consent form for the study before participation. Learners could choose to take the course without participating in the pilot evaluation. Once learners finished the course, they were presented with the option to take part in the research survey, which was preceded by the consent statement that described the purpose of the research, that participation was voluntary, and that survey responses would be anonymous.

Procedures

After consenting, participants in this study took the Best Practices in SBR Course. The course consists of 9 modules that cover topics related to GCP but are tailored to social and behavioral clinical trials [4]. At the conclusion of the course, participants were asked to complete a survey and rate the course and their experience with it, as detailed in the training outcomes section. Participants were also asked to provide demographic information such as age, sex, and characteristics of their job including their role and years of experience. A follow-up survey was sent to participants ~2 months later. The goal of the follow-up survey was to better understand if and how the course impacted participants’ enactment of work responsibilities.

Training Outcomes

The main outcomes of interest were (a) perceived relevance of the online training course to participants’ work; (b) perception of how engaging the course was; and (c) the degree to which they reported working differently as a result of the training. Participants rated outcomes on a Likert scale, where 1=strongly disagree to 7=strongly agree. In response to open-ended survey items, participants were asked to describe ways in which the course was useful or if and how they used the resources provided in the course.

Data Analysis

From each institutional LMS, data indicating the amount of time taken to complete each course module and the number of attempts on the knowledge checks were collected, aggregated, and summarized using descriptive statistics (e.g., means, standard deviations, medians, interquartile ranges). Median time and interquartile range were used in the analysis when extreme outliers in time were observed. Quantitative analysis was performed on the survey items that requested discrete answers. Responses to open-ended questions were analyzed qualitatively. Responses to the initial survey items regarding the relevance of training, how engaging the training was, and whether participants worked differently were summarized for each sample. Ratings on these outcomes were compared by experience and background variables using t-tests.
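To illustrate why the median and interquartile range are preferred when a few learners leave a module open for hours, the following is a minimal sketch using only the Python standard library. The completion times are hypothetical values chosen for illustration and are not study data; the function name `summarize_times` is likewise an assumption.

```python
import statistics


def summarize_times(minutes):
    """Return mean, SD, median, and IQR bounds for a list of completion times."""
    # quantiles(..., n=4) returns the three quartile cut points (Q1, Q2, Q3).
    q1, _, q3 = statistics.quantiles(minutes, n=4, method="inclusive")
    return {
        "mean": statistics.mean(minutes),
        "sd": statistics.stdev(minutes),
        "median": statistics.median(minutes),
        "iqr": (q1, q3),
    }


# Hypothetical module completion times in minutes (NOT study data).
# The last value mimics a learner who left the module open for 8 hours.
module_minutes = [12.0, 15.5, 18.0, 20.0, 21.0, 24.5, 27.0, 480.0]

summary = summarize_times(module_minutes)
print(summary)
```

In this made-up sample the single extreme value pulls the mean above the third quartile, while the median stays close to the typical learner's time.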

To examine the impact of the course on current work, we examined how reports of working differently on the initial survey and 2 experience variables (number of years of experience in conducting clinical trials and number of years of experience in social behavioral research) predicted report of working differently as a result of the training 2 months later. An ordinary least squares regression model was used. The model was limited to these few key experience variables to avoid over-specification given the sample size. Statistical analyses supported the use of the linear model and showed that the model was robust when testing regression assumptions of normality, heteroscedasticity, outlying residuals, and misspecified models [6, 7].
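The structure of such a model can be sketched as follows. This is an illustrative ordinary least squares fit with three predictors, written with the Python standard library only; it is not the analysis code used in the study. The data rows are hypothetical (not study data), and the helper names `ols_fit` and `predict` are assumptions introduced for this example.

```python
def ols_fit(rows, y):
    """Least-squares coefficients [intercept, b1, ...] via the normal equations."""
    X = [[1.0] + [float(v) for v in r] for r in rows]  # add intercept column
    k = len(X[0])
    # Augmented matrix [X'X | X'y].
    A = [
        [sum(x[i] * x[j] for x in X) for j in range(k)]
        + [sum(x[i] * yi for x, yi in zip(X, y))]
        for i in range(k)
    ]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(k):
        pivot_row = max(range(col, k), key=lambda r: abs(A[r][col]))
        A[col], A[pivot_row] = A[pivot_row], A[col]
        pivot = A[col][col]
        A[col] = [v / pivot for v in A[col]]
        for r in range(k):
            if r != col:
                factor = A[r][col]
                A[r] = [vr - factor * vc for vr, vc in zip(A[r], A[col])]
    return [A[i][k] for i in range(k)]


def predict(coefs, row):
    """Predicted outcome for one row of predictor values."""
    return coefs[0] + sum(b * v for b, v in zip(coefs[1:], row))


# Hypothetical data (NOT study data). Each row holds:
# (initial "work differently" rating 1-7, years in clinical trials, years in SBR)
rows = [
    (6, 1, 2), (5, 3, 1), (4, 10, 8), (7, 2, 4), (3, 15, 12),
    (5, 6, 3), (6, 4, 9), (2, 20, 15), (4, 8, 2), (7, 1, 1),
]
# Hypothetical follow-up "work differently" ratings (1-7).
y = [6, 5, 4, 6, 3, 5, 5, 2, 4, 7]

coefs = ols_fit(rows, y)
residuals = [yi - predict(coefs, r) for r, yi in zip(rows, y)]

n, p = len(y), len(rows[0])
y_mean = sum(y) / n
ss_res = sum(e * e for e in residuals)
ss_tot = sum((yi - y_mean) ** 2 for yi in y)
r2 = 1 - ss_res / ss_tot
# Adjusted R^2 penalizes the number of predictors relative to sample size.
adj_r2 = 1 - (1 - r2) * (n - 1) / (n - p - 1)

print("coefficients:", [round(b, 3) for b in coefs])
print("R^2:", round(r2, 3), "adjusted R^2:", round(adj_r2, 3))
```

A statistics package would additionally report standard errors, p-values, and the regression diagnostics described above; the sketch only shows how the coefficients and adjusted R² arise from the data.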

Qualitative Analysis

This study was guided by the transtheoretical change model (TTM), which asserts that individual behavioral change occurs within stages [8] along a continuum of behavior modification. Stages of this model provide insight as to whether individuals are ready for change or subject to relapse [9]. Analyzing participant growth by the TTM guides selection of specific educational activities designed to enhance their development.

Working in pairs, authors (S.L.M. and E.C., H.R.K. and L.S.B.H.) analyzed each participant response (n=105) to the following open-ended questions:

(1) If you will work differently as a result of having received the training please describe how using the space below.

(2) You indicated that you have worked differently as a result of having received this training. Please describe the ways that you will work differently as a result of having participated in the course.

(3) Please describe why you may or may not have worked differently as a result of having participated in the course.

(4) Please describe why you will not work differently as a result of having participated in the course.

The coders individually coded each response according to TTM stage: Pre-contemplation, Contemplation, Preparation, and Action. Next, the coders met, compared their responses, and reached consensus on any differences in initial coding.

Results

In total, 294 individuals across the partner sites who participated in the Best Practices in SBR Course consented to participate in the pilot evaluation. Differences in course and survey administration among sites are outlined in Table 1. Over 73% (n=217) of participants completed the training course, while over 49% (n=107) took the survey.
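The percentages above follow from the reported counts. A minimal check is sketched below, assuming the completion rate uses consented participants as the denominator and the survey rate uses course completers (the Table 1 footnote notes that one site's rate was instead computed among consented participants, so the pooled figure is approximate). The variable names are illustrative.

```python
# Counts reported in the Results section.
consented = 294   # consented to the pilot evaluation
completed = 217   # completed the training course
surveyed = 107    # took either the initial or follow-up survey

completion_rate = completed / consented
survey_rate = surveyed / completed

print(f"Completion rate: {completion_rate:.1%}")
print(f"Survey response rate: {survey_rate:.1%}")
```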

Table 1 Best practices training administration by site

* n=217 of completers.

† Survey response rates reflect the proportion of completers who took either the initial or follow-up survey. Participants at the University of Florida were invited to take the surveys when they started the training; the survey response rate for this institution is therefore the proportion of consented participants, not of completers as it is for the other sites. No survey data were available from the University at Buffalo, as indicated by NA.

Course Data from the LMS

Time taken to complete each of the 9 modules of the course and attempts of the knowledge checks after each module were summarized for participants who completed the course and had available data. The timing and attempt course data were not available for the 15 participants at University at Buffalo; the remaining sample was 202 participants (see Table 2). The course data support the hypothesis that the course was feasible to administer. The course was designed so that participation would take no longer than 4 hours. The median time participants took to complete the course was ~190 minutes, or 3.2 hours, with a median time of 19.8 minutes to complete each module. The median time taken by module varied from 3 minutes for the conclusion/wrap-up module to 26.2 minutes for the research protocol module. Knowledge check questions were attempted a mean of 2 times [standard deviation (SD)=0.7] to pass with 100% accuracy. The average number of attempts participants needed to pass the knowledge checks varied across modules, ranging from a low of 1.6 times (SD=1.0) to a high of 2.5 times (SD=1.7).

Table 2 Completion time and attempt data total and per module among course completers (n=202)

AE, adverse event; IQR, interquartile range.

* For introduction (n=199); informed consent communication, quality control and assurance, and research misconduct (n=201).

† Course completion data was made available by the University of Michigan, University of Florida, and Boston University. Summary data for completion time was available from University at Buffalo which was included in the calculation of total course time to complete (n=217).

Survey Results

The response rate of completing either survey was higher (49%) than the response rates for the initial survey conducted during the training (46%) and much higher than that of the follow-up survey (29%) conducted 2 months later. The University at Buffalo did not collect survey responses from individual participants, as shown in the summary in Table 1.

Characteristics of Survey Respondents

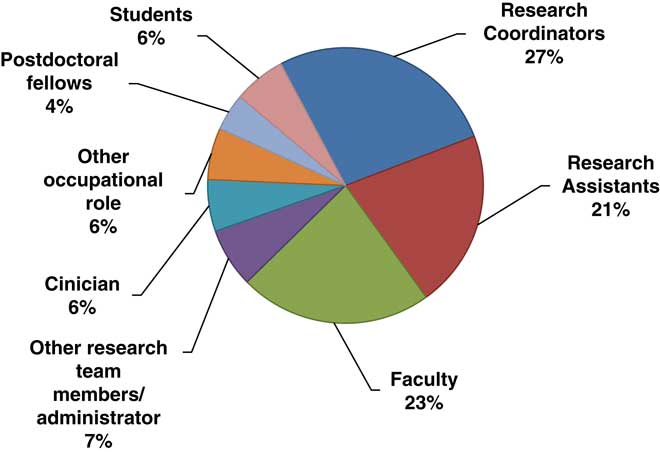

Over 70% (n=75) of participants who responded to the survey reported that they took the course to fulfill training requirements, although 17% participated in the training voluntarily, without the expectation of fulfilling any training requirements. Many participants were currently conducting or supporting clinical trials research. Of the 105 individuals who indicated their involvement in clinical trials, 15% were not currently involved in any clinical trials research, and almost 17% were involved in more than one type of clinical trial. A majority of these studies (n=112) were social and behavioral trials (72%), although many were drug, device (17%), or biological clinical trials (8%). Just over 12% of participants supported clinical trials through multiple roles. Of the total number of roles in clinical trials they reported holding (n=103), almost 70% were Research Coordinators, Research Assistants or other team members and roughly 30% were Co- or Principal Investigators. Similarly, about 17% of participants reported having multiple occupational roles regardless of their involvement in clinical trials research. The diversity of roles held by participants is shown in Fig. 1.

Fig. 1 Survey respondents by research role (115 roles reported).

The participants who responded to the survey also reported that they had considerable prior research experience. On average, they reported having been engaged in social and behavioral research for 7.1 years (n=78, SD=8.3) and in clinical trials research for 6.5 years (n=84, SD=7.7). Information about participants’ highest postsecondary degrees was also collected from 93 individuals. Roughly a third of respondents had earned their Doctorate (32%, n=30), Masters (33%, n=31), or Bachelors (32%, n=30) degrees. Two individuals (2%) reported their highest credential was an Associate’s degree. Information about other types of professional credentials, such as SOCRA and ACRP certifications was solicited from respondents, but too few participants responded to the questions to enable conclusions to be drawn about participants’ acquisition of other professional credentials.

Training Outcomes

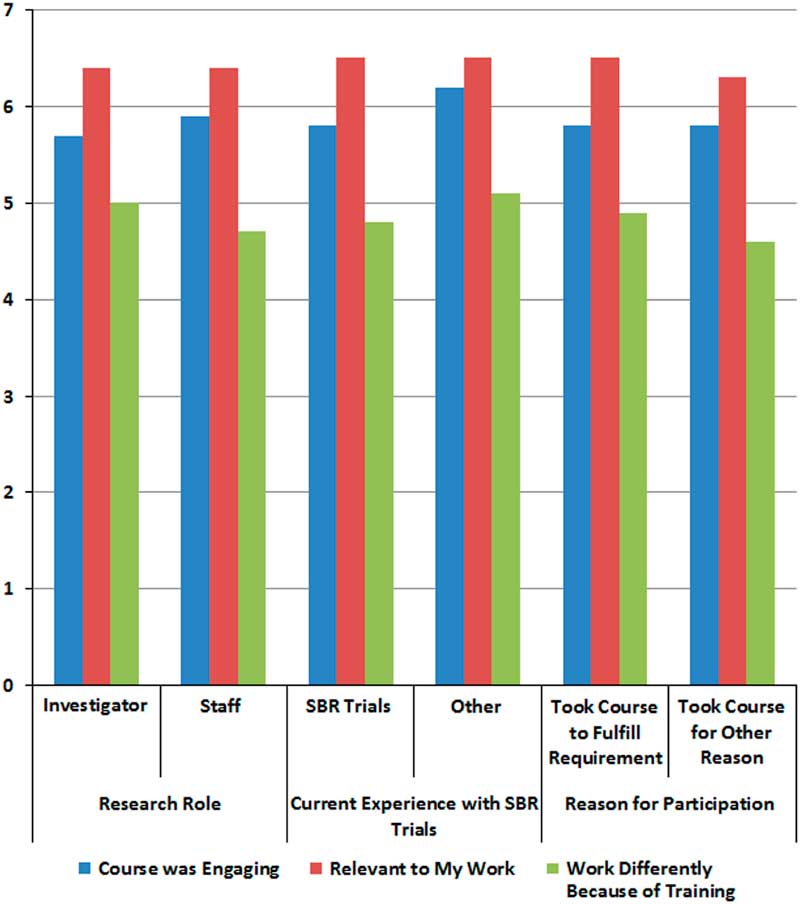

We hypothesized that participants would rate the course as relevant, engaging, and impactful to their work, and this was largely supported. Of the 3 training outcomes, which 90 participants rated on a scale from 1 to 7 at baseline, they most strongly endorsed the course’s relevance (6.4±1.0), followed by the training being engaging (5.8±1.2), and working differently as a result of the training (4.7±1.6). There were no significant differences in outcomes by research role, experience in social and behavioral trials, or by whether the training was taken as a requirement or not (see Fig. 2). Participants who did not currently work on social and behavioral trials showed a trend toward rating the course as more engaging than those who did (p≤0.10). When asked during the initial survey if they would recommend the course to their colleagues, 96% (n=70) agreed. Two months after completing the training, 90% (n=48) agreed.

Fig. 2 Perceptions of course from initial survey by participant characteristics.

An examination of the predictors of working differently as a result of the training 2 months after training showed that 32% of the variance was explained by the model (F(3,23), p=0.001; adjusted R²=0.32) that included participants’ initial endorsement of working differently as a result of the training (β=0.50, p=0.005) and years of experience conducting clinical trials research (β=−0.07, p=0.001). The results indicate that the endorsement of working differently at baseline and fewer years of experience are associated with working differently 2 months later.

Qualitative responses about if and how people worked differently as a result of the training were analyzed according to TTM stage of change and are summarized in Table 3. The responses described a variety of ways in which respondents were thinking about working differently or making actionable changes. There was a trend towards more active change at the 2 month survey. In the initial survey, 44 participants responded to the question in which they were asked to describe how they would work differently. Eight responses were in the Pre-contemplation stage, and 18 each were in the Contemplation and Preparation stages. At the 2 month follow-up survey, 30 participants responded to the question about working differently as a result of having received this training. There were 5 responses in the Pre-contemplation stage, 6 in Contemplation, 5 in Preparation, and 14 in Action. Almost half of the follow-up responses (14/30) were descriptions of the ways that participants implemented ideas culled from the training experience.
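The shift toward later stages can be made concrete with the counts reported above. The sketch below is illustrative only; it assumes that no initial-survey responses were coded as Action (inferred because the three reported counts already sum to the 44 respondents), and the helper name `later_stage_share` is hypothetical.

```python
# Stage counts reported in the text. The initial Action count of 0 is an
# inference: 8 + 18 + 18 already equals the 44 initial respondents.
initial = {"Pre-contemplation": 8, "Contemplation": 18, "Preparation": 18, "Action": 0}
follow_up = {"Pre-contemplation": 5, "Contemplation": 6, "Preparation": 5, "Action": 14}


def later_stage_share(counts):
    """Proportion of responses coded as Preparation or Action."""
    total = sum(counts.values())
    return (counts["Preparation"] + counts["Action"]) / total


initial_share = later_stage_share(initial)
follow_up_share = later_stage_share(follow_up)
action_share = follow_up["Action"] / sum(follow_up.values())

print(f"Later-stage share, initial survey: {initial_share:.1%}")
print(f"Later-stage share, follow-up survey: {follow_up_share:.1%}")
print(f"Action share at follow-up: {action_share:.1%}")
```

Because the two surveys had different respondents and sample sizes, these proportions describe the coded responses rather than within-person change.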

Table 3 Stages of change, definitions, and selected quotations about working differently as a result of the course

An example of Pre-contemplation in the initial survey is:

I liked the use of real life examples of research impropriety and other situations which I can foresee easily occurring. …These modules gave a good foundation for the principles of protection of human subjects and ethical research.

In the comment given above and for other comments in the Pre-contemplation stage, participants described what they liked about the training content. They remarked about the value of the training; however, they offered no indication of how the learning experiences could be applied to their research roles.

At follow-up, the Pre-contemplation stage was exemplified by the following statement:

[I] haven’t worked differently; either not applicable or already knew.

In this example and others in this stage, participants expressed no intention to change behavior in the foreseeable future.

The following response was an example of participants in the Contemplation stage after the initial survey:

The training was a great way to enforce a non-complacent approach to doing research. It will motivate me to think thoroughly in addition to providing materials to look back to for reference/refreshing.

At the follow-up survey, the following statement exemplifies the Contemplation stage:

Being more mindful of consenting process and overall operational procedures; The training provided clear examples of how members of a research team can face special challenges that may not be captured by [a different training program].; I have limited capacity to approve new forms or [standard operating procedures] (SOPs). However, I have more awareness.

This statement and others in this stage described participants becoming more mindful and conscious and suggesting that they had developed a new understanding of their job responsibilities. Although how and what types of changes the participants might make in the future is not articulated, it is important to recognize that in the absence of awareness, change is unlikely. Participants in this stage explained how they are thinking about the connection between training and their roles and indicate a newfound awareness.

The following response exemplified participants in the Preparation stage after the initial survey:

Engage in QA (Quality Assurance) activities during the study more frequently. Maintain better record keeping of study related activities.

In the comment above and others indicative of the Preparation stage, participants expressed an intention of how they planned to change their behavior, such as by becoming more mindful or by engaging more frequently in quality assurance activities and maintaining better records.

At follow-up, the Preparation stage was exemplified by the following statement:

I am more knowledgeable about what should/should not be done in clinical practice, so I am able to communicate what I know to others in order to make sure our entire research team is conducting good clinical practice.

The statement above and others at this stage not only signify participant awareness, but they also specify their intentions to make changes.

At 2 months follow-up, almost half of participants who responded (14/30) described a change that was classified in the Action stage:

I have created better protocol documents for future study coordinators.

[I] started writing SOPs for different task areas, have implemented a data check for QA, and constantly discuss the importance of fidelity to our assessment protocol and procedures.

These statements describe the behavioral changes that participants have made since the training. Creating better protocol documents, writing standard operating procedures, and implementing data checks are clearly actionable steps.

Discussion

This study reported the first multisite evaluation of the Best Practices in SBR Course, which was designed to provide training for clinical research professionals of social and behavioral clinical trials. This was a critical investigation because the recently revised NIH definition of clinical trials, in which social and behavioral trials are characterized in a similar way to clinical trials of drugs, devices, or biologics, represents a paradigm shift in social and behavioral research. Social and behavioral investigators come from a variety of disciplines (such as psychology, rehabilitation, social science) [10], and many have not previously considered their studies to be classified under the umbrella of clinical trials. For some clinical research professionals, GCP was a new term, and this was the first specific GCP training they had taken. For these reasons, we were interested in examining characteristics of how the course was taken via LMS and participants’ perceptions of the course’s relevance to their work and potential impact of the materials. Having both quantitative and qualitative responses to questions was thought to be a fundamental step towards increasing our understanding of these training outcomes.

Across the participating sites, the sample consisted of participants who were responsible for the conduct, management, and oversight of clinical trials, highly credentialed, and had considerable prior research experience. Overall, the rate of course completion compared favorably to those of other open online courses recently offered to clinical professionals [11, 12]. The survey response rates across the sites are also comparable with those reported in similar training evaluation studies, even for those conducted months after the end of a course [13, 14]. Although there is no standard completion rate for evaluation surveys in clinical and translational research training courses, the sustained participation of individuals in the pilot study was notable because their robust participation enables more reliable conclusions to be derived from analyses of their LMS activity and survey responses. There was considerable variation in the number and proportion of participants who completed the training across the 4 partner sites. This variability is likely due in large part to differences in the research training requirements among the institutions. Differences in the administration of the evaluation surveys also likely contributed to variation in the response rates across sites. These differences reflect an inherent variation in the technological systems and administrative requirements of these research universities.

The data derived from each institution’s LMS suggest that the course could be completed efficiently and effectively by the participants. Participants struggled most often to pass the knowledge check in the first training module. This could be due to the likelihood that some participants started the online training but quickly paused to do other things while leaving the training open on their computers. The modest amount of time and effort participants spent completing each module suggests that they could finish the training with considerable efficiency. Future participants should expect to set aside about 4 hours to complete the course and know that it is unlikely they will pass each module’s knowledge check on their first attempt.

Training Outcomes

Most participants in the course reported that the training was highly relevant and engaging and, ultimately, that it had a positive impact on their work regardless of participant characteristics. Results from the regression model examining predictors of working differently at 2 months suggest that clinical research professionals with fewer years of experience conducting clinical trials may find the course more impactful on their work than those with more clinical trials experience. Taken together with several qualitative comments about the course providing a good foundation of knowledge in best practices, it appears important to target this training to clinical research professionals who are early in their career or new to social and behavioral clinical trials. Interestingly, the qualitative analysis showed a progression of change in the sample over the 2 months from Pre-contemplation to Action according to the TTM, given that a higher proportion of responses fell in the later stages of change (Preparation and Action) at the 2 month follow-up. Actionable changes were often noted in areas of adverse event reporting, consenting participants in studies, and being more careful in record keeping. These findings suggest that this course is an important tool for training that may have lasting effects on improving scientific rigor in social and behavioral clinical trials. The results from both the LMS and survey provide strong preliminary support for the use of this course to train clinical research professionals in GCP in social and behavioral trials. This study also provides support for the feasibility of a multisite evaluation in which these data can be aggregated across a larger sample than could be ascertained from one site alone.

Limitations

Some deviations from the pilot protocol across the participating sites should be noted. There were differences in the ways the different sites utilized their LMS to provide access to the training and to send out follow-up survey invitations. There were also great differences in the IRB review processes at each site, which caused the implementation to be asynchronous across the sites. Much of the variation in data collection was because different LMS were used to host the training. Some systems did not capture information about the time participants spent completing the training and about their responses to the embedded knowledge checks. Also, one LMS could only administer follow-up surveys anonymously. These differences necessitated the analysis of subsets of data collected from some sites and not others to interpret the pilot results. Lastly, our multivariate analysis using regression involved a small subsample using data from participants at both the initial and follow-up surveys. Although the regression diagnostics did not indicate violations of key assumptions for analysis, larger studies are warranted to strengthen the ability to generalize these findings.

Recommendations for Implementation of the Best Practices in SBR Course

The pilot results support some recommendations regarding the use of LMS to evaluate the implementation and impact of training modules. Administrators of LMS should be involved as key personnel as early as possible in such projects to ensure that their institution’s systems can support as many of the requirements of the program evaluation as possible, particularly when conducting multisite studies. In addition, robust beta testing of these systems can help investigators identify deviations accurately and diagnose their sources quickly. Finally, close coordination and communication between study sites are essential, as they are for any multisite study.

Future Directions

In addition to the need for a larger multisite evaluation, a further enhancement to better understand the impact of the training could be the implementation of more sophisticated competency-based assessments, such as evaluation of problem-solving through a case study or the use of objective assessments. Objective assessments could involve assessing rates of adverse event reporting or protocol deviations at study sites. Although this course appears to provide a good overview of GCP for social and behavioral clinical trials for clinical research professionals, it may also be a good source of training for partners in communities who are vital members of a research team. Community-based site staff should be provided a foundation of training and mentorship to strengthen scientific rigor outside of highly controlled medical or healthcare settings. Developing training models for these partners is an important next step in improving the design and conduct of social and behavioral clinical trials. More research is needed to determine how this course can be used for these members of the workforce.

Conclusions

Overall, these evaluation results suggest that the Best Practices in SBR Course was easy for participants to take and yielded positive outcomes. Participants demonstrated that the course could be completed efficiently, and the course has the potential to yield high completion rates compared with many voluntary online training offerings. The ways in which participants reported working differently suggest that the training motivated change across the full continuum of behavior modification. The findings also support the validity of estimating the impact of similar training programs on professional practice by measuring participants’ perceptions of their intent to work differently as a result of the training they have received.

Acknowledgments

The authors gratefully acknowledge the faculty members from academic institutions nationally who graciously donated their time and expertise to this project.

Financial Support

This research was supported by the National Center for Advancing Translational Sciences grant no. UL1TR002240 (George Mashour, PI) and supplement grant no. 3UL1TR000433-08S1 (Thomas Shanley, PI), and by the National Center for Advancing Translational Sciences grant nos. UL1TR001412 (Timothy Murphy, PI) and KL2TR001413 (Margarita L. Dubocovich, PI) awarded to the University at Buffalo.

Conflicts of Interest

The authors have no conflicts of interest to declare.