1. Introduction

1.1 Motivation

Loss severity distributions and aggregate loss distributions in insurance often have a shape that cannot easily be modelled with the common distributions implemented in software packages. In the range of smaller losses and around the mean the observed densities often look somewhat like asymmetric bell curves, being skewed to the right with one positive mode. This is not a problem in itself as well-known models like the Lognormal distribution have exactly this kind of geometry. Alternatively, distributions like the Exponential are available for cases where a strictly decreasing density seems more adequate. However, it often occurs that the traditional models, albeit incorporating the desired kind of skewness towards the right, have a less heavy tail than what the data indicate (Punzo et al., 2018) – if we restrict the fit to the very large losses, the Pareto distribution or variants thereof often seem the best choice. But those typical heavy-tailed distributions rarely have a shape that fits well below the tail area.

In practice, bad fits in certain areas can sometimes be ignored. When we are mainly focused on the large majority of small and medium losses, we can often accept a bad tail fit and work with, for example, the Lognormal distribution. It might have a tail that is too light, so we will underestimate the expected value; however, often the large losses are so rare that their numerical impact is very low. Conversely, when we are focused on extreme quantiles like the 200-year event or want to rate a policy with a high deductible or a reinsurance layer, we only need an exact model for the large losses. In such situations we could work with a distribution that models smaller losses wrongly (or completely ignores them). There is a wide range of situations where the choice of the model can be made focusing just on the specific task to be accomplished, while some inaccuracy in less important areas is willingly accepted.

However, exactness over the whole range of loss sizes, from the many smaller to the very few large ones, becomes more and more important. For example, according to a modern holistic risk management/capital modelling perspective we do not only look at the average loss (which often depends mainly on the smaller losses) but also want to derive the probability of very bad scenarios (which depend heavily on the tail) – namely, from one and the same model. Further, it has become popular to study various levels of retentions for a policy, or for a portfolio to be reinsured. A traditional variant of this is what reinsurers call exposure rating, see, for example, Parodi (2014), Mack & Fackler (2003). For such analyses one needs a distribution model that is accurate both in the smaller loss area, which is where the retention typically applies, and in the tail area, whose impact on the expected loss becomes higher the higher the retention is chosen. In other words: one needs a flexible full model with a heavy tail.

Such situations require actuaries to abandon the distribution models they know best and proceed to somewhat more complex ones. In the literature and in software packages there is no lack of such models. For example, the seminal book by Klugman et al. (2008) provides generalisations of the Gamma and the Beta distribution having up to four parameters and providing the desired geometries. However, despite the availability of such models, actuaries tend to stick to their traditional distributions. This is not (only) due to nostalgia – it has to do with a common experience of actuarial work: lack of empirical data. In an ever changing environment it is not easy to gather a sufficient amount of representative data to reliably infer several distribution parameters. A way to detect and possibly avoid big estimation errors is to check the inferred parameters with market experience, namely with analogous results calculated from other empirical data stemming from similar business. It would be best to see at a glance whether the inferred parameters are realistic, which means in particular that the parameters must be interpretable in some way.

To this end, it would be ideal to work with models looking initially like one of the traditional distributions but having a tail shape like Pareto with interpretable parameters. Such models can be constructed by piecewise definition on the lower versus large-loss areas; they are called spliced or composite distributions.

1.2 Scientific context

Spliced models have been treated in the applied statistics literature for some decades, see the survey paper by Scarrott & MacDonald (2012) for an early overview. Recently the models have received a lot of attention in actuarial publications. We will discuss references in Section 6; let us highlight just a few here. A simple Lognormal-Pareto variant was presented early by Knecht & Küttel (2003). The seminal paper for the topic is Scollnik (2007) proposing a more general Lognormal-Pareto/GPD model that has inspired many authors to study variants thereof, in particular alternatives for the Lognormal part, and to apply them to insurance loss data. Grün & Miljkovic (2019) give a compact overview of this research, followed by an inventory of over 250 spliced distributions, which were notably all implemented and applied.

1.3 Objective

The main scope of our paper is to collect and generalise a number of spliced models having a Pareto or GPD tail, and to design a general framework of variants and extensions of the Pareto distribution family. Special attention is paid to the geometry of distribution functions and to intuitive interpretations of parameters. We show where such intuition can ease parameter inference from scarce data, e.g. by combining information from different sources.

1.4 Outline

Section 2 explains why reinsurers like the single-parameter Pareto distribution so much, and collects some results that enhance intuition about distribution tails in general. Section 3 presents parameterisations of the Generalised Pareto distribution that will make reinsurers (and some others) like this model too. Section 4 explains how more Pareto variants can be created, catering in particular for a more flexible modelling of smaller losses. Section 5 gives an inventory of spliced Lognormal-Pareto models that embraces as special cases various distributions introduced earlier by other authors. Section 6 reviews analogous models employing other distributions in place of Lognormal, plus some generalisations. Section 7 revisits the Riebesell model and another old exposure rating method, in the light of the methodology developed so far.

The sections are somewhat diverse, from mixed educational-survey (2, 3, 4) to mainly literature survey (6) to original research (5, 7). All content, be it well known, less common or novel, is presented with the same practice-oriented aim: to provide intuition for models that can help in scarce-data situations.

This paper emerges from an award-winning conference paper (Fackler, 2013), providing updated and additional content. In particular, we treat the full range of the GPD, not only the popular Pareto-like case having the exponent $$\xi \gt 0$$. Further we appraise the fast-growing literature on spliced models by discussing both older and recent references.

1.5 Technical remarks

In most of the following we will not distinguish between loss severity and aggregate loss distributions. Technically, model fitting works the same way; furthermore, the shapes observed for the two distribution types overlap. For aggregate losses, at least in case of large portfolios and not too many dependencies between the single risks, it is felt that distributions should mostly have a unique positive mode (maximum density) like the Normal distribution; however, considerable skewness and heavy tails cannot be ruled out (Knecht & Küttel, 2003). Severity distributions are observed to be more heavy-tailed; here a priori both a strictly decreasing density and a positive mode are plausible, let alone multimodal distributions requiring very complex modelling (Klugman et al., 2008).

For any loss severity or aggregate loss distribution, let $$\bar F\left( x \right) = 1 - F\left( x \right) = {\rm{P}}\left( {X \gt x} \right)$$ be the survival function, $$f\left( x \right)$$ the probability density function (where it exists), that is, the derivative of the cumulative distribution function $$F\left( x \right)$$. As it is geometrically more intuitive (and a bit more general), we will formulate as many results as possible in terms of cdf instead of pdf, mainly working with the survival function, which often yields simpler formulae than the cdf.

Unless specified otherwise, the model parameters appearing in this paper are (strictly) positive real numbers.

2. Pareto – reinsurer’s old love

One could call it the standard model of the reinsurance pricing actuaries: The Pareto distribution, also called Type I Pareto, European Pareto, or Single-parameter Pareto, has survival function

$$\bar F\left( x \right) = {\left( {{\theta \over x}} \right)^\alpha },\;\;\;\;\;\;\;\;\theta \le x.$$

In this paper we reserve the name “Pareto” for this specific model, noting that it is used for other variants of the large Pareto family as well.

Does the Pareto model have one or two parameters? It depends – namely on what the constraint $$\theta \le x$$ means. It may mean that no losses between $$0$$ and $$\theta$$ exist, or alternatively that nothing shall be specified about losses between $$0$$ and $$\theta$$. Unfortunately, this is not always clearly mentioned when the model is used. Formally, we have two very different cases:

Situation 1: There are no losses below the threshold $$\theta$$.

This model has two parameters $$\alpha$$ and $$\theta$$. Here $$\theta$$ is not just a parameter, it is indeed a scale parameter (as defined e.g. in Klugman et al., 2008) of the model.

We call the above model Pareto-only, reflecting the fact that there is no area of small losses having a distribution shape other than Pareto.

This model is quite popular, despite its unrealistic shape in the area of low losses, whatever $$\theta$$ is. (If $$\theta$$ is large, there is an unrealistically large gap in the distribution. If $$\theta$$ is small, say $$\theta = 1$$ Euro, the gap is negligible, but a Pareto-like shape for losses in the range from $$1$$ to some 10,000 Euro is rarely observed in the real world.)

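Situation 2: Only the tail is modelled, so to be precise we are dealing with the conditional distribution

$$\bar F\left( {x\left| {X \gt \theta } \right.} \right) = {\left( {{\theta \over x}} \right)^\alpha },\;\;\;\;\;\;\;\;\theta \le x.$$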

This model only has parameter $$\alpha$$, while $$\theta$$ is the known lower threshold of the model.

Situation 1 implies Situation 2 but not vice versa. We will later see distributions combining a Pareto tail with a quite different distribution of the smaller losses.

2.1 A memoryless property

Why is the Pareto model so popular among reinsurers? The most useful property of the Pareto tail model is without doubt the closedness and parameter invariance when modelling upper tails: if we have $$\bar F\left( {x\left| {X \gt \theta } \right.} \right) = {\left( {{\theta \over x}} \right)^\alpha }$$ and derive the model for a higher threshold $$d \ge \theta$$, we get

$$\bar F\left( {x\left| {X \gt d} \right.} \right) = {{\bar F\left( {x\left| {X \gt \theta } \right.} \right)} \over {\bar F\left( {d\left| {X \gt \theta } \right.} \right)}} = {{{{\left( {{\theta \over x}} \right)}^\alpha }} \over {{{\left( {{\theta \over d}} \right)}^\alpha }}} = {\left( {{d \over x}} \right)^\alpha },\;\;\;\;\;\;\;\;d \le x,$$

which is again Pareto with $$d$$ taking the place of $$\theta$$. We could say, when going “upwards” to model somewhat larger losses only, the model “forgets” the original threshold $$\theta$$, which is not needed any further – instead the new threshold comes in. That implies:

• If a distribution has a Pareto tail and we only need to model quite large losses, we do not need to know exactly where that tail starts. As long as we are in the tail (let us call it Pareto area) we always have the same parameter $$\alpha$$, no matter which threshold is used.

• It is possible to compare data sets having different (reporting) thresholds. Say for an MTPL portfolio we know all losses above $$2$$ million Euro, for another one we only have the losses exceeding $$3$$ million Euro available. Although these tail models have different thresholds, we can judge whether the underlying portfolios have similar tail behaviour or not, according to whether they have similar Pareto alphas. Such comparisons of tails starting at different thresholds are extremely useful in the reinsurance practice, where typically, to get a representative overview of a line of business in a country, one must collect data from several reinsured portfolios, all possibly having different reporting thresholds.

• This comparability across tails can lead to market values for Pareto alphas being applicable as benchmarks: see Schmutz & Doerr (1998) and Section 4.4.8 of FINMA (2006). Say we observe that a certain type of Fire portfolio in a certain country frequently has Pareto tails starting somewhere between $$1$$ and $$2$$ million Euro, having an alpha typically in the range of $$1.8$$.

With the option to compare an inferred Pareto alpha to other fits or to market benchmarks, it becomes an interpretable parameter.
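The threshold invariance described in this subsection can be checked numerically. The following is a minimal Python sketch; the function names and all parameter values are illustrative assumptions and do not come from the paper.

```python
def pareto_tail_sf(x, theta, alpha):
    """Survival function of the Pareto tail model, P(X > x | X > theta)."""
    if x < theta:
        raise ValueError("x must not lie below the threshold theta")
    return (theta / x) ** alpha


def conditional_sf(x, d, theta, alpha):
    """P(X > x | X > d), derived from the model with threshold theta <= d <= x."""
    return pareto_tail_sf(x, theta, alpha) / pareto_tail_sf(d, theta, alpha)


# Illustrative values only (hypothetical portfolio).
theta, alpha = 1.0e6, 1.8
d = 2.5e6

for x in (3.0e6, 5.0e6, 2.0e7):
    derived = conditional_sf(x, d, theta, alpha)   # via the original threshold
    direct = pareto_tail_sf(x, d, alpha)           # Pareto with d in place of theta
    print(f"x = {x:>12,.0f}  derived = {derived:.6f}  direct = {direct:.6f}")
```

Both columns should agree up to floating-point rounding, which mirrors the statement that the model "forgets" the original threshold while keeping the same alpha.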

2.2 Basic formulae

Let us recall some useful facts about losses in the Pareto tail (Schmutz & Doerr, 1998). These are well known but we will show some less-known generalisations soon.

2.2.1 Pareto extrapolation equation for frequencies

To relate frequencies at different thresholds $${d_1},{d_2} \ge \theta$$, the Pareto model yields a famous, very simple, equation, called Pareto extrapolation:

$${{{\rm{frequency}}\;{\rm{at}}\;{d_2}} \over {{\rm{frequency}}\;{\rm{at}}\;{d_1}}} = {\left( {{{{d_1}} \over {{d_2}}}} \right)^\alpha }$$
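As an illustration, Pareto extrapolation states that the ratio of the loss frequencies at two thresholds in the Pareto area equals the inverse ratio of the thresholds raised to the power alpha. A minimal Python sketch follows; the helper names and the numbers are hypothetical.

```python
import math


def extrapolate_frequency(freq_d1, d1, d2, alpha):
    """Frequency of losses exceeding d2, given the frequency at d1.

    Assumes both thresholds lie in the Pareto area.
    """
    return freq_d1 * (d1 / d2) ** alpha


def implied_alpha(freq_d1, d1, freq_d2, d2):
    """Pareto alpha implied by observed frequencies at two thresholds."""
    return math.log(freq_d1 / freq_d2) / math.log(d2 / d1)


# Hypothetical example: 5 losses per year above 1 million, alpha = 2.
freq_2m = extrapolate_frequency(5.0, 1.0e6, 2.0e6, 2.0)
print(freq_2m)                                    # 1.25 losses per year above 2 million
print(implied_alpha(5.0, 1.0e6, freq_2m, 2.0e6))  # recovers alpha (about 2)
```

The second helper runs the equation backwards, which is one simple way to compare an observed pair of frequencies with a market benchmark for alpha.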

2.2.2 Structure of layer premiums

Consider a (re)insurance layer $$C{\rm{\;xs\;}}D$$, that is, a cover paying, of each loss $$x$$, the part $${\rm{min}}\left( {{{\left( {x - D} \right)}^ + },C} \right)$$. (Infinite $$C$$ is admissible for $$\alpha \gt 1$$.) Suppose the layer operates fully in the Pareto area, that is, $$D \ge \theta$$. Then the average layer loss equals

$${\rm{E}}\left( {{\rm{min}}\left( {X - D,C} \right)\left| {X \gt D} \right.} \right) = {D \over {\alpha - 1}}\left( {1 - {{\left( {1 + {C \over D}} \right)}^{1 - \alpha }}} \right),$$

which is well-defined (taking the limit) also for $$\alpha = 1$$.

If $$\eta$$ is the loss frequency at $$\theta$$, the frequency at $$D$$ equals $$\eta {\left( {{\theta \over D}} \right)^\alpha }$$. Thus, the risk premiums of layers have a particular structure, equalling a function $${D^{1 - \alpha }}\psi \left( {{D \over C}} \right)$$. This yields a further simple extrapolation equation.

2.2.3 Pareto extrapolation equation for layer risk premiums

$${{{\rm{risk}}\;{\rm{premium}}\;{\rm{of}}\;{C_2}{\rm{\;xs\;}}{D_2}} \over {{\rm{risk}}\;{\rm{premium}}\;{\rm{of}}\;{C_1}{\rm{\;xs\;}}{D_1}}} = {{{{\left( {{C_2} + {D_2}} \right)}^{1 - \alpha }} - D_2^{1 - \alpha }} \over {{{\left( {{C_1} + {D_1}} \right)}^{1 - \alpha }} - D_1^{1 - \alpha }}}\;\;\;\;\underset{\alpha \to 1}{\longrightarrow} \;\;\;\;{{{\rm{ln}}\left( {1 + {{{C_2}} \over {{D_2}}}} \right)} \over {{\rm{ln}}\left( {1 + {{{C_1}} \over {{D_1}}}} \right)}}$$
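The layer formulae can be sketched in Python as follows. This is an illustration under stated assumptions: the average layer loss is taken to be the integral of the Pareto survival function over the layer, which gives D/(alpha - 1) times (1 - (1 + C/D)^(1 - alpha)) and D ln(1 + C/D) in the limit alpha = 1. All function names and numbers are hypothetical.

```python
import math


def layer_mean_loss(cover, deductible, alpha):
    """Average loss to the layer `cover xs deductible`, given a loss above the deductible.

    Assumes the deductible lies in the Pareto area. expm1/log1p keep the
    expression numerically stable when alpha is close to 1.
    """
    log_ratio = math.log1p(cover / deductible)        # ln(1 + C/D)
    if alpha == 1.0:
        return deductible * log_ratio                 # limiting case alpha = 1
    return deductible * -math.expm1((1.0 - alpha) * log_ratio) / (alpha - 1.0)


def layer_risk_premium(cover, deductible, alpha, theta, freq_theta):
    """Frequency at the deductible times the average layer loss."""
    freq_at_deductible = freq_theta * (theta / deductible) ** alpha
    return freq_at_deductible * layer_mean_loss(cover, deductible, alpha)


def premium_ratio(c2, d2, c1, d1, alpha):
    """Closed-form extrapolation ratio for alpha != 1 (threshold and frequency cancel)."""
    numerator = (c2 + d2) ** (1.0 - alpha) - d2 ** (1.0 - alpha)
    denominator = (c1 + d1) ** (1.0 - alpha) - d1 ** (1.0 - alpha)
    return numerator / denominator


# Hypothetical example: layers 1m xs 1m and 2m xs 2m, alpha = 1.5.
alpha, theta, freq_theta = 1.5, 0.5e6, 10.0
rp1 = layer_risk_premium(1.0e6, 1.0e6, alpha, theta, freq_theta)
rp2 = layer_risk_premium(2.0e6, 2.0e6, alpha, theta, freq_theta)
print(rp2 / rp1, premium_ratio(2.0e6, 2.0e6, 1.0e6, 1.0e6, alpha))
```

The two printed numbers should coincide, showing that the ratio of layer premiums depends neither on the threshold nor on the frequency at the threshold.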

2.3 Testing empirical data

Distributions having nice properties only help if they provide good fits to real-world data. From the (re)insurance practice it is known that not all empirical tails look like Pareto; in particular the model often seems to be somewhat too heavy-tailed at the very large end, see Albrecher et al. (2021) for Pareto modifications catering for this effect. Nevertheless Pareto can be a good model for a wide range of loss sizes. For example, if it fits well between $$1$$ and $$20$$ million Euro, one can use it for layers in that area independently of whether or not beyond $$20$$ million Euro a different model is needed.

To quickly check whether an empirical distribution is well fit by the Pareto model, at least for a certain range of loss sizes, there is a well-known graphical method available:

The statement that $$\bar F\left( x \right)$$ is Pareto is equivalent to the statement that $$\bar F\left( x \right)$$ is a straight line on double-logarithmic paper (having slope $$- \alpha$$).

So, if the log-log-graph of an empirical survival function is about a straight line for a certain range of loss sizes, in that area a Pareto fit should work well.
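The graphical check can be mimicked numerically by fitting a straight line to the empirical survival function on log-log scale. The sketch below uses simulated data and an ordinary least-squares slope; both the estimator and the helper name are illustrative assumptions, not a method prescribed by the paper.

```python
import math
import random


def loglog_slope(losses, lower, upper):
    """Least-squares slope of the empirical survival function on log-log scale.

    Only sample points x with lower <= x <= upper are used.
    """
    xs = sorted(losses)
    n = len(xs)
    points = []
    for i, x in enumerate(xs):
        surv = (n - i - 1) / n          # empirical P(X > x), ignoring ties
        if lower <= x <= upper and surv > 0.0:
            points.append((math.log(x), math.log(surv)))
    if len(points) < 2:
        raise ValueError("need at least two points in the chosen range")
    mean_u = sum(u for u, _ in points) / len(points)
    mean_v = sum(v for _, v in points) / len(points)
    sxy = sum((u - mean_u) * (v - mean_v) for u, v in points)
    sxx = sum((u - mean_u) ** 2 for u, _ in points)
    return sxy / sxx


# Simulated Pareto losses with threshold 1 and alpha = 2 (illustrative only).
random.seed(1)
sample = [random.paretovariate(2.0) for _ in range(20000)]
slope = loglog_slope(sample, 1.0, 20.0)
print(f"fitted slope: {slope:.3f} (the negative slope estimates alpha)")
```

For real data one would restrict the range to the loss sizes where the log-log graph looks straight, and treat the fitted slope as a rough indication rather than a final estimate.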

2.4 Local property

Thinking of quite small intervals of loss sizes being apt for Pareto fits leads to a generalisation being applicable to any smooth distribution: the local Pareto alpha (Riegel, 2008). Mathematically, it is the negative derivative of $$\bar F\left( x \right)$$ on log-log scale.

At any point $$x \gt 0$$ where the survival function is positive and differentiable, we call

$${\alpha _x}: = - {d \over {dt}}{\rm{ln}}\;\bar F\left( {{e^t}} \right){|_{t = {\rm{ln}}\;x}} = {{x\;f\left( x \right)} \over {\bar F\left( x \right)}}$$

the local Pareto alpha at $$x$$.

If $${\alpha _x}$$ is a constant on some interval, this interval is a Pareto-distributed piece of the distribution. In practice one often, but not always, observes that, for very large $$x$$ (far out in the million Euro range), $${\alpha _x}$$ is a (slowly) increasing function of $$x$$. The resulting distribution tail is somewhat less heavy than that of distributions with Pareto tail, where $${\alpha _x}$$ is constant for large $$x$$.

The above Pareto extrapolation equation for frequencies yields an intuitive interpretation of the local Pareto alpha: it is the speed of the decrease of the loss frequency as a function of the threshold. One sees quickly that if we increase a threshold $$d$$ by $$p$$ percent (for small $$p$$), the loss frequency decreases by approximately $${\alpha _d}{\rm{\;}}p$$ percent. Or equivalently, if we keep the threshold fixed but the losses increase by $$p$$ percent (say due to inflation), the loss frequency at $$d$$ increases by approximately $${\alpha _d}{\rm{\;}}p$$ percent. See Chapter 6 of Fackler (2017) for how this leads to a general theory of the impact of inflation on (re)insurance layers.
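The local Pareto alpha and its frequency interpretation can be illustrated numerically. The sketch below approximates the negative log-log derivative of a survival function by a central difference; the step size, the example distributions and their parameters are assumptions chosen for illustration.

```python
import math


def local_pareto_alpha(sf, x, h=1e-5):
    """Negative slope of the survival function sf on log-log scale at x.

    Central difference in t = ln(x) with step h.
    """
    up = math.log(sf(x * math.exp(h)))
    down = math.log(sf(x * math.exp(-h)))
    return -(up - down) / (2.0 * h)


def pareto_sf(x, theta=1.0e6, alpha=1.8):
    """Pareto tail model, valid for x >= theta."""
    return (theta / x) ** alpha


def lognormal_sf(x, mu=0.0, sigma=1.0):
    """Lognormal survival function via the complementary error function."""
    return 0.5 * math.erfc((math.log(x) - mu) / (sigma * math.sqrt(2.0)))


# Constant local alpha in the Pareto area.
print(local_pareto_alpha(pareto_sf, 3.0e6))

# For the Lognormal the local alpha increases with x.
for x in (1.0, 10.0, 100.0):
    print(x, local_pareto_alpha(lognormal_sf, x))

# Raising the threshold d by p = 1 percent lowers the frequency by about alpha_d * p percent.
d, p = 10.0, 0.01
alpha_d = local_pareto_alpha(lognormal_sf, d)
relative_change = lognormal_sf(d * (1.0 + p)) / lognormal_sf(d) - 1.0
print(relative_change, -alpha_d * p)
```

The last line prints the exact relative change in frequency next to the first-order approximation; for small p the two should be close.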

3. Generalised Pareto – a new love?

Now we study a well-known generalisation of the Pareto model; for the following see Embrechts et al. (2013). Apparently less known is that it shares some of the properties making the Pareto model so popular.

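The Generalised Pareto distribution, shortly denoted as GP(D), has survival function

$$\bar F\left( {x\left| {X \gt \theta } \right.} \right) = {\left( {1 + \xi {{x - \theta } \over \tau }} \right)^{ - {1 \over \xi }}},\;\;\;\;\;\;\;\;\theta \le x.$$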

This is a tail model like Pareto, having two parameters $$\xi$$ and $$\tau$$, while $$\theta$$ is the known model threshold. However, $$\theta$$ is the third parameter in the corresponding GP-only model having no losses between $$0$$ and $$\theta$$, analogous to the Pareto case.

$$\xi$$ can take any real value, while $$\tau$$ must be positive. We use $$\tau$$ instead of the more common $$\sigma$$ in order to reserve the latter for the Lognormal distribution. The GPD has finite expectation iff $$\xi \lt 1$$. For negative $$\xi$$ the losses are (almost surely) bounded, having the supremum $$\theta + {\tau \over { - \xi }}$$. The case $$\xi = 0$$ is well defined (take the limit) and yields the Exponential distribution

$$\bar F\left( {x\left| {X \gt \theta } \right.} \right) = {\rm{exp}}\left( { - {{x - \theta } \over \tau }} \right).$$

We call the case $$\xi \gt 0$$ proper GPD.

Proper GP is largely considered the most interesting case for the insurance practice. Some authors notably mean only this case when speaking of the GPD.

The above parameterisation of the Generalised Pareto distribution comes from Extreme Value Theory (EVT), which is frequently quoted in the literature to justify the use of the GPD for the modelling of insurance data exceeding large thresholds.

The core of this reasoning is the famous Pickands-Balkema-De Haan Theorem stating that, simply put, for large-enough thresholds, the distribution tail asymptotically equals the GPD; see Balkema & De Haan (1974), Pickands (1975). It could, however, be that the relevance of this theorem for the insurance practice is a bit overrated. A warning comes notably from a prominent EVT expert (Embrechts, 2010): the rate of convergence to the GPD can be extremely slow (much slower than one is used to from the Central Limit Theorem), thus could be too slow for practical relevance.

Further, most real-world loss distributions must have limited support. Insured risks usually have finite sums insured, which also limits the loss potential of accumulation losses and aggregate losses. And even where explicit insurance policy limits don’t apply, most losses should be bounded by, say, 300 times today’s world GDP. With such upper bounds, EVT still applies, but here “high-enough threshold” could mean five Dollars less than the upper bound, which would again not be of practical interest.

Whether or not one is optimistic about the applicability of EVT, there are practical reasons for using the GPD. Widespread application in (and beyond) insurance shows that it provides good fits to a lot of tail data. Further, one can make its parameters interpretable, which can be helpful in scarce-data situations. This option emerges from a parameter change proposed by Scollnik (2007) for the proper GPD.

Set $$\alpha : = {1 \over \xi } \gt 0$$, $$\lambda : = \alpha \tau - \theta \gt - \theta$$. Now we have

$$\bar F\left( {x\left| {X \gt \theta } \right.} \right) = {\left( {{{\theta + \lambda } \over {x + \lambda }}} \right)^\alpha },\;\;\;\;\;\;\;\;\theta \le x.$$

The parameter space is quite intricate here as $$\lambda$$ may (to some extent) take on negative values. So, for parameter inference other parameterisations may work better. Yet, apart from this complication, the above representation will turn out to be extremely convenient, revealing in particular a lot of analogies to the Pareto model.
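The parameter change can be verified with a short Python sketch. It assumes the usual EVT form of the GPD survival function, (1 + xi (x - theta) / tau)^(-1/xi), and compares it with the form ((theta + lambda) / (x + lambda))^alpha, where alpha = 1/xi and lambda = alpha tau - theta. Function names and parameter values are hypothetical.

```python
import math


def gpd_sf_standard(x, xi, tau, theta):
    """P(X > x | X > theta) in the (xi, tau) parameterisation (assumed EVT form)."""
    if xi == 0.0:
        return math.exp(-(x - theta) / tau)        # Exponential limit
    base = 1.0 + xi * (x - theta) / tau
    if base <= 0.0:
        return 0.0                                 # beyond the supremum when xi < 0
    return base ** (-1.0 / xi)


def to_alpha_lambda(xi, tau, theta):
    """Parameter change for the proper GPD (xi > 0)."""
    if xi <= 0.0:
        raise ValueError("the parameter change is defined for xi > 0 only")
    alpha = 1.0 / xi
    lam = alpha * tau - theta                      # always exceeds -theta since tau > 0
    return alpha, lam


def gpd_sf_alpha_lambda(x, alpha, lam, theta):
    """P(X > x | X > theta) in the (alpha, lambda) parameterisation."""
    return ((theta + lam) / (x + lam)) ** alpha


# Illustrative values: xi = 0.5, tau = 1.5 million, threshold 2 million.
xi, tau, theta = 0.5, 1.5e6, 2.0e6
alpha, lam = to_alpha_lambda(xi, tau, theta)
print(alpha, lam)
for x in (2.0e6, 5.0e6, 5.0e7):
    print(x, gpd_sf_standard(x, xi, tau, theta), gpd_sf_alpha_lambda(x, alpha, lam, theta))
```

Choosing tau = 1.0e6 in this example would give lambda = 0, that is, the Pareto tail model with alpha = 2.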

3.1 Names and parameters

At a glance we note two well-known special cases:

Case 1: $$\lambda = 0$$. This is the Pareto tail model from Section 2.

Case 2: $$\lambda \gt 0$$, $$\theta = 0$$. This is not a tail model but a ground-up model (full model) for losses of any size. In the literature it is often called Pareto as well. However, some more specific names have been introduced: Type II Pareto, American Pareto, Two-parameter Pareto, Lomax.

Let us look briefly at a third kind of model. Every tail model reflecting a conditional distribution $$X\left| {X \gt \theta } \right.$$ has a corresponding excess model $$X - \theta \left| {X \gt \theta } \right.$$. If the former is proper GP as above, the latter has the survival function $${\left( {{{\theta + \lambda } \over {x + \theta + \lambda }}} \right)^\alpha }$$, which is Two-parameter Pareto with parameters $$\alpha$$ and $$\theta + \lambda \gt 0$$. However, in the Pareto case the survival function $${\left( {{\theta \over {x + \theta }}} \right)^\alpha }$$ looks like Two-parameter Pareto but is materially different: here $$\theta$$ is the known threshold – this model has the only parameter $$\alpha$$.

The names Single versus Two-parameter Pareto (apart from not always being used consistently in the literature anyway) are somewhat misleading – as we have seen, both models have variants having one or two parameters, respectively. Whatever the preferred name, when using a Pareto variant, it is essential always to make clear whether one is using it as a ground-up model, a tail model, or an excess model.

3.2. Memoryless property

Let us come back to the GP tail model, for which in the following we borrow a bit from Section 6.5 of Fackler (2017). If we, as above, derive the model for a higher tail starting at $d > \theta$, we get

$$\bar F\left(x \mid X > d\right) = \frac{\left(\frac{\theta + \lambda}{x + \lambda}\right)^\alpha}{\left(\frac{\theta + \lambda}{d + \lambda}\right)^\alpha} = \left(\frac{d + \lambda}{x + \lambda}\right)^\alpha, \qquad d \le x.$$

As in the Pareto case, the model is still (proper) GP but “forgets” the original threshold $\theta$, replacing it by the new one. Again the parameter $\alpha$ remains unchanged, and so does the second parameter $\lambda$. Both are thus invariants when modelling higher tails. The standard parameterisation of the GPD has only the invariant parameter $\xi$, while the second parameter changes in an intricate way when shifting from one tail threshold to another. There is, however, a variant that is tail invariant and works notably for any real $\xi$: replace $\tau$ by the so-called modified scale $\omega = \tau - \xi \theta > - \xi \theta$, see, for example, Scarrott & MacDonald (2012). This yields

$$\bar F\left(x \mid X > \theta\right) = \left(1 + \xi \frac{x - \theta}{\omega + \xi \theta}\right)^{-1/\xi}$$

and for higher tails one just has to replace $\theta$ by the new threshold (which for $\xi < 0$ must be below the supremum loss $\theta + \frac{\tau}{-\xi} = \frac{\omega}{-\xi}$).
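The invariance can be checked numerically. The following is a minimal sketch, assuming the standard GPD survival function $\left(1 + \xi (x - \theta)/\tau\right)^{-1/\xi}$ with $\xi \ne 0$; the function names and the parameter values are illustrative assumptions, not taken from the source.

```python
def gpd_survival(x, theta, xi, tau):
    """Survival function of a GP tail starting at theta (standard scale tau, xi != 0)."""
    return (1.0 + xi * (x - theta) / tau) ** (-1.0 / xi)


def modified_scale(theta, xi, tau):
    """Tail-invariant modified scale omega = tau - xi * theta."""
    return tau - xi * theta


def rebase(theta, xi, tau, d):
    """Standard parameters of the higher tail starting at d >= theta."""
    omega = modified_scale(theta, xi, tau)
    return d, xi, omega + xi * d  # xi and omega are kept, tau is recomputed


theta, xi, tau = 100.0, 0.5, 80.0  # illustrative values only
d, x = 250.0, 400.0

# Conditional survival beyond d, computed from the original tail model ...
conditional = gpd_survival(x, theta, xi, tau) / gpd_survival(d, theta, xi, tau)
# ... and the same quantity from the GP tail model rebased at d.
d_new, xi_new, tau_new = rebase(theta, xi, tau, d)
direct = gpd_survival(x, d_new, xi_new, tau_new)

print(conditional, direct)  # the two values agree
print(modified_scale(theta, xi, tau), modified_scale(d_new, xi_new, tau_new))  # omega unchanged
```

Only the standard scale changes with the threshold; $\xi$ and $\omega$ carry over unchanged, which is what makes them comparable across thresholds.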

The tail invariance of $\alpha$ and $\lambda$, or of $\xi$ and $\omega$ for the whole GPD, yields the same advantages for tail analyses as the Pareto model – interpretable parameters:

• There is no need to know exactly where the tail begins,

• one can compare tails starting at different thresholds,

• it might be possible to derive market values for the two parameters in certain business areas.

Thus, one can use the GPD in the same way as the Pareto model. The additional parameter adds flexibility – while on the other hand requiring more data for parameter inference.

The parameters $\alpha > 0$ and $\lambda = \alpha \omega$ of the proper GPD have a geometric interpretation:

• $\lambda$ is a “shift” from the Pareto model having the same alpha. (Note that $\lambda$ has the same “dimension” as the losses, for example, Euro or thousand US Dollar.) We could think of starting with a Pareto distribution having the threshold $\theta + \lambda > 0$, then all losses are shifted by $\lambda$ to the left (by subtracting $\lambda$) and we obtain the GPD. Thus, in graphs (with linear axes), proper GP tails have exactly the same shape as Pareto tails; just their location on the x-axis is different.

• The parameter $\alpha$, apart from belonging to the corresponding Pareto model, is the local Pareto alpha at infinity: $\alpha_\infty = \alpha$. More generally, one sees quickly that $\alpha_d = \frac{d}{d + \lambda}\alpha$.

The behaviour of $\alpha_d$ as a function of $d$ is as follows:

Case 1 $\lambda > 0$: $\alpha_d$ rises (as is often observed for fits of large insurance losses).

Case 2 $\lambda = 0$: Pareto ($\theta > 0$).

Case 3 $\lambda < 0$: $\alpha_d$ decreases ($\theta > -\lambda > 0$).

For any $d \ge \theta$ one easily gets

$$\bar F\left(x \mid X > d\right) = \left(1 + \frac{\alpha_d}{\alpha}\left(\frac{x}{d} - 1\right)\right)^{-\alpha}, \qquad d \le x,$$

which is an alternative proper-GP parameterisation focusing on the local alphas (Riegel, 2008).
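The three cases can be illustrated numerically. The sketch below assumes that the local Pareto alpha is the negative slope of the survival function on a log-log scale, which is consistent with the closed form $\alpha_d = \frac{d}{d + \lambda}\alpha$; all function names and parameter values are illustrative assumptions.

```python
import math


def proper_gp_survival(x, theta, alpha, lam):
    """Survival function of the proper GP tail model starting at theta."""
    return ((theta + lam) / (x + lam)) ** alpha


def local_alpha_closed_form(d, alpha, lam):
    """Closed form alpha_d = d / (d + lam) * alpha."""
    return d / (d + lam) * alpha


def local_alpha_numeric(d, theta, alpha, lam, h=1e-6):
    """Negative slope of log survival against log loss size (central difference)."""
    up = math.log(proper_gp_survival(d * math.exp(h), theta, alpha, lam))
    down = math.log(proper_gp_survival(d * math.exp(-h), theta, alpha, lam))
    return -(up - down) / (2.0 * h)


def survival_via_local_alpha(x, d, alpha, alpha_d):
    """Conditional survival beyond d, written in terms of alpha and alpha_d."""
    return (1.0 + alpha_d / alpha * (x / d - 1.0)) ** (-alpha)


theta, alpha = 100.0, 2.0  # illustrative values only
for lam in (50.0, 0.0, -20.0):  # Case 1, Case 2 (Pareto), Case 3
    values = [local_alpha_closed_form(d, alpha, lam) for d in (150.0, 300.0, 600.0)]
    print(lam, values)
```

For positive $\lambda$ the printed values rise towards $\alpha$, for $\lambda = 0$ they are constant, and for negative $\lambda$ they fall towards $\alpha$.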

3.3. Proper GPD formulae

Bearing in mind that proper GP is essentially Pareto with the x-axis shifted by $\lambda$, we get without any further calculation compact formulae very similar to the Pareto case:
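As an illustration of the kind of formula meant here, the sketch below applies the shift by $\lambda$ to two well-known Pareto results: the conditional mean and the expected loss in a layer. These expressions are derived here from the survival function $\left(\frac{\theta + \lambda}{x + \lambda}\right)^\alpha$ and are not a transcription of the source's list; function names and parameter values are assumptions.

```python
def proper_gp_mean(theta, alpha, lam):
    """E(X | X > theta): Pareto mean of X + lam, shifted back by lam (finite for alpha > 1)."""
    if alpha <= 1.0:
        return float("inf")
    return theta + (theta + lam) / (alpha - 1.0)


def proper_gp_layer(theta, alpha, lam, d, c):
    """Expected loss in the layer between d and c (theta <= d < c), given X > theta.

    This is the integral of the survival function from d to c; alpha != 1 is assumed.
    """
    base = theta + lam
    return base ** alpha * ((d + lam) ** (1.0 - alpha) - (c + lam) ** (1.0 - alpha)) / (alpha - 1.0)


theta, alpha, lam = 100.0, 2.5, 40.0  # illustrative values only
print(proper_gp_mean(theta, alpha, lam))
print(proper_gp_layer(theta, alpha, lam, d=200.0, c=1000.0))
```

Setting `lam = 0.0` reproduces the corresponding Pareto formulae.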

Summing up, proper Generalised Pareto is nearly as easy to handle as Pareto, but has two advantages: greater flexibility and the backing from both Extreme Value Theory and practical experience making it a preferred candidate for the modelling of high tails.

3.4. The complete picture

Formally, the parameters $\alpha$ and $\lambda$ are not only applicable for the proper GPD but also for $\xi < 0$. However, in the latter case their negatives are far more intuitive. Indeed, we get with $\beta := -\alpha = -\frac{1}{\xi} > 0$, $\nu := -\lambda = \beta \omega = \theta + \beta \tau = \theta + \frac{\tau}{-\xi} > \theta$ the equation

$$\bar F\left(x \mid X > \theta\right) = \left(\frac{\nu - x}{\nu - \theta}\right)^\beta, \qquad \theta \le x \le \nu,$$

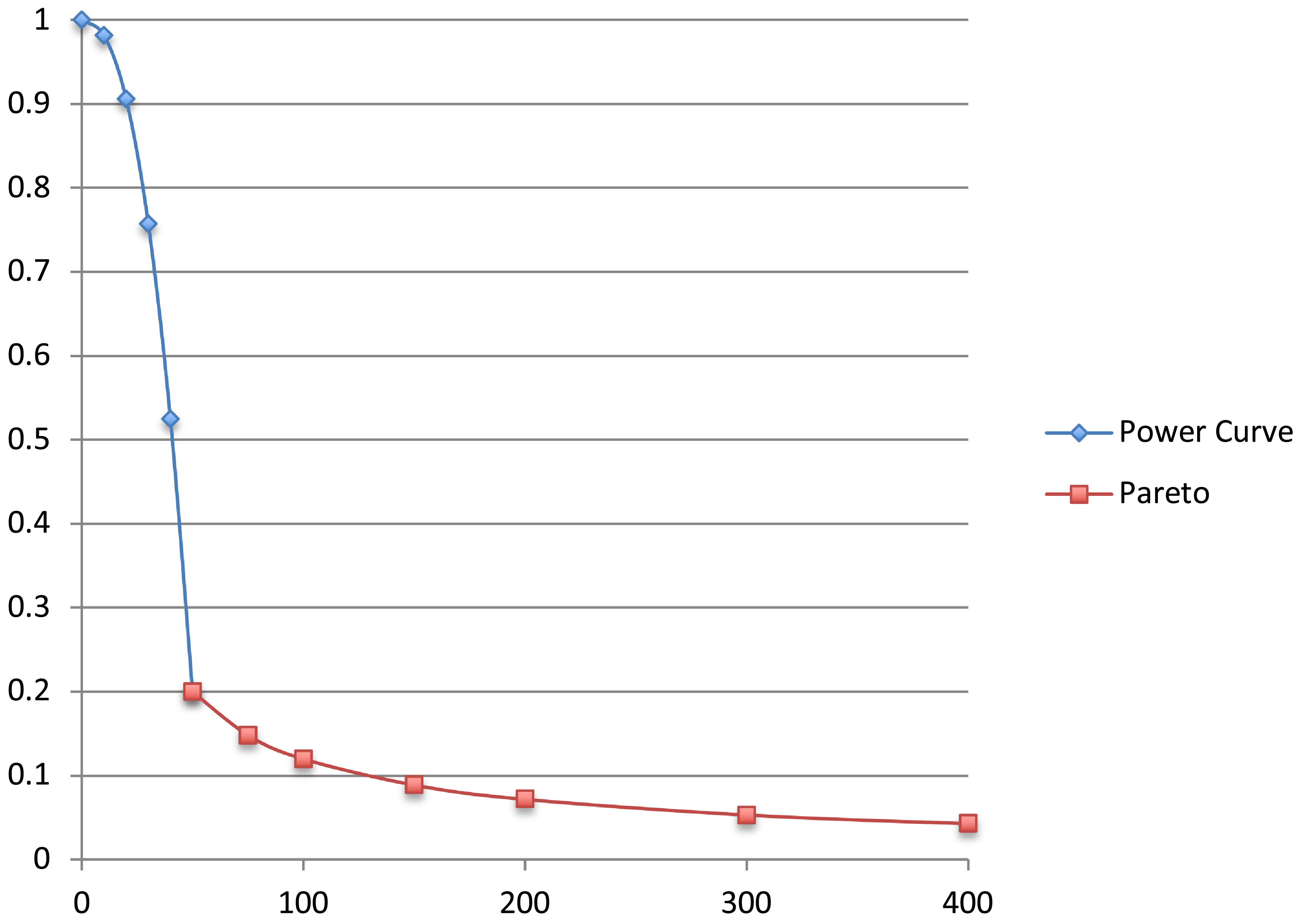

which shows at a glance that this GP case is a piece of a shifted power curve having $\beta$ as (positive) exponent and $\nu$ as supremum loss (and centre of the power curve).

If $\xi$ is close to zero, this supremum is very high and the distribution is fairly close to a heavy-tailed one (a bit less so than Exponential). Such GPDs can be an adequate model for situations where one observes initial heavy-tailedness but ultimately has bounded loss sizes. In contrast, values of $\xi$ well below $0$ will hardly appear in fits to insurance loss data: $\xi = -1$ yields the uniform distribution between the threshold and the supremum, while for $\xi < -1$ the pdf rises, i.e. larger losses are overall more likely than smaller losses, a rather unrealistic case.

The local Pareto alpha, which in general for the GPD reads

$$\alpha_d = \frac{d}{\omega + \xi d},$$

for $\xi < 0$ equals $\alpha_d = \frac{d}{\nu - d}\beta$, which is always an increasing and diverging (as $d \nearrow \nu$) function in $d$. The same holds in the Exponential case, where $\alpha_d = \frac{d}{\tau} = \frac{d}{\omega}$ is a linear function.

To conclude, we illustrate the variety of properties that GP tails starting at a given threshold

![]() $$\theta \ge 0$$

can have, using the original parameters

$$\theta \ge 0$$

can have, using the original parameters

![]() $$\xi $$

and

$$\xi $$

and

![]() $$\tau \gt 0$$

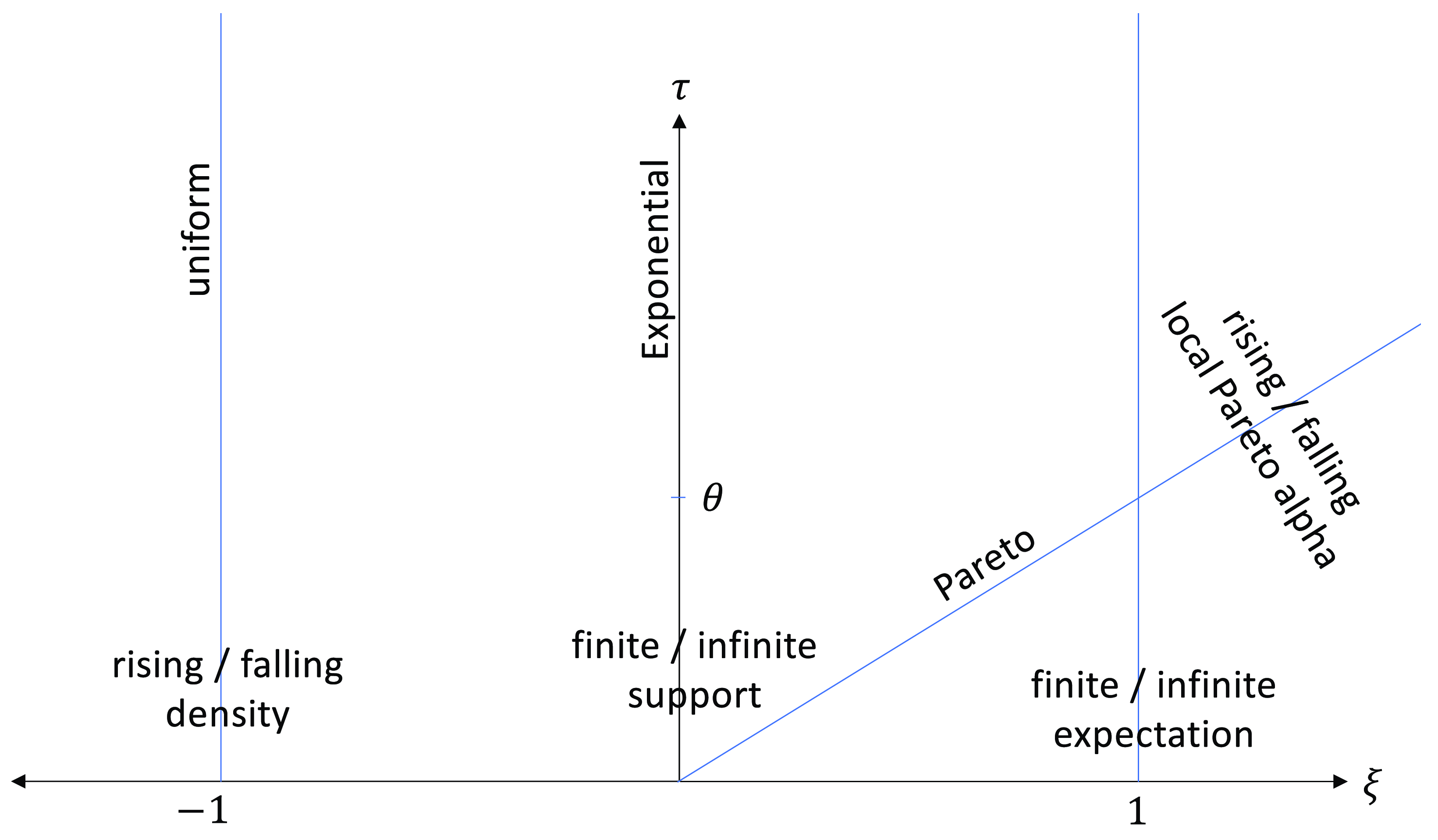

. They span an open half-plane, which can be split in two parts by a half-line in four different ways (see Figure 1):

$$\tau \gt 0$$

. They span an open half-plane, which can be split in two parts by a half-line in four different ways (see Figure 1):

•

$$\xi = - 1$$

(uniform): rising vs falling density

$$\xi = - 1$$

(uniform): rising vs falling density•

$$\xi = 0$$

(Exponential): finite vs infinite support

$$\xi = 0$$

(Exponential): finite vs infinite support•

$$\xi = + 1$$

: finite vs infinite expectation

$$\xi = + 1$$

: finite vs infinite expectation•

$$\xi \theta = \tau $$

(Pareto): rising vs falling local Pareto alpha

$$\xi \theta = \tau $$

(Pareto): rising vs falling local Pareto alpha

Figure 1. Areas of the GPD parameter space.

The fourth half-line indeed represents the Pareto model, which requires $\theta > 0$ and has $\lambda = 0$. (If $\theta = 0$, this half-line falls out of the parameter space and coincides with the right half of the $\xi$ axis, such that there is no sector between the two where the local Pareto alpha would decrease.)

The most plausible (but not exclusive) parameter area for the modelling of large losses is the infinite trapezoid defined by the inequalities $0 < \xi < 1$ and $\tau \ge \xi \theta$, which contains the proper-GP models having finite expectation and rising or constant local Pareto alpha.

As for the estimation of the GPD parameters, see Brazauskas & Kleefeld (2009) studying various fitting methods, from traditional to newly developed, and showing that the latter are superior in case of scarce data. (Note that the paper uses the parameter $\gamma = -\xi$.) See also the many related papers of the first author, for example, Zhao et al. (2018), Brazauskas et al. (2009) and (on estimation of the Pareto alpha) Brazauskas & Serfling (2003).

4. Construction of distribution variants

We strive for further flexibility in our distribution portfolio. Before focusing on the smaller losses, let us have a brief look at the opposite side, the very large losses.

4.1. Cutting distributions

Sometimes losses greater than a certain maximum are impossible: $X \le \mathrm{Max}$. If one does not find suitable models with finite support (like the GPD with negative $\xi$), one can adapt distributions with infinite support, in two easy ways: censoring and truncation. We follow the terminology of Klugman et al. (2008), noting that in the literature we occasionally found the two terms interchanged.

Right censoring modifies a survival function $\bar F$ as follows, writing $\bar F_{cs}$ for the censored version:

$$\bar F_{cs}(x) = \begin{cases} \bar F(x), & 0 \le x < \mathrm{Max}, \\ 0, & \mathrm{Max} \le x. \end{cases}$$

Properties of the resulting survival function:

• mass point (jump) at Max with probability $\bar F\left(\mathrm{Max}\right)$;

• below Max same shape as original model.

A mass point at the maximum loss is indeed plausible in some real-world situations. For example, there could be a positive probability for a total loss (100% of the sum insured) in a homeowners’ fire policy, which occurs if the insured building burns down completely.

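Right truncation modifies a survival function $\bar F$ as follows, writing $\bar F_{tr}$ for the truncated version:

$$\bar F_{tr}(x) = \begin{cases} \dfrac{\bar F(x) - \bar F(\mathrm{Max})}{1 - \bar F(\mathrm{Max})}, & 0 \le x < \mathrm{Max}, \\ 0, & \mathrm{Max} \le x. \end{cases}$$

Properties of the resulting survival function:

• equals the conditional distribution of $X \mid X \le \mathrm{Max}$;

• continuous at Max, no mass point;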

• shape below Max is a bit different from original model, tail is thinner, but the numerical impact of this deviation is low for small/medium losses.

Of course, both variants yield finite expectation even when the expected value of the original model (e.g. GP tails with $\xi \ge 1$) is infinite, which eases working with such models.
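The two modifications can be sketched as wrappers around an arbitrary survival function. This is a minimal illustration, not the source's implementation; the Lomax model and its parameter values serve only as a hypothetical example with infinite support.

```python
def right_censor(survival, maximum):
    """Censoring: same curve below maximum, the remaining mass sits at maximum."""
    def censored(x):
        return survival(x) if x < maximum else 0.0
    return censored


def right_truncate(survival, maximum):
    """Truncation: survival function of X conditional on X <= maximum."""
    s_max = survival(maximum)

    def truncated(x):
        if x >= maximum:
            return 0.0
        return (survival(x) - s_max) / (1.0 - s_max)
    return truncated


def lomax_survival(x, alpha=1.5, lam=100.0):
    """Hypothetical ground-up model used only for illustration."""
    return (lam / (x + lam)) ** alpha


maximum = 1000.0
censored = right_censor(lomax_survival, maximum)
truncated = right_truncate(lomax_survival, maximum)

just_below = maximum - 1e-9
print(censored(just_below))   # close to the mass point probability at Max
print(truncated(just_below))  # close to zero: no jump at Max
print(censored(500.0), truncated(500.0))  # the truncated tail is thinner
```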

Left censoring and left truncation are analogous. We have seen the latter earlier: an upper tail model is formally a left truncation of the full model it is derived from.

Both ways to disregard the left or right end of the distribution can be combined and applied to any distribution, including the Pareto family. Right truncation is, in particular, a way to get tails being thinner than Pareto in the area close to the maximum.

The right-censored/truncated versions of models having a GP/Pareto tail preserve the memoryless property stated above.

For censoring this is trivial – the only change is that the tail ends in a jump at Max.

As for truncating, let $\bar F$ be a survival function having a proper-GP tail, i.e. $\bar F\left(x\right) = \bar F\left(\theta\right)\left(\frac{\theta + \lambda}{x + \lambda}\right)^\alpha$ for $x \ge \theta$. As each higher tail is again GP with the same parameters, for any $\mathrm{Max} > x \ge d \ge \theta$ we have $\bar F\left(x\right) = \bar F\left(d\right)\left(\frac{d + \lambda}{x + \lambda}\right)^\alpha$, which leads, for the survival function $\bar F_{tr}$ of the model right truncated at Max, to

$$\bar F_{tr}\left(x \mid X > d\right) = \frac{\bar F(x) - \bar F(\mathrm{Max})}{\bar F(d) - \bar F(\mathrm{Max})} = \frac{\left(\frac{d + \lambda}{x + \lambda}\right)^\alpha - \left(\frac{d + \lambda}{\mathrm{Max} + \lambda}\right)^\alpha}{1 - \left(\frac{d + \lambda}{\mathrm{Max} + \lambda}\right)^\alpha}.$$

The original threshold $\theta$ disappears again; each truncated GP tail model has the same parameters $\alpha$, $\lambda$ and Max. The same reasoning works for the whole GPD with the parameters $\xi$ and $\omega$ (however, the case $\xi < 0$ has a supremum loss anyway, such that further truncation is rarely of interest).

Truncated Pareto-like distributions get increasing attention in the literature, see, for example, Clark (2013) and Beirlant et al. (2016).

4.2. Basic full models

Now we start investigating ground-up models having a more plausible shape for smaller losses than Pareto/GP-only, with its gap between $0$ and $\theta$. We have already seen an example, a special case of the proper GPD.

The Lomax model has the survival function

$$\bar F(x) = \left(\frac{\lambda}{x + \lambda}\right)^\alpha = \left(1 + \frac{x}{\lambda}\right)^{-\alpha}, \qquad x \ge 0.$$

This is a ground-up distribution having two parameters, the exponent and a scale parameter. It can be generalised via transforming (Klugman et al., 2008), which yields a three-parameter distribution model.

The Burr model has the survival function

$$\bar F(x) = \left(1 + \left(\frac{x}{\lambda}\right)^\gamma\right)^{-\alpha}, \qquad x \ge 0.$$

For large $x$ this model asymptotically tends to Pareto-only with exponent $\alpha \gamma$, but in the area of the small losses it has much more flexibility. While Burr distributions with $\gamma < 1$ and Lomax ($\gamma = 1$) have a strictly decreasing density, such that their mode (point of maximum density) equals zero, for the Burr variants with $\gamma > 1$ the (only) mode is positive. This is our first example of a unimodal distribution having a density looking roughly like an asymmetric bell curve and at the same time a tail similar to Pareto.

More examples can be created via combining distributions. There are two handy options for this; in the following we draw on Klugman et al. (2008).

4.3. Mixed distributions

In general, mixing can make distributions more flexible and more heavy-tailed (Punzo et al., 2018). We treat only finite mixtures, which are easy to handle and to interpret. A finite mixture of distributions is simply a weighted average of two (or more) distributions. The underlying intuition is as follows: We have two kinds of losses, for example, material damage and bodily injury in MTPL, having different distributions. Then it is most natural to model them separately and combine the results, setting the weights according to the frequencies of the two loss types. The calculation of cdf, pdf, (limited) expected value and many other quantities is extremely easy – just take the weighted average of the figures describing the two original models.

A classical example is a mixture of two Lomax distributions.

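The five-parameter Pareto model has, with mixing weight $r$, the survival function

$$\bar F(x) = r\left(\frac{\lambda_1}{x + \lambda_1}\right)^{\alpha_1} + (1 - r)\left(\frac{\lambda_2}{x + \lambda_2}\right)^{\alpha_2}, \qquad 0 \le r \le 1.$$

The four-parameter Pareto model has the same survival function with the number of parameters reduced via the constraint $\alpha_1 = \alpha_2 + 2$.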

Sometimes mixing is used even when there is no natural separation into various loss types. The idea is as follows. There is a model describing the smaller losses very well but underestimating the large-loss probability. If this model is combined with a quite heavy-tailed model and the latter gets only a tiny weight, the resulting mixture will, for small losses, be very close to the first model, whose impact will fade out for larger losses, letting the second model take over and yield a good tail fit.
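This fading-out effect can be sketched numerically. The example below assumes a Lognormal model for the smaller losses and a Lomax model with a small weight for the tail; the choice of models, the weight and all parameter values are illustrative assumptions.

```python
import math


def lognormal_survival(x, mu, sigma):
    """Survival function of the Lognormal distribution."""
    if x <= 0.0:
        return 1.0
    return 0.5 * math.erfc((math.log(x) - mu) / (sigma * math.sqrt(2.0)))


def lomax_survival(x, alpha, lam):
    """Survival function of the Lomax distribution."""
    return (lam / (x + lam)) ** alpha


def mixture_survival(x, weight_heavy=0.02):
    """Weighted average of a light-tailed and a heavy-tailed survival function."""
    light = lognormal_survival(x, mu=7.0, sigma=1.0)
    heavy = lomax_survival(x, alpha=1.5, lam=5000.0)
    return (1.0 - weight_heavy) * light + weight_heavy * heavy


def heavy_share(x, weight_heavy=0.02):
    """Share of the exceedance probability at x contributed by the heavy-tailed model."""
    heavy = weight_heavy * lomax_survival(x, alpha=1.5, lam=5000.0)
    return heavy / mixture_survival(x, weight_heavy)


for x in (500.0, 5000.0, 50000.0, 1e6):
    print(x, mixture_survival(x), heavy_share(x))
```

The printed share of the heavy-tailed component is small for small losses and approaches one for very large losses, while the mixture as a whole stays a simple weighted average.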

4.4. Spliced distributions

Pursuing this idea more strictly, one naturally gets to spliced, i.e. piecewise defined, distributions. The basic idea is to just put pieces of two or more different models together. We focus on the case of two pieces, noting that more can be combined in an analogous manner, see, for example, Albrecher et al. (2017).

In the literature, splicing is frequently defined in terms of densities. In order to make it geometrically intuitive and a bit more general, we formulate it via the survival function.

The straightforward approach is to replace the tail of a model by another one:

For survival functions $\bar F_1\left(x\right)$ and $\bar F_2\left(x\right)$, tail replacement of the former at a threshold $\theta > 0$, by means of the latter, yields the survival function

$$\bar F(x) = \begin{cases} \bar F_1(x), & 0 \le x < \theta, \\ \bar F_1(\theta)\,\bar F_2(x), & \theta \le x. \end{cases}$$

Note that, to get a continuous function, the second survival function must be tail-only starting at $\theta$, that is, $\bar F_2\left(\theta\right) = 1$; while the first one may admit the whole range of loss sizes, but its tail is ignored.

We could in principle let the spliced survival function have a jump (mass point) at the threshold $\theta$, but jumps in the middle of an elsewhere continuous survival function are hardly plausible in the real world, such that typically one combines continuous pieces to obtain a continuous function (apart from maybe a mass point at a maximum as it emerges from right censoring). Beyond being continuous, the two pieces often are (more or less) smooth, so it could make sense to demand some smoothness at $\theta$ too. A range of options is provided below.

Tail replacement seems natural, but splicing can be more general. The idea is to start with two distributions that do not intersect:

• The body distribution $\bar F_b\left(x\right)$ has all loss probability between $0$ and $\theta$, that is, $\bar F_b\left(x\right) = 0$ for $\theta \le x$.

• The tail distribution $\bar F_t\left(x\right)$ has all probability above $\theta$, that is, $\bar F_t\left(x\right) = 1$ for $x \le \theta$.

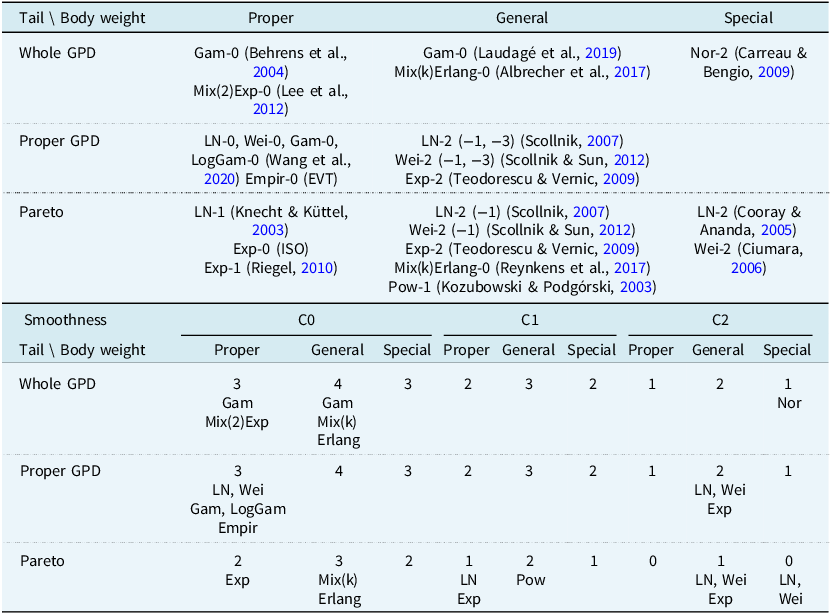

The spliced distribution is simply the weighted average of the two, which means that formally splicing is a special case of mixing, allowing for the same easy calculations. For an overview see Table 1.

Table 1. Structure of a spliced distribution

Note that here the weights can be chosen arbitrarily. The weight $r$ is a parameter and quantifies the probability of a loss being not greater than the threshold $\theta$, while $1 - r$ is the large-loss probability.

If we combine an arbitrary cdf $F_1$ with a tail-only cdf $F_2$ starting at $\theta$, we formally first have to right truncate $F_1$ at $\theta$, which after some algebra yields a compact equation.

For a threshold $\theta > 0$ and two models represented by their survival functions, the body (also called the bulk or head) model $\bar F_1\left(x\right)$ and the tail model $\bar F_2\left(x\right)$, where $\bar F_2\left(\theta\right) = 1$, the distribution model spliced at $\theta$ has the survival function

$$\bar F(x) = \begin{cases} 1 - r\,\dfrac{F_1(x)}{F_1(\theta)}, & 0 \le x < \theta, \\ (1 - r)\,\bar F_2(x), & \theta \le x. \end{cases} \tag{1}$$

The parameters of this model are the threshold or splicing point $\theta$, the body weight $r$ and the parameters of the two underlying models.
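A spliced survival function of this kind can be sketched as follows: the body model is right truncated at $\theta$ and weighted with $r$, the tail model is weighted with $1 - r$. The Lognormal body, the Pareto tail and all parameter values are hypothetical choices made only for illustration.

```python
import math


def lognormal_cdf(x, mu=7.0, sigma=1.0):
    """Body model F_1 (hypothetical choice): Lognormal cdf."""
    if x <= 0.0:
        return 0.0
    return 0.5 * math.erfc(-(math.log(x) - mu) / (sigma * math.sqrt(2.0)))


def pareto_tail_survival(x, theta, alpha=1.8):
    """Tail-only model starting at theta (hypothetical choice): equals 1 up to theta."""
    return (theta / x) ** alpha if x > theta else 1.0


def splice(body_cdf, tail_survival, theta, r):
    """Survival function of the model spliced at theta with body weight r."""
    body_at_theta = body_cdf(theta)

    def survival(x):
        if x < theta:
            # body: cdf right truncated at theta, scaled by the body weight r
            return 1.0 - r * body_cdf(x) / body_at_theta
        # tail: tail-only survival function, scaled by the large-loss probability
        return (1.0 - r) * tail_survival(x, theta)
    return survival


theta = 5000.0
general = splice(lognormal_cdf, pareto_tail_survival, theta, r=0.95)
proper = splice(lognormal_cdf, pareto_tail_survival, theta, r=lognormal_cdf(theta))

print(general(1000.0), proper(1000.0), 1.0 - lognormal_cdf(1000.0))
print(general(theta), proper(theta))
```

With the body weight set to the body model's own cdf value at the threshold, the body part coincides with the original Lognormal survival function; any other weight rescales it.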

Tail replacement is the special case $r = F_1\left(\theta\right)$, where we speak of a proper body (weight).

One could in the definition, more generally, drop the restriction on $\bar F_2$, which then in Formula 1 has to be replaced by its left truncation at $\theta$. However, for Pareto/GPD tails this generalisation is not needed.

The special case $r = F_1\left(\theta\right)$ (proper body) has one parameter less. In all other cases the body part of the spliced distribution is similar to the underlying distribution represented by $F_1$ but not identical: it is distorted via the probability weight of the body. This adds flexibility and can thus greatly improve fits, but it makes interpretation difficult: the parameters of a spliced model having a Lognormal body are comparable to other such models, or to a pure Lognormal model, only for proper bodies. For such bodies one can compare fits to different data and possibly identify typical parameter values for some markets, which makes the parameters interpretable, just like Pareto alphas (and possibly GPD lambdas).

The distinction of proper versus general body weight seems to go largely unnoticed in the literature: typically authors either use proper spliced models (tail replacement) without considering more general splicing, or use arbitrary body weights without mentioning that the resulting body part of the spliced cdf is different from the original one.

In all spliced models, $r$ is an important quantity, describing the percentage of losses below the large-loss area, which for real-world ground-up data should mostly be close to $1$. Yet, in many splicings treated in the literature, $r$ does not explicitly appear, especially when they are defined in terms of pdf. In such cases, however, one can calculate $r$, and should do so: this is a quick way to detect implausible inference results, which may be due to problems with the fit or particular (e.g. incomplete) data.

Although splicing is quite technical, it has a number of advantages. First, the interpretation (smaller versus large losses) is very intuitive. Second, by combining suitable types of distributions, we can precisely achieve the desired geometries in the body and the tail area, respectively, without having the blending effects of traditional mixing. In particular, by tail replacement we can give well-established ground-up distributions a heavier tail, ideally having interpretable parameters in both the body and the tail. Third, splicing offers very different options for parameter inference, as we will see now.

4.5. Inference: the two worlds

Despite some variation in the details, there are in principle two approaches to the estimation of the model parameters. The first one is theoretically more appealing, the second has more practical appeal.

4.5.1. All-in-one inference

The basic idea is that the spliced model can be treated as if it was a traditional model with a compact cdf or pdf equation. This usually means maximum likelihood (ML) estimation of all parameters in one step, requiring in most (but notably not all) cases a smooth pdf or equivalently a C2 cdf. This approach is coherent and well founded on theoretical grounds, but in practice is challenging. Although the C2 condition reduces the number of parameters, the method requires a lot of data. More importantly, it can pose numerical challenges. ML inference (also least squares, etc.) means finding an optimum of a function on a multi-dimensional space, and the splicing makes this space geometrically very complex. In particular, the inference of the splicing point can be difficult, as examples in Section 6 will illustrate.

4.5.2. Threshold-first approach

Alternatively, one can first estimate the threshold $\theta$, then split the empirical data and infer the parameters of body and tail separately. To avoid interaction of the inference in the two areas, one must dispense with smoothness conditions; only continuity of the cdf at $\theta$ can be (and usually is) required. Thus one has more parameters than with smoother models, but inference here is nevertheless technically much easier: in each of the two areas one has a traditional inference problem with rather few parameters.

Determining the threshold where the large-loss area (and the typical tail geometry) starts is admittedly sometimes based on judgement, but it can also be based on statistics, namely Extreme Value Analysis (Albrecher et al., 2017). Technically, the threshold-first option means to:

• set the threshold $\theta$ (according to e.g. preliminary analysis, expert choice, data situation, and so on),

• split the empirical losses into two parts, smaller versus larger than $\theta$,

• calculate the percentage of the smaller losses, which estimates $r$,

• fit the respective models to the smaller/larger losses.
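The steps above can be sketched in a few lines of Python. This is a minimal illustration, not the paper's implementation: it assumes a pure Pareto tail (so the tail fit reduces to the closed-form ML estimate of $\alpha$), it leaves the body fit to a numerical optimiser that is not shown, and the function name `threshold_first_fit` is hypothetical.

```python
import math


def threshold_first_fit(losses, theta):
    """Threshold-first sketch: split at theta, estimate r and a Pareto alpha.

    Assumption (not from the paper): the tail is a pure Pareto starting at
    theta, so alpha has the closed-form ML estimate n / sum(ln(x / theta)).
    """
    # Split the empirical losses into smaller versus larger than theta.
    body = [x for x in losses if x < theta]
    tail = [x for x in losses if x >= theta]
    if not body or not tail:
        raise ValueError("theta must leave losses on both sides")

    # The share of smaller losses estimates the body weight r.
    r_hat = len(body) / len(losses)

    # ML estimate of the Pareto alpha from the losses at or above theta.
    log_excess = sum(math.log(x / theta) for x in tail)
    if log_excess <= 0.0:
        raise ValueError("tail losses must exceed theta")
    alpha_hat = len(tail) / log_excess

    # The body losses would now be passed to a separate body fit.
    return r_hat, alpha_hat, body


losses = [1.0, 2.0, 3.0, 4.0, 10.0 * math.e, 10.0 * math.e ** 2]
r_hat, alpha_hat, body = threshold_first_fit(losses, theta=10.0)
```

Because the tail estimate uses only the losses at or above $\theta$ and the body fit uses only those below, the two inference problems do not interact, which is the point of this approach.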

As an option, proper bodies are possible. Here the inference of the body parameters is altered by the constraint $r = {F_1}\left( \theta \right)$, but is still independent of the tail inference. The inferred parameters are interpretable and can be compared with market experience.

Generally, if the large losses are too few for a reliable tail fit, it may be possible to infer the tail parameters from some larger data set collected from similar business. Such data may be left censored due to a reporting threshold, but they are applicable as long as this threshold is not greater than $\theta$. For the Pareto/GPD tail model there is the additional option to validate its tail-invariant parameters by comparing the inferred ones with typical market values.

5. The Lognormal-Pareto world

Let us now apply the splicing procedure to the Lognormal and the proper GP distribution. Starting from the most general case and successively adding constraints, we get a hierarchy (more precisely a partially ordered set) of distributions. While some of the distributions were published earlier, mainly by Scollnik (2007), the overall system and its compact notation for the models are our contribution. As before we mostly show the survival function $\bar F\left( x \right)$.

5.1. General model

Using the common notation ${\rm{\Phi }}$ (and later $\phi$) for the cdf (pdf) of the standard normal distribution:

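The LN-GPD-0 model has the survival function

$$\bar F\left( x \right) = \begin{cases} 1 - \dfrac{r}{\Phi \left( \frac{\ln \theta - \mu }{\sigma } \right)}\,\Phi \left( \dfrac{\ln x - \mu }{\sigma } \right), & 0 \le x \lt \theta , \\ \left( 1 - r \right){\left( \dfrac{\theta + \lambda }{x + \lambda } \right)^\alpha }, & \theta \le x. \end{cases}$$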

This is a continuous function in six parameters, inheriting $\mu$ and $\sigma$ from Lognormal, $\alpha$ and $\lambda$ from proper GP, plus the splicing point $\theta$ and the body weight $r$. As for the parameter space, $\mu$ can take any real value; $\sigma ,\theta ,\alpha \gt 0$; $\lambda \gt - \theta$; $0 \lt r \lt 1$. Limiting cases are Lognormal ($r = 1$, $\theta = \infty$) and proper-GP-only ($r = 0$).

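To simplify the notation for the Normal distribution, we will sometimes write, in short,

$${\Phi _x} = \Phi \left( \frac{\ln x - \mu }{\sigma } \right), \qquad {\phi _x} = \phi \left( \frac{\ln x - \mu }{\sigma } \right).$$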

The body part of LN-GPD-0 then compactly reads $1 - {r \over {{\Phi _\theta }}}{\Phi _x}$.
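To make the six-parameter model concrete, the following sketch evaluates the LN-GPD-0 survival function using only the Python standard library. The body follows the compact form $1 - \frac{r}{\Phi_\theta}\Phi_x$ stated above; the tail is assumed here to be the proper GP survival function in the form $\left(\frac{\theta+\lambda}{x+\lambda}\right)^\alpha$, scaled by $1-r$. The function name `ln_gpd0_survival` is hypothetical.

```python
import math
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal: .cdf is Phi, .pdf is phi


def ln_gpd0_survival(x, mu, sigma, theta, alpha, lam, r):
    """Survival function of the spliced LN-GPD-0 model (illustrative sketch)."""
    if x <= 0.0:
        return 1.0  # losses are positive, so nothing lies below zero

    def big_phi(y):
        # Shorthand Phi_y = Phi((ln y - mu) / sigma).
        return STD_NORMAL.cdf((math.log(y) - mu) / sigma)

    if x < theta:
        # Body: Lognormal cdf rescaled so that the cdf reaches r at theta.
        return 1.0 - r / big_phi(theta) * big_phi(x)

    # Tail (assumed proper-GP form): weight 1 - r at theta, decaying with alpha.
    return (1.0 - r) * ((theta + lam) / (x + lam)) ** alpha


params = dict(mu=0.0, sigma=1.0, theta=2.0, alpha=1.5, lam=1.0, r=0.8)
at_threshold = ln_gpd0_survival(2.0, **params)
just_below = ln_gpd0_survival(2.0 - 1e-9, **params)
```

Evaluating just below and exactly at $\theta$ gives values that agree up to rounding, both close to $1 - r$, which reflects the continuity of the cdf at the splicing point.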

For more flexibility beyond a proper-GP tail, one can replace the latter by the whole GPD, using the standard parameters $\xi$, $\tau$ or the tail-invariant $\xi$, $\omega$. The resulting model in six parameters has the same dimension, just a larger parameter space. However, the main motivation of splicing is to get a considerably heavier tail than the body distribution has. For the Lognormal model this means attaching a proper GPD, which is why we (and many other authors) mainly look at this case.

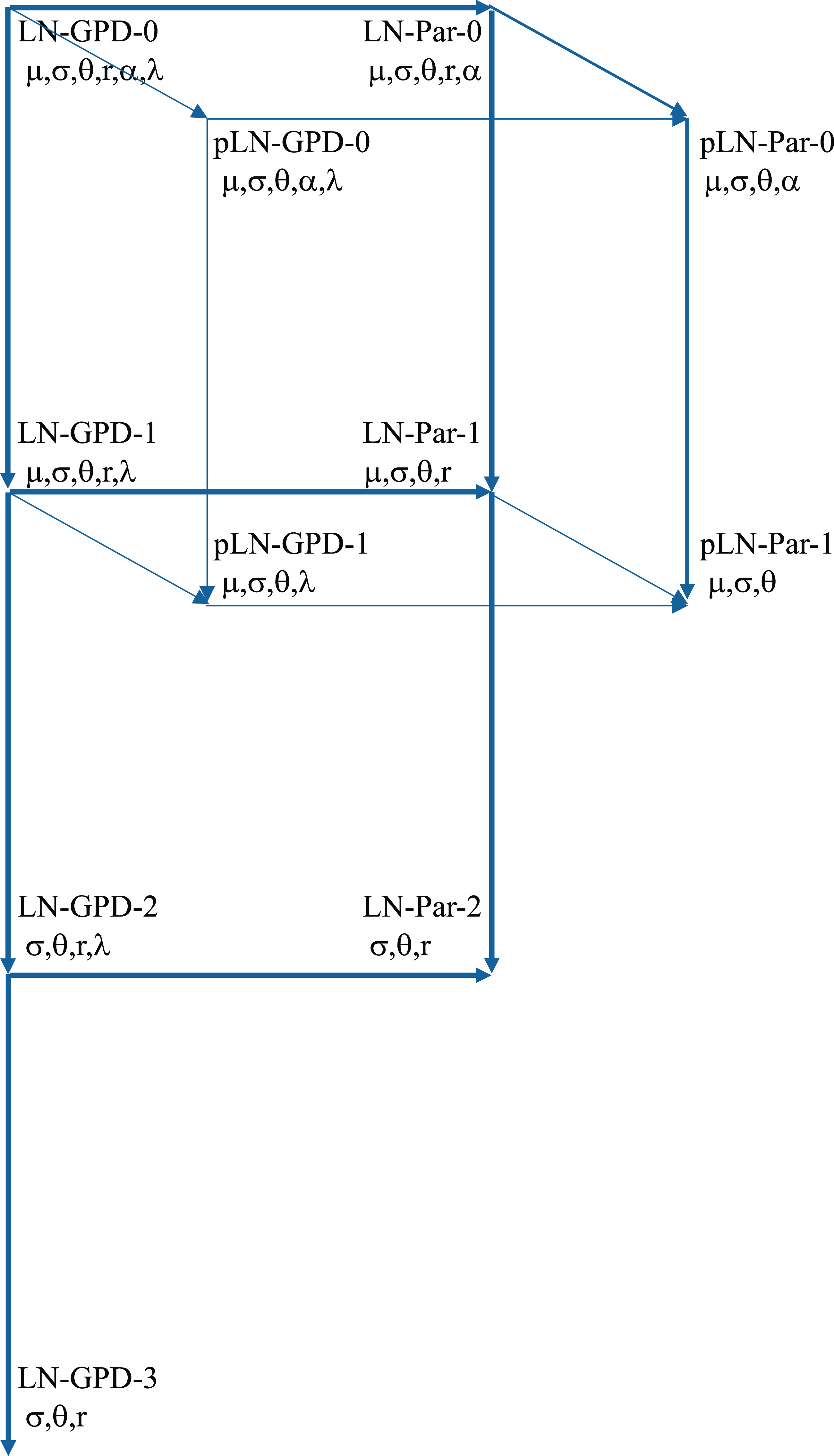

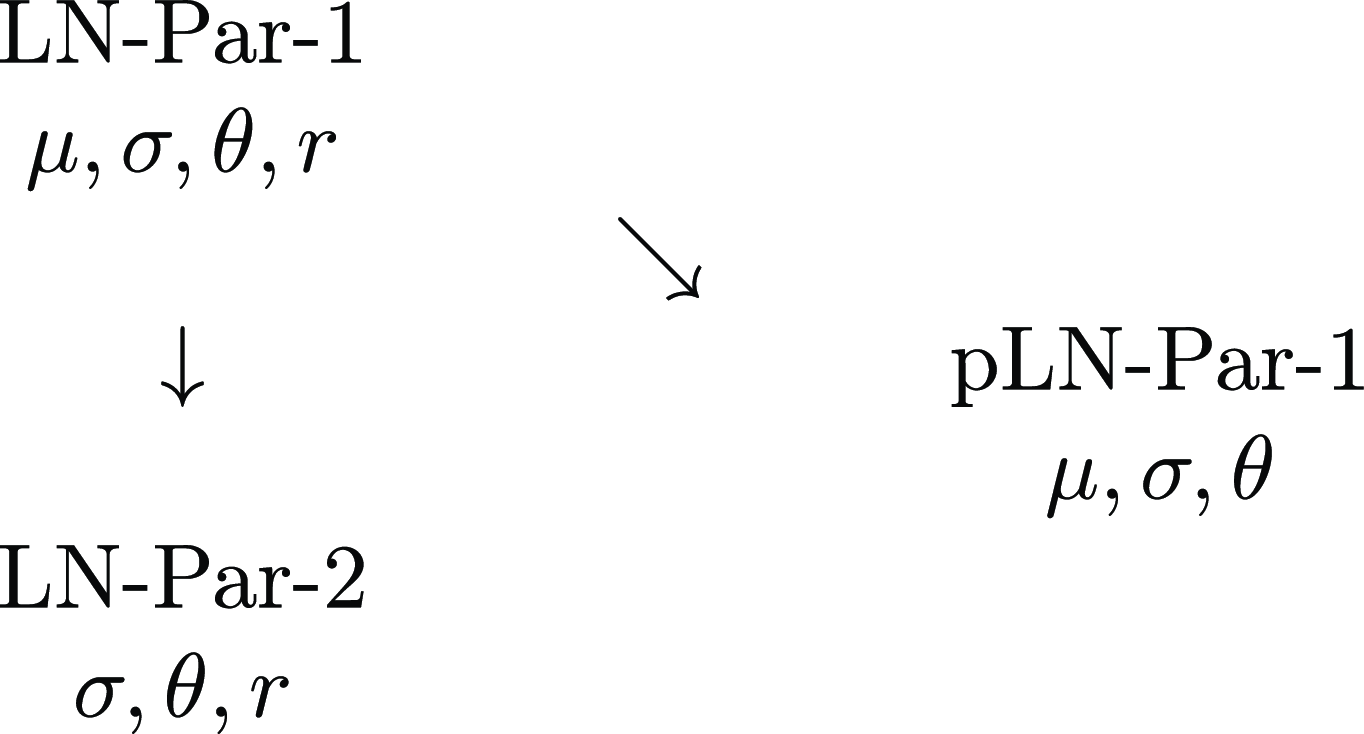

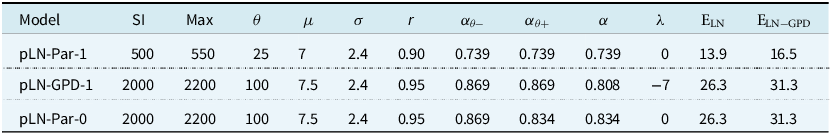

5.2. Natural submodels

From the above six-parameter model we can derive special cases, having fewer parameters, in three straightforward ways:

Tail: We can choose a Pareto tail, that is, set $\lambda = 0$. This is always possible, whatever values the other parameters take. We call the resulting model LN-Par-0.

Body weight: The distribution in the body area is in general not exactly Lognormal; instead it may be distorted via the weight $r$. For a Lognormal body (tail replacement), one must set the body weight

$$r = {\Phi _\theta } = \Phi \left( \frac{\ln \theta - \mu }{\sigma } \right).$$

This choice is always possible, whatever values the other parameters take. We call this model pLN-GPD-0, where “pLN” means proper Lognormal.
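As a quick check, assuming the body part has the form $1 - \frac{r}{\Phi_\theta}\Phi_x$ from Section 5.1, substituting the proper-body constraint $r = F_1(\theta) = \Phi_\theta$ removes the distortion, and the body is exactly the Lognormal survival function:

$$\bar F\left( x \right) = 1 - \frac{\Phi _\theta }{\Phi _\theta }\,{\Phi _x} = 1 - \Phi \left( \frac{\ln x - \mu }{\sigma } \right), \qquad 0 \le x \lt \theta .$$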

Smoothness: If we want the distribution to be smooth, we can require that the pdf be continuous, or more strongly the derivative of the pdf too, and so on. Analogously to the classes C0, C1, C2, … of more or less smooth functions we call the resulting distributions LN-GPD-0, LN-GPD-1, LN-GPD-2, …, according to how many derivatives of the cdf are continuous.

How many smoothness conditions can be fulfilled must be analysed step by step. For C1 the pdf at $\theta -$ and $\theta +$ must be equal. Some algebra yields the following equations, coming in three equivalent variants:

$$\frac{\alpha }{\theta + \lambda } = \frac{\phi _\theta }{\sigma \theta \,{\Phi _\theta }}\,\frac{r}{1 - r}, \qquad \frac{\alpha \theta }{\theta + \lambda } = \frac{\phi _\theta }{\sigma \,{\Phi _\theta }}\,\frac{r}{1 - r}, \qquad \alpha = \frac{\theta + \lambda }{\theta }\,\frac{\phi _\theta }{\sigma \,{\Phi _\theta }}\,\frac{r}{1 - r}.$$

The second equation describes the local Pareto alpha at $\theta +$ and $\theta -$, respectively, while the third one makes clear that one can always find an $\alpha \gt 0$ fulfilling the C1-condition, whatever values the other parameters take.
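The C1-condition can be checked numerically. The sketch below assumes the densities on either side of $\theta$ are $\frac{r}{\Phi_\theta}\frac{\phi_\theta}{\sigma\theta}$ (rescaled Lognormal body) and $(1-r)\frac{\alpha}{\theta+\lambda}$ (proper-GP tail). It solves for the $\alpha$ that equates them and evaluates both sides. All function names are hypothetical.

```python
import math
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal: .cdf is Phi, .pdf is phi


def c1_alpha(mu, sigma, theta, lam, r):
    """Alpha that makes the spliced pdf continuous at theta (sketch)."""
    z = (math.log(theta) - mu) / sigma
    # Local Pareto alpha of the body at theta-, rescaled by (theta + lam) / theta.
    local_alpha = STD_NORMAL.pdf(z) / (sigma * STD_NORMAL.cdf(z)) * r / (1.0 - r)
    return (theta + lam) / theta * local_alpha


def pdf_left(mu, sigma, theta, r):
    """Density of the rescaled Lognormal body at theta-."""
    z = (math.log(theta) - mu) / sigma
    return r / STD_NORMAL.cdf(z) * STD_NORMAL.pdf(z) / (sigma * theta)


def pdf_right(theta, alpha, lam, r):
    """Density of the weighted proper-GP tail at theta+."""
    return (1.0 - r) * alpha / (theta + lam)


alpha = c1_alpha(mu=0.0, sigma=1.0, theta=2.0, lam=1.0, r=0.8)
gap = pdf_left(0.0, 1.0, 2.0, 0.8) - pdf_right(2.0, alpha, 1.0, 0.8)
```

With this $\alpha$ the two one-sided densities agree up to floating-point error, and $\alpha$ is positive for any admissible choice of the other parameters because every factor in the formula is positive.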

Note that all LN-GPD variants with continuous pdf must be unimodal: the proper-GP density is strictly decreasing (this holds more generally for the GPD with $\xi \gt - 1$), so the pdf of any smooth spliced model with proper-GP tail must have negative slope at $\theta$. Hence, the mode of the Lognormal body must be smaller than $\theta$ and is therefore also the (unique) mode of the spliced model. This gives the pdf the (often desired) shape of an asymmetric bell curve with a heavy tail.

If the pdf is instead discontinuous at $\theta$, the resulting spliced C0 model can be bimodal. Say the Lognormal mode is smaller than

$$\theta $$