Adequate assessment of the clinical and administrative burden of hospital-acquired infections (HAIs) is of major epidemiological interest [Reference Grambauer1–Reference Núñez-Núñez4]. In a time-to-event analysis, the influence of these exposures on an outcome of interest, often alongside other risk factors, is usually assessed with a Cox regression model. The resulting hazard ratio (HR) relates the hazard of exposed individuals to that of unexposed individuals at the event times. Conventional epidemiological cohort studies require complete covariate information on all individuals irrespective of the exposure and outcome prevalence. The ascertainment of these risk factors is often expensive and may be a huge endeavour. To decrease the economic burden, conventional sampling designs that require covariate information from all patients with an outcome event, but only from a small proportion of individuals without an outcome event have been introduced. More specialised, sampling designs for time-to-event data require covariate information from all patients with an outcome event, but only from a small proportion of individuals without an outcome event. Popular examples are the nested case-control (NCC) [Reference Thomas5, Reference Borgan6] and the case-cohort design [Reference Prentice7]. Those designs result in competitive estimates compared to the full cohort analysis while at the same time remarkably reducing the necessary resources allocated to the study.

Rare time-dependent exposures but frequent subsequent outcome events are common when assessing the burden of HAIs. In such a situation, nested-case control or case-cohort designs no longer result in the emphasised reduction of resources [Reference Feifel8]. Two-phase sampling designs form more informative samples to assess the association of the exposure by exploiting information on the risk factors of primary interest [Reference Lin9, Reference Borgan10]. They even enable the use of cohort (phase I) data in an estimation based on subsampled (phase II) data [Reference Breslow11, Reference Borgan and Keogh12]. Nonetheless, while conventional two-phase designs reduce the required information based on observed outcome events, they frequently result in too large sample sizes [Reference Feifel8]. Recently, Ohneberg et al. [Reference Ohneberg, Beyersmann and Schumacher13] and Feifel et al. [Reference Feifel8] proposed two schematically different sampling methods, namely exposure density sampling (EDS) and the nested exposure case-control (NECC) design. Being capable of a robust HR estimation while utilizing only limited resources, both NECC and EDS offer researchers an attractive alternative to the expensive full cohort analysis [Reference de Kraker14] while avoiding one of the most serious types of bias – the immortal time bias [Reference Suissa15].

Moreover, in epidemiological studies, cases and controls are often matched on risk factors that are uncomplicated to ascertain. Both exposure-related sampling schemes permit additional matching. Therefore, they enable an estimation that is adjusted for confounders. The model flexibility is increased by matching as assumptions that usually have to be fulfilled for all variables in a statistical model, are not necessary for the matched variables.

This paper revisits NECC and EDS sampling for rare exposures but common and uncommon outcome events. First, we introduce the theoretical background of the two methods. Then, we analyse patients hospitalised in the neuro-intensive care unit (ICU) at the Burdenko Neurosurgery Institute (NSI) in Moscow, Russia [Reference Savin16]. Here, the association of three rare time-dependent infections with different prevalence on length-of-stay in ICU as the outcome event is investigated.

Another frequently observed type of bias is the competing risk bias [Reference Andersen17], which can be adequately addressed by both NECC and EDS. To evaluate their performance in this situation, we extend the data analysis to investigate ICU mortality considering discharge alive as a competing risk.

Methods

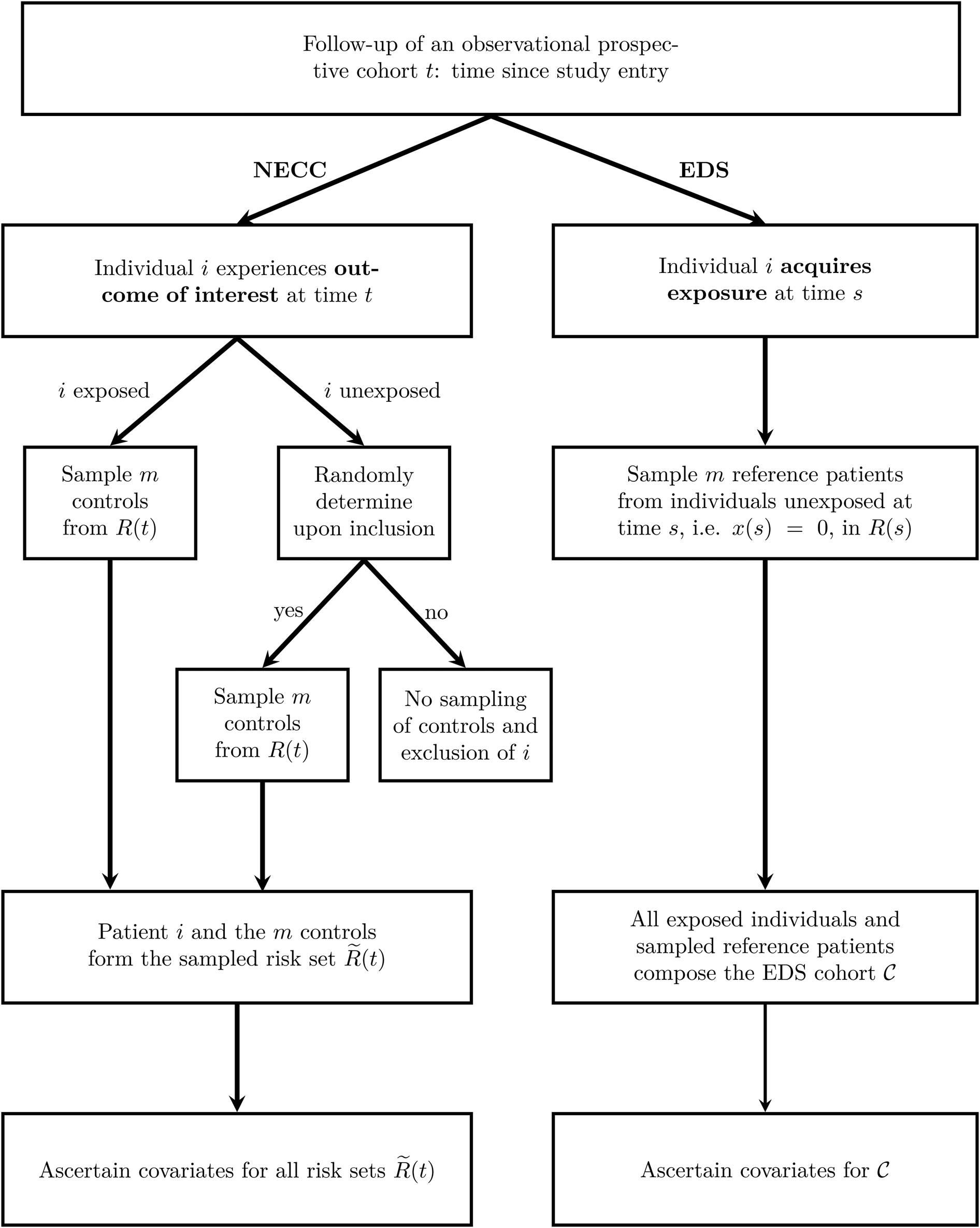

The two sampling schemes are outlined in Figure 1.

Fig. 1. Outline of the two sampling schemes NECC and EDS. The time since study entry is denoted by t. The risk set at time t for the full cohort is R(t) and for the NECC cohort is $\tilde{R}( t )$![]() . The time-dependent exposure state at time t is denoted by x(t), with x(t) = 1 indicating that the patient has acquired the exposure by time t and x(t) = 0 indicating otherwise. Finally, ${\cal C}$

. The time-dependent exposure state at time t is denoted by x(t), with x(t) = 1 indicating that the patient has acquired the exposure by time t and x(t) = 0 indicating otherwise. Finally, ${\cal C}$![]() denotes the EDS cohort, which is all exposed individuals and the sampled reference patients. NECC, nested exposure case-control design, EDS, Exposure density sampling.

denotes the EDS cohort, which is all exposed individuals and the sampled reference patients. NECC, nested exposure case-control design, EDS, Exposure density sampling.

NECC [Reference Feifel8] allocates for each exposed individual i who experiences the outcome of interest at time t a predefined number of controls, denoted by m. Additionally, a small fraction (q) of unexposed individuals that experience the outcome of interest controls are selected. For both exposed and the randomly chosen unexposed individuals, the controls are sampled at the event times accordingly and information on the risk factors is ascertained. Eligible controls are all individuals that have not experienced the outcome of interest before the time t and have not been censored. They are referred to as risk set R(t). For example, when hospital discharge is the outcome, the R(t) comprises all individuals with a length of stay at least as long as that of the individual discharged at time t. NECC corresponds to the conventional NCC design if q is chosen to be one [Reference Thomas5, Reference Borgan6].

EDS [Reference Ohneberg, Beyersmann and Schumacher13] selects for each exposed individual at time s, the time of exposure, m unexposed individuals from R(s) as reference patients. Although showing similarities to controls in nested (exposure) case-control designs, reference patients are allowed to change their exposure status if the exposure is acquired at a later time point than the sampling time. All exposed individuals and all sampled reference patients constitute one cohort, denoted by ${\cal C}$![]() . Herein, all individuals are observed until the outcome or a censoring event. The difference compared to a full cohort is, that patients enter with left-truncated entry times. Left-truncated cohorts or delayed entry studies allow for increasing and decreasing risk sets with progressing time. For EDS, the delayed entry takes place upon sampling.

. Herein, all individuals are observed until the outcome or a censoring event. The difference compared to a full cohort is, that patients enter with left-truncated entry times. Left-truncated cohorts or delayed entry studies allow for increasing and decreasing risk sets with progressing time. For EDS, the delayed entry takes place upon sampling.

Figure 2 illustrates the fundamental concepts of the NECC and EDS design on a fictional ICU cohort with individuals A–J. Here, we provide only a brief description of the sampling. A more detailed explanation also including the estimation concept is in the appendix provided in the Supplementary material.

Fig. 2. Illustration of the NECC design and the EDS using a fictional cohort of 10 individuals (A–J). NECC, nested exposure case-control; EDS, exposure density sampling.

For the NECC design, patient A is included as an unexposed individual with outcome event, and we randomly allocate one control from B, C,…, J, the persons at risk. I is selected and the sampled risk set $\tilde{R}( {t_3} )$![]() consists of A and I. This concept is similar for patients having an outcome event with prior exposure like C. Here, H and C form the set $\tilde{R}( {t_6} )$

consists of A and I. This concept is similar for patients having an outcome event with prior exposure like C. Here, H and C form the set $\tilde{R}( {t_6} )$![]() . For patients with censored observation (see patient E) or with unobserved outcome event that is not selected, no controls are sampled and they will be excluded.

. For patients with censored observation (see patient E) or with unobserved outcome event that is not selected, no controls are sampled and they will be excluded.

For EDS design, the time of exposure is essential. The first exposure in the fictional cohort takes place at t 1. Here, the currently unexposed referent F is allocated to patient G, having the exposure event at that moment. The delayed entry time of G and F is t 1. At the time t 2, the exposed individual C and matched patient A enter the cohort. All individuals except for C and G serve as potential matching candidates. The latter is exposed already, therefore, not eligible as a reference patient anymore. Now, the cohort ${\cal C}$![]() contains the individuals G, F, C, and A. The final EDS cohort ${\cal C}$

contains the individuals G, F, C, and A. The final EDS cohort ${\cal C}$![]() with left-truncated entry times is A, C, F, G, I, and J. The sampling scheme of EDS focuses on exposure times. Thus, individuals with outcome event not yet included in the EDS cohort remain unconsidered.

with left-truncated entry times is A, C, F, G, I, and J. The sampling scheme of EDS focuses on exposure times. Thus, individuals with outcome event not yet included in the EDS cohort remain unconsidered.

HR estimation

A cohort of n individuals, where the hazard rate α i(t) for each individual i follows a Cox proportional hazards [Reference Cox18] model $\alpha _i( t ) = \alpha _0( t ) \exp ( {\beta_1x_{i1}( t ) + \beta_2x_{i2} + \ldots + \beta_kx_{ik}} )$![]() , is assumed with the time since admission to the hospital as a time scale. The exposure of primary interest x i1(t), is time-dependent and characterises whether the exposure of individual i has occurred by time t, x i1(t) = 1, or not x i1(t) = 0. The other covariates are time-independent baseline covariates [Reference Kalbfleisch and Prentice19]. The regression coefficients β 1, …, β k describe the association between the k covariates and the baseline hazard. A maximum likelihood estimator for partial likelihood determines the (log-)HR of the Cox regression [Reference Oakes20]. At each event time t, the covariates of the individual with the outcome event are compared to those of individuals in R(t). The standard error (s.e.) may be obtained from the inverse of the information matrix.

, is assumed with the time since admission to the hospital as a time scale. The exposure of primary interest x i1(t), is time-dependent and characterises whether the exposure of individual i has occurred by time t, x i1(t) = 1, or not x i1(t) = 0. The other covariates are time-independent baseline covariates [Reference Kalbfleisch and Prentice19]. The regression coefficients β 1, …, β k describe the association between the k covariates and the baseline hazard. A maximum likelihood estimator for partial likelihood determines the (log-)HR of the Cox regression [Reference Oakes20]. At each event time t, the covariates of the individual with the outcome event are compared to those of individuals in R(t). The standard error (s.e.) may be obtained from the inverse of the information matrix.

The estimation of the log HR utilizing NECC and EDS data is also based on a partial likelihood [Reference Feifel8]. Both likelihoods are evaluated at the times when the outcome of interest occurred. The sampling and event times coincide for NECC designs. For EDS the sampling time and the time of exposure acquisition are the same.

NECC data can, equivalent to the partial likelihood, be evaluated with a conditional logistic regression as explained in Borgan and Samuelsen [Reference Borgan and Samuelsen21] and extended in Feifel et al. [Reference Feifel8]. The NECC analysis is stratified in time with the sampled risk sets $\tilde{R}( t )$![]() forming the strata. In these sampled risk sets each case is explicitly compared to its allocated controls. The latter aims at approximating all patients still at risk R(t). This approximation is supported by weights to mimic the multitude of individuals at risk, which usually would have been considered. For each individual i the weight is q if the individual is currently unexposed and one if not. This weight varies with the exposure status and requires alteration even within individuals.

forming the strata. In these sampled risk sets each case is explicitly compared to its allocated controls. The latter aims at approximating all patients still at risk R(t). This approximation is supported by weights to mimic the multitude of individuals at risk, which usually would have been considered. For each individual i the weight is q if the individual is currently unexposed and one if not. This weight varies with the exposure status and requires alteration even within individuals.

EDS data are analysed in the same way as a full cohort. The only difference is that the patients enter the analysis with left-truncated entry times. Therefore, both designs respect the time-dependent nature of the exposure.

Additional matching for sampled cohorts

A stratified version of the Cox model accounts for additional covariates like confounders. Here, the baseline hazard is allowed to differ, whereas the HR of the exposure of interest presumably stays the same for all strata. For an individual i the hazard rate in stratum c takes the form α i(t) = α 0c(t)exp (β 1x i1(t)). For a stratified baseline hazard α 0c, restricted sampling of eligible controls or reference individuals is necessary. For NECC, suitable controls are in R(t) and have the same (or similar) covariates as the individual that experiences the event of interest. For EDS, the eligible referents are all not yet exposed to patients at risk with currently similar covariates as the individual who becomes exposed. This procedure is referred to as additional matching.

The partial likelihood for NECC still applies. The stratification is already adjusted for when considering the sampled risk sets. Thus the estimation of the HR remains the same as before. The analysis of the EDS requires an adjustment on the stratification variable. A stratified Cox regression is comparable to a conventional full cohort approach but accounts for the left-truncated entry times to analyse the EDS cohort.

Both designs allow the matching to be performed only in some of the baseline covariates. The remaining covariates can then be adjusted within the stratified regression model.

Competing risks

When competing risks are present (for simplicity, we assume two competing events), a cause-specific Cox model is fitted for each competing event. Separate sampling designs are performed as described previously. Now, an individual experiencing event 1 is not at risk for event 2 anymore (and vice versa). In the cause-specific analysis, they are treated similarly to censored individuals. NECC allows for further modifications to enhance the performance at the sampling phase. Different inclusion probabilities q 1 and q 2 can be chosen for each competing event. For example, selecting a larger inclusion probability for rare events compared to common outcomes (see also the results section). The weights for each event type are then calculated using q 1 or q 2, respectively. Different numbers of controls are also possible.

The competing risk scenario mainly affects the estimation stage. NECC considers the sampled risk sets only for the particular event to estimate the HR. Thus, the NECC partial likelihood for one of the competing events considers only the risk sets with the corresponding outcome event. As in the full cohort analysis, the EDS cohort censors all individuals that experience a competing event when focusing on the HR for the event of interest. This analysis is performed for each competing event. An evaluation of the partial likelihood is done at every event time of the particular event type. Nevertheless, as sampling is exposure rather than outcome-based, all unexposed individuals at risk are eligible as reference patients. This implies primarily that the cause-specific Cox regressions for all event types can be performed on the same EDS subsample.

Results

We analyse patients from the neuro-ICU at the Burdenko NSI in Moscow, Russia. The prospective single-centre cohort study was conducted between 2010 and 2018 and includes 2249 patients who stayed in the ICU for more than 48 h, thus at risk of acquiring an HAI during the ICU stay [Reference Savin16]. In other words, patients enter the study conditional on being in the ICU for at least 48 h. During the follow-up time of 60 days, 685 (30%) patients captured a hospital-acquired urinary tract infection, 211 (9%) a central nervous system infection and 92 (4%) a wound infection. At 60 days, patients are administratively censored (n = 122). Among the 2127 patients with an observed event, 304 patients died in the ICU and 1823 were discharged alive. We use NECC and EDS to study the association between different HAIs and the length of ICU stay, the combined endpoint discharge alive and hospital death. The time of infection is considered both in the sampling design and in the Cox regression to avoid immortal time bias. To adjust for confounding by severity of illness, we control for the Charlson comorbidity score by matching or respectively stratification for the full cohort. The two designs are compared to the full cohort and each other using one, two and four controls/reference patients. To show the differences in efficiency and performance, we also present the results of the conventional NCC. For NECC, we use a sampling probability of 10% (i.e. q = 0.1) for unexposed individuals who experience the outcome of interest.

To obtain a complete picture of the performance of the two designs and the full cohort approach, we draw 1000 bootstrap samples (with replacement) from the original full cohort data [Reference Efron22]. For each bootstrap sample, we estimate the HR using the full cohort approach, the NECC, the EDS and the NCC with the number of controls/reference patients mentioned above. Bootstrap analysis has several advantages. First, each design's empirical s.e.s can be compared to the mean model-based s.e.s. Second, the results provide not only information on the average performance and efficiency of the sampling designs compared to the full cohort approach, but they also allow for an understanding of how well the various methods (including the full cohort) estimate the true HR of the population [Reference Feifel8, Reference Ohneberg, Beyersmann and Schumacher13]. In contrast, a comparison of the designs solely based on the original samples would only allow for a comparison of the NECC's and EDS's estimates and s.e.s with those of the full cohort as reference (gold standard).

We present the mean of the log-HR, the HR and the s.e.s. of the 1000 bootstrap samples. Moreover, we show the mean number of individuals used for the analysis and the mean number of observed events for these individuals. The results for the length of stay are in Table 1. As no differences are observable between the mean model-based s.e.s and sampling s.e.s, we only present the sampling s.e.s.

Table 1. Average results based on 1000 bootstrap samples of the original data for the composite endpoint length of ICU stay

EDS and NECC designs with one, two and four controls/reference patients, NCC design and Cox regressions on the full cohort, have been performed. ICU, intensive care unit; NCC, Nested case-control; EDS, Exposure density sampling; NECC, Nested exposure case-control; CNSI, Central nervous system infection; UTI, Urinary tract infection; WI, Wound infection.

a Number of distinct individuals included.

b Number of events included.

c Adjusted log-hazard ratio of infection.

d Total empirical standard error of log-hazard ratio.

Overall, the differences between the mean HR of the sampling designs and the full cohort are marginal. For the EDS, the largest difference is observed for urinary tract infections (full cohort: 1.09, EDS: 1.11). The largest s.e. of EDS occurs for wound infections with one control/reference patient (s.e. of EDS: 0.19, s.e. of full cohort: 0.11). NECC performs a bit less accurate. The most substantial different HR compared to the full cohort is found for wound infections with one allocated individual (mean HR of NECC: 0.75, mean HR full cohort approach: 0.70). This setting is also the one with the largest s.e., being 0.36 for the NECC. The sample size of the NECC and EDS depends on the prevalence of exposure and the predefined number of sampled controls/reference patients. The higher the prevalence, the more controls/reference patients must be sampled and matched to the exposed patients. As to be expected, the s.e.s decrease with an increasing number of controls/reference patients.

Comparing NECC and EDS, we observe that EDS outperforms NECC in all settings. For exposures with a high prevalence (urinary tract infection), the sample size is comparable. Nonetheless, the s.e.s of EDS are much smaller. For exposures with a low prevalence, estimation with EDS is based on fewer observations. Yet, the s.e.s of EDS remain below those of NECC. The efficient use of controls/reference patients explains this. After sampling with EDS, all reference patients and cases enter the analysis and contribute to the risk set until their event time. In contrast, at each event time, the NECC risk sets are based only on the case, and the controls matched for this specific case. As the outcome is observed for practically all patients, the NCC includes even for the 1:1 sampling almost all patients. Thus, compared to the NCC, the NECC is a more suitable extension of this conventional two-sampling design. The results for NCC designs with more controls are not shown, as they do not provide any additional information.

Regarding the clinical interpretation of the results, we find that both central nervous system and wound infections increase the length of ICU stay. Here, the HR is smaller than one (full cohort 95% confidence interval central nervous system infection: 0.62, 0.85; wound infection: 0.56, 0.88), implying a reduction of the discharge hazard compared to uninfected patients. The sampling designs lead dependent on the number of controls or reference patients m to comparable results. Surprisingly, the acquisition of urinary tract infections shows a tendency to be negatively associated with the length of stay (full cohort 95% confidence interval: 0.97, 1.23). The following analysis provides more details on whether this potential decrease is attributable to death rather than earlier discharge alive.

The dataset provides the opportunity to perform a competing risks analysis and to investigate the performance of the two designs for ICU mortality, which is an outcome with a rather low prevalence (≈14%). Thus, we employ two cause-specific Cox regression models, one for discharge alive and one for death in the ICU. To account for the small number of observed deaths, we use a NECC with q 1 of 20% for the cause-specific analysis of in-ICU mortality. For discharge alive in the cause-specific analysis we apply q 2 = 0.1. The results of the competing risks analysis are in Table 2 for the event type death. As most patients are discharged alive (≈81%), the performance of the sampling designs for this event type are similar to the performance observed for length of stay (see Table S1 in the appendix provided in the Supplementary material). Regarding ICU mortality, both sampling designs provide valid approximations of the results for the full cohort approach despite the additional reduced number of observed outcome events. Nonetheless, the results are less accurate than for frequent outcomes. The most substantial difference between the mean HR of EDS and the full cohort occurs for wound infections with one reference patient (EDS: 1.13, full cohort: 1.06). The s.e. is accordingly the highest in this setting (s.e. of EDS: 0.44, s.e. of full cohort: 0.28). However, also the sample size is significantly decreased (EDS: 176, full cohort: 2249).

Table 2. Average results based on 1000 bootstrap samples of the original data for the endpoint ICU mortality

EDS and NECC designs with one, two and four controls/reference patients, NCC design and Cox regressions on the full cohort, have been performed. ICU, intensive care unit; NCC, Nested case-control; EDS, Exposure density sampling; NECC, Nested exposure case-control; CNSI, Central nervous system infection; UTI, Urinary tract infection; WI, Wound infection.

a Number of distinct individuals included.

b Number of events included.

c Adjusted log HR of infection.

d Total empirical standard error of log-HR.

Considering the NECC, the most substantial difference compared to the full cohort approach is found for central nervous system infections (mean HR NECC: 1.77, mean HR full cohort: 1.58). The highest s.e. of the NECC again occurs for wound infections with one control (s.e. of NECC: 0.61, s.e. of full cohort approach: 0.28). The loss of power is paid off by an apparent increase in cost-effectiveness. 1:1 NECC uses, on average, only 144 patients for the estimation of the HR.

Compared to EDS, we find that NECC has larger s.e.s in all settings, implying that EDS provides on average more precise estimates of the HR. However, despite a larger inclusion probability chosen for NECC (q = 0.2 rather than 0.1), EDS leads to larger sample size for those exposures with a high prevalence (urinary tract infections and central nervous system infections). Given the amount of ascertained covariate information, the NECC may be an attractive alternative here. For urinary tract infections a 1:4 NECC has 45% higher s.e. while using one 49% of the individuals sampled for the 1:1 EDS. Thus, there may be a beneficial trade-off between cost-effectiveness and loss of power when choosing the NECC over the EDS in settings with a rare outcome and a more frequently observed exposure.

The clinical interpretation of the HRs (Table S1 in the Supplementary material) indicates that both central nervous system and wound infections lead to a decreased discharge hazard (full cohort 95% confidence interval central nervous system infection: 0.50, 0.71; wound infection: 0.49, 0.82). The death hazard for central nervous system infections is increased (full cohort 95% confidence interval central nervous system infection: 1.13, 2.21), and thus, the risk of death in the ICU. The association is direct via an increased death hazard and indirect via a decreased discharge hazard. The decreased discharge hazard leads to an extended length of stay, implying that infected patients are longer at risk of dying in the ICU. In contrast, the tendency for a reduced length of stay we previously found for patients acquiring urinary tract infections (or increased for wound infections) cannot finally be explained by a higher (lower) mortality rate. We cannot detect a significant association with the death hazard. In contrast, the discharge-alive hazard is significantly increased, resulting in a decreased length of stay due to faster discharge. However, as we only adjusted for the Charlson score and not, for e.g., other potential infections, we refrain from drawing any clinical conclusion and suspect that additional confounding is still unaccounted.

Inflation factors

The inflation factor (IF) of an exposure-related cohort sampling method is the ratio of the s.e.s of the sampling design and the full cohort. It quantifies the information obtained in the sampled data compared to the full cohort. An IF of 2 suggests that two-sampled cohorts are of the same statistical power as one full cohort. Especially prior to the sampling, the IF provides a rough assessment of the loss of statistical power. The squared reciprocal IF is referred to as relative efficiency [Reference Borgan and Samuelsen21, Reference Cai and Zheng23]. In studies considering a negligible association between a single exposure and the outcome event, the formula-based IF of NECC is ${\rm I}{\rm F}_{{\rm NECC}} = \sqrt {( {m + 1} ) /( {m( {P-qP + q} ) } ) }$![]() . Here, q is the NECC's inclusion probability for unexposed individuals who experience the outcome of interest. The number of controls is m. The prevalence of the exposure is P. As the exposure is time-dependent, P refers to the proportion of exposed patients at the end of follow-up. The IFNECC with q = 1 equals the IF of the NCC $\sqrt {( {m + 1} ) /m}$

. Here, q is the NECC's inclusion probability for unexposed individuals who experience the outcome of interest. The number of controls is m. The prevalence of the exposure is P. As the exposure is time-dependent, P refers to the proportion of exposed patients at the end of follow-up. The IFNECC with q = 1 equals the IF of the NCC $\sqrt {( {m + 1} ) /m}$![]() . This factor holds independent of the censoring and exposure distribution [Reference Goldstein and Langholz24].

. This factor holds independent of the censoring and exposure distribution [Reference Goldstein and Langholz24].

Ohneberg et al. [Reference Ohneberg, Beyersmann and Schumacher13] derived for the EDS ${\rm I}{\rm F}_{{\rm EDS}} = \sqrt {( {1-P} ) ( {m + 1} ) /m}$![]() . Here, m is the number of reference patients per exposed individual. Note the formula-based IF yields a rough approximation, since the time-dependence of the exposure is not taken into account. Additional covariates in the model or large HRs of the time-dependent exposure further alter the proposed designs' efficiency. The precision of the IFs is also affected.

. Here, m is the number of reference patients per exposed individual. Note the formula-based IF yields a rough approximation, since the time-dependence of the exposure is not taken into account. Additional covariates in the model or large HRs of the time-dependent exposure further alter the proposed designs' efficiency. The precision of the IFs is also affected.

Table 3 shows the formula-based and empirical IFs for the combined endpoint. Dividing the total s.e. of the sampling design by the total s.e. of the full cohort derives the latter. The loss in statistical power for EDS is smaller than for NCC and NECC. Especially, for high prevalence and four controls/reference patients, EDS performs comparably to the full cohort with only 60% of the sample size (1409 vs. 2249). Here, the full cohort s.e. for UTI is 0.0613 (refer to Table 1). The s.e. for the EDS with four reference patients is 0.0638 resulting in an IF of 1.0408. The NECC's IF indicates an increase in efficiency compared to the full cohort for decreasing prevalence: the IF is 2.17 for urinary tract infections and 1.92 for wound infections when sampling four controls. Nonetheless, EDS outperforms both the NECC and the NCC, having a smaller IF for the same number of controls/reference patients. For lower prevalence, the IF of 1:1 EDS is smaller than the IF of 1:4 NECC.

Table 3. Inflation factor (IF) of the standard errors

The empirical IF is defined as total s.e.(sampling design)/total s.e.(full cohort). For the NECC, we present the results for q = 0.1. IF, inflation factor; NCC, Nested case-control; EDS, Exposure density sampling; NECC, Nested exposure case-control; CNSI, Central nervous system infection; UTI, Urinary tract infection; WI, Wound infection.

a NCC design.

b EDS.

c NECC design.

Comparing formula-based to the empirical IF, it becomes apparent that the formulas are merely an approximation of the expected IFs. These estimates are closer to the empirical IFs, if the HR is close to one (see urinary tract infection). The strongest deviation occurs for wound infections. Interestingly, while EDS's formula seems to underestimate the empirical IF, the IF-formula of NECC is more conservative by overestimating the empirical IFs. For EDS the IF may become even smaller than one (urinary tract infection, four reference patients).

Discussion

The NECC and EDS are parsimonious competitors to a full cohort analysis when analysing rare time-dependent exposures and not necessarily frequent outcomes. They complement the prominent class of sampling design tailored for studying the association between exposures, that may or may not be rare, and rare outcomes.

For data on HAIs, the NECC and EDS reveal a significant decrease in the required resources while providing accurate HR estimates. Case-control designs like the NCC or the NECC stratify for the risk sets and appear to be a natural choice. Nonetheless, in the considered settings with a common outcome, the EDS outperformed the NECC irrespective of the prevalence of exposure. EDS had smaller s.e.s for a lower number of reference patients.

In the analysis of the competing risks, the benefit of EDS over NECC was not as clear. Especially, in settings with a rather rare outcome (ICU mortality) and a rare exposure prevalence NECC uses substantially fewer controls. Thus, even though the EDS results in smaller s.e.s, the NECC may be the preferred sampling scheme in these settings as it provides accurate estimates at meager costs.

In competing risks analysis, the EDS has the advantage that it utilises the same reference patients to study all competing event types. Eventhough this is also possible using the NECC, we show that an adaption of the inclusion probability q for the outcome prevalence leads to better results for rare outcomes.

We present IFs, that roughly quantify the loss of statistical power of NECC or, respectively, EDS compared to the full cohort. The IF for the EDS has been already proposed by Ohneberg et al. [Reference Ohneberg, Beyersmann and Schumacher13], for the NECC, this manuscript gives the first expression. The IFs confirm the observed performance difference between EDS and NECC designs. However, we also noted that the IFs of the EDS are somewhat optimistic, while the IFs of NECC are rather conservative compared to the empirical IFs.

Finally, we conclude that both designs are attractive alternatives to the full cohort analysis. They are both flexible designs that consider the most commonly observed biases: immortal time bias and competing risks bias. Similar to Bang et al. [Reference Bang25] and Nelson et al. [Reference Nelson26], where EDS-like sampling was conducted in cooperation with a propensity score, EDS and NECC allow for propensity score-adjusted matching. In future applications, this adjusted sampling enables comparable control/reference groups that differ only for the exposure and can account for additional confounders. Our data example indicates that overall the EDS results in more precise estimates of the HR. However, for rare outcomes and rare exposures NECC outperformed EDS concerning economic burden while maintaining a dependable estimate of the HR.

Supplementary material

The supplementary material for this article can be found at https://doi.org/10.1017/S095026882100090X.

Financial support

This work was supported by grant BE-4500/1-2 from the German Research Foundation.

Conflict of interest

None.

Data availability statement

Computing code is available as Supplementary Material. The dataset analyzed during the current study is available in the Zenodo repository, https://doi.org/10.5281/zenodo.3995319.