Introduction

Evolving regulatory expectations for electronic data capture (EDC) systems have increased the technical and compliance burden on academic research organizations [1]. Systems like Research Electronic Data Capture (REDCap) are now routinely used to support both investigator-initiated and FDA-regulated studies [2]. REDCap is licensed at no cost through institutional membership in the REDCap Consortium and is widely adopted by non-profit, academic, and government research institutions for its flexibility, scalability, and affordability [3, 4].

Unlike commercial EDC platforms, which are centrally hosted and vendor-validated, REDCap is locally hosted and administered. Vanderbilt University Medical Center (VUMC) develops and licenses the software but does not offer pre-validated hosting or maintain system-level compliance for consortium partners. Each participating institution serves as the system owner responsible for configuring, validating, and maintaining its local REDCap environment in accordance with all applicable regulatory requirements and institutional policies, including FDA 21 CFR Part 11 (Part-11) where relevant [5].

This decentralized model creates challenges for achieving regulatory readiness. REDCap is highly configurable, widely used outside FDA-regulated trials, and implemented differently at each institution. Prior research highlights considerable variation among Clinical and Translational Science Award (CTSA) hubs in governance, staffing, and infrastructure, with many lacking dedicated regulatory or quality-assurance support [6]. Compliance efforts often require substantial technical and operational investment during setup and throughout the system lifecycle [1]. These differences make a single, universal compliance solution unrealistic. A flexible, scalable approach is needed to accommodate local variation while aligning with Part-11 expectations.

In response, the National Center for Advancing Translational Sciences (NCATS) convened the REDCap 21 CFR Part 11 Working Group as a multi-institutional consortium of CTSA-based experts. The group built upon prior efforts, especially the REDCap Consortium’s Rapid Validation Process (RVP), to develop a structured, role-based strategy for achieving and sustaining readiness [7]. For the purposes of this work, readiness refers to a state in which a REDCap environment has implemented technical controls, validation evidence, standard operating procedures, and governance processes sufficient to support use under applicable regulatory requirements, including 21 CFR Part 11. The primary purpose of this working group was to develop an Implementation Guide with practical tools for compliance planning, including configuration strategies, documentation templates, and institutional oversight aids. This manuscript outlines the Working Group’s formation, collaborative development process, and deliverables. It also offers a replicable model for institutions navigating similar regulatory and operational challenges for software used in clinical and translational research.

Materials and methods

Working group formation and structure

The NCATS REDCap 21 CFR Part 11 Working Group was convened in late 2023 to address institutional needs for achieving and sustaining regulatory compliance for electronic records and signatures. While the REDCap Consortium’s RVP offered a foundation for software-level validation, full compliance also requires governance structures, procedural documentation, and institutional oversight. NCATS leadership identified a gap in practical guidance to support academic institutions in aligning local REDCap implementations with regulatory expectations.

The working group was sponsored by the NCATS Informatics Enterprise Committee (iEC). A call for participation was issued during the August 2023 iEC meeting, emphasizing the need for experts in REDCap administration, clinical research operations, regulatory affairs, IT infrastructure, and quality assurance. Institutional leaders were also encouraged to join to ensure representation from governance and compliance functions. In total, 55 individuals from 28 institutions contributed to the group’s activities, with participation ranging from leading subgroup efforts to providing subject-matter expertise or institutional review.

Collaborative development process

The working group met monthly throughout 2024, agreeing early that a written guide would best translate regulatory expectations into actionable steps for REDCap administrators and institutional stakeholders. Members co-developed and refined content during meetings, with sections drafted asynchronously between sessions. Ad hoc subgroups addressed core content areas (SOP templates, validation alignment, role descriptions, and glossary terms), then brought drafts to the full group for review. Editorial coordination across co-leads ensured consistent terminology. The Implementation Guide was shaped by questions raised by the REDCap Consortium regarding validation and regulatory compliance. To accommodate varying levels of institutional maturity, the group adopted a four-phase framework: Plan, Configure, Validate, and Maintain.

Implementation guide dissemination

The Implementation Guide was distributed via a version-controlled REDCap survey hosted at VUMC, which captured contact details and institutional context at download (https://redcap.link/part11ig). A follow-up survey, sent automatically 90 days later, gathered feedback on guide use, implementation progress, and perceived barriers. This design supported controlled dissemination and iterative improvement.
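The 90-day follow-up trigger described above reduces to simple date arithmetic. The following is a minimal sketch of that logic, not the mechanism the Working Group used: the helper names and the sample dates are hypothetical and serve only to illustrate the interval stated in the text.

```python
from datetime import date, timedelta

FOLLOW_UP_DELAY = timedelta(days=90)  # interval stated in the text


def follow_up_date(download_date: date) -> date:
    """Date on which the follow-up survey becomes due (hypothetical helper)."""
    return download_date + FOLLOW_UP_DELAY


def is_due(download_date: date, as_of: date) -> bool:
    """True if at least 90 days have elapsed since the download."""
    return as_of >= follow_up_date(download_date)


# Illustrative dates, not taken from the study data.
example_download = date(2025, 1, 22)
print(follow_up_date(example_download))              # 2025-04-22
print(is_due(example_download, date(2025, 4, 21)))   # False
print(is_due(example_download, date(2025, 4, 22)))   # True
```

The same comparison identifies which downloads are old enough to be included in a follow-up analysis as of a given cutoff date.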

All references to the Implementation Guide correspond to version 1.3 (January 2025), the version available at the time of analysis. Appendix labels and numbering reflect that edition and may evolve in future releases. The mappings and examples cited here align with REDCap Long-Term Support (LTS) 15.5 and contemporaneous FDA guidance.

A concise mapping of 21 CFR Part 11 subsections (11.10 through 11.300) to REDCap features, institutional procedures, and validation evidence is provided within the guide’s validation appendices.
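A mapping of this kind can be thought of as a small table with one row per subsection. The sketch below shows one possible representation and a simple gap check. It is an assumption-laden illustration, not the guide's actual mapping: the `Part11Mapping` class, the `gaps` helper, and every entry marked as a placeholder are hypothetical, and only the section numbers and headings come from the regulation itself.

```python
from dataclasses import dataclass, field


@dataclass
class Part11Mapping:
    """One row of a subsection-to-control mapping (hypothetical structure)."""
    subsection: str                 # 21 CFR Part 11 section number
    title: str                      # section heading in the regulation
    redcap_features: list[str] = field(default_factory=list)
    procedures: list[str] = field(default_factory=list)   # institutional SOPs
    evidence: list[str] = field(default_factory=list)     # validation records


# Illustrative rows only; placeholder entries are not the guide's mapping.
MAPPING = [
    Part11Mapping(
        "11.10", "Controls for closed systems",
        redcap_features=["audit trail logging", "user rights"],
        procedures=["access management SOP (placeholder)"],
        evidence=["audit trail test record (placeholder)"],
    ),
    Part11Mapping(
        "11.200", "Electronic signature components and controls",
        redcap_features=["form locking with authenticated credentials"],
        procedures=["e-signature attestation policy (placeholder)"],
    ),
    Part11Mapping("11.300", "Controls for identification codes/passwords"),
]


def gaps(rows):
    """Return subsections missing a feature, procedure, or evidence entry."""
    return [r.subsection for r in rows
            if not (r.redcap_features and r.procedures and r.evidence)]


print(gaps(MAPPING))  # ['11.200', '11.300']
```

Keeping features, procedures, and evidence as separate fields reflects the point made throughout this paper that technical controls alone do not establish readiness.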

Results

Guide dissemination and institutional use

The working group released the Implementation Guide on January 22, 2025. The guide provided practical tools, including a REDCap LTS compatibility checklist; SOP mapping aids; user rights and storage configuration guidance; and sample validation templates. To support adoption, these materials were distributed through the VUMC REDCap site and Consortium listservs. By July 24, 2025, 259 individuals representing 164 institutions had accessed the guide. The majority were REDCap administrators or compliance officers from CTSA hubs and comparable research institutions. Among the 164 institutions, 81 were CTSA hubs and 83 were non-CTSA institutions, demonstrating broad engagement across the research enterprise. Self-reported readiness (n = 163) revealed diverse stages of progress: 47 institutions (28.8%) reported having a Part-11 ready instance, 26 (16.0%) were in the process of establishing one, 75 (46.0%) were exploring readiness, and 15 (9.2%) were not pursuing compliance at that time.
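The readiness percentages above can be reproduced directly from the reported counts. The short sketch below does so; the counts come from the text, while the dictionary labels and variable names are shorthand introduced here for illustration.

```python
# Counts reported in the text (n = 163 self-reported readiness responses).
readiness_counts = {
    "Part-11 ready instance": 47,
    "Establishing an instance": 26,
    "Exploring readiness": 75,
    "Not pursuing compliance": 15,
}

total = sum(readiness_counts.values())
percentages = {
    stage: round(100 * count / total, 1)
    for stage, count in readiness_counts.items()
}

print(total)  # 163
for stage, pct in percentages.items():
    print(f"{stage}: {pct}%")
```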

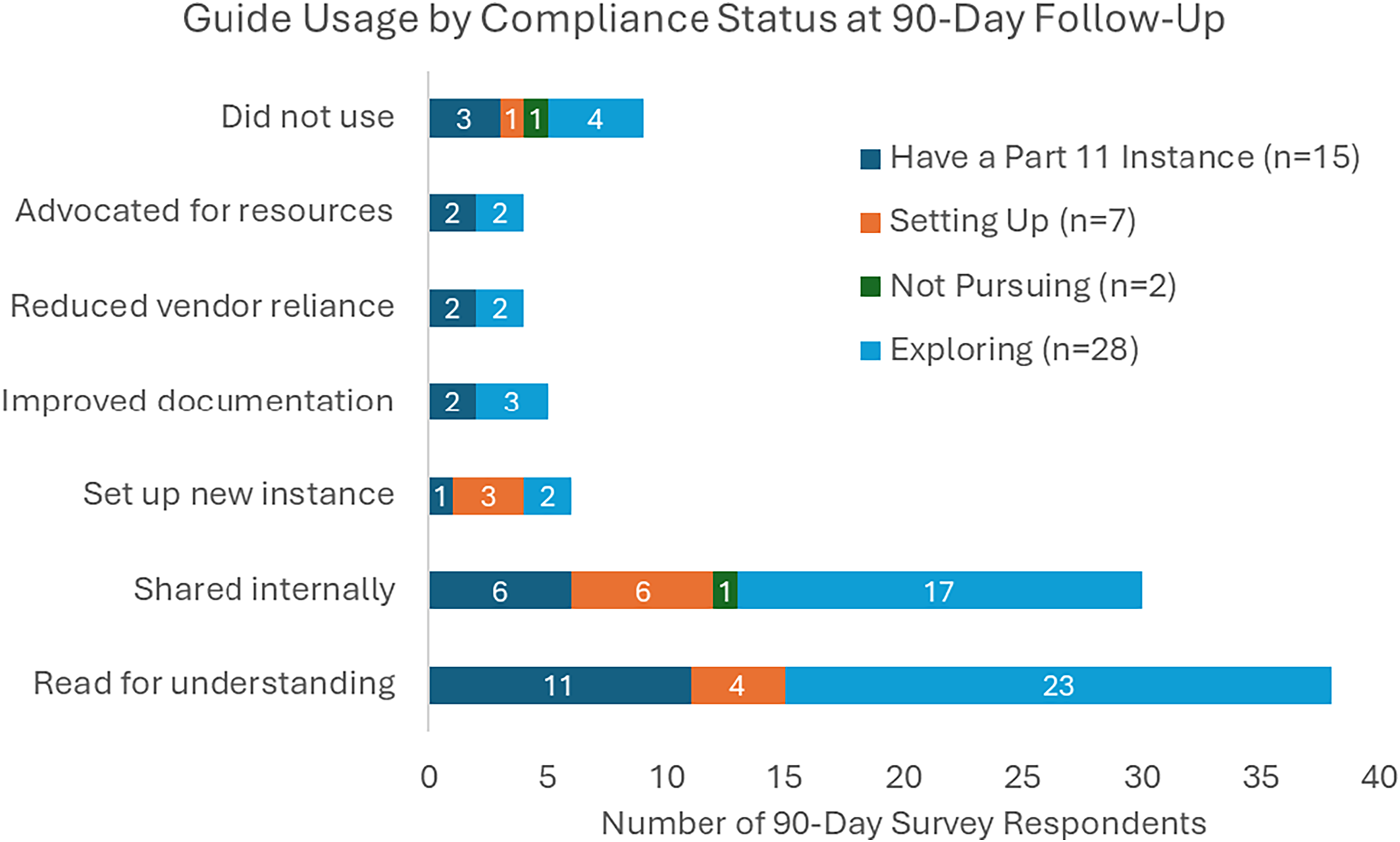

A follow-up survey was sent to 233 individuals whose downloads occurred at least 90 days prior to July 24, 2025. By that date, 54 (23%) individuals had responded, representing a mix of institutional types and initial readiness stages, as captured in the original intake data. Reported use cases included reading the guide to understand REDCap’s role in 21 CFR Part 11 readiness (n = 39; 72%), sharing the guide internally (n = 31; 57%), using it to set up a new Part-11-ready REDCap instance (n = 6; 11%), improving documentation (n = 5; 9%), reducing reliance on external vendors (n = 4; 7%), and advocating for increased institutional resources (n = 4; 7%). Ten respondents (19%) indicated they had not yet used the guide. Figure 1 shows reported use cases stratified by institutional compliance status at the time of guide download.

Figure 1. Reported use of the REDCap 21 CFR Part 11 Implementation Guide at 90-day follow-up (n = 52). Bar chart displays total counts by use case, stratified by initial compliance status. Respondents could select multiple options.
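Because respondents could select more than one use case, the reported percentages are each calculated against the number of respondents and do not sum to 100%. The sketch below reproduces the figures given in the text using its stated denominators (54 respondents of 233 invited); the labels and the `pct` helper are illustrative shorthand, not part of the survey instrument.

```python
RESPONDENTS = 54   # follow-up responses reported in the text
INVITED = 233      # follow-up invitations reported in the text

# Multi-select use cases and counts as reported in the text.
use_cases = {
    "Read to understand REDCap's role": 39,
    "Shared internally": 31,
    "Set up a new Part-11-ready instance": 6,
    "Improved documentation": 5,
    "Reduced reliance on external vendors": 4,
    "Advocated for resources": 4,
    "Not yet used": 10,
}


def pct(count: int, denominator: int) -> int:
    """Whole-number percentage of a count against a denominator."""
    return round(100 * count / denominator)


response_rate = pct(RESPONDENTS, INVITED)
use_case_pct = {name: pct(n, RESPONDENTS) for name, n in use_cases.items()}
total_selections = sum(use_cases.values())

print(response_rate)      # 23
print(total_selections)   # 99, more than 54 because options were multi-select
```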

When asked about improvements that could be made to the Implementation Guide, half of all respondents (n = 27) requested more detailed validation guidance and documentation templates. Forty-one percent (n = 22) wanted case studies added, while 26% (n = 14) requested clearer software configuration guidance. Nineteen percent (n = 10) felt no changes were needed. Free-text feedback highlighted early-stage planning and technical terminology as ongoing challenges, while other respondents described the guide as a catalyst for internal coordination or resource planning.

Two institutions described specific ways they adapted the guide to meet local needs. The Ohio State University Wexner Medical Center used the guide to establish a new REDCap instance aligned with 21 CFR Part 11 requirements, noting that it helped structure validation activities and accelerate decision-making. The University of Utah Clinical and Translational Science Institute employed the guide to conduct a gap analysis, transitioning from a previous validation model and adopting a more timely maintenance strategy using the REDCap Consortium’s REDCap Long-Term Support (LTS) validation package. According to follow-up survey responses, several institutions developed annotated checklists and role-based workflows using examples from the guide. These tools were reported to improve cross-functional collaboration, although some institutions noted challenges related to technical learning curves. These examples illustrate the guide’s adaptability across varied institutional contexts.

Feedback also emphasized the need for clear recommendations related to REDCap version upgrades and external module implementation. Respondents specifically requested examples of change control documentation, regression testing expectations, and criteria for determining when configuration changes require revalidation. The Working Group identified this as a priority area for future updates to the Implementation Guide’s Maintain phase and plans to incorporate structured guidance on these topics in subsequent releases.

Discussion

This initiative demonstrates that a decentralized, consortium-led approach can successfully support research data capture software compliance for locally hosted systems. By leveraging the CTSA network, the NCATS REDCap 21 CFR Part 11 Working Group brought together interdisciplinary expertise across informatics, regulatory affairs, and research operations to develop guidance that adapts to a wide range of institutional contexts.

Unlike validation toolkits developed by commercial EDC vendors, the Implementation Guide was written by institutional peers who are directly responsible for REDCap administration and compliance. This grounded the work in operational reality, reflecting variations in institutional maturity, system architecture, and REDCap use cases. The group emphasized shared language and modular design, reinforcing that achieving audit readiness in REDCap requires both technical safeguards and institutional coordination. Vanderbilt develops and licenses REDCap but does not provide validated hosting; each institution functions as its own system owner responsible for local configuration, validation, and documentation.

A key strength of this effort was the integration of technical and regulatory expertise throughout the guide’s development. The phased framework—Plan, Configure, Validate, and Maintain—translated complex regulatory expectations into practical activities aligned with common administrative workflows. This structure supported both high-resource and resource-limited institutions in identifying actionable next steps.

The guide is not intended to replace software-level validation tools like the REDCap Consortium’s RVP. Rather, it complements them by addressing institutional responsibilities required under 21 CFR Part 11, including governance, access control, and documentation strategies.

The group’s process also highlighted the value of peer-led collaboration rooted in real-world institutional experience. By building on established practices and operational knowledge, the guide serves as more than a technical reference. It presents a flexible model for institutions navigating compliance without centralized vendor infrastructure. Although the guide was created to support U.S. regulatory expectations, its emphasis on modular adoption, policy alignment, and role clarity may be relevant for institutions pursuing similar standards internationally.

In GAMP 5 terms, REDCap functions as a Category 4 configured product, with certain locally developed external modules approaching Category 5 [8, 9]. The Working Group adopted a risk-based Computerized System Validation (CSV) and Computer Software Assurance (CSA) approach, applying greater testing depth to high-impact areas such as audit trails, user management, data integrity, and electronic signatures. Less verification is needed for low-risk configurations.
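The risk-based principle described above, with deeper testing for high-impact areas and lighter verification elsewhere, can be sketched as a simple lookup. This is an illustration under stated assumptions rather than the Working Group's method: the high-impact areas are those named in the text, while the low-risk example, the testing-depth descriptions, and the `plan_testing` helper are hypothetical.

```python
from enum import Enum


class Risk(Enum):
    HIGH = "high"
    LOW = "low"


# High-impact areas are those named in the text; the low-risk example and
# the depth descriptions are hypothetical placeholders.
AREA_RISK = {
    "audit trails": Risk.HIGH,
    "user management": Risk.HIGH,
    "data integrity": Risk.HIGH,
    "electronic signatures": Risk.HIGH,
    "project display settings": Risk.LOW,   # hypothetical low-risk example
}

TEST_DEPTH = {
    Risk.HIGH: "scripted testing with documented evidence",
    Risk.LOW: "unscripted verification with a summary record",
}


def plan_testing(areas):
    """Map each functional area to a testing depth based on its risk level.

    Unknown areas default to HIGH so that nothing is under-tested by omission.
    """
    return {a: TEST_DEPTH[AREA_RISK.get(a, Risk.HIGH)] for a in areas}


plan = plan_testing(["audit trails", "project display settings", "new module"])
for area, depth in plan.items():
    print(f"{area}: {depth}")
```

Defaulting unclassified areas to the higher risk level is a conservative design choice made for this sketch; an institution's own risk assessment would determine the actual classification.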

The guide addresses key compliance features such as electronic signatures, audit trails, and record integrity. REDCap configurations used to support electronic signatures include form locking with authenticated user credentials and eConsent workflows that generate tamper-evident PDF snapshots. While REDCap does not natively enforce two-component authentication for all signature scenarios, institutions may combine access controls, audit trails, and policy-based attestations to meet applicable 21 CFR Part 11 requirements. Audit trail requirements include system-level logging, project-level change tracking, secure time synchronization, and periodic review of SOPs.

Limitations and future work

This paper and the guide focus on system-level validation of the REDCap application and its supporting infrastructure. Project-level validation, which encompasses protocol-specific form design, user acceptance testing, and data migration, remains the responsibility of individual research teams. Future work will include bridging strategies that connect these two levels through templates and checklists supporting end-to-end compliance documentation.

Recommendations in the follow-up survey identified limitations in the clarity and usability of the Implementation Guide. Data presented in this study were self-reported, and respondents may represent institutions already motivated or resourced for compliance, which limits generalizability to smaller institutions. The guide is a support tool rather than a mandated standard, and its effectiveness depends on how it is implemented locally. While the group shared SOP templates and guidance, it did not prescribe institutional uptake or assess audit outcomes.

Future work will include follow-up interviews and improved feedback instruments to evaluate impact. Next steps also include expanding the framework to support project-level configuration, creating shared researcher training resources, and exploring integration with REDCap’s native compliance features. The Working Group also plans to incorporate examples of change-control documentation and additional case studies in future versions of the Implementation Guide.

Conclusion

The REDCap 21 CFR Part 11 Implementation Guide, created by this NCATS-supported Working Group, represents an important step in translating regulatory expectations into actionable and scalable practices within academic research environments. The guide and the collaborative process behind it offer a model for other research networks facing similar regulatory and operational complexity.

Acknowledgements

The authors would like to thank Bob Spaziano from Duke University and Paul Sanborn from the University of Pennsylvania for their contributions to the REDCap 21 CFR Part 11 Implementation Guide. We would also like to acknowledge the other members of the working group: Alexandre Peshansky from Albert Einstein College of Medicine; Michelle Dickey, Eric Leon, and Maxx Somers from Cincinnati Children’s Hospital Medical Center; Eugene Gershteyn from Columbia University Health Sciences; Cory Ennis, Denise Snyder, and Marissa Stroo from Duke University School of Medicine; Evan Gutter and Evan Mitchell from Emory University; Lynn Simpson from Harvard Medical School; May Pini, Savas Sevil, and Rajendra Bose from Icahn School of Medicine at Mount Sinai; Kathryn Jano from New York University School of Medicine; Heather Lansky from Ohio State University; Julie Mitchell from Oregon Health & Science University; Svetlana Rojevsky from Tufts University Boston; Danielle Stephens from University of California San Francisco; Jinhee Pae and Kaitlyn Sternat from University of North Carolina at Chapel Hill; Amanda Davin and Jeanne Holden-Wiltse from University of Rochester Medical Center; Jessica Heimonen and Charlie Gregor from University of Washington; Adam DeFouw from University of Wisconsin-Madison; Bob Geller and Meredith Zozus from UT Health San Antonio; Juellisa Secosky from Virginia Commonwealth University School of Medicine; Cindy Chen, David Kraemer, Jessie Lee and Julie Oetinger from Weill Cornell Medicine; and Arlene Concepcion from Yale University. We also thank Dr Pablo Cure for his role as the working group representative to NCATS.

Author contributions

Theresa Baker: Conceptualization, Data curation, Formal analysis, Investigation, Methodology, Project administration, Resources, Validation, Visualization, Writing-original draft, Writing-review & editing; Teresa Bosler: Project administration, Resources, Validation, Writing-review & editing; April Green: Resources, Validation, Writing-review & editing; Jason Lones: Resources, Validation, Writing-review & editing; Katie Keenoy: Resources, Validation, Writing-review & editing; Bridget Adams: Resources, Validation, Writing-review & editing; Bas de Veer: Resources, Validation, Writing-review & editing; Brian Bush: Resources, Validation, Writing-review & editing; Stephanie Oppenheimer: Resources, Validation, Writing-review & editing; Sheree Hemphill: Resources, Validation, Writing-review & editing; Elinora Price: Resources, Validation, Writing-original draft; Haley Neese: Resources, Validation, Writing-review & editing; Ashley Tippett: Resources, Validation, Writing-review & editing; Audrey Perdew: Resources, Validation, Writing-review & editing; Randy Madsen: Resources, Validation, Writing-review & editing; Catherine Bauer-Martinez: Resources, Validation, Writing-review & editing; Robert Bradford: Resources, Validation, Writing-review & editing; Paul Harris: Conceptualization, Funding acquisition, Supervision, Writing-review & editing; Alex Cheng: Conceptualization, Data curation, Investigation, Methodology, Writing-review & editing.

Funding statement

This work was supported by funding from the National Center for Advancing Translational Sciences (NCATS) of the National Institutes of Health (NIH) under grants UL1TR002243 (VUMC), U24TR004432 (VUMC), U24TR004437 (VUMC), UM1TR004548 (OSU), UL1TR002369 (OHSU), UL1TR002345 (WashU), UM1TR004929 (Wake Forest), UM1TR004360 (VCU), UM1TR004528 (CWRU), UL1TR002553 (Duke), UL1TR001863 (Yale), U2CTR002818 (CCHMC), UM1TR004409 (Utah), UL1TR004419 (MSSM), UL1TR001450 (MUSC), and UM1TR004406 (UNC). Working group coordination was supported by NCATS through the Coordination, Communication, and Operations Support (CCOS) Center under Contract No. 75N95022C00030. The opinions expressed represent those of the authors and do not reflect the opinion of the US Department of Health and Human Services (DHHS), the NIH, or NCATS.

Competing interests

None of the authors have any relevant conflicts of interest to report.