Book contents

- Frontmatter

- Contents

- Preface

- 1 Introduction

- 2 Gaussian Processes

- 3 Modeling with Gaussian Processes

- 4 Model Assessment, Selection, and Averaging

- 5 Decision Theory for Optimization

- 6 Utility Functions for Optimization

- 7 Common Bayesian Optimization Policies

- 8 Computing Policies with Gaussian Processes

- 9 Implementation

- 10 Theoretical Analysis

- 11 Extensions and Related Settings

- 12 A Brief History of Bayesian Optimization

- A The Gaussian Distribution

- B Methods for Approximate Bayesian Inference

- C Gradients

- D Annotated Bibliography of Applications

- References

- Index

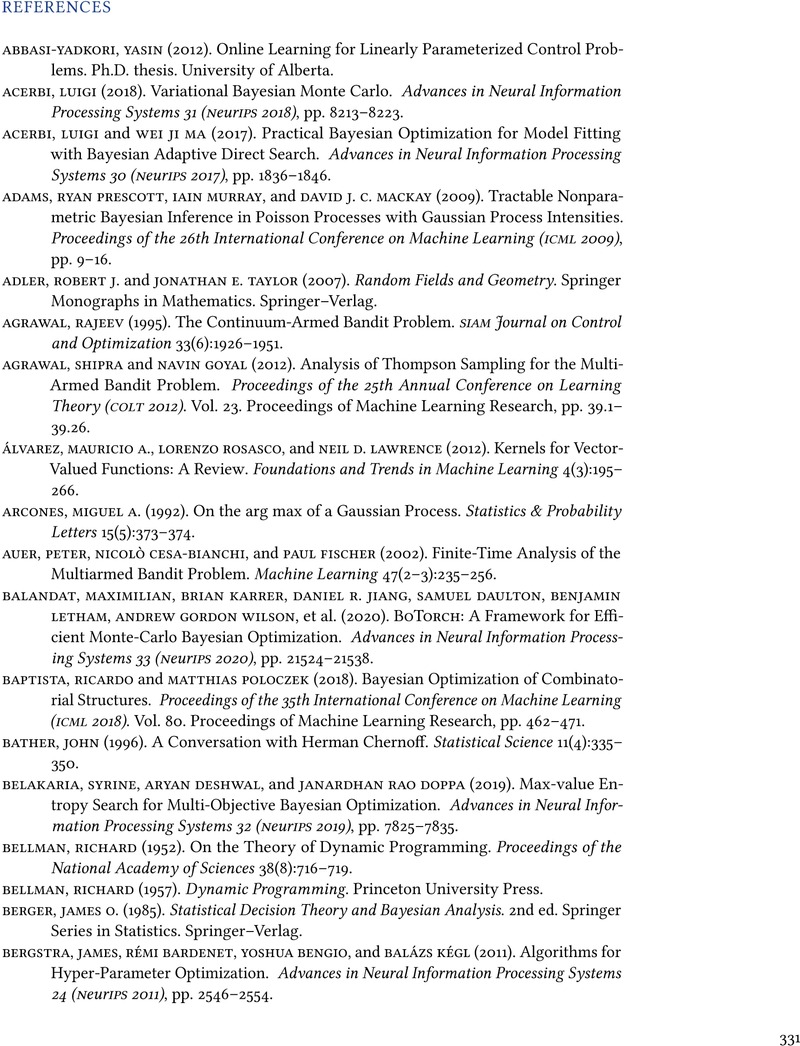

- References

References

Published online by Cambridge University Press: 25 January 2023

Information

- Type: Chapter

- Bayesian Optimization, pp. 331-352

- Publisher: Cambridge University Press

- Print publication year: 2023