2.1 Introduction

The case study is a broad church. Case studies come in a great variety of forms, for a great variety of purposes, using a great variety of methods – including both methods typically labelled ‘qualitative’ and ones typically labelled ‘quantitative’.Footnote 1 My focus here is on case studies that aim to establish causal conclusions about the very case studied. Much of the discussion about the advantages and disadvantages of case study methods for drawing causal conclusions supposes that the aim is to draw causal conclusions that can be expected to hold more widely than in the case at hand. This is not my focus. My focus is the reverse. I am concerned with using knowledge that applies more widely, in consort with local knowledge, to construct a case study that will help predict what will happen in the single case – this case, involving this policy intervention, here and now. These involve what philosophers call a ‘singular causal claim’ – a claim about a causal connection in a specific single individual case, whether the individual is a particular person, a class, a school, a village or an entire country, viewed as a whole. It is often argued that causal conclusions require a comparative methodology. On this view the counterfactual is generally supposed to be the essence of singular causality: In situations where treatment T and outcome O both occur, ‘T caused O’ meansFootnote 2 ‘If T had not occurred, then O would not have’.Footnote 3 And it is additionally supposed that the only way to establish that kind of counterfactual is by contrasting cases where T occurs with those where T does not occur in circumstances that are the same as the first with respect to all other factors affecting O other than the occurrence of T and its downstream effects.

My discussion aims to show that neither of these suppositions is correct.Footnote 4 Nor do we take them to be correct, at least if the dictum ‘actions speak louder than words’ is to be believed. We all regularly, in daily life and in professional practice, bet on causal claims about single individuals and guide our actions by these bets without the aid of comparison. Juries decide whether the defendant committed the crime generally without consulting a case just like this one except for the defendant’s actions; I confidently infer that it was my second daughter (not the first, not my granddaughter, not Santa) who slipped Northanger Abbey into my Christmas stocking; and the NASA investigating team decided that the failure of an O-ring seal in the right solid rocket booster caused the Challenger disaster in which all seven crew were killed.

It might be objected that these causal judgments are made without the rigor demanded in science and wished for in policy. That would be surprising if it were generally true since we treat a good many of these as if we can be reasonably certain of them. Some 975 days after the Challenger disaster, Space Shuttle Discovery – with redesigned solid rocket boosters – was launched with five crew members aboard (and it returned safely four days later). Though not much of practical importance depends on it, I am sure who gave me Northanger Abbey. By contrast, people’s lives are seriously affected by the verdicts of judges, juries, and magistrates. Though we know that mistakes here are not uncommon, nobody suggests that our abilities to draw singular causal conclusions in this domain are so bad that we might as well flip a coin to decide on guilt or innocence.

I take it to be clear that singular causal claims like these can be true or false, and that the reasoning and evidence that backs them up can be better or worse. The question I address in Section 2.3, with a ‘potted’ example in Section 2.4, is: What kinds of information make good evidence for singular causal claims about the results of policy interventions, both post-hoc evaluations – ‘Did this intervention achieve the targeted outcome when it was implemented here in this individual case?’ – and ex ante predictions – ‘Is this intervention likely to produce the targeted outcome if implemented here in this individual case?’ I believe that the catalogue of evidence types I outline wears its plausibility on its face. But I do not think that is enough. Plausible is, ceteris paribus, better than implausible, but it is better still when the proposals are grounded in theory – credible, well argued, well-warranted theory. To do this job I turn to a familiar theory that is commonly used to defend other conventional scientific methods for causal inference, from randomized controlled trials (RCTs) to qualitative comparative analysis, causal Bayes nets (Bayesian networks) methods, econometric instrumental variables, and others. In Section 2.5, I outline this theory and explain how it can be used to show that the kinds of facts described in the evidence catalogue are evidence for causation in the single case.

So, what kinds of facts should we look for in a case study to provide evidence about a singular causal claim there – for instance, a claim of the kind we need for program evaluation: Did this program/treatment (T) as it was implemented in this situation (S) produce an outcome (O) of interest here? Did T cause O in S?

I call the kinds of evidence one gets from case studies for singular causal claims individualized evidence. This is by contrast with RCTs, which provide what I call anonymous evidence for singular causal claims. I shall explain this difference before proceeding to my catalogue because it helps elucidate the relative advantages and disadvantages of RCTs versus case studies for establishing causal claims.

2.2 What We Can Learn from an RCT

Individualized evidence speaks to causal claims about a particular identified individual; anonymous evidence speaks about one or more unidentified individuals. RCTs and group-comparison observational studies provide anonymous evidence about individual cases. This may seem surprising since a standard way of talking makes it sound as if RCTs establish general causal claims – ‘It works’ – and not claims about individuals at all. But RCTs by themselves establish a claim only about averages, and about averages only in the population enrolled in the experiment. What kind of claim is that? To understand the answer a little formalism is required. [See Appendix 2.1 for a more complete development.]

A genuinely positive effect size in an RCT where the overall effects of other ‘confounding’ variables are genuinely balanced between treatment and control groups – let’s call this an ‘ideal’ RCT – would establish that at least some individuals in the study population were caused by the treatment to have the targeted outcome. This is apparent in the informal argument that positive results imply causal claims: ‘If there are more cases of the outcome in the treatment than in the control group, something must have caused this. If the only difference between the two groups is the treatment and its downstream effects, then the positive outcomes of at least some of the individuals in the treatment group must have been caused by the treatment.’

This is established more formally via the rigorous account of RCT results in common use that traces back to Rubin (1974) and Holland (1986), which calls on the kind of theory appealed to in Section 2.5. We assume that whether one factor causes another in an individual is not arbitrary but that there is something systematic about it. There is a fact of the matter about what factors at what levels in what combinations produce what levels for the outcome in question for each individual. Without serious loss of generality, we can represent all the causal possibilities that are open for an individual i in a simple linear equation, called a potential outcomes equation:

O(i) c= α(i)T(i) + W(i)

In this equation the variable O on the left represents the targeted outcome; c= signifies that the two sides of the equation are equal and that the factors on the right are causes of the one on the left. T(i), which represents the policy intervention under investigation, may or may not genuinely appear there; that is, α(i) may be zero. The equation represents the possible values the outcome can take given the various combinations of values that a complete set of its causes can take. W(i) represents in one fell swoop all the causes that might affect the level of the outcome for this individual that do not interact with the treatment.Footnote 5 α(i) represents the overall effect of factors that interact with the treatment. ‘Interact’ means that the amount the treatment contributes to the outcome level for individual i depends on the value of α(i). Economists and statisticians call these ‘interactive’ variables; psychologists tend to call them ‘moderator’ variables; and philosophers term them ‘support’ variables. For those not familiar with support factors, consider the standard philosopher’s example of striking a match to produce a flame. This only works if there is oxygen present; oxygen is a support factor without which the striking will not produce a flame.
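The potential outcomes equation can be sketched in a few lines of Python. This is only an illustration: the function names and the 0/1 coding of the oxygen support factor are assumptions made for the example, not part of the account above.

```python
def potential_outcome(alpha: float, t: float, w: float) -> float:
    """Outcome level for one individual: O(i) = alpha(i) * T(i) + W(i)."""
    return alpha * t + w


def match_alpha(oxygen_present: bool) -> float:
    # Hypothetical coding: the support factor is 1 with oxygen, 0 without.
    return 1.0 if oxygen_present else 0.0


# Striking the match (t = 1) with and without the support factor.
flame_with_oxygen = potential_outcome(match_alpha(True), t=1.0, w=0.0)
flame_without_oxygen = potential_outcome(match_alpha(False), t=1.0, w=0.0)
```

With oxygen present the striking contributes to the outcome; without it, the same striking contributes nothing, because α(i) is zero.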

Interactive/support variables really matter to understanding the connection between the statistical results of an RCT and the causal conclusions inferred from them. The statistical result that is normally recorded in an RCT is the effect size. ‘Effect size’ can mean a variety of things. But all standard definitions make it a function of this: the difference in outcome means between treatment and control groups. What can this difference in the average value of the outcome in the two groups teach us about the causal effects of the treatment on individuals enrolled in the experiment? What can readily be shown is that in an ideal RCT this difference in means between treatment and control is the mean value of α(i), which represents the support factors – the mean averaged across all the individuals enrolled in the experiment. So the effect size is a function of the mean of the support/interactive variables – those variables that determine whether, and to what extent, the treatment can produce the outcome for the individual. If the average of α(i) is not zero, then there must be at least some individuals in that population for which α(i) was not zero. That means that for some individuals – though we know not which – T genuinely did contribute to the outcome. Thus, we can conclude from a positive mean difference between treatment and control in an ideal RCT that ‘T caused O in some members of the population enrolled in the experiment.’Footnote 6
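The step from the difference in means to the mean of α(i) can be written out as a short derivation. It is a sketch under two stated assumptions: the treatment is coded T(i) = 1 in the treatment group and T(i) = 0 in the control group, and ‘ideal’ is read as saying that the group means of W(i) are equal and that the treatment-group mean of α(i) equals its mean over everyone enrolled. The overbar and subscript notation is introduced here for illustration.

```latex
\begin{align*}
\overline{O}_{\mathrm{treat}} - \overline{O}_{\mathrm{ctrl}}
  &= \bigl(\overline{\alpha}_{\mathrm{treat}} \cdot 1 + \overline{W}_{\mathrm{treat}}\bigr)
   - \bigl(\overline{\alpha}_{\mathrm{ctrl}} \cdot 0 + \overline{W}_{\mathrm{ctrl}}\bigr) \\
  &= \overline{\alpha}_{\mathrm{treat}}
   + \bigl(\overline{W}_{\mathrm{treat}} - \overline{W}_{\mathrm{ctrl}}\bigr) \\
  &= \overline{\alpha}
  \qquad \text{(using the two balance assumptions)}
\end{align*}
```

If the balance assumptions fail, the second line shows what is left over: the observed difference mixes the mean of α(i) with the imbalance in W(i).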

You should also note one other feature of α(i). Suppose that we represent the value of the policy variable in the control group from which it is withheld by 0. This is another idealization, especially for social experiments and even for many medical ones, where members of the control groups may manage to get the treatment despite being assigned to control. But let’s suppose it. Then α(i)T(i) – α(i)C(i) = α(i)T(i) – 0 = α(i)T(i), letting C represent the value of the treatment when that treatment is not experienced. So α(i) also represents the ‘boost’ to O that i gets per unit of the policy treatment, and it is the whole boost when receiving the treatment is coded as T(i) = 1. This is often called ‘the individual treatment effect’.

When could we expect the same positive average effect size in an RCT on a new population? In the abstract that is easy to say. First, T must be capable of producing O in the new population. There must be possible support factors that can get it to work. If there aren’t, no amount of T will affect O for anyone. Again, philosophers have a potted example: No amount of the fertility drug clomiphene citrate will make any man get pregnant. In development studies we might use Angus Deaton’s (2010) fanciful example of a possible World Bank proposal to reduce poverty in China by building railway stations, a proposal that is doomed to failure when looked at in more detail because the plan is to build them in deserts where nobody lives. Second, the two experiments will result in the same effect size just in case the mean of T’s support factors is the same in the two. And how would we know this? That takes a great deal of both theoretical and local knowledge about the two populations in question – knowledge that the RCTs themselves go no way toward providing.Footnote 7
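A small numerical sketch may make the dependence on support factors concrete. All of the numbers below are hypothetical, and the example assumes unit treatment (T = 1 versus T = 0), so that the average effect is just the mean of α(i).

```python
from statistics import mean


def average_effect(alphas):
    """Mean individual effect alpha(i) for unit treatment (T = 1 vs T = 0)."""
    return mean(alphas)


# Hypothetical populations: alpha(i) is 2.0 where the support factors are
# present and 0.0 where they are absent.
study_population = [2.0, 2.0, 2.0, 0.0]   # support factors common
new_population = [2.0, 0.0, 0.0, 0.0]     # support factors rare
no_support_population = [0.0, 0.0, 0.0]   # T cannot work for anyone

effect_in_study = average_effect(study_population)
effect_in_new = average_effect(new_population)
effect_without_support = average_effect(no_support_population)
```

The treatment works in exactly the same way for every individual who has the support factors, yet the three average effects differ because the support factors are distributed differently.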

Much common talk makes it sound as if RCTs can do more, in particular that they can establish what holds generally or what can be expected in a new case. Perhaps the idea is that if you can establish a causal conclusion then somehow, because it is causal, it is general. That’s not true, neither for the causal results established for some unidentified individuals in an RCT nor for a causal result for a single individual subject that might be established in a case study. Much causality is extremely local: local to toasters of a particular design, to businesses with a certain structure, to fee-paying schools in university towns in the south of England, to families with a certain ethnic and religious background and immigration history … The tendency to generalize seems especially strong if ‘the same’ results are seen in a few cases – which they seldom are, as can be noted from a survey of meta-analyses and systematic reviews. But that is induction by simple enumeration, which is a notoriously bad way to reason (swan 1 is white, swan 2 is white … so all the swans in Sydney Harbour are white).

A study – no matter whether it is a case study or it uses the methodology of the RCT, Bayes nets methods for causal inference, instrumental variables, or whatever – by itself can only show results about the population on which the data is collected. To go beyond that, we need to know what kinds of results travel, and to where. And to do that takes a tangle of different kinds of studies, theories, conceptual developments, and trial and error. This is underlined by work in science studiesFootnote 8 and by recent philosophical work on evidence and induction. See, for instance, John Norton’s (2021) material theory of induction: Norton argues that inductive inferences are justified by facts, where facts include anything from measurement results to general principles. Parallel lessons follow from the theory of evidence I endorse (Cartwright 2013), the argument theory, in which a fact becomes evidence for a conclusion in the context of a good argument for that conclusion, an argument that inevitably requires other premises.

What I want to underline here with respect to RCTs is that, without the aid of lots of other premises, their results are confined to the population enrolled in the study; and what a positive result in an ideal RCT shows is that the treatment produced the outcome in some individuals in that population. For all we know these may be the only individuals in the world that the treatment would affect that way. The same is true if we use a case study to establish that T caused O in a specific identified individual. Perhaps this is extremely unlikely. But the study does nothing to show that; to argue it – either way – requires premises from elsewhere.

I also want to underline a number of other facts that I fear are often underplayed.

The RCT provides anonymous evidence. We may be assured that T caused O in some individuals in the study population, but we know not which. I call this ‘Where’s Wally?’ evidence. We know he’s there somewhere, but the study does not reveal him.

The study establishes an average; it does not tell us how the average is made up. Perhaps the policy is harmful as well as beneficial – it harms a number of individuals, though on average the effect is positive.

We’d like to know about the variance, but that is not so easy to ascertain. Is almost everyone near the average or do the results for individuals vary widely? The mean of the individual effect sizes can be estimated directly from the difference in means between the treatment and the control groups. But the variance cannot be estimated without substantial statistical assumptions about the distribution. Yet one of the advantages of RCTs is supposed to be that we can get results without substantial background assumptions.
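The point about averages and variance can be illustrated with two hypothetical populations. The sketch assumes a perfectly balanced design in which both arms contain the same (α, W) profiles, with T = 1 in treatment and T = 0 in control; all names and numbers are made up for the example.

```python
from statistics import mean, pvariance


def difference_in_means(alphas, ws):
    """Treatment-minus-control mean outcome when both arms contain the same
    (alpha, W) profiles, with T = 1 in treatment and T = 0 in control."""
    treated = [a * 1 + w for a, w in zip(alphas, ws)]
    control = [a * 0 + w for a, w in zip(alphas, ws)]
    return mean(treated) - mean(control)


ws = [5.0, 5.0, 5.0, 5.0]
uniform_benefit = [1.0, 1.0, 1.0, 1.0]          # everyone gains a little
mixed_benefit_and_harm = [4.0, 4.0, -2.0, -2.0]  # half gain, half are harmed

diff_uniform = difference_in_means(uniform_benefit, ws)
diff_mixed = difference_in_means(mixed_benefit_and_harm, ws)
spread_uniform = pvariance(uniform_benefit)
spread_mixed = pvariance(mixed_benefit_and_harm)
```

Both populations yield the same difference in means, although in the second half of the individuals are harmed and the individual effects vary widely. The difference in means alone cannot tell the two apart.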

I have been talking about an ideal RCT in a very special sense of ‘ideal’: one in which the net effect of confounding factors is genuinely balanced between treatment and control. But that is not what random allocation guarantees for confounders even at baseline. What randomization buys is balance ‘in the long run’. That means that if we did the experiment indefinitely often on exactly the same population, the average of the observed differences in means between treatment and control groups would converge on the true difference.

That’s one reason we want experiments to have a large number of participants: it makes it more likely that what we observe in a single run is not far off the true average, though we know it still should be expected to be off a bit, and sometimes off a lot. Yet many social experiments, including many development RCTs, are done on small experimental populations.
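A rough simulation can show the gap between the long run and a single run. It is a sketch only: the population of 40, the distributions used to generate α(i) and W(i), the seed, and the number of repetitions are all arbitrary assumptions.

```python
import random
from statistics import mean


def one_trial(population, rng):
    """Randomly split (alpha, w) individuals in half; return the observed
    difference in mean outcomes (T = 1 in treatment, T = 0 in control)."""
    shuffled = population[:]
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    treated = [a * 1 + w for a, w in shuffled[:half]]
    control = [a * 0 + w for a, w in shuffled[half:]]
    return mean(treated) - mean(control)


rng = random.Random(0)
# Hypothetical population: alpha(i) and W(i) are drawn once, then held fixed.
population = [(rng.choice([0.0, 2.0]), rng.uniform(0.0, 10.0)) for _ in range(40)]
true_mean_alpha = mean(a for a, _ in population)

# Repeat the experiment many times on exactly the same population.
estimates = [one_trial(population, rng) for _ in range(5000)]
long_run_average = mean(estimates)
worst_single_run_error = max(abs(e - true_mean_alpha) for e in estimates)
```

Averaged over the repetitions, the estimate sits close to the true mean of α(i), while individual runs on this small population can miss it by a wide margin.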

Randomization only affects the baseline distribution of confounders. What happens after that? Blinding is supposed to help control differences, but there are two problems. First, a great many social experiments are poorly blinded: often everybody knows who is in treatment versus control – from the study subjects themselves to those who administer the policy to those who measure the outcomes to those who do the statistical analyses – and all of these can make significant differences. Second, without reasonable local background knowledge about the lives of the study participants (be they individuals or villages), it is hard to see how we have reason to suppose that no systematic differences affect the two groups post randomization.

Sometimes people say they want RCTs because RCTs measure average effect sizes and we need these for cost–benefit analyses. They do, and we do. But the RCT measures the average effect size in the population enrolled in the experiment. Generally, we need to do cost–benefit analysis for a different population, so we need the average effect size there. The RCT does not give us that.

I do not rehearse these facts to attack RCTs. RCTs are a very useful tool for causal inference – for inferring anonymous singular causal claims. I only list these cautions so that they will be kept in mind in deciding which tool – an RCT or a case study or some other method or some combination – will give the most reliable inference to singular causal claims in any particular case.

I turn now to the case study and how it can warrant singular causal claims – in this case, individualized ones.

2.3 A Category Scheme for Types of Evidence for Singular Causation That a Case Study Can Provide

Suppose a program T has been introduced into a particular setting S in hopes of producing outcome O there. We have good reason to think O occurred. Now we want to know whether T, as it was in fact implemented in S, was (at least partly) responsible.Footnote 9 What kinds of information should we try to collect in our case study to provide evidence about this? In this section I offer a catalogue of types of evidence that can help. I start by drawing some distinctions. However, it is important to make a simple point at the start. I aim to lay out a catalogue of kinds of evidence that, if true, can speak for or against singular causal claims. How compelling that evidence is will depend on:

how strong the link, if any, is between the evidence and the conclusion,

how sure we can be about the strength of this link, and

how warranted we are in taking the evidence claim to be true.

All three of these are hostages to ignorance, which is always the case when we try to draw conclusions from our evidence. In any particular case we may not be all that sure about the other factors that need to be in place to forge a strong link between our evidence claim and our conclusion, we may worry whether what we see as a link really is one, and we may not be all that sure about the evidence claim itself. The elimination of alternatives is a special case where the link is known to be strong: If we have eliminated alternatives then the conclusion follows without the need for any further assumptions. But, as always, we still face the problem of how sure we can be of the evidence claim. Have we really succeeded in eliminating all alternatives? No matter what kind of evidence claim we are dealing with, it is a rare case when we are sure our evidence claims are true and we are sure how strong our links are, or even if they are links at all. That’s why, when it comes to evidence, the more the better.

The first distinction that can help provide a useful categorization for types of evidence for singular causal claims is that between direct and indirect evidence:

Direct: Evidence that looks at aspects of the putative causal relationship itself to see if it holds.

Indirect: Evidence that looks at features outside the putative causal relationship that bear on the existence of this relationship.

Indirect. The prominent kind of indirect evidence is evidence that helps eliminate alternatives. If O occurred in S, and everything other than T has been ruled out as a cause of O in S’s case, then T must have done it. This is what Alexander Bird (2010, 345) calls ‘Holmesian inference’ because of the famous Holmes remark that when all the other possibilities have been eliminated, what remains must be responsible even if improbable. RCTs provide indirect evidence, eliminating alternative explanations by (in the ideal) distributing all the other possible causes of O equally between treatment and control groups. But we don’t need a comparison group to do this. We can do this in the case study as well, if we know enough about what the other causes might be like, and/or about the history of the situation S. We do this in physics experiments regularly. But we don’t need physics to do it. It is, for instance, how I know it was my cat that stole the pork chop from the frying pan while I wasn’t looking.
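The logic of eliminating alternatives can be sketched as a set operation. The function name and the candidate labels are hypothetical, and the sketch takes for granted what is hardest in practice: that the list of candidate causes is complete and that each elimination is itself well warranted.

```python
def holmesian_inference(candidate_causes, ruled_out):
    """Return the sole surviving candidate cause, or None when elimination
    leaves no candidate or more than one."""
    remaining = set(candidate_causes) - set(ruled_out)
    return next(iter(remaining)) if len(remaining) == 1 else None


# Hypothetical candidate causes of O in S.
candidates = {"T", "alternative_1", "alternative_2"}

complete = holmesian_inference(candidates, {"alternative_1", "alternative_2"})
incomplete = holmesian_inference(candidates, {"alternative_1"})
```

Only when every alternative has been ruled out does the conclusion follow; with one alternative still open, the function returns nothing.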

Direct. I have identified at least four different kinds of direct evidence possible for the individualized singular causal claim that T caused O in S:

1. The character of the effect: Does O occur at the time, in the manner, and of the size to be expected had T caused it? (For those who are familiar with his famous paper on symptoms of causality, Bradford Hill (1965) endorses this type of evidence.)

2. Symptoms of causation: Not symptoms that T occurred but symptoms that T caused the outcome, side effects that could be expected had T operated to produce O. This kind of inference is becoming increasingly familiar as people become more and more skilled at drawing inferences from ‘big data’. As Suzy Moat puts it, “People leave this large amount of data behind as a by-product of simply carrying on with their lives.” Clever users of big data can reconstruct a great deal about our individual lives from the patterns they find there.Footnote 10

3. Presence of requisite support factors (moderator/interactive variables): Was everything in place that needed to be in order for T to produce O?

4. Presence of expectable intermediate steps (mediator variables): Were the right kinds of intermediate stages present?

Which of these types of evidence will be possible to obtain in a given case will vary from case to case. Any of them that we can gather will be equally relevant for post-hoc evaluation and for ex ante prediction, though we certainly won’t ever be able to get evidence of type 2 before the fact. I am currently engaged in an NSF-funded research project, Policy Prediction: Making the Most of the Evidence, that aims to use the situation-specific causal equations model (SCEM) framework sketched in Section 2.5 to expand this catalogue of evidence types and to explore more ways to use it for policy prediction.

2.4 A Diagrammatic Example

Let me illustrate with one of those diagrammatic examples we philosophers like, this one constructed from my simple-minded account of how an emetic works. It may be a parody of a real case study, but it provides a clear illustration of each of these types of evidence.

Imagine that yesterday I inadvertently consumed a very harmful poison. Luckily, I realized I had done so and thereafter swallowed a strong emetic. I vomited violently and have subsequently not suffered any serious symptoms of poisoning. I praise the emetic: It saved me! What evidence could your case study collect for that?

Elimination of alternatives: There are very low survival rates with this poison. So it is not likely my survival was spontaneous. And there’s nothing special about me that would otherwise explain my survival having consumed the poison. I don’t have an exceptional body mass, I hadn’t been getting slowly acclimatised to this poison by earlier smaller doses, I did not take an antidote, etc.

Presence of required support factors (other factors without which the cause could not be expected to produce this effect): The emetic was swallowed before too much poison was absorbed from the stomach.

Presence of necessary intermediate step: I vomited.

Presence of symptoms of the putative causes acting to produce the effect: There was much poison in the vomit, which is a clear side effect of the emetic’s being responsible for my survival.

Characteristics of the effect: The amount of poison in the vomit was measured and compared with the amount I had consumed. I suffered just the effects of the remaining amount of poison; and the timing of the effect and size were just right.

2.5 Showing This Kind of Information Does Indeed Provide Evidence about Singular Causation

I developed the scheme in Section 2.3 for warranting singular causal claims bottom-up by surveying case studies in engineering, applied science, policy evaluation, and fault diagnoses, inter alia. But a more rigorous grounding is possible: these types all provide information relevant for filling in features of a situation-specific causal equations model (SCEM). Once you see what a SCEM is, this is apparent by inspection, so I will not belabor that point. Instead, I will spend time defending the SCEM framework itself.

A SCEM is a set of equations that express (one version of) what is sometimes called the ‘logic model’ of the policy intervention: a model of how the policy treatment T is supposed to bring about the targeted outcome O, step by step. Each of the equations is itself what in Section 2.2 was called a ‘potential outcomes equation’. (In situations where the kind of quantitative precision suggested by these equations seems impossible or inappropriate, there is an analogous Boolean form for yes–no variables, familiar in philosophy from Mackie (1965) and in social science from qualitative comparative analysis [e.g., Rihoux and Ragin 2008].)

To build a SCEM, start with the outcome O of interest. Just what should the policy have led to at the previous stage that will produce O at the final stage? Let’s call that ‘O-1’. Recalling that a single cause is seldom enough to produce an effect on its own, what are the support factors necessary for O-1 to produce O? Represent the net effect of all the support factors by ‘α-1’. Establishing that these support factors were/will be in place or not provides important evidence about whether O can be brought about by O-1. If not, then certainly T cannot produce O (at least not in the way you expect). Consider as well what other factors will be in place at the penultimate stage that will affect O. These affect the size or level of O. You want to know about those because they provide alternative explanations for the level of O that occurs; they are also relevant for judging the size T’s contribution would have to be if T were to contribute to the outcome. Represent the net effect of all these together by ‘W-1’. How O depends on all these factors can then be represented in a potential outcomes equation like this:

O(i) c= α-1(i)O-1(i) + W-1(i)

Work backwards, step by step, constructing a potential outcomes equation for each stage until the start, where T is introduced. The resulting set of equations is the core of the SCEM for this case.
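The core of a SCEM can be sketched as an ordered list of stages, each one a potential outcomes equation. The class and function names and the numerical values are assumptions for illustration. The model is built backwards from O, as described, but the code evaluates it forwards from T.

```python
from dataclasses import dataclass


@dataclass
class Stage:
    """One potential outcomes equation: output = alpha * input + w."""
    name: str
    alpha: float  # net effect of the support factors at this stage
    w: float      # net effect of causes that do not interact with the input


def run_scem(stages, t):
    """Propagate treatment level t forward through the ordered stages."""
    level = t
    for stage in stages:
        level = stage.alpha * level + stage.w
    return level


def contribution_of_t(stages, t):
    """Difference T makes to the final outcome, holding all else fixed."""
    return run_scem(stages, t) - run_scem(stages, 0.0)


# Hypothetical three-stage chain from T to O.
working_chain = [Stage("O-2", 1.0, 0.0), Stage("O-1", 0.5, 1.0), Stage("O", 2.0, 3.0)]
# Same chain, but the support factors at the middle stage are absent.
broken_chain = [Stage("O-2", 1.0, 0.0), Stage("O-1", 0.0, 1.0), Stage("O", 2.0, 3.0)]

effect_working = contribution_of_t(working_chain, 1.0)
effect_broken = contribution_of_t(broken_chain, 1.0)
```

In the working chain T's contribution is the product of the αs along the path. In the broken chain the outcome still reaches a positive level through the Ws, but none of it is due to T, which is why evidence about support factors at each stage matters.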

But there is more. Think about the support factors (represented by the αs) that need to be in place at each stage. These are themselves effects; they have a causal history that can be expressed in a set of potential outcomes equations that can be added to the core SCEM. This is important information too: Knowing about the causes of the causes of an effect is a clue to whether the causes will occur and thus to whether the effect can be expected. The factors that do not interact with O-1 (represented by W-1) but that also affect O have causal histories as well that can be represented in a series of potential outcomes equations and added to the SCEM. So too with all the Ws in the chain. For purposes of evaluation, we may also want to include equations in which O figures as a cause since seeing that the effects of O obtain gives good evidence that O itself occurred. We can include as much or as little of the causal histories of various variables in the SCEM as we find useful.

I am not suggesting that we can construct SCEMs that are very complete, but I do suggest that this is what Nature does. Even in the single case, what causes what is not arbitrary – at least not if there is to be any hope that we can make reasonable predictions, explanations, and evaluations. There is a system to how Nature operates, and we have learned that generally this is what the system is like: Some factors can affect O in this individual and some cannot. All those that can affect an outcome appear in Nature’s own potential outcomes equation for that outcome. Single factors seldom contribute on their own so the separate terms in Nature’s equations will generally consist of combinations of mutually interacting factors. So Nature’s equations look much like ours. Or, rather, when we do it well, ours look much like Nature’s since hers are what we aim to replicate.

So: A successful SCEM for a specific individual provides a concise representation of what causal sequences are possible for that individual given the facts about that individual and its situation – what values the quantities represented can take in relation to values of their causes and effects. Some of the features represented in the SCEM will be ones we can influence, and some of these are ones we would influence in implementing the policy; others will take the values that naturally evolve from their causal past. The interpretation of these equations will become clearer as I defend their use.

I offer three different arguments to support my claim that SCEMs are good for treating singular causation: 1) their use for this purpose is well developed in the philosophy literature; 2) singular causation thus treated satisfies a number of common assumptions; 3) the potential outcomes equations that make up a SCEM are central to the formal defense I described in Section 2.2 that RCTs can establish causal conclusions.Footnote 11

1) The SCEM framework is an adaptation for variables with more than two values of J. L. Mackie’s (1965) famous account in which causes are INUS conditions for their effects. In the adaptation, causes are INUS conditions for contributions to the effect,Footnote 12 where an INUS condition for a contribution to O(i) is an Insufficient but Necessary part of an Unnecessary but Sufficient condition for a contribution to it. Each of the additive terms (α(i)T(i) and W(i)) on the right of the equation O(i) c= α(i)T(i) + W(i) represents a set of conditions that together are sufficient for a contribution to O(i) but they are unnecessary since many things can contribute to O; and each component of an additive term (e.g., α(i) and T(i)) is an insufficient but necessary part of it – both are needed and neither is enough alone. This kind of situation-specific causal equations model for treating singular causation is also familiar in the contemporary philosophy of science literature, especially because of the widely respected work of Christopher Hitchcock.Footnote 13
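For yes–no variables the INUS structure can be checked directly. The following is a minimal sketch of a Boolean analogue, assuming a single support factor A for T and a single alternative route W; the names are illustrative.

```python
def outcome(t: bool, a: bool, w: bool) -> bool:
    """Boolean analogue of O c= alpha*T + W: O occurs if (T and A) or W."""
    return (t and a) or w


# T alone is insufficient: with its support factor A absent, no outcome.
t_alone_insufficient = outcome(True, False, False) is False
# T is a necessary part of the conjunct: A without T gives no outcome.
t_necessary_in_conjunct = outcome(False, True, False) is False
# The conjunct (T and A) is sufficient, whatever W does ...
conjunct_sufficient = all(outcome(True, True, w) for w in (False, True))
# ... but unnecessary, since W can produce O without it.
conjunct_unnecessary = outcome(False, False, True) is True
```

The four checks correspond, in order, to the I, N, S, and U of Mackie's acronym.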

2) The SCEM implies a number of characteristics for singular causal relations that they are widely assumed to have:

the causal relation is irreflexive (nothing causes itself)

the causal relation is asymmetric (if T causes O, O does not cause T)

causes occur temporally before their effects

there are causes to fix every effect

causes of causes of an effect are themselves causes of that effect (since substituting earlier causes of the causes in an equation yields a POE valid for a different coarse-graining of the time)Footnote 14

causal relations give rise to noncausal correlations.Footnote 15

3) Each equation in a SCEM is a potential outcomes equation of the kind that is used in the Rubin/Holland argument I laid out in Section 2.2 to show that RCTs can produce causal conclusions: A SCEM is simply a reiteration of the POE used to represent singular causation in the treatment of RCTs, expanded to include causes of causes of the targeted outcome and, sometimes, further effects as well. So, if we buy the Rubin/Holland argument about why a positive difference in means between treatment and control groups provides evidence that the treatment has caused the outcome in at least some members of the treatment group, it seems we are committed to taking POEs, and thus SCEMs, as a good representation of the causal possibilities open to individuals in the study population.
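The link between individual-level POEs and a difference in means can be illustrated with a minimal Python sketch. It assumes a hypothetical population in which each individual obeys O(i) = α(i)T(i) + W(i), with the causal equality treated as ordinary arithmetic; the population size, the values of α(i) and W(i), and the names `outcome`, `alphas`, `ws`, and `treated` are all invented for illustration and are not taken from the Rubin/Holland argument itself.

```python
import random
from statistics import mean

def outcome(alpha, t, w):
    """O(i) = alpha(i) * T(i) + W(i): the potential outcomes equation for one individual."""
    return alpha * t + w

rng = random.Random(0)  # fixed seed so the illustration is reproducible
N = 2000

# Hypothetical population (all numbers are made up for illustration):
# half the individuals have alpha(i) = 0, so T can never contribute for them.
alphas = [0.0 if i % 2 == 0 else 2.0 for i in range(N)]
ws = [rng.gauss(10.0, 1.0) for _ in range(N)]

# Random allocation of exactly half the population to treatment.
treated = set(rng.sample(range(N), N // 2))

treated_outcomes = [outcome(alphas[i], 1, ws[i]) for i in range(N) if i in treated]
control_outcomes = [outcome(alphas[i], 0, ws[i]) for i in range(N) if i not in treated]

difference_in_means = mean(treated_outcomes) - mean(control_outcomes)
average_alpha = mean(alphas)  # 1.0 by construction

print(f"difference in means: {difference_in_means:.3f}")
print(f"average alpha(i):    {average_alpha:.3f}")
```

In this sketch the difference in means tracks the average of the α(i), and a positive average requires α(i) > 0 for at least some individuals, even though half of this hypothetical population cannot be affected by the treatment at all.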

Warning: Equations like these are sometimes treated as if they represent ‘general causal principles’. That is a mistake. To see why, it is useful to think in terms of a threefold distinction among equations we use in science and policy, and similarly for more qualitative principles:

Equations and principles that represent the context-relative causal possibilities that obtain for a specific single individual, as in the SCEMs discussed here.

Equations and principles that represent the context-relative causal possibilities for a specific population. These often look just like a SCEM so it appears as if the causal possibilities are the same for every member of the population. This can be misleading for two reasons. First, for some individuals in the population some of the α(i)s may be fixed at 0 so that the associated cause can never contribute to the outcome for them. Second, the W(i)s can contain a variable that applies only to the single individual i (as noted in footnote 5). So there can be unique causal possibilities for each member of the population despite the fact that the equation makes it look as if they are all the same.

Equations and principles that hold widely. I suggest reserving the term ‘general principles’ for these, which are relatively context free, like the law of the lever or perhaps ‘People act so as to maximize their expected utility.’ These are the kinds of principles that we suppose ground the single-case causal possibilities represented in SCEMs and the context-relative principles that describe the causal possibilities for specific populations. These general principles tend to employ very abstract concepts, by contrast with the far more concrete, operationalizable ones that describe study results on individuals or populations – abstract concepts such as ‘utility’, ‘force’, ‘democracy’. They are also generally different in form from SCEMs. Think, for instance, about the form of Maxwell’s equations, which ground the causal possibilities for any electromagnetic device: these are not SCEM-like in form at all. It is in an instantiation of these in a real concrete arrangement located in space and time that genuine causal possibilities, of the kind represented in SCEMs, arise.

I note the differences between equations representing general principles and those representing causal possibilities for a single case or for a specific population to underline that knowing general principles is not enough to tell us what we need to know to predict policy outcomes for specific individuals, whether these are individual students or classes or villages, considered as a whole, or specific populations in specific places. Knowing Maxwell’s principles will not tell you how to repair your Christmas-tree lights. For that you need context-specific local knowledge about what the local arrangements are that call different general principles into play, both together and in sequence. That’s what will enable you to build a good SCEM that you can use for predicting and explaining outcomes. The same unfortunately is true for the use of general principles to predict the results of development and other social policies. Good general principles should be very reliable, but it takes a lot of thinking and a lot of local knowledge to figure out how to deploy them to model concrete situations. This is one of the principal reasons why we need case studies.

Thinking about how local arrangements call different general principles into play or not is key to how to make good use of our general knowledge to build local SCEMs. Consider a potted version of the case of the failure of the class-size reduction program that California implemented in 1996/97 based on the successes of Tennessee’s STAR project (which was attested by a good RCT) and Wisconsin’s SAGE program. Let us suppose for purposes of illustration that these three general principles obtain widely:

Smaller classes are conducive to better learning outcomes.

Poor teaching inhibits learning.

Poor classroom facilities inhibit learning.

Imagine that in Tennessee there were good teacher-training schools with good routes into local teaching positions and a number of new schools with surplus well-equipped classrooms that had resulted from a vigorous, well-funded school-building program. In California there was a great deal of political pressure and financial incentivization to introduce the program all at once (it was rolled out in most districts within three months of the legislation being passed); there were few well-trained unemployed teachers and no vigorous program for quick recruitment; and classrooms, we can suppose, were already overcrowded. These arrangements in California called all three principles into play at once; thus – so this story goes – the good effects promised by the operation of the first principle were outweighed by the harmful effects of the other two. Learning outcomes did not improve across the state, and in some places they got worse.Footnote 16 The arrangements in Tennessee called into play only the first principle, which accounts for the improved outcomes there.

How would you know whether to expect the results in California to match those of Tennessee and Wisconsin? Not by looking for superficial ‘similarities’ between the two. I recommend a case study, one that builds a SCEM for California, modelling the sequential steps by which the policy is supposed to achieve the targeted outcomes and then modelling what factors are needed in order for each step to lead to the next and what further causes are supposed to ensure that these factors are in place. We can’t do this completely, but reviewing the California case, it seems there was ample evidence – evidence of the kinds laid out in the catalogue of Section 2.3 – to fill in enough of the SCEM to see that a happy outcome was not to be expected.

2.6 Conclusion

How much evidence of the kinds in my catalogue and in what combinations must a case study deliver, and how secure must it be, in order to provide a reasonable degree of certainty about a causal claim about the case? There’s no definitive answer. That’s a shame. But this is not peculiar to case studies; it is true for all methods for causal inference.

Consider the RCT. If we suppose the treatment does satisfy the independence assumptions noted in Appendix 2.1, we can calculate how likely a given positive difference in means is if the treatment had no effect and the difference was due entirely to chance. But for most social policy RCTs there are good reasons to suppose the treatment does not satisfy the independence assumptions. The allocation mechanism often is not by a random-outcome device; there is not even single blinding let alone the quadruple we would hope for (of the subjects, the program administrators and overseers, those who measure outcomes, and those who do the statistical analysis); numbers enrolled in the experiment are often small; dropouts, noncompliance, and control group members accessing the treatment outside the experiment are not carefully monitored; sources of systematic differences between treatment and control groups after randomization are not well thought through and controlled; etc. – the list is long and well known. Often this is the best we can do, and often it is better than nothing. The point is that there are no formulae for how to weigh all this up to calculate what level of certainty the experiment provides that the treatment caused the outcome in some individuals in the experimental population. Similarly with all other methods of causal inference. Some things can be calculated – subject to assumptions. But there is seldom a method for calculating how the evidence that the assumptions are satisfied stacks up, and we often have little general idea about what that evidence should even look like. Judgment – judgment without rules to fall back on – is required in all these cases. I see no good arguments that the judgments are systematically more problematic in case studies than anywhere else.

The same holds when it comes to expecting the same results elsewhere. Maybe if you have a big effect size in an RCT with lots of subjects enrolled and good reason to think that the independence assumptions were satisfied, you have reason to think that in a good number of individuals the treatment produced the outcome. For a single case study, you can have at best good reason to think that the treatment caused the outcome in one individual. Perhaps knowing it worked for a number of individuals gives better grounds for expecting it to work in the next. Perhaps not. Consider economist Angus Deaton’s (2015) suggestions about St. Mary’s school, which is thinking about adopting a new training program because a perfect RCT elsewhere has shown it improves test scores by X. But St. Joseph’s down the road adopted the program and got Z. What should St. Mary’s do? It is not obvious, or clear, that St. Joseph’s is not a better guide than the RCT, or indeed an anecdote about another school. After all, St. Mary’s is not the mean, and may be a long way from it. Which is a better guide – or any guide at all – depends on how similar, in just the right ways, the individual/individuals in the study are to the new one we want predictions about. And how do we know what the right ways are? Well, a good case study at St. Joseph’s can at least show us what mattered for it to work there, which can be some indication of what it might take to work at St. Mary’s since they share much underlying structure.Footnote 17 In this case it looks like the advantage for exporting the study result may lie with the case study and not with the higher numbers.

Group-comparison studies do have the advantage that they can estimate an effect size – for the study population. That may be just what we need – for instance, in a post-hoc evaluation where the program contractors are to be paid by size of result. But we should beware of the assumption that this number is useful elsewhere. We have seen that it depends on the mean value of the net contribution of the interactive/support factors in the study population. It takes a lot of knowledge to warrant the assumption that the support factors at work in a new situation will have the same mean.

What can we conclude in general, then, about how secure causal conclusions from case studies are or how well they can be exported? Nothing. But other methods fare no better.

There is one positive lesson we can draw. We often hear the claim that case studies may be good for suggesting causal hypotheses but it takes other methods to test them. That is false. Case studies can test causal conclusions. And a well-done case study can establish causal results more securely than other methods if those methods are not well carried out or if we have little reason to accept the assumptions it takes to justify causal inference from their results.

3.1 Introduction

Experiments of all kinds have once again become popular in the social sciences (Druckman et al. 2011). Of course, psychology has long used them. But in my own field of political science, and in adjacent areas such as economics, far more experiments are conducted now than in the twentieth century (Jamison 2019). Lab experiments, survey experiments, field experiments – all have become popular (for example, Karpowitz and Mendelberg 2014; Mutz 2011; and Gerber and Green 2012, respectively; Achen 2018 gives an historical overview).

In political science, much attention, both academic and popular, has been focused on field experiments, especially those studying how to get citizens to the polls on election days. Candidates and political parties care passionately about increasing the turnout of their voters, but it was not until the early twenty-first century that political campaigns became more focused on testing what works. In recent years, scholars have mounted many field experiments on turnout, often with support from the campaigns themselves. The experiments have been aimed particularly at learning the impact on registration or turnout of various kinds of notifications to voters that an election was at hand. (Green, McGrath, and Aronow 2013 reviews the extensive literature.)

Researchers doing randomized experiments of all kinds have not been slow to tout the scientific rigor of their approach. They have produced formal statistical models showing that an RCT is typically vastly superior to an observational (nonrandomized) study. In statistical textbooks, of course, experimental randomization has long been treated as the gold standard for inference, and that view has become commonplace in the social sciences. More recently, however, critics have begun to question this received wisdom. Cartwright (2007a, 2017, Chapter 2 this volume) and her collaborators (Cartwright and Hardie 2012) have argued that RCTs have important limitations as an inferential tool. Along with Heckman and Smith (1995), Deaton (2010) and others, she has made it clear what experiments can and cannot hope to do.

So where did previous arguments for RCTs go wrong? In this short chapter, I take up a prominent formal argument for the superiority of experiments in political science (Gerber et al. 2014). Then, building on the work of Stokes (2014), I show that the argument for experiments depends critically on emphasizing the central challenge of observational work – accounting for unobserved confounders – while ignoring entirely the central challenge of experimentation – achieving external validity. Once that imbalance is corrected, the mathematics of the model leads to a conclusion much closer to the position of Cartwright and others in her camp.

3.2 The Gerber–Green–Kaplan Model

Gerber, Green, and Kaplan (2014) make a case for the generic superiority of experiments, particularly field experiments, over observational research. To support their argument, they construct a straightforward model of Bayesian inference in the simplest case: learning the mean of a normal (Gaussian) distribution. This mean might be interpreted as an average treatment effect across the population of interest if everyone were treated, with heterogeneous treatment effects distributed normally. Thus, denoting the treatment-effects random variable by $X_t$ and the population variance of the treatment effects by $\sigma_t^2$, we have the first assumption:

$$X_t \sim N(\mu, \sigma_t^2) \tag{1}$$

Gerber et al. implicitly take $\sigma_t^2$ to be known; we follow them here.

In Gerber et al.’s (2014) setup, there are two ways to learn about µ. The first is via an RCT, such as a field experiment. They take the view that estimation of population parameters by means of random sampling is analogous to the estimation of treatment effects by means of randomized experimentation (Gerber et al. 2014, 32 at fn. 8). That is, correctly conducted experiments always yield unbiased estimates of the population parameter.

Following Gerber et al.’s mathematics but making the experimental details a bit more concrete, suppose that the experiment has a treatment and a control group, each of size n, with individual outcomes distributed normally and independently: $N(\mu, \sigma_e^2)$ in the experimental group and $N(0, \sigma_e^2)$ in the control group. That is, the mathematical expectation of outcomes in the treatment group is the treatment effect µ, while the expected effect in the control group is 0. We assume that the sampling variance is the same in each group and that this variance is known. Let the sample means of the experimental and control groups be $\bar{X}_e$ and $\bar{X}_c$ respectively, and let their difference be $\bar{X}_e - \bar{X}_c$.

Then, by the textbook logic of pure experiments plus familiar results in elementary statistics, the difference $\bar{X}_e - \bar{X}_c$ is distributed as:

$$\bar{X}_e - \bar{X}_c \sim N\!\left(\mu,\ \frac{2\sigma_e^2}{n}\right) \tag{2}$$

which is unbiased for the treatment effect µ. Thus, we may define a first estimate of the treatment effect by $\hat{\mu}_e = \bar{X}_e - \bar{X}_c$: It is the estimate of the treatment effect coming from the experiment. This is the same result as in Gerber et al. (2014, 12), except that we have spelled out here the dependence of the variance on the sample size.
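The "familiar results in elementary statistics" behind this sampling distribution can be spelled out in a short derivation. The symbols $\bar{X}_e$, $\bar{X}_c$, and $\sigma_e^2$ (the common outcome variance in each group) are notation assumed here for illustration; the derivation uses only the independence and equal-variance assumptions stated in the text.

```latex
\begin{align*}
E[\bar{X}_e - \bar{X}_c] &= E[\bar{X}_e] - E[\bar{X}_c] = \mu - 0 = \mu, \\
\operatorname{Var}(\bar{X}_e - \bar{X}_c)
  &= \operatorname{Var}(\bar{X}_e) + \operatorname{Var}(\bar{X}_c)
   = \frac{\sigma_e^2}{n} + \frac{\sigma_e^2}{n}
   = \frac{2\sigma_e^2}{n}.
\end{align*}
```

Because a difference of independent normal variables is itself normal, these two moments fix the whole distribution, and the variance shrinks toward zero as the group size n grows.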

Next, Gerber et al. assume that there is a second source of knowledge about µ, this time from an observational study with m independent observations, also independent of the experimental observations. Via regression or other statistical methods, this study generates a normally distributed estimate of the treatment effect µ, with known sampling variance $\sigma_o^2/m$. However, because the methodology is not experimental, Gerber et al. (2014, 12–13) assume that the effect is estimated with confounding, so that its expected value is distorted by a bias term β. Hence, the estimate from the observational study $\hat{\mu}_o$ is distributed as:

$$\hat{\mu}_o \sim N\!\left(\mu + \beta,\ \frac{\sigma_o^2}{m}\right) \tag{3}$$

We now have two estimates, $\hat{\mu}_e$ and $\hat{\mu}_o$, and we want to know how to combine them. One can proceed by constructing a minimum-mean-squared error estimate in a classical framework, or one can use Bayesian methods. Since both approaches give the same result in our setup and since the Bayesian logic is more familiar, we follow Gerber et al. in adopting it. In that case, we need prior distributions for each of the unknowns.

With all the variances assumed known, there are just two unknown parameters, µ and β. An informative prior on µ is not ordinarily adopted in empirical research. At the extreme, as Gerber et al. (2014, 15) note, a fully informative prior for µ would mean that we already knew the correct answer for certain and we would not care about either empirical study, and certainly not about comparing them. Since our interest is in precisely that comparison, we want the data to speak for themselves. Hence, we set the prior variance on µ to be wholly uninformative; in the usual Bayesian way we approximate its variance by infinity.Footnote 1

The parameter β also needs a prior. Sometimes we know the likely size and direction of bias in an observational study, and in that case we would correct the observational estimate by subtracting the expected size of the bias, as Gerber et al. (2014, 14) do. For simplicity here, and because it makes no difference to the argument, we will assume that the direction of the bias is unknown and has prior mean zero, so that subtracting its mean has no effect. Then the prior distribution is:

$$\beta \sim N(0, \sigma_\beta^2) \tag{4}$$

Here $\sigma_\beta^2$ represents our uncertainty about the size of the observational bias. Larger values indicate more uncertainty. Standard Bayesian logic then shows that our posterior distribution for the observational study on its own is $\mu \mid \hat{\mu}_o \sim N\!\left(\hat{\mu}_o,\ \sigma_\beta^2 + \sigma_o^2/m\right)$.
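The "standard Bayesian logic" invoked here can be sketched in three steps. The notation is assumed for illustration: $\hat{\mu}_o$ is the observational estimate, $\sigma_o^2/m$ its sampling variance, $\sigma_\beta^2$ the prior variance of the bias β, and $\varepsilon_o$ a hypothetical sampling-error term taken to be independent of β.

```latex
\begin{align*}
\hat{\mu}_o &= \mu + \beta + \varepsilon_o,
  \qquad \beta \sim N(0, \sigma_\beta^2),
  \quad \varepsilon_o \sim N\!\left(0, \sigma_o^2/m\right), \\
\hat{\mu}_o \mid \mu &\sim N\!\left(\mu,\ \sigma_\beta^2 + \sigma_o^2/m\right)
  \quad \text{(integrating out } \beta\text{)}, \\
\mu \mid \hat{\mu}_o &\sim N\!\left(\hat{\mu}_o,\ \sigma_\beta^2 + \sigma_o^2/m\right)
  \quad \text{(flat prior on } \mu\text{)}.
\end{align*}
```

On this reading, uncertainty about the bias simply adds to the sampling variance, so no amount of additional observational data (larger m) can push the posterior variance below $\sigma_\beta^2$.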

Now, under these assumptions, Bayes’ Theorem tells us how to combine the observational and experimental evidence, as Gerber et al. (2014, 14) point out. In accordance with their argument, the resulting combined or aggregated estimate $\hat{\mu}$ is a weighted average of the two estimates $\hat{\mu}_o$ and $\hat{\mu}_e$:

$$\hat{\mu} = p\,\hat{\mu}_o + (1 - p)\,\hat{\mu}_e \tag{5}$$

where p is the fraction of the weight given to the observational evidence, and

$$p = \frac{2\sigma_e^2/n}{2\sigma_e^2/n + \sigma_\beta^2 + \sigma_o^2/m} \tag{6}$$

This result is the same as Gerber et al.’s, except that here we had no prior information about µ, which simplifies the interpretation without altering the implication that they wish to emphasize.
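The behaviour of the weight p can be explored numerically. The following is a minimal Python sketch, assuming that p takes the usual inverse-variance form: the experimental estimate has variance 2σ_e²/n and the observational estimate has total variance σ_β² + σ_o²/m. The function and parameter names, and all numerical values, are hypothetical choices made for illustration.

```python
def observational_weight(sigma_e2, n, sigma_o2, m, sigma_beta2):
    """Weight p placed on the observational estimate (inverse-variance weighting).

    sigma_e2    -- outcome variance within each experimental group (assumed name)
    n           -- size of each experimental group
    sigma_o2/m  -- sampling variance of the observational estimate
    sigma_beta2 -- prior variance of the observational bias
    """
    var_experiment = 2.0 * sigma_e2 / n
    var_observational = sigma_beta2 + sigma_o2 / m
    return var_experiment / (var_experiment + var_observational)


def combined_estimate(mu_hat_e, mu_hat_o, p):
    """Weighted average of the observational and experimental estimates."""
    return p * mu_hat_o + (1.0 - p) * mu_hat_e


# A large observational study (m = 100,000) against a modest experiment (n = 500),
# evaluated at increasing prior uncertainty about the observational bias.
bias_variances = (0.0, 0.01, 1.0, 1e6)
weights = [observational_weight(1.0, 500, 1.0, 100_000, s) for s in bias_variances]

for s, p in zip(bias_variances, weights):
    print(f"sigma_beta^2 = {s:>9}: p = {p:.6f}")
```

With these made-up numbers the observational study dominates when its bias is known to be zero, and its weight falls toward zero as the prior variance of the bias grows, however large m is.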

That implication is this: Since $\sigma_e^2$, $\sigma_o^2$, n, and m are just features of the observed data, the key aspect of p is our uncertainty about the bias term $\beta$, which is captured by the prior variance $\sigma_\beta^2$. Importantly, Gerber et al. (2014, 15) argue that we often know relatively little about the size of likely biases in observational research. In the limit, they say, we become quite uncertain, and $\sigma_\beta^2 \to \infty$. In that case, obviously, $p \to 0$ in Equation (6), and the observational evidence gets no weight at all in Equation (5), not even if its sample size is very large.

This limiting result is Gerber et al.’s (2014, 15) Illusion of Observational Learning Theorem. It formalizes the spirit of much recent commentary in the social sciences, in which observational studies are thought to be subject to biases of unknown, possibly very large size, whereas experiments follow textbook strictures and therefore reach unbiased estimates. Moreover, in an experiment, as the sample size goes to infinity, the correct average treatment effect is essentially learned with certainty.Footnote 2 Thus, only experiments tell us the truth. The mathematics here is unimpeachable, and the conclusion and its implications seem to be very powerful. Gerber et al. (2014, 19–21) go on to demonstrate that under conditions like these, little or no resources should be allocated to observational research. We cannot learn anything from it. The money should go to field experiments such as those they have conducted, or to other experiments.

3.3 A Learning Theorem with No Thumb on the Scale

Gerber et al.’s Illusion of Observational Learning Theorem follows rigorously from their assumptions. The difficulty is that those assumptions combine jaundiced cynicism about observational studies with gullible innocence about experiments. As they make clear in the text, the authors themselves are neither unrelievedly cynical nor wholly innocent about either kind of research. But the logic of their mathematical conclusion turns out to depend entirely on their becoming sneering Mr. Hydes as they deal with observational research, and then transforming to kindly, indulgent Dr. Jekylls when they move to RCTs.

To see this, consider the standard challenge of experimental research: external validity, discussed in virtually every undergraduate methodology text (for example, Kellstedt and Whitten 2009, 75–76). Gerber et al. (2014, 22–23) mention this problem briefly, but they see it as a problem primarily for laboratory experiments because the inferential leap to the population is larger than for field experiments. The challenges that they identify for field experiments consist primarily in administering them properly. Even then, they suggest that statistical adjustments can often correct the biases induced (Gerber et al. 2014, 23–24). The flavor of their remarks may be seen in the following sentence:

The external validity of an experiment hinges on four factors: whether the subjects in the study are as strongly influenced by the treatment as the population to which a generalization is made, whether the treatment in the experiment corresponds to the treatment in the population of interest, whether the response measure used in the experiment corresponds to the variable of interest in the population, and how the effect estimates were derived statistically.

What is missing from this list are the two critical factors emphasized in the work of recent critics of RCTs: heterogeneity of treatment effects and the importance of context. A study of inducing voter turnout in a Michigan Republican primary cannot be generalized to what would happen to Democrats in a general election in Louisiana, where the treatment effects are likely to be very different. There are no Louisianans in the Michigan sample, no Democrats, and no general election voters. Hence, no within-sample statistical adjustments are available to accomplish the inferential leap. Biases of unknown magnitude remain, and these are multiplied when one aims to generalize to a national population as a whole. As Cartwright (2007a; Chapter 2 this volume), Cartwright and Hardie (2012), Deaton (2010), and Stokes (2014) have spelled out, disastrous inferential blunders occur commonly when a practitioner of field experiments imagines that they work the way Gerber et al. (2014) assume that they work in their Bayesian model assumptions. Gerber et al. (2014, 32 at fn. 6) concede in a footnote: “Whether bias creeps into an extrapolation to some other population depends on whether the effects vary across individuals in different contexts.” But that crucial insight plays no role in their mathematical model.

What happens in the Gerber et al. model when we take a more evenhanded approach? If we assume, for example, that experiments have a possible bias $\alpha$ stemming from failures of external validity, then in parallel to the assumption about bias in observational research, we might specify our prior beliefs about external invalidity bias as normally and independently distributed:

$$\alpha \sim N(0, \sigma_\alpha^2) \tag{7}$$

Then the posterior distribution of the treatment estimate from the experimental research would be $\mu \mid \hat{\mu}_e \sim N\!\left(\hat{\mu}_e,\ \sigma_\alpha^2 + 2\sigma_e^2/n\right)$, and the estimate combining both observational and experimental evidence would become:

$$\hat{\mu} = q\,\hat{\mu}_o + (1 - q)\,\hat{\mu}_e \tag{8}$$

where q is the new fraction of the weight given to the observational evidence, and

$$q = \frac{\sigma_\alpha^2 + 2\sigma_e^2/n}{\sigma_\alpha^2 + 2\sigma_e^2/n + \sigma_\beta^2 + \sigma_o^2/m} \tag{9}$$

A close look at this expression (or taking partial derivatives) shows that the weight given to observational and experimental evidence is an intuitively plausible mix of considerations.

For example, an increase in m (the sample size of the observational study) reduces the denominator and thus raises q; this means that, all else equal, we should have more faith in observational studies with more observations. Conversely, increases in n, the sample size of an experiment, raise the weight we put on the experiment. In addition, the harder that authors have worked to eliminate confounders in observational research (small $\sigma_\beta^2$), the more we believe them. And the fewer the issues with external validity in an experiment (small $\sigma_\alpha^2$), the more weight we put on the experiment. That is what follows from Gerber et al.’s line of analysis when all the potential biases are put on the table, not just half of them. But, of course, all these implications have been familiar for at least half a century. Carried out evenhandedly, the Bayesian mathematics does no real work and brings us no real news.
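These comparative statics can be checked numerically. The sketch below is a minimal Python illustration, assuming that the weight q on the observational evidence has the inverse-variance form in which the experimental estimate carries total variance σ_α² + 2σ_e²/n and the observational estimate carries σ_β² + σ_o²/m. The function name, parameter names, and baseline values are all hypothetical.

```python
def observational_weight_q(sigma_e2, n, sigma_o2, m, sigma_beta2, sigma_alpha2):
    """Weight q on the observational estimate when both studies may be biased.

    sigma_alpha2 -- prior variance of the external-validity bias of the experiment
    sigma_beta2  -- prior variance of the confounding bias of the observational study
    """
    var_experiment = sigma_alpha2 + 2.0 * sigma_e2 / n
    var_observational = sigma_beta2 + sigma_o2 / m
    return var_experiment / (var_experiment + var_observational)


# Hypothetical baseline: both kinds of bias are equally uncertain.
base = dict(sigma_e2=1.0, n=500, sigma_o2=1.0, m=1000,
            sigma_beta2=0.05, sigma_alpha2=0.05)

q_base = observational_weight_q(**base)
q_larger_m = observational_weight_q(**{**base, "m": 10_000})
q_larger_n = observational_weight_q(**{**base, "n": 5_000})
q_less_confounding = observational_weight_q(**{**base, "sigma_beta2": 0.01})
q_better_external_validity = observational_weight_q(**{**base, "sigma_alpha2": 0.01})

print(f"baseline q:                 {q_base:.3f}")
print(f"larger observational m:     {q_larger_m:.3f}")
print(f"larger experimental n:      {q_larger_n:.3f}")
print(f"smaller confounding prior:  {q_less_confounding:.3f}")
print(f"smaller external-val prior: {q_better_external_validity:.3f}")
```

Each change moves q in the direction the text describes: more observations or less confounding uncertainty raise the weight on the observational study, while a larger experiment or fewer external-validity worries lower it.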

Gerber et al. arrived at their Illusion of Observational Learning Theorem only by assuming away the problems of external validity in experiments. No surprise that experiments look wonderful in that case. But one could put a thumb on the other side of the scale: Suppose we assume that observational studies, when carefully conducted, have no biases due to omitted confounders, while experiments continue to have arbitrarily large problems with external validity. In that case, $\sigma_\beta^2 = 0$ and $\sigma_\alpha^2 \to \infty$. A look at Equations (8) and (9) then establishes that in that case, we get an Illusion of Experimental Learning Theorem: Experiments can teach us nothing, and no one should waste time and money on them. But of course, this inference is just as misleading as Gerber et al.’s original theorem.
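The two one-sided limits can be set side by side. The display below is a sketch under assumed notation: $\sigma_\alpha^2$ and $\sigma_\beta^2$ are the prior variances of the experimental and observational biases, $2\sigma_e^2/n$ and $\sigma_o^2/m$ are the two sampling variances, and q is taken to have the inverse-variance form.

```latex
\begin{align*}
q &= \frac{\sigma_\alpha^2 + 2\sigma_e^2/n}
          {\sigma_\alpha^2 + 2\sigma_e^2/n + \sigma_\beta^2 + \sigma_o^2/m}, \\[4pt]
\sigma_\alpha^2 = 0,\ \sigma_\beta^2 \to \infty
  &\;\Longrightarrow\; q \to 0
  \quad \text{(all weight on the experiment)}, \\
\sigma_\beta^2 = 0,\ \sigma_\alpha^2 \to \infty
  &\;\Longrightarrow\; q \to 1
  \quad \text{(all weight on the observational study)}.
\end{align*}
```

The two "illusion" results are mirror images: each follows from letting one bias variance vanish while the other grows without bound, and neither holds when both are finite and positive.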

Gerber et al. (2014, 11–12, 15, 26–30) concede that observational research sometimes works very well. When observational biases are known to be small, they see a role for that kind of research. But they never discuss a similar condition for valid experimental studies. Even in their verbal discussions, which are more balanced than their mathematics, they continue to write as if experiments had no biases: “experiments produce unbiased estimates regardless of whether the confounders are known or unknown” (Gerber et al. 2014, 25). But that sentence is true only if external validity is never a problem. Their theorem about the unique value of experimental work depends critically on that assumption. Alas, the last decade or two have taught us forcefully, if we did not know it before, that their assumption is very far from being true. Just as instrumental variable estimators looked theoretically attractive when they were developed in the 1950s and 1960s but often failed in practice (Bartels 1991), so too the practical limitations of RCTs have now come forcefully into view.

Experiments have an important role in political science and in the social sciences generally. So do observational studies. But the judgment as to which of them is more valuable in a particular research problem depends on a complex mixture of prior experience, theoretical judgment, and the details of particular research designs. That is the conclusion that follows from an evenhanded set of assumptions applied to the model Gerber et al. (2014) set out.

3.4 Conclusion

Causal inference of any kind is just plain hard. If the evidence is observational, patient consideration of plausible counterarguments, followed by the assembling of relevant evidence, can be, and often is, a painstaking process.Footnote 3 Faced with those challenges, researchers in the current intellectual climate may be tempted to substitute something that looks quicker and easier – an experiment.

The central argument for experiments (RCTs) is that the randomization produces identification of the key parameter. That is a powerful and seductive idea, and it works very well in textbooks. Alas, this modus operandi does not work nearly so well in practice. Without an empirical or theoretical understanding of how to get from experimental results to the relevant population of interest, stand-alone RCTs teach us just as little as casual observational studies. In either case, there is no royal road to secure inferences, as Nancy Cartwright has emphasized. Hard work and provisional findings are all we can expect. As Cartwright (2007b) has pungently remarked, experiments are not the gold standard, because there is no gold standard.

4.1 Introduction

What lessons can be learned from the international community’s slow and piecemeal response to the Ebola epidemic in Guinea, Sierra Leone, and Liberia in 2014? Are the histories and outcomes of microfinance programs in one country or by one lender relevant beyond each country or lender? How can we judge whether the early results of a medical or other experiment are so powerfully indicative of either success or failure that the experiment should be stopped even before all cases are treated or all the evidence is in?

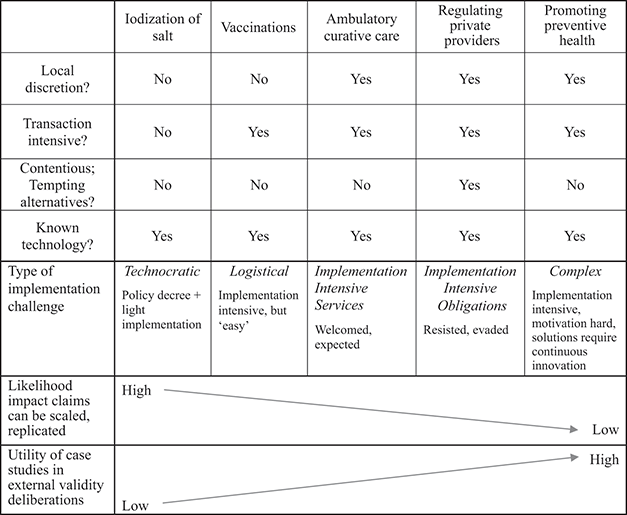

Case studies are one approach to addressing such questions. Yet one of the most common critiques of case study methods is that the results of individual case studies cannot be readily generalized. Oxford professor Bent Flyvbjerg notes that when he first became interested in in-depth case study research in the 1990s, his teachers and colleagues tried to dissuade him from using case studies, arguing “you cannot generalize from a single case.” Flyvbjerg concluded that this view constitutes a conventional wisdom that “if not directly wrong, is so oversimplified as to be grossly misleading” (Flyvbjerg, 2006: 219). Similarly, the present chapter notes that the conventional wisdom is not fully wrong, as techniques for generalizing from individual case studies are complex and potentially fallible. The chapter concurs with Flyvbjerg, however, in concluding that we have means of assessing which findings will and will not generalize. For some case studies and some findings, generalization beyond the individual case is not warranted. In other contexts, we can make contingent generalizations from one or more case studies, or generalizations to a subset of a population that shares a well-defined set of features. In still other instances, sweeping generalizations to large and diverse populations are possible even from a single case study. The answer to whether case studies generalize is “It depends.” It depends on our prior causal knowledge, our prior knowledge of populations of cases and of the frequency of contextual variables that enable or disable causal mechanisms, the evidence that emerges from process tracing on case studies (see Chapter 7), and how that evidence updates our prior knowledge of causal mechanisms and the contexts in which they do and do not operate.

A second, and related, critique of case studies is that their findings do not cumulate into successive improvements in theories. The present chapter, in contrast, argues that case studies can contribute to developing two different kinds of progressively better theories. First, case studies can lead to improved theories about individual causal mechanisms and the scope conditions under which they operate. Claims about causal mechanisms are one of the most common kinds of theory in both the social and physical sciences. Second, case studies can contribute to improved “typological theories,” or theories about how combinations of causal mechanisms interact in specified issue areas and distributions of resources, stakeholder interests, legitimacy, and institutions. Later case studies can build upon, test, qualify, and extend typological theories developed in earlier ones.

This chapter first clarifies different conceptions of “generalization” in statistical and case study research. It then discusses four kinds of generalization from case studies: generalization from the selection and study of “typical” cases, generalization from most- and least-likely cases, mechanism-based generalization, and generalization via typological theories. The chapter uses studies of the 2014 Ebola epidemic as a running example to illustrate many of these kinds of generalization, and it draws on studies of microfinance programs and medical experiments to illustrate particular kinds of generalization.

4.2 Statistical Versus Case Study Views on “Generalization”

While the accurate explanation of individual historical cases is important and useful, the ability to generalize beyond individual cases is rightly considered a key component of both theoretical progress and policy relevance. Theories are abstractions that simplify the task of perceiving and operating in the world, and without some degree and kind of generalization little simplification is possible. But “generalization” can take on several meanings, and scholars and policy-makers vary in their views on what kinds of generalizations are either possible or pragmatically useful, partly depending on whether their methodological training was mostly in quantitative or qualitative approaches. Thus, it is important to clarify the different meanings that scholars in different methodological traditions typically give to the term “generalization.”

Among researchers whose main methods are statistical analysis of observational data, “generalization” is commonly treated as a question of the “average effect” observed between a specified independent variable and the dependent variable of interest in a population. This average effect is represented by the coefficients on the statistically significant independent variables in a regression equation.Footnote 1 Similarly, for researchers who use experimental methods, generalization takes the form of the estimated “average treatment effect,” measured as the average difference in outcomes between the treated and untreated groups from a large number of randomly selected units.Footnote 2
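As a hedged illustration of the experimental quantity just described, the estimated average treatment effect can be written as a difference in group means. The notation is an assumption introduced here, not the chapter's own: $Y_i$ is the observed outcome for unit $i$, $D_i \in \{0, 1\}$ indicates whether unit $i$ was treated, and $n_1$ and $n_0$ are the numbers of treated and untreated units.

```latex
\widehat{\mathrm{ATE}}
  = \frac{1}{n_1} \sum_{i \,:\, D_i = 1} Y_i
  \;-\; \frac{1}{n_0} \sum_{i \,:\, D_i = 0} Y_i
```

The estimate is a single number for the whole sample; by itself it says nothing about how the effect varies from one unit to another.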

Generalization from statistical analysis of observational data depends on several assumptions, most notably: 1) that the treatment of one unit does not affect the outcome of another unit (the Stable Unit Treatment Value Assumption, or SUTVA); and, 2) that independent variables have “constant effects” across the units (or, related, the “unit homogeneity” assumption that two units will have the same value on the dependent variable when they have the same value on the explanatory variable).Footnote 3 These are very demanding assumptions, and they do not hold up when there are interaction effects among independent variables, or when there are learning or selection effects through which the outcome (or expected outcome) in one individual or group affects the behavior, treatment, or outcome of another individual or group.

For statistical methods, the possibility that there may in fact be interaction effects, selection effects, and learning can create what is known as the “ecological inference problem.” Specifically, even if a statistical correlation holds up for a population, and even if the correlation is causal, it is a potential fallacy to infer that any one case in the population is causally explained by the correlation that is observed at the population level. When interaction effects exist, a variable that raises the average outcome for a population may have a greater or smaller effect, or zero effect or even a negative effect, on the outcome for an individual case.
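A small numerical sketch may make the point concrete. The six units, the two contexts, and the outcome values below are entirely hypothetical, and the sketch assumes that both potential outcomes of every unit are known, which is never true of real data, where each unit is observed under only one condition.

```python
# Hypothetical units: (context, outcome if untreated, outcome if treated).
# In "context_A" the treatment raises the outcome by 3; in "context_B"
# an interacting contextual factor reverses the sign of the effect.
units = [
    ("context_A", 10, 13),
    ("context_A", 12, 15),
    ("context_A", 9, 12),
    ("context_A", 11, 14),
    ("context_B", 10, 9),
    ("context_B", 13, 12),
]


def unit_effects(units):
    """Treated-minus-untreated outcome for each individual unit."""
    return [treated - untreated for _, untreated, treated in units]


def average_effect(units):
    """Population-level average of the unit-level effects."""
    effects = unit_effects(units)
    return sum(effects) / len(effects)


def effects_by_context(units):
    """Average effect within each context (a contingent generalization)."""
    grouped = {}
    for context, untreated, treated in units:
        grouped.setdefault(context, []).append(treated - untreated)
    return {ctx: sum(vals) / len(vals) for ctx, vals in grouped.items()}


print("average effect:", average_effect(units))
print("effects by context:", effects_by_context(units))
print("unit effects:", unit_effects(units))
```

The population average is positive even though the effect is negative for every unit in the second context, so inferring any one unit's effect from the average would be a mistake for a third of these hypothetical cases. Conditioning on context recovers statements that hold within each subset.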

For example, in the 1960s, on the basis of statistical and other evidence, it became generally (and rightly) accepted as true that smoking increases the general prevalence of lung cancer for large groups of people. This generalization is an adequate basis for the policy recommendation that governments should discourage smoking. Yet the generalization that smoking on average increases the incidence of lung cancer does not tell us whether any one individual contracted lung cancer due to smoking.Footnote 4 Some people who smoke develop lung cancer but others do not, and some people who do not smoke develop lung cancer.Footnote 5 Scientists using statistical methods to assess epidemiological and experimental data have more recently begun to understand some of the genetic, environmental, and behavioral factors (in addition to the decision on whether to smoke) that affect the probability that a specific individual will develop lung cancer. This supports more targeted policy recommendations on whether an individual with particular genes is at especially high risk if they choose to smoke. For example, recent studies indicate that individuals with a mutation in a region on chromosome 15 will have a greatly increased risk of contracting lung cancer if they smoke (Pray 2008: 17). Even in this subgroup, however, it cannot be said with certainty that any one individual developed lung cancer because of smoking, as not every individual with this mutation gets lung cancer even if they smoke.Footnote 6

Statistical researchers are well aware that strong assumptions are required to extend inferences from populations to individual cases, and they are typically careful to make clear that their models do not necessarily explain individual cases (although the results of statistical studies are often oversimplified in media reports and applied to individual cases). Case study researchers in the social sciences tend to be particularly skeptical about strong assumptions regarding constant effects, unit homogeneity, and independence of cases. These researchers often think that high-order interaction effects, interdependencies among cases across space or time, and other forms of complexity are common in social life. Consequently, qualitative researchers in the social sciences typically doubt whether there are many nontrivial single-variable generalizations that apply in consistent ways across large populations of cases in society.

Case study researchers thus face the obverse of the ecological inference problem: often it is neither possible nor desirable to “generalize” from one or a few case studies to a population in the sense of developing estimates of average causal effects. Yet, at the same time, case study researchers do aspire to derive conclusions from case studies that are useful beyond the specific cases studied. Instead of seeking estimates of average effects for a population, case study researchers attempt to identify narrower “contingent generalizations” that apply to subsets of a population that share combinations of independent variables. Case study researchers thus develop “typological” or “middle range” theories about how similar combinations of variables lead to similar outcomes through similar processes or pathways. These researchers often focus on hypothesized causal mechanisms and their scope conditions, posing research questions in the following form: “Under what conditions does this mechanism have a positive effect on the outcome, under what conditions does it have zero effect, and under what conditions does it have a negative effect?”