Introduction

Nudges are behavioural policy interventions that slightly modify the decisional context, without much affecting material incentives and without enforcing a particular choice, thus limiting the infringement of freedom and preserving autonomy. Nudges have been used all over the world in many domains such as health (e.g., displaying red or green coloured spots on food items to indicate healthiness), the environment (e.g., indicating your neighbours’ consumption of electricity on your electricity bills), and retirement (e.g., changing the default option from non-enrolment to enrolment in the pension plan provided by companies), among many others (see Thaler & Sunstein, 2009; Oliver, 2013; World Bank, 2015; OECD, 2017; Sanders et al., 2018a; Szaszi et al., 2018).

The ethical debate over nudges has been quite rich over the past 15 years or so. A large portion of the debate has focused on whether or not nudges effectively preserve the liberty and the autonomy of the targeted population. Most contributions on this point have been philosophical and theoretical (see the review of Congiu & Moscati (2021)). However, there is a growing set of empirical studies that aim to contribute to this point by measuring the way people (i.e., not scholars with an interest in nudges) judge various nudges to be more or less acceptable (see the references cited below and in Section 7.3 of Congiu & Moscati (2021)).

In this article, we aim to further our understanding of the main conclusion reached by Sunstein and Reisch (2019) in their book-length discussion of a large set of acceptability studies on nudging that they conducted. Their main conclusion is that ‘the majority of citizens of most nations have no views, either positive or negative, about nudging in general; their assessment turns on whether they approve of the purposes and effects of particular nudges’ (Sunstein & Reisch, 2019, p. 7). This means that, for example, people do not find that changing a default option is acceptable or unacceptable in itself as a public policy. Their acceptability judgements depend on the domain of application and therefore on the exact purpose of a nudge. For example, changing a default option so that you are automatically saving for your retirement is usually judged as more acceptable than changing a default option so that you are automatically paying a carbon compensation fee on plane tickets (see, e.g., Yan & Yates, 2019). Many studies have found these types of results for a large set of domains (see, e.g., Hagman et al., 2015, 2022; Jung & Mellers, 2016; Tannenbaum et al., 2017; Davidai & Shafir, 2020; Osman et al., 2018; Sunstein & Reisch, 2019).

However, while most acceptability studies are based on descriptions of nudges, we still have a relatively poor understanding of the extent to which, for a given nudge in a given domain, different descriptions of the same purpose and effects could yield different acceptability ratings. We, therefore, propose to study whether framing the purposes and effects of nudges as ‘increasing desirable behaviour’ or as ‘decreasing undesirable behaviour’ impacts acceptability ratings.

We also propose to study the main conjecture that Sunstein and Reisch (2019) derive from the positive relation they observe between how much citizens trust their political institutions and how acceptable they rate nudges. Their conjecture is that ‘endorsement of nudges in general might increase when citizens are invited to participate, actively choose, and offer feedback on planned interventions.’ In other words, we should consider ‘the importance of public participation and consultation with respect to behaviourally informed policies’ (Sunstein & Reisch, 2019, p. 73). We, therefore, propose to study whether including in the description of a nudge that it has been designed through a consultation with the targeted population can increase its acceptability.

Background

Our study is motivated by the lack of systematic control for how the purpose and effects of nudges are framed in acceptability studies, as well as by the lack of experimental studies on the effect of public participation on the acceptability ratings of nudges.

Framing purpose and effectiveness

As noted above, most acceptability studies are based on descriptions of nudges. When describing a nudge, it is often natural to include a description of its purpose in order to ensure that subjects have a correct understanding of what they are evaluating. It is, therefore, very common to mention that a given nudge is meant, for instance, ‘to encourage the consumption of healthier alternatives’ (Hagman et al., 2015, p. 445) or ‘to reduce childhood obesity’ (Sunstein & Reisch, 2019, p. 32). Notice how these two examples differ: the first one frames the purpose of a nudge in terms of an increase in desirable behaviour (healthy eating), while the second frames the purpose of another nudge as a decrease in the outcome of undesirable behaviour (obesity).

One can make the following two observations from a careful reading of the materials used in acceptability studies that are based on descriptions of nudges. On the one hand, there are studies in which the purposes of nudges are framed in no systematic way: as increases in desirable behaviour, as decreases in undesirable behaviour, as increases in the outcome of desirable behaviour, as decreases in the outcome of undesirable behaviour, or as combinations of some of these possibilities (e.g., Hagman et al., 2015; Osman et al., 2018; Sunstein & Reisch, 2019). On the other hand, there are studies that systematically frame the purposes of nudges in the same way, usually as increases in desirable behaviour (e.g., Tannenbaum et al., 2017; Hagman et al., 2022). In both cases, there is no systematic test for the possible effect that the framing of the purpose of a given nudge might have on its acceptability.

The study that comes closest to testing for such an effect is Jung and Mellers (2016). In one of their experiments (study 2), they tested (among other manipulations) the impact of framing the purpose of a nudge as, for example, helping people ‘to enjoy the benefits of good health’ (i.e., as an increase in the outcome of the desirable behaviour of being enrolled in a basic medical plan) or ‘to avoid the costs of poor health’ (i.e., as a decrease in the outcome of the undesirable behaviour of not being enrolled in a basic medical plan). They implemented this manipulation for five nudges and found no effect on acceptability. The manipulation that we propose to implement is different in two ways. Firstly, we frame the purposes of three nudges as increases in desirable behaviour or as decreases in undesirable behaviour and the purpose of one nudge as an increase in the outcome of desirable behaviour or as a decrease in the outcome of undesirable behaviour. Secondly, our framing of the purposes of nudges is accompanied by a similar framing of their effectiveness. [Footnote 1]

Mentioning some information about the effectiveness of nudges in their descriptions seems important to us in order to neutralize as much as possible people's tendency to hold inaccurate perceptions of the effectiveness of nudges, which in turn tend to strongly influence their judgements of acceptability. Indeed, the perceived effectiveness of a nudge is often found to be a very good predictor of its acceptability, even if that perception is inaccurate. For instance, Jung and Mellers (2016) found that nudges that imply little deliberation from the targeted population (such as switching default options) are perceived as being less acceptable and less effective than nudges that imply more deliberation (such as reminders), even though empirical studies have shown that the former tend to be more effective than the latter. This empirical link between perceived effectiveness and acceptability has been found in a number of studies (see, e.g., Petrescu et al., 2016; Bang et al., 2018; Cadario & Chandon, 2020; Djupegot & Hansen, 2020; Gold et al., 2021). However, when the effectiveness of a nudge is displayed explicitly during the evaluation task, the presence of this information tends to increase its acceptability (see, e.g., Pechey et al., 2014; Arad & Rubinstein, 2018; Reynolds et al., 2019, 2020; Rafiq, 2021). This is especially the case for non-deliberative nudges, which tend to become more acceptable than deliberative nudges when information about their effectiveness is provided (see, e.g., Sunstein & Reisch, 2019, chap. 7; Davidai & Shafir, 2020).

The remarks made above about the lack of control and systematicity in the way the purposes of nudges are framed in acceptability studies apply with the same force to the way the effectiveness of nudges is framed. The study that comes closest to controlling for the framing of effectiveness is Gold et al. (2021). In their experiments, they tested the impact of providing arguments for the effectiveness of a nudge by explaining how it is supposed to work to, for example, ‘adopt/maintain a healthier diet’ or ‘reduce or even stop smoking’ (p. 10), or for its lack of effectiveness by explaining how it could backfire and lead to, for example, ‘increases [in] people's overall daily calorie intake’ or ‘increases in smoking.’ They found a small effect of the framing of effectiveness for two nudges out of five: explaining how these nudges are supposed to work slightly increases their acceptability. Our manipulation of the framing of effectiveness is different in that we were more systematic in framing only the effectiveness of nudges (not their potential lack of effectiveness) by presenting numerical data on how they actually increased desirable behaviour or decreased undesirable behaviour. This is more in line with the studies mentioned in the previous paragraph, as is our way of only mentioning how the nudge is supposed to work without framing this explanation in a specific direction.

The effect of public participation

Several scholars have discussed cases in which citizens were directly involved in the design of a nudge or in the collective decision process that led to its implementation (John, 2018; de Jonge et al., 2018; Sanders et al., 2018b; John & Stoker, 2019). This is usually done through various forms of deliberative forums in which the exchange of ideas can take place. Sunstein and Reisch (2019, chap. 6) conjecture that the nudges that are implemented as a result of these experiences of deliberative democracy will be perceived as being more acceptable than nudges that are implemented more traditionally without consultation with citizens. Studies on the acceptability of nudges have not yet tested this conjecture to the best of our knowledge. In our study, we propose a very simple manipulation to provide such a test.

The present study

The empirical study that we detail below investigates the impact of two factors on people's acceptability judgements about nudges: (1) whether the decision to implement the nudge was made in consultation with representatives of the targeted population (mention of a consultation, no mention of a consultation) and (2) the framing of both the purpose behind the nudge and its effectiveness (increase in desirable behaviour for both purpose and effectiveness, decrease in undesirable behaviour for both purpose and effectiveness). We tested two hypotheses:

Hypothesis 1: The mention of a consultation with representatives of the targeted population will increase the acceptability of a nudge.

Hypothesis 2: The framing of nudges’ purposes and effectiveness will impact their acceptability.

We expected that the mention of a consultation with representatives of the targeted population would increase the acceptability of a nudge because it decreases the arbitrariness that one can potentially perceive in a nudge that is imposed in a technocratic fashion. We also expected that the framing of both the purpose and the effectiveness of a nudge would impact its acceptability, though we were not sure in which direction since there are mixed results in the literature and this type of joint framing had not been previously tested to the best of our knowledge.

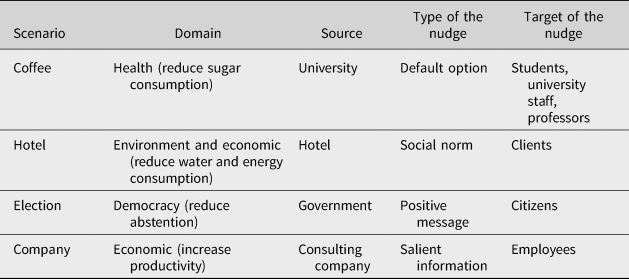

In order to increase the generalizability of the potential effects, each subject had to evaluate the acceptability of four nudges, which all varied in several dimensions (identity of the nudger, identity of the targeted population, behavioural domain, and type of nudge). Three of the nudges were genuine policies that had already been implemented somewhere in the world and one was a fictitious but plausible one. Finally, we also collected individual data on gender, age, education, and political opinions with no particular expectations of observing particular interaction effects.

Experimental design

We conducted an online randomized experiment implemented using Qualtrics. Starting from August 18, 2020, participants were recruited using snowball sampling: the link to the study was publicly shared on social media (LinkedIn and Facebook) by the second author, by the official account of his Master's programme, and by the head of that programme (not involved in the study). Individuals were encouraged to share the study. We aimed to recruit as many participants as possible until August 31, 2020, and ended up with 171 participants (81 males, 85 females, 5 NA) aged from 17 to 84 (M = 41.24, SD = 16.15). [Footnote 2]

We used a 2 (framing: increase in desirable behaviour vs. decrease in undesirable behaviour) × 2 (consultation: mention vs. no mention) between-subject design. Each participant first read an introduction explaining (1) that they were taking part in research conducted at our University, (2) what nudges are and that participants would evaluate the acceptability of four nudges, and (3) that responses were anonymous and that personal information was not collected. Each participant was then asked to read the description of a nudge and rate its acceptability. Each participant followed this procedure for a total of four nudge scenarios. When one scenario had been rated, another scenario appeared, and it was not possible to go back to previous scenarios to change the acceptability rating. At the end of the experiment, participants answered questions about their gender, age, education, and political opinions. Participants were not compensated for their participation in the study.

Three of the four nudge scenarios were inspired by real nudges. The ‘coffee’ scenario described a change in the default amount of sugar in drinks supplied by university coffee vending machines in order to reduce sugar consumption (inspired by Priolo et al. (2022)). The ‘hotel’ scenario described the communication to hotel clients of the share of previous clients who had chosen to reuse their towel in order to reduce water consumption by the hotel (inspired by Bohner & Schlüter (2014)). The ‘election’ scenario described a government sending its citizens an encouraging SMS in order to reduce abstention in the election (inspired by Gerber & Rogers (2009)). The last and fictitious nudge scenario was the ‘company’ scenario: it described a company that implemented a system for tracking work hours on their employees’ computers to reduce the time spent surfing the internet (inspired by Felsen et al. (2013)). The four scenarios were presented to each participant in a random order. The scenarios were chosen to test our hypotheses on a large variety of nudges (see Table 1). [Footnote 3]

Table 1. Characteristics of the four nudge scenarios.

These scenarios present diversity (1) in the type of nudge evaluated (default option, social norm, positive message, salient information), (2) in the source of the nudge (university, company, government, employer), (3) in the target of the nudge (people working or studying at the university, consumers, citizens, employees), and (4) in the behavioural domain of the nudge (health, environment, democracy, economic). Variability in the scenarios was not introduced to directly test which nudge characteristics are potential moderators of the framing and consultation effects, but rather to stress the robustness of potential effects in numerous situations. Our design was not adapted to attribute a difference in acceptance and effect heterogeneity to any particular nudge characteristic (except for the framing of the nudges and the presence of consultation, which were manipulated), since these characteristics always vary in more than one dimension from one scenario to another, preventing rigorous analysis due to confounding variables. All scenarios were constructed with a similar structure:

1. Introduction of the behavioural domain: We describe the domain in which a behavioural intervention is justified.

2. Purpose [frame ++ / frame − −]: We describe the purpose of the nudger in terms of an increase in desirable behaviour (frame ++) or in terms of a decrease in undesirable behaviour (frame − −).

3. Decision to implement the nudge [mention of a consultation, no mention of a consultation]: We either mention that the implementation of the nudge was decided in consultation with representatives of the targeted population (mention of a consultation), or we do not mention anything about how that decision was taken (no mention of a consultation).

4. Description of the nudge: We describe the type of nudge and explain why this nudge could be effective.

5. Effectiveness of the nudge [frame ++ / frame − −]: We communicate the effectiveness of the nudge, in terms of an increase in desirable behaviour (frame ++) or in terms of a decrease in undesirable behaviour (frame − −).

For each participant, we therefore manipulate two dimensions of the scenarios. The first dimension is the frame we used to describe the nudge scenario. Both the purpose of the nudge and its effectiveness are described either in terms of an increase in desirable behaviour (frame ++) or in terms of a decrease in undesirable behaviour (frame − −). We decided to manipulate the purpose and the effectiveness of the nudge simultaneously to always present them in the same frame (mixing the framing of the purpose and effectiveness would have been very confusing). The other dimension is whether the targeted population had a voice in the decision process that led to the implementation of the nudge (mention of a consultation) or not (no mention of a consultation). Table 2 summarizes the relevant differences between treatments. To avoid spillover effects, we decided to present all the scenarios to each participant in the same condition (i.e., same combination of frame and consultation).
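The assignment logic described above can be sketched in a few lines. This is only an illustration under stated assumptions: the study itself was run in Qualtrics, the source does not say whether assignment was simple or balanced, and the function name `assign_participant` and the seed are hypothetical.

```python
import random

FRAMES = ("frame ++", "frame --")
CONSULTATION = ("mention", "no mention")
SCENARIOS = ("coffee", "hotel", "election", "company")

def assign_participant(rng):
    """Draw one condition for the whole session and a random scenario order."""
    # Assumption: simple (unbalanced) random assignment to one of the 2 x 2 cells.
    frame = rng.choice(FRAMES)
    consultation = rng.choice(CONSULTATION)
    order = list(SCENARIOS)
    rng.shuffle(order)
    # Every scenario inherits the same (frame, consultation) pair,
    # which is what keeps the design between-subject.
    return [(scenario, frame, consultation) for scenario in order]

session = assign_participant(random.Random(2020))  # arbitrary seed
for row in session:
    print(row)
```

Holding the condition fixed within a session is the design choice that avoids spillover between scenarios; only the scenario order varies within a participant.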

Table 2. Experimental manipulation in each nudge scenario.

Our dependent variable was obtained from the acceptability scale proposed by Tannenbaum et al. (2017), which we adapted into French. [Footnote 4] Our acceptability scale is composed of the following items:

1. Do you support this policy?

2. Do you oppose this policy? (R)

3. Do you think that this policy is ethical?

4. Do you think that this policy is manipulative? (R)

5. Do you think that this policy is unethical? (R)

6. Do you think that this policy is coercive? (R)

Each item was rated on a 5-point Likert scale (1 = Not agreeing at all, 5 = Totally agreeing). ‘(R)’ indicates reversed items. For each scenario, we averaged the scores of all items (for the reversed items, we subtracted their score from 6) to compute a single Acceptability index. We used the Acceptability indexes (one for each scenario) as the dependent variables of linear regression models with the framing and consultation treatments as independent variables. We estimated these models with and without including the individuals’ characteristics as controls.
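The scoring rule can be illustrated with a minimal sketch. The function name `acceptability_index` and the example ratings are hypothetical; the item order follows the six-item list above, and the authors' own computation was done in R.

```python
from statistics import mean

# 0-based positions of the reversed (R) items:
# oppose, manipulative, unethical, coercive.
REVERSED = {1, 3, 4, 5}

def acceptability_index(ratings):
    """Average six 1-5 Likert ratings after reverse-scoring the (R) items."""
    if len(ratings) != 6 or any(r < 1 or r > 5 for r in ratings):
        raise ValueError("expected six ratings between 1 and 5")
    # A reversed item with score r contributes 6 - r, so 1 <-> 5 and 2 <-> 4.
    scored = [6 - r if i in REVERSED else r for i, r in enumerate(ratings)]
    return mean(scored)

# Hypothetical respondent: support 5, oppose 1, ethical 4,
# manipulative 3, unethical 2, coercive 1.
print(acceptability_index([5, 1, 4, 3, 2, 1]))
```

With this coding, a respondent who fully agrees with the two positive items and fully disagrees with the four reversed items reaches the maximum index of 5.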

As control variables, we collected information at the end of the experiment about gender (masculine, feminine, other), age, education, and political opinion (from 1 = far left to 5 = far right). At the time of the experiment, we did not expect particular interactions between these controls and the effects of framing and consultation, but we aimed to investigate potential interactions as an exploratory analysis. In total, 158 out of 171 participants provided complete answers to the control variables. [Footnote 5] We conducted a power analysis using the pwr package for R. With 171 participants and a 5% type I error rate, the expected power to detect a medium effect size (Cohen's d = 0.5) is 0.902, and the effect size detectable with 80% power is Cohen's d = 0.431.
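As a rough plausibility check on these figures, the power of a two-sided comparison between two groups can be approximated with the normal distribution. This sketch is not the pwr computation (which relies on the noncentral t distribution), and it assumes that the 171 participants are split equally between the two levels of a treatment; the function name `approx_power` is hypothetical.

```python
from math import sqrt
from statistics import NormalDist

def approx_power(d, n_total, alpha=0.05):
    """Normal approximation to the power of a two-sided, two-sample test."""
    n_per_group = n_total / 2              # assumption: equal split
    z = NormalDist()
    z_crit = z.inv_cdf(1 - alpha / 2)      # two-sided critical value
    shift = d * sqrt(n_per_group / 2)      # expected value of the test statistic
    # Probability of rejecting in either tail under the alternative.
    return z.cdf(shift - z_crit) + z.cdf(-shift - z_crit)

for d in (0.5, 0.431):
    print(d, round(approx_power(d, 171), 3))
```

Because the normal distribution replaces the t distribution, the values should land close to, but not exactly on, the reported ones.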

Results

The data and the R script for the statistical analyses are publicly available on OSF. [Footnote 6]

Internal validity and correlation between acceptability indexes

We first tested the internal validity of the acceptability scale across the four scenarios. We found Cronbach's alpha values ranging from 0.741 to 0.893, suggesting that the items in the acceptability scale capture a similar concept. Table 3 summarizes the mean and standard deviation of the acceptability index and the Cronbach's alpha of the acceptability scale. Figure 1 shows the distribution of the acceptability index. Note that the maximum acceptability rating for a nudge is 5 (mean of the 6 items, each rated on a maximum of 5 points). [Footnote 7]
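For readers unfamiliar with the statistic, Cronbach's alpha for k items is k/(k − 1) times one minus the ratio of the summed item variances to the variance of the total score. A minimal sketch follows; the function name `cronbach_alpha` and the toy ratings are hypothetical and are not the study's data.

```python
from statistics import variance

def cronbach_alpha(rows):
    """rows: one list of k item scores per respondent.

    Reversed items are assumed to be recoded already (6 - score).
    """
    k = len(rows[0])
    # Sample variance of each item across respondents.
    item_vars = [variance([row[i] for row in rows]) for i in range(k)]
    # Sample variance of each respondent's total score.
    total_var = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)

# Hypothetical recoded ratings from four respondents on three items.
toy = [[5, 4, 5], [3, 3, 4], [2, 2, 1], [4, 5, 4]]
print(round(cronbach_alpha(toy), 3))
```

Alpha approaches 1 when the items move together across respondents, which is the sense in which the items "capture a similar concept".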

Figure 1. Distribution of the Acceptability index. Note: Distribution of the Acceptability Index for the Coffee (Top Left), Hotel (Top Right), Election (Bottom Left) and Company (Bottom Right) Scenarios.

Table 3. Mean and standard deviation of the Acceptability index in the four nudge scenarios, and across scenarios.

Note: Standard error in parentheses. Differences between mean acceptability indexes across scenarios are all significant at the 0.001 level (two-tailed paired t-test).

Confirmatory results

We conducted separate OLS regressions with each scenario's acceptability measures as dependent variables, and with ‘frame ++’ and consultation as independent variables. We ran regressions with and without control variables (including gender, age, political opinion, level of education, and the order of the scenarios within the experiment).

The results of the regressions are summarized in Table 4 (models 1, 3, 5, 7).

Table 4. OLS results. Acceptability across the scenarios.

Note: Standard errors in parentheses. p < 0.1; *p < 0.05; **p < 0.01; ***p < 0.001. Control variables indicating the order of the scenario within the experiment and the participant's level of education are not significant at the 10% level in any regression and are masked for the sake of clarity. Difference in the number of observations is explained by participants who did not answer the demographic questions.

In the coffee and in the election scenarios, we found evidence that a positive frame and the mention of a consultation with the targeted population reduced acceptability ratings (in the coffee scenario (model 1): Cohen's d = −0.181, p = 0.0190 for the positive frame and Cohen's d = −0.208, p = 0.0072 for consultation; in the election scenario (model 5): Cohen's d = −0.158, p = 0.0410 for the positive frame and Cohen's d = −0.182, p = 0.0183 for consultation). Interactions between the two treatments are positive and comparable to the direct effects, but significant only at the 10% level for the coffee scenario (Cohen's d = 0.139, p = 0.0710) and at the 5% level for the election scenario (Cohen's d = 0.174, p = 0.0239). To summarize, in the election and coffee scenarios, we found a reduction in acceptability ratings for both positive frame and consultation, but those effects do not sum up. We found no significant effects for the hotel and company scenarios.
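To see why two negative main effects and a positive interaction "do not sum up", it helps to recall how such a model is read. Assuming the models without controls contain two 0/1 dummies (positive frame, consultation) and their product, the OLS coefficients are exactly differences of cell means. The sketch below uses invented numbers, not the study's data, and the function name `saturated_ols` is hypothetical.

```python
from statistics import mean

def saturated_ols(cells):
    """cells maps (positive_frame, consultation) in {0,1} x {0,1} to index values."""
    m = {key: mean(values) for key, values in cells.items()}
    intercept = m[(0, 0)]                       # negative frame, no consultation
    b_frame = m[(1, 0)] - m[(0, 0)]             # frame effect without consultation
    b_consult = m[(0, 1)] - m[(0, 0)]           # consultation effect, negative frame
    b_inter = m[(1, 1)] - m[(1, 0)] - m[(0, 1)] + m[(0, 0)]
    return intercept, b_frame, b_consult, b_inter

# Hypothetical acceptability indexes, NOT the study's data.
toy = {
    (0, 0): [4.5, 4.0, 4.1],
    (1, 0): [3.9, 3.8, 4.0],
    (0, 1): [3.8, 3.7, 3.9],
    (1, 1): [3.9, 3.8, 3.7],
}
b0, b1, b2, b3 = saturated_ols(toy)
both = b0 + b1 + b2 + b3   # fitted mean when both treatments are present
print(round(b1, 2), round(b2, 2), round(b3, 2), round(both, 2))
```

In this toy case each treatment lowers the index on its own, but the positive interaction offsets part of the combined drop, so the cell with both treatments is not lower than the cell with consultation alone.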

Introducing control variables allows us to assess the robustness of these results. In the coffee scenario, the significance of framing (Cohen's d = −0.205, p = 0.0111) and consultation (Cohen's d = −0.252, p = 0.0019) is unchanged and the interaction term becomes significant at the 5% level (Cohen's d = 0.173, p = 0.0312). In the election scenario, the effect of a consultation remains significant at the 5% level (Cohen's d = −0.163, p = 0.0425) and the effect of framing is now significant only at the 10% level (Cohen's d = −0.135, p = 0.1381), but the interaction effect is no longer statistically significant (p = 0.1050). For the company scenario, we found a significant positive interaction effect (Cohen's d = 0.190, p = 0.0185) and a negative effect of the positive frame, significant at the 10% level (Cohen's d = −0.146, p = 0.0690). Overall, these results are mixed and do not clearly support our hypotheses, since an effect of the framing has been detected in only half of the scenarios, and since an effect of the consultation has been detected in half of the scenarios but in the direction opposite to the one hypothesized.

Exploratory results

Besides the reported confirmatory results, this experiment allows us to investigate to what extent the individuals’ characteristics are predictors of the acceptability of the nudges. At the time of the study, we did not formulate precise hypotheses on this point and we thus present these results as exploratory. We observed that in the coffee scenario, participants who identified as men found the nudge less acceptable than those who identified as women (Cohen's d = −0.171, p = 0.0334). Individuals with more right-wing political opinions found the hotel and company scenarios more acceptable (Cohen's d = 0.218, p = 0.007 for the hotel scenario and Cohen's d = 0.326, p < 0.001 for the company scenario). Older participants judged the election scenario less acceptable (Cohen's d = −0.197, p = 0.0145), but judged the company scenario more acceptable than their younger counterparts (Cohen's d = 0.161, p = 0.0445). We found no significant effect of the level of education or of the order in which the scenarios were presented.

Discussion

We tested whether people's acceptability judgements about nudges would be influenced by the framing of both their purpose and effectiveness and positively influenced by mentioning that their implementation was made through a consultation with representatives of the targeted population. Four different nudge scenarios were used to assess the robustness of these potential influences.

We did not find general support for these two hypotheses. More precisely, we found no support for either hypothesis in two nudge scenarios: the nudge through social norm in the hotel scenario and the nudge through salient information in the company scenario. Besides traditional explanations linked with insufficient sample sizes or failure of the experimental manipulation, preventing the detection of the effects, we offer the following speculative explanation for a lack of such effects. Notice that these two scenarios are the only two that involve a private organization. One could argue that because there is a clear purpose behind most decisions implemented by private organizations, namely a profit motive, people are less inclined to infer tacit information from the way information is communicated, that is, from the framing of the purpose and effectiveness of the nudge, so that such framing does not impact their acceptability judgements. As for a lack of effect on acceptability of the mention of a consultation, this could be explained, in line with the ideals of deliberative democratic theory, by the fact that it is participation itself in the consultation (i.e., not its mere mention) that is a transformative experience which then impacts individuals’ judgements (see Rosenberg, 2007).

For the remaining two nudge scenarios (the nudge through a change in the default option in the coffee scenario and the nudge through an SMS reminder in the election scenario), we found evidence that presenting both the purpose and the effectiveness of the nudge in terms of a decrease in undesirable behaviour (in sugar consumption or in abstention) had a positive impact on acceptability ratings (significantly so in the coffee scenario and weakly significantly so in the election scenario). These results are in line with the seminal results of Meyerowitz and Chaiken (1987) that health prevention campaigns that highlight the bad consequences of inaction tend to be more effective than the ones that highlight the good consequences of undertaking health-improving action. This tendency is traditionally explained by loss aversion (information about undesirable consequences is represented as losses and therefore has more impact than information about desirable consequences, which is represented as gains; see Drouin et al., 2018, p. 215 for a concise discussion). [Footnote 8]

We also found (still in these same two cases) that mentioning the presence of a consultation with the targeted population in the decision process to implement the nudge had a negative impact on acceptability ratings (again, significantly so in the coffee scenario and weakly significantly so in the election scenario). This is a rather surprising result that plainly contradicts our first hypothesis (that mentioning a consultation would increase acceptability). We can speculate, based on insights from contributions on deliberative democratic processes, that people who initially judge these nudges to not be very acceptable polarize their judgement if they learn that the nudges were implemented through a consultation. People who do not necessarily care for these nudges might be annoyed by the deployment of what they might consider to be ‘meaningless consultation’ (John, 2018, p. 125) or processes that ‘place a high burden on citizens in terms of their time’ (John, 2018, p. 126) for relatively unimportant stakes. Or it can be that the mention of the consultation led people to not only judge the acceptability of the nudges but also devalue the contradictory judgements of those who participated in the consultation (Rosenberg, 2007, p. 343). In any case, this reduction in acceptability ratings due to the mention of a consultation with representatives of the targeted population clearly calls for further studies. [Footnote 9]

Concerning our exploratory results, we found that women judged the coffee scenario more acceptable than men (we did not observe gender differences for the other scenarios). This is in line with standard results supporting a higher concern for health in women compared to men (Bertakis et al., 2000). We also found that older participants judged the election scenario to be less acceptable than their younger counterparts. We can cynically speculate that older people might be more disillusioned by democratic participation than younger people (who have had less opportunity to be disappointed by politicians). Finally, we found that participants with more right-wing political opinions judged the company scenario to be more acceptable. Previous studies have shown that the political opinions of people positively influence their acceptability judgements about nudges when the nudger and/or the political valence of the nudge is congruent with these political opinions (Tannenbaum et al., 2017). For instance, the Bush Administration or nudges that simplify the procedures to obtain tax breaks for high-income individuals are congruent with conservative political opinions (for a general discussion of these results, see Sunstein & Reisch, 2019, chap. 3). Arguably, of our four nudges, the one in the company scenario has both the nudger (the manager of the company) and the political valence of the nudge (increased productivity at work) that are the most congruent with more right-wing political opinions. This result is also congruent with previous ones showing that nudges implemented by businesses are found to be more acceptable by people with right-wing political opinions than they are by people with left-wing opinions (see, e.g., Cadario & Chandon, 2019).

To conclude, we found that acceptability ratings of nudges can be increased by describing their purpose and effectiveness in terms of the reduction of undesirable behaviour (i.e., by a joint negative framing) and can be decreased by mentioning that their implementations were made through a consultation with representatives of the targeted population. We found evidence for these effects only for nudges implemented by a public organization – there is no such evidence for nudges implemented by a private organization. Nevertheless, that acceptability ratings can be lowered by mentioning that a nudge was implemented through a consultation with representatives of the targeted population is a surprising result that calls for further work.

Supplementary material

To view supplementary material for this article, please visit https://doi.org/10.1017/bpp.2022.13.

Acknowledgements

This article reports the results of an experiment conducted during Arthur Ribaillier's master's thesis, entitled ‘L'acceptabilité des nudges digitaux,’ under Ismaël Rafaï's supervision. The authors thank the LAPCOS (Université Côte d'Azur) for technical support, and Isabelle Milhabet, Daniel Priolo, Pierre Therouane, Guilhem Lecouteux, Agnès Féstré, and Mira Toumi for their valuable comments. We thank Yvonne van der Does for proofreading this article.

Funding

The authors have not received any particular financial support.

Conflict of interest

The authors report no conflict of interest.