Highlights

• Greater language experience predicts higher accuracy and reduced listening effort.
• Language experience effects vary with stimulus demands and listening conditions.
• Language experience shrinks quiet–noise perceptual gaps in sentence listening.
• More effort is needed to minimize quiet–noise perceptual gaps in sentence listening.

1. Introduction

The definition of multilingualism has shifted from a narrow view, in which bilinguals were defined as individuals with native-like proficiency in two languages (Bloomfield, 1933), to a more comprehensive perspective that treats it as a continuous, heterogeneous phenomenon (Antoniou et al., 2023; Berthele, 2021; Cowan et al., 2022; DeLuca et al., 2019; Di Pisa et al., 2021; Gullifer et al., 2021; Gullifer & Titone, 2020; Luk, 2023; Luk & Bialystok, 2013; Marian & Hayakawa, 2021; Surrain & Luk, 2019; Titone & Tiv, 2023). This view acknowledges the existence of multiple sources of variability in the language experience of multilinguals. While previous studies have explored the effects of individual aspects of variability in language experience on perceptual performance, the overall impact of language experience, which encompasses multiple interacting factors, has not been thoroughly examined. Most importantly, its influence on listening effort remains unclear. Our study addresses this gap by investigating how variations in language experience affect perceptual performance and listening effort in multilinguals, examining the nuances of these relationships across different listening conditions (quiet and noise) and types of stimuli (words and sentences with low and high predictability).

1.1. Challenges and variability in multilingual perceptual accuracy

Research on speech perception underscores multilinguals’ challenges in noisy environments, particularly when processing speech stimuli (Bsharat-Maalouf & Karawani, 2022a, 2022b; Garcia Lecumberri et al., 2010; Krizman et al., 2017; Skoe & Karayanidi, 2019). Several explanations have been proposed for these challenges. One is that multilinguals may have less precise phonetic representations, which can hinder their lexical access (see Cutler et al., 2008; Mattys et al., 2010). From this perspective, incomplete or inaccurate phonetic representations can disrupt lexical access and syntactic integration, ultimately hindering speech perception.

Another explanation focuses on the co-activation of multiple languages during processing. Multilinguals activate words from all their known languages simultaneously. This creates competition among candidate words and makes it more complex to match the auditory signal to the appropriate linguistic representation, which contributes to their perceptual difficulties (Blumenfeld & Marian, 2013; Bobb et al., 2020; Bsharat-Maalouf & Karawani, 2022b; Chen et al., 2017; Marian & Spivey, 2003; Schwartz & Kroll, 2006; Shook & Marian, 2012, 2013; Weber & Cutler, 2004).

A third explanation attributes multilinguals’ perceptual challenges to higher-level processing limitations due to incomplete lexical-semantic knowledge (Bradlow & Alexander, 2007; Cutler et al., 2004; Mayo et al., 1997; Skoe & Karayanidi, 2019). Unlike monolinguals, who rely on extensive exposure to their native language patterns, multilinguals often lack sufficient experience in each of their languages. This limits their ability to use higher-level information to compensate for noise-related disruptions (Cutler et al., 2008).

Several studies on speech perception support this third explanation (e.g., Bsharat-Maalouf & Karawani, 2022b; Krizman et al., 2017; Mayo et al., 1997). For example, Bsharat-Maalouf and Karawani (2022b) showed that multilinguals’ perceptual difficulties are particularly pronounced when processing sentences that rely on lexical-semantic knowledge (e.g., high-predictability sentences) but less so in word-processing tasks. This aligns with Mayo et al. (1997), who found that multilinguals benefit less from predictability cues than monolinguals in noisy sentence tasks. Importantly, their study highlighted the influence of multilingual language experience, showing that early bilinguals benefited more from predictability cues than late bilinguals, emphasizing the importance of language experience in utilizing higher-level linguistic knowledge.

Although many previous studies have explored how variability in multilingual language experience affects perceptual performance (see Cowan et al., 2022), they often focused on isolated factors, such as age of acquisition (AoA), proficiency, exposure or frequency of use, overlooking how these factors interact and jointly influence perceptual outcomes (Luk & Bialystok, 2013). Thus, one goal of this study is to address this gap by conceptualizing language experience as a unified construct that combines multiple factors, including AoA, language proficiency, exposure time and language use demand. More importantly, this study extends the investigation beyond speech perception alone. Specifically, we aim to explore how variability in language experience impacts multilingual listening effort – a question that, to date, has rarely been explored.

1.2. Multilingual listening effort

Listening effort refers to the deliberate allocation of cognitive resources to overcome obstacles during a listening task (Pichora-Fuller et al., 2016). It serves as an indicator of the ease with which the language understanding process occurs (refer to the ease of language understanding model; Rönnberg et al., 2008, 2013, 2019, 2021, 2022).

Investigating the effect of variability in language experience on listening effort is particularly relevant because listening effort can differ significantly from speech perception (Winn & Teece, 2021). Critically, previous studies have suggested that listeners can expend additional effort even when perceptual performance remains stable or unaffected (Borghini & Hazan, 2018, 2020; Koelewijn et al., 2012; McLaughlin et al., 2022; McLaughlin & Van Engen, 2020; Ohlenforst et al., 2018; Strand et al., 2020a; Wendt et al., 2017; Winn et al., 2015; Winn & Teece, 2021). For example, Borghini and Hazan (2020) found that although multilinguals can achieve perceptual accuracy in their second language (L2) similar to that of monolinguals, they exert significantly more listening effort. This finding suggests that maintaining perceptual accuracy in a less dominant language often involves resource-driven strategies, even when processing might appear automatic at a surface level.

Recently, there has been growing interest in the role of listening effort among multilingual listeners (see Bsharat-Maalouf et al., 2023, for review; see also Schmidtke et al., 2025). However, current research predominantly focuses on between-participant comparisons, specifically contrasting bilinguals using their L2 with monolinguals using their first language (L1) (e.g., Borghini & Hazan, 2018, 2020; Brännström et al., 2021; Lam et al., 2018; Peng & Wang, 2019; Schmidtke, 2014; Visentin et al., 2019). While these studies provide valuable insights into the increased listening effort that multilinguals experience, they also have significant limitations. Most importantly, in such between-participant comparative studies, the effect of variability in the language experience profile of multilinguals is frequently overlooked because multilingual participants are often treated as a homogeneous group. This approach ignores key differences within these diverse groups and obscures the impact that varying bilingual profiles can have on outcomes. Additionally, recent studies by Bsharat-Maalouf et al. (2023), De Houwer (2023), and Rothman et al. (2023) highlight other shortcomings in between-participant comparative research, suggesting a need for more nuanced investigations. They point to the global prevalence of multilingualism, cautioning that the comparison groups in many studies, often classified as monolinguals, may include individuals with bilingual experience (De Houwer, 2023; Prior & van Hell, 2021; Rothman et al., 2023). Acknowledging such limitations, in this study, we depart from the traditional separation of participants into distinct groups. Instead, we adopt a novel approach (as suggested by Rothman et al., 2023), treating multilinguals as one large heterogeneous sample without separating them into specific groups. This approach encourages treating multilingualism as a continuous variable (Luk & Bialystok, 2013) and hypothesizes that variability within the language experience profile of multilinguals may ultimately predict outcomes irrespective of multilingual status (Rothman et al., 2023).

To date, the question of how language experience variability affects multilingual listening effort has been addressed primarily in the studies by Schmidtke (2014), Francis et al. (2018), and Borghini and Hazan (2018, 2020). Results, however, have been inconsistent. Schmidtke (2014) examined the association between variability in language proficiency and listening effort among Spanish (L1)–English (L2) bilinguals during the perception of English single words presented in a quiet condition. The results showed that bilinguals with higher English proficiency had an earlier pupil response than those with lower language proficiency, suggesting faster cognitive processing and lower effort (Hyönä et al., 1995; Zekveld et al., 2011). This pattern of findings was not replicated in the studies by Francis et al. (2018) and Borghini and Hazan (2018, 2020), which investigated a sentence listening task presented in noise. In particular, in Francis et al. (2018), increased pupil dilation, indicating increased effort, was observed among Dutch (L1)–English (L2) bilinguals with higher English proficiency. However, this correlation was observed in only a few of the experimental conditions examined, prompting the authors to highlight the need for further studies in the field. Similarly, in Borghini and Hazan’s (2018, 2020) studies with Italian (L1)–English (L2) bilinguals, none of the predictors related to the language experience profile (length of residence in an English environment, overall English usage or English proficiency) predicted pupil response. Nonetheless, Borghini and Hazan (2018, 2020) acknowledged that this observation does not entirely negate a potential relationship, attributing this finding to methodological factors. For instance, the absence of objective proficiency tests in their earlier study (Borghini & Hazan, 2018) raised concerns about biases in self-reported measures. Further, in their more recent study (Borghini & Hazan, 2020), the authors suggested that presenting the task at less favorable signal-to-noise ratios (SNRs) for highly proficient bilinguals than for their less proficient counterparts might have introduced confounding effects.

Given the disparity in the findings of these studies, the current study aims to delve deeper into how variability in language experience influences multilingual listening effort. Unlike the above research, which predominantly investigated this association within a relatively homogeneous population of multilingual individuals, the current study treats different multilingual speakers (including Arabic–Hebrew–English trilinguals and Hebrew–English bilinguals) as one cohesive group. Additionally, unlike prior investigations (Borghini & Hazan, 2018, 2020) that primarily focused on the association within the L2 (the less dominant, later acquired language), we examine all languages spoken by the participants (three languages for trilinguals [Arabic, Hebrew and English] and two languages for bilinguals [Hebrew and English]), regardless of language order or dominance. This comprehensive approach expands our understanding of how language experience variability shapes listening effort, offering insights that extend beyond specific language contexts and apply more broadly, even beyond multilingual contexts.

Moreover, unlike previous studies, which examined the association between variability in language experience and listening effort in either quiet or noisy conditions, and limited stimuli to single words or sentences, the current research takes a broader approach. Here, the association is examined in quiet and noisy listening conditions while incorporating single words and sentences with different predictability levels as the presented stimuli. Including both listening conditions is essential to understand whether variability in language experience affects listening effort solely under adverse conditions or if this effect persists in quiet listening conditions, where perceptual accuracy is assumed to be at ceiling and performance may be supported by more automatic, surface-level processing.

Because we test different types of stimuli in the same study, we can examine how variability in language experience affects listening effort at different levels of linguistic processing. Single words serve as a baseline, limiting the influence of higher-level linguistic elements, such as sentence semantics and syntax, allowing us to focus on processing with minimal involvement of higher-order factors. In contrast, sentences – particularly those with high predictability – depend more on lexical-semantic knowledge, which is essential for forming realistic expectations that facilitate listening. Thus, by comparing listening effort across sentences of high and low predictability, we aim to assess whether variability in language experience enhances the ability to utilize these lexical-semantic cues effectively to reduce listening effort.

Lastly, in our study, we examine the effects of variability in language experience, accounting for often-overlooked demographic factors, including socioeconomic status (SES) and cognitive abilities (see Cowan et al., 2022; Gathercole et al., 2016; Shokuhifar et al., 2025). To ensure that language experience is the primary focus, we included data on SES (assessing maternal education level) and cognitive abilities (measuring working memory span and executive control) as covariates in our analyses. This helps distinguish the effects of language experience from other demographic influences. Additionally, to address the limitations raised by Borghini and Hazan (2018, 2020), we collected data regarding participants’ language experience using both subjective and objective measures. Moreover, in the noise condition, all participants were tested using the same fixed SNR to maintain consistency in the listening conditions across the entire sample.

In summary, the current study aims to (1) examine the relationships between variability in language experience and multilingual perceptual performance and listening effort, focusing on the overall impact of this variability rather than isolating specific factors, and (2) understand the nuances of these relationships across different listening conditions (quiet and noise) and stimulus types (words and low- and high-predictability sentences).

Building on the reviewed literature, we hypothesize that greater language experience (i.e., earlier AoA, higher proficiency and greater use and exposure) will be associated with improved perceptual accuracy and reduced listening effort. This hypothesis is grounded in the assumption that extensive language experience will contribute to more robust linguistic representations, reduce cross-language interference and facilitate more efficient use of contextual cues during processing. We also expect that the effect of language experience will be modulated by both the listening condition and the linguistic demands of the stimulus. Specifically, for single words, where contextual cues are minimal, we predict that the effect of language experience on listening effort will be more pronounced in noisy conditions, such that greater experience will help compensate for the acoustic degradation. For sentences, we expect that language experience will have a stronger impact on high-predictability sentences than on low-predictability ones, suggesting that experience enhances the ability to exploit top-down contextual information when it is available.

To address the above objectives, data from Bsharat-Maalouf et al. (2025) were reanalyzed. The original study utilized pupillometry to measure listening effort in multilinguals during listening tasks and treated language experience dichotomously, focusing on comparisons between L1 and L2. In contrast, the current study adopts a continuous framework to examine how variability in language experience shapes listening effort.

The participant sample from Bsharat-Maalouf et al. (2025) was retained and expanded to include Hebrew (L1)–English (L2) bilinguals, thereby broadening the range of language experiences analyzed. Furthermore, listening effort in the third language (L3) of Arabic–Hebrew multilinguals was incorporated into the analyses – an aspect not examined in the original study – further enriching the diversity of language experiences represented.

Each participant completed listening tasks involving single words and sentences with varying predictability in their spoken languages, presented in quiet and noisy conditions. Perceptual accuracy was measured alongside detailed data on language experience profiles, collected using a combination of subjective self-reports and objective assessments. This comprehensive approach enabled an in-depth exploration of how variability in language experience influences perceptual accuracy and listening effort across diverse listening conditions and linguistic stimuli.

2. Methods

2.1. Participants

Ninety-two young adult university students (M age = 24.836, SD = 4.560 years, 73 females, 19 males) participated in the study. Data from four additional participants were excluded because they did not complete the entire experimental protocol.

The sample included multilingual participants, of whom 47 were Hebrew–English bilinguals, with Hebrew as their L1 and English as their L2, and 45 were Arabic–Hebrew–English trilingual speakers, with Arabic as their L1, Hebrew as their L2 and English as their L3.

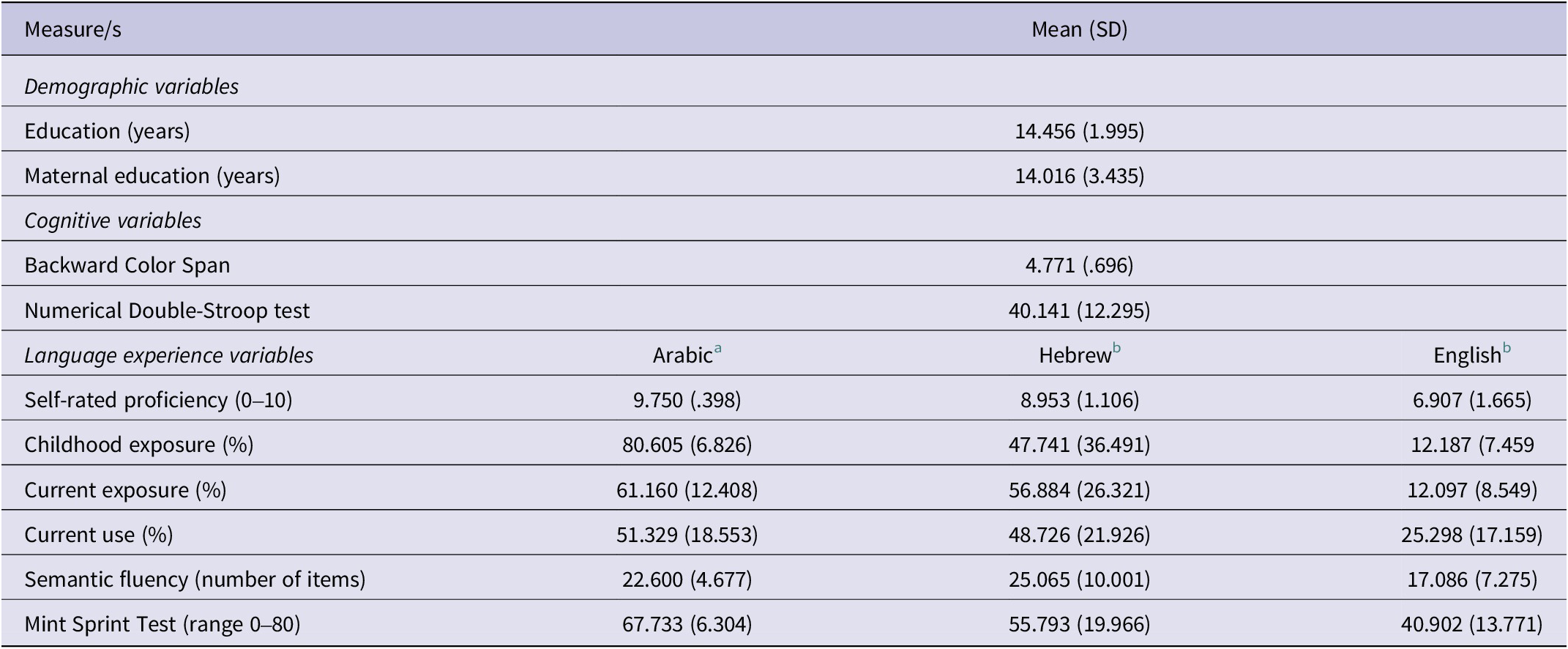

These multilingual participants acquired their L1 from birth, while the additional language(s) (L2 in bilinguals and L2 and L3 in trilinguals) were acquired later in childhood through formal instruction during elementary school years. Proficiency levels varied across languages and individuals, but critically, these were evaluated using both subjective and objective measures. Comprehensive demographic information, as well as information regarding participants’ cognitive abilities (working memory span and executive control abilities), was collected (refer to Section 2.3). Descriptive data for the entire sample are summarized in Table 1. A detailed group-wise breakdown comparing trilingual and bilingual participants is provided in Supplementary Table S1, with additional information on the trilingual group available in Bsharat-Maalouf et al. (2025).

Table 1. Participant characteristics (N = 92)

Note. Mean values and SDs (in parentheses). Proficiency ratings were averaged across speaking, reading, speech comprehension and writing. Exposure percentages cover diverse contexts (work, university, friends, family and free time) and use percentages average across activities (speaking, reading, writing, social media, music listening and TV watching).

a Language experience variables associated with the Arabic language are exclusively drawn from the trilingual subset (their L1).

b Language experience factors related to Hebrew and English encompass data from both trilinguals and bilinguals combined. In this combined analysis, Hebrew served as the L2 for trilinguals and the L1 for bilinguals, while English served as the L3 for trilinguals and the L2 for bilinguals. For a group-wise breakdown (trilingual vs. bilingual), refer to Supplementary Table S1.

Participants reported no cognitive or neural disorders, cataracts or hearing impairments. They refrained from using drugs or medications before the experiment and had either normal vision or corrected-to-normal vision. They further affirmed the absence of any history relating to language or learning disabilities. Participants were recruited through advertisements on social media on campus, provided their written informed consent following the university’s ethics committee guidelines and received either course credit or monetary compensation for their time.

2.2. Experiment overview

As reported by Bsharat-Maalouf et al. (2025), participants’ perceptual performance and the effort associated with listening to speech stimuli were examined during a listening task, which included the presentation of words and sentences in participants’ respective spoken languages. Thus, trilinguals were examined in listening tasks presented in Arabic (L1), Hebrew (L2) and English (L3), and bilinguals were examined in Hebrew (L1) and English (L2). All participants completed the listening tasks in their L1 and L2 during a single experimental session, with language administration order counterbalanced across participants. Trilinguals completed the L3 listening task in a separate session, on average 2.56 days (SD = 1.73) apart from the first session. The order of language administration was tested and showed no significant effect (p > .65).

We collected data regarding participants’ language experience using both subjective and objective measures. A detailed language history questionnaire (Multilingual Language Background Questionnaire; Abbas et al., 2024) was completed at the end of the experimental protocol. Two objective proficiency tests, a semantic fluency test (Gollan et al., 2002; Kavé, 2005) and a picture-naming test (Multilingual Naming Test [MINT] Sprint; Garcia & Gollan, 2022), were administered in the participants’ respective languages. The objective tests in each language were administered directly after the listening task in that language was completed. In addition, participants completed two cognitive tests: a working memory test (Backward Color Span, adapted from Riches, 2012) and a cognitive control task (Numerical Double-Stroop, adapted from Draheim et al., 2021), administered in a randomized order after the experimental session(s).

On average, each language’s listening task took approximately 1 hour to complete, and the entire protocol lasted 3 hours and 25 minutes for trilinguals and 2 hours and 25 minutes for bilinguals.

2.3. Materials for individual differences

2.3.1. Language history questionnaire

We collected demographic information about the participants and their language experience background via a language history questionnaire – a modified version of the validated Language Experience and Proficiency Questionnaire (Marian et al., 2007). The initial section of the questionnaire covered basic demographic details such as age, gender, years of education and maternal education. The second section of the questionnaire included questions focusing on language learning history, encompassing AoA and learning contexts. Additionally, participants reported proficiency levels, rated on a scale from 0 to 10, in reading, writing, speaking and speech comprehension. They also reported childhood and current exposure percentages, along with current use percentages across diverse contexts and activities. A complete computerized questionnaire version can be accessed at https://osf.io/preprints/psyarxiv/jfk8b (Abbas et al., 2024). Descriptive statistics outlining participants’ demographic and language experience profiles can be found in Table 1 and Supplementary Table S1.

2.3.2. Objective proficiency tests

To enhance the accuracy of language proficiency assessment, acknowledging potential pitfalls in self-reported measures (Tomoschuk et al., 2019; Zell & Krizan, 2014), we included two objective proficiency tests: the Semantic Fluency test (Gollan et al., 2002; Kavé, 2005) and the MINT Sprint test (Garcia & Gollan, 2022). In the Semantic Fluency test, participants were presented with two semantic categories within each language and asked to produce as many exemplars as possible in 60 seconds per category. To obtain a single semantic fluency score for each participant in each language, we summed the produced exemplars across both categories. In the MINT Sprint test (Garcia & Gollan, 2022), participants were asked to name 80 pictures in two rounds: the first was constrained by a time limit (3 minutes), and the second was without restrictions, allowing participants to name any skipped pictures. The total picture naming score was calculated by summing words produced in both rounds (ranging from 0 to 80; see Table 1). Trilinguals underwent the objective proficiency tests in their L1, L2 and L3, while bilinguals were assessed in their L1 and L2.
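The two scoring rules described above can be sketched in a few lines of Python. This is only an illustration, not the authors' scoring script: the function names and example counts are hypothetical, and the sketch assumes that the second MINT Sprint round adds only pictures skipped in the first, so that the total cannot exceed 80.

```python
MINT_ITEMS = 80  # number of pictures in the MINT Sprint, as stated in the text


def semantic_fluency_score(exemplars_per_category):
    """Sum the exemplars produced across the two semantic categories."""
    if len(exemplars_per_category) != 2:
        raise ValueError("expected counts for exactly two categories")
    return sum(exemplars_per_category)


def mint_sprint_score(named_round1, named_round2):
    """Sum pictures named in the timed round and the untimed round.

    Assumption: round 2 only revisits pictures skipped in round 1,
    so the total stays within 0..MINT_ITEMS.
    """
    total = named_round1 + named_round2
    if not 0 <= total <= MINT_ITEMS:
        raise ValueError("total must lie between 0 and 80")
    return total


# Hypothetical participant, one language
fluency = semantic_fluency_score([18, 21])  # 39
naming = mint_sprint_score(70, 6)           # 76
```

One score per participant per language results from each function, which matches the per-language administration described above.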

2.3.3. Cognitive tests

We evaluated participants’ cognitive abilities through non-verbal cognitive tasks, ensuring a language-independent evaluation (see Messer et al., 2010; Yoo & Kaushanskaya, 2012, for more information regarding the effect of linguistic knowledge on cognitive measures). As a measure of working memory span, we administered the Backward Color Span test (adapted from Riches, 2012). In this test, participants were presented with sequentially displayed circles of various colors on a computer screen. Using a computer mouse, they were instructed to click the colors in reverse order. Each sequence length (ranging from 2 to 7 colors) was tested in three trials; participants who solved at least two trials correctly advanced to the next length, with the span increasing by one color. The task ended when two or more errors were made at a given sequence length. The participant’s final score was determined by the highest sequence length reached (see Table 1).
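The stopping rule of the span task can be expressed as a short Python sketch. It is a hedged illustration rather than the task software: the function names and data layout are hypothetical, and "highest sequence length reached" is read literally here as the last length that was attempted, which is an assumption about the scoring.

```python
def is_correct(presented, response):
    """A trial is correct when the colors are reproduced in reverse order."""
    return list(response) == list(reversed(presented))


def backward_color_span(results, min_len=2, max_len=7):
    """Return the highest sequence length reached.

    `results` maps a sequence length to the outcomes of its three trials
    (True = correct). Assumption: the score is the last length attempted,
    including the length at which the task ended.
    """
    reached = None
    for length in range(min_len, max_len + 1):
        trials = results.get(length)
        if trials is None:  # task ended before this length was presented
            break
        reached = length
        errors = sum(1 for ok in trials if not ok)
        if errors >= 2:  # two or more errors at this length end the task
            break
    return reached


# Hypothetical participant: passes lengths 2 and 3, fails at length 4
example = {2: [True, True, True], 3: [True, False, True], 4: [False, False, True]}
span = backward_color_span(example)  # 4
```

With three trials per length, "at least two correct" and "fewer than two errors" are the same condition, so a single error count is enough to decide whether the participant advances.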

In addition, the Numerical Double-Stroop test (adapted from Draheim et al., 2021), testing executive control abilities, was administered. This test required participants to make comparative judgments about number sequences, considering two dimensions: number of digits and numerical value. Specifically, participants were presented with three sequences of numbers on the computer screen, one at the top and two at the bottom, and they were required to choose the bottom sequence whose digit value matched the number of digits in the upper sequence (while ignoring the upper sequence’s numerical value). For instance, if the top sequence was “222”, and the bottom sequences were “33” and “222”, the correct choice was “33” because the value of its digits (3) matched the number of digits in the upper sequence. Participants had 90 seconds to provide as many correct answers as possible, and the final score was the number of correctly solved trials. Practice trials preceded both cognitive tests to ensure that participants understood the tasks.
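The response rule for a single trial can be illustrated with a minimal Python sketch. The function names and the trial format are hypothetical, and the sketch assumes that every sequence consists of one repeated digit, as in the example given in the text.

```python
def correct_choice(top, bottoms):
    """Pick the bottom sequence whose digit value equals the number of
    digits in the top sequence (the top sequence's own value is ignored).

    Assumption: each sequence is a single digit repeated, e.g. "222".
    """
    target = str(len(top))
    matches = [b for b in bottoms if set(b) == {target}]
    if len(matches) != 1:
        raise ValueError("expected exactly one matching bottom sequence")
    return matches[0]


def double_stroop_score(trials):
    """Count correctly solved trials.

    Each trial is a (top, bottoms, chosen) tuple; only trials answered
    within the 90-second window are assumed to be passed in.
    """
    return sum(1 for top, bottoms, chosen in trials
               if chosen == correct_choice(top, bottoms))


# The example from the text: top "222", bottom options "33" and "222"
answer = correct_choice("222", ("33", "222"))  # "33"
```

The distractor "222" repeats the top sequence exactly, which is what makes the trial incongruent: the tempting match on appearance is the wrong answer.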

2.4. Materials for the listening task

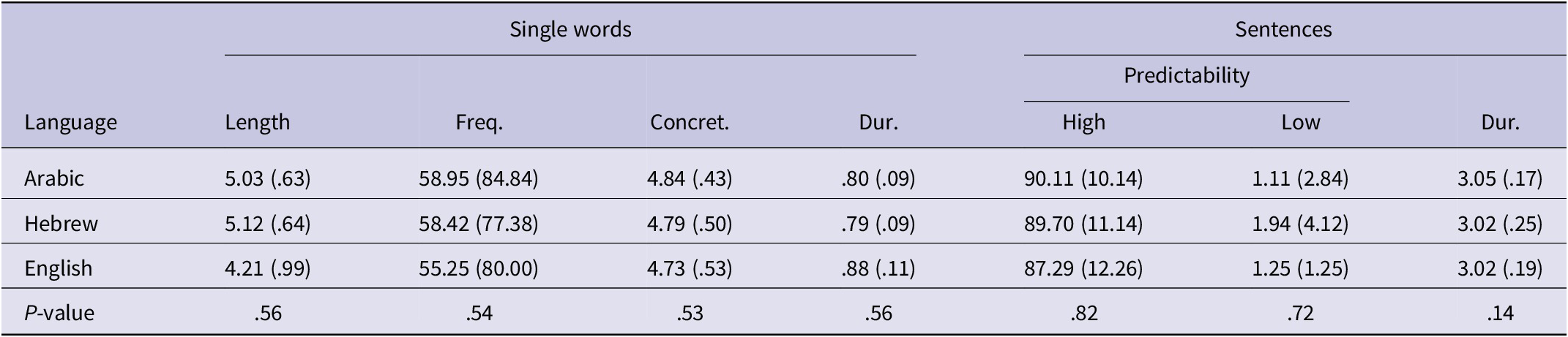

Words and sentences in Arabic, Hebrew and English were used in the current study. The stimuli for Arabic and Hebrew were developed by the authors for the study, as reported in Bsharat-Maalouf et al. (2025). English stimuli were initially selected from Kalikow et al. (1977), Bradlow and Alexander (2007) and Fallon et al. (2002). However, some English sentences were adapted to align with the structure and length of those in Arabic and Hebrew. Following these modifications, we ensured that stimuli across the three languages were matched using several norming studies (see Bsharat-Maalouf et al., 2025). The general procedure for matching stimuli is summarized below and in Table 2.

Table 2. Single words and sentence characteristics in Arabic, Hebrew and English

Note. SDs in parentheses. Single-word length represents the number of phonemes; Frequency (Freq.) is given in counts per million (extracted from Sketch Engine; Kilgarriff et al., 2014); Concreteness (Concret.) is rated on a scale of 1 (low) to 5 (high) based on a norming study. Duration (Dur.) represents the duration of the recorded stimuli, in seconds. All sentences consist of six content words, with high and low predictability determined through a norming study. For details on the norming procedures, refer to Bsharat-Maalouf et al. (2025). No significant differences were observed between languages on any measure (p > .14).

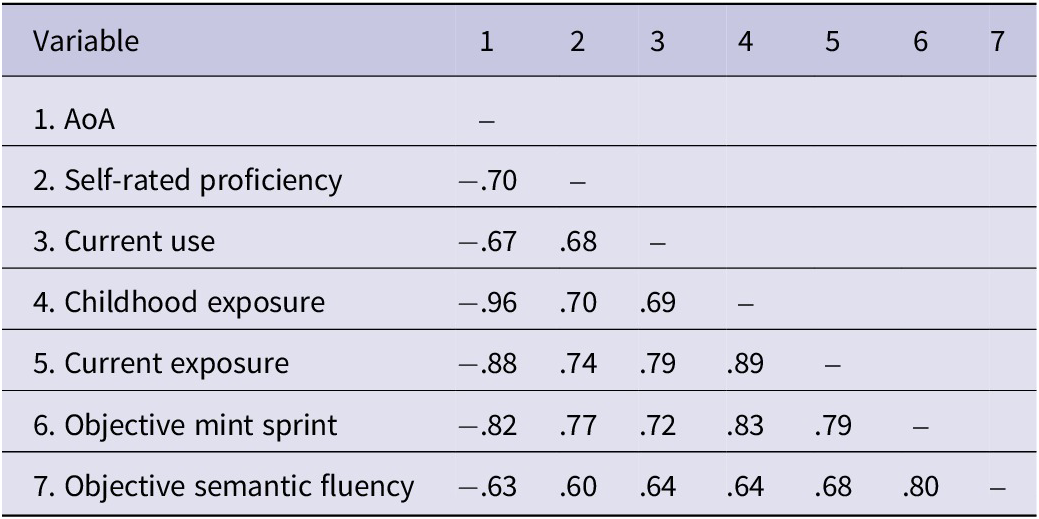

Table 3. Correlations among predictors related to language experience

Note. All correlations were significant at p < .001. Correlations are calculated over a total of 229 observations: (45 trilinguals × 3 languages) + (47 bilinguals × 2 languages).

Words across languages were not translation equivalents and did not overlap in form (i.e., were not cognates or false cognates). A norming study was conducted to allow the matching of words across languages in terms of length, frequency and concreteness (see Table 2). Specifically, we ensured that words in all three languages were matched in length (number of pronounced phonemes) and frequency (based on counts per million extracted from Sketch Engine; Kilgarriff et al., 2014). Concreteness norms were based on ratings of trilinguals, who rated the concreteness of Arabic, Hebrew and English words, and bilinguals, who rated the concreteness of Hebrew and English words, using a 5-point scale (1 = not concrete at all to 5 = very concrete).

Additionally, separate norming studies were conducted with the trilingual population to ensure familiarity (i.e., the ability to provide translation in the native language) with all Hebrew and English stimuli and with the bilingual population to ensure familiarity with the English stimuli. Participants in the norming studies did not take part in the main experiment.

Each word in the study was incorporated into a sentence. All sentences were syntactically correct and plausible, maintaining a consistent structure of six content words per sentence. The sentence set contained both high- and low-predictability sentences, with predictability assessed for each language through a norming study (see details in Bsharat-Maalouf et al., 2025). Briefly, in this norming study, participants were presented with sentences in each language that ended with a blank and were asked to complete the sentence with the first word that came to mind. Sentences were revised to meet predictability criteria: high-predictability sentences required at least 60% of participants to select the target word, while low-predictability sentences were revised if more than 10% chose the target word. Native Arabic speakers normed Arabic sentences, while Arabic and Hebrew speakers normed Hebrew and English sentences. Predictability levels were carefully balanced across languages to ensure cross-language comparability. The final list of stimuli is available at https://osf.io/zkvu3. No significant differences were observed across language stimuli (p > .14; see Table 2).
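The predictability criteria amount to thresholds on the proportion of norming participants who completed a sentence with the target word. The following Python sketch illustrates that check; the function names and the case-insensitive exact matching of completions are assumptions made for illustration, not a description of the authors' norming procedure.

```python
def target_proportion(completions, target):
    """Proportion of norming participants who completed the sentence with the target word."""
    normalized = [c.strip().lower() for c in completions]
    return normalized.count(target.strip().lower()) / len(normalized)


def meets_criterion(completions, target, intended):
    """Check a sentence against the stated thresholds (>= 60% for high, <= 10% for low)."""
    p = target_proportion(completions, target)
    if intended == "high":
        return p >= 0.60
    if intended == "low":
        return p <= 0.10  # more than 10% would trigger a revision
    raise ValueError("intended must be 'high' or 'low'")


# Hypothetical norming data: 7 of 10 participants produce the target "salt".
responses = ["salt"] * 7 + ["sugar", "pepper", "oil"]
keeps_as_high = meets_criterion(responses, "salt", "high")
```

A sentence that fails its criterion would be revised and normed again, as described above.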

Words and sentences were recorded by native female speakers in a sound-attenuated booth at a 44.1 kHz sample rate and 32-bit resolution. The duration of recordings across the three languages was comparable (see Table 2). Praat software (Boersma & Weenink, 2009) was utilized to normalize recording intensity, resulting in a similar average root mean square across languages. In each language, half of the stimuli were presented in quiet and half in noise. Speech-shaped noise, matching the long-term average spectrum of each language’s stimuli, was used as a masker in the noise condition. The noise was presented at an SNR of 0 dB, selected based on pilot data demonstrating it as an intermediate level of challenge that avoids overwhelming participants and helps maintain their focus throughout the session.
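An SNR of 0 dB means that speech and masker have the same root-mean-square (RMS) level. The sketch below shows, in plain Python, how a masker could be scaled to a requested SNR relative to a speech signal. It is illustrative only: the stimuli in the study were prepared in Praat, the function names are hypothetical, and generating the speech-shaped noise itself is not shown.

```python
import math


def rms(signal):
    """Root-mean-square level of a sequence of samples."""
    return math.sqrt(sum(v * v for v in signal) / len(signal))


def scale_noise_to_snr(speech, noise, snr_db=0.0):
    """Scale `noise` so that 20*log10(rms(speech)/rms(noise)) equals `snr_db`."""
    target_noise_rms = rms(speech) / (10 ** (snr_db / 20))
    gain = target_noise_rms / rms(noise)
    return [v * gain for v in noise]


def measured_snr_db(speech, noise):
    """SNR in dB computed from the RMS levels of the two signals."""
    return 20 * math.log10(rms(speech) / rms(noise))


# Toy signals standing in for a recorded word and a masker of equal length.
speech = [0.5, -0.5, 0.5, -0.5]
noise = [0.1, -0.2, 0.1, -0.2]
scaled = scale_noise_to_snr(speech, noise, snr_db=0.0)
mixture = [s + n for s, n in zip(speech, scaled)]
```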

Thus, the experimental design included six conditions within each language: single words in quiet, single words in noise, high predictability sentences in quiet, high predictability sentences in noise, low-predictability sentences in quiet and low-predictability sentences in noise. Nine experimental versions were created (three in each language), each comprising three lists that were rotated across versions. This was done to ensure that each stimulus was presented to each participant only twice, in different blocks, under different listening conditions (quiet/noise) and stimulus types (word/sentence). For example, if the stimulus “salt” was presented as a single word in quiet in one block, it was later presented to the same participant within a high predictability sentence in noise in another block.

2.5. Listening task procedure

The listening task comprised 240 randomized trials per language, organized into 8 blocks of 30 trials each. Each block included a mix of single words and sentences, presented in random order to prevent participants from predicting the stimulus type. Half of the blocks (four blocks) were presented in quiet, and the remaining four in noise. The order of the quiet and noisy blocks was randomized across participants.

Participants were seated within a sound-attenuated booth and engaged in a listening task while positioned in front of a computer screen placed 65 centimeters away. Pupil size changes were recorded using the Eyelink Portable Duo (SR Research, Kanata, Ontario, Canada), with a focus on the right eye at a sampling rate of 1,000 Hz. A chin rest was utilized to promote stability and precision in pupil size measurement. Stimuli were presented binaurally to participants through headphones ensuring a uniform intensity level. Throughout the experiment, the room was moderately dim with stable luminance and no external light, and the computer screen displayed a constant grey background color (RGB values: 225, 225, 225).

Each trial in the listening task started with a black fixation cross on the computer screen, which remained visible as speech stimuli were presented through headphones exactly 1 second later. Following stimulus offset, the fixation cross persisted for an additional 3 seconds, during which either silence or noise was presented based on the block condition.

This period allowed time for the pupil to reach maximum dilation (Winn et al., 2018). Following this interval, a response prompt in the form of a question mark directed participants to verbally repeat the stimulus they had heard. The trial ended with a blank screen displayed for 1.5 seconds (see Bsharat-Maalouf et al., 2025). To maintain an accurate measurement of pupil size, calibration and validation preceded each block. While pupil recording remained continuous throughout the experiment, the analysis specifically focused on the period preceding participants’ verbal repetition of the stimulus.

Breaks were permitted between trials or at any point upon the participant’s request. Additionally, a planned break was given between tests in different languages. No feedback was provided to participants during the experiment. Before the listening task, participants were given instructions in their native language (Arabic for trilinguals and Hebrew for bilinguals). Before the experimental blocks, multilinguals completed a practice session to familiarize themselves with the listening task requirements.

2.6. Data coding and pre-processing

Participants’ verbal responses were recorded and scored for exact word repetition for single words (0 = incorrect, 1 = correct) and for the number of accurately repeated words in each sentence (ranging from 0 to 6). The accuracy of each type of stimulus served as the dependent variable for the perceptual analyses. These accuracy scores were further included as covariates in the pupillometry analyses.
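As an illustration of the two accuracy scales, the sketch below scores a single word as 0 or 1 (with 1 = correct, the coding used for the logistic models) and a sentence as the number of target words repeated, from 0 to 6. The matching rules are assumptions made for this example (case-insensitive exact match, word order ignored, each response word credited at most once); the source does not specify how partial or reordered responses were handled.

```python
from collections import Counter


def score_word(target, response):
    """1 if the response exactly repeats the target word, else 0."""
    return int(response.strip().lower() == target.strip().lower())


def score_sentence(target_words, response_words):
    """Number of target words present in the response (0 to len(target_words))."""
    remaining = Counter(w.lower() for w in response_words)
    score = 0
    for word in target_words:
        key = word.lower()
        if remaining[key] > 0:  # credit each response word only once
            remaining[key] -= 1
            score += 1
    return score


# Hypothetical six-content-word sentence and a response that misses two words.
target = ["cook", "added", "pinch", "fresh", "sea", "salt"]
response = ["cook", "added", "fresh", "salt"]
sentence_score = score_sentence(target, response)
```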

Pupil recordings, measured in arbitrary units, were converted to millimeters. Following the procedure used in Bsharat-Maalouf et al. (2025), pupil data underwent a multistep preprocessing procedure to address missing data and blinks. Specifically, pupil diameters more than 3 SDs below the mean diameter of each trial were identified as blinks using Eyelink Data Viewer software (SR Research Ltd., version 4.3.1). Given that blinks lead to partial occlusion and unreliable measurements (Siegle et al., 2008; Zekveld et al., 2018), the 100 milliseconds before and after each blink event were also excluded. Subsequently, trials with over 25% missing observations were excluded, which removed 0.49% of the data. For the remaining trials, missing values were replaced by linear interpolation, and a smoothing filter was applied to minimize noise (Winn et al., 2018).
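The preprocessing steps can be sketched in plain Python as follows. This is a simplified illustration, not the Data Viewer pipeline used in the study: the function names are hypothetical, missing samples are represented as None, a padding of 100 samples corresponds to 100 ms only at the 1,000 Hz sampling rate, and the moving-average filter is an assumption because the source does not name the smoothing filter.

```python
from statistics import mean, pstdev


def mark_blinks(trace, n_sd=3.0, pad=100):
    """Set to None samples more than n_sd SDs below the trial mean, plus `pad` samples on each side."""
    valid = [v for v in trace if v is not None]
    cutoff = mean(valid) - n_sd * pstdev(valid)
    bad = [v is None or v < cutoff for v in trace]
    padded = bad[:]
    for i, is_bad in enumerate(bad):
        if is_bad:
            for j in range(max(0, i - pad), min(len(trace), i + pad + 1)):
                padded[j] = True
    return [None if b else v for v, b in zip(trace, padded)]


def interpolate(trace):
    """Linearly interpolate None samples; gaps at the edges hold the nearest valid value."""
    known = [i for i, v in enumerate(trace) if v is not None]
    out = list(trace)
    for i in range(known[0]):
        out[i] = trace[known[0]]
    for i in range(known[-1] + 1, len(out)):
        out[i] = trace[known[-1]]
    for a, b in zip(known, known[1:]):
        for i in range(a + 1, b):
            out[i] = trace[a] + (trace[b] - trace[a]) * (i - a) / (b - a)
    return out


def moving_average(trace, width=5):
    """Simple smoothing filter (assumed here for illustration)."""
    half = width // 2
    return [mean(trace[max(0, i - half): i + half + 1]) for i in range(len(trace))]


def preprocess(trace, max_missing=0.25, pad=100):
    """Return the cleaned trace, or None if the trial should be excluded."""
    if all(v is None for v in trace):
        return None
    cleaned = mark_blinks(trace, pad=pad)
    missing = sum(v is None for v in cleaned) / len(cleaned)
    if missing > max_missing:
        return None  # over 25% missing observations: exclude the trial
    return moving_average(interpolate(cleaned))
```

Note that the proportion of missing data is computed after the blink padding in this sketch; whether the 25% criterion was applied before or after padding is not stated in the text.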

Trials with no verbal responses (1.12% of the data) were excluded from the analysis. This exclusion ensured that the analysis focused on pupil data from trials in which participants demonstrated attentiveness (Wendt et al., 2018; Zekveld et al., 2010; Zekveld & Kramer, 2014). A baseline for each trial was established by averaging the last 200 milliseconds of the pre-stimulus period. This baseline was subtracted from subsequent measurements, yielding baseline-corrected values. The pupil analyses focused on two key dependent variables commonly employed in pupil response studies (e.g., Schmidtke, 2014; Zekveld et al., 2010): peak amplitude, representing the maximum positive dilation observed in a trial compared to the baseline, and peak latency, indicating the time taken for the peak dilation amplitude to occur. Analyses of pupil mean (the average pupil dilation observed in a trial compared to the baseline), which resulted in a similar pattern, are reported in Supplementary Table S2 and Supplementary Figure S1. Following previous studies using pupillometry in language research (e.g., Borghini & Hazan, 2018; Bsharat-Maalouf et al., 2025; Russo et al., 2020; Schmidtke, 2014, 2018; Schmidtke & Tobin, 2024; Wendt et al., 2016, 2017; Yao et al., 2023), we considered larger peak amplitudes and pupil means, and delayed peak latencies, as indicators of increased listening effort.
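The baseline correction and the three pupil measures can be expressed compactly. In the Python sketch below, the function name and arguments are hypothetical, and latency is measured from stimulus onset, which is an assumption: the text defines peak latency only as the time taken for the peak to occur.

```python
def pupil_measures(trace, stim_onset, sampling_hz=1000, baseline_ms=200):
    """Return (peak amplitude, peak latency in ms, mean dilation) for one trial.

    trace: preprocessed pupil diameters (mm), one value per sample.
    stim_onset: index of the first sample after the pre-stimulus period.
    """
    n_base = int(baseline_ms * sampling_hz / 1000)
    # Baseline: average of the last 200 ms before stimulus onset.
    baseline = sum(trace[stim_onset - n_base:stim_onset]) / n_base
    corrected = [v - baseline for v in trace[stim_onset:]]
    peak_amplitude = max(corrected)
    # Assumption: latency is counted from stimulus onset.
    peak_latency_ms = corrected.index(peak_amplitude) * 1000 / sampling_hz
    mean_dilation = sum(corrected) / len(corrected)
    return peak_amplitude, peak_latency_ms, mean_dilation


# Synthetic trial: flat 1 s pre-stimulus period, then a dilation that peaks 500 ms after onset.
rise = [4.0 + 0.001 * i for i in range(501)]
fall = [4.5 - 0.001 * i for i in range(1, 500)]
trial = [4.0] * 1000 + rise + fall
amplitude, latency_ms, mean_dilation = pupil_measures(trial, stim_onset=1000)
```

For this synthetic trial the peak amplitude is 0.5 mm at a latency of 500 ms; larger amplitudes and later peaks would be read as greater listening effort.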

2.7. Data analysis and model structure

The study aimed to examine the relationship between variability in language experience and multilingual listening effort, measured here through pupil responses (peak amplitude and peak latency). Additionally, to corroborate findings from prior research, we explored the relationship between variability in language experience and participants’ perceptual accuracy. Various predictors related to language experience were gathered, encompassing AoA, self-rated proficiency, childhood and current exposure, current use and scores from objective proficiency tests (Semantic Fluency and Mint Sprint tests). Further, control variables pertaining to SES (indexed by maternal education) and cognitive abilities (assessed through Backward Color Span and Numerical Double-Stroop tests) were collected.

Notably, our analysis considered all languages spoken by each participant (three languages for trilinguals and two languages for bilinguals), treating the entire sample as one multilingual group. Thus, trilinguals contributed data related to language experience concerning Arabic (L1), Hebrew (L2) and English (L3), and bilinguals contributed data concerning Hebrew (L1) and English (L2).

To avoid multicollinearity in the predictors, we first examined the correlations among the predictors related to language experience. As shown in Table 3, significant associations were observed. Indeed, the Kaiser–Meyer–Olkin measure of sampling adequacy of .876 and Bartlett’s test of sphericity (p < .001) suggested that the predictors related to language experience were highly correlated, supporting the rationale for employing a principal component analysis (PCA; Pearson, 1901). Factors with eigenvalues exceeding 1 were extracted, revealing that a single factor captured the language experience predictors and explained 78.610% of the variance. This factor, termed “overall language experience” (see below), was incorporated into subsequent analyses.
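The extraction rule (retain components whose eigenvalue exceeds 1) can be illustrated with a short NumPy sketch on synthetic data. Nothing here reproduces the study's data or software: the function name is hypothetical, the seven simulated predictors merely stand in for the language experience measures, and the simulated values are chosen so that the predictors are strongly intercorrelated.

```python
import numpy as np


def pca_eigenvalue_rule(data):
    """PCA on the correlation matrix, keeping components with eigenvalue > 1.

    data: array of shape (n_observations, n_predictors).
    Returns (eigenvalues, proportion of variance explained, component scores).
    """
    z = (data - data.mean(axis=0)) / data.std(axis=0, ddof=1)
    corr = np.corrcoef(z, rowvar=False)
    eigvals, eigvecs = np.linalg.eigh(corr)      # eigh: symmetric matrix, ascending order
    order = np.argsort(eigvals)[::-1]            # sort components by descending eigenvalue
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    keep = eigvals > 1.0
    explained = eigvals / eigvals.sum()
    scores = z @ eigvecs[:, keep]
    return eigvals, explained, scores


# Synthetic example: 229 observations of 7 correlated predictors driven by one latent variable.
rng = np.random.default_rng(0)
latent = rng.normal(size=229)
data = np.column_stack([latent + 0.4 * rng.normal(size=229) for _ in range(7)])
eigvals, explained, scores = pca_eigenvalue_rule(data)
n_retained = int((eigvals > 1.0).sum())
```

The sign of a principal component is arbitrary, so in practice the retained component would be oriented so that higher scores correspond to greater experience (for example, so that a later AoA lowers the score).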

2.7.1. Description of the overall language experience factor

The overall language experience factor, derived from the PCA, provides a holistic measure of the language experience within each language of the multilingual individual. Below is a qualitative interpretation of how this factor operates:

AoA. This aspect reflects when the language was first learned. Individuals who acquired the language earlier in life typically had higher scores on the overall language experience factor, while later AoA generally resulted in lower scores.

Self-rated proficiency. Reflecting individuals’ self-assessment of their language skills. Higher self-ratings corresponded with a higher score on the overall language experience factor.

Current language use. Reflecting the frequency of current language use. More frequent use aligned with higher scores on the overall language experience factor.

Childhood and current exposure. Reflecting both past and present exposure to the language. Higher exposure levels aligned with higher scores in the overall language experience factor.

Objective proficiency. Measured through the Mint Sprint and Semantic Fluency tests. High objective proficiency scores were reflected by a higher overall language experience factor.

In summary, the overall language experience factor aggregated diverse linguistic dimensions, offering a holistic profile of a multilingual’s language acquisition history, self-perceived and objective proficiency, usage and exposure. A high factor score reflects early acquisition, high proficiency and extensive use and exposure. In contrast, a low score indicates later acquisition, lower proficiency and limited use and exposure.

The correlation analysis among other demographic or cognitive variables (maternal education, Backward Color Span and Numerical Double-Stroop tests) showed weak and non-significant correlations (see Supplementary Table S3). The Kaiser–Meyer–Olkin measure of sampling adequacy, with a value of .502, and Bartlett’s test of sphericity (p = .603) collectively indicate that PCA for these variables was not justified. Therefore, these variables were entered separately into the models as covariates (see below). Finally, we verified that the correlations among all final predictors were low (did not exceed .136), reducing concerns related to multicollinearity (see Supplementary Table S3). With this assurance, we proceeded to evaluate the impact of the overall language experience variable on perceptual accuracy and pupil responses, accounting for SES (indexed by maternal education), working memory span (indexed by the Backward Color Span score), and cognitive control ability (indexed by the numerical Double-Stroop score). All measures were normalized prior to analysis.

Generalized linear mixed-effects models (Brown, 2021) were employed for data analysis. Logistic mixed-effects models were utilized for analyzing single-word accuracy, given the binary nature of the responses (0 = incorrect, 1 = correct). A negative binomial mixed-effects model was applied to analyze sentence accuracy, which ranged from 0 (no accurate words repeated) to 6 (all words repeated accurately), accommodating the count-based nature of the responses (Hilbe, 2011). For both perceptual accuracy and pupil data, separate analyses were conducted for single words and sentences, considering the different scales of the dependent variables.

The initial model for each of the dependent variables (perceptual accuracy, peak amplitude and peak latency) incorporated the fixed effects of listening condition (quiet vs. noise, with quiet set as the reference), and overall language experience (derived from the PCA), along with their interaction. In addition, demographic and cognitive variables, including maternal education, Backward Color Span task and Numerical Double-Stroop, were entered as control variables. Trial order was also included as a covariate to account for potential fatigue effects (McLaughlin et al., 2023). Additionally, perceptual accuracy was considered as a covariate in the pupil models to address variations in perceptual performance that might confound pupil responses (Zekveld & Kramer, 2014). As such, the effects reported in these models are interpreted while controlling for variation in perceptual accuracy.

To simultaneously address variance attributed to participants and items, the random structure of the models incorporated by-participant and by-item intercepts. The models included a by-item slope for listening condition. In line with the current study’s emphasis on individual differences, random slopes specific to participants were not incorporated into any of the models.
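For concreteness, the initial single-word accuracy model described above can be written out as follows. This is a notational sketch rather than the authors' exact specification: the symbols are introduced here for illustration, with $p$ indexing participants and $i$ items, $\mathrm{LE}$ the overall language experience score for the language of the trial, and $\mathrm{MatEdu}$, $\mathrm{BCS}$ and $\mathrm{NDS}$ the maternal education, Backward Color Span and Numerical Double-Stroop covariates.

$$
\begin{aligned}
\operatorname{logit}\,\Pr(y_{pi} = 1) ={}& \beta_0 + \beta_1\,\mathrm{Noise}_{pi} + \beta_2\,\mathrm{LE}_{pi} + \beta_3\,(\mathrm{Noise}_{pi} \times \mathrm{LE}_{pi}) \\
&+ \beta_4\,\mathrm{MatEdu}_{p} + \beta_5\,\mathrm{BCS}_{p} + \beta_6\,\mathrm{NDS}_{p} + \beta_7\,\mathrm{Trial}_{pi} \\
&+ u_{0p} + w_{0i} + w_{1i}\,\mathrm{Noise}_{pi},
\end{aligned}
$$

$$
u_{0p} \sim \mathcal{N}(0, \sigma_u^2), \qquad (w_{0i}, w_{1i})^\top \sim \mathcal{N}(\mathbf{0}, \Sigma_w).
$$

Here $\mathrm{Noise}_{pi}$ equals 0 in quiet (the reference level) and 1 in noise, $u_{0p}$ is the by-participant intercept, and $w_{0i}$ and $w_{1i}$ are the by-item intercept and the by-item slope for listening condition. The pupil models would replace the logit link with a linear model for peak amplitude or peak latency and add perceptual accuracy as a further covariate.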

For the sentence perceptual and pupil models, the same basic model structure was applied, adding a fixed effect for context (high vs. low predictability, with low as the reference) and its interactions with listening condition and overall language experience. By-item random slopes were also included for context.

The “buildmer” function from the buildmer package (version 2.8; Voeten, 2019) in R (version 4.2.2; R Core Team, 2021) was used when convergence issues arose in the maximal model. This function utilizes a backward stepwise elimination approach, initiating from the most complex model and iteratively simplifying random slopes until convergence is achieved, while keeping all theoretically relevant fixed effects in the model (using the “include” subcommand). When necessary, to examine interactions and pairwise comparisons, the selected model was refitted using (g)lmer and followed by the “testInteractions” function from the “phia” package (version 0.2-1; De Rosario-Martinez et al., 2015), incorporating Bonferroni adjustments to account for multiple comparisons.

Tables 4 and 5 present model summaries for perceptual and pupillometry data derived from the summary function. Note that, due to the dummy coding of the categorical fixed effects, the effects presented in these tables reflect simple effects rather than main effects. The main effects of the fixed variables were determined using the chi-square test (for perceptual data) and the anova function (for pupil data), and these results are presented in the text. Significance was assessed at an alpha level of .05.

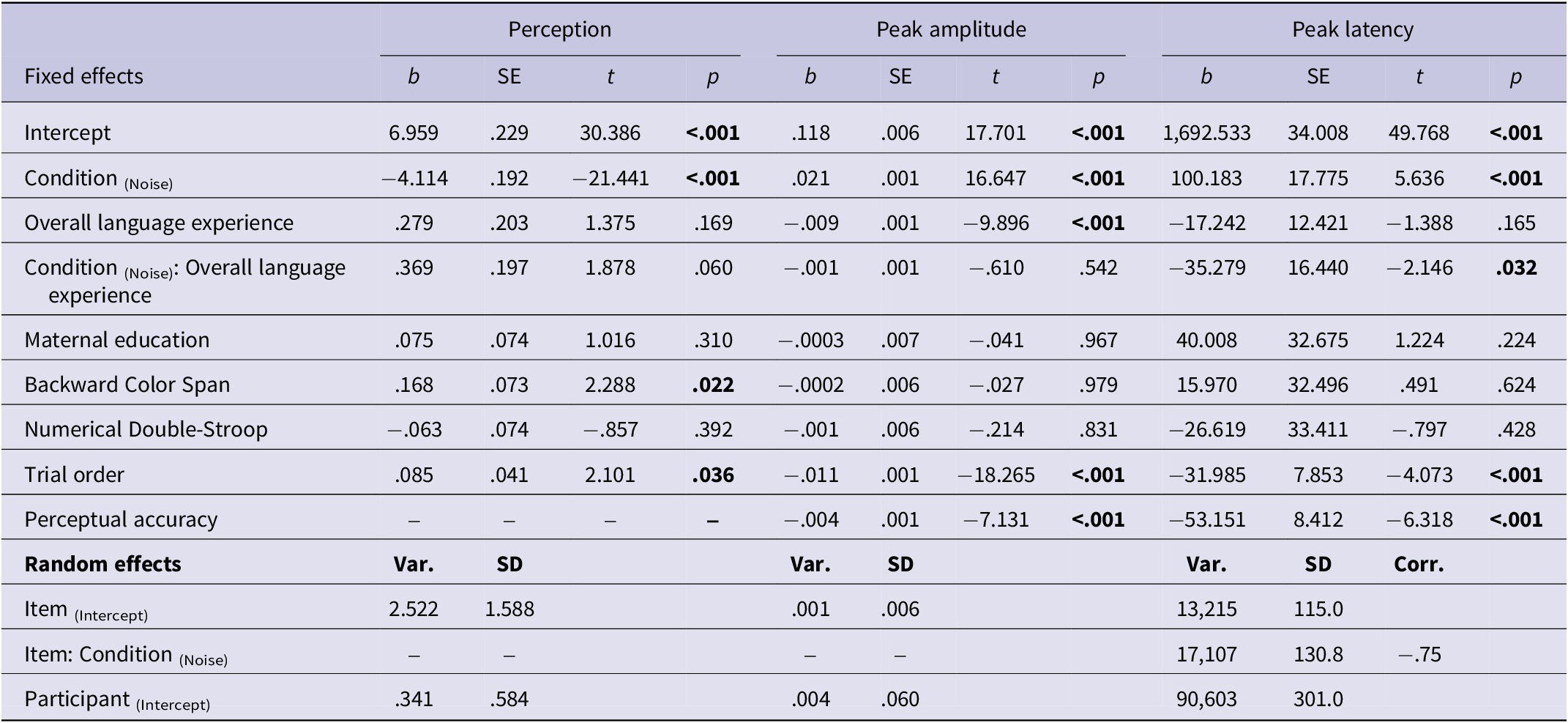

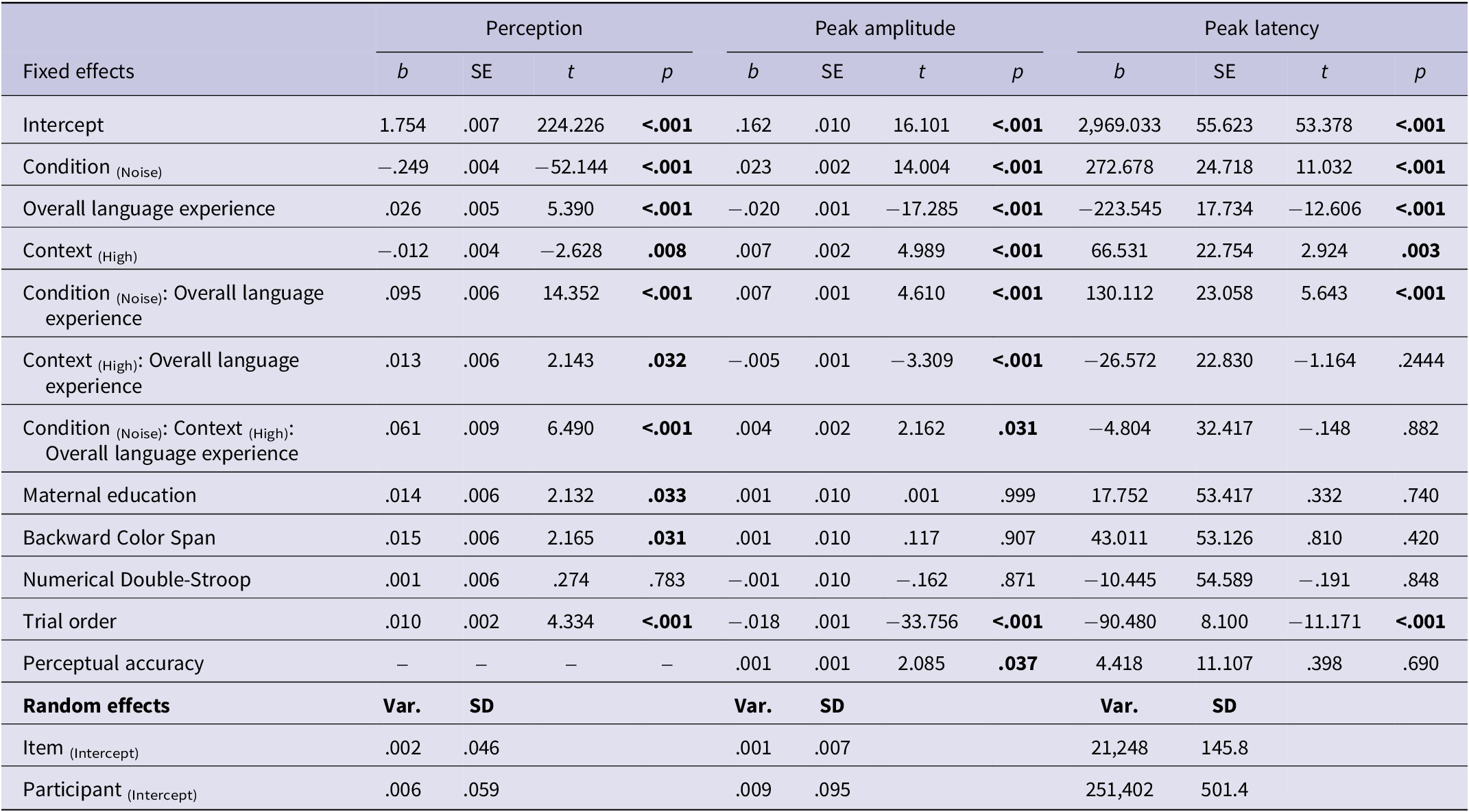

Table 4. Model summary for single words perception and pupillometry

Note. Fixed effects reflect simple effects relative to the reference level when other variables are at their reference level. For main effects, see χ², F and p-values in the text. Bold values indicate effects that are statistically significant, with p values less than 0.05. For the mean pupil model, refer to Supplementary Table S2. Perceptual accuracy was included as a covariate in pupillometry models.

Table 5. Model summary for sentence perception and pupillometry

Note. For main effects, see χ², F and p-values in the text. Bold values indicate effects that are statistically significant, with p values less than 0.05. For the mean pupil model, refer to Supplementary Table S2.

3. Results

The results related to perceptual accuracy and pupillometry are summarized below and in Supplementary Table S4. The effects of maternal education and the cognitive covariates are also reported.

3.1. Single words

3.1.1. Perceptual accuracy

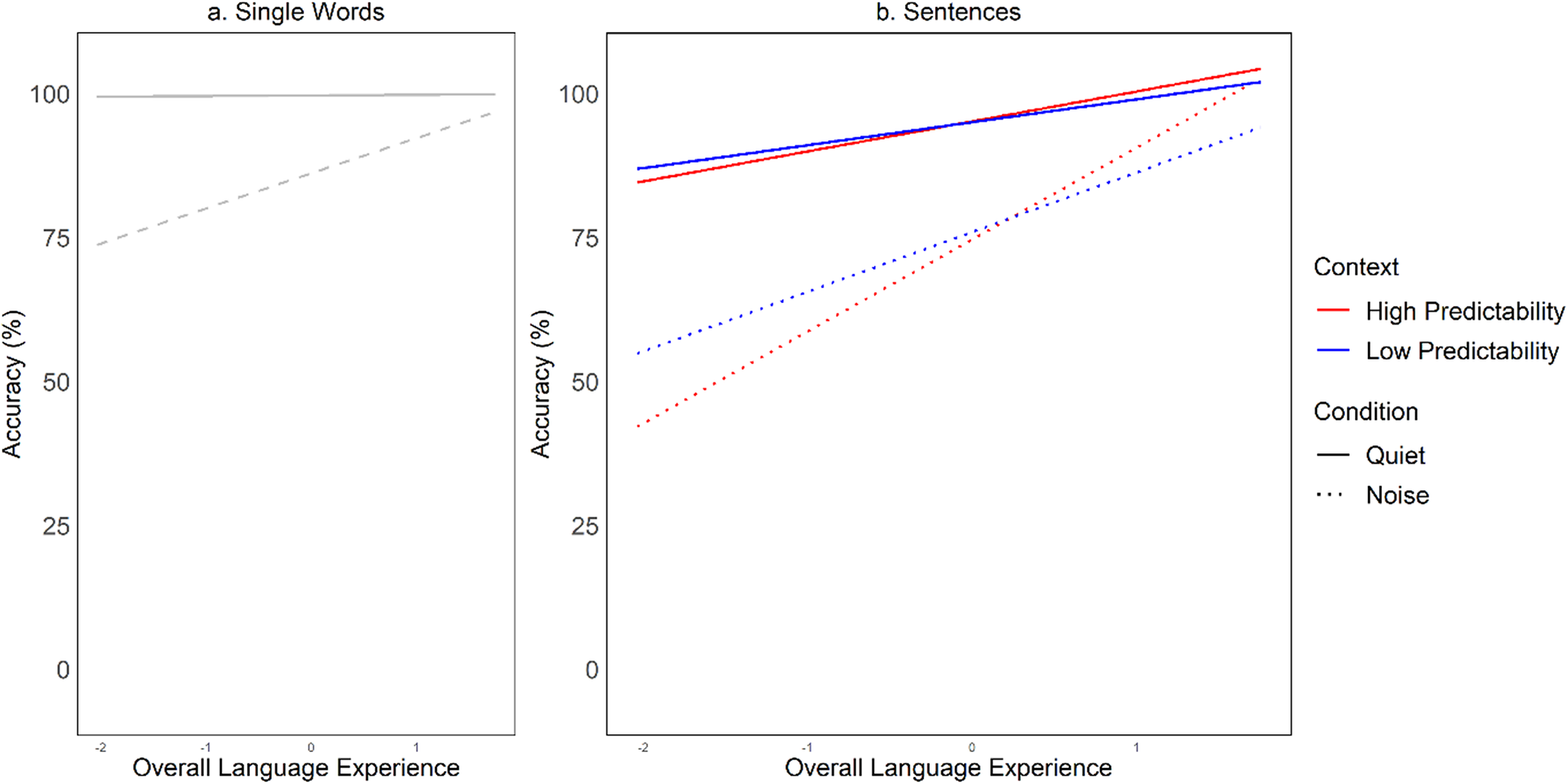

Perceptual accuracy for single words was higher in quiet than in noise (χ²(1) = 526.933, p < .001; Quiet: M = 99.631, SD = 6.062; Noise: M = 86.16, SD = 34.533). Overall language experience predicted accuracy (χ²(1) = 77.143, p < .001), with greater overall language experience associated with higher perceptual accuracy (see Figure 1A). However, the interaction between listening condition and overall language experience did not reach significance (χ²(1) = 3.528, p = .060; possibly due to a ceiling effect; see the final paragraph of Section 4).

Figure 1. The effect of language experience on perceptual accuracy of single words (A) and sentences (B), categorized by context: high (marked in red) and low (marked in blue) predictability, across the two listening conditions: quiet (indicated in solid lines) and noise (dashed lines). The overall language experience is derived from the principal component analysis, incorporating both subjective and objective measures. The X-axis is scaled, with higher values indicating greater overall language experience.

3.1.2. Pupillometry

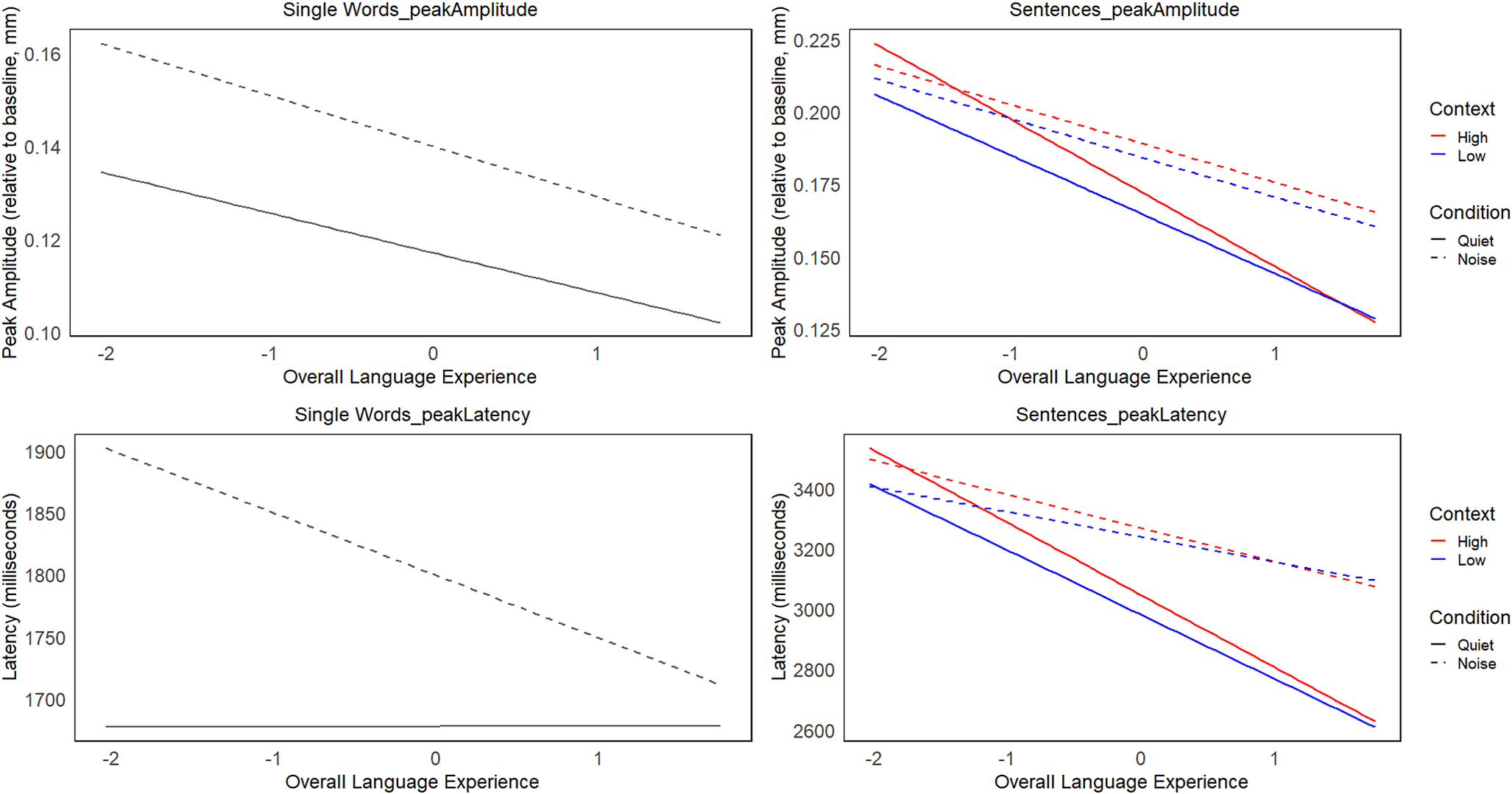

Peak amplitude was larger, and peak latency was more delayed in the noise condition compared with the quiet condition (F(1, 17,622.3) = 277.110, p < .001; F(1, 345.2) = 31.766, p < .001, respectively) (see Supplementary Figure S2a). Greater overall language experience predicted smaller peak amplitudes (F(1, 800.6) = 178.362, p < .001) and earlier peaks (F(1, 861.6) = 14.273, p < .001), indicating reduced listening effort with greater language experience (see the left panels in Figure 2). While the interaction between listening condition and overall language experience was not significant for peak amplitude (F(1, 17,480.4) = .372, p = .541), it reached significance for peak latency (F(1, 1,676.3) = 4.605, p = .032), showing that the effect of overall language experience on peak latency was more pronounced in noisy conditions (see the left panels in Figure 2 and Supplementary Table S5 for pairwise comparisons).

Figure 2. The effect of language experience on peak amplitude (top panels) and peak latency (bottom panels) for single words (left panels) and sentences (right panels) categorized by context: high and low predictability and listening condition. The overall language experience is derived from the principal component analysis, incorporating both subjective and objective measures. The X-axis is scaled, with higher values indicating greater overall language experience.

3.1.3. Covariate effects

Maternal education did not significantly impact single-word accuracy. However, higher performance on the Backward Color Span task was associated with higher perceptual accuracy. The Numerical Double-Stroop task showed no significant effect on single-word accuracy.

None of the variables – maternal education, Backward Color Span or Numerical Double-Stroop – had a significant effect on pupillometry measures (see Table 4).

3.2. Sentences

3.2.1. Perceptual accuracy

Perceptual accuracy for sentences was higher in quiet than in noise (χ²(1) = 2417.804, p < .001). Overall language experience predicted the accuracy of sentences (χ²(1) = 752.167, p < .001), with greater overall language experience associated with higher perceptual accuracy (see Figure 1B). Significant two-way interactions were observed between overall language experience and listening condition (χ²(1) = 706.086, p < .001), and between overall language experience and context (χ²(1) = 74.449, p < .001). Additionally, a significant three-way interaction was observed among overall language experience, listening condition and context (χ²(1) = 42.126, p < .001). Pairwise comparisons with Bonferroni corrections (see Supplementary Table S5) showed that in quiet, greater overall language experience enhanced accuracy similarly for both high- and low-predictability sentences. However, in noise, the effect was more pronounced for high-predictability sentences.

3.2.2. Pupillometry

Peak amplitude was larger, and peak latency was more delayed in noise compared with quiet (F(1, 35,104) = 300.028, p < .001; F(1, 35,352) = 182.1365, p < .001, respectively) (see Supplementary Figure S2). Greater overall language experience was significantly associated with smaller peak amplitudes (F(1, 1,025) = 609.359, p < .001) and earlier peaks (F(1, 1,084) = 229.011, p < .001) (see the right panels in Figure 2). The effects of the overall language experience were also moderated by the listening condition and context.

For peak amplitude, significant interactions between overall language experience and listening condition (F(1, 35,402) = 71.538, p < .001), as well as overall language experience and context (F(1, 35,240) = 6.213, p = .012), were observed. A significant three-way interaction among overall language experience, listening condition and context (F(1, 35,316) = 4.6757, p = .030) showed that in quiet, greater overall language experience was associated with smaller peak amplitudes in both sentence types, but the effect was stronger in high-predictability than low-predictability sentences. In noise, however, the effect of overall language experience on peak amplitude was similar for both sentence types (see Supplementary Table S5).

For peak latency, the influence of overall language experience was larger in quiet than in noise, as indicated by the significant interaction between overall language experience and listening condition (F(1, 35,375) = 58.3908, p < .001; see Supplementary Table S5). However, the two-way interaction between overall language experience and context (F(1, 35,213) = 3.179, p = .075), and the three-way interaction among overall language experience, listening condition and context (F(1, 35,280) = .022, p = .882) were not significant.

3.2.3. Covariate effects

Maternal education had a significant effect on sentence perception, with higher maternal education associated with improved accuracy. Better performance on the Backward Color Span task was also associated with better perceptual accuracy of sentences. The Numerical Double-Stroop task showed no significant effect.

Maternal education, Backward Color Span and Numerical Double-Stroop showed no significant effect on pupillometry measures (see Table 5).

Summary of perceptual and pupillometry results. As expected, for both single words and sentences, better perceptual accuracy and lower listening effort (smaller peak amplitudes and earlier peak latencies) were observed in quiet than in noise. In general, greater overall language experience predicted better accuracy and reduced listening effort. Nevertheless, the impact of overall language experience was modulated by the listening condition and the linguistic demands of the stimulus presented.

For single words, overall language experience facilitated perceptual accuracy across both quiet and noisy conditions, showing that greater experience with the language helped listeners maintain high levels of accuracy. However, the effect of overall language experience on listening effort was more pronounced in noise, where greater language experience was associated with reduced effort, as indicated by earlier peaks.

For sentences, overall language experience had a stronger effect on high-predictability sentences than on low-predictability ones. This may suggest that language experience is especially important when linguistic knowledge can be used to make predictions. Of note, this stronger effect emerged in different conditions for the two types of measures: for perceptual accuracy it was evident in noise, whereas for listening effort (peak amplitude) it was evident in quiet.

Our findings showed that while better working memory capacity enhanced accuracy for both single words and sentences, and maternal education supported perceptual accuracy in sentence processing, neither cognitive abilities nor maternal education appeared to impact pupillometric measures of listening effort.

4. Discussion

The current study aimed to investigate the relationship between variability in multilingual language experience – evaluated through a combination of subjective measures (questionnaire) and objective tests (proficiency tests) – and perceptual performance and listening effort. To provide insights into the nuanced nature of these relationships, we conducted analyses across different listening conditions, including quiet and noise, as well as different stimulus types, encompassing single words and sentences with two predictability levels. These factors were examined across all languages multilingual individuals speak (three languages for trilinguals and two languages for bilinguals). Thus, rather than the traditional approach of comparing L1 and L2, this study treated all languages equally by considering language experience as a continuous variable. This framework allows for a more holistic understanding of how language experience shapes performance and listening effort, emphasizing that its influence extends across all the languages an individual speaks.

Our findings showed lower perceptual accuracy in noisy conditions compared with quiet ones, along with larger and delayed pupil responses in noise, suggesting increased effort. These findings align with previous research (e.g., Bsharat-Maalouf et al., 2023; Bsharat-Maalouf & Karawani, 2022a; Garcia Lecumberri et al., 2010; Mattys et al., 2012; Rennies et al., 2014; Rosenhouse et al., 2006). We found a positive association between overall language experience and perceptual accuracy, further supporting previous findings (see Cowan et al., 2022, for a review). Notably, in our analysis, language experience was treated as a multifaceted construct encompassing multiple factors rather than a single variable.

Critically, we observed that language experience affects listening effort, with greater overall language experience being associated with smaller and earlier pupil responses compared to lower levels of language experience. This correlation held across all tested speech stimuli, including single words and sentences with high and low predictability levels.

4.1. Language experience effect on listening effort across stimuli and listening conditions

In this study, single words were used as a baseline to reduce the influence of higher-level linguistic elements, such as sentence semantics and syntax. This design allowed us to focus on the impact of language experience on listening effort without the involvement of contextual processing. The observed correlation between language experience and listening effort for these stimuli can be explained by the quality of phonetic representations, which is reduced when language experience is limited. Greater language experience enhances the richness and accuracy of these representations (Gollan et al., 2005, 2008, 2011, 2015; Schmidtke, 2016; Sebastián-Gallés et al., 2005), allowing the representations to store more detailed and precise information and align more efficiently with incoming signals. This alignment facilitates perception, reducing the need for extensive cognitive resources during listening and ultimately minimizing listening effort (Rönnberg et al., 2008, 2013, 2019, 2021, 2022).

We propose that multilingual language co-activation may also contribute to the association between language experience and listening effort for single words. Our findings suggest that greater language experience may be associated with better handling of language co-activation, leading to reduced effort. Greater language experience may aid the multilingual listener in prioritizing the activation of the target linguistic representation over other competing candidates, allowing easier selection from the pool of activated options. This may help minimize mismatches, facilitating lexical access and reducing listening effort.

Interestingly, for single words – where linguistic demands are more straightforward and require minimal higher-level processing – greater language experience provided a stronger benefit in reducing listening effort under noisy conditions than in quiet ones. This suggests that in challenging listening conditions, well-developed phonetic representations and efficient co-activation management are especially valuable, likely due to the increased potential for mismatches, which calls for more precise phonetic representation and selection to support listening.

Our analysis of the correlation between language experience and listening effort across sentences with varying levels of predictability sheds light on the role of higher-level processing. By examining both high- and low-predictability sentences, we observed how sentence-level factors, including semantic context and predictability, influenced the relationship between language experience and listening effort. High-predictability sentences, in particular, rely on lexical-semantic knowledge, allowing listeners to form accurate expectations that facilitate more efficient perception. Our findings indicated that greater language experience reduced listening effort for sentences, especially those with high predictability. This effect was most prominent in quiet conditions, where the absence of background noise and the availability of a clear signal allowed listeners to fully leverage their language experience and predictive abilities. Extensive exposure to language patterns enabled listeners to form realistic expectations, facilitating more efficient processing and significantly reducing listening effort. However, in noisy conditions, where incoming sentences were disrupted and mismatches were more likely, even the benefits of greater language experience were insufficient to fully alleviate listening effort. This finding underscores the need to investigate additional factors that could mitigate listening effort under such challenging conditions.

One key factor to consider is the type of noise. In this study, we used stationary noise, which caused energetic masking by overlapping directly with the speech signal, making it difficult to filter out. In contrast, dynamic noise, such as multi-talker babble, includes temporal gaps that might allow listeners to engage in “dip listening.” This skill enables listeners to capitalize on brief pauses in background noise to process speech more effectively (Russo et al., 2020; Vestergaard et al., 2011). These pauses provide moments to capture clearer speech segments, enhancing alignment with existing linguistic representations and expectations and potentially amplifying the benefits of greater language experience. Future research should, therefore, explore how dynamic noise impacts listening effort and whether variations in language experience can modulate this effort effectively.

4.2. Perceptual accuracy versus listening effort

Our study revealed interesting patterns when considering the impact of language experience on perceptual accuracy and listening effort. For single words, greater language experience consistently enhanced accuracy across listening conditions. As illustrated in Figure 1, the difference in accuracy between quiet and noisy conditions remained stable across all levels of language experience. However, the relationship with listening effort told a different story. As shown in Figure 2, the benefits of language experience in reducing effort were significantly more pronounced in noisy conditions. This indicates that while noise similarly affected accuracy regardless of language experience, individuals with greater language experience expended less effort in processing speech in noise. This dissociation highlights that listening effort can reveal nuances not captured by perceptual accuracy alone (Bsharat-Maalouf et al., 2023, 2025; Winn & Teece, 2021), offering a deeper understanding of the cognitive processes involved, especially under challenging conditions.

Also, for sentences, the effects of language experience varied across measures: Greater language experience led to smaller differences in perceptual accuracy between quiet and noisy conditions (see Figure 1). However, this accuracy benefit came at the cost of increased listening effort, as greater language experience was associated with larger variations in effort across conditions (see Figure 2). This pattern suggests that sustaining perceptual accuracy in noise may require more effortful processing, shedding light on the interplay between automatic and resource-demanding processes in language comprehension. While language experience enhances accuracy, this improvement does not always result purely from automatic processing. In challenging scenarios, sustaining comprehension may require additional cognitive resources, reflecting a shift from automatic to more effortful processing. This adaptability suggests that greater language experience facilitates flexible resource management, enabling the allocation of cognitive resources when automatic processing alone is insufficient.

Taken together, our findings suggest that perceptual accuracy and listening effort are distinct constructs shaped by different cognitive resources and experiences, reinforcing the need to examine both for a comprehensive understanding of auditory processing (Bsharat-Maalouf et al., 2023, 2025; Winn & Teece, 2021). To capture the full range of cognitive demands involved in listening, future research should incorporate varied measures that can provide insights into the cumulative effort beyond immediate processing.

4.3. Relations to prior research and future directions

The observation that greater language experience is linked to reduced listening effort aligns with Schmidtke (2014), who found that listening effort decreases with greater proficiency in bilinguals. However, it contrasts with the conclusions drawn by Francis et al. (2018) and Borghini and Hazan (2018, 2020). Specifically, in the studies by Francis et al. and Borghini and Hazan, factors linked to greater language experience either increased listening effort (Francis et al., 2018) or did not predict the level of effort exerted by multilinguals during listening tasks (Borghini & Hazan, 2018, 2020). This contradiction may stem from methodological disparities that warrant deeper examination. For instance, a notable distinction between the studies lies in the use of babble noise in prior studies compared to the speech-shaped noise employed in our investigation. Francis et al. (2018) found that proficiency in a language predicted higher effort in bilinguals when the signal and the masker were in the same language. Specifically, higher proficiency in L2 predicted greater effort when the signal was presented in L2 and accompanied by an L2 masker. One explanation raised by the authors for this positive correlation was that individuals with higher language proficiency are more susceptible to interference from language-specific maskers, which outweighs any proficiency benefit for signal perception. Thus, using speech-shaped noise in our study, which lacks semantic content, could explain why our findings differ. Moreover, while previous studies predominantly focused on investigating these associations within specific languages, our study examined the association in multilinguals from more diverse backgrounds. Consequently, the findings from previous studies may be specific to the languages or populations examined and not readily comparable to other linguistic contexts, highlighting the need for further investigation. An important additional avenue for future research is to analyze multilinguals by their distinct language profiles rather than treating them as a single group. For example, similarity between languages can increase cross-language co-activation and interference, raising cognitive load and limiting the benefits of language experience in reducing effort. In contrast, more distinct languages may reduce such interference, allowing greater language experience to more effectively lower listening effort. Such profile-based analyses may reveal important insights into additional sources of variability within the multilingual population that may affect listening effort. Finally, our investigation considered overall language experience as a composite factor, unlike previous studies that isolated individual aspects of language experience. These methodological differences further challenge direct comparisons between studies.

While the current study contributes valuable insights into the interplay between language experience variability and multilingual perceptual performance and listening effort, several limitations warrant attention in future research endeavors. To enhance the generalizability of the current findings and bolster their ecological validity, a broader range of maskers, such as multi-talker babble noise, should be examined. Furthermore, a potential ceiling effect in the single-word perceptual performance may obscure additional effects of language experience on processing speech in noise. Crucially, this further supports the need for studies that explore measures going beyond behavioral indices alone. Moreover, as mentioned previously, in the current study, we used pupillometry as an objective measure of listening effort. This choice is supported by the recent review of Bsharat-Maalouf et al. (2023), which highlights pupillometry as an effective, real-time measure of mental processes, providing consistent results for multilingual listening effort. Nonetheless, integrating subjective or behavioral indicators of listening effort would offer further insights (see Alhanbali et al., 2019; Strand et al., 2020b).

While we carefully matched our stimuli across key properties and found no significant differences, we did not analyze the spectral content of the stimuli, which may have influenced perceptual performance and listening effort, especially in noisy conditions. Additionally, the absence of formal hearing screenings in this study could have introduced variability. However, because we examined language experience across all languages spoken by each participant, our analysis, in some respects, allows for within-individual control. Lastly, the exploration of additional facets of multilingual experience variability beyond those examined in this study, such as code-switching patterns (Han et al., 2022; Hofweber et al., 2020; Ng & Yang, 2022), holds promise for deeper insights into their impact on listening effort.

As we await further research, the current study represents a notable step forward in emphasizing the importance of moving beyond oversimplified comparisons between multilinguals and monolinguals when referring to listening effort. It highlights the importance of recognizing the diverse range of experiences within multilingual populations. Acknowledging this diversity enables researchers, educators and healthcare professionals to tailor interventions and communications to meet the unique needs of each multilingual individual and to facilitate the implementation of personalized strategies to address specific challenges.

Supplementary material

The supplementary material for this article can be found at http://doi.org/10.1017/S1366728925100254.

Data availability statement

The stimuli, data and analysis scripts supporting this study’s findings are openly available in OSF at https://osf.io/mbfsy.

Funding statement

This study was supported by the Israel Science Foundation (ISF) (Grant No. 2031/22 awarded to H.K.). D.B.-M. was awarded a student fellowship from the University of Haifa.

Competing interests

The authors have no conflicts of interest to disclose.