Impact Statement

Structural health monitoring (SHM) utilizes sensor technology to identify and detect potential damage to critical infrastructure like bridges. However, the available measurements can be affected by external factors such as temperature, requiring data-driven methods to account for these influences. This study presents an interpretable framework based on functional data analysis to effectively utilize the data’s structure and characteristics, including daily and seasonal patterns, when adjusting for confounding effects. Many existing methods for removing environmental effects are encompassed as special cases. Additionally, damage-sensitive features are readily accessible and can be monitored using state-of-the-art control charts.

1. Introduction

Structural health monitoring (SHM) employs sensor technologies to collect data from structures such as bridges to detect, localize, or quantify damage (Deraemaeker et al., Reference Deraemaeker, Reynders, De Roeck and Kullaa2008; Kullaa, Reference Kullaa2011; Hu et al., Reference Hu, Moutinho, Caetano, Magalhães and Cunha2012; Magalhães et al., Reference Magalhães, Cunha and Caetano2012). These field measurements often exhibit missing data and are influenced by environmental and operational factors such as temperature, wind, humidity, or traffic load (Wang et al., Reference Wang, Yang, Yi, Zhang and Han2022). Multiple studies, including Wang et al. (Reference Wang, Yang, Yi, Zhang and Han2022), Han et al. (Reference Han, Ma, Xu and Liu2021) and the references therein, identify temperature as a predominant factor affecting structural stiffness and material properties due to thermal expansion and contraction (Han et al., Reference Han, Ma, Xu and Liu2021). Consequently, data-driven methods are essential to take these confounding effects in SHM data analysis into account.

With respect to separating temperature-induced responses from structural responses, it can be distinguished between so-called “input–output” methods and “output-only” approaches (Wang et al., Reference Wang, Yang, Yi, Zhang and Han2022). In the first case, both the sensor measurements and observations of the confounding variables, such as temperature, are considered, while in the latter case, as the name suggests, only the responses of the structures are used, often using projection methods such as principal component analysis (PCA). Among input–output methods, a prevalent approach is regressing sensor measurements on environmental or operational variables, also known under the name response surface modeling. Then, following the so-called “subtraction method,” the predicted sensor data is subtracted from the actually observed data, and the residuals are used for further analysis. For fitting regression function(s) to the data, various methods have been used in the SHM literature, ranging from simple linear or polynomial regression to advanced machine learning approaches such as artificial neural networks, see Avci et al. (Reference Avci, Abdeljaber, Kiranyaz, Hussein, Gabbouj and Inman2021). However, often, these methods ignore that output data may exhibit daily or yearly patterns or that error terms are correlated over time, e.g., if estimating unknown parameters through least-squares (Cross et al., Reference Cross, Koo, Brownjohn and Worden2013; Maes et al., Reference Maes, Van Meerbeeck, Reynders and Lombaert2022). In other cases, rather restrictive parametric assumptions known from time series analysis are made (Hou and Xia, Reference Hou and Xia2021). Furthermore, input–output and output-only methods are typically considered to be completely different approaches. An overarching framework is still missing where, for instance, it is possible to switch from one approach to the other without changing the downstream monitoring procedure, or the common situation can be handled where measurements are available only for a few confounding variables while others are unobserved or unknown.

In light of this, we will present a novel functional data analysis (FDA) perspective to address these challenges in SHM. FDA has been an area of intensive methodological research over the last two or three decades. For an introduction to FDA in general and an overview of recent developments, the works by Ramsay and Silverman (Reference Ramsay and Silverman2005), Wang et al. (Reference Wang, Chiou and Müller2016), and Gertheiss et al. (Reference Gertheiss, Rügamer, Liew and Greven2024) are recommended. Existing applications of FDA within SHM are reviewed by Momeni and Ebrahimkhanlou (Reference Momeni and Ebrahimkhanlou2022). In SHM, so far, FDA has primarily been used for distributional regression and change point detection. For instance, Chen et al. (Reference Chen, Bao, Tang, Chen and Li2020) used FDA to segment data by time and analyze corresponding probability density functions through warping functions and functional principal component analysis (FPCA). Other notable contributions include the works by Chen et al. (Reference Chen, Bao, Li and Spencer2018), Chen et al. (Reference Chen, Bao, Li and Spencer2019), and Chen et al. (Reference Chen, Lei, Bao, Deng, Zhang and Li2021), who developed methods for imputing missing data using distribution-to-distribution and distribution-to-warping functional regression, and Lei et al. (Reference Lei, Chen and Li2023a), Lei et al. (Reference Lei, Chen, Li and Wei2023c), and Lei et al. (Reference Lei, Chen, Li and Wei2023b) focused on outlier detection and change-point detection in FDA. Jiang et al. (Reference Jiang, Wan, Yang, Ding and Xue2021) modeled temperature-induced strain relationships using warping functions and FPCA.

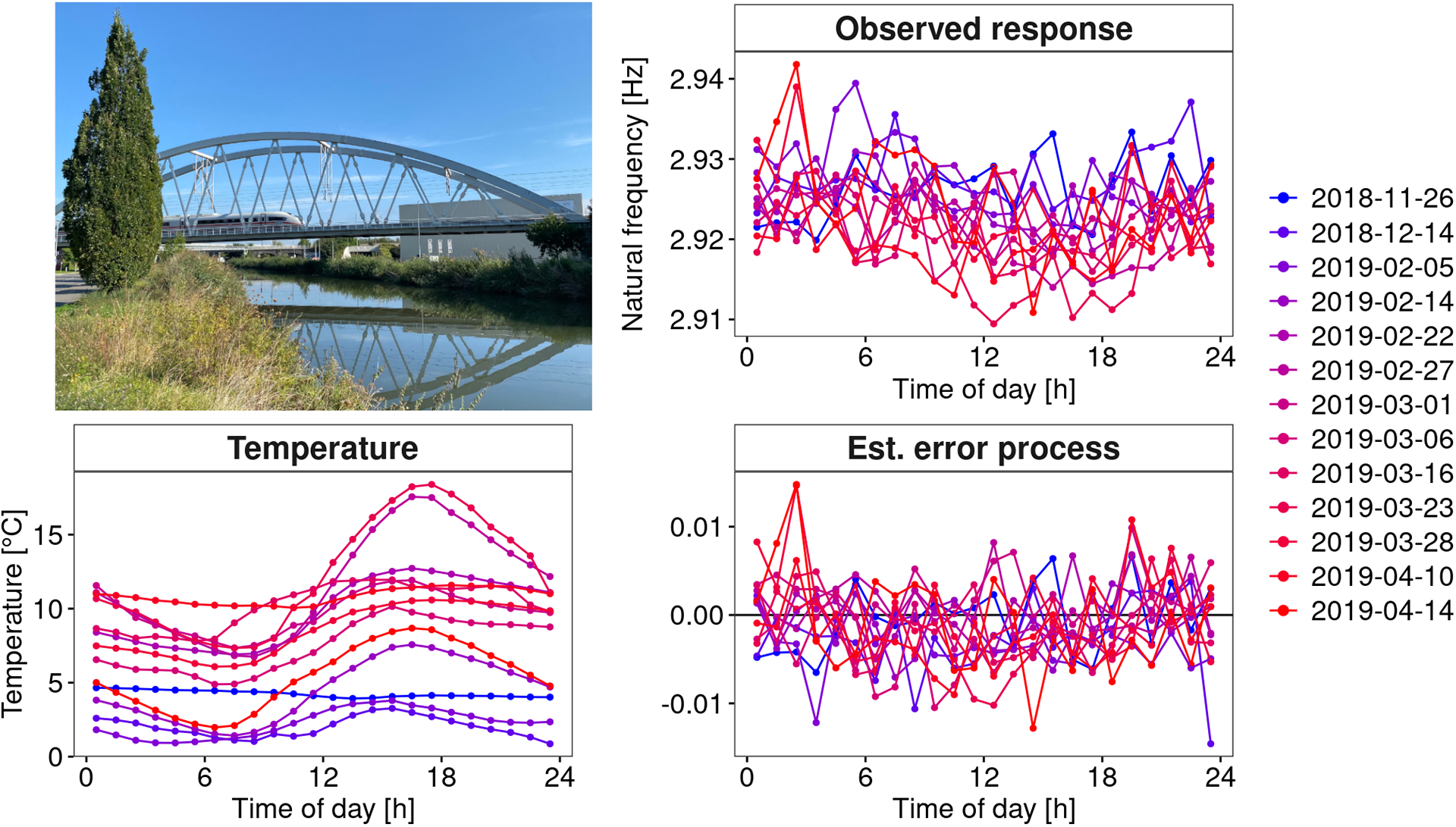

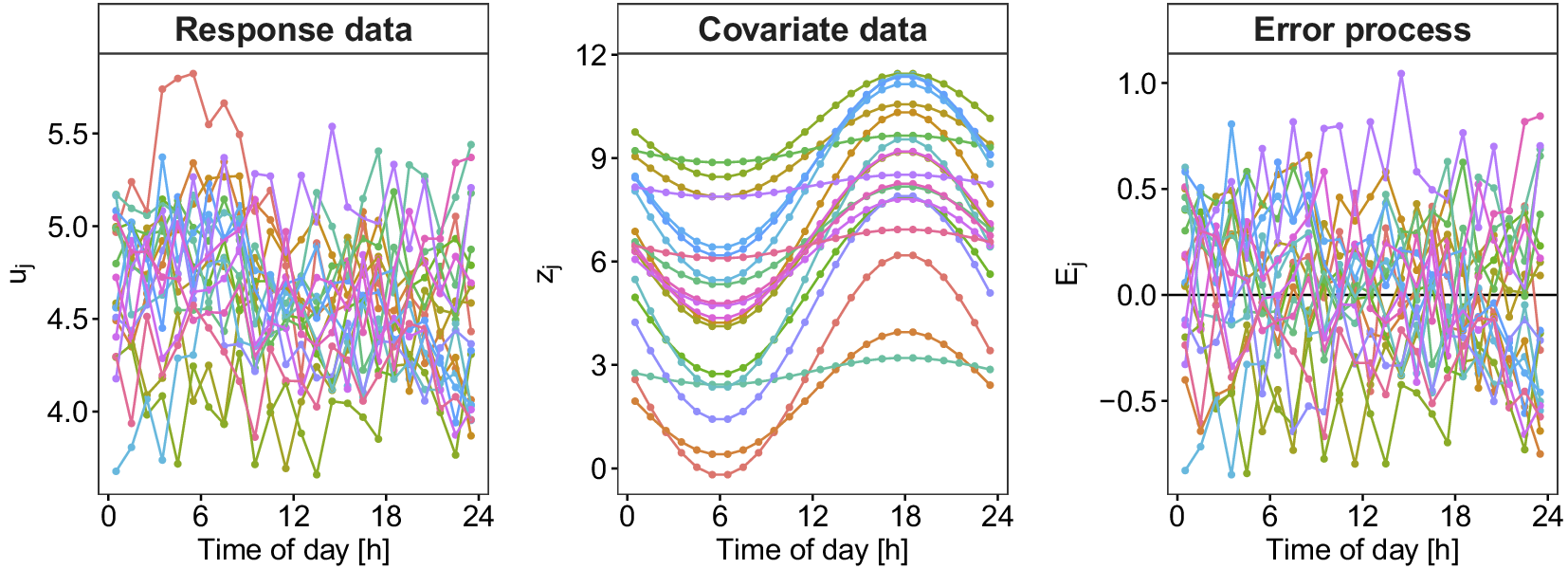

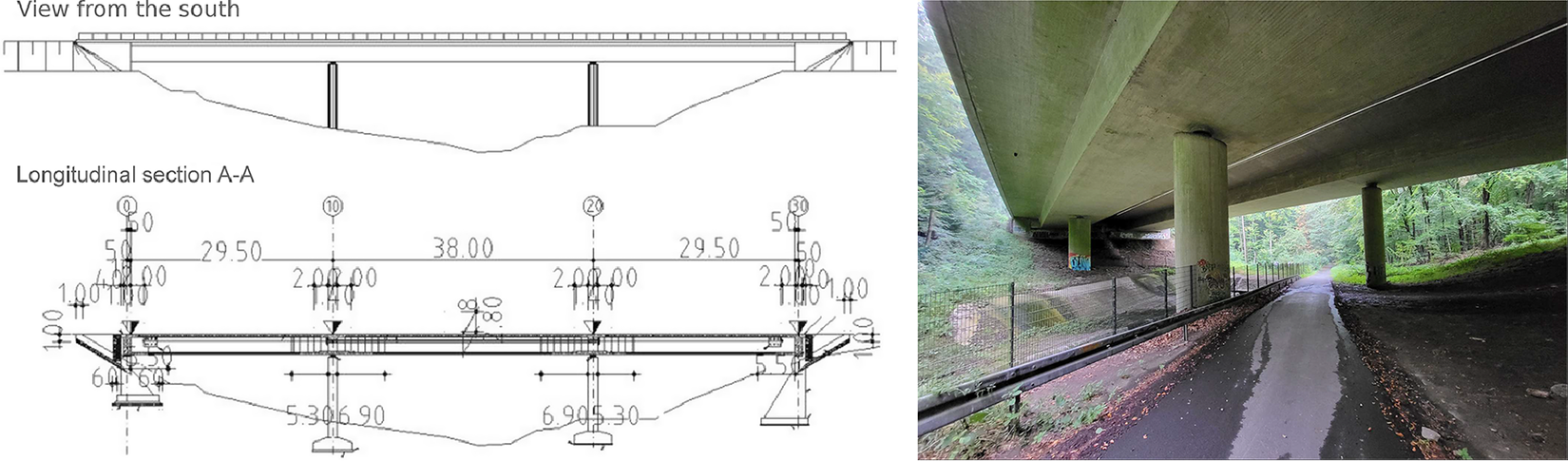

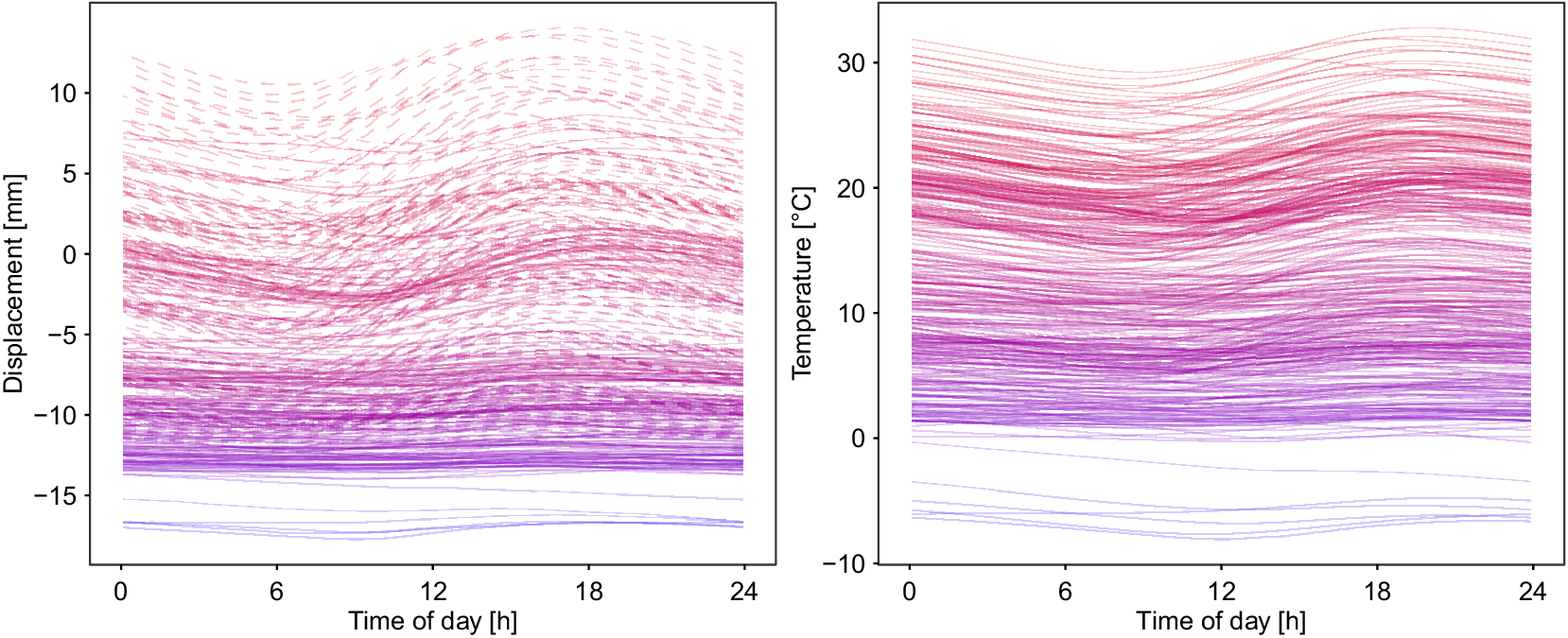

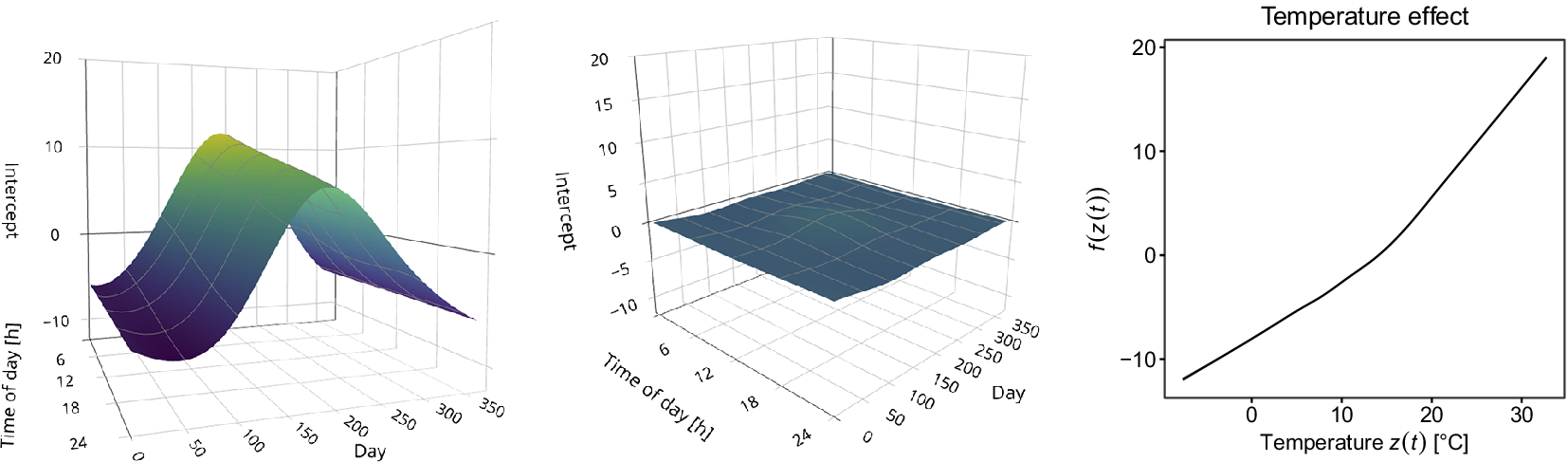

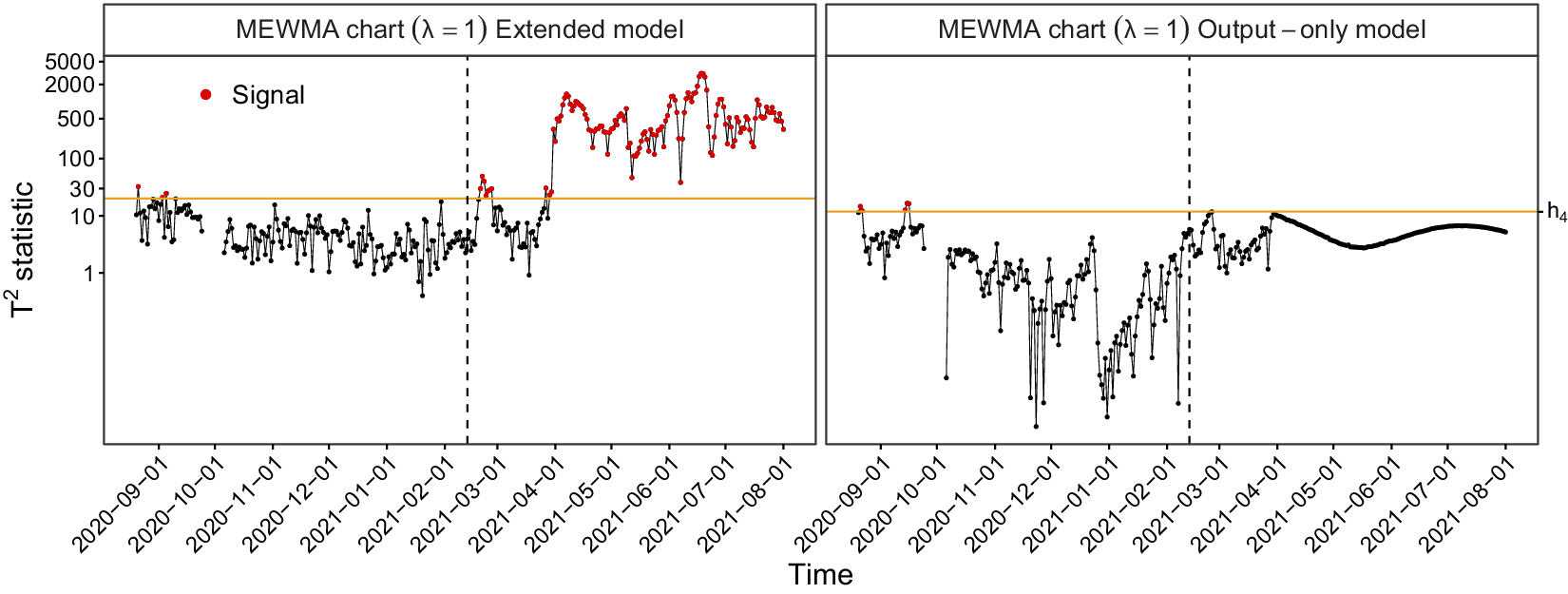

To elucidate our functional perspective, consider Figure 1. Similar plots in SHM studies, such as Xia et al. (Reference Xia, Chen, Weng, Ni and Xu2012), Xia et al. (Reference Xia, Zhang, Tian and Zhang2017), and Zhou et al. (Reference Zhou, Serban and Gebraeel2011), have been used to illustrate the relationship between natural frequencies, strain or displacement, and temperature. However, to the best of our knowledge, this functional nature of the data has never been exploited in SHM when correcting for confounding effects. The data in Figure 1 are from the KW51 railway bridge (Maes and Lombaert, Reference Maes and Lombaert2020), near Leuven, Belgium, monitored from October 2, 2018 to January 15, 2020, including a retrofitting period from May 15 to September 27, 2019. This dataset contains hourly natural frequencies and steel surface temperature (more details on the bridge will be given in Section 4). The top-right panel of Figure 1 shows the bridge’s natural frequency (mode 6) for selected days before retrofitting. It appears plausible to assume that there is some underlying daily pattern common to all profiles, which might be caused by environmental influences that may follow some recurring pattern as well. Those patterns and relationships can be further analyzed through FDA, among other things, by regressing the natural frequency profiles on the temperature curves shown in the bottom-left panel of Figure 1. From the colors, it is already visible that there is a negative association between temperature and the natural frequency, meaning that lower temperatures lead to a higher frequency; compare, e.g., Xia et al. (Reference Xia, Hao, Zanardo and Deeks2006), Xia et al. (Reference Xia, Chen, Weng, Ni and Xu2012). The bottom-right panel shows the resulting error profiles when using a conventional, non-functional piecewise linear model to account for this effect, as done by Maes et al. (Reference Maes, Van Meerbeeck, Reynders and Lombaert2022).

Figure 1. KW51 Bridge from the south side in September 2024 (top-left). The natural frequency of mode 6 for some selected days before the retrofitting started (top-right) and the corresponding steel temperature curves (bottom-left). If a simple piecewise linear model is used for temperature adjustment, the error profiles (bottom-right) are obtained.

If considering entire daily profiles rather than individual measurements as the sampling instances, this regression task is called “function-on-function” regression. In the statistical process monitoring (SPM) literature, it is part of so-called profile monitoring, see Woodall et al. (Reference Woodall, Spitzner, Montgomery and Gupta2004), Woodall (Reference Woodall2007), Maleki et al. (Reference Maleki, Amiri and Castagliola2018) for detailed reviews on that topic. Current state-of-the-art methodologies, such as the (linear) functional regression control chart (FRCC) by Centofanti et al. (Reference Centofanti, Lepore, Menafoglio, Palumbo and Vantini2021), have limitations in SHM applications, where the relationship between temperature and natural frequencies is often nonlinear (Han et al., Reference Han, Ma, Xu and Liu2021). Additionally, by integrating over the entire domain (i.e., the entire day in Figure 1), the employed functional linear model allows “future observations” to influence the current value of the response (Wittenberg et al., Reference Wittenberg, Knoth and Gertheiss2024). Specifically, if (material) temperature data is available directly from the structure itself instead of ambient temperature, it appears more plausible to use a so-called concurrent model, where the response data at time point

![]() $ t $

depends only on the steel temperature at time

$ t $

depends only on the steel temperature at time

![]() $ t $

, not the temperature over the entire day. Finally, the assumption of complete datasets, e.g., made in Capezza et al. (Reference Capezza, Centofanti, Lepore, Menafoglio, Palumbo and Vantini2023), is impractical because SHM sensor data often exhibit dropouts due to power or sensor failure. To address these challenges often found in SHM, this paper will consider the very general framework of functional additive mixed models (Scheipl et al., Reference Scheipl, Staicu and Greven2015; Greven and Scheipl, Reference Greven and Scheipl2017) instead of the common functional linear models used by Centofanti et al. (Reference Centofanti, Lepore, Menafoglio, Palumbo and Vantini2021) and Capezza et al. (Reference Capezza, Centofanti, Lepore, Menafoglio, Palumbo and Vantini2023). By doing so, this paper also provides methodological novelty concerning profile monitoring in a more general sense. Besides the regression task, the functional perspective on SHM data offers an elegant way of “de-noising” the data and extracting features through functional principal component scores that can be used as inputs to advanced multivariate control charts. The methods presented in this article can be applied directly to sensor measurements (e.g., strains, inclinations, accelerations) or extracted damage-sensitive features (e.g., eigenfrequencies). That is why this paper will use the more general term “system outputs.” In summary, using the methods discussed, we can model (i) recurring daily and yearly patterns as well as (ii) confounding effects flexibly and interpretably. Furthermore, the presented functional approach also accounts for (iii) correlations and (iv) heteroscedasticity within error profiles. Finally, it will (v) extract covariate-adjusted features from the system outputs and (vi) unify all these aspects within a coherent statistical framework called Covariate-Adjusted Functional Data Analysis for SHM (CAFDA-SHM). This combined approach includes subtraction methods, PCA-based projections (both often used in SHM), and the FRCC in one universal framework, encompassing multiple aspects that have only been considered partially in previous approaches.

$ t $

, not the temperature over the entire day. Finally, the assumption of complete datasets, e.g., made in Capezza et al. (Reference Capezza, Centofanti, Lepore, Menafoglio, Palumbo and Vantini2023), is impractical because SHM sensor data often exhibit dropouts due to power or sensor failure. To address these challenges often found in SHM, this paper will consider the very general framework of functional additive mixed models (Scheipl et al., Reference Scheipl, Staicu and Greven2015; Greven and Scheipl, Reference Greven and Scheipl2017) instead of the common functional linear models used by Centofanti et al. (Reference Centofanti, Lepore, Menafoglio, Palumbo and Vantini2021) and Capezza et al. (Reference Capezza, Centofanti, Lepore, Menafoglio, Palumbo and Vantini2023). By doing so, this paper also provides methodological novelty concerning profile monitoring in a more general sense. Besides the regression task, the functional perspective on SHM data offers an elegant way of “de-noising” the data and extracting features through functional principal component scores that can be used as inputs to advanced multivariate control charts. The methods presented in this article can be applied directly to sensor measurements (e.g., strains, inclinations, accelerations) or extracted damage-sensitive features (e.g., eigenfrequencies). That is why this paper will use the more general term “system outputs.” In summary, using the methods discussed, we can model (i) recurring daily and yearly patterns as well as (ii) confounding effects flexibly and interpretably. Furthermore, the presented functional approach also accounts for (iii) correlations and (iv) heteroscedasticity within error profiles. Finally, it will (v) extract covariate-adjusted features from the system outputs and (vi) unify all these aspects within a coherent statistical framework called Covariate-Adjusted Functional Data Analysis for SHM (CAFDA-SHM). This combined approach includes subtraction methods, PCA-based projections (both often used in SHM), and the FRCC in one universal framework, encompassing multiple aspects that have only been considered partially in previous approaches.

The remainder of the article is structured as follows. In Section 2, our functional data approach is introduced, and our model training and monitoring workflow is presented. The results of a Monte Carlo simulation study validating the developed methods are presented in Section 3. A structural health monitoring application for dynamic response data of the KW51 railway bridge and a second case study for a quasi-static response of a reinforced concrete bridge can be found in Section 4, respectively. Section 5 offers some concluding remarks. Appendix A provides some further modeling options beyond Section 2. For researchers and practitioners who want to apply the newly proposed methods, the code is open source and publicly available at https://github.com/wittenberg/CAFDA-SHM_code.

2. A functional data approach for modeling and monitoring system outputs

2.1. Basic model structure

The model we assume for “in-control” (IC) data has the following basic form. To keep things simple, we first restrict ourselves to a single, functional covariate

![]() $ {z}_j(t) $

, e.g., denoting the temperature at time

$ {z}_j(t) $

, e.g., denoting the temperature at time

![]() $ t\in \mathcal{T},\mathcal{T}=\left(0\mathrm{h},24\mathrm{h}\right] $

, and day

$ t\in \mathcal{T},\mathcal{T}=\left(0\mathrm{h},24\mathrm{h}\right] $

, and day

![]() $ j $

, and a single system output

$ j $

, and a single system output

![]() $ {u}_j(t) $

. The latter could be a rather raw sensor measurement (yet preprocessed in some sense), such as strain or inclination data, or extracted features, such as natural frequencies. Then, we assume the basic model

$ {u}_j(t) $

. The latter could be a rather raw sensor measurement (yet preprocessed in some sense), such as strain or inclination data, or extracted features, such as natural frequencies. Then, we assume the basic model

where

![]() $ \alpha (t) $

is a fixed functional intercept,

$ \alpha (t) $

is a fixed functional intercept,

![]() $ f\left({z}_j(t)\right) $

is a fixed, potentially nonlinear effect of temperature, and

$ f\left({z}_j(t)\right) $

is a fixed, potentially nonlinear effect of temperature, and

![]() $ {E}_j(t) $

is a day-specific, functional error term with zero mean and a common covariance, i.e.,

$ {E}_j(t) $

is a day-specific, functional error term with zero mean and a common covariance, i.e.,

![]() $ \unicode{x1D53C}\left({E}_j(t)\right)=0 $

,

$ \unicode{x1D53C}\left({E}_j(t)\right)=0 $

,

![]() $ \mathrm{Cov}\left({E}_j(s),{E}_j(t)\right)=\Sigma \left(s,t\right) $

,

$ \mathrm{Cov}\left({E}_j(s),{E}_j(t)\right)=\Sigma \left(s,t\right) $

,

![]() $ s,t\in \mathcal{T} $

. In the FDA framework used here, sampling instances are days instead of single measurement points, and the daily profiles are considered the quantities of interest. This model has two advantages over scalar-on-scalar(s) regression as typically used for response surface modeling in SHM:

$ s,t\in \mathcal{T} $

. In the FDA framework used here, sampling instances are days instead of single measurement points, and the daily profiles are considered the quantities of interest. This model has two advantages over scalar-on-scalar(s) regression as typically used for response surface modeling in SHM:

-

• The functional intercept

$ \alpha (t) $

captures recurring daily patterns that cannot be explained through the available environmental or operational variables, e.g., because the factors causing them are not recorded/available. In the case of long-term monitoring, we may extend the one-dimensional

$ \alpha (t) $

captures recurring daily patterns that cannot be explained through the available environmental or operational variables, e.g., because the factors causing them are not recorded/available. In the case of long-term monitoring, we may extend the one-dimensional

$ \alpha (t) $

to a two-dimensional surface

$ \alpha (t) $

to a two-dimensional surface

$ \alpha \left(t,{d}_j\right) $

, where

$ \alpha \left(t,{d}_j\right) $

, where

$ {d}_j $

denotes the time/date of the year corresponding to day

$ {d}_j $

denotes the time/date of the year corresponding to day

$ j $

in the dataset. The latter accounts for a potential second, i.e., yearly level of periodicity.

$ j $

in the dataset. The latter accounts for a potential second, i.e., yearly level of periodicity. -

• The error term

$ {E}_j(t) $

is typically not a white noise process but correlated over time, i.e., in the

$ {E}_j(t) $

is typically not a white noise process but correlated over time, i.e., in the

$ t $

-direction. Furthermore, variances may vary over the day. For instance, error variances may be lower at night (e.g., because there is less traffic, no influence by the sun, etc.). In other words,

$ t $

-direction. Furthermore, variances may vary over the day. For instance, error variances may be lower at night (e.g., because there is less traffic, no influence by the sun, etc.). In other words,

$ \Sigma \left(s,t\right) $

is not necessarily zero for

$ \Sigma \left(s,t\right) $

is not necessarily zero for

$ s\ne t $

, and

$ s\ne t $

, and

$ \Sigma \left(t,t\right) $

is not constant. For illustration, the (estimated) error process for some selected days for the KW51 data is shown in Figure 1 (bottom-right), where a piecewise linear model with one breakpoint was used for temperature adjustment, as suggested in the literature (Moser and Moaveni, Reference Moser and Moaveni2011; Worden and Cross, Reference Worden and Cross2018; Maes et al., Reference Maes, Van Meerbeeck, Reynders and Lombaert2022). Apparently, those profiles exhibit some more structure than pure white noise. However, ignoring this correlation when fitting

$ \Sigma \left(t,t\right) $

is not constant. For illustration, the (estimated) error process for some selected days for the KW51 data is shown in Figure 1 (bottom-right), where a piecewise linear model with one breakpoint was used for temperature adjustment, as suggested in the literature (Moser and Moaveni, Reference Moser and Moaveni2011; Worden and Cross, Reference Worden and Cross2018; Maes et al., Reference Maes, Van Meerbeeck, Reynders and Lombaert2022). Apparently, those profiles exhibit some more structure than pure white noise. However, ignoring this correlation when fitting

$ \alpha $

and

$ \alpha $

and

$ f $

through ordinary least squares or common maximum likelihood, assuming conditional independence between measurements, will typically lead to less accurate estimates. More importantly, if (conditional) independence is assumed but not given, measures of statistical uncertainty, such as confidence and prediction intervals or control limits, will be biased. In SHM, this can be particularly harmful as these quantities are used to determine if measurements are “out-of-control.” For instance, if a memory-based control chart with the assumption of independence is used to detect a mean shift in an error process, as shown in Figure 1, the false positive rate will be substantially increased (Knoth and Schmid, Reference Knoth, Schmid, Lenz and Wilrich2004). The big advantage of the FDA framework over standard parametric assumptions known from time series analysis, such as auto-correlation of some specific order, is that the error process can be modeled in a very flexible, semiparametric fashion through functional principal component analysis.

$ f $

through ordinary least squares or common maximum likelihood, assuming conditional independence between measurements, will typically lead to less accurate estimates. More importantly, if (conditional) independence is assumed but not given, measures of statistical uncertainty, such as confidence and prediction intervals or control limits, will be biased. In SHM, this can be particularly harmful as these quantities are used to determine if measurements are “out-of-control.” For instance, if a memory-based control chart with the assumption of independence is used to detect a mean shift in an error process, as shown in Figure 1, the false positive rate will be substantially increased (Knoth and Schmid, Reference Knoth, Schmid, Lenz and Wilrich2004). The big advantage of the FDA framework over standard parametric assumptions known from time series analysis, such as auto-correlation of some specific order, is that the error process can be modeled in a very flexible, semiparametric fashion through functional principal component analysis.

In the case of SHM, there is another important aspect to consider with respect to

![]() $ {E}_j(t) $

: This process contains the relevant information for the monitoring task since it captures deviations from the system output

$ {E}_j(t) $

: This process contains the relevant information for the monitoring task since it captures deviations from the system output

![]() $ \alpha (t)+f\left({z}_j(t)\right) $

that would be expected for a specific, let us say, temperature at time

$ \alpha (t)+f\left({z}_j(t)\right) $

that would be expected for a specific, let us say, temperature at time

![]() $ t $

if the structure is “in-control.” For exploiting this information, it is beneficial to further decompose this process into a more structural component

$ t $

if the structure is “in-control.” For exploiting this information, it is beneficial to further decompose this process into a more structural component

![]() $ {w}_j(t) $

and white noise

$ {w}_j(t) $

and white noise

![]() $ {\unicode{x025B}}_j(t) $

with variance

$ {\unicode{x025B}}_j(t) $

with variance

![]() $ {\sigma}^2 $

, i.e.,

$ {\sigma}^2 $

, i.e.,

Since

![]() $ {\unicode{x025B}}_j(t) $

is assumed to be pure noise, it does not carry relevant information, and

$ {\unicode{x025B}}_j(t) $

is assumed to be pure noise, it does not carry relevant information, and

![]() $ {w}_j(t) $

should be the part to focus on for monitoring purposes. To decompose

$ {w}_j(t) $

should be the part to focus on for monitoring purposes. To decompose

![]() $ {E}_j(t) $

into

$ {E}_j(t) $

into

![]() $ {w}_j(t) $

and

$ {w}_j(t) $

and

![]() $ {\unicode{x025B}}_j(t) $

, we use functional principal component analysis (FPCA). It is based on the Karhunen–Loeve expansion (Karhunen, Reference Karhunen1947; Loève, Reference Loève1946), which allows us to expand the random function

$ {\unicode{x025B}}_j(t) $

, we use functional principal component analysis (FPCA). It is based on the Karhunen–Loeve expansion (Karhunen, Reference Karhunen1947; Loève, Reference Loève1946), which allows us to expand the random function

![]() $ {E}_j(t) $

as

$ {E}_j(t) $

as

$$ {E}_j(t)=\sum \limits_{r=1}^{\infty }{\xi}_{rj}{\phi}_r(t), $$

$$ {E}_j(t)=\sum \limits_{r=1}^{\infty }{\xi}_{rj}{\phi}_r(t), $$

where

![]() $ {\phi}_r $

are orthonormal eigenfunctions of the covariance, i.e.,

$ {\phi}_r $

are orthonormal eigenfunctions of the covariance, i.e.,

![]() $ {\int}_{\mathcal{T}}{\phi}_r(t){\phi}_k(t) dt=1 $

if and only if

$ {\int}_{\mathcal{T}}{\phi}_r(t){\phi}_k(t) dt=1 $

if and only if

![]() $ k=r $

and zero otherwise. In particular, according to Mercer’s theorem (Mercer, Reference Mercer1909),

$ k=r $

and zero otherwise. In particular, according to Mercer’s theorem (Mercer, Reference Mercer1909),

$$ \mathrm{Cov}\left({E}_j(s),{E}_j(t)\right)=\Sigma \left(s,t\right)=\sum \limits_{r=1}^{\infty }{\nu}_r{\phi}_r(s){\phi}_r(t) $$

$$ \mathrm{Cov}\left({E}_j(s),{E}_j(t)\right)=\Sigma \left(s,t\right)=\sum \limits_{r=1}^{\infty }{\nu}_r{\phi}_r(s){\phi}_r(t) $$

with decreasing eigenvalues

![]() $ {\nu}_1\ge {\nu}_2\ge \dots \ge 0 $

. Furthermore,

$ {\nu}_1\ge {\nu}_2\ge \dots \ge 0 $

. Furthermore,

![]() $ {\xi}_{rj} $

are uncorrelated random scores with mean zero and variance

$ {\xi}_{rj} $

are uncorrelated random scores with mean zero and variance

![]() $ {\nu}_r $

,

$ {\nu}_r $

,

![]() $ r=1,2,\dots $

, and are independently normal if

$ r=1,2,\dots $

, and are independently normal if

![]() $ {E}_j $

is a Gaussian process.

$ {E}_j $

is a Gaussian process.

In FPCA, the sum in (3) is typically truncated at a finite upper limit

![]() $ m $

, which gives the best approximation of

$ m $

, which gives the best approximation of

![]() $ {E}_j $

with

$ {E}_j $

with

![]() $ m $

basis functions (Rice and Silverman, Reference Rice and Silverman1991). Looking at the fraction

$ m $

basis functions (Rice and Silverman, Reference Rice and Silverman1991). Looking at the fraction

![]() $ \left\{{\sum}_{r=1}^m{\nu}_r\right\}/\left\{{\sum}_{r=1}^{\infty }{\nu}_r\right\} $

allows a quantitative assessment of the variance explained by the approximation. For choosing the concrete value

$ \left\{{\sum}_{r=1}^m{\nu}_r\right\}/\left\{{\sum}_{r=1}^{\infty }{\nu}_r\right\} $

allows a quantitative assessment of the variance explained by the approximation. For choosing the concrete value

![]() $ m $

, there are various methods available (compare, e.g., Wang et al., Reference Wang, Chiou and Müller2016). One possibility is to use a value of

$ m $

, there are various methods available (compare, e.g., Wang et al., Reference Wang, Chiou and Müller2016). One possibility is to use a value of

![]() $ m $

such that a high proportion, e.g., 95% or 99%, of the overall variance is explained (e.g., Sørensen et al., Reference Sørensen, Goldsmith and Sangalli2013; Gertheiss et al., Reference Gertheiss, Rügamer, Liew and Greven2024), here we will use 99% throughout the paper. By choosing such a large value, it is reasonable to assume that the selected

$ m $

such that a high proportion, e.g., 95% or 99%, of the overall variance is explained (e.g., Sørensen et al., Reference Sørensen, Goldsmith and Sangalli2013; Gertheiss et al., Reference Gertheiss, Rügamer, Liew and Greven2024), here we will use 99% throughout the paper. By choosing such a large value, it is reasonable to assume that the selected

![]() $ m $

eigenfunctions account for all relevant features in the data, and the remainder is merely noise. Consequently, we set

$ m $

eigenfunctions account for all relevant features in the data, and the remainder is merely noise. Consequently, we set

$$ {w}_j(t)=\sum \limits_{r=1}^m{\xi}_{rj}{\phi}_r(t), $$

$$ {w}_j(t)=\sum \limits_{r=1}^m{\xi}_{rj}{\phi}_r(t), $$

and use the scores

![]() $ {\xi}_{1j},\dots, {\xi}_{mj} $

as damage-sensitive features for monitoring. As the functions

$ {\xi}_{1j},\dots, {\xi}_{mj} $

as damage-sensitive features for monitoring. As the functions

![]() $ \alpha, f,{\phi}_1,\dots, {\phi}_m $

are estimated from IC data in the model training phase, it is the scores obtained for future data that tell us whether the system outputs deviate from the values that would be expected for an IC structure over the day for given values of the covariate (and the specific time of the year, if

$ \alpha, f,{\phi}_1,\dots, {\phi}_m $

are estimated from IC data in the model training phase, it is the scores obtained for future data that tell us whether the system outputs deviate from the values that would be expected for an IC structure over the day for given values of the covariate (and the specific time of the year, if

![]() $ \alpha \left(t,{d}_j\right) $

instead of

$ \alpha \left(t,{d}_j\right) $

instead of

![]() $ \alpha (t) $

is used in model (1)). How to estimate the different model components, such as

$ \alpha (t) $

is used in model (1)). How to estimate the different model components, such as

![]() $ \alpha, f,{\phi}_1,\dots, {\phi}_m $

from the training data, and how to estimate the scores on future data, will be described in Section 2.2 below. At this point, it is important to note that if environmental and operational variables are present beyond

$ \alpha, f,{\phi}_1,\dots, {\phi}_m $

from the training data, and how to estimate the scores on future data, will be described in Section 2.2 below. At this point, it is important to note that if environmental and operational variables are present beyond

![]() $ z $

, and those variables are measured, model (1) can be extended to include multivariate covariates, see Section 2.4. If there are additional confounding effects that are not measured or not known at all, we can proceed analogously to the popular output-only method using (multivariate) PCA. That means we assume that the first, let us say,

$ z $

, and those variables are measured, model (1) can be extended to include multivariate covariates, see Section 2.4. If there are additional confounding effects that are not measured or not known at all, we can proceed analogously to the popular output-only method using (multivariate) PCA. That means we assume that the first, let us say,

![]() $ \rho $

components mainly account for variation induced by latent factors (Cross et al., Reference Cross, Manson, Worden and Pierce2012), so we only use the remaining scores

$ \rho $

components mainly account for variation induced by latent factors (Cross et al., Reference Cross, Manson, Worden and Pierce2012), so we only use the remaining scores

![]() $ {\xi}_{jr} $

,

$ {\xi}_{jr} $

,

![]() $ r>\rho $

, for monitoring. An important advantage of model (1) over output-only methods is that available covariate information can still be exploited through

$ r>\rho $

, for monitoring. An important advantage of model (1) over output-only methods is that available covariate information can still be exploited through

![]() $ f $

. How to modify our approach in terms of a pure output-only method if no covariate information is available at all will also be described in Section 2.4.

$ f $

. How to modify our approach in terms of a pure output-only method if no covariate information is available at all will also be described in Section 2.4.

2.2. Model training

Model fitting is carried out on in-control/Phase-I training data only. This will be described in Sections 2.2.1 and 2.2.2. In Section 2.2.3, we will discuss how to obtain the scores for Phase-II data that can be used for monitoring.

2.2.1. Basic model training strategy

For estimating functions such as

![]() $ \alpha $

or

$ \alpha $

or

![]() $ f $

in (1), we follow an approach popular in functional data analysis and semiparametric regression, compare Greven and Scheipl (Reference Greven and Scheipl2017) and Wood (Reference Wood2017). The unknown function, say

$ f $

in (1), we follow an approach popular in functional data analysis and semiparametric regression, compare Greven and Scheipl (Reference Greven and Scheipl2017) and Wood (Reference Wood2017). The unknown function, say

![]() $ f $

, is expanded in basis functions such that

$ f $

, is expanded in basis functions such that

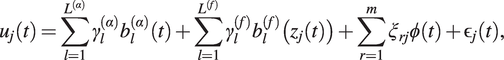

$$ f(z)=\sum \limits_{l=1}^L{\gamma}_l{b}_l(z). $$

$$ f(z)=\sum \limits_{l=1}^L{\gamma}_l{b}_l(z). $$

A popular choice for

![]() $ {b}_1(z),\dots, {b}_L(z) $

is a cubic B-spline basis, which means that

$ {b}_1(z),\dots, {b}_L(z) $

is a cubic B-spline basis, which means that

![]() $ f $

is a cubic spline function (de Boor, Reference de Boor1978; Dierckx, Reference Dierckx1993). For being sufficiently flexible with respect to the types of functions that can be fitted through (6), typically, a rich basis with a large

$ f $

is a cubic spline function (de Boor, Reference de Boor1978; Dierckx, Reference Dierckx1993). For being sufficiently flexible with respect to the types of functions that can be fitted through (6), typically, a rich basis with a large

![]() $ L $

is chosen. A large

$ L $

is chosen. A large

![]() $ L $

, however, often leads to wiggly estimated functions if the basis coefficients

$ L $

, however, often leads to wiggly estimated functions if the basis coefficients

![]() $ {\gamma}_1,\dots, {\gamma}_L $

are fit without any smoothness constraint. The latter is typically imposed by adding a so-called penalty term when fitting the unknown coefficients through least-squares or maximum likelihood. A popular penalty is the integrated squared second derivative

$ {\gamma}_1,\dots, {\gamma}_L $

are fit without any smoothness constraint. The latter is typically imposed by adding a so-called penalty term when fitting the unknown coefficients through least-squares or maximum likelihood. A popular penalty is the integrated squared second derivative

![]() $ {\int}_{\mathcal{D}}{\left[{f^{\prime}}^{\prime }(z)\right]}^2 dz $

, where

$ {\int}_{\mathcal{D}}{\left[{f^{\prime}}^{\prime }(z)\right]}^2 dz $

, where

![]() $ \mathcal{D} $

is the domain of

$ \mathcal{D} $

is the domain of

![]() $ f $

. An alternative is a (quadratic) penalty on the discrete second- or third-order differences of the basis coefficients

$ f $

. An alternative is a (quadratic) penalty on the discrete second- or third-order differences of the basis coefficients

![]() $ {\gamma}_l $

, which gives a so-called P-spline, see Eilers and Marx (Reference Eilers and Marx1996) for details. If we assume that the eigenfunctions

$ {\gamma}_l $

, which gives a so-called P-spline, see Eilers and Marx (Reference Eilers and Marx1996) for details. If we assume that the eigenfunctions

![]() $ {\phi}_1,\dots, {\phi}_m $

from (5) are known, model (1) becomes

$ {\phi}_1,\dots, {\phi}_m $

from (5) are known, model (1) becomes

$$ {u}_j(t)=\sum \limits_{l=1}^{L^{\left(\alpha \right)}}{\gamma}_l^{\left(\alpha \right)}{b}_l^{\left(\alpha \right)}(t)+\sum \limits_{l=1}^{L^{(f)}}{\gamma}_l^{(f)}{b}_l^{(f)}\left({z}_j(t)\right)+\sum \limits_{r=1}^m{\xi}_{rj}\phi (t)+{\unicode{x025B}}_j(t), $$

$$ {u}_j(t)=\sum \limits_{l=1}^{L^{\left(\alpha \right)}}{\gamma}_l^{\left(\alpha \right)}{b}_l^{\left(\alpha \right)}(t)+\sum \limits_{l=1}^{L^{(f)}}{\gamma}_l^{(f)}{b}_l^{(f)}\left({z}_j(t)\right)+\sum \limits_{r=1}^m{\xi}_{rj}\phi (t)+{\unicode{x025B}}_j(t), $$

where

![]() $ {\gamma}_l^{\left(\alpha \right)} $

,

$ {\gamma}_l^{\left(\alpha \right)} $

,

![]() $ {b}_l^{\left(\alpha \right)} $

,

$ {b}_l^{\left(\alpha \right)} $

,

![]() $ {\gamma}_l^{(f)} $

, and

$ {\gamma}_l^{(f)} $

, and

![]() $ {b}_l^{(f)} $

are the basis coefficients and basis functions for

$ {b}_l^{(f)} $

are the basis coefficients and basis functions for

![]() $ \alpha $

and

$ \alpha $

and

![]() $ f $

, respectively, and

$ f $

, respectively, and

![]() $ {\xi}_{rj} $

are day-specific random effects. Given a training dataset of system outputs and covariate information

$ {\xi}_{rj} $

are day-specific random effects. Given a training dataset of system outputs and covariate information

![]() $ \left\{{u}_j\left({t}_{ji}\right),{z}_j\left({t}_{ji}\right)\right\} $

for days

$ \left\{{u}_j\left({t}_{ji}\right),{z}_j\left({t}_{ji}\right)\right\} $

for days

![]() $ j=1,\dots, J $

and time points

$ j=1,\dots, J $

and time points

![]() $ {t}_{ji}\in \mathcal{T} $

,

$ {t}_{ji}\in \mathcal{T} $

,

![]() $ i=1,\dots, {N}_j $

, the unknown parameters

$ i=1,\dots, {N}_j $

, the unknown parameters

![]() $ {\gamma}_l^{\left(\alpha \right)},{\gamma}_l^{(f)} $

can be estimated through penalized least-squares, whereas the random effects

$ {\gamma}_l^{\left(\alpha \right)},{\gamma}_l^{(f)} $

can be estimated through penalized least-squares, whereas the random effects

![]() $ {\xi}_{rj} $

can be predicted using (linear) mixed models approaches. Specifying

$ {\xi}_{rj} $

can be predicted using (linear) mixed models approaches. Specifying

![]() $ {\xi}_{rj} $

as random effects makes this part of the model robust against over-fitting, thanks to the implicit shrinkage of random effects; compare, e.g., Fahrmeir et al. (Reference Fahrmeir, Kneib, Lang and Marx2013). The trade-off between model complexity concerning

$ {\xi}_{rj} $

as random effects makes this part of the model robust against over-fitting, thanks to the implicit shrinkage of random effects; compare, e.g., Fahrmeir et al. (Reference Fahrmeir, Kneib, Lang and Marx2013). The trade-off between model complexity concerning

![]() $ \alpha $

and

$ \alpha $

and

![]() $ f $

and fit to the data is controlled through the strength of the penalty on

$ f $

and fit to the data is controlled through the strength of the penalty on

![]() $ \alpha $

and

$ \alpha $

and

![]() $ f $

. Instead of using a penalty, model complexity could also be controlled through the number of basis functions

$ f $

. Instead of using a penalty, model complexity could also be controlled through the number of basis functions

![]() $ {L}^{\left(\alpha \right)} $

and

$ {L}^{\left(\alpha \right)} $

and

![]() $ {L}^{(f)} $

, but using a penalty makes the method robust against the concrete choice of

$ {L}^{(f)} $

, but using a penalty makes the method robust against the concrete choice of

![]() $ {L}^{\left(\alpha \right)} $

and

$ {L}^{\left(\alpha \right)} $

and

![]() $ {L}^{(f)} $

as it allows using large numbers (e.g., around 20), while controlling model complexity through smoothing; compare, e.g., Gertheiss et al. (Reference Gertheiss, Rügamer, Liew and Greven2024) and Wood (Reference Wood2017). There are a couple of options for choosing the strength of the penalty in a specific application, such as (generalized) cross-validation. In our case, the quadratic penalties used can also be incorporated into the mixed model framework, such that the strength of the penalty can be determined through (restricted) maximum likelihood, analogously to the variance components

$ {L}^{(f)} $

as it allows using large numbers (e.g., around 20), while controlling model complexity through smoothing; compare, e.g., Gertheiss et al. (Reference Gertheiss, Rügamer, Liew and Greven2024) and Wood (Reference Wood2017). There are a couple of options for choosing the strength of the penalty in a specific application, such as (generalized) cross-validation. In our case, the quadratic penalties used can also be incorporated into the mixed model framework, such that the strength of the penalty can be determined through (restricted) maximum likelihood, analogously to the variance components

![]() $ {\nu}_1,\dots, {\nu}_m $

and

$ {\nu}_1,\dots, {\nu}_m $

and

![]() $ {\sigma}^2 $

, see Wood (Reference Wood2011) and Wood (Reference Wood2017) for details. An in-depth discussion of these methods is beyond the scope of this paper. However, an important point to note is that model (1) and hence (7) is not identifiable in this general form given above because we may simply add some constant

$ {\sigma}^2 $

, see Wood (Reference Wood2011) and Wood (Reference Wood2017) for details. An in-depth discussion of these methods is beyond the scope of this paper. However, an important point to note is that model (1) and hence (7) is not identifiable in this general form given above because we may simply add some constant

![]() $ c $

to

$ c $

to

![]() $ \alpha $

while at the same time subtracting

$ \alpha $

while at the same time subtracting

![]() $ c $

from

$ c $

from

![]() $ f $

. Then, the two models are equivalent. In other words,

$ f $

. Then, the two models are equivalent. In other words,

![]() $ \alpha $

and

$ \alpha $

and

![]() $ f $

are only identifiable up to vertical shifts. That is why an overall constant

$ f $

are only identifiable up to vertical shifts. That is why an overall constant

![]() $ {\alpha}_0 $

is typically introduced in terms of

$ {\alpha}_0 $

is typically introduced in terms of

and

![]() $ \tilde{\alpha} $

and

$ \tilde{\alpha} $

and

![]() $ f $

are centered in some sense. The constraint used in the R package mgcv (Wood, Reference Wood2017), which we use here for model training, is

$ f $

are centered in some sense. The constraint used in the R package mgcv (Wood, Reference Wood2017), which we use here for model training, is

![]() $ {\sum}_{i,j}\tilde{\alpha}\left({t}_{ji}\right)=0\hskip0.35em \mathrm{and}\hskip0.35em {\sum}_{i,j}f\left({z}_j\left({t}_{ji}\right)\right)=0 $

, respectively. Furthermore, it is worth noting that measurement points

$ {\sum}_{i,j}\tilde{\alpha}\left({t}_{ji}\right)=0\hskip0.35em \mathrm{and}\hskip0.35em {\sum}_{i,j}f\left({z}_j\left({t}_{ji}\right)\right)=0 $

, respectively. Furthermore, it is worth noting that measurement points

![]() $ {t}_{ji} $

neither need to be the same for each day nor on a regular grid. As a consequence, missing values in the output profiles or the covariate curves are allowed.

$ {t}_{ji} $

neither need to be the same for each day nor on a regular grid. As a consequence, missing values in the output profiles or the covariate curves are allowed.

2.2.2. Estimation of eigenfunctions

In Section 2.2.1, we assumed that the eigenfunctions

![]() $ {\phi}_r $

,

$ {\phi}_r $

,

![]() $ r=1,\dots, m $

, are known. In practice, those functions need to be estimated from the training data in some way. To do so, we first fit model (1), or a modification from below, with a working independence assumption concerning

$ r=1,\dots, m $

, are known. In practice, those functions need to be estimated from the training data in some way. To do so, we first fit model (1), or a modification from below, with a working independence assumption concerning

![]() $ {E}_j(t) $

(Scheipl et al., Reference Scheipl, Staicu and Greven2015). Specifically, this means that

$ {E}_j(t) $

(Scheipl et al., Reference Scheipl, Staicu and Greven2015). Specifically, this means that

![]() $ {E}_j(t) $

from (1) is white noise and

$ {E}_j(t) $

from (1) is white noise and

![]() $ {w}_j(t) $

from (2) is omitted; compare, e.g., Ivanescu et al. (Reference Ivanescu, Staicu, Scheipl and Greven2015). Then, we use the resulting estimates of the error process for FPCA, plug in the estimated eigenfunctions

$ {w}_j(t) $

from (2) is omitted; compare, e.g., Ivanescu et al. (Reference Ivanescu, Staicu, Scheipl and Greven2015). Then, we use the resulting estimates of the error process for FPCA, plug in the estimated eigenfunctions

![]() $ {\hat{\phi}}_r $

, and fit the final model as discussed above. Such a two-step approach has worked well in the past (Gertheiss et al., Reference Gertheiss, Goldsmith and Staicu2017). For FPCA, we use an approach based on Yao et al. (Reference Yao, Müller and Wang2005) that accommodates functions with additional white noise errors and thus works relatively generally (Gertheiss et al., Reference Gertheiss, Rügamer, Liew and Greven2024). The idea is to incorporate smoothing into estimating the covariance in (4). The estimation is based on the cross-products of observed points within error curves,

$ {\hat{\phi}}_r $

, and fit the final model as discussed above. Such a two-step approach has worked well in the past (Gertheiss et al., Reference Gertheiss, Goldsmith and Staicu2017). For FPCA, we use an approach based on Yao et al. (Reference Yao, Müller and Wang2005) that accommodates functions with additional white noise errors and thus works relatively generally (Gertheiss et al., Reference Gertheiss, Rügamer, Liew and Greven2024). The idea is to incorporate smoothing into estimating the covariance in (4). The estimation is based on the cross-products of observed points within error curves,

![]() $ {E}_j\left({t}_{ji}\right){E}_j\left({t}_{j{i}^{\prime }}\right) $

, which are rough estimates of

$ {E}_j\left({t}_{ji}\right){E}_j\left({t}_{j{i}^{\prime }}\right) $

, which are rough estimates of

![]() $ Cov\left(E\left({t}_{ji}\right),E\left({t}_{j{i}^{\prime }}\right)\right) $

. All cross-products are pooled, and a smoothing method of choice is used for bivariate smoothing. Yao et al. (Reference Yao, Müller and Wang2005) used local polynomial smoothing, while the refund R package (Goldsmith et al., Reference Goldsmith, Scheipl, Huang, Wrobel, Di, Gellar, Harezlak, McLean, Swihart, Xiao, Crainiceanu and Reiss2022) used here employs penalized splines. If an additional white noise error is assumed (as we do here), the diagonal cross-products approximate the variance of the structural component plus the error variance. The diagonal is thus left out for smoothing. Once the smooth covariance is available, the orthogonal decomposition (4) is done numerically on a fine grid using the usual matrix eigendecomposition.

$ Cov\left(E\left({t}_{ji}\right),E\left({t}_{j{i}^{\prime }}\right)\right) $

. All cross-products are pooled, and a smoothing method of choice is used for bivariate smoothing. Yao et al. (Reference Yao, Müller and Wang2005) used local polynomial smoothing, while the refund R package (Goldsmith et al., Reference Goldsmith, Scheipl, Huang, Wrobel, Di, Gellar, Harezlak, McLean, Swihart, Xiao, Crainiceanu and Reiss2022) used here employs penalized splines. If an additional white noise error is assumed (as we do here), the diagonal cross-products approximate the variance of the structural component plus the error variance. The diagonal is thus left out for smoothing. Once the smooth covariance is available, the orthogonal decomposition (4) is done numerically on a fine grid using the usual matrix eigendecomposition.

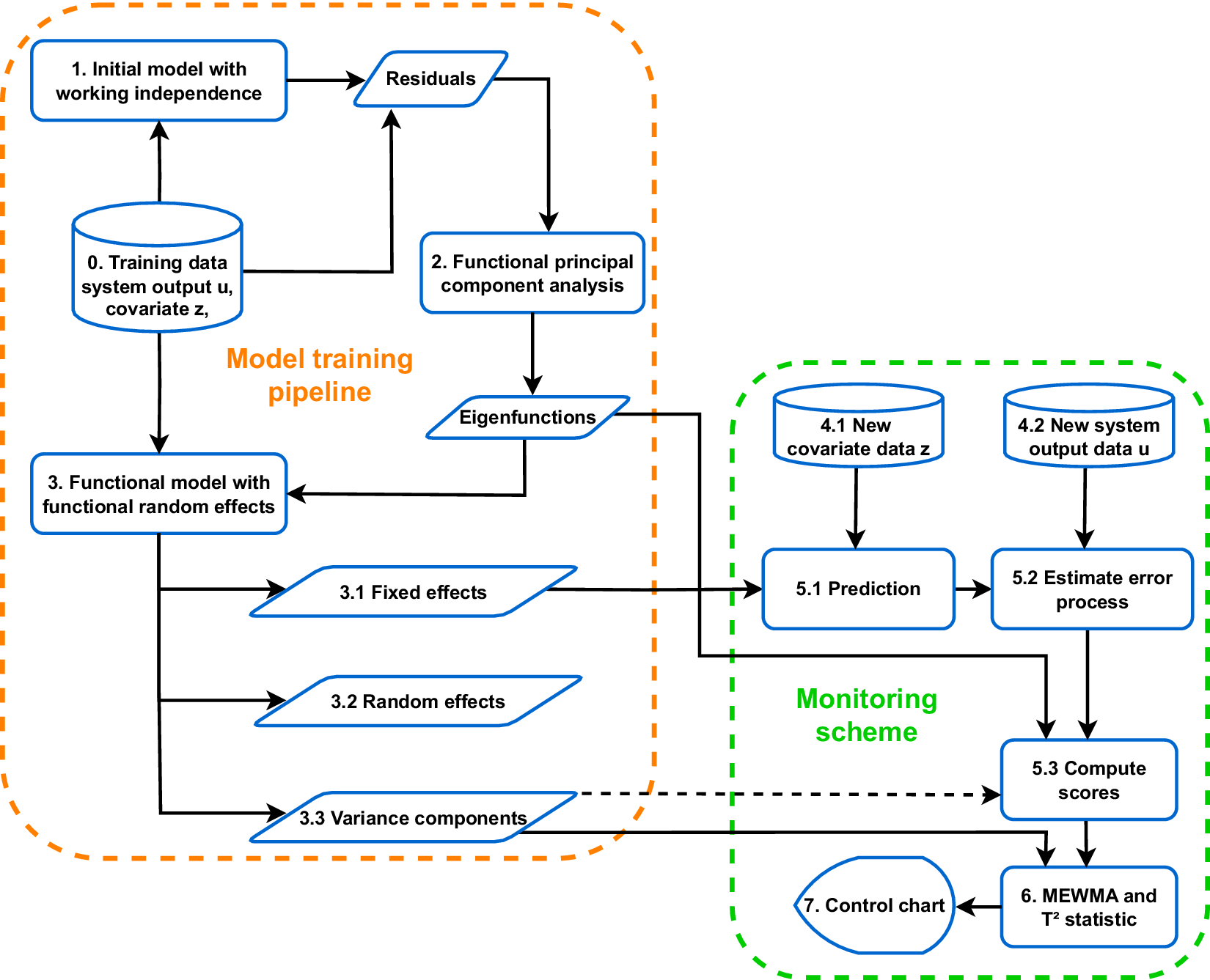

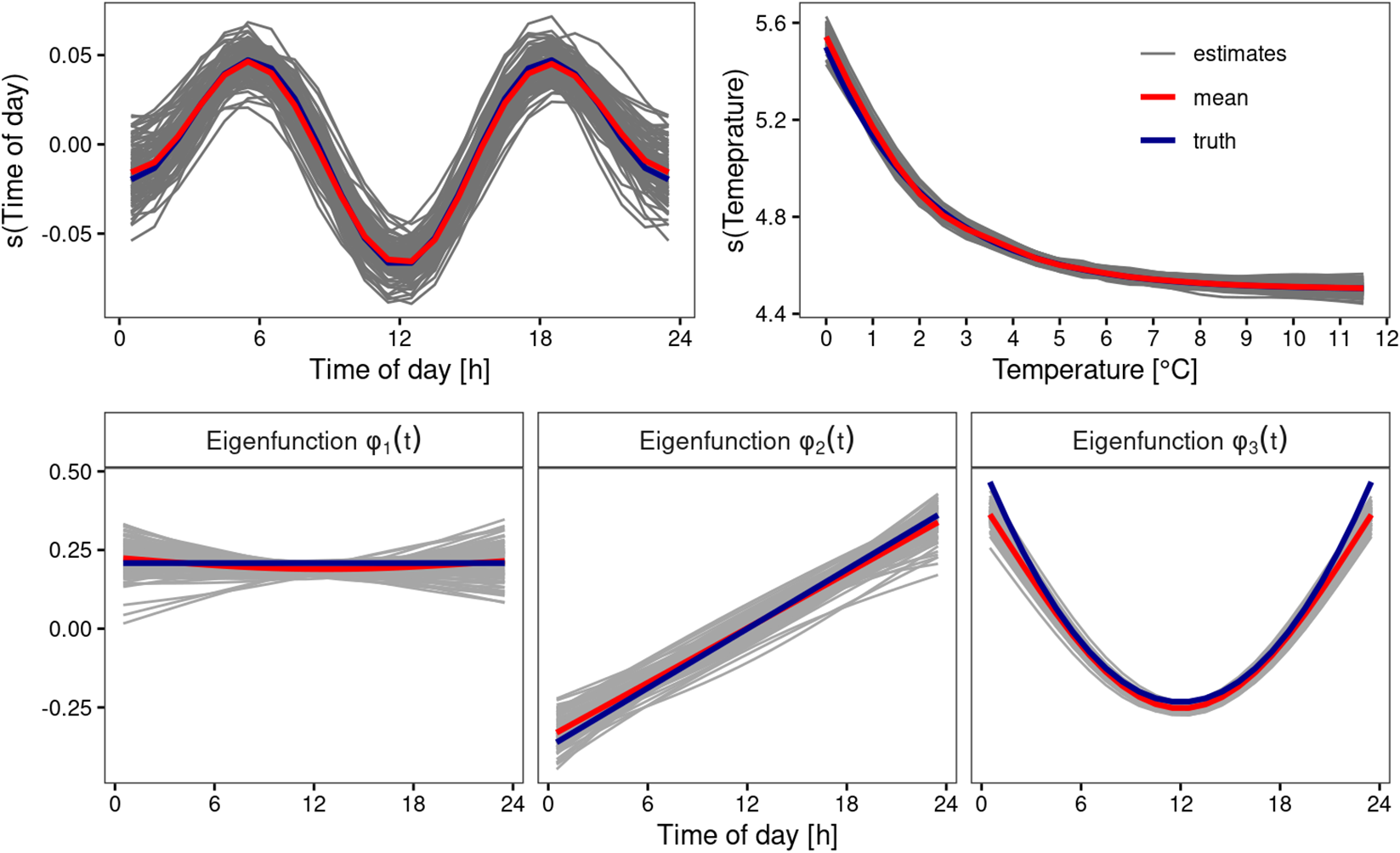

The entire model training workflow is summarized in the orange part of Figure 2. We first use the Phase-I data (step 0) to fit the initial model with working independence in step 1. On the resulting residuals, the “observed” error process, we carry out FPCA to obtain estimates of the eigenfunctions (step 2). For choosing the number of components, we use the usual threshold of 95% or 99% of the variance explained. Then, the eigenfunctions are used to train the final model in step 3. From this model, we obtain estimates of the fixed and random effects, the variance components, and the residuals. Particularly, the fixed effects, the eigenfunctions, and the variance components will be needed to estimate Phase-II component scores and implement a monitoring scheme as described below.

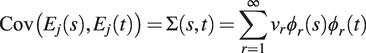

Figure 2. Visual summary of the CAFDA-SHM framework.

2.2.3. Estimation of phase-II scores

Once the mixed model for function-on-function regression has been trained, it can be used to monitor future system outputs if the covariates in the trained model are available as well. The corresponding data is denoted as “new covariate data

![]() $ z $

” and “new system output data

$ z $

” and “new system output data

![]() $ u $

” in Figure 2. An essential input for the monitoring scheme described in the next subsection are the principal component scores

$ u $

” in Figure 2. An essential input for the monitoring scheme described in the next subsection are the principal component scores

![]() $ {\xi}_{1,g},\dots, {\xi}_{m,g} $

for a new day

$ {\xi}_{1,g},\dots, {\xi}_{m,g} $

for a new day

![]() $ g $

in Phase II. To estimate those scores from the new data, we first use the fixed effects from the Phase-I model,

$ g $

in Phase II. To estimate those scores from the new data, we first use the fixed effects from the Phase-I model,

![]() $ \hat{\alpha}\left({t}_{gi}\right) $

and

$ \hat{\alpha}\left({t}_{gi}\right) $

and

![]() $ \hat{f}\left({z}_g\left({t}_{gi}\right)\right) $

from (1), to obtain a prediction for the system outputs

$ \hat{f}\left({z}_g\left({t}_{gi}\right)\right) $

from (1), to obtain a prediction for the system outputs

![]() $ {u}_g\left({t}_{gi}\right) $

. Here,

$ {u}_g\left({t}_{gi}\right) $

. Here,

![]() $ {t}_{gi} $

,

$ {t}_{gi} $

,

![]() $ i=1,\dots, {N}_g $

, denote the time instances of the covariate

$ i=1,\dots, {N}_g $

, denote the time instances of the covariate

![]() $ {z}_g\left({t}_{gi}\right) $

available at day

$ {z}_g\left({t}_{gi}\right) $

available at day

![]() $ g $

. Then, those predictions are subtracted from the actually observed

$ g $

. Then, those predictions are subtracted from the actually observed

![]() $ {u}_g\left({t}_{gi}\right) $

to obtain estimated measurements

$ {u}_g\left({t}_{gi}\right) $

to obtain estimated measurements

![]() $ {\hat{E}}_g\left({t}_{gi}\right) $

of the error process. If those time points are sufficiently dense over the course of day

$ {\hat{E}}_g\left({t}_{gi}\right) $

of the error process. If those time points are sufficiently dense over the course of day

![]() $ g $

, which is typically the case if day

$ g $

, which is typically the case if day

![]() $ g $

is (nearly) over, the scores can be estimated through numerical integration such that

$ g $

is (nearly) over, the scores can be estimated through numerical integration such that

However, sometimes there is a substantial amount of missing values in the

![]() $ u $

or

$ u $

or

![]() $ z $

data, e.g., due to technical problems, or — more importantly — the score estimates have to be available before the new day is over. In the latter case, we may say that all the data points after some time point are “missing.” In both situations, the interpretation as a mixed model is helpful, as this also allows for predicting the random effects for a reduced set of measurement points

$ z $

data, e.g., due to technical problems, or — more importantly — the score estimates have to be available before the new day is over. In the latter case, we may say that all the data points after some time point are “missing.” In both situations, the interpretation as a mixed model is helpful, as this also allows for predicting the random effects for a reduced set of measurement points

![]() $ {t}_{gi} $

.

$ {t}_{gi} $

.

For that purpose, let

![]() $ {\boldsymbol{\phi}}_{gr}={({\phi}_r({t}_{g1}),\dots, {\phi}_r({t}_{g{N}_g}))}^{\mathrm{T}} $

be the

$ {\boldsymbol{\phi}}_{gr}={({\phi}_r({t}_{g1}),\dots, {\phi}_r({t}_{g{N}_g}))}^{\mathrm{T}} $

be the

![]() $ r $

th eigenfunction evaluated at time points

$ r $

th eigenfunction evaluated at time points

![]() $ {t}_{gi} $

,

$ {t}_{gi} $

,

![]() $ i=1,\dots, {N}_g $

,

$ i=1,\dots, {N}_g $

,

![]() $ r=1,\dots, m $

, and

$ r=1,\dots, m $

, and

![]() $ {\Sigma}_{{\mathbf{E}}_g} $

the covariance matrix of the error vector

$ {\Sigma}_{{\mathbf{E}}_g} $

the covariance matrix of the error vector

![]() $ {\mathbf{E}}_g={\left({E}_g\left({t}_{g1}\right),\dots, {E}_g\left({t}_{gN_g}\right)\right)}^{\mathrm{T}} $

. Then, assuming a Gaussian distribution, the conditional expectation of the score

$ {\mathbf{E}}_g={\left({E}_g\left({t}_{g1}\right),\dots, {E}_g\left({t}_{gN_g}\right)\right)}^{\mathrm{T}} $

. Then, assuming a Gaussian distribution, the conditional expectation of the score

![]() $ {\xi}_{r,g} $

given

$ {\xi}_{r,g} $

given

![]() $ {\mathbf{E}}_g $

is

$ {\mathbf{E}}_g $

is

![]() $ \unicode{x1D53C}\left({\xi}_{r,g}|{\mathbf{E}}_g\right)={\nu}_r{\boldsymbol{\phi}}_{gr}^{\mathrm{T}}{\Sigma}_{{\mathbf{E}}_g}^{-1}{\mathbf{E}}_g,r=1,\dots, m $

(Yao et al., Reference Yao, Müller and Wang2005). Due to (2) and (5), the matrix

$ \unicode{x1D53C}\left({\xi}_{r,g}|{\mathbf{E}}_g\right)={\nu}_r{\boldsymbol{\phi}}_{gr}^{\mathrm{T}}{\Sigma}_{{\mathbf{E}}_g}^{-1}{\mathbf{E}}_g,r=1,\dots, m $

(Yao et al., Reference Yao, Müller and Wang2005). Due to (2) and (5), the matrix

![]() $ {\Sigma}_{{\mathbf{E}}_g} $

can be estimated as

$ {\Sigma}_{{\mathbf{E}}_g} $

can be estimated as

![]() $ {\hat{\Sigma}}_{{\mathbf{E}}_g}={\hat{\boldsymbol{\Phi}}}_g\hskip0.1em \mathrm{diag}\hskip0.1em ({\hat{\nu}}_1,\dots, {\hat{\nu}}_m){\hat{\boldsymbol{\Phi}}}_g^{\mathrm{T}}+{\hat{\sigma}}^2{\mathbf{I}}_{N_g} $

, with

$ {\hat{\Sigma}}_{{\mathbf{E}}_g}={\hat{\boldsymbol{\Phi}}}_g\hskip0.1em \mathrm{diag}\hskip0.1em ({\hat{\nu}}_1,\dots, {\hat{\nu}}_m){\hat{\boldsymbol{\Phi}}}_g^{\mathrm{T}}+{\hat{\sigma}}^2{\mathbf{I}}_{N_g} $

, with

![]() $ {\hat{\boldsymbol{\Phi}}}_g=({\hat{\boldsymbol{\phi}}}_{g1}|\dots |{\hat{\boldsymbol{\phi}}}_{gm}) $

. After plugging in the estimates

$ {\hat{\boldsymbol{\Phi}}}_g=({\hat{\boldsymbol{\phi}}}_{g1}|\dots |{\hat{\boldsymbol{\phi}}}_{gm}) $

. After plugging in the estimates

![]() $ {\hat{\sigma}}^2 $

,

$ {\hat{\sigma}}^2 $

,

![]() $ {\hat{\nu}}_r $

,

$ {\hat{\nu}}_r $

,

![]() $ {\hat{\phi}}_r $

,

$ {\hat{\phi}}_r $

,

![]() $ r=1,\dots, m $

, from the model training phase and

$ r=1,\dots, m $

, from the model training phase and

![]() $ {\hat{\mathbf{E}}}_g={\left({\hat{E}}_g\left({t}_{g1}\right),\dots, {\hat{E}}_g\left({t}_{gN_g}\right)\right)}^{\mathrm{T}} $

from above, we obtain the estimated scores

$ {\hat{\mathbf{E}}}_g={\left({\hat{E}}_g\left({t}_{g1}\right),\dots, {\hat{E}}_g\left({t}_{gN_g}\right)\right)}^{\mathrm{T}} $

from above, we obtain the estimated scores

These scores can then be used as input to a control chart. The workflow of estimating Phase-II scores is also summarized in Figure 2 (steps 4.1–5.3). The dashed arrow towards the scores (5.3 in Figure 2) indicates that variance components

![]() $ {\hat{\nu}}_1,\dots, {\hat{\nu}}_m $

and

$ {\hat{\nu}}_1,\dots, {\hat{\nu}}_m $

and

![]() $ {\hat{\sigma}}^2 $

are needed if using (10) but not for (9).

$ {\hat{\sigma}}^2 $

are needed if using (10) but not for (9).

2.3. Control charts

After accounting for environmental influences through appropriate modeling, the system outputs can be monitored by applying a control chart. A multivariate Hotelling control chart is often used to quickly detect a shift in the structural condition (Deraemaeker et al., Reference Deraemaeker, Reynders, De Roeck and Kullaa2008; Magalhães et al., Reference Magalhães, Cunha and Caetano2012; Comanducci et al., Reference Comanducci, Magalhães, Ubertini and Cunha2016). Here, we employ a memory-type control chart, the Multivariate Exponentially Weighted Moving Average (MEWMA) introduced by Lowry et al. (Reference Lowry, Woodall, Champ and Rigdon1992) to the Phase-II scores

![]() $ {\xi}_{1,g},\dots, {\xi}_{m,g} $

,

$ {\xi}_{1,g},\dots, {\xi}_{m,g} $

,

![]() $ g=1,2,\dots $

, from Section 2.2.3. The MEWMA contains the Hotelling chart as a special case. The MEWMA chart assumes serially independent, normally distributed vectors

$ g=1,2,\dots $

, from Section 2.2.3. The MEWMA contains the Hotelling chart as a special case. The MEWMA chart assumes serially independent, normally distributed vectors

![]() $ {\boldsymbol{\xi}}_1,{\boldsymbol{\xi}}_2,\dots $

of dimension

$ {\boldsymbol{\xi}}_1,{\boldsymbol{\xi}}_2,\dots $

of dimension

![]() $ m $

with

$ m $

with

![]() $ {\boldsymbol{\xi}}_g\sim \mathcal{N}(\boldsymbol{\mu}, \boldsymbol{\Lambda} ) $

. We follow Knoth (Reference Knoth2017) and define a mean vector

$ {\boldsymbol{\xi}}_g\sim \mathcal{N}(\boldsymbol{\mu}, \boldsymbol{\Lambda} ) $

. We follow Knoth (Reference Knoth2017) and define a mean vector

![]() $ \boldsymbol{\mu} $

that follows a change point model

$ \boldsymbol{\mu} $

that follows a change point model

![]() $ \boldsymbol{\mu} ={\boldsymbol{\mu}}_0 $

for

$ \boldsymbol{\mu} ={\boldsymbol{\mu}}_0 $

for

![]() $ g<\tau $

and

$ g<\tau $

and

![]() $ \boldsymbol{\mu} ={\boldsymbol{\mu}}_1 $

for

$ \boldsymbol{\mu} ={\boldsymbol{\mu}}_1 $

for

![]() $ g\ge \tau $

for an unknown time point

$ g\ge \tau $

for an unknown time point

![]() $ \tau $

and by definition

$ \tau $

and by definition

![]() $ {\boldsymbol{\mu}}_0=\mathbf{0} $

, see Section 2.1. For in-control data, the scores

$ {\boldsymbol{\mu}}_0=\mathbf{0} $

, see Section 2.1. For in-control data, the scores

![]() $ {\xi}_{1,g},\dots, {\xi}_{m,g} $

are assumed to be uncorrelated with variances

$ {\xi}_{1,g},\dots, {\xi}_{m,g} $

are assumed to be uncorrelated with variances

![]() $ {\nu}_1,\dots, {\nu}_m $

, see also Section 2.1. Hence, the covariance matrix

$ {\nu}_1,\dots, {\nu}_m $

, see also Section 2.1. Hence, the covariance matrix

![]() $ \boldsymbol{\Lambda} $

is diagonal with

$ \boldsymbol{\Lambda} $

is diagonal with

![]() $ \boldsymbol{\Lambda} =\mathrm{diag}({\nu}_1,\dots, {\nu}_m) $

. Then, we apply the following smoothing procedure to compute the MEWMA statistic (step 6 in Figure 2)

$ \boldsymbol{\Lambda} =\mathrm{diag}({\nu}_1,\dots, {\nu}_m) $

. Then, we apply the following smoothing procedure to compute the MEWMA statistic (step 6 in Figure 2)

with

![]() $ g=1,2,\dots $

, smoothing constant

$ g=1,2,\dots $

, smoothing constant

![]() $ 0<\lambda \le 1 $

, and the estimated scores

$ 0<\lambda \le 1 $

, and the estimated scores

![]() $ {\hat{\xi}}_{r,g} $

from (9) or (10) collected in

$ {\hat{\xi}}_{r,g} $

from (9) or (10) collected in

![]() $ {\hat{\boldsymbol{\xi}}}_g $

such that

$ {\hat{\boldsymbol{\xi}}}_g $

such that

![]() $ {\hat{\boldsymbol{\xi}}}_g={\left({\hat{\xi}}_{1,g},\dots, {\hat{\xi}}_{m,g}\right)}^{\mathrm{T}} $

. The smoothing parameter

$ {\hat{\boldsymbol{\xi}}}_g={\left({\hat{\xi}}_{1,g},\dots, {\hat{\xi}}_{m,g}\right)}^{\mathrm{T}} $

. The smoothing parameter

![]() $ \lambda $

controls the sensitivity of the shift to be detected. Smaller values of

$ \lambda $

controls the sensitivity of the shift to be detected. Smaller values of

![]() $ \lambda $

such as

$ \lambda $

such as

![]() $ \lambda \in \left\{\mathrm{0.1,0.2,0.3}\right\} $

, are usually selected to detect smaller shifts (Hunter, Reference Hunter1986), while

$ \lambda \in \left\{\mathrm{0.1,0.2,0.3}\right\} $

, are usually selected to detect smaller shifts (Hunter, Reference Hunter1986), while

![]() $ \lambda =1 $

results in the Hotelling chart. In this study, we use

$ \lambda =1 $

results in the Hotelling chart. In this study, we use

![]() $ \lambda =0.3 $

. The control statistic is the Mahalanobis distance

$ \lambda =0.3 $

. The control statistic is the Mahalanobis distance

with an asymptotic covariance matrix of

![]() $ {\boldsymbol{\omega}}_g $

,

$ {\boldsymbol{\omega}}_g $

,

![]() $ {\boldsymbol{\Lambda}}_{\boldsymbol{\omega}}={\lim}_{g\to \mathrm{\infty}}\mathrm{C}\mathrm{o}\mathrm{v}({\boldsymbol{\omega}}_g)=\{\frac{\lambda }{2-\lambda}\}\boldsymbol{\Lambda} $

. Note, if the scores in

$ {\boldsymbol{\Lambda}}_{\boldsymbol{\omega}}={\lim}_{g\to \mathrm{\infty}}\mathrm{C}\mathrm{o}\mathrm{v}({\boldsymbol{\omega}}_g)=\{\frac{\lambda }{2-\lambda}\}\boldsymbol{\Lambda} $

. Note, if the scores in

![]() $ {\hat{\boldsymbol{\xi}}}_g $

are estimated on a reduced set of measurement points (e.g., due to missing values), the model-based estimates

$ {\hat{\boldsymbol{\xi}}}_g $

are estimated on a reduced set of measurement points (e.g., due to missing values), the model-based estimates

![]() $ {\hat{\nu}}_1,\dots, {\hat{\nu}}_m $

(step 3.3 in Figure 2) may not give the correct variances of the scores. In a case like this, it is recommended to run steps 5.1–5.3 from Figure 2 on the training data but with the reduced set of measurement points to obtain adjusted estimates of the variances (through the empirical versions), which can then be used in

$ {\hat{\nu}}_1,\dots, {\hat{\nu}}_m $

(step 3.3 in Figure 2) may not give the correct variances of the scores. In a case like this, it is recommended to run steps 5.1–5.3 from Figure 2 on the training data but with the reduced set of measurement points to obtain adjusted estimates of the variances (through the empirical versions), which can then be used in

![]() $ \boldsymbol{\Lambda} $

.

$ \boldsymbol{\Lambda} $

.

The MEWMA chart issues an alarm if

![]() $ {T}_i^2>{h}_4 $

, i.e., the control statistic is above the threshold value

$ {T}_i^2>{h}_4 $

, i.e., the control statistic is above the threshold value

![]() $ {h}_4 $

. The stopping time

$ {h}_4 $

. The stopping time

![]() $ N=\mathrm{min}\{g\ge 1:{T}_g^2>{h}_4\} $

, also known as average run length (ARL), is often used to measure the control chart’s performance. It is defined as the average number of observations until the chart signals an alarm. If the process is in-control, the ARL (ARL

$ N=\mathrm{min}\{g\ge 1:{T}_g^2>{h}_4\} $

, also known as average run length (ARL), is often used to measure the control chart’s performance. It is defined as the average number of observations until the chart signals an alarm. If the process is in-control, the ARL (ARL

![]() $ {}_0 $

) should be high to avoid false alarms. If there is a change in the underlying process, the ARL (ARL

$ {}_0 $

) should be high to avoid false alarms. If there is a change in the underlying process, the ARL (ARL

![]() $ {}_1 $

) should be low to detect changes quickly. To determine the threshold value, the ARL must be calculated when the process is in-control, usually applying a grid search or a secant rule. This ARL

$ {}_1 $

) should be low to detect changes quickly. To determine the threshold value, the ARL must be calculated when the process is in-control, usually applying a grid search or a secant rule. This ARL

![]() $ {}_0 $

can be calculated as described in Knoth (Reference Knoth2017) and is implemented in R package spc (Knoth, Reference Knoth2022). An evaluation of our CAFDA framework on artificial data is demonstrated in Section 3.

$ {}_0 $

can be calculated as described in Knoth (Reference Knoth2017) and is implemented in R package spc (Knoth, Reference Knoth2022). An evaluation of our CAFDA framework on artificial data is demonstrated in Section 3.

2.4. Modified and extended models

Our basic model (1) is a particular case of a functional additive mixed model, as introduced and discussed by Scheipl et al. (Reference Scheipl, Staicu and Greven2015), Scheipl et al. (Reference Scheipl, Gertheiss and Greven2016), and Greven and Scheipl (Reference Greven and Scheipl2017), which can be simplified, modified, or extended in various ways depending on the available data and prior knowledge. Some alternative specifications that may be an option with the data available in our case studies in Section 4 are recapped as follows.

-

• Standard linear models: If we have reason to believe that potential daily patterns can be explained entirely through variations in

$ z $

, we can replace

$ z $

, we can replace

$ \alpha (t) $

with a constant

$ \alpha (t) $

with a constant

$ {\alpha}_0 $

. Similarly, if it is believed that the association of the confounder

$ {\alpha}_0 $

. Similarly, if it is believed that the association of the confounder

$ z $

and system output

$ z $

and system output

$ u $

is linear, we can replace

$ u $

is linear, we can replace

$ f\left({z}_j(t)\right) $

in (1) with

$ f\left({z}_j(t)\right) $

in (1) with

$ \beta {z}_j(t) $

. So, the standard linear regression model is a special case in our broader framework. Typically, if fitting the more flexible model (1) to the data, a nearly constant

$ \beta {z}_j(t) $

. So, the standard linear regression model is a special case in our broader framework. Typically, if fitting the more flexible model (1) to the data, a nearly constant

$ \alpha $

and a close to linear

$ \alpha $

and a close to linear

$ f $

will indicate that the simpler model is sufficient.

$ f $

will indicate that the simpler model is sufficient. -

• Unmeasured covariates: If covariate information is unavailable,

$ f\left({z}_j(t)\right) $

can be omitted, and our approach turns into an output-only method using FPCA. In that case, replacing

$ f\left({z}_j(t)\right) $

can be omitted, and our approach turns into an output-only method using FPCA. In that case, replacing

$ \alpha (t) $

with

$ \alpha (t) $

with

$ \alpha \left(t,{d}_j\right) $

is highly recommended. The reason for this is that variables such as temperature typically vary over the day and year, imposing a specific yearly pattern on

$ \alpha \left(t,{d}_j\right) $

is highly recommended. The reason for this is that variables such as temperature typically vary over the day and year, imposing a specific yearly pattern on

$ u $

as well. The latter can be modeled through

$ u $

as well. The latter can be modeled through

$ \alpha \left(t,{d}_j\right) $

.

$ \alpha \left(t,{d}_j\right) $

. -

• Multiple covariates: If

$ p $

covariates

$ p $

covariates

$ {z}_{j1}(t),\dots, {z}_{jp}(t) $

, e.g., temperature, relative humidity, wind speed, solar radiation, have an effect, or if several temperature sensors are used to account for the local temperatures at different spatial locations in the construction material, their effects can be combined additively in (1) by replacing the term

$ {z}_{j1}(t),\dots, {z}_{jp}(t) $

, e.g., temperature, relative humidity, wind speed, solar radiation, have an effect, or if several temperature sensors are used to account for the local temperatures at different spatial locations in the construction material, their effects can be combined additively in (1) by replacing the term

$ f\left({z}_j(t)\right) $

with

$ f\left({z}_j(t)\right) $

with

$ {\sum}_{k=1}^p{f}_k\left({z}_{jk}(t)\right) $

.

$ {\sum}_{k=1}^p{f}_k\left({z}_{jk}(t)\right) $

. -

• Covariate interactions: If

$ {z}_{jk}(t),{z}_{jl}(t) $

are believed to have an interacting effect on the system output, e.g., temperature and relative humidity, model (1) can also contain (two-way) interactions in terms of

$ {z}_{jk}(t),{z}_{jl}(t) $

are believed to have an interacting effect on the system output, e.g., temperature and relative humidity, model (1) can also contain (two-way) interactions in terms of

$ {f}_{kl}\left({z}_{jk}(t),{z}_{jl}(t)\right) $

. In theory, interactions of higher order are possible as well. However, it can be challenging to estimate the corresponding parameter functions due to the computing resources and amount of data needed to learn those interactions reliably, compare Section 2.2.

$ {f}_{kl}\left({z}_{jk}(t),{z}_{jl}(t)\right) $

. In theory, interactions of higher order are possible as well. However, it can be challenging to estimate the corresponding parameter functions due to the computing resources and amount of data needed to learn those interactions reliably, compare Section 2.2.

While all those models are so-called concurrent models, which are useful to adjust for the temperature of the structure itself, the framework of functional additive mixed models is flexible enough to cope with delayed effects (as, e.g., plausible for ambient temperature) using so-called historical functional effects (Scheipl et al., Reference Scheipl, Gertheiss and Greven2016). Also, the very popular linear function-on-function regression approach (Centofanti et al., Reference Centofanti, Lepore, Menafoglio, Palumbo and Vantini2021) is included as a special case. An overview of various modeling options beyond those discussed above is provided in Appendix A. The decision about the model to use should be based on subject matter knowledge, the available data, the goodness-of-fit, and the parameter estimates. As pointed out, concurrent models are particularly useful if the structure’s temperature is measured. If more than one (potential) confounder is measured, the model should be able to include multiple covariates. To distinguish between additive effects and interactions, the model fits should be compared, and the estimated (two-dimensional) surface

![]() $ {f}_{kl} $

should be inspected visually. The less complex (additive) model should be chosen if the two model fits are similar and the surface looks like two additive effects; see also Section 4.1. Sometimes, it may even be known from prior studies that a particular confounder effect is (almost) linear. In a case like this, however, choosing a more complex model like (1) is usually fine, too, because due to the penalty, the estimated

$ {f}_{kl} $

should be inspected visually. The less complex (additive) model should be chosen if the two model fits are similar and the surface looks like two additive effects; see also Section 4.1. Sometimes, it may even be known from prior studies that a particular confounder effect is (almost) linear. In a case like this, however, choosing a more complex model like (1) is usually fine, too, because due to the penalty, the estimated

![]() $ f $

will typically turn out to be (nearly) linear if the data point in that direction (compare Section 4.2).

$ f $

will typically turn out to be (nearly) linear if the data point in that direction (compare Section 4.2).

The resulting modifications concerning model fitting can be summarized as follows. If model (1) is simplified, parameterization (7) simplifies, as well. For instance, if an output-only approach based entirely on FPCA is used, the part in (7) referring to the regression function

![]() $ f $

vanishes. If the latter is assumed to be linear, only the corresponding

$ f $

vanishes. If the latter is assumed to be linear, only the corresponding

![]() $ \beta $

has to be estimated. If

$ \beta $

has to be estimated. If

![]() $ \beta $

is allowed to change over the course of the day in terms of

$ \beta $

is allowed to change over the course of the day in terms of

![]() $ \beta (t) $

, this function can be expanded in basis functions as it was done with

$ \beta (t) $

, this function can be expanded in basis functions as it was done with

![]() $ f $

in (6) and estimated analogously. If, on the other hand, multiple covariates are given, and model (1) is extended to

$ f $

in (6) and estimated analogously. If, on the other hand, multiple covariates are given, and model (1) is extended to

![]() $ {\sum}_{k=1}^p{f}_k\left({z}_{jk}(t)\right) $

instead of a single

$ {\sum}_{k=1}^p{f}_k\left({z}_{jk}(t)\right) $

instead of a single

![]() $ f\left({z}_j(t)\right) $

, we have to add basis representations

$ f\left({z}_j(t)\right) $

, we have to add basis representations

![]() $ {\sum}_{l_k=1}^{L^{\left({f}_k\right)}}{\gamma}_{l_k}^{\left({f}_k\right)}{b}_{l_k}^{\left({f}_k\right)}\left({z}_{jk}(t)\right) $

for each component

$ {\sum}_{l_k=1}^{L^{\left({f}_k\right)}}{\gamma}_{l_k}^{\left({f}_k\right)}{b}_{l_k}^{\left({f}_k\right)}\left({z}_{jk}(t)\right) $

for each component

![]() $ {f}_k $

,

$ {f}_k $

,

![]() $ k=1,\dots, p $

, in (7). If we want to account for both daily and yearly patterns in a purely additive way, that is,

$ k=1,\dots, p $

, in (7). If we want to account for both daily and yearly patterns in a purely additive way, that is,

![]() $ \alpha \left(t,{d}_j\right)={\alpha}_0+{\tilde{\alpha}}_1(t)+{\tilde{\alpha}}_2\left({d}_j\right) $

, the procedure is completely analogous. However, if

$ \alpha \left(t,{d}_j\right)={\alpha}_0+{\tilde{\alpha}}_1(t)+{\tilde{\alpha}}_2\left({d}_j\right) $

, the procedure is completely analogous. However, if

![]() $ \alpha \left(t,{d}_j\right) $

is supposed to be a surface, or if a two-way interaction, say

$ \alpha \left(t,{d}_j\right) $

is supposed to be a surface, or if a two-way interaction, say

![]() $ f\left({z}_{j1}(t),{z}_{j2}(t)\right) $

in (1), is to be fitted, some minor modifications are necessary. The unknown functions (

$ f\left({z}_{j1}(t),{z}_{j2}(t)\right) $

in (1), is to be fitted, some minor modifications are necessary. The unknown functions (

![]() $ \alpha $

and

$ \alpha $

and

![]() $ f $

, respectively) now have two arguments, meaning that the basis needs to be chosen appropriately, e.g., as a so-called tensor product basis. For instance, if considering

$ f $

, respectively) now have two arguments, meaning that the basis needs to be chosen appropriately, e.g., as a so-called tensor product basis. For instance, if considering

![]() $ f $

, equation (6) turns into

$ f $

, equation (6) turns into

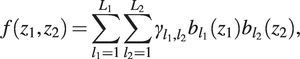

$$ f\left({z}_1,{z}_2\right)=\sum \limits_{l_1=1}^{L_1}\sum \limits_{l_2=1}^{L_2}{\gamma}_{l_1,{l}_2}{b}_{l_1}\left({z}_1\right){b}_{l_2}\left({z}_2\right), $$

$$ f\left({z}_1,{z}_2\right)=\sum \limits_{l_1=1}^{L_1}\sum \limits_{l_2=1}^{L_2}{\gamma}_{l_1,{l}_2}{b}_{l_1}\left({z}_1\right){b}_{l_2}\left({z}_2\right), $$

and smoothing is typically done in both the

![]() $ {z}_1 $

- and

$ {z}_1 $

- and

![]() $ {z}_2 $

-directions. Having said that, the model complexity increases in terms of the number of basis coefficients

$ {z}_2 $

-directions. Having said that, the model complexity increases in terms of the number of basis coefficients

![]() $ {\gamma}_{l_1,{l}_2} $

that need to be fitted. As pointed out earlier, higher-order interactions can also be estimated, but the number of coefficients increases even further. Bivariate (or even higher dimensional) smoothers allowing for terms such as (13) are available in mgcv, and corresponding estimates will be shown in the real-world data evaluations in Section 4. The approach for bivariate

$ {\gamma}_{l_1,{l}_2} $

that need to be fitted. As pointed out earlier, higher-order interactions can also be estimated, but the number of coefficients increases even further. Bivariate (or even higher dimensional) smoothers allowing for terms such as (13) are available in mgcv, and corresponding estimates will be shown in the real-world data evaluations in Section 4. The approach for bivariate

![]() $ \alpha \left(t,{d}_j\right) $

or

$ \alpha \left(t,{d}_j\right) $

or

![]() $ f\left({z}_j(t),t\right) $

cases, where the potentially nonlinear covariate effect is allowed to change over the course of the day, is analogous. In summary, in any of the cases considered here, the final model is linear in the basis coefficients and random effects, and all those quantities can be estimated/predicted through penalized least squares in a mixed model framework.

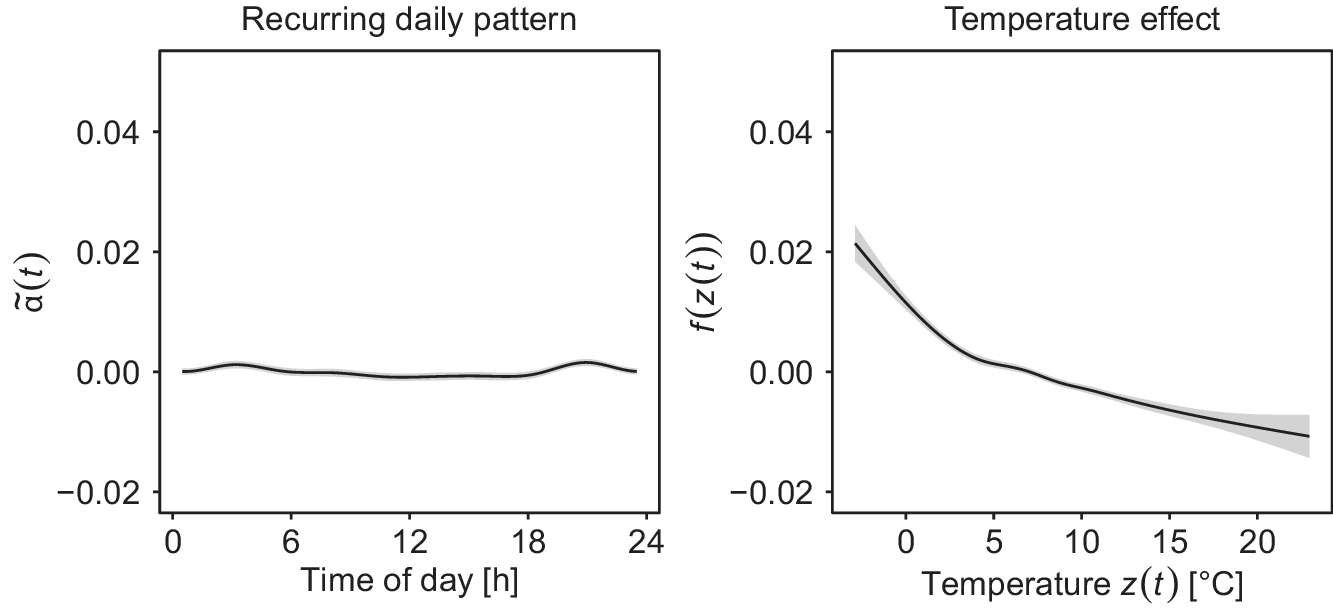

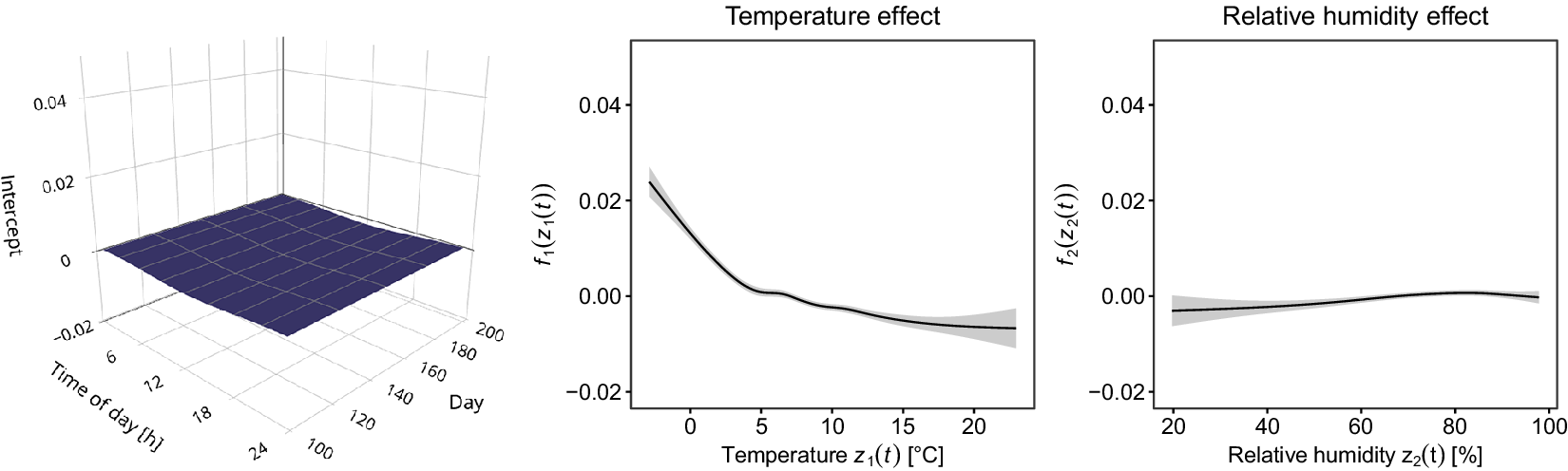

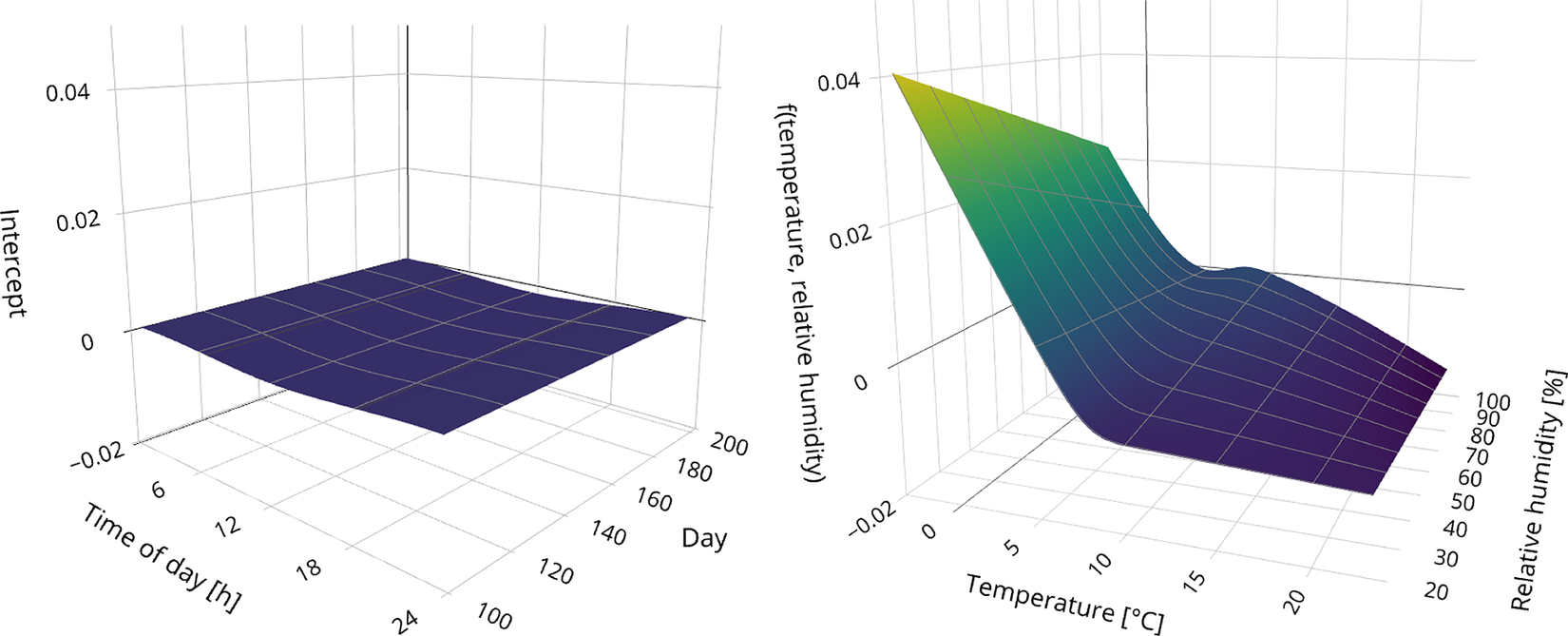

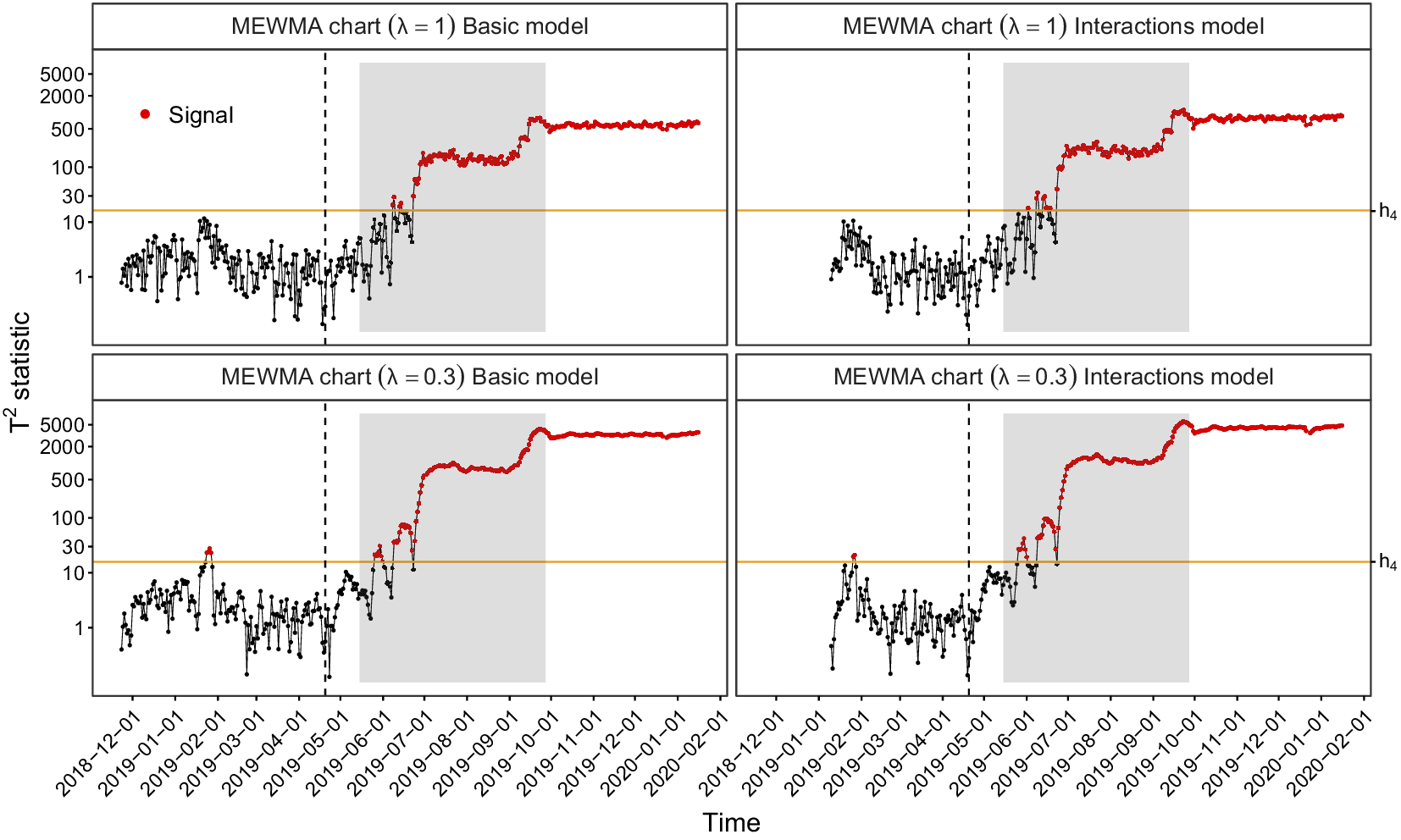

$ f\left({z}_j(t),t\right) $