1. Introduction

Sparse identification of nonlinear dynamics (SINDy) (Brunton, Proctor & Kutz 2016a) is one of the prominent data-driven tools for obtaining governing equations of nonlinear dynamics in an interpretable form. The SINDy algorithm enables us to discover a governing equation from time-discretized data by identifying the dominant terms from a large set of candidate terms that are likely to be involved in the model. Recently, the usefulness of SINDy has been demonstrated in various fields (Champion, Brunton & Kutz 2019b; Hoffmann, Fröhner & Noé 2019; Zhang & Schaeffer 2019; Deng et al. 2020). Here, let us introduce some efforts, especially in the fluid dynamics community. Loiseau, Noack & Brunton (2018b) utilized SINDy to present a general reduced-order modelling (ROM) framework for experimental data: sensor data and particle image velocimetry data. The model was investigated using a transient and post-transient laminar cylinder wake. They reported that the nonlinear full-state dynamics can be modelled with sensor-based dynamics and SINDy-based estimation of the ROM coefficients. SINDy with control inputs (called SINDYc) was also investigated by Brunton, Proctor & Kutz (2016b) using the Lorenz equations. Loiseau & Brunton (2018) combined SINDy and proper orthogonal decomposition (POD) to enforce energy-preserving physical constraints in the regression procedure, towards the development of a new data-driven Galerkin regression framework. For time-varying aerodynamics, Li et al. (2019) identified vortex-induced vibrations on a long-span suspension bridge utilizing the SINDy algorithm extended to parametric partial differential equations (Rudy et al. 2017). As a novel method to perform the order reduction of data and SINDy simultaneously, Champion et al. (2019a) proposed a customized autoencoder (AE) that introduces a SINDy loss into the loss function of deep AE networks. The sparse regression idea has also recently been extended to turbulence closure modelling (Beetham & Capecelatro 2020; Schmelzer, Dwight & Cinnella 2020; Beetham, Fox & Capecelatro 2021; Duraisamy 2021). As reported in these studies, by employing SINDy to predict the temporal evolution of a system, we can obtain ordinary differential equations, which should be helpful in many applications, e.g. control of a system. In this way, the use of SINDy is spreading in the fluid dynamics community.

We here examine the possibility of SINDy-based modelling of low-dimensionalized complex fluid flows presented in figure 1. Following our preliminary tests with the van der Pol oscillator and the Lorenz attractor presented in the Appendices, we apply SINDy with two regression methods, (1) the thresholded least square algorithm (TLSA) and (2) the adaptive least absolute shrinkage and selection operator (Alasso), to a two-dimensional cylinder wake at $Re_D=100$, its transient process, and a wake of two-parallel cylinders as examples of high-dimensional dynamics. In this study, a convolutional neural network-based autoencoder (CNN-AE) is utilized to handle these high-dimensional data with SINDy, whose library matrix is suitable for combinations of low-dimensional variables (Kaiser, Kutz & Brunton 2018). The CNN-AE is employed here to map high-dimensional dynamics into a low-dimensional latent space. SINDy is then utilized to obtain a governing equation of the mapped low-dimensional latent vector. By combining the latent-vector dynamics obtained via SINDy with the CNN decoder, which remaps the low-dimensional latent vector to the original dimension, we can present the temporal evolution of high-dimensional dynamics and avoid the issue of applying SINDy to high-dimensional variables noted above. The scheme of the present ROM is inspired by Hasegawa et al. (2019, 2020a,b), who utilized a long short-term memory instead of SINDy in the present ROM framework. The paper is organized as follows. We provide details of SINDy and CNN-AE in § 2. We present the results for high-dimensional flow examples with the CNN-AE/SINDy ROM in §§ 3.1, 3.2 and 3.3. In § 3.4, we also discuss its applicability to turbulence using the nine-equation turbulent shear flow model, although without considering the CNN-AE. Finally, concluding remarks with an outlook are offered in § 4.

Figure 1. Covered examples of fluid flows in the present study.

2. Methods

2.1. SINDy

Sparse identification of nonlinear dynamics (Brunton et al. 2016a) is performed to identify nonlinear governing equations from time series data in the present study. The temporal evolution of the state $\boldsymbol {x}(t)$ in a typical dynamical system can often be represented in the form of an ordinary differential equation,

\begin{equation} \dot{\boldsymbol{x}}(t) = \boldsymbol{f}(\boldsymbol{x}(t)). \end{equation}

To explain the SINDy algorithm, let $\boldsymbol {x}(t)$ be $(x(t),y(t))$ hereinafter, although SINDy can also handle dynamics with higher dimensions, as will be considered later. First, the temporally discretized data of $\boldsymbol {x}$ are collected to arrange a data matrix $\boldsymbol {X}$,

\begin{equation} \boldsymbol{X}= \left( \begin{array}{c} \boldsymbol{x}^{T}(t_1) \\ \boldsymbol{x}^{T}(t_2) \\ \vdots \\ \boldsymbol{x}^{T}(t_m)\end{array} \right) = \left( \begin{array}{cc} x(t_1) & y(t_1) \\ x(t_2) & y(t_2) \\ \vdots & \vdots \\ x(t_m) & y(t_m) \end{array} \right). \end{equation}

We then collect the time series data of the time-differentiated value $\dot {\boldsymbol x}(t)$ to construct a time-differentiated data matrix $\dot {\boldsymbol {X}}$,

\begin{equation} \dot{\boldsymbol{X}}= \left( \begin{array}{c} \dot{\boldsymbol{x}}^{T}(t_1) \\ \dot{\boldsymbol{x}}^{T}(t_2) \\ \vdots \\ \dot{\boldsymbol{x}}^{T}(t_m) \end{array} \right) = \left( \begin{array}{cc} \dot{x}(t_1) & \dot{y}(t_1) \\ \dot{x}(t_2) & \dot{y}(t_2) \\ \vdots & \vdots \\ \dot{x}(t_m) & \dot{y}(t_m)\end{array} \right). \end{equation}

In the present study, the time-differentiated values are obtained with the second-order central-difference scheme. Note in passing that the results in the present study are not sensitive to the choice of differencing scheme, provided that an appropriate time step is used for the construction of $\dot {\boldsymbol X}$. Then, we prepare a library matrix $\varTheta (\boldsymbol {X})$ including nonlinear terms of $\boldsymbol {X}$,

\begin{equation} \varTheta (\boldsymbol{X}) = \left( \begin{array}{cccccc} | & | & | & | & | & | \\ 1 & \boldsymbol{X} & \boldsymbol{X}^{P_2} & \boldsymbol{X}^{P_3} & \boldsymbol{X}^{P_4} & \boldsymbol{X}^{P_5} \\ | & | & | & | & | & | \end{array} \right), \end{equation}

where $\boldsymbol {X}^{P_i}$ denotes the $i$th-order polynomials constructed from $x$ and $y$. The set of candidate nonlinear terms here includes up to fifth-order terms, although the terms to be included can be set arbitrarily. We finally determine a coefficient matrix $\varXi$,

\begin{equation} \varXi = (\xi_x \quad \xi_y) = \left( \begin{array}{cc} \xi _{(x,\ 1)} & \xi _{(y,\ 1)} \\ \xi _{(x,\ 2)} & \xi _{(y,\ 2)} \\ \vdots & \vdots \\ \xi _{(x,\ l)} & \xi _{(y,\ l)}\end{array} \right), \end{equation}

in the state equation,

\begin{equation} \dot{\boldsymbol{X}} = \varTheta (\boldsymbol{X})\,\varXi, \end{equation}

using an arbitrary regression method, such as TLSA or the least absolute shrinkage and selection operator (Lasso). The subscript $l$ in (2.5) denotes the row index of the coefficient matrix, which corresponds to the column index of the library matrix. Once the coefficient matrix $\varXi$ is obtained, the governing equation is identified as

\begin{equation} \dot{x} = \varTheta (\boldsymbol{x})\,\xi_x, \quad \dot{y} = \varTheta (\boldsymbol{x})\,\xi_y. \end{equation}

In this study, we use the TLSA used in Brunton et al. (2016a) and the Alasso (Zou 2006) to obtain the coefficient matrix $\varXi$, following our preliminary tests. Details can be found in the Appendices.
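The procedure of § 2.1 can be sketched in a few lines of NumPy. The block below is a minimal illustration, not the implementation used in this study: the function names (`central_difference`, `polynomial_library`, `tlsa`), the van der Pol test data, the third-order library and the threshold value are all assumptions chosen for a quick demonstration. It builds $\dot{\boldsymbol{X}}$ with second-order central differences, assembles a polynomial library $\varTheta(\boldsymbol{X})$, and determines $\varXi$ by thresholded least squares in the spirit of Brunton et al. (2016a).

```python
import numpy as np
from itertools import combinations_with_replacement


def central_difference(X, dt):
    """Second-order central differences in time; the two end points are dropped."""
    return (X[2:] - X[:-2]) / (2.0 * dt)


def polynomial_library(X, max_order=5):
    """Library matrix Theta(X): a constant column plus all monomials up to max_order."""
    m, n = X.shape
    columns, names = [np.ones(m)], ["1"]
    for order in range(1, max_order + 1):
        for idx in combinations_with_replacement(range(n), order):
            columns.append(np.prod(X[:, list(idx)], axis=1))
            names.append("*".join(f"x{i}" for i in idx))
    return np.column_stack(columns), names


def tlsa(Theta, dXdt, threshold, n_iter=10):
    """Thresholded least squares: zero small coefficients, then refit the rest."""
    Xi = np.linalg.lstsq(Theta, dXdt, rcond=None)[0]
    for _ in range(n_iter):
        small = np.abs(Xi) < threshold
        Xi[small] = 0.0
        for k in range(dXdt.shape[1]):  # refit each state variable separately
            keep = ~small[:, k]
            if keep.any():
                Xi[keep, k] = np.linalg.lstsq(Theta[:, keep], dXdt[:, k], rcond=None)[0]
    return Xi


def van_der_pol(s, mu=1.0):
    """Test system (assumed for illustration): x' = y, y' = mu (1 - x^2) y - x."""
    x, y = s
    return np.array([y, mu * (1.0 - x**2) * y - x])


# Generate time-discretized data with a classical fourth-order Runge-Kutta scheme.
dt, n_steps = 0.002, 10000
X = np.empty((n_steps, 2))
X[0] = [0.5, 0.0]
for i in range(n_steps - 1):
    s = X[i]
    k1 = van_der_pol(s)
    k2 = van_der_pol(s + 0.5 * dt * k1)
    k3 = van_der_pol(s + 0.5 * dt * k2)
    k4 = van_der_pol(s + dt * k3)
    X[i + 1] = s + dt * (k1 + 2.0 * k2 + 2.0 * k3 + k4) / 6.0

dXdt = central_difference(X, dt)                         # rows match X[1:-1]
Theta, names = polynomial_library(X[1:-1], max_order=3)  # third order keeps the demo small
Xi = tlsa(Theta, dXdt, threshold=0.1)

# Print the identified right-hand sides term by term.
for k, lhs in enumerate(["dx0/dt", "dx1/dt"]):
    terms = [f"{Xi[j, k]:+.3f} {names[j]}" for j in range(len(names)) if Xi[j, k] != 0.0]
    print(lhs, "=", " ".join(terms))
```

Here `x0` and `x1` stand for $x$ and $y$, and the `threshold` argument plays the role of the threshold on the coefficients. Raising it yields a sparser model at the risk of discarding terms that the dynamics actually need.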

2.2. CNN-AE

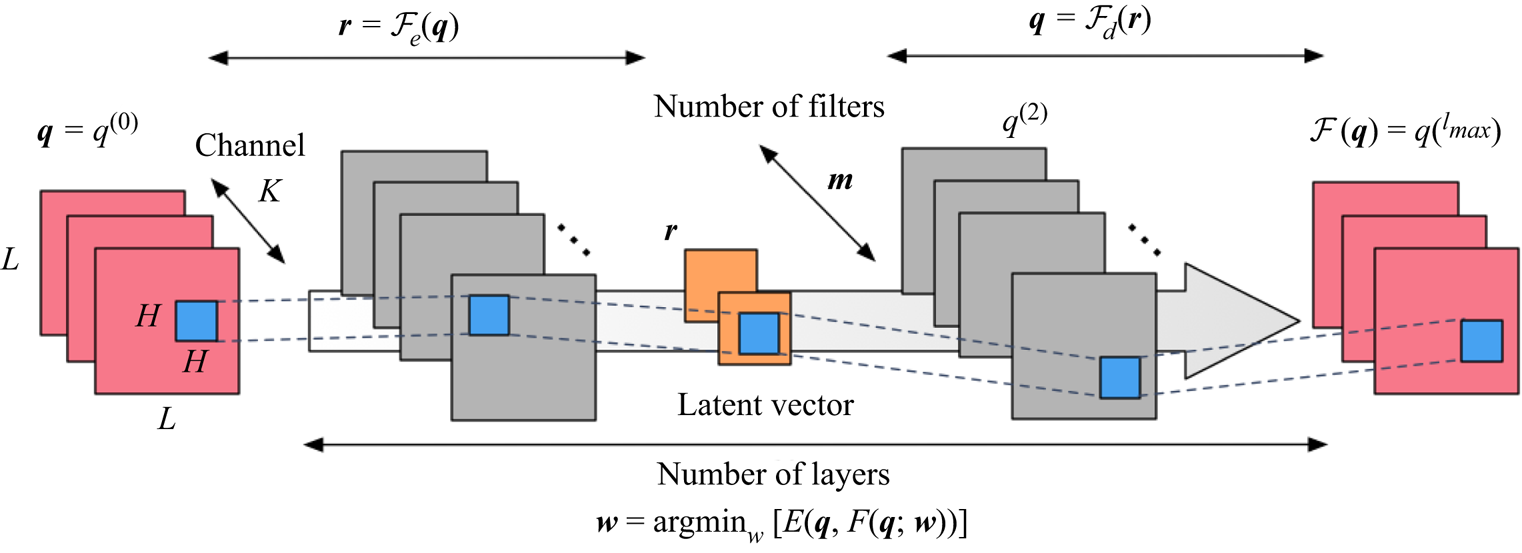

We use a CNN (LeCun et al. 1998) AE (Hinton & Salakhutdinov 2006) for order reduction of high-dimensional data, as shown in figure 2. As an example, we show the CNN-AE with three hidden layers. In the training process, the AE ${\mathcal {F}}$ is trained to output the same data as the input data $\boldsymbol q$ such that ${\boldsymbol q}\approx {{\mathcal {F}}}({\boldsymbol q};{\boldsymbol w})$, where ${\boldsymbol w}$ denotes the weights of the machine learning model. The process to optimize the weights $\boldsymbol w$ can be formulated as an iterative minimization of an error function $E$,

\begin{equation} {\boldsymbol w} = {\rm argmin}_{\boldsymbol w}\,[E({\boldsymbol q}, {\mathcal{F}}({\boldsymbol q};{\boldsymbol w}))]. \end{equation}

For the use of the AE as a dimension compressor, the dimension of an intermediate space called the latent space $\boldsymbol \eta$ is smaller than that of the input or output data $\boldsymbol q$ as illustrated in figure 2. When we are able to obtain the output ${{\mathcal {F}}}(\boldsymbol q)$ similar to the input $\boldsymbol q$ such that ${\boldsymbol q}\approx {{\mathcal {F}}}({\boldsymbol q})$, the latent vector $\boldsymbol r$ can be regarded as a low-dimensional representation of its input or output $\boldsymbol q$. In an AE, the dimension compressor is called the encoder ${{\mathcal {F}}}_e$ (the left-hand part in figure 2) and the counterpart is referred to as the decoder ${{\mathcal {F}}}_d$ (the right-hand part in figure 2). Using them, the internal procedure of the AE can be expressed as

\begin{equation} {\boldsymbol r} = {\mathcal{F}}_e({\boldsymbol q}), \quad {\boldsymbol q} \approx {\mathcal{F}}_d({\boldsymbol r}). \end{equation}

In the present study, the dimension of the latent space for the problems of a cylinder wake and its transient process is set to two following our previous work (Murata, Fukami & Fukagata 2020). Murata et al. (2020) reported that the flow fields can be mapped into a low-dimensional latent space successfully while keeping the information of high-dimensional flow fields. For the example of a two-parallel cylinder wake in § 3.3, the dimension of the latent space is set to four to handle its quasi-periodicity. For construction of the AE, the $L_2$ norm error is applied as the error function $E$ and the Adam optimizer (Kingma & Ba 2014) is utilized for updating the weights in the iterative training process.

Figure 2. Convolutional neural network-based autoencoder.

Next, let us briefly explain the convolutional neural network. In the scheme of the convolutional neural network, a filter $h$, whose size is $H\times H$, is utilized as the weights $\boldsymbol w$, as shown in figure 3$(a)$. The mathematical expression here is

\begin{equation} q^{(l)}_{ijm} = {\psi}\left(\sum^{K-1}_{k=0}\sum^{H-1}_{p=0}\sum^{H-1}_{s=0}h_{p{s}km}^{(l)} q_{i+p-C,j+{s-C},k}^{(l-1)} + b^{(l)}_{ijm}\right), \end{equation}

where $C=\textrm {floor}(H/2)$, $q^{(l)}$ is the output at layer $l$, and $K$ is the number of variables in the data (e.g. $K=1$ for black-and-white photos and $K=3$ for RGB images). Although not shown in figure 3, $b$ is a bias added to the results of the filter operation. The activation function $\psi$ is usually a monotonically increasing nonlinear function. The AE can achieve more effective dimension reduction than the linear theory-based method, i.e. POD (Lumley 1967), thanks to the nonlinear activation function (Milano & Koumoutsakos 2002; Fukami et al. 2020b; Murata et al. 2020). In the present study, a hyperbolic tangent function $\psi (a)=(e^{a}-e^{-a})\cdot (e^{a}+e^{-a})^{-1}$ is adopted for the activation function following Murata et al. (2020). Moreover, we use the convolutional neural network to construct the AE because the CNN can handle high-dimensional data at lower computational cost than fully connected models, i.e. the multilayer perceptron, thanks to the filter concept, which assumes that pixels of images have no strong relationship with those in distant areas. Recently, the use of CNNs has emerged to deal with high-dimensional problems including fluid dynamics (Fukami, Fukagata & Taira 2019a,b; Fukami et al. 2019c; Fukami, Fukagata & Taira 2020a; Fukami et al. 2021b; Omata & Shirayama 2019; Morimoto, Fukami & Fukagata 2020a; Fukami, Fukagata & Taira 2021a; Matsuo et al. 2021; Morimoto et al. 2021), although this concept was originally developed in the field of computer science.
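To make the index convention of the filter operation concrete, the following NumPy sketch evaluates the expression above for a single layer. It is an illustration only: the function name `conv_layer`, the array shapes and the zero padding at the domain edges are assumptions, since the text does not state how the boundaries are treated.

```python
import numpy as np


def conv_layer(q_prev, h, b, psi=np.tanh):
    """Filter operation of one convolutional layer.

    q_prev : (Nx, Ny, K) output of the previous layer
    h      : (H, H, K, M) filter coefficients for M output channels
    b      : bias, broadcastable to (Nx, Ny, M)
    psi    : activation function (hyperbolic tangent by default)
    """
    Nx, Ny, K = q_prev.shape
    H, _, _, M = h.shape
    C = H // 2
    # Zero padding keeps the indices i+p-C and j+s-C in range (assumed here).
    padded = np.pad(q_prev, ((C, C), (C, C), (0, 0)))
    out = np.zeros((Nx, Ny, M))
    for p in range(H):
        for s in range(H):
            # padded[i+p, j+s] corresponds to q_prev[i+p-C, j+s-C]
            window = padded[p:p + Nx, s:s + Ny, :]
            # sum over the input channels k
            out += np.tensordot(window, h[p, s], axes=([2], [0]))
    return psi(out + b)


# Example: a 3x3 averaging filter applied to a random two-channel field.
rng = np.random.default_rng(0)
q0 = rng.standard_normal((8, 8, 2))
h0 = np.full((3, 3, 2, 1), 1.0 / 18.0)
q1 = conv_layer(q0, h0, b=0.0)
print(q1.shape)
```

The explicit double loop over the filter taps $(p, s)$ mirrors the double sum in the equation. Deep learning libraries implement the same operation with optimized kernels.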

Figure 3. Internal procedure of convolutional neural network: $(a)$ filter operation with activation function and $(b)$ maximum pooling and upsampling.

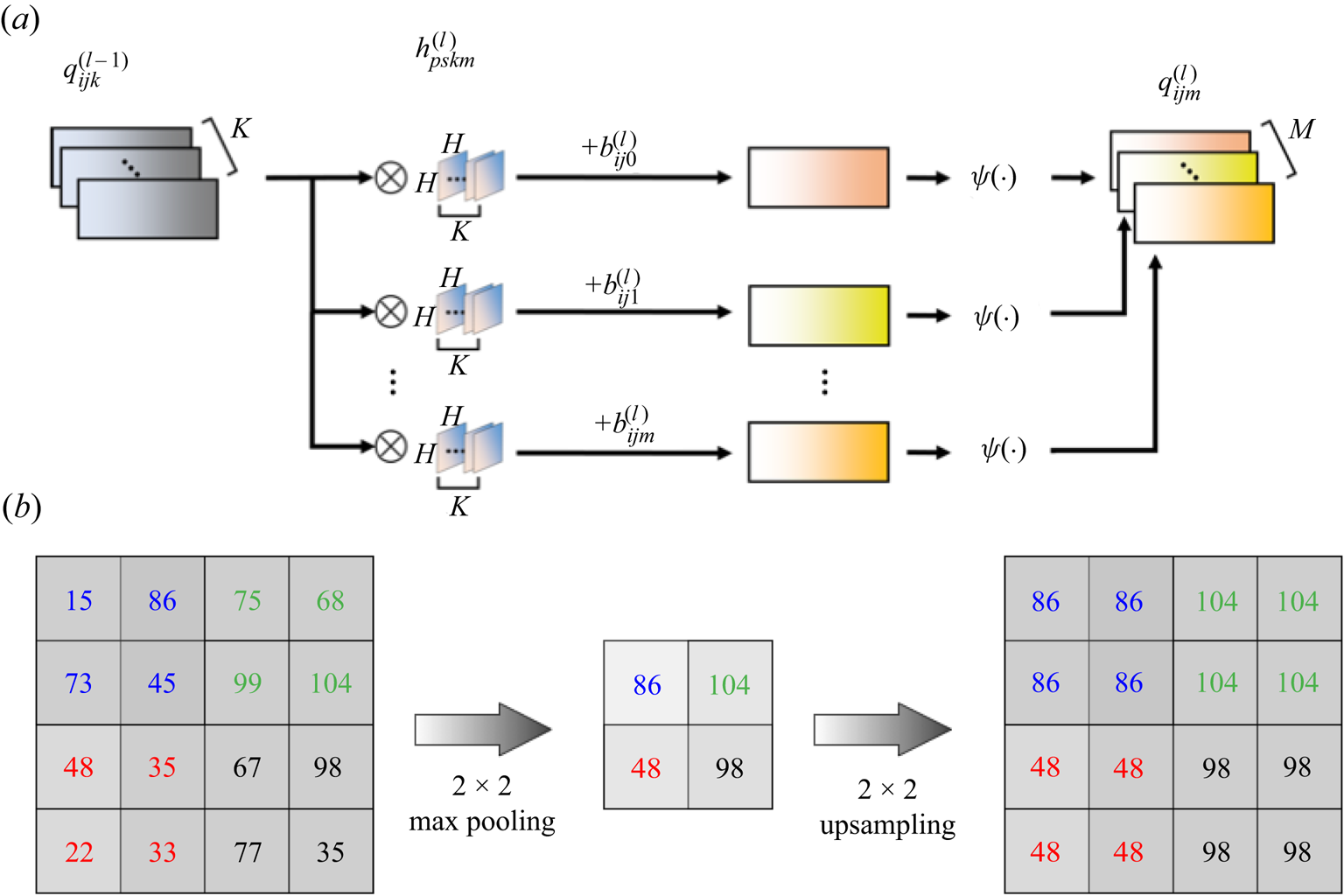

2.3. The CNN-SINDy-based ROM

By combining the CNN-AE and SINDy, we present the machine-learning-based ROM (ML-ROM) as shown in figure 4. The CNN-AE first maps a high-dimensional flow field into a low-dimensional latent space. SINDy is then performed to obtain a governing equation of the mapped low-dimensional latent vector. By passing the latent vector predicted by SINDy to the CNN decoder, we can obtain the temporal evolution of the high-dimensional flow field as presented in figure 4. Moreover, the issue with high-dimensional variables and SINDy can also be avoided. Note again that the present ROM is inspired by Hasegawa et al. (2019, 2020a,b), who capitalized on a long short-term memory instead of SINDy. We apply this framework to a two-dimensional cylinder wake in § 3.1, its transient process in § 3.2 and a wake of two-parallel cylinders in § 3.3. In this study, we perform a fivefold cross-validation (Brunton & Kutz 2019) to create all machine learning models, although only the results of a single case will be presented, for brevity. The sample code for the present reduced-order model is available from https://github.com/kfukami/CNN-SINDy-MLROM.
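The data flow of the ROM can be summarized by the following schematic sketch. It is not taken from the sample code referenced above: `rom_rollout` and `rk4_step` are hypothetical names, the encoder and decoder are replaced by a linear projection onto a random orthonormal basis, and the latent model is a placeholder harmonic oscillator. In practice the trained CNN encoder and decoder and the equations identified by SINDy would take their places.

```python
import numpy as np


def rk4_step(rhs, r, dt):
    """One classical fourth-order Runge-Kutta step for dr/dt = rhs(r)."""
    k1 = rhs(r)
    k2 = rhs(r + 0.5 * dt * k1)
    k3 = rhs(r + 0.5 * dt * k2)
    k4 = rhs(r + dt * k3)
    return r + dt * (k1 + 2.0 * k2 + 2.0 * k3 + k4) / 6.0


def rom_rollout(encoder, decoder, latent_rhs, q0, dt, n_steps):
    """Encode the initial field, advance the latent ODE, and decode every step."""
    r = encoder(q0)
    latents, fields = [r], [decoder(r)]
    for _ in range(n_steps):
        r = rk4_step(latent_rhs, r, dt)
        latents.append(r)
        fields.append(decoder(r))
    return np.array(latents), np.array(fields)


# Stand-ins for the trained networks: projection onto two orthonormal vectors.
rng = np.random.default_rng(0)
basis, _ = np.linalg.qr(rng.standard_normal((50, 2)))
encoder = lambda q: basis.T @ q
decoder = lambda r: basis @ r

# Placeholder latent model with two terms; omega is an assumed coefficient.
omega = 1.0
latent_rhs = lambda r: np.array([-omega * r[1], omega * r[0]])

q_init = basis @ np.array([1.0, 0.0])
latents, fields = rom_rollout(encoder, decoder, latent_rhs, q_init, dt=0.01, n_steps=1000)
print(latents.shape, fields.shape)
```

Because the time integration acts only on the latent vector, the cost per step is dominated by the decoder evaluation, which can be skipped at steps where the full field is not needed.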

Figure 4. The CNN-SINDy-based ROM for fluid flows.

3. Results and discussion

3.1. Example 1: periodic cylinder wake

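Here, let us consider a two-dimensional cylinder wake using a two-dimensional direct numerical simulation (DNS). The governing equations are the incompressible continuity and Navier–Stokes equations,

\begin{equation} \boldsymbol{\nabla}\boldsymbol{\cdot}\boldsymbol{u} = 0, \quad \frac{\partial \boldsymbol{u}}{\partial t} + \boldsymbol{\nabla}\boldsymbol{\cdot}(\boldsymbol{u}\boldsymbol{u}) = -\boldsymbol{\nabla} p + \frac{1}{Re_D}\nabla^{2}\boldsymbol{u}, \end{equation}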

where $\boldsymbol {u}$ and $p$ represent the velocity vector and pressure, respectively. All quantities are made dimensionless by the fluid density, the free stream velocity and the cylinder diameter. The Reynolds number based on the cylinder diameter is $Re _D=100$. The size of the computational domain is $L_x=25.6$ and $L_y=20.0$ in the streamwise ($x$) and the transverse ($y$) directions, respectively. The origin of coordinates is defined at the centre of the inflow boundary. A Cartesian grid with the uniform grid spacing of $\Delta x=\Delta y = 0.025$ is used; thus, the number of grid points is $(N_x, N_y)=(1024, 800)$. We use the ghost cell method (Kor, Badri Ghomizad & Fukagata 2017) to impose the no-slip boundary condition on the surface of the cylinder, whose centre is located at $(x,y)=(9,0)$. In the present study, we utilize the flows around the cylinder as the training data set, i.e. $8.2 \leq x \leq 17.8$ and $-2.4 \leq y \leq 2.4$ with $(N_x^{*}, N_y^{*})=(384, 192)$. The fluctuation components of streamwise velocity $u$ and transverse velocity $v$ are considered as the input and output attributes for the CNN-AE. The time interval of the flow field data is $\Delta t = 0.025$, corresponding to approximately 230 snapshots per period with the Strouhal number $St=0.172$.
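The grid counts and the number of snapshots per period quoted above follow directly from the stated domain sizes, grid spacing, time interval and Strouhal number. The short check below reproduces them; the variable names are chosen here for illustration, and the shedding period is taken as $1/St$ because the free stream velocity and the cylinder diameter are both unity in dimensionless units.

```python
# Grid counts implied by the domain sizes and the uniform spacing.
dx = 0.025
Nx = round(25.6 / dx)                  # full domain, streamwise
Ny = round(20.0 / dx)                  # full domain, transverse
Nx_sub = round((17.8 - 8.2) / dx)      # training subdomain, streamwise
Ny_sub = round((2.4 - (-2.4)) / dx)    # training subdomain, transverse

# Snapshots per shedding period: dimensionless period 1/St divided by dt.
St, dt = 0.172, 0.025
period = 1.0 / St
snapshots_per_period = period / dt

print(Nx, Ny, Nx_sub, Ny_sub, round(snapshots_per_period, 1))
```

The last value evaluates to roughly 233, consistent with the figure of approximately 230 snapshots per period.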

As mentioned above, SINDy is employed to identify the ordinary differential equations that govern the temporal evolution of the mapped vector obtained by the CNN encoder. The time history and the trajectory of the mapped latent vector ${\boldsymbol r}=(r_1,r_2)$ are shown in figure 5. For the assessment of the candidate models, we integrate the differential equations to reproduce the time history. Note in passing that indices based on the $L_2$ error are not suitable, since a slight difference between the period of the reproduced oscillation and that of the original one results in large $L_2$ errors for oscillating systems. We here use the amplitude and the frequency of oscillation to evaluate the similarity between the reproduced waveform and the original one. Hereafter, the error rates of the amplitude and the frequency are shown in the figures below for simplicity. The number of terms is also considered for parsimonious model selection.
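The argument against $L_2$-based indices can be illustrated with synthetic signals. In the sketch below, the amplitude is estimated as half the peak-to-peak range and the frequency from upward zero crossings; these estimators, the function names and the 1 % mismatch of the test signal are assumptions made for illustration and are not necessarily the definitions used to produce the figures.

```python
import numpy as np


def amplitude_and_frequency(t, r):
    """Amplitude as half the peak-to-peak range; frequency from upward zero crossings."""
    r0 = r - r.mean()
    amplitude = 0.5 * (r.max() - r.min())
    up = np.where((r0[:-1] < 0.0) & (r0[1:] >= 0.0))[0]
    # Linear interpolation of each crossing time between neighbouring samples.
    t_cross = t[up] - r0[up] * (t[up + 1] - t[up]) / (r0[up + 1] - r0[up])
    frequency = 1.0 / np.mean(np.diff(t_cross))
    return amplitude, frequency


def error_rate(reproduced, reference):
    """Relative error of a scalar quantity."""
    return abs(reproduced - reference) / abs(reference)


# Reference waveform and a reproduction that is 1 % off in amplitude and frequency.
t = np.arange(0.0, 200.0, 0.025)
reference = np.sin(2.0 * np.pi * 0.172 * t)
reproduced = 0.99 * np.sin(2.0 * np.pi * 0.172 * 1.01 * t)

a_ref, f_ref = amplitude_and_frequency(t, reference)
a_rep, f_rep = amplitude_and_frequency(t, reproduced)
amp_err = error_rate(a_rep, a_ref)
freq_err = error_rate(f_rep, f_ref)
l2_rel = np.linalg.norm(reproduced - reference) / np.linalg.norm(reference)

print(f"amplitude error rate: {amp_err:.3f}")
print(f"frequency error rate: {freq_err:.3f}")
print(f"relative L2 error:    {l2_rel:.3f}")
```

Although the two waveforms differ by only about 1 % in amplitude and frequency, the phase drift accumulated over the time window makes the relative $L_2$ error of order unity, which is why the amplitude and the frequency are the more informative indices for oscillating systems.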

Figure 5. Latent vector dynamics of a periodic shedding case: $(a)$ time history and $(b)$ trajectory.

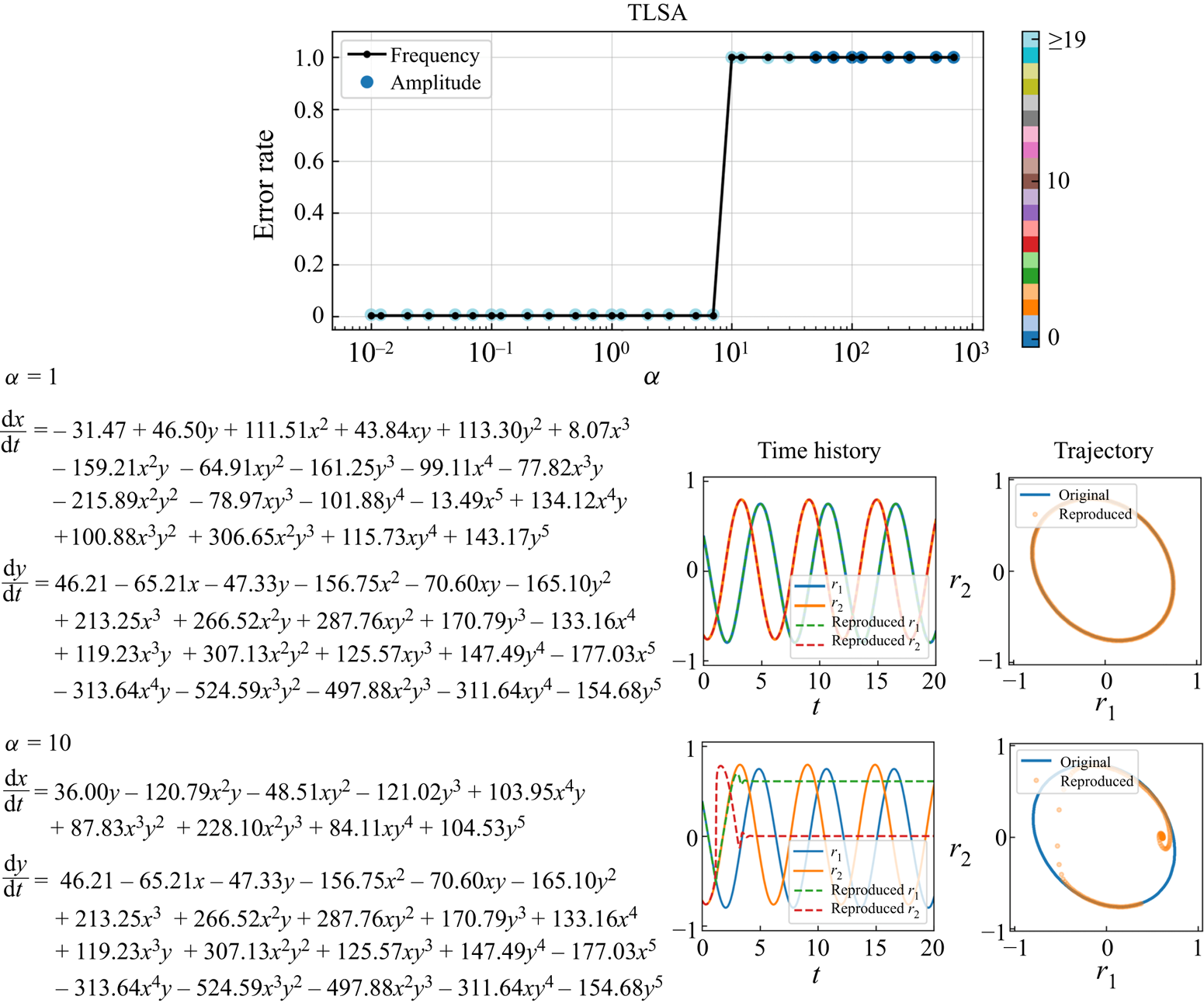

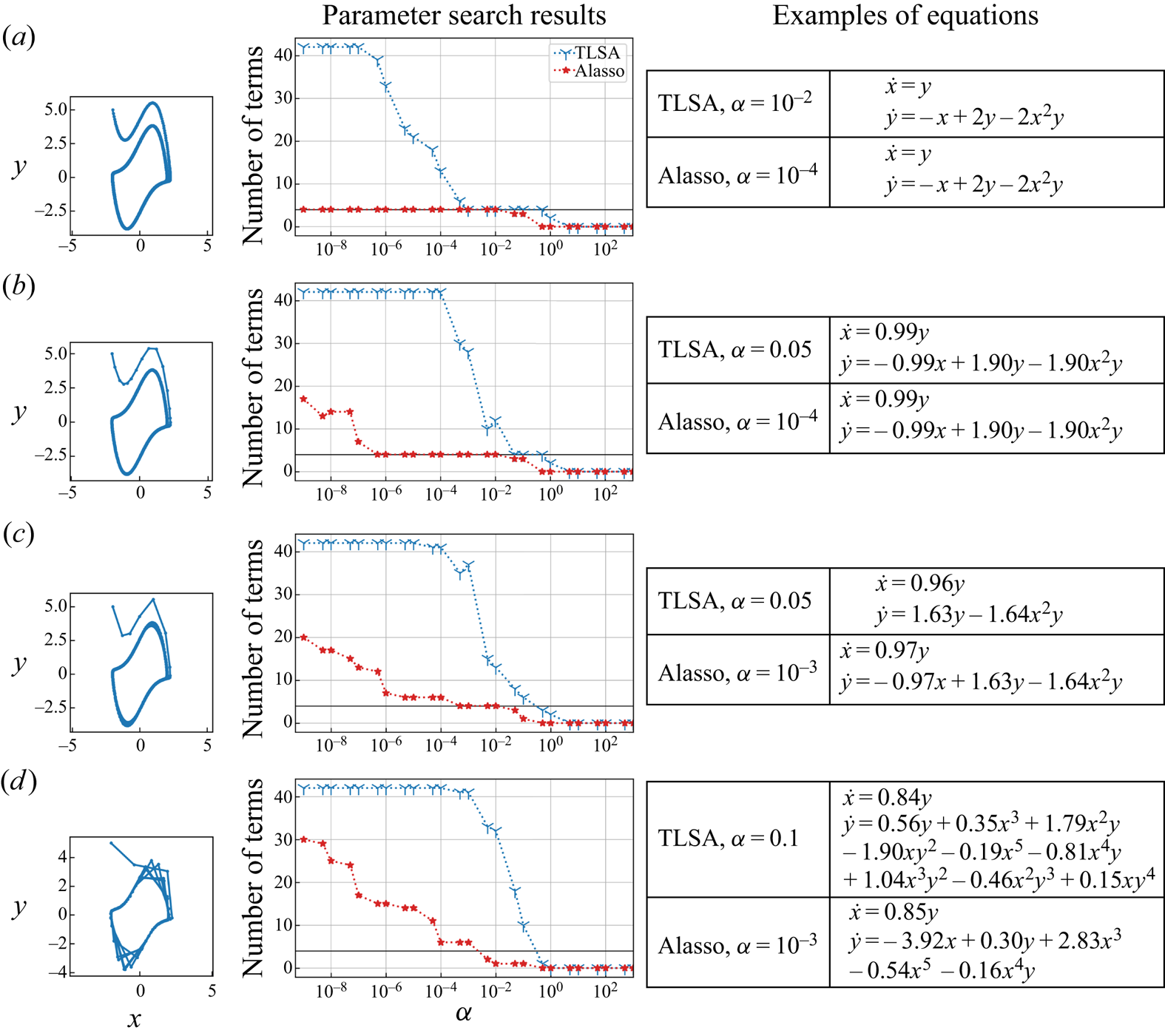

We consider two regression methods for SINDy, i.e. TLSA and Alasso, following our previous tests. Let us present the results of the parameter search utilizing TLSA in figure 6. Note here that we use 10 000 mapped vectors for the construction of a SINDy model. Using $\alpha =1$, the reproduced trajectory is in excellent agreement with the original one. However, there are many terms in the ordinary differential equations and some coefficients are too large, although the oscillations lie within a narrow range, $(-1, 1)$. This result with the TLSA is likely because we do not apply data processing, which leads to the large regression coefficients and non-sparse results. On the other hand, the reproduced trajectory with $\alpha =10$ converges to a point around $(r_1,r_2)=(0.6,0)$, although the model becomes parsimonious. Since the predicted latent vector converges after $t\approx 4$ as shown in the time history of figure 6, the temporal evolution of the flow field by the CNN decoder is also frozen, as shown in figure 7. Another observation here is that no terms remain in the ordinary differential equations with a high threshold, i.e. $\alpha \geq 50$.

Figure 6. Results with TLSA for the periodic cylinder example. Parameter search results, examples of obtained equations, and reproduced trajectories with $\alpha =1$ and $\alpha =10$ are shown. Colour of amplitude plot indicates the total number of terms. In the ordinary differential equations, the latent vector components $(r_1, r_2)$ are represented by $(x,y)$ for clarity.

Figure 7. Temporally evolved flow fields of DNS and the reproduced flow field with $\alpha =1$ and $\alpha =10$. With $\alpha =10$, the flow field is frozen after around $t=4$ (highlighted using blue colour).

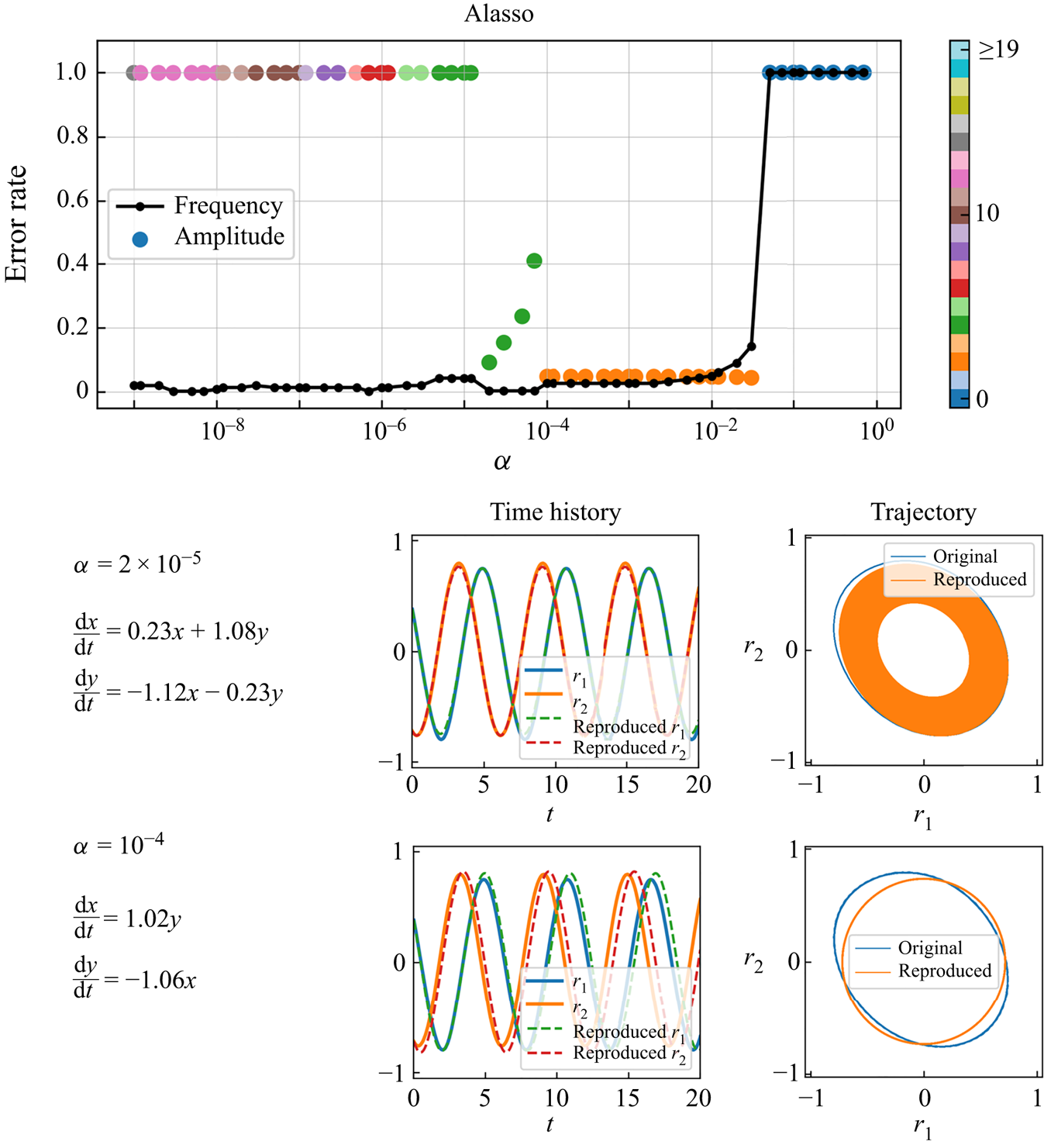

With Alasso as the regression method, we can find candidate models with low errors at $\alpha =2 \times 10^{-5}$ and $1 \times 10^{-4}$, as shown in figure 8. At $\alpha =2 \times 10^{-5}$, the ordinary differential equations consist of four coefficients in total and the reproduced trajectory gradually converges as shown. On the other hand, by choosing the appropriate sparsity constant, i.e. $\alpha = 1 \times 10^{-4}$, the circle-like oscillating trajectory, which is similar to the reference, can be represented with only two terms. We then check the flow field reproduced by combining the latent vector predicted by SINDy with the CNN decoder, as shown in figure 9. The temporal evolution of high-dimensional dynamics can be reproduced well using the proposed model, although the periods of the two fields are slightly different.

Figure 8. Results with Alasso of a periodic cylinder example. Parameter search results, examples of obtained equations, and reproduced trajectories with $\alpha =2 \times 10^{-5}$ and $\alpha =1 \times 10^{-4}$ are shown. Colour of amplitude plot indicates the total number of terms. In the ordinary differential equations, the latent vector components $(r_1, r_2)$ are represented by $(x,y)$ for clarity.

Figure 9. Time history of the latent vector and temporal evolution of the wake of DNS and the reproduced field at $(a)$ $t=1025$, $(b)$ $t=1026$, $(c)$ $t=1027$ and $(d)$ $t=1028$.

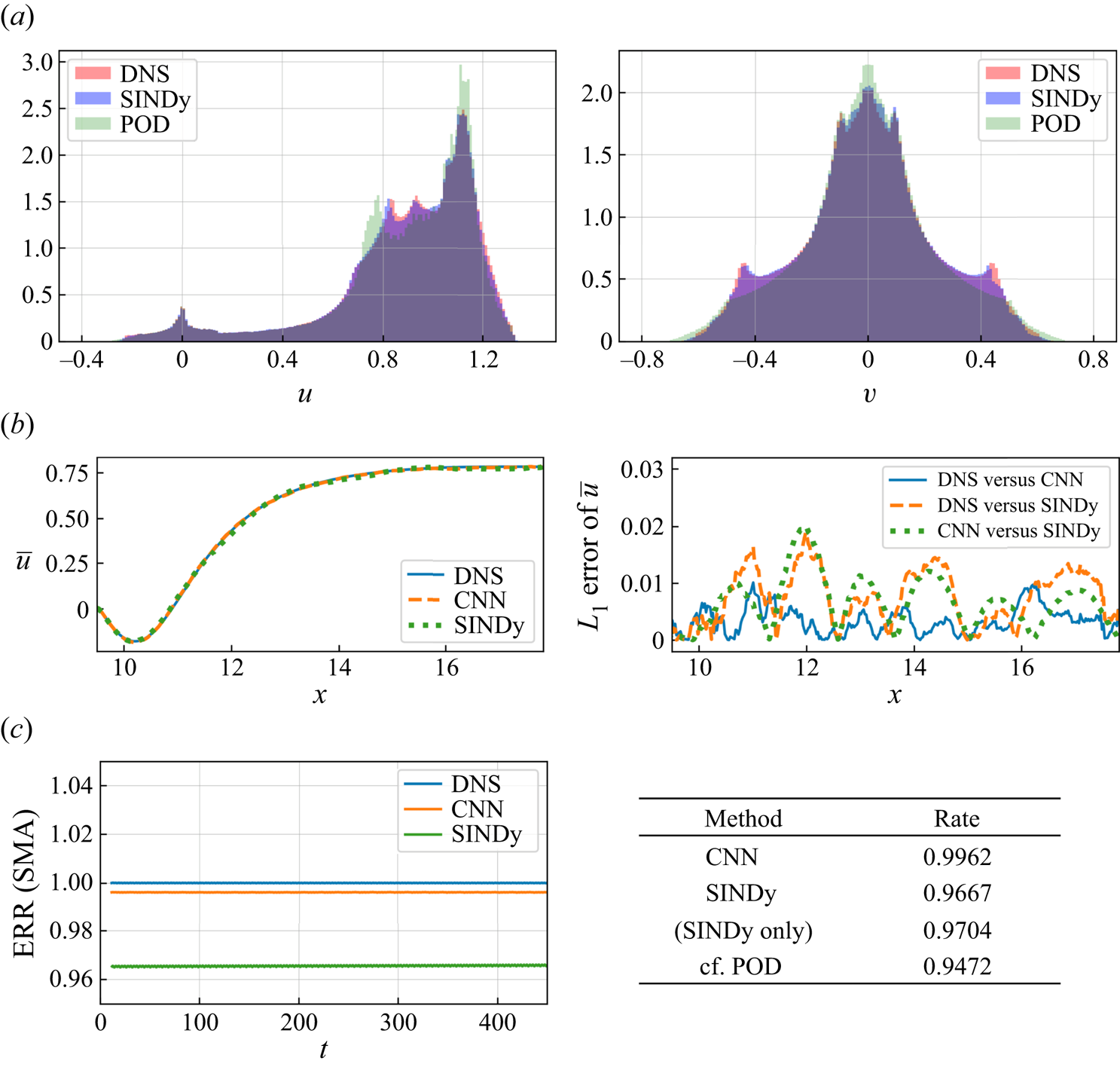

The quantitative analysis with the probability density function, the mean streamwise velocity at $y=0$, and the time history of ERR is summarized in figure 10. The ERR ${\mathcal {R}}$ is defined as

\begin{equation} {\mathcal{R}}= \dfrac{ \int_S (u^{\prime 2}_{Rep}+v^{\prime 2}_{Rep})\,\textrm{d}S }{ \int_S (u^{\prime 2}_{DNS}+v^{\prime 2}_{DNS}) \,\textrm{d}S }, \end{equation}

where $(u'_{Rep},v'_{Rep})$ and $(u'_{DNS}, v'_{DNS})$ denote the fluctuation components of the reproduced velocity and the original velocity, respectively. For the probability density function, the distribution of the CNN-SINDy model is in excellent agreement with the reference DNS data. Since the SINDy model of the present case integrates the latent vector via the CNN-AE, which can map a high-dimensional flow field into a low-dimensional space efficiently thanks to the nonlinear activation function (Murata et al. Reference Murata, Fukami and Fukagata2020), the SINDy outperforms POD with two modes as long as the appropriate waveforms can be obtained. In other words, the obtained equation can be integrated stably. The success of the CNN-SINDy-based modelling can also be seen with the time-averaged velocity and the energy reconstruction rate in figure 10.
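As a rough illustration of how ${\mathcal {R}}$ might be evaluated for one snapshot, the following sketch assumes a uniform grid (so the cell area cancels in the ratio and the integrals reduce to sums) and assumes that both fluctuation fields are obtained by subtracting the same time-averaged DNS field. The function and argument names are hypothetical and are not taken from the original study.

```python
import numpy as np

def energy_reconstruction_rate(u_rep, v_rep, u_dns, v_dns, u_mean, v_mean):
    """ERR of a single snapshot on a uniform grid.

    u_rep, v_rep : reproduced velocity components (2-D arrays)
    u_dns, v_dns : reference DNS velocity components (2-D arrays)
    u_mean, v_mean : time-averaged fields used to define the fluctuation
                     (assumption: the same mean is used for both fields)
    """
    up_rep, vp_rep = u_rep - u_mean, v_rep - v_mean
    up_dns, vp_dns = u_dns - u_mean, v_dns - v_mean
    # On a uniform grid dS is constant, so it cancels between
    # numerator and denominator.
    numerator = np.sum(up_rep**2 + vp_rep**2)
    denominator = np.sum(up_dns**2 + vp_dns**2)
    return numerator / denominator
```

A value close to unity then indicates that the reproduced field carries nearly the same fluctuation energy as the DNS field; it does not by itself guarantee that the two fields are in phase.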

Figure 10. Evaluation of reproduced flow field with a periodic shedding. $(a)$ Probability density function, $(b)$ mean streamwise velocity at $y=0$ and $(c)$ the energy reconstruction rate (ERR). Simple moving average (SMA) of ERR is shown here for clarity.

3.2. Example 2: transient wake of cylinder flow

As the second example for the combination of CNN and SINDy, we consider the transient process of a flow around a circular cylinder at ![]() ${Re}_D=100$. For taking into account the transient process, the streamwise length of the computational domain and that of the flow field data are extended to

${Re}_D=100$. For taking into account the transient process, the streamwise length of the computational domain and that of the flow field data are extended to ![]() $L_x=51.2$ and

$L_x=51.2$ and ![]() $8.2 \leq x \leq 37$, i.e.

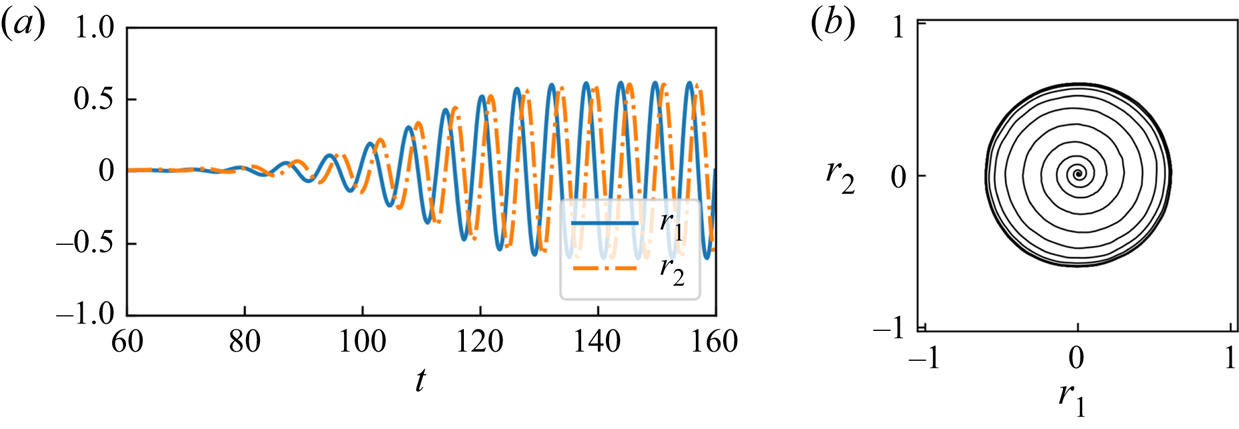

$8.2 \leq x \leq 37$, i.e. ![]() $N_x^{*}=1152$, same set-up with Murata et al. (Reference Murata, Fukami and Fukagata2020). The time history and the trajectory in the latent space are shown in figure 11. Since the trajectory looks like a circle as shown in figure 11

$N_x^{*}=1152$, same set-up with Murata et al. (Reference Murata, Fukami and Fukagata2020). The time history and the trajectory in the latent space are shown in figure 11. Since the trajectory looks like a circle as shown in figure 11![]() $(b)$, we use the residual sum of error of

$(b)$, we use the residual sum of error of ![]() $r_1^{2}+r_2^{2}$ between the original value and the reproduced value as the evaluation index in this example.

$r_1^{2}+r_2^{2}$ between the original value and the reproduced value as the evaluation index in this example.
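The evaluation index described above can be sketched as follows. The text does not spell out the exact form of the residual, so the sum of squared differences used here is an assumption, and the function name is hypothetical.

```python
import numpy as np

def amplitude_residual(r_ref, r_rep):
    """Residual of r_1^2 + r_2^2 between reference and reproduced latent vectors.

    r_ref, r_rep : arrays of shape (n_time, 2) holding (r_1, r_2).
    Assumption: the residual is accumulated as a sum of squared differences.
    """
    amp_ref = np.sum(r_ref**2, axis=1)  # r_1^2 + r_2^2 of the reference
    amp_rep = np.sum(r_rep**2, axis=1)  # r_1^2 + r_2^2 of the reproduction
    return np.sum((amp_ref - amp_rep) ** 2)
```

Because only the squared radius enters, this index measures whether the amplitude growth of the transient is captured and is insensitive to a phase shift along the circle-like trajectory.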

Figure 11. Latent vector dynamics of the transient process: ($a$) time history and ($b$) trajectory.

For SINDy, we use the data with $t=[60,160]$. The library matrix contains up to fifth-order terms. Although equations that provide the correct temporal evolution of the latent vector can be obtained with Alasso by including higher-order terms, the model here is, of course, not sparse and is therefore difficult to interpret.
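To make the size of such a library concrete, the sketch below builds all monomials of the latent variables up to a given order. Whether the original library includes the constant term is not stated here, so its inclusion is an assumption, and `polynomial_library` is a hypothetical helper name.

```python
import numpy as np
from itertools import combinations_with_replacement

def polynomial_library(R, max_order):
    """Build a polynomial library matrix from latent time series.

    R : array of shape (n_samples, n_vars), one column per latent variable.
    Returns (Theta, names), where Theta has one column per monomial.
    Assumption: the constant term is part of the library.
    """
    n_samples, n_vars = R.shape
    columns = [np.ones(n_samples)]
    names = ["1"]
    for order in range(1, max_order + 1):
        for idx in combinations_with_replacement(range(n_vars), order):
            columns.append(np.prod(R[:, list(idx)], axis=1))
            names.append("*".join(f"r{i + 1}" for i in idx))
    return np.column_stack(columns), names
```

With two latent variables and terms up to fifth order, this construction yields 21 candidate terms per equation, which gives a sense of how quickly the search space grows with the polynomial order.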

The parameter search results with TLSA for the transient wake are summarized in figure 12. Since the trajectory here is simple as shown, we can obtain governing equations that are in reasonable agreement with the reference at $\alpha =7\times 10^{-2}$. With a higher sparsity constant, i.e. $\alpha =0.7$, the equation provides a temporal evolution of the latent vector with almost no oscillation.
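The role of the sparsity constant in a thresholded least-squares regression can be illustrated with a minimal sketch. It assumes that TLSA behaves like the sequentially thresholded least squares of Brunton et al. (Reference Brunton, Proctor and Kutz2016a), with $\alpha$ acting as the cut-off on the coefficient magnitude; the function name and the fixed iteration count are hypothetical choices.

```python
import numpy as np

def thresholded_least_squares(Theta, dRdt, alpha, n_iter=10):
    """Sparse regression dRdt ~ Theta @ Xi by iterative thresholding.

    Theta : library matrix, shape (n_samples, n_terms)
    dRdt  : time derivatives, shape (n_samples, n_vars)
    alpha : threshold below which coefficients are set to zero
    """
    Xi = np.linalg.lstsq(Theta, dRdt, rcond=None)[0]
    for _ in range(n_iter):
        small = np.abs(Xi) < alpha
        Xi[small] = 0.0
        # Refit each equation using only the terms that survived.
        for k in range(dRdt.shape[1]):
            keep = ~small[:, k]
            if keep.any():
                Xi[keep, k] = np.linalg.lstsq(
                    Theta[:, keep], dRdt[:, k], rcond=None
                )[0]
    return Xi

# Toy data from the linear oscillator dx/dt = y, dy/dt = -x.
t = np.linspace(0.0, 10.0, 500)
x, y = np.cos(t), -np.sin(t)
Theta = np.column_stack([np.ones_like(t), x, y])
dRdt = np.column_stack([y, -x])
Xi_moderate = thresholded_least_squares(Theta, dRdt, alpha=0.1)
Xi_too_sparse = thresholded_least_squares(Theta, dRdt, alpha=2.0)
```

In this toy problem the moderate threshold retains the two oscillator terms, whereas the large threshold removes every term, so the integrated solution would not oscillate at all. This mirrors, in a much simpler setting, the loss of oscillation reported for the larger sparsity constant.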

Figure 12. Results with TLSA of the transient example. Parameter search results, examples of obtained equations, and reproduced trajectories with $\alpha =7 \times 10^{-2}$ and $\alpha =0.7$ are shown. Colour of amplitude plot indicates the total number of terms. In the ordinary differential equations, the latent vector components $(r_1, r_2)$ are represented by $(x,y)$ for clarity.

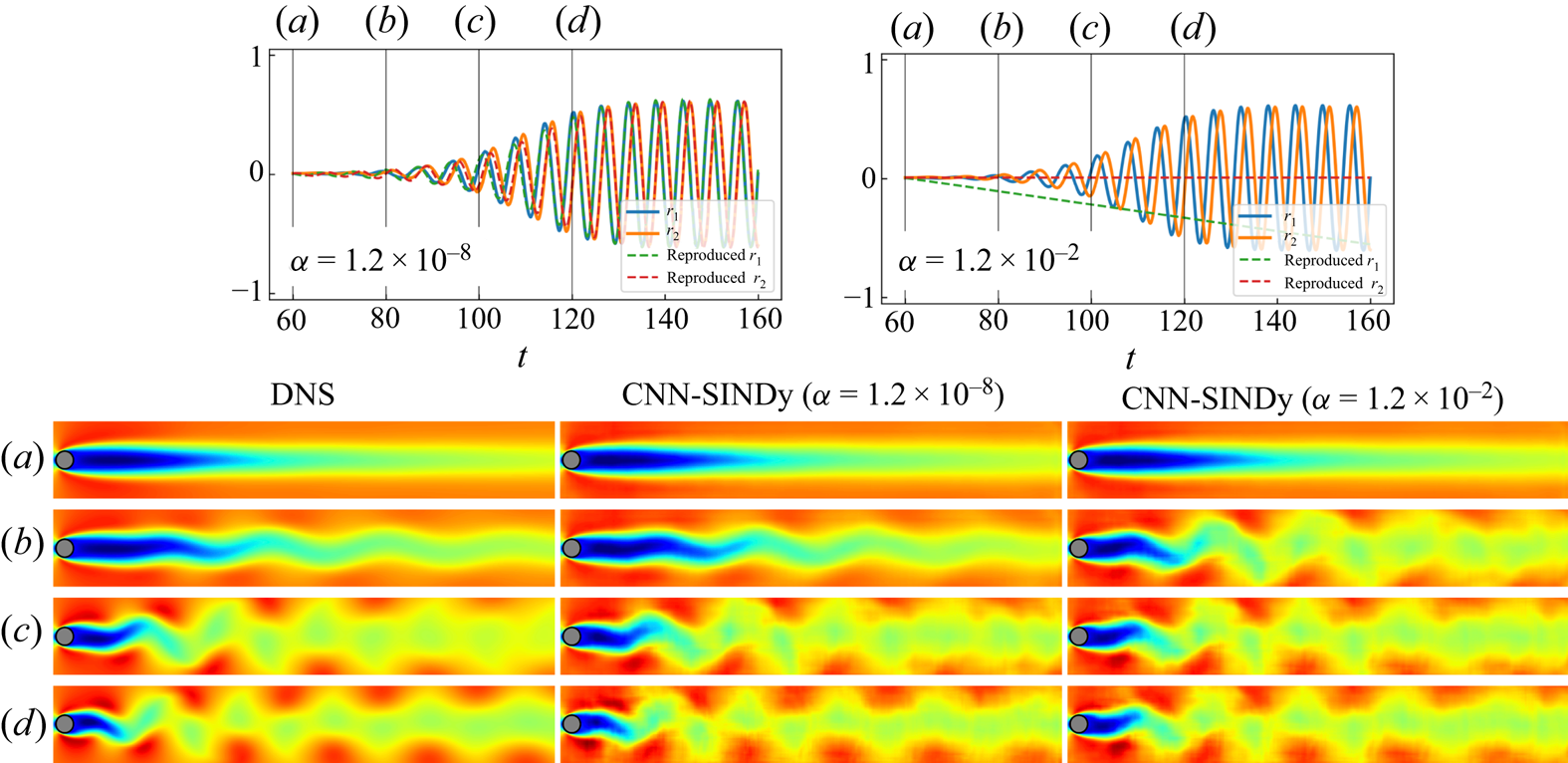

Alasso is also considered, as shown in figure 13. Although the number of terms is larger than that in the periodic shedding example, the trajectory can be reproduced successfully at $\alpha =1.2 \times 10^{-8}$. With a higher sparsity constant, e.g. $\alpha =1.2 \times 10^{-2}$, the developing oscillation is not reproduced owing to the insufficient number of terms. Noteworthy here is that the error for frequency is almost negligible in the range of $5\times 10^{-6}\leq \alpha \leq 10^{-3}$ in spite of the high error rate regarding $x^{2}+y^{2}$. This is because a slight oscillation occurs with a frequency similar to that of the solution. Although the identified equation here is more complex than the well-known transient system with a two-dimensional paraboloid manifold (Sipp & Lebedev Reference Sipp and Lebedev2007; Loiseau & Brunton Reference Loiseau and Brunton2018; Loiseau, Brunton & Noack Reference Loiseau, Brunton and Noack2018a), this is likely caused by the complexity of the information captured inside the AE modes compared with the POD modes (Murata et al. Reference Murata, Fukami and Fukagata2020). This observation reveals the trade-off between the amount of information used, which is associated with the number of modes, and the sparsity of the equation. Hence, imposing a useful constraint on the nonlinear AE modes, e.g. orthogonality, may be helpful in identifying sparser dynamics (Champion et al. Reference Champion, Lusch, Kutz and Brunton2019a; Ladjal, Newson & Pham Reference Ladjal, Newson and Pham2019; Jayaraman & Mamun Reference Jayaraman and Mamun2020).
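The distinction between the frequency error and the amplitude error can be made concrete with a small sketch that extracts the dominant frequency of a latent time series from its discrete Fourier transform. How the frequency error was actually computed is not described here, so this FFT-based estimate and the function name are assumptions.

```python
import numpy as np

def dominant_frequency(signal, dt):
    """Frequency (cycles per unit time) of the largest spectral peak.

    The mean is removed first and the zero-frequency bin is skipped,
    so a constant offset does not mask the oscillation.
    """
    spectrum = np.abs(np.fft.rfft(signal - np.mean(signal)))
    freqs = np.fft.rfftfreq(len(signal), d=dt)
    return freqs[np.argmax(spectrum[1:]) + 1]

# Two signals with the same frequency but very different amplitudes.
t = np.arange(1000) * 0.1
f_reference = dominant_frequency(np.sin(2.0 * np.pi * 0.5 * t), 0.1)
f_weak = dominant_frequency(0.01 * np.sin(2.0 * np.pi * 0.5 * t), 0.1)
```

Both signals return the same dominant frequency even though their amplitudes differ by two orders of magnitude, which is the situation described above: a weak oscillation at the right frequency gives a small frequency error but a large error in $x^{2}+y^{2}$.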

Figure 13. Results with Alasso of the transient example. Parameter search results, examples of obtained equations, and reproduced trajectories with $\alpha =1.2 \times 10^{-8}$ and $\alpha =1.2 \times 10^{-2}$ are shown. Colour of amplitude plot indicates the total number of terms. In the ordinary differential equations, the latent vector components $(r_1, r_2)$ are represented by $(x,y)$ for clarity.

The streamwise velocity fields reproduced by the CNN-AE and the integrated latent variables with the Alasso-based SINDy are presented in figure 14. The two sparsity constants here correspond to the results in figure 13. With $\alpha =1.2\times 10^{-8}$, the flow field can successfully be reproduced thanks to the nonlinear terms in the equations identified by SINDy. In contrast, it is difficult to capture the transient behaviour correctly by the CNN-SINDy model with $\alpha =1.2\times 10^{-2}$, which can be seen clearly by comparing the fields in $(b)$ (where $t=80$) of figure 14. This corresponds to the observation that the sparse model cannot reproduce the trajectory well in figure 13.

Figure 14. Time history of latent vector and the temporal evolution of the wake of DNS and the reproduced field at $(a)$ $t=60$, $(b)$ $t=80$, $(c)$ $t=100$ and $(d)$ $t=120$.

3.3. Example 3: two-parallel cylinders wake

To demonstrate the applicability of the present CNN-SINDy-based ROM to more complex wake flows, let us here consider a flow around two-parallel cylinders whose radii are different from each other (Morimoto et al. Reference Morimoto, Fukami, Zhang and Fukagata2020b, Reference Morimoto, Fukami, Zhang, Nair and Fukagata2021), as presented in figure 1. Unlike the wake dynamics of two identical circular cylinders as described in Kang (Reference Kang2003), the coupled wakes behind two side-by-side uneven cylinders exhibit more complex vortex interactions, due to the mismatch in their individual shedding frequencies. Training flow snapshots are prepared with a DNS with the open-source computational fluid dynamics (known as CFD) toolbox OpenFOAM (Weller et al. Reference Weller, Tabor, Jasak and Fureby1998), using second-order discretization schemes in both time and space. We arrange the two circular cylinders with a size ratio of $r$ and a gap of $gD$, where $g$ is the gap ratio and $D$ is the diameter of the lower cylinder. The Reynolds number is fixed at $Re_D=100$. The two cylinders are placed $20D$ downstream of the inlet where a uniform flow with velocity $U_{\infty }$ is prescribed, and $40D$ upstream of the outlet with zero pressure. The side boundaries are specified as slip and are $40D$ apart. The time steps for the DNS and the snapshot sampling are, respectively, 0.01 and 0.1. A notable feature of wakes associated with the present two-cylinder position is complex wake behaviour caused by varying the size ratio $r$ and the gap ratio $g$ (Morimoto et al. Reference Morimoto, Fukami, Zhang and Fukagata2020b, Reference Morimoto, Fukami, Zhang, Nair and Fukagata2021). In the present study, the wake with the combination of $\{r,g\}=\{1.15,2.0\}$, which is quasi-periodic in time, is considered for the demonstration. For the training of both the CNN-AE and the SINDy, we use 2000 snapshots. The vorticity field $\omega$ is used as a target attribute in this example.

Prior to the use of SINDy, let us determine the size of latent variables $n_r$ of this example, as summarized in figure 15. We here construct AEs with two $n_r$ cases: $n_r=3$ and $n_r=4$. As presented in figure 15$(a)$, the recovered flow fields through the AE-based low-dimensionalization are in reasonable agreement with the reference DNS snapshots. Quantitatively speaking, the $L_2$ error norms between the reference and the decoded fields are approximately 14 % and 5 % for $n_r=3$ and $n_r=4$, respectively. Although both models achieve a smooth spatial reconstruction, we can find a significant difference by focusing on their temporal behaviours in figure 15$(b)$. While the time trace with $n_r=4$ shows smooth variations for all four variables, the curves with $n_r=3$ exhibit a shaky behaviour despite the quasi-periodic nature of the flow. This is caused by the higher error level of the $n_r=3$ case. Furthermore, it is also known that a high error level due to overcompression via the AE strongly affects the temporal prediction of the latent variables, since exposure bias (Endo, Tomobe & Yasuoka Reference Endo, Tomobe and Yasuoka2018) arises during temporal integration (Hasegawa et al. Reference Hasegawa, Fukami, Murata and Fukagata2020a,Reference Hasegawa, Fukami, Murata and Fukagatab; Nakamura et al. Reference Nakamura, Fukami, Hasegawa, Nabae and Fukagata2021). In what follows, we use the AE with $n_r=4$ for the SINDy-based ROM.
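For reference, an $L_2$ error norm of the kind quoted above could be evaluated as in the following sketch. The normalization by the norm of the reference field is an assumption, since the definition is not restated in this section, and the function name is hypothetical.

```python
import numpy as np

def relative_l2_error(q_ref, q_dec):
    """Relative L2 error between a reference field and a decoded field.

    q_ref, q_dec : arrays of identical shape (e.g. vorticity snapshots).
    Assumption: the error is normalized by the L2 norm of the reference.
    """
    return np.linalg.norm(q_ref - q_dec) / np.linalg.norm(q_ref)
```

Under this definition, a value of 0.05 corresponds to the 5 % level reported for $n_r=4$.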

Figure 15. Autoencoder-based low-dimensionalization for the wake of the two-parallel cylinders. $(a)$ Comparison of the reference DNS, the decoded field with $n_r=3$ and the decoded field with $n_r=4$. The values underneath the contours indicate the $L_2$ error norm. $(b)$ Time series of the latent variables with $n_r=3$ and 4.

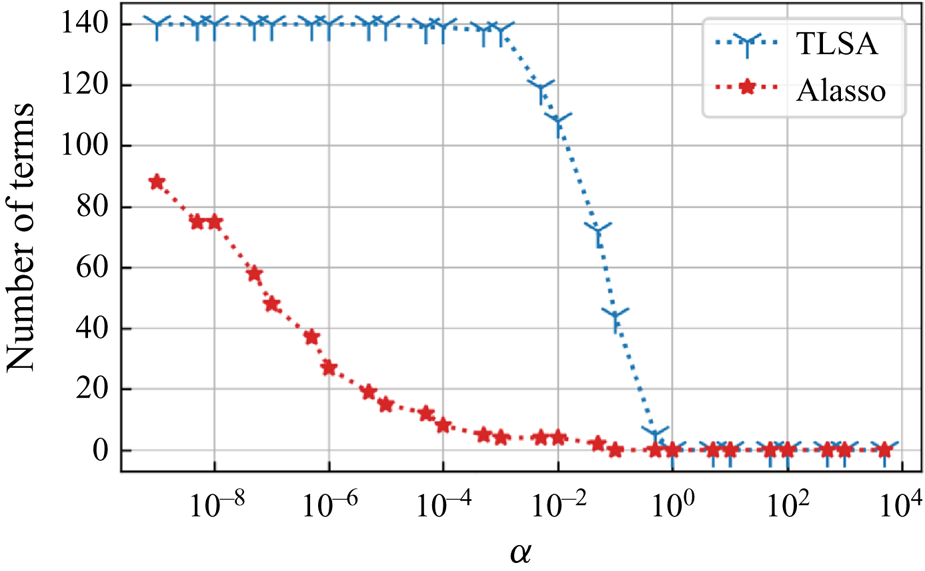

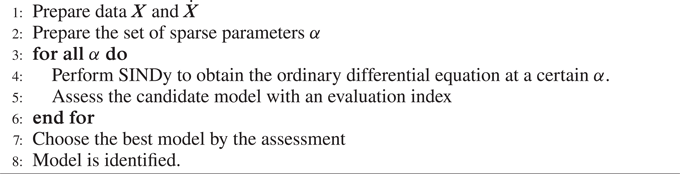

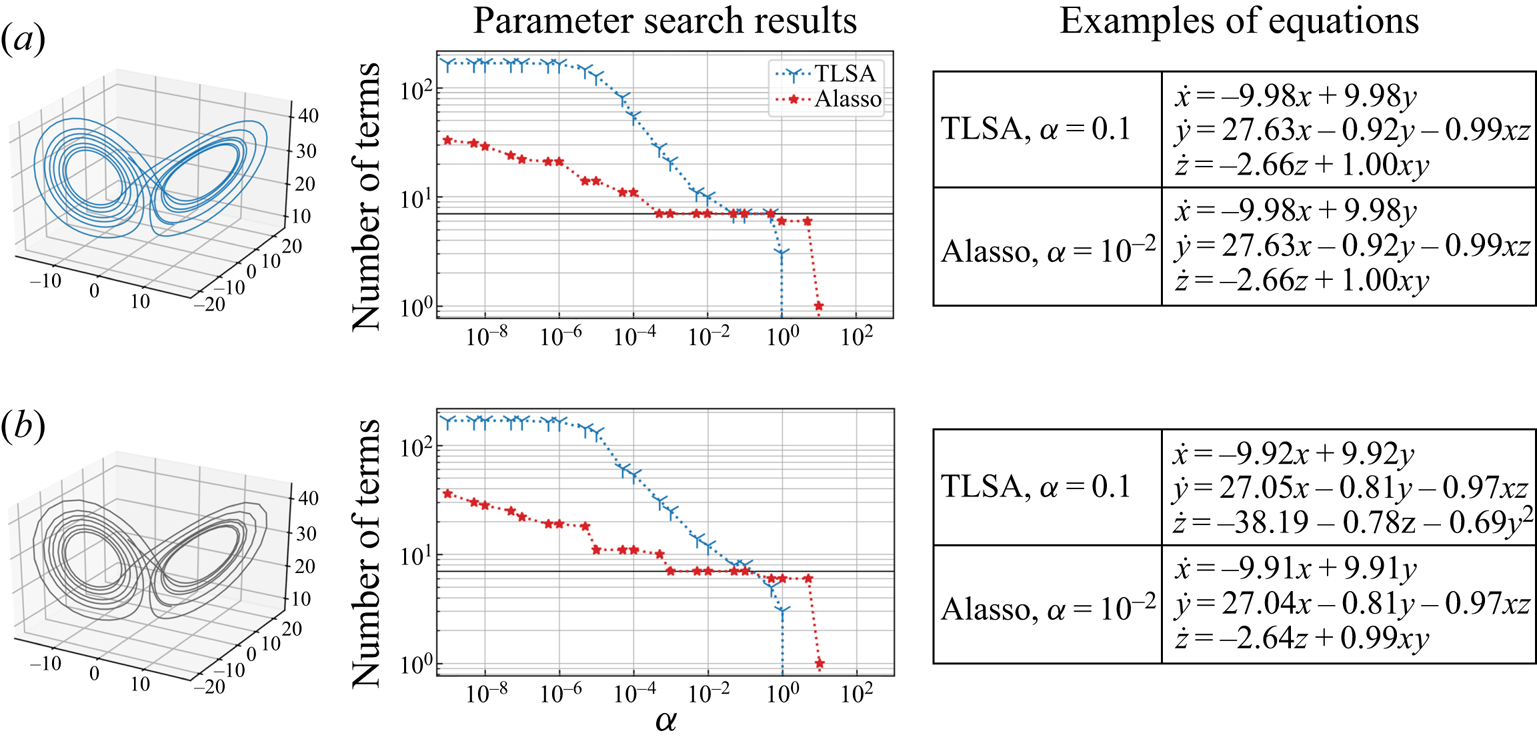

Let us perform SINDy with TLSA and Alasso for the AE latent variables with $n_r=4$. For the construction of the library matrix of this example, we include terms up to third order in the four latent variables. The relationship between the sparsity constant $\alpha$ and the number of terms in identified equations is examined in figure 16. Similarly to the previous two examples with a single cylinder, the trends for TLSA and Alasso are different from each other. Note that one can only refer to this map to check the sparsity trend of the identified equation, since there is no model equation. To validate the fidelity of the equations, we need to integrate the identified equations and decode a flow field using the decoder part of the AE.
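The validation step described above, i.e. integrating the identified equations in time, can be sketched as follows. The sketch assumes a polynomial library that includes the constant term and uses a classical fourth-order Runge-Kutta scheme; the integrator used in the original study is not stated here, and all function names are hypothetical. Decoding the integrated latent vectors would additionally require the trained CNN decoder, which is not reproduced in this sketch.

```python
import numpy as np
from itertools import combinations_with_replacement

def library_row(r, max_order):
    """Polynomial library evaluated at a single latent state r (1-D array)."""
    terms = [1.0]
    for order in range(1, max_order + 1):
        for idx in combinations_with_replacement(range(len(r)), order):
            terms.append(np.prod(r[list(idx)]))
    return np.array(terms)

def integrate_sindy(Xi, r0, dt, n_steps, max_order):
    """Integrate dr/dt = Theta(r) @ Xi with the classical RK4 scheme.

    Xi : coefficient matrix, shape (n_terms, n_vars)
    r0 : initial latent state, shape (n_vars,)
    Returns the trajectory, shape (n_steps + 1, n_vars).
    """
    def rhs(r):
        return library_row(r, max_order) @ Xi

    traj = np.empty((n_steps + 1, len(r0)))
    traj[0] = r0
    for n in range(n_steps):
        r = traj[n]
        k1 = rhs(r)
        k2 = rhs(r + 0.5 * dt * k1)
        k3 = rhs(r + 0.5 * dt * k2)
        k4 = rhs(r + dt * k3)
        traj[n + 1] = r + dt * (k1 + 2.0 * k2 + 2.0 * k3 + k4) / 6.0
    return traj
```

For four latent variables and terms up to third order, `library_row` returns 35 entries, so `Xi` would have 35 rows and four columns under these assumptions. A diverging or decaying trajectory from such an integration is what distinguishes a merely sparse model from a usable one.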

Figure 16. The relationship between the sparsity constant $\alpha$ and the number of terms in identified equations via SINDys with TLSA and Alasso for the two-parallel cylinder wake example.

The identified equations via the TLSA-based SINDy are investigated in figure 17. As examples, the cases with $\alpha =0.1$ and 0.5 are shown. The case with $\alpha =1$ which contains nonlinear terms shows a diverging behaviour at $t\approx 90$. The sparse equation obtained with $\alpha =0.5$ can provide stable solutions for $r_1$ and $r_2$; however, its sparseness causes an unstable integration for $r_3$ and $r_4$. Note in passing that the TLSA-based modelling cannot identify a stable solution, although we have carefully examined the influence of the sparsity factor for other cases not shown here.

Figure 17. Integration of the identified equations via the TLSA-based SINDy for the two-parallel cylinder wake example. The cases are $(a)$ TLSA, $\alpha = 0.1$ and $(b)$ TLSA, $\alpha = 0.5$.

We then use Alasso as a regression function of SINDy, as summarized in figure 18. The cases with $\alpha =5\times 10^{-7}$ and $10^{-6}$ are shown. The identified equation with $\alpha =5\times 10^{-7}$ can successfully be integrated and its temporal behaviour is in good agreement with the reference curve provided by the AE-based latent variables. However, what is notable here is that the equation with $\alpha =10^{-6}$ shows a decaying behaviour as the integration window increases, despite the equation form being very similar to the case with $\alpha =5\times 10^{-7}$. Some nonlinear terms are eliminated due to the slight increase in the sparsity constant. These results indicate the importance of a careful choice of both the regression function and the sparsity constant associated with it.

Figure 18. Integration of the identified equations via the Alasso-based SINDy for the two-parallel cylinder wake example. The cases are $(a)$ Alasso, $\alpha =5\times 10^{-7}$ and $(b)$ Alasso, $\alpha =10^{-6}$.

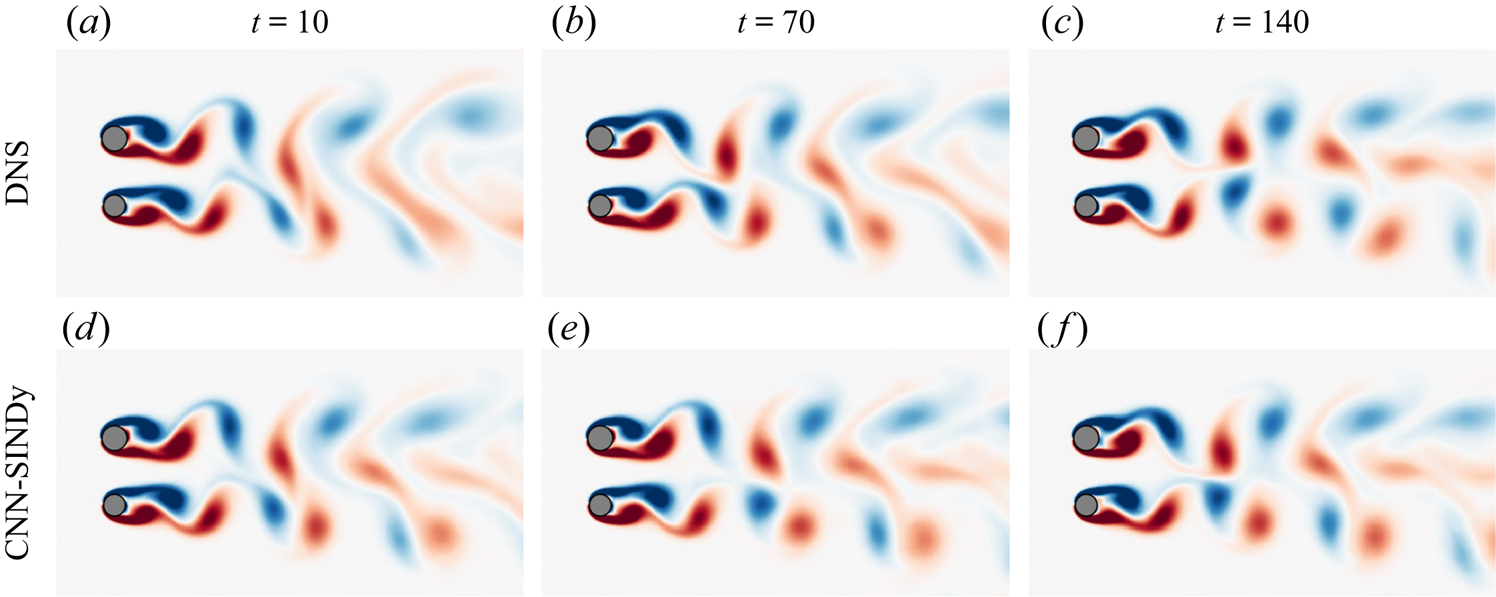

Based on the results above, the integrated variables with the case of Alasso with $\alpha =5\times 10^{-7}$ are fed into the CNN decoder, as presented in figure 19. The reproduced flow fields are in reasonable agreement with the reference DNS data, although there is a slight offset due to numerical integration. Hence, the present reduced-order model is able to achieve a reasonable wake reconstruction by following only the temporal evolution of the low-dimensionalized vector and carefully selecting the parameters, which is akin to the observation for the single cylinder cases.

Figure 19. Reproduced fields with the CNN-SINDy-based ROM of the two-parallel cylinders example. The case of Alasso with $\alpha =5\times 10^{-7}$ is used for SINDy. The DNS flow fields are also shown for the comparison.

3.4. Outlook: nine-equation shear flow model

One of the remaining issues of the present CNN-SINDy model is the applicability to flows where a lot of spatial modes are required to reconstruct the flow, e.g. turbulence. With the current scheme for the CNN-AE, it is difficult to compress turbulent flow data while keeping the information of high-dimensional dynamics (Murata et al. Reference Murata, Fukami and Fukagata2020). We have also recently reported that the difficulty of turbulence low-dimensionalization still remains even if we use a customized AE, although it can achieve a better mapping than that by conventional AE and POD (Fukami, Nakamura & Fukagata Reference Fukami, Nakamura and Fukagata2020c). In addition, the number of terms in a coefficient matrix must be drastically increased for the turbulent case unless the flow can be expressed with a smaller number of modes. Hence, our next question here is ‘can we also use SINDy if a turbulent flow can be mapped into a smaller number of modes?’. In this section, let us consider a nine-equation turbulent shear flow model between infinite parallel free-slip walls under a sinusoidal body force (Moehlis, Faisst & Eckhardt Reference Moehlis, Faisst and Eckhardt2004) as a preliminary example for the application to turbulence with a low number of modes.

In the nine-equation model, various statistics, including mean velocity profile, streaks and vortex structures, can be represented with only nine Fourier modes ${\boldsymbol u}_j({\boldsymbol x})$. Analogous to POD, the flow fields can be mathematically expressed with a superposition of temporal coefficients and modes such that

\begin{equation} {\boldsymbol u}({\boldsymbol x},t) = \sum_{j=1}^{9} a_j(t){\boldsymbol u}_j({\boldsymbol x}). \end{equation}

Here, nine ordinary differential equations for the nine mode coefficients are as follows:

\begin{gather} \dfrac{\textrm{d}a_2}{\textrm{d}t}={-}Re^{{-}1}\left(\dfrac{4\mu^{2}}{3}+\gamma^{2}\right)a_2+\dfrac{5\sqrt{2}}{3\sqrt{3}}\dfrac{\gamma^{2}}{\kappa_{\zeta\gamma}}a_4a_6-\dfrac{\gamma^{2}}{\sqrt{6}\kappa_{\zeta\gamma}}a_5a_7-\dfrac{\zeta\mu\gamma}{\sqrt{6}\kappa_{\zeta\gamma}\kappa_{\zeta\mu\gamma}}a_5a_8\nonumber\\ -\sqrt{\dfrac{3}{2}}\dfrac{\mu\gamma}{\kappa_{\mu\gamma}}a_1a_3-\sqrt{\dfrac{3}{2}}\dfrac{\mu\gamma}{\kappa_{\mu\gamma}}a_3a_9, \end{gather}

\begin{gather} \dfrac{\textrm{d}a_3}{\textrm{d}t}={-}\dfrac{\mu^{2}+\gamma^{2}}{Re}a_3+\dfrac{2}{\sqrt{6}}\dfrac{\zeta\mu\gamma}{\kappa_{\zeta\gamma}\kappa_{\mu\gamma}}(a_4a_7+a_5a_6)\nonumber\\ +\dfrac{\mu^{2}(3\zeta^{2}+\gamma^{2})-3\gamma^{2}(\zeta^{2}+\gamma^{2})}{\sqrt{6}\kappa_{\zeta\gamma}\kappa_{\mu\gamma}\kappa_{\zeta\mu\gamma}}a_4a_8, \end{gather}

\begin{gather} \dfrac{\textrm{d}a_4}{\textrm{d}t}={-}\dfrac{3\zeta^{2}+4\mu^{2}}{3Re}a_4-\dfrac{\zeta}{\sqrt{6}}a_1a_5-\dfrac{10}{3\sqrt{6}}\dfrac{\zeta^{2}}{\kappa_{\zeta\gamma}}a_2a_6\nonumber\\ -\sqrt{\dfrac{3}{2}}\dfrac{\zeta\mu\gamma}{\kappa_{\zeta\gamma}\kappa_{\mu\gamma}}a_3a_7-\sqrt{\dfrac{3}{2}}\dfrac{\zeta^{2}\mu^{2}}{\kappa_{\zeta\gamma}\kappa_{\mu\gamma}\kappa_{\zeta\mu\gamma}}a_3a_8-\dfrac{\zeta}{\sqrt{6}}a_5a_9, \end{gather}

\begin{gather} \dfrac{\textrm{d}a_5}{\textrm{d}t}={-}\dfrac{\zeta^{2}+\mu^{2}}{Re}a_5+\dfrac{\zeta}{\sqrt{6}}a_1a_4+\dfrac{\zeta^{2}}{\sqrt{6}\kappa_{\zeta\gamma}}a_2a_7\nonumber\\ -\dfrac{\zeta\mu\gamma}{\sqrt{6}\kappa_{\zeta\gamma}\kappa_{\zeta\mu\gamma}}a_2a_8+\dfrac{\zeta}{\sqrt{6}}a_4a_9+\dfrac{2}{\sqrt{6}}\dfrac{\zeta\mu\gamma}{\kappa_{\zeta\gamma}\kappa_{\mu\gamma}}a_3a_6, \end{gather}

\begin{gather} \dfrac{\textrm{d}a_6}{\textrm{d}t}={-}\dfrac{3\zeta^{2}+4\mu^{2}+3\gamma^{2}}{3Re}a_6+\dfrac{\zeta}{\sqrt{6}}a_1a_7+\sqrt{\dfrac{3}{2}}\dfrac{\mu\gamma}{\kappa_{\zeta\mu\gamma}}a_1a_8\nonumber\\ +\dfrac{10}{3\sqrt{6}}\dfrac{\zeta^{2}-\gamma^{2}}{\kappa_{\zeta\gamma}}a_2a_4-2\sqrt{\dfrac{2}{3}}\dfrac{\zeta\mu\gamma}{\kappa_{\zeta\gamma}\kappa_{\mu\gamma}}a_3a_5+\dfrac{\zeta}{\sqrt{6}}a_7a_9+\sqrt{\dfrac{3}{2}}\dfrac{\mu\gamma}{\kappa_{\zeta\mu\gamma}}a_8a_9, \end{gather}

\begin{gather} \dfrac{\textrm{d}a_7}{\textrm{d}t}={-}\dfrac{\zeta^{2}+\mu^{2}+\gamma^{2}}{Re}a_7-\dfrac{\zeta}{\sqrt{6}}(a_1a_6+a_6a_9)\nonumber\\ +\dfrac{1}{\sqrt{6}}\dfrac{\gamma^{2}-\zeta^{2}}{\kappa_{\zeta\gamma}}a_2a_5+\dfrac{1}{\sqrt{6}}\dfrac{\zeta\mu\gamma}{\kappa_{\zeta\gamma}\kappa_{\mu\gamma}}a_3a_4, \end{gather}

where $\zeta$, $\mu$ and $\gamma$ are constant values, $\kappa _{\zeta \gamma }=\sqrt {\zeta ^{2}+\gamma ^{2}}$, $\kappa _{\mu \gamma }=\sqrt {\mu ^{2}+\gamma ^{2}}$ and $\kappa _{\zeta \mu \gamma }=\sqrt {\zeta ^{2}+\mu ^{2}+\gamma ^{2}}$. The amplitudes $a_i$ are multiplied by the corresponding Fourier modes, each of which has an individual role in reconstructing the flow, e.g. basic profile, streak and spanwise flows. We refer interested readers to Moehlis et al. (2004) for details.

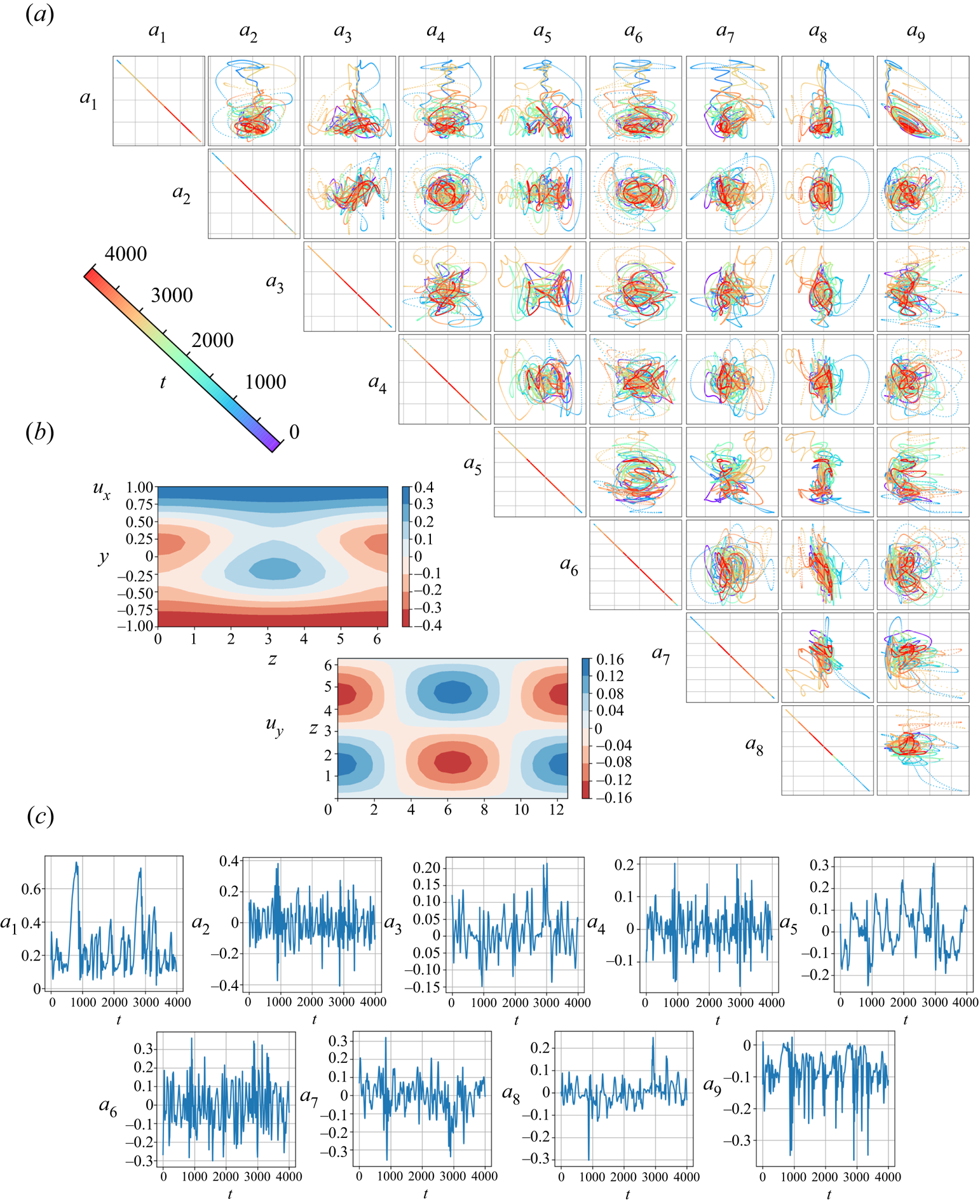

In this section, we aim to obtain the coefficient matrix for the simultaneous time differential equations (3.5) to (3.13) using SINDy. In the following, we set the Reynolds number, based on the channel half-height $\delta$ and the laminar velocity $U_0$ at a distance of $\delta /2$ from the top wall, to $Re = 400$. The initial condition for numerical integration of the equations above is $(a^{0}_1,a^{0}_2,a^{0}_3,a^{0}_4,a^{0}_5,a^{0}_6,a^{0}_7,a^{0}_8,a^{0}_9)=(1, 0.07066, -0.07076, 0, 0, 0, 0, 0, 0)$ with a small random perturbation for $a_4$, which is the same set-up as the reference code by Srinivasan et al. (2019). The lengths and the number of grid points of the computational domain are set to $(L_x, L_y, L_z)=(4{\rm \pi} , 2, 2{\rm \pi} )$ and $(N_x,N_y,N_z)=(21,21,21)$, respectively. The constant values are set to $(\zeta , \mu , \gamma )=(2{\rm \pi} /L_x, {\rm \pi}/2, 2{\rm \pi} /L_z)$ and the time step is 0.5. Examples of the streamwise-averaged velocity $u_x$ contour and the velocity $u_y$ contour at the midplane, together with the temporal evolution of the amplitudes $a_i$ and the pairwise correlations of the present nine coefficients, are shown in figure 20. The chaotic nature of the considered problem can be seen. For performing SINDy, we use 10 000 discretized coefficients as the training data.
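As a minimal sketch of how the constants quoted above enter the model, the following evaluates $(\zeta, \mu, \gamma)$, the $\kappa$ factors and the right-hand side of the $a_7$ equation. The names `da7_dt` and `a0` are hypothetical and introduced only for illustration. The initial state is read as nine amplitudes, and the random perturbation of $a_4$ is omitted here.

```python
import math

# Domain lengths and Reynolds number quoted in the text
L_x, L_z = 4 * math.pi, 2 * math.pi
Re = 400.0

# Constants (zeta, mu, gamma) = (2*pi/L_x, pi/2, 2*pi/L_z)
zeta = 2 * math.pi / L_x
mu = math.pi / 2
gamma = 2 * math.pi / L_z

# Derived kappa factors as defined after the model equations
kappa_zg = math.sqrt(zeta**2 + gamma**2)
kappa_mg = math.sqrt(mu**2 + gamma**2)
kappa_zmg = math.sqrt(zeta**2 + mu**2 + gamma**2)


def da7_dt(a):
    """Right-hand side of the a_7 equation; `a` holds (a_1, ..., a_9)."""
    a1, a2, a3, a4, a5, a6, a7, a8, a9 = a
    return (
        -(zeta**2 + mu**2 + gamma**2) / Re * a7
        - zeta / math.sqrt(6) * (a1 * a6 + a6 * a9)
        + (gamma**2 - zeta**2) / (math.sqrt(6) * kappa_zg) * a2 * a5
        + zeta * mu * gamma / (math.sqrt(6) * kappa_zg * kappa_mg) * a3 * a4
    )


# Assumed reading of the initial state: nine amplitudes, a_4 unperturbed
a0 = (1.0, 0.07066, -0.07076, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0)
print(zeta, mu, gamma)
print(da7_dt(a0))
```

With this domain, $\zeta = 0.5$ and $\gamma = 1$, so the linear damping rate of $a_7$ is $(\zeta^{2}+\mu^{2}+\gamma^{2})/Re$ with $Re = 400$.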

Figure 20. $(a)$ Pairwise correlations of nine coefficients. $(b)$ Example contours of velocity $u_x$ and velocity $u_y$ at midplane. $(c)$ Temporal evolution of amplitudes $a_i$.

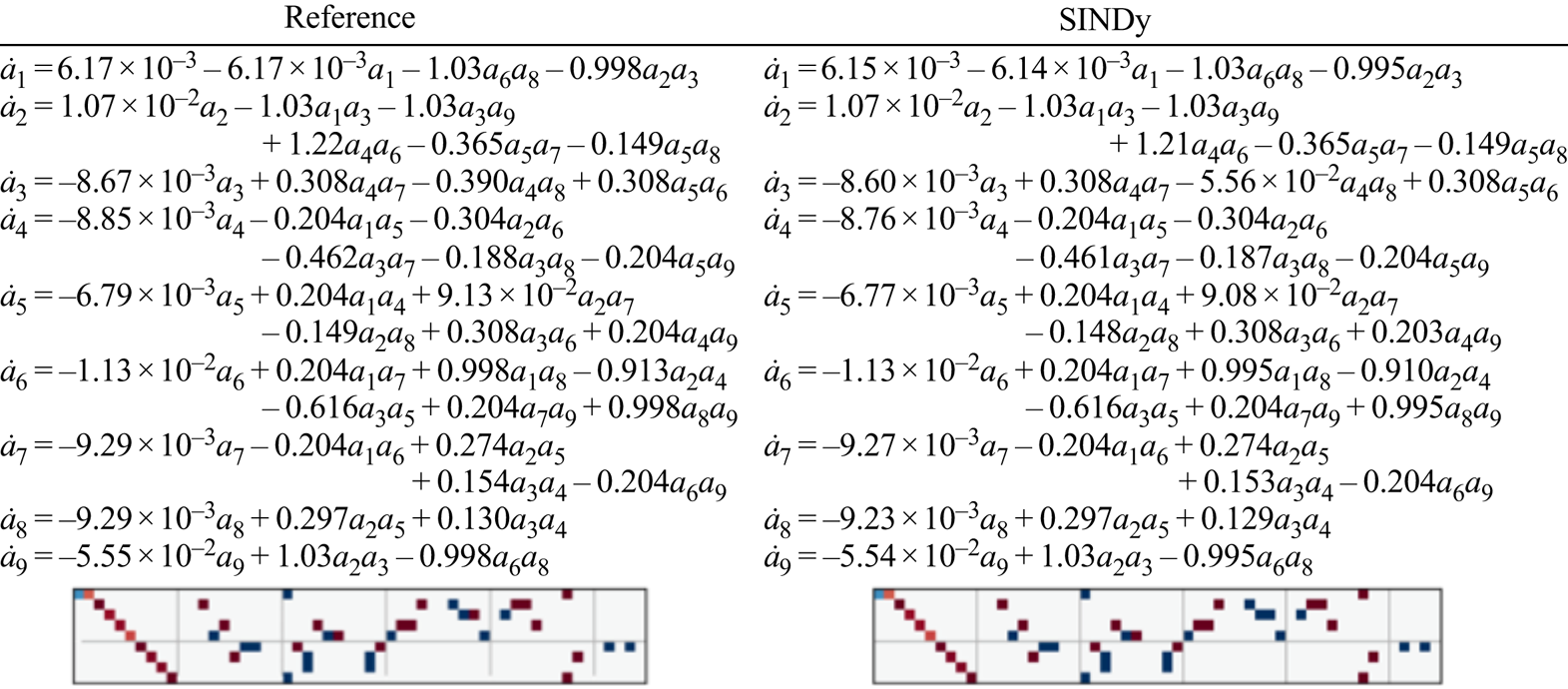

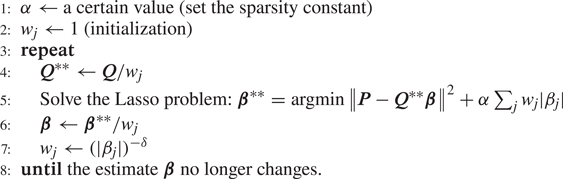

The SINDy in this section is also performed with TLSA and Alasso following the discussions above. Since the equations for the temporal coefficients are constructed up to second-order terms, the coefficient matrix also includes up to second-order terms, as shown in figure 21$(a)$. The total number of terms considered here is 55. The results with TLSA and Alasso are summarized in figure 21$(b)$. The matrices located in the right-hand area correspond to the coefficient matrix in figure 21$(a)$. Similar to the results above, the model obtained with TLSA contains some very large coefficients because it lacks a penalty term. In particular, the effects of overfitting can be seen in the low-order portion. On the other hand, SINDy with Alasso performs remarkably well. With an appropriate sparsity constant $\alpha$, the governing equations are represented successfully. The magnitudes of the individual coefficients are shown in figure 22. It is striking that the dominant terms are perfectly captured by SINDy, although the magnitudes differ slightly. These noteworthy results indicate that a governing equation of low-dimensionalized turbulent flows can be obtained from time series data alone by using SINDy with appropriate parameter selections. In other words, we may also be able to construct a machine-learning-based reduced-order model for turbulent flows in an interpretable form, namely as an equation, if a well-designed model for mapping into low-dimensional manifolds can be constructed.
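The count of 55 candidate terms can be checked by enumerating all monomials of the nine amplitudes up to second order: one constant, nine linear terms and 45 quadratic terms. The sketch below is illustrative only. The function name `polynomial_library` and the term ordering are assumptions and need not match the layout of the coefficient matrix in figure 21$(a)$.

```python
from itertools import combinations_with_replacement


def polynomial_library(a, degree=2):
    """Return (names, values) for all monomials of `a` up to `degree`,
    including the constant term."""
    names, values = [], []
    for d in range(degree + 1):
        for idx in combinations_with_replacement(range(len(a)), d):
            # The empty index tuple (d = 0) is the constant term "1"
            names.append("*".join(f"a{i + 1}" for i in idx) or "1")
            value = 1.0
            for i in idx:
                value *= a[i]
            values.append(value)
    return names, values


# One sample of the nine amplitudes (values chosen only for illustration)
sample = [1.0, 0.07066, -0.07076, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]
names, values = polynomial_library(sample)
print(len(names))  # 55 = 1 + 9 + 45
```

Evaluating this library at every time step gives one row per snapshot, and the sparse regression then selects which of the 55 columns enter each of the nine equations.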

Figure 21. The SINDy for the nine-equation shear flow model. $(a)$ Schematic of coefficient matrix $\beta$. $(b)$ Relationship between the sparsity constant $\alpha$ and the number of terms with the obtained coefficient matrices.

Figure 22. Comparison of the governing equation for temporal coefficients.

The robustness of SINDy against noisy measurements observed in the training pipeline for this example is finally assessed in figure 23. We here introduce the Gaussian perturbation for the training data as

where ${\boldsymbol f}$ is a target vector, $\gamma$ is the magnitude of the noise and ${\boldsymbol n}$ denotes the Gaussian noise. As the magnitude of noise, four cases $\gamma _m=\{0.05, 0.10, 0.20, 0.40\}$ are considered. With $\gamma = 0.05$, SINDy is still able to identify the equation. However, as the noise magnitude of the training data increases, the first-order terms are eliminated and unnecessary high-order terms are added, although the overall trend of the coefficient matrix can still be captured. These results imply that care should be taken with noisy observations in training data, depending on the problem setting that users handle, although SINDy remains robust to a slight perturbation.
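A minimal sketch of perturbing training data with Gaussian noise is given below. It assumes an additive form, ${\boldsymbol f} + \gamma\,{\boldsymbol n}$, with ${\boldsymbol n}$ drawn from a standard normal distribution. This form is an assumption made for illustration and may differ from the exact definition used for figure 23. The function name `add_gaussian_noise` is hypothetical.

```python
import random


def add_gaussian_noise(f, noise_magnitude, rng):
    """Return f + noise_magnitude * n with n ~ N(0, 1) per entry.

    The additive form is an assumption, not a confirmed definition.
    """
    return [x + noise_magnitude * rng.gauss(0.0, 1.0) for x in f]


rng = random.Random(0)  # fixed seed so the sketch is reproducible
f = [1.0, 0.07066, -0.07076]

# The four noise magnitudes considered in the text
for gamma_m in (0.05, 0.10, 0.20, 0.40):
    noisy = add_gaussian_noise(f, gamma_m, rng)
    print(gamma_m, noisy)
```

Under this assumption the perturbation has the same standard deviation for every entry, so amplitudes that stay close to zero are affected relatively more than large ones.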

Figure 23. Noise robustness of SINDy for the nine-equation turbulent shear flow example.

4. Conclusion

We performed SINDy for low-dimensionalized fluid flows and investigated the influence of the regression methods and parameters considered in the construction of SINDy-based models. Following our preliminary test, SINDy with two regression methods, the TLSA and the Alasso, was applied with a CNN-AE to the examples of a wake around a cylinder, its transient process, and a wake of two parallel cylinders. The CNN-AE was employed to map high-dimensional flow data into a two-dimensional latent space using nonlinear functions. The temporal evolution of the latent dynamics could be followed well by SINDy with an appropriate parameter selection for these examples, although the required number of terms in the coefficient matrix differs between them owing to the difference in complexity.

Finally, we also investigated the applicability of SINDy to turbulence with a low-dimensional representation using a nine-equation shear flow model. The governing equation could be obtained successfully by utilizing Alasso with the appropriate sparsity constant. The results indicated that an ML-ROM for turbulent flows can perhaps be presented in an interpretable form, namely as an equation, if a well-designed model for mapping high-dimensional complex flows into low-dimensional manifolds can be constructed. With regard to the low-dimensional manifolds of fluid flows, the work by Loiseau et al. (2018a) discussed the nonlinear relationship between lower-order POD modes and their higher-order counterparts in cylinder wake dynamics by relating it to a triadic interaction among POD modes. We may be able to borrow this concept to investigate possible nonlinear correlations in the data, although this is left for future work since it is not easy to apply their idea directly to the nine-equation shear flow model, which is composed of more complex relationships among modes. Alternatively, a combination of uncertainty quantification (Maulik et al. 2020), transfer learning (Guastoni et al. 2020a; Morimoto et al. 2020b) and Koopman-based frameworks (Eivazi et al. 2021) may also be possible extensions toward more practical applications.