1 Introduction

Message-passing is a common communication model for developing concurrent and distributed systems where concurrent computations communicate through the passing of messages via send and recv operations. With growing demand for robust concurrent programming support at the language level, many programming languages or frameworks, including Scala (Haller & Odersky, Reference Haller and Odersky2009), Erlang (Armstrong et al., Reference Armstrong, Virding, Wikstrom and Williams1996), Go (Gerrand, Reference Gerrand2010), Rust (Klabnik & Nichols, Reference Klabnik and Nichols2020), Racket (Rac, 2019), Android (And, 2020), and Concurrent ML (Reppy, Reference Reppy1991), have adopted this model, providing support for writing expressive (sometimes first-class) communication protocols.

In many applications, the desire to express priority over communication arises. The traditional approach to this is to give priority to threads (Mueller, Reference Mueller1993). In a shared memory model, where concurrent access is regulated by locks, this approach works well. The trivial application of priority to message-passing languages, however, fails when messages are not just simple primitive values but communication protocols themselves (i.e. first-class representations of communication primitives and combinators). These first-class entities allow threads to perform communication protocols on behalf of their communication partners – a common paradigm in Android applications. For example, consider a thread receiving a message carrying a protocol from another thread. It is unclear with which priority the passed protocol should be executed – should it be the priority of the sending thread, the priority of receiving thread, or a user-specified priority?

In message-passing models such as Concurrent ML (CML), threads communicate synchronously according to the protocols constructed from send and receive primitives and combinators. In CML, synchronizing on the communication protocol triggers the execution of the protocol. Importantly, CML provides selective communication, allowing for computations to pick nondeterministically between a set of available messages or block until a message arrives. As a result of nondeterministic selection, the programmer is unable to impose preference over communications. If the programmer wants to encode preference, more complicated protocols must be introduced, whereas adding priority to selective communication gives the programmer to ability to specify the order in which messages should be picked.

Adding priority to such a model is challenging. Consider a selective communication, where multiple potential messages are available, and one must be chosen. If the selective communication only looks at messages and not their blocked senders, a choosing thread may inadvertently pick a low priority thread to communicate with when there is a thread with higher priority waiting to be unblocked. Such a situation would lead to priority inversion. Since these communication primitives must therefore be priority-aware, a need arises for clear rules about how priorities should compose and be compared. Such rules should not put an undue burden on the programmer or complicate the expression of already complex communication protocols.

In this paper, we propose a tiered-priority scheme that defines prioritized messages as first-class citizens in a CML-like message-passing language. Our scheme introduces the core computation within a message, an action, as the prioritized entity. We provide a realization of our priority scheme called PrioCML, as a modification to Concurrent ML. To demonstrate the practicality of PrioCML, we evaluate its performance by extending an existing web server and X-windowing toolkit. The main contributions of this paper are:

-

1. We define a meaning for priority in a message-passing model with a tiered-priority scheme. To our knowledge, this is the first definition of priority in a message-passing context. Crucially, we allow the ability for threads of differing priorities to communicate and provide the ability to prioritize first-class communication protocols.

-

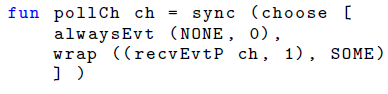

2. We present a new language PrioCML, which provides this tiered-priority scheme. PrioCML can express the semantics of polling, which cannot be modeled correctly in CML due to nondeterministic communication.

-

3. We formalize PrioCML using a novel approach to Concurrent ML semantics focusing on communication as the reduction of communication actions. We leverage this approach to express our tiered-priority scheme and prove several important properties, most notably, freedom from communication derived priority inversions.

-

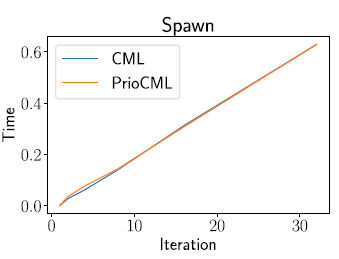

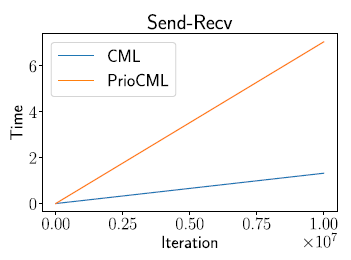

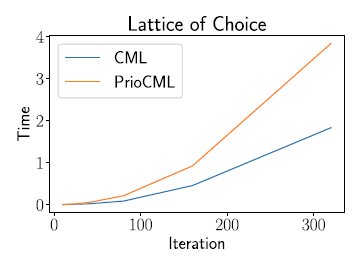

4. We implement the language PrioCML and evaluate its performance on the buyer-seller protocol, Swerve web server, and the eXene windowing toolkit as well as microbenchmarks.

This paper extends our previous work of PrioCML (Chuang et al., Reference Chuang, Iraci, Ziarek, Morales and Orchard2021) by providing a formal semantics of PrioCML, a case study and discussion of the buyer-seller protocol, added implementation details, as well as the tiered-priority scheme.

2 Background

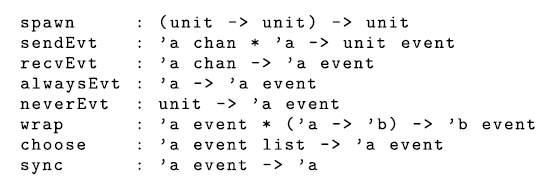

We realize our priority scheme in the context of Concurrent ML (CML), a language extension of Standard ML (Milner et al., Reference Milner, Tofte and Macqueen1997). CML enables programmers to express first-class synchronous message-passing protocols with the primitives shown in Figure 1. The core building blocks of protocols in CML are events and event combinators. The two communication base events are sendEvt and recvEvt. Both are defined over a channel, a conduit through which a message can be passed. Here sendEvt specifies putting a value into the channel, and recvEvt specifies extracting a value from the channel. It is important to note both sendEvt and recvEvt are the functions to construct events, and those events do not perform their specified actions until synchronized on using the sync primitive. Thus, the meaning of sending or receiving a value is the composition of synchronization, and an event – sync (sendEvt(c, v)) will place the value v on channel c and, sync (recvEvt(c)) will remove a value v from channel c. In CML, both sending and receiving are synchronous, and therefore, the execution of the protocol will block unless there is a matching action.

Fig. 1. Core CML primitives.

The expressive power of CML is derived from the ability to compose events using event combinators to construct first-class communication protocols. We consider two such event combinators: wrap and choose. The wrap combinator takes an event e1 and a post-synchronization function f and creates a new event e2. Note that the function f is a value of type ’a -> ’b. When the event e2 is synchronized on, the actions specified in the original event e1 are executed; then, the function f is applied to the result. Thus, the result of synchronizing on the event e2 is the result of the function f.

To allow the expression of complex communication protocols, CML supports selective communication. The event combinator choose takes a list of events and picks an event from this list to be synchronized on. For example, sync (choose([recvEvt(c1), sendEvt(c2, v2)])) will pick between recvEvt(c1) and sendEvt(c2, v2) and based on which event is chosen will execute the action specified by that event. The semantics of choice depends on whether any of the events in the input event list have a matching communication partner available. Simply put, choose picks an available event, if only one is available, or nondeterministically picks an event from the subset of available events out of the input list. For example, if some other thread in our system performed sync (sendEvt(c1, v1)), then choose will pick recvEvt(c1). However, if a third thread has executed recvEvt(c2), then choose will pick nondeterministically between recvEvt(c1) and sendEvt(c2, v2). If no events are available, then choose will block until one of the events becomes available. The always event (alwaysEvt) creates an event that is always available and returns the value it stores when synchronized on. Always events are useful for providing default behaviors within choice. Dually, the never event (neverEvt) creates an event that is never available. If part of a choice, it can never be selected, and if synchronized on directly, the synchronization will never complete. This is useful for providing general definitions of constructs using choice.

A key innovation in the development of CML was support for protocols as first-class entities. Specifically, this means a protocol, represented by an event, is a value and can itself be passed over a channel. Once an event has been constructed from base events and event combinators, it can be communicated to another participant. This is the motivation for the division of communication into two distinct parts: the creation of an event and the synchronization on that event. In CML, the first-class nature of events means these phases may happen a different number of times and on different threads. In CML programs, first-class events provide an elegant encoding of call-back like behaviors.

3 Motivation

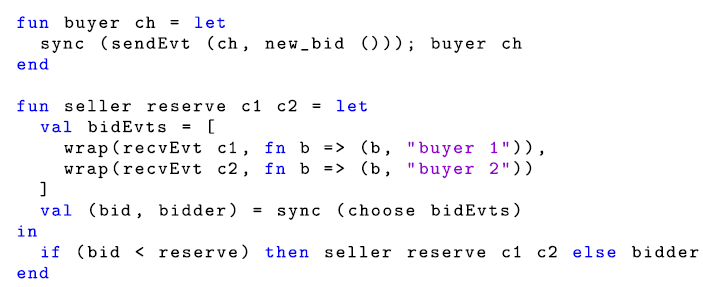

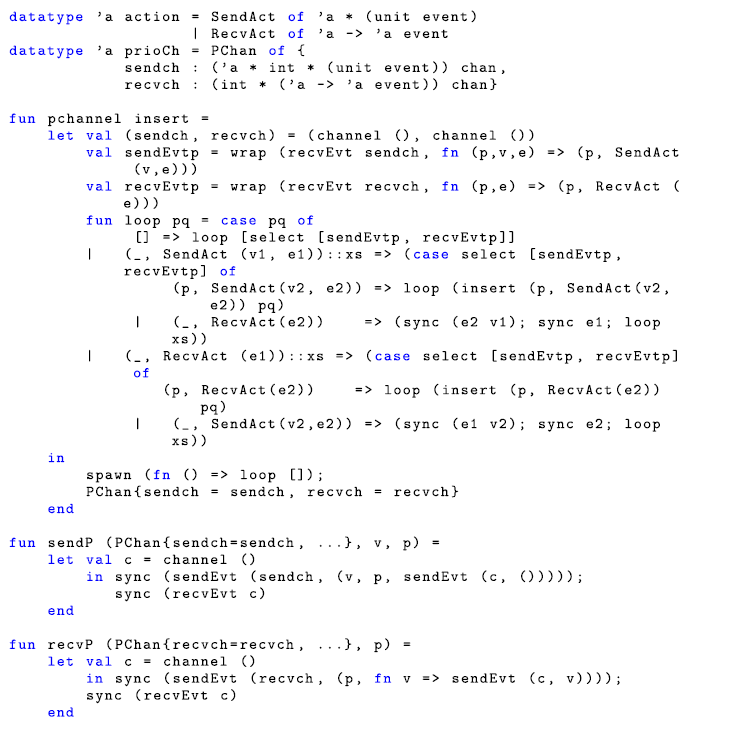

The desire for priority naturally occurs anywhere we wish to encode preference. Consider the Buyer-Seller protocol, commonly used an example protocol in both distributed systems (Ezhilchelvan & Morgan, Reference Ezhilchelvan and Morgan2001) and session types work (Vallecillo et al., Reference Vallecillo, Vasconcelos and Ravara2006). The protocol is a model of the interactions between a used book seller and a buyer negotiating the price for a book. We consider the variant in which there are two buyers submitting competing bids on a book from the seller. The seller receives the offers one at a time and solicits another offer if the offer is rejected. The protocol progresses until a buyer places an offer that the seller accepts. We can implement this protocol in CML by giving each buyer a channel along which they can submit bids to the seller. The seller selects a bid using the CML choose primitive to nondeterministically select a pending offer. The synchronous nature of CML send means that a buyer may only submit one bid at a time; they are blocked from executing until the bid is chosen. The core of this protocol is shown in Figure 2. We use the CML wrap event combinator to attach a label to the bid indicating which buyer it was received from.

Fig. 2. The two buyer-seller protocol.

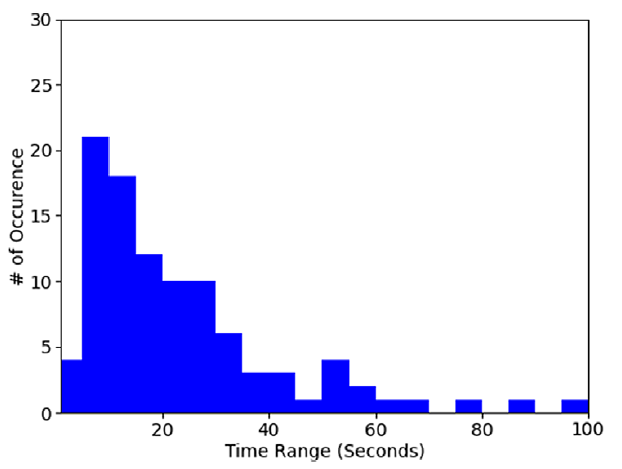

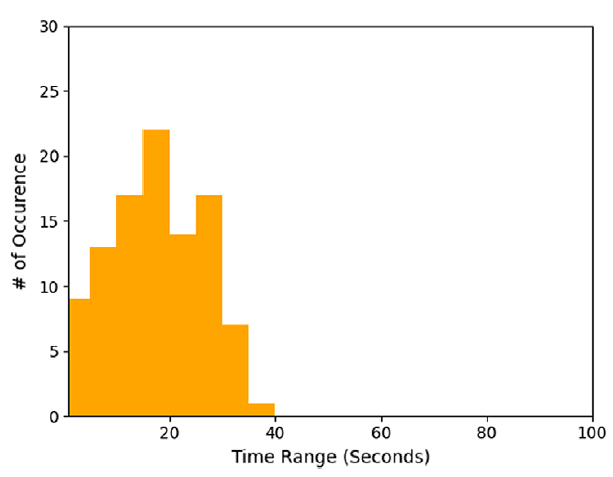

Observe the choose primitive is entirely responsible for picking which buyer gets a chance to submit a bid. Although we express no preference in this protocol, the semantics of choose in CML are nondeterministic. Thus, while we would like for both buyers to have equal opportunity to submit bids, CML provides no guarantee of this. Fundamentally, what we desire is notion of fairness in the choice between the buyers. We roughly expect that the nondeterministic selection grants both buyers equal chance to be chosen. If the selections each round are statistically independent however, one buyer may, by chance, be able to place a significantly larger number of offers. This is because the number of bids placed has no influence on the selection in the next round. If a buyer is unlucky, they may be passed over several rounds in a row. To combat this CML integrates a heuristic for preventing thread starvation. That heuristic will attempt to prevent one buyer from repeatedly being selected, but makes no guarantees. We examine the effectiveness of this heuristic in Section 6.2. With priority, we could encode a much stronger fairness property. If we could express a preference between the buyers based on number of previous bids, we could enforce a round-robin selection that would guarantee the buyers submit an equal number of bids. The addition of priority would allow the programmer to control the undesirable aspects of nondeterminism in the system.

Where to add priority in the language, however, is not immediately clear. In a message-passing system, we have two entities to consider: computations, as represented by threads, and communications, as represented by first-class events. In our example, the prioritized element is communication, not computation. If we directly applied a thread-based model of priority to the system, the priority of that communication would be tied to the thread that created it. To prioritize a communication alone, we could isolate a communication into a dedicated thread to separate its priority. While simple, this approach has a few major disadvantages. It requires an extra thread to be spawned and scheduled. This approach also is not easily composed, with a change of priority requiring the spawning of yet another thread. A bigger issue is that the introduction of the new thread would then pass the communication message to the spawned thread, and the original thread is then unblocked as the message is sent to the spawned thread. This breaks the guarantee that the sent value will have been received in the continuation of the send that the synchronous behavior of CML provides. When communication is the only method to order computations between threads, this is a major limitation on what can be expressed.

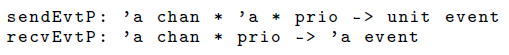

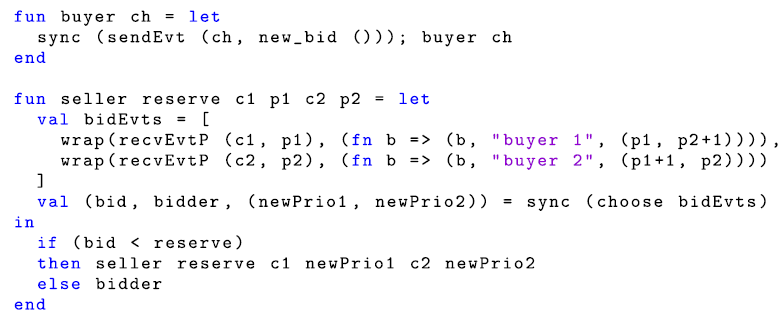

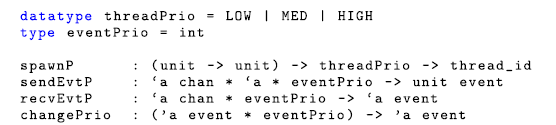

Instead, consider what happens if we attach priority directly to communication. In the case of CML, since communications are first-class entities, this would mean prioritizing events. Assume we extend our language with new forms of events, like sendEvtP and recvEvtP which take an additional parameter that specifies the priority as shown in following type signature

We can use these new primitives to realize a prioritized variant of the protocol as code shown in Figure 3. Each receive event is wrapped with a post-synchronization function that increases the priority of the other buyer. This means that the higher priority will go to the buyer that has submitted fewer bids. PrioCML choice will always pick the event with highest priority within a selection.

Fig. 3. The prioritized two buyer-seller protocol.

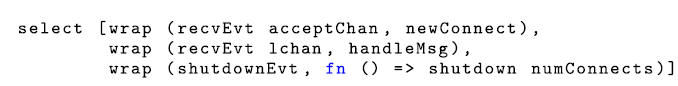

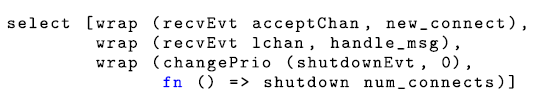

This need to express a preference when presented with nondeterminism occurs in many CML programs. Consider as another example a web server written in CML. For such a server, it is important to handle external events gracefully and without causing errors for clients. One such external event is a shutdown request. We want the server to terminate, but only once it has reached a consistent state and without prematurely breaking client connections. Conceptually, each component needs to be notified of the shutdown request and act accordingly. We can elegantly accomplish this by leveraging the first-class events of CML. If a server is encoded to accept new work via communication in its main processing loop, we can add in shutdown behavior by using selective communication. Specifically, we can pick between a shutdown notification and accepting new work. The component can either continue or begin the termination process. However, by introducing selective communication, we also introduce nondeterminism into our system. The consequence is that we have no guarantee that the server will process the shutdown event if it consistently has the option to accept new work. The solution is to again use priority to constrain the nondeterministic behavior. By attaching a higher priority to the shutdown event, we express our desire that given the option between accepting new work and termination, we would prefer termination.

While event priority allows us to express communication priority, we still desire a way to express the priority of the computations. In the case of our server, we may want to give a higher priority to serving clients over background tasks like logging. The issue here is not driven by communications between threads but rather competing for computation. As such, we need a system with both event (communication) and thread (computation) priority.

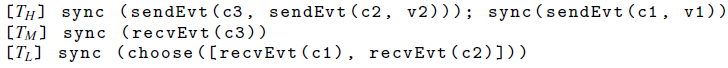

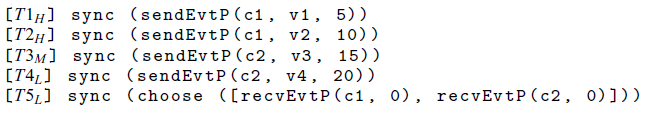

The introduction of priorities in computation presents several problems when integrated with synchronous message-passing. Considering the priorities of threads and events in isolation gives rise to priority inversion caused by communication. Priority inversion happens when communication patterns result in a low priority thread getting scheduled in place of a high-priority thread due to a communication choosing the low priority thread over the high-priority one. This arises because we have no guarantee that the communication priorities agree with the thread priorities. To see this effect, consider the CML program shown in code where we use

![]() $T_P$

to annotate the thread priority as high, medium, or low priority.

$T_P$

to annotate the thread priority as high, medium, or low priority.

The programmer is free to specify event priorities that contradict the priorities of threads. Therefore, to avoid priority inversion, we must make choose aware of thread priority. A naive approach is to force the thread priority onto events. That is, an event would have the priority equal to that of the thread that created it. We can realize this approach in this example by changing sendEvt and recvEvt to sendEvtP and recvEvtP with thread priorities as the arguments. At first glance, it seems to solve the problem that shows up in the example above. The choice in

![]() $T_L$

now can pick recvEvtP(c1, LOW) as the matching sendEvtP(c1, v1, HIGH) comes from

$T_L$

now can pick recvEvtP(c1, LOW) as the matching sendEvtP(c1, v1, HIGH) comes from

![]() $T_H$

. This approach effectively eliminates event priorities, reviving all of the above issues with a purely thread-based model.

$T_H$

. This approach effectively eliminates event priorities, reviving all of the above issues with a purely thread-based model.

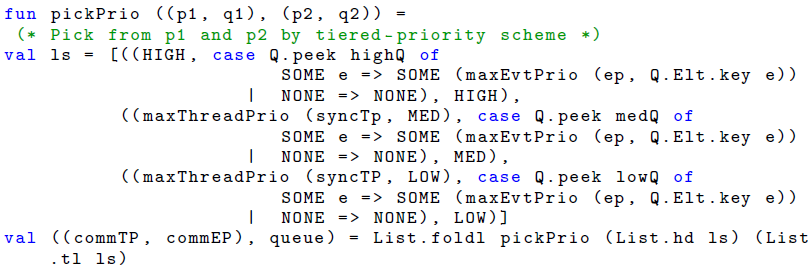

The desirable solution is to combine the priorities of the thread and the event. In order to avoid priority inversion, the thread priority must take precedence. This scheme resolves the problem illustrated. To resolve choices between threads of the same priority, we allow the programmer to specify an event priority. This priority is considered after the priority of all threads involved. This allows the message in our shutdown example to properly take precedence over other messages from high-priority threads.

This scheme is nearly complete but is complicated by CML’s exposure to events as first-class entities. Specifically, events can be created within one thread and sent over a channel to another thread for synchronization. When that happens, applying the priority of the thread that created the event brings back the possibility of priority inversion. To see why, consider the example in code:

In this example,

![]() $T_H$

sends a sendEvt over the channel c3 which will be received and synchronized on by

$T_H$

sends a sendEvt over the channel c3 which will be received and synchronized on by

![]() $T_M$

. It is to be noted that this sendEvt will be at the highest priority (which was inherited from its creator

$T_M$

. It is to be noted that this sendEvt will be at the highest priority (which was inherited from its creator

![]() $T_H$

) even though it is synchronized on by

$T_H$

) even though it is synchronized on by

![]() $T_M$

.

$T_M$

.

![]() $T_H$

then sends out a value v1 on channel c1.

$T_H$

then sends out a value v1 on channel c1.

![]() $T_L$

has to choose between receiving the value on channel c1 or on channel c2. Since

$T_L$

has to choose between receiving the value on channel c1 or on channel c2. Since

![]() $T_H$

and

$T_H$

and

![]() $T_M$

are both of higher priority than

$T_M$

are both of higher priority than

![]() $T_L$

, they will both execute their communications before

$T_L$

, they will both execute their communications before

![]() $T_L$

does. Thus,

$T_L$

does. Thus,

![]() $T_L$

will have to make a choice between either unblocking

$T_L$

will have to make a choice between either unblocking

![]() $T_M$

or

$T_M$

or

![]() $T_H$

(by receiving on channel c2 or c1 respectively). Recall that the priority is determined by the thread that created the event and not by the thread that synchronizes it in the current scenario. Therefore, this choice will be nondeterministic; both communications are of the same priority as those created by the same thread.

$T_H$

(by receiving on channel c2 or c1 respectively). Recall that the priority is determined by the thread that created the event and not by the thread that synchronizes it in the current scenario. Therefore, this choice will be nondeterministic; both communications are of the same priority as those created by the same thread.

![]() $T_L$

might choose to receive on channel c2 and thus allow the medium priority thread

$T_L$

might choose to receive on channel c2 and thus allow the medium priority thread

![]() $T_M$

to run while the high-priority thread

$T_M$

to run while the high-priority thread

![]() $T_H$

is still blocked – a priority inversion.

$T_H$

is still blocked – a priority inversion.

The important observation to be made from this example is that priority, when inherited from a thread, should be from the thread that synchronizes on an event instead of the thread that creates the event. This matches our intuition about the root of priority inversion, as the synchronizing thread is the one that blocks, and priority inversion happens when the wrong threads remain blocked.

We have now reconciled the competing goals of user-defined event priority and inversion-preventing thread priority. In doing so, we arrive at a tiered-priority scheme. The priority given to threads takes precedence, as is necessary to prevent priority inversion. A communication’s thread priority inherits from the thread that synchronizes on the event, as was shown to be required. When there is a tie between thread priorities, the event priority is used to break it. We note that high-priority communications tend to come from higher priority computations. Thus, this approach is flexible enough to allow the expression of priority in real-world systems.

4 Semantics

We now provide a formal semantics of PrioCML. Prior semantic frameworks for CML (e.g. Reppy, Reference Reppy2007; Ziarek et al., Reference Ziarek, Sivaramakrishnan and Jagannathan2011) maintain per-channel message queues. This closely mirrors the main implementations of CML. To introduce priorities, we must assert that prioritization is correct with respect to all other potential communications. These properties can be expressed more clearly when the full set of possible communications is readily accessible. We thus model our semantics on actions, which encode the effect to be produced by an event and represent an in-flight message. These actions are kept in a single pool called the action collection. In Section 5, we show how these semantics can be realized as a modification to existing CML implementations with per-channel queues.

4.1 The PrioCML communication lifecycle

Before defining the semantics, we explore the process of PrioCML communication in the abstract, highlighting several key steps in the lifecycle. To express a communication, a programmer starts by creating an event. Themselves values, events represent a series of communication steps to be enacted and are constructed by taking a small set of base events, and applying event combinators to construct a desired communication. Base events in PrioCML come in one of four forms: send, receive, always, and never. Send and receive allow synchronous communication over channels. Always and never represent single ended communications that can either always succeed immediately or will block forever. While useful in defining generic event combinators, they will also play a crucial internal role in the given PrioCML semantics by capturing the state of inactive threads. Each base event has a matching constructor (sendEvtP, recvEvtP, alwaysEvt, and neverEvt). These can be freely combined using event combinators (e.g. choose, wrap) and passed along channels as first-class values.

Event values have the form

![]() ${\varepsilon}\left[ \, {q} \, \right]$

, where q is one of following action-generating primitive: sendAct, recvAct, alwaysAct, neverAct, chooseAct, and wrapAct. The event context

${\varepsilon}\left[ \, {q} \, \right]$

, where q is one of following action-generating primitive: sendAct, recvAct, alwaysAct, neverAct, chooseAct, and wrapAct. The event context

![]() $\varepsilon$

does not contain any event information but represents that no further reduction of the action-generating primitives is possible until synchronization. This delineation is important as it means the actions are generated at synchronization time and thus inherit the thread priority of the synchronizing thread and not the thread which created the event value.

$\varepsilon$

does not contain any event information but represents that no further reduction of the action-generating primitives is possible until synchronization. This delineation is important as it means the actions are generated at synchronization time and thus inherit the thread priority of the synchronizing thread and not the thread which created the event value.

When it is time for the enclosed communication steps (protocol) to be enacted, the sync primitive is used to perform event synchronization. The action-generating primitive inside the event context is reduced to a set of actions. For each base event type, there is a corresponding action. These actions represent the effect of a potential communication. In the case of a choice event, there may be multiple actions generated as there may be multiple potential (but mutually exclusive) communications. As each synchronization has exactly one result, we conceptualize any synchronization as being a choice between all of the generated actions. Nested choices are effectively flattened, and base events interpreted as choices with one element. Each action carries a choice id, a tag which uniquely identifies the synchronization that created it. This is used to prevent multiple actions from a single synchronization from being enacted.

Upon synchronization, the new actions are added to the action collection, a pool of all actions active in the system. From this pool, communication is chosen by an oracle which implements the prioritization. If the oracle picks a communication that is an always action, the enclosed value is given to the corresponding thread to restart execution. A communication can also be a pair of send and receive actions. In this case, any competing actions from the same choices are removed, the value is passed to form two always actions (one with the passed value for the receiving end and one with a unit value for the sending end), and the always actions thrown back into the pool. The selection process is then repeated until an always action is chosen, thereby passing a value to a thread and unblocking it.

We note that synchronization serves as the context switching point. A thread stops execution upon synchronizing, and the thread corresponding to the selected action takes its place. A fundamental property of our system (4.23) is that the thread executing always has the highest possible priority. In our system, this property can only be invalidated upon a communication, which requires synchronization and thus gives the system an opportunity to context switch.

4.2 Semantic rules

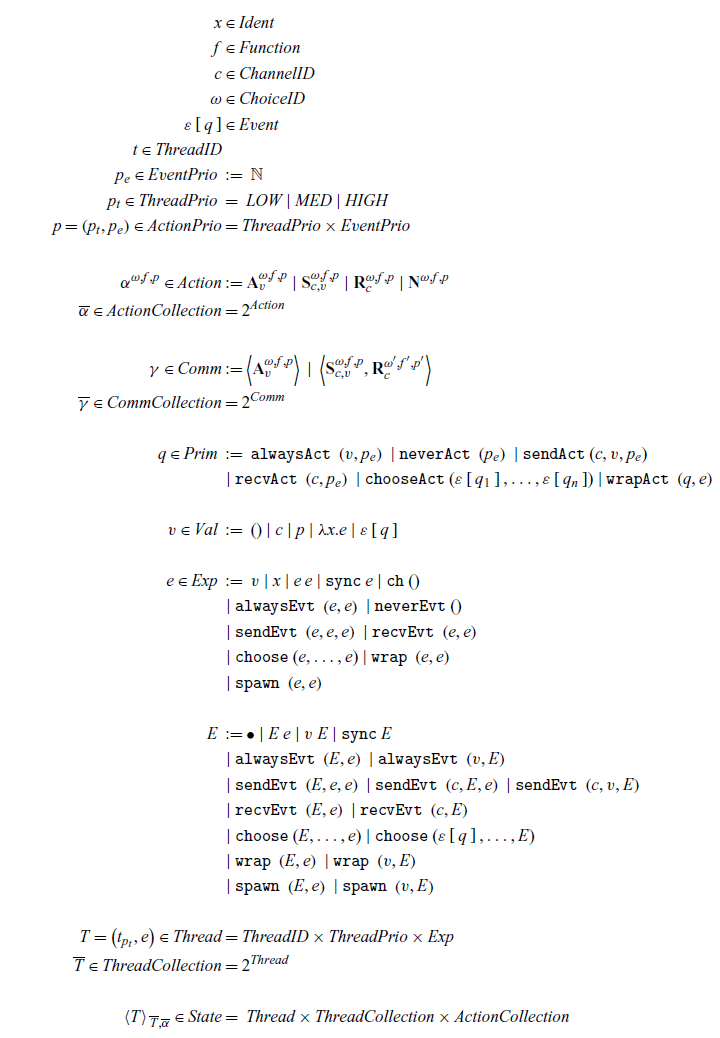

The syntax of our formalism is given in Figure 4. We define a minimal call-by-value language with communication primitives. We use q to define communication event primitives, which must appear wrapped in an event context

![]() ${\varepsilon}\left[ \, {q} \, \right]$

. Such a context prevents the reduction of the inner event until synchronization, at which point the thread information is captured. Event contexts are never encoded by the programmer directly but instead generated by the event primitive expressions. The full set of expressions is represented by e and values by v.

${\varepsilon}\left[ \, {q} \, \right]$

. Such a context prevents the reduction of the inner event until synchronization, at which point the thread information is captured. Event contexts are never encoded by the programmer directly but instead generated by the event primitive expressions. The full set of expressions is represented by e and values by v.

Fig. 4. Core syntax.

Our program state is a triple of a currently executing thread

![]() $\left(T\right)$

, a collection of suspended threads

$\left(T\right)$

, a collection of suspended threads

![]() $\left(\overline{T}\right)$

, and a collection of current actions

$\left(\overline{T}\right)$

, and a collection of current actions

![]() $\left(\overline{\alpha}\right)$

. A thread contains a thread id

$\left(\overline{\alpha}\right)$

. A thread contains a thread id

![]() $\left(t\right)$

coupled with a thread priority of High, Med, or Low, and a current expression in an evaluation context

$\left(t\right)$

coupled with a thread priority of High, Med, or Low, and a current expression in an evaluation context

![]() ${E}\left[ \, {\cdot} \, \right]$

. We assume thread ids to be opaque values supporting only equality. A program begins execution with a single thread containing the program as its expression and an empty thread collection and action collection.

${E}\left[ \, {\cdot} \, \right]$

. We assume thread ids to be opaque values supporting only equality. A program begins execution with a single thread containing the program as its expression and an empty thread collection and action collection.

Actions, representing the communication action to be effected by an event, can be one of four varieties: always

![]() $\left( \mathbf{A}^{{\omega}, {f}, {p}}_{v} \right)$

, send

$\left( \mathbf{A}^{{\omega}, {f}, {p}}_{v} \right)$

, send

![]() $\left( \mathbf{S}^{{\omega}, {f}, {p}}_{{c}, {v}} \right)$

, receive

$\left( \mathbf{S}^{{\omega}, {f}, {p}}_{{c}, {v}} \right)$

, receive

![]() $\left( \mathbf{R}^{{\omega}, {f}, {p}}_{c} \right)$

, or never

$\left( \mathbf{R}^{{\omega}, {f}, {p}}_{c} \right)$

, or never

![]() $\left( \mathbf{N}^{{\omega}, {f}, {p}} \right)$

. Actions carry a choice id

$\left( \mathbf{N}^{{\omega}, {f}, {p}} \right)$

. Actions carry a choice id

![]() $\omega$

, a wrapping function f, a priority (containing both a thread priority

$\omega$

, a wrapping function f, a priority (containing both a thread priority

![]() $p_t$

and a non-negative integer event priority

$p_t$

and a non-negative integer event priority

![]() $p_e$

) p, and if appropriate a channel c or value v. The choice id

$p_e$

) p, and if appropriate a channel c or value v. The choice id

![]() $\omega$

uniquely identifies the choice to which the action belongs and thus the corresponding thread. We note that channels in our semantics are not a structure that stores pending actions, but merely a tag used to determine if a send and receive action can be paired. Actions that are able to be enacted are represented by communications

$\omega$

uniquely identifies the choice to which the action belongs and thus the corresponding thread. We note that channels in our semantics are not a structure that stores pending actions, but merely a tag used to determine if a send and receive action can be paired. Actions that are able to be enacted are represented by communications

![]() $\left(\gamma\right)$

, which can consist of either a lone always action

$\left(\gamma\right)$

, which can consist of either a lone always action

![]() $\left\langle \mathbf{A}^{{\omega}, {f}, {p}}_{v} \right\rangle$

or a matching pair of send and receive actions

$\left\langle \mathbf{A}^{{\omega}, {f}, {p}}_{v} \right\rangle$

or a matching pair of send and receive actions

![]() $\left\langle \mathbf{S}^{{\omega}, {f}, {p}}_{{c}, {v}}, \mathbf{R}^{{\omega^\prime}, {f^\prime}, {p^\prime}}_{c} \right\rangle$

. We note that the channel must match between the send and receive actions in a communication. We refer to a set of actions (communications, threads) as an action collection

$\left\langle \mathbf{S}^{{\omega}, {f}, {p}}_{{c}, {v}}, \mathbf{R}^{{\omega^\prime}, {f^\prime}, {p^\prime}}_{c} \right\rangle$

. We note that the channel must match between the send and receive actions in a communication. We refer to a set of actions (communications, threads) as an action collection

![]() $\overline{\alpha}$

(communication collection

$\overline{\alpha}$

(communication collection

![]() $\overline{\gamma}$

, thread collection

$\overline{\gamma}$

, thread collection

![]() $\overline{T}$

), which is an element of the power set of actions (communications, threads).

$\overline{T}$

), which is an element of the power set of actions (communications, threads).

Program steps are represented by state transitions

![]() $\rightarrow$

. We define several auxiliary relations used in defining the program step. Selection

$\rightarrow$

. We define several auxiliary relations used in defining the program step. Selection

![]() $\rightsquigarrow$

maps an action collection to the chosen action and an action collection containing all action still valid after that choice. We note that section is a relation, and any one of multiple valid choices may result from a given action collection. Action generation

$\rightsquigarrow$

maps an action collection to the chosen action and an action collection containing all action still valid after that choice. We note that section is a relation, and any one of multiple valid choices may result from a given action collection. Action generation

![]() $\hookrightarrow$

creates an action from an action primitive, a thread priority, and a choice id. Communication generation

$\hookrightarrow$

creates an action from an action primitive, a thread priority, and a choice id. Communication generation

![]() $\Rightarrow_{\overline{\alpha}}$

is a relation between an action and all possible communications involving that action, parameterized by the set of available actions. We adopt the convention that when applied to a set of actions, the communication generation relation maps to the union of all sets resulting from the application of the relation to each element of the input set.

$\Rightarrow_{\overline{\alpha}}$

is a relation between an action and all possible communications involving that action, parameterized by the set of available actions. We adopt the convention that when applied to a set of actions, the communication generation relation maps to the union of all sets resulting from the application of the relation to each element of the input set.

\begin{align*} \rightarrow &\in \textit{State} \rightarrow \textit{State} \\ \rightsquigarrow &\in \textit{ActionCollection} \rightarrow \textit{Comm} \times \textit{ActionCollection} \\ \hookrightarrow &\in \textit{Prim} \times \textit{ThreadPrio} \times \textit{ChoiceID} \rightarrow \textit{ActionCollection} \\ \Rightarrow_{\overline{\alpha}} &\in \textit{ActionCollection} \rightarrow 2^{\textit{Action}\,\times\textit{Comm}} \\ \Psi &\in \textit{CommCollection} \rightarrow \textit{Comm} \\ \le_{prio} &\in Comm \times Comm\end{align*}

\begin{align*} \rightarrow &\in \textit{State} \rightarrow \textit{State} \\ \rightsquigarrow &\in \textit{ActionCollection} \rightarrow \textit{Comm} \times \textit{ActionCollection} \\ \hookrightarrow &\in \textit{Prim} \times \textit{ThreadPrio} \times \textit{ChoiceID} \rightarrow \textit{ActionCollection} \\ \Rightarrow_{\overline{\alpha}} &\in \textit{ActionCollection} \rightarrow 2^{\textit{Action}\,\times\textit{Comm}} \\ \Psi &\in \textit{CommCollection} \rightarrow \textit{Comm} \\ \le_{prio} &\in Comm \times Comm\end{align*}

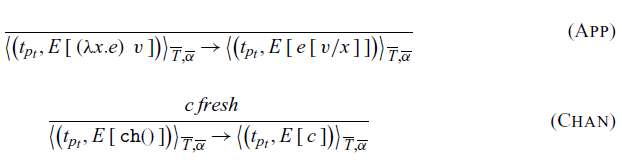

Function application is defined in the rule App. Channel creation happens when a channel expression is evaluated (rule Chan), and a new channel id c is generated.

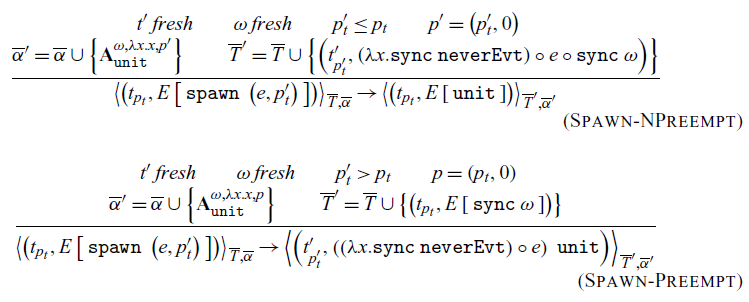

Threads are created through the spawn primitives with a user-specified priority. Spawn broken up in two cases, Spawn-NPreempt and Spawn-Preempt. These two rules cover the cases of spawning a thread with lower or higher priority, respectively. We distinguish here because we wish to maintain the invariant that the highest priority thread able to make progress is the one executing. If the thread we are spawning has lower (or equal) priority, we can continue executing the current thread. We add the newly spawned thread to the thread collection by blocking it on an always action

![]() $\left(\mathbf{A}^{{\omega}, {\lambda x.x}, {p^\prime}}_{\texttt{unit}}\right)$

and adding that action to the action collection

$\left(\mathbf{A}^{{\omega}, {\lambda x.x}, {p^\prime}}_{\texttt{unit}}\right)$

and adding that action to the action collection

![]() $\left(\overline{\alpha}^\prime\right)$

. This preserves the invariant that all threads in the thread collection are blocked synchronizing on a choice (here, a choice of one action). If it is the case that the newly created thread has a higher priority than the one executing, we must switch to it immediately. To switch threads, we block the currently executing thread by synchronizing on an always action with the event priority equal to zero. We then set the new thread as the currently executing thread. In both cases, the expression that forms the body is the composition (represented by the function composition operator,

$\left(\overline{\alpha}^\prime\right)$

. This preserves the invariant that all threads in the thread collection are blocked synchronizing on a choice (here, a choice of one action). If it is the case that the newly created thread has a higher priority than the one executing, we must switch to it immediately. To switch threads, we block the currently executing thread by synchronizing on an always action with the event priority equal to zero. We then set the new thread as the currently executing thread. In both cases, the expression that forms the body is the composition (represented by the function composition operator,

![]() $\circ$

) of the always synchronization, the function e given to spawn, and a synchronization on a never event at thread completion. This approach avoids the complication of removing the threads from the collection and ensures that the thread will cease execution. As its name implies, a never action cannot be part of communication and thus will not be selected.

$\circ$

) of the always synchronization, the function e given to spawn, and a synchronization on a never event at thread completion. This approach avoids the complication of removing the threads from the collection and ensures that the thread will cease execution. As its name implies, a never action cannot be part of communication and thus will not be selected.

Following rules form the mechanism by which an event is evaluated. For each base action, there is a corresponding event primitive. Each event is reduced to an action-generating function inside an event context

![]() $\varepsilon$

. Note that the action-generating function only carries the event priority and not the thread priority. This is because this reduction happens at event creation time and not event synchronization time. If we were to capture the thread priority at this point, it would allow for priority inversion as outlined in Section 3.

$\varepsilon$

. Note that the action-generating function only carries the event priority and not the thread priority. This is because this reduction happens at event creation time and not event synchronization time. If we were to capture the thread priority at this point, it would allow for priority inversion as outlined in Section 3.

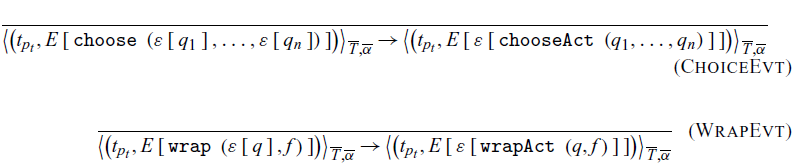

The rules ChoiceEvt and WrapEvt expand the event context

![]() $\varepsilon$

, the new context will have chooseAct and wrapAct, respectively. Both chooseAct and wrapAct enclose their inner actions and then unpack it upon the synchronization. We also note that nested choices retain their nested structure at this stage (rule ChoiceEvt); for example, a choose event is in the list of another choose event. These are later collapsed in the rule ChoiceAct.

$\varepsilon$

, the new context will have chooseAct and wrapAct, respectively. Both chooseAct and wrapAct enclose their inner actions and then unpack it upon the synchronization. We also note that nested choices retain their nested structure at this stage (rule ChoiceEvt); for example, a choose event is in the list of another choose event. These are later collapsed in the rule ChoiceAct.

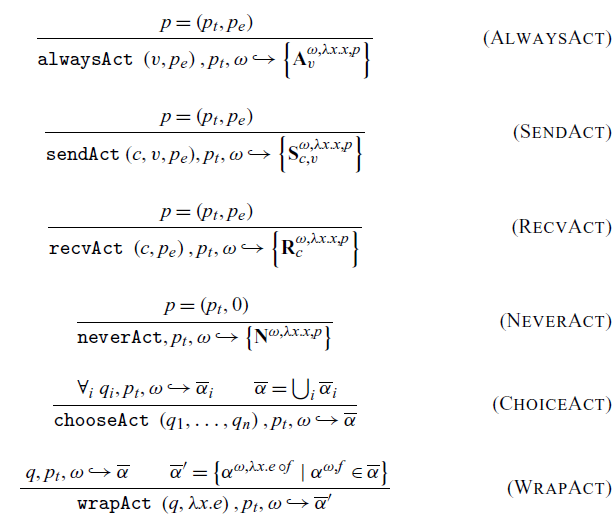

The action semantics deal with the process of synchronizing on an action. They are aided by an auxiliary relation, the action generation operator

![]() $\hookrightarrow$

. In the simplest case, shown in the rule AlwaysAct, a single always action-generating function is transformed into the corresponding always action. Note that this rule is where the thread priority is incorporated into the action because this reduction happens at synchronization. Therefore, we capture the thread priority of the thread that will be blocked by this action, as is necessary for our desired properties to hold (see Theorem 4.23 and Lemma 4.22). The rules SendAct and RecvAct work similarly. In the more complex case of choice, handled by the rule ChoiceAct, we need to combine all of the actions produced by the events being combined, as a choice can result in multiple actions being produced. In the case of a nested choice operation, the rule applies recursively, taking the union of all generated actions and effectively flattening the choice. For rule WrapAct, the wrap operation also relies on the action set and maps each action in the set to an action with the function to wrap composed with the wrapping function f of each action.

$\hookrightarrow$

. In the simplest case, shown in the rule AlwaysAct, a single always action-generating function is transformed into the corresponding always action. Note that this rule is where the thread priority is incorporated into the action because this reduction happens at synchronization. Therefore, we capture the thread priority of the thread that will be blocked by this action, as is necessary for our desired properties to hold (see Theorem 4.23 and Lemma 4.22). The rules SendAct and RecvAct work similarly. In the more complex case of choice, handled by the rule ChoiceAct, we need to combine all of the actions produced by the events being combined, as a choice can result in multiple actions being produced. In the case of a nested choice operation, the rule applies recursively, taking the union of all generated actions and effectively flattening the choice. For rule WrapAct, the wrap operation also relies on the action set and maps each action in the set to an action with the function to wrap composed with the wrapping function f of each action.

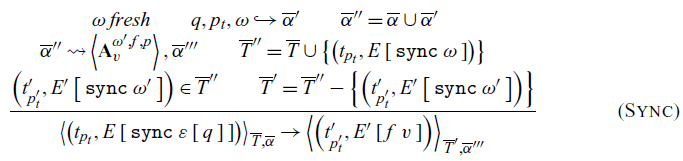

The actual synchronization is defined by the rule Sync. It generates a fresh choice id

![]() $\omega$

, as each choice id conceptually represents a single synchronization operation and connects the synchronizing thread to the actions in the action collection. The action generation operator

$\omega$

, as each choice id conceptually represents a single synchronization operation and connects the synchronizing thread to the actions in the action collection. The action generation operator

![]() $\hookrightarrow$

is then used to create the set of new actions

$\hookrightarrow$

is then used to create the set of new actions

![]() $\left(\overline{\alpha}^\prime\right)$

to be added to the action collection. This set of new actions is combined with the existing actions

$\left(\overline{\alpha}^\prime\right)$

to be added to the action collection. This set of new actions is combined with the existing actions

![]() $\left(\overline{\alpha}\right)$

to derive the intermediate action collection

$\left(\overline{\alpha}\right)$

to derive the intermediate action collection

![]() $\overline{\alpha}^{\prime\prime}$

. This collection is then fed into the selection relation

$\overline{\alpha}^{\prime\prime}$

. This collection is then fed into the selection relation

![]() $\rightsquigarrow$

to obtain the chosen communication and the new action collection

$\rightsquigarrow$

to obtain the chosen communication and the new action collection

![]() $\overline{\alpha}^{\prime\prime\prime}$

. The Sync rule requires that this communication be an always. If not, it must be a send-receive pair, and thus, the rule ReducePair can be applied repeatedly until an always communication is selected. The oracle is responsible for selecting a communication as described in Oracle. The choice id

$\overline{\alpha}^{\prime\prime\prime}$

. The Sync rule requires that this communication be an always. If not, it must be a send-receive pair, and thus, the rule ReducePair can be applied repeatedly until an always communication is selected. The oracle is responsible for selecting a communication as described in Oracle. The choice id

![]() $\omega^\prime$

from the chosen communication is used to select the correct thread out of the thread collection. Because the chosen communication may belong to the currently executing thread, which is not in the thread collection

$\omega^\prime$

from the chosen communication is used to select the correct thread out of the thread collection. Because the chosen communication may belong to the currently executing thread, which is not in the thread collection

![]() $\overline{T}$

, we must search the set of all threads

$\overline{T}$

, we must search the set of all threads

![]() $\overline{T}^{\prime\prime}$

. Upon finding the desired thread, we remove it from the set of all threads to obtain the new thread collection

$\overline{T}^{\prime\prime}$

. Upon finding the desired thread, we remove it from the set of all threads to obtain the new thread collection

![]() $\overline{T}^{\prime\prime}$

. Lastly, we must continue executing the resumed thread by applying the wrapping function f to the value stored in the action.

$\overline{T}^{\prime\prime}$

. Lastly, we must continue executing the resumed thread by applying the wrapping function f to the value stored in the action.

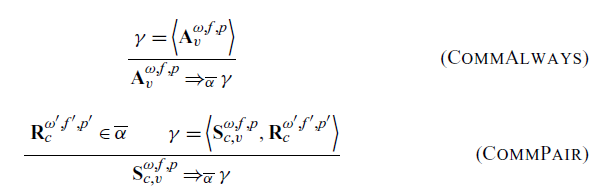

Now com the rules that govern the grouping of actions from the action collection into communications. A communication

![]() $\gamma$

is either a single always action or a send and receive pair. The communication generating operator

$\gamma$

is either a single always action or a send and receive pair. The communication generating operator

![]() $\Rightarrow_{\overline{\alpha}}$

is parameterized by

$\Rightarrow_{\overline{\alpha}}$

is parameterized by

![]() $\overline{\alpha}$

the action collection it is operating in. We define the operator over a single action in the rules CommAlways and CommPair, then adopt the convention that application of the operator to a set produces the image of that set, which is a set of all possible outputs of the operator from the elements of that input set. We note that in the rule CommPair we only operate on send actions and ignore receive actions. We do assert, however, that a compatible receive action is present in the set and generates one possible output (and thus communication) for each receive action. This choice is arbitrary, but either the sends or the receives must be ignored as inputs to prevent duplicate entries in the communication set. Further, the channel of the send and receive actions must match. Here the channel ids are treated as a tag which indicates which send and receive actions are allowed to be paired.

$\overline{\alpha}$

the action collection it is operating in. We define the operator over a single action in the rules CommAlways and CommPair, then adopt the convention that application of the operator to a set produces the image of that set, which is a set of all possible outputs of the operator from the elements of that input set. We note that in the rule CommPair we only operate on send actions and ignore receive actions. We do assert, however, that a compatible receive action is present in the set and generates one possible output (and thus communication) for each receive action. This choice is arbitrary, but either the sends or the receives must be ignored as inputs to prevent duplicate entries in the communication set. Further, the channel of the send and receive actions must match. Here the channel ids are treated as a tag which indicates which send and receive actions are allowed to be paired.

The remaining next rules define the selection relation

![]() $\rightsquigarrow$

. This relation maps an action collection to a chosen communication and an action collection. This new action collection contains all of the actions that do not conflict with the chosen communication. This is critical in the case of choice, as it removes all unused actions generated during the choice.

$\rightsquigarrow$

. This relation maps an action collection to a chosen communication and an action collection. This new action collection contains all of the actions that do not conflict with the chosen communication. This is critical in the case of choice, as it removes all unused actions generated during the choice.

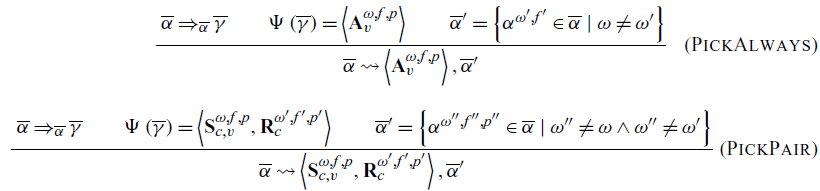

In the simplest case, there is an always communication that is chosen by the oracle. This behavior is defined in the rule PickAlways. Here the communication collection

![]() $\overline{\gamma}$

is generated from the action collection

$\overline{\gamma}$

is generated from the action collection

![]() $\overline{\alpha}$

, and the oracle

$\overline{\alpha}$

, and the oracle

![]() $\Psi$

is invoked. The action collection is then filtered to remove any actions with a choice id

$\Psi$

is invoked. The action collection is then filtered to remove any actions with a choice id

![]() $\omega^\prime$

matching the chosen action’s

$\omega^\prime$

matching the chosen action’s

![]() $\omega$

. The always communication chosen by the oracle is returned, along with the filtered action collection

$\omega$

. The always communication chosen by the oracle is returned, along with the filtered action collection

![]() $\overline{\alpha}^\prime$

.

$\overline{\alpha}^\prime$

.

In the more complicated case, the oracle picks a send-receive communication. Then the rule PickPair applies. Similar to PickAlways, we generate the communications, pick one, and filter out all actions that conflict with either action in the communication. Note that PickPair results in a send-receive communication as the output of the selection relation

![]() $\rightsquigarrow$

. However, in order to continue the execution of a thread, the Sync rule requires that right side of the relation be an always communication.

$\rightsquigarrow$

. However, in order to continue the execution of a thread, the Sync rule requires that right side of the relation be an always communication.

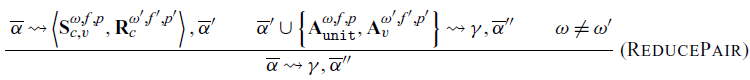

In order to output an always communication from the send-receive communication in rule PickPair, we apply the recursive rule ReducePair (rule at the bottom). If the selection over an action collection results in a send-receive pair as communication, we can reduce the send and receive to a pair of always actions and retry the selection. Conceptually, the send and receive actions are paired, and the values are passed through the channel. Each resultant always action carries the value to be returned: unit for the send and v for the receive. Those are added to the action collection, which is then used in the recursive usage of the selection relation

![]() $\rightsquigarrow$

$\rightsquigarrow$

Rule ReducePair is what makes selection a relation and not a true functional map. This rule is necessary to create an invariant fundamental to the operation of these semantics: if there exists a member of the relation

![]() $\overline{\alpha} \rightsquigarrow \gamma, \overline{\alpha}^{\prime}$

, then there exists a member

$\overline{\alpha} \rightsquigarrow \gamma, \overline{\alpha}^{\prime}$

, then there exists a member

![]() $\overline{\alpha} \rightsquigarrow \gamma^\prime, \overline{\alpha}^{\prime}$

, where

$\overline{\alpha} \rightsquigarrow \gamma^\prime, \overline{\alpha}^{\prime}$

, where

![]() $\gamma^\prime$

is an always communication. This stems from the ability to apply ReducePair if the relation can produce a send-receive pair. Note that

$\gamma^\prime$

is an always communication. This stems from the ability to apply ReducePair if the relation can produce a send-receive pair. Note that

![]() $\omega \neq \omega^\prime$

assert the two actions of the pair

$\omega \neq \omega^\prime$

assert the two actions of the pair

![]() $\gamma$

are from different threads since each choice id

$\gamma$

are from different threads since each choice id

![]() $\omega$

and

$\omega$

and

![]() $\omega^\prime$

is generated upon synchronization. Once this rule is applied, there is an always action in the collection that has the priority inherited from the original send-receive communication. We know this priority to be (at least tied as) the highest. As a consequence, any action collection that can produce a communication can produce an always communication, as required by Sync.

$\omega^\prime$

is generated upon synchronization. Once this rule is applied, there is an always action in the collection that has the priority inherited from the original send-receive communication. We know this priority to be (at least tied as) the highest. As a consequence, any action collection that can produce a communication can produce an always communication, as required by Sync.

Conceptually, ReducePair encodes the act of communication in our system. The pairing of the send and receive and subsequent replacement by always actions is where values are passed from the sender to the receiver. We believe this view of communication as a reduction from a linked send and receive pair to two independent always actions provides a novel and useful way to conceptualize communication in message-passing systems. It provides a way to encode a number of properties, proofs of which can be found in Section 4.3.

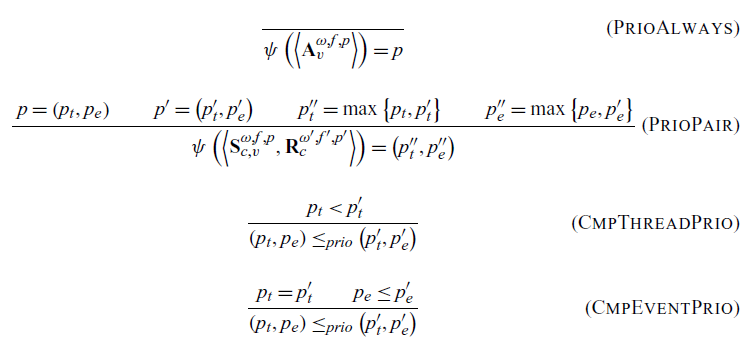

The priority of communication is derived from the priority of its constituent actions. For an always communication, handled by the rule PrioAlways, this is simply the priority of the action. In the case of a send-receive pair, we need the max to be taken. We must take the max because this ensures that the priority of communication is always at least that of any of its actions. This invariant is crucial in our proofs of correctness. The rule PrioPair implements this behavior.

Priority is given a lexographic ordering. It is compared first by the thread component, as shown in the rule CmpThreadPrio. This ensures that a lower priority thread’s communication will never be chosen over a higher priority thread’s communication. If the thread priorities are the same, the rule CmpEventPrio says we then look at the event priorities.

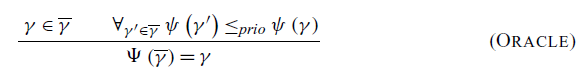

The selection of a communication

![]() $\gamma$

from the set of all possible communications

$\gamma$

from the set of all possible communications

![]() $\overline{\gamma}$

is done by an oracle

$\overline{\gamma}$

is done by an oracle

![]() $\Psi$

. The oracle looks at all possible communications and (under these semantics) picks the one with the highest priority. The rule Oracle defines the oracle’s selection to have the highest priority of all possible communications. If there is a tie, we allow the oracle to choose nondeterministically.

$\Psi$

. The oracle looks at all possible communications and (under these semantics) picks the one with the highest priority. The rule Oracle defines the oracle’s selection to have the highest priority of all possible communications. If there is a tie, we allow the oracle to choose nondeterministically.

4.3 Proof of important properties

To provide some intuition about the operation of the above semantics, we now present proofs of several important properties of our semantic model.

4.3.1 Communication priority inversion

We start by showing that the selection operation fulfills the necessary properties used later to prove the lack of communication priority inversion and correctness of thread scheduling (Theorem 4.4). We say a selection causes a communication priority inversion (Definition 4.2) if the selection makes it impossible for an existing higher priority communication to be selected in the future. This happens when a selection eliminates (Definition 4.1) a communication, meaning it is no longer present in the communication collection derived from the resulting action collection. We note this is a relaxed definition of communication priority inversion that opens up opportunities for an oracle to make locally suboptimal decisions as long as they do not preclude making the optimal decision later. The oracle given in the semantics does not use this flexibility and will always choose the immediately highest priority communication.

Definition 4.1 (Elimination). A communication

![]() $\gamma$

is eliminated by the selection

$\gamma$

is eliminated by the selection

![]() $\overline{\alpha} \rightsquigarrow \gamma^{\prime}, \overline{\alpha}^{\prime}$

, where

$\overline{\alpha} \rightsquigarrow \gamma^{\prime}, \overline{\alpha}^{\prime}$

, where

![]() $\overline{\alpha} \Rightarrow_{\overline{\alpha}} \overline{\gamma}$

and

$\overline{\alpha} \Rightarrow_{\overline{\alpha}} \overline{\gamma}$

and

![]() $\overline{\alpha}^{\prime} \Rightarrow_{\overline{\alpha}} \overline{\gamma}^\prime$

, if

$\overline{\alpha}^{\prime} \Rightarrow_{\overline{\alpha}} \overline{\gamma}^\prime$

, if

![]() $\gamma \in \overline{\gamma}$

but

$\gamma \in \overline{\gamma}$

but

![]() $\gamma \notin \overline{\gamma}^\prime$

.

$\gamma \notin \overline{\gamma}^\prime$

.

Definition 4.2 (Selection Communication Priority Inversion). A selection

![]() $\overline{\alpha} \rightsquigarrow \gamma, \overline{\alpha}^{\prime}$

exhibits Communication Priority Inversion if it eliminates a communication

$\overline{\alpha} \rightsquigarrow \gamma, \overline{\alpha}^{\prime}$

exhibits Communication Priority Inversion if it eliminates a communication

![]() $\gamma^\prime$

, (

$\gamma^\prime$

, (

![]() $\gamma^\prime \not= \gamma$

). where

$\gamma^\prime \not= \gamma$

). where

![]() $\psi \left( \gamma^\prime \right) \not \le_{prio} \psi\left( \gamma \right)$

$\psi \left( \gamma^\prime \right) \not \le_{prio} \psi\left( \gamma \right)$

Lemma 4.3 (Selection Priority). For a selection

![]() $\overline{\alpha} \rightsquigarrow \gamma, \overline{\alpha}^{\prime}$

, we have that for all

$\overline{\alpha} \rightsquigarrow \gamma, \overline{\alpha}^{\prime}$

, we have that for all

![]() $\gamma^{\prime\prime} \in \overline{\gamma}$

,

$\gamma^{\prime\prime} \in \overline{\gamma}$

,

![]() $\psi\left(\gamma^{\prime\prime}\right) \le_{prio} \psi\left(\gamma\right)$

where

$\psi\left(\gamma^{\prime\prime}\right) \le_{prio} \psi\left(\gamma\right)$

where

![]() $\overline{\alpha} \Rightarrow_{\overline{\alpha}} \overline{\gamma}$

.

$\overline{\alpha} \Rightarrow_{\overline{\alpha}} \overline{\gamma}$

.

Proof. By induction over the depth of the recursion. There are three rules by which a selection can be made: PickAlways, PickPair, and ReducePair.

In the case that the selection was by rules PickAlways or PickPair, we have that

![]() $\Psi \left(\overline{\gamma}\right) = \gamma$

. By the definition of

$\Psi \left(\overline{\gamma}\right) = \gamma$

. By the definition of

![]() $\Psi$

in the rule Oracle, we have that for all

$\Psi$

in the rule Oracle, we have that for all

![]() $\gamma^{\prime\prime} \in \overline{\gamma}$

,

$\gamma^{\prime\prime} \in \overline{\gamma}$

,

![]() $\psi\left(\gamma^{\prime\prime}\right) \le_{prio} \psi\left(\gamma\right)$

.

$\psi\left(\gamma^{\prime\prime}\right) \le_{prio} \psi\left(\gamma\right)$

.

If the selection was made by the recursive rule ReducePair, we have by the inductive hypothesis that our property holds for the antecedents

![]() $\overline{\alpha} \rightsquigarrow \left< \mathbf{S}^{{\omega}, {f}, {p}}_{{c}, {v}}, \mathbf{R}^{{\omega^\prime}, {f^\prime}, {p^\prime}}_{c} \right>, \overline{\alpha}^{\prime\prime}$

and

$\overline{\alpha} \rightsquigarrow \left< \mathbf{S}^{{\omega}, {f}, {p}}_{{c}, {v}}, \mathbf{R}^{{\omega^\prime}, {f^\prime}, {p^\prime}}_{c} \right>, \overline{\alpha}^{\prime\prime}$

and

![]() $\overline{\alpha}^{\prime\prime} \cup \left\{ \mathbf{A}^{{\omega}, {f}, {p}}_{\texttt{unit}}, \mathbf{A}^{{\omega^\prime}, {f^\prime}, {p^\prime}}_{v} \right\} \rightsquigarrow \gamma, \overline{\alpha}^{\prime}$

. Thus, we know that for all

$\overline{\alpha}^{\prime\prime} \cup \left\{ \mathbf{A}^{{\omega}, {f}, {p}}_{\texttt{unit}}, \mathbf{A}^{{\omega^\prime}, {f^\prime}, {p^\prime}}_{v} \right\} \rightsquigarrow \gamma, \overline{\alpha}^{\prime}$

. Thus, we know that for all

![]() $\gamma^{\prime\prime} \in \overline{\gamma}$

,

$\gamma^{\prime\prime} \in \overline{\gamma}$

,

![]() $\psi\left(\gamma^{\prime\prime}\right) \le_{prio} \psi\left( \left< \mathbf{S}^{{\omega}, {f}, {p}}_{{c}, {v}}, \mathbf{R}^{{\omega^\prime}, {f^\prime}, {p^\prime}}_{c} \right> \right)$

. By the rule PrioPair, we have that the priority of the selection

$\psi\left(\gamma^{\prime\prime}\right) \le_{prio} \psi\left( \left< \mathbf{S}^{{\omega}, {f}, {p}}_{{c}, {v}}, \mathbf{R}^{{\omega^\prime}, {f^\prime}, {p^\prime}}_{c} \right> \right)$

. By the rule PrioPair, we have that the priority of the selection

![]() $\left< \mathbf{S}^{{\omega}, {f}, {p}}_{{c}, {v}}, \mathbf{R}^{{\omega^\prime}, {f^\prime}, {p^\prime}}_{c} \right>$

was both

$\left< \mathbf{S}^{{\omega}, {f}, {p}}_{{c}, {v}}, \mathbf{R}^{{\omega^\prime}, {f^\prime}, {p^\prime}}_{c} \right>$

was both

![]() $p \le_{prio} \psi\left( \left< \mathbf{S}^{{\omega}, {f}, {p}}_{{c}, {v}}, \mathbf{R}^{{\omega^\prime}, {f^\prime}, {p^\prime}}_{c} \right> \right)$

and

$p \le_{prio} \psi\left( \left< \mathbf{S}^{{\omega}, {f}, {p}}_{{c}, {v}}, \mathbf{R}^{{\omega^\prime}, {f^\prime}, {p^\prime}}_{c} \right> \right)$

and

![]() $p^\prime \le_{prio} \psi\left( \left< \mathbf{S}^{{\omega}, {f}, {p}}_{{c}, {v}}, \mathbf{R}^{{\omega^\prime}, {f^\prime}, {p^\prime}}_{c} \right> \right)$

. This is because the communication priority takes the max of each priority component and thus can be no less than either action priority. We note that the priorities of the generated always events are p and

$p^\prime \le_{prio} \psi\left( \left< \mathbf{S}^{{\omega}, {f}, {p}}_{{c}, {v}}, \mathbf{R}^{{\omega^\prime}, {f^\prime}, {p^\prime}}_{c} \right> \right)$

. This is because the communication priority takes the max of each priority component and thus can be no less than either action priority. We note that the priorities of the generated always events are p and

![]() $p^\prime$

, and that

$p^\prime$

, and that

![]() $\left< \mathbf{A}^{{\omega}, {f}, {p}}_{\texttt{unit}} \right>$

and

$\left< \mathbf{A}^{{\omega}, {f}, {p}}_{\texttt{unit}} \right>$

and

![]() $\left<\mathbf{A}^{{\omega^\prime}, {f^\prime}, {p^\prime}}_{v} \right>$

are members of

$\left<\mathbf{A}^{{\omega^\prime}, {f^\prime}, {p^\prime}}_{v} \right>$

are members of

![]() $\overline{\gamma}^{\prime\prime}$

, where

$\overline{\gamma}^{\prime\prime}$

, where

![]() $\overline{\alpha}^{\prime\prime} \Rightarrow_{\overline{\alpha}^{\prime\prime}} \overline{\gamma}^ {\prime\prime}$

. Assume WLOG, the higher priority, and thus priority of the selection, to be p. Then again by our inductive hypothesis we obtain for all

$\overline{\alpha}^{\prime\prime} \Rightarrow_{\overline{\alpha}^{\prime\prime}} \overline{\gamma}^ {\prime\prime}$

. Assume WLOG, the higher priority, and thus priority of the selection, to be p. Then again by our inductive hypothesis we obtain for all

![]() $\gamma^{\prime\prime} \in \overline{\gamma}$

,

$\gamma^{\prime\prime} \in \overline{\gamma}$

,

![]() $\psi\left(\gamma^{\prime\prime}\right) \le_{prio} p \le_{prio} \psi\left(\gamma\right)$

. By transitivity, our property thus holds.

$\psi\left(\gamma^{\prime\prime}\right) \le_{prio} p \le_{prio} \psi\left(\gamma\right)$

. By transitivity, our property thus holds.

Theorem 4.4 (Selection Priority Inversion Freedom). No selection

![]() $\overline{\alpha} \rightsquigarrow \gamma, \overline{\alpha}^{\prime}$

exhibits Communication Priority Inversion under the given oracle

$\overline{\alpha} \rightsquigarrow \gamma, \overline{\alpha}^{\prime}$

exhibits Communication Priority Inversion under the given oracle

![]() $\Psi$

.

$\Psi$

.

Proof. Assume for sake of contradiction a selection

![]() $\overline{\alpha} \rightsquigarrow \gamma, \overline{\alpha}^{\prime}$

exhibits Communication Priority Inversion by eliminating a communication

$\overline{\alpha} \rightsquigarrow \gamma, \overline{\alpha}^{\prime}$

exhibits Communication Priority Inversion by eliminating a communication

![]() $\gamma^\prime$

. Then by the rules PickAlways and PickPair, we have that

$\gamma^\prime$

. Then by the rules PickAlways and PickPair, we have that

![]() $\Psi \left(\overline{\gamma}\right) = \gamma$

, where

$\Psi \left(\overline{\gamma}\right) = \gamma$

, where

![]() $\overline{\alpha} \Rightarrow_{\overline{\alpha}} \overline{\gamma}$

. By the definition of

$\overline{\alpha} \Rightarrow_{\overline{\alpha}} \overline{\gamma}$

. By the definition of

![]() $\Psi$

in the rule Oracle, we have that for all

$\Psi$

in the rule Oracle, we have that for all

![]() $\gamma^{\prime\prime} \in \overline{\gamma}$

,

$\gamma^{\prime\prime} \in \overline{\gamma}$

,

![]() $\psi\left(\gamma^{\prime\prime}\right) \le_{prio} \psi\left(\gamma\right)$

. Because

$\psi\left(\gamma^{\prime\prime}\right) \le_{prio} \psi\left(\gamma\right)$

. Because

![]() $\gamma^\prime$

was eliminated, we know

$\gamma^\prime$

was eliminated, we know

![]() $\gamma^{\prime} \in \overline{\gamma}$

. Thus

$\gamma^{\prime} \in \overline{\gamma}$

. Thus

![]() $\psi\left(\gamma^{\prime} \right) \le_{prio} \psi\left(\gamma\right)$

. This contradicts our assumption, as by our definition of Communication Priority Inversion,

$\psi\left(\gamma^{\prime} \right) \le_{prio} \psi\left(\gamma\right)$

. This contradicts our assumption, as by our definition of Communication Priority Inversion,

![]() $\psi \left( \gamma^\prime \right) \not \le_{prio} \psi\left( \gamma \right)$

.

$\psi \left( \gamma^\prime \right) \not \le_{prio} \psi\left( \gamma \right)$

.

4.3.2 Reduction relation

We can now show that any possible program transition does not produce a communication priority inversion (Theorem 4.8). Here we define our communication priority inversion over reductions and programs (Definition 4.7) analogously to our previous definition over selections (Definition 4.2). For the proof, we define an annotation to the reduction relation that encapsulates the communication used by that reduction (or

![]() $\emptyset$

in the case that no communication occurs during the reduction). Leveraging this annotation, we can examine if the selection in the reduction eliminates a higher priority communication. Under the given oracle (and for any correct oracle), such elimination can never happen. Thus, a reduction, and by extension, a program trace, cannot exhibit communication priority inversion.

$\emptyset$

in the case that no communication occurs during the reduction). Leveraging this annotation, we can examine if the selection in the reduction eliminates a higher priority communication. Under the given oracle (and for any correct oracle), such elimination can never happen. Thus, a reduction, and by extension, a program trace, cannot exhibit communication priority inversion.

Definition 4.5 (Annotation). If

![]() $S \rightarrow S^\prime$

by application of rule Sync where

$S \rightarrow S^\prime$

by application of rule Sync where

![]() $\overline{\alpha} \rightsquigarrow \gamma^{\prime}, \overline{\alpha}^{\prime}$

, then

$\overline{\alpha} \rightsquigarrow \gamma^{\prime}, \overline{\alpha}^{\prime}$

, then

![]() $S \rightarrow_{\gamma^\prime} S^\prime$

. If

$S \rightarrow_{\gamma^\prime} S^\prime$

. If

![]() $S \rightarrow S^\prime$

by any other rule,

$S \rightarrow S^\prime$

by any other rule,

![]() $S \rightarrow_\emptyset S^\prime$

.

$S \rightarrow_\emptyset S^\prime$

.

Definition 4.6 (Reduction Elimination). A communication

![]() $\gamma$

is eliminated by the reduction

$\gamma$

is eliminated by the reduction

![]() $\left\langle {T} \right\rangle_{\,{\overline{T}}, {\overline{\alpha}}} \rightarrow \left\langle {T} \right\rangle_{\,{\overline{T}^\prime}, {\overline{\alpha}^\prime}}^\prime$

, where

$\left\langle {T} \right\rangle_{\,{\overline{T}}, {\overline{\alpha}}} \rightarrow \left\langle {T} \right\rangle_{\,{\overline{T}^\prime}, {\overline{\alpha}^\prime}}^\prime$

, where

![]() $\overline{\alpha} \Rightarrow_{\overline{\alpha}} \overline{\gamma}$

and

$\overline{\alpha} \Rightarrow_{\overline{\alpha}} \overline{\gamma}$

and

![]() $\overline{\alpha}^{\prime} \Rightarrow_{\overline{\alpha}} \overline{\gamma}^\prime$

, if

$\overline{\alpha}^{\prime} \Rightarrow_{\overline{\alpha}} \overline{\gamma}^\prime$

, if

![]() $\gamma \in \overline{\gamma}$

but

$\gamma \in \overline{\gamma}$

but

![]() $\gamma \notin \overline{\gamma}^\prime$

.

$\gamma \notin \overline{\gamma}^\prime$

.

Definition 4.7 (Reduction Communication Priority Inversion). A reduction

![]() $S \rightarrow_\gamma S^\prime$

exhibits Communication Priority Inversion if it eliminates a communication

$S \rightarrow_\gamma S^\prime$

exhibits Communication Priority Inversion if it eliminates a communication

![]() $\gamma^\prime$

, (

$\gamma^\prime$

, (

![]() $\gamma^\prime \not= \gamma$

) where

$\gamma^\prime \not= \gamma$

) where

![]() $\psi \left( \gamma^\prime \right) \not \le_{prio} \psi\left( \gamma \right)$

$\psi \left( \gamma^\prime \right) \not \le_{prio} \psi\left( \gamma \right)$

Theorem 4.8 (Priority Inversion Freedom). No reduction

![]() $S \rightarrow S^\prime$

exhibits Communication Priority Inversion under the given oracle

$S \rightarrow S^\prime$

exhibits Communication Priority Inversion under the given oracle

![]() $\Psi$

.

$\Psi$

.

Proof. Assume for sake of contradiction a reduction

![]() $S \rightarrow S^\prime$

exhibits Communication Priority Inversion by eliminating a communication

$S \rightarrow S^\prime$

exhibits Communication Priority Inversion by eliminating a communication

![]() $\gamma^\prime$

. Note that by Definition 4.7, the reduction must be of the annotated form

$\gamma^\prime$

. Note that by Definition 4.7, the reduction must be of the annotated form

![]() $S \rightarrow_\gamma S^\prime$

and therefore been an application of the rule Sync. Let

$S \rightarrow_\gamma S^\prime$

and therefore been an application of the rule Sync. Let

![]() $S = \left\langle {T} \right\rangle_{\,{\overline{T}}, {\overline{\alpha}}}$

and

$S = \left\langle {T} \right\rangle_{\,{\overline{T}}, {\overline{\alpha}}}$

and

![]() $S^\prime = \left\langle {T} \right\rangle_{\,{\overline{T}^\prime}, {\overline{\alpha}^\prime}} ^\prime$

, and

$S^\prime = \left\langle {T} \right\rangle_{\,{\overline{T}^\prime}, {\overline{\alpha}^\prime}} ^\prime$

, and

![]() $\overline{\alpha} \Rightarrow_{\overline{\alpha}} \overline{\gamma}$

and

$\overline{\alpha} \Rightarrow_{\overline{\alpha}} \overline{\gamma}$

and

![]() $\overline{\alpha}^{\prime} \Rightarrow_ {\overline{\alpha}} \overline{\gamma}^\prime$

. By Definition 4.6, we have that

$\overline{\alpha}^{\prime} \Rightarrow_ {\overline{\alpha}} \overline{\gamma}^\prime$

. By Definition 4.6, we have that

![]() $\gamma^\prime \in \overline{\gamma}$

but

$\gamma^\prime \in \overline{\gamma}$

but

![]() $\gamma^\prime \notin \overline{\gamma}^\prime$

. Thus, the selection performed,

$\gamma^\prime \notin \overline{\gamma}^\prime$

. Thus, the selection performed,

![]() $\overline{\alpha} \rightsquigarrow \gamma, \overline{\alpha}^ {\prime}$

, eliminates

$\overline{\alpha} \rightsquigarrow \gamma, \overline{\alpha}^ {\prime}$

, eliminates

![]() $\gamma^\prime$

. by applying Definition 4.2, we have that the selection exhibits Communication Priority Inversion. This contradicts the prior result of Theorem 4.4. Hence, no such

$\gamma^\prime$

. by applying Definition 4.2, we have that the selection exhibits Communication Priority Inversion. This contradicts the prior result of Theorem 4.4. Hence, no such

![]() $\gamma^\prime$

can exist, and therefore, no reduction exhibits Communication Priority Inversion.

$\gamma^\prime$

can exist, and therefore, no reduction exhibits Communication Priority Inversion.

Corollary 4.9 (Program Priority Inversion Freedom). Any program trace

![]() $S \rightarrow^\ast S^\prime$

does not contain a Communication Priority Inversion.

$S \rightarrow^\ast S^\prime$

does not contain a Communication Priority Inversion.

4.3.3 Thread scheduling

Building on top of our prior results around communication priority inversion, we can now make the even stronger statement that under the given oracle, the highest priority thread that can make progress is the one executing. To do so, we must first define what it means for a thread to make progress. We say a thread is capable of making progress if it is ready (Definition 4.17). This happens when the thread is able to participate in a communication (see Definitions 4.13, 4.14, 4.15, and 4.16). We also observe that the semantics preserve an important invariant about the form of the threads waiting in the thread collection. They all must be synchronizing on a set of actions represented by a choice id (Lemma 4.19). This is integral to the cooperative threading model specified by the semantics because it implies that all threads are blocked from communicating. Even in cases where the communication is not meaningful, blocking can be encoded as synchronizing on an always action and termination as synchronizing on never.

These definitions provide the groundwork for inductively asserting that in any valid program state, the thread executing has higher priority than any ready thread in the collection (Theorem 4.23). The proof of this breaks down into three cases representing the three rules that modify the thread and action collections. The spawn cases are the simpler ones. We need two variants of the spawn rules to capture which thread should be blocked based on priority: the newly spawned thread or the spawning thread. Correctness stems from the fact that spawn always injects a synchronization to block the lower priority thread. The sync case is more complicated. At its core, the proof asserts that the priority of an action is linked to the thread that created it, and that thread is the one waiting on it. Note again that an action is generated from an event by the synchronizing thread, as was shown to be necessary in Section 3. Correctness of communication selection implies the correctness of action selection because thread and action priorities are linked, and this linkage is preserved through selection as well as the replacement of a communication pair with always actions. This in turn implies the correctness of thread selection. As a result, the thread currently executing is always the highest priority, showing our cooperative semantics are equivalent to traditional preemptive semantics that chooses only to preempt a thread for a higher priority one.

Definition 4.10 (Initial State). The initial state of a program is the state

![]() $S_0 = \left\langle {\left( {0_{LOW}}, {e_0} \right)} \right\rangle_{\,{\emptyset}, {\emptyset}}$

.

$S_0 = \left\langle {\left( {0_{LOW}}, {e_0} \right)} \right\rangle_{\,{\emptyset}, {\emptyset}}$

.

Definition 4.11 (Reachable State). A reachable state S is a state such that

![]() $S_0 \rightarrow^{\ast} S$

.

$S_0 \rightarrow^{\ast} S$

.

Definition 4.12 (Final State). A final state S is a state such that

![]() $S = \left\langle {\left( {t_{p_{t}}}, {{E}\left[ \, {\texttt{sync}\ \omega}\, \right]} \right)} \right\rangle_{\,{\overline{T}}, {\overline{\alpha}}}$

where