Crossref Citations

This article has been cited by the following publications. This list is generated based on data provided by Crossref.

Blaiszik, Ben

Ward, Logan

Schwarting, Marcus

Gaff, Jonathon

Chard, Ryan

Pike, Daniel

Chard, Kyle

and

Foster, Ian

2019.

A data ecosystem to support machine learning in materials science.

MRS Communications,

Vol. 9,

Issue. 4,

p.

1125.

2020.

Chemical Physics and Quantum Chemistry.

Vol. 81,

p.

291.

Collins, Eric M.

and

Raghavachari, Krishnan

2020.

Effective Molecular Descriptors for Chemical Accuracy at DFT Cost: Fragmentation, Error-Cancellation, and Machine Learning.

Journal of Chemical Theory and Computation,

Vol. 16,

Issue. 8,

p.

4938.

Westermayr, Julia

and

Marquetand, Philipp

2020.

Machine learning and excited-state molecular dynamics.

Machine Learning: Science and Technology,

Vol. 1,

Issue. 4,

p.

043001.

Huerta, E. A.

Khan, Asad

Davis, Edward

Bushell, Colleen

Gropp, William D.

Katz, Daniel S.

Kindratenko, Volodymyr

Koric, Seid

Kramer, William T. C.

McGinty, Brendan

McHenry, Kenton

and

Saxton, Aaron

2020.

Convergence of artificial intelligence and high performance computing on NSF-supported cyberinfrastructure.

Journal of Big Data,

Vol. 7,

Issue. 1,

Dral, Pavlo O.

2020.

Quantum Chemistry in the Age of Machine Learning.

The Journal of Physical Chemistry Letters,

Vol. 11,

Issue. 6,

p.

2336.

Dral, Pavlo O.

Owens, Alec

Dral, Alexey

and

Csányi, Gábor

2020.

Hierarchical machine learning of potential energy surfaces.

The Journal of Chemical Physics,

Vol. 152,

Issue. 20,

Dandu, Naveen

Ward, Logan

Assary, Rajeev S.

Redfern, Paul C.

Narayanan, Badri

Foster, Ian T.

and

Curtiss, Larry A.

2020.

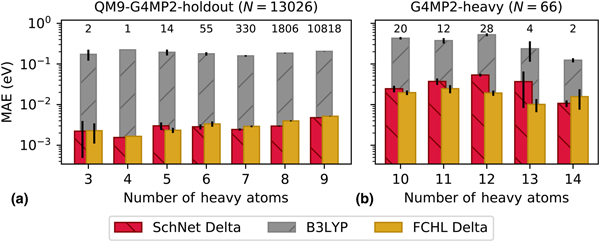

Quantum-Chemically Informed Machine Learning: Prediction of Energies of Organic Molecules with 10 to 14 Non-hydrogen Atoms.

The Journal of Physical Chemistry A,

Vol. 124,

Issue. 28,

p.

5804.

Kaundinya, Prathik R.

Choudhary, Kamal

and

Kalidindi, Surya R.

2021.

Machine learning approaches for feature engineering of the crystal structure: Application to the prediction of the formation energy of cubic compounds.

Physical Review Materials,

Vol. 5,

Issue. 6,

Alexander, Francis J

Ang, James

Bilbrey, Jenna A

Balewski, Jan

Casey, Tiernan

Chard, Ryan

Choi, Jong

Choudhury, Sutanay

Debusschere, Bert

DeGennaro, Anthony M

Dryden, Nikoli

Ellis, J Austin

Foster, Ian

Cardona, Cristina Garcia

Ghosh, Sayan

Harrington, Peter

Huang, Yunzhi

Jha, Shantenu

Johnston, Travis

Kagawa, Ai

Kannan, Ramakrishnan

Kumar, Neeraj

Liu, Zhengchun

Maruyama, Naoya

Matsuoka, Satoshi

McCarthy, Erin

Mohd-Yusof, Jamaludin

Nugent, Peter

Oyama, Yosuke

Proffen, Thomas

Pugmire, David

Rajamanickam, Sivasankaran

Ramakrishniah, Vinay

Schram, Malachi

Seal, Sudip K

Sivaraman, Ganesh

Sweeney, Christine

Tan, Li

Thakur, Rajeev

Van Essen, Brian

Ward, Logan

Welch, Paul

Wolf, Michael

Xantheas, Sotiris S

Yager, Kevin G

Yoo, Shinjae

and

Yoon, Byung-Jun

2021.

Co-design Center for Exascale Machine Learning Technologies (ExaLearn).

The International Journal of High Performance Computing Applications,

Vol. 35,

Issue. 6,

p.

598.

Huerta, E. A.

and

Zhao, Zhizhen

2021.

Handbook of Gravitational Wave Astronomy.

p.

1.

Omar, Ömer H.

del Cueto, Marcos

Nematiaram, Tahereh

and

Troisi, Alessandro

2021.

High-throughput virtual screening for organic electronics: a comparative study of alternative strategies.

Journal of Materials Chemistry C,

Vol. 9,

Issue. 39,

p.

13557.

Ward, Logan

Dandu, Naveen

Blaiszik, Ben

Narayanan, Badri

Assary, Rajeev S.

Redfern, Paul C.

Foster, Ian

and

Curtiss, Larry A.

2021.

Graph-Based Approaches for Predicting Solvation Energy in Multiple Solvents: Open Datasets and Machine Learning Models.

The Journal of Physical Chemistry A,

Vol. 125,

Issue. 27,

p.

5990.

Collins, Eric M.

and

Raghavachari, Krishnan

2021.

A Fragmentation-Based Graph Embedding Framework for QM/ML.

The Journal of Physical Chemistry A,

Vol. 125,

Issue. 31,

p.

6872.

Unzueta, Pablo A.

Greenwell, Chandler S.

and

Beran, Gregory J. O.

2021.

Predicting Density Functional Theory-Quality Nuclear Magnetic Resonance Chemical Shifts via Δ-Machine Learning.

Journal of Chemical Theory and Computation,

Vol. 17,

Issue. 2,

p.

826.

Westermayr, Julia

and

Marquetand, Philipp

2021.

Machine Learning for Electronically Excited States of Molecules.

Chemical Reviews,

Vol. 121,

Issue. 16,

p.

9873.

Westermayr, Julia

Gastegger, Michael

Schütt, Kristof T.

and

Maurer, Reinhard J.

2021.

Perspective on integrating machine learning into computational chemistry and materials science.

The Journal of Chemical Physics,

Vol. 154,

Issue. 23,

Das, Sambit Kumar

Chakraborty, Sabyasachi

and

Ramakrishnan, Raghunathan

2021.

Critical benchmarking of popular composite thermochemistry models and density functional approximations on a probabilistically pruned benchmark dataset of formation enthalpies.

The Journal of Chemical Physics,

Vol. 154,

Issue. 4,

Torres-Cruz, Fred

Sahu, Ajay Kumar

Huayhua, Ruben Ticona

Julio, Martin

Bellido, Merma

Limachi, Isaac Ortega

and

Huanca, Julio Cesar Laura

2022.

Comparative Analysis of High-Performance Computing Systems and Machine Learning in Enhancing Cyber Infrastructure: A Multiple Regression Analysis Approach.

p.

69.

Cencer, Morgan M.

Suslick, Benjamin A.

and

Moore, Jeffrey S.

2022.

From skeptic to believer: The power of models.

Tetrahedron,

Vol. 123,

p.

132984.