Introduction

The complexity, accuracy, and fluency (CAF) framework is grounded in theories of second language (L2) acquisition and performance. It relates to models of L2 competence, proficiency, and processing (Housen & Kuiken, 2009). Within this framework, complexity refers to the breadth and sophistication of linguistic structures learners produce, primarily focusing on syntactic aspects. Accuracy pertains to the precision and correctness of language use, reflecting the degree to which a learner’s output adheres to the norms of the target language. Fluency addresses the fluidity, smoothness, and speed of language production. As Ortega (2012) notes, CAF measures serve multiple functions: describing performance, benchmarking development, and gauging proficiency. These measures have become critical metrics for evaluating L2 proficiency across both instructional settings and standardized language assessments, demonstrating their predictive strength for language proficiency and writing quality (Crossley, Kyle, Allen, Guo, & McNamara, 2014; Plakans, Gebril, & Bilki, 2019).

As the field advances, there is a growing acknowledgment of the need to integrate the three CAF measures and treat them as a whole rather than as separate constructs (Larsen-Freeman, 2009; Norris & Ortega, 2009; Teng & Huang, 2023). This integrated approach reflects the inherently dynamic and holistic nature of language learning and underscores the importance of viewing CAF as interconnected dimensions that mutually inform assessments of learner language development (Hou, Loerts, & Verspoor, 2020).

Parallel to these theoretical developments, significant progress has occurred in the automated measurement of syntactic complexity and fluency. Advances in natural language processing (NLP) have made the automated calculation of syntactic complexity widely accessible through tools such as L2 Syntactic Complexity Analyzer (L2SCA; Lu, 2010) and the Tool for the Automatic Analysis of Syntactic Sophistication and Complexity (TAASSC; Kyle & Crossley, 2018). Fluency measures, typically operationalized through word counts and sentence-level metrics, have similarly been automated with relative ease using existing NLP techniques.

However, accuracy, particularly the precise identification and quantification of linguistic errors, remains a domain traditionally reliant on resource-intensive human evaluation. Existing automated tools, such as Grammarly (https://app.grammarly.com/), offer learner-centric corrective feedback but do not provide the quantitative accuracy indices extensively used in L2 research, such as errors per T-unit, errors per clause, or proportions of error-free units (Polio & Shea, 2014). The recent emergence of generative AI models, such as ChatGPT and other large language models (LLMs), has sparked interest in the feasibility of automated error analysis (Mizumoto, Shintani, Sasaki, & Teng, 2024; Pfau, Polio, & Xu, 2023). These developments suggest significant potential for automating accuracy assessment, thereby addressing a major gap in current research and practical applications.

In response to this opportunity, the present research report introduces Auto Error Analyzer, a prototype web application specifically designed to automate the computation and reporting of accuracy metrics within the CAF framework. By leveraging cutting-edge generative AI (Llama 3.3), this tool efficiently and affordably quantifies accuracy in L2 learner texts, aligning closely with human evaluation benchmarks. This integration not only completes the automation of all CAF dimensions but also enables more scalable and reliable analyses of L2 production data, bridging the gap between human judgment and AI-driven assessment.

Background

Complexity and fluency in L2 development research

The CAF measures have been a fundamental framework for evaluating learners’ linguistic proficiency. They play a critical role in both the task-based assessment of L2 oral and written performance in classroom settings (Koizumi & In’nami, 2024) and in the context of norm-referenced language testing environments (Plakans et al., 2019). Complexity encompasses the breadth and sophistication of linguistic structures that a learner is capable of producing, emphasizing the employment of advanced lexical, morphological, and syntactic complexity (Housen et al., 2012; Pallotti, 2021). Researchers have developed numerous indices to quantify syntactic complexity in L2 writing, such as mean length of T-unit (MLT), mean length of clause (MLC), coordinate phrases per clause (CP/C), complex nominals per clause (CN/C), dependent clause ratio (DC/C), T-unit complexity ratio (C/T), mean length of sentence (MLS), sentence coordination ratio (T/S), and dependent clauses per T-unit (DC/T) (see Hwang & Polio, 2023, for a comprehensive review of syntactic complexity indices in L2 writing studies). Recently, researchers have expanded the analytical methods with more refined syntactic indices that capture specific dimensions of complexity, including phrasal elaboration, structural variations, and clause-level relationships (Biber, Gray, Staples, & Egbert, 2020; Crossley, 2020; Kyle & Crossley, 2018).

These syntactic complexity measures capture the elaborateness and hierarchy of a learner’s sentences. In empirical studies, such complexity indices have been shown to predict writing proficiency (e.g., Kuiken & Vedder, 2019; Phuoc & Barrot, 2022) and elucidate text variation (e.g., Tao & Aryadoust, 2024). For example, Mizumoto and Eguchi (2023) explored the efficacy of automated essay scoring (AES) with the GPT-3 text-davinci-003 model on the 12,100 essays in the ETS Corpus of Non-Native Written English (TOEFL11). Their findings revealed that the inclusion of the above-mentioned syntactic complexity measures and additional linguistic features, such as lexical diversity (Kyle, Crossley, & Jarvis, 2021) and lexical sophistication (Crossley, Kyle, & Berger, 2018), significantly improved the accuracy of the scoring compared to using the GPT model alone.

Advances in NLP in recent years have made syntactic complexity easier to assess, using tools such as L2SCA (Lu, 2010) and TAASSC (Kyle, 2016). In addition, L2SCA’s online interface, the Web-based L2 Syntactic Complexity Analyzer (https://aihaiyang.com/software/l2sca/), offers an accessible platform for easy online operations (Ai & Lu, 2013). These tools have enabled automated large-scale analysis of syntactic complexity without manual coding and have therefore been widely used in the field. It is important to note that the current version of Auto Error Analyzer incorporates syntactic complexity measures primarily to support its accuracy calculations. Specifically, syntactic units derived from well-established NLP methodologies and validated tools (e.g., L2SCA, TAASSC), namely T-units and clauses, serve as the denominators for accuracy metrics such as errors per T-unit or errors per clause. While lexical and morphological complexity are equally significant, their computational integration differs substantially and is beyond the immediate scope of a tool focused on accuracy assessment. Future development will explore the incorporation of these additional complexity dimensions to provide a more comprehensive analysis of L2 production.

Fluency, on the other hand, concerns the flow and speed of language production (articulation rate), breakdown (pauses), and repair (reformulations and false starts), emphasizing the ability to communicate ideas without undue pausing or hesitation. Within the CAF framework, fluency has been straightforwardly operationalized in L2 writing as the total number of words, or at times, clauses or T-units per text (Plakans et al., 2019). With the advent of computer-based writing as the standard, writing fluency now also focuses on minimal pauses, few revisions, and significant word production (Garcés-Manzanera, 2024; Gilquin, 2024). Consequently, there has been an increase in studies using keystrokes as a measure of writing fluency, facilitated by keystroke logging programs (e.g., Mohsen, 2021). Recently, some researchers have expanded fluency measurement methods by integrating eye-tracking technology, providing further insights into the cognitive processes underlying L2 fluency (Chukharev-Hudilainen, Saricaoglu, Torrance, & Feng, 2019; Lehtilä & Lintunen, 2025; Révész, Michel, Lu, Kourtali, Lee, & Borges, 2022).

Among the three CAF dimensions, fluency, particularly as measured by word count, is recognized as a predictor of both writing quality and overall language development in L2 learners (Plakans et al., 2019). Research has consistently demonstrated that word count serves as a robust predictor of variance in L2 writing assessment scores (Ferris, 1994; Gebril & Plakans, 2013; Goh, Sun, & Yang, 2020). For example, in a study examining linguistic microfeatures as predictors of L2 writing quality, Crossley et al. (2014) identified word count (or word type) as a significant determinant of essay scores, demonstrating that test-takers who produced more text generally received higher evaluations of their writing.

Accuracy in L2 research: Significance and challenges

Accuracy, in the CAF framework, denotes the precision and correctness of language use, reflecting how closely a learner’s production in L2 adheres to the grammatical, syntactic, and lexical norms of the target language (Housen, Kuiken, & Vedder, 2012). High accuracy is evident when an L2 text has few or no errors in syntax, morphology, or lexis.

Accuracy is not just a predictor of proficiency, evidenced by its correlation with proficiency levels (Thewissen, 2013) and writing scores (Polio & Shea, 2014); it also holds a pivotal role in classrooms through formative assessment. The ability to identify, correct, and reconstruct the interlanguage of learners, including their errors, is essential. Consequently, accuracy is a key indicator for both researchers and practitioners alike, underscoring its importance in evaluating language proficiency and guiding the instructional process (Pallotti, 2017).

To evaluate accuracy, researchers have developed a variety of metrics and coding schemes. Updating Polio (1997), Polio and Shea (2014) comprehensively reviewed accuracy measures used in L2 writing research. They reported that common measures include (a) holistic scales for linguistic accuracy, (b) error-free units (e.g., error-free T-units), (c) the number of errors per 100 words, (d) the number of specific error types (e.g., grammar errors such as articles), and (e) measures considering the severity of the error (e.g., weighted error-free clauses). Pallotti (2021) similarly highlighted several of these indicators, particularly emphasizing measures such as the average number of errors per unit (clause, sentence, or T-unit) and the proportion of error-free units, thereby reaffirming key metrics identified in Polio and Shea’s earlier review.

These measures provide a detailed view of accuracy by quantifying not just how many errors are present but also their types and distribution. For instance, one learner’s essay might have eight errors per 100 words (mainly minor spelling mistakes), while another’s has four errors per 100 words but with two being severe grammatical errors; different accuracy metrics would capture different aspects of these texts’ correctness. Notably, accuracy metrics like error-free clause counts and error rates have demonstrated validity by aligning with expert judgments and predicting writing performance (Polio & Shea, 2014). In other words, texts with fewer errors (or higher proportions of error-free sentences) tend to receive higher ratings, confirming accuracy as an essential indicator of writing quality.
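As a rough illustration of how such measures are derived, the following Python sketch computes three of them from per-unit error counts. The function name and the sample numbers are hypothetical and are not taken from any of the studies cited above.

```python
def accuracy_indices(total_words, errors_per_unit):
    """Compute common accuracy indices from per-unit error counts.

    errors_per_unit holds one integer per unit (e.g., per T-unit or clause).
    """
    if total_words <= 0 or not errors_per_unit:
        raise ValueError("need at least one word and one unit")
    total_errors = sum(errors_per_unit)
    n_units = len(errors_per_unit)
    error_free = sum(1 for e in errors_per_unit if e == 0)
    return {
        "errors_per_100_words": 100 * total_errors / total_words,
        "errors_per_unit": total_errors / n_units,
        "error_free_ratio": error_free / n_units,
    }


# Hypothetical essay: 200 words, 10 T-units, 5 errors spread over 3 T-units.
result = accuracy_indices(200, [0, 2, 0, 0, 1, 0, 0, 2, 0, 0])
```

For this made-up essay the sketch gives 2.5 errors per 100 words, 0.5 errors per T-unit, and an error-free T-unit ratio of 0.7, which shows how the same text can look different depending on the index chosen.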

Despite its importance, accuracy has historically been the most challenging CAF dimension to measure automatically. Complexity and fluency lend themselves to automation because they deal with structural and quantitative features that computers can parse (sentence lengths, word counts, etc.). Accuracy, in contrast, requires identifying linguistic errors, a task that traditionally relies on human evaluators who can distinguish true errors from stylistic variations. While automated grammar checkers (e.g., Grammarly) and other Automated Writing Evaluation (AWE) tools provide corrective feedback to users, they do not directly output research-friendly accuracy metrics like those reviewed above (Polio & Shea, 2014). For example, a tool like Grammarly might underline a misused article or a verb tense error and suggest a correction, but it will not tell a researcher “this essay has 5 errors in 200 words” or “80% of the sentences are error-free.” As a result, studies analyzing accuracy have had to resort to labor-intensive human coding of errors in student texts. This manual process raises concerns about consistency and scalability. Researchers must agree on what counts as an error, train raters, and spend considerable time on analysis, which is a barrier to large-scale or classroom-level implementation. In short, accuracy remains the least automated component of CAF, and improving this state of affairs is a pressing need in L2 research.

Automating accuracy measurement

Recent advancements in NLP and artificial intelligence (AI) are beginning to bridge the gap in accuracy assessment. Researchers have started comparing cutting-edge AI language models with existing grammar checking tools to evaluate how accurately each can detect and evaluate errors in L2 production, especially in writing. Traditional tools such as Grammarly or Criterion offer quick fixes for obvious mistakes, yet they sometimes miss context-dependent errors and, as noted, do not report comprehensive accuracy statistics. Crossley, Bradfield, and Bustamante (2019) introduced the Grammar and Mechanics Error Tool (GAMET), based on LanguageTool v3.2, to automatically assess structural and mechanical errors in texts. Although GAMET achieved high precision in identifying certain error types (e.g., spelling and typographical errors), its overall accuracy and recall were still limited, particularly in grammar and punctuation error detection. Consequently, while GAMET represented an important attempt at automating accuracy assessment, it lacked the comprehensive precision offered by newer, generative AI-based methods.

In contrast, the new generation of AI, exemplified by large language models (LLMs) like Generative Pre-trained Transformer (GPT), can analyze text with greater depth and potentially provide more detailed error analysis. A key question has been whether these AI models can match or approach human accuracy in identifying and judging L2 writing errors, and early evidence is promising. For example, Pfau et al. (2023) conducted a pioneering study on GPT-4’s accuracy assessment capabilities, examining 100 essays written by non-native English speakers. They used ChatGPT to detect and classify errors in each sentence and had human experts verify the AI’s performance. Their findings revealed strong alignment between ChatGPT and human evaluators, with a correlation of .97. Such a high correlation suggests that generative AI can replicate human-level accuracy judgments with remarkable precision. Building on the work of Pfau et al., Mizumoto, Yasuda, and Tamura (2024) directly compared the accuracy evaluations of generative AI models with both human assessments and Grammarly’s feedback, using a sample of 232 L2 English writing scripts. The AI (based on ChatGPT) not only showed a strong correlation (ρ = .79) with human judgment of errors, but it actually outperformed the grammar checker in mimicking human evaluations. Specifically, the AI’s error counts and ratings were more in line with the human experts’ (higher agreement) than Grammarly’s were, and the AI’s accuracy metrics were slightly better predictors of the students’ actual writing scores on a proficiency exam.

These studies demonstrate promising results, with generative AI (GPT) showing high accuracy in detecting grammatical errors and aligning closely with human judgments. The evidence supports the use of generative AI as a viable tool for automated accuracy assessment, suggesting that generative AI is now sufficiently developed to warrant consideration for practical implementation. The present work takes up this charge by focusing on accuracy automation, directly addressing the identified gap in tools in L2 research.

Having identified the critical gap in automated accuracy measurement, the following section introduces Auto Error Analyzer, a tool specifically designed to address these limitations through its innovative approach to error detection, classification, and reporting for L2 production assessment.

Description of the tool

Indices calculated in the Auto Error Analyzer

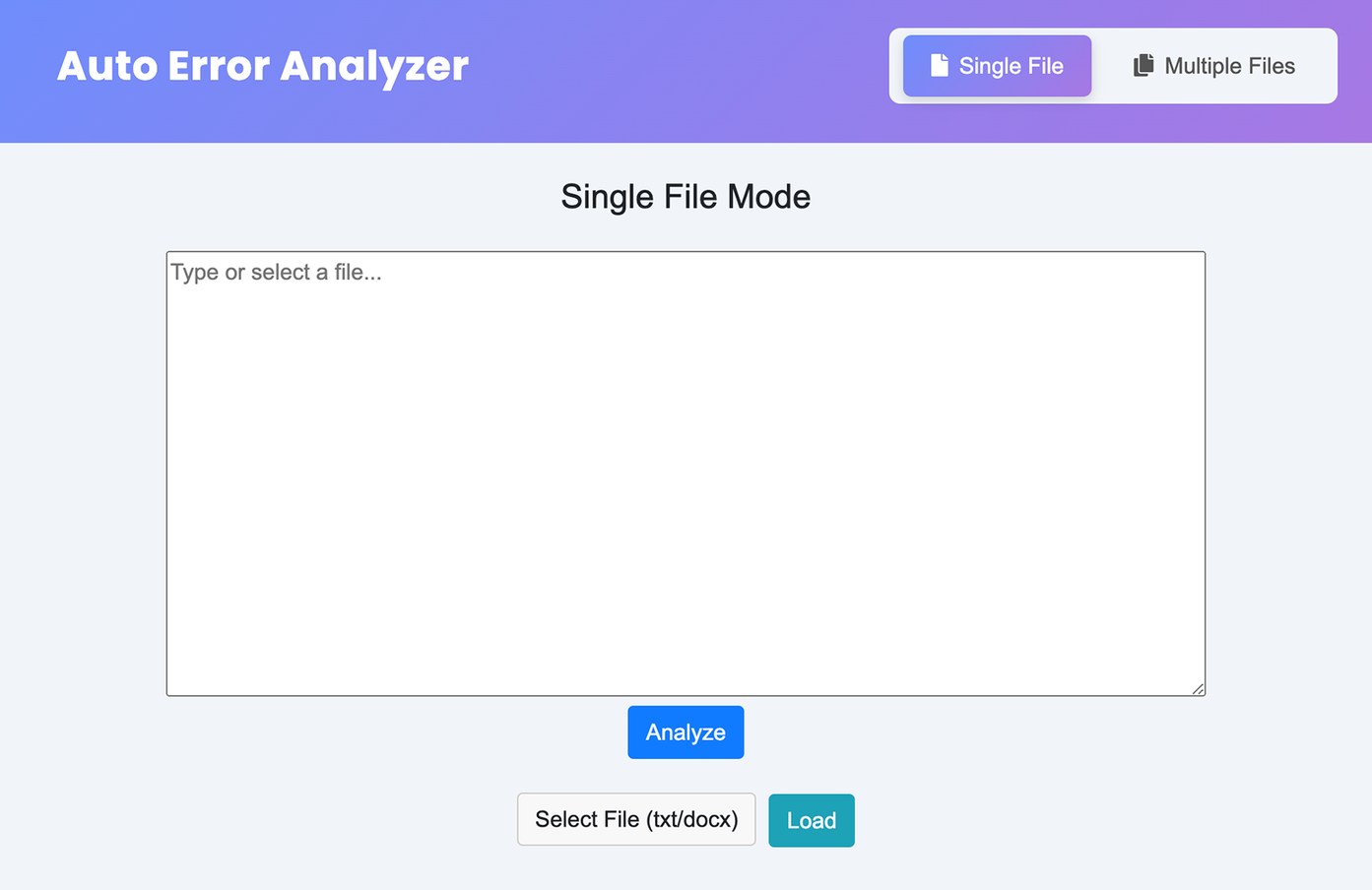

The newly developed tool, named “Auto Error Analyzer,” is freely accessible at https://langtech.jp/autoerror/. Figure 1 shows the Auto Error Analyzer landing page. Users can analyze English text entered manually or uploaded as text (.txt) or Microsoft Word (.docx) files.

Figure 1. Auto Error Analyzer landing page.

In addition to written texts, Auto Error Analyzer can also process transcribed spoken data. Since the tool focuses on error detection and classification, its core functionalities apply to both written and transcribed spoken texts, making it useful for analyzing a wider range of learner production.

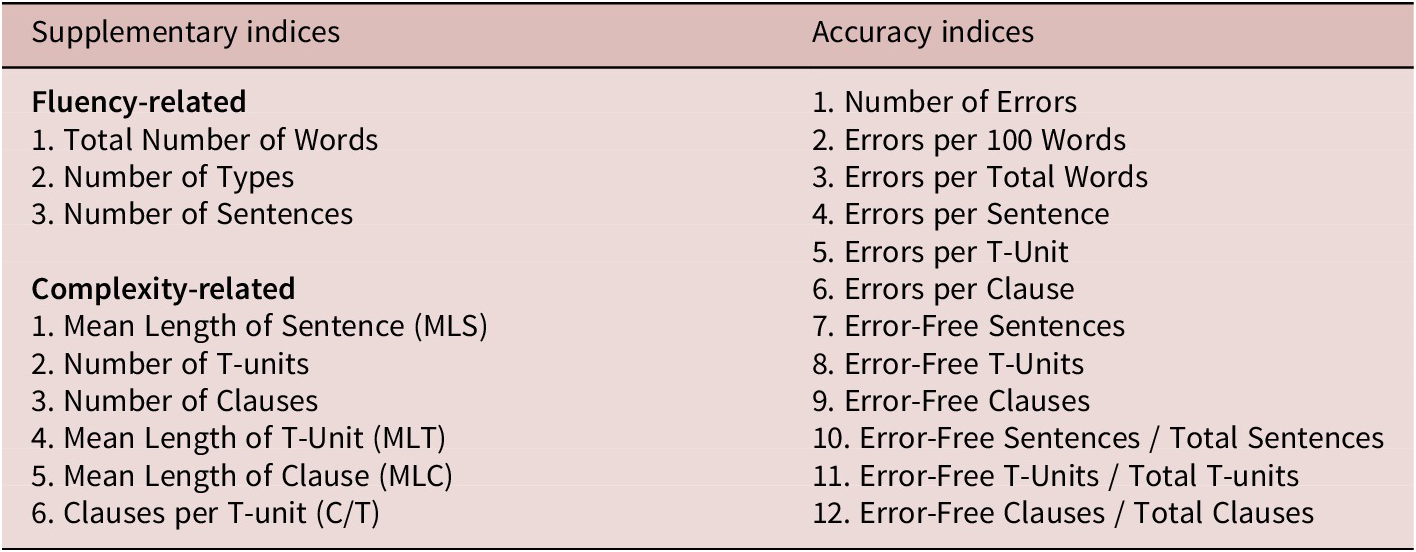

Table 1 presents the indices calculated by the Auto Error Analyzer. Although the tool is designed primarily for the automatic calculation of accuracy indices, it also displays fluency-related indices (e.g., total number of words and sentences) and complexity-related indices (e.g., T-units and clauses), because counts of T-units and clauses are required to derive accuracy measures such as Errors per T-unit, Errors per Clause, Error-free T-units, and Error-free Clauses. These supplementary indices appear on the left of Table 1 and are included in the web application for reference purposes.

Table 1. Indices calculated in the Auto Error Analyzer

The main category, accuracy, includes 12 indices that are based on widely used accuracy measures in L2 writing research (Polio & Shea, 2014). In a recent study by Mizumoto et al. (2024), GPT-4 was employed for error identification because of its demonstrated high accuracy in detecting and correcting grammatical errors, thus providing a robust baseline for AI-based accuracy assessment. Specifically, GPT-4 was used via OpenAI’s API (Application Programming Interface, a set of protocols and tools for software applications to communicate with each other). For Auto Error Analyzer, Llama 3.3 through Groq’s API is used. This model offers capabilities comparable to GPT-4, with the added advantages of significantly faster processing and lower API costs. Because processing speed and cost-efficiency are critical when delivering LLM-powered services, these advantages make Llama 3.3 a practical choice, particularly for real-time applications and large-scale analyses. The prompt used for error analysis in Auto Error Analyzer is the same as the one employed in Mizumoto et al. (2024):

[Prompt]

Reply with a corrected version of the input sentence with all grammatical, spelling, and punctuation errors fixed. Be strict about the possible errors. If there are no errors, reply with a copy of the original sentence. Please do not add any unnecessary explanations.
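To make the use of this prompt concrete, the sketch below shows one way a single sentence might be submitted for correction. It is an illustration under stated assumptions rather than the tool's actual code (which is in the supplementary material): the system/user role split, the `temperature=0` setting, the model identifier `llama-3.3-70b-versatile`, and the helper names are all assumptions, and `client` is assumed to be any object exposing an OpenAI-style `chat.completions.create` method, such as the client provided by Groq's Python SDK.

```python
PROMPT = (
    "Reply with a corrected version of the input sentence with all "
    "grammatical, spelling, and punctuation errors fixed. Be strict about "
    "the possible errors. If there are no errors, reply with a copy of the "
    "original sentence. Please do not add any unnecessary explanations."
)


def build_messages(sentence):
    """Wrap the fixed instruction and one learner sentence as chat messages."""
    return [
        {"role": "system", "content": PROMPT},   # assumed role split
        {"role": "user", "content": sentence},
    ]


def correct_sentence(client, sentence, model="llama-3.3-70b-versatile"):
    """Ask the model for a corrected sentence (model id is an assumption)."""
    response = client.chat.completions.create(
        model=model,
        messages=build_messages(sentence),
        temperature=0,  # assumed: keep the output as stable as possible
    )
    return response.choices[0].message.content.strip()
```

Passing the client in as an argument keeps the sketch independent of any particular SDK and makes it easy to substitute a stub when checking the surrounding logic.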

Extraction of T-units and clauses

In this application, the extraction of T-units and clauses from text involves distinct processes aimed at analyzing syntactic and grammatical structures to ensure clear and meaningful segmentation. A T-unit, or minimal terminable unit, represents an independent clause along with any attached dependent clauses or modifying phrases. To identify T-units, the program analyzes the text using an NLP approach that examines conjunctions, punctuation, and overall sentence structure. Specifically, the application focuses on coordinating conjunctions such as “and,” “but,” or “so,” punctuation marks such as semicolons, and conjunctive adverbs to determine logical points for splitting sentences into meaningful units. Each potential split point is carefully assessed within the application to confirm the presence of a complete independent clause, ensuring the resulting units are coherent and self-contained.

Clauses, on the other hand, are segments within sentences identified primarily through their verbs. In this developed application, the identification of clauses involves analyzing verbs that form the core of these grammatical structures. By focusing on the main actions and their syntactic relationships, the program identifies both independent and dependent clauses, distinguishing them clearly within complex sentences. This process within the program also considers special grammatical structures like coordinated clauses and relative clauses, ensuring accurate representation and readability.
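The general idea of verb-centered clause and T-unit identification can be sketched as follows. This is a simplified heuristic for illustration, not the application's algorithm: the `Tok` record is a hypothetical stand-in for a parsed token (with spaCy, the same fields would come from `token.text`, `token.pos_`, `token.dep_`, and `token.head.i`), the example parse is written by hand, and the choice of dependency labels is an assumption based on the label set used by spaCy's English models.

```python
from collections import namedtuple

# Hypothetical stand-in for a parsed token; head is the index of the governor.
Tok = namedtuple("Tok", "text pos dep head")

VERBAL = {"VERB", "AUX"}
SUBJECT_DEPS = {"nsubj", "nsubjpass", "csubj"}
# Dependency roles assumed to head a finite clause.
CLAUSE_DEPS = {"ROOT", "conj", "ccomp", "advcl", "relcl"}


def has_subject(tokens, i):
    """True if some token attaches to token i as its subject."""
    return any(t.head == i and t.dep in SUBJECT_DEPS for t in tokens)


def count_clauses(tokens):
    """Count verbs that fill a clause-heading role and have an overt subject."""
    return sum(
        1 for i, t in enumerate(tokens)
        if t.pos in VERBAL and t.dep in CLAUSE_DEPS and has_subject(tokens, i)
    )


def count_t_units(tokens):
    """Count subject-bearing root verbs and verbs coordinated with the root."""
    count = 0
    for i, t in enumerate(tokens):
        if t.pos not in VERBAL or not has_subject(tokens, i):
            continue
        if t.dep == "ROOT" or (t.dep == "conj" and tokens[t.head].dep == "ROOT"):
            count += 1
    return count


# Hand-written parse of: "I like tea but she prefers coffee because it is strong."
SENTENCE = [
    Tok("I", "PRON", "nsubj", 1), Tok("like", "VERB", "ROOT", 1),
    Tok("tea", "NOUN", "dobj", 1), Tok("but", "CCONJ", "cc", 1),
    Tok("she", "PRON", "nsubj", 5), Tok("prefers", "VERB", "conj", 1),
    Tok("coffee", "NOUN", "dobj", 5), Tok("because", "SCONJ", "mark", 9),
    Tok("it", "PRON", "nsubj", 9), Tok("is", "AUX", "advcl", 5),
    Tok("strong", "ADJ", "acomp", 9), Tok(".", "PUNCT", "punct", 1),
]
n_clauses = count_clauses(SENTENCE)
n_t_units = count_t_units(SENTENCE)
```

In the example, the coordinated verb "prefers" has its own subject and therefore opens a second T-unit, whereas the "because" clause adds a clause but not a T-unit. Subjectless structures such as imperatives are deliberately ignored in this sketch.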

Following extraction, the application further refines T-units and clauses by correcting punctuation, contractions, and spacing, enhancing clarity and readability. If discrepancies arise, it performs additional refinements and reanalyses. This iterative approach ensures that the final output accurately represents the intended linguistic structures. By automating this step, the application establishes refined T-units and clauses as foundational units for calculating accuracy indices, such as errors per T-unit and errors per clause, within the overall accuracy measurement process.

In technical terms, the application uses NLP techniques similar to those in tools like L2SCA and TAASSC. Specifically, the approach used here mirrors the methodologies of these established tools by parsing text at the sentence and word level. However, unlike TAASSC, which does not directly compute T-units and clauses, this application employs custom-developed code written from scratch, inspired by the results of T-unit and clause extraction as implemented in L2SCA. While L2SCA utilizes the Stanford Parser (Klein & Manning, 2003), this application employs spaCy, a robust NLP library in Python, complemented by custom-developed algorithms specifically tailored to replicate similar outcomes (see the online supplementary material for the actual code: https://osf.io/jyf3r/).

Indices such as Errors per T-unit, Errors per Clause, Error-free T-units, and Error-free Clauses are calculated based on errors detected and T-units and clauses. That is, after the errors are automatically identified in the program, a simple matching approach categorizes them within T-units and clauses, enabling the calculation of these indices.

Technical implementation of error analysis with Llama 3.3

The error analysis component of Auto Error Analyzer is powered by Llama 3.3, accessed through Groq’s API service. This implementation involves several technical steps that transform raw text input into structured error analysis output.

First, the input text undergoes preprocessing to normalize spacing, handle special characters, and segment the text into individual sentences. This segmentation is crucial for the sentence-by-sentence analysis approach used by the tool.
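A minimal sketch of this kind of preprocessing is given below. The specific normalization rules and the regex-based sentence splitter are assumptions made for illustration; the application itself may rely on a parser-based segmenter instead.

```python
import re


def preprocess(text):
    """Normalize curly quotes and collapse runs of whitespace."""
    text = text.replace("\u2018", "'").replace("\u2019", "'")
    text = text.replace("\u201c", '"').replace("\u201d", '"')
    return re.sub(r"\s+", " ", text).strip()


def split_sentences(text):
    """Split after ., !, or ? when followed by a capital letter or a quote."""
    parts = re.split(r"(?<=[.!?])\s+(?=[A-Z\"'])", preprocess(text))
    return [p for p in parts if p]


sentences = split_sentences("I  like tea.\nShe likes  coffee!  Do you?")
```

A splitter this naive will break on abbreviations such as "Dr. Smith", which is one reason production tools usually delegate segmentation to an NLP library.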

Next, each sentence is submitted to the Llama 3.3 model via Groq’s API with the specific prompt shown earlier. The implementation uses asynchronous API calls to optimize processing time, with appropriate rate limiting to respect API constraints. When the model returns a corrected sentence, a diff algorithm compares the original and corrected versions to identify specific changes. These changes are then classified into the 23 error categories (detailed below) using another set of API calls (see the online supplementary material for the actual code and prompt: https://osf.io/jyf3r/).
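The diff step can be illustrated with Python's standard `difflib` module. Whether the application uses `difflib` or word-level (rather than character-level) comparison is not stated here, so both are assumptions, as is the helper name `diff_edits`.

```python
import difflib


def diff_edits(original, corrected):
    """Return (operation, original_text, corrected_text) for each change."""
    a, b = original.split(), corrected.split()
    matcher = difflib.SequenceMatcher(a=a, b=b, autojunk=False)
    edits = []
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag != "equal":  # keep only replace / insert / delete operations
            edits.append((tag, " ".join(a[i1:i2]), " ".join(b[j1:j2])))
    return edits


edits = diff_edits("She go to school.", "She goes to school.")
```

Each non-equal operation corresponds to one candidate error, which can then be passed to a classification step; an unchanged sentence yields an empty list and therefore zero errors.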

After error identification and classification, a mapping algorithm associates these errors with the previously extracted T-units and clauses to generate unit-level metrics such as errors per T-unit. This association uses span indices that track the exact character positions of each error, T-unit, and clause within the original text, ensuring accurate alignment even with complex nested structures.
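The span-based association might look like the following sketch. The half-open `(start, end)` character offsets, the rule of assigning an error to the smallest unit containing its start position, and the function name are illustrative assumptions rather than details confirmed by the source.

```python
def errors_per_unit(unit_spans, error_spans):
    """Count errors per unit using half-open (start, end) character offsets.

    Each error is assigned to the smallest unit that contains its start
    offset, so an error inside a nested unit is credited to the inner one.
    """
    counts = [0] * len(unit_spans)
    for e_start, _e_end in error_spans:
        containing = [
            k for k, (u_start, u_end) in enumerate(unit_spans)
            if u_start <= e_start < u_end
        ]
        if containing:
            smallest = min(
                containing, key=lambda k: unit_spans[k][1] - unit_spans[k][0]
            )
            counts[smallest] += 1
    return counts


text = "I like tea but she prefer coffee."
t_units = [(0, 10), (11, len(text))]           # hypothetical T-unit spans
start = text.index("prefer")
errors = [(start, start + len("prefer"))]      # one agreement error
counts = errors_per_unit(t_units, errors)
error_free_t_units = counts.count(0)
```

Here the single error falls in the second T-unit, so the text has one error-free T-unit out of two and 0.5 errors per T-unit.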

Error types detected by the tool

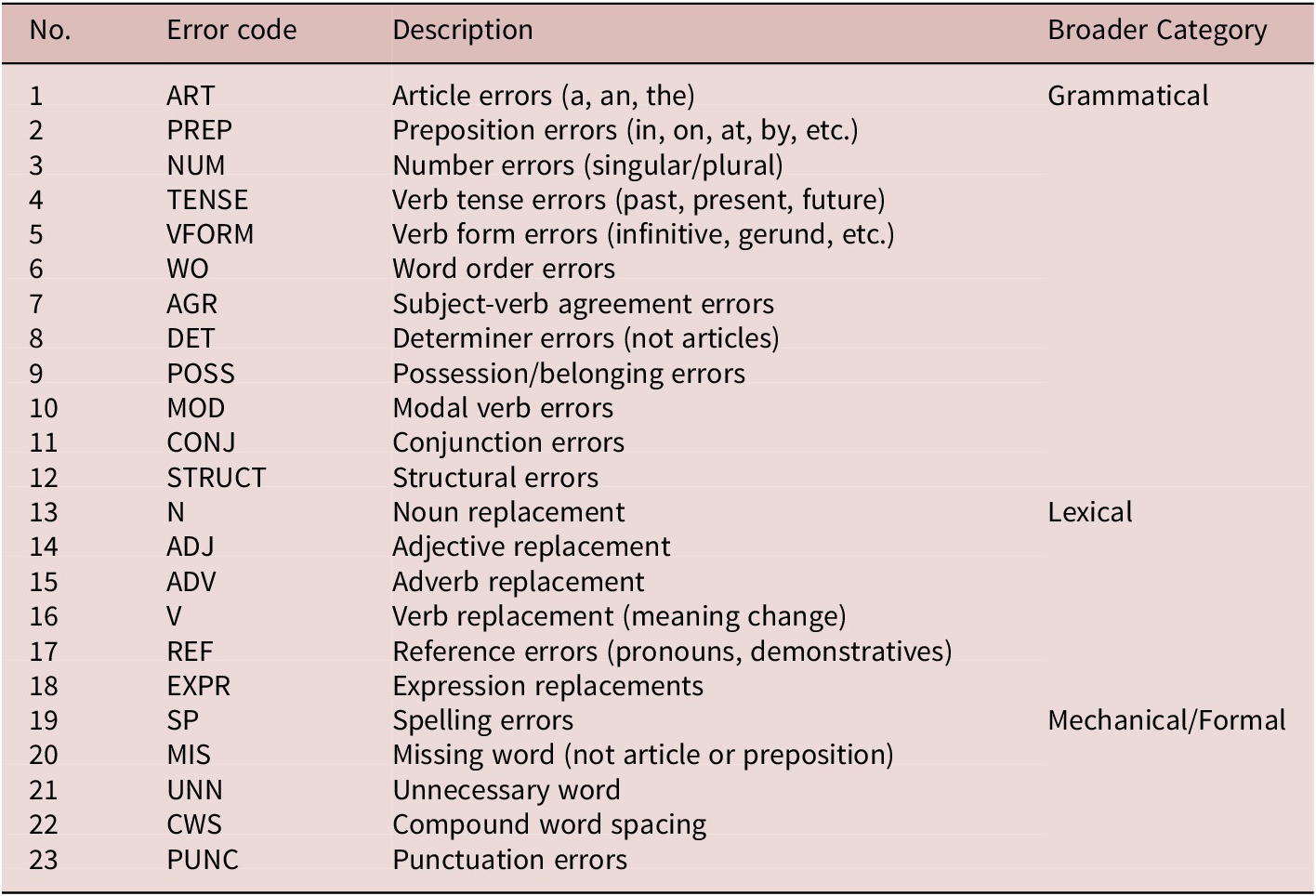

Defining what constitutes an “error” has proven to be elusive in L2 research (Pallotti, 2021, p. 4). To address this challenge systematically, Auto Error Analyzer implements a comprehensive classification framework comprising 23 error categories (Table 2). This taxonomy was developed through careful synthesis of established error coding schemes, including the Cambridge Learner Corpus Error Coding Scheme (Nicholls, 2003) and L2 studies on error analysis or written corrective feedback (e.g., Dagneaux, Denness, & Granger, 1998; Ferris, 2011; Lee, 2004; Lira-Gonzales & Nassaji, 2020). The resulting categories are organized into three primary domains (grammatical, lexical, and mechanical), providing a structured approach to error identification and classification.

Table 2. Error types in Auto Error Analyzer

Note. For specific examples of each error, refer to the online supplementary material (https://osf.io/jyf3r/).

The grammatical categories (e.g., article errors, verb tense errors, subject-verb agreement errors) align closely with error taxonomies in previous studies, addressing basic syntactic and morphological error types (e.g., Ferris, 2011). In addition, discourse-level errors (e.g., conjunction errors and structural errors) are informed by cohesion frameworks, addressing coherence, logical connectivity, and higher-order discourse issues (e.g., Zhang et al., 2024). Lexical error categories (e.g., collocation errors, inappropriate expressions, idiomatic usage) reflect corpus-based approaches that emphasize lexical appropriateness and collocational accuracy (e.g., Agustín-Llach, 2017). Mechanical/formal errors (e.g., spelling errors, punctuation errors, unnecessary words) correspond to surface-level or orthographic inaccuracies (e.g., Crossley et al., 2019). By integrating insights from established frameworks and empirical studies, the proposed 23-category scheme offers a theoretically sound, empirically grounded, and comprehensive tool for accurately capturing the full range of English as a Second Language/English as a Foreign Language (ESL/EFL) learner errors, thereby enhancing its relevance for research and pedagogical applications.

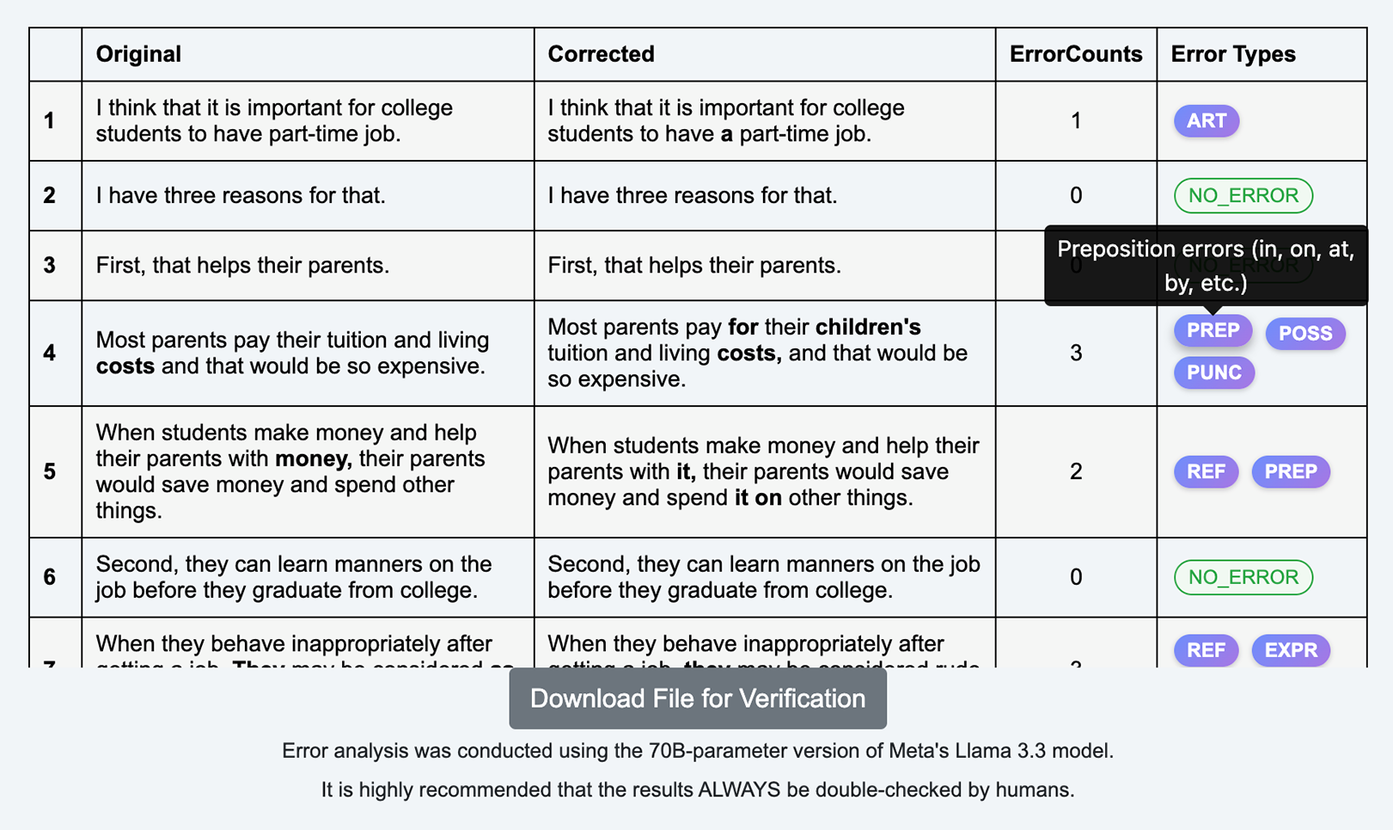

Figure 2 illustrates how Auto Error Analyzer presents its analysis results. In addition to the accuracy indices mentioned in Table 1, the tool displays the original sentences, corrected sentences, error counts, and corresponding error types in a tabular format for easy inspection. Corrections within the sentences are highlighted in bold, allowing users to quickly identify the changes. By automatically assigning error types based on the developed error categories, the tool enables users to not only count errors but also gain a clear understanding of the specific types of errors present in the text.

Figure 2. Sample of error analysis output in Auto Error Analyzer.

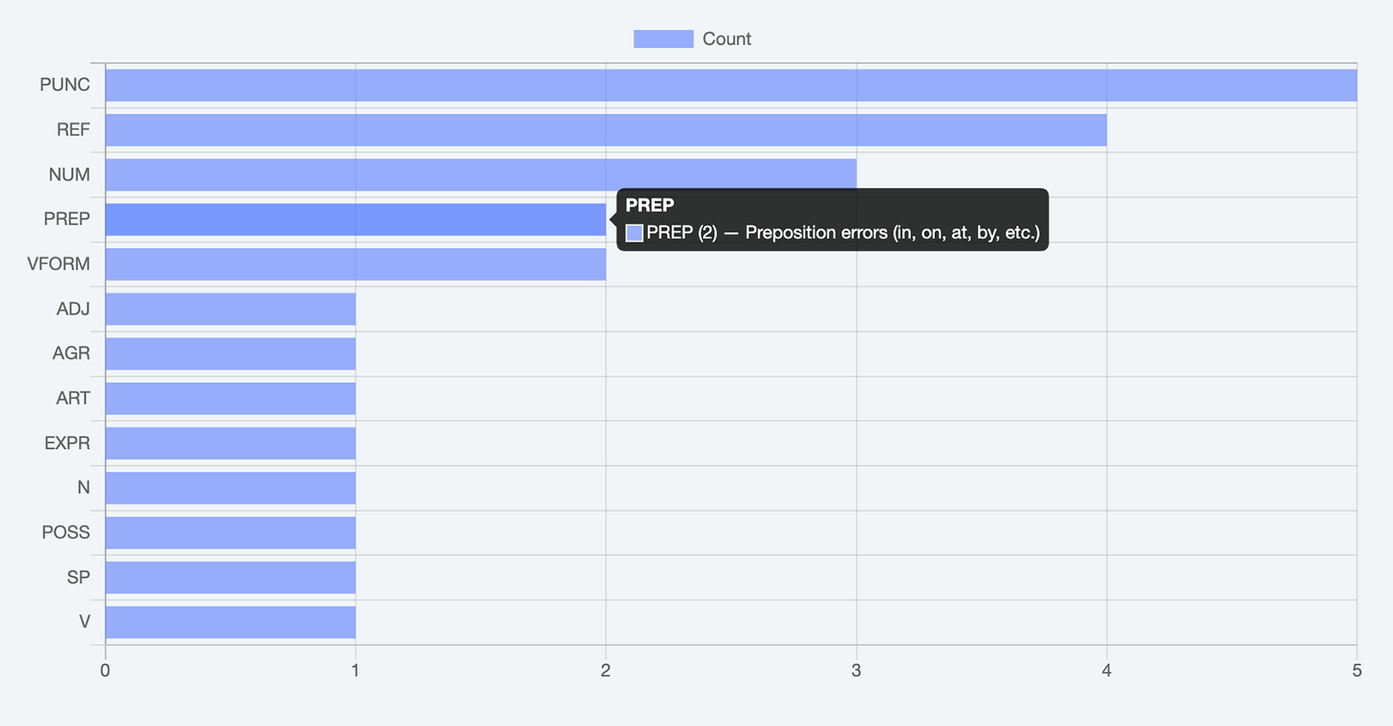

Furthermore, as shown in Figure 3, the tool provides a visual distribution of the various error types detected in the user’s input text. By presenting this information in an easy-to-understand format, the tool allows users to intuitively grasp not only the presence of errors but also the specific patterns and trends in their writing. This feature can help users improve their writing skills by making them more aware of recurring mistakes.

Figure 3. Error distribution plot in Auto Error Analyzer.

Another key feature of Auto Error Analyzer is its ability to download a CSV (comma-separated value) file that contains even more detailed information than the tables shown in Figure 2 (accessible via the “Download File for Verification” button). This file allows users to double-check for any inaccuracies. Specifically, it includes various linguistic metrics for each sentence, such as T-unit counts, Error-free T-units, Clause counts, Error-free Clauses, Extracted T-units, Extracted Clauses, and Clause-signaling verbs. Additionally, it provides error categories and a detailed mapping of the specific parts where errors occur, ensuring precise inspection. This enables users to verify T-unit and clause segmentation and confirm the accuracy of error detection and corrections. By facilitating this review process, users can conduct a final manual check, ensuring the reliability of reported figures, even in research contexts where numerical accuracy is critical. Since the program may not be 100% error-free, this human oversight serves as a safeguard. The philosophy behind Auto Error Analyzer’s development is rooted in the belief that, even in the AI era, continuous human validation remains essential. This approach highlights the importance of collaboration between AI and human expertise.

In line with open science principles in applied linguistics (Plonsky, 2024), which promote transparency and reproducibility in research (In’nami, Mizumoto, Plonsky, & Koizumi, 2022), including application development (Mizumoto, 2024), all code and files, except for the API key, are publicly accessible on OSF (https://osf.io/jyf3r/), GitHub (https://github.com/mizumot/AutoErrorAnalyzer), and IRIS (https://www.iris-database.org/details/37VPp-fKbgz). By making the code publicly available like Kim, Williams, and McCallum (2024) (https://github.com/phoenixwilliams/RAML_tutorial) and Kyle (https://github.com/kristopherkyle/TAASSC), I hope other researchers will build upon this tool as a foundation for further development and enhancement.

To ensure that Auto Error Analyzer provides a reliable and valid measurement of linguistic errors, a systematic validation was conducted focusing on two critical aspects: the accuracy of T-unit and clause extraction, which forms the foundation for structural analysis, and the alignment between the tool’s automated error detection and human expert judgment.

Evaluation of the tool’s performance

To assess the validity of the metrics calculated by Auto Error Analyzer, a performance comparison was conducted on two levels: (1) T-unit and clause extraction, and (2) error analysis. For this comparison, I randomly selected 100 essays written by Japanese EFL university students from the dataset provided by Mizumoto et al. (2024), available on OSF (https://osf.io/bj8kq/), using Microsoft Excel’s RAND function. Each essay was segmented into individual sentences, and two researchers with Ph.D. degrees in applied linguistics independently counted the errors and assigned the error types listed in Table 2. Their counts were then recorded. To align with the LLM-based accuracy assessment, both researchers received training using examples from 30 additional essays analyzed for errors by ChatGPT (GPT-4) in Mizumoto et al. (2024), which were not part of the selected 100 essays. This training allowed them to familiarize themselves with typical error patterns and types. Initial inter-rater reliability for the number of errors was assessed with Krippendorff’s alpha, which indicated high agreement (α = .92), surpassing the recommended threshold of .80 (Krippendorff, 2004). In cases of disagreement, the final error counts were determined through discussion between the two raters and the author of this article. The assignment of error types followed the same process.
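For readers who wish to run a comparable reliability check on their own ratings, the sketch below computes Krippendorff’s alpha for two raters with complete data. It assumes the interval-level difference function (squared differences between counts); the article does not state which level of measurement or which software was used, so this is an illustration of the statistic rather than a reproduction of the reported analysis.

```python
def krippendorff_alpha_interval(rater_a, rater_b):
    """Krippendorff's alpha for two raters, no missing data, interval metric.

    alpha = 1 - D_o / D_e, where D_o is the observed disagreement within
    units and D_e is the disagreement expected from all pooled values.
    """
    if len(rater_a) != len(rater_b) or len(rater_a) < 2:
        raise ValueError("need two equally long lists with at least 2 units")

    pooled = list(rater_a) + list(rater_b)
    n = len(pooled)  # total number of pairable values (2 per unit)
    mean = sum(pooled) / n

    # Observed disagreement: mean squared difference within each unit.
    d_o = sum((a - b) ** 2 for a, b in zip(rater_a, rater_b)) / len(rater_a)

    # Expected disagreement: mean squared difference over all ordered
    # pairs of pooled values, which simplifies to this variance form.
    d_e = 2 * sum((v - mean) ** 2 for v in pooled) / (n - 1)
    if d_e == 0:
        raise ValueError("alpha is undefined when all values are identical")

    return 1 - d_o / d_e
```

Identical ratings yield an alpha of 1, and values fall as the two raters’ counts diverge relative to the overall spread of the counts.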

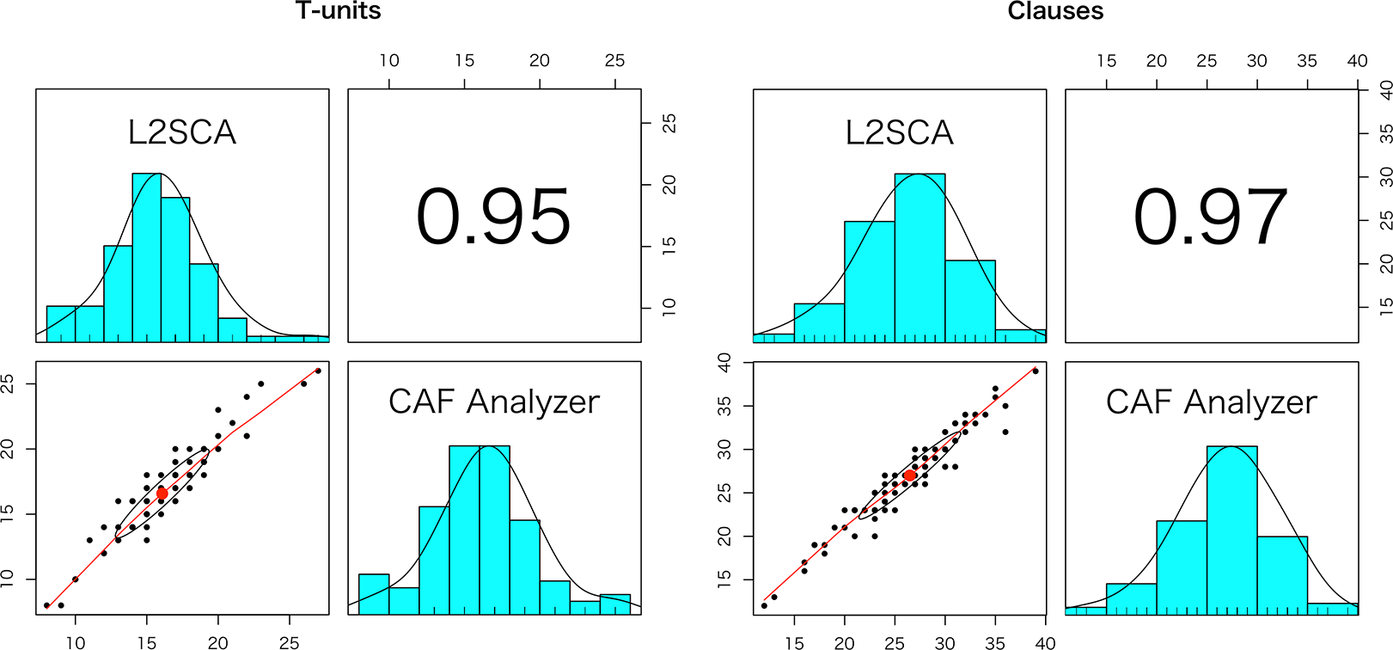

For T-unit and clause validation, I compared the counts obtained using the Web-based L2SCA (https://aihaiyang.com/software/l2sca/) (Ai & Lu, 2013) with the T-units and clauses generated by Auto Error Analyzer for the same set of 100 essays. As seen in Figure 4, the counts from the two tools are very strongly correlated for both T-units (r = .95, 95% CI [.93, .97]) and clauses (r = .97, 95% CI [.95, .98]). This indicates a very high level of agreement, confirming that the T-units and clauses extracted by Auto Error Analyzer align closely with those from L2SCA, a tool widely used in the L2 field.
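A correlation of this kind, together with its confidence interval, can be computed as in the sketch below. The interval uses the Fisher z-transformation, which is a common choice but an assumption here, since the article does not state how the reported intervals were derived.

```python
import math


def pearson_r(x, y):
    """Pearson correlation between two equally long numeric sequences."""
    n = len(x)
    if n != len(y) or n < 4:
        raise ValueError("need two equally long sequences with n >= 4")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def fisher_ci(r, n, z_crit=1.959964):
    """Approximate 95% CI for r via the Fisher z-transformation."""
    z = math.atanh(r)             # transform r to the z scale
    se = 1 / math.sqrt(n - 3)     # standard error on the z scale
    return math.tanh(z - z_crit * se), math.tanh(z + z_crit * se)
```

For r = .95 and n = 100, `fisher_ci(0.95, 100)` gives an interval of roughly [.93, .97].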

Figure 4. Correlations of T-units and clauses calculated with web-based L2SCA and Auto Error Analyzer.

To compare human error evaluations with the automated error analysis provided by Llama 3.3 used in Auto Error Analyzer, I calculated the errors per 100 words for each essay using both methods. Since both metrics met the assumptions of normality, I computed the correlation coefficient, which showed high agreement (r = .94, 95% CI [.92, .96]; see Figure 5). This result supports the trustworthiness of Auto Error Analyzer’s automated accuracy calculation using Llama 3.3.
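The normalization itself is simple arithmetic, sketched below. Counting words by splitting on whitespace is an assumption made for illustration; the article does not specify how word counts were obtained.

```python
def errors_per_100_words(error_count, text):
    """Normalize an essay's error count to errors per 100 words."""
    words = text.split()  # assumed tokenization: whitespace-separated words
    if not words:
        raise ValueError("text contains no words")
    return 100 * error_count / len(words)
```

An essay of 200 words with 6 errors therefore scores 3.0 errors per 100 words, which makes essays of different lengths comparable.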

Figure 5. Correlation of errors per 100 words: Human raters and Auto Error Analyzer.

While correlation analysis demonstrates the general agreement between human raters and Auto Error Analyzer in quantifying errors, a more detailed evaluation of the tool’s classification accuracy is necessary. To comprehensively assess the performance of Auto Error Analyzer at the categorical level, I calculated three standard machine learning evaluation metrics: Precision, Recall, and F1-score.

These metrics, when applied to the errors identified by Auto Error Analyzer (i.e., Llama 3.3) versus those identified by human annotators, provide critical insight into the tool’s ability to correctly identify specific error instances (Precision), its comprehensiveness in finding all errors (Recall), and its overall effectiveness as measured by the harmonic mean of these values (F1-score). Precision measures the proportion of correctly identified errors out of all instances the model flagged as errors, reflecting the model’s ability to avoid false positives. Recall measures the proportion of actual errors that were successfully identified by the model, indicating its ability to detect errors comprehensively. F1-score combines precision and recall into a single metric, calculated as 2 × (precision × recall) ÷ (precision + recall), providing a balanced measurement that accounts for both the model’s accuracy in identifying errors and its ability to find all errors present in the text.

The calculation employed the microaverage method, meaning true positives, false positives, and false negatives were aggregated across all error categories as a single combined set. The microaverage approach was chosen due to the multi-label nature of the dataset, where each sentence could have multiple error categories assigned, and where the distribution of error categories varied significantly.
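The microaverage calculation can be illustrated as follows. This is a minimal sketch under stated assumptions: each sentence is represented as a set of error-category labels, a label counts as a true positive only when both the human annotation and the model output contain it for the same sentence, and the category names in the usage example are invented. The article does not describe the exact matching procedure, so this may differ from the author’s implementation.

```python
def micro_precision_recall_f1(gold, predicted):
    """Micro-averaged precision, recall, and F1 for multi-label data.

    gold and predicted are parallel lists; each item is the collection of
    error categories assigned to one sentence.
    """
    if len(gold) != len(predicted):
        raise ValueError("gold and predicted must cover the same sentences")

    tp = fp = fn = 0
    for gold_labels, pred_labels in zip(gold, predicted):
        g, p = set(gold_labels), set(pred_labels)
        tp += len(g & p)   # categories both sources assigned
        fp += len(p - g)   # categories only the model assigned
        fn += len(g - p)   # categories only the human raters assigned

    # Counts are pooled across all categories before the ratios are taken.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


# Invented example: three sentences with hypothetical category labels.
gold = [{"article", "tense"}, {"spelling"}, set()]
predicted = [{"article"}, {"spelling", "preposition"}, set()]
scores = micro_precision_recall_f1(gold, predicted)
```

Because counts are pooled before the ratios are computed, frequent error categories weigh more heavily in the result than rare ones, which is the intended behavior when category frequencies are uneven.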

Using the dataset of 100 essays annotated by both human raters and Auto Error Analyzer, I obtained the following microaverage results: Precision = .96, Recall = .94, and F1-score = .95. These results indicate that Auto Error Analyzer demonstrates a high degree of agreement with human annotators in error detection. The strong Precision suggests that the model makes relatively few false-positive classifications, meaning it rarely marks correct language use as erroneous. The high Recall indicates that Auto Error Analyzer effectively identifies most errors that human raters detect. The balanced F1-score confirms the reliability of the model in automated error classification, further supporting its application in research contexts where detailed error analysis is required.
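As a quick arithmetic check, the reported F1-score is consistent with the reported Precision and Recall. Because the inputs are rounded to two decimals, the result is approximate:

```latex
F_1 = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}
    = \frac{2 \times .96 \times .94}{.96 + .94}
    = \frac{1.8048}{1.90}
    \approx .95
```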

Ethical considerations in Auto Error Analyzer

The development and implementation of Auto Error Analyzer raise important ethical considerations regarding AI-assisted language assessment. Central to my approach is a commitment to transparency and open science. All code used in this tool is publicly available through OSF, GitHub, and IRIS repositories, allowing researchers to inspect, modify, and improve upon the underlying mechanisms. This transparency is crucial for building trust in automated assessment tools and enables the research community to understand precisely how error detection and classification occur. Regarding data privacy, Auto Error Analyzer processes texts on demand without storing user data on the server after analysis is complete. Each analysis session remains separate, ensuring that sensitive learner texts are not retained or used for purposes beyond the immediate analysis requested by the user. While the tool democratizes access to sophisticated error analysis capabilities for researchers and educators worldwide, I emphasize that Auto Error Analyzer is designed to complement, not replace, human judgment. Particularly in high-stakes assessment contexts, results should be verified by qualified human evaluators who can contextualize automated findings within broader educational goals and learner needs. By acknowledging these ethical dimensions, I hope to promote the responsible implementation of AI-assisted language analysis tools that empower rather than diminish human expertise in language teaching and research.

Concluding remarks

This research report, which aims to automate error metrics calculation, bridges theoretical foundations with practical application by detailing the development of a web app and presenting the tool’s validation results. Although the tool is not without flaws, it provides researchers with a means to streamline error analysis. Given that human expertise remains essential in defining error constructs, annotating examples, validating AI outputs, and interpreting assessment results, Auto Error Analyzer functions as a complementary tool, enhancing rather than replacing human judgment. This integration of AI and human expertise can support more informed and effective decision-making in language learning and assessment.

Looking forward, Auto Error Analyzer is envisioned as a valuable springboard for L2 research utilizing error-related metrics. This initial prototype includes only basic functionalities. Expanding it to incorporate additional linguistic indices, along with features such as lexical diversity, lexical sophistication, and cohesion measures (see Crossley, 2020), is feasible. Future versions could also integrate capabilities for automated holistic scoring (Mizumoto & Eguchi, 2023), rubric-based analytic scoring (Yavuz, Çelik, & Yavaş Çelik, 2024), and tailored feedback (Steiss et al., 2024).

Despite its promising capabilities, the tool has several important limitations to consider. The system can identify and categorize a wide range of common errors but may struggle with more nuanced, context-dependent errors that require deeper pragmatic understanding or cultural awareness. For instance, errors related to register appropriateness, genre conventions, or culturally specific expressions may be misidentified or overlooked entirely. The error classification system, though comprehensive, operates within the constraints of predetermined categories that may not capture all the subtleties human evaluators can recognize. Additionally, the current version offers limited customization options: users cannot modify the existing error classification scheme or add new categories to suit specific research needs. The tool may also show bias toward standard varieties of English, potentially misidentifying features of World Englishes or regional dialects as errors. Furthermore, since validation was primarily conducted with texts from Japanese EFL students, performance may vary with texts from writers with different first language (L1) backgrounds or with text genres other than academic writing. It is also worth noting that Auto Error Analyzer leverages Llama 3.3 via Groq’s API, benefiting from both its remarkable speed and affordable pricing. However, potential changes in API costs or model specifications could affect its continued viability. Although Llama is currently openly available from Meta, the possibility of future access restrictions cannot be entirely dismissed.

These possibilities and challenges are matters to be addressed in further development of the tool. Fortunately, this tool and the foundational research behind it (e.g., Kyle, 2016; Kyle, Choe, Eguchi, LaFlair, & Ziegler, 2021; Lu, 2010) uphold the principles of open science and the philosophy of “sharing is caring.” Therefore, regardless of any future changes, the development of tools like Auto Error Analyzer will continue to be possible, and further advancements can be expected.

Supplementary material

The supplementary material for this article can be found at https://osf.io/jyf3r/.

Data availability statement

The experiment in this article earned Open Data and Open Materials badges for transparent practices. The materials are available at https://osf.io/jyf3r/.

Acknowledgments

This project was supported by the Japan Society for the Promotion of Science (JSPS) KAKENHI Grant No. 25K00482. In preparing this manuscript, I used ChatGPT (GPT-4) to enhance the clarity and coherence of the language, ensuring it aligns with the standards expected in scholarly journals. While ChatGPT assisted in refining the language, it did not contribute to the development of any original ideas. This project also benefited—albeit indirectly—from the work of pioneering researchers such as Dr. Xiaofei Lu and Dr. Kristopher Kyle, whose commitment to open science is exemplified by the public availability of their code.