Introduction

The population of individuals within the oldest-old age range (85 years and older) is rapidly growing (Vincent & Velkoff, 2010). However, the lack of available data with a comprehensive assessment of cognitive functions in healthy agers over age 85 limits research in this age cohort. The developers of the NIH Toolbox Cognitive Battery (NIH TB-CB) limited their collection of normative data to those ages 3–85. Technology use in the cognitive testing environment is emerging, so ensuring the validity of using these new neuropsychological testing methods in the aging population is essential, especially since this oldest-old population may be less likely than other age groups to be comfortable with technology usage. Results of this study inform the use of the NIH TB-CB in future research in the oldest-old population.

The NIH TB-CB strives towards brevity, portability, and homogeneity in neurobehavioral assessment research through short tasks performed on an iPad (Gershon et al., 2013). The Cognitive Battery covers a wide range of cognitive domains, including executive functioning, episodic memory, language, processing speed, attention, and working memory (Gershon et al., 2013). The NIH Toolbox has been shown to be valid in diverse samples of varying age, language, race, ethnicity, gender, education, developmental disability, and neurological conditions (Carlozzi, Goodnight, et al., 2017; Flores et al., 2017; Hackett et al., 2018; Heaton et al., 2014; Hessl et al., 2016; Ma et al., 2021; Mungas et al., 2013; Tulsky et al., 2017; Weintraub, Dikmen, et al., 2013; Weintraub et al., 2014).
For example, Mungas et al. (2014) examined younger and older age groups but only up to age 85. While the NIH TB-CB has been used in older adult samples (O’Shea et al., 2018), to our knowledge, no findings have been reported on the effectiveness of the NIH TB-CB as a battery to measure cognitive functions in healthy individuals over age 85.

Factor analysis of the NIH Toolbox cognitive measures with standard neuropsychological tests of the same domains of cognition revealed good construct validity (Mungas et al., 2014), supporting correspondence between a given domain and a test used to measure it. This was indicated by the individual tests showing both strong associations with the hypothesized cognitive domains (i.e., convergent validity) and weak relationships with other domains (i.e., discriminant validity). In this case, Mungas et al. (2014) tested the validity of the NIH TB-CB in a cohort of adults 20 to 85 years of age using confirmatory factor analysis. Although the NIH Toolbox assesses six specific domains (working memory, executive function, episodic memory, processing speed, vocabulary, and reading), they found that a five-factor model best describes the relationship between the NIH TB-CB and standard neuropsychological measures: Vocabulary, Reading, Episodic Memory, Working Memory, and Executive Function/Processing Speed, with the NIH TB-CB tests falling largely within expected domains (Mungas et al., 2014). This factor structure did not vary across younger (ages 20–60) and older adults (ages 60–85), supporting the use of the NIH TB-CB as a measure of cognitive health across the adult age range from 20 to 85. Investigating the factor structure of the NIH TB-CB in individuals older than age 85 provides an important opportunity to evaluate its utility for assessing cognitive functions in the fastest growing age group within the population of healthy older adults.

This study examines the validity of the NIH TB-CB cognitive domains in cognitively healthy older adults over age 85, which, to our knowledge, has yet to be reported. We employed a series of confirmatory factor analyses to investigate the convergent and discriminant validity, as well as the dimensional structure underlying the NIH TB-CB and other validated measures of cognition, in healthy older adults aged 85 years and over. We hypothesized that the factor structure of the NIH Toolbox would be consistent across the lifespan, and thus the 5-factor model, derived from a younger adult sample (Mungas et al., 2014), would have a better model fit than alternative factor models. We also sought to evaluate the influence of demographic characteristics and computer use in this oldest-old age cohort on NIH TB-CB Composite scores.

Method

Participants

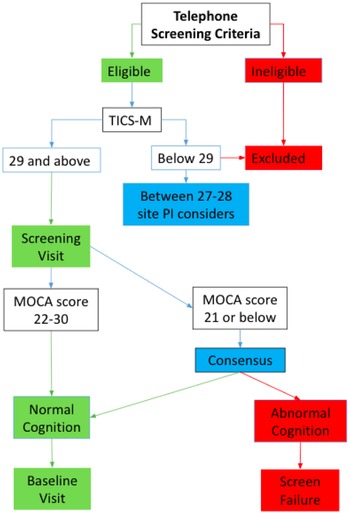

We analyzed data collected from the McKnight Brain Aging Registry, a cohort of community-dwelling, cognitively unimpaired older adults, 85 to 99 years of age. Figure 1 shows the extensive participant screening process. During initial screening over the phone, trained study coordinators administered the Telephone Interview for Cognitive Status-Modified (TICS-M; Cook et al., 2009) and an interview to assess for major exclusion criteria, which included age under 85, severe psychiatric conditions, neurological conditions, and cognitive impairment. Following the telephone screening, eligible participants underwent an in-person screening visit during which they were evaluated by a neurologist, a detailed medical history was obtained to assess health status and eligibility, and the Montreal Cognitive Assessment (MoCA) was administered (Nasreddine et al., 2005). An additional point was added for adjustment of the MoCA score to account for non-white race and/or education equal to or below 12th grade. This adjustment was intended to screen individuals of lower education or non-white backgrounds fairly; it is not based on normative data. The study was conducted in accordance with the Declaration of Helsinki. Approval for the study was received from the Institutional Review Boards at each of the data collection sites, including University of Alabama at Birmingham, University of Florida, University of Miami, and University of Arizona.

Figure 1. Participant screening process. Telephone screening criteria included exclusion for major physical disabilities, dependence in instrumental activities of daily living or basic activities of daily living, uncontrolled medical conditions that would limit life expectancy or interfere with participation in the study, severe psychiatric conditions, neurological conditions (i.e., major vessel stroke, Parkinson’s disease, dementia), active substance abuse or alcohol dependence, less than 6th-grade reading level, vision or hearing deficits that would impede cognitive test administration, MRI contraindications, and inability to follow study protocol and task instructions due to cognitive impairment. The Telephone Interview for Cognitive Status-Modified (TICS-M) was administered over the phone. The screening visit included an additional evaluation comprising an examination by a neurologist, the Geriatric Depression Scale, and a detailed medical history.

Our fully screened sample consisted of 192 community-dwelling individuals aged 85–99. We removed data from 13 participants from the analysis due to missingness related to administrator error, low visual acuity, the participant’s color blindness, or the participant not completing the task. This left 179 participants in our sample. Only 138 participants were given the questionnaire related to computer use since this was adopted after data collection had begun; therefore, analyses with the computer use frequency variable are based on those 138 participants. Data from this group of healthy agers were collected using a standardized protocol across the four McKnight Institutes: University of Alabama at Birmingham, University of Florida, University of Miami, and University of Arizona. We recruited participants through mailings, flyers, physician referrals, and community-based recruitment.

Cognitive measures

Testing was performed by staff trained and certified across the four sites to administer the test battery. Testing was administered across two visits on separate days. We performed quality control on behavioral data through the double data entry tool in REDCap, wherein we entered data twice, and discrepancies were identified and corrected (Harris et al., 2009, 2019). The data were then visually inspected again and assessed for potential outliers and errors.
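The double-entry check described above can be illustrated with a minimal sketch. This is not REDCap's implementation; the function name `find_discrepancies`, the participant IDs, the field names, and the values are all hypothetical and serve only to show the idea of comparing two independent entries field by field.

```python
from typing import Dict, List, Tuple

Record = Dict[str, object]


def find_discrepancies(
    first: Dict[str, Record], second: Dict[str, Record]
) -> List[Tuple[str, str, object, object]]:
    """Return (participant_id, field, first_value, second_value) for each mismatch."""
    mismatches = []
    for pid in sorted(set(first) | set(second)):
        rec_a = first.get(pid, {})
        rec_b = second.get(pid, {})
        # A field missing from one entry is reported as a mismatch against None.
        for field in sorted(set(rec_a) | set(rec_b)):
            a, b = rec_a.get(field), rec_b.get(field)
            if a != b:
                mismatches.append((pid, field, a, b))
    return mismatches


# Hypothetical values entered independently by two staff members.
entry_1 = {
    "P001": {"flanker_raw": 7.1, "dccs_raw": 6.8},
    "P002": {"flanker_raw": 6.4, "dccs_raw": 7.0},
}
entry_2 = {
    "P001": {"flanker_raw": 7.1, "dccs_raw": 6.8},
    "P002": {"flanker_raw": 6.9, "dccs_raw": 7.0},
}
discrepancies = find_discrepancies(entry_1, entry_2)
```

Each flagged tuple would then be resolved against the source document before analysis.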

NIH TB-CB measures

We used scores from the NIH TB-CB (Gershon et al., 2013; Weintraub et al., 2014), including the Dimensional Change Card Sort (DCCS) Test, the Flanker Inhibitory Control and Attention Test, the Picture Sequence Memory Test, the Pattern Comparison Processing Speed Test, the List Sorting Working Memory Test, the Oral Reading Recognition Test, and the Picture Vocabulary Test. The DCCS Test measures executive function by indicating a target characteristic and then instructing participants to quickly select the object that matches the indicated characteristic for that trial (either shape or color). The Flanker Inhibitory Control and Attention Test measures executive function by having participants quickly select the correct direction of an arrow among a set of arrows. The Pattern Comparison Processing Speed Test measures processing speed by having participants quickly decide whether or not two images match. The Picture Sequence Memory Test measures episodic memory by asking participants to place cards in a particular order from memory. The List Sorting Working Memory Test measures working memory by presenting an increasing number of pictures and then instructing participants to order the pictures by size and semantic category from memory. The Oral Reading Recognition Test measures language by having participants read aloud words shown on the screen. The Picture Vocabulary Test measures language by presenting a word verbally and instructing participants to select one of four images that best describes the word. Table 1 includes NIH TB-CB measures and their associated domains. Only raw or calculated scores were used for the analysis. We also performed follow-up analysis with demographically corrected scores for the NIH TB-CB scores.

Table 1. NIH toolbox measures and associated cognitive domains

Standard neuropsychological measures

We used standard neuropsychological tests with strong psychometric properties within the same domains as those used in the NIH TB-CB. Memory functioning was assessed through the California Verbal Learning Test II (Delis et al., 1987), a word list learning task, and the Benson Figure Test (Beekly et al., 2007), a visual memory task. Executive functioning was assessed through the Trail Making Test B (Gaudino et al., 1995), a visual attention and switching task; the Wechsler Adult Intelligence Scale-Fourth Edition (WAIS-IV) Matrix Reasoning subtest (Benson et al., 2010), which involves recognizing and integrating patterns; and the Stroop Color Word-Inhibition test (MacLeod, 1991, 1992), an inhibition task. Language/Vocabulary was assessed through the WAIS-IV Similarities subtest (Benson et al., 2010), which involves explaining abstract relationships between two words. Processing speed was assessed through the WAIS-IV Coding and Symbol Search subtests (Benson et al., 2010), which both involve speeded visual processing. Lastly, working memory was assessed through the WAIS-IV Letter-Number Sequencing subtest (Benson et al., 2010), a task involving sequencing a set of letters and numbers, and Digit Span (Beekly et al., 2007), a number recall task including recall backward. Table 2 includes the standard neuropsychological measures and their associated domains. Only raw or calculated scores were used. We also performed follow-up analysis with demographically corrected scores for the NIH TB-CB scores.

Table 2. Standard neuropsychological measures and associated cognitive domains

Confirmatory factor analysis

Based on the methods of previous work from Mungas et al. (2014), we performed a series of confirmatory factor analyses, which allowed us to assess the degree to which the original conceptual model of the NIH TB-CB aligns with the factor structure of the NIH TB-CB and standard neuropsychological measures of the same cognitive domains within the oldest-old. We compared models matching this conceptual model, as well as alternative models, detailed in Table 3.

Table 3. Alternative models

Following Mungas et al. (2014), we included the following tests of model fit: overall Chi-square test of model fit as well as the Tucker Lewis Index (Tucker & Lewis, 1973), Comparative Fit Index (Bentler & Bonett, 1980; Bentler, 1990), the Root Mean Square Error of Approximation (Browne & Cudeck, 1992), and Standardized Root Mean Square Residual (Bentler, 1989). We evaluated modification indices to see if there could be any significant improvement in the model by changing model parameters. We compared models using the Akaike Information Criterion (AIC). This approach can further establish the reproducibility of previous findings (Mungas et al., 2014) while extending them to our oldest-old cohort. We used R and the lavaan package to perform confirmatory factor analysis (Rosseel, 2012).
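As an illustration of how three of these indices relate to the chi-square statistics of a hypothesized model and a baseline (null) model, the sketch below uses their common textbook definitions. It is written in Python for illustration only and is not the lavaan code used in the study; all chi-square and degrees-of-freedom values are hypothetical, and only the sample size of 179 comes from the text. Software packages differ on whether RMSEA uses N or N − 1 in the denominator; N − 1 is assumed here.

```python
import math


def cfi(chi2_m: float, df_m: float, chi2_b: float, df_b: float) -> float:
    """Comparative Fit Index from model (m) and baseline (b) chi-square values."""
    d_m = max(chi2_m - df_m, 0.0)
    d_b = max(chi2_b - df_b, 0.0)
    denom = max(d_m, d_b)
    return 1.0 if denom == 0 else 1.0 - d_m / denom


def tli(chi2_m: float, df_m: float, chi2_b: float, df_b: float) -> float:
    """Tucker Lewis Index: compares chi-square/df ratios of baseline and model."""
    ratio_b = chi2_b / df_b
    ratio_m = chi2_m / df_m
    return (ratio_b - ratio_m) / (ratio_b - 1.0)


def rmsea(chi2_m: float, df_m: float, n: int) -> float:
    """Root Mean Square Error of Approximation (N - 1 convention assumed)."""
    return math.sqrt(max(chi2_m - df_m, 0.0) / (df_m * (n - 1)))


# Hypothetical chi-square values; n = 179 matches the analyzed sample size.
example_cfi = cfi(150.0, 100, 1200.0, 120)
example_tli = tli(150.0, 100, 1200.0, 120)
example_rmsea = rmsea(150.0, 100, 179)
```

Higher CFI and TLI values and lower RMSEA values indicate better fit under these definitions.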

Evaluation of validity

Convergent validity was assessed by examining factor loadings of NIH TB-CB measures on their domain factor and evaluating the correlation between an average of the standard neuropsychological measures of a domain and the NIH TB-CB measure of the same domain. Discriminant validity was assessed by examining modification indices for cross-loadings of NIH TB-CB measures, identifying high inter-correlation of factors, and evaluating the correlation between an average of the standard neuropsychological measures of a domain and the NIH TB-CB measure of a different domain. These methods of assessing validity of cognitive measures have been applied previously (Andresen, 2000; Carlozzi, Tulsky, et al., 2017; Heaton et al., 2014; Tulsky et al., 2017; Weintraub, Bauer, et al., 2013).
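The correlation-based part of this procedure can be sketched as follows. The text does not state how the domain average was formed, so standardizing each test to z-scores before averaging is an assumption made here; the function names and all scores are hypothetical and are not study data.

```python
import math
from statistics import mean, pstdev
from typing import List, Sequence


def zscores(values: Sequence[float]) -> List[float]:
    """Standardize one test's scores (assumed step, so tests share a scale)."""
    m, s = mean(values), pstdev(values)
    return [(v - m) / s for v in values]


def domain_average(tests: Sequence[Sequence[float]]) -> List[float]:
    """Per-participant mean of the z-scored standard tests in one domain."""
    standardized = [zscores(t) for t in tests]
    return [mean(col) for col in zip(*standardized)]


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation between two equally long score lists."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


# Hypothetical scores for five participants.
coding = [40, 52, 47, 60, 55]
symbol_search = [20, 27, 25, 31, 28]
tb_pattern_comparison = [30, 38, 35, 44, 40]  # same domain (speed)
tb_picture_vocabulary = [5, 3, 6, 4, 7]       # different domain

speed_average = domain_average([coding, symbol_search])
r_within = pearson_r(speed_average, tb_pattern_comparison)  # convergent check
r_other = pearson_r(speed_average, tb_picture_vocabulary)   # discriminant check
```

Under this scheme, a within-domain correlation that is clearly larger than the outside-domain correlations is consistent with both convergent and discriminant validity for that measure.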

Multiple regression with NIH TB-CB composite scores

Composite scores are automatically generated through the NIH TB-CB. Prior work that generated normative data indicated that there is a decline of fluid composite scores with age and a plateau of crystallized composite scores after middle age (Casaletto et al., 2015). The composite scores from our sample fit with this trend (Table 4), with crystallized scores relatively similar to those of other older adults and fluid scores lower than those of younger adult age groups (Casaletto et al., 2015). Three multiple linear regressions predicted the three NIH TB-CB Uncorrected Composite Standard Scores: Total, Crystallized, and Fluid. Predictors included years of education, age, gender (1 = Male, 2 = Female), race (1 = White, 2 = Black/African American, 3 = Asian), and computer use frequency (0 = No computer experience/Not used a computer in last 3 months, 1 = Less than 1 hr a week, 2 = 1 hr but less than 5 hr a week, 3 = 5 hr but less than 10 hr a week, 4 = 10 hr but less than 15 hr a week, 5 = At least 15 hr a week). Table 4 includes descriptive statistics for these variables.

Table 4. Participant characteristics

* Note that demographic corrections are not available for individuals over age 85; therefore, corrections for all participants, including those over 85 years of age, were based on normative data for individuals aged 85 years old.

Results

Model fit

Based on prior studies, we hypothesized that the 5-factor model of the NIH TB-CB and standard neuropsychological measures would have a better fit than alternative factor models. We found that the 5-factor (Language, Memory, Working Memory, Executive, and Speed) and 6-factor (Vocabulary, Reading, Memory, Working Memory, Executive, and Speed) models have similar fit indices that indicate good fit (Table 5). The 6-factor model had a slightly smaller AIC (5-factor AIC = 16608.769 and 6-factor AIC = 16606.818). We, therefore, chose the 6-factor model as the best fit. The model aligns with the original six domains of the NIH TB-CB (working memory, executive function, episodic memory, processing speed, language, and reading) (Gershon et al., 2013).
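To make the size of the AIC comparison concrete, the short sketch below computes the difference between the two reported AIC values. The Akaike weights it also computes are an added illustration of one common way to express relative support between models; they are not a statistic reported in this study.

```python
import math

# AIC values as reported in the text for the two competing models.
aic = {"5-factor": 16608.769, "6-factor": 16606.818}

# The preferred model is the one with the smallest AIC.
best = min(aic, key=aic.get)

# Difference of each model's AIC from the best model's AIC.
delta = {name: value - aic[best] for name, value in aic.items()}

# Akaike weights (illustrative addition, not reported in the study):
# relative likelihood of each model, normalized to sum to 1.
relative = {name: math.exp(-0.5 * d) for name, d in delta.items()}
total = sum(relative.values())
weights = {name: r / total for name, r in relative.items()}
```

Here `delta["5-factor"]` is about 1.95 AIC units, which matches the description of the 6-factor model's AIC as only slightly smaller.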

Table 5. Model fit indices

Overall chi-squared measures how well a model compares to observed data. Comparative Fit Index (CFI) examines the discrepancy between data and the hypothesized model. Tucker Lewis Index (TLI) analyzes the discrepancy between the χ² of the hypothesized model and the null model. The Root Mean Square Error of Approximation (RMSEA) analyzes discrepancy between the hypothesized model (with optimal parameter estimates) and population covariance matrix. The Standardized Root Mean Square Residual (SRMR) is the root of the discrepancy between the sample covariance matrix and model covariance matrix. The Akaike Information Criterion (AIC) is a value used to evaluate how well a model fits the data; lower values indicate a better fit.

The 5-factor model found in Mungas et al. (2014) (Vocabulary, Reading, Memory, Working Memory, Executive/Speed) was different from the 5-factor model our study found to be a good fit in the oldest-old sample. Mungas et al. (2014) found that the model that combined executive and speed into one factor, and separated vocabulary and reading into two separate factors, was a better fit than a 5-factor model that separated executive and speed factors and instead combined vocabulary and reading into a language factor (Table 5). We did not find an inter-correlation between executive and speed factors > .9 as was found in prior studies (Mungas et al., 2014; Tulsky et al., 2017).

Convergent validity

Standardized coefficients for the 6-factor model (Table 6) showed NIH TB-CB measures loaded strongly on their respective factors, supporting convergent validity. Picture Vocabulary loaded very highly (.82) on the Vocabulary factor; List Sorting loaded highly (.634) on the Working Memory factor; both DCCS and Flanker loaded strongly (.62 and .585) on the Executive Functioning factor; Picture Sequencing loaded strongly (.533) on the Memory factor; and Pattern Comparison had a moderate loading (.442) on the Speed factor. When we analyzed the correlation between an average of the standard neuropsychological measures of a domain and the NIH TB-CB measure of the domain, we found Picture Sequence, List Sorting, Pattern Comparison, and Picture Vocabulary all had adequate correlations for convergent validity; however, Flanker and DCCS measures had weak correlations with the executive functioning standard neuropsychological measures, and therefore convergent validity was not supported based on this metric (Andresen, 2000). Since we did not have a standard neuropsychological measure available to load with the NIH TB-CB Oral Reading Task, convergent validity for the Reading domain was not assessed.

Table 6. Standardized coefficients for the 6-factor model

Discriminant validity

Only one weak modification index indicated a split loading of the NIH TB-CB Flanker measure on the Vocabulary factor. The lack of strong cross-loadings between factors indicated discriminant validity of our model. Additionally, the inter-correlations among the six factors (inter-correlation range of r = .157–.811; Table 7) were within acceptable limits as used in a prior NIH TB validity study (Tulsky et al., 2017). The consistently highest inter-correlations were between executive functioning and other domains (inter-correlations range of r = .428–.811; Table 7). While vocabulary and reading were correlated (r = .641), these two crystallized intelligence factors were also related to fluid intelligence factors; therefore, there was no clear crystallized/fluid separation.

Table 7. Inter-correlation of factors for the 6-factor model

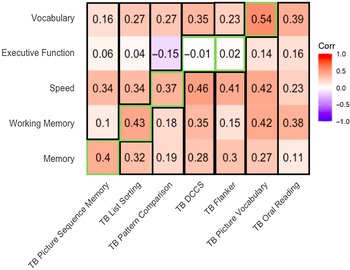

The correlation between an average of the standard neuropsychological measures of a domain and the NIH TB-CB of a different domain indicated Picture Sequence, List Sorting, Pattern Comparison, and Picture Vocabulary all had correlations to other domains that were smaller than the correlation to their domain (Figure 2). However, Flanker and DCCS measures had higher correlations to domains outside Executive Functioning; therefore, this metric indicates poor discriminant validity for these measures.

Figure 2. Correlations between NIH TB-CB measures and standard neuropsychological domain average. TB = NIH TB-CB measures; green outline = within domain correlation, consistent with convergent validity; black outline = outside domain correlation, consistent with discriminant validity.

Together, convergent and discriminant validity evidence indicates sufficient construct validity of the NIH TB-CB within an 85+ cohort, with relatively weaker construct validity for executive functioning measures in the NIH TB-CB.

Predictors of NIH TB-CB composite scores

Race (β = −3.5, p = .009), data collection site (β = 1.98, p = .017), computer use frequency (β = 1.19, p = .007), and years of education (β = .64, p = .021) were significant predictors of the NIH TB-CB Total composite score, and the overall model’s adjusted R² was .1541 (p < .001). There was a significant R² change of .164 (p < .001) between the first block, which contained the covariate site, and the second block, which added race, gender, age, years of education, and computer use frequency.

Only computer use frequency (β = 1.12, p = .02) was a significant predictor of NIH TB-CB Fluid composite score, and the overall model’s adjusted R² was .03 (p = .07). There was a significant R² change of .0717 (p = .042) between the first block, which contained the covariate site, and the second block, which added race, gender, age, years of education, and computer use frequency.

Race (β = −4.16, p = .001) and years of education (β = 1.1, p < .001) were significant predictors of NIH TB-CB Crystallized composite score, and the overall model’s adjusted R² was .21 (p < .001). There was a significant R² change of .17 (p < .001) between the first block, which contained the covariate site, and the second block, which added race, gender, age, years of education, and computer use frequency.
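For readers less familiar with blockwise (hierarchical) regression, the sketch below shows the standard formulas that link R², adjusted R², and the F statistic for an R² change between two nested blocks. The input values are hypothetical and were chosen only for illustration; the sample size of 138 is the number of participants with computer use data, while the predictor counts per block and the R² values are assumptions and do not reproduce the study's models.

```python
def adjusted_r2(r2: float, n: int, k: int) -> float:
    """Adjusted R-squared for a model with k predictors fitted to n cases."""
    return 1.0 - (1.0 - r2) * (n - 1) / (n - k - 1)


def f_change(r2_reduced: float, r2_full: float, n: int,
             k_reduced: int, k_full: int) -> float:
    """F statistic for the R-squared change when predictors are added in a block."""
    numerator = (r2_full - r2_reduced) / (k_full - k_reduced)
    denominator = (1.0 - r2_full) / (n - k_full - 1)
    return numerator / denominator


# Hypothetical inputs: block 1 = site only (1 predictor),
# block 2 = site plus five further predictors (6 predictors in total).
n_cases = 138
r2_block1, r2_block2 = 0.03, 0.20

example_adjusted = adjusted_r2(r2_block2, n_cases, k=6)
example_f = f_change(r2_block1, r2_block2, n_cases, k_reduced=1, k_full=6)
```

The F statistic would be evaluated against an F distribution with (k_full − k_reduced) and (n − k_full − 1) degrees of freedom.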

Follow-up analysis with demographically corrected scores for the NIH TB-CB

We repeated the analysis with demographically corrected scores for the NIH TB-CB and found no differences in the interpretation of the findings, including no changes in the determination of the best-fitting model, validity, or predictors of NIH TB-CB Composite Scores (see Supplemental Tables 1 and 2 for model fit indices and model standardized coefficients).

Discussion

In our cohort of cognitively unimpaired older adults over 85 years of age, the NIH TB-CB tests and standard neuropsychological measures had convergent and discriminant validity, consistent with the six domains of cognition initially intended to be evaluated by the NIH TB-CB. These findings suggest the NIH TB-CB has construct validity in oldest-old adults, ages 85–99. The 5-factor model (model 5a), which combines reading and vocabulary into a language factor, also displayed a good model fit. There was relatively less evidence to support the combination of executive function and speed factors, as shown in the 5-factor model by Mungas et al. (2014). However, there were strong relationships between executive function and all other factors. We also found that computer use frequency strongly predicted the total and fluid NIH TB-CB composite scores, suggesting that either: (1) greater experience with computers impacts performance on this tablet-based assessment; or (2) having lower cognitive capacity (reflected in the NIH TB-CB scores) leads to less computer use.

Cognitive dedifferentiation and the executive decline hypothesis

Cognitive dedifferentiation describes the tendency for separable cognitive abilities (such as language and executive function) to become less separable with age; dedifferentiation may reflect underlying cognitive impairment (Baltes et al., 1980; Batterham et al., 2011; Hülür et al., 2015; Wallert et al., 2021; Wilson et al., 2012). Our findings of more widespread domain inter-correlations with executive functioning could reflect age-related cognitive dedifferentiation. We only included healthy individuals in our sample, so this cognitive dedifferentiation may be a result of healthy aging.

A potential explanation for the strong relationship between executive function and other cognitive domains is that executive functions may play a greater role in supporting nonexecutive task performance in older people, as outlined in the executive decline hypothesis (Crawford et al., 2000; Ferrer-Caja et al., 2002; Salthouse et al., 2003). Prior factor analysis research has shown similar relationships between executive and nonexecutive tasks (Lamar et al., 2002). This reflects a broader issue in classifying tests as measuring only a single domain. The NIH TB was intentionally developed so that each cognitive domain would be linked to one or two tasks from the toolbox. The domains are not pure, however, and the tests used to assess each are likely to be affected by performance limitations in other domains.

We found that the best-fitting model was the 6-factor model, rather than the 5-factor model (model 5b) that Mungas et al. (2014) found to best fit data for a younger sample. The difference in best-fit model could be due to increased associations of executive function with all other domains in the oldest-old. Both the hypothesis of greater cognitive dedifferentiation with age and the executive decline hypothesis would predict increased association of executive function with other domains, as was observed. Thus, our result is likely to represent a more holistic effect than simply reflecting a tight coupling between executive functioning and speed domains in this population.

Role of computer use frequency in cognitive performance

Younger age, higher education, non-Hispanic ethnicity, physical health, and mental health have been shown to be predictors of greater computer use (Werner et al., 2011). Additionally, perceptual speed moderates the relationship between age and technology ownership (Kamin & Lang, 2016). Previous work has indicated a relationship between cognitive performance and the level of computer experience (Fazeli et al., 2013; Wu et al., 2019). Since computer use frequency was a significant predictor of total and fluid composite scores, technological familiarity may play a crucial role in performance on NIH TB-CB measures. Participants who were relatively less familiar with technology may have also experienced increased demand on executive functioning as they had to learn technological skills while also performing a cognitive task. Alternatively, since adept use of computers requires cognitive abilities such as executive functioning and processing speed, participants with lower cognitive abilities may tend to avoid engagement with computers in their daily lives due to the cognitive demands of computer use.

Additionally, there are key differences between paper-and-pencil tasks and tablet-based tasks that could impact performance, such as less ability to self-correct, less flexibility for the administrator to pace the task appropriately for the participant, and less engagement between the administrator and the participant (Aşkar et al., 2012). Participants’ attitudes towards computers could have resulted in a lower frequency of computer use and, therefore, a negative impact on their cognitive scores (Fazeli et al., 2013). Future work should investigate participants’ disposition towards computers and their current computer use. This could impact the usability of the NIH TB-CB in older samples since older adults are less likely to have familiarity with technology than younger cohorts (Victorson et al., 2013; Werner et al., 2011). Researchers may need to assess a participant’s technology use to determine the appropriateness of using the NIH TB-CB. Alternatively, composite scores could account for current and past computer use in the calculation of standardized scores (Lee Meeuw Kjoe et al., 2021).

Limitations

This study has limitations. We did not have a standard neuropsychological measure similar to the Oral Reading test available in the dataset, so convergent validity for that factor could not be fully tested in our study. Our sample is also mostly white and highly educated, which limits the generalizability of this work. We also acknowledge that in our confirmatory factor analysis, we could not account for variability that may have occurred across data collection sites. However, substantial efforts were made to homogenize data collection across sites and we included site as a covariate in our regression models (Section “Predictors of NIH TB-CB Composite Scores”). Future work could further describe the oldest-old cohort through comparisons of this sample to other age cohorts who also completed the iPad version of the NIH TB-CB.

Conclusions

The NIH TB-CB was created to solve issues of inconsistency and difficulty of administration of neuropsychological testing in research, focusing on those ages 3 to 85. Our findings suggest that this test battery could be valuable for the assessment of cognitive health in individuals over 85. Having a common metric on an easy-to-use iPad tablet could enable research studies to include larger and more representative samples of older adults, and researchers could easily compare these scores to other studies which have adopted the NIH TB-CB. The NIH TB-CB could also be helpful since it can provide precise timing metrics along with cognitive accuracy scores for use in aging studies. This work has confirmed the construct validity and the feasibility of the NIH TB-CB in an 85+ sample, which will provide a basis for the usability of the battery in future older adult research. However, there may be limitations in the NIH TB-CB’s ability to validly assess individuals with low computer use and validly measure executive functioning, possibly due to age-related changes in executive functioning’s relationship with other cognitive domains. This work provides a pathway towards broadening the age span of the NIH TB-CB to 99 years of age which will allow longitudinal and cohort studies to compare across almost the entire human lifespan (3–99).

Supplementary material

The supplementary material for this article can be found at https://doi.org/10.1017/S1355617722000443

Funding statement

The authors would like to acknowledge the bulk of the work was supported by the McKnight Brain Research Foundation. Additional funding was received from grants from the National Institutes of Health (R01 MD012467, R01 NS029993, R01 NS040807, U01EY025858, U24 NS107267, U19AG065169, AG072980, AG019610, AG067200), the National Center for Advancing Translational Sciences (UL1 TR002736, KL2 TR002737), the Florida Department of Health, and the state of Arizona and Arizona Department of Health Services. Sara Nolin was funded through the NIH/NINDS T32NS061788-12 07/2008.

Conflicts of interest

None.