Data is everywhere, but who can harvest it?Footnote 1

A year or so before the first known case of the interspecies mad cow disease or bovine spongiform encephalopathy (BSE) was discovered in British cattle in April 1985, I witnessed cattle herded into a chute known as a ‘cattle crush’.Footnote 2 This was in gum-tree-logging country of East Gippsland, Australia (land repurposed from indigenous nations of Bidewell, Yuin, Gunnaikurnai and the Monero or Ngarigo people).Footnote 3 As a child, I watched cattle with floppy ear tags and flexing muscles being led up a narrow chute. Their heads were secured by a neck yoke. Pneumatic constraints aligned the animal bodies to restrict their movements. Each animal was inspected by hand, assessed for a unique ear-tag code, and positioned for inoculations. I recall the laborious nature of these tasks – for animals and humans – but did not comprehend how the architecture, leading into iron-slatted trucks, bound cattle for slaughter. The ‘crush’ has not only informed a supply chain of market produce, but is an infrastructural component of recording data, and, as argued here, contributes to developing AI systems.Footnote 4

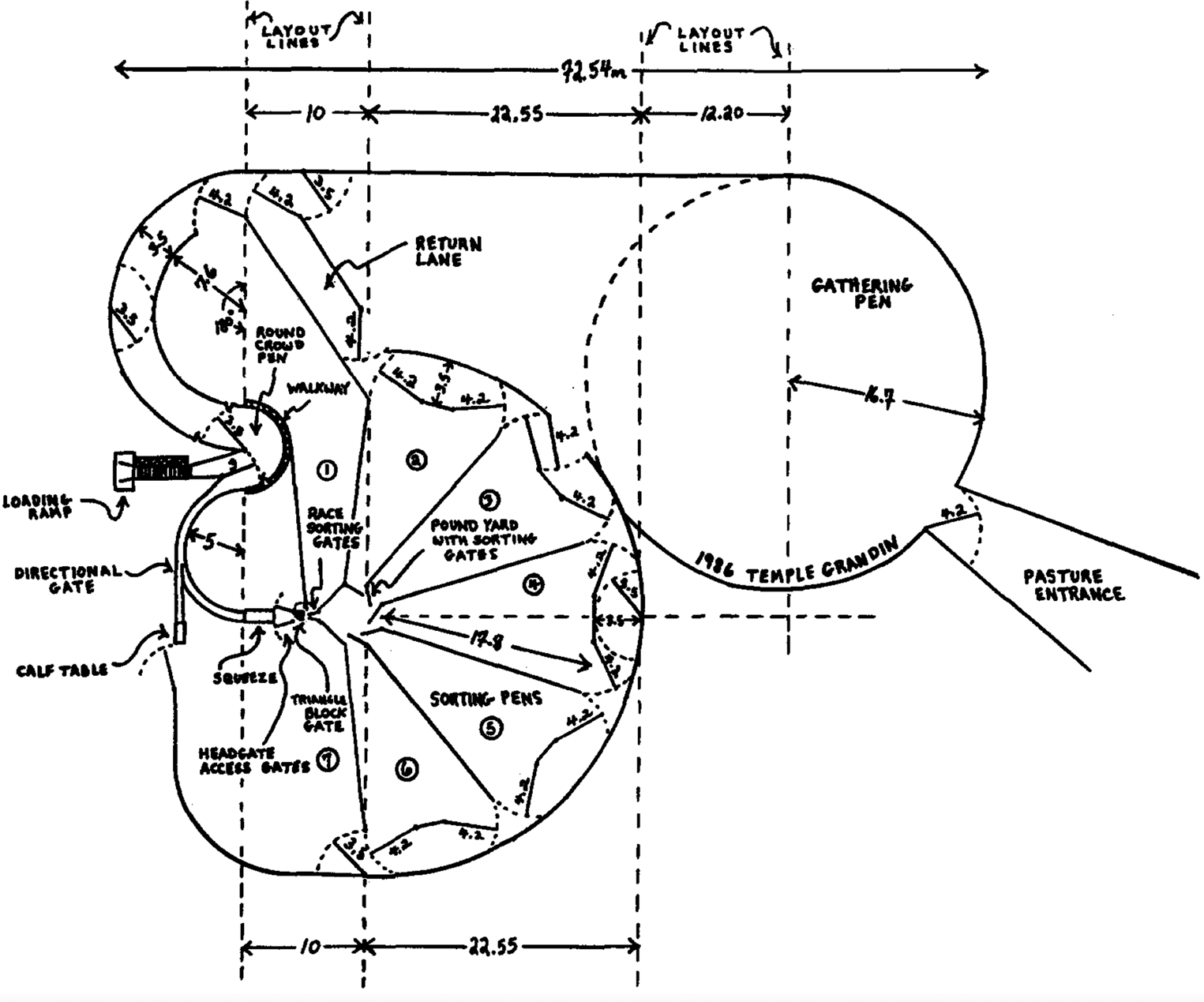

Equivalents to the cattle crush, such as the French shoeing trevis (a loop for hoofing animals), have been central to animal husbandry and herd management across multiple cultures. From the 1980s, the ‘crush’ was incrementally modified to incorporate computerized processes for biosecurity, vaccinations, electronic tagging and digital meat assessment.Footnote 5 In the United States, Temple Grandin led a majority of industrial designs for cattle crushes and animal-handling environments during this era. Aiming to decrease stress on animals from intrusive and unnecessary manual handling, she designed ‘crushes’ for over one-third of cattle and hogs processed across the country.Footnote 6 In the Netherlands in 1983 experiments with visual imaging analysis (VIA) utilized digital video as a grading device for meat quality.Footnote 7 The objective was to assist farmers to better visualize and calculate yield on animals for commercial purposes. The crush abetted uses of electronic sensors, digital logging and automated algorithms to optimize architectural and informational corridors leading cattle to slaughter. Experimental data captured from live animals was analysed across image generation, digital processing and graphical interpretation.Footnote 8 In 1991 Robin D. Tillett at the Robotics and Control Group of the Silsoe Research Institute provided the first overview of agricultural innovations and challenges in the use of visual computer analysis.Footnote 9 Infrastructure built around the legacy of the ‘crush’ for animal identification and the datafication of farm produce in turn contributes to other AI systems. 
The ‘crush’ is not only a pivotal component of historical, architectural and informational innovations (as seen in Figure 1) in a competitive agri-food supply chain, but is also characterized by ‘third-party’ artificial-intelligence (AI) products and data companies fuelling different interests.Footnote 10 This includes undertakings to improve monitoring of vehicles and people in airports or at train stations. As this paper argues, key technologies and data shaped in the agricultural sector may transfer across domains, species, networks and institutions.

Figure 1. A layout for corralling livestock in Australia and South America, including a squeeze or ‘cattle crush’ as part of the electronic sorting and computerized systems. Reprinted from Temple Grandin, ‘The design and construction of facilities for handling cattle’, Livestock Production Science (1997) 49(2), pp. 103–19, 112, with permission from Elsevier.

Today in Australia, off-the-shelf Microsoft Kinect cameras (originally developed by PrimeSense for use in gaming consoles) are deployed in the crush to build a three-dimensional data model of individual cattle. Using open-source software development kits (SDKs) and computer vision tools, farmers visualize animal bodies aligned within the architecture of the crush. In a form of infrared projection mapping, a pattern of light spots is projected onto any object – here, a cow's body – so that a computer vision unit can detect the illuminated region. Any shift in the patterns received, relative to a static reference capture, helps display the shapes in real time and render them as a contoured three-dimensional image. By reconstructing light as a three-dimensional data point cloud, a visual map of the interior body volume can be configured.Footnote 11 This digitized process is similar to other ‘under-the-skin’ techniques, like ultrasonography, neuroimaging and computed tomography. But this one is designed specifically for low-cost adoption, digital storage and fast analytic capacity, motivated by agricultural profit margins.
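
The geometry behind this kind of depth capture can be illustrated with a minimal sketch. It assumes the simplified textbook triangulation relation (depth equals focal length times baseline divided by the observed dot shift) and a pinhole camera model; it is not the calibrated model used inside any particular sensor or SDK. The function names and the numerical values for focal length, baseline and image centre are hypothetical and chosen only for illustration.

```python
import numpy as np


def disparity_to_depth(disparity_px, focal_px, baseline_m):
    """Triangulate depth in metres from the pixel shift of projected dots.

    Simplified relation z = f * b / d; pixels with no measurable shift
    are marked as missing (NaN).
    """
    disparity_px = np.asarray(disparity_px, dtype=float)
    depth = np.full(disparity_px.shape, np.nan)
    valid = disparity_px > 0
    depth[valid] = focal_px * baseline_m / disparity_px[valid]
    return depth


def depth_to_point_cloud(depth_m, fx, fy, cx, cy):
    """Back-project an H x W depth image into an (N, 3) point cloud.

    Uses the pinhole model: x = (u - cx) * z / fx, y = (v - cy) * z / fy.
    Pixels with missing depth are dropped.
    """
    h, w = depth_m.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    points = np.stack([x, y, depth_m], axis=-1).reshape(-1, 3)
    return points[np.isfinite(points[:, 2])]


# Hypothetical 2 x 2 disparity map; one pixel has no detected dot shift.
disparity = np.array([[10.0, 0.0], [20.0, 5.0]])
depth = disparity_to_depth(disparity, focal_px=580.0, baseline_m=0.075)
cloud = depth_to_point_cloud(depth, fx=580.0, fy=580.0, cx=0.5, cy=0.5)
```

Each row of `cloud` is one surface point in camera coordinates. A real capture would hold hundreds of thousands of such points per animal, which is what makes the output amenable to the statistical treatment discussed in this paper.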

Such imaging techniques afford ‘a digitized dissection’, displaying the animal in statistical curve signatures used to quantify meat products with machine learning. Algorithms convert the curves into computational screen segments classed as volumetric image proportions. This approach helps to calculate data on precise meat and fat proportions that can be correlated against real-time estimates of export market prices. Furthermore, these statistics can train autonomous robotic systems to slice each carcass into portions that maximize the yield.Footnote 12 Such data is also, for the first time, helping to facilitate sales by digital currency. In 2023, FarmGate, an Australian rural digital payments platform, allowed accredited vendors to self-assess livestock for sale without a human agent. The platform allows buyers to trace progeny and yield rates based on visualized marble scores that connect and convert easily ‘into new, distributed software platforms and flicking between trading systems’.Footnote 13

As modes of visualization, classification and data analysis, these techniques expand scientific traditions of what Knorr-Cetina and Amann call ‘image arithmetic’ to derive optimum solutions for any visual phenomenon: in this case ‘exploiting calculable properties of visual marks … to derive inferences from image features’.Footnote 14 Yet such data and digital infrastructure do not remain with animals. Thanks to machine learning, digital networks and the data transformations they afford, information to represent objects, events, animals and individuals is distributed. In many cases the origins and owners of this data do not have control over how this is shared, sold on, transformed and duplicated for other purposes.

Historians have done a great deal to show that over time the management of animals and their associated food and agricultural products has relied on diverse techniques of industrialization, identification and product traceability. These have ranged across the trade in animals marked by tags, administrative documentation, certification and branding practices in diverse societies and extend to environmental management techniques that monitor wild species.Footnote 15 Entwined with histories of domestication, anatomical dissection, technical instrumentation, material containment, environmental disruption and empire building are vast genealogies of data. The global development of industrial agriculture enabled the birth of what some agriculturalists called ‘a new information stage’.Footnote 16 By leveraging forms of trade with digital interfacing, this agricultural infrastructure to manage animals has also enabled the constitution of AI systems.

As yet, we do not have a comprehensive history of AI and computer vision systems built in cattle agriculture. This paper offers a preliminary outline of some key developments, starting with electronic identification systems and imaging experiments in the 1970s and 1980s. This is to build an understanding of the intensified computational analysis of animals and the cross-domain repurposing of AI and data surveillance.Footnote 17 To be specific, my empirical focus on computer vision techniques in the ‘crush’ reveals how consumer-grade video devices can be customized with open-source software amenable to statistical analysis and machine learning. This relies upon animals freely available for data extraction and bodily manipulation.Footnote 18 In the use of machine learning to identify and grade living cattle, the paper further exposes how this innovation builds on training from a 1988 anthropometric military data set. Outlining the series of scientific and institutional connections that utilize this menagerie of data exposes cross-domain purposes allowing all bodies to be sorted, categorized and transformed. The objective is to better understand how techniques of AI are entangled with multiple uses. Doing so requires this cross-species approach, to reveal what Peter Linebaugh described ‘as the many-sided oppositions of living labour brewing within and among modes of technical AI’.Footnote 19

Systems of dual use

It is understandable that the possibility of capturing precise information with computer vision correlated to market prices in real time has seduced the imagination of farmers and data scientists alike.Footnote 20 An emerging ‘precision agriculture’ has recently been touted as a way to tackle climate change and population growth, and to assist farmers to optimize decision making across the supply chain.Footnote 21 For example, in 2016 the national organization for meat and livestock management, Meat and Livestock Australia (MLA), publicly advocated the use of computer vision tools in agricultural management. ‘The technology is simple and robust’, they claimed, following trials conducted by the University of Technology Sydney Centre for Autonomous Systems. Working with Angus cattle on farms and saleyards around Armidale and Grafton in New South Wales, MLA promoted cameras being part of everyday farm management practices: they are ‘easy to operate and source; the sophisticated computer technology does the rest’.Footnote 22 As historians are deeply aware, different elements in supply chains have been modularized, adapted, linked and repurposed across different eras and geographical spaces, and in different networks.

As scholars Simon Coghlan and Christine Parker have argued, at least since Ruth Harrison's Animal Machines (1964), the radical industrialization of farming has produced technical, high-density and mostly automated ways to turn non-humans into quantified objects.Footnote 23 Considering a seven-hundred-year history of domesticating canine breeds, Edmund Russell postulated that ‘animals are technology’ and that industrial and scientific interventions into animal lives have co-evolved with human beings.Footnote 24 Ann Norton Greene explains this co-evolution in her history of horses being put to work and functioning as hybridized military instruments during the American Civil War.Footnote 25 She and other scholars have used the concept of enviro-tech as a frame of interpretation that positions animals as integral to the creation of modern technology and calls for a more entwined or distributed view of the intersections between nature, agriculture, humans, animals and technology than historians have traditionally sought to attend to.Footnote 26 By examining both how animals can be technologies, and the uses of technology upon animals, we may extend Donna Haraway's insistence that animals bring specific solidities into the apparatus of technological production. Animals can thus be viewed as sites of technical amalgamation in AI systems, and as hybrid data components that may shape other uses.Footnote 27

Taking enviro-tech studies as a locus of interpretation, the crush becomes a pivot point in this paper to draw together computerized designs and ancillary data from animal identification into the frame of AI and the modularized componentry it requires to operate. On the one hand, this emphasizes material technologies that enable techniques of machine learning to be brought onto the farm. It also complements Etienne Benson's account of cattle guards in the United States, with the crush as ‘a constructed space of encounter where bodies of machines, animals, and humans weave complex paths around each other’.Footnote 28 This includes infrastructure to visually datify and anatomically dissect hundreds, if not thousands, of distinct economic products from animal bodies.Footnote 29 On the other hand, tracing different empirical and scientific experiments conducted across global research contexts highlights potential problems that arise when AI techniques are pioneered across domains and for use in commercially significant and globalized data contexts. It is perhaps due to the commercial proliferation and duplication of AI and machine learning models that repurposed training datasets and common algorithmic decision-making modules allow ‘harms that could have been relatively limited or circumscribed’ to be scaled up very quickly from techniques on animals to other uses.Footnote 30

As Herbert Simon, a conceptual pioneer in the AI field, stated, ‘cattle are artifacts of our ingenuity … adapted to human goals and purposes’.Footnote 31 Yet if cattle and ‘the crush’ have helped optimize computational methods, as argued here, then Amoore and Hall's complementary account of computer visualization in airport border security as ‘digital dissection’ shows a modular constitutive element that may speed up automated technical decision making on any body.Footnote 32

Most computer scientists in the AI field cannot forecast how code will operate elsewhere, such as the values it brings, ‘where it might have a “dual use” in civilian/military sectors’.Footnote 33 As imaging has improved, computer algorithms can be customized and built to detect varied shapes. If the internal structure of animal bodies enables digitization of shape and data, this comparison is then distributed across machine-learning pipelines, networked systems and modelling comparisons and integrated into market supply and human-tracking purposes.Footnote 34

The concept of ‘dual use’ is where an intended use or primary purpose for technology which is ‘proposed as good (or at least not bad) leads to a secondary purpose which is bad and was not intended by those who developed the technology in the first place’.Footnote 35 Kelly Bronson and Phoebe Sengers suggest that agribusinesses may avoid the degree of public activism raised in opposition to big data and algorithmic decision making in human social systems. Despite innovating with similar machinery, resources and labour to collect data and translate it into efforts to strengthen market positions, agribusinesses are not yet read as handling sensitive data or training bodily surveillance systems.Footnote 36 As agronomic data is captured and structured for digitization, much is also rendered reproducible, exportable and interoperable through vast ‘networked arrays that capture, record, and process information on a mass scale … and that require significant capital resources to develop and to maintain’.Footnote 37 This paper therefore poses questions casting across shifting data infrastructures and into empirical substantiations where such a viewpoint intersects with regulatory concerns, data sovereignty and ‘dual-use’ implications.Footnote 38 The next section characterizes how these relationships were initially forged in international research communities of the 1970s and 1980s that brought electronic animal identification systems together for biosecurity purposes, and started utilizing computer recognition experiments.Footnote 39

The ‘crush’ of electronic and computed animal identification

On 8 and 9 April 1976, the first international symposium on Cow Identification Systems and their Applications was held at Wageningen, the Netherlands, presenting results from British, German, US and Dutch farming practices, research institutes and private companies.Footnote 40 Food logistics companies such as Alfa Laval (notable for early milking machines and their 1894 centrifugal milk–cream separator) and the Dutch company Nedap displayed early inductively powered identification systems.Footnote 41 Nedap exhibited wearable electronic transponders, collars attached around a cow's neck, that were read through a livestock ‘interrogation corridor’ (by 1997 nearly two million units had been sold worldwide).Footnote 42 Radio frequency microchip circuits called RFID tags were developed for use on cattle following the invention of short-range radio telemetry at Los Alamos in 1975, with the support of the Atomic Energy Commission and the Animal and Plant Health Inspection Service of the US Department of Agriculture.Footnote 43 RFID tags were not used merely to track cattle ranging in open fields but to read their identity as a tagged object passing within the range of a microwave antenna device.Footnote 44 The crush offered a convenient interrogation site. This technology found similar uses to log motor vehicles at toll booths, and to record package confirmation at supermarkets, post offices or shipping terminals. A primary aim in each case was to identify and to obtain product data speedily for real-time economic processing. 
Since the RFID tag could also store information on the age, sex or breeding of the animal and connect the owner, farm and vaccination status, this facility fed into data administration that raised the possibility of integrating different elements in real time for financial management.Footnote 45 From 1984, the emergence of mad cow disease in Britain led international regulators to call for improved traceability and information on meat product origins. Tasks were to record and indicate in which countries animals were born, reared, slaughtered and cut (or deboned), including an identifiable reference for traceability. Prior to mad cow disease, much product information would not go further than the slaughter stage.Footnote 46 Monitoring each animal, with data running from progeny to real-time abattoir processing, reduced labour constraints and is considered a ‘critical success factor’ in the economic decision making of scaling an agribusiness.Footnote 47

That traceability of animals and meat products was an international security concern became recognized in the first International Organization for Standardization meeting about animal identification products in 1991.Footnote 48 To ensure interoperability and design compatibility between different manufacturers, ‘some millions of devices’ were tested in Europe; problems with tampering led to a recommendation that ‘devices should be fixed to an animal’.Footnote 49 This challenge to register each animal via electronic devices became a significant factor in driving all sorts of prototyping. First-generation devices, such as RFID tags and transponders, were modified to measure body temperature and heart rate for animal fertility and health. In a 1999 special issue of Computers and Electronics in Agriculture, Wim Rossing of the Wageningen University Institute of Agricultural Engineering provided a survey devoted to electronic identification methods in this period. The use of ingestible electronic oral transponders and epidermal injectables showed that many were adapted from medical sensing devices. Many devices attached or injected into animals entailed some stress and perhaps pain, and often required bodily restraint ‘in a crush’.Footnote 50 Unobtrusive methods were sought that would likely influence behaviour and health less than attaching transponders, electronic collars or ingestibles. The search for a method to identify each specimen reliably and permanently with no adverse effects on animals led to an increase in remote biometric modelling. Important to this discussion is how the use of a stanchion (a prototypical ‘crush’) was critical to obtain cow noseprints in 1922; such identification methods regained traction in the rise of remote digital imaging and computational biometric modelling from 1991.Footnote 51 Resultant experiments produced material ingenuity and information traded between species, sectors, databases and mathematical models.Footnote 52

In the digital prototyping and algorithmic assessment of animals that followed, many sought to adopt computer vision and AI pattern recognition experiments on static objects and human beings prevalent from the 1980s. These involved algorithms that improved object recognition performance from curves, straight lines, shape descriptions and structural models of shapes.Footnote 53 In 1993, mathematical algorithms began to isolate, segment, and decipher the different shapes found in animal bodies specifically at the compositional boundary between fat and meat.Footnote 54 Computer vision methods adopted technology from medicine, anatomy and physiology. For example, magnetic resonance imaging (MRI) was used to determine composition in living animals with ‘high contrast, cross-sectional images of any desired plane … to measure volumes of muscles and organs’, but became cost-prohibitive for large-scale mass-market adoption.Footnote 55 Similarly, the US Food Safety and Quality Service (FSQS) collaborated with NASA's Jet Propulsion Laboratory to aid instrumental assessment of beef using ultrasound compared to remote video analysis from 1994 to 2003.Footnote 56 However, using computer algorithms in combination with digital video was considered more viable. This was due to cost and to 1980s and 1990s research on butchery that showed an ability to efficiently separate and segment bodies into digital shapes to enhance robotic butchery operations.Footnote 57 As digital shape and imaging analysis spread, benchmarking data was diffused across arrays of information taken from different sectors, bodies and laboratory experiments, and became difficult to trace back to its original sources.Footnote 58 How was it then possible to integrate such computerized analysis in agriculture, but to keep these uses separate within each domain? 
As Bowker and Star claim, the classificatory application of these digital assessment tools can become interoperable in infrastructure: tools of this kind can be inverted, plugged into and standardized for uses elsewhere that are ‘not accidental, but are constitutive’, of each other.Footnote 59 On 4 November 2010, techniques for three-dimensional imaging illustrated this point in the sale of an accessory for a consumer video game console: the Microsoft Kinect, a controller for the Xbox. Thanks to its global availability, the Kinect topped 10 million sales during the first three months after launch, and a customizable software development kit (SDK) was released in 2012. Scientific researchers in electronic engineering and robotics quickly began leveraging the three-dimensional imaging tool for ways to analyse, simulate and predict body movements using biometrics and machine learning.Footnote 60

Although it takes work, the perceived value of machine- and deep-learning models is their purported ability to facilitate ‘a general transformation of qualities into quantities … [enabling] simple numerical comparisons between otherwise complex entities’.Footnote 61 In the sources of data and the statistical methods of machine learning, multiple practices bloomed in the domains of biometric identification, virtual reality, clinical imaging and border security. Yet the value of animals was their utility as unwitting data accomplices to imaging experiments that can train machine-learning processes without close ethical scrutiny. History foregrounds that such systems are not, in fact, uniformly applied, nor isolable in their uses or development.

If computer vision is trained on data from which to make new classifications and inferences beyond explicit programming, then what is gained or lost if techniques and technologies are transferred between different sectors and sites, and between humans and non-human animals? The next section examines the implications of these interconnections by focusing on computer vision experiments conducted in the past decade in New South Wales, Australia.

‘Extreme values’ on the farm

Australian government officials have offered guides on how to manually palpate an animal, and assess by hand proportions of anatomical fat, muscle and tissue depths, to then make a produce assessment on grades of composition and thus speculate on profitability.Footnote 62 Yet the graphical and anatomical knowledge of flesh, bones, sinew, skin and tissue as the primary determination for ‘economical cuts of meat’ has since transformed into digital visualization. Computer vision has transformed economic decision making taking place upon, or within, animals. Information historically assessed by hand or intimately by knife could now be performed remotely, largely unobtrusively, on creatures properly aligned to digital means. Indeed, early dissection techniques can be correlated to pictorial knowledges that multiple societies displayed in anatomical drawings of animal and human bodies. Represented by mathematical fragments, segments and curved geometries, these line drawings are considered ‘graphical primitives’ to the calculation of shapes and volumes that have since coalesced into ‘computational relations between them’.Footnote 63 As James Elkins claims, software developers that render three-dimensional computer graphics ‘have inherited pictorial versions of naturalism as amenable to computation’ and transferred to uses in scientific, medical and military sectors.Footnote 64

Contemporary animal identification commonly utilizes open-source software architectures and modular camera systems – this is in part due to the availability of pre-existing software, standardized training data and stabilized image labelling, translating into an ease of adoption.

In 2018, global food conglomerate Cargill invested in the Irish dairy farm start-up Cainthus (named after the corner of the eye) due to its computer vision software claiming to identify animals from distinctive facial recognition acts. According to Dave Hunt, Cainthus CEO, this precision management tool ‘observes on a meter-by-meter basis … there is no emotion, there is no hype … just good decisions and a maximization of productivity’.Footnote 65 These types of facial recognition system were also tested in Australia during 2019, by utilizing four cameras to simultaneously capture ‘on average 400 images per sheep’ as a proof of concept and as a data library for sheep identification.Footnote 66 The manufacturing of a sheep face classifier using Google's Inception V3 open-source architecture (trained on the ImageNet database; see Bruno Moreschi's contribution to this issue) illustrates how consumer-grade items, such as Logitech C920 cameras, can be quickly integrated into data analysis in modern farm environments. Yet this also changes the types of data that can drive machine learning analytical pipelines, in particular the use of statistical analysis tools.

Two years prior, in 2017, Australian robotics researchers from the University of Technology Sydney partnered with Meat and Livestock Australia, using a purpose-built measurement ‘crush’ associated with cattle handling to accommodate two off-the-shelf RGB-D Microsoft Kinect cameras. The cameras were positioned to capture three-dimensional images of standing cattle; the goal was to precisely digitize the fat and muscle composition in living Angus cows and steers.Footnote 67 The second step was to train the computer vision system to produce a robust predictive function for these meat–fat marbling traits, and then to calculate an optimal yield from each beast.

In this experiment, the data model was intended to ‘learn’ a non-linear relationship between the surface curvatures of an animal, represented from within a Microsoft three-dimensional point cloud, and to translate that data into statistical values on the relative proportions of MS (muscle) to P8 (portion of fat) that could serve as representative values for estimating yield and market prices.Footnote 68 To evaluate how accurately their statistical model performed, the team compared its results with those from human expert cattle assessors and those deduced from ultrasound detection. The proof of concept aimed to demonstrate both the importance of ‘curvature shapes’ as a digital measure that could accurately verify product yield, and the feasibility of modelling such statistical ‘traits’ as assigned values for machine learning that improves with each animal.
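
What it means to ‘learn’ a non-linear relationship between shape descriptors and a trait value can be shown with a minimal sketch. It is not the research team's method: the regressor chosen here (kernel ridge regression with a radial basis function kernel), the three ‘curvature descriptors’ and the target values are all assumptions, and the data is synthetic, generated only so that the example runs.

```python
import numpy as np


def rbf_kernel(a, b, gamma):
    """Gaussian (RBF) similarity between every row of a and every row of b."""
    sq_dist = (
        np.sum(a ** 2, axis=1)[:, None]
        + np.sum(b ** 2, axis=1)[None, :]
        - 2.0 * a @ b.T
    )
    return np.exp(-gamma * np.maximum(sq_dist, 0.0))


def fit_kernel_ridge(x_train, y_train, gamma=1.0, ridge=1e-3):
    """Solve (K + ridge * I) alpha = y for the dual weights alpha."""
    k = rbf_kernel(x_train, x_train, gamma)
    return np.linalg.solve(k + ridge * np.eye(len(x_train)), y_train)


def predict(x_train, alpha, x_new, gamma=1.0):
    """Predict trait values for new descriptors from the fitted weights."""
    return rbf_kernel(x_new, x_train, gamma) @ alpha


# Synthetic stand-ins: 200 'animals', each with 3 made-up curvature descriptors.
rng = np.random.default_rng(0)
features = rng.uniform(-1.0, 1.0, size=(200, 3))
# Made-up non-linear 'trait' so there is something to learn.
trait = np.sin(2.0 * features[:, 0]) + features[:, 1] ** 2

# Fit on the first 150 animals and evaluate on the remaining 50.
alpha = fit_kernel_ridge(features[:150], trait[:150])
pred = predict(features[:150], alpha, features[150:])
rmse = float(np.sqrt(np.mean((pred - trait[150:]) ** 2)))
baseline = float(np.std(trait[150:]))  # error of always guessing the mean
```

Comparing `rmse` with `baseline` mirrors, in miniature, the evaluation step described above, where model outputs were checked against expert assessors and ultrasound readings. The sketch also shows why such models travel so easily: nothing in the code knows whether the descriptors were measured on cattle or on people.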

Yet in the precision that the experiment claimed to have reached there is a deeper phenomenon of scientific dislocation. Historians describe this as ‘the accumulation of evidence from converging investigations in which no single experiment is decisive or memorable in method or in execution’.Footnote 69 In this instance, curiously, the statistical and algorithmic data relationships deduced from animal shapes had first been trained on a 1988 data set from 180 anthropometric measurements taken on almost nine thousand soldiers in the US military.Footnote 70 Data collected at the United States Army Natick Research, Development and Engineering Center, under contract to Dr Claire C. Gordon, chief of the Anthropology Section, Human Factors Branch, used a method of software analysis engineered by Thomas D. Churchill, titled the X-Val or ‘extreme values’ computer program.Footnote 71 It is important to locate this military data here historically due to the mobility of its bankable metrics amenable to software innovation that then became adaptable and transformable, across species, continents and digital evaluations.

A secondary review of the 1988 Natick data set conducted in 1997 deduced ‘the undesirability of applying data from [such] non-disabled [and highly fit] populations’ to the design of equipment or techniques for bodies of other types.Footnote 72 And yet in this instance, the research institutions involved in the cattle crush experiment had utilized the data in 2012 to create a ‘shoulder-to-head signature’ to monitor people across office environments and then five years later applied these data sets to animals.Footnote 73 Arguably, due to the collaborative nature of the research groups, multiple institutions involved, and sectors of interest in robotics and video analytics, the knotting of methods used to digitize animals, to track people and to identify body parts was overlooked as unrelated or inconsequential. Yet the studies show that the team continued to use statistical shoulder-to-head signatures to reduce human beings to synthetic shapes classed as ‘ellipsoids’. Statistical data from a 2017 cattle crush experiment boosted this automated recognition system to count and track individuals at a city train station.Footnote 74

Comparing the mobility of data from the ‘cattle crush’ experiment to the ‘train station’ experiment, a set of relations can be drawn forward to show how modelling of humans and animals produces interoperable data sets for computer vision. In their reduction to metrics, statistics and ‘shapes’, a combination of visual cultures in scientific experimentation collides with odd epistemic transference between species. The slippage is a reduction of bodies to ‘curve signatures’ that permits cannibalizing data from otherwise unrelated sources. Yet no matter the efforts to reduce or to recontextualize such information, it always remains sensitive information, at least in potential abilities to foment and formalize a transfer into dual-use arenas.

The politics of such data entanglements remain under-studied. However, in 2019 the Australian Strategic Policy Institute initiated a review into the University of Technology Sydney because of concerns that five experiments developing mapping algorithms for public security harboured potential China Electronics Technology Corporation products for use in Chinese surveillance systems. This resulted in suspending a public-security video experiment due to ‘concerns about potential future use’ and triggered a review into UTS policies.Footnote 75 Similarly, Australian entities involved in critical infrastructure projects, such as in the transport and agricultural sectors, are subject to laws requiring supply chain traceability to resist benefiting from exploited labour that may subsist in third-party data products.Footnote 76 These regulatory efforts can be extended to statistical and computer vision technology experiments on animals and in agriculture that may escape this type of notice. As this paper seeks to illustrate, collaborative ventures of scientific innovation create ‘a snarled nest of relations’ manufactured across data and supply chainsFootnote 77 – in this case, an appropriation of metrics that maximize military forces, surveil civilians or increase farm profits.

Conclusion: sectors that resist guardrails in AI

Lorraine Daston observed that ‘ancient languages record not epics like the Gilgamesh or the Iliad but rather merchants’ receipts: like five barrels of wine, twenty-two sheepskins, and so on’.Footnote 78 These early receipts and dissection offcuts informed the economic powers of ancient societies and built ‘ritualised numerical operations of ownership in tattooing, excising, incising, carving, or scarifying’.Footnote 79 In these identifiable bodily markings and sacrificial dissections – of humans and animals – lies an early genealogy of computational AI strategies. Threading a course across the history of agriculture to that of isolated computing acts, the ‘dual-use dilemma’ reveals a high-stakes game of scientific research in the accrual of data for prototyping, and the creation of intellectual property situated in the complex interrelations of AI.Footnote 80

As Blanchette argues, ‘humans too are “tamed” in defining the technical processes of vertical integration, standardization, and monopolization for managing production into global scales for achieving efficiency and profitability’.Footnote 81 This pertains not just to horizontal and vertical divisions of labour, but to the computational forms of monitoring across species divides in which physical bodies are most visible, but ‘statistical surveillance’ is somewhat invisible.Footnote 82

König has called this manifold of military, agricultural and civilian techniques and data sets ‘the algorithmic leviathan’ in that it entwines the pioneering aspirations of 1980s artificial intelligence, from pattern recognition to the use of analogical logics in particular.Footnote 83 Although the nature of the statistical analysis and the computational technologies has changed, it is the infrastructural foundations that allow the tracking, segmenting and partitioning of all bodies. By considering the configurations between agriculture, data and technology in this way, this paper has highlighted the relevance of enviro-tech frameworks to perhaps assist historians and surveillance scholars with regulatory ambitions for increased transparency in AI systems. This is important amidst the dramatic transition from an ‘over-the-skin’ type to an ‘under-the-skin’ type of surveillance on human beings that was ushered in by COVID-19 – through the use of infrared scanning, thermal imaging, drone monitoring and proximity detection techniques that stood in for close body contact and served as anticipatory determinations for public security.Footnote 84

As Australian farms increase their scale to maintain profitability – with astonishing land use – autonomous technologies, such as unmanned aerial vehicles, fill gaps in monitoring. A transition to drone ‘shepherds’, robotic harvesters and high-tech surveillance applies to virtual fencing and ‘precision’ planting that enable statistical data to aid financial decision tools.Footnote 85 While a so-called ‘Fourth Agricultural Revolution’ is consequential to a nation's economic growth and food productivity, a diffusion of data across third parties and digital networks links credentials to sustainability and environmental management but may carry unanticipated consequences.Footnote 86 Farming is no longer family-run: it is big business, it is national security, and it is data-intensive. Here, too, Australian farmers face pressure from industrial organizations to transition to digital techniques. John Deere, for instance, has entered union disputes to resist a growing right-to-repair movement, showing that the tractor is not simply an earth-moving machine but, instead, a tool for data collection. Attempts to restrict access to intellectual software property and to prevent self-maintenance are ending up in the courts.Footnote 87 Farming has built on post-war aims to become ‘smart’ like cities, and yet it is interlaced with logistical tools suitable for the Internet of Things and the rise in the mobility of global data.Footnote 88

By tracing how computer vision developed in agriculture for animal biosecurity, this paper indicates sets of technical and institutional links to AI systems, data, statistics and imaging. Yet these complex chains within experimentation can be vulnerable to bundling together such security measures, and their associated infrastructure, with the detection, surveillance and containment of all manner of phenomena across scientific domains, manufacturing sectors, public spaces and for dual-use foundations.Footnote 89 What is critical is how what is being modelled is more deeply implicated in securing data that can be fungible for a range of ‘AI’ activities, whether in the supply chain of nation-state surveillance or that foisted upon Amazon workers.Footnote 90 These acts threaten to grow and may remain unregulated by the kind of checks found in AI bills such as that of the European Commission, which views real-time biometric identification as a high-risk scenario.Footnote 91 Underneath AI systems exist ‘warped and unclear geometries’ in the datasets and statistical models that can resist domain-specific guardrails, and, as argued elsewhere, intensive agriculture generates problems that cut across regulatory domains.Footnote 92 As a historically significant force behind burgeoning economies and infrastructural innovation, agriculture and the use of animals assist machine-learning models not just to try to determine ‘what this is’, but to manufacture, assemble and extract elements of nature into maximum yield. Yet as we near the limits of the harvest, the data might also be cannibalized for other uses.

Acknowledgements

I am most grateful to Richard Staley for his wise editorial guidance, and to Matthew Jones, Stephanie Dick and Jonnie Penn for their generous, insightful advice on different versions. I also thank Director Kathryn Henne at RegNet - School of Global Governance and Regulation at the Australian National University for her continued support.