1 Introduction

Proof assistants offer a promising way of delivering the strongest guarantee of correctness. Many software properties can be stated and verified using the currently available tools such as, e.g. Coq, Agda, and Isabelle. In the current work, we focus our attention on the Coq proof assistant based on dependent-type theory (calculus of inductive constructions—CIC). Since the calculus of Coq is also a programming language, it is possible to execute programmes directly in the proof assistant. The expressiveness of Coq’s type system allows for writing specifications directly in a programme type. These specification can be expressed, for example, in the form of pre- and postconditions using subset types implemented in Coq using the dependent pair type (

![]() $\Sigma$

-type). However, in order to integrate the formally verified code with existing components, one would like to obtain a programme in other programming languages. One way of achieving this is to extract the executable code from the formalised development. Various verified developments rely extensively on the extraction feature of proof assistants (Cruz-Filipe and Spitters Reference Cruz-Filipe and Spitters2003; Filliâtre & Letouzey Reference Filliâtre and Letouzey2004; Leroy Reference Leroy2006; Cruz-Filipe & Letouzey Reference Cruz-Filipe and Letouzey2006; Klein et al. Reference Klein, Andronick, Elphinstone, Murray, Sewell, Kolanski and Heiser2014). However, currently, the standard extraction feature in proof assistants focuses on producing code in conventional functional languages (Haskell, OCaml, Standard ML, Scheme, etc.). Nowadays, there are many new important target languages that are not covered by the standard extraction functionality.

$\Sigma$

-type). However, in order to integrate the formally verified code with existing components, one would like to obtain a programme in other programming languages. One way of achieving this is to extract the executable code from the formalised development. Various verified developments rely extensively on the extraction feature of proof assistants (Cruz-Filipe and Spitters Reference Cruz-Filipe and Spitters2003; Filliâtre & Letouzey Reference Filliâtre and Letouzey2004; Leroy Reference Leroy2006; Cruz-Filipe & Letouzey Reference Cruz-Filipe and Letouzey2006; Klein et al. Reference Klein, Andronick, Elphinstone, Murray, Sewell, Kolanski and Heiser2014). However, currently, the standard extraction feature in proof assistants focuses on producing code in conventional functional languages (Haskell, OCaml, Standard ML, Scheme, etc.). Nowadays, there are many new important target languages that are not covered by the standard extraction functionality.

An example of a domain that experiences rapid development and the increased importance of verification is the smart contract technology. Smart contracts are programmes deployed on top of a blockchain. They often control large amounts of value and cannot be changed after deployment. Unfortunately, many vulnerabilities have been discovered in smart contracts and this has led to huge financial losses (e.g. TheDAO, Footnote 1 Parity’s multi-signature wallet Footnote 2 ). Therefore, smart contract verification is crucially important. Functional smart contract languages are becoming increasingly popular, e.g. Simplicity (O’Connor Reference O’Connor2017), Liquidity (Bozman et al. Reference Bozman, Iguernlala, Laporte, Le Fessant and Mebsout2018), Plutus (Chapman et al. Reference Chapman, Kireev, Nester and Wadler2019), Scilla (Sergey et al. Reference Sergey, Nagaraj, Johannsen, Kumar, Trunov and Chan2019) and LIGO. Footnote 3 A contract in such a language is a partial function from a message type and a current state to a new state and a list of actions (transfers, calls to other contracts), making smart contracts more amenable for formal verification. Functional smart contract languages, similarly to conventional functional languages, are often based on a well-established theoretical foundation (variants of the Hindley–Milner type system). The expressive type system, immutability, and message-passing execution model allow for ruling out many common errors in comparison with conventional smart contract languages such as Solidity.

For the errors that are not caught by the type checker, a proof assistant, in particular Coq, can be used to ensure correctness. Once properties of contracts are verified, one would like to execute them on blockchains. At this point, the code extraction feature of Coq would be a great asset, but extraction to smart contract languages is not available in Coq.

There are other programming languages of interest in different domains that are not covered by the current Coq extraction. Among these, Elm (Feldman Reference Feldman2020)—a functional language for web development and Rust (Klabnik & Nichols Reference Klabnik and Nichols2018)—a multi-paradigm systems programming language, are two examples.

Another issue we face is that the current implementation of Coq extraction is written in OCaml and is not itself verified, potentially breaking the guarantees provided by the formalised development. We address this issue by using an existing formalisation of the meta-theory of Coq and provide a framework that is implemented Coq itself. Being written in Coq gives us a significant advantage since it makes it possible to apply various techniques to verify the development itself.

The current work extends and improves the results previously published and presented by the same authors at the conference Certified Programs and Proofs (Annenkov et al. Reference Annenkov, Milo, Nielsen and Spitters2021) in January 2021. We build on the ConCert framework (Nielsen & Spitters Reference Nielsen and Spitters2019; Annenkov et al. Reference Annenkov, Nielsen and Spitters2020) for smart contracts verification in Coq and the MetaCoq project (Sozeau et al. Reference Sozeau, Anand, Boulier, Cohen, Forster, Kunze, Malecha, Tabareau and Winterhalter2020). We summarise the contributions as the following, marking with

![]() ${}^{\dagger}$

the contributions that extend the previous work.

${}^{\dagger}$

the contributions that extend the previous work.

-

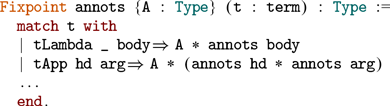

We provide a general framework for extraction from Coq to a typed functional language (Section 5.1). The framework is based on certified erasure (Sozeau et al. Reference Sozeau, Boulier, Forster, Tabareau and Winterhalter2019) of MetaCoq. The output of MetaCoq’s erasure procedure is an AST of an untyped functional programming language

${\lambda_\square}$

. In order to generate code in typed programming languages, we implement an erasure procedure for types and inductive definitions. We add the typing information for all

${\lambda_\square}$

. In order to generate code in typed programming languages, we implement an erasure procedure for types and inductive definitions. We add the typing information for all

${\lambda_\square}$

definitions and implement an annotation mechanism allowing for adding annotations in a modular way—without changing the AST definition. We call the resulting representation

${\lambda_\square}$

definitions and implement an annotation mechanism allowing for adding annotations in a modular way—without changing the AST definition. We call the resulting representation

${\lambda^T_\square}$

and use it as an intermediate representation. Moreover, we implement and prove correct an optimisation procedure that removes unused arguments. The procedure allows us to optimise away some computationally irrelevant bits left after erasure.

${\lambda^T_\square}$

and use it as an intermediate representation. Moreover, we implement and prove correct an optimisation procedure that removes unused arguments. The procedure allows us to optimise away some computationally irrelevant bits left after erasure. -

We implement pre-processing passes before the erasure stage (see Section 5.2). After running all the passes, we generate correctness proofs. The passes include

-

$\eta$

-expansion;

$\eta$

-expansion; -

expansion of match branches

${}^{\dagger}$

;

${}^{\dagger}$

; -

inlining

${}^{\dagger}$

.

${}^{\dagger}$

.

-

-

We develop in Coq pretty-printers for obtaining extracted code from our intermediate representation to the following target languages.

-

Liquidity—a functional smart contract language for the Dune network (see Section 5.3).

-

CameLIGO—a functional smart contract language from the LIGO family for the Tezos network (see Section 5.3)

${}^{\dagger}$

.

${}^{\dagger}$

. -

Elm—a general purpose functional language used for web development (see Section 5.4).

-

Rust—a multi-paradigm systems programming languages (see Section 5.5)

${}^{\dagger}$

.

${}^{\dagger}$

.

-

-

We develop an integration infrastructure, required to deploy smart contracts written in Rust on the Concordium blockchain

${}^{\dagger}$

.

${}^{\dagger}$

. -

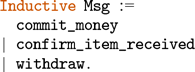

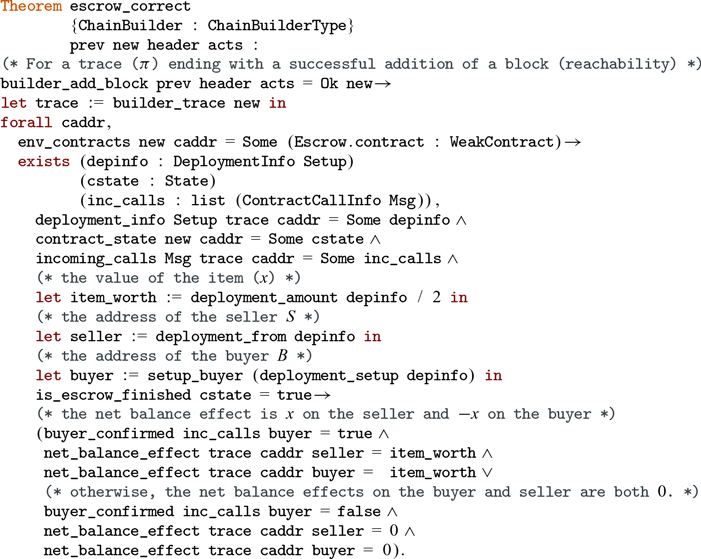

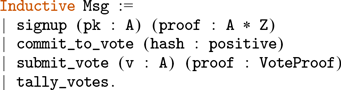

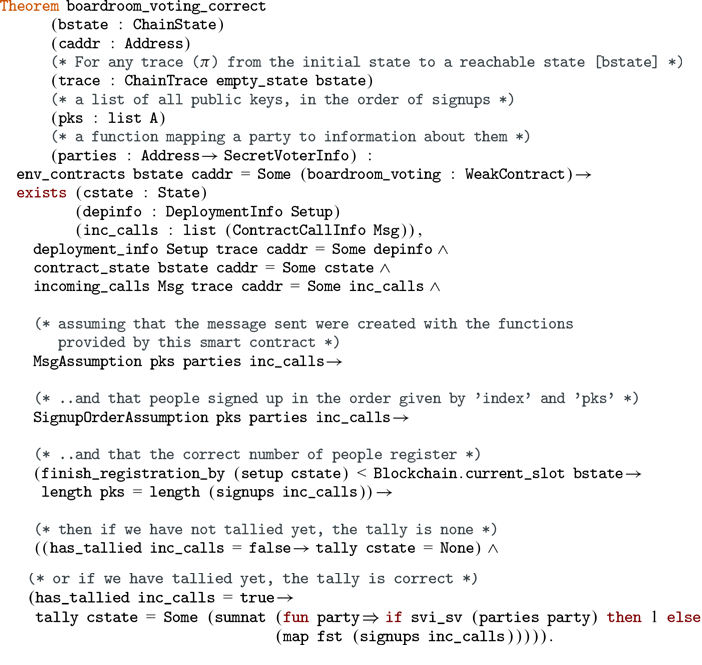

We provide case studies of smart contracts in ConCert by proving properties of an escrow contract and an anonymous voting contract based on the Open Vote Network protocol (Section 6 and 7). We apply our extraction functionality to study the applicability of our pipeline to the developed contracts.

Apart from the extensions marked above, we have improved over the previous work in the following points.

-

The erasure procedure for types now covers type schemes. We provide the updated procedure along with the discussion in Section 5.1.1

-

We extract the escrow contract to new target languages and finalise the extraction of the boardroom voting contract, which was not previously extracted. For the Elm extraction, we develop a verified web application that uses dependent types to encode the validity of the data in the application model. We demonstrate how the fully functional web application can be produced from the formalisation.

2 The pipeline

We begin by describing the whole pipeline covering the full process of starting with a program in Coq and ending with extracted code in one of the target languages. This pipeline is shown in Figure 1. The items marked with

![]() ${}^{*}$

(also given in

${}^{*}$

(also given in

![]() ${\textsf{green}}$

) are contributions of this work and the items in

bold cursive

are verified. The MetaCoq project (Sozeau et al. Reference Sozeau, Anand, Boulier, Cohen, Forster, Kunze, Malecha, Tabareau and Winterhalter2020) provides us with metaprogramming facilities and formalisation of the meta-theory of Coq, including the verified erasure procedure.

${\textsf{green}}$

) are contributions of this work and the items in

bold cursive

are verified. The MetaCoq project (Sozeau et al. Reference Sozeau, Anand, Boulier, Cohen, Forster, Kunze, Malecha, Tabareau and Winterhalter2020) provides us with metaprogramming facilities and formalisation of the meta-theory of Coq, including the verified erasure procedure.

Fig. 1. The pipeline.

We start by developing a programme in Gallina that can use rich Coq types in the style of certified programming, see e.g. Chlipala (Reference Chlipala2013). In the case of smart contracts, we can use the machinery available in ConCert to test and verify the properties of interacting smart contracts. We obtain a Template Coq representation by quoting the program. This representation is close to the actual AST representation in the Coq kernel. We then apply a number of certifying transformations to this representation (see Section 5.2). This means that we produce a transformed term along with a proof term, witnessing that the transformed term is equal to the original in the theory of Coq. Currently, we assume that the transformations applied to the Template Coq representations preserve convertibility. Therefore, we can easily certify them by generating simple proofs consisting essentially of the constructor of Coq’s equality type eq_refl. Although the transformations themselves are not verified, generated proofs give strong guarantees that the behaviour of the term has not been altered. One can configure the pipeline to apply several transformations, in this case, they will be composed together and applied to the Template Coq term. The correctness proofs are generated after all the specified transformations are applied.

The theory of Coq is presented by the predicative calculus of cumulative inductive constructions (PCUIC) (Timany & Sozeau Reference Timany and Sozeau2017), which is essentially a cleaned-up version of the kernel representation. The translation from the Template Coq representation to PCUIC is mostly straightforward. Currently, MetaCoq provides the type soundness proof for the translation, but computational soundness (wrt. weak call-by-value evaluation) is not verified. However, the MetaCoq developers plan to close this gap in the near future. Most of the meta-theoretic results formalised by the MetaCoq project use the PCUIC representation (see Section 3 for the details about different MetaCoq representations).

From PCUIC, we obtain a term in an untyped calculus of erased programs

![]() ${\lambda_\square}$

using the verified erasure procedure of MetaCoq. By

${\lambda_\square}$

using the verified erasure procedure of MetaCoq. By

![]() ${\lambda^T_\square}$

, we denote

${\lambda^T_\square}$

, we denote

![]() ${\lambda_\square}$

enriched with typing information, which we obtain using our erasure procedure for types (see Section 5.1.1). Specifically, we add to the

${\lambda_\square}$

enriched with typing information, which we obtain using our erasure procedure for types (see Section 5.1.1). Specifically, we add to the

![]() ${\lambda_\square}$

representation of MetaCoq the following.

${\lambda_\square}$

representation of MetaCoq the following.

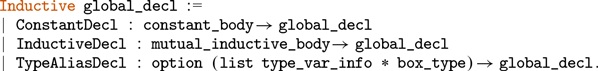

-

Constants and definitions of inductive types in the global environment store the corresponding “erased” types (

in Section 5.1.1).

in Section 5.1.1). -

We explicitly represent type aliases (definitions that accept some parameters and return a type) as entries in the extended global environment.

-

The nodes of the

${\lambda_\square}$

AST can be optionally annotated with the corresponding “erased” types (see Section 5.3).

${\lambda_\square}$

AST can be optionally annotated with the corresponding “erased” types (see Section 5.3).

The typing information is required for extracting to typed functional languages. The

![]() ${\lambda^T_\square}$

representation is essentially a core of a pure statically typed functional programming language. Our extensions make it a convenient intermediate representation containing enough information to generate code in various target languages.

${\lambda^T_\square}$

representation is essentially a core of a pure statically typed functional programming language. Our extensions make it a convenient intermediate representation containing enough information to generate code in various target languages.

The pipeline provides a way of specifying optimisations in a compositional way. These optimisations are applied to the

![]() ${\lambda^T_\square}$

representation. Each optimisation should be accompanied with a proof of computational soundness wrt. the big-step call-by-value evaluation relation for

${\lambda^T_\square}$

representation. Each optimisation should be accompanied with a proof of computational soundness wrt. the big-step call-by-value evaluation relation for

![]() ${\lambda_\square}$

terms. The format for the computational soundness is fixed and the individual proofs are combined in the top-level statement covering given optimisation steps (see Theorem 2). At the current stage, we provide an optimisation that removes dead arguments of functions and constructors (see Section 5.1.2).

${\lambda_\square}$

terms. The format for the computational soundness is fixed and the individual proofs are combined in the top-level statement covering given optimisation steps (see Theorem 2). At the current stage, we provide an optimisation that removes dead arguments of functions and constructors (see Section 5.1.2).

The optimised

![]() ${\lambda^T_\square}$

code is then can be printed using the pretty-printers developed directly in Coq. The target languages include two categories: languages for smart contracts and general-purpose languages. The Rust programming language is featured in both categories. However, the use case of Rust as a smart contract language requires slightly more work for integrating the resulting code with the target blockchain infrastructure (see Section 5.5).

${\lambda^T_\square}$

code is then can be printed using the pretty-printers developed directly in Coq. The target languages include two categories: languages for smart contracts and general-purpose languages. The Rust programming language is featured in both categories. However, the use case of Rust as a smart contract language requires slightly more work for integrating the resulting code with the target blockchain infrastructure (see Section 5.5).

Our trusted computing base (TCB) includes Coq itself, the quote functionality of MetaCoq and the pretty-printing to target languages. While the erasure procedure for types is not verified, it does not affect the soundness of the pipeline (see discussion in Section 5.1).

When extracting large programmes, the performance of the pipeline inside Coq might become an issue. In such cases, it is possible to obtain an OCaml implementation of our pipeline using the standard Coq extraction. However, this extends the TCB with the OCaml implementation of extraction and the pre-processing pass, since the proof terms will not be generated and checked in the extracted OCaml code.

Our development is open-source and available in the GitHub repository https://github.com/AU-COBRA/ConCert/tree/journal-2021. In the text, we refer to our formalisation using the following link format: ![]() . The path starts at the root of the project’s repository, and the optional lemma_name parameter refers to a definition in the file.

. The path starts at the root of the project’s repository, and the optional lemma_name parameter refers to a definition in the file.

Pretty-printing and formalisation of target languages. The printing procedure itself is a partial function in Coq that might fail (or emit errors in the resulting code) due to unsupported constructs. The unsupported constructs could result from limitations of a target language, or a pretty-printer itself. Similarly to the standard extraction of Coq, the printed code may be untypable. The reason is that the type system of Coq is stronger than the type systems of the target languages. In the standard extraction, it is solved with type coercions, but in most of our target languages, the mechanisms to force the type checker to accept the extracted definitions are missing. We discuss these issues and some solutions in Section 5. Note, that the pipeline gives a guarantee that the

![]() ${\lambda^T_\square}$

code will evaluate to a correct value. Therefore, if the corresponding pretty-printer is correct, the computational behaviour of the extracted code is sound. We discuss the restrictions for each target language in Sections 5.3–5.5.

${\lambda^T_\square}$

code will evaluate to a correct value. Therefore, if the corresponding pretty-printer is correct, the computational behaviour of the extracted code is sound. We discuss the restrictions for each target language in Sections 5.3–5.5.

As we mentioned earlier, the pretty-printing is part of the TCB. It bridges the gap between

![]() ${\lambda^T_\square}$

and a target language. This gap varies for various target languages. Elm is very close to

${\lambda^T_\square}$

and a target language. This gap varies for various target languages. Elm is very close to

![]() ${\lambda^T_\square}$

. CameLIGO and Liquidity are not too far, but have several restrictions and require mapping

${\lambda^T_\square}$

. CameLIGO and Liquidity are not too far, but have several restrictions and require mapping

![]() ${\lambda^T_\square}$

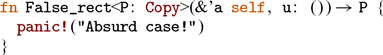

constructs to native types and operations. We also apply several standard extraction techniques when printing to a concrete syntax. For example, we replace absurd cases (unreachable branches) in pattern matching with exceptions or non-terminating functions. Such transformations have certain semantic consequences specific to each target language, which are currently captured only informally by the printing procedures.

${\lambda^T_\square}$

constructs to native types and operations. We also apply several standard extraction techniques when printing to a concrete syntax. For example, we replace absurd cases (unreachable branches) in pattern matching with exceptions or non-terminating functions. Such transformations have certain semantic consequences specific to each target language, which are currently captured only informally by the printing procedures.

From that point of view, the pipeline offers partial correctness guarantees ending with the

![]() ${\lambda^T_\square}$

representation. To extend the guarantees provided by extraction and close this gap, one needs to consider the semantics of the target languages. That is, can add a translation step from

${\lambda^T_\square}$

representation. To extend the guarantees provided by extraction and close this gap, one needs to consider the semantics of the target languages. That is, can add a translation step from

![]() ${\lambda^T_\square}$

to the target language syntax and prove the translation correct. Ongoing work at Tezos on formalising the semantics of LIGO languages

Footnote 4

would allow for connecting our

${\lambda^T_\square}$

to the target language syntax and prove the translation correct. Ongoing work at Tezos on formalising the semantics of LIGO languages

Footnote 4

would allow for connecting our

![]() ${\lambda^T_\square}$

semantics with the CameLIGO semantics, and eventually get a verified pipeline producing verified Michelson code, directly executed by the Tezos infrastructure.

${\lambda^T_\square}$

semantics with the CameLIGO semantics, and eventually get a verified pipeline producing verified Michelson code, directly executed by the Tezos infrastructure.

The gap between

![]() ${\lambda^T_\square}$

and Rust is larger, and it would be beneficial to provide a translation that would take care of modelling memory allocation, for example. Projects like RustBelt (Jung et al. Reference Jung, Jourdan, Krebbers and Dreyer2021) and Oxide (Weiss et al. Reference Weiss, Gierczak, Patterson, Matsakis and Ahmed2019) are aiming to give formal semantics to Rust. However, currently, they do not formalise the Rust surface language.

${\lambda^T_\square}$

and Rust is larger, and it would be beneficial to provide a translation that would take care of modelling memory allocation, for example. Projects like RustBelt (Jung et al. Reference Jung, Jourdan, Krebbers and Dreyer2021) and Oxide (Weiss et al. Reference Weiss, Gierczak, Patterson, Matsakis and Ahmed2019) are aiming to give formal semantics to Rust. However, currently, they do not formalise the Rust surface language.

3 The MetaCoq project

Since MetaCoq (Anand et al. Reference Anand, Boulier, Cohen, Sozeau and Tabareau2018) is integral to our work, we briefly introduce the project structure and explain how different parts of it are relevant to our development.

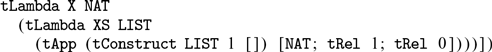

Template Coq. This subproject adds metaprogramming facilities to Coq. That is, Coq definitions can be quoted giving an AST of the original term represented as an inductive data type term internally in Coq. The term type and related data types in Template Coq are very close to the actual implementation of the Coq kernel written in OCaml, which makes the quote/unquote procedures straightforward to implement. This representation is suitable for defining various term-level transformations as Coq functions. The results of such transformations can be unquoted back to an ordinary Coq definition (provided that the resulting term is well-typed).

The Template Coq metaprogramming facilities are used as the first step in our pipeline. Given a (potentially verified and dependently typed) program in Coq, we can use quote to obtain the program’s AST that is then transformed, extracted, optimised, and finally pretty-printed to one of the target languages (see Figure 1). The transformations at the level of the Template Coq AST are used as a pre-processing step in the pipeline.

Template Coq features vernacular commands for obtaining the quoted representations, e.g. ![]() . In addition to that, it features the template monad, which is similar in spirit to the IO monad and allows for interacting with the Coq environment (quote, unquote, query, and add new definitions). We use the template monad in our pipeline for various transformations whenever such interaction is required. For example, we use it for implementing proof generating transformations (see Section 5.2).

. In addition to that, it features the template monad, which is similar in spirit to the IO monad and allows for interacting with the Coq environment (quote, unquote, query, and add new definitions). We use the template monad in our pipeline for various transformations whenever such interaction is required. For example, we use it for implementing proof generating transformations (see Section 5.2).

PCUIC. Predicative calculus of cumulative inductive constructions (PCUIC) is a variant of the calculus of inductive constructions (CIC) that serves as the underlying theoretical foundation of Coq. In essence, PCUIC representations is a “cleaned-up” version of the Template Coq AST: it lacks lacks type casts and has the standard binary application, compared to the n-ary application in Template Coq. MetaCoq features translation between the two representations.

Various meta-theoretic results about PCUIC has been formalised in MetaCoq, including the verified type checker (Sozeau et al. Reference Sozeau, Boulier, Forster, Tabareau and Winterhalter2019). In our development, we use the certified programming approach that relies on the results related to reduction and typing.

Verified erasure. One important part of the MetaCoq project that we build on is the verified erasure procedure. The erasure procedure takes a PCUIC term as input and produces a term in

![]() ${\lambda_\square}{}$

. The meta-theory of PCUIC developed as part of MetaCoq is used extensively in erasure implementation and formalisation of the correctness results. The erasure procedure is quite subtle and its formalisation is a substantial step towards the fully verified extraction pipeline. We discuss the role and the details of MetaCoq’s verified erasure in Section 5.1.

${\lambda_\square}{}$

. The meta-theory of PCUIC developed as part of MetaCoq is used extensively in erasure implementation and formalisation of the correctness results. The erasure procedure is quite subtle and its formalisation is a substantial step towards the fully verified extraction pipeline. We discuss the role and the details of MetaCoq’s verified erasure in Section 5.1.

4 The ConCert framework

The present work builds on and extends the ConCert smart contract certification framework presented by the three authors of the present work at the conference Certified Programmes and Proofs in January 2020 (Annenkov et al. Reference Annenkov, Nielsen and Spitters2020). In this section, we briefly describe relevant parts of ConCert along with the extensions developed in Annenkov et al. (Reference Annenkov, Milo, Nielsen and Spitters2021) and in the present work.

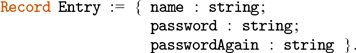

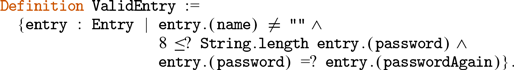

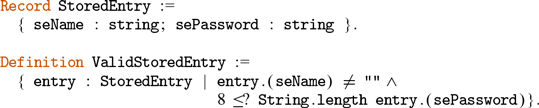

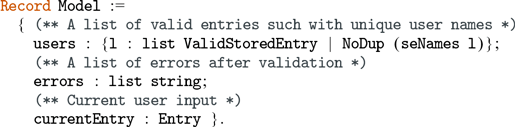

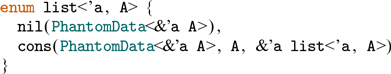

Execution Layer. The execution layer provides a model that allows for reasoning on contract execution traces which makes it possible to state and prove temporal properties of interacting smart contracts. In the functional smart contract model, the contracts consist of two functions.

![]()

The init function is called after the contract is deployed on the blockchain. The first parameter of type Chain represents the blockchain from a contract’s point of view. The ContractCallContext parameter provides data about the current call, e.g. caller address, the amount sent to the contract, etc. Setup is a user defined type that supplies custom parameters to the initialisation function.

![]()

The receive function represents the main functionality of the contract that is executed for each call to the contract. Chain and ContractCallContext are the same as for the init function. State represents the current state of the contract and Msg is a user-defined type of messages that contract accepts. The result of the successful executions is a new state and a list of actions represented by ActionBody. The actions can be transfers, calls to other contracts (including the contract itself), and contract deployment actions.

However, reasoning about the contract functions in isolation is not sufficient. A call to receive potentially emits more calls to other contracts or to itself. To capture the whole execution process, we define the type of executions traces ChainTrace as the reflexive-transitive closure of the proof-relevant relation ChainStep : ChainState → ChainState → Type. ChainStep captures how the blockchain state evolves once new blocks as added and contract calls are executed.

Extraction Layer. Annenkov et al. (Reference Annenkov, Nielsen and Spitters2020) presented a verified embedding of smart contracts to Coq. This work shows how it is possible to verify a contract as a Coq function and then extract it into a programme in a functional smart contract language. This layer represents an interface between the general extraction machinery we have developed and the use case of smart contracts. In the case of smart contract languages, it is necessary to provide functionality for integrating the extracted smart contracts with the target blockchain infrastructure. In practice, it means that we should be able to map the abstractions of the execution layer (contract’s view of the blockchain, call context data) on corresponding components in the target blockchain.

Currently, all extraction functionality we have developed, regardless of the relation to smart contracts, is implemented in the extraction layer of ConCert. In the future, we plan to separate the general extraction component from the blockchain-specific functionality.

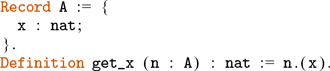

5 Extraction

The Coq proof assistant comes with a dependently typed programming language Gallina that allows due to the language’s rich type system to write programmes together with their specifications in the style of certified programming (see e.g. Chlipala Reference Chlipala2013). Coq features a special universe of types for writing program specifications, the universe of propositions ![]() . For example, the type {n : nat | 0 ≤ n } belongs to so-called subset types, which are essentially a special case of a dependent pair type (

. For example, the type {n : nat | 0 ≤ n } belongs to so-called subset types, which are essentially a special case of a dependent pair type (

![]() $\Sigma$

-type). In this example, 0 ≤ n is a proposition, i.e. it belongs to the universe

$\Sigma$

-type). In this example, 0 ≤ n is a proposition, i.e. it belongs to the universe ![]() . Subset types allow for encoding many useful invariants when writing programmes in Gallina. An inhabitant of {n : nat | 0 ≤ n } is a pair with the first component being a natural number and the second component—a proof that the number is strictly greater than zero. In the theory of Coq, subset types are represented as an inductive type with one constructor:

. Subset types allow for encoding many useful invariants when writing programmes in Gallina. An inhabitant of {n : nat | 0 ≤ n } is a pair with the first component being a natural number and the second component—a proof that the number is strictly greater than zero. In the theory of Coq, subset types are represented as an inductive type with one constructor:

![]()

where {x : A | P x} is a notation for sig A P.

The invariant represented by a second component can be used to ensure, for example, that division by zero never happens since we require that arguments can only be strictly positive numbers. The proofs of specifications are only used to build other proofs and do not affect the computational behaviour of the programme, apart from some exceptions called the empty and singleton elimination principle. Footnote 5 The Prop universe marks such computationally irrelevant bits. Moreover, types appearing in terms are also computationally irrelevant. For example, in System F this is justified by parametric polymorphism. This idea is used in the Coq proof assistant to extract the executable content of Gallina terms into OCaml, Haskell, and Scheme. The extraction functionality thus enables proving properties of functional programmes in Coq and then automatically producing code in one of the supported languages. The extracted code can be integrated with existing developments or used as a stand-alone programme.

The first extraction using ![]() as a marker for computationally irrelevant parts of programs was introduced by Paulin-Mohring (Reference Paulin-Mohring1989) in the context of the calculus of construction (CoC), which earlier versions of Coq were based on. This first extraction targeted System

as a marker for computationally irrelevant parts of programs was introduced by Paulin-Mohring (Reference Paulin-Mohring1989) in the context of the calculus of construction (CoC), which earlier versions of Coq were based on. This first extraction targeted System

![]() $\mathrm{F}_\omega$

, which can be seen as a subset of CoC, allowing one to get the extracted term internally in CoC. The current Coq extraction mechanism is based on the theoretical framework from a PhD thesis by Letouzey (Reference Letouzey2004). Letouzey extended the previous work of Paulin-Mohring (Reference Paulin-Mohring1989) and adapted it to the full calculus of inductive constructions. The target language of the current extraction is untyped, allowing to accommodate more features from the expressive type system of Coq. The untyped representation has a drawback; however, the typing information is still required when extracting to statically typed programming languages. To this end, Letouzey considers practical issues for implementing an efficient extraction procedure, including recovering the types in typed target languages, using type coercions (Obj.magic) when required, and various optimisations. The crucial part of the extraction process is the erasure procedure that utilises the typing information to prune irrelevant parts. That is, types and propositions in terms are replaced with

$\mathrm{F}_\omega$

, which can be seen as a subset of CoC, allowing one to get the extracted term internally in CoC. The current Coq extraction mechanism is based on the theoretical framework from a PhD thesis by Letouzey (Reference Letouzey2004). Letouzey extended the previous work of Paulin-Mohring (Reference Paulin-Mohring1989) and adapted it to the full calculus of inductive constructions. The target language of the current extraction is untyped, allowing to accommodate more features from the expressive type system of Coq. The untyped representation has a drawback; however, the typing information is still required when extracting to statically typed programming languages. To this end, Letouzey considers practical issues for implementing an efficient extraction procedure, including recovering the types in typed target languages, using type coercions (Obj.magic) when required, and various optimisations. The crucial part of the extraction process is the erasure procedure that utilises the typing information to prune irrelevant parts. That is, types and propositions in terms are replaced with

![]() $\square$

(a box). Formally, it is expressed as a translation from CIC (Calculus of Inductive Constructions) to

$\square$

(a box). Formally, it is expressed as a translation from CIC (Calculus of Inductive Constructions) to

![]() ${\lambda_\square}{}$

(an untyped version of CIC with an additional constant

${\lambda_\square}{}$

(an untyped version of CIC with an additional constant

![]() $\square$

). The translation is quite subtle and is discussed in detail in by Letouzey (Reference Letouzey2004). Letouzey also provides two (pen-and-paper) proofs that the translation is computationally sound: one proof is syntactic and uses the operational semantics and the other proof is based on the realisability semantics. Computational soundness means that the original programmes and the erased programmes compute the same (in a suitable sense) value.

$\square$

). The translation is quite subtle and is discussed in detail in by Letouzey (Reference Letouzey2004). Letouzey also provides two (pen-and-paper) proofs that the translation is computationally sound: one proof is syntactic and uses the operational semantics and the other proof is based on the realisability semantics. Computational soundness means that the original programmes and the erased programmes compute the same (in a suitable sense) value.

Having this in mind, we have identified two essential points:

-

The target languages supported by the standard Coq extraction do not include many new target languages, that represent important use cases (smart contracts, web programming).

-

Since the extraction implementation becomes part of a TCB, one would like to mechanically verify the extraction procedure in Coq itself and the current Coq extraction is not verified.

Therefore, it is important to build a verified extraction pipeline in Coq itself that also allows for defining pretty-printers for new target languages.

Until recently, the proof of correctness of one of the essential ingredients, the erasure procedure, was only done on paper. However, the MetaCoq project made an important step towards verified extraction by formalising the computational soundness of erasure (Sozeau et al. Reference Sozeau, Boulier, Forster, Tabareau and Winterhalter2019, Section 4). The MetaCoq’s verified erasure is defined for predicative calculus of cumulative inductive constructions (PCUIC) a variant of CIC that closely corresponds to the meta-theory of Coq. See Section 3 for a brief description of the project’s structure and Sozeau et al. (Reference Sozeau, Boulier, Forster, Tabareau and Winterhalter2019), Section 2 for the detailed exposition of the calculus. The result of the erasure is a

![]() ${\lambda_\square}{}$

term, that is, a term in an untyped calculus. On the other hand, integration with typed functional languages requires recovering the types from the untyped output of the erasure procedure. Letouzey (Reference Letouzey2004) solves this problem by designing an erasure procedure for types and then using a modified type inference algorithm, based on the algorithm

${\lambda_\square}{}$

term, that is, a term in an untyped calculus. On the other hand, integration with typed functional languages requires recovering the types from the untyped output of the erasure procedure. Letouzey (Reference Letouzey2004) solves this problem by designing an erasure procedure for types and then using a modified type inference algorithm, based on the algorithm

![]() $\mathcal{M}$

by Lee & Yi (Reference Lee and Yi1998), to recover types and check them against the type produced by extraction. Because the type system of Coq is more powerful than type systems of the target languages (e.g. Haskell or OCaml), not all the terms produced by extraction will be typable. In this case, the modified type inference algorithm inserts type coercions forcing the term to be well-typed. If we start with a Coq term the type of which is outside the OCaml type system (even without using dependent types), the extraction might have to resort to Obj.magic in order to make the definition well-typed. For example, the code snippet below

$\mathcal{M}$

by Lee & Yi (Reference Lee and Yi1998), to recover types and check them against the type produced by extraction. Because the type system of Coq is more powerful than type systems of the target languages (e.g. Haskell or OCaml), not all the terms produced by extraction will be typable. In this case, the modified type inference algorithm inserts type coercions forcing the term to be well-typed. If we start with a Coq term the type of which is outside the OCaml type system (even without using dependent types), the extraction might have to resort to Obj.magic in order to make the definition well-typed. For example, the code snippet below

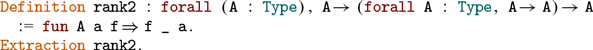

gives the following output on extraction to OCaml:

These coercions are “safe” in the sense that they do not change the computational properties of the term, they merely allow to pass the type checking.

5.1 Our extraction

The standard Coq extraction targets conventional general-purpose functional programming languages. Recently, there has been a significant increase in the number of languages that are inspired by these, but due to the narrower application focus are different in various subtle details. We have considered the area of smart contract languages (Liquidity and CameLIGO), web programming (Elm) and general-purpose languages with a functional subset (Rust). They often pose more challenges than the conventional targets for extraction. We have identified the following issues.

-

1. Most of the smart contract languages Footnote 6 and Elm do not offer a possibility to insert type coercions forcing the type checking to succeed.

-

2. The operational semantics of

${\lambda_\square}{}$

has the following rule (Sozeau et al. Reference Sozeau, Boulier, Forster, Tabareau and Winterhalter2019, Section 4.1): if

${\lambda_\square}{}$

has the following rule (Sozeau et al. Reference Sozeau, Boulier, Forster, Tabareau and Winterhalter2019, Section 4.1): if

${{\Sigma} \vdash {t_1} \triangleright {\square}}$

and

${{\Sigma} \vdash {t_1} \triangleright {\square}}$

and

${{\Sigma} \vdash {t_2} \triangleright {\mathit{v}}}$

then

${{\Sigma} \vdash {t_2} \triangleright {\mathit{v}}}$

then

${{\Sigma} \vdash {\bigl(t_1~~t_2 \bigr)} \triangleright {\square}}$

, where

${{\Sigma} \vdash {\bigl(t_1~~t_2 \bigr)} \triangleright {\square}}$

, where

${{-} \vdash {-} \triangleright {-}}$

is a big-step evaluation relation for

${{-} \vdash {-} \triangleright {-}}$

is a big-step evaluation relation for

${\lambda_\square}{}$

,

${\lambda_\square}{}$

,

$t_1$

and

$t_1$

and

$t_2$

are

$t_2$

are

${\lambda_\square}{}$

terms, and

${\lambda_\square}{}$

terms, and

$\mathit{v}$

is a

$\mathit{v}$

is a

${\lambda_\square}{}$

value. This rule can be implemented in OCaml using the unsafe features, which are, again, not available in most of our target languages. In lazy languages, this situation never occurs (Letouzey Reference Letouzey2004, Section 2.6.3), but most of the languages we consider follow the eager evaluation strategy.

${\lambda_\square}{}$

value. This rule can be implemented in OCaml using the unsafe features, which are, again, not available in most of our target languages. In lazy languages, this situation never occurs (Letouzey Reference Letouzey2004, Section 2.6.3), but most of the languages we consider follow the eager evaluation strategy. -

3. Data types and recursive functions are often restricted. E.g. Liquidity, CameLIGO (and other LIGO languages) do not allow for defining recursive data types (like lists and trees) and limits recursive definitions to tail recursion on a single argument. Instead, these languages offer built-in lists and finite maps (dictionaries).

-

4. Rust has a fully-featured functional subset, but being a language for systems programming, does not have a built-in garbage collector.

We can make design choices, concerning the point above, in such a way that the soundness of the extraction will not be affected, given that terms evaluate in the same way before and after extraction. In the worst case, the extracted term will be rejected by the type checker of the target language. However, some care is needed: the pretty-printing step still presents a gap in verification. A more careful treatment of the semantics of target languages would allow extending the guarantees. Some subtleties come from, e.g. handling of absurd pattern-matching cases and its interaction with optimisations. As for now, we apply the approach inspired by the standard extraction in such situations.

Let us consider in detail what the restrictions outlined above mean for extraction. The first restriction means that certain types will not be extractable. Therefore, our goal is to identify a practical subset of extractable Coq types and give the user access to transformations helping to produce well-typed programs. The second restriction is hard to overcome, but fortunately, this situation should not often occur on the fragment we want to work. Moreover, as we noticed before, terms that might give an application of a box to some other term will be ill-typed and thus, rejected by the type checker of the target language. The third restriction can be addressed by mapping Coq’s data types (lists, finite maps) to the corresponding primitives in a target language. The fourth restriction applies only to Rust and means that we have to provide a possibility to “plug-in” a memory management implementation. Luckily, Rust libraries contain various implementations one can choose from.

At the moment, we consider the formalisation of typing in target languages out of scope for this project. Even though the extraction of types is not verified, it does not compromise run-time safety: if extracted types are incorrect, the target language’s type checker will reject the extracted program. If we followed the work by Letouzey (Reference Letouzey2004), which the current Coq extraction is based on, giving guarantees about typing would require formalising of target languages type systems, including a type inference algorithm (possibly algorithm

![]() $\mathcal{M}$

). The type systems of many languages we consider are not precisely specified and are largely in flux. Moreover, for the target languages without unsafe coercions, some of the programs will be untypable in any case. On the other hand, for more mature languages (e.g. Elm) one can imagine connecting our formalisation of extraction with a language formalisation, proving the correctness statement for both the run-time behaviour and the typability of extracted terms.

$\mathcal{M}$

). The type systems of many languages we consider are not precisely specified and are largely in flux. Moreover, for the target languages without unsafe coercions, some of the programs will be untypable in any case. On the other hand, for more mature languages (e.g. Elm) one can imagine connecting our formalisation of extraction with a language formalisation, proving the correctness statement for both the run-time behaviour and the typability of extracted terms.

We extend the work on verified erasure (Sozeau et al. Reference Sozeau, Boulier, Forster, Tabareau and Winterhalter2019) and develop an approach that uses a minimal amount of unverified code that can affect the soundness of the verified erasure. Our approach adds an erasure procedure for types, verified optimisations of the extracted code and pretty-printers for several target languages. The main observation is that the intermediate representation

![]() ${\lambda^T_\square}$

corresponds to the core of a generic functional language. Therefore, our pipeline can be used to target various functional languages with transformations and optimisations applied generically to the intermediate representation.

${\lambda^T_\square}$

corresponds to the core of a generic functional language. Therefore, our pipeline can be used to target various functional languages with transformations and optimisations applied generically to the intermediate representation.

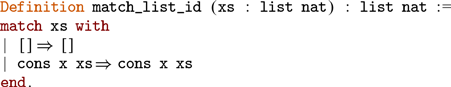

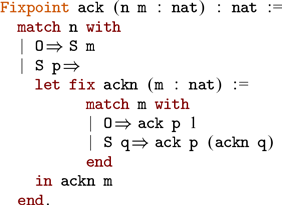

Before introducing our approach, let us give some examples of how the verified erasure works and motivate the optimisations we propose.

produces the following

![]() ${\lambda_\square}{}$

code:

${\lambda_\square}{}$

code:

where the

![]() $\square$

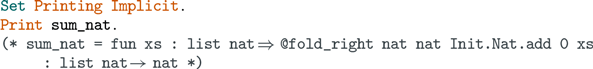

symbol corresponds to computationally irrelevant parts. The first two arguments to the erased versions of fold_right are boxes, since fold_right in Coq has two implicit type arguments. They become visible if we switch on printing of implicit arguments:

$\square$

symbol corresponds to computationally irrelevant parts. The first two arguments to the erased versions of fold_right are boxes, since fold_right in Coq has two implicit type arguments. They become visible if we switch on printing of implicit arguments:

In this situation we have at least two choices: remove the boxes by some optimisation procedure, or leave the boxes and extract fold_right in such a way that the first two arguments belong to some dummy data type. Footnote 7 The latter choice cannot be made for some smart contract languages due to restrictions on recursion (fold_right is not tail-recursive), therefore, we have to remap fold_right and other functions on lists to the corresponding primitive functions. Generally, removing such dummy arguments in the extracted code is beneficial for other reasons: the code size is smaller and some redundant reductions can be avoided.

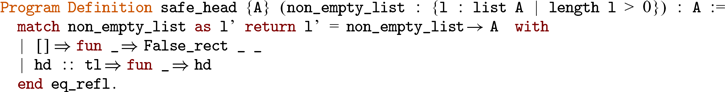

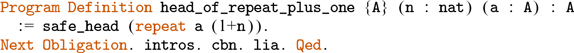

In the following example,

the square function erases to

The corresponding language primitive would be a function with the following signature: TargetLang.map: (’a → ’b) → ’a list → ’b list. Clearly, there are two extra boxes in the extracted code that prevent us from directly replacing Coq.Lists.List.map with TargetLang.map. Instead, we would like to have the following:

In this case, we can provide a translation table to the pretty-printing procedure mapping Coq.Lists.List.map to TargetLang.map. Alternatively, if one does not want to remove boxes, it is possible to implement a more sophisticated remapping procedure. It could replace Coq.Lists.List.map _ with TargetLang.map, but it would require finding all constants applied to the right number of arguments (or

![]() $\eta$

-expand constants) and only then replace them. Remapping inductive types in the same style would involve more complications: constructors of polymorphic inductive types will have an extra argument of a dummy type. This would require more complicated pretty-printing of pattern-matching in addition to the similar problem with extra arguments on the application sites.

$\eta$

-expand constants) and only then replace them. Remapping inductive types in the same style would involve more complications: constructors of polymorphic inductive types will have an extra argument of a dummy type. This would require more complicated pretty-printing of pattern-matching in addition to the similar problem with extra arguments on the application sites.

By implementing the optimisation procedure we achieve several goals: make the code size smaller, remove redundant computations, and make the remapping easier. Removing the redundant computations is beneficial for smart contract languages since it decreases the computation cost in terms of gas. Users typically pay for calling smart contracts and the price is determined by the gas consumption. That is, gas serves as a measure of computational resources required for executing a contract. Smaller code size is also an advantage from the point of view of gas consumption. For some blockchains, the overall cost of a contract call depends on its size.

It is important to separate these two aspects of extraction: erasure (given by the translation CIC

Footnote 8

→

![]() ${\lambda_\square}{}$

) and optimisation of

${\lambda_\square}{}$

) and optimisation of

![]() ${\lambda_\square}{}$

terms to remove unnecessary arguments. The optimisations we propose remove some redundant reductions, make the output more readable and facilitate the remapping to the target language’s primitives. Our implementation strategy of extraction is the following: (i) take a term and erase it and its dependencies recursively to get an environment; (ii) analyse the environment to find optimisable types and terms; (iii) optimise the environment in a consistent way (e.g. in our

${\lambda_\square}{}$

terms to remove unnecessary arguments. The optimisations we propose remove some redundant reductions, make the output more readable and facilitate the remapping to the target language’s primitives. Our implementation strategy of extraction is the following: (i) take a term and erase it and its dependencies recursively to get an environment; (ii) analyse the environment to find optimisable types and terms; (iii) optimise the environment in a consistent way (e.g. in our

![]() ${\lambda^T_\square}$

, the types must be adjusted accordingly); (iv) pretty-print the result in the target language syntax according to the translation table containing remapped constants and inductive types.

${\lambda^T_\square}$

, the types must be adjusted accordingly); (iv) pretty-print the result in the target language syntax according to the translation table containing remapped constants and inductive types.

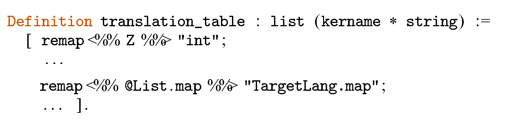

The mechanism of remapping constants and inductive types is similar to the Coq functionality Extract Inlined Constant and ![]() . Since we run the extraction pipeline inside Coq, the we use ordinary Coq definitions to build a translation table that we pass to the pipeline. For example, the typical translation table would look as the following.

. Since we run the extraction pipeline inside Coq, the we use ordinary Coq definitions to build a translation table that we pass to the pipeline. For example, the typical translation table would look as the following.

We use remap ≤ %% coq_def %%≥ “target_def" to produce an entry in the translation table, where the ≤ %% coq_def %%≥ notation uses MetaCoq to resolve the full name of the given definition. Note that the translation table is an association list with kername as keys. The kername type is provided by MetaCoq and represents fully qualified names of Coq definitions.

5.1.1 Erasure for types

Let us discuss our first extension to the verified erasure presented in Sozeau et al. (Reference Sozeau, Boulier, Forster, Tabareau and Winterhalter2019), namely an erasure procedure for types. It is a crucial part for extracting to a typed target language. Currently, the verified erasure of MetaCoq provides only a term erasure procedure which will erase any type in a term to a box. For example, a function using the dependent pair type (

![]() $\Sigma$

-type) might have a signature involving

$\Sigma$

-type) might have a signature involving ![]() , i.e. representing numbers that are larger than 10. Applying MetaCoq’s term erasure will erase this in its entirety to a box, while we are interested in a procedure that instead erases only the type scheme in the second argument: we expect type erasure to produce

, i.e. representing numbers that are larger than 10. Applying MetaCoq’s term erasure will erase this in its entirety to a box, while we are interested in a procedure that instead erases only the type scheme in the second argument: we expect type erasure to produce ![]() , where the square now represents an irrelevant type.

, where the square now represents an irrelevant type.

While our target languages have type systems that are Hindley-Milner based (and therefore, can recover types of functions), we still need an erasure procedure for types to be able to extract inductive types. Moreover, our target languages support various extensions, and their compilers may not always succeed to infer types. For example, Liquidity has overloading of some primitive operations, e.g. arithmetic operations for primitive numeric types. Such overloading introduces ambiguities that cannot be resolved by the type checker without type annotations. CameLIGO requires writing even more types explicitly. Thus, the erasure procedure for types is also necessary to produce such type annotations. The implementation of this procedure is inspired by Letouzey (Reference Letouzey2004).

We have chosen a semi-formal presentation in order to guide the reader through the actual implementation while avoiding clutter from the technicalities of Coq. We use concrete Coq syntax to represent the types of CIC. We do not provide syntax and semantics of CIC, for more information we refer the reader to Sozeau et al. (Reference Sozeau, Boulier, Forster, Tabareau and Winterhalter2019), Section 2. The types of

![]() ${\lambda^T_\square}$

are represented by the grammar below.

${\lambda^T_\square}$

are represented by the grammar below.

Here

![]() ${\overline{i}}$

represents levels of type variables,

${\overline{i}}$

represents levels of type variables, ![]() and

and ![]() range over names of inductive types and constants respectively. Essentially,

range over names of inductive types and constants respectively. Essentially, ![]() represents types of an OCaml-like functional language extended with constructors

represents types of an OCaml-like functional language extended with constructors

![]() ${\square}$

(“logical” types) and

${\square}$

(“logical” types) and

![]() $\mathbb{T}$

(types that are not representable in the target language). In some cases both

$\mathbb{T}$

(types that are not representable in the target language). In some cases both

![]() ${\square}$

and

${\square}$

and

![]() $\mathbb{T}$

can later be removed from the extracted code by optimisations, although

$\mathbb{T}$

can later be removed from the extracted code by optimisations, although

![]() $\mathbb{T}$

might require type coercions in the target language. Note also that the types do not have binders, since they represent prenex-polymorphic types. The levels of type variables are indices into the list of type variable names starting from the head of the list. E.g. the type ’a → ’b is represented as

$\mathbb{T}$

might require type coercions in the target language. Note also that the types do not have binders, since they represent prenex-polymorphic types. The levels of type variables are indices into the list of type variable names starting from the head of the list. E.g. the type ’a → ’b is represented as ![]() for the context

for the context

![]() $[a;b]$

. Additionally, we use colours to distinguish between the CIC terms and the target

$[a;b]$

. Additionally, we use colours to distinguish between the CIC terms and the target ![]() .

.

Definition 1 (Erasure for types).

The erasure procedure for types is given by functions

![]() ${\mathcal{E}^T}{}$

,

${\mathcal{E}^T}{}$

,

![]() $\mathcal{E}_{app}^T{}$

and

$\mathcal{E}_{app}^T{}$

and

![]() ${\mathcal{E}_{head}^T}{}$

in Figure 2.

${\mathcal{E}_{head}^T}{}$

in Figure 2.

Fig. 2. Erasure from CIC types to

![]() ${\mathtt{box\_type}}$

.

${\mathtt{box\_type}}$

.

The

![]() ${\mathcal{E}^T}{}$

function takes four parameters. The first is a context

${\mathcal{E}^T}{}$

function takes four parameters. The first is a context

![]() $\mathtt{Ctx}$

represented as a list of assumptions. The second is an erasure context

$\mathtt{Ctx}$

represented as a list of assumptions. The second is an erasure context

![]() $\mathtt{ECtx}$

represented as a sized list (vector) that follows the structure of

$\mathtt{ECtx}$

represented as a sized list (vector) that follows the structure of

![]() $\mathtt{Ctx}$

; it contains either a translated type variable

$\mathtt{Ctx}$

; it contains either a translated type variable

![]() $\mathtt{TV}$

, information about an inductive type

$\mathtt{TV}$

, information about an inductive type

![]() $\mathtt{Ind}$

, or a marker

$\mathtt{Ind}$

, or a marker

![]() $\mathtt{Other}$

for items in

$\mathtt{Other}$

for items in

![]() $\mathtt{Ctx}$

that do not fit into the previous categories. The last two parameters represent terms of CIC corresponding to types and an index of the next type variable. The next type variable index is wrapped in the

$\mathtt{Ctx}$

that do not fit into the previous categories. The last two parameters represent terms of CIC corresponding to types and an index of the next type variable. The next type variable index is wrapped in the

![]() $\mathtt{option}$

type, and becomes

$\mathtt{option}$

type, and becomes

![]() $\mathtt{None}$

if no more type variables should be produced. The function

$\mathtt{None}$

if no more type variables should be produced. The function

![]() ${\mathcal{E}^T}{}$

returns a tuple consisting of a list of type variables and a

${\mathcal{E}^T}{}$

returns a tuple consisting of a list of type variables and a ![]() .

.

First, we describe the most important auxiliary functions outlined on the Figure 2. An important device used to determine erasable types (the ones we turn into the special target types

![]() ${\square}$

and

${\square}$

and

![]() $\mathbb{T}$

) is the function

$\mathbb{T}$

) is the function ![]() , where the return type

, where the return type

![]() $\mathtt{type\_flag}$

is defined as a record with two fields:

$\mathtt{type\_flag}$

is defined as a record with two fields:

![]() $\mathtt{is\_logical}$

and

$\mathtt{is\_logical}$

and

![]() $\mathtt{is\_arity}$

. The

$\mathtt{is\_arity}$

. The

![]() $\mathtt{is\_logical}$

field carries a boolean, while

$\mathtt{is\_logical}$

field carries a boolean, while

![]() $\mathtt{is\_arity}$

carries a proof or a disproof of convertibility to an arity. That is, whether a term is an arity up to reductions. For the purposes of the presentation in the paper, we treat

$\mathtt{is\_arity}$

carries a proof or a disproof of convertibility to an arity. That is, whether a term is an arity up to reductions. For the purposes of the presentation in the paper, we treat

![]() $\mathtt{is\_arity}$

as a boolean value, while in the implementation we use the proofs carried by

$\mathtt{is\_arity}$

as a boolean value, while in the implementation we use the proofs carried by

![]() $\mathtt{is\_arity}$

to solve proof obligations for the definition of

$\mathtt{is\_arity}$

to solve proof obligations for the definition of

![]() ${\mathcal{E}^T}{}$

.

${\mathcal{E}^T}{}$

.

A type is an arity if it is a (possibly nullary) product into a sort: ![]() for

for ![]() and

and ![]() a vector of (possibly dependent) binders and types. Inhabitants of arities are type schemes.

a vector of (possibly dependent) binders and types. Inhabitants of arities are type schemes.

The predicate

![]() $\mathtt{is\_sort}$

is not a field of

$\mathtt{is\_sort}$

is not a field of

![]() $\mathtt{type\_flag}$

, but it uses the data of

$\mathtt{type\_flag}$

, but it uses the data of

![]() $\mathtt{is\_arity}$

field to tell whether a given type is a sort, i.e.

$\mathtt{is\_arity}$

field to tell whether a given type is a sort, i.e. ![]() . Sorts are always arities; we use

. Sorts are always arities; we use

![]() $\mathtt{is\_sort}$

to turn a proof of converitibility to an arity into a proof of convertivility to a sort (or return

$\mathtt{is\_sort}$

to turn a proof of converitibility to an arity into a proof of convertivility to a sort (or return

![]() $\mathtt{None}$

if it is not the case). Finally, a type is logical when it is a proposition (i.e. inhabitants are proofs) or when it is an arity into

$\mathtt{None}$

if it is not the case). Finally, a type is logical when it is a proposition (i.e. inhabitants are proofs) or when it is an arity into ![]() (i.e. inhabitants are propositional type schemes). As concrete examples,

(i.e. inhabitants are propositional type schemes). As concrete examples,

![]() ${\mathtt{Type}}$

is an arity and a sort, but not logical.

${\mathtt{Type}}$

is an arity and a sort, but not logical. ![]() is logical, an arity, but not a sort.

is logical, an arity, but not a sort. ![]() is neither of the three.

is neither of the three.

We use the reduction function

![]() ${\mathtt{red}_{\beta\iota\zeta}}$

in order to reduce the term to

${\mathtt{red}_{\beta\iota\zeta}}$

in order to reduce the term to

![]() $\beta\iota\zeta$

weak head normal form. Here,

$\beta\iota\zeta$

weak head normal form. Here,

![]() $\beta$

is reduction of applied

$\beta$

is reduction of applied

![]() $\lambda$

-abstractions,

$\lambda$

-abstractions,

![]() $\iota$

is reduction of

$\iota$

is reduction of

![]() $\mathtt{match}$

on constructors, and

$\mathtt{match}$

on constructors, and

![]() $\zeta$

is reduction of the

$\zeta$

is reduction of the

![]() $\mathtt{let}$

construct. The

$\mathtt{let}$

construct. The

![]() $\mathtt{infer}{}$

function is used to recover typing information for subterms. We also make use of the destructuring

$\mathtt{infer}{}$

function is used to recover typing information for subterms. We also make use of the destructuring

![]() $\mathtt{let}~(a,b) := \ldots$

for tuples and projections

$\mathtt{let}~(a,b) := \ldots$

for tuples and projections

![]() $\mathtt{fst}$

,

$\mathtt{fst}$

,

![]() $\mathtt{snd}$

.

$\mathtt{snd}$

.

The erasure procedure proceeds as follows. First, it reduces the CIC term to

![]() $\beta\iota\zeta$

weak head normal form. Then, it uses

$\beta\iota\zeta$

weak head normal form. Then, it uses

![]() ${\mathtt{flag\_of\_type}}{}$

to determine how to erase the reduced type. If it is a logical type, it immediately returns

${\mathtt{flag\_of\_type}}{}$

to determine how to erase the reduced type. If it is a logical type, it immediately returns

![]() ${\Box}$

, if not, it performs case analysis on the result. The most interesting cases are dependent function types and applications. For function types, if the domain type is logical, it produces

${\Box}$

, if not, it performs case analysis on the result. The most interesting cases are dependent function types and applications. For function types, if the domain type is logical, it produces

![]() ${{\square}}$

for the resulting domain type and erases the codomain recursively. If the domain is a “normal” type (not logical and not an arity), it erases recursively both the domain and the codomain. Otherwise, the type is an arity and we add a new type variable into

${{\square}}$

for the resulting domain type and erases the codomain recursively. If the domain is a “normal” type (not logical and not an arity), it erases recursively both the domain and the codomain. Otherwise, the type is an arity and we add a new type variable into

![]() $\Gamma_e$

for the recursive call and append a variable name to the returned list. For an application, the procedure decomposes it into a head term and a (possibly empty) list of arguments using

$\Gamma_e$

for the recursive call and append a variable name to the returned list. For an application, the procedure decomposes it into a head term and a (possibly empty) list of arguments using

![]() ${\mathtt{decompose\_app}}{}$

. Then, it uses

${\mathtt{decompose\_app}}{}$

. Then, it uses

![]() ${\mathcal{E}_{head}^T}{}$

to erase the head and

${\mathcal{E}_{head}^T}{}$

to erase the head and

![]() $\mathcal{E}_{app}^T{}$

to process all the arguments. Note that in the Coq implementation, when we erase an application

$\mathcal{E}_{app}^T{}$

to process all the arguments. Note that in the Coq implementation, when we erase an application ![]() , we drop the arguments, if the head of the application is not an inductive or a constant. Other applied

, we drop the arguments, if the head of the application is not an inductive or a constant. Other applied ![]() construtors would correspond to a not well-formed application. In the cases not covered by the case analysis, we emit

construtors would correspond to a not well-formed application. In the cases not covered by the case analysis, we emit

![]() $\mathbb{T}$

.

$\mathbb{T}$

.

For example, the type of ![]() erases to the following.

erases to the following.

One of the advantages of implementing the extraction pipeline fully in Coq is that we can use certified programming techniques along with the verified meta-theory provided by MetaCoq. The actual type signatures of the functions in Figure 2 restrict the input to well-typed CIC terms. In the implementation, we are required to show that the reduction machinery we use does not break the well-typedness of terms. For that purpose, we use two results: the reduction function is sound with respect to the relational specification, and the subject reduction lemma, that is, reduction preserves typing.

The termination argument is given by a well-founded relation since erasure starts with

![]() $\beta\iota\zeta$

-reduction using the

$\beta\iota\zeta$

-reduction using the

![]() ${\mathtt{red}_{\beta\iota\zeta}}$

function and then later recurses on subterms of the reduced term. This reduction function is defined in MetaCoq also by using well-founded recursion. Due to the use of well-founded recursion, we write

${\mathtt{red}_{\beta\iota\zeta}}$

function and then later recurses on subterms of the reduced term. This reduction function is defined in MetaCoq also by using well-founded recursion. Due to the use of well-founded recursion, we write

![]() ${\mathcal{E}^T}{}$

as a single function in our formalisation by inlining the definitions of

${\mathcal{E}^T}{}$

as a single function in our formalisation by inlining the definitions of

![]() $\mathcal{E}_{app}^T{}$

and

$\mathcal{E}_{app}^T{}$

and

![]() ${\mathcal{E}_{head}^T}{}$

; this makes the well-foundedness argument easier. We extensively use the Equations Coq plugin (Sozeau & Mangin Reference Sozeau and Mangin2019) in our development to help manage the proof obligations related to well-typed terms and recursion.

${\mathcal{E}_{head}^T}{}$

; this makes the well-foundedness argument easier. We extensively use the Equations Coq plugin (Sozeau & Mangin Reference Sozeau and Mangin2019) in our development to help manage the proof obligations related to well-typed terms and recursion.

The difference with our previous erasure procedure for types given in Annenkov et al. (Reference Annenkov, Milo, Nielsen and Spitters2021) is twofold. First, we make the procedure total. That means that it does not fail in the cases when it hits a non-prenex type, instead, it tries to do its best or emits

![]() $\mathbb{T}$

if no further options are possible. That is, for rank2 from Section 5 we get the following type.

$\mathbb{T}$

if no further options are possible. That is, for rank2 from Section 5 we get the following type.

Clearly, the body of rank2 a f = f

![]() $\Box$

a cannot be well-typed, since the type of a is not

$\Box$

a cannot be well-typed, since the type of a is not

![]() $\mathbb{T}$

. However, in some situations, it is better to let the extraction produce some result that could be optimised later. For example, if we never used the f argument, it could be removed by optimisations leaving us with the definition whose that does not mention

$\mathbb{T}$

. However, in some situations, it is better to let the extraction produce some result that could be optimised later. For example, if we never used the f argument, it could be removed by optimisations leaving us with the definition whose that does not mention

![]() $\mathbb{T}$

.

$\mathbb{T}$

.

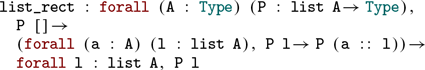

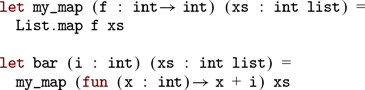

In particular, we have improved the handling of arities that makes it possible to extract programs defined in terms of elimination principles. For example one can define map in the following way: ![]() . Where list_rect is the dependent elimination principle for lists.

. Where list_rect is the dependent elimination principle for lists.

Clearly, the type of list_rect is too expressive for the target languages we consider. However, it is still possible to extract a well-typed term for the definition of map above. The extracted type of list_rect looks as follows.

Second, we have introduced an erasure procedure for type schemes. The procedure allows us to handle type aliases, that is, Coq definitions that when applied to some arguments return a type. Type aliases are used quite extensively in the standard library. For example, the standard finite maps FMaps contain definitions like ![]() . In

. In

![]() $\eta$

-expanded form it is a function that take a type and returns a type:

$\eta$

-expanded form it is a function that take a type and returns a type: ![]() . Without this extension, we would not be able to extract programmes that use such definitions.

. Without this extension, we would not be able to extract programmes that use such definitions.

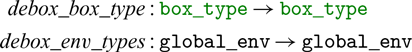

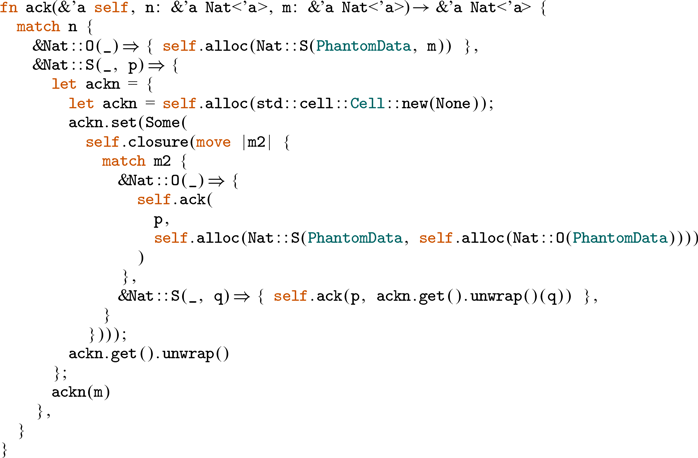

Definition 2 (Erasure for type schemes).

![]()

The erasure procedure for type schemes is given by two functions

![]() ${\mathcal{E}^{TS}}{}$

and

${\mathcal{E}^{TS}}{}$

and

![]() ${\mathcal{E}_\eta^{TS}}{}$

in Figure 3.

${\mathcal{E}_\eta^{TS}}{}$

in Figure 3.

Fig. 3. Erasure for type schemes.

The signatures of

![]() ${\mathcal{E}^{TS}}{}$

and

${\mathcal{E}^{TS}}{}$

and

![]() ${\mathcal{E}_\eta^{TS}}{}$

are similar to

${\mathcal{E}_\eta^{TS}}{}$

are similar to

![]() ${\mathcal{E}^T}{}$

but we also add a new context

${\mathcal{E}^T}{}$

but we also add a new context

![]() $\mathtt{ACtx}$

representing the type of a type scheme, which we call arity. So, for an arity

$\mathtt{ACtx}$

representing the type of a type scheme, which we call arity. So, for an arity

![]() $\forall (a : A) (b : B) \ldots (z : Z), \mathtt{Type}$

, we have

$\forall (a : A) (b : B) \ldots (z : Z), \mathtt{Type}$

, we have

![]() $\Gamma_a = [(a,A); (b,B);\ldots;(z,Z)]$

. The

$\Gamma_a = [(a,A); (b,B);\ldots;(z,Z)]$

. The

![]() ${\mathcal{E}^{TS}}{}$

function reduces the term and then, if it is a lambda-abstraction, looks at the result of

${\mathcal{E}^{TS}}{}$

function reduces the term and then, if it is a lambda-abstraction, looks at the result of

![]() ${\mathtt{flag\_of\_type}}$

for the domain type. If it is a sort (or, more generally, an arity) it adds a type variable. If the reduced term is not a lambda abstraction, we know that it requires

${\mathtt{flag\_of\_type}}$

for the domain type. If it is a sort (or, more generally, an arity) it adds a type variable. If the reduced term is not a lambda abstraction, we know that it requires

![]() $\eta$

-expansion, since its type is

$\eta$

-expansion, since its type is

![]() $\forall (a' : A'), t$

. Therefore, we call

$\forall (a' : A'), t$

. Therefore, we call

![]() ${\mathcal{E}_\eta^{TS}}{}$

with the arity context

${\mathcal{E}_\eta^{TS}}{}$

with the arity context

![]() $(a',A') :: \Gamma_a$

. In

$(a',A') :: \Gamma_a$

. In

![]() ${\mathcal{E}_\eta^{TS}}{}$

, we use the

${\mathcal{E}_\eta^{TS}}{}$

, we use the ![]() operation that increments the indices of all variables in t by one. The term

operation that increments the indices of all variables in t by one. The term ![]() denotes an application of a term

denotes an application of a term

![]() $\mathit{{t}}$

with incremented variable indices to a variable with index

$\mathit{{t}}$

with incremented variable indices to a variable with index

![]() ${\overline{0}}$

A simple example of a type scheme is the following:

${\overline{0}}$

A simple example of a type scheme is the following:

It erases to a pair consisting of a list of type variables and a ![]() :

:

Type schemes that use dependent types can also be erased. For example, one can create an abbreviation for the type of sized lists.

which gives us the following type alias

where sig corresponds to the dependent pair type in Coq given by the notation ![]() . The erased type can be further optimised by removing the occurrences of

. The erased type can be further optimised by removing the occurrences of

![]() ${\square}$

and irrelevant type variables.

${\square}$

and irrelevant type variables.

The two changes described above bring our implementation closer to the standard extraction of Coq and allow for more programs to be extracted in comparison with our previous work. Returning

![]() $\mathbb{T}$

instead of failing creates more opportunities for target languages that support unsafe type casts.

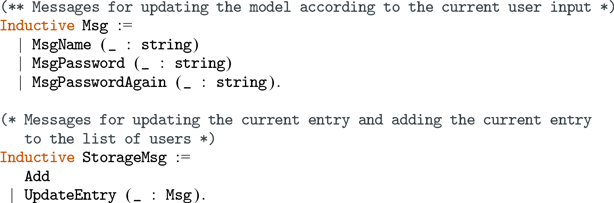

$\mathbb{T}$