1. Introduction

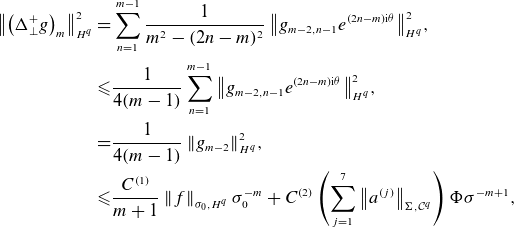

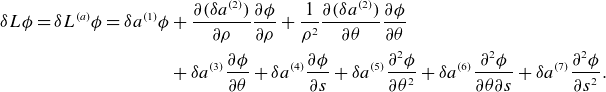

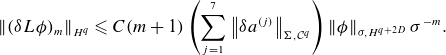

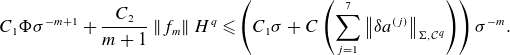

The design of stellarators is a computationally intensive task. The most basic problem in stellarator design, that of computing the magnetic field, requires solving the steady-state magnetohydrostatics (MHS) equations. These equations are difficult to solve for reasons familiar from many problems in physics: they are nonlinear and three-dimensional. Popular MHS equilibrium solvers include VMEC (Hirshman 1983), DESC (Dudt & Kolemen 2020) and SPEC (Hudson et al. 2012), all of which take seconds to minutes to compute a single equilibrium. Beyond equilibrium solving, there are potentially many other stellarator objectives that are expensive to compute, with plasma stability metrics being a major example. When optimising stellarators, the costs of equilibrium and objective solving can limit the speed of the overall design process. This, in combination with the high dimensionality of specifying three-dimensional (3-D) fields, motivates a need for simpler alternatives.

Recently, the near-axis expansion (Mercier 1964; Solov’ev & Shafranov 1970) has gained traction as an alternative to full 3-D MHS solvers. The near-axis expansion works by asymptotically expanding all of the relevant plasma variables (such as magnetic coordinates, pressure, rotational transform and plasma current) in the distance from the magnetic axis, which is assumed to be small relative to a characteristic magnetic scale length. The resulting equations are a hierarchy of one-dimensional ordinary differential equations (ODEs), which can be solved orders of magnitude faster than 3-D equilibria. This allows one to quickly find large numbers of optimised stellarators (Landreman 2022; Giuliani 2024), something that was previously unavailable to the stellarator community.

In addition to the speed, the near-axis expansion has other benefits. For instance, Garren & Boozer (1991) showed that quasisymmetry imposes more constraints than free parameters in the expansion, leading to the conjecture that non-axisymmetric but perfectly quasisymmetric stellarators cannot exist. Many objectives have been defined and computed for the near-axis expansion, including quasisymmetry (Landreman & Sengupta 2019), quasi-isodynamicity (Mata et al. 2022), isoprominence (Burby et al. 2023), and Mercier and magnetic-well conditions for stability (Landreman & Jorge 2020; Kim et al. 2021). There is evidence that other higher-order effects such as ballooning and linear gyrokinetic stability could be investigated as well (Jorge & Landreman 2020). The near-axis expansion has also been combined with a type of quadratic flux minimising surfaces and coil optimisation to create free-field optimised quasi-axisymmetric (QA) equilibria (Giuliani 2024). In sum, the connection among easily expressed objectives, a relatively low-dimensional equilibrium description and fast computation has led to the increased use of the near-axis expansion.

However, the near-axis expansion is not without drawbacks. The primary drawback is fundamental: the expansion has limited accuracy far from the axis. For instance, in the ‘far-axis’ regime, there can be large errors in the magnetic shear and magnetic surfaces can self-intersect (Landreman 2022). The paper by Jorge & Landreman (2020) also indicates that higher-order terms, such as magnetic curvature terms, may be needed for stability. Unfortunately, attempts to use higher-order terms have resulted in divergent asymptotic series, limiting the accuracy to small plasma volumes. Most series go to first, second or sometimes third order in the distance from the axis in the relevant quantities, with any more terms typically reducing accuracy rather than improving it. Therefore, if we want to include more physics objectives over larger volumes in the near-axis expansion, we must overcome the issue of series divergence.

Unfortunately, the issue of divergence is confounded by many of the assumptions that can be incorporated into the near-axis expansion. The most extreme case is that of QA stellarators, where it has been shown that the system of equations for quasi-axisymmetry is overdetermined beyond third order in the expansion. Obviously, unless one relaxes the problem (e.g. via anisotropic pressure; Rodríguez & Bhattacharjee 2021), one cannot generally ask for a convergent QA near-axis expansion in such a circumstance. In the simpler case of non-quasisymmetric stellarators with smooth pressure gradients and nested surfaces, it is still unknown whether there are non-axisymmetric solutions to MHS (Grad 1967; Constantin et al. 2021a). Recent work has found that perturbing for small force (Constantin et al. 2021b) or non-flat metrics (Cardona et al. 2024) allows for integrable solutions, but currently, there is no guarantee of solutions of MHS, let alone convergent asymptotic expansions.

So, to begin the task of building convergent numerical methods for the near-axis expansion, we focus on a problem we know is solvable: Laplace’s equation for vacuum magnetic potentials, following Jorge et al. (2020). This can be solved in direct (Mercier) coordinates (Mercier 1964) with no assumption of nested surfaces. Additionally, because solutions of Laplace’s equation are real analytic, there exist near-axis expansions of the equation that converge within a neighbourhood of the axis. Despite these guarantees, even the near-axis expansion of Laplace’s equation diverges.

In this paper, we show that the vacuum near-axis expansion diverges for a reason: Laplace’s equation as a near-axis expansion is ill-posed (§ 3, following background in § 2). To address this issue, we introduce a small regularisation term to Laplace’s equation and expand to find a regularised near-axis expansion. We do this by including a viscosity term to Laplace’s equation that damps the highly oscillatory unstable modes responsible for the ill-posedness. By appropriately bounding the input of the near-axis expansion in a Sobolev norm, we prove that this term results in a uniformly converging near-axis expansion within a neighbourhood of the axis.

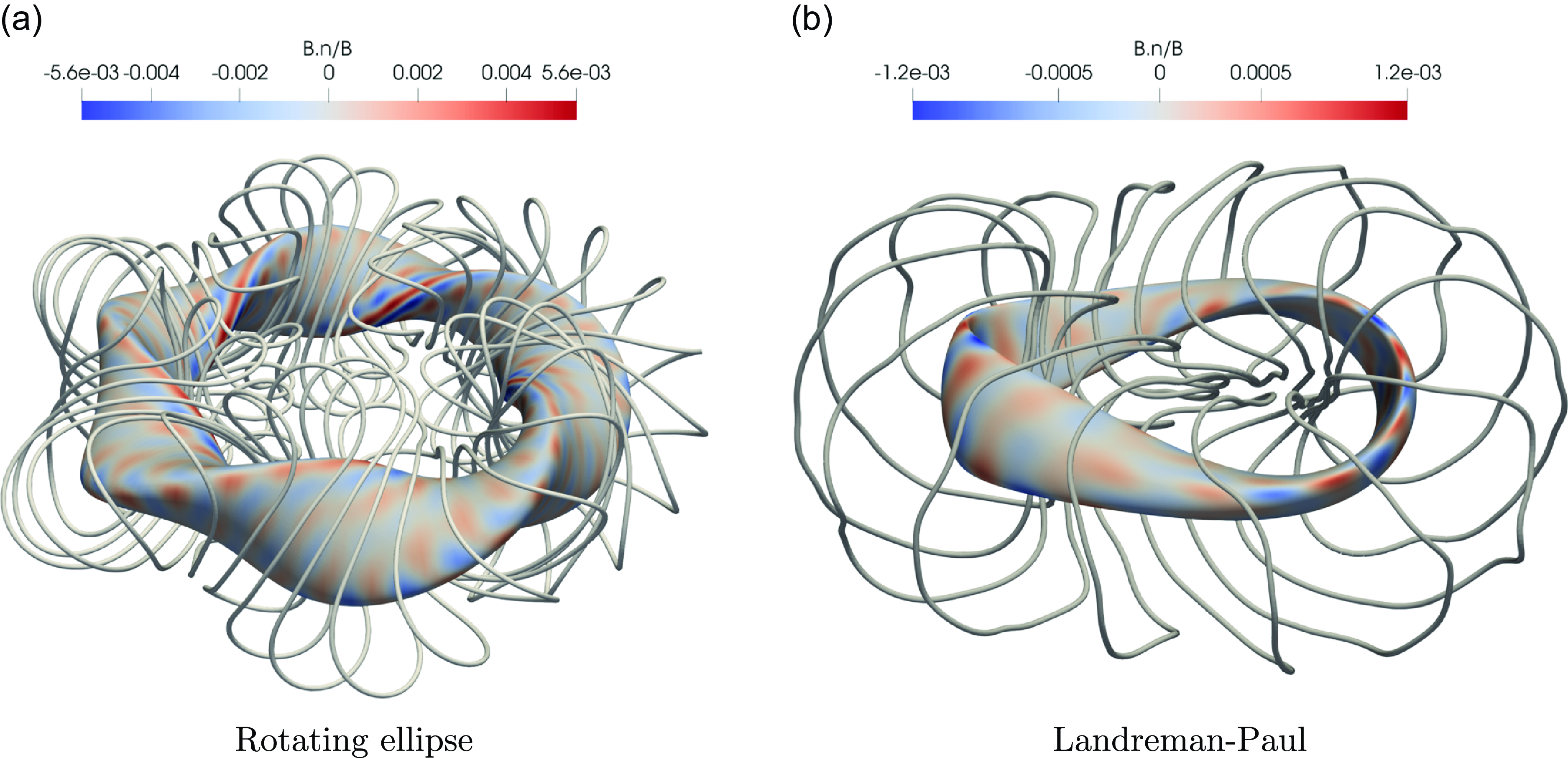

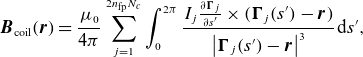

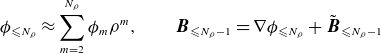

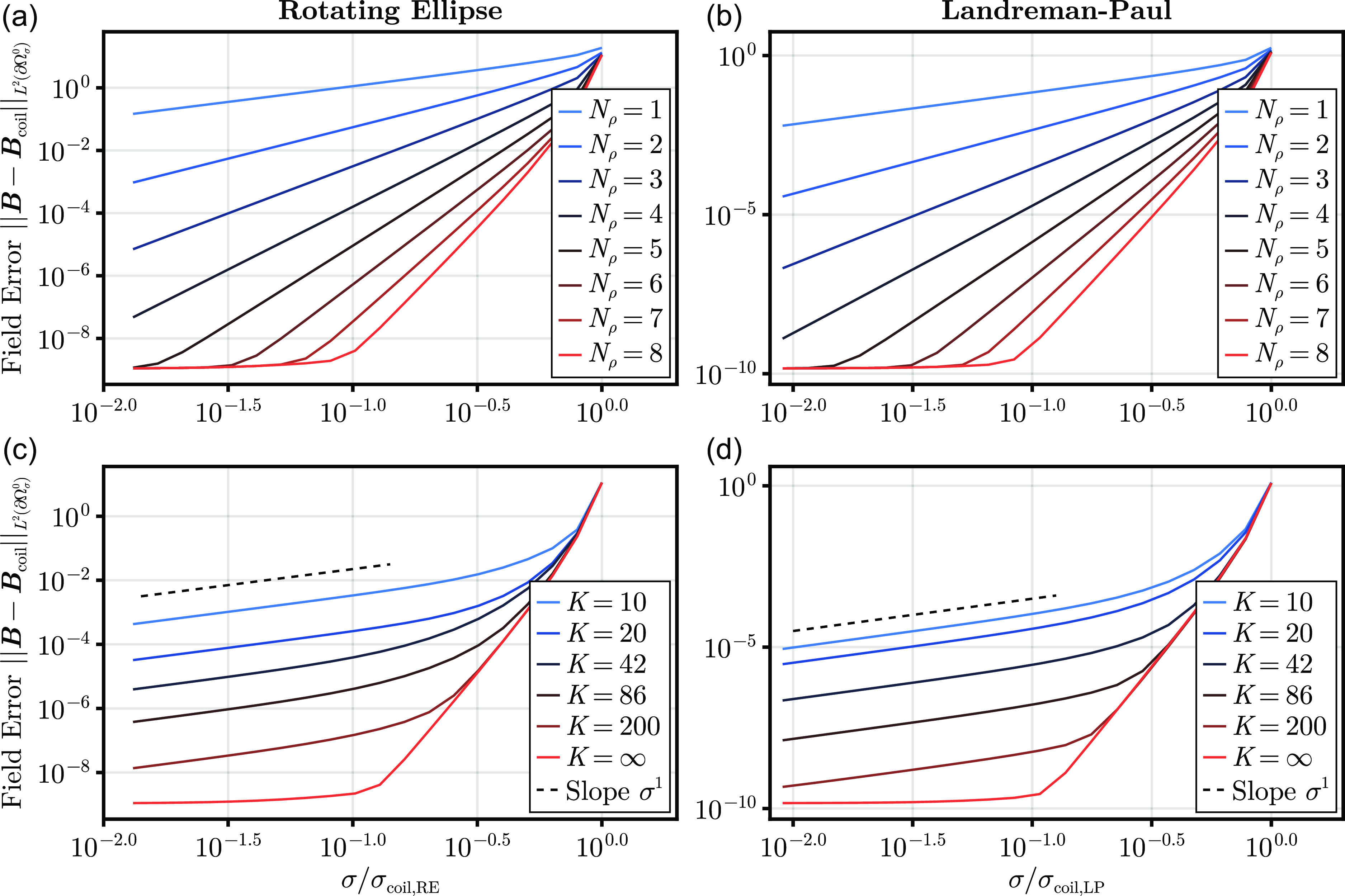

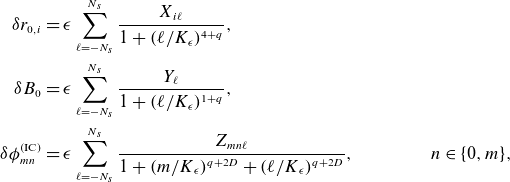

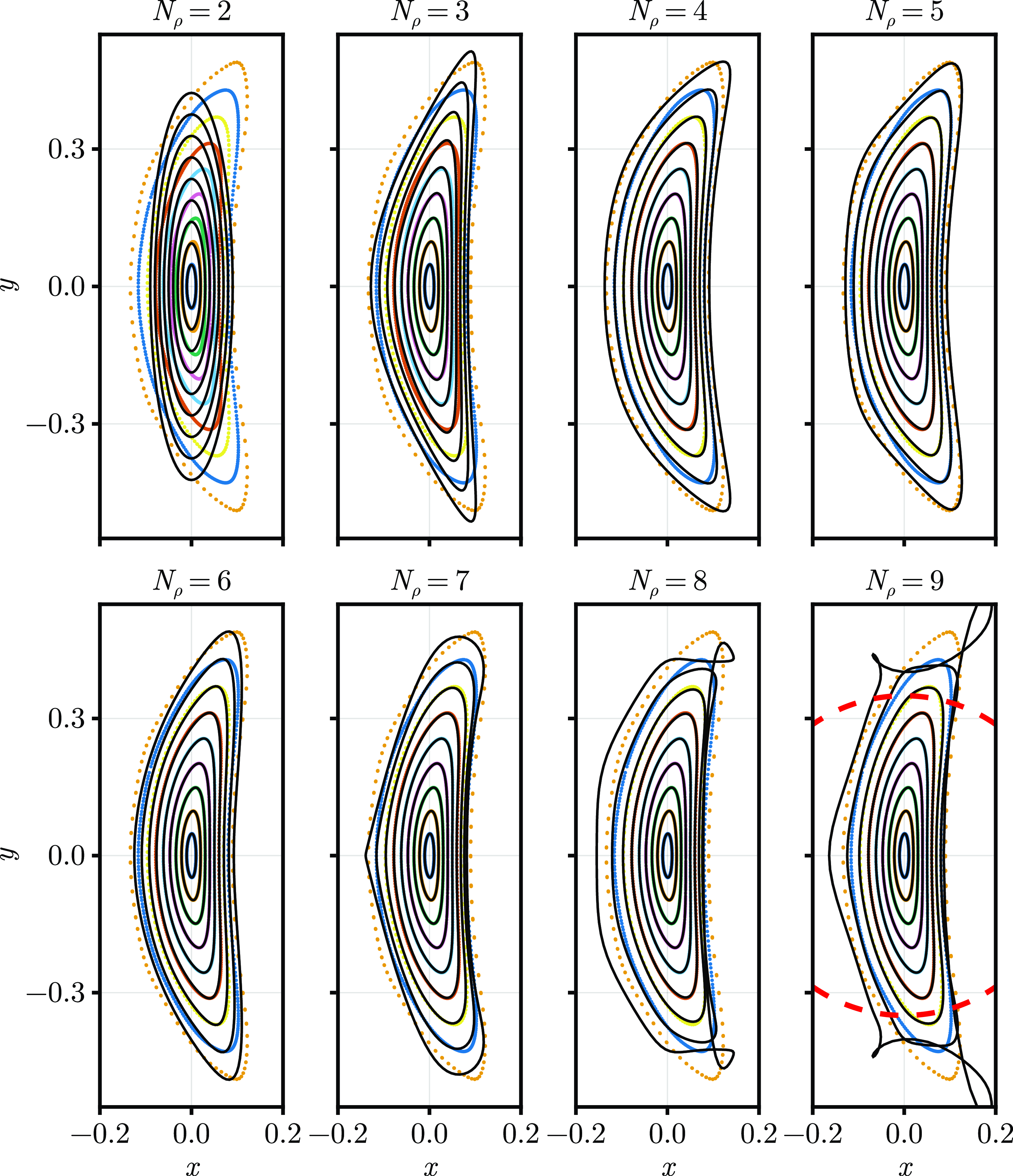

Following the theory, we describe a pseudo-spectral method for finding solutions to the near-axis expansion to arbitrary order in § 4. In § 5, we use the numerical method to show two examples of high-order near-axis expansions: the rotating ellipse and Landreman–Paul (Landreman & Paul 2022). We find that the near-axis expansion magnetic field, rotational transform and magnetic surfaces can converge accurately near the axis for unperturbed initial data. The region of convergence is observed to be dictated by the distance from the magnetic axis to the coils. Then, by perturbing the on-axis inputs, we show that the regularised expansion obeys Laplace’s equation more accurately farther from the axis. Finally, we conclude in § 6.

2. Background

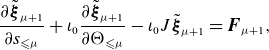

In this section, we introduce the near-axis expansion for the vacuum field equilibrium problem. This presentation follows closely that of Jorge et al. (2020). We begin with a discussion of the geometry of the near-axis problem (§ 2.1), introduce the near-axis expansion (§ 2.2), define the magnetic field problem (§ 2.3) and finally discuss finding straight field-line magnetic coordinates (§ 2.4). For a fuller discussion of the near-axis expansion to all orders, including with pressure gradients, we recommend the papers by Jorge et al. (2020) and Sengupta et al. (2024). For ease of reference, we have summarised the equations in the background in box (2.30) for the magnetic field and box (2.60) for magnetic coordinates.

2.1. Near-axis geometry

We define a magnetic axis as a $C^\infty$ closed curve $\boldsymbol{r}_0 : \mathbb{T} \to \mathbb{R}^3$ with $\boldsymbol{r}_0' \neq 0$ and a non-zero tangent magnetic field (see § 2.3 for details about the field). We define a near-axis domain about the axis with radius $\sigma$ as

We note that the assumption that the axis is infinitely differentiable is necessary for the near-axis expansion to formally go to arbitrary order, and we will eventually reduce the required regularity for the inputs of the regularised expansion, summarised in box (3.30).

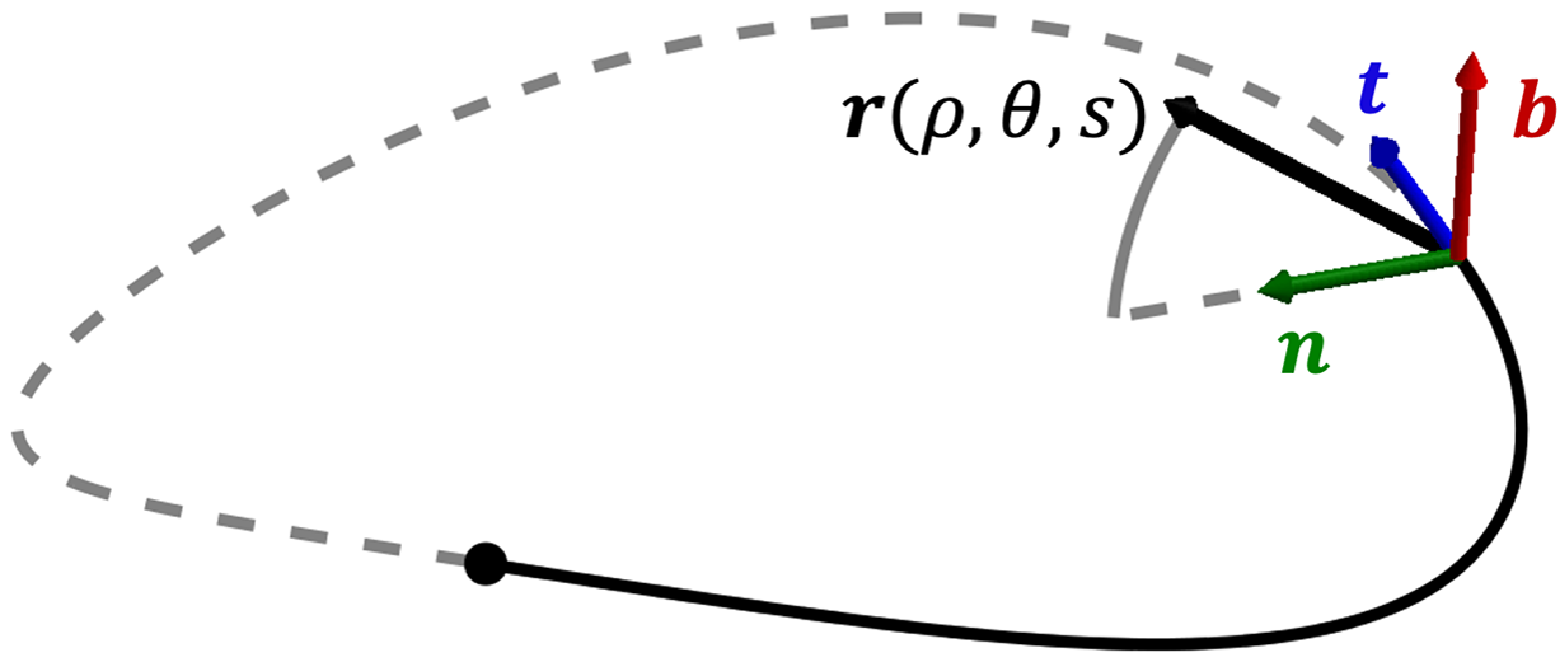

Figure 1. Schematic of the direct near-axis Frenet–Serret coordinate frame.

We define a direct coordinate system

![]() $\boldsymbol{r} : \Omega _\sigma ^0 \to \Omega _\sigma$

where

$\boldsymbol{r} : \Omega _\sigma ^0 \to \Omega _\sigma$

where

![]() $(\rho ,\theta ,s) \in \Omega _\sigma ^0 = [0,\sigma ) \times \mathbb{T}^2$

is the solid torus as (see figure 1)

$(\rho ,\theta ,s) \in \Omega _\sigma ^0 = [0,\sigma ) \times \mathbb{T}^2$

is the solid torus as (see figure 1)

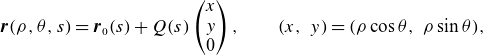

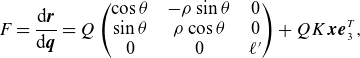

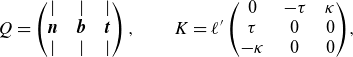

\begin{equation} \boldsymbol{r}(\rho , \theta , s) = \boldsymbol{r}_0(s) + Q(s) \begin{pmatrix} x \\ y \\ 0 \end{pmatrix}, \qquad (x, \ y) = (\rho \cos \theta , \ \rho \sin \theta ), \end{equation}

\begin{equation} \boldsymbol{r}(\rho , \theta , s) = \boldsymbol{r}_0(s) + Q(s) \begin{pmatrix} x \\ y \\ 0 \end{pmatrix}, \qquad (x, \ y) = (\rho \cos \theta , \ \rho \sin \theta ), \end{equation}

where $Q$ is an orthonormal basis for the local coordinates at the axis with the tangent vector in the third column, i.e. $\boldsymbol{t} = \boldsymbol{r}_0' / \ell ' = Q\boldsymbol{e}_3$, where we assume $\ell ' = \left \lVert \boldsymbol r_0' \right \rVert \gt 0$. Both the $\boldsymbol{x} = (x,y,s)$ and the $\boldsymbol{q} = (\rho , \theta ,s)$ coordinate frames are useful, as $\boldsymbol{x}$ is non-singular with respect to the coordinate transformation, while $\boldsymbol{q}$ diagonalises the near-axis expansion operator. To perform calculus in the $\boldsymbol{q}$ basis, we require the induced metric from $\mathbb{R}^3$. For this, we first compute the coordinate derivative

\begin{equation} F = \frac {\mathrm{d} \boldsymbol{r}}{\mathrm{d} \boldsymbol{q}} = Q \left(\begin{array}{c@{\quad}c@{\quad}c} \cos \theta & - \rho \sin \theta & 0\\ \sin \theta & \rho \cos \theta & 0\\ 0 & 0 & \ell ' \end{array}\right) + Q K \boldsymbol{x} \boldsymbol{e}_3^T, \end{equation}

where $K$ is an antisymmetric matrix determining the derivative of $Q$ in the near-axis basis $Q'(s) = Q K$. Using this matrix, the metric is computed as

\begin{equation} g = F^T F. \end{equation}

For the numerical examples in this paper, we specifically consider the Frenet–Serret frame, meaning the local basis $Q$ and its derivative are defined by

\begin{equation} Q = \left(\begin{array}{c@{\quad}c@{\quad}c} | & | & | \\ \boldsymbol{n} & \boldsymbol{b} & \boldsymbol{t} \\ | & | & | \end{array}\right), \qquad K = \ell ' \left(\begin{array}{c@{\quad}c@{\quad}c} 0 & -\tau & \kappa \\ \tau & 0 & 0 \\ -\kappa & 0 & 0 \end{array}\right)\!, \end{equation}

where

![]() $\kappa = \left \lVert \boldsymbol{t}' \right \rVert /\ell '$

is the axis curvature,

$\kappa = \left \lVert \boldsymbol{t}' \right \rVert /\ell '$

is the axis curvature,

![]() $\boldsymbol{n} = \left \lVert \boldsymbol{t}' \right \rVert /(\kappa \ell ')$

is the normal vector,

$\boldsymbol{n} = \left \lVert \boldsymbol{t}' \right \rVert /(\kappa \ell ')$

is the normal vector,

![]() $\boldsymbol{b} = \boldsymbol{t} \times \boldsymbol{n}$

is the binormal vector and

$\boldsymbol{b} = \boldsymbol{t} \times \boldsymbol{n}$

is the binormal vector and

![]() $\tau = -\left \lVert \boldsymbol{b}' \right \rVert /\ell '$

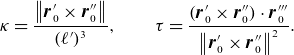

is the axis torsion. Alternative forms of the curvature and torsion are

$\tau = -\left \lVert \boldsymbol{b}' \right \rVert /\ell '$

is the axis torsion. Alternative forms of the curvature and torsion are

\begin{equation} \kappa = \frac {\left \lVert \boldsymbol{r}_0' \times \boldsymbol{r}_0'' \right \rVert }{(\ell ')^3}, \qquad \tau = \frac {(\boldsymbol{r}_0' \times \boldsymbol{r}_0'') \cdot \boldsymbol{r}_0'''}{\left \lVert \boldsymbol{r}_0' \times \boldsymbol{r}_0'' \right \rVert ^2}. \end{equation}

\begin{equation} \kappa = \frac {\left \lVert \boldsymbol{r}_0' \times \boldsymbol{r}_0'' \right \rVert }{(\ell ')^3}, \qquad \tau = \frac {(\boldsymbol{r}_0' \times \boldsymbol{r}_0'') \cdot \boldsymbol{r}_0'''}{\left \lVert \boldsymbol{r}_0' \times \boldsymbol{r}_0'' \right \rVert ^2}. \end{equation}
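The derivative-based forms of the curvature and torsion are convenient to evaluate numerically once $\boldsymbol{r}_0'$, $\boldsymbol{r}_0''$ and $\boldsymbol{r}_0'''$ are available on a grid. The following is a minimal sketch in Python with NumPy, not the implementation used for the examples in this paper. The function name `curvature_torsion` and the helix test curve are hypothetical; the helix is not closed and so is not a valid magnetic axis, but its curvature $a/(a^2+c^2)$ and torsion $c/(a^2+c^2)$ are known in closed form.

```python
import numpy as np

def curvature_torsion(r1, r2, r3):
    """Curvature and torsion from the first three parameter derivatives of r_0.

    r1, r2, r3: arrays of shape (N, 3) holding r_0', r_0'', r_0''' at N points.
    """
    cross = np.cross(r1, r2)                    # r_0' x r_0''
    cross_norm = np.linalg.norm(cross, axis=1)
    ell_prime = np.linalg.norm(r1, axis=1)      # l' = |r_0'|
    kappa = cross_norm / ell_prime**3
    tau = np.einsum("ij,ij->i", cross, r3) / cross_norm**2
    return kappa, tau

# Hypothetical test curve: helix r_0(s) = (a cos s, a sin s, c s).
a, c = 2.0, 0.5
s = np.linspace(0.0, 2.0 * np.pi, 64, endpoint=False)
zeros = np.zeros_like(s)
r1 = np.stack([-a * np.sin(s), a * np.cos(s), c + zeros], axis=1)
r2 = np.stack([-a * np.cos(s), -a * np.sin(s), zeros], axis=1)
r3 = np.stack([a * np.sin(s), -a * np.cos(s), zeros], axis=1)

kappa, tau = curvature_torsion(r1, r2, r3)
```

Because the torsion formula divides by $\left \lVert \boldsymbol{r}_0' \times \boldsymbol{r}_0'' \right \rVert ^2$, the sketch fails wherever the curvature vanishes, which mirrors the restriction to $\kappa \gt 0$ for the Frenet–Serret frame.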

For the Frenet–Serret coordinate system to be well-defined and non-singular on $\Omega _\sigma ^0$, we require $\sigma ^{-1} \gt \kappa \gt 0$. In particular, no straight segments are allowed in the Frenet–Serret frame, disallowing quasi-isodynamic (QI) stellarators (Mata et al. 2022). An alternative choice of axis coordinates that allows for straight segments is Bishop’s coordinates (Bishop 1975; Duignan & Meiss 2021).

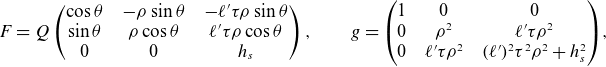

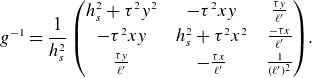

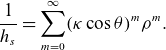

Replacing the Frenet–Serret basis into (2.3), we obtain

\begin{equation} F = Q \left(\begin{array}{c@{\quad}c@{\quad}c} \cos \theta & - \rho \sin \theta & -\ell ' \tau \rho \sin \theta \\ \sin \theta & \rho \cos \theta & \ell ' \tau \rho \cos \theta \\ 0 & 0 & h_s \end{array}\right),\qquad g = \left(\begin{array}{c@{\quad}c@{\quad}c} 1 & 0 & 0 \\ 0 & \rho ^2 & \ell ' \tau \rho ^2 \\ 0 & \ell ' \tau \rho ^2 & (\ell ')^2\tau ^2 \rho ^2 + h_s^2 \end{array}\right)\!, \end{equation}

\begin{equation} F = Q \left(\begin{array}{c@{\quad}c@{\quad}c} \cos \theta & - \rho \sin \theta & -\ell ' \tau \rho \sin \theta \\ \sin \theta & \rho \cos \theta & \ell ' \tau \rho \cos \theta \\ 0 & 0 & h_s \end{array}\right),\qquad g = \left(\begin{array}{c@{\quad}c@{\quad}c} 1 & 0 & 0 \\ 0 & \rho ^2 & \ell ' \tau \rho ^2 \\ 0 & \ell ' \tau \rho ^2 & (\ell ')^2\tau ^2 \rho ^2 + h_s^2 \end{array}\right)\!, \end{equation}

where

The local volume ratio is given by

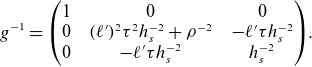

and the inverse metric is

\begin{equation} g^{-1} = \left(\begin{array}{c@{\quad}c@{\quad}c} 1 & 0 & 0 \\ 0 & (\ell ')^2 \tau ^2 h_s^{-2} + \rho ^{-2} & -\ell ' \tau h_s^{-2} \\ 0 & -\ell ' \tau h_s^{-2} & h_s^{-2} \end{array}\right)\!. \end{equation}

\begin{equation} g^{-1} = \left(\begin{array}{c@{\quad}c@{\quad}c} 1 & 0 & 0 \\ 0 & (\ell ')^2 \tau ^2 h_s^{-2} + \rho ^{-2} & -\ell ' \tau h_s^{-2} \\ 0 & -\ell ' \tau h_s^{-2} & h_s^{-2} \end{array}\right)\!. \end{equation}
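As a sanity check on the Frenet–Serret metric, its volume ratio and its inverse, the closed forms can be compared against a direct numerical evaluation of $F^T F$ at a sample point. The sketch below is illustrative only. It assumes $h_s = \ell '(1 - \kappa \rho \cos \theta )$, which is what the third column of $F$ gives when $K$ is applied to $(x, y, 0)^T$, and it assumes the volume ratio $\rho h_s$; the sample values are arbitrary and all variable names are hypothetical.

```python
import numpy as np

# Arbitrary sample values (hypothetical, chosen so that kappa * rho < 1).
rho, theta = 0.3, 0.7
ell_p, kappa, tau = 1.2, 0.8, -0.4

# Assumed scale factor, read off from the third column of F.
h_s = ell_p * (1.0 - kappa * rho * np.cos(theta))

# M is the matrix multiplying Q in F = Q M for the Frenet-Serret frame.
M = np.array([
    [np.cos(theta), -rho * np.sin(theta), -ell_p * tau * rho * np.sin(theta)],
    [np.sin(theta), rho * np.cos(theta), ell_p * tau * rho * np.cos(theta)],
    [0.0, 0.0, h_s],
])

# Induced metric: g = F^T F = M^T Q^T Q M = M^T M because Q is orthonormal.
g = M.T @ M

# Closed-form metric and inverse metric as written in the text.
g_closed = np.array([
    [1.0, 0.0, 0.0],
    [0.0, rho**2, ell_p * tau * rho**2],
    [0.0, ell_p * tau * rho**2, (ell_p * tau * rho) ** 2 + h_s**2],
])
g_inv_closed = np.array([
    [1.0, 0.0, 0.0],
    [0.0, (ell_p * tau / h_s) ** 2 + rho**-2, -ell_p * tau / h_s**2],
    [0.0, -ell_p * tau / h_s**2, h_s**-2],
])

# Local volume ratio from the numerical metric.
sqrt_det_g = np.sqrt(np.linalg.det(g))
```

The basis $Q$ never needs to be constructed here, since it cancels in $F^T F$; only the scalar axis data $\ell '$, $\kappa$ and $\tau$ enter the metric.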

To find metrics associated with the more general coordinate system (2.2), we consider transformations of the form

![]() $(\rho ,\theta ,s) \mapsto (\rho ,\omega (\theta ,s),s)$

, where

$(\rho ,\theta ,s) \mapsto (\rho ,\omega (\theta ,s),s)$

, where

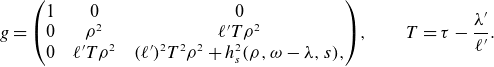

![]() $\omega (\theta ,s) =\theta + \lambda (s)$

. This transformation rotates the orthonormal frame, changing the metric to

$\omega (\theta ,s) =\theta + \lambda (s)$

. This transformation rotates the orthonormal frame, changing the metric to

\begin{equation} g = \left(\begin{array}{c@{\quad}c@{\quad}c} 1 & 0 & 0 \\ 0 & \rho ^2 & \ell ' T \rho ^2 \\ 0 & \ell ' T \rho ^2 & (\ell ')^2T^2 \rho ^2 + h_s^2(\rho ,\omega -\lambda ,s), \end{array}\right)\!, \qquad T = \tau - \frac {\lambda '}{\ell '}. \end{equation}

\begin{equation} g = \left(\begin{array}{c@{\quad}c@{\quad}c} 1 & 0 & 0 \\ 0 & \rho ^2 & \ell ' T \rho ^2 \\ 0 & \ell ' T \rho ^2 & (\ell ')^2T^2 \rho ^2 + h_s^2(\rho ,\omega -\lambda ,s), \end{array}\right)\!, \qquad T = \tau - \frac {\lambda '}{\ell '}. \end{equation}

In this way, the general set of near-axis frames can be represented by a simple replacement of $\tau$ with $T$. A special case of this transformation is when $T=0$, yielding (Mercier 1964)

\begin{equation} \lambda ' = \ell ' \tau . \end{equation}

In this coordinate frame, the metric becomes diagonal: $g = \textrm{Diag}(1, \rho ^2, h_s^2(\rho ,\omega -\lambda ,s))$. The fact that $g$ is diagonalised is convenient for theoretical manipulations, but variables expressed in terms of $\omega$ are multivalued for curves with non-integer total torsion. This unfortunate consequence is important for numerical methods, as Fourier series cannot be used in $s$ and additional consistency requirements are necessary. This is part of the motivation for using the Frenet–Serret frame for the numerical examples herein.

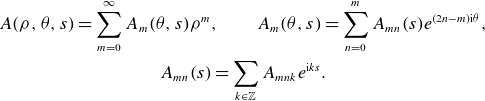

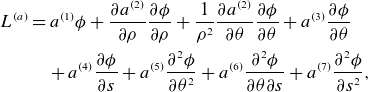

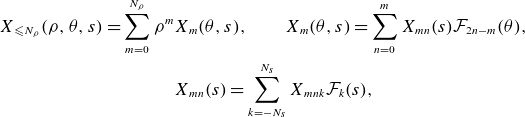

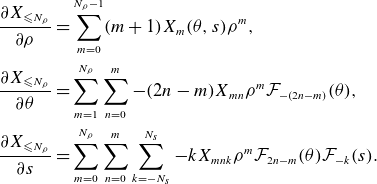

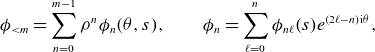

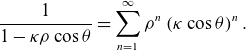

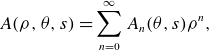

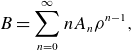

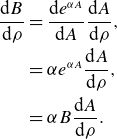

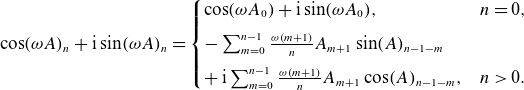

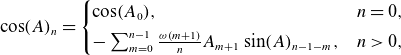

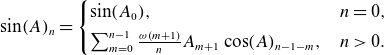

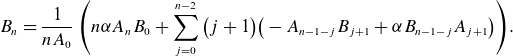

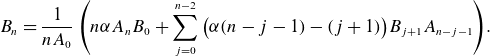

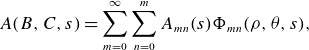

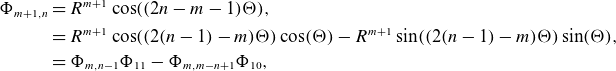

2.2. Near-axis expansion

Now, we consider expansions of functions $A \in C^\infty (\Omega _\sigma ^0)$ about the magnetic axis. We formally expand in small distances from the axis $\rho \ll \min \kappa ^{-1}$ as

\begin{gather} A(\rho , \theta , s) = \sum _{m=0}^{\infty }A_m(\theta ,s) \rho ^m, \qquad A_m(\theta , s) = \sum _{n=0}^m A_{mn}(s) e^{(2n-m){\mathrm{i}}\theta }, \nonumber \\ A_{mn}(s) = \sum _{k\in \mathbb{Z}} A_{mnk}e^{{\mathrm{i}} k s}. \end{gather}

This expansion is not guaranteed to converge anywhere for

![]() $A\in C^\infty$

, but it is asymptotic to

$A\in C^\infty$

, but it is asymptotic to

![]() $A$

near the axis, i.e.

$A$

near the axis, i.e.

where we define

![]() $A_{\lt m}$

as the partial sum

$A_{\lt m}$

as the partial sum

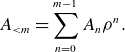

\begin{equation} A_{\lt m} = \sum _{n=0}^{m-1} A_n \rho ^n. \end{equation}

\begin{equation} A_{\lt m} = \sum _{n=0}^{m-1} A_n \rho ^n. \end{equation}

In defining magnetic coordinates, we also find it convenient to expand

![]() $A$

in

$A$

in

![]() $\boldsymbol x$

as

$\boldsymbol x$

as

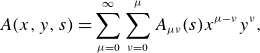

\begin{equation} A(x,y,s) = \sum _{\mu =0}^{\infty } \sum _{\nu = 0}^\mu A_{\mu \nu }(s) x^{\mu -\nu } y^\nu , \end{equation}

\begin{equation} A(x,y,s) = \sum _{\mu =0}^{\infty } \sum _{\nu = 0}^\mu A_{\mu \nu }(s) x^{\mu -\nu } y^\nu , \end{equation}

where we use Latin indices for the $\boldsymbol{q}$ frame and Greek indices for the $\boldsymbol{x}$ frame. If we require $A$ to be real-analytic on $\Omega _\sigma ^0$, there additionally exists a $\sigma ' \lt \sigma$ so that the asymptotic series converges uniformly on $\Omega _{\sigma '}^0$ (this does not necessarily extend to all of $\Omega _\sigma ^0$).
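The two expansions are linked by the fact that a monomial $x^{\mu -\nu } y^\nu = \rho ^\mu \cos ^{\mu -\nu }\theta \sin ^\nu \theta$ contains only the poloidal Fourier modes $e^{(2n-\mu ){\mathrm{i}}\theta }$ with $0 \leq n \leq \mu$, which is why the sum over $n$ in the $\boldsymbol{q}$-frame expansion is finite at each order. The short Python sketch below checks this numerically with a discrete Fourier transform; the helper name `theta_modes`, the grid size and the tolerance are illustrative choices rather than anything prescribed in this paper.

```python
import numpy as np

def theta_modes(mu, nu, n_theta=64, tol=1e-12):
    """Integer Fourier modes present in cos(theta)^(mu - nu) * sin(theta)^nu."""
    theta = 2.0 * np.pi * np.arange(n_theta) / n_theta
    values = np.cos(theta) ** (mu - nu) * np.sin(theta) ** nu
    coeffs = np.fft.fft(values) / n_theta
    # fftfreq with spacing 1/n_theta returns the integer wavenumbers.
    wavenumbers = np.fft.fftfreq(n_theta, d=1.0 / n_theta).astype(int)
    return sorted(int(k) for k in wavenumbers[np.abs(coeffs) > tol])

# Modes of x^2 y (mu = 3, nu = 1) and of x^2 (mu = 2, nu = 0).
modes_cubic = theta_modes(3, 1)
modes_quadratic = theta_modes(2, 0)
```

For $x^2 y$ the detected modes are $\pm 1$ and $\pm 3$, and for $x^2$ they are $0$ and $\pm 2$, in each case a subset of $\{2n - \mu \}$ with the same parity as $\mu$.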

Throughout this paper, we attempt to minimise the number of complicated summation formulae resulting from the near-axis expansion (NAE). For instance, if we have two functions $A, B\in C^{\infty }$ and we want the $m$th component of the series of $C=AB$, we will write $C_{m} = (AB)_{m}$, rather than

\begin{equation} C_{mn} = \sum _{j=0}^{m} \ \sum _{k=\max (0,\, n-m+j)}^{\min (j,\, n)} A_{jk} B_{m-j,\, n-k}. \end{equation}

As expressions become increasingly complicated, this notation provides a concise description of the mathematics involved. In Appendix B, we define a number of relevant operations on series that the interested reader can use to expand the expressions within this paper. In § 4, we discuss how this is similarly convenient for the purposes of programming NAE operations. Rather than implementing residuals via complicated summation formulae, the operations in Appendix B are called, allowing for a simple framework for developing new code.
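To illustrate how the condensed notation $(AB)_m$ maps onto a reusable routine, the following Python sketch implements the truncated Cauchy product $(AB)_m = \sum _{n=0}^{m} A_n B_{m-n}$ for series whose coefficients $A_m(\theta )$ are stored as samples on a $\theta$ grid. It is a hypothetical stand-in and not one of the operations defined in Appendix B; the function name `series_product` and the array layout are assumptions made for this example.

```python
import numpy as np

def series_product(A, B):
    """Coefficients (AB)_m = sum_{n=0}^m A_n B_{m-n}, truncated at the shorter input.

    A, B: arrays of shape (M, N_theta); row m holds the order-m coefficient
    sampled on a common theta grid.
    """
    M = min(A.shape[0], B.shape[0])
    C = np.zeros((M,) + A.shape[1:], dtype=np.result_type(A, B))
    for m in range(M):
        for n in range(m + 1):
            C[m] += A[n] * B[m - n]
    return C

# Example: A = 1 + rho cos(theta), so A^2 = 1 + 2 rho cos(theta) + rho^2 cos(theta)^2.
theta = 2.0 * np.pi * np.arange(16) / 16
A = np.stack([np.ones_like(theta), np.cos(theta), np.zeros_like(theta)])
C = series_product(A, A)
```

Storing each coefficient on a grid keeps the product pointwise in $\theta$, so residuals built from such calls avoid explicit index bookkeeping over Fourier modes.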

An important exception to the general rule of condensing notation is in defining any linear operators that must be inverted through the course of an asymptotic expansion. Detailed understanding of such operators is necessary for both numerical implementation and analysis of the series.

2.3. Vacuum fields

In the steady state, the vacuum magnetic field $\boldsymbol{B}\in C^\infty (\Omega _\sigma ^0)$ satisfies

where $\boldsymbol{J}$ is the plasma current density. The fundamental near-axis assumption is that $\boldsymbol{B}$ evaluated on the axis is non-zero and parallel to the magnetic axis, i.e. for some $B_0 \in C^{\infty }(\mathbb{T})$,

Off the axis, we express the magnetic field on $\Omega _\sigma ^0$ as

where $\phi \in C^{\infty }(\Omega _\sigma ^0)$ is the magnetic potential satisfying $\left .\nabla \phi \right |_{\rho =0} = 0$. Then, taking the divergence of (2.20), we find the magnetic scalar potential satisfies Poisson’s equation,

By construction, the field $\boldsymbol{B}$ in (2.20) is locally the gradient of some function, so it is curl-free. This means the contributions from $\tilde {\boldsymbol{B}}$ in (2.20) can locally be absorbed to recover Laplace’s equation for the potential. However, because $\Omega _\sigma$ is not simply connected and $\boldsymbol B \cdot \boldsymbol t$ is single-valued on axis, the closed-loop axis integral $\oint \boldsymbol{B} \cdot \mathrm{d} \boldsymbol \ell$ demonstrates that it is not possible for $\boldsymbol{B}$ to be globally the gradient of a single-valued function $\phi$. In contrast, the Poisson equation formulation contains only single-valued functions, which is convenient both numerically and analytically.

In coordinates, we can write the magnetic field in (2.20) as

where we assume summation over repeated indices and

![]() $g_{ij}$

and

$g_{ij}$

and

![]() $g^{ij}$

are the components of the metric and inverse metric, respectively. Then, Poisson’s equation in coordinates becomes

$g^{ij}$

are the components of the metric and inverse metric, respectively. Then, Poisson’s equation in coordinates becomes

Multiplying this by

![]() $h_s$

and expanding this in the

$h_s$

and expanding this in the

![]() $\boldsymbol q$

coordinate system, we have

$\boldsymbol q$

coordinate system, we have

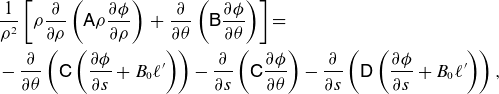

\begin{align} &\frac {1}{\rho ^2}\left [ \rho \frac {\partial }{\partial \rho }\left (\mathsf{A} \rho \frac {\partial \phi }{\partial \rho } \right )\right . + \left .\frac {\partial }{\partial \theta }\left (\mathsf{B} \frac {\partial \phi }{\partial \theta }\right )\right ] = \nonumber \\ &- \frac {\partial }{\partial \theta }\left ( \mathsf{C} \left ( \frac {\partial \phi }{\partial s} + B_0 \ell '\right ) \right ) - \frac {\partial }{\partial s}\left ( \mathsf{C} \frac {\partial \phi }{\partial \theta } \right ) - \frac {\partial }{\partial s} \left (\mathsf{D} \left (\frac {\partial \phi }{\partial s} + B_0 \ell ' \right ) \right ), \end{align}

\begin{align} &\frac {1}{\rho ^2}\left [ \rho \frac {\partial }{\partial \rho }\left (\mathsf{A} \rho \frac {\partial \phi }{\partial \rho } \right )\right . + \left .\frac {\partial }{\partial \theta }\left (\mathsf{B} \frac {\partial \phi }{\partial \theta }\right )\right ] = \nonumber \\ &- \frac {\partial }{\partial \theta }\left ( \mathsf{C} \left ( \frac {\partial \phi }{\partial s} + B_0 \ell '\right ) \right ) - \frac {\partial }{\partial s}\left ( \mathsf{C} \frac {\partial \phi }{\partial \theta } \right ) - \frac {\partial }{\partial s} \left (\mathsf{D} \left (\frac {\partial \phi }{\partial s} + B_0 \ell ' \right ) \right ), \end{align}

where

From here, we can substitute the asymptotic expansions of

![]() $\phi$

and the coefficients

$\phi$

and the coefficients

![]() $\mathsf{A}$

,

$\mathsf{A}$

,

![]() $\mathsf{B}$

,

$\mathsf{B}$

,

![]() $\mathsf{C}$

and

$\mathsf{C}$

and

![]() $\mathsf{D}$

into (2.24). At each order in

$\mathsf{D}$

into (2.24). At each order in

![]() $\rho$

, Poisson’s equation becomes

$\rho$

, Poisson’s equation becomes

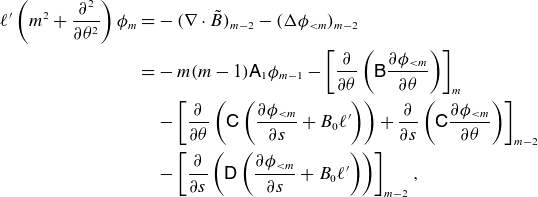

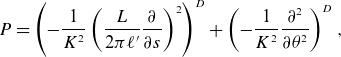

\begin{align} \ell ' \left (m^2 + \frac {\partial ^2}{\partial \theta ^2}\right ) \phi _{m} =& - (\nabla \cdot \tilde {B})_{m-2} -(\Delta \phi _{\lt m})_{m-2}\nonumber \\ =&- m(m-1) \mathsf{A}_1 \phi _{m-1} - \left [\frac {\partial }{\partial \theta }\left (\mathsf{B} \frac {\partial \phi _{\lt m}}{\partial \theta } \right )\right ]_m \nonumber \\ &- \left [ \frac {\partial }{\partial \theta }\left ( \mathsf{C} \left (\frac {\partial \phi _{\lt m}}{\partial s} + B_0 \ell ' \right ) \right ) + \frac {\partial }{\partial s}\left ( \mathsf{C} \frac {\partial \phi _{\lt m}}{\partial \theta } \right )\right ]_{m-2} \\ \nonumber &- \left [\frac {\partial }{\partial s} \left (\mathsf{D} \left ( \frac {\partial \phi _{\lt m}}{\partial s} + B_0 \ell '\right ) \right )\right ]_{m-2}, \end{align}

\begin{align} \ell ' \left (m^2 + \frac {\partial ^2}{\partial \theta ^2}\right ) \phi _{m} =& - (\nabla \cdot \tilde {B})_{m-2} -(\Delta \phi _{\lt m})_{m-2}\nonumber \\ =&- m(m-1) \mathsf{A}_1 \phi _{m-1} - \left [\frac {\partial }{\partial \theta }\left (\mathsf{B} \frac {\partial \phi _{\lt m}}{\partial \theta } \right )\right ]_m \nonumber \\ &- \left [ \frac {\partial }{\partial \theta }\left ( \mathsf{C} \left (\frac {\partial \phi _{\lt m}}{\partial s} + B_0 \ell ' \right ) \right ) + \frac {\partial }{\partial s}\left ( \mathsf{C} \frac {\partial \phi _{\lt m}}{\partial \theta } \right )\right ]_{m-2} \\ \nonumber &- \left [\frac {\partial }{\partial s} \left (\mathsf{D} \left ( \frac {\partial \phi _{\lt m}}{\partial s} + B_0 \ell '\right ) \right )\right ]_{m-2}, \end{align}

where $\mathsf{A}_1 = (h_s)_1 = -\ell ' \kappa \cos \theta$. The right-hand side of (2.26) does not depend on orders of $\phi$ higher than $\phi _{m-1}$, so inverting the left-hand-side operator gives an iteration for obtaining $\phi _m$ at each order.
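Since the left-hand-side operator of (2.26) acts only on $\theta$, it is diagonal in a poloidal Fourier basis: applied to $e^{{\mathrm{i}} j \theta }$ it multiplies by $\ell ' (m^2 - j^2)$, which vanishes for $j = \pm m$. A single step of the iteration at fixed $s$ can therefore be sketched as a division in Fourier space. The Python example below is an illustration under stated assumptions and not the pseudo-spectral method of § 4: the function name `solve_order_m` is hypothetical, the right-hand side is assumed real and sampled on a uniform $\theta$ grid, the coefficients of the modes $e^{\pm {\mathrm{i}} m \theta }$ (which the operator cannot determine) are set to zero, and an error is raised if the right-hand side contains those modes.

```python
import numpy as np

def solve_order_m(rhs, m, ell_p, tol=1e-10):
    """Solve ell_p * (m^2 + d^2/dtheta^2) phi = rhs on a uniform theta grid.

    The modes exp(+-i m theta) lie in the null space of the operator, so the
    right-hand side must not contain them; their amplitudes in phi are free
    and are set to zero here.
    """
    n = rhs.size
    j = np.fft.fftfreq(n, d=1.0 / n)           # integer wavenumbers
    rhs_hat = np.fft.fft(rhs) / n
    symbol = ell_p * (m**2 - j**2)             # Fourier multiplier of the operator
    resonant = np.abs(j) == m
    if resonant.any() and np.max(np.abs(rhs_hat[resonant])) > tol:
        raise ValueError("right-hand side is not orthogonal to exp(+-i m theta)")
    phi_hat = np.zeros_like(rhs_hat)
    phi_hat[~resonant] = rhs_hat[~resonant] / symbol[~resonant]
    return np.real(np.fft.ifft(phi_hat * n))

# Example at order m = 3 with the known solution phi = cos(theta).
m, ell_p = 3, 1.5
theta = 2.0 * np.pi * np.arange(32) / 32
rhs = ell_p * (m**2 - 1) * np.cos(theta)
phi = solve_order_m(rhs, m, ell_p)
```

In a full iteration the zeroed amplitudes would instead be carried as unknowns to be fixed by other conditions; the sketch only shows the invertible part of the operator.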

However, the operator $\ell ' (m^2 + {\partial ^2}/{\partial \theta ^2})$ is singular, so we must confirm there are no secular terms. Specifically, we always have an unknown homogeneous solution at $\mathcal{O}(m)$ of the form $\phi _{m0} e^{-i m \theta } + \phi _{mm} e^{i m \theta }$. For this, we use Fredholm’s alternative, which states that (2.26) is solvable if the right-hand side is orthogonal to the null space of the adjoint of the operator on the left-hand side. Because the operator is self-adjoint, the right-hand side must be orthogonal to $e^{\pm i m \theta }$, i.e.

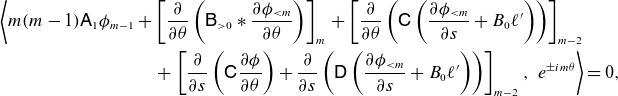

\begin{align} &\left \langle m(m-1) \mathsf{A}_1 \phi _{m-1} + \left [\frac {\partial }{\partial \theta }\left (\mathsf{B}_{\gt 0} * \frac {\partial \phi _{\lt m}}{\partial \theta } \right )\right ]_m \right . + \left [ \frac {\partial }{\partial \theta }\left ( \mathsf{C} \left ( \frac {\partial \phi _{\lt m}}{\partial s} + B_0 \ell '\right ) \right )\right ]_{m-2}\nonumber \\ &\qquad\qquad\qquad\quad\quad\,\,\,+ \left .\left [\frac {\partial }{\partial s}\left ( \mathsf{C} \frac {\partial \phi _{\lt m}}{\partial \theta } \right ) + \frac {\partial }{\partial s} \left (\mathsf{D} \left ( \frac {\partial \phi _{\lt m}}{\partial s} + B_0 \ell '\right ) \right )\right ]_{m-2}, \ e^{\pm i m \theta }\right \rangle =0, \end{align}

where the inner product is defined by

The $m-2$ coefficient of any analytic function is orthogonal to $e^{i m \theta }$, so we can remove the $\mathsf{C}$ and $\mathsf{D}$ terms. The same argument allows us to remove the torsion terms in $\mathsf{B}$. This means only contributions from $\mathsf{A}_1 = \mathsf{B}_1 = - \ell ' \kappa \cos \theta$ and $\phi _{m-1}$ survive, so we only need to verify

Using the identity $\cos \theta = (e^{i \theta } + e^{-i \theta })/2$, a quick calculation confirms the above identity holds.
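The solvability check can also be confirmed numerically. The sketch below is an illustration rather than part of the original derivation. It assumes the inner product is the $L^2$ pairing over $\theta \in [0, 2\pi)$ (any normalisation gives the same zero), sets the common factor $\ell ' \kappa$ to one, and uses a hypothetical helper `secular_projection`. It builds a random $\phi _{m-1}$ with harmonics $e^{i(2n-(m-1))\theta }$ and projects $m(m-1) \mathsf{A}_1 \phi _{m-1} + \partial _\theta (\mathsf{B}_1 \partial _\theta \phi _{m-1})$ onto $e^{\pm i m \theta }$.

```python
import numpy as np

def secular_projection(m, coeffs, n_theta=256):
    """Project m(m-1) A1 phi + d/dtheta(B1 dphi/dtheta) onto exp(+-i m theta).

    phi = sum_n coeffs[n] * exp(i (2n - (m-1)) theta), n = 0..m-1,
    with A1 = B1 = -cos(theta) (the common factor ell' * kappa is set to 1).
    """
    theta = 2 * np.pi * np.arange(n_theta) / n_theta
    ks = 2 * np.arange(m) - (m - 1)             # harmonics present in phi_{m-1}
    modes = np.exp(1j * np.outer(ks, theta))    # shape (m, n_theta)
    phi = coeffs @ modes
    dphi = (1j * ks * coeffs) @ modes           # exact theta-derivative of phi
    a1 = -np.cos(theta)
    flux = a1 * dphi                            # B1 * dphi/dtheta
    # Spectral derivative of the periodic function `flux`
    wavenumbers = np.fft.fftfreq(n_theta, d=1.0 / n_theta)
    dflux = np.fft.ifft(1j * wavenumbers * np.fft.fft(flux))
    rhs = m * (m - 1) * a1 * phi + dflux
    # The mean over the uniform grid is the (normalised) L2 inner product
    return [np.mean(rhs * np.exp(-1j * sign * m * theta)) for sign in (+1, -1)]

rng = np.random.default_rng(0)
for m in range(2, 7):
    c = rng.normal(size=m) + 1j * rng.normal(size=m)
    proj = secular_projection(m, c)
    print(m, max(abs(p) for p in proj))   # round-off level for every m
```

The cancellation is between the top harmonic of $m(m-1)\mathsf{A}_1 \phi _{m-1}$ and that of the $\mathsf{B}_1$ term, whose two $\theta$-derivatives contribute the factor $-m(m-1)$.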

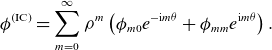

Now, we consider the problem where $\phi$ is unknown. The fact that the Fredholm condition is automatically satisfied at each order implies that $\phi _{m0}$ and $\phi _{mm}$ are free parameters at each order in the near-axis expansion. So, these coefficients are an infinite-dimensional set of initial conditions for the near-axis expansion of Poisson’s equation. Intuitively, one can think of the coefficients $\phi _{m0}$ and $\phi _{mm}$ as specifying the Fourier coefficients of an infinitely thin tube about the magnetic axis. In this way, the imposition of conditions at each order compensates for the fact that partial differential equations (PDEs) typically satisfy conditions on co-dimension-1 surfaces, whereas the near-axis expansion is specified on a co-dimension-2 curve.

In addition to

![]() $\phi _{m0}$

and

$\phi _{m0}$

and

![]() $\phi _{mm}$

as free parameters, we also treat

$\phi _{mm}$

as free parameters, we also treat

![]() $\boldsymbol{r}_0$

and

$\boldsymbol{r}_0$

and

![]() $B_0$

as inputs to the near-axis problem. The requirement that the magnetic field be tangent to the axis with magnitude

$B_0$

as inputs to the near-axis problem. The requirement that the magnetic field be tangent to the axis with magnitude

![]() $B_0$

results in a constraint that

$B_0$

results in a constraint that

![]() $\phi _{00} = \phi _{10} = \phi _{11} = 0$

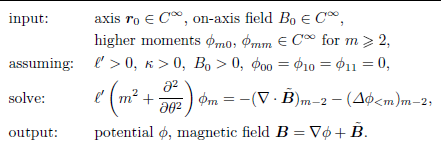

. In total, the direct vacuum near-axis problem can be written as

$\phi _{00} = \phi _{10} = \phi _{11} = 0$

. In total, the direct vacuum near-axis problem can be written as

2.4. Straight field-line coordinates: leading order

Given a solution magnetic field from box (2.30), we consider the problem of finding straight field-line magnetic coordinates. We assume that the magnetic field is locally elliptic about the axis and the rotation number is irrational, so that the leading-order behaviour is rotation about the magnetic axis. This means that both hyperbolic orbits (X-points) and on-axis resonant perturbations are excluded from this work. In the language of Hamiltonian normal forms, the leading-order field-line dynamics is conjugate to a non-resonant harmonic oscillator; see Burby et al. (2021) and Duignan & Meiss (2021) for a more rigorous derivation of magnetic coordinates in the near-axis expansion. We note that our process of finding coordinates is formal: we make no claim that the resulting expansion converges. However, in § 5, we find that this procedure appears to converge well numerically.

To find magnetic coordinates, we attempt to build a conjugacy between magnetic field-line dynamics $\dot {\boldsymbol{r}} = (B^s)^{-1} \boldsymbol{B}(\boldsymbol{r})$ and straight field-line dynamics $\dot {\boldsymbol{\xi }} = (- \iota (\psi ) \eta , \, \iota (\psi ) \xi , \, 1)$, where $\boldsymbol{\xi } = (\xi , \, \eta , \, s) \in \mathbb{R}^2 \times \mathbb{T}$ are Cartesian coordinates, $\psi =\xi ^2 + \eta ^2$ is a flux-like coordinate and $\iota$ is the rotational transform. To make the connection with straight field-line coordinates precise, consider the transformation to polar coordinates $(\xi , \eta ) \to \sqrt {\psi }(\cos \gamma , \sin \gamma )$. Then, the field line is traced by $(\dot {\psi },\, \dot {\gamma },\, \dot {s}) = (0, \, \iota (\psi ), \, 1)$, i.e. magnetic field lines are straight with slope $\iota$. However, we use the Cartesian version of magnetic coordinates because it removes the coordinate singularity associated with polar coordinates, simplifying the following steps.
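As an illustration of this equivalence, the sketch below integrates the Cartesian straight field-line dynamics with a fourth-order Runge-Kutta step and checks that $\psi$ is conserved while the polar angle advances at the rate $\iota (\psi )$. The profile `iota` and the helper names are hypothetical choices made for the example, not quantities from this paper.

```python
import numpy as np

def iota(psi):
    # Hypothetical rotational-transform profile, chosen only for illustration
    return 0.4 + 0.3 * psi

def rhs(state):
    """Cartesian straight field-line dynamics (d xi/ds, d eta/ds)."""
    xi, eta = state
    rate = iota(xi**2 + eta**2)
    return np.array([-rate * eta, rate * xi])

def trace(state0, s_end, n_steps=2000):
    """Integrate from s = 0 to s = s_end with classical RK4."""
    h = s_end / n_steps
    state = np.array(state0, dtype=float)
    for _ in range(n_steps):
        k1 = rhs(state)
        k2 = rhs(state + 0.5 * h * k1)
        k3 = rhs(state + 0.5 * h * k2)
        k4 = rhs(state + h * k3)
        state = state + (h / 6.0) * (k1 + 2 * k2 + 2 * k3 + k4)
    return state

xi0, eta0, s_end = 0.5, 0.2, 3.0
psi0 = xi0**2 + eta0**2
gamma0 = np.arctan2(eta0, xi0)
xi1, eta1 = trace((xi0, eta0), s_end)

# Exact solution: psi is constant and gamma = gamma0 + iota(psi0) * s
gamma1 = gamma0 + iota(psi0) * s_end
print("psi drift  :", abs(xi1**2 + eta1**2 - psi0))
print("angle error:", abs(xi1 - np.sqrt(psi0) * np.cos(gamma1)),
      abs(eta1 - np.sqrt(psi0) * np.sin(gamma1)))
```

Note that the right-hand side is smooth at $\xi = \eta = 0$, whereas the polar form would require dividing by $\sqrt {\psi }$ to recover $\dot {\gamma }$ from the Cartesian velocities.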

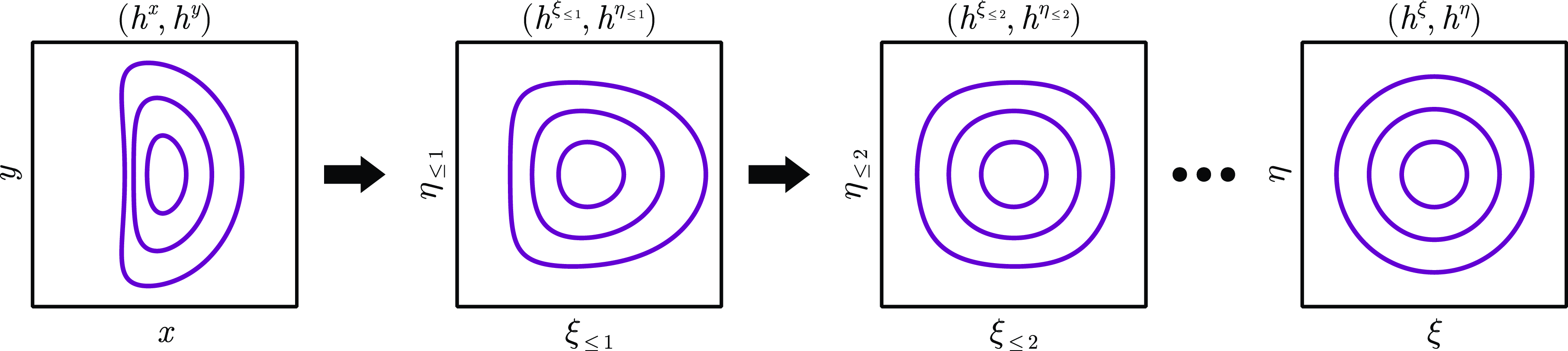

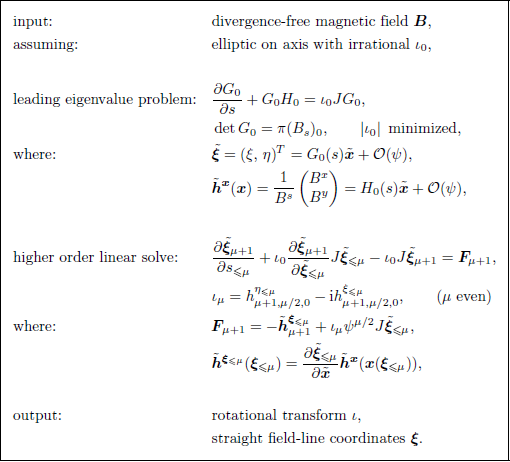

Figure 2. A schematic of the process of finding straight field-line coordinates. On the left, we plot the surfaces of the magnetic field $(h^x,h^y)$ on a cross-section for fixed $s$. Moving one plot to the right, the leading correction transforms to a coordinate frame where the main elliptic component is eliminated. Going one further, the next correction accounts for the most prominent triangularity. This process continues until, in $(\xi ,\eta )$ coordinates, the magnetic surfaces are nested circles.

There are two main steps to our process of finding magnetic coordinates: the leading-order problem and the higher-order problems (see figure 2 for a sketch of the process). If we use the notation $\tilde {\boldsymbol{x}} = (x,\, y)$ and $\tilde {\boldsymbol{\xi }} = (\xi , \, \eta )$ for the out-of-plane coordinates, the leading-order transformation takes the form

We will find that the problem for $G_0$ is an eigenvalue problem for the on-axis rotation number, as is typical for the linearised dynamics about a fixed point. In the following section, we will discuss the inductive step to higher orders.

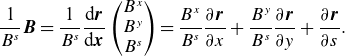

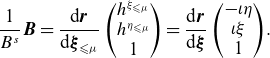

To begin, consider the contravariant form of the Cartesian near-axis magnetic field

\begin{equation} \frac {1}{B^s} \boldsymbol B = \frac {1}{B^s} \frac {\mathrm{d} \boldsymbol{r}}{\mathrm{d} \boldsymbol{x}} \begin{pmatrix} B^x \\ B^y \\ B^s \end{pmatrix} = \frac {B^x}{B^s} \frac {\partial \boldsymbol{r}}{\partial x} + \frac {B^y}{B^s} \frac {\partial \boldsymbol{r}}{\partial y} + \frac {\partial \boldsymbol{r}}{\partial s}. \end{equation}

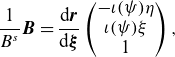

We would like to equate this to the straight field-line dynamics as

\begin{equation} \frac {1}{B^s} \boldsymbol{B} = \frac {\mathrm{d} \boldsymbol{r}}{\mathrm{d} \boldsymbol{\xi }} \begin{pmatrix} - \iota (\psi ) \eta \\ \iota (\psi ) \xi \\ 1 \end{pmatrix}, \end{equation}

where $\iota$ depends smoothly upon the radial label as

where we emphasise $\iota _\mu = 0$ for odd $\mu$. Multiplying both sides by $\mathrm{d} \boldsymbol{\xi } / \mathrm{d} \boldsymbol{r}$, we find the Floquet conjugacy problem

where $J = \begin{pmatrix} 0 & -1 \\ 1 & 0 \end{pmatrix}$, so that $\iota J \tilde {\boldsymbol{\xi }} = (-\iota \eta , \, \iota \xi )$, is known as the symplectic matrix. Our problem is to solve (2.35) for $\xi (x,y)$, $\eta (x,y)$ and $\iota (\psi )$.

To find the leading-order problem for (2.35), we note the magnetic field $(h^x,h^y)$ is linear at leading order:

Substituting this, (2.31) and $\iota = \iota _0 + \mathcal{O}(\psi )$ into (2.35), we have

The leading-order problem (2.38) is a Floquet eigenvalue problem for the linearised field-line dynamics about the magnetic axis. Assuming that the near-axis expansion is elliptic at leading order, the value of $\iota _0$ is real. Otherwise, $\iota _0$ is not real, meaning ellipticity can be numerically verified for a given input.
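One way such a numerical check could look is sketched below. It does not reproduce (2.38). Instead, it assumes a generic linearised field-line system $\mathrm{d}\tilde {\boldsymbol{x}}/\mathrm{d}s = A(s) \tilde {\boldsymbol{x}}$ with a $2\pi$-periodic, traceless $2\times 2$ matrix $A(s)$; the period, the tracelessness and all function names are assumptions made for the example. Under these assumptions, the monodromy matrix $M$ over one period has unit determinant and eigenvalues $e^{\pm 2\pi {\mathrm{i}} \iota _0}$, so the axis is elliptic when $|\mathrm{tr}\, M| \lt 2$.

```python
import numpy as np

def monodromy(a_of_s, period=2 * np.pi, n_steps=4000):
    """Integrate dPhi/ds = A(s) Phi, Phi(0) = I, over one period with RK4."""
    h = period / n_steps
    phi = np.eye(2)
    for i in range(n_steps):
        s = i * h
        k1 = a_of_s(s) @ phi
        k2 = a_of_s(s + 0.5 * h) @ (phi + 0.5 * h * k1)
        k3 = a_of_s(s + 0.5 * h) @ (phi + 0.5 * h * k2)
        k4 = a_of_s(s + h) @ (phi + h * k3)
        phi = phi + (h / 6.0) * (k1 + 2 * k2 + 2 * k3 + k4)
    return phi

def on_axis_transform(m):
    """Return (is_elliptic, iota_0 in [0, 1/2]) from a unit-determinant monodromy."""
    half_trace = 0.5 * np.trace(m)
    if abs(half_trace) >= 1.0:
        return False, None          # hyperbolic or parabolic: iota_0 is not real
    return True, np.arccos(half_trace) / (2 * np.pi)

J = np.array([[0.0, -1.0], [1.0, 0.0]])

def a_elliptic(s):
    # Hypothetical example: rotation at rate 0.3 plus a periodic traceless shear
    return 0.3 * J + 0.1 * np.cos(s) * np.array([[1.0, 0.0], [0.0, -1.0]])

def a_hyperbolic(s):
    # Hypothetical X-point-like example
    return np.array([[0.5, 0.0], [0.0, -0.5]])

print(on_axis_transform(monodromy(a_elliptic)))
print(on_axis_transform(monodromy(a_hyperbolic)))
```

The trace only determines $\cos (2\pi \iota _0)$, so this check fixes $\iota _0$ up to sign and integer shifts; selecting a representative requires a further convention.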

There are many equivalent solutions to (2.38) owing to its symmetries: if $(G_0, \iota _0)$ satisfies (2.38), then the following are also solutions:

where $R$ is a rotation matrix

The question of which solution to choose is then a question of practicalities. For instance, one could choose the rotational transform corresponding to a non-twisting right-handed coordinate frame $\boldsymbol{\xi }_{1}$. Here, ‘non-twisting’ means that closed coordinate lines near the axis, implicitly defined as curves $\boldsymbol r(x,y,s)$ where $\tilde {\boldsymbol{\xi }}(x,y,s) \neq 0$ is held constant, can be continuously deformed to a point in $\mathbb{R}^3 \backslash \boldsymbol r_0$. In other words, the coordinate lines do not link with the axis. In this frame, $\iota _0$ agrees with the intuitive definition of the rotational transform as the limiting ratio of poloidal turns to toroidal turns of field lines about the axis. Other choices may have other benefits, e.g. there may be an eigenfunction $G_0$ that behaves best numerically. In this paper, we opt for an option that is easy to implement: we take the real solution where $\iota _0$ has the smallest magnitude and $\det G_0$ is positive. From here, other equivalent coordinates can easily be found by applying the transformations in (2.39).

For the scaling of $G_0$, we choose $\psi$ to be the actual magnetic flux at leading order. The formula for the flux is

where $D_\psi = \{(\xi ,\eta ) \mid \xi ^2 + \eta ^2 \lt \psi \}$ and

Pulling this back to the $\boldsymbol{\xi }$ frame, we have

Both $B_s$ and $G_0$ are constant in $\boldsymbol{\xi }$ to leading order, so (2.43) at leading order becomes

Setting this equal to $\psi$, we find

where we note that this is only possible when $(B_s)_0$ is chosen to be positive.

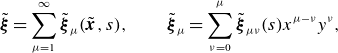

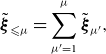

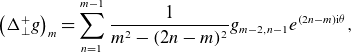

2.5. Straight field-line coordinates: higher order

Now that we have the leading-order behaviour, we iterate to go to higher order. To do so, first define the near-axis expansion of $\tilde {\boldsymbol{\xi }}$ as

\begin{equation} \tilde {\boldsymbol{\xi }} = \sum _{\mu =1}^\infty \tilde {\boldsymbol{\xi }}_\mu (\tilde {\boldsymbol{x}}, s), \qquad \tilde {\boldsymbol{\xi }}_\mu = \sum _{\nu = 0}^\mu \tilde {\boldsymbol{\xi }}_{\mu \nu }(s) x^{\mu -\nu } y^\nu , \end{equation}

and define the partial sums as

\begin{equation} \tilde {\boldsymbol{\xi }}_{\leqslant \mu } = \sum _{\mu ' = 1}^{\mu }\tilde {\boldsymbol{\xi }}_{\mu '}, \end{equation}

where, at leading order, $\tilde {\boldsymbol{\xi }}_{\leqslant 1} = \tilde {\boldsymbol{\xi }}_1 = G_0 \tilde {\boldsymbol{x}}$.

At each order in the iteration, we consider the update to be a function of the previous coordinates, i.e.

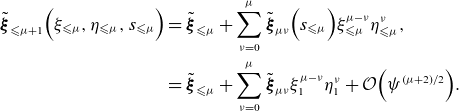

\begin{align} \tilde {\boldsymbol{\xi }}_{\leqslant \mu +1}\Big(\xi _{\leqslant \mu }, \eta _{\leqslant \mu }, s_{\leqslant \mu }\Big) &= \tilde {\boldsymbol{\xi }}_{\leqslant \mu } + \sum _{\nu =0}^{\mu +1} \tilde {\boldsymbol{\xi }}_{\mu +1,\nu }\Big(s_{\leqslant \mu }\Big) \xi _{\leqslant \mu }^{\mu +1-\nu } \eta _{\leqslant \mu }^\nu \nonumber \\ &= \tilde {\boldsymbol{\xi }}_{\leqslant \mu } + \sum _{\nu =0}^{\mu +1} \tilde {\boldsymbol{\xi }}_{\mu +1,\nu } \xi _{1}^{\mu +1-\nu } \eta _{1}^\nu + \mathcal{O}\Big(\psi ^{(\mu +2)/2}\Big). \end{align}

We explicitly write the transformed toroidal coordinate $s_{\leqslant \mu }=s$ so that it is clear that ${\partial }/{\partial s}$ and ${\partial }/{\partial s_{\leqslant \mu }}$ are different operators: the former holds $(x, y)$ fixed, whereas the latter holds $(\xi _{\leqslant \mu }, \eta _{\leqslant \mu })$ fixed. The purpose of performing the update in this way is primarily to make the update step as clear as possible.

To wit, the magnetic field in the new frame satisfies

\begin{equation} \frac {1}{B^s} \boldsymbol{B} = \frac {\mathrm{d} \boldsymbol{r}}{\mathrm{d} \boldsymbol{\xi }_{\leqslant \mu }} \begin{pmatrix} h^{\xi _{\leqslant \mu }} \\ h^{\eta _{\leqslant \mu }} \\ 1 \end{pmatrix} = \frac {\mathrm{d} \boldsymbol{r}}{\mathrm{d} \boldsymbol{\xi }} \begin{pmatrix} -\iota \eta \\ \iota \xi \\ 1 \end{pmatrix}\!. \end{equation}

We can use the first equality to write

\begin{align} \tilde {\boldsymbol{h}}^{\boldsymbol{\xi }_{\leqslant \mu }}(\boldsymbol{\xi }_{\leqslant \mu }) &= \begin{pmatrix} h^{\xi _{\leqslant \mu }} \big(\boldsymbol{\xi }_{\leqslant \mu }\big) \\[2pt] h^{\eta _{\leqslant \mu }} \big(\boldsymbol{\xi }_{\leqslant \mu }\big) \end{pmatrix} \nonumber \\ &= \frac {\partial \tilde {\boldsymbol{\xi }}_{\leqslant \mu }}{\partial \tilde {\boldsymbol{x}}} \tilde {\boldsymbol{h}}^{\boldsymbol{x}}\left(\boldsymbol{x}\big(\boldsymbol{\xi }_{\leqslant \mu }\big)\right) + \frac {\partial \tilde {\boldsymbol{\xi }}_{\leqslant \mu }}{\partial s}, \end{align}

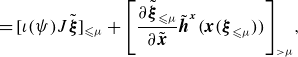

\begin{align} &= [\iota (\psi ) J \tilde {\boldsymbol{\xi }}]_{\leqslant \mu } + \left [ \frac {\partial \tilde {\boldsymbol{\xi }}_{\leqslant \mu }}{\partial \tilde {\boldsymbol{x}}}\tilde {\boldsymbol{h}}^{\boldsymbol{x}}(\boldsymbol{x}(\boldsymbol{\xi }_{\leqslant \mu }))\right ]_{\gt \mu }\!,\\[6pt] \nonumber\end{align}

where we use the notation $f_{\gt \mu } = \sum _{\nu \gt \mu } f_{\nu }$. To get from the second to the third line, we have used the inductive assumption that $\boldsymbol{\xi }_{\leqslant \mu }$ matches $\boldsymbol{\xi }$ up to order $\mu$, meaning that $\boldsymbol{\xi }_{\leqslant \mu }$ is a straight field-line coordinate system up to order $\mu$. In this way, we will find that the update residual depends neatly upon the transformed magnetic field.

It is worth noting that (2.51) has two new operations that have not been introduced so far. The first is that we are computing $\boldsymbol{x}(\boldsymbol{\xi }_{\leqslant \mu })$, i.e. we are inverting the coordinate transformation. The second is that we are composing functions with this inversion as $\tilde {\boldsymbol{h}}^{\boldsymbol{x}}(\boldsymbol{x}(\boldsymbol{\xi }_{\leqslant \mu }))$. So, for any function $f(\boldsymbol{x})$ and coordinate transformation $\boldsymbol{\xi }(\boldsymbol{x})$, we can compute the equivalent function in indirect coordinates as $f(\boldsymbol{\xi })$ using these two steps. Moreover, the inverse transformation $\boldsymbol{x}(\boldsymbol{\xi })$ can be precomputed for all transformations one wishes to perform of this type. This gives a framework to move back and forth between direct and indirect near-axis formalisms to high order. For more details on how these transformations are computed, see Appendices (B.6) and (B.7).
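The two operations can be illustrated on a one-dimensional toy problem. The sketch below is not the algorithm of Appendices (B.6) and (B.7): it assumes a scalar truncated power series $\xi (x) = g_1 x + g_2 x^2 + \ldots$ with $g_1 \neq 0$, stored as a coefficient array, and inverts it by a fixed-point iteration that gains one order per pass. All function names are hypothetical.

```python
import numpy as np

def mul(a, b, order):
    """Product of two truncated series (a[k] multiplies x**k), kept to `order`."""
    return np.convolve(a, b)[: order + 1]

def compose(f, g, order):
    """Truncated series of f(g(x)); requires g[0] == 0."""
    result = np.zeros(order + 1)
    power = np.zeros(order + 1)
    power[0] = 1.0                      # g**0
    for fk in f[: order + 1]:
        result += fk * power
        power = mul(power, g, order)    # next power of g
    return result

def invert(xi, order):
    """Series x(xi) with xi(x(xi)) = xi, assuming xi[0] == 0 and xi[1] != 0."""
    nonlinear = xi.copy()
    nonlinear[1] = 0.0                  # xi(x) = xi[1] * x + nonlinear(x)
    identity = np.zeros(order + 1)
    identity[1] = 1.0
    x = identity / xi[1]                # leading-order inverse
    for _ in range(order):
        # x <- (identity - nonlinear(x)) / xi[1]; each pass fixes one more order
        x = (identity - compose(nonlinear, x, order)) / xi[1]
    return x

order = 6
xi = np.array([0.0, 2.0, 0.5, -0.3, 0.0, 0.0, 0.0])   # xi(x) = 2x + 0.5x^2 - 0.3x^3
x_of_xi = invert(xi, order)
print(compose(xi, x_of_xi, order))     # close to [0, 1, 0, 0, 0, 0, 0]

f = np.array([1.0, 0.0, 1.0, 0.0, 0.0, 0.0, 0.0])      # f(x) = 1 + x^2
print(compose(f, x_of_xi, order))      # f expressed as a series in xi
```

Once `x_of_xi` has been computed, any number of functions can be moved to the new coordinate with a single `compose` call each, which mirrors the remark that the inverse can be precomputed.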

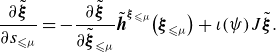

Given the residual field (2.51), we can use the second equality in (2.49) to find the updated equation

\begin{equation} \frac {\partial \tilde {\boldsymbol{\xi }}}{\partial s_{\leqslant \mu }} = - \frac {\partial \tilde {\boldsymbol{\xi }}}{\partial \tilde {\boldsymbol{\xi }}_{\leqslant \mu }} \tilde {\boldsymbol{h}}^{\boldsymbol{\xi }_{\leqslant \mu }}\big(\boldsymbol{\xi }_{\leqslant \mu }\big) + \iota (\psi ) J \tilde {\boldsymbol{\xi }}. \end{equation}

Note that this has the exact same form as (2.35), except we have shifted the underlying coordinates. Substituting $\tilde {\boldsymbol{\xi }}_{\leqslant \mu +1} = \tilde {\boldsymbol{\xi }}_{\leqslant \mu } + \tilde {\boldsymbol{\xi }}_{\mu +1}$ into (2.52), we obtain

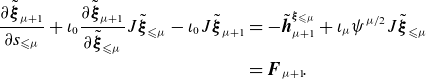

\begin{align} \frac {\partial \tilde {\boldsymbol{\xi }}_{\mu +1}}{\partial s_{\leqslant \mu }} + \iota _0 \frac {\partial \tilde {\boldsymbol{\xi }}_{\mu +1}}{\partial \tilde {\boldsymbol{\xi }}_{\leqslant \mu }} J \tilde {\boldsymbol{\xi }}_{\leqslant \mu } - \iota _0 J \tilde {\boldsymbol{\xi }}_{\mu +1} &= - \tilde {\boldsymbol{h}}^{\boldsymbol{\xi }_{\leqslant \mu }}_{\mu +1} + \iota _\mu \psi ^{\mu /2}J \tilde {\boldsymbol{\xi }}_{\leqslant \mu } \nonumber \\ &= \boldsymbol{F}_{\mu +1}\!. \end{align}

Because the leading-order problem (2.38) is an eigenvalue problem, (2.53) has the form of a higher-order correction to the eigenvalue and eigenfunction. To see what we might expect, consider the eigenvalue problem $K\boldsymbol y=\lambda M \boldsymbol y$, where each term is expanded in a small parameter, e.g. $K=K_0 + K_1 \epsilon + K_2 \epsilon ^2 + \ldots$ for small $\epsilon$. The analogous update equation would be

where $\boldsymbol{R}_\mu$ contains all of the residual terms. Assume for simplicity that $\lambda _0$ is an isolated eigenvalue. Then, there is a single secular term, which can be identified by taking the inner product of the above expression with the leading left eigenvector $\boldsymbol{z}_0$ to give $\lambda _\mu = -(\boldsymbol{z}_0^T \boldsymbol{R}_\mu )/(\boldsymbol{z}_0^T M_0 \boldsymbol{y}_0)$. Once this is satisfied, the equation can be solved for $\boldsymbol{y}_\mu$, where we typically choose the free component in $\boldsymbol{y}_0$ so that the norm is constant.
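To make the secularity condition concrete, the sketch below checks the first-order case numerically. It assumes the update equation takes the standard form $(K_0 - \lambda _0 M_0)\boldsymbol{y}_1 - \lambda _1 M_0 \boldsymbol{y}_0 = \boldsymbol{R}_1$ with $\boldsymbol{R}_1 = -(K_1 - \lambda _0 M_1)\boldsymbol{y}_0$, which is what collecting the $\mathcal{O}(\epsilon )$ terms of $K\boldsymbol y=\lambda M \boldsymbol y$ gives. The matrices are random and purely illustrative, and `eig_near` is a hypothetical helper.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 4
# Illustrative pencil (K0, M0) with well-separated eigenvalues 1, 2, 3, 4
S = np.eye(n) + 0.3 * rng.normal(size=(n, n))
M0 = np.eye(n) + 0.1 * rng.normal(size=(n, n))
K0 = M0 @ S @ np.diag([1.0, 2.0, 3.0, 4.0]) @ np.linalg.inv(S)
K1 = rng.normal(size=(n, n))
M1 = rng.normal(size=(n, n))

def eig_near(K, M, target):
    """Eigenpair of K y = lambda M y whose eigenvalue is closest to `target`."""
    vals, vecs = np.linalg.eig(np.linalg.solve(M, K))
    i = np.argmin(abs(vals - target))
    return vals[i], vecs[:, i]

lam0, y0 = eig_near(K0, M0, 2.0)
# Left eigenvector: z0^T (K0 - lam0 M0) = 0, a right eigenvector of the transposed pencil
_, z0 = eig_near(K0.T, M0.T, 2.0)

# Assumed first-order residual and the resulting eigenvalue correction
R1 = -(K1 - lam0 * M1) @ y0
lam1 = -(z0 @ R1) / (z0 @ M0 @ y0)

# Compare with a central finite difference of the perturbed eigenvalue
eps = 1e-4
lam_plus, _ = eig_near(K0 + eps * K1, M0 + eps * M1, lam0)
lam_minus, _ = eig_near(K0 - eps * K1, M0 - eps * M1, lam0)
print("central difference:", (lam_plus - lam_minus) / (2 * eps))
print("perturbation      :", lam1)
```

The ratio defining `lam1` is independent of how `y0` and `z0` are normalised, so the normalisation choice only affects the eigenvector correction.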

To perform the same steps on (2.53), we first diagonalise the left-hand-side operator by converting to polar coordinates $(\xi _{\leqslant \mu }, \eta _{\leqslant \mu }) = R_{\leqslant \mu } (\cos \Theta _{\leqslant \mu }, \sin \Theta _{\leqslant \mu })$ as

\begin{equation} \frac {\partial \tilde {\boldsymbol{\xi }}_{\mu +1}}{\partial s_{\leqslant \mu }} + \iota _0 \frac {\partial \tilde {\boldsymbol{\xi }}_{\mu +1}}{\partial \Theta _{\leqslant \mu }} - \iota _0 J \tilde {\boldsymbol{\xi }}_{\mu +1} = \boldsymbol{F}_{\mu +1}, \end{equation}

where

\begin{equation} \tilde {\boldsymbol{\xi }}_{\mu +1} = (R_{\leqslant \mu })^{\mu +1} \sum _{n=0}^{\mu +1} \sum _{\ell \in \mathbb{Z}} \tilde {\boldsymbol{\xi }}_{\mu +1,n\ell } e^{(2n-\mu -1){\mathrm{i}} \Theta _{\leqslant \mu } + {\mathrm{i}} \ell s_{\leqslant \mu }}. \end{equation}

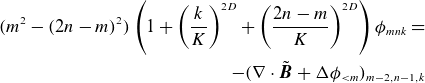

After substitution, we find that

where the $2\times 2$ left-hand-side matrix has the eigenvalues $\lambda _{\mu +1,n\ell 0} = \iota _0 {\mathrm{i}} (2n - \mu ) + {\mathrm{i}} \ell$ and $\lambda _{\mu +1,n \ell 1} = \iota _0 {\mathrm{i}} (2n - \mu - 2) + {\mathrm{i}} \ell$ with corresponding right eigenvectors $(\boldsymbol{v}_0, \boldsymbol{v}_1) = ([-{\mathrm{i}}/\sqrt {2}, 1/\sqrt {2}], [{\mathrm{i}}/\sqrt {2},1/ \sqrt {2}])$. So, the resulting updates in the coefficients are

where $\overline {\boldsymbol{v}}_k$ are the corresponding left eigenvectors due to $(\iota _0(2n-\mu -1)+\ell ){\mathrm{i}} I - \iota _0 J$ being skew-adjoint.
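As an illustrative check of the eigen-decomposition quoted above, the following minimal sketch builds the $2\times 2$ mode matrix, confirms that $\boldsymbol{v}_0$ and $\boldsymbol{v}_1$ are eigenvectors with the stated eigenvalues, and inverts the matrix by projecting the right-hand side onto the eigenvectors. Several pieces are assumptions made for illustration and are not taken from the text: the convention $J = [[0,-1],[1,0]]$ (chosen because it reproduces the stated eigenvalues), the helper names `mode_matrix`, `eigenvalue` and `solve_mode`, and the sample values of $\iota _0$, $(\mu ,n,\ell )$ and $\boldsymbol{F}$. The projection formula is the standard inverse of a normal matrix and is only a stand-in for the update in the text.

```python
import math

SQ = 1.0 / math.sqrt(2.0)
# Right eigenvectors (v_0, v_1) as quoted in the text.
V = [[-1j * SQ, SQ], [1j * SQ, SQ]]


def mode_matrix(iota0, mu, n, ell):
    """(iota0*(2n - mu - 1) + ell) i I - iota0 J, assuming J = [[0, -1], [1, 0]]."""
    c = 1j * (iota0 * (2 * n - mu - 1) + ell)
    return [[c, iota0], [-iota0, c]]


def eigenvalue(iota0, mu, n, ell, k):
    """lambda_{mu+1, n, ell, k} = i * (iota0 * (2n - mu - 2k) + ell)."""
    return 1j * (iota0 * (2 * n - mu - 2 * k) + ell)


def inner(u, w):
    """Hermitian inner product, conjugate-linear in the first argument."""
    return complex(u[0]).conjugate() * w[0] + complex(u[1]).conjugate() * w[1]


def mat_vec(A, x):
    """Product of a 2x2 matrix with a 2-vector."""
    return [A[0][0] * x[0] + A[0][1] * x[1], A[1][0] * x[0] + A[1][1] * x[1]]


def solve_mode(iota0, mu, n, ell, F):
    """Solve (mode matrix) xi = F by projecting F onto the eigenvectors."""
    xi = [0j, 0j]
    for k, v in enumerate(V):
        lam = eigenvalue(iota0, mu, n, ell, k)
        if lam == 0:
            raise ZeroDivisionError("resonant mode: the update is singular")
        coeff = inner(v, F) / lam
        xi = [xi[0] + coeff * v[0], xi[1] + coeff * v[1]]
    return xi


# Hypothetical sample values, chosen only to exercise the formulas.
iota0 = (math.sqrt(5.0) - 1.0) / 2.0
mu, n, ell = 2, 1, 3
F = [1.0 + 2.0j, -0.5j]

xi = solve_mode(iota0, mu, n, ell, F)
A = mode_matrix(iota0, mu, n, ell)
residual = [a - b for a, b in zip(mat_vec(A, xi), F)]
print(max(abs(r) for r in residual))
```

Because the matrix is skew-adjoint, its eigenvectors are orthonormal, which is why the conjugated right eigenvectors can serve as left eigenvectors in the projection.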

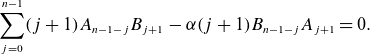

There are two cases where the update (2.58) fails. The first case is when $2n - \mu - 2k = 0$ and $\ell = 0$, occurring only when $\mu$ is even. This is the standard secularity that indicates that $\iota _\mu$ must be updated, giving the condition that $\left \langle \overline {\boldsymbol{v}}_{0} , \ \boldsymbol{F}_{\mu +1,\mu /2,0} \right \rangle = \left \langle \overline {\boldsymbol{v}}_{1} , \ \boldsymbol{F}_{\mu +1,\mu /2+1,0} \right \rangle = 0$ for single-valued solutions, where we note that these formulae are equivalent for real magnetic fields. The resulting formula is

The second case of failure is when $\iota _0$ is rational, as then there are other values of $(\mu ,n,k,\ell )$ such that (2.58) is singular. This is attributed to the expanding number of $\theta$ modes at each order, where higher-order poloidal perturbations resonate with the axis. To handle this case, extra resonant terms must be introduced in the higher-order magnetic field to avoid secularity (Duignan & Meiss 2021); equivalently, this requires adding terms to the Hamiltonian normal form. Here, we assume that $\iota _0$ is irrational so that the iteration is well defined.
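To make the rational case concrete, the following sketch enumerates the index tuples $(\mu ,n,k,\ell )$ for which $\iota _0 (2n-\mu -2k) + \ell = 0$, excluding the standard secular case $2n-\mu -2k = 0$, $\ell = 0$. It uses exact rational arithmetic so that the zero test is reliable. The function name `resonant_modes`, the range $0 \leqslant n \leqslant \mu +1$ and the cut-offs `max_mu` and `max_ell` are assumptions made for illustration.

```python
from fractions import Fraction


def resonant_modes(iota0, max_mu, max_ell):
    """List (mu, n, k, ell) with iota0*(2n - mu - 2k) + ell == 0 exactly.

    The standard secular case (2n - mu - 2k == 0 and ell == 0) is skipped.
    The range of n is an assumption made for this illustration.
    """
    hits = []
    for mu in range(max_mu + 1):
        for n in range(mu + 2):
            for k in (0, 1):
                m = 2 * n - mu - 2 * k  # poloidal mode number of the eigenvalue
                for ell in range(-max_ell, max_ell + 1):
                    if m == 0 and ell == 0:
                        continue
                    if iota0 * m + ell == 0:
                        hits.append((mu, n, k, ell))
    return hits


low_order = resonant_modes(Fraction(1, 2), max_mu=2, max_ell=2)
high_order = resonant_modes(Fraction(1, 101), max_mu=4, max_ell=3)
print(len(low_order), len(high_order))
```

For $\iota _0 = 1/2$ the singular tuples already appear at the lowest orders, whereas for a rational with a large denominator none appear within the same cut-offs. This is consistent with resonances being reached only once the poloidal mode number is a multiple of the denominator of $\iota _0$.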

We note that there is still one undetermined part of the problem: what value to choose for $\langle {\overline {\boldsymbol{v}}_{0}},{\tilde {\boldsymbol{\xi }}_{\mu +1,\mu /2,0}}\rangle$ and its complex conjugate. Because this is arbitrary, we currently set this coefficient to $0$. However, other options could be to choose this value to improve the radius of convergence or to match the flux (2.43). In summary, the straight field-line coordinate process is

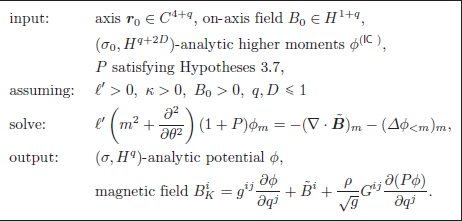

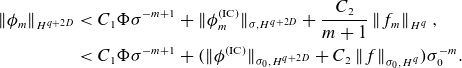

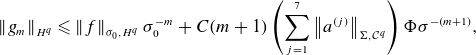

3. Ill-posedness and regularisation

In this section, we describe how the near-axis problem is ill-posed (§ 3.1) and how we can regularise the problem (§ 3.2). In § 3.3, we state how the near-axis expansion of $\phi$ converges under suitable input assumptions (Theorem (3.8) and Corollary (3.9)). Most proofs can be found in Appendix A, where the individual sections are referred to after each statement.

3.1. Ill-posedness

We define a problem as ill-posed if it is not well-posed, where the standard definition of a well-posed problem is that:

- (i) the solution exists;
- (ii) the solution is unique;
- (iii) the solution is continuous in the initial data.

We note that the interpretation of these statements depends on the space to which we require the solution to belong and on the topology in which continuity is described. For instance, it is straightforward to show existence and uniqueness in the sense of a formal power series.

Proposition 3.1.

Consider the near-axis problem in box (2.30), with all inputs in $C^{\infty }$. Then, there exists a unique formal power series solution $\phi _n(s)$ at each order.

Proof.

Simply notice that the residual at each order is $C^\infty$ if every previous order is. Then, because the inverse of $\Delta _\perp$ maps $C^{\infty }$ functions to $C^\infty$ functions, we satisfy the Fredholm alternative, and we specify the null space of the operator at each order, we have a unique solution.

Note that this proposition says nothing about the convergence of the power series to a solution off-axis; it only shows that we can find the coefficients of the power series. So, formal existence does not necessarily imply good or consistent computational results.

Another straightforward existence result for harmonic inputs is the following.

Proposition 3.2.

Let $\boldsymbol{B} = \nabla \phi + \tilde {\boldsymbol{B}}$ be a valid vacuum magnetic field on $\Omega _\sigma$ with a real-analytic axis $\boldsymbol{r}_0$ and $\sigma \gt 0$. Then, the near-axis expansion using the coefficients corresponding to $\phi$ converges uniformly on a smaller domain $\Omega _{\sigma '}$ with $0\lt \sigma '\lt \sigma$.

Proof. See § A.1.

Proposition (3.2) is a useful result because it says that vacuum fields can, in principle, be written as solutions to the infinite near-axis problem. However, this result is hard to use in practice, as it is difficult to verify a priori whether the input data to the near-axis expansion agree with a solution of Poisson’s equation.

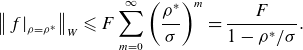

So, for a more computational approach, we must define normed spaces of inputs and outputs that agree with notions of convergence. To intuit what the correct space may be, we observe that the radial direction behaves as a ‘time-like’ variable, whereas $\theta$ and $s$ behave more like spatial variables. That is, the near-axis PDE can be thought of as propagating surface information off the axis. This motivates a decision to separate our treatment of these coordinates. Moreover, we desire convergence in a power series in the radial variable, so we choose to treat it in an analytic manner. In contrast, the coefficients $\phi _m(\theta ,s)$ are obtained by solving a linear PDE at each order, so we treat them in a Sobolev sense as a function of $\theta$ and $s$.

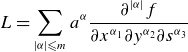

To this end, let $\alpha = (\alpha _1,\alpha _2)$ be a multi-index of degree $2$ and $\left | \alpha \right | = \sum _j \alpha _j$. We define the $H^q$ Sobolev norm of functions $f : \mathbb{T}^2 \to \mathbb{R}$ as

where

and $\mu$ is the Lebesgue measure on $\mathbb{T}^2$. We additionally define the $C^q$ norm of a $q$-times differentiable function $f : \mathbb{T}^2 \to \mathbb{R}$ as

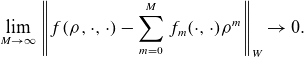
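For a concrete feel for the Sobolev norm on $\mathbb{T}^2$, the following sketch evaluates an $H^q$ norm of a trigonometric polynomial directly from its Fourier coefficients using Parseval's identity. It assumes the textbook definition $\lVert f \rVert _{H^q}^2 = \sum _{|\alpha | \leqslant q} \lVert \partial ^\alpha f \rVert _{L^2}^2$ on the torus $[0,2\pi )^2$ with unnormalised Lebesgue measure; the normalisation used in this paper may differ. The function name `sobolev_norm` and the dictionary layout of the coefficients are hypothetical.

```python
import math


def sobolev_norm(coeffs, q):
    """H^q norm of f = sum_{(k, l)} c_{kl} exp(i (k theta + l s)) on [0, 2 pi)^2.

    Assumes ||f||_{H^q}^2 = sum over |alpha| <= q of ||d^alpha f||_{L^2}^2,
    with each L^2 norm evaluated by Parseval's identity.
    """
    total = 0.0
    for (k, l), c in coeffs.items():
        # Sum of k^(2 a1) * l^(2 a2) over all multi-indices with a1 + a2 <= q.
        weight = sum(
            (k * k) ** a1 * (l * l) ** a2
            for a1 in range(q + 1)
            for a2 in range(q + 1 - a1)
        )
        total += weight * abs(c) ** 2
    return 2.0 * math.pi * math.sqrt(total)


# f(theta, s) = cos(theta) has coefficients 1/2 at (k, l) = (1, 0) and (-1, 0).
cos_theta = {(1, 0): 0.5, (-1, 0): 0.5}
h0 = sobolev_norm(cos_theta, 0)
h1 = sobolev_norm(cos_theta, 1)
print(h0, h1)
```

Under these assumptions, $\cos \theta$ has $\lVert f \rVert _{H^0} = \sqrt {2}\pi$ and $\lVert f \rVert _{H^1} = 2\pi$, since the $\theta$ derivative contributes the same $L^2$ mass as the function itself and the $s$ derivative vanishes.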

Then, we define the following convenient near-axis function space.

Definition 3.3.

Let $\sigma \gt 0$ and $W$ be a Banach space of functions on $\mathbb{T}^2$. We define a function $f : \Omega _\sigma ^0 \to \mathbb{R}^d$ as $(\sigma ,W)$-analytic if $f$ has a convergent near-axis expansion of the form

\begin{equation} f(\rho ,\theta ,s) = \sum _{m=0}^\infty f_m(\theta , s)\rho ^m, \qquad f_m(\theta ,s) = \sum _{n=0}^m f_{mn}(s) e^{(2n-m){\mathrm{i}} \theta }, \end{equation}

where the norm

is bounded. Here, convergence of the near-axis expansion is pointwise in $\rho$ and in norm in $(\theta ,s)$, i.e. for all $\rho \lt \sigma$,

\begin{equation} \lim _{M\to \infty }\left \lVert f(\rho ,\cdot ,\cdot ) - \sum _{m=0}^M f_m(\cdot ,\cdot ) \rho ^m \right \rVert _{W} = 0. \end{equation}
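As a toy illustration of this definition, consider the $s$-independent function $f = 1/(1 - \rho \cos \theta / a)$, whose expansion coefficients are $f_{mn} = \binom {m}{n}/(2a)^m$, so that $f_m = (\cos \theta / a)^m$ has exactly the mode structure $e^{(2n-m){\mathrm{i}}\theta }$ with $0 \leqslant n \leqslant m$. The sketch below evaluates partial sums and measures the error in a discrete sup norm over $\theta$, which is used here as a stand-in for the $W$ norm. The example function, the constant `a`, the grid size and the helper names are all assumptions made for illustration.

```python
import cmath
import math


def coeff(m, n, a=1.0):
    """f_{mn} for the toy function: binom(m, n) / (2 a)^m."""
    return math.comb(m, n) / (2.0 * a) ** m


def f_m(m, theta, a=1.0):
    """Order-m coefficient: sum over n of f_{mn} exp(i (2n - m) theta)."""
    total = sum(
        coeff(m, n, a) * cmath.exp(1j * (2 * n - m) * theta) for n in range(m + 1)
    )
    return total.real  # the imaginary parts cancel by symmetry in n


def partial_sum(M, rho, theta, a=1.0):
    """Truncated near-axis expansion up to order M."""
    return sum(f_m(m, theta, a) * rho**m for m in range(M + 1))


def sup_error(M, rho, a=1.0, n_theta=64):
    """Discrete sup-norm error over theta against the closed form."""
    thetas = [2.0 * math.pi * j / n_theta for j in range(n_theta)]
    return max(
        abs(partial_sum(M, rho, t, a) - 1.0 / (1.0 - rho * math.cos(t) / a))
        for t in thetas
    )


for M in (5, 20, 60):
    print(M, sup_error(M, rho=0.5))
```

For $\rho \lt a$ the error decays geometrically in $M$, whereas for $\rho \geqslant a$ the partial sums do not converge, so $a$ plays the role of $\sigma$ in this toy case.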

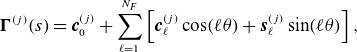

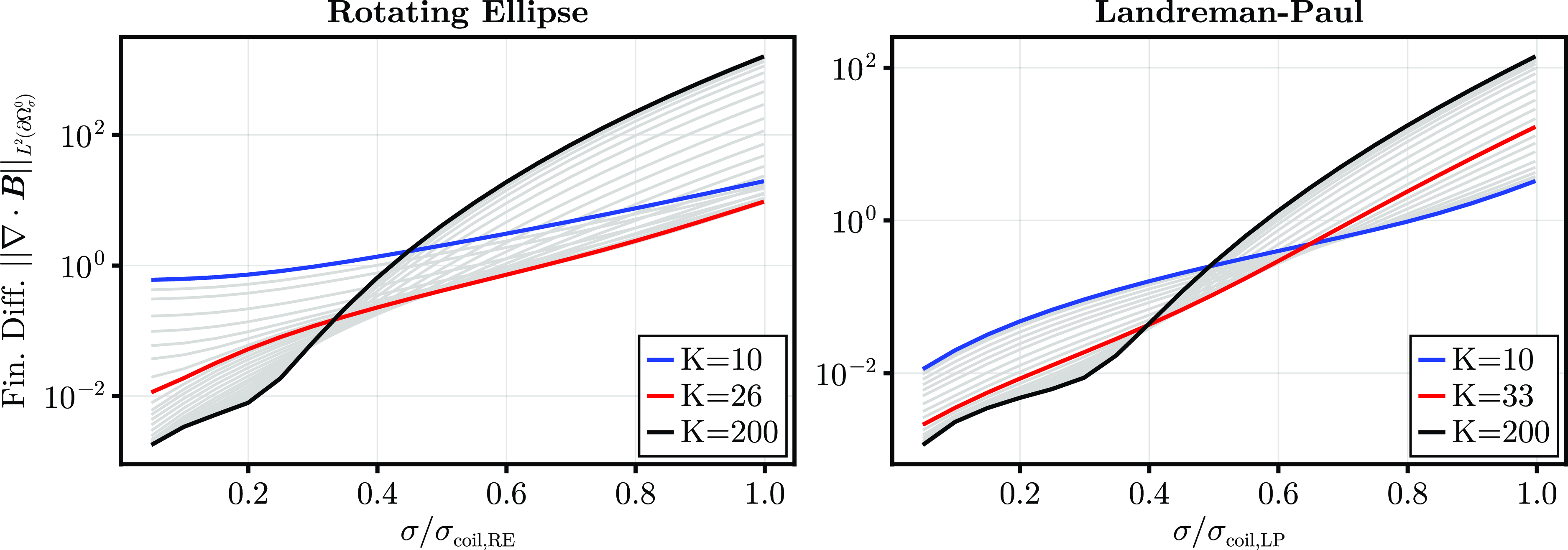

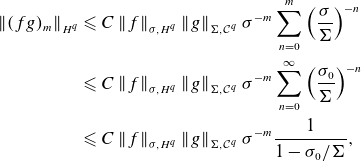

Paralleling standard linear regularity theory (Evans 2010), we will consider near-axis solutions